3d91b28b71fe80265f259480f54ed3dc.ppt

- Number of slides: 49

Staged Database Systems: Thesis Oral. Stavros Harizopoulos, Databases @ Carnegie Mellon

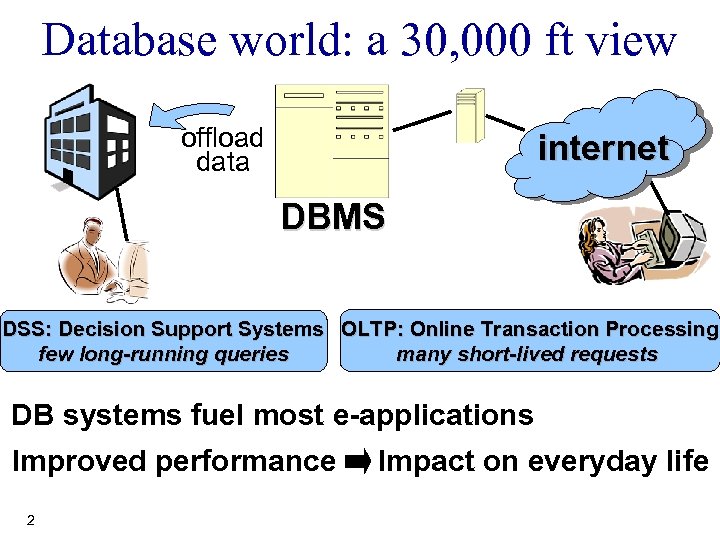

Database world: a 30,000 ft view. DB systems fuel most e-applications, and improved performance has an impact on everyday life. OLTP (Online Transaction Processing): many short-lived requests, e.g., Sarah: “Buy this book”. DSS (Decision Support Systems): few long-running queries, e.g., Jeff: “Which store needs more advertising?”. Data is offloaded from the internet-facing DBMS to the DSS side.

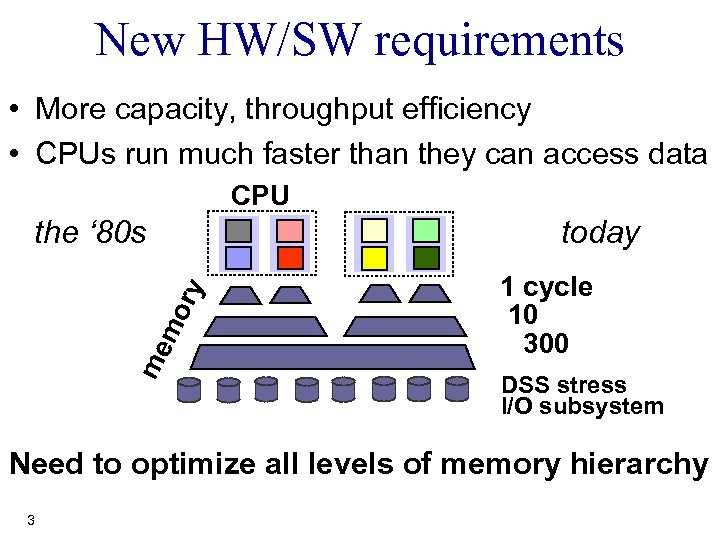

New HW/SW requirements • More capacity, throughput, and efficiency • CPUs run much faster than they can access data: a memory access cost about 1 cycle in the '80s versus roughly 10 to 300 cycles today • DSS workloads stress the I/O subsystem • Need to optimize all levels of the memory hierarchy

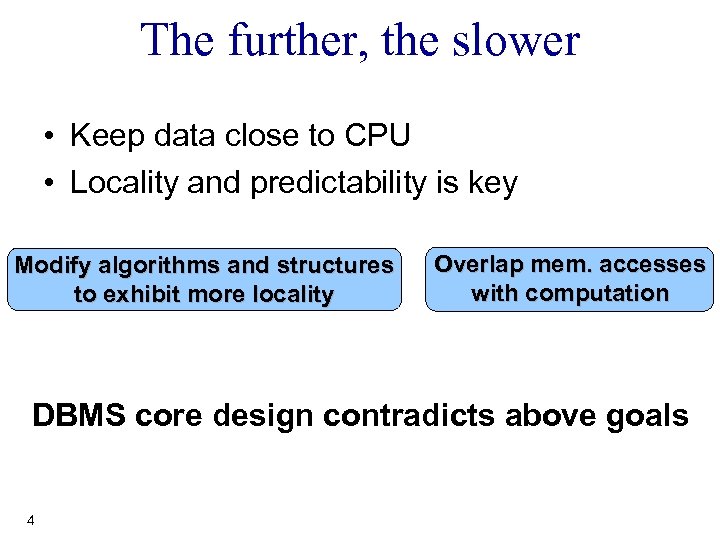

The further, the slower • Keep data close to the CPU • Locality and predictability are key: modify algorithms and structures to exhibit more locality, and overlap memory accesses with computation • DBMS core design contradicts the above goals

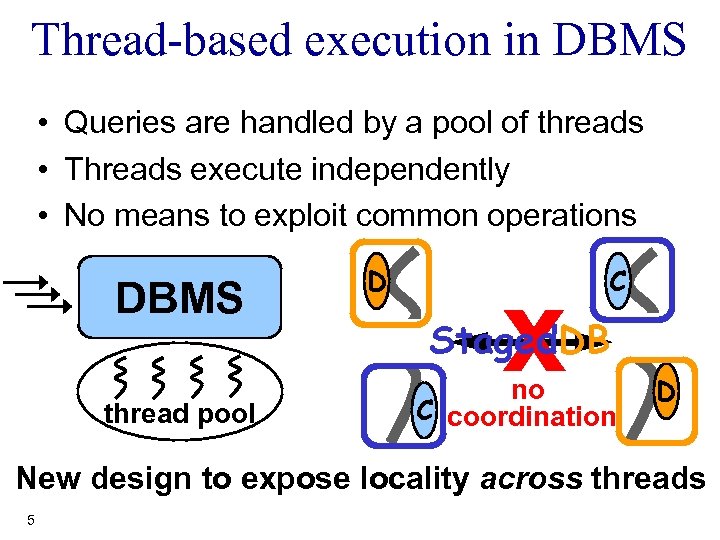

Thread-based execution in DBMS • Queries are handled by a pool of threads • Threads execute independently, with no coordination • No means to exploit common operations • StagedDB: a new design to expose locality across threads

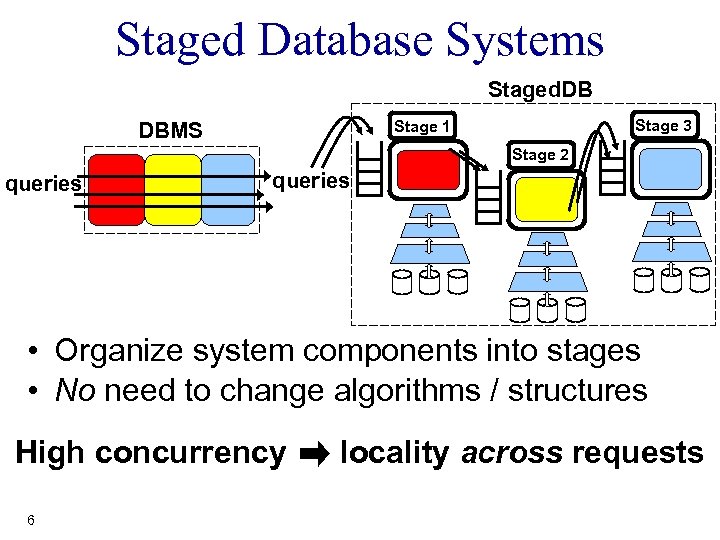

Staged Database Systems (StagedDB) • Organize system components into stages (diagram: queries flow through Stage 1, Stage 2, and Stage 3 inside the DBMS) • No need to change algorithms / structures • High concurrency turns into locality across requests
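Here is a minimal sketch of why staging exposes locality, assuming a toy model in which each request visits the same three stages in order. The names `stage1`..`stage3` and the requests `q1`..`q3` are hypothetical. In thread-based execution, each query runs all of its stages back to back. In staged execution, each stage drains its queue before the next stage runs, so the same stage code runs consecutively for many requests.

```python
from collections import deque

# Hypothetical workload: every request visits the same stages in order.
STAGES = ["stage1", "stage2", "stage3"]
REQUESTS = [("q1", STAGES), ("q2", STAGES), ("q3", STAGES)]


def thread_based(requests):
    """One thread per query: each request runs all its stages back to back."""
    trace = []
    for qid, stages in requests:
        for stage in stages:
            trace.append((stage, qid))
    return trace


def staged(requests):
    """Each stage drains its whole queue before the next stage runs."""
    queues = {s: deque() for s in STAGES}
    for qid, stages in requests:
        queues[stages[0]].append((qid, 0, stages))
    trace = []
    for stage in STAGES:
        while queues[stage]:
            qid, pos, stages = queues[stage].popleft()
            trace.append((stage, qid))
            if pos + 1 < len(stages):
                # Forward the request to the queue of its next stage.
                queues[stages[pos + 1]].append((qid, pos + 1, stages))
    return trace


def stage_switches(trace):
    """Count how often consecutive steps run code from different stages."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a[0] != b[0])
```

With three requests, the thread-based trace switches stage code 8 times and the staged trace only 2 times. The algorithms inside each stage are unchanged; only the order of execution differs.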

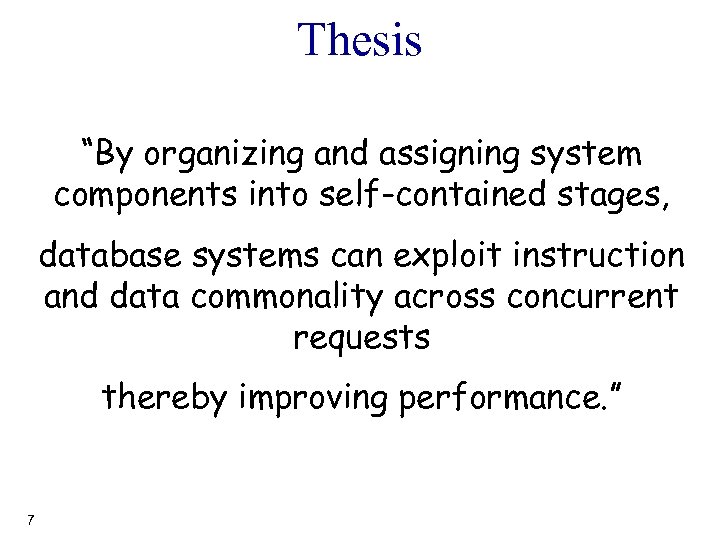

Thesis “By organizing and assigning system components into self-contained stages, database systems can exploit instruction and data commonality across concurrent requests thereby improving performance.”

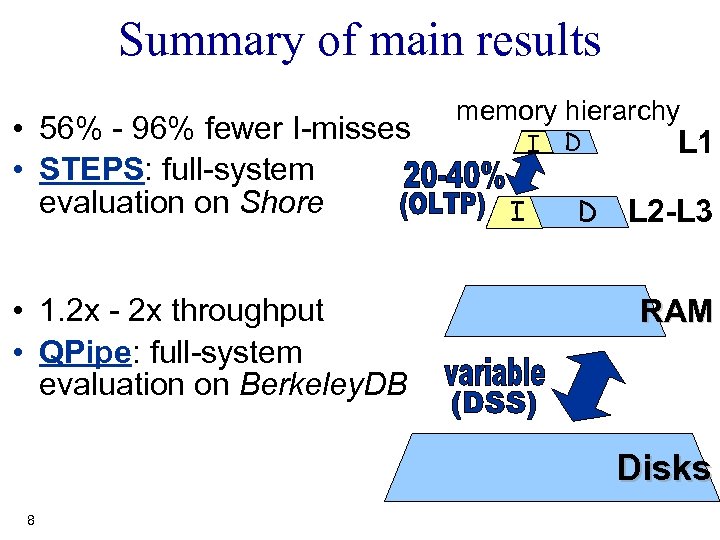

Summary of main results • STEPS: 56% to 96% fewer I-cache misses; full-system evaluation on Shore • QPipe: 1.2x to 2x throughput; full-system evaluation on BerkeleyDB (diagram: memory hierarchy from L1 I/D caches through L2-L3, RAM, and disks)

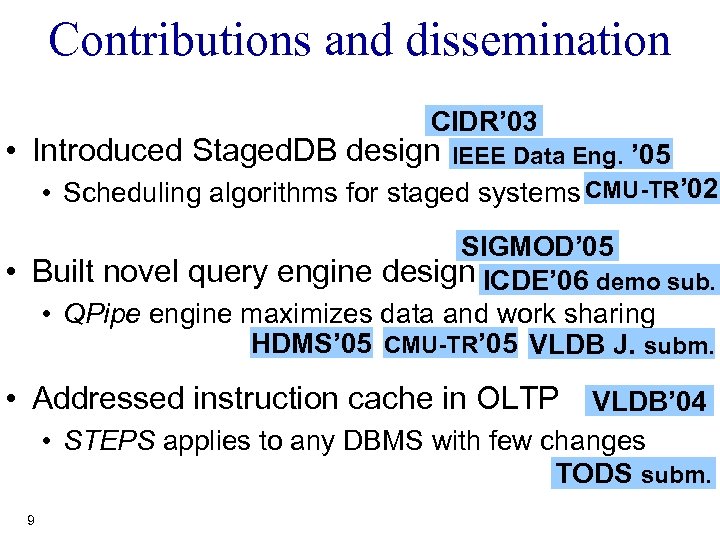

Contributions and dissemination • Introduced the StagedDB design • Scheduling algorithms for staged systems • Built a novel query engine design: the QPipe engine maximizes data and work sharing • Addressed the instruction cache in OLTP: STEPS applies to any DBMS with few changes • Venues: CIDR'03, IEEE Data Eng.'05, CMU-TR'02, SIGMOD'05, ICDE'06 demo subm., HDMS'05, CMU-TR'05, VLDB J. subm., VLDB'04, TODS subm.

Outline • Introduction • QPipe (DSS) • STEPS • Conclusions

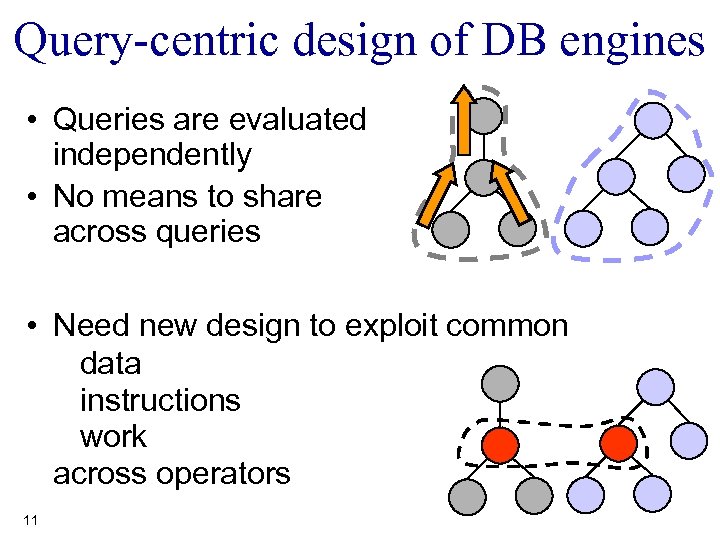

Query-centric design of DB engines • Queries are evaluated independently • No means to share across queries • Need a new design to exploit common data, instructions, and work across operators

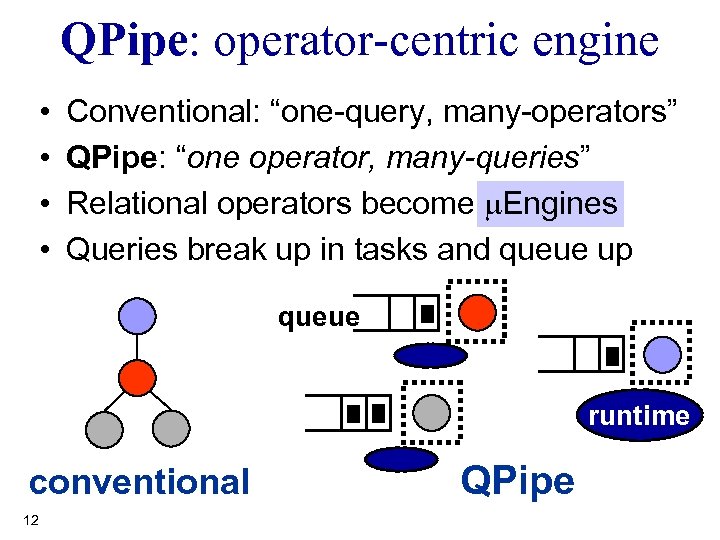

QPipe: operator-centric engine • Conventional: “one query, many operators” • QPipe: “one operator, many queries” • Relational operators become μEngines • Queries break up into tasks and queue up at the μEngines at runtime (diagram: conventional vs. QPipe)

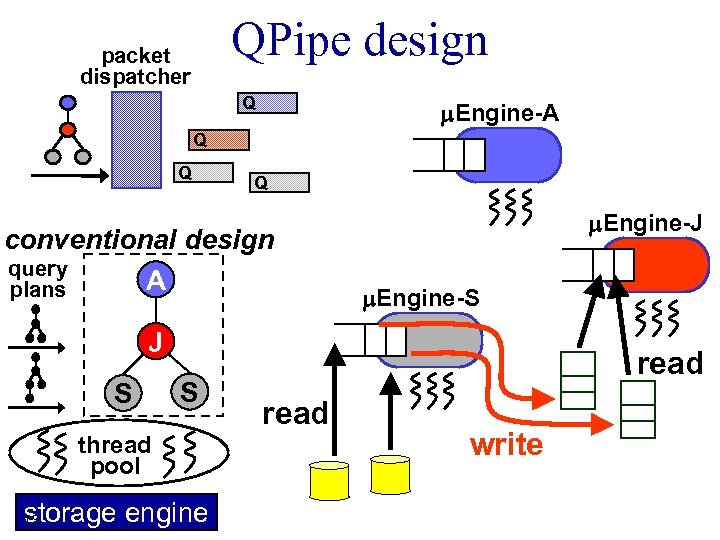

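QPipe design. Diagram: incoming query plans pass through a packet dispatcher, which sends each plan node as a packet to the queue (Q) of the matching μEngine (μEngine-A, μEngine-J, and μEngine-S for the A, J, and S operators). The μEngines read from and write to the storage engine. In the conventional design, by contrast, a thread from a thread pool executes a whole query plan.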
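The dispatcher's role can be sketched as follows. This is a hypothetical model, not QPipe's actual code, and the names `PlanNode`, `Packet`, and `PacketDispatcher` are made up for illustration. The dispatcher walks a query plan and enqueues one packet per node on the queue of the μEngine for that node's operator type.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field


@dataclass
class PlanNode:
    op: str                                   # operator type, e.g. "A", "J", "S"
    children: list = field(default_factory=list)


@dataclass
class Packet:
    query_id: str
    node: PlanNode


class PacketDispatcher:
    """Hypothetical dispatcher: one FIFO queue per μEngine (operator type)."""

    def __init__(self):
        self.queues = defaultdict(deque)

    def dispatch(self, query_id, root):
        # Walk the plan; enqueue one packet per node on its operator's μEngine.
        stack = [root]
        while stack:
            node = stack.pop()
            self.queues[node.op].append(Packet(query_id, node))
            stack.extend(node.children)
```

After two queries with the same aggregate-over-join-over-two-scans plan are dispatched, the S queue holds four packets from both queries. The scan μEngine therefore sees both queries' scans side by side, which is where run-time sharing becomes possible.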

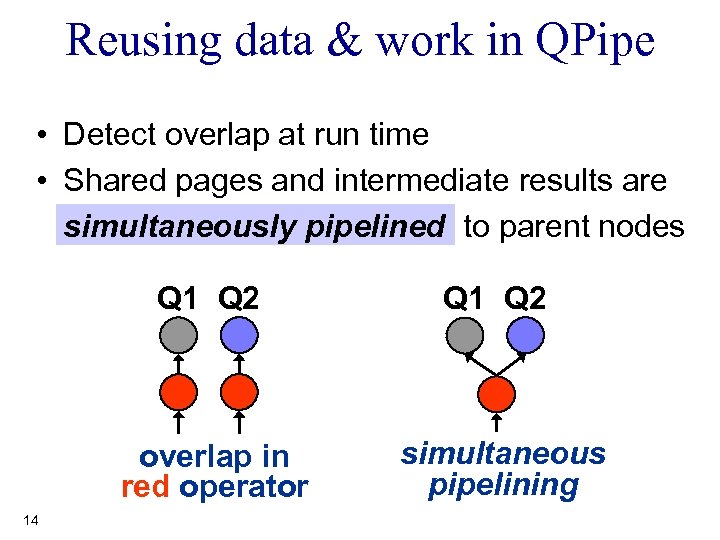

Reusing data & work in QPipe • Detect overlap at run time • Shared pages and intermediate results are simultaneously pipelined to parent nodes (diagram: plans of Q1 and Q2 with the overlapping operator in red; with simultaneous pipelining, one evaluation of that operator feeds both Q1 and Q2)
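Run-time overlap detection with simultaneous pipelining can be sketched as below. This is a simplified model in which both queries register before the shared operator starts producing; `SPCoordinator` and the operator "signature" tuple are hypothetical names. A second request with the same signature attaches to the first instead of starting a new evaluation, and each produced tuple is copied to every attached consumer.

```python
class SPCoordinator:
    """Sketch: detect identical in-progress operators and share their output."""

    def __init__(self):
        self.in_progress = {}   # operator signature -> list of consumer buffers
        self.evaluations = 0    # how many times an operator was actually run

    def submit(self, signature, consumer):
        """Register a consumer; return True if it attached to an existing run."""
        if signature in self.in_progress:
            self.in_progress[signature].append(consumer)
            return True
        self.in_progress[signature] = [consumer]
        return False

    def run(self, signature, produce):
        """Evaluate the operator once and pipeline every tuple to all consumers."""
        self.evaluations += 1
        consumers = self.in_progress.pop(signature)
        for tup in produce():
            for buf in consumers:
                buf.append(tup)
```

Here two queries that both scan `LINEITEM` receive identical output from a single evaluation. Queries arriving after production has started raise ordering questions, which the order-sensitive scan slides address.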

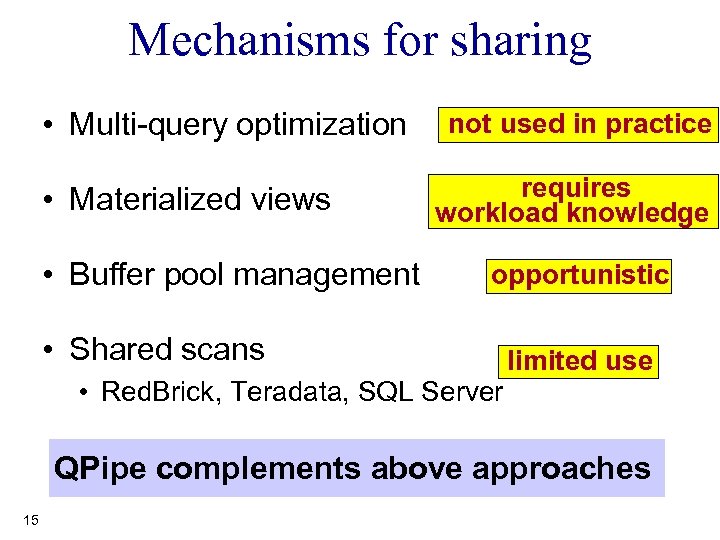

Mechanisms for sharing • Multi-query optimization: not used in practice • Materialized views: require workload knowledge • Buffer pool management: opportunistic • Shared scans (RedBrick, Teradata, SQL Server): limited use • QPipe complements the above approaches

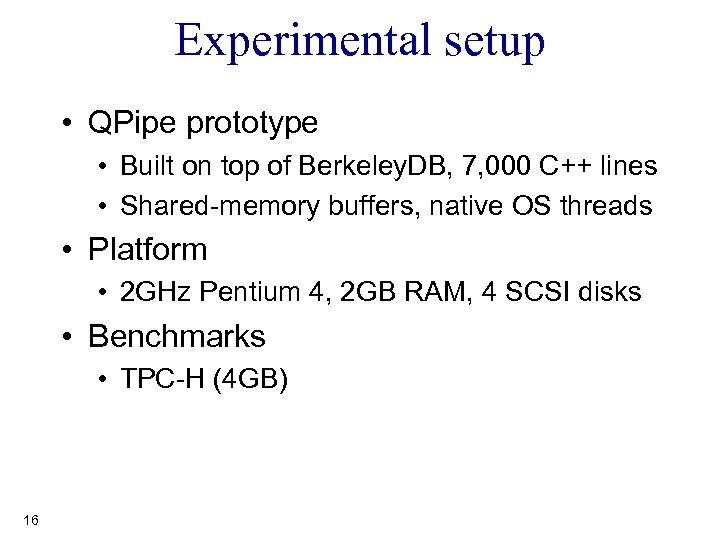

Experimental setup • QPipe prototype: built on top of BerkeleyDB, 7,000 lines of C++; shared-memory buffers, native OS threads • Platform: 2 GHz Pentium 4, 2 GB RAM, 4 SCSI disks • Benchmark: TPC-H (4 GB)

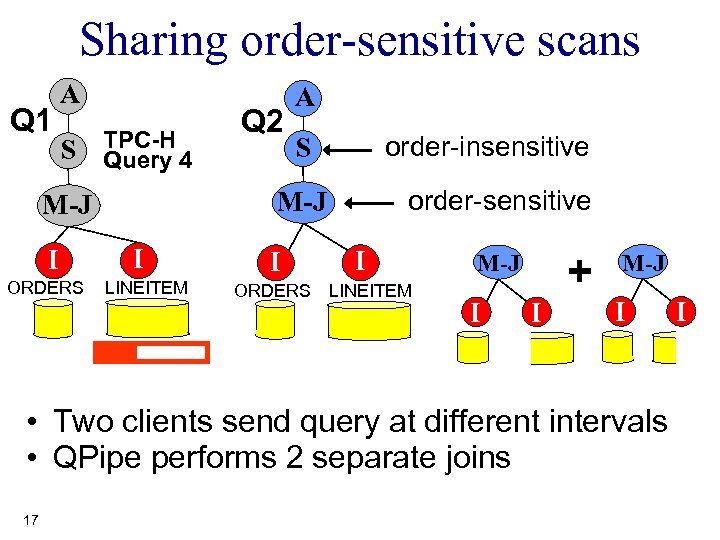

Sharing order-sensitive scans. Diagram: TPC-H Query 4 plans for Q1 and Q2, each an aggregate (A) over a merge-join (M-J) of ORDERS and LINEITEM; legend: S = order-insensitive scan, I = order-sensitive scan • Two clients send the query at different intervals • QPipe performs 2 separate joins

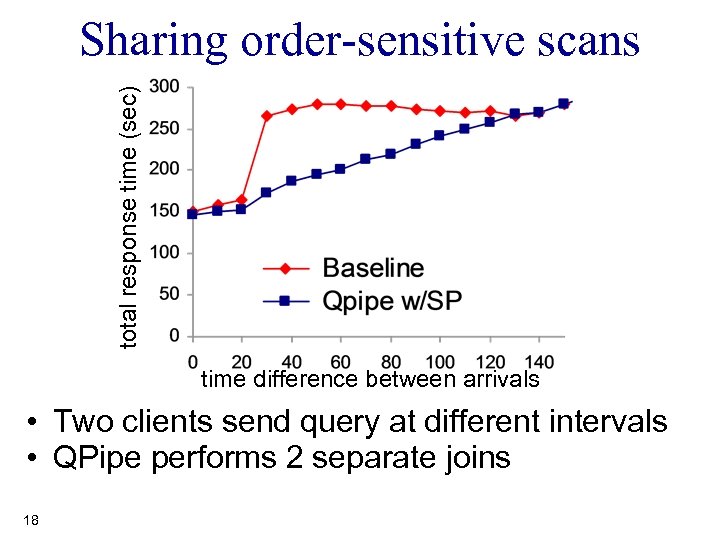

Sharing order-sensitive scans. [Figure: total response time (sec) vs. time difference between arrivals] • Two clients send the query at different intervals • QPipe performs 2 separate joins
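One reading of "QPipe performs 2 separate joins" is sketched below, under our own assumptions: both join inputs are sorted on the join key, and the late query attaches to both ordered scans at the same key (`split_key`, a hypothetical parameter). Join 1 consumes the shared suffix that the late query reads along with the first query. Join 2 rescans the prefix it missed. Each part is in key order, so each can be a merge-join, and together they produce the full result.

```python
def merge_join(left, right):
    """Join two key-sorted lists of (key, payload); left keys are unique."""
    out, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        lk, rk = left[i][0], right[j][0]
        if lk < rk:
            i += 1
        elif lk > rk:
            j += 1
        else:
            out.append((lk, left[i][1], right[j][1]))
            j += 1  # left keys are unique, so only the right side advances
    return out


def late_query_join(left, right, split_key):
    """Late arrival attached at split_key: join 1 covers the shared suffix
    (keys >= split_key); join 2 covers the missed prefix (keys < split_key)."""
    shared = merge_join([t for t in left if t[0] >= split_key],
                        [t for t in right if t[0] >= split_key])
    missed = merge_join([t for t in left if t[0] < split_key],
                        [t for t in right if t[0] < split_key])
    return shared + missed
```

The list filters stand in for the two ordered page ranges. A real engine would ride along the ongoing scans for the suffix and issue a fresh scan for the prefix.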

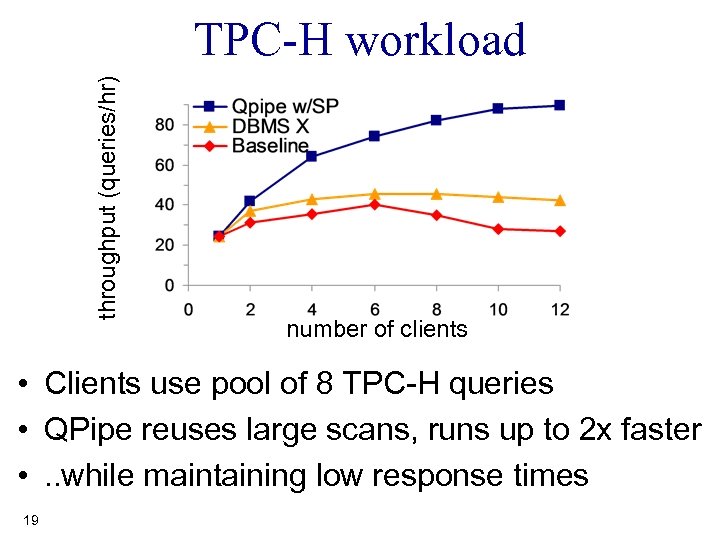

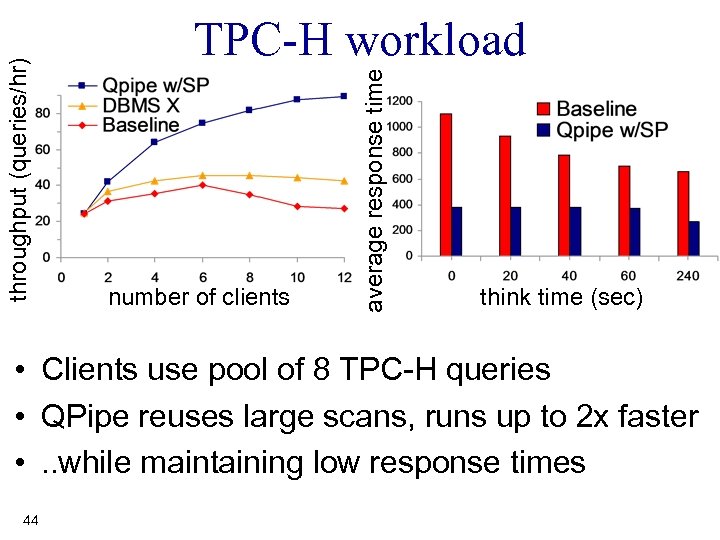

TPC-H workload. [Figure: throughput (queries/hr) vs. number of clients] • Clients use a pool of 8 TPC-H queries • QPipe reuses large scans and runs up to 2x faster • ...while maintaining low response times

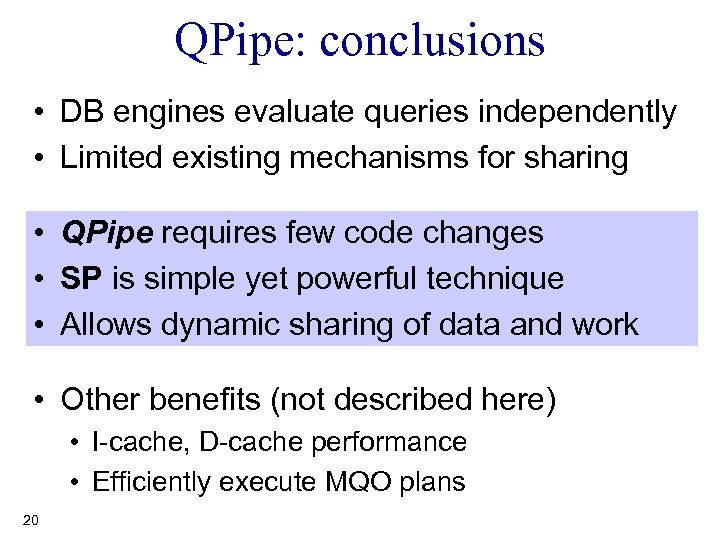

QPipe: conclusions • DB engines evaluate queries independently • Existing mechanisms for sharing are limited • QPipe requires few code changes • Simultaneous pipelining (SP) is a simple yet powerful technique • Allows dynamic sharing of data and work • Other benefits (not described here): I-cache and D-cache performance; efficient execution of MQO plans

Outline • Introduction • QPipe • STEPS (OLTP) • Conclusions

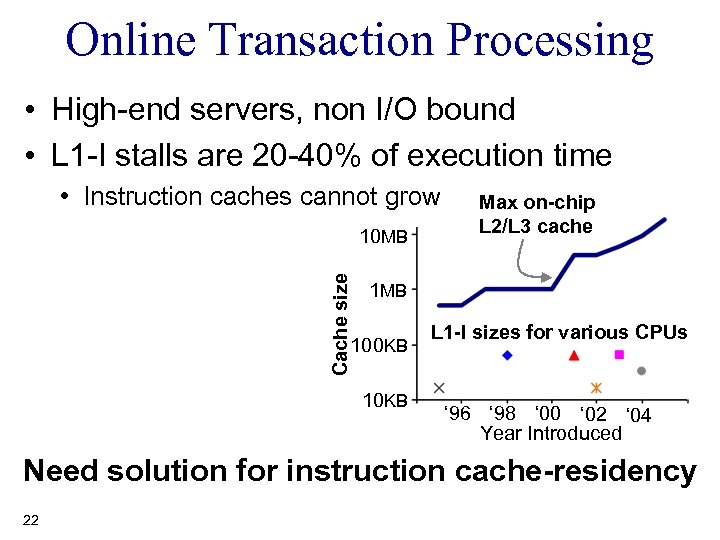

Online Transaction Processing • High-end servers, not I/O bound • L1-I stalls are 20-40% of execution time • Instruction caches cannot grow [Figure: cache size (10 KB to 10 MB) vs. year introduced ('96 to '04), comparing max on-chip L2/L3 cache with L1-I sizes for various CPUs] • Need a solution for instruction cache residency
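The stall figure implies an upper bound on the payoff. This is a back-of-the-envelope calculation, assuming L1-I stall cycles do not overlap with useful work and that removing them changes nothing else. If a fraction $f$ of execution time is L1-I stall time, eliminating all of it yields at most:

```latex
\text{speedup}_{\max} = \frac{1}{1 - f}, \qquad
f \in [0.20,\ 0.40] \;\Rightarrow\; \text{speedup}_{\max} \in \left[\tfrac{1}{0.8},\ \tfrac{1}{0.6}\right] \approx [1.25,\ 1.67]
```

The 1.4x speedup that STEPS reports on TPC-C falls inside this range.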

Related work • Hardware and compiler approaches • Increased block size, stream buffer [Ranganathan 98] • Code layout optimizations [Ramirez 01] • Database software approaches • Instruction cache for DSS [Padmanabhan 01][Zhou 04] • Instruction cache for OLTP: Challenging!

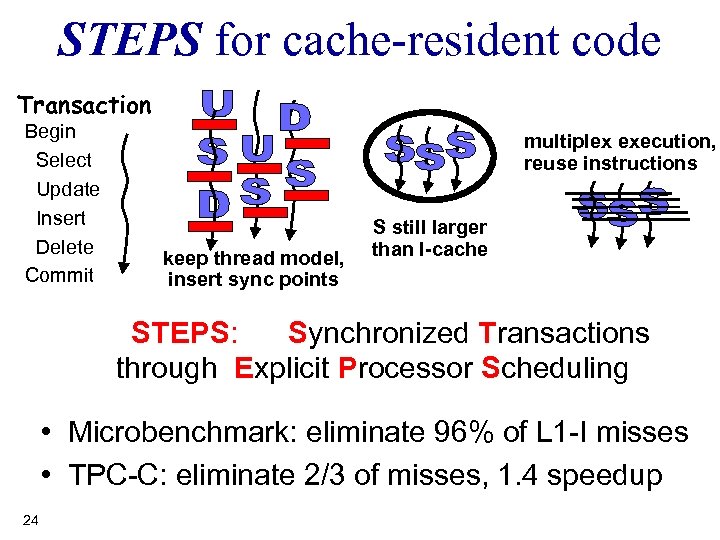

STEPS for cache-resident code. STEPS: Synchronized Transactions through Explicit Processor Scheduling. A transaction consists of operations (Begin, Select, Update, Insert, Delete, Commit), and even a single operation such as Select is still larger than the I-cache • Multiplex execution to reuse instructions across transactions • Keep the thread model; insert sync points • Microbenchmark: eliminates 96% of L1-I misses • TPC-C: eliminates 2/3 of misses, 1.4x speedup

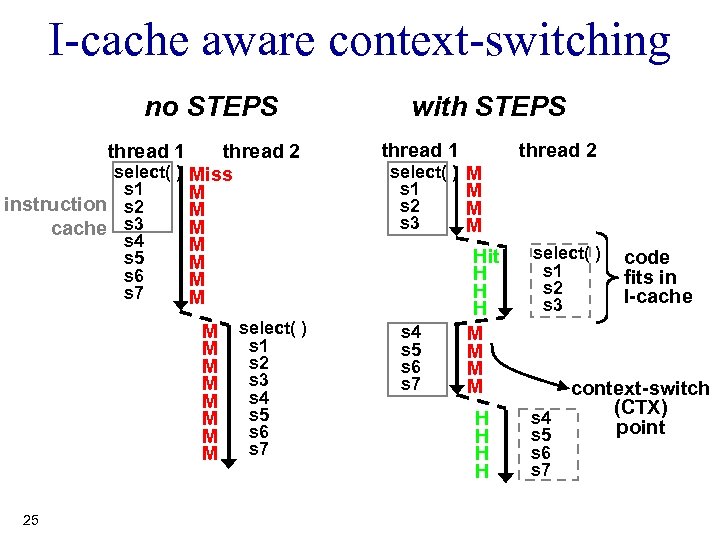

I-cache aware context-switching. Diagram: select() consists of code segments s1 to s7. Without STEPS, thread 1 runs all of select() and then thread 2 runs it, and every segment misses (M) in the instruction cache for both threads. With STEPS, thread 1 runs only as many segments as fit in the I-cache (s1 to s3) and then reaches a context-switch (CTX) point. Thread 2 then runs the same segments and hits (H) on the code thread 1 just brought in. The threads alternate through s4 to s7 the same way.
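The miss pattern in the diagram can be reproduced with a tiny simulator. It assumes a fully associative LRU instruction cache and a hypothetical select() of 7 segments × 4 cache lines (28 lines) against a 12-line cache. Without STEPS, each thread streams through more code than fits, so every line misses for every thread. With STEPS, team members take turns on cache-sized chunks, so only the first thread misses.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative instruction cache with LRU replacement (capacity in lines)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()
        self.misses = 0

    def access(self, line):
        if line in self.lines:
            self.lines.move_to_end(line)        # hit: mark most recently used
        else:
            self.misses += 1
            if len(self.lines) == self.capacity:
                self.lines.popitem(last=False)  # evict least recently used line
            self.lines[line] = True


def run_without_steps(code, threads, capacity):
    """Each thread executes the whole operation before the next thread starts."""
    cache = LRUCache(capacity)
    for _ in range(threads):
        for line in code:
            cache.access(line)
    return cache.misses


def run_with_steps(code, threads, capacity):
    """Threads take turns on cache-sized chunks, with a CTX at each chunk end."""
    cache = LRUCache(capacity)
    for start in range(0, len(code), capacity):
        chunk = code[start:start + capacity]
        for _ in range(threads):                # each team member runs the chunk in turn
            for line in chunk:
                cache.access(line)
    return cache.misses


CODE = list(range(28))  # hypothetical select(): 7 segments x 4 cache lines
```

With 4 threads, the baseline takes 112 misses and the STEPS schedule takes 28, so the extra threads add no misses in this idealized model. Real transactions do not follow identical code paths, so this model overstates the reduction.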

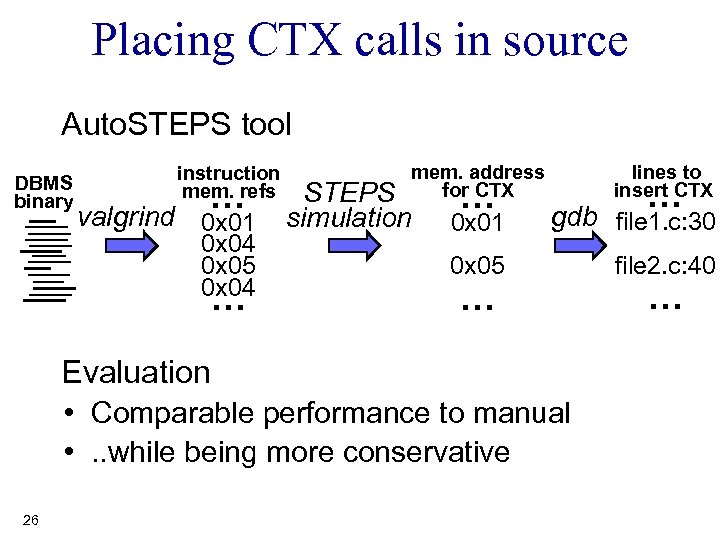

Placing CTX calls in source: the AutoSTEPS tool. Valgrind traces the instruction memory references of the DBMS binary (0x01, 0x04, 0x05, 0x04, …). A STEPS simulation selects the memory addresses for CTX (0x01, 0x05, …). Gdb maps those addresses to the source lines where CTX calls are inserted (file1.c:30, file2.c:40, …) • Evaluation: comparable performance to manual placement • ...while being more conservative
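A simplified version of the selection step is sketched below. It is not the real AutoSTEPS simulation; the greedy rule and the names `choose_ctx_points` and `to_source_lines` are our assumptions. The sketch walks an instruction-address trace and places a CTX before any instruction whose cache line would push the current chunk's code footprint past the I-cache capacity. A lookup table then stands in for gdb's address-to-line mapping.

```python
def choose_ctx_points(trace, cache_lines, line_bytes=64):
    """Return instruction addresses before which a CTX call is placed.

    A new chunk starts whenever the next cache line would push the current
    chunk's code footprint past the cache capacity (in lines).
    """
    ctx_points = []
    footprint = set()
    for addr in trace:
        line = addr // line_bytes
        if line not in footprint and len(footprint) == cache_lines:
            ctx_points.append(addr)
            footprint = set()
        footprint.add(line)
    return ctx_points


def to_source_lines(ctx_points, addr_to_line):
    """Map CTX addresses to source locations (the role gdb plays in AutoSTEPS)."""
    return [addr_to_line[a] for a in ctx_points]
```

For example, with a 2-line cache, the trace `[0, 64, 128, 192, 256, 0]` gets CTX points at addresses 128 and 256. The `addr_to_line` table passed to `to_source_lines` is made up for illustration.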

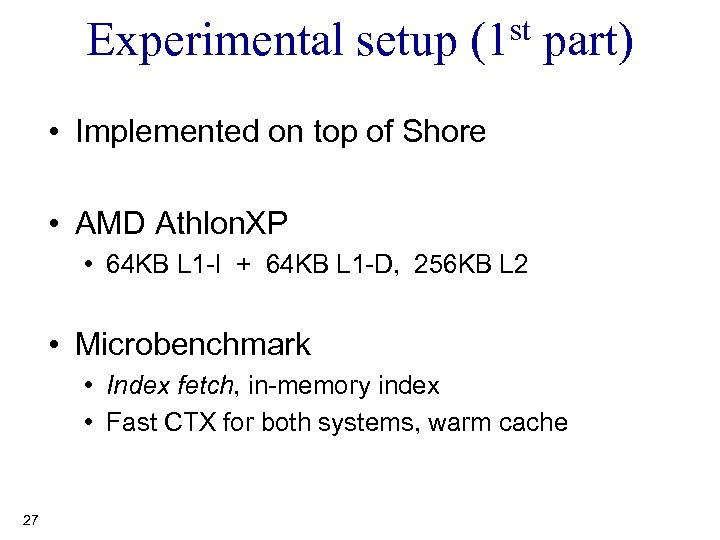

Experimental setup (1st part) • Implemented on top of Shore • AMD AthlonXP: 64 KB L1-I + 64 KB L1-D, 256 KB L2 • Microbenchmark: index fetch, in-memory index • Fast CTX for both systems, warm cache

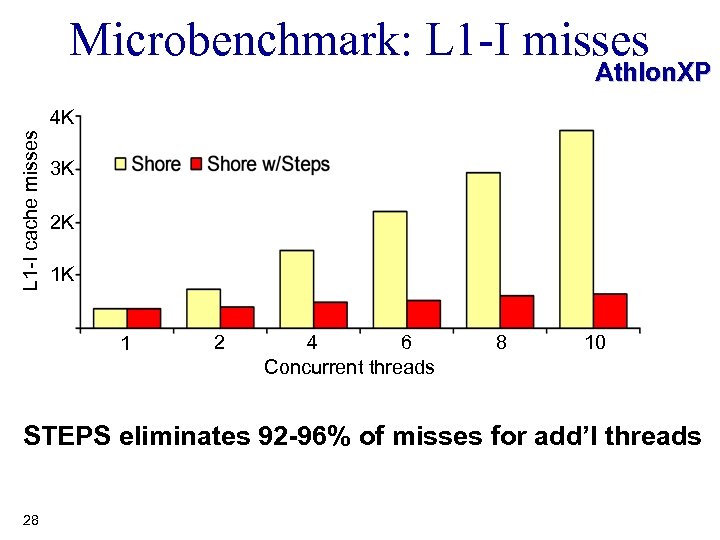

Microbenchmark: L1-I misses. [Figure: L1-I cache misses (up to 4K) vs. concurrent threads (1 to 10), AthlonXP] STEPS eliminates 92-96% of misses for additional threads

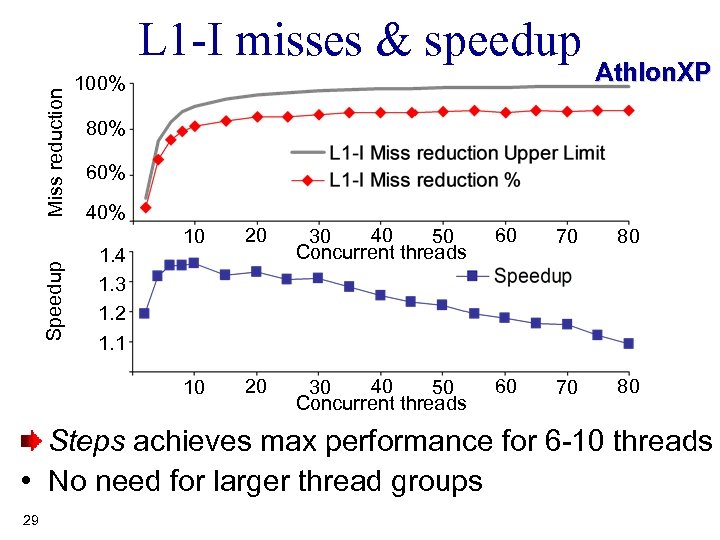

L1-I misses & speedup. [Figure: L1-I miss reduction (%) and speedup (1.1x to 1.4x) vs. concurrent threads, AthlonXP] STEPS achieves maximum performance for 6-10 threads • No need for larger thread groups

Challenges in full-system operation. So far: • Threads are interested in the same Op • Uninterrupted flow • No thread scheduler. Full-system requirements: • High concurrency on similar Ops • Handle exceptions (disk I/O, locks, latches, abort) • Co-exist with system threads (deadlock detection, buffer pool housekeeping)

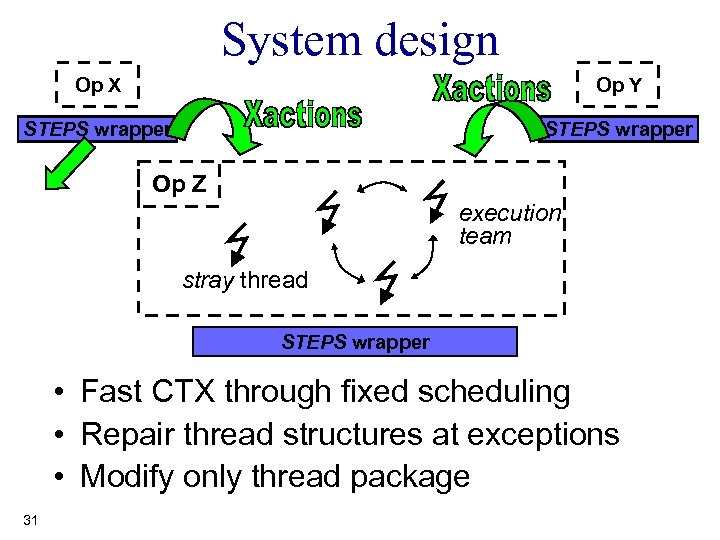

System design. Diagram: each Op (X, Y, Z) has a STEPS wrapper around an execution team of threads, and a stray thread leaves its team for another Op. STEPS wrapper: • Fast CTX through fixed scheduling • Repair thread structures at exceptions • Modify only the thread package
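The wrapper's two behaviors named on the slide, fixed scheduling and threads leaving the team, can be sketched as a small data structure. This is a hypothetical model, not Shore's thread package, and `ExecutionTeam` and its methods are illustrative names. Team members are context-switched in a fixed round-robin order with no scheduler decision. A thread that hits an exception becomes a stray and is removed from the rotation.

```python
from collections import deque


class ExecutionTeam:
    """Threads working on the same Op, context-switched in fixed round-robin order."""

    def __init__(self, op, threads):
        self.op = op
        self.members = deque(threads)

    def next_thread(self):
        """Fixed scheduling: rotate to the next team member (no scheduler decision)."""
        self.members.rotate(-1)
        return self.members[0]

    def make_stray(self, thread):
        """A thread that blocks (I/O, lock, latch, abort) or moves to another Op
        leaves the team and goes back to the regular thread scheduler."""
        self.members.remove(thread)
        return thread
```

Because the rotation order is fixed, a CTX only needs to pick the next deque entry. This is one way the slide's "fast CTX through fixed scheduling" could be realized.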

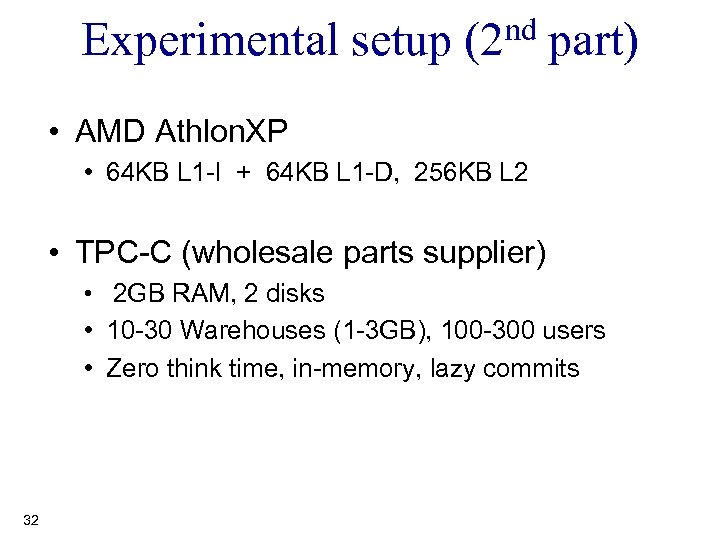

Experimental setup (2nd part) • AMD AthlonXP: 64 KB L1-I + 64 KB L1-D, 256 KB L2 • TPC-C (wholesale parts supplier) • 2 GB RAM, 2 disks • 10-30 warehouses (1-3 GB), 100-300 users • Zero think time, in-memory, lazy commits

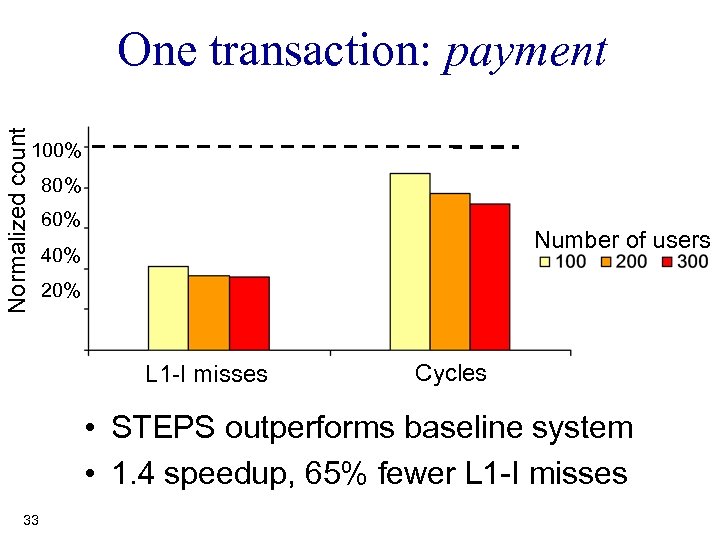

One transaction: Payment. [Figure: normalized L1-I misses and cycles vs. number of users] • STEPS outperforms the baseline system • 1.4x speedup, 65% fewer L1-I misses

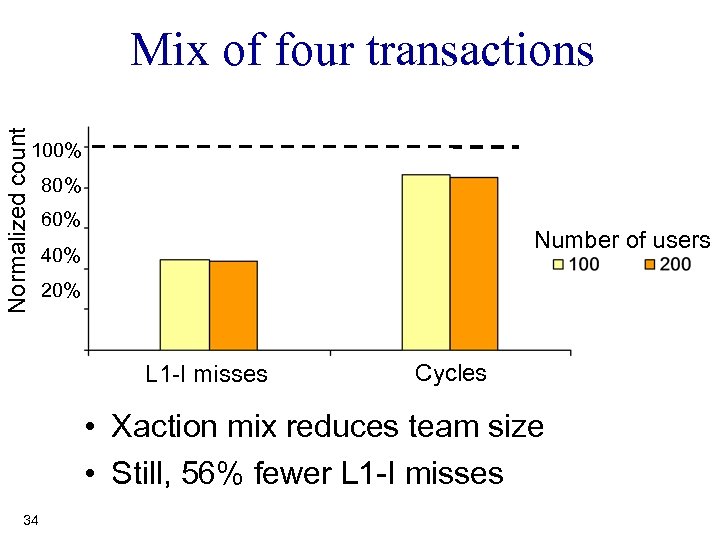

Mix of four transactions. [Figure: normalized L1-I misses and cycles vs. number of users] • The transaction mix reduces team size • Still, 56% fewer L1-I misses

STEPS: conclusions • STEPS can handle full OLTP workloads • Significant improvements in TPC-C • 65% fewer L1-I misses • 1.2x to 1.4x speedup • STEPS minimizes both capacity and conflict misses without increasing I-cache size or associativity

StagedDB: future work • Promising platform for chip multiprocessors • DBMSs suffer from CPU-to-CPU cache misses • StagedDB allows work to follow data, not the other way around! • Resource scheduling • Stages cluster requests for DB locks and I/O • Potential for deeper, more effective scheduling

Conclusions • New hardware, new requirements • Server core design remains the same • Need a new design to fit modern hardware • StagedDB optimizes all memory hierarchy levels • Promising design for future installations

Thank you. The speaker would like to thank his academic advisor Anastassia Ailamaki; his thesis committee members Panos K. Chrysanthis, Christos Faloutsos, Todd C. Mowry, and Michael Stonebraker; and his coauthors Kun Gao, Vladislav Shkapenyuk, and Ryan Williams.

QPipe backup

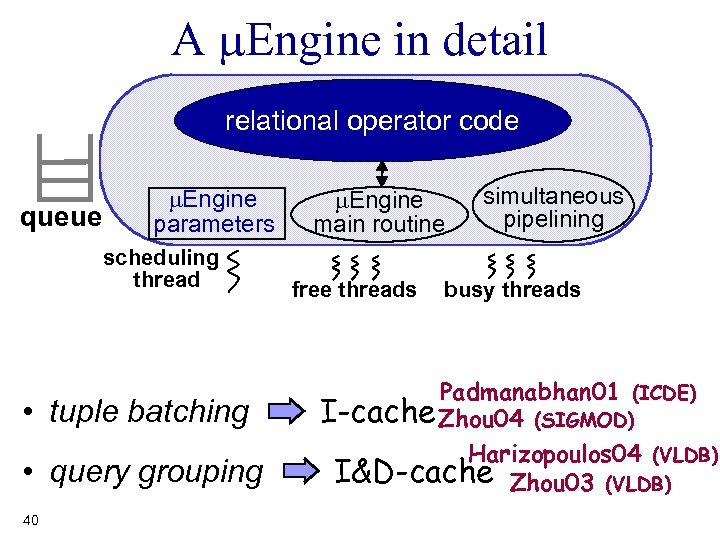

A μEngine in detail. Diagram: the μEngine main routine takes packets from its queue and runs the relational operator code on free threads (busy threads are already executing). A scheduling thread and simultaneous pipelining are also part of the μEngine. μEngine parameters: • tuple batching • query grouping. Related work labels on the slide: I-cache, I&D-cache; Padmanabhan 01 (ICDE), Zhou 04 (SIGMOD), Harizopoulos 04 (VLDB), Zhou 03 (VLDB).
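The slide lists "tuple batching" as a μEngine parameter without defining it. We assume it means a μEngine hands tuples to its parent in groups rather than one at a time, which amortizes the per-handoff synchronization cost. A minimal sketch of that assumption, with `batched_output` as a hypothetical name:

```python
from itertools import islice


def batched_output(tuples, batch_size):
    """Yield lists of up to batch_size tuples instead of one tuple at a time."""
    it = iter(tuples)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch
```

Passing 5 tuples with `batch_size=2` takes 3 handoffs instead of 5. Larger batches mean fewer handoffs but a longer wait before the parent sees its first tuple.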

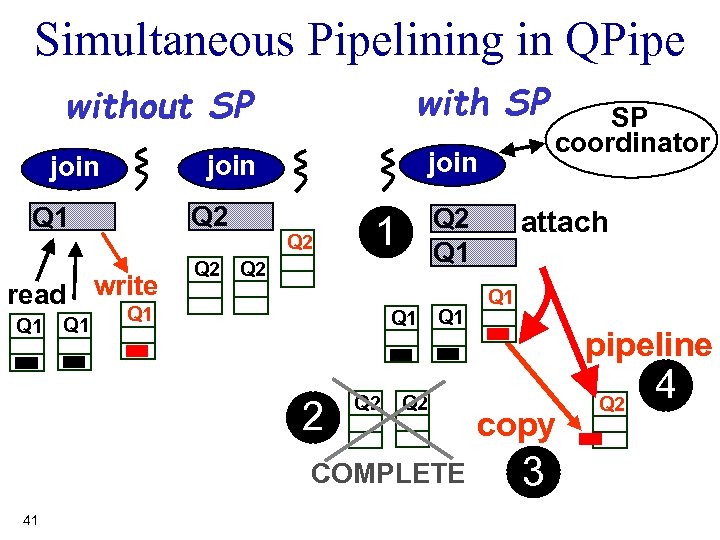

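Simultaneous Pipelining in QPipe. Diagram: without SP, Q1 and Q2 each run the join separately, each reading its inputs and writing its own results. With SP, the SP coordinator attaches Q2 to Q1's in-progress join pipeline, and each produced tuple is copied to both queries until they COMPLETE.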

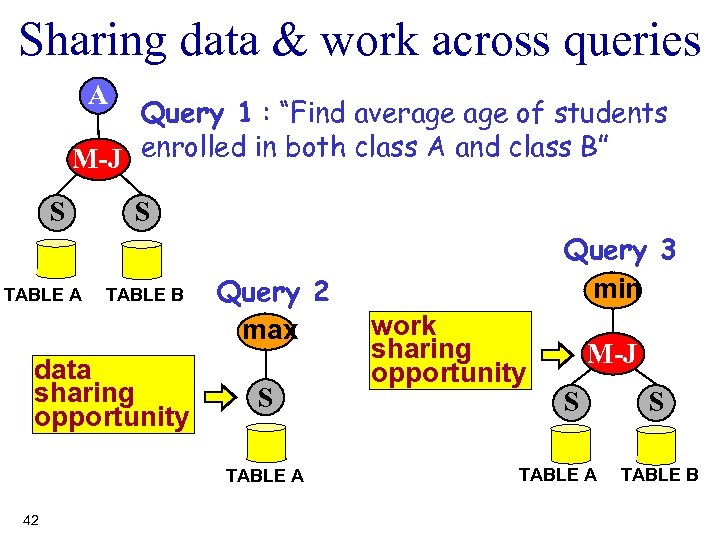

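Sharing data & work across queries. Query 1: “Find average of students enrolled in both class A and class B”, an aggregate (A) over a merge-join (M-J) of scans (S) on TABLE A and TABLE B. Query 2: max over a scan of TABLE A, a data sharing opportunity with Query 1. Query 3: min over the same merge-join of TABLE A and TABLE B, a work sharing opportunity with Query 1.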

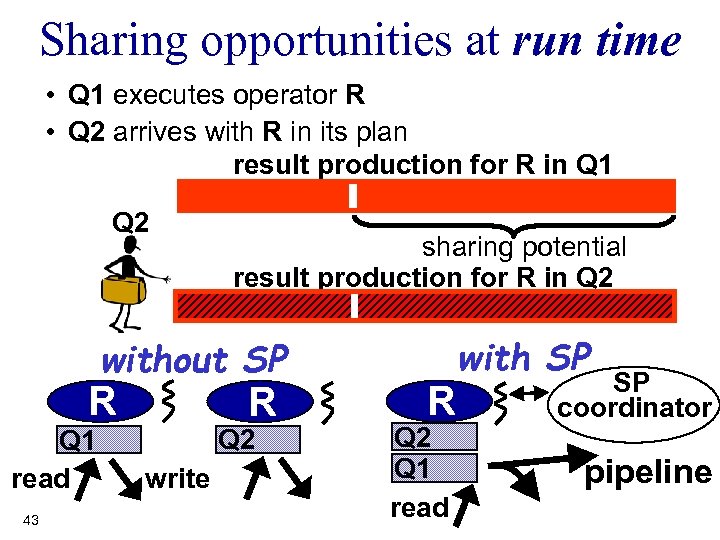

Sharing opportunities at run time • Q1 executes operator R • Q2 arrives with R in its plan. Diagram: timelines of result production for R in Q1 and in Q2 show the sharing potential. Without SP, Q1 and Q2 each read and write R's results separately. With SP, the SP coordinator pipelines R's results to both queries.
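How much of Q1's work Q2 can reuse depends on when Q2 arrives. The sketch below is a simplified model of our own, not QPipe's exact policy. It assumes a pipelined R emits output at a steady rate, so a late Q2 can only share what is still to come. A blocking R, such as a sort, emits nothing until the end, so Q2 shares all of it if it arrives before R finishes. `elapsed` is the fraction of Q1's run of R already completed.

```python
def shareable_fraction(elapsed, blocking):
    """Fraction of R's output Q2 can take from Q1's in-progress run of R.

    elapsed:  fraction of Q1's execution of R already done (0.0 to 1.0).
    blocking: True if R emits all output only at the very end (e.g. a sort).
    """
    if not 0.0 <= elapsed <= 1.0:
        raise ValueError("elapsed must be in [0, 1]")
    if elapsed == 1.0:
        return 0.0            # R already finished: nothing left to pipeline
    return 1.0 if blocking else 1.0 - elapsed
```

Under this model, a query arriving a quarter of the way through a pipelined scan reuses 75% of its output, but reuses all of a sort's output. Whatever it cannot share must be recomputed or fetched separately.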

TPC-H workload. [Figure: throughput (queries/hr) vs. number of clients; average response time vs. think time (sec)] • Clients use a pool of 8 TPC-H queries • QPipe reuses large scans and runs up to 2x faster • ...while maintaining low response times

STEPS backup

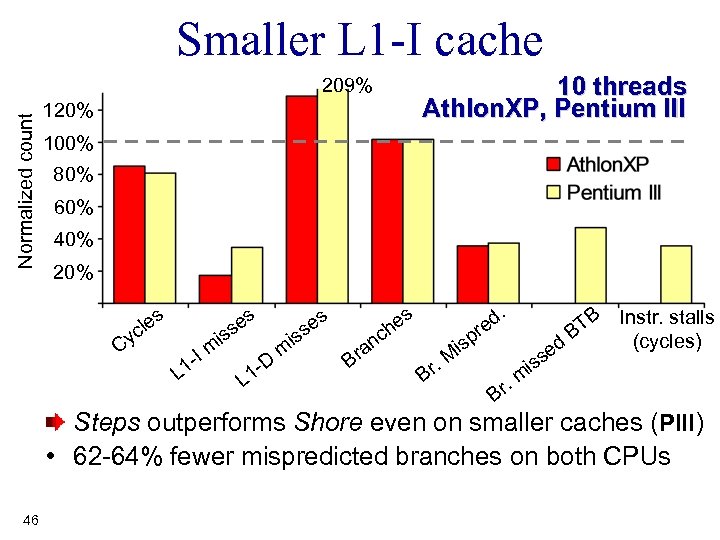

Smaller L1-I cache. [Figure: normalized counts with 10 threads on AthlonXP and Pentium III for cycles, L1-I misses, L1-D misses, branches, mispredicted branches, instruction stalls (cycles), and other counters; one bar reaches 209%] STEPS outperforms Shore even on smaller caches (PIII) • 62-64% fewer mispredicted branches on both CPUs

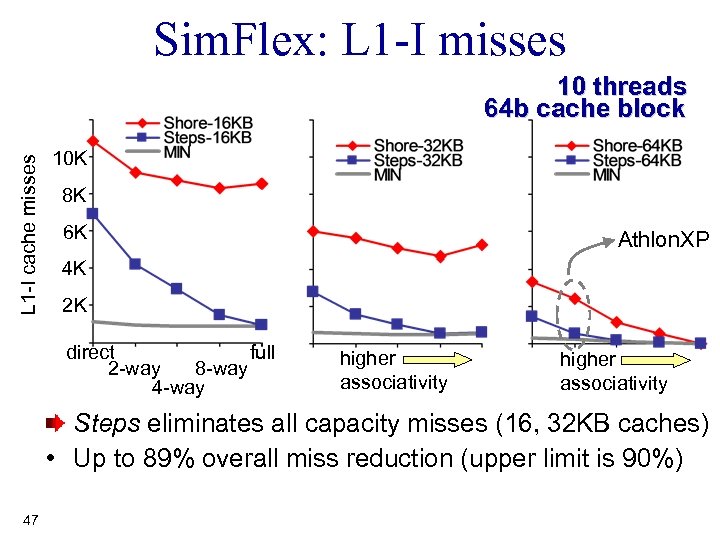

SimFlex: L1-I misses. [Figure: L1-I cache misses (up to 10K) vs. associativity (direct-mapped, 2-way, 4-way, 8-way, fully associative), 10 threads, 64-byte cache blocks, AthlonXP] STEPS eliminates all capacity misses (16 and 32 KB caches) • Up to 89% overall miss reduction (upper limit is 90%)

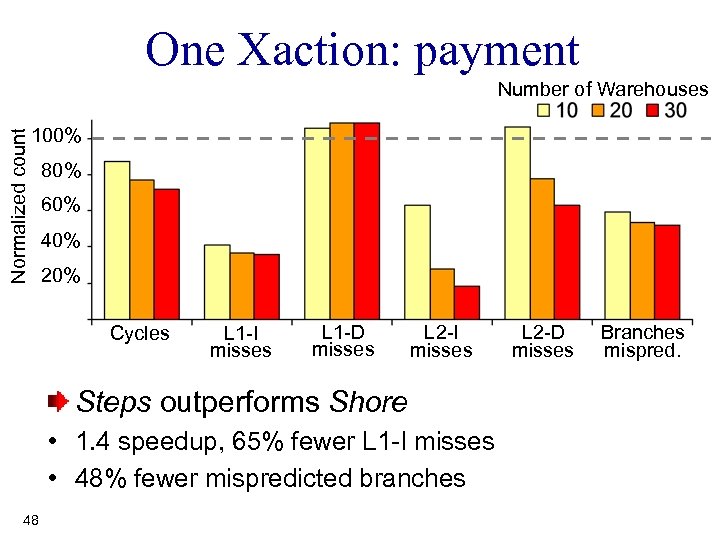

One transaction: Payment. [Figure: normalized counts vs. number of warehouses for cycles, L1-I misses, L1-D misses, L2-I misses, L2-D misses, and mispredicted branches] STEPS outperforms Shore • 1.4x speedup, 65% fewer L1-I misses • 48% fewer mispredicted branches

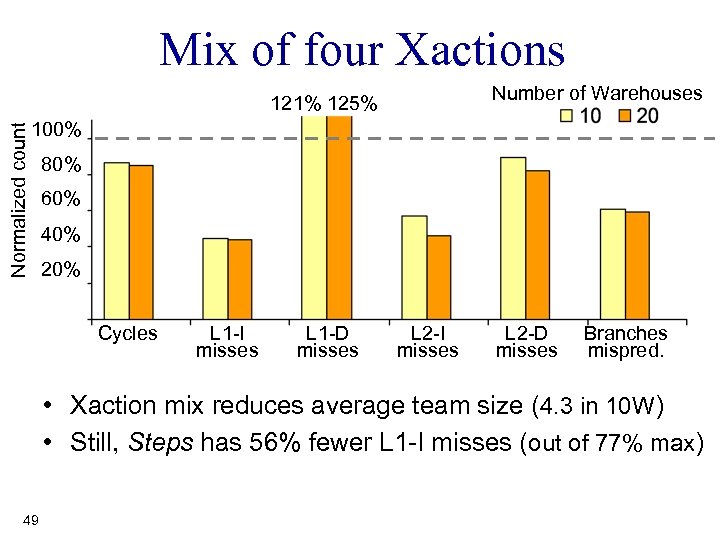

Mix of four transactions. [Figure: normalized counts (up to 125%) vs. number of warehouses for cycles, L1-I misses, L1-D misses, L2-I misses, L2-D misses, and mispredicted branches] • The transaction mix reduces the average team size (4.3 with 10 warehouses) • Still, STEPS has 56% fewer L1-I misses (out of a 77% maximum)
