b5b93b93d5cdcd1940d25485dbfea2a9.ppt

- Number of slides: 19

SRB @ CC-IN2P3

Jean-Yves Nief, CC-IN2P3

KEK-CCIN2P3 meeting on Grids, September 11th-12th, 2006

Overview.

- 3 SRB servers:
  - 1 Sun V440, 1 Sun V480 (UltraSPARC III), 1 Sun V20z (AMD Opteron).
  - OS: Solaris 9 and 10.
  - Total disk space: ~8 TB.
  - HPSS driver (non-DCE): 2003. Using HPSS 5.1.
- MCAT:
  - Oracle 10g.
- Environment with multiple OSes for clients or other SRB servers:
  - Linux: Red Hat, Scientific Linux, Debian.
  - Solaris.
  - Windows.
  - Mac OS.
- Interfaces:
  - Scommands invoked from the shell (scripts based on them).
  - Java APIs.
  - Perl APIs.
  - Web interface mySRB.
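As an illustration of "scripts based on the Scommands", here is a minimal Python sketch that builds and optionally runs Scommand invocations. It assumes only the basic positional forms `Sput <local files> <target collection>` and `Sget <SRB objects> <local target>`, with a session already opened by `Sinit`; no options are used, and the file and collection names are hypothetical.

```python
import shlex
import subprocess
from typing import List, Sequence

# Thin wrapper around the SRB Scommands. Only the basic positional forms are
# assumed here; options differ between SRB versions and are deliberately omitted.

def build_sput(local_files: Sequence[str], collection: str) -> List[str]:
    """Return the argv for uploading local files into an SRB collection."""
    if not local_files:
        raise ValueError("nothing to upload")
    return ["Sput", *local_files, collection]

def build_sget(objects: Sequence[str], local_target: str) -> List[str]:
    """Return the argv for downloading SRB objects to a local path."""
    if not objects:
        raise ValueError("nothing to download")
    return ["Sget", *objects, local_target]

def run(argv: List[str], dry_run: bool = True) -> str:
    """Return the command line; execute it only when dry_run is False.

    A real run assumes Sinit has already been called in this environment.
    """
    cmdline = shlex.join(argv)
    if not dry_run:
        subprocess.run(argv, check=True)
    return cmdline

if __name__ == "__main__":
    # Hypothetical file and collection names, for illustration only.
    print(run(build_sput(["run1.dat", "run2.dat"], "/home/user.domain/runs")))
```

Keeping the argv construction separate from execution makes the scripts easy to dry-run before touching the catalog.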

Who is using SRB @ CC-IN2P3? In green = pre-production.

- High Energy Physics:
  - BaBar (SLAC, Stanford).
  - CMOS (International Linear Collider R&D).
  - Calice (International Linear Collider R&D).
- Astroparticle:
  - Edelweiss (Modane, France).
  - Pierre Auger Observatory (Argentina).
- Astrophysics:
  - SuperNovae Factory (Hawaii).
- Biomedical applications:
  - Neuroscience research.
  - Mammography project.
  - Cardiology research.

BaBar, SLAC & CC-IN2P3.

- BaBar: High Energy Physics experiment located near Stanford (California).
- SLAC and CC-IN2P3 were the first sites opened to BaBar collaborators for data analysis.
- Both held complete copies of the data (Objectivity).
- Now only SLAC holds a complete copy of the data.
- Natural candidates for testing and deploying grid middleware.
- Data should be available within 24 to 48 hours.
- SRB: chosen to distribute hundreds of TBs of data.

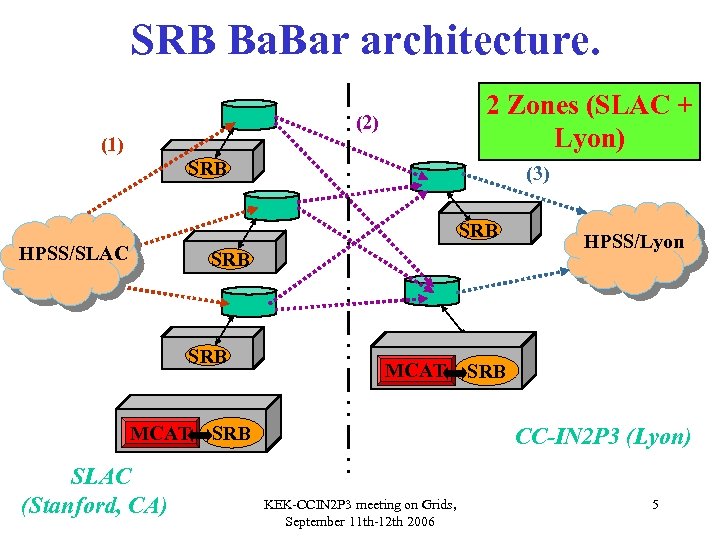

SRB BaBar architecture: 2 zones (SLAC + Lyon).

[Diagram: SLAC (Stanford, CA) zone with HPSS/SLAC, SRB servers and its MCAT; CC-IN2P3 (Lyon) zone with HPSS/Lyon, SRB servers and its MCAT; transfer steps labeled (1), (2), (3).]

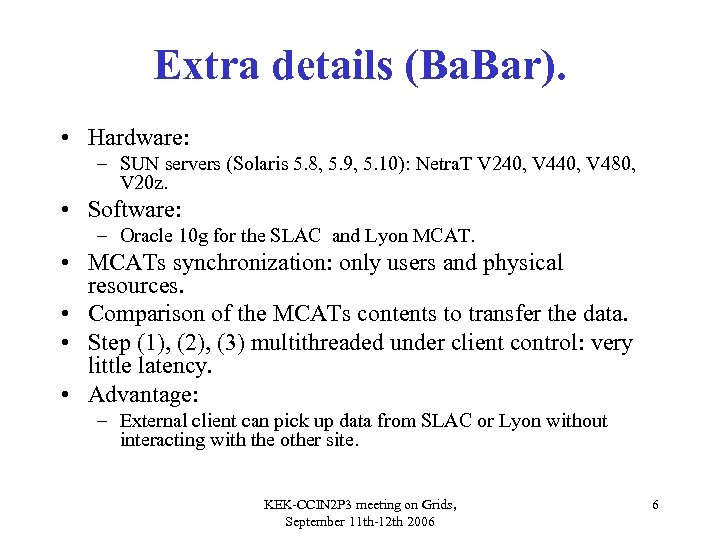

Extra details (BaBar).

- Hardware:
  - Sun servers (Solaris 5.8, 5.9, 5.10): Netra T V240, V480, V20z.
- Software:
  - Oracle 10g for the SLAC and Lyon MCATs.
- MCAT synchronization: only users and physical resources.
- The data to transfer is found by comparing the contents of the two MCATs.
- Steps (1), (2), (3) are multithreaded under client control: very little latency.
- Advantage:
  - External clients can pick up data from SLAC or Lyon without interacting with the other site.
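The two mechanisms above (comparing MCAT contents, then running the three transfer steps concurrently) can be sketched in Python. This is a hypothetical illustration, not the BaBar tooling: catalog snapshots are modeled as `{logical path: size}` dictionaries, and the mapping of steps (1), (2), (3) to "stage from SLAC tape", "WAN copy", "archive to Lyon tape" is an assumption, since the slide does not spell it out.

```python
import queue
import threading
from typing import Dict, List

# Assumed meaning of steps (1), (2), (3); the slide does not define them.
STAGES = ["stage_from_source_tape", "wan_copy", "archive_to_dest_tape"]

def files_to_transfer(source: Dict[str, int], target: Dict[str, int]) -> List[str]:
    """Objects missing from the target catalog, or registered with another size."""
    return sorted(p for p, size in source.items() if target.get(p) != size)

def run_pipeline(paths: List[str], workers_per_stage: int = 2) -> Dict[str, List[str]]:
    """Push each path through three threaded stages connected by queues."""
    queues = [queue.Queue() for _ in STAGES]
    history: Dict[str, List[str]] = {p: [] for p in paths}
    lock = threading.Lock()

    def worker(i: int) -> None:
        while True:
            item = queues[i].get()
            if item is None:               # sentinel: stop this worker
                queues[i].task_done()
                return
            # A real worker would invoke the SRB operation for STAGES[i] here.
            with lock:
                history[item].append(STAGES[i])
            if i + 1 < len(STAGES):
                queues[i + 1].put(item)    # hand over before marking done
            queues[i].task_done()

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(len(STAGES)) for _ in range(workers_per_stage)]
    for t in threads:
        t.start()
    for p in paths:
        queues[0].put(p)
    for i in range(len(STAGES)):
        queues[i].join()                   # every item has left stage i
        for _ in range(workers_per_stage):
            queues[i].put(None)
    for t in threads:
        t.join()
    return history

if __name__ == "__main__":
    slac = {"/babar/run1": 100, "/babar/run2": 200, "/babar/run3": 300}
    lyon = {"/babar/run1": 100, "/babar/run2": 150}
    todo = files_to_transfer(slac, lyon)   # run2 (size differs), run3 (missing)
    print(run_pipeline(todo))
```

Because each stage has its own worker pool, a file can be on the WAN while the next one is still being staged from tape, which is the kind of overlap that keeps latency low.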

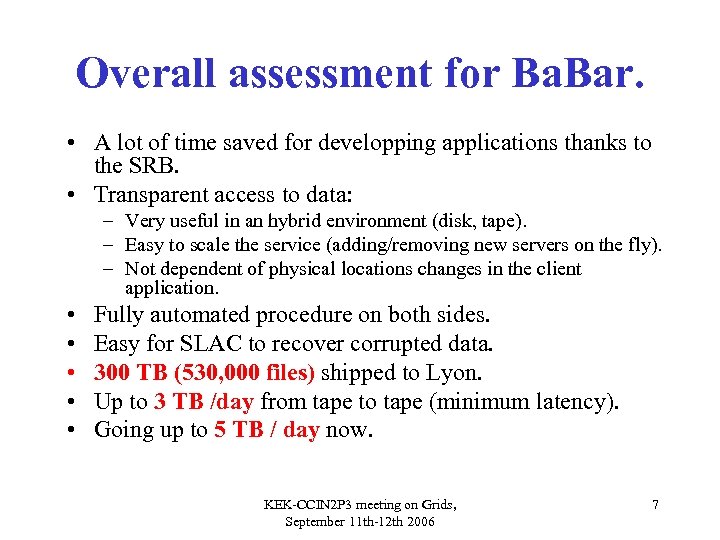

Overall assessment for BaBar.

- A lot of application development time saved thanks to the SRB.
- Transparent access to data:
  - Very useful in a hybrid environment (disk, tape).
  - Easy to scale the service (adding/removing servers on the fly).
  - Client applications are not affected by changes in physical location.
- Fully automated procedure on both sides.
- Easy for SLAC to recover corrupted data.
- 300 TB (530,000 files) shipped to Lyon.
- Up to 3 TB/day from tape to tape (minimum latency). Going up to 5 TB/day now.
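To put the figures above in perspective, the short Python calculation below converts them into sustained rates and an average file size. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes); with binary units the numbers shift slightly.

```python
# Back-of-the-envelope figures from the BaBar numbers, assuming decimal units.
TB = 10**12
MB = 10**6
SECONDS_PER_DAY = 86_400

def sustained_rate_mb_s(tb_per_day: float) -> float:
    """Average throughput in MB/s needed to move tb_per_day in one day."""
    return tb_per_day * TB / SECONDS_PER_DAY / MB

def mean_file_size_mb(total_tb: float, n_files: int) -> float:
    """Average file size in MB for a volume split into n_files."""
    return total_tb * TB / n_files / MB

print(f"3 TB/day ~ {sustained_rate_mb_s(3):.1f} MB/s")
print(f"5 TB/day ~ {sustained_rate_mb_s(5):.1f} MB/s")
print(f"300 TB / 530,000 files ~ {mean_file_size_mb(300, 530_000):.0f} MB per file")
print(f"300 TB at 3 TB/day ~ {300 / 3:.0f} days")
```

This gives about 34.7 MB/s for 3 TB/day, 57.9 MB/s for 5 TB/day, and an average file size of roughly 566 MB.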

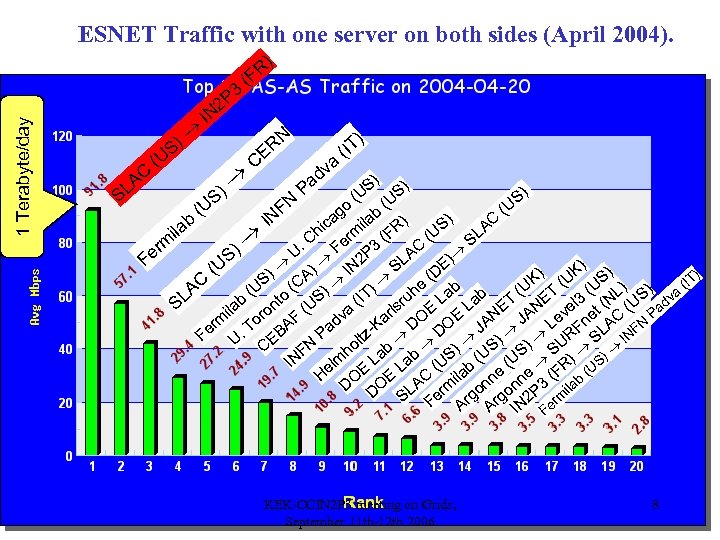

ESnet traffic with one server on both sides (April 2004).

[Chart: ESnet traffic by site pair, with a 1 terabyte/day scale marker; the first pair shown is SLAC (US) to IN2P3 (FR).]

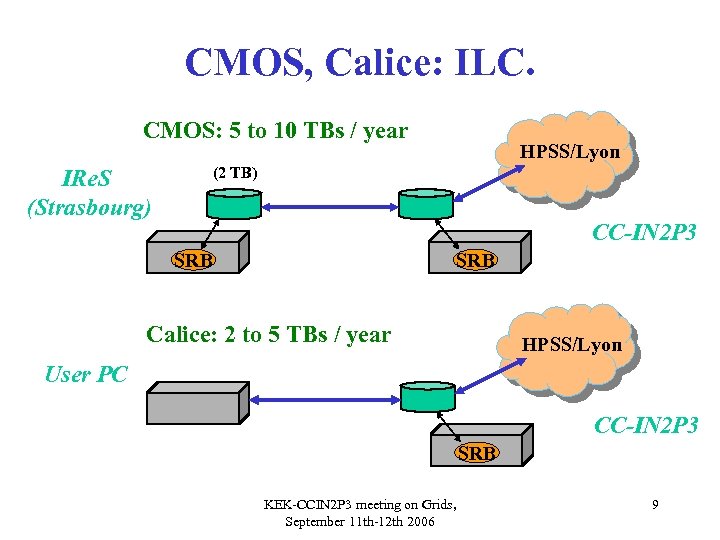

CMOS, Calice: ILC.

[Diagram, CMOS: 5 to 10 TB/year; IReS (Strasbourg), CC-IN2P3 SRB, HPSS/Lyon (2 TB).]

[Diagram, Calice: 2 to 5 TB/year; user PC, CC-IN2P3 SRB, HPSS/Lyon.]

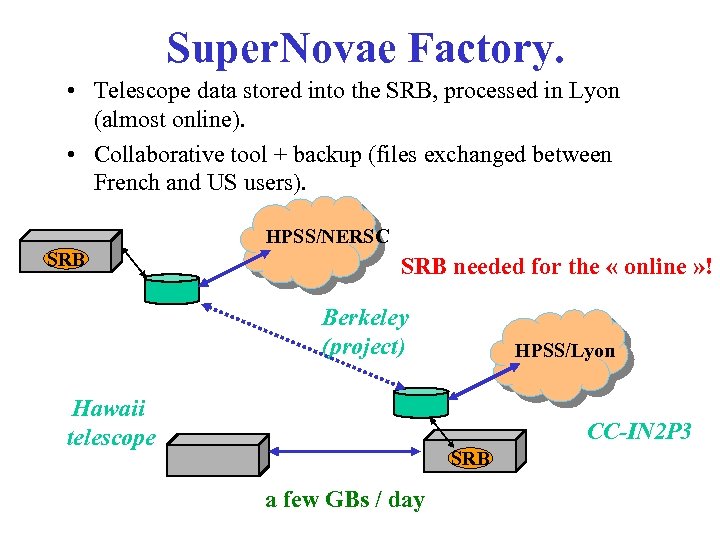

SuperNovae Factory.

- Telescope data stored in the SRB and processed in Lyon (almost online).
- Collaborative tool + backup (files exchanged between French and US users).
- SRB needed for the « online »!

[Diagram: Hawaii telescope; Berkeley (project) with HPSS/NERSC; CC-IN2P3 SRB with HPSS/Lyon; a few GB/day.]

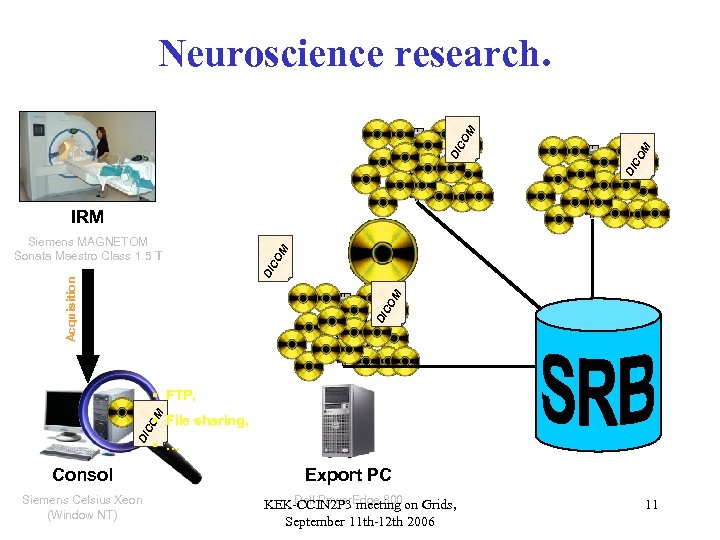

Neuroscience research.

[Diagram: DICOM acquisition chain; Siemens MAGNETOM Sonata Maestro Class 1.5 T MRI scanner; MRI console Siemens Celsius Xeon (Windows NT); DICOM export via file sharing, FTP, …; export PC Dell PowerEdge 800.]

Neuroscience research (II).

- Goal: make SRB invisible to the end user.
- More than 500,000 files registered.
- Data pushed from the Lyon and Strasbourg hospitals:
  - Automated procedure including anonymization.
- Now interfaced with the MATLAB environment.
- ~1.5 FTE for 6 months…
- Next step:
  - Ever-growing community (a few TB/year).
- Goal:
  - Join BIRN (US biomedical network).
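The anonymization step in the automated push can be pictured with the Python sketch below. It is hypothetical: a DICOM header is modeled as a plain dictionary keyed by DICOM attribute keywords, direct identifiers are dropped, and `PatientID` is replaced by a keyed hash so that the same patient always gets the same pseudonym. The attribute list is illustrative and is not a complete de-identification profile.

```python
import hashlib
import hmac
from typing import Dict

# Illustrative subset of identifying DICOM attributes; NOT a complete profile.
DIRECT_IDENTIFIERS = {"PatientName", "PatientBirthDate", "PatientAddress",
                      "ReferringPhysicianName", "InstitutionName"}

def pseudonym(patient_id: str, secret: bytes) -> str:
    """Stable pseudonym: the same patient and secret always give the same token."""
    digest = hmac.new(secret, patient_id.encode("utf-8"), hashlib.sha256)
    return "ANON-" + digest.hexdigest()[:16]

def anonymize(header: Dict[str, str], secret: bytes) -> Dict[str, str]:
    """Return a copy without direct identifiers and with a pseudonymous PatientID."""
    out = {k: v for k, v in header.items() if k not in DIRECT_IDENTIFIERS}
    if "PatientID" in out:
        out["PatientID"] = pseudonym(out["PatientID"], secret)
    return out

if __name__ == "__main__":
    header = {"PatientName": "DOE^JOHN", "PatientID": "12345", "Modality": "MR"}
    print(anonymize(header, secret=b"site-secret"))  # hypothetical secret
```

A keyed hash (rather than a plain hash) keeps pseudonyms stable across pushes while preventing anyone without the secret from recomputing them from known patient IDs.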

Mammography.

- Database of X-ray pictures (Florida) stored in SRB:
  - Reference pictures of various types of breast cancer.
- Analyze an X-ray picture of a breast:
  - Submit a job in the EGEE framework.
- Compare it with the pictures in the reference database:
  - Picked up from the SRB.

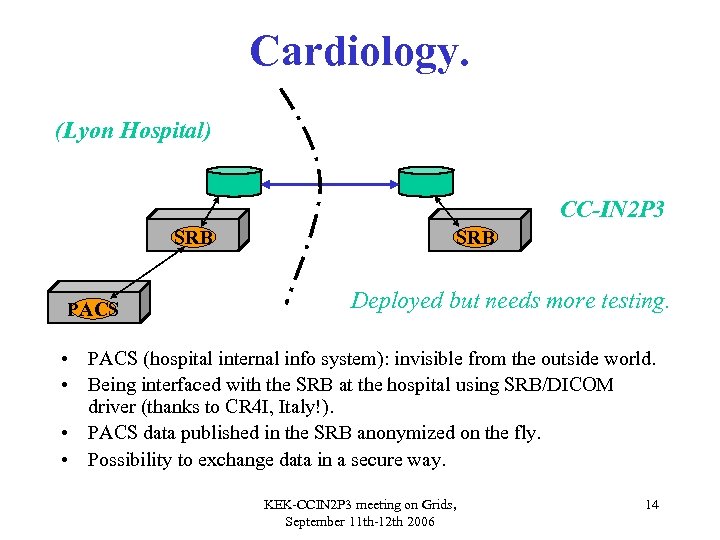

Cardiology.

[Diagram: PACS and SRB at the Lyon hospital, connected to the CC-IN2P3 SRB.]

Deployed but needs more testing.

- PACS (hospital internal information system): invisible from the outside world.
- Being interfaced with the SRB at the hospital using the SRB/DICOM driver (thanks to CR4I, Italy!).
- PACS data published in the SRB are anonymized on the fly.
- Possibility to exchange data securely.

GGF Data Grid Interoperability Demonstration.

- Goals:
  - Demonstrate federation of 14 SRB data grids (shared name spaces).
  - Demonstrate authentication, authorization, shared collections, remote data access.
  - CC-IN2P3 is part of it.
- Organizers:
  - Erwin Laure (Erwin.Laure@cern.ch)
  - Reagan Moore (moore@sdsc.edu)
  - Arun Jagatheesan (arun@sdsc.edu)
  - Sheau-Yen Chen (sheauc@sdsc.edu)
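A shared name space across federated grids means a client can tell from a logical path which zone (and which MCAT) owns it. The Python sketch below assumes, as in SRB zone federation, that the first path component names the zone; the zone table and server host names are hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical zone table: zone name -> server holding that zone's catalog.
ZONES = {"ccin2p3": "srb.lyon.example", "slac": "srb.slac.example"}

def resolve(logical_path: str, zones: Dict[str, str]) -> Tuple[str, str]:
    """Return (zone, server) for a path whose first component names the zone."""
    parts = [p for p in logical_path.split("/") if p]
    if not parts:
        raise ValueError("empty logical path")
    zone = parts[0]
    if zone not in zones:
        raise KeyError(f"unknown zone: {zone}")
    return zone, zones[zone]

if __name__ == "__main__":
    print(resolve("/slac/home/babar.slac/run1", ZONES))
```

In a real federation the zone table itself lives in each MCAT; the point here is only that routing follows from the path, so users see one name space.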

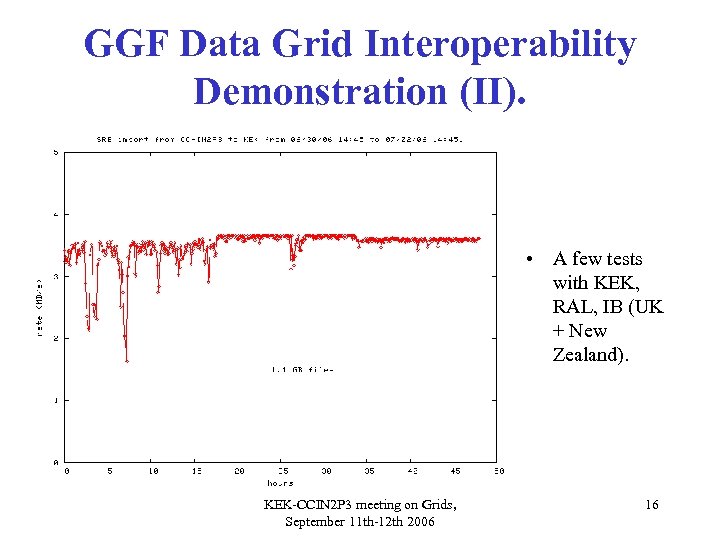

GGF Data Grid Interoperability Demonstration (II).

- A few tests with KEK, RAL, IB (UK + New Zealand).

Summary.

- Lightweight administration for the entire system.
- Fully automated monitoring of the system health.
- For each project:
  - Training of the project's administrator(s).
  - Proposing the architecture.
  - User support and « consulting » on SRB.
- Different projects, different needs: various aspects of SRB are used.
- Over 1 million files in some catalogs very soon.
- More projects coming to SRB:
  - Auger: CC-IN2P3 as Tier 0, import from Argentina, distribution of real data and simulation.
  - 1 MegaStar project (EROS, astro): usage of the HDF5 driver?
  - BioEmergence.
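A very small building block for automated health monitoring is a reachability probe on each SRB server. The Python sketch below only checks that a TCP connection can be opened; it does not speak the SRB protocol. Host names are hypothetical, and port 5544 is assumed as the SRB server port, to be adjusted to the local configuration.

```python
import socket
from typing import Dict, Iterable, Tuple

def check_servers(endpoints: Iterable[Tuple[str, int]], timeout: float = 3.0) -> Dict[str, bool]:
    """Map 'host:port' to True if a TCP connection succeeds within timeout."""
    status: Dict[str, bool] = {}
    for host, port in endpoints:
        key = f"{host}:{port}"
        try:
            with socket.create_connection((host, port), timeout=timeout):
                status[key] = True
        except OSError:                  # refused, timed out, or unresolvable
            status[key] = False
    return status

if __name__ == "__main__":
    # Hypothetical servers; 5544 is an assumed SRB port.
    for key, ok in check_servers([("srb1.lyon.example", 5544),
                                  ("srb2.lyon.example", 5544)]).items():
        print(key, "OK" if ok else "DOWN")
```

Run periodically (e.g. from cron), such a probe can raise an alert before users notice; a fuller check would also query the MCAT.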

What's next?

- Monitoring tools of the SRB systems are needed for users (like the ones Adil and Roger Downing developed for CCLRC).
- Build with Adil some kind of European forum on SRB:
  - Already contacts in Italy, the Netherlands, Germany.
  - Gather everybody's experience on SRB.
  - Pool the tools and scripts developed.
  - Adil will host the first meeting in the UK. Big party in his new apartment: everybody welcome!
- SRB-DSI.

Involvement in iRODS.

- Many possibilities, some examples:
  - Interface with MSS:
    - HPSS driver.
    - Improvement of compound resources (rules for migration, etc.).
    - Mixing compound and logical resources.
    - Containers (see Adil @ CCLRC).
  - Optimization of the transfer protocol over long-distance networks compared with SRB (?).
  - Database performance (RCAT, DAI).
  - Improvement of data encryption services.
  - Web interface (PHP?).
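To make "rules for migration" in compound (disk cache + tape) resources concrete, here is a hypothetical Python sketch of one common policy: migrate files to tape once they are old enough, and when the disk cache exceeds a high watermark, purge the oldest files that already have a tape copy until usage drops below a low watermark. The thresholds and the `CachedFile` fields are assumptions for illustration, not SRB or iRODS behavior.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class CachedFile:
    path: str
    size: int          # bytes on the disk cache
    age_days: float    # time since last access
    on_tape: bool      # already has a replica on the tape resource

def plan(files: List[CachedFile], capacity: int, high_water: float = 0.9,
         low_water: float = 0.7, min_age_days: float = 7.0) -> Tuple[List[str], List[str]]:
    """Return (paths to migrate to tape, paths safe to purge from disk)."""
    to_migrate = [f.path for f in files if not f.on_tape and f.age_days >= min_age_days]
    used = sum(f.size for f in files)
    to_purge: List[str] = []
    if used > high_water * capacity:
        # Oldest first; never purge a file that has no tape replica yet.
        for f in sorted(files, key=lambda f: f.age_days, reverse=True):
            if used <= low_water * capacity:
                break
            if f.on_tape:
                to_purge.append(f.path)
                used -= f.size
    return to_migrate, to_purge

if __name__ == "__main__":
    cache = [CachedFile("/a", 40, 30, True), CachedFile("/b", 30, 10, True),
             CachedFile("/c", 25, 8, False)]
    print(plan(cache, capacity=100))
```

Using two watermarks avoids thrashing: purging stops well below the trigger level, so the rule does not fire again on every new file.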
