e3948d57a30d99882e30deabb4e772fe.ppt

- Number of slides: 20

SPUD: A Distributed High-Performance Publish-Subscribe Cluster

Uriel Peled and Tal Kol. Guided by Edward Bortnikov. Software Systems Laboratory, Faculty of Electrical Engineering, Technion.

Project Goal
- Design and implement a general-purpose publish-subscribe server
- Push traditional implementations to meet global-scale performance demands:
  - 1 million concurrent clients
  - Millions of concurrent topics
  - High transaction rate
- Demonstrate the server's abilities with a fun client application

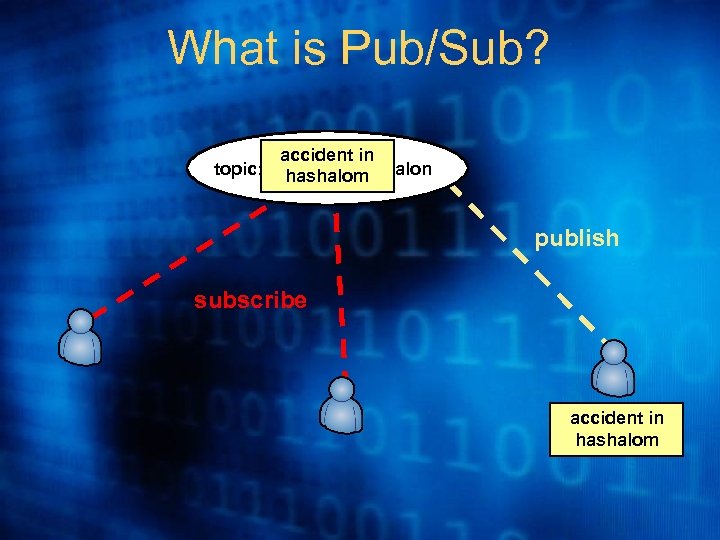

What Is Pub/Sub?
- Diagram: a publisher publishes "accident in hashalom" to the topic //traffic-jams/ayalon, and every subscriber to that topic receives "accident in hashalom".
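To make the model concrete, here is a minimal in-process sketch of topic-based publish-subscribe, using the traffic example from the diagram. It is a single-process illustration, not SPUD's distributed implementation; the names `Broker`, `subscribe`, and `publish` are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A subscriber callback receives (topic, message).
Callback = Callable[[str, str], None]


class Broker:
    """Hypothetical in-memory broker: maps each topic to its subscriber callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: str) -> int:
        """Deliver `message` to every subscriber of `topic`; return how many got it."""
        # .get() avoids creating an empty entry for topics nobody subscribed to.
        subscribers = list(self._subscribers.get(topic, []))
        for callback in subscribers:
            callback(topic, message)
        return len(subscribers)
```

Publishers and subscribers never reference each other directly; they only share the topic name, which is what lets a server (or a cluster) sit in between.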

What Can We Do With It?
- Collaborative web browsing

What Can We Do With It?
- Instant messaging ("Hi buddy!")

Seems Easy to Implement, But…
- “I’m behind a NAT, I can’t connect!”: not all client setups are server-friendly
- “Server is too busy, try again later?!”: 1 million concurrent clients is simply too much
- “The server is so slow!!!”: service time grows exponentially with load
- “A server crashed, everything is lost!”: single points of failure will eventually fail

Naïve Implementation (Example 1)
- Simple UDP for client-server communication
- No need for sessions, since we only send messages
- Very low cost per client
- Sounds perfect? Then comes NAT.

NAT Traversal
- UDP hole punching
  - The NAT accepts a UDP reply only for a short window (our measurements: 15 to 30 seconds)
  - Keep UDP-pinging from each client every 15 s
- Days-long TCP sessions
  - The NAT remembers current sessions for replies
  - If the WWW works, we should work
  - Dramatically increases cost per client
- Our research: all IMs do exactly this
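The UDP hole-punching keepalive can be sketched as a loop that re-sends a small datagram from the same socket before the NAT mapping expires. This is a sketch under assumptions: the `PING` payload, the function name, and the `max_pings` parameter are hypothetical; only the 15 s interval comes from the slide.

```python
import socket
import threading
from typing import Optional, Tuple

# From the slide: the shortest observed NAT UDP window was about 15 s.
KEEPALIVE_INTERVAL_S = 15.0
PING = b"PING"  # hypothetical keepalive payload


def send_keepalives(
    sock: socket.socket,
    server_addr: Tuple[str, int],
    stop: threading.Event,
    interval: float = KEEPALIVE_INTERVAL_S,
    max_pings: Optional[int] = None,
) -> int:
    """Ping the server from `sock` until `stop` is set (or `max_pings` is reached).

    Sending from the same socket keeps the same NAT mapping alive, so the
    server can keep replying to the client's public address and port.
    Returns the number of pings sent.
    """
    sent = 0
    while not stop.is_set() and (max_pings is None or sent < max_pings):
        sock.sendto(PING, server_addr)
        sent += 1
        # Sleeps for `interval` seconds, or returns early once `stop` is set.
        stop.wait(interval)
    return sent
```

In a real client this would run on a background thread for the lifetime of the session; the TCP alternative trades this periodic traffic for a permanently open connection per client.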

Naïve Implementation (Example 2)
- Blocking I/O with one thread per client
  - Basic model for most servers (Java default)
  - Traditional UNIX: fork for every client
- Sounds perfect? Try 500 clients.

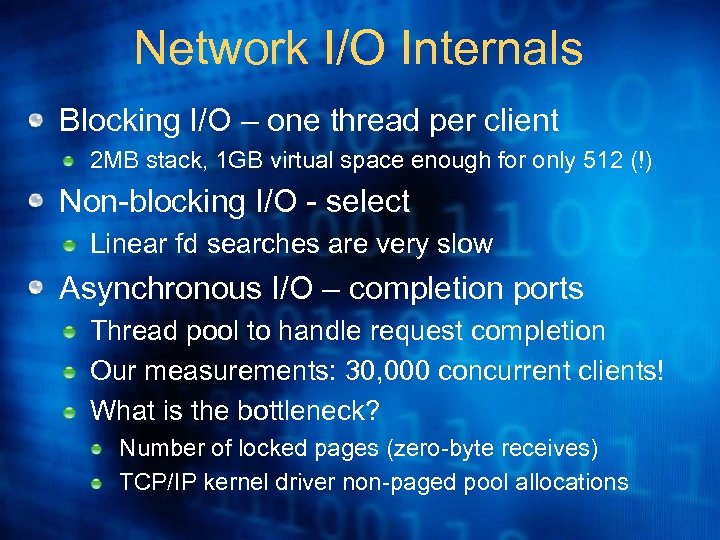

Network I/O Internals
- Blocking I/O: one thread per client
  - 2 MB stack per thread, so 1 GB of virtual address space is enough for only 512 threads (!)
- Non-blocking I/O: select
  - Linear fd searches are very slow
- Asynchronous I/O: completion ports
  - A thread pool handles request completion
  - Our measurements: 30,000 concurrent clients!
- What is the bottleneck?
  - Number of locked pages (addressed with zero-byte receives)
  - Non-paged pool allocations in the TCP/IP kernel driver
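The 512-thread ceiling for blocking I/O follows from dividing the address space by the per-thread stack reservation:

$$
\frac{1\ \text{GB}}{2\ \text{MB/thread}} = \frac{1024\ \text{MB}}{2\ \text{MB/thread}} = 512\ \text{threads}
$$

This ignores heap, code, and other mappings that share the same address space, so the practical limit is somewhat lower. With completion ports the thread count no longer grows with the client count, which is why the measured ceiling moves to tens of thousands of clients and the bottleneck shifts to kernel resources (locked pages, non-paged pool).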

Scalability
- Scale up: buy a bigger box
- Scale out: buy more boxes
- Which one to do? Both!
  - Push each box to its hardware maximum (thousands of servers is impractical)
  - Add relevant boxes as load increases
  - The Google way (cheap PC server farms)

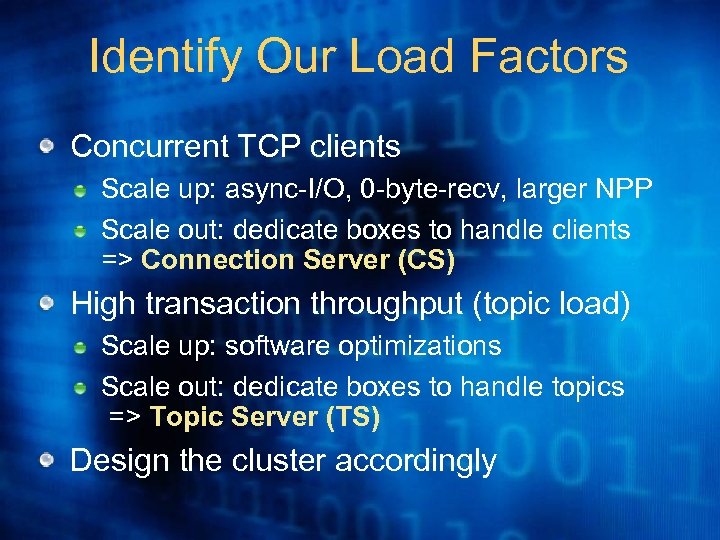

Identify Our Load Factors
- Concurrent TCP clients
  - Scale up: async I/O, zero-byte receives, larger non-paged pool (NPP)
  - Scale out: dedicate boxes to handling clients => Connection Server (CS)
- High transaction throughput (topic load)
  - Scale up: software optimizations
  - Scale out: dedicate boxes to handling topics => Topic Server (TS)
- Design the cluster accordingly

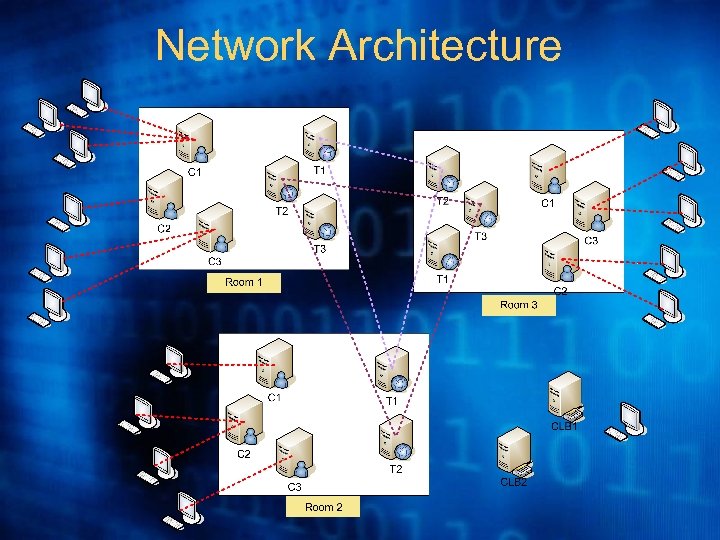

Network Architecture

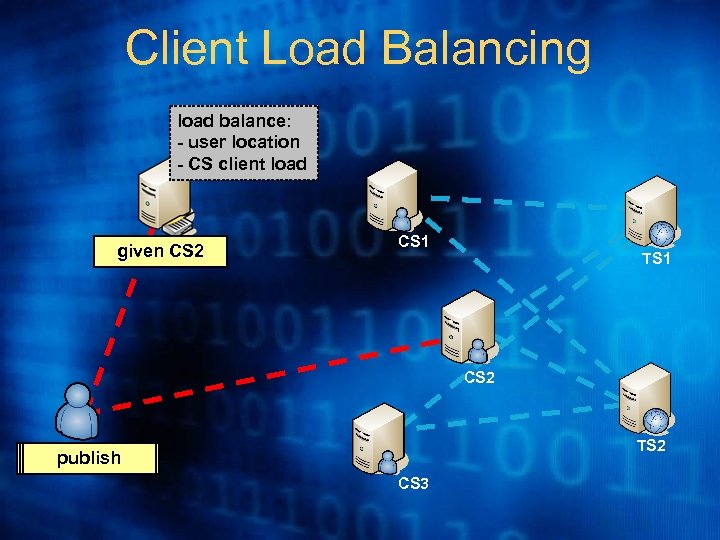

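Client Load Balancing
- The client load balancer (CLB) assigns a CS based on:
  - user location
  - CS client load
- Diagram: at login the client requests a CS from the CLB, is given CS 2, and then subscribes and publishes through it; CS 1, CS 2, and CS 3 connect to TS 1 and TS 2.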
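The CLB decision can be sketched as "prefer a CS near the user, then take the least-loaded one". This is an illustrative policy under assumptions: the slide names the two inputs (user location and CS client load) but not how they are combined, and the `ConnectionServer` fields and the per-server `capacity` limit are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ConnectionServer:
    name: str
    region: str    # hypothetical coarse location label, e.g. "eu"
    clients: int   # current number of connected clients
    capacity: int  # hypothetical per-box client limit


def pick_connection_server(
    servers: List[ConnectionServer], user_region: str
) -> Optional[ConnectionServer]:
    """Return the CS the CLB hands to a logging-in client, or None if all are full."""
    candidates = [s for s in servers if s.clients < s.capacity]
    if not candidates:
        return None
    # Prefer servers in the user's region; fall back to any server with room.
    local = [s for s in candidates if s.region == user_region]
    pool = local or candidates
    # Least relative load wins (ties go to the first server in the list).
    return min(pool, key=lambda s: s.clients / s.capacity)
```

Because the CLB only answers "which CS?", it is consulted once per login; the long-lived traffic (subscribe, publish) then flows directly between the client and its CS.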

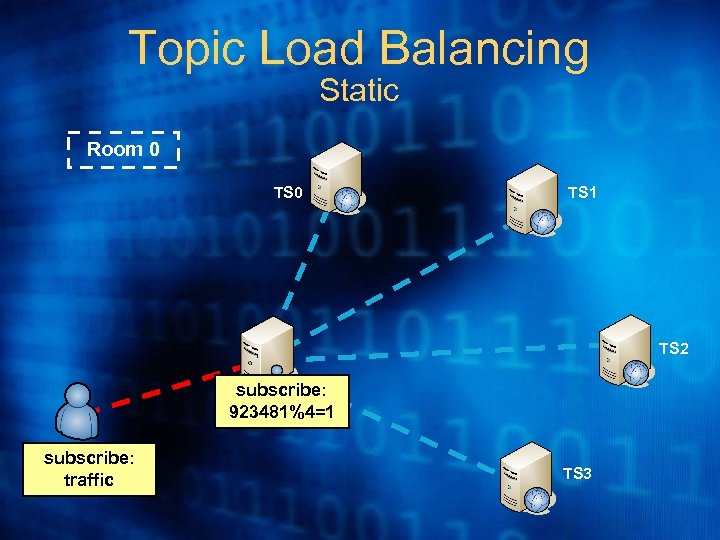

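Topic Load Balancing: Static
- Diagram: in Room 0, a CS receives "subscribe: traffic", hashes the topic (923481), computes 923481 % 4 = 1, and forwards the subscribe to the topic server with that index (TS 1, TS 2, and TS 3 are shown).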
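Static placement reduces to "hash the topic name, then take it modulo the number of topic servers". The slide does not say which hash function is used; this sketch assumes CRC32 from `zlib` because, unlike Python's built-in `hash()` for strings, it is stable across processes, which matters when every CS must map a topic to the same TS.

```python
import zlib


def topic_hash(topic: str) -> int:
    """Stable, process-independent hash of a topic name (CRC32 is an assumption)."""
    return zlib.crc32(topic.encode("utf-8"))


def server_index(hash_value: int, num_servers: int) -> int:
    """Map a topic hash onto one of `num_servers` topic servers."""
    return hash_value % num_servers


def topic_server_for(topic: str, num_servers: int) -> int:
    return server_index(topic_hash(topic), num_servers)
```

The slide's own arithmetic checks out: `server_index(923481, 4)` is 1. One known property of modulo placement is that changing the number of servers remaps most topics to different servers.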

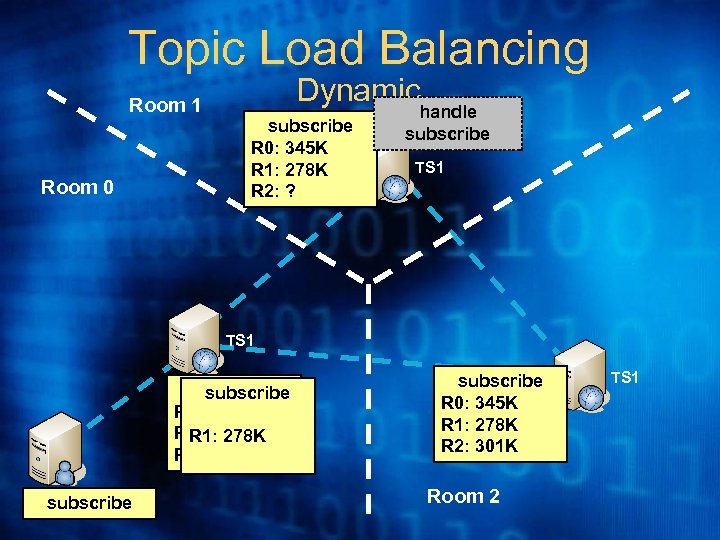

Topic Load Balancing: Dynamic
- Diagram: a subscribe request from a CS passes between Room 0, Room 1, and Room 2; each room fills in its current load (R0: 345K, R1: 278K, R2: 301K, shown as "?" until reported), and a TS (TS 1) then handles the subscribe.
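One way to read the dynamic scheme is: collect the load of every room on the subscribe request, then place the topic in the least-loaded room. This is a sketch under that assumption; the slide shows the load collection but does not state the selection rule, and `SubscribeRequest`, `record_load`, and `assign_room` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SubscribeRequest:
    topic: str
    # Room name -> reported load (e.g. number of topics or subscriptions).
    loads: Dict[str, int] = field(default_factory=dict)


def record_load(request: SubscribeRequest, room: str, load: int) -> None:
    """Called as the request passes through `room`: annotate it with that room's load."""
    request.loads[room] = load


def assign_room(request: SubscribeRequest, rooms: List[str]) -> Optional[str]:
    """Pick the least-loaded room once every room has reported, else None."""
    if any(room not in request.loads for room in rooms):
        return None  # still waiting for a room to report ("?" in the diagram)
    # Break ties by room name so every node computes the same answer.
    return min(rooms, key=lambda room: (request.loads[room], room))
```

With the diagram's numbers (R0: 345K, R1: 278K, R2: 301K) this rule would place the topic in R1; before R2 reports, no decision is made.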

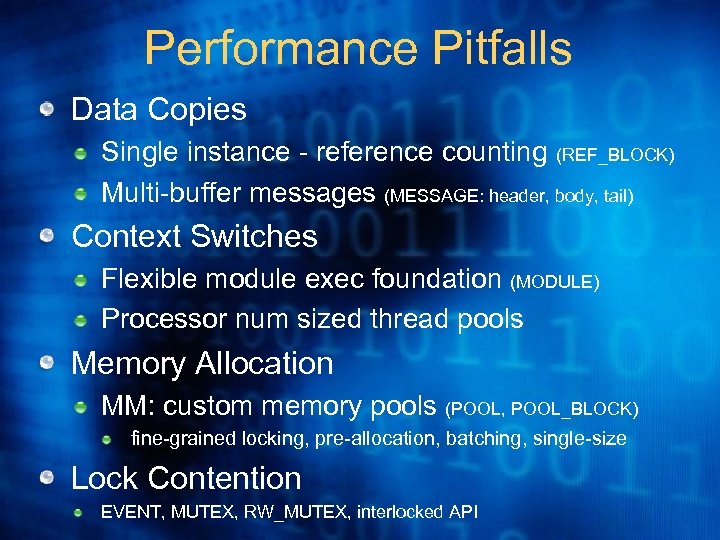

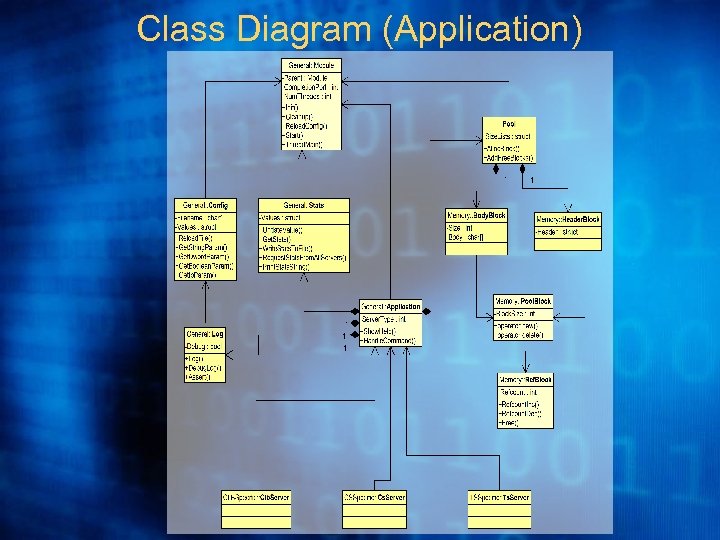

Performance Pitfalls
- Data copies
  - Single instance with reference counting (REF_BLOCK)
  - Multi-buffer messages (MESSAGE: header, body, tail)
- Context switches
  - Flexible module execution foundation (MODULE)
  - Thread pools sized to the number of processors
- Memory allocation
  - MM: custom memory pools (POOL, POOL_BLOCK)
  - Fine-grained locking, pre-allocation, batching, single-size blocks
- Lock contention
  - EVENT, MUTEX, RW_MUTEX, interlocked API
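The data-copy and allocation ideas combine naturally: a published payload is stored once in a pooled, reference-counted block, and each recipient's message wraps it with its own header and tail instead of copying it. This Python sketch only mirrors the idea; the real REF_BLOCK, MESSAGE, and POOL classes are not shown in the slides, so every name and method below is hypothetical.

```python
import threading
from dataclasses import dataclass
from typing import List, Union


class BlockPool:
    """Single-size buffer pool: pre-allocates blocks and recycles them."""

    def __init__(self, block_size: int, count: int) -> None:
        self.block_size = block_size
        self._free = [bytearray(block_size) for _ in range(count)]  # pre-allocation
        self._lock = threading.Lock()

    def acquire(self) -> bytearray:
        with self._lock:
            if self._free:
                return self._free.pop()
        return bytearray(self.block_size)  # pool exhausted: fall back to a new block

    def release(self, block: bytearray) -> None:
        with self._lock:
            self._free.append(block)

    def available(self) -> int:
        with self._lock:
            return len(self._free)


class RefBlock:
    """Reference-counted payload: one instance shared by every recipient."""

    def __init__(self, pool: BlockPool, payload: bytes) -> None:
        if len(payload) > pool.block_size:
            raise ValueError("payload larger than the pool's block size")
        self._pool = pool
        self._block = pool.acquire()
        self._block[: len(payload)] = payload  # the only copy of the payload
        self.length = len(payload)
        self._refs = 1  # the creator holds the first reference
        self._lock = threading.Lock()

    def view(self) -> memoryview:
        return memoryview(self._block)[: self.length]  # zero-copy view

    def add_ref(self) -> "RefBlock":
        with self._lock:
            self._refs += 1
        return self

    def release(self) -> None:
        with self._lock:
            self._refs -= 1
            last = self._refs == 0
        if last:
            self._pool.release(self._block)  # recycle instead of freeing


@dataclass
class Message:
    """Multi-buffer message: per-recipient header and tail around a shared body."""

    header: bytes
    body: RefBlock
    tail: bytes

    def buffers(self) -> List[Union[bytes, memoryview]]:
        # Suitable for a scatter-gather send such as socket.sendmsg() where supported.
        return [self.header, self.body.view(), self.tail]
```

Each recipient's message calls `add_ref()` on the shared body and `release()` once it has been sent; when the last reference goes away, the block returns to the pool rather than to the general-purpose allocator.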

Class Diagram (Application)

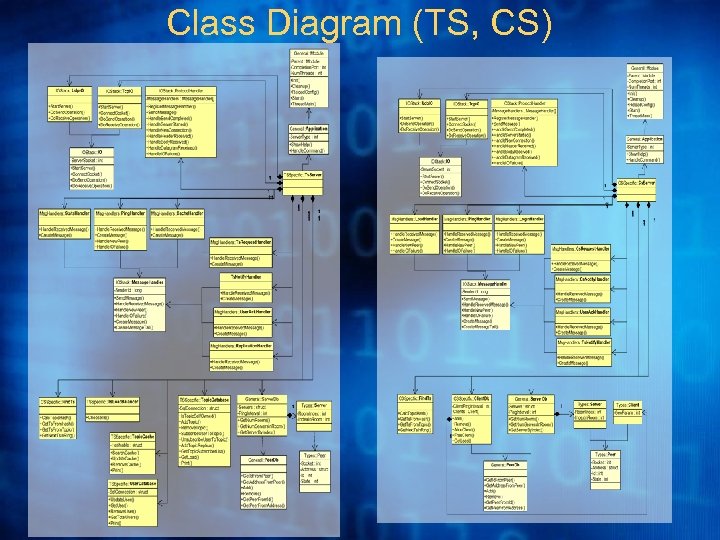

Class Diagram (TS, CS)

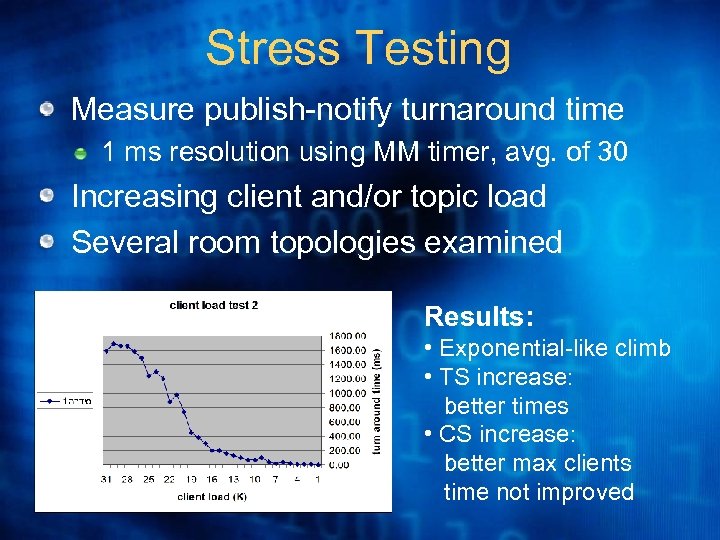

Stress Testing
- Measure publish-notify turnaround time
  - 1 ms resolution using the MM timer, averaged over 30 samples
  - Increasing client and/or topic load
  - Several room topologies examined
- Results:
  - Exponential-like climb
  - More TSs: better times
  - More CSs: higher maximum client count, but times not improved
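A turnaround probe can be sketched as: publish a unique payload, block until the matching notification arrives, and average over 30 round trips. This is a sketch under assumptions: `publish` and `wait_for_notify` are hypothetical hooks into a real client, and it uses `time.perf_counter()`, which typically has finer resolution than the 1 ms MM timer used in the original tests.

```python
import statistics
import time
from typing import Callable, List


def measure_turnaround_ms(
    publish: Callable[[bytes], None],
    wait_for_notify: Callable[[], bytes],
    samples: int = 30,
) -> float:
    """Average publish-to-notify turnaround over `samples` round trips, in ms."""
    if samples < 1:
        raise ValueError("samples must be at least 1")
    times: List[float] = []
    for i in range(samples):
        payload = b"probe-%d" % i  # unique payload so each notify can be matched
        start = time.perf_counter()
        publish(payload)
        received = wait_for_notify()
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        if received != payload:
            raise RuntimeError("notification does not match the probe just published")
        times.append(elapsed_ms)
    return statistics.mean(times)
```

Running this while separately ramping up background clients and topics gives one point on a load-versus-turnaround curve per configuration.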
