3cd8a33fe14e75083b368c1ef8d8118d.ppt

- Number of slides: 25

Spring 2008 CSE 591 Compilers for Embedded Systems Aviral Shrivastava Department of Computer Science and Engineering Arizona State University

Lecture 5: Scratch Pad Memories Motivation

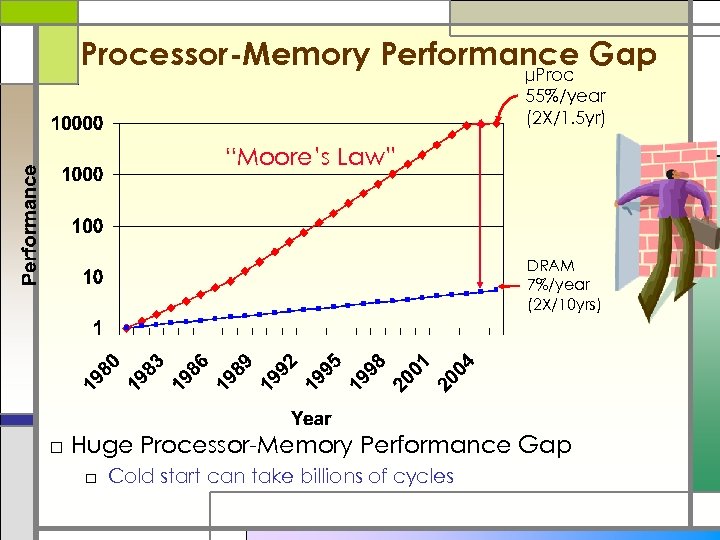

Processor-Memory Performance Gap
- µProc: 55%/year (2X/1.5 yr), “Moore’s Law”
- DRAM: 7%/year (2X/10 yrs)
- Huge processor-memory performance gap
- Cold start can take billions of cycles

More serious dimensions of the memory problem
- Figure labels: sub-banking, energy, access times
- Applications are getting larger and larger …

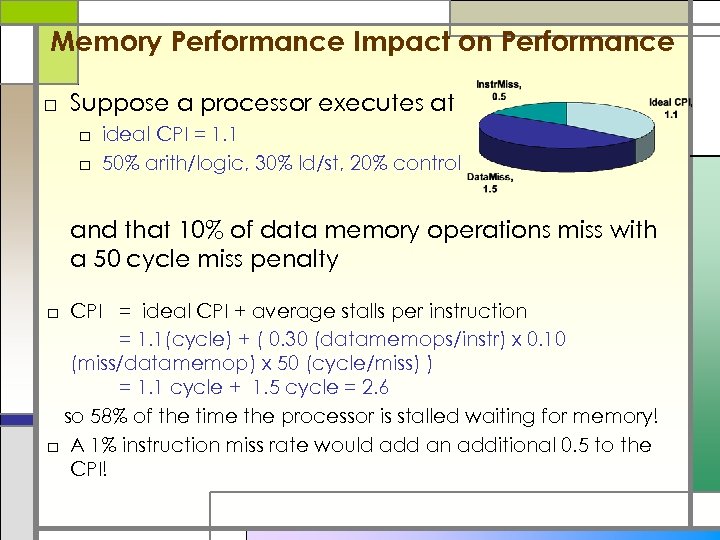

Memory Performance Impact on Performance
- Suppose a processor executes at
  - ideal CPI = 1.1
  - 50% arith/logic, 30% ld/st, 20% control
- and that 10% of data memory operations miss with a 50-cycle miss penalty
- CPI = ideal CPI + average stalls per instruction
  = 1.1 (cycle) + (0.30 (datamemops/instr) × 0.10 (miss/datamemop) × 50 (cycle/miss))
  = 1.1 cycle + 1.5 cycle = 2.6 cycles,
  so 58% of the time the processor is stalled waiting for memory!
- A 1% instruction miss rate would add an additional 0.5 to the CPI!
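The stall arithmetic on this slide can be checked with a few lines of Python. This is a minimal sketch of the simple stall model only; the function name `effective_cpi` and its parameter names are made up for illustration and do not come from the lecture.

```python
def effective_cpi(ideal_cpi, mem_op_fraction, data_miss_rate, miss_penalty,
                  instr_miss_rate=0.0):
    """Return (CPI, fraction of time stalled) for the simple stall model."""
    # Data stalls: only loads/stores can miss in the data memory.
    data_stalls = mem_op_fraction * data_miss_rate * miss_penalty
    # Instruction stalls: every instruction is fetched, so the fraction is 1.
    instr_stalls = instr_miss_rate * miss_penalty
    cpi = ideal_cpi + data_stalls + instr_stalls
    return cpi, (cpi - ideal_cpi) / cpi


# Numbers from the slide: ideal CPI 1.1, 30% ld/st, 10% misses, 50-cycle penalty.
cpi, stalled = effective_cpi(1.1, 0.30, 0.10, 50)
print(f"CPI = {cpi:.2f}, stalled {stalled:.0%} of the time")

# Adding a 1% instruction miss rate on top of that.
cpi_with_imiss, _ = effective_cpi(1.1, 0.30, 0.10, 50, instr_miss_rate=0.01)
print(f"CPI with 1% instruction misses = {cpi_with_imiss:.2f}")
```

The stalled fraction is the stall cycles divided by the total CPI (1.5 / 2.6), which is where the 58% figure comes from.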

The Memory Hierarchy Goal: Create an illusion
- Fact: Large memories are slow and fast memories are small
- How do we create a memory that gives the illusion of being large, cheap and fast (most of the time)?
  - With hierarchy
  - With parallelism

A Typical Memory Hierarchy
- By taking advantage of the principle of locality
  - Can present the user with as much memory as is available in the cheapest technology
  - at the speed offered by the fastest technology
- Figure: on-chip components (control, datapath, register file, ITLB, DTLB, instruction and data caches), eDRAM, second-level cache (SRAM), main memory (DRAM), secondary memory (disk)
  - Speed (%cycles): ½’s, 1’s, 10’s, 100’s, 1,000’s
  - Size (bytes): 100’s, K’s, 10 K’s, M’s, G’s to T’s
  - Cost: highest to lowest

Memory system frequently consumes >50% of the energy used for processing
- Figure panels: uni-processor without caches; multi-processor with cache
- Sources: [M. Verma, P. Marwedel: Advanced Memory Optimization Techniques for Low-Power Embedded Processors, Springer, May 2007]; [Osman S. Unsal, Israel Koren, C. Mani Krishna, Csaba Andras Moritz, U. of Massachusetts, Amherst, 2001]; [Segars 01 according to Vahid@ISSS 01]

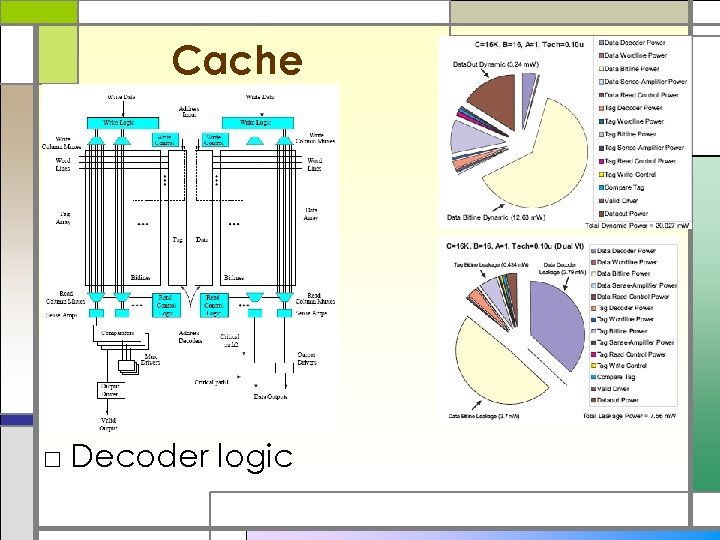

Cache
- Decoder logic

![Energy efficiency in operations per watt (GOPS/W) versus technology](https://present5.com/presentation/3cd8a33fe14e75083b368c1ef8d8118d/image-10.jpg)

Energy Efficiency
- Figure: operations/watt [GOPS/W] (axis ticks 10, 1, 0.1, 0.01) versus technology (1.0µ, 0.5µ, 0.25µ, 0.13µ, 0.07µ); labels: Ambient Intelligence, ASIC, Reconfigurable Computing, DSP-ASIPs, Processors (µPs), poor design techniques
- Necessary to optimize; otherwise the price for flexibility cannot be paid! [H. de Man, Keynote, DATE’02; T. Claasen, ISSCC 99]

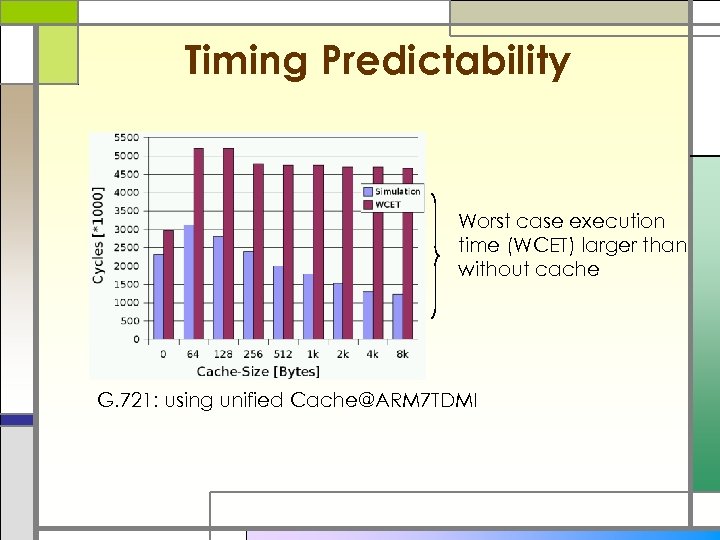

Timing Predictability
- Worst case execution time (WCET) larger than without cache
- G.721: using unified cache @ ARM7TDMI

Objectives for Memory System Design
- (Average) performance
- Throughput
- Latency
- Energy consumption
- Predictability, good worst case execution time (WCET) bound
- Size
- Cost
- …

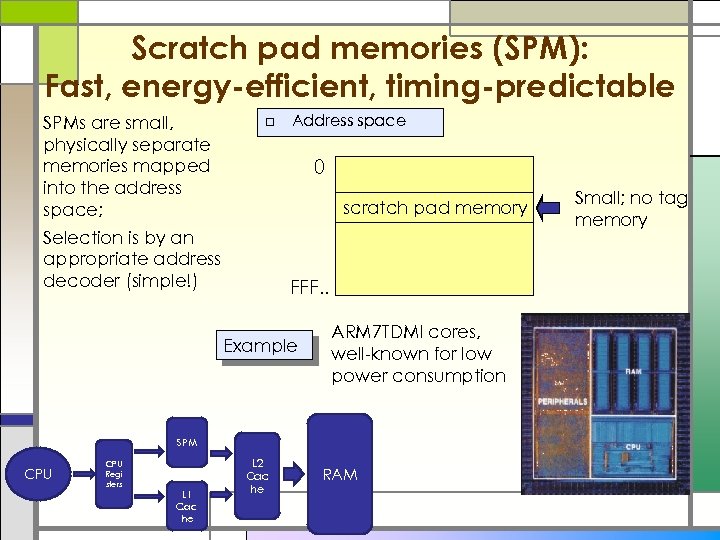

Scratch pad memories (SPM): Fast, energy-efficient, timing-predictable
- SPMs are small, physically separate memories mapped into the address space
- Selection is by an appropriate address decoder (simple!)
- Small; no tag memory
- Example: ARM7TDMI cores, well-known for low power consumption
- Figure labels: address space from 0 to FFF.., with the scratch pad memory mapped into it; SPM, CPU, registers, L1 cache, L2 cache, RAM
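The address-decoder selection described on this slide amounts to a range check on the address, with no tag lookup. The sketch below illustrates that idea only; the base address, the 4 KB size, and the name `select_memory` are assumptions chosen for the example, not values from the lecture.

```python
SPM_BASE = 0x0000  # assumption: SPM is mapped at the bottom of the address space
SPM_SIZE = 0x1000  # assumption: a 4 KB scratch pad (addresses 0x000 to 0xFFF)


def select_memory(addr):
    """Decide which memory serves an address using only a range check.

    Unlike a cache, nothing is compared against stored tags, so the
    outcome (and therefore the access time) is known statically.
    """
    if SPM_BASE <= addr < SPM_BASE + SPM_SIZE:
        return "SPM"
    return "main"


for addr in (0x0000, 0x0FFF, 0x1000):
    print(hex(addr), "->", select_memory(addr))
```

Because the decision depends only on the address, a compiler or linker that places an object inside the SPM range knows the cost of every access to it in advance.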

Comparison of currents
- E.g.: ATMEL board with ARM7TDMI and ext. SRAM

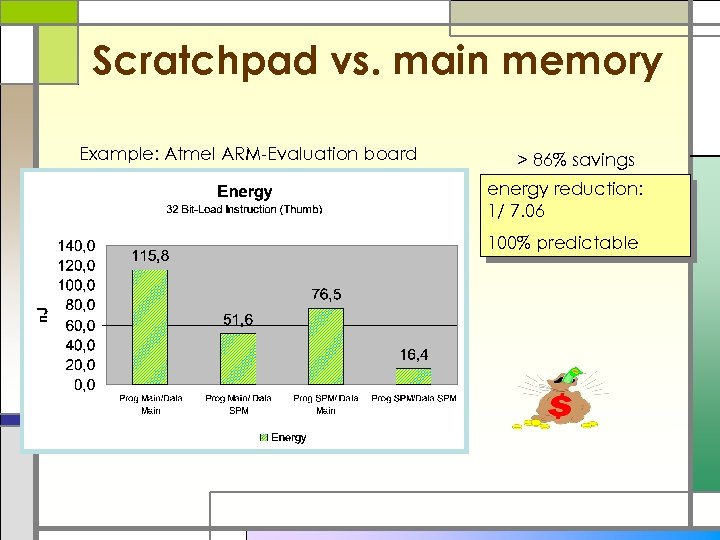

Scratchpad vs. main memory
- Example: Atmel ARM evaluation board
- > 86% savings
- Energy reduction: 1/7.06
- 100% predictable

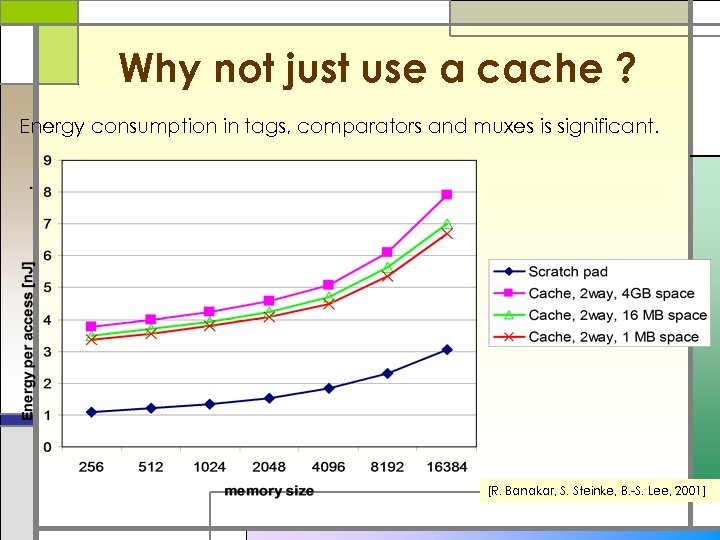

Why not just use a cache?
- Energy consumption in tags, comparators and muxes is significant. [R. Banakar, S. Steinke, B.-S. Lee, 2001]

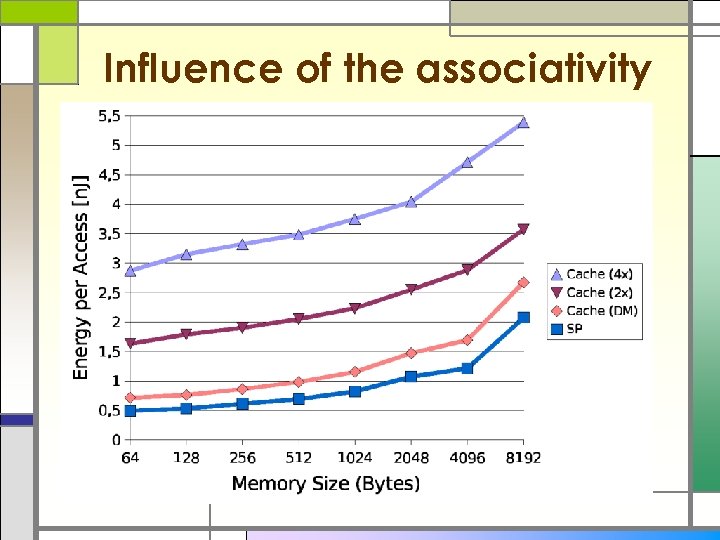

Influence of the associativity

Systems with SPM
- Most ARM architectures have an on-chip SPM, termed tightly coupled memory (TCM)
- GPUs such as Nvidia’s 8800 have a 16 KB SPM
- It’s typical for a DSP to have scratch pad RAM
- Embedded processors like Motorola Mcore, TI TMS370C
- Commercial network processors: Intel IXP
- And many more …

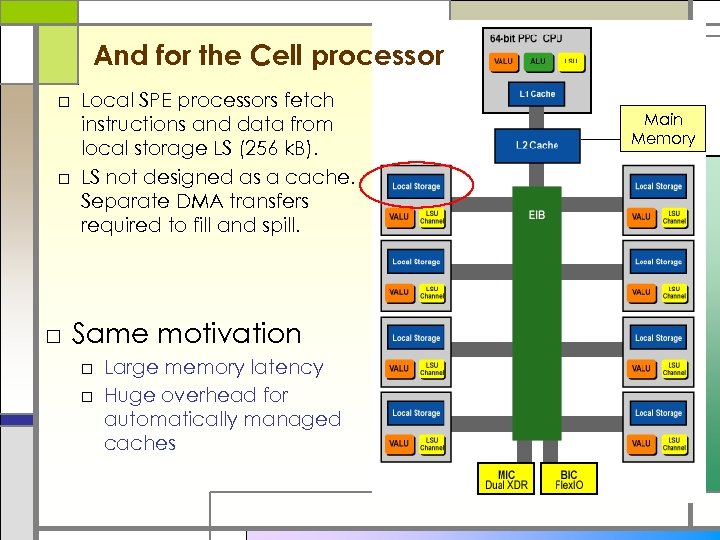

And for the Cell processor
- Local SPE processors fetch instructions and data from local storage, LS (256 kB).
- LS is not designed as a cache. Separate DMA transfers are required to fill and spill.
- Same motivation
  - Large memory latency
  - Huge overhead for automatically managed caches
- Figure label: Main Memory

Advantages of Scratch Pads
- Area advantage: for the same area, we can fit more memory in an SPM than in a cache (around 34%)
  - SPM consists of just a memory array and address decoding circuitry
- Less energy consumption per access
  - Absence of tag memory and comparators
- Performance comparable with cache
- Predictable WCET, required for real-time embedded systems (RTES)

Challenges in using SPMs
- With SPMs, the application developer or the compiler has to explicitly move data between memories
  - Data mapping is transparent in cache-based architectures
- Binary compatible?
  - Do advantages translate to a different machine?

Data Allocation on SPM
- Techniques focus on mapping
  - Global data
  - Stack data
  - Heap data
- Broadly, we can classify as
  - Static: mapping of data is decided at compile time and remains constant throughout the execution
  - Compile-time dynamic: mapping of data is decided at compile time, and the data in SPM changes throughout execution
- Goals are
  - To minimize off-chip memory access
  - To reduce energy consumption
  - To achieve better performance

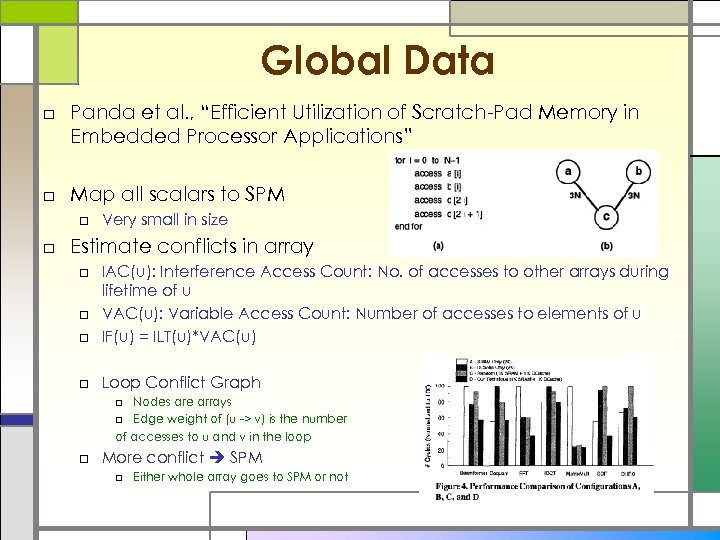

Global Data
- Panda et al., “Efficient Utilization of Scratch-Pad Memory in Embedded Processor Applications”
- Map all scalars to SPM
  - Very small in size
- Estimate conflicts in array
  - VAC(u): Variable Access Count: number of accesses to elements of u
  - IAC(u): Interference Access Count: number of accesses to other arrays during the lifetime of u
  - IF(u): Interference Factor: IF(u) = IAC(u) * VAC(u)
- Loop Conflict Graph
  - Nodes are arrays
  - Edge weight of (u -> v) is the number of accesses to u and v in the loop
- More conflict → SPM
- Either whole array goes to SPM or not
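The conflict estimate can be illustrated on a small access trace. The sketch below assumes that the interference factor is the product of the two counts defined on the slide, IF(u) = VAC(u) × IAC(u), and it approximates the lifetime of an array as the span from its first to its last access in the trace. The trace itself and the function name `interference_factors` are hypothetical.

```python
def interference_factors(trace):
    """Map each array name to (VAC, IAC, IF) for a list of array accesses.

    Assumption: the lifetime of u runs from its first to its last
    access in the trace.
    """
    result = {}
    for u in set(trace):
        first = trace.index(u)
        last = len(trace) - 1 - trace[::-1].index(u)
        vac = trace.count(u)  # accesses to elements of u
        # Accesses to other arrays while u is live.
        iac = sum(1 for name in trace[first:last + 1] if name != u)
        result[u] = (vac, iac, vac * iac)
    return result


# Hypothetical trace: each entry names the array touched by one access.
trace = ["a", "b", "a", "c", "a", "b", "b"]
factors = interference_factors(trace)
for name in sorted(factors):
    print(name, factors[name])
```

In this toy trace, array `b` has the highest factor, so under the "more conflict, more reason to place in SPM" rule it would be the first candidate, provided the whole array fits.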

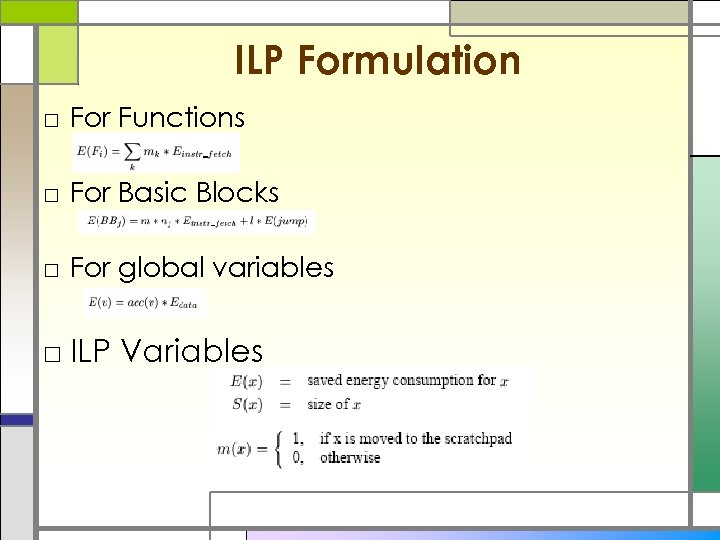

ILP Formulation
- For functions
- For basic blocks
- For global variables
- ILP variables

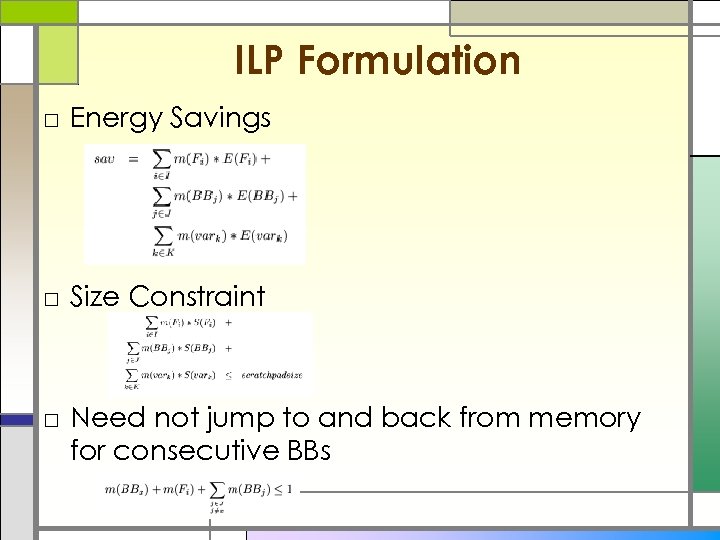

ILP Formulation
- Energy savings
- Size constraint
- Need not jump to and back from memory for consecutive BBs
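The core of such a formulation, maximizing total energy savings subject to the SPM size constraint, has the shape of a 0/1 knapsack problem. The sketch below solves that simplified problem with dynamic programming rather than an ILP solver, and it ignores the extra term for consecutive basic blocks. The object names, sizes, and savings are invented for illustration and are not data from the lecture.

```python
def allocate_to_spm(objects, spm_size):
    """Pick memory objects for the SPM to maximize total energy saving.

    objects: list of (name, size_in_bytes, energy_saving) tuples.
    Returns (total_saving, chosen_names).
    """
    # best[c] = (saving, names) achievable with at most c bytes of SPM.
    best = [(0, [])] * (spm_size + 1)
    for name, size, saving in objects:
        # Walk capacities downward so each object is placed at most once.
        for c in range(spm_size, size - 1, -1):
            candidate = best[c - size][0] + saving
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - size][1] + [name])
    return best[spm_size]


# Hypothetical functions, basic blocks and globals with made-up numbers.
objects = [
    ("main_loop_bb", 96, 50),
    ("fir_coeffs", 64, 40),
    ("lookup_table", 128, 60),
    ("init_func", 64, 5),
]
saving, chosen = allocate_to_spm(objects, spm_size=192)
print(saving, chosen)
```

A real formulation would hand the same objective and constraint to an ILP solver, which also makes it straightforward to add the coupling between consecutive basic blocks that this sketch leaves out.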
