- Number of slides: 95

Prof. Gheorghe Tecuci, Learning Agents Center, Computer Science Department, George Mason University. Spring 2004.

Overview
- Introduction to the course topic
- An abstract model of a mixed-initiative system
- Mixed-initiative with human agents
- Issues in the development of mixed-initiative systems
- Course organization
- Student discussion and literature review
- Case study demo and discussion: Disciple
- Recommended reading

Course topic

Study the theoretical, methodological, and practical foundations of mixed-initiative intelligent systems. Students will learn about the open research issues in developing such systems, so that they can make progress in their own research on mixed-initiative reasoning.

Mixed-initiative intelligent system

Definition: A mixed-initiative intelligent system is a collaborative multi-agent system in which the component agents work together to achieve a common goal, in a way that takes advantage of their complementary capabilities.

A mixed-initiative intelligent system includes complementary agents and can perform tasks that are beyond the capabilities of any of its component agents. This means that it can achieve goals that the component agents could not achieve working independently, or it can achieve the same goals more effectively.

What is mixed-initiative?

Mixed-initiative refers to a flexible collaboration strategy in which each agent contributes to a joint task with what it does best. In the most general case, the agents’ roles are not determined in advance but are opportunistically negotiated between them as the problem is being solved:
- at one time, one agent has the initiative (controlling the problem-solving process) while the others assist it, contributing to this process as required;
- at another time, the roles are reversed, with another agent taking the initiative; and
- at other times, the agents might work independently, assisting each other only when specifically asked.

The agents dynamically adapt their interaction style to best address the problem at hand.

Mixed-initiative interaction lets agents work most effectively as a team; that’s the key. The secret is to let the agents who currently know best how to proceed coordinate the other agents. (James Allen)

Human-agent systems

Some of the component agents may be humans. A mixed-initiative intelligent system that includes a human agent integrates human and automated reasoning to take advantage of their complementary knowledge, reasoning styles, and computational strengths. Effective mixed-initiative interaction is required to build computer systems that can interact seamlessly with humans as they perform complex tasks.

What are some of the complementary abilities of human and computer agents?

Research opportunity

Research in mixed-initiative interaction is still in its infancy, and the research problems are significant. However, its potential for developing effective human-machine systems (where humans interact seamlessly with computer agents) and powerful multi-agent systems (whose capabilities go well beyond those of individual agents) is enormous. This course offers you an opportunity to embark on this exciting journey.

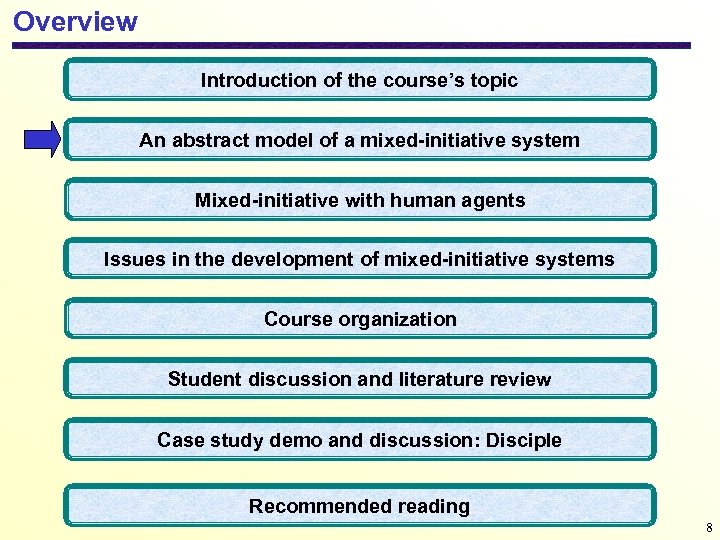

Overview
- Introduction to the course topic
- An abstract model of a mixed-initiative system
- Mixed-initiative with human agents
- Issues in the development of mixed-initiative systems
- Course organization
- Student discussion and literature review
- Case study demo and discussion: Disciple
- Recommended reading

An abstract model of a mixed-initiative system (based on Guinn, 1998)

Collaboration as an extension of single-agent problem-solving

The agents in human–human collaboration are individuals: each participant is a separate entity, and the mental structures and mechanisms of one participant are not directly accessible to the other. During collaboration, the two participants satisfy goals and share this information by some means of communication.

Effective collaboration takes place when each participant depends on the other to solve a common goal, or to solve a goal more efficiently. It is the synergistic effect of the two problem-solvers working together that makes the collaboration beneficial for both parties.

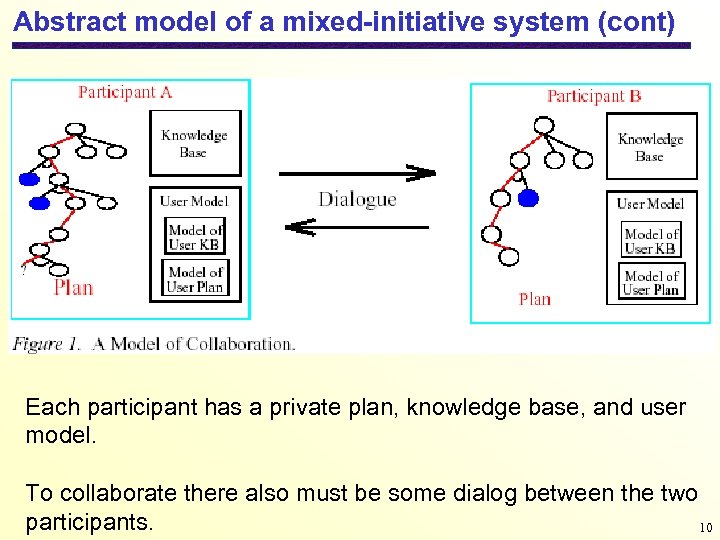

Abstract model of a mixed-initiative system (cont.)

Each participant has a private plan, knowledge base, and user model. To collaborate, there must also be some dialog between the two participants.

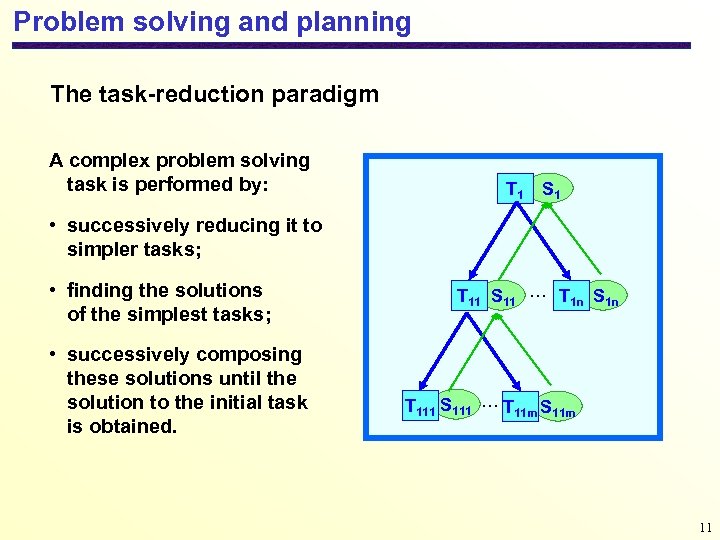

Problem solving and planning: the task-reduction paradigm

A complex problem-solving task is performed by:
- successively reducing it to simpler tasks;
- finding the solutions of the simplest tasks;
- successively composing these solutions until the solution to the initial task is obtained.

(Figure: a task-reduction tree. The initial task T1, with solution S1, is reduced to the subtasks T11, …, T1n, with solutions S11, …, S1n; T11 is further reduced to T111, …, T11m, with solutions S111, …, S11m.)
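The reduce, solve, and compose steps can be sketched as a short recursive function. This is a minimal illustration under stated assumptions, not Disciple’s implementation: tasks are plain strings, the hypothetical `reductions` table maps a task to its simpler subtasks, `elementary` holds the known solutions of the simplest tasks, and `compose` is any function that combines subtask solutions (the toy `nest` below just nests their names).

```python
from typing import Callable, Dict, List

Reductions = Dict[str, List[str]]   # task -> its simpler subtasks
Solutions = Dict[str, str]          # simplest task -> its known solution
Composer = Callable[[str, List[str]], str]


def solve(task: str, reductions: Reductions, elementary: Solutions,
          compose: Composer) -> str:
    """Solve `task` by task reduction (sketch)."""
    # The simplest tasks are solved directly.
    if task in elementary:
        return elementary[task]
    if task not in reductions:
        raise ValueError(f"no reduction or solution for {task!r}")
    # Reduce the task to simpler tasks and solve each one recursively...
    sub_solutions = [solve(sub, reductions, elementary, compose)
                     for sub in reductions[task]]
    # ...then compose their solutions into the solution of this task.
    return compose(task, sub_solutions)


def nest(task: str, sub_solutions: List[str]) -> str:
    """Toy composition: S<index>(<sub-solutions>), e.g. S1(S11, S12)."""
    return "S" + task[1:] + "(" + ", ".join(sub_solutions) + ")"


# Hypothetical tree using the T/S naming of the figure.
reductions = {"T1": ["T11", "T12"], "T11": ["T111", "T112"]}
elementary = {"T111": "S111", "T112": "S112", "T12": "S12"}
print(solve("T1", reductions, elementary, nest))  # S1(S11(S111, S112), S12)
```

The recursion mirrors the tree: reduction happens on the way down, composition on the way back up.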

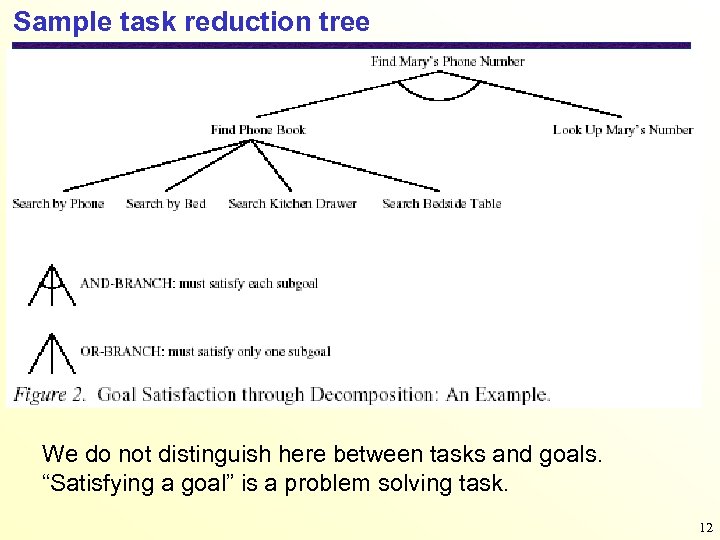

Sample task reduction tree

We do not distinguish here between tasks and goals: “satisfying a goal” is itself a problem-solving task.

The structure of the knowledge base

Knowledge Base = Object ontology + Task reduction rules

The object ontology is a hierarchical description of the objects from the domain, specifying their properties and relationships. It includes both descriptions of types of objects (called concepts) and descriptions of specific objects (called instances).

The task reduction rules specify generic problem-solving steps for reducing complex tasks to simpler tasks. They are described using the objects from the ontology.
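A minimal sketch of an object ontology, assuming a single-parent hierarchy: each concept points to its more general concept, and each instance records its concept plus its own property values. The class and method names, and the car-part concepts, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Concept:
    """A type of object; `parent` names its more general concept."""
    name: str
    parent: Optional[str] = None


@dataclass
class Instance:
    """A specific object: its concept and its own property values."""
    name: str
    concept: str
    properties: Dict[str, object] = field(default_factory=dict)


class Ontology:
    def __init__(self) -> None:
        self.concepts: Dict[str, Concept] = {}
        self.instances: Dict[str, Instance] = {}

    def add_concept(self, name: str, parent: Optional[str] = None) -> None:
        self.concepts[name] = Concept(name, parent)

    def add_instance(self, name: str, concept: str, **properties: object) -> None:
        self.instances[name] = Instance(name, concept, dict(properties))

    def is_a(self, name: str, concept: str) -> bool:
        """True if an instance or concept falls under `concept`."""
        current: Optional[str] = (self.instances[name].concept
                                  if name in self.instances else name)
        # Walk up the hierarchy toward the most general concept.
        while current is not None:
            if current == concept:
                return True
            current = self.concepts[current].parent
        return False


onto = Ontology()
onto.add_concept("object")
onto.add_concept("car_part", parent="object")
onto.add_concept("battery", parent="car_part")
onto.add_instance("battery1", "battery", voltage=12.6)
print(onto.is_a("battery1", "car_part"))  # True
print(onto.is_a("car_part", "battery"))   # False
```

Task reduction rules can then refer to concepts such as `car_part` rather than to individual instances, which is what makes them generic.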

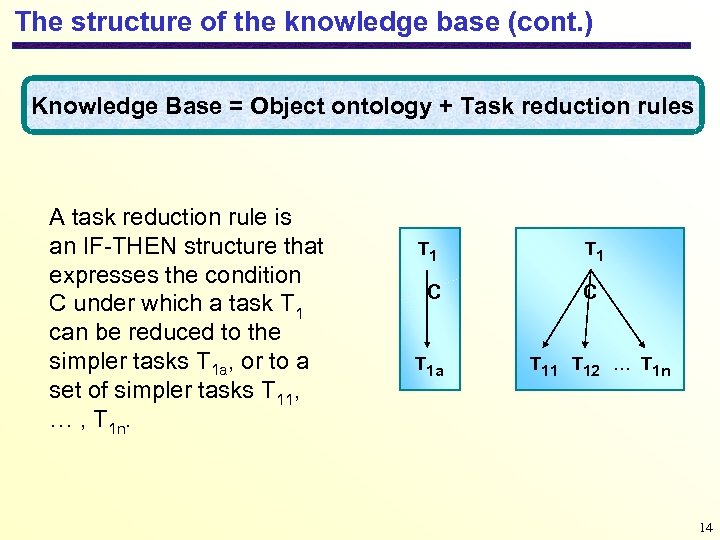

The structure of the knowledge base (cont.)

Knowledge Base = Object ontology + Task reduction rules

A task reduction rule is an IF-THEN structure that expresses the condition C under which a task T1 can be reduced to a simpler task T1a, or to a set of simpler tasks T11, …, T1n:
- IF the task is T1 and condition C holds, THEN reduce it to T1a;
- IF the task is T1 and condition C holds, THEN reduce it to T11, T12, …, T1n.
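The rule format can be sketched in Python, assuming that a task is a `(name, object)` pair and that the condition C is a predicate over the task’s object. A rule whose THEN part yields one task corresponds to the T1 → T1a form; one that yields several tasks corresponds to T1 → T11, …, T1n. `ReductionRule`, `reduce_task`, and the car facts are illustrative assumptions, not part of the course material.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Task = Tuple[str, str]  # (task name, object the task is about)


@dataclass
class ReductionRule:
    """IF the task is `task_name` and `condition` holds for its object,
    THEN reduce it to the tasks returned by `then`."""
    task_name: str
    condition: Callable[[str], bool]
    then: Callable[[str], List[Task]]


def reduce_task(task: Task, rules: List[ReductionRule]) -> Optional[List[Task]]:
    """Return the subtasks of the first applicable rule, or None if none applies."""
    name, obj = task
    for rule in rules:
        if rule.task_name == name and rule.condition(obj):
            return rule.then(obj)
    return None


# Hypothetical facts and rules.
facts = {"car1": {"starts": False, "lights_on_overnight": True}}
rules = [
    # One simpler task (the T1 -> T1a form).
    ReductionRule("diagnose",
                  condition=lambda car: facts[car]["lights_on_overnight"],
                  then=lambda car: [("check_battery", car)]),
    # Several simpler tasks (the T1 -> T11, ..., T1n form).
    ReductionRule("diagnose",
                  condition=lambda car: not facts[car]["starts"],
                  then=lambda car: [("check_battery", car),
                                    ("check_spark_plugs", car)]),
]
print(reduce_task(("diagnose", "car1"), rules))  # [('check_battery', 'car1')]
```

Taking the first applicable rule is a simplification; a problem-solver could instead collect every applicable reduction and order them heuristically.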

Impenetrability

The only knowledge one participant has of the other is indirect: a participant may have a set of beliefs about the other. The set of beliefs a participant has about the knowledge and abilities of the other participant is called the user (or agent) model.

How could the information in the user/agent model be acquired?

Impenetrability (cont.)

How could the information in the model be acquired? The information may be acquired in many ways:
- stereotypes;
- previous contact with the other participant;
- each participant may be given a set of facts about the other participant.

In general, the user model is dynamic: during a problem-solving session, information can be learned about the knowledge or capabilities of the other participant.

What else, besides modeling the knowledge of its collaborator, is required? Why?
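A minimal sketch of a dynamic user model, assuming beliefs are simply "can this participant satisfy goal X?" flags. The three sources above are combined with an assumed precedence (stereotype, then previous contact, then explicitly given facts), and `observe` revises the model during the session. `STEREOTYPES`, `UserModel`, and the goal names are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical stereotypes: default beliefs about what kinds of
# participants can do.
STEREOTYPES: Dict[str, Dict[str, bool]] = {
    "novice": {"read_led": True, "locate_switch": False},
    "technician": {"read_led": True, "locate_switch": True},
}


class UserModel:
    """One participant's beliefs about another's abilities (sketch)."""

    def __init__(self, stereotype: Optional[str] = None,
                 previous_contact: Optional[Dict[str, bool]] = None,
                 given_facts: Optional[Dict[str, bool]] = None) -> None:
        # Assumed precedence: stereotype < previous contact < given facts.
        self.beliefs: Dict[str, bool] = dict(STEREOTYPES.get(stereotype or "", {}))
        self.beliefs.update(previous_contact or {})
        self.beliefs.update(given_facts or {})

    def can_satisfy(self, goal: str) -> Optional[bool]:
        """True/False if there is a belief about `goal`, None if unknown."""
        return self.beliefs.get(goal)

    def observe(self, goal: str, succeeded: bool) -> None:
        """Revise the model during the session (the model is dynamic)."""
        self.beliefs[goal] = succeeded


model = UserModel(stereotype="technician")
print(model.can_satisfy("locate_switch"))    # True
model.observe("locate_switch", False)        # e.g. the user asks "Where is the switch?"
print(model.can_satisfy("locate_switch"))    # False
print(model.can_satisfy("measure_voltage"))  # None
```

Returning `None` for unknown goals keeps "believed unable" distinct from "no information", which matters when deciding whether to ask the other participant for help.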

Impenetrability (cont.)

What else, besides modeling the knowledge of its collaborator, is required? Why?

Each participant must also model the current plan of the other participant. Without knowing the current intentions of the other participant, a problem-solver will not be able to respond appropriately to goal requests, announcements, and other dialog behaviors of the other participant.

When a problem-solver cannot satisfy a goal (i.e., the goal is not known to be true, and there is no rule to reduce/decompose it), it has the option of asking the other participant to satisfy that goal. However, the problem-solver should exercise that option only if it believes the other participant is capable of satisfying that goal.

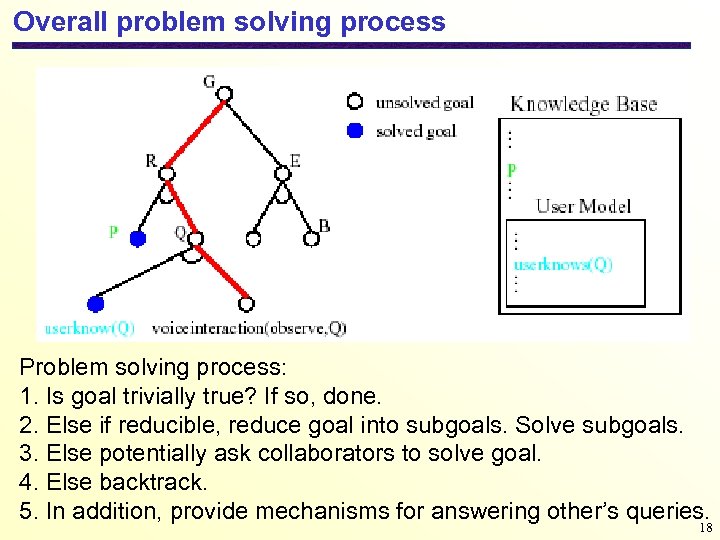

Overall problem-solving process

1. Is the goal trivially true? If so, done.
2. Else, if the goal is reducible, reduce it to subgoals and solve the subgoals.
3. Else, potentially ask collaborators to solve the goal.
4. Else, backtrack.
5. In addition, provide mechanisms for answering the other participants’ queries.
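Steps 1 to 4 can be sketched as a recursive search. Assumptions: goals are strings, `reductions` maps a goal to its alternative reductions (each a list of subgoals), `believes_capable` stands in for the user model, and `ask_collaborator` stands in for the dialog with the other participant; all of these names are hypothetical, and step 5 (answering the other participant’s queries) is not shown. Returning `False` is how the sketch backtracks: the caller moves on to its next alternative.

```python
from typing import Callable, Dict, List, Set


def solve_goal(goal: str,
               facts: Set[str],
               reductions: Dict[str, List[List[str]]],
               believes_capable: Callable[[str], bool],
               ask_collaborator: Callable[[str], bool]) -> bool:
    """Return True if `goal` could be satisfied (sketch of steps 1-4)."""
    # 1. Is the goal trivially true? If so, done.
    if goal in facts:
        return True
    # 2. If reducible, reduce it and solve the subgoals, trying
    #    alternative reductions in order.
    alternatives = reductions.get(goal, [])
    if alternatives:
        for subgoals in alternatives:
            if all(solve_goal(g, facts, reductions, believes_capable,
                              ask_collaborator) for g in subgoals):
                return True
        return False  # 4. every reduction failed: backtrack
    # 3. Not reducible: ask the collaborator, but only if the user model
    #    says it can satisfy the goal.
    if believes_capable(goal) and ask_collaborator(goal):
        facts.add(goal)
        return True
    return False  # 4. backtrack


asked: List[str] = []


def believes_capable(goal: str) -> bool:
    return goal == "spark_plugs_checked"  # hypothetical user-model belief


def ask_collaborator(goal: str) -> bool:
    asked.append(goal)
    return True  # assume the collaborator succeeds


reductions = {"car_diagnosed": [["battery_checked"], ["spark_plugs_checked"]]}
print(solve_goal("car_diagnosed", set(), reductions,
                 believes_capable, ask_collaborator))  # True
print(asked)  # ['spark_plugs_checked']
```

The first reduction fails because nobody is believed able to check the battery, so the search backtracks to the second reduction and delegates it.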

Conflicts in collaboration

Even when agents want to work together, there can be conflicts. What kinds of conflicts could there be? Provide an example situation with a conflict.

Conflicts in collaboration (cont.)

What kinds of conflicts could there be? Types of conflicts:
- conflict over resource control;
- conflict over computational effort;
- conflict over the locus of problem-solving responsibility.

Provide an example situation with a conflict.

Two carpenters working together may both require a drill for the tasks they are doing (a conflict over resource control). Or one carpenter may need help carrying a board. If the other carpenter is concurrently erecting a wall, that carpenter must interrupt his or her work to help. Thus there is a conflict over task-processing effort.

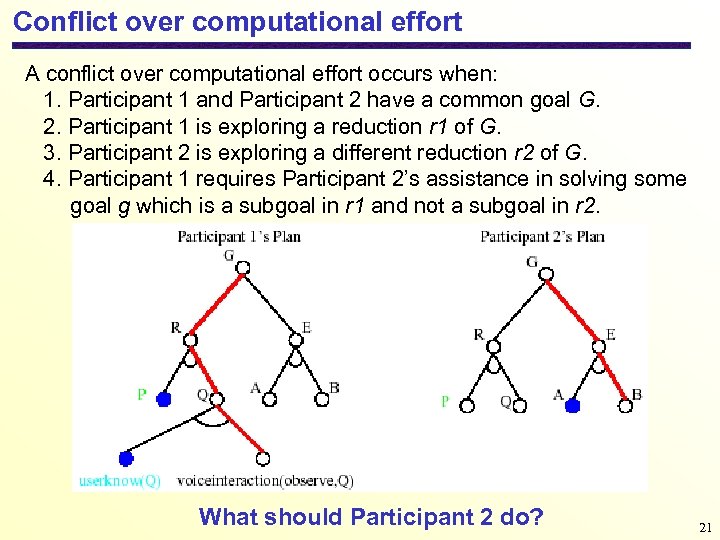

Conflict over computational effort

A conflict over computational effort occurs when:
1. Participant 1 and Participant 2 have a common goal G.
2. Participant 1 is exploring a reduction r1 of G.
3. Participant 2 is exploring a different reduction r2 of G.
4. Participant 1 requires Participant 2’s assistance in solving some goal g which is a subgoal in r1 and not a subgoal in r2.

What should Participant 2 do?
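The four conditions can be written as a single predicate. This sketch assumes each participant’s current focus is a goal plus the list of subgoals of the reduction it is exploring; `Focus`, `effort_conflict`, and the car-repair goal names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Focus:
    goal: str             # the goal the participant is working on
    reduction: List[str]  # subgoals of the reduction it is exploring


def effort_conflict(p1: Focus, p2: Focus, requested: str) -> bool:
    """Does P1's request for help with `requested` conflict with P2's work?"""
    return (p1.goal == p2.goal                    # 1. common goal G
            and p1.reduction != p2.reduction      # 2-3. different reductions r1, r2
            and requested in p1.reduction         # 4. g is a subgoal in r1
            and requested not in p2.reduction)    #    and not a subgoal in r2


chris = Focus("find_fault", ["measure_battery_voltage", "check_terminals"])
jordan = Focus("find_fault", ["remove_distributor_cap", "check_spark_plugs"])
print(effort_conflict(chris, jordan, "measure_battery_voltage"))  # True
```

If Participant 2 were exploring the same reduction, helping would advance its own work, and the predicate returns `False`.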

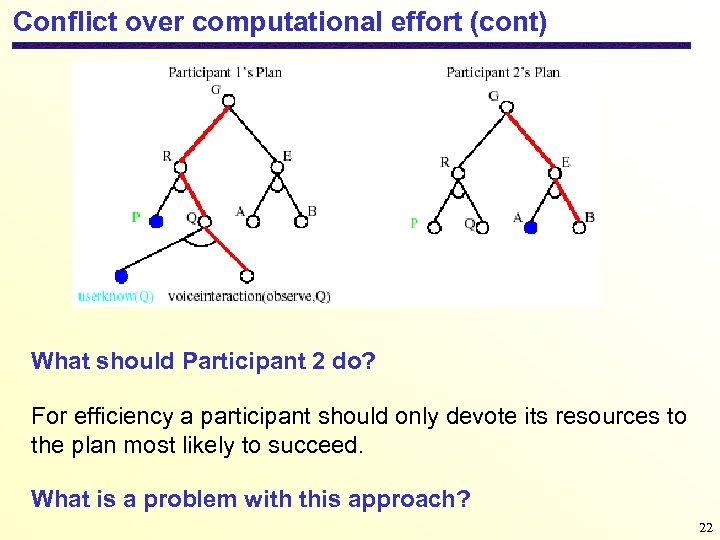

Conflict over computational effort (cont.)

What should Participant 2 do? For efficiency, a participant should devote its resources only to the plan most likely to succeed. What is a problem with this approach?

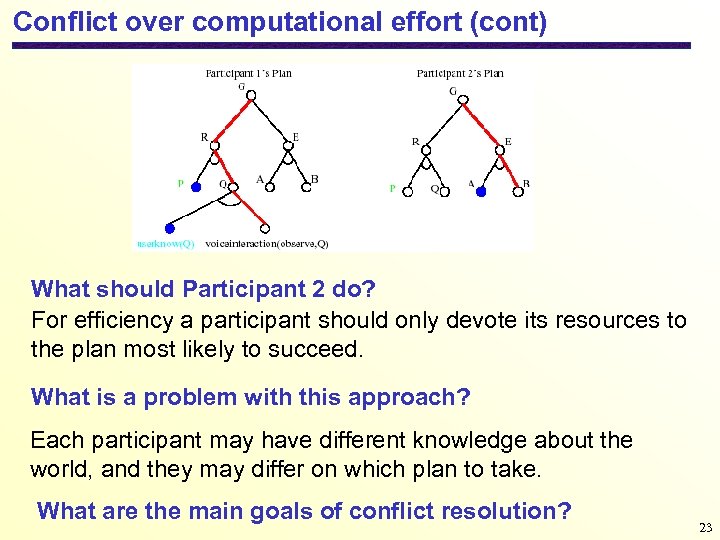

Conflict over computational effort (cont.)

What should Participant 2 do? For efficiency, a participant should devote its resources only to the plan most likely to succeed. What is a problem with this approach?

Each participant may have different knowledge about the world, so the participants may disagree on which plan to take.

What are the main goals of conflict resolution?

Conflict resolution

What are the main goals of conflict resolution? Goals:
- avoidance of deadlock;
- efficient allocation of resources.

If there is more than one concurrent demand on a resource, one demand must succeed in obtaining that resource; otherwise, neither participant will be able to continue. Furthermore, resources should be allocated so that the collaborative problem-solving is more efficient.
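One simple way to meet both goals is sketched below; it is an assumption for illustration, not Guinn’s mechanism. Every participant applies the same deterministic rule to the competing demands, so they all agree on a single winner (no deadlock), and the rule prefers the demand with the highest estimated benefit to the joint task (efficient allocation). The benefit estimates and the name `arbitrate` are hypothetical.

```python
from typing import Dict


def arbitrate(demands: Dict[str, float]) -> str:
    """Grant a contested resource to exactly one requester.

    `demands` maps each requesting participant to an estimate of how much
    its use of the resource would advance the joint task. The highest
    estimate wins; ties go to the alphabetically first name, so every
    participant running this rule independently picks the same winner.
    """
    if not demands:
        raise ValueError("no demands to arbitrate")
    # max() keeps the first maximal element, and names are sorted first.
    return max(sorted(demands), key=lambda who: demands[who])


print(arbitrate({"carpenter_a": 0.7, "carpenter_b": 0.4}))  # carpenter_a
```

Determinism is what prevents deadlock here: if the participants used different rules, each might conclude that it should wait for the other.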

Task initiative

Conflicts can arise when each participant believes it should control the reduction of a goal. Even though both participants may be trying to solve the same goal, they may choose different ways of solving it. If there is a conflict because the participants have chosen different branches or reductions of a goal, then one participant must be given control of that goal’s reduction in order to resolve the conflict.

Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: No, let’s check the battery. Hand me the voltmeter.

Who should be given the task initiative?

Task initiative (cont.)

Who should be given the task initiative? Ideally, the participant best able to guide a goal’s solution should be given the task initiative. Consider again the preceding dialog, where both participants appear to have decided to take task initiative in determining the origin of the car’s problems:

Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: No, let’s check the battery. Hand me the voltmeter.

Give an example of a different dialog where Chris had instead decided that Jordan should have task initiative.

Task initiative (cont.)

Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: No, let’s check the battery. Hand me the voltmeter.

Give an example of a different dialog where Chris had instead decided that Jordan should have task initiative.

Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: Ok. We’re going to need a screwdriver.

Task initiative (cont.)

Initiative may be attached to each goal. During problem-solving, initiative may change back and forth between participants, depending on which goals the two participants are working on.

Definition: A participant is said to have task initiative over a goal if the participant dictates which reduction of the goal will be used by both participants during problem-solving.

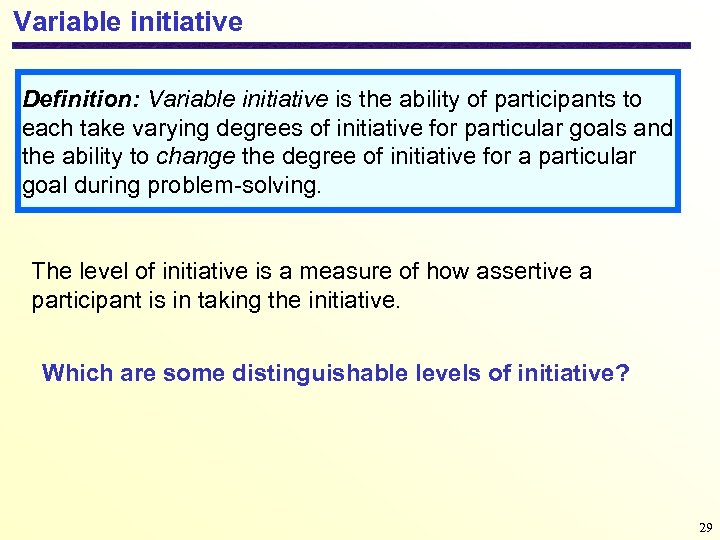

Variable initiative

Definition: Variable initiative is the ability of participants to each take varying degrees of initiative for particular goals, and the ability to change the degree of initiative for a particular goal during problem-solving.

The level of initiative is a measure of how assertive a participant is in taking the initiative. What are some distinguishable levels of initiative?

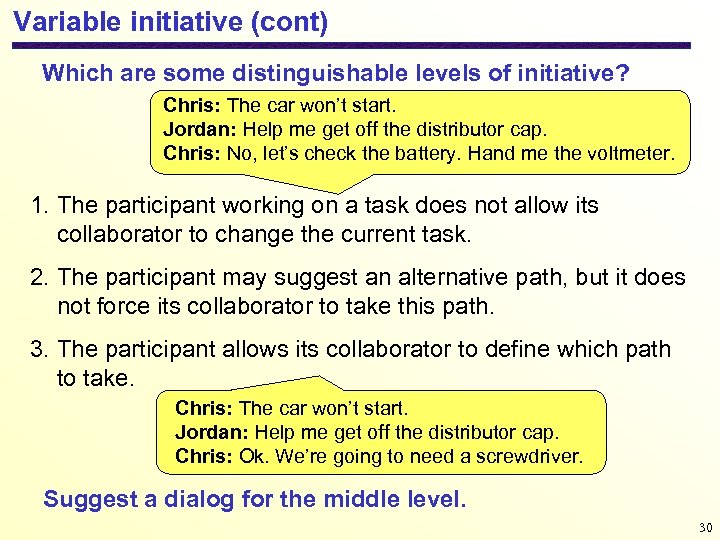

Variable initiative (cont.)

What are some distinguishable levels of initiative?
1. The participant working on a task does not allow its collaborator to change the current task.
2. The participant may suggest an alternative path, but it does not force its collaborator to take this path.
3. The participant allows its collaborator to define which path to take.

Example of level 1:
Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: No, let’s check the battery. Hand me the voltmeter.

Example of level 3:
Chris: The car won’t start.
Jordan: Help me get off the distributor cap.
Chris: Ok. We’re going to need a screwdriver.

Suggest a dialog for the middle level.
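The three levels can be sketched as an ordered enumeration plus a function that produces a participant’s reply when its collaborator proposes a different path. The member names `CONTROLS`, `SUGGESTS`, and `DEFERS`, the function `respond`, and the reply wording are hypothetical labels for the three levels listed above.

```python
from enum import IntEnum


class Initiative(IntEnum):
    """The three levels listed above, most assertive first."""
    CONTROLS = 1   # does not let the collaborator change the current task
    SUGGESTS = 2   # may suggest an alternative path, but does not force it
    DEFERS = 3     # lets the collaborator define which path to take


def respond(level: Initiative, own_path: str, proposed_path: str) -> str:
    """A participant's reply when its collaborator proposes a path (sketch)."""
    if level is Initiative.DEFERS or own_path == proposed_path:
        return f"OK, let's {proposed_path}."
    if level is Initiative.CONTROLS:
        return f"No, let's {own_path}."
    # SUGGESTS: offer the alternative but leave the choice to the collaborator.
    return f"We could {own_path} instead, but it is your call."


for level in Initiative:
    print(level.name, "->", respond(level, "check the battery",
                                    "get the distributor cap off"))
```

Using an `IntEnum` keeps the levels comparable, so a participant could, for instance, raise its level for goals it knows more about.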

Conflict resolution through negotiation

Negotiation is a process by which problem-solvers resolve conflict through the interchange of information. We will focus on using negotiation to resolve disputes over which reduction or branch to select for solving a goal. Negotiation resolves conflicts after they occur; that is, it is used to recover from conflicts. During negotiation, each participant argues for its choice for reducing a goal.

What types of arguments are provided in a negotiation?

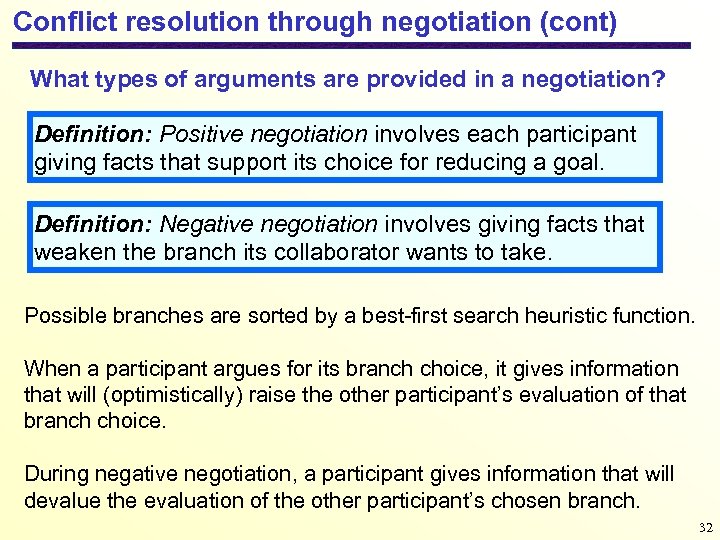

Conflict resolution through negotiation (cont.)

What types of arguments are provided in a negotiation?

Definition: Positive negotiation involves each participant giving facts that support its choice for reducing a goal.

Definition: Negative negotiation involves giving facts that weaken the branch its collaborator wants to take.

Possible branches are sorted by a best-first search heuristic function. When a participant argues for its branch choice, it gives information that will (optimistically) raise the other participant’s evaluation of that branch. During negative negotiation, a participant gives information that will lower the evaluation of the branch chosen by the other participant.

Conflict resolution through negotiation (cont.)

The winner of the negotiation is the participant whose chosen branch is ranked highest after the negotiation. If the heuristic evaluations are effective, the winner’s branch should be more likely to succeed than the loser’s branch, so negotiation should result in more efficient problem-solving.

Conflict resolution through negotiation (cont.)

Example: Two mechanics disagree on how to proceed in repairing a car. Chris gives a fact that lends evidence to the battery being the problem (positive negotiation). Jordan then gives a fact that reduces the likelihood of the battery’s failure (negative negotiation).

Jordan: Help me get the distributor cap off so we can check the spark plugs.
Chris: The lights were probably left on last night. It’s the battery.
Jordan: The voltage on the battery is fine.
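The mechanics example can be replayed in a small sketch. The slides do not say how facts change heuristic evaluations, so this assumes that each exchanged fact adds a fixed amount (positive or negative) to one branch’s score, and that both participants, hearing the same facts and using the same heuristic, share one score table. The class `Argument`, the functions `kind` and `negotiate`, and all numbers are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Argument:
    speaker: str
    fact: str
    branch: str      # the branch this fact bears on
    effect: float    # > 0 supports the branch, < 0 weakens it


def kind(arg: Argument, choices: Dict[str, str]) -> str:
    """Positive: supports the speaker's own branch. Negative: weakens another's."""
    own = choices[arg.speaker]
    if arg.branch == own and arg.effect > 0:
        return "positive"
    if arg.branch != own and arg.effect < 0:
        return "negative"
    return "other"


def negotiate(choices: Dict[str, str], scores: Dict[str, float],
              arguments: List[Argument]) -> str:
    """Apply every exchanged fact to the shared evaluation; the winner is the
    participant whose chosen branch is ranked highest afterwards."""
    updated = dict(scores)
    for arg in arguments:
        updated[arg.branch] += arg.effect
    return max(choices, key=lambda who: updated[choices[who]])


choices = {"Jordan": "check spark plugs", "Chris": "check battery"}
scores = {"check spark plugs": 0.5, "check battery": 0.4}   # made-up values
arguments = [
    Argument("Chris", "lights probably left on last night", "check battery", +0.3),
    Argument("Jordan", "battery voltage is fine", "check battery", -0.6),
]
print([kind(a, choices) for a in arguments])  # ['positive', 'negative']
print(negotiate(choices, scores, arguments))  # Jordan
```

Chris’s positive argument briefly puts the battery ahead (0.7 against 0.5), but Jordan’s negative argument drops it to about 0.1, so Jordan’s branch ranks highest and Jordan wins the negotiation.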

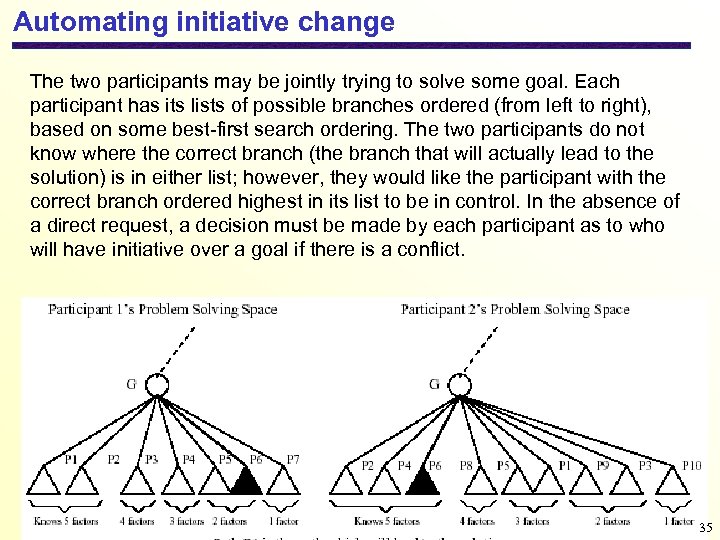

Automating initiative change

The two participants may be jointly trying to solve some goal. Each participant has its list of possible branches ordered (from left to right) by some best-first search ordering. Neither participant knows where the correct branch (the branch that will actually lead to the solution) is in either list; however, they would like the participant whose list ranks the correct branch highest to be in control.

In the absence of a direct request, each participant must decide who will have initiative over a goal if there is a conflict.

Automating initiative change (cont.)

Definition: An agent is said to have initiative over a mutual goal when that agent controls how that goal will be solved by the collaborators.

Consider that an initiative level is attached to each goal in the task tree:
- an agent may have initiative over one goal but not another;
- as goals are achieved and new goals are pursued, initiative changes accordingly;
- many initiative changes are done implicitly, based on which goal is being solved.

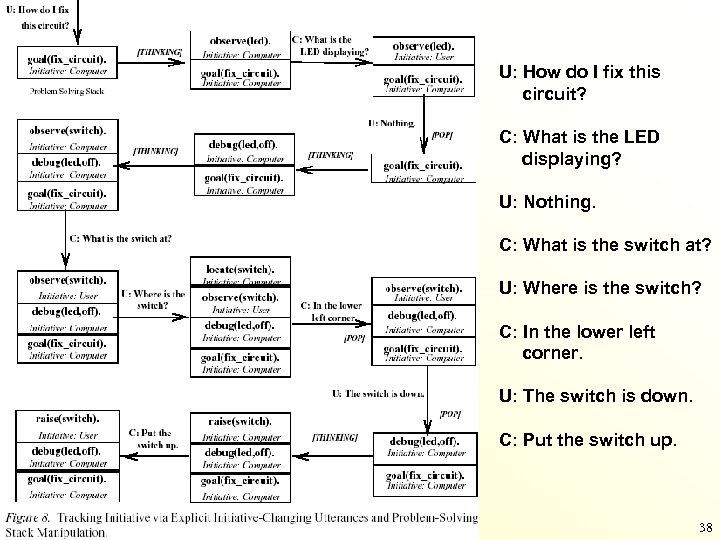

Automating initiative change (cont) When an agent A1 asks another agent A2 to satisfy a goal G, A2 gains initiative over G and over all subgoals of G, until A2 passes control of one of those subgoals back to A1. A similar initiative-setting mechanism is triggered if A1 announces that it cannot satisfy G. When G has been satisfied, initiative changes again, based on the current goal. 37
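The task-tree view of initiative can be sketched as follows, assuming (as a modeling choice, not something the slides prescribe) that a goal without an explicit initiative setting inherits it from its parent. Under that assumption, focusing on a different goal changes who leads without any explicit announcement, and handing a goal to another agent automatically covers its subgoals. The names `Goal`, `holder`, and `hand_over` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity comparison: each goal is a distinct node in the tree
class Goal:
    name: str
    parent: Optional["Goal"] = field(default=None, repr=False)
    initiative: Optional[str] = None  # explicit holder, or None to inherit from the parent
    subgoals: List["Goal"] = field(default_factory=list, repr=False)

    def add_subgoal(self, name: str) -> "Goal":
        child = Goal(name, parent=self)
        self.subgoals.append(child)
        return child


def holder(goal: Goal) -> Optional[str]:
    """The agent with initiative over `goal`: the nearest explicit setting on the path to the root."""
    node: Optional[Goal] = goal
    while node is not None:
        if node.initiative is not None:
            return node.initiative
        node = node.parent
    return None


def hand_over(goal: Goal, agent: str) -> None:
    """Give `agent` initiative over `goal`; its subgoals inherit it unless set explicitly.

    Covers the cases described above: A1 asks A2 to satisfy G (agent=A2),
    A1 announces it cannot satisfy G (agent=the other collaborator), and A2
    passes control of a subgoal back to A1 (goal=that subgoal, agent=A1).
    """
    goal.initiative = agent
```

Once G is satisfied and the focus moves to another goal, calling `holder` on the new current goal gives the new initiative holder.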


U: How do I fix this circuit? C: What is the LED displaying? U: Nothing. C: What is the switch at? U: Where is the switch? C: In the lower left corner. U: The switch is down. C: Put the switch up. 38


Some initiative selection schemes Assume a goal and two agents competing to take the initiative to achieve it. What kind of initiative selection schemes could you imagine? 39


Some initiative selection schemes Random Selection One agent is given initiative at random in the event of a conflict. The randomly selected agent then starts solving the goal, using its ordered list of possible branches as a guide. The chosen participant may not have the correct branch in its list; in this case, after exhausting its list, the agent passes the initiative to the other participant(s). What would be the usefulness of such a scheme? 40


Some initiative selection schemes What would be the usefulness of the random selection scheme? This scheme provides a baseline for initiative-setting algorithms: hopefully, a proposed algorithm will do better than random selection. Random selection also ensures that the system's behavior is not predictable. 41
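Random selection as a baseline can be sketched as a small simulation. The cost of a run is taken here to be the number of distinct branches tried before the correct one succeeds; this cost measure, and the assumption that a failed branch is known to every participant (so a later leader skips it), are illustrative choices. The names `explore`, `random_selection_cost`, and `random_baseline` are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def explore(order: Sequence[str], branch_lists: Dict[str, List[str]], correct: str) -> int:
    """Agents lead in `order`; each exhausts its own list before passing initiative on.

    Returns the number of distinct branches tried until `correct` is reached
    (or all of them, if no agent has it). Assumes a failed branch is known
    to every participant, so a later leader skips it.
    """
    tried = set()
    for agent in order:
        for branch in branch_lists[agent]:
            if branch in tried:
                continue
            tried.add(branch)
            if branch == correct:
                return len(tried)
    return len(tried)


def random_selection_cost(branch_lists: Dict[str, List[str]], correct: str,
                          rng: random.Random) -> int:
    order = list(branch_lists)
    rng.shuffle(order)  # the first agent in the shuffled order wins the conflict at random
    return explore(order, branch_lists, correct)


def random_baseline(branch_lists: Dict[str, List[str]], correct: str,
                    trials: int = 10_000, seed: int = 0) -> float:
    """Average cost of random selection: the figure a proposed scheme should beat."""
    rng = random.Random(seed)
    total = sum(random_selection_cost(branch_lists, correct, rng) for _ in range(trials))
    return total / trials
```

With `{"A1": ["b1", "b2", "b3"], "A2": ["b3", "b4"]}` and correct branch `"b3"`, a run costs 3 when A1 wins the draw and 1 when A2 does, so the baseline averages about 2.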


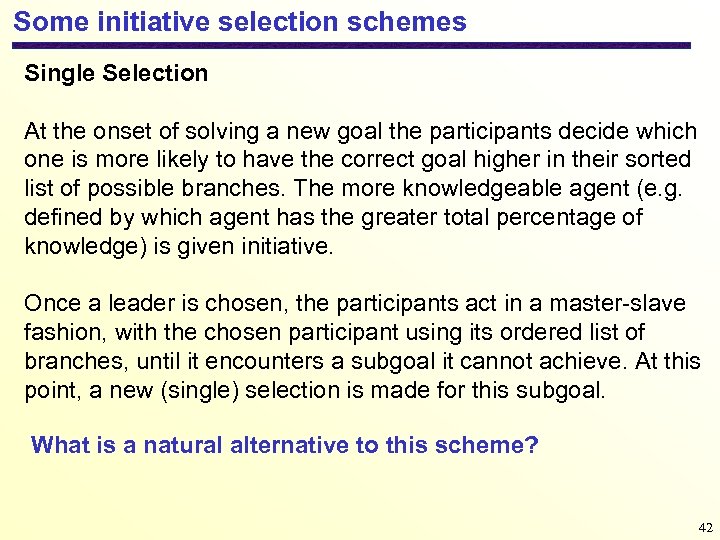

Some initiative selection schemes Single Selection At the onset of solving a new goal, the participants decide which one is more likely to have the correct branch higher in its sorted list of possible branches. The more knowledgeable agent (e.g., the agent with the greater total percentage of knowledge) is given initiative. Once a leader is chosen, the participants act in a master-slave fashion, with the chosen participant using its ordered list of branches until it encounters a subgoal it cannot achieve. At this point, a new (single) selection is made for this subgoal. What is a natural alternative to this scheme? 42
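A simplified sketch of single selection for one goal follows. It assumes a hypothetical `knowledge` score per agent (for instance, its share of the domain knowledge) and treats the goal as a flat list of branches, so "encountering a subgoal it cannot achieve" is modeled as the leader exhausting its list, after which a new single selection is made among the remaining agents. Failed branches are again assumed to be known to everyone. The function name `single_selection` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def single_selection(branch_lists: Dict[str, List[str]],
                     knowledge: Dict[str, float],
                     correct: str) -> Tuple[Optional[str], int]:
    """Simplified single selection for one goal.

    Returns (agent that found the correct branch, or None;
             number of distinct branches tried).
    """
    remaining = sorted(branch_lists)  # sorted only to make ties deterministic
    tried = set()
    while remaining:
        # The leader is chosen once and is not reconsidered after each failed branch.
        leader = max(remaining, key=lambda a: knowledge[a])
        remaining.remove(leader)
        # Master-slave phase: the leader works through its own ordering alone.
        for branch in branch_lists[leader]:
            if branch in tried:
                continue
            tried.add(branch)
            if branch == correct:
                return leader, len(tried)
        # The leader could not achieve the goal: make a new single selection among the others.
    return None, len(tried)
```

The weakness this exposes is that a knowledgeable agent keeps control even after its top-ranked branches have failed and another agent's first choice would now look more promising.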


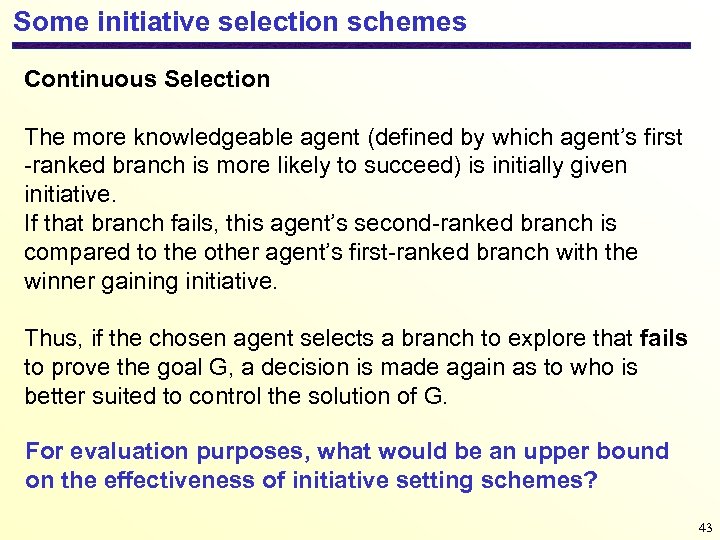

Some initiative selection schemes Continuous Selection The more knowledgeable agent (defined as the agent whose first-ranked branch is more likely to succeed) is initially given initiative. If that branch fails, this agent's second-ranked branch is compared to the other agent's first-ranked branch, and the winner gains initiative. Thus, whenever the agent in control selects a branch that fails to prove the goal G, a new decision is made as to which agent is better suited to control the solution of G. For evaluation purposes, what would be an upper bound on the effectiveness of initiative-setting schemes? 43
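Continuous selection can be sketched by re-deciding initiative before every branch. This sketch assumes that each agent attaches a hypothetical estimate of success probability to every branch in its list, and that failed branches are shared knowledge. At each step it compares the best untried branch of every agent (for two agents this is exactly the comparison described above), and the agent with the higher estimate gains initiative. The name `continuous_selection` is hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Ranked = Dict[str, List[Tuple[str, float]]]  # agent -> [(branch, estimated P(success)), ...], best first


def continuous_selection(ranked: Ranked, correct: str) -> Tuple[Optional[str], int]:
    """Returns (agent that tried the correct branch, or None; number of branches tried)."""
    tried = set()
    pos = {agent: 0 for agent in ranked}  # index of each agent's next candidate branch

    def next_untried(agent: str) -> Optional[Tuple[str, float]]:
        i = pos[agent]
        while i < len(ranked[agent]) and ranked[agent][i][0] in tried:
            i += 1  # skip branches that have already failed
        pos[agent] = i
        return ranked[agent][i] if i < len(ranked[agent]) else None

    while True:
        # Re-decide initiative before every branch: compare each agent's best untried branch.
        candidates = {}
        for agent in sorted(ranked):
            nxt = next_untried(agent)
            if nxt is not None:
                candidates[agent] = nxt
        if not candidates:
            return None, len(tried)
        leader = max(candidates, key=lambda a: candidates[a][1])
        branch = candidates[leader][0]
        tried.add(branch)
        if branch == correct:
            return leader, len(tried)
```

For instance, with `{"A1": [("b1", 0.6), ("b2", 0.2)], "A2": [("b3", 0.5), ("b2", 0.4)]}` and correct branch `"b2"`, A1 first tries b1, then A2 wins the comparison and tries b3, and finally A2 wins again with b2, for a total of three branches.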


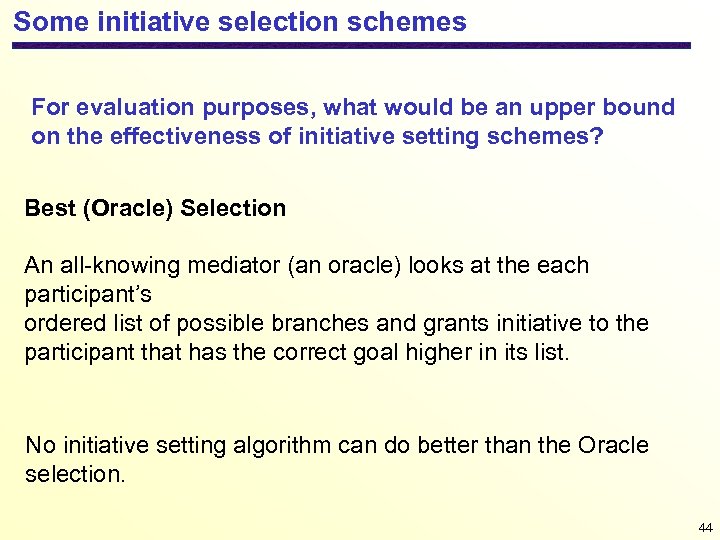

Some initiative selection schemes For evaluation purposes, what would be an upper bound on the effectiveness of initiative-setting schemes? Best (Oracle) Selection An all-knowing mediator (an oracle) looks at each participant's ordered list of possible branches and grants initiative to the participant that has the correct branch higher in its list. No initiative-setting algorithm can do better than Oracle selection. 44
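Under the simple cost model of counting branches tried, the oracle's cost is the best rank any agent gives the correct branch. A short argument for why nothing beats it, assuming every agent always tries its highest-ranked untried branch: whichever agent eventually tries the correct branch must first see every branch it ranks above it tried, so any scheme tries at least that many branches plus one. The sketch below computes this bound; the name `oracle_cost` is hypothetical, and a scheme's effectiveness could then be reported, for example, as the oracle cost divided by the scheme's cost.

```python
from typing import Dict, List, Optional


def oracle_cost(branch_lists: Dict[str, List[str]], correct: str) -> Optional[int]:
    """Branches tried when an oracle hands initiative to the agent ranking `correct` highest.

    That agent tries its list in order, so the cost is its 1-based rank of `correct`.
    Returns None when no agent has the correct branch.
    """
    ranks = [branches.index(correct) + 1
             for branches in branch_lists.values() if correct in branches]
    return min(ranks) if ranks else None
```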


Overview Introduction of the course’s topic An abstract model of a mixed-initiative system Mixed-initiative with human agents Issues in the development of mixed-initiative systems Course organization Student discussion and literature review Case study demo and discussion: Disciple Recommended reading 45


Mixed-initiative with human agents What challenges and opportunities are associated with involving human agents in mixed-initiative systems? 46


Mixed-initiative with human agents What challenges and opportunities are associated with involving human agents in mixed-initiative systems? Challenge: Involving a human in the interaction adds the complication that the system agents must use an interaction mode convenient to the human and support human-style problem solving. To do this, computer agents must be able to focus on different key subproblems, collaborate to find solutions (filling in details and identifying problem areas), and work with the person to resolve problems as they arise. Opportunity: Allow humans to solve more complex tasks, and to solve their tasks better, based on the complementarity of human and automated agents. 47


Principles of mixed-initiative user interfaces (Eric Horvitz) (1) Developing significant value-added automation. It is important to offer automated services that provide genuine value over solutions attainable with direct manipulation. 48


Principles of mixed-initiative user interfaces (2) Considering uncertainty about a user’s goals. Computers are often uncertain about the goals and the current focus of attention of a user. In many cases, systems can benefit by employing machinery for inferring and exploiting the uncertainty about a user’s intentions and focus. 49


Principles of mixed-initiative user interfaces (cont) (3) Considering the status of a user’s attention in the timing of services. The nature and timing of automated services and alerts can be a critical factor in the costs and benefits of actions. Agents should employ models of the attention of users and consider the costs and benefits of deferring action to a time when action will be less distracting. 50


Principles of mixed-initiative user interfaces (cont) (4) Inferring ideal action in light of costs, benefits, and uncertainties. Automated actions taken under uncertainty in a user’s goals and attention are associated with context-dependent costs and benefits. The value of automated services can be enhanced by guiding their invocation with a consideration of the expected value of taking actions. 51
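One way to apply this principle is a standard expected-utility comparison between acting and not acting, given the probability p that the user has some goal G. Let gain = u(act, G) − u(no act, G) and loss = u(no act, not G) − u(act, not G). Acting is preferred when p · gain > (1 − p) · loss, that is, when p exceeds the threshold p* = loss / (gain + loss). The sketch below assumes the four utility values are supplied by the application; the names `Utilities`, `expected_utility`, `action_threshold`, and `should_act` are hypothetical.

```python
from typing import Dict, Tuple

# Utilities keyed by (system acts?, user actually has goal G?); values are application-specific inputs.
Utilities = Dict[Tuple[bool, bool], float]


def expected_utility(act: bool, p_goal: float, u: Utilities) -> float:
    """Expected utility of acting (act=True) or not, given P(G | evidence) = p_goal."""
    return p_goal * u[(act, True)] + (1 - p_goal) * u[(act, False)]


def action_threshold(u: Utilities) -> float:
    """p* such that acting is preferred when p_goal > p*.

    Assumes acting helps when G holds (gain > 0) and hurts when it does not (loss > 0).
    """
    gain = u[(True, True)] - u[(False, True)]
    loss = u[(False, False)] - u[(True, False)]
    return loss / (gain + loss)


def should_act(p_goal: float, u: Utilities) -> bool:
    return expected_utility(True, p_goal, u) > expected_utility(False, p_goal, u)
```

With u(act, G) = 10, u(act, not G) = 0, u(no act, G) = 6, and u(no act, not G) = 8, the gain is 4 and the loss is 8, so the system should act only when p > 2/3.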


Principles of mixed-initiative user interfaces (cont) (5) Employing dialog to resolve key uncertainties. If a system is uncertain about a user’s intentions, it should be able to engage in an efficient dialog with the user, considering the costs of potentially bothering a user needlessly. 52
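Dialog can be added as a third option in the same expected-utility framing. The sketch below makes a strong simplifying assumption: asking the user always reveals whether the goal G holds, after which the system takes the better action for that case, minus a fixed `dialog_cost` for bothering the user. Both the assumption and the names `best_option` and `dialog_cost` are hypothetical.

```python
from typing import Dict, Tuple

Utilities = Dict[Tuple[bool, bool], float]  # (system acts?, user has goal G?) -> utility


def best_option(p_goal: float, u: Utilities, dialog_cost: float) -> Tuple[str, Dict[str, float]]:
    """Pick 'act', 'no_action', or 'dialog' by expected utility.

    Simplifying assumption: asking always reveals whether the user has goal G;
    the system then takes the better action for that case, minus the cost of
    bothering the user (dialog_cost).
    """
    eu = {
        "act": p_goal * u[(True, True)] + (1 - p_goal) * u[(True, False)],
        "no_action": p_goal * u[(False, True)] + (1 - p_goal) * u[(False, False)],
        "dialog": p_goal * max(u[(True, True)], u[(False, True)])
        + (1 - p_goal) * max(u[(True, False)], u[(False, False)])
        - dialog_cost,
    }
    return max(eu, key=eu.get), eu
```

Under these assumptions, dialog tends to win in a middle band of uncertainty, while confident beliefs favor acting or doing nothing. A higher `dialog_cost` lowers the value of asking at every probability, which narrows that band.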


Principles of mixed-initiative user interfaces (cont) (6) Allowing efficient direct invocation and termination. A system operating under uncertainty will sometimes make poor decisions about invoking (or not invoking) an automated service. The value of agents providing automated services can be enhanced by providing efficient means by which users can directly invoke or terminate the automated services. 53


Principles of mixed-initiative user interfaces (cont) (7) Minimizing the cost of poor guesses about action and timing. Designs for services and alerts should be undertaken with an eye to minimizing the cost of poor guesses, including appropriate timing out and natural gestures for rejecting attempts at service. 54


Principles of mixed-initiative user interfaces (cont) (8) Scoping precision of service to match uncertainty, variation in goals. We can enhance the value of automation by giving agents the ability to gracefully degrade the precision of service to match current uncertainty. A preference for “doing less” but doing it correctly under uncertainty can provide the user with a valuable advance towards a solution and minimize the need for costly undoing or backtracking. 55


Principles of mixed-initiative user interfaces (cont) (9) Providing mechanisms for efficient agent-user collaboration to refine results. We should design agents with the assumption that users may often wish to complete or refine an analysis provided by an agent. 56


Principles of mixed-initiative user interfaces (cont) (10) Employing socially appropriate behaviors for agent-user interaction. An agent should be endowed with tasteful default behaviors and courtesies that match social expectations for a benevolent assistant. 57


Principles of mixed-initiative user interfaces (cont) (11) Maintaining working memory of recent interactions. Systems should maintain a memory of recent interactions with users and provide mechanisms that allow users to make efficient and natural references to objects and services included in “shared” short-term experiences. 58
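A minimal sketch of such a working memory is a bounded queue of recently mentioned objects, tagged by kind, with references like "it" or "that message" resolved to the most recent matching entry. Recency-only resolution is a simplifying assumption (a real system would likely use a richer discourse model), and the class and method names are hypothetical.

```python
from collections import deque
from typing import Any, Optional


class InteractionMemory:
    """Bounded short-term memory of objects mentioned in recent interactions."""

    def __init__(self, capacity: int = 20) -> None:
        self._items: deque = deque(maxlen=capacity)  # oldest entries fall off automatically

    def record(self, kind: str, obj: Any) -> None:
        self._items.append((kind, obj))

    def resolve(self, kind: Optional[str] = None) -> Any:
        """Resolve a reference such as 'it' (kind=None) or 'that message' (kind='message')
        to the most recent matching object, or None if nothing matches."""
        for k, obj in reversed(self._items):
            if kind is None or k == kind:
                return obj
        return None
```

The fixed `capacity` keeps the memory genuinely short-term: older interactions are forgotten rather than matched by accident.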


Principles of mixed-initiative user interfaces (cont) (12) Continuing to learn by observing. Automated services should be endowed with the ability to continue to become better at working with users by continuing to learn about a user’s goals and needs. 59
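One simple form of learning by observing is to count how often the user accepts or rejects a service in a given context and turn the counts into a probability estimate. Such an estimate could feed the cost-benefit reasoning of principle (4). The sketch uses add-one (Laplace) smoothing, which equals the mean of a Beta(1, 1) prior updated with the observations. The context and service labels, and the class name, are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Tuple


class AcceptanceModel:
    """Learns from observed accept/reject reactions how often a service is wanted in a context."""

    def __init__(self) -> None:
        self._accepts: Dict[Tuple[Hashable, Hashable], int] = defaultdict(int)
        self._offers: Dict[Tuple[Hashable, Hashable], int] = defaultdict(int)

    def observe(self, context: Hashable, service: Hashable, accepted: bool) -> None:
        key = (context, service)
        self._offers[key] += 1
        if accepted:
            self._accepts[key] += 1

    def p_accept(self, context: Hashable, service: Hashable) -> float:
        """Smoothed estimate (accepts + 1) / (offers + 2); 0.5 before any observation."""
        key = (context, service)
        return (self._accepts[key] + 1) / (self._offers[key] + 2)
```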


Principles of mixed-initiative user interfaces (cont) When designing your system, consider to what extent you follow these principles. Are there any other useful principles? 60


Overview Introduction of the course’s topic An abstract model of a mixed-initiative system Mixed-initiative with human agents Issues in the development of mixed-initiative systems Course organization Student discussion and literature review Case study demo and discussion: Disciple Recommended reading 61


Issues in the development of mixed-initiative intelligent systems (MIIS): the task issue, the control issue, the awareness issue, the communication issue, the architecture issue, and the evaluation issue. 62


The task issue The division of responsibility between the human and the agent(s) for the tasks that need to be performed. What are some of the aspects related to this issue? Give some examples of tasks requiring a mixed-initiative approach. 63


The task issue (cont) What are some of the aspects related to the task issue? What are the tasks that the MI system has to perform? Why do these tasks require a mixed-initiative approach? What are the relative competences of the agents with respect to these tasks? How should the tasks be changed for a mixed-initiative approach? 64


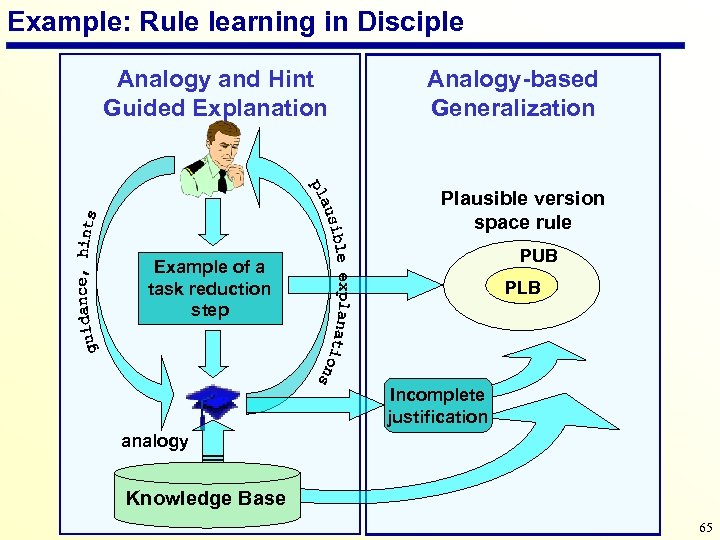

Example: Rule learning in Disciple (diagram labels: Example of a task reduction step; Knowledge Base; Analogy and Hint Guided Explanation; Incomplete justification; analogy; Analogy-based Generalization; Plausible version space rule with its PUB (plausible upper bound) and PLB (plausible lower bound) conditions) 65
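A plausible version space rule in Disciple has two conditions: a plausible upper bound (PUB) and a plausible lower bound (PLB). The sketch below shows, roughly, how such a rule can drive mixed-initiative interaction. It is a deliberate simplification: Disciple's actual bounds are expressions over an ontology with a generalization hierarchy, whereas here each bound is assumed to be a set of acceptable values per rule variable, and the PLB is assumed to lie inside the PUB. Instances between the two bounds are the uncertain cases that the agent can show to the expert, and the expert's feedback can then be used to refine the bounds. All names and values are hypothetical.

```python
from typing import Dict, Set

Bound = Dict[str, Set[str]]  # rule variable -> values the condition accepts (illustrative, extensional)


def satisfies(instance: Dict[str, str], bound: Bound) -> bool:
    return all(instance.get(var) in allowed for var, allowed in bound.items())


def classify(instance: Dict[str, str], plb: Bound, pub: Bound) -> str:
    """Place an instantiation of a rule relative to its plausible bounds (PLB assumed inside PUB)."""
    if satisfies(instance, plb):
        return "likely-positive"   # covered even by the conservative lower bound
    if satisfies(instance, pub):
        return "uncertain"         # inside the version space: a candidate to show the expert
    return "likely-negative"       # not covered even by the upper bound
```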


The control issue The shift of initiative and control between the human and the agent(s), including proactive behavior. What are some of the aspects related to this issue? 66


The control issue (cont) What are some of the aspects related to the control issue? What are the most appropriate strategies to shift the initiative and control (for the tasks considered)? What are some strategies? Random selection, single selection, continuous selection, … 67


The awareness issue The maintenance of a shared awareness with respect to the current state of the human and agent(s) involved. What are some of the aspects related to this issue? 68


The awareness issue (cont) What are some of the aspects related to this issue? What are the most appropriate models of: - the agent’s own capabilities; - the capabilities of other agents; - the world? How could the models be built and maintained? What are some strategies for awareness maintenance? 69


The awareness issue (cont) What are some strategies for awareness maintenance? - inferred state - explicit questioning - explicit information 70


The communication issue The protocols that facilitate the exchange of knowledge and information between the human and the agent(s), including mixed-initiative dialog and multi-modal interfaces. What are some of the aspects related to this issue? 71


The communication issue (cont) What are some of the aspects related to the communication issue? Complementarity of communication abilities Humans: Agents: Horvitz’s principles for mixed-initiative interactions. 72


The architecture issue The design principles, methodologies and technologies for different types of mixed-initiative roles and behaviors. What are some of the aspects related to this issue? 73


The architecture issue (cont) What are some of the aspects related to this issue? What are some architectural frameworks? What are the necessary components and their functionality? 74


The evaluation issue The contribution of the human and the automated agent(s) to the emergent behavior of the system, and the overall system's performance (e.g., versus fully automated, fully manual, or alternative mixed-initiative approaches). What are some of the aspects related to this issue? 75


Case Study: The evaluation issue How to Evaluate a Mixed-initiative System? Mike Pazzani’s caution: Don’t lose sight of the goal. • The metrics are just approximations of the goal. • Optimizing the metric may not optimize the goal. 76


Question: What is the goal to be optimized? Possible goals of mixed-initiative systems: General goal Mixed-initiative systems integrate human and automated reasoning to take advantage of their complementary reasoning styles and computational strengths. More specific goal Mixed-initiative systems combine the human’s experience, flexibility, creativity, … with the agent’s speed, memory, tirelessness … to take advantage of these complementary strengths. 77


Question: What is the goal to be optimized? Possible goals of mixed-initiative systems: Even more specific goal Mixed-initiative systems increase a human’s speed, memory, accuracy, competence, creativity … Other goals? … Why do we need precise goals? 78


Question: What is the goal to be optimized? Why do we need precise goals? The more precise the goal, the easier it is to evaluate: - simpler experiment design; - was the goal achieved? - why, or why not? 79


Question: How to evaluate the goal (or claim)? Mixed-initiative system X increases a human’s speed, memory, accuracy, competence, creativity … What are some sub-questions to answer in order to do this evaluation? 80


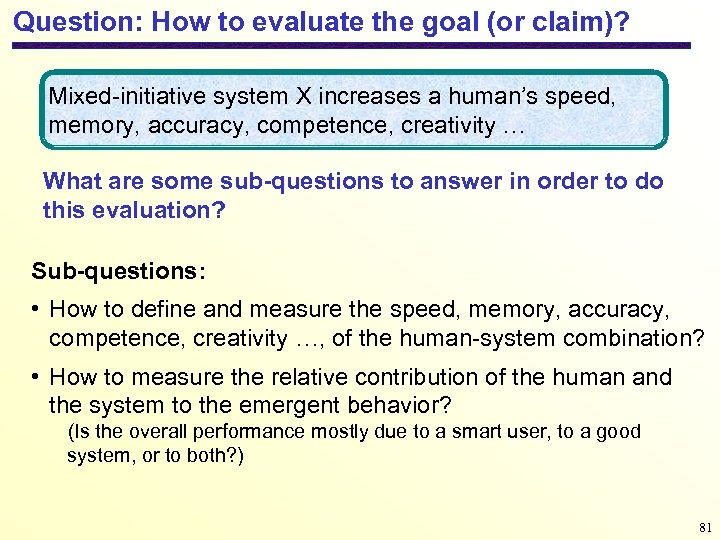

Question: How to evaluate the goal (or claim)? Mixed-initiative system X increases a human’s speed, memory, accuracy, competence, creativity … What are some sub-questions to answer in order to do this evaluation? Sub-questions: • How to define and measure the speed, memory, accuracy, competence, creativity …, of the human-system combination? • How to measure the relative contribution of the human and the system to the emergent behavior? (Is the overall performance mostly due to a smart user, to a good system, or to both?) 81


Compare to baseline behavior? Measure and compare speed, memory, accuracy, competence, creativity … for solving a class of problems in different settings. What are some of the settings to consider? 82


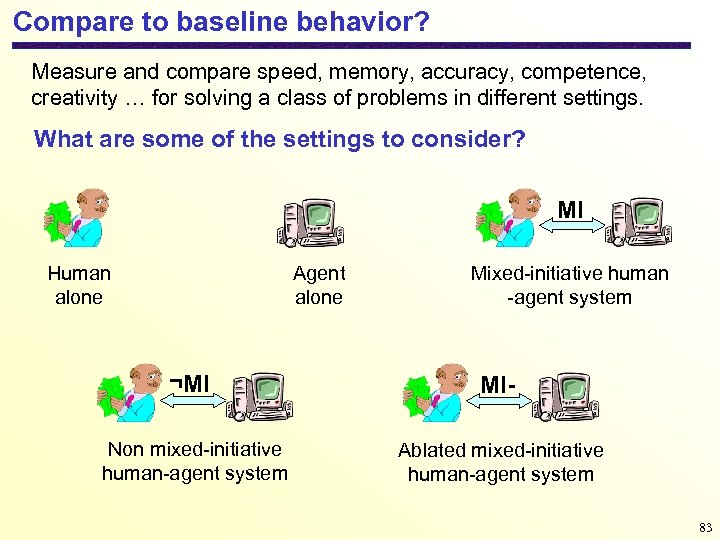

Compare to baseline behavior? Measure and compare speed, memory, accuracy, competence, creativity … for solving a class of problems in different settings. What are some of the settings to consider? Human alone; agent alone; mixed-initiative human-agent system (MI); non-mixed-initiative human-agent system (¬MI); ablated mixed-initiative human-agent system (MI-). 83
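Once one metric has been measured in each of the five settings, the comparisons can be made explicit, as in the following sketch. It assumes a single metric where higher is better (for a time-based metric, flip the sign), and that each score is already an average over many subjects and problems. The setting labels `"human"`, `"agent"`, `"MI"`, `"notMI"`, and `"MI-"` and the function name are hypothetical. The "synergy" entry checks whether the combination beats the better of its components working alone, which matches the idea that a mixed-initiative system can achieve more than any of its component agents.

```python
from typing import Dict


def compare_settings(scores: Dict[str, float]) -> Dict[str, float]:
    """scores: setting label -> measured value of one metric (higher = better)."""
    mi = scores["MI"]
    return {
        "vs_human_alone": mi - scores["human"],
        "vs_agent_alone": mi - scores["agent"],
        # Does the combination beat the better of its components working alone?
        "synergy": mi - max(scores["human"], scores["agent"]),
        # Value of mixed initiative itself, over a non-mixed-initiative human-agent system.
        "vs_non_mi": mi - scores["notMI"],
        # Contribution of the capability removed in the ablated system.
        "ablation_effect": mi - scores["MI-"],
    }
```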


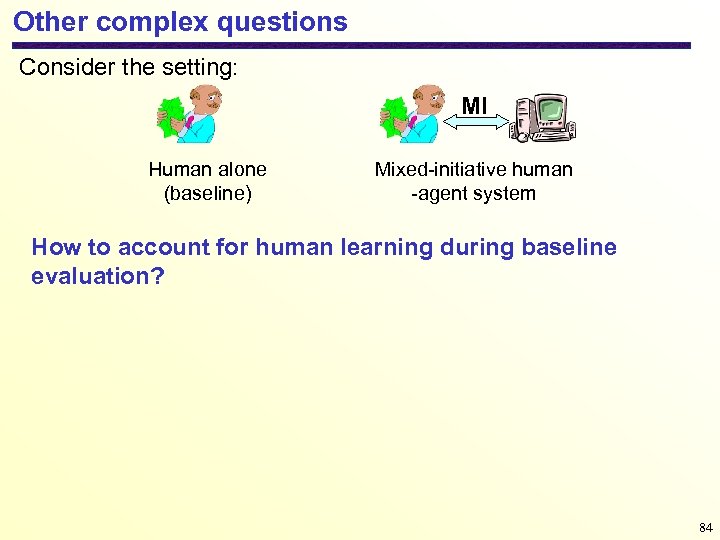

Other complex questions Consider the setting: human alone (baseline) versus mixed-initiative human-agent system (MI). How to account for human learning during baseline evaluation? 84


Other complex questions Consider the setting: human alone (baseline) versus mixed-initiative human-agent system (MI). How to account for human learning during baseline evaluation? Use other humans? How to account for human variability? Use many humans? How to pay for the associated cost? Replace a human with a simulation? How well does the simulation actually represent a human? Since the simulation is not perfect, how good is the result? How much does a good simulation cost? 85


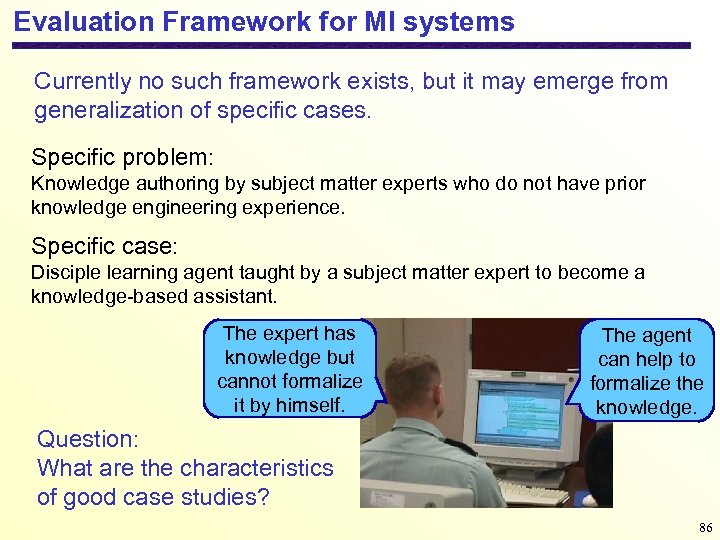

Evaluation Framework for MI systems Currently no such framework exists, but one may emerge from generalizing specific cases. Specific problem: Knowledge authoring by subject matter experts who do not have prior knowledge engineering experience. Specific case: A Disciple learning agent taught by a subject matter expert to become a knowledge-based assistant. The expert has the knowledge but cannot formalize it by himself; the agent can help formalize it. Question: What are the characteristics of good case studies? 86


Overview Introduction of the course’s topic An abstract model of a mixed-initiative system Mixed-initiative with human agents Issues in the development of mixed-initiative systems Course organization Student discussion and literature review Case study demo and discussion: Disciple Recommended reading 87

Course organization. The mixed-initiative issues mentioned in the previous section will be discussed in the context of current research on: • Mixed-initiative development of intelligent systems (e.g., knowledge engineering, knowledge acquisition, teaching and learning); • Specific mixed-initiative intelligent systems (e.g., planning systems, dialog systems, discovery systems, learning systems, design systems, tutoring systems); • Mixed-initiative maintenance of intelligent systems (e.g., knowledge base refinement and optimization); • Knowledge representation for mixed-initiative reasoning (e.g., ontologies and other shared representations suitable for both humans and agents). 88

Student expected work. Main course objective: the course is intended to help students make progress with their own dissertation research, in a synergistic framework where each one's progress contributes to the progress of the others. 89

Student expected work (cont.) - Study mixed-initiative reasoning in the context of their dissertation research. - Perform bibliographic research and contribute to the creation of an extended, up-to-date bibliography on mixed-initiative systems. - Study several state-of-the-art papers on mixed-initiative reasoning. - Analyze the papers from the point of view of the research topics mentioned in the previous section (i.e., task, control, awareness, communication, evaluation, and architecture). - Present the papers and their analysis to the class. - Actively participate in the weekly class discussions. - Actively participate in brainstorming discussions on applying these concepts to practical systems of interest to the students. 90

Student expected work (cont) - Study mixed-initiative in the context of their dissertation research. - Perform a bibliography research and contribute to the creation of an extended and up to date bibliography on mixed-initiative systems. - Study several state of the art papers in mixed-initiative reasoning. - Analyze the papers from the point of view of the research topics mentioned in the previous section (i. e. task, control, awareness, communication, evaluation, and architecture). - Present the papers and their analysis to the class. - Actively participate in the weekly class discussions. - Actively participate to brainstorming discussions on applying these concepts to practical systems of interest to the students. 90

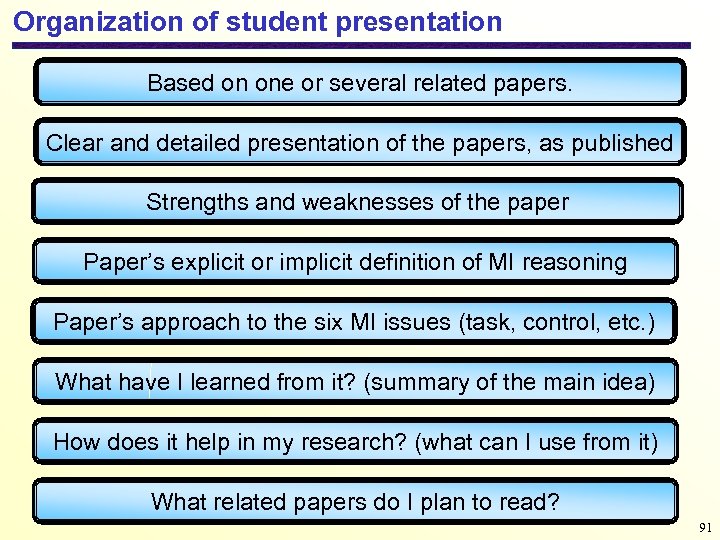

Organization of a student presentation. Based on one or several related papers: a clear and detailed presentation of the papers, as published; strengths and weaknesses of the papers; the papers' explicit or implicit definition of MI reasoning; the papers' approach to the six MI issues (task, control, etc.); What have I learned from it? (summary of the main idea); How does it help my research? (what I can use from it); What related papers do I plan to read? 91

Grading. There will be no exam. The final grade will be based on the students' contributions, defined as follows: - topic study and presentation to the class (significance, organization, clarity of presentation and analysis); - PowerPoint presentation(s) (with many questions to the audience); - contribution to the bibliography on mixed-initiative systems; - active participation in the class discussions (very important). 92

Student discussion What is your research area of interest? What would you like to accomplish in this course? 93

Case study demo and discussion: Disciple. Demonstrate some of Disciple's tools and discuss them from the point of view of mixed-initiative reasoning. 94

Recommended reading
- C. I. Guinn, "An Analysis of Initiative Selection in Collaborative Task-Oriented Discourse", User Modeling and User-Adapted Interaction 8(3): 255-314, Jan 1998. Search at http://www.kluweronline.com/issn/0924-1868 and download the PDF file (from a GMU computer).
- J. F. Allen, "Mixed-initiative interaction", in M. A. Hearst, "Trends & Controversies: Mixed-initiative interaction", IEEE Intelligent Systems 14(5): 14-23, 1999. http://www.cs.duke.edu/~cig/papers/ieee.pdf
- E. Horvitz, "Principles of Mixed-Initiative User Interfaces", in Proceedings of CHI '99, ACM SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, PA, May 1999, ACM Press, pp. 159-166. http://research.microsoft.com/~horvitz/uiact.htm
- G. Tecuci, M. Boicu, K. Wright and S. W. Lee, "Mixed-Initiative Development of Knowledge Bases", in Proceedings of the Sixteenth National Conference on Artificial Intelligence Workshop on Mixed-Initiative Intelligence, July 18-19, Orlando, Florida, AAAI Press, Menlo Park, CA, 1999. http://lalab.gmu.edu/publications/data/MIDKB-sent1999.pdf
95