
- Number of slides: 28

Sparse Word Graphs: A Scalable Algorithm for Capturing Word Correlations in Topic Models

Ramesh Nallapati

Joint work with John Lafferty, Amr Ahmed, William Cohen and Eric Xing

Machine Learning Department, Carnegie Mellon University

8/28/2007, 9:30 am, ICDM ’07 HPDM workshop

Introduction

- Statistical topic modeling: an attractive framework for topic discovery
  - Completely unsupervised
  - Models text very well
    - Lower perplexity compared to unigram models
  - Reveals meaningful semantic patterns
  - Can help summarize and visualize document collections
  - E.g.: PLSA, LDA, DPM, DTM, CTM, PA

Introduction

- A common assumption in all the variants:
  - Exchangeability: the “bag of words” assumption
  - Topics represented as a ranked list of words
- Consequences:
  - Word correlation information is lost
    - E.g.: “white-house” vs. “white” and “house”
    - Long-distance correlations

Introduction

- Objective:
  - To capture correlations between words within topics
- Motivation:
  - More interpretable representation of topics as a network of words rather than a list
  - Helps better visualize and summarize document collections
  - May reveal unexpected relationships and patterns within topics

![Past Work: Topic Models](https://present5.com/presentation/23c6e3924fc9f2a510999cced5290f3d/image-5.jpg)

Past Work: Topic Models

- Bigram topic models [Wallach, ICML 2006]
  - Requires KV(V-1) parameters
  - Only captures local dependencies
  - Does not model sparsity of correlations
  - Does not capture “within-topic” correlations

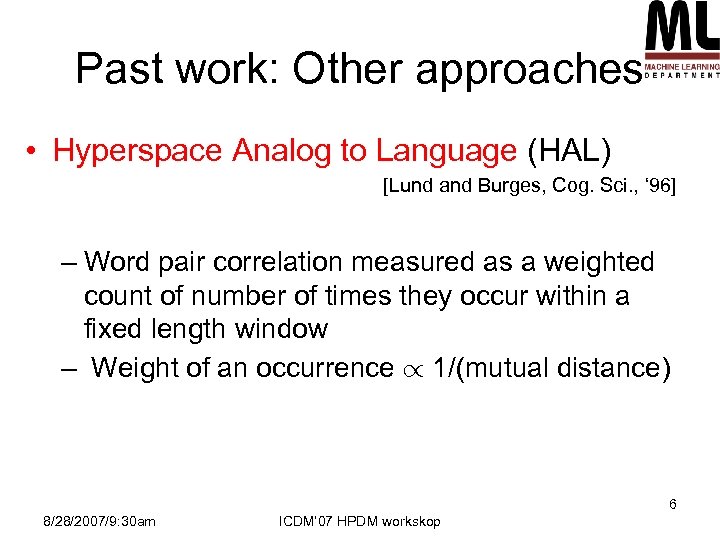

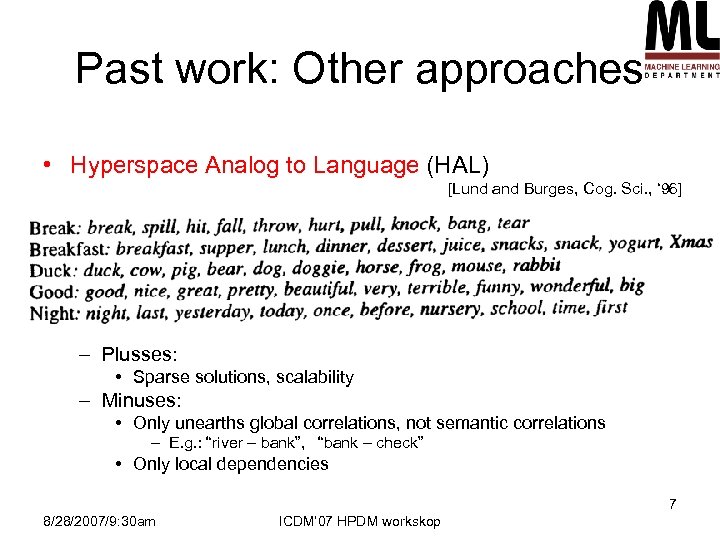

Past work: Other approaches

- Hyperspace Analog to Language (HAL) [Lund and Burgess, Cog. Sci., ’96]
  - Word pair correlation measured as a weighted count of the number of times the two words occur within a fixed-length window
  - Weight of an occurrence ∝ 1/(mutual distance)
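The windowed, distance-weighted count described on this slide can be sketched in a few lines. This is an illustration only: the function name `hal_weights`, the symmetric treatment of word pairs, and the choice to skip a word paired with itself are assumptions made for the example, not details taken from the slide or from the HAL paper.

```python
from collections import defaultdict


def hal_weights(tokens, window=5):
    """Sum 1/distance over every pair of distinct words within `window` tokens."""
    weights = defaultdict(float)
    for i, left in enumerate(tokens):
        for distance in range(1, window + 1):
            j = i + distance
            if j >= len(tokens):
                break
            right = tokens[j]
            if left == right:
                continue  # assumption: a word is not correlated with itself
            pair = tuple(sorted((left, right)))  # assumption: unordered pairs
            weights[pair] += 1.0 / distance
    return dict(weights)


tokens = "white house press white house".split()
weights = hal_weights(tokens, window=2)
for pair, weight in sorted(weights.items()):
    print(pair, weight)
```

Because the count ignores which topic each occurrence belongs to, a pair such as "bank" and "river" is scored the same way in every context, which is the limitation the next slide points out.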

Past work: Other approaches

- Hyperspace Analog to Language (HAL) [Lund and Burgess, Cog. Sci., ’96]
  - Pluses:
    - Sparse solutions, scalability
  - Minuses:
    - Only unearths global correlations, not semantic correlations
      - E.g.: “river – bank”, “bank – check”
    - Only local dependencies

Past work: Other approaches

- Query expansion in IR
  - Similar in spirit: finds words that frequently co-occur with the query words
  - However, not a corpus visualization tool: requires a context to operate on
- WordNet
  - Semantic networks
  - Human labeled: not directly related to our goal

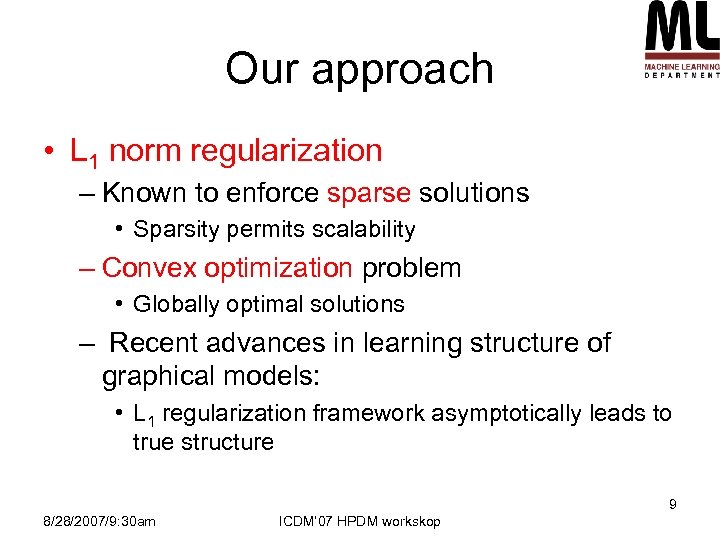

Our approach

- L1-norm regularization
  - Known to enforce sparse solutions
    - Sparsity permits scalability
  - Convex optimization problem
    - Globally optimal solutions
  - Recent advances in learning the structure of graphical models:
    - The L1 regularization framework asymptotically leads to the true structure

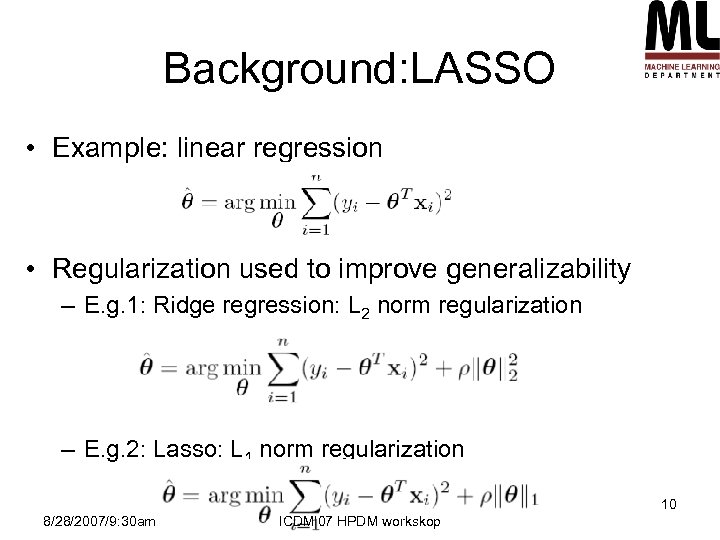

Background: LASSO

- Example: linear regression
- Regularization used to improve generalizability
  - E.g. 1: Ridge regression: L2-norm regularization
  - E.g. 2: Lasso: L1-norm regularization

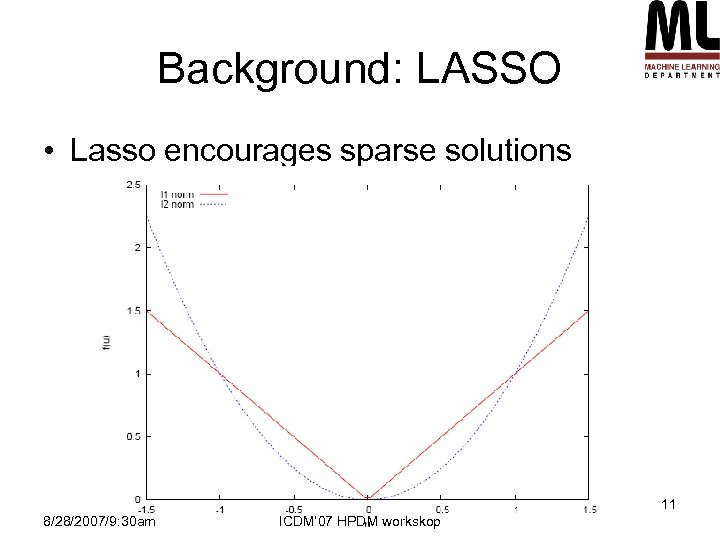

Background: LASSO

- Lasso encourages sparse solutions
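A one-coefficient example shows why the L1 penalty yields exact zeros while the L2 penalty does not. The sketch below assumes the simplest possible setting, a single coefficient with unpenalized least-squares value `b_ols`, so both penalized problems have closed forms: minimizing `0.5*(b - b_ols)**2 + 0.5*lam*b**2` for ridge and `0.5*(b - b_ols)**2 + lam*abs(b)` for lasso. The function names and the numbers are illustrative, not taken from the slides.

```python
def ridge_shrink(b_ols, lam):
    """Ridge rescales the coefficient; it is zero only if b_ols is zero."""
    return b_ols / (1.0 + lam)


def lasso_shrink(b_ols, lam):
    """Lasso soft-thresholds: anything within lam of zero becomes exactly zero."""
    if b_ols > lam:
        return b_ols - lam
    if b_ols < -lam:
        return b_ols + lam
    return 0.0


ols = [3.0, 0.4, -0.2, -1.5]   # hypothetical unpenalized coefficients
lam = 0.5
ridge = [ridge_shrink(b, lam) for b in ols]
lasso = [lasso_shrink(b, lam) for b in ols]
print("ridge:", ridge)
print("lasso:", lasso)
```

The two small coefficients survive ridge as small nonzero values but are removed entirely by the lasso, which is the behaviour the rest of the talk relies on to obtain sparse graphs.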

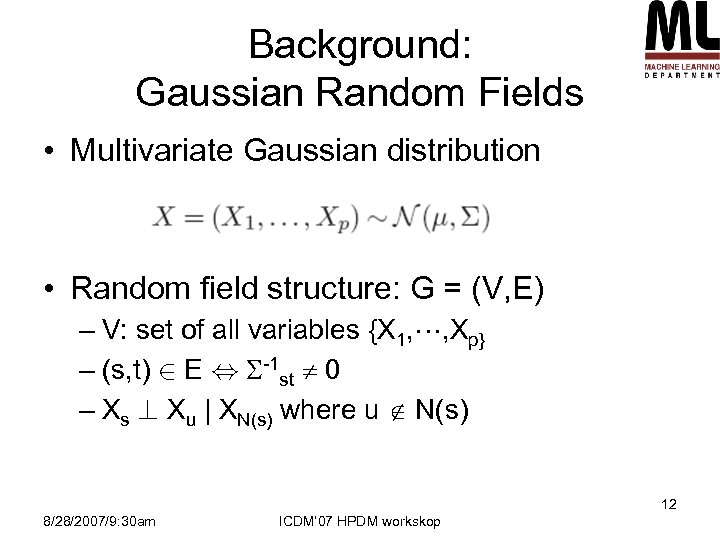

Background: Gaussian Random Fields

- Multivariate Gaussian distribution
- Random field structure: $G = (V, E)$
  - $V$: set of all variables $\{X_1, \ldots, X_p\}$
  - $(s, t) \in E \iff \Sigma^{-1}_{st} \neq 0$
  - $X_s \perp X_u \mid X_{N(s)}$ where $u \notin N(s)$
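The link between zeros of the inverse covariance matrix and missing edges can be checked on a three-variable chain X0 - X1 - X2. The sketch below is a toy illustration: the precision matrix is made up for the example, and `invert` is a small Gauss-Jordan helper written here with exact fractions, not a library routine.

```python
from fractions import Fraction


def invert(matrix):
    """Gauss-Jordan inverse using exact rational arithmetic."""
    n = len(matrix)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


# Hypothetical precision (inverse covariance) matrix of a chain X0 - X1 - X2.
precision = [[2, -1, 0],
             [-1, 2, -1],
             [0, -1, 2]]
covariance = invert(precision)

# Edges are read off the nonzero off-diagonal entries of the precision matrix.
edges = {(s, t) for s in range(3) for t in range(s + 1, 3) if precision[s][t] != 0}
print("edges:", sorted(edges))
print("cov(X0, X2):", covariance[0][2])
```

X0 and X2 are correlated (their covariance is nonzero) even though there is no edge between them: the missing edge encodes independence only after conditioning on the neighbor X1.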

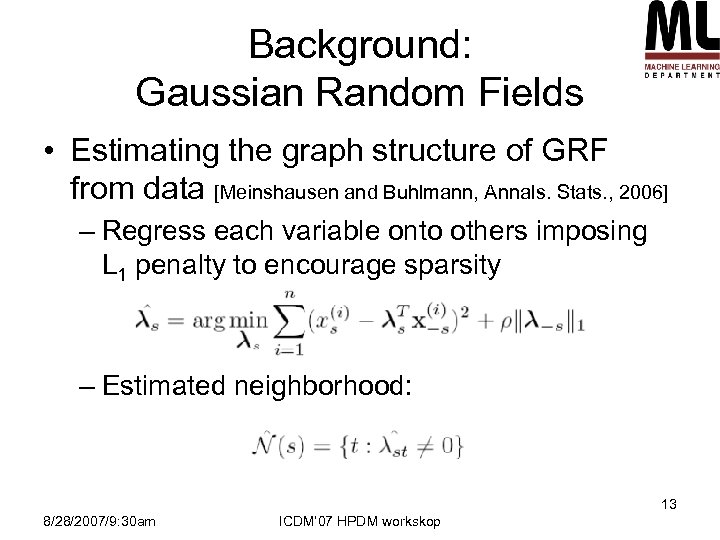

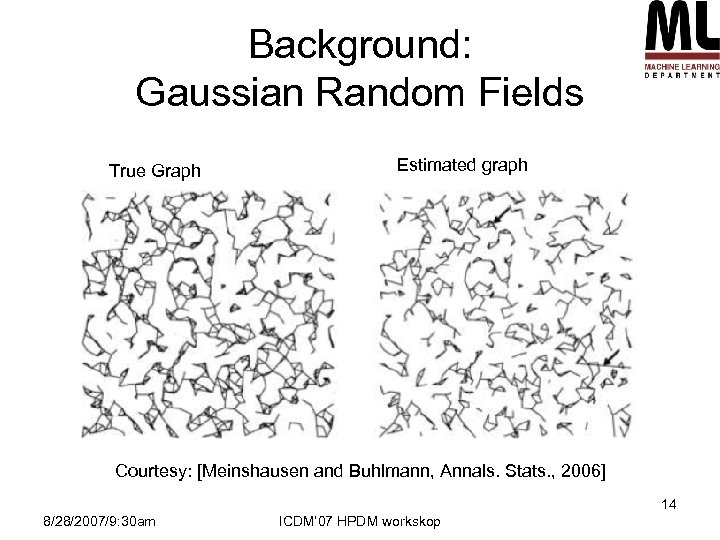

Background: Gaussian Random Fields

- Estimating the graph structure of a GRF from data [Meinshausen and Bühlmann, Annals. Stats., 2006]
  - Regress each variable onto the others, imposing an L1 penalty to encourage sparsity
  - Estimated neighborhood:
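The neighborhood-selection idea can be sketched as follows: for each variable, solve a lasso regression on all the others and take the variables with nonzero coefficients as its estimated neighbors. To keep the example deterministic it works from a known covariance matrix instead of a sample (an assumption of this sketch; in practice the covariance would be estimated from data), uses plain coordinate descent, and picks `lam = 0.05` arbitrarily. The helper names are hypothetical.

```python
def soft_threshold(value, lam):
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


def lasso_neighborhood(cov, s, lam, sweeps=200):
    """Lasso regression of variable s on the rest, written in covariance form.

    Minimizes 0.5*b'Cb - c'b + lam*||b||_1 by coordinate descent, where C is
    the covariance among the other variables and c their covariance with s.
    Returns the indices whose coefficient is nonzero.
    """
    others = [t for t in range(len(cov)) if t != s]
    beta = {t: 0.0 for t in others}
    for _ in range(sweeps):
        for t in others:
            rho = cov[s][t] - sum(cov[t][u] * beta[u] for u in others if u != t)
            beta[t] = soft_threshold(rho, lam) / cov[t][t]
    return {t for t in others if beta[t] != 0.0}


# Covariance of a three-variable chain X0 - X1 - X2 (toy values).
cov = [[0.75, 0.5, 0.25],
       [0.5, 1.0, 0.5],
       [0.25, 0.5, 0.75]]
neighborhoods = {s: lasso_neighborhood(cov, s, lam=0.05) for s in range(3)}

# Combine neighborhoods with the "or" rule: keep an edge if either end selects it.
edges = {tuple(sorted((s, t))) for s, nbrs in neighborhoods.items() for t in nbrs}
print(neighborhoods)
print(sorted(edges))
```

Although X0 and X2 have nonzero covariance, neither selects the other once X1 is in the regression, so the chain structure is recovered. An "and" rule (keep an edge only if both ends select it) is the other common way to combine the per-variable neighborhoods.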

Background: Gaussian Random Fields

Figure panels: true graph, estimated graph. Courtesy: [Meinshausen and Bühlmann, Annals. Stats., 2006]

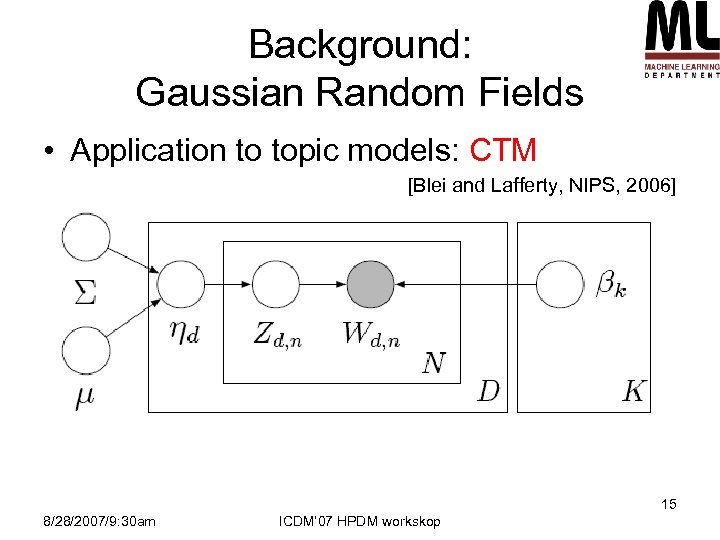

Background: Gaussian Random Fields

- Application to topic models: CTM [Blei and Lafferty, NIPS, 2006]

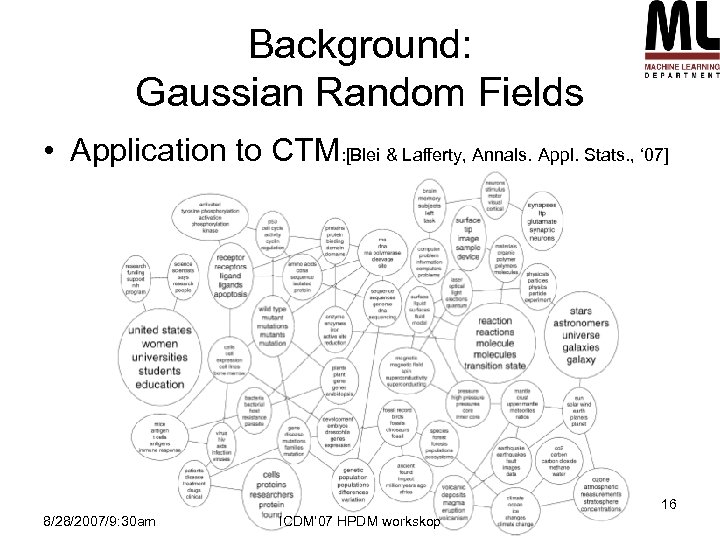

Background: Gaussian Random Fields

- Application to CTM: [Blei & Lafferty, Annals. Appl. Stats., ’07]

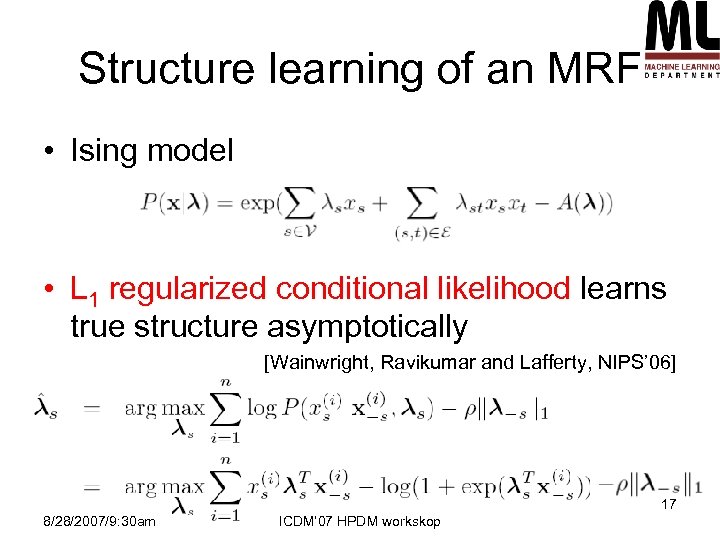

Structure learning of an MRF

- Ising model
- L1-regularized conditional likelihood learns the true structure asymptotically [Wainwright, Ravikumar and Lafferty, NIPS ’06]

![Structure learning of an MRF](https://present5.com/presentation/23c6e3924fc9f2a510999cced5290f3d/image-18.jpg)

Structure learning of an MRF

Courtesy: [Wainwright, Ravikumar and Lafferty, NIPS ’06]

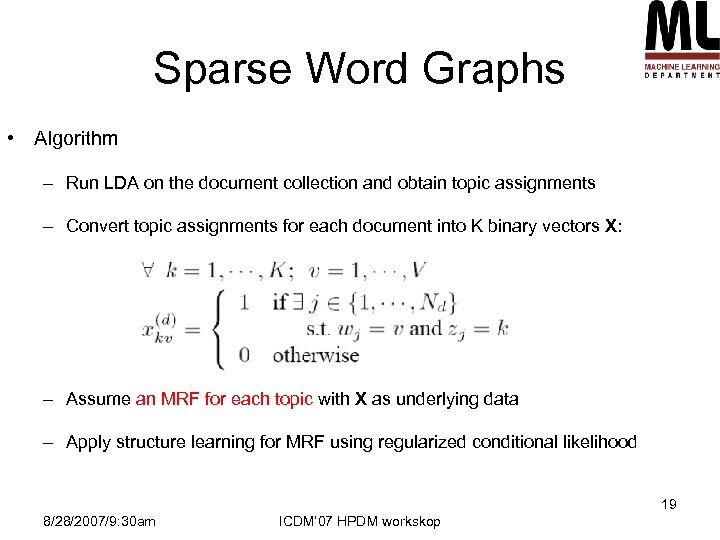

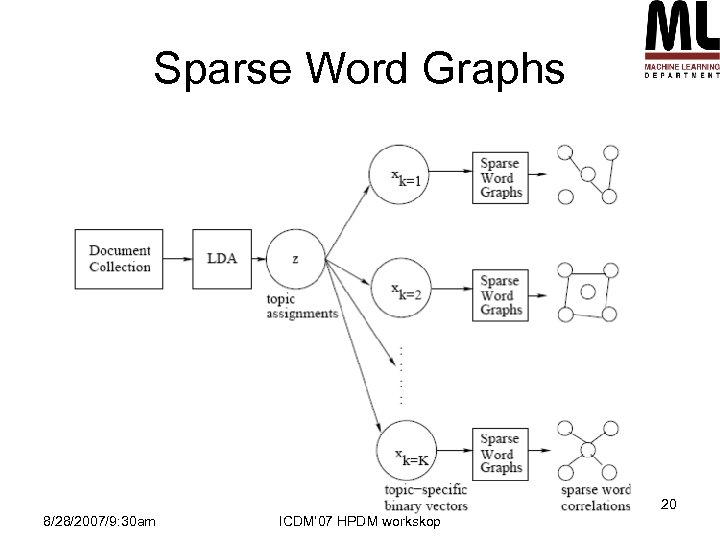

Sparse Word Graphs

- Algorithm
  - Run LDA on the document collection and obtain topic assignments
  - Convert the topic assignments for each document into K binary vectors X:
  - Assume an MRF for each topic with X as the underlying data
  - Apply structure learning for the MRF using regularized conditional likelihood
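The conversion step can be sketched as below. The exact definition of X is not recoverable from this transcript, so the sketch assumes the natural reading: for document d and topic k, entry w is 1 when at least one occurrence of word w in d was assigned to topic k. The function name and the toy document are hypothetical.

```python
def binary_topic_vectors(doc_assignments, vocab, num_topics):
    """Turn one document's (word, topic) assignments into K binary vectors.

    Assumed encoding: vectors[k][i] is 1 if vocab[i] occurs in the document
    with at least one token assigned to topic k, and 0 otherwise.
    """
    index = {word: i for i, word in enumerate(vocab)}
    vectors = [[0] * len(vocab) for _ in range(num_topics)]
    for word, topic in doc_assignments:
        vectors[topic][index[word]] = 1
    return vectors


vocab = ["bank", "loan", "river"]
# Hypothetical LDA output for one document: each token with its sampled topic.
doc = [("bank", 0), ("loan", 0), ("river", 1), ("bank", 1)]
vectors = binary_topic_vectors(doc, vocab, num_topics=2)
print(vectors)
```

Stacking the topic-k vectors of all documents gives the binary data matrix on which the per-topic MRF structure is learned, so the same word ("bank" here) can acquire different neighbors in different topics.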

Sparse Word Graphs

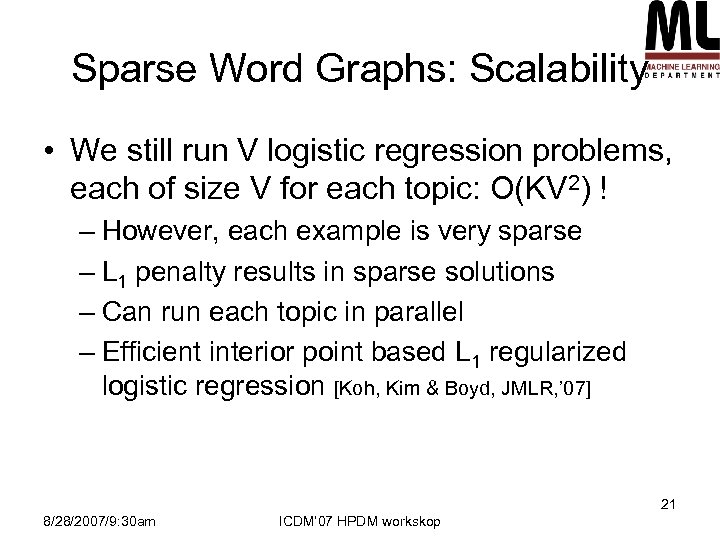

Sparse Word Graphs: Scalability

- We still run V logistic regression problems, each of size V, for each topic: $O(KV^2)$!
  - However, each example is very sparse
  - The L1 penalty results in sparse solutions
  - Can run each topic in parallel
  - Efficient interior-point-based L1-regularized logistic regression [Koh, Kim & Boyd, JMLR, ’07]
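One of the V per-word problems can be sketched as an L1-regularized logistic regression that predicts a word's presence from the presence of the other words; the words with nonzero weight form its neighborhood. The sketch uses proximal gradient descent (gradient step followed by soft-thresholding), not the interior-point method cited on the slide, and the data, step size and penalty are toy values chosen for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def soft_threshold(value, lam):
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


def l1_logistic(rows, targets, lam, step=1.0, iters=2000):
    """Minimize mean logistic loss + lam * ||w||_1 (intercept unpenalized)."""
    n, p = len(rows), len(rows[0])
    w, b = [0.0] * p, 0.0
    for _ in range(iters):
        resid = [sigmoid(b + sum(wj * xj for wj, xj in zip(w, x))) - y
                 for x, y in zip(rows, targets)]
        b -= step * sum(resid) / n
        for j in range(p):
            grad = sum(r * x[j] for r, x in zip(resid, rows)) / n
            w[j] = soft_threshold(w[j] - step * grad, step * lam)
    return w, b


# Toy data: the target word mostly co-occurs with word 0; word 1 is unrelated.
rows, targets = [], []
for x1 in (0, 1):
    for x0, y, count in [(1, 1, 3), (1, 0, 1), (0, 0, 3), (0, 1, 1)]:
        rows += [[x0, x1]] * count
        targets += [y] * count

w, b = l1_logistic(rows, targets, lam=0.05)
neighbors = [j for j, wj in enumerate(w) if wj != 0.0]
print("weights:", w, "neighbors:", neighbors)
```

Only the informative word keeps a nonzero weight. Each such problem depends only on its own topic's data matrix, which is why the topics (and the words within a topic) can be processed in parallel.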

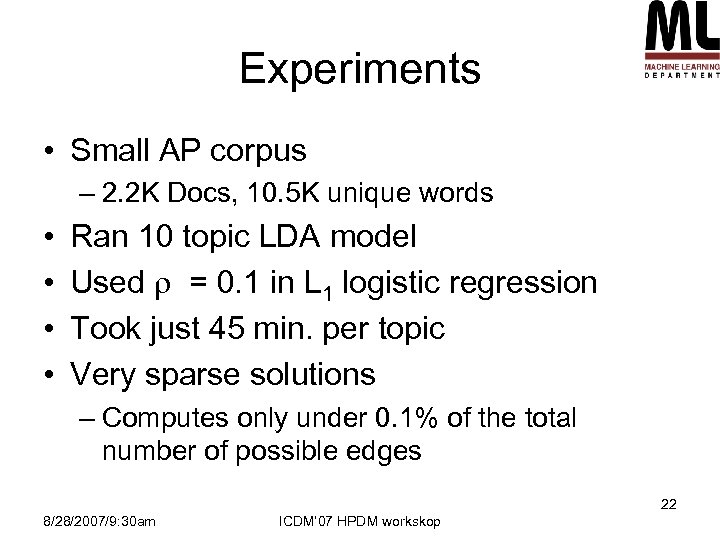

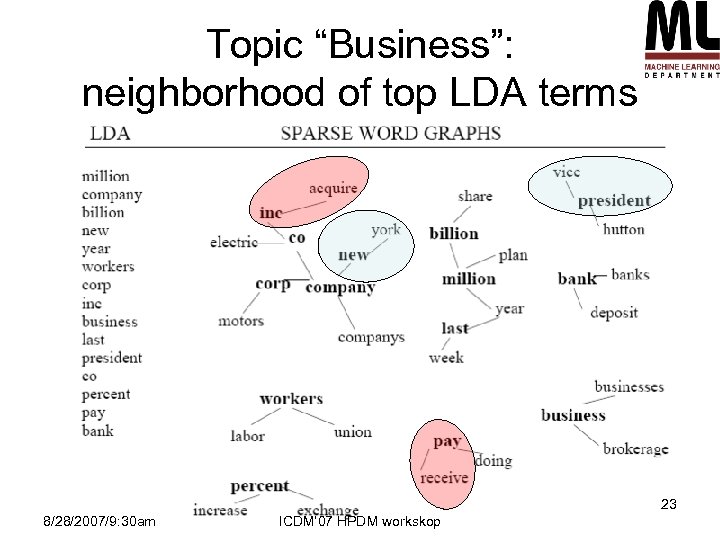

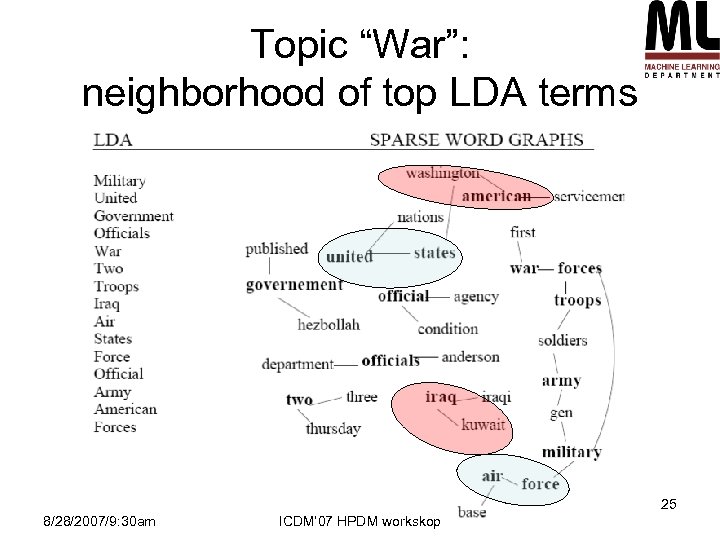

Experiments

- Small AP corpus
  - 2.2K docs, 10.5K unique words
- Ran a 10-topic LDA model
- Used a regularization parameter of 0.1 in L1 logistic regression
- Took just 45 min. per topic
- Very sparse solutions
  - Computes under 0.1% of the total number of possible edges
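To put the sparsity figure in perspective, a quick back-of-the-envelope calculation helps. It assumes the vocabulary is exactly 10,500 words (the slide gives the rounded figure 10.5K) and counts undirected word pairs; the variable names are illustrative.

```python
vocab_size = 10_500          # assumption: "10.5K unique words" taken as exact
possible_edges = vocab_size * (vocab_size - 1) // 2   # undirected word pairs
edge_budget = possible_edges * 0.001                  # 0.1% of all pairs

print(f"possible edges per topic: {possible_edges:,}")
print(f"0.1% of that: about {edge_budget:,.0f}")
```

Under these assumptions each topic graph keeps at most roughly 55 thousand of about 55 million candidate edges.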

Topic “Business”: neighborhood of top LDA terms

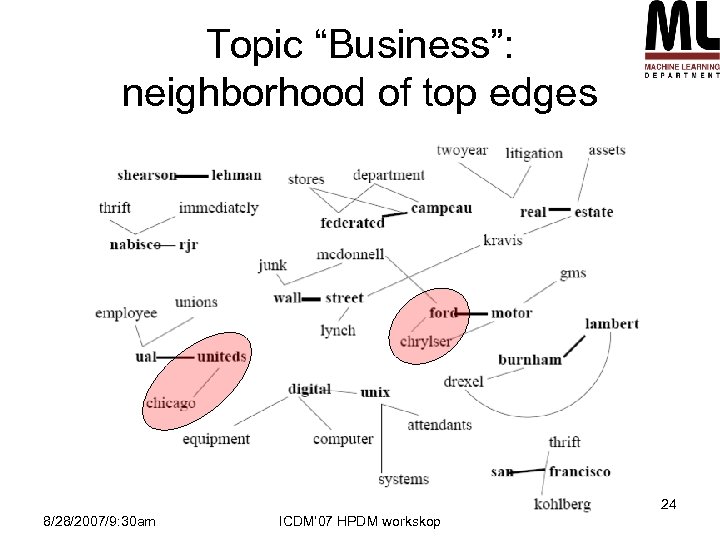

Topic “Business”: neighborhood of top edges

Topic “War”: neighborhood of top LDA terms

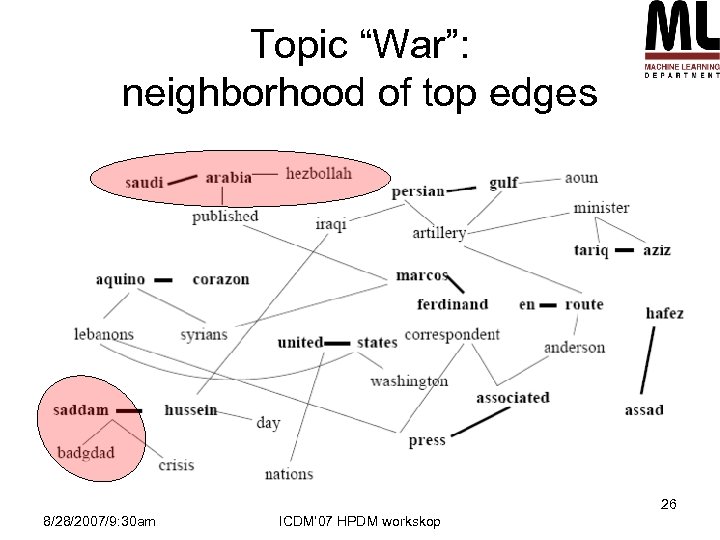

Topic “War”: neighborhood of top edges

Concluding remarks

- Pros
  - A highly scalable algorithm for capturing within-topic word correlations
  - Captures both short-distance and long-distance correlations
  - Makes topics more interpretable
- Cons
  - Not a complete probabilistic model
    - Significant modeling challenge since the correlations are latent

Concluding remarks

- Applications of Sparse Word Graphs
  - Better document summarization and visualization tool
  - Word sense disambiguation
  - Semantic query expansion
- Future work
  - Evaluation on a “real task”
  - Build a unified statistical model
