
- Number of slides: 83

Some Observations on Hindi Dependency Parsing
Samar Husain
Language Technologies Research Centre, IIIT-Hyderabad

Introduction
• Parsing a free word order language with (relatively) rich morphology is a challenging task
  – Methods, problems, causes
• Experiments with Hindi


Hindi: Brief overview
• malaya ne sameer ko kitaba dii.
  Malay ERG Sameer DAT book gave
  “Malay gave the book to Sameer” (S-IO-DO-V)
• Other possible orders: S-DO-IO-V, IO-S-DO-V, IO-DO-S-V, DO-S-IO-V, DO-IO-S-V
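The six orders on this slide are simply the permutations of the three arguments with the verb held in final position. A minimal sketch that enumerates them (the function name and the role abbreviations as plain strings are illustrative assumptions, not part of the talk):

```python
from itertools import permutations

def verb_final_orders(arguments=("S", "IO", "DO"), verb="V"):
    """List every ordering of the arguments with the verb kept in final position."""
    return ["-".join(order + (verb,)) for order in permutations(arguments)]

orders = verb_final_orders()
print(orders)
```

With three arguments this gives 3! = 6 orders, which is why a parser for Hindi cannot rely on position alone to tell the subject from the objects.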

Hindi: Brief overview
• Inflections
  – Gender, number, person
  – Tense, aspect and modality
• Agreement
  – Noun-adjective
  – Noun-verb


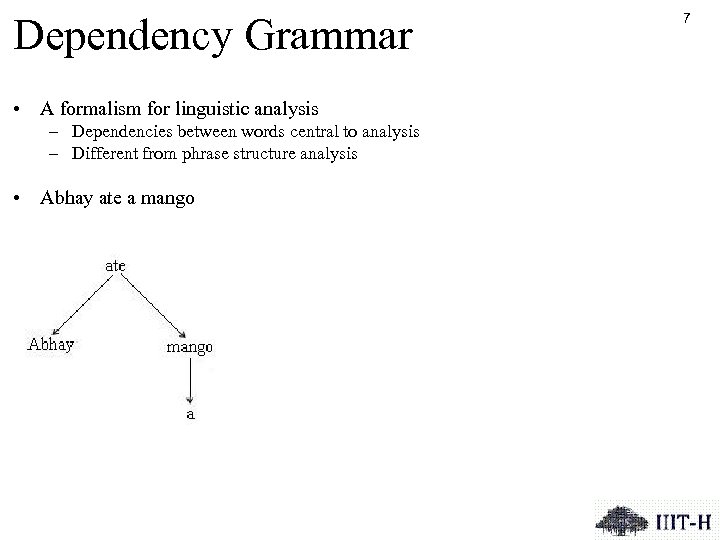


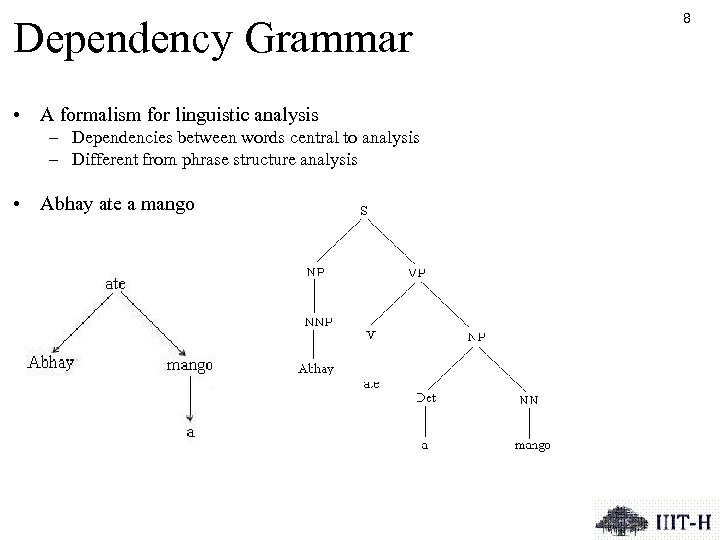

Dependency Grammar
• A formalism for linguistic analysis
  – Dependencies between words central to analysis
  – Different from phrase structure analysis
• Abhay ate a mango

• Dependency Tree
  – Root property
  – Spanning property
  – Connectedness property
  – Single head property
  – Acyclicity property
  – Arc size property
Kübler et al. (2009)
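The listed properties can be checked mechanically on a candidate analysis. The sketch below is one possible reading of them, assuming words are numbered from 1, an artificial root node 0, and arcs given as (head, dependent) pairs; the function name and the encoding are assumptions made for illustration, not taken from the slides. Connectedness and acyclicity are tested together by walking from each word up to the root.

```python
def check_dependency_tree(n_words, arcs):
    """Check (head, dependent) arcs over words 1..n_words plus an artificial root 0."""
    heads = {}
    single_head = True
    for head, dep in arcs:
        if dep in heads:
            single_head = False      # a second incoming arc for the same word
        heads.setdefault(dep, head)  # keep the first head seen for the walk below

    def reaches_root(word):
        # Follow head links upwards; fail on a cycle or on a word with no head.
        seen = set()
        while word != 0:
            if word in seen or word not in heads:
                return False
            seen.add(word)
            word = heads[word]
        return True

    words = range(1, n_words + 1)
    return {
        "root": 0 not in heads,                       # the root has no incoming arc
        "spanning": all(w in heads for w in words),   # every word takes part in the tree
        "single_head": single_head,
        "connected_and_acyclic": all(reaches_root(w) for w in words),
        "arc_size": len(arcs) == n_words,             # one arc per word
    }

# "Abhay ate a mango": ate is attached to the root, Abhay and mango to ate, a to mango.
good = check_dependency_tree(4, [(0, 2), (2, 1), (2, 4), (4, 3)])
# Same sentence, but "a" and "mango" point at each other and never reach the root.
bad = check_dependency_tree(4, [(0, 2), (2, 1), (3, 4), (4, 3)])
print(good)
print(bad)
```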

Dependency Parsing
• M = (Γ, λ, h)
  – A dependency parsing model M comprises a set of constraints Γ that define the space of permissible dependency structures, a set of parameters λ, and a parsing algorithm h
  – Γ maps an arbitrary sentence S and dependency type set R to a set of well-formed dependency trees G_S
• Γ = (Σ, R, C)
  – where Σ is the set of terminal symbols (here, words), R is the label set, and C is the set of constraints. Such constraints restrict the dependencies between words and the possible heads of a word in well-defined ways.
• G = h(Γ, λ, S)
  – given a set of constraints Γ, parameters λ and a new sentence S, how does the system find the most appropriate dependency tree G for that sentence?
Kübler et al. (2009)


• Constraint based
  – based on the notion of eliminative parsing, where sentences are analyzed by successively eliminating representations that violate constraints until only valid representations remain
• Data-driven
  – the learning problem, which is the task of learning a parsing model from a representative sample of structures of sentences (training data)
  – the parsing problem (or inference/decoding problem), which is the task of applying the learned model to the analysis of a new sentence


Constraint based method
• A Two-Stage Generalized Hybrid Constraint Based Parser (GH-CBP)
  – Incorporates some of the notions of CPG (Computational Paninian Grammar)
  – Uses integer linear programming for constraint satisfaction
  – Also incorporates ideas from graph-based parsing and labeling for prioritization
Bharati et al. (2009a, 2009b); Husain (2011)

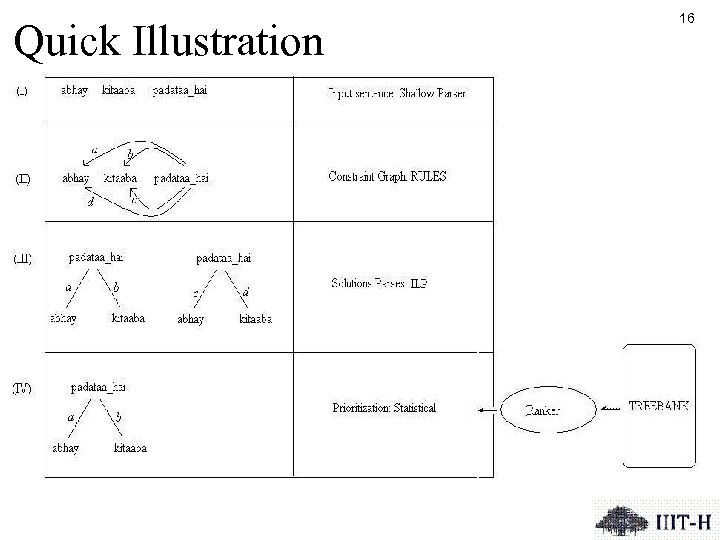

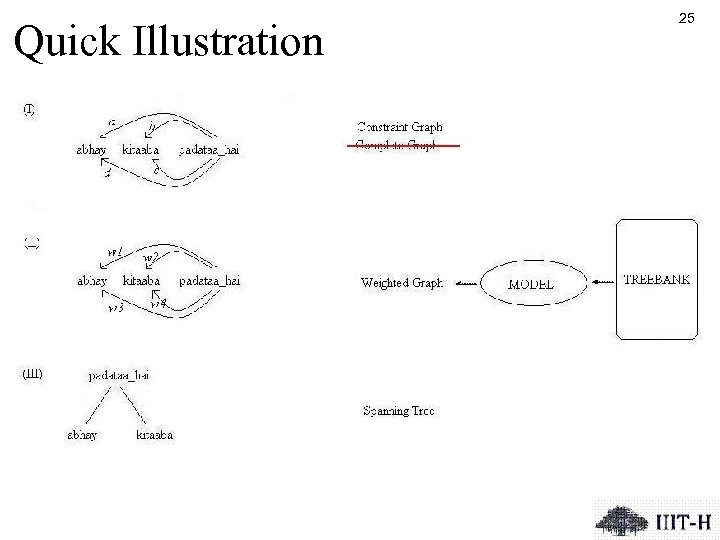

Quick Illustration

Data driven approaches
• Transition based systems
  – MaltParser
• Graph based systems
  – MSTParser

MaltParser
• Malt is a classifier-based shift/reduce parser.
• It supports the arc-eager, arc-standard, Covington projective and Covington non-projective parsing algorithms
• History-based feature models are used for predicting the next parser action
• Support vector machines are used for mapping histories to parser actions
Nivre et al. (2006)
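To make the shift/reduce idea concrete, here is a minimal sketch of the arc-eager transition system driven by a gold tree. It is not MaltParser's code or API: where MaltParser predicts each action with a trained classifier over history-based features, this sketch reads the action off a known tree (a static oracle), and it assumes the tree is projective. The function name and the list encoding of heads are assumptions for illustration.

```python
def arc_eager_oracle(heads):
    """heads[i] is the gold head of word i; index 0 is the artificial root (heads[0] = None).

    Returns the transition sequence and the arcs (dependent -> head) it builds.
    Assumes the gold tree is projective.
    """
    n = len(heads) - 1
    stack, buffer = [0], list(range(1, n + 1))
    built = {}          # dependent -> head for arcs created so far
    transitions = []
    while buffer:
        top, front = stack[-1], buffer[0]
        if top != 0 and heads[top] == front:
            # LEFT-ARC: the buffer front becomes the head of the stack top, which is popped.
            built[top] = front
            stack.pop()
            transitions.append("LEFT-ARC")
        elif heads[front] == top:
            # RIGHT-ARC: the stack top becomes the head of the buffer front, which is pushed.
            built[front] = top
            stack.append(buffer.pop(0))
            transitions.append("RIGHT-ARC")
        elif top in built and all(built.get(d) == top
                                  for d in range(1, n + 1) if heads[d] == top):
            # REDUCE: the stack top has its head and all of its dependents.
            stack.pop()
            transitions.append("REDUCE")
        else:
            # SHIFT: move the buffer front onto the stack.
            stack.append(buffer.pop(0))
            transitions.append("SHIFT")
    return transitions, built

# "Abhay ate a mango": Abhay <- ate, ate <- root, a <- mango, mango <- ate.
transitions, built = arc_eager_oracle([None, 2, 0, 4, 2])
print(transitions)
print(built)
```

In a trained parser the `if`/`elif` chain is replaced by a classifier that scores the four actions from features of the stack, the buffer and the partially built tree.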

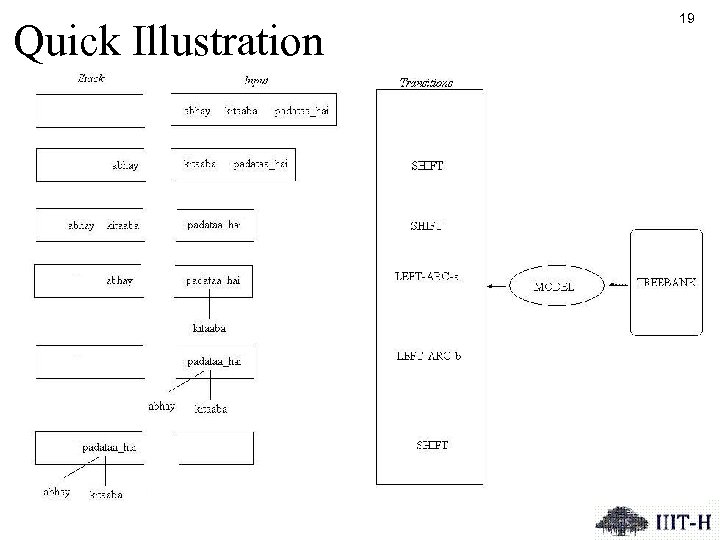

Quick Illustration

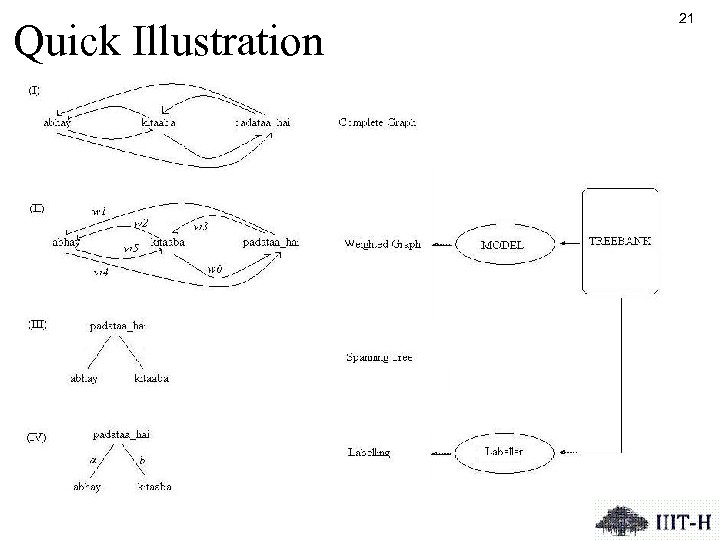

MSTParser
• MST uses the Chu-Liu-Edmonds maximum spanning tree algorithm for non-projective parsing and Eisner's algorithm for projective parsing.
• It uses online large-margin learning as the learning algorithm
McDonald et al. (2005a, 2005b)
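The graph-based view can be illustrated on a toy sentence: every possible arc gets a score, and the parser returns the tree whose arc scores sum to the maximum. The sketch below uses brute-force enumeration as a stand-in for Chu-Liu-Edmonds (which finds the same tree efficiently); the scores are made-up numbers, and the function names are illustrative assumptions. It also shows why picking the best head for each word independently is not enough.

```python
from itertools import product

def is_tree(heads):
    """heads[d-1] is the head of word d (0 = root); True if every word reaches the root."""
    for d in range(1, len(heads) + 1):
        seen, w = set(), d
        while w != 0:
            if w in seen:
                return False  # cycle, including a word heading itself
            seen.add(w)
            w = heads[w - 1]
    return True

def best_tree(scores):
    """scores[h][d] is the score of arc h -> d. Exhaustive search over all head assignments."""
    n = len(scores) - 1
    best_heads, best_score = None, float("-inf")
    for heads in product(range(n + 1), repeat=n):
        if not is_tree(heads):
            continue
        total = sum(scores[h][d] for d, h in enumerate(heads, start=1))
        if total > best_score:
            best_heads, best_score = heads, total
    return best_heads, best_score

# Rows are heads, columns are dependents; node 0 is the root. Values are invented.
scores = [
    [0, 9, 10, 9],   # root -> word 1, word 2, word 3
    [0, 0, 20, 3],   # word 1 -> ...
    [0, 30, 0, 30],  # word 2 -> ...
    [0, 11, 0, 0],   # word 3 -> ...
]
# Choosing each word's best head on its own yields a cycle between words 1 and 2.
greedy = tuple(max(range(4), key=lambda h: scores[h][d]) for d in range(1, 4))
tree, total = best_tree(scores)
print(greedy, is_tree(greedy))
print(tree, total)
```

The enumeration is exponential in sentence length, so it only serves to show what the spanning-tree algorithm optimizes.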

Quick Illustration

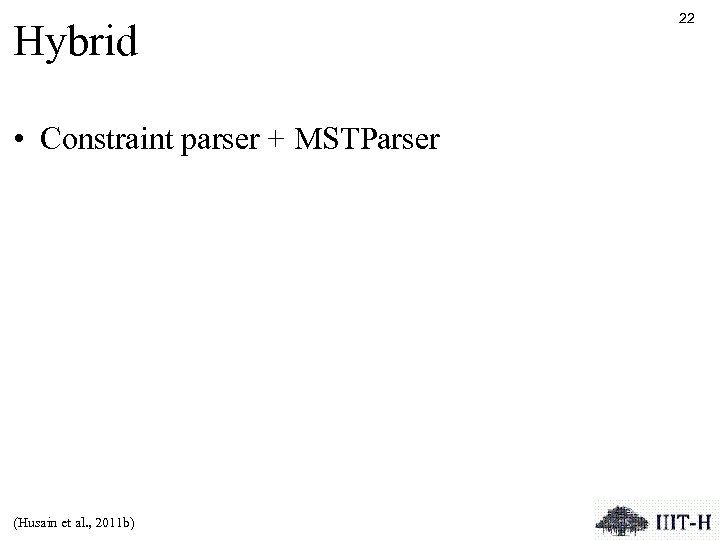

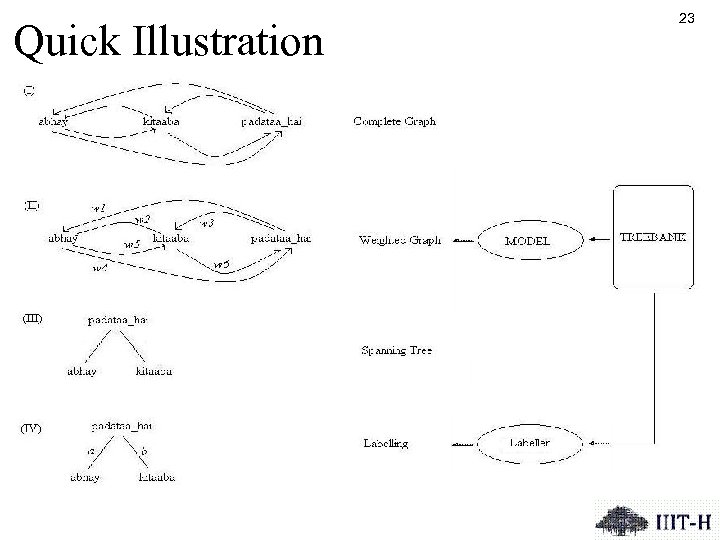

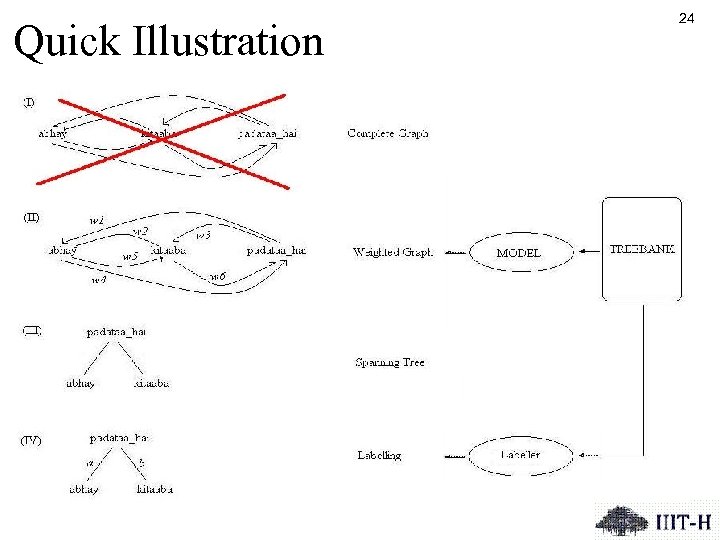

Hybrid
• Constraint parser + MSTParser (Husain et al., 2011b)

Quick Illustration

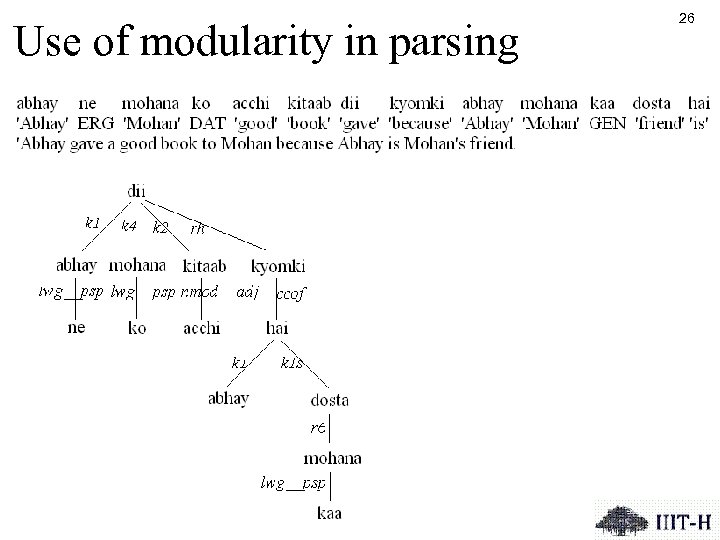

Use of modularity in parsing

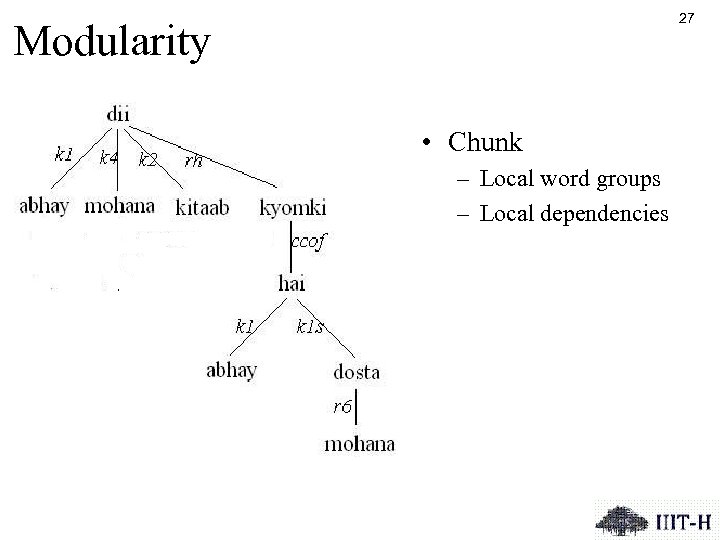

Modularity
• Chunk
  – Local word groups
  – Local dependencies

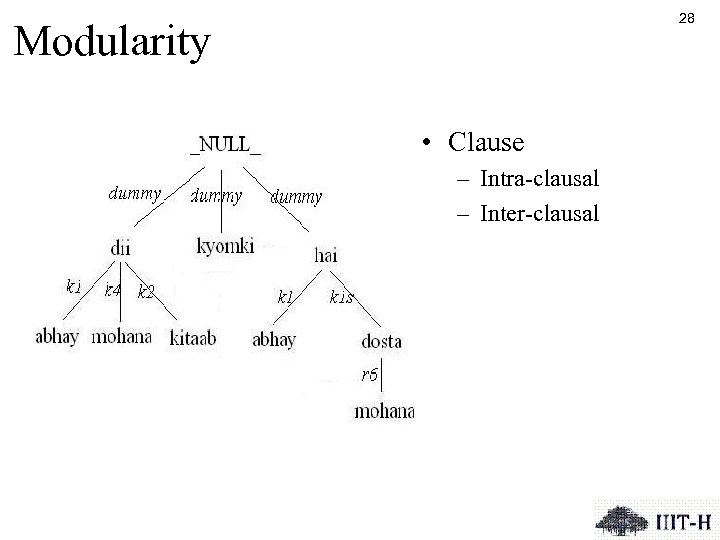

Modularity
• Clause
  – Intra-clausal
  – Inter-clausal

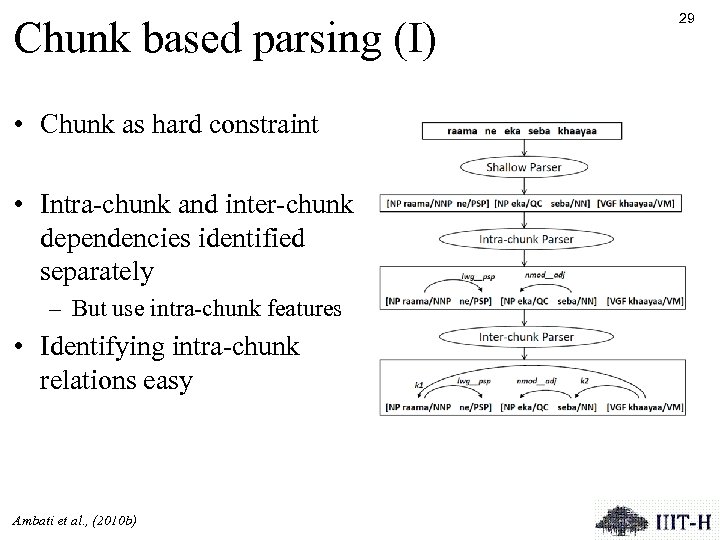

Chunk based parsing (I)
• Chunk as hard constraint
• Intra-chunk and inter-chunk dependencies identified separately
  – But use intra-chunk features
• Identifying intra-chunk relations is easy
Ambati et al. (2010b)

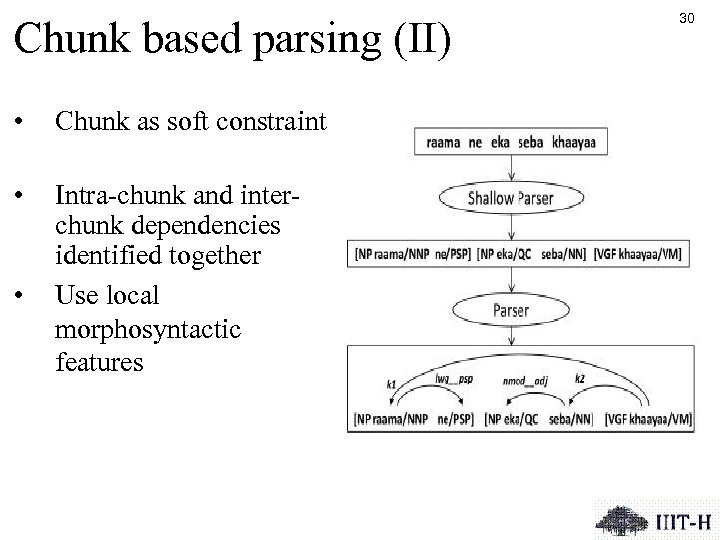

Chunk based parsing (II)
• Chunk as soft constraint
• Intra-chunk and inter-chunk dependencies identified together
• Use local morphosyntactic features

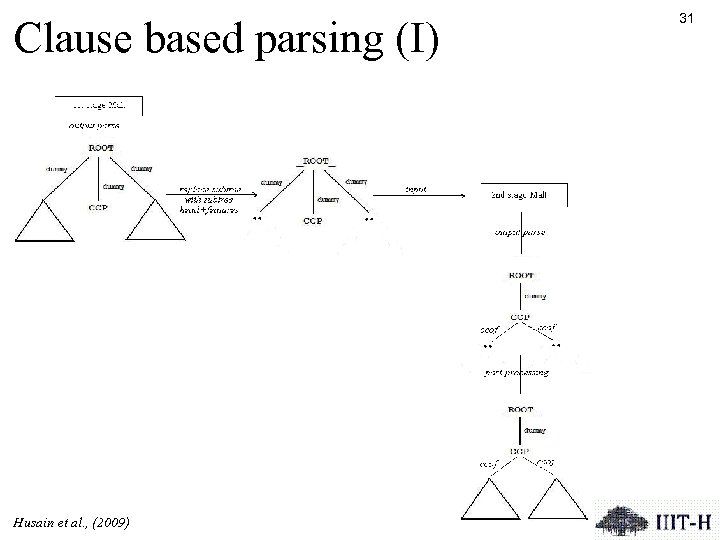

Clause based parsing (I)
Husain et al. (2009)

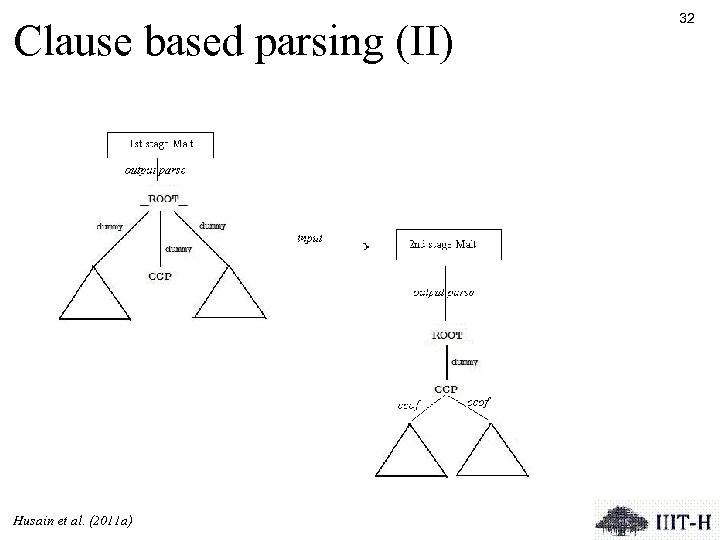

Clause based parsing (II)
Husain et al. (2011a)

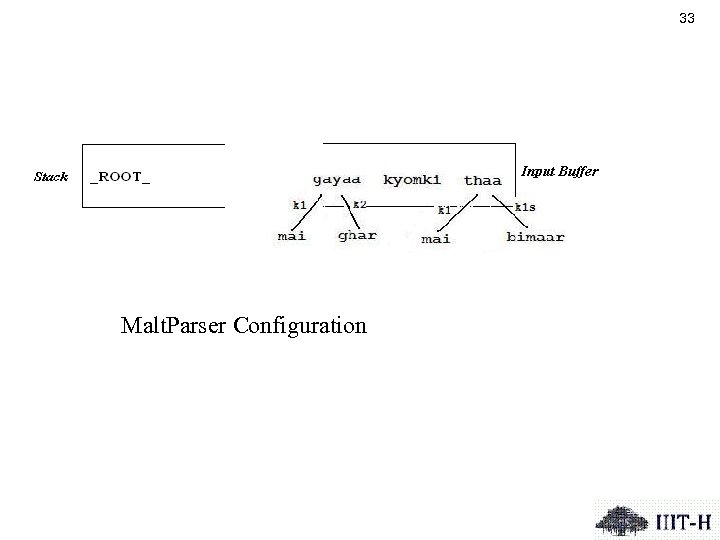

MaltParser Configuration

Clause based parsing (III)
• Similar to parser stacking
  – ‘guide’ Malt with a 1st-stage parse by Malt.
  – The additional features added to the 2nd-stage parser during 2-Soft parsing encode the decisions of the 1st-stage parser concerning potential arcs and labels considered by the 2nd-stage parser,
    • in particular, arcs involving the word currently on top of the stack and the word currently at the head of the input buffer.
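A rough sketch of what such guide features could look like follows. Everything here is an assumption made for illustration: the feature names, the dictionary encoding of the 1st-stage parse, and the placeholder labels are hypothetical and are not the feature specification used in the experiments. The idea shown is only that the 2nd-stage parser is told whether the 1st-stage parse linked the stack top and the buffer front, and with which label.

```python
def guide_features(stack_top, buffer_front, guide_heads, guide_labels):
    """Encode 1st-stage decisions about the two words the 2nd-stage parser is looking at.

    guide_heads / guide_labels map a word index to its head index / label
    in the 1st-stage parse (hypothetical encoding).
    """
    if guide_heads.get(stack_top) == buffer_front:
        arc, label = "LEFT", guide_labels[stack_top]      # front heads top in the guide parse
    elif guide_heads.get(buffer_front) == stack_top:
        arc, label = "RIGHT", guide_labels[buffer_front]  # top heads front in the guide parse
    else:
        arc, label = "NONE", "NONE"                       # the guide parse does not link them
    return {
        "guide_arc": arc,
        "guide_arc_label": label,
        "guide_label_top": guide_labels.get(stack_top, "NONE"),
        "guide_label_front": guide_labels.get(buffer_front, "NONE"),
    }

# 1st-stage parse of "Abhay ate a mango" with placeholder labels (not the treebank tagset).
guide_heads = {1: 2, 2: 0, 3: 4, 4: 2}
guide_labels = {1: "subj", 2: "root", 3: "det", 4: "obj"}
print(guide_features(1, 2, guide_heads, guide_labels))
print(guide_features(2, 3, guide_heads, guide_labels))
```

These values would be added to the usual feature vector, so the 2nd-stage classifier can learn when to trust and when to override the 1st-stage decision.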

Experimental setup
• Parsers
  – GH-CBP (version 1.6)
  – MaltParser (version 1.3.1)
  – MSTParser (version 0.4b)
• Data
  – ICON 10 tools contest
  – The training set had 3000 sentences, the development set had 500 sentences and the test set had 300 sentences

Evaluation metric and accuracies
• CoNLL dependency parsing shared task 2008 (Nivre et al., 2008)
  – UAS: Unlabeled attachment accuracy
  – LAS: Labeled attachment accuracy
  – LA: Label accuracy
• Performance
  – Constraint based (coarse-grained tagset; oracle)
    • UAS = 88.50
    • LAS = 79.12
  – Statistical (fine-grained)
    • UAS = ~91
    • LAS = ~76
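The three metrics differ only in what must match for a token to count as correct: the head (UAS), the head and the label (LAS), or the label alone (LA). A minimal sketch, assuming each token is represented as a (head, label) pair and that all tokens are scored; the function name and the placeholder labels are illustrative assumptions.

```python
def attachment_scores(gold, predicted):
    """Return (UAS, LAS, LA) in percent for two aligned lists of (head, label) pairs."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and of equal length")
    n = len(gold)
    uas = sum(g[0] == p[0] for g, p in zip(gold, predicted))  # head correct
    las = sum(g == p for g, p in zip(gold, predicted))        # head and label correct
    la = sum(g[1] == p[1] for g, p in zip(gold, predicted))   # label correct
    return 100 * uas / n, 100 * las / n, 100 * la / n

# Four tokens with placeholder labels; the prediction gets one head and one label wrong.
gold = [(2, "subj"), (0, "root"), (4, "det"), (2, "obj")]
pred = [(2, "subj"), (0, "root"), (2, "det"), (2, "subj")]
uas, las, la = attachment_scores(gold, pred)
print(uas, las, la)
```

Since LAS requires both parts to be right, it can never exceed UAS or LA, which is consistent with the gap between the UAS and LAS figures reported on the slide.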

Remarks: Malt
• Crucial features
  – Deprel of the partially built tree
  – Conjoined features
• Good for short distance dependencies
• Non-projective algorithm doesn’t help
• Arc-eager, LIBSVM
Bharati et al. (2008); Ambati et al. (2010a)

Remarks: MSTParser
• Crucial features
  – Conjoined features
• Modified MST
• Difficult to incorporate complex features for labeled parsing
  – We use MaxEnt as a labeler
• Good for long distance dependencies and for identifying the root
• Non-projective performs better
• Training k=5, order=2
Bharati et al. (2008); Ambati et al. (2010a)

What helps
• Morphological features
• Local morphosyntactic
• Clausal
• Minimal semantics
Bharati et al. (2008); Ambati et al. (2009); Ambati et al. (2010a); Ambati et al. (2010b); Gadde et al. (2010)

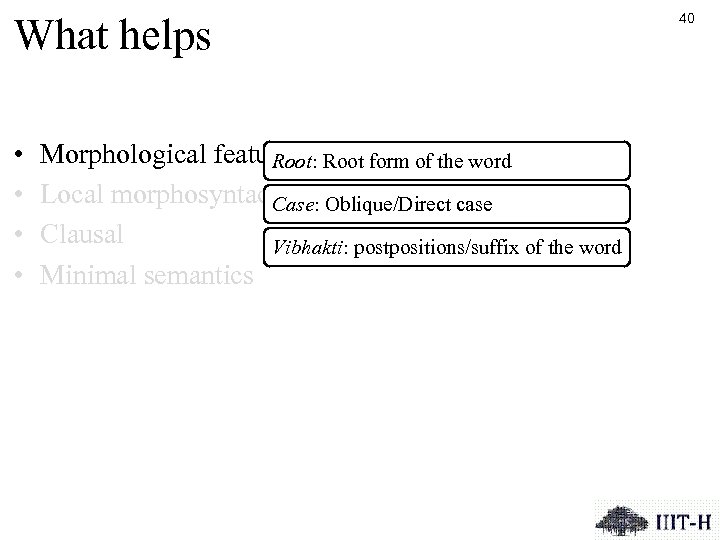

What helps
• Morphological features
  – Root: root form of the word
  – Case: oblique/direct case
  – Vibhakti: postpositions/suffix of the word
• Local morphosyntactic
• Clausal
• Minimal semantics

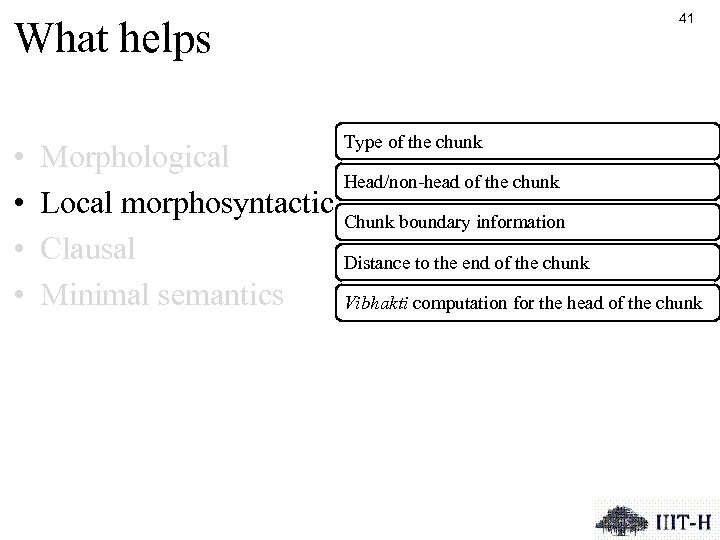

What helps
• Morphological
• Local morphosyntactic
  – Type of the chunk
  – Head/non-head of the chunk
  – Chunk boundary information
  – Distance to the end of the chunk
  – Vibhakti computation for the head of the chunk
• Clausal
• Minimal semantics

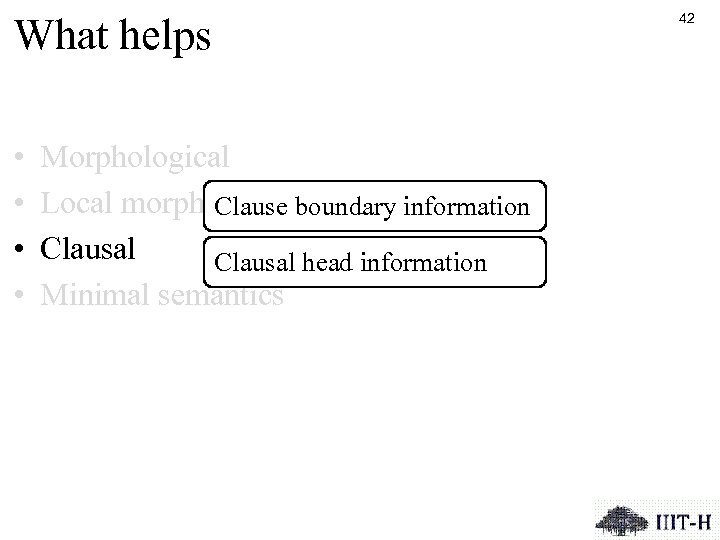

What helps
• Morphological
• Local morphosyntactic
• Clausal
  – Clause boundary information
  – Clausal head information
• Minimal semantics

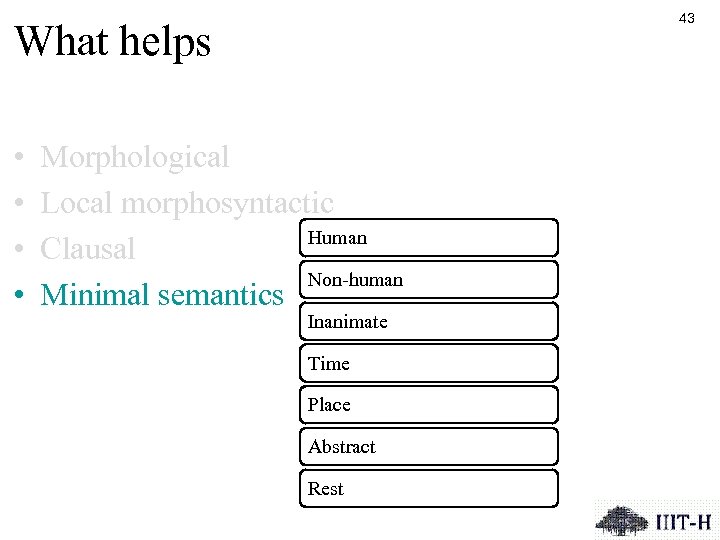

What helps
• Morphological
• Local morphosyntactic
• Clausal
• Minimal semantics
  – Human
  – Non-human
  – Inanimate
  – Time
  – Place
  – Abstract
  – Rest

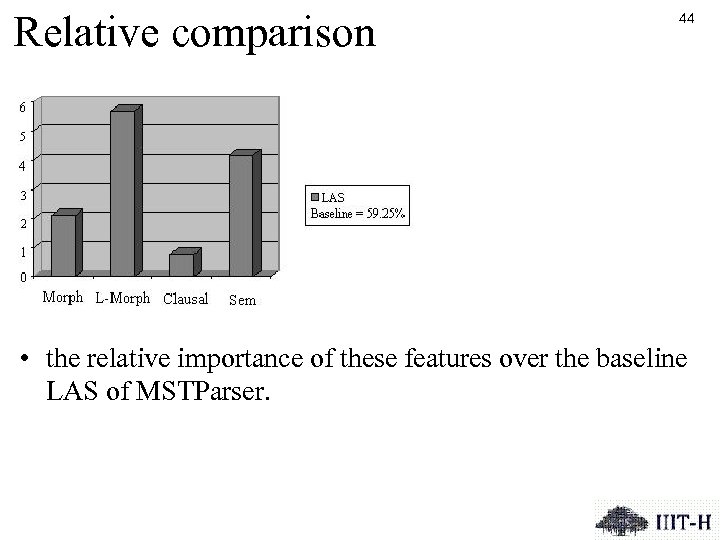

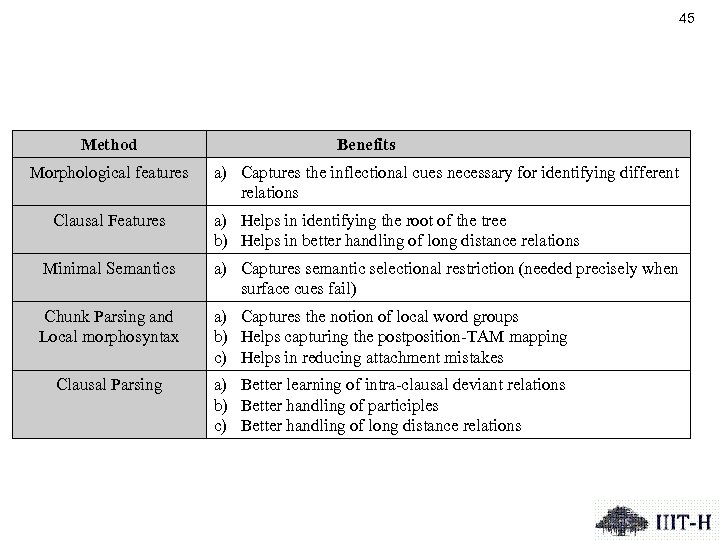

Relative comparison
• The relative importance of these features over the baseline LAS of MSTParser.

| Method | Benefits |
|---|---|
| Morphological features | a) Captures the inflectional cues necessary for identifying different relations |
| Clausal features | a) Helps in identifying the root of the tree; b) Helps in better handling of long distance relations |
| Minimal semantics | a) Captures semantic selectional restriction (needed precisely when surface cues fail) |
| Chunk parsing and local morphosyntax | a) Captures the notion of local word groups; b) Helps in capturing the postposition-TAM mapping; c) Helps in reducing attachment mistakes |
| Clausal parsing | a) Better learning of intra-clausal deviant relations; b) Better handling of participles; c) Better handling of long distance relations |

What doesn’t
• Gender, number, person

Parsing MOR-FWO languages
• Problems in parsing MOR-FWO (morphologically rich, free word order) languages
  – Non-configurational nature of these languages
  – Inherent limitations in the parsing/learning algorithms
  – Limited amount of annotated data

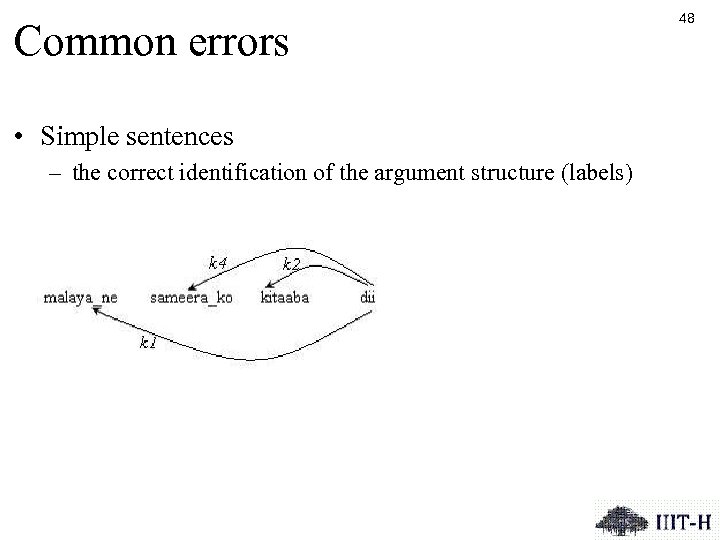

Common errors
• Simple sentences
  – the correct identification of the argument structure (labels)

Common errors
• Reasons for errors in labels
  – word order not strict
  – absence of postpositions
  – ambiguous TAMs
  – inability of the parser to exploit agreement features
  – inability to always make simple linguistic generalizations

• Embedded clauses
  – Relative clauses
  – Participles


[ jo ladakaa vahaan baithaa hai ] vaha meraa bhaaii hai
‘which’ ‘boy’ ‘there’ ‘sitting’ ‘is’ ‘that’ ‘my’ ‘brother’ ‘is’
‘The boy who is sitting there is my brother’
• Missing relative pronoun
• Non-projectivity

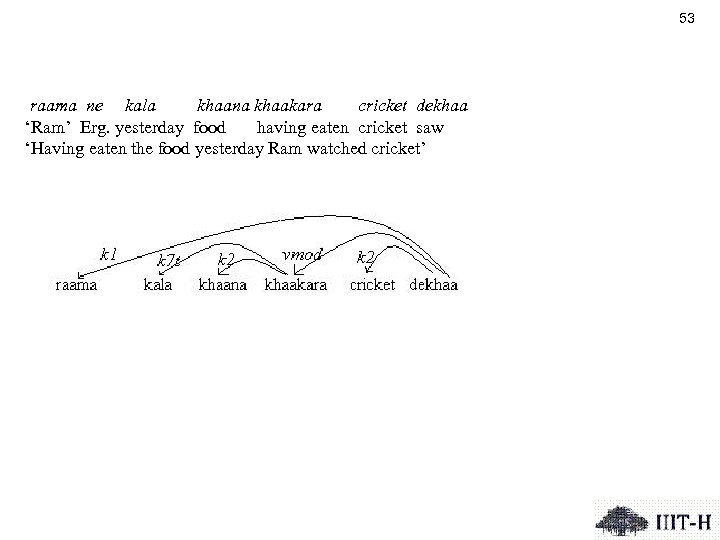

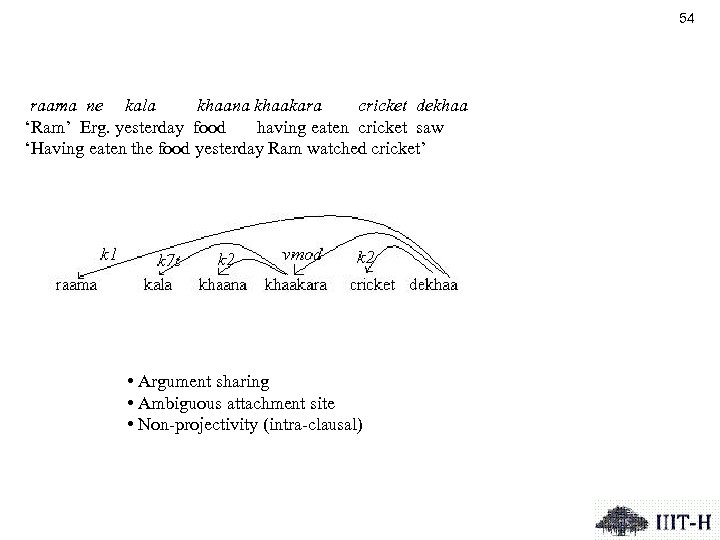


raama ne kala khaana khaakara cricket dekhaa
Ram ERG yesterday food having-eaten cricket saw
‘Having eaten the food yesterday Ram watched cricket’
• Argument sharing
• Ambiguous attachment site
• Non-projectivity (intra-clausal)

• Coordination
• Paired connectives

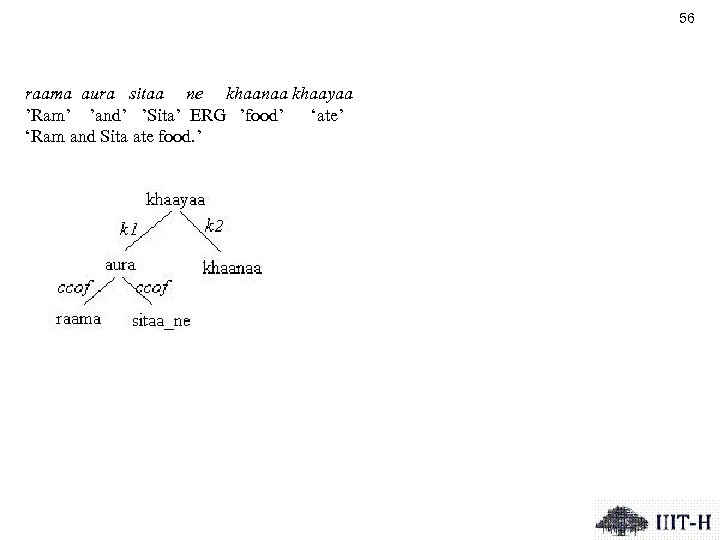

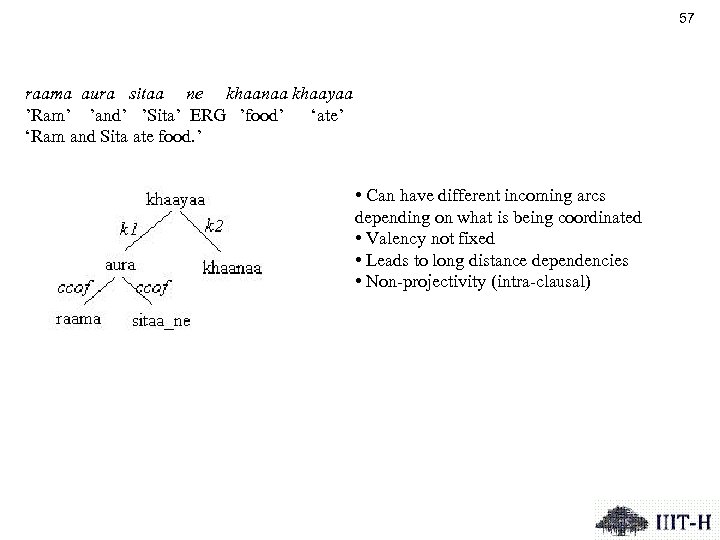


raama aura sitaa ne khaanaa khaayaa
‘Ram’ ‘and’ ‘Sita’ ERG ‘food’ ‘ate’
‘Ram and Sita ate food.’
• Can have different incoming arcs depending on what is being coordinated
• Valency not fixed
• Leads to long distance dependencies
• Non-projectivity (intra-clausal)

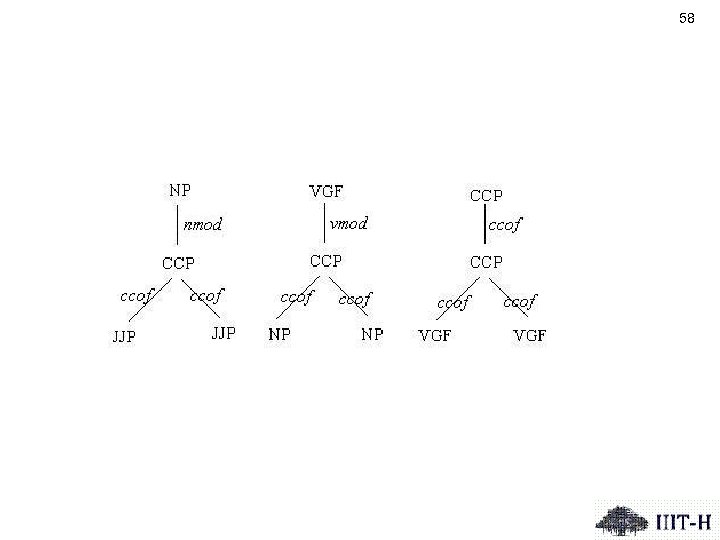


Complex predicates
• Noun/Adjective + Verb (Verbalizer)
  – raam ne shyaam kii madad kii
    Ram ERG Shyam GEN help do-Past
    ‘Ram helped Shyam.’
• Difficult to identify
• Behavioral diagnostics do not always work across the board
  – Only some can be automated
Begum et al. (2011)

Non-verbal heads
• Nouns
  – Appositions
• Predicative adjectives

Non-projectivity
• ~14% non-projective arcs
• Many are inter-clausal relations
  – Relative co-relative construction
  – Extraposed relative clause
  – Paired connectives
  – …
Mannem et al. (2009)
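A figure like the share of non-projective arcs can be computed directly from a treebank. An arc from head h to dependent d is projective when every word lying between h and d is a descendant of h. The sketch below applies that definition to an abstract five-word tree; the head-list encoding and the function name are assumptions for illustration, and the input is assumed to be a well-formed tree (no cycles).

```python
def non_projective_arcs(heads):
    """heads[d] is the head of word d; index 0 is the artificial root (heads[0] = None).

    Returns the (head, dependent) arcs that are non-projective.
    """
    def dominates(h, w):
        # True if h is w itself or an ancestor of w.
        while w is not None:
            if w == h:
                return True
            w = heads[w]
        return False

    result = []
    for d in range(1, len(heads)):
        h = heads[d]
        lo, hi = min(h, d), max(h, d)
        # The arc is non-projective if some word strictly between h and d is not under h.
        if any(not dominates(h, w) for w in range(lo + 1, hi)):
            result.append((h, d))
    return result

# Word 3 is the main verb; word 4 attaches back to word 1 across words 2 and 3.
crossing = non_projective_arcs([None, 3, 3, 0, 1, 3])
print(crossing)
print(non_projective_arcs([None, 2, 0, 4, 2]))  # a projective tree
```

Dividing the number of arcs returned by the total number of arcs over a whole corpus gives the kind of percentage quoted on the slide.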

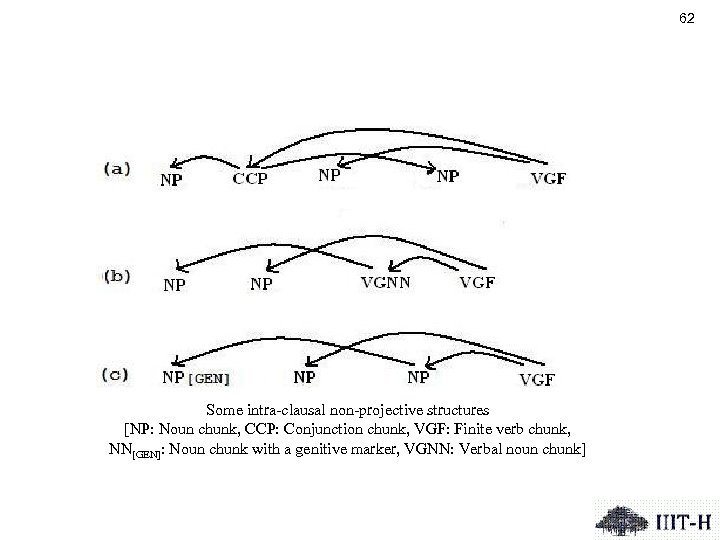

Some intra-clausal non-projective structures
[NP: Noun chunk, CCP: Conjunction chunk, VGF: Finite verb chunk, NN[GEN]: Noun chunk with a genitive marker, VGNN: Verbal noun chunk]

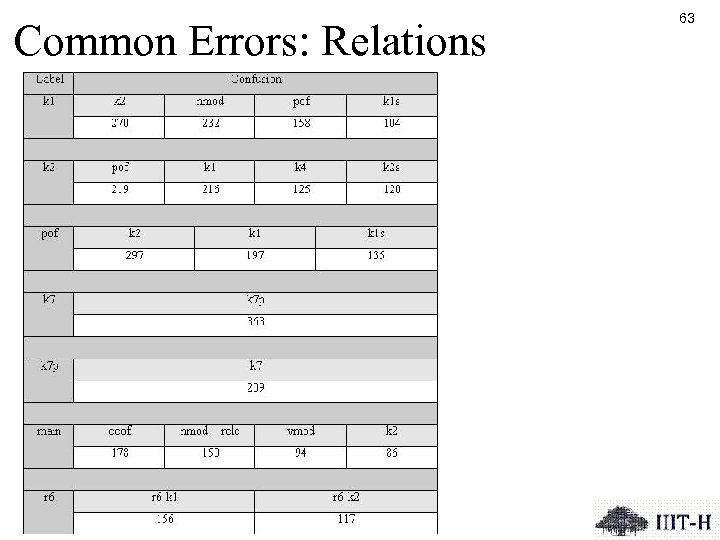

Common Errors: Relations

Causes
• Complex feature pattern
  – agreement
• Difficulty in making linguistic generalizations
  – Single subject
• Long distance dependencies
• Non-projectivity
  – Both inter-clausal and intra-clausal
• Genuine ambiguities
  – Participle argument attachment
• Small corpus size
  – Training on ~70k word corpus
  – But, for many languages small data size is not a crucial factor in ascertaining their parsing performance (Hall et al., 2007)


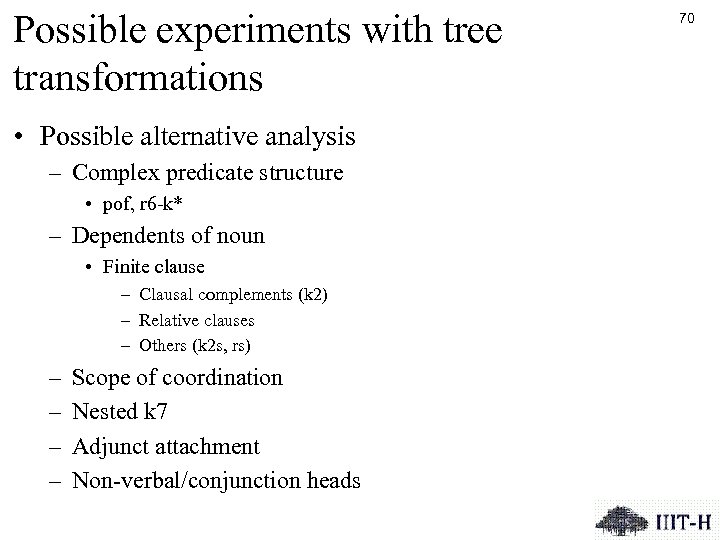

Possible experiments with tree transformations
• Possible alternative analysis
  – Complex predicate structure
    • pof, r6-k*
  – Dependents of noun
  – Finite clause
    • Clausal complements (k2)
    • Relative clauses
    • Others (k2s, rs)
  – Scope of coordination
  – Nested k7
  – Adjunct attachment
  – Non-verbal/conjunction heads

Final remarks
• MaltParser with clausal modularity outperforms the parser that uses only the local morphosyntactic features
• The performance of the linguistically rich MSTParser is better than the one with the clausal feature.
• In UAS, MSTParser with the clausal feature is the highest
• Both MSTParser and MaltParser outperform GH-CBP
  – But scope for improvement as oracle is high
  – More knowledge


• In spite of the positive effects of important features, linguistic modularity, etc., the overall performance of all the parsers is still low.
  – particularly true for LAS
    • ambiguous post-positions, and
    • lack of post-positions
    • but also because of the larger tagset
• Minimal semantics helps
  – automatic identification of such semantic tags is not a trivial task
• Knowledge of verbal heads
  – (linguistically rich) MSTParser



• Overall the UAS for all the parsers is high
  – shows that most of the language structures are being identified successfully
  – Non-projectivity is still a problem for data-driven parsing
  – Ambiguous constructions
    • More data?
• Data-driven parsers are currently unable to learn many linguistic generalizations
  – agreement, single subject constraint, etc.
  – Such generalizations are frequent in some complex patterns that exist between verbal heads and their children.
  – Some recent work has been able to incorporate this successfully
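A generalization such as the single subject constraint is easy to state as a check over a parser's output, even though a data-driven parser may fail to learn it. The sketch below flags heads that have received more than one subject-labelled dependent. The label name `k1` for the subject-like relation is an assumption here (only labels such as k2 and k7 appear on the slides), as are the function name, the triple encoding and the `main` label.

```python
from collections import Counter

def single_subject_violations(arcs, subject_label="k1"):
    """arcs: list of (head, dependent, label). Return heads with more than one subject child.

    subject_label is an assumed name for the subject-like relation.
    """
    counts = Counter(head for head, _, label in arcs if label == subject_label)
    return sorted(head for head, count in counts.items() if count > 1)

# Parser output in which word 3 was given two subject-labelled children.
predicted = [(3, 1, "k1"), (3, 2, "k1"), (0, 3, "main")]
print(single_subject_violations(predicted))
```

A check like this can be used after parsing to count violations, or inside a constraint-based or hybrid parser to rule such analyses out.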

Thanks!

References
• B. Ambati, S. Husain, J. Nivre and R. Sangal. 2010a. On the Role of Morphosyntactic Features in Hindi Dependency Parsing. In Proceedings of the NAACL-HLT 2010 Workshop on Statistical Parsing of Morphologically Rich Languages (SPMRL 2010), Los Angeles, CA.
• B. Ambati, S. Husain, S. Jain, D. M. Sharma and R. Sangal. 2010b. Two methods to incorporate 'local morphosyntactic' features in Hindi dependency parsing. In Proceedings of the NAACL-HLT 2010 Workshop on Statistical Parsing of Morphologically Rich Languages (SPMRL 2010), Los Angeles, CA.
• B. Ambati, P. Gadde, G. S. K. Chaitanya and S. Husain. 2009. Effect of Minimal Semantics on Dependency Parsing. In RANLP 09 student paper workshop.
• R. Begum, K. Jindal, A. Jain, S. Husain and D. M. Sharma. 2011. Identification of Conjunct Verbs in Hindi and Its Effect on Parsing Accuracy. In Proceedings of the 12th CICLing, Tokyo, Japan.
• A. Bharati, S. Husain, D. M. Sharma and R. Sangal. 2009a. Two stage constraint based hybrid approach to free word order language dependency parsing. In Proceedings of the 11th International Conference on Parsing Technologies (IWPT), Paris.
• A. Bharati, S. Husain, M. Vijay, K. Deepak, D. M. Sharma and R. Sangal. 2009b. Constraint Based Hybrid Approach to Parsing Indian Languages. In Proceedings of the 23rd Pacific Asia Conference on Language, Information and Computation (PACLIC 23), Hong Kong.
• A. Bharati, S. Husain, B. Ambati, S. Jain, D. M. Sharma and R. Sangal. 2008. Two semantic features make all the difference in Parsing accuracy. In Proceedings of the 6th International Conference on Natural Language Processing (ICON-08), CDAC Pune, India.
• P. Gadde, K. Jindal, S. Husain, D. M. Sharma and R. Sangal. 2010. Improving Data Driven Dependency Parsing using Clausal Information. In Proceedings of NAACL-HLT 2010, Los Angeles, CA.
• J. Hall, J. Nilsson, J. Nivre, G. Eryigit, B. Megyesi, M. Nilsson and M. Saers. 2007. Single Malt or Blended? A Study in Multilingual Parser Optimization. In Proceedings of the CoNLL Shared Task Session of EMNLP-CoNLL 2007, pp. 933–939.
• S. Husain. 2011. A Generalized Parsing Framework based on Computational Paninian Grammar. Ph.D. thesis, IIIT-Hyderabad, India.
• S. Husain, P. Gadde, J. Nivre and R. Sangal. 2011a. Clausal parsing helps dependency parsing. In Proceedings of IJCNLP 2011.
• S. Husain, P. Gadde and R. Sangal. 2011b. Linguistically Rich Graph Based Data Driven Parsing. In submission.
• S. Husain, P. Gadde, B. Ambati, D. M. Sharma and R. Sangal. 2009. A modular cascaded approach to complete parsing. In Proceedings of the COLIPS International Conference on Asian Language Processing 2009 (IALP), Singapore.
• S. Kübler, R. McDonald and J. Nivre. 2009. Dependency Parsing. Morgan and Claypool.
• P. Mannem, H. Chaudhry and A. Bharati. 2009. Insights into Non-projectivity in Hindi. In ACL-IJCNLP 09 student paper workshop.
• R. McDonald, K. Crammer and F. Pereira. 2005a. Online large-margin training of dependency parsers. In Proceedings of ACL 2005, pp. 91–98.
• R. McDonald, F. Pereira, K. Ribarov and J. Hajic. 2005b. Non-projective dependency parsing using spanning tree algorithms. In Proceedings of HLT/EMNLP, pp. 523–530.
• J. Nivre. 2006. Inductive Dependency Parsing. Springer.
