fd91a931e354bf9a50284e0204cb09fd.ppt

- Number of slides: 64

Software Testing: Unit Testing

Course objectives
• Discuss unit testing
• Present elements of the JUnit testing framework
• Reference: Roy Osherove, The Art of Unit Testing, 2nd edition, 2014

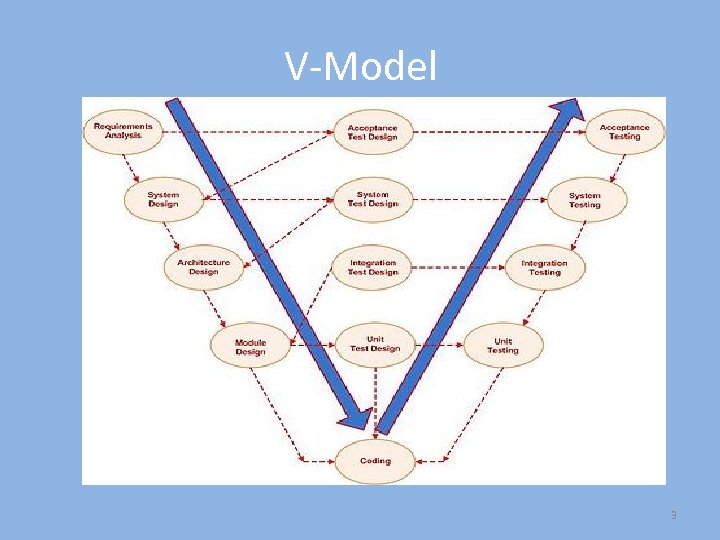

V-Model

Developer testing
• Developers have to ensure that the code they deliver is of good quality, so they have to test it:
  – Use some external piece of code to call their code
  – Run the entire application and manually check how it uses their code
  – Disadvantages: test cases are easy to miss, the external code used for testing may have its own bugs, regression tests are difficult to perform, the tests are not visible to other team members, etc.
Can they do better?
• Unit testing: testing a piece of code (method, function) in isolation; any failure is expected to come from that unit only
• Integration testing: testing how a component integrates with other components; failures may come from any of the integrated components

Unit test
• A unit test is an automated piece of code that invokes the unit of work being tested and then checks some assumptions about a single end result of that unit
• A unit test usually comprises three main actions:
  – Arrange objects, creating and setting them up as necessary
  – Act on an object
  – Assert that something is as expected
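A minimal sketch of the three actions, written in plain Java so that it runs without any library. The `Counter` class is hypothetical, and the hand-written check stands in for a framework assertion; with JUnit the test method would carry `@Test` and call `Assert.assertEquals` instead.

```java
// Hypothetical unit of work, used only for this illustration.
class Counter {
    private int value;

    void increment() {
        value++;
    }

    int value() {
        return value;
    }
}

class CounterTests {
    static void increment_calledTwice_valueIsTwo() {
        // Arrange: create and set up the object under test
        Counter counter = new Counter();

        // Act: invoke the unit of work
        counter.increment();
        counter.increment();

        // Assert: check a single end result
        if (counter.value() != 2) {
            throw new AssertionError("expected 2 but was " + counter.value());
        }
    }

    public static void main(String[] args) {
        increment_calledTwice_valueIsTwo();
        System.out.println("increment_calledTwice_valueIsTwo passed");
    }
}
```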


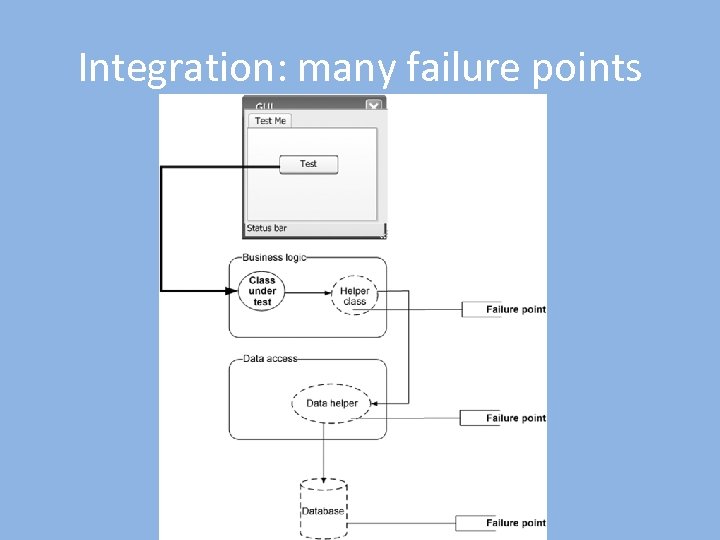

Integration tests
• [The Art of Unit Testing]: an external dependency is an object in your system that your code under test interacts with and over which you have no control. (Common examples are filesystems, threads, memory, time, other services, and so on.)
• Integration testing is testing a unit of work together with its external dependencies
• An integration test uses real dependencies; unit tests isolate the unit of work from its dependencies so that their results are consistent and they can easily control and simulate any aspect of the unit's behavior

Integration: many failure points

Properties of a good unit test
• It should be automated and repeatable.
• It should be easy to implement.
• It should be relevant tomorrow.
• Anyone should be able to run it at the push of a button.
• It should run quickly.
• It should be consistent in its results (it always returns the same result if you don't change anything between runs).
• It should have full control of the unit under test.
• It should be fully isolated (it runs independently of other tests).
• When it fails, it should be easy to detect what was expected and to pinpoint the problem.

Validation criteria for a good test environment
• Can I run and get results from a unit test I wrote two weeks, months, or years ago?
• Can any member of my team run and get results from unit tests I wrote two months ago?
• Can I run all the unit tests I've written in no more than a few minutes?
• Can I run all the unit tests I've written at the push of a button?
• Can I write a basic test in no more than a few minutes?

Questions to answer
• How many tests to write?
• Where to place tests?
• How to name tests?
• When to write tests?
• When to run tests?
• How to write tests?

How many tests
• Every public functionality should be tested
• Every statement in the code (?)
• Some notable exceptions: getters and setters
• Tests should cover all the cases the developers consider worth testing: test design techniques such as equivalence class analysis and identification of boundary values can be of great help
• A test case may have to be run with many data sets

Where to put tests
• Along with the production code, in a separate package: build tools such as Maven or Ant can help keep the test code out of the production deployment
• In a separate project: makes it easier to separate the deployed pieces and enforces better test and code design


Naming tests
• [UnitOfWorkName]_[ScenarioUnderTest]_[ExpectedBehavior]
• UnitOfWorkName: the name of the method or group of methods or classes you're testing.
• Scenario: the conditions under which the unit is tested, such as "bad login", "invalid user", or "good password". You could describe the parameters being sent to the public method or the initial state of the system when the unit of work is invoked, such as "system out of memory", "no users exist", or "user already exists".
• ExpectedBehavior: what you expect the tested method to do under the specified conditions. This could be one of three possibilities: return a value as a result (a real value, or an exception), change the state of the system as a result (like adding a new user to the system, so the system behaves differently on the next login), or call a third-party system as a result (like an external web service).
• Ex: isValidFileName_BadExtension_ReturnsFalse()
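As an illustration of the convention, the sketch below names two tests for a hypothetical `FileNameValidator`; its rule (only the `.slf` extension is valid, case-insensitively) is assumed purely for the example. The tests are plain static methods with a hand-written `check` helper, so no framework is needed to run them.

```java
import java.util.Locale;

// Hypothetical class under test: accepts only files with the ".slf" extension.
class FileNameValidator {
    boolean isValidFileName(String fileName) {
        return fileName.toLowerCase(Locale.ROOT).endsWith(".slf");
    }
}

// Each name reads as UnitOfWorkName_ScenarioUnderTest_ExpectedBehavior.
class FileNameValidatorTests {
    static void isValidFileName_BadExtension_ReturnsFalse() {
        boolean result = new FileNameValidator().isValidFileName("file.foo");
        check(!result, "a bad extension should be rejected");
    }

    static void isValidFileName_GoodExtensionUppercase_ReturnsTrue() {
        boolean result = new FileNameValidator().isValidFileName("file.SLF");
        check(result, "an uppercase good extension should be accepted");
    }

    // Minimal stand-in for a framework assertion.
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```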

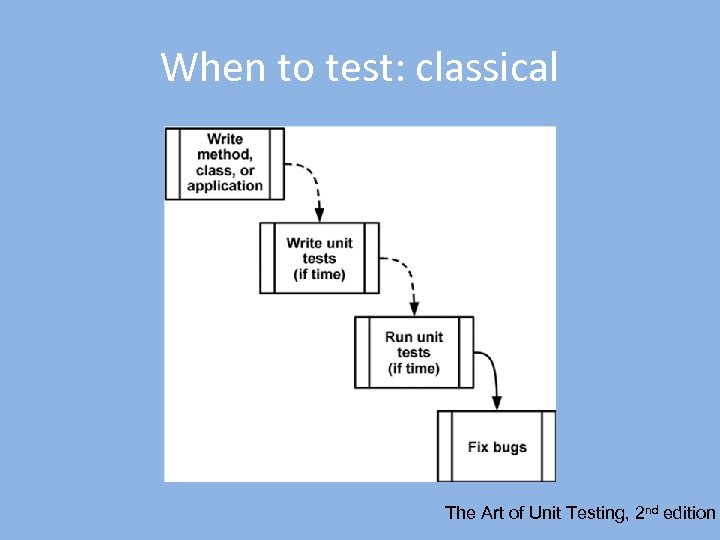

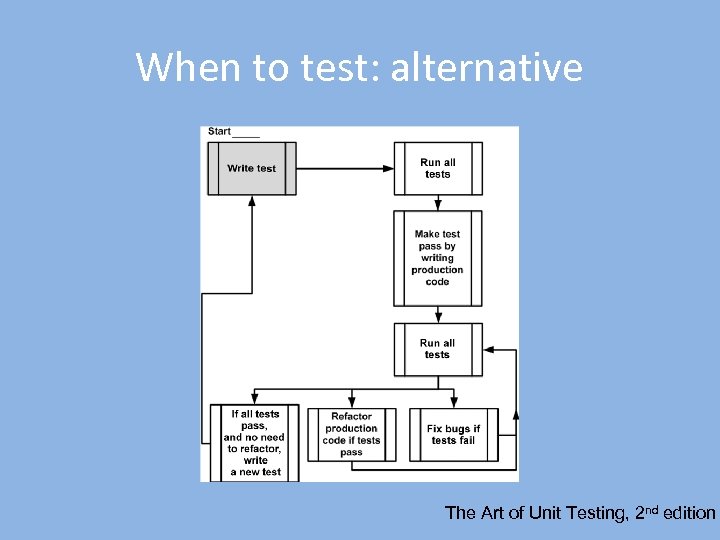

When to write tests
• Classical case: tests are written and run after the code is developed
• Alternative approach: tests are written before the code exists, in Test-Driven Development (TDD); no code exists unless a test exists for it

When to run tests
• Tests should be run after each piece of code is developed (or even before, in TDD)
• Not only the tests for that specific code can be run, but a larger set of tests
• If there are too many tests to run, run them at specified times: during the day, once a week, after each commit, etc.
• Continuous integration helps run tests at the desired moments and generates reports on test success/failure

Writing unit tests
• Unit tests are still code: they have to be written carefully and are subject to the same concerns as regular code: correctness, reusability, maintainability, extensibility, performance, readability, etc.
• Several best practices and design patterns are commonly applied when writing unit tests, supported by dedicated APIs: unit testing frameworks

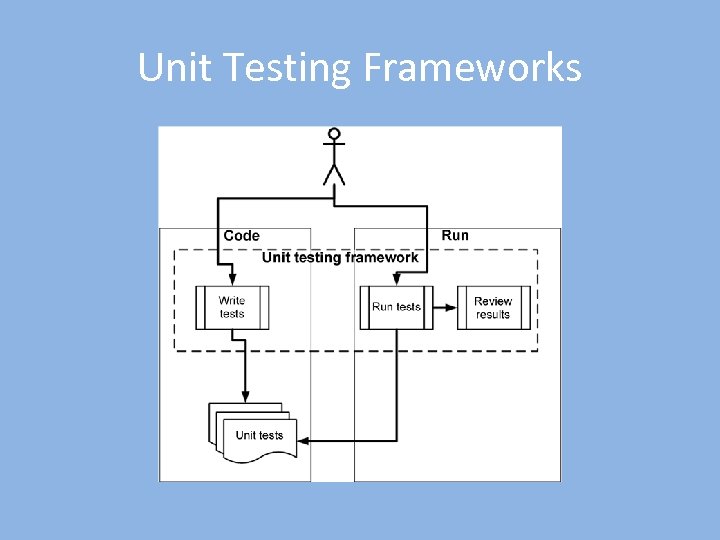

Unit Testing Frameworks

A UTF (unit testing framework) facilitates:
• Writing tests:
  ■ Base classes or interfaces to inherit
  ■ Attributes (annotations, in Java) to place in your code to note which of your methods are tests
  ■ Assertion classes with special assertion methods you invoke to verify your code
• Running tests:
  ■ Identifies the tests in your code
  ■ Runs tests automatically
  ■ Indicates status while running
  ■ Can be automated from the command line
• Reporting test results:
  ■ How many tests ran
  ■ How many tests didn't run
  ■ How many tests failed
  ■ Which tests failed
  ■ The reason the tests failed
  ■ The ASSERT message you wrote
  ■ The code location that failed
  ■ Possibly a full stack trace of any exceptions that caused the test to fail, letting you navigate to the various method calls in the call stack
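To make the "identify, run, report" duties concrete, here is a deliberately tiny runner built on Java reflection. It is a sketch, not how JUnit is implemented: `@MyTest`, `MiniRunner`, and `SampleTests` are hypothetical names. The annotation marks test methods, each test runs on a fresh instance (JUnit 4 also creates a new instance per test method), and the report lists how many tests ran and why each failure happened.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Hypothetical marker annotation playing the role of JUnit's @Test.
// RUNTIME retention is required so that reflection can see it.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface MyTest {
}

// Hypothetical runner: finds @MyTest methods, runs each on a fresh instance,
// prints a summary, and returns "testName: reason" for every failure.
final class MiniRunner {
    private MiniRunner() {
    }

    static List<String> run(Class<?> testClass) {
        List<String> failures = new ArrayList<>();
        int ran = 0;
        for (Method method : testClass.getDeclaredMethods()) {
            if (!method.isAnnotationPresent(MyTest.class)) {
                continue; // not a test: ignored by the runner
            }
            ran++;
            try {
                Object instance = testClass.getDeclaredConstructor().newInstance();
                method.invoke(instance);
            } catch (InvocationTargetException e) {
                // The test body threw: record the test name and the reason
                failures.add(method.getName() + ": " + e.getCause());
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("cannot run " + method.getName(), e);
            }
        }
        System.out.println(ran + " tests ran, " + failures.size() + " failed");
        for (String failure : failures) {
            System.out.println("  FAILED " + failure);
        }
        return failures;
    }
}

// Hypothetical test class: one passing test, one failing test, one helper.
class SampleTests {
    @MyTest
    public void addition_smallNumbers_returnsSum() {
        if (1 + 1 != 2) {
            throw new AssertionError("expected 2");
        }
    }

    @MyTest
    public void alwaysFails() {
        throw new AssertionError("expected <3> but was <2>");
    }

    public void helper() {
        // not annotated, so the runner ignores it
    }
}
```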

xUnits
• JUnit: http://www.junit.org/ (Java)
• TestNG: http://testng.org/doc/index.html (Java)
• VBUnit: http://www.vbunit.com/ (Visual Basic)
• xUnit.net: http://www.codeplex.com/xunit (.NET)
• NUnit: http://www.nunit.org/ (.NET)
• Many xUnits: http://www.xprogramming.com/software.htm
• Useful short info about TDD and other xUnits: http://www.agiledata.org/essays/tdd.html

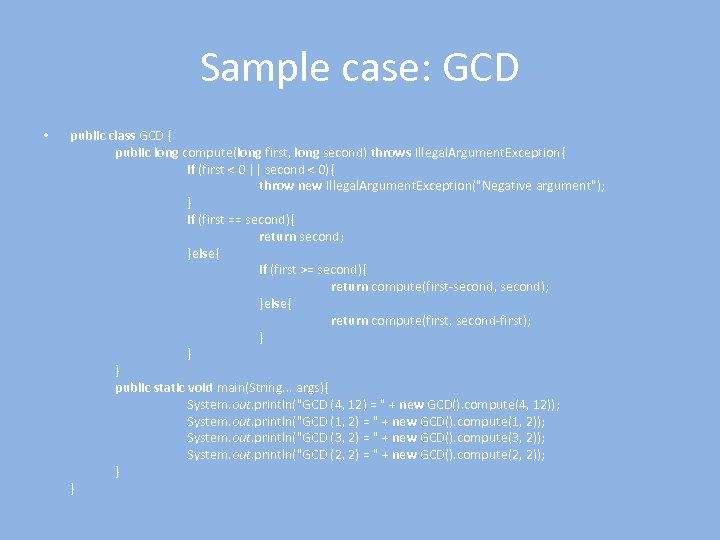

Sample case: GCD

    public class GCD {
        // Subtraction-based version of Euclid's algorithm.
        // Note: if exactly one argument is 0, the recursion never reaches
        // first == second and ends in a StackOverflowError.
        public long compute(long first, long second) throws IllegalArgumentException {
            if (first < 0 || second < 0) {
                throw new IllegalArgumentException("Negative argument");
            }
            if (first == second) {
                return second;
            } else if (first >= second) {
                return compute(first - second, second);
            } else {
                return compute(first, second - first);
            }
        }

        public static void main(String... args) {
            // Ad hoc testing: correctness is judged by reading the console output
            System.out.println("GCD (4, 12) = " + new GCD().compute(4, 12));
            System.out.println("GCD (1, 2) = " + new GCD().compute(1, 2));
            System.out.println("GCD (3, 2) = " + new GCD().compute(3, 2));
            System.out.println("GCD (2, 2) = " + new GCD().compute(2, 2));
        }
    }

Short analysis
• Tested with external testing code (the main function)
• Provides a set of cases for the developer to test
• Shows how the compute method can be called
• Difficult to assess the correctness of the results when many tests are run
• Missed some important cases, e.g.: System.out.println("GCD (0, 2) = " + new GCD().compute(0, 2));

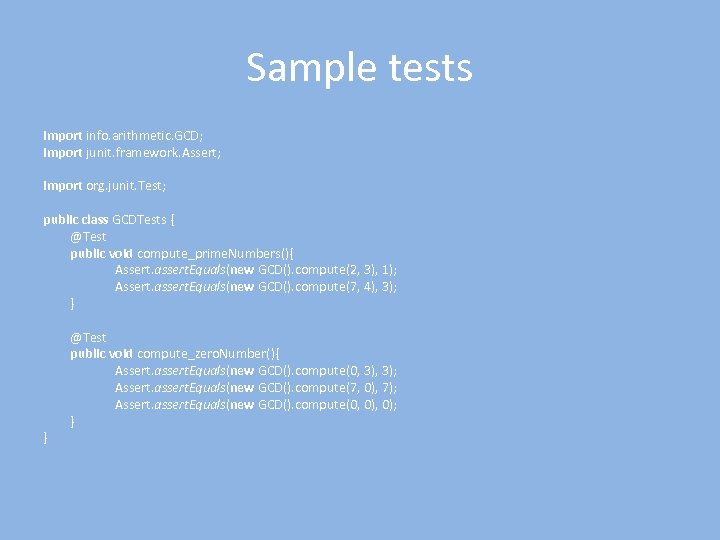

Using JUnit
• Create a project GCD.tests in the IDE
• Add the JUnit 4 library to the project
• Create a dependency from the new project to the GCD project
• Start writing test cases: see next slide

Sample tests

    import info.arithmetic.GCD;
    import org.junit.Assert;
    import org.junit.Test;

    public class GCDTests {
        @Test
        public void compute_primeNumbers() {
            // assertEquals(expected, actual)
            Assert.assertEquals(1, new GCD().compute(2, 3));
            Assert.assertEquals(1, new GCD().compute(7, 4));
        }

        @Test
        public void compute_zeroNumber() {
            // By convention gcd(n, 0) = gcd(0, n) = n, and gcd(0, 0) = 0
            Assert.assertEquals(3, new GCD().compute(0, 3));
            Assert.assertEquals(7, new GCD().compute(7, 0));
            Assert.assertEquals(0, new GCD().compute(0, 0));
        }
    }

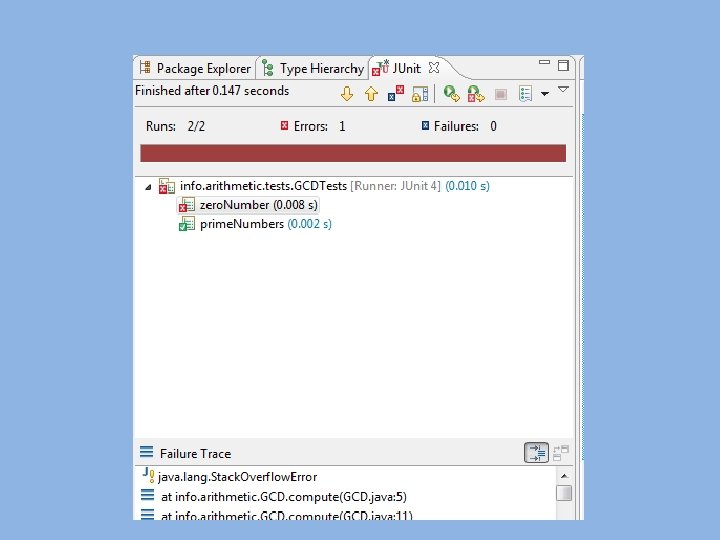

Observations
• Tests are separated from the code: the test lifecycle may differ from the code lifecycle
• The purpose of each test is easier to see from its name
• Forces the test designer to think twice about the functionality
• The test results are formally collected and can be part of reports: see next slide

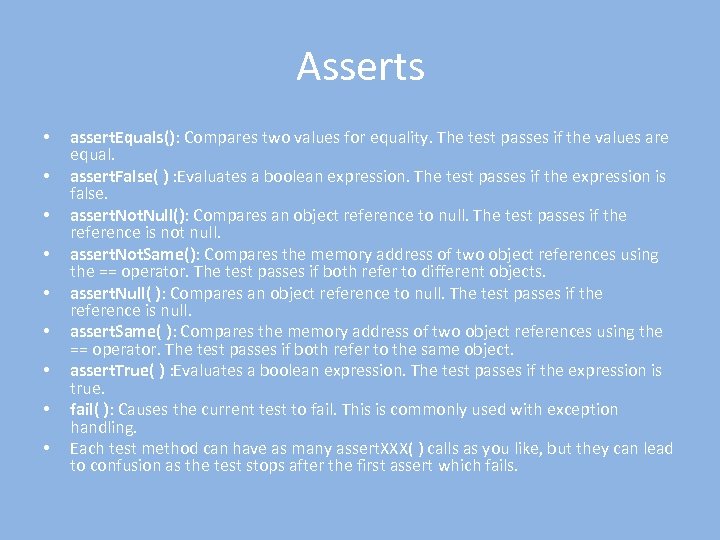

Asserts
• assertEquals(): compares two values for equality (using equals() for objects). The test passes if the values are equal.
• assertFalse(): evaluates a boolean expression. The test passes if the expression is false.
• assertNotNull(): compares an object reference to null. The test passes if the reference is not null.
• assertNotSame(): compares two object references using the == operator. The test passes if they refer to different objects.
• assertNull(): compares an object reference to null. The test passes if the reference is null.
• assertSame(): compares two object references using the == operator. The test passes if both refer to the same object.
• assertTrue(): evaluates a boolean expression. The test passes if the expression is true.
• fail(): causes the current test to fail. This is commonly used with exception handling.
Each test method can have as many assertXXX() calls as you like, but many asserts can be confusing: the test stops at the first assert that fails, so the remaining asserts are not evaluated.
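The difference between equality and sameness, and the fact that a test stops at its first failing assert, can be shown with a small hypothetical `MiniAssert` class that mirrors the semantics described above (equality via `equals()`, sameness via `==`). It is a sketch for illustration only; in a real test you would call the corresponding methods of `org.junit.Assert`.

```java
// Hypothetical minimal assertions mirroring the semantics listed above.
final class MiniAssert {
    private MiniAssert() {
    }

    // Passes when both are null or expected.equals(actual)
    static void assertEquals(Object expected, Object actual) {
        if (expected == null ? actual != null : !expected.equals(actual)) {
            fail("expected <" + expected + "> but was <" + actual + ">");
        }
    }

    // Passes only when both references point to the same object
    static void assertSame(Object expected, Object actual) {
        if (expected != actual) {
            fail("expected the same object, but the references differ");
        }
    }

    static void fail(String message) {
        throw new AssertionError(message);
    }
}

class AssertDemo {
    public static void main(String[] args) {
        String a = "gcd";
        String b = new String("gcd"); // equal content, different object

        MiniAssert.assertEquals(a, b); // passes: a.equals(b) is true

        try {
            MiniAssert.assertSame(a, b);       // fails: a != b
            MiniAssert.assertEquals("x", "y"); // never reached
        } catch (AssertionError e) {
            System.out.println("stopped at first failure: " + e.getMessage());
        }
    }
}
```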

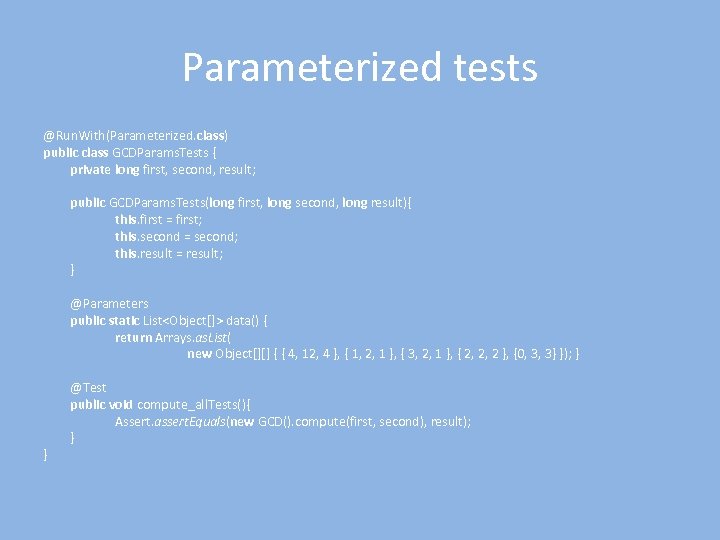

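Parameterized tests

    import java.util.Arrays;
    import java.util.List;

    import info.arithmetic.GCD;
    import org.junit.Assert;
    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.junit.runners.Parameterized;
    import org.junit.runners.Parameterized.Parameters;

    @RunWith(Parameterized.class)
    public class GCDParamsTests {
        private final long first, second, result;

        // For each data row, JUnit passes the row's values to this constructor
        public GCDParamsTests(long first, long second, long result) {
            this.first = first;
            this.second = second;
            this.result = result;
        }

        @Parameters
        public static List<Object[]> data() {
            // Long literals, so each value matches a long constructor parameter
            return Arrays.asList(new Object[][] {
                {4L, 12L, 4L}, {1L, 2L, 1L}, {3L, 2L, 1L}, {2L, 2L, 2L}, {0L, 3L, 3L}
            });
        }

        @Test
        public void compute_allTests() {
            Assert.assertEquals(result, new GCD().compute(first, second));
        }
    }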

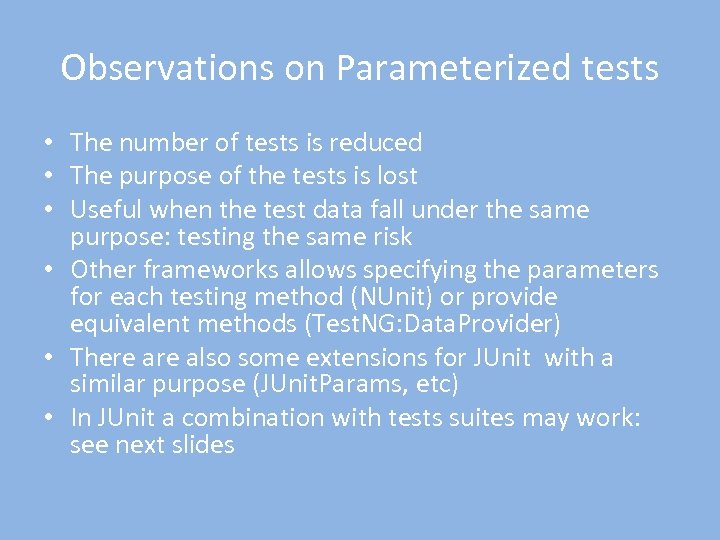

Observations on Parameterized tests
• The number of test methods is reduced
• The purpose of each individual test is lost
• Useful when the test data serve the same purpose: testing the same risk
• Other frameworks allow specifying the parameters for each test method (NUnit) or provide equivalent mechanisms (TestNG: DataProvider)
• There are also some JUnit extensions with a similar purpose (JUnitParams, etc.)
• In JUnit, a combination with test suites may work: see next slides

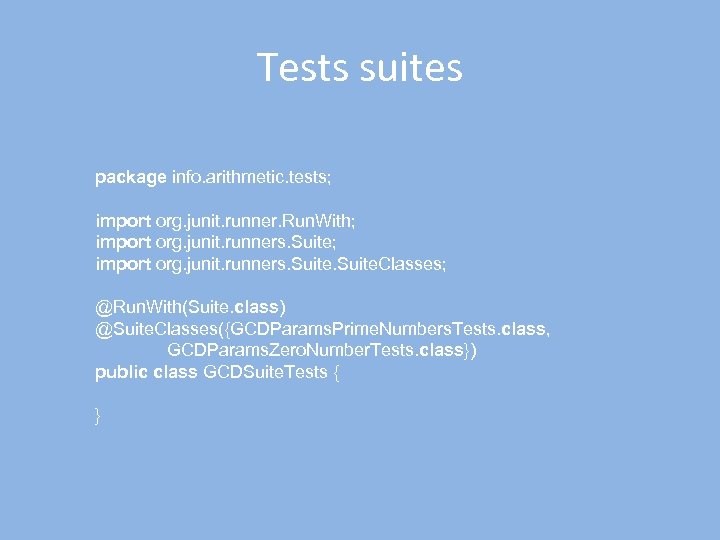

Test suites

    package info.arithmetic.tests;

    import org.junit.runner.RunWith;
    import org.junit.runners.Suite;
    import org.junit.runners.Suite.SuiteClasses;

    // Runs both parameterized test classes as a single suite
    @RunWith(Suite.class)
    @SuiteClasses({GCDParamsPrimeNumbersTests.class, GCDParamsZeroNumberTests.class})
    public class GCDSuiteTests {
    }

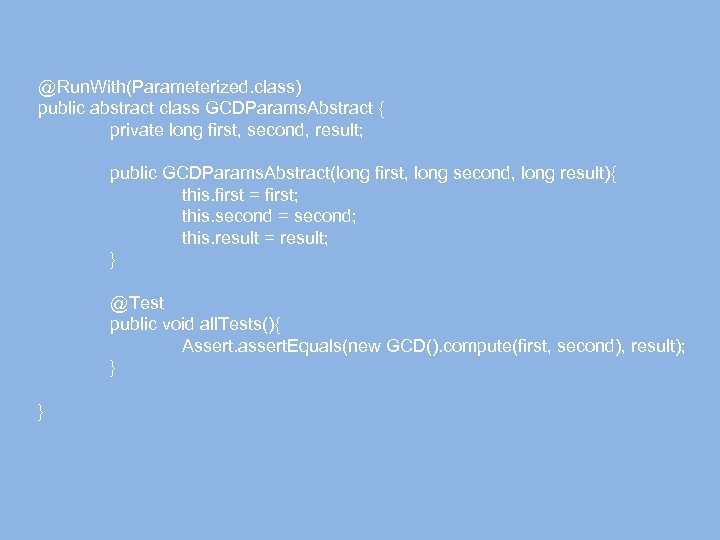

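    import info.arithmetic.GCD;
    import org.junit.Assert;
    import org.junit.Test;
    import org.junit.runner.RunWith;
    import org.junit.runners.Parameterized;

    // Shared test logic; @RunWith is inherited, so each subclass runs as a parameterized test
    @RunWith(Parameterized.class)
    public abstract class GCDParamsAbstract {
        private final long first, second, result;

        public GCDParamsAbstract(long first, long second, long result) {
            this.first = first;
            this.second = second;
            this.result = result;
        }

        @Test
        public void allTests() {
            Assert.assertEquals(result, new GCD().compute(first, second));
        }
    }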

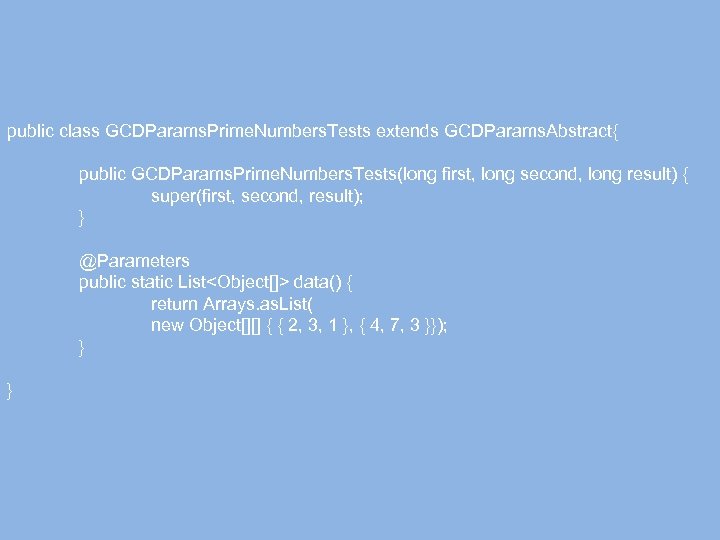

    import java.util.Arrays;
    import java.util.List;

    import org.junit.runners.Parameterized.Parameters;

    public class GCDParamsPrimeNumbersTests extends GCDParamsAbstract {
        public GCDParamsPrimeNumbersTests(long first, long second, long result) {
            super(first, second, result);
        }

        @Parameters
        public static List<Object[]> data() {
            return Arrays.asList(new Object[][] {{2L, 3L, 1L}, {4L, 7L, 1L}});
        }
    }

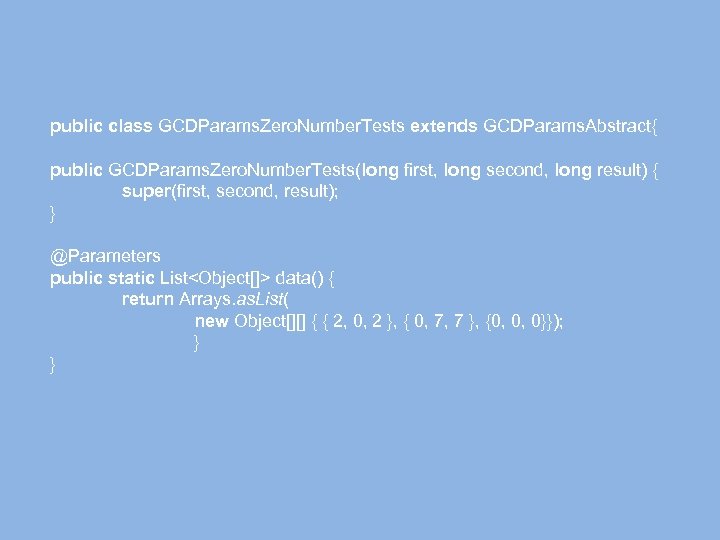

    import java.util.Arrays;
    import java.util.List;

    import org.junit.runners.Parameterized.Parameters;

    public class GCDParamsZeroNumberTests extends GCDParamsAbstract {
        public GCDParamsZeroNumberTests(long first, long second, long result) {
            super(first, second, result);
        }

        @Parameters
        public static List<Object[]> data() {
            return Arrays.asList(new Object[][] {{2L, 0L, 2L}, {0L, 7L, 7L}, {0L, 0L, 0L}});
        }
    }

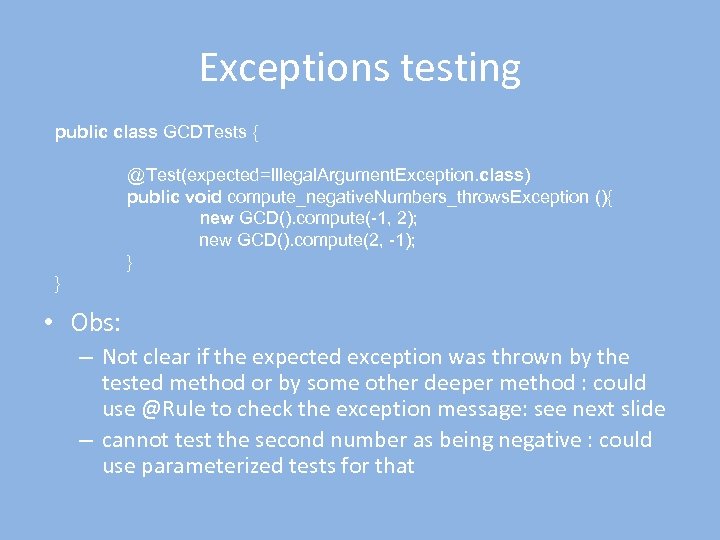

Exceptions testing

    public class GCDTests {
        @Test(expected = IllegalArgumentException.class)
        public void compute_negativeNumbers_throwsException() {
            new GCD().compute(-1, 2); // throws, so the test ends here
            new GCD().compute(2, -1); // never executed
        }
    }

• Obs:
  – It is not clear whether the expected exception was thrown by the tested method or by some other, deeper method: @Rule could be used to check the exception message, see next slide
  – The second number cannot be tested as being negative, because the first call already throws: parameterized tests could be used for that

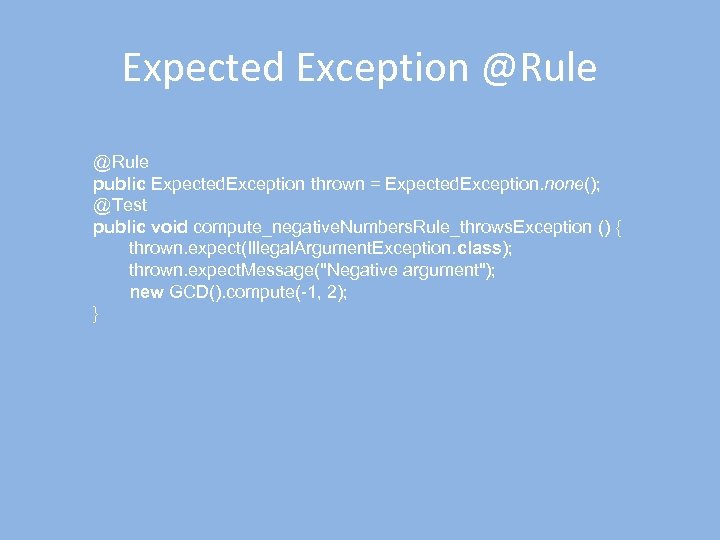

Expected Exception

    @Rule
    public ExpectedException thrown = ExpectedException.none();

    @Test
    public void compute_negativeNumbersRule_throwsException() {
        thrown.expect(IllegalArgumentException.class);
        thrown.expectMessage("Negative argument");
        new GCD().compute(-1, 2);
    }
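Both concerns from the previous slide (checking the exception message, and testing each negative argument separately) can also be handled with a helper that runs a piece of code and returns the exception it throws. The sketch below uses a hypothetical `Expect.expectThrows` helper and a hypothetical `ValidatingGcd` that keeps the slides' validation and message; JUnit 4.13 and later ship a comparable `Assert.assertThrows`. With one test per negative argument, the second case is no longer hidden behind the first.

```java
// Hypothetical helper: runs the code and returns the exception it throws,
// failing if nothing is thrown or if an exception of another type is thrown.
final class Expect {
    private Expect() {
    }

    static <T extends Throwable> T expectThrows(Class<T> type, Runnable code) {
        try {
            code.run();
        } catch (Throwable thrown) {
            if (type.isInstance(thrown)) {
                return type.cast(thrown);
            }
            throw new AssertionError("expected " + type.getName() + " but got " + thrown, thrown);
        }
        throw new AssertionError("expected " + type.getName() + " but nothing was thrown");
    }
}

// Hypothetical unit under test with the same validation as the slides' GCD.
final class ValidatingGcd {
    private ValidatingGcd() {
    }

    static long compute(long first, long second) {
        if (first < 0 || second < 0) {
            throw new IllegalArgumentException("Negative argument");
        }
        return second == 0 ? first : compute(second, first % second);
    }
}

class NegativeArgumentTests {
    static void compute_negativeFirst_throwsException() {
        IllegalArgumentException e = Expect.expectThrows(
                IllegalArgumentException.class, () -> ValidatingGcd.compute(-1, 2));
        checkMessage(e);
    }

    static void compute_negativeSecond_throwsException() {
        IllegalArgumentException e = Expect.expectThrows(
                IllegalArgumentException.class, () -> ValidatingGcd.compute(2, -1));
        checkMessage(e);
    }

    // The message shows the exception came from our own validation
    private static void checkMessage(IllegalArgumentException e) {
        if (!"Negative argument".equals(e.getMessage())) {
            throw new AssertionError("unexpected message: " + e.getMessage());
        }
    }
}
```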

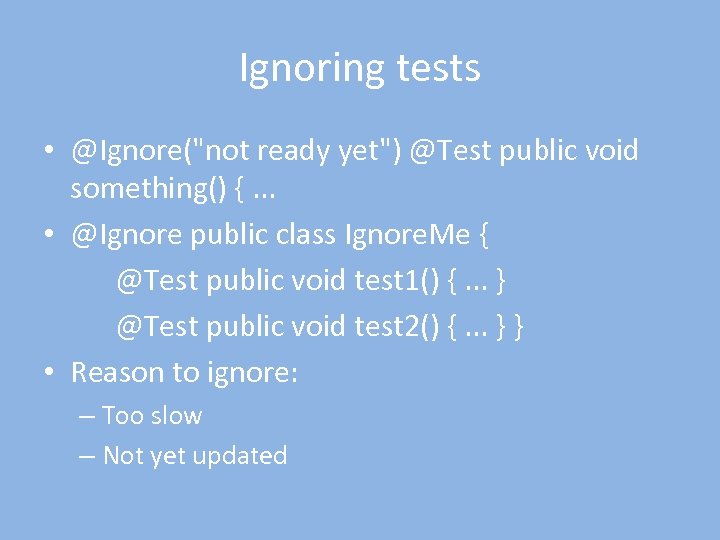

Ignoring tests
• @Ignore("not ready yet") @Test public void something() {...
• @Ignore public class IgnoreMe { @Test public void test1() {...} @Test public void test2() {...} }
• Reasons to ignore:
  – Too slow
  – Not yet updated

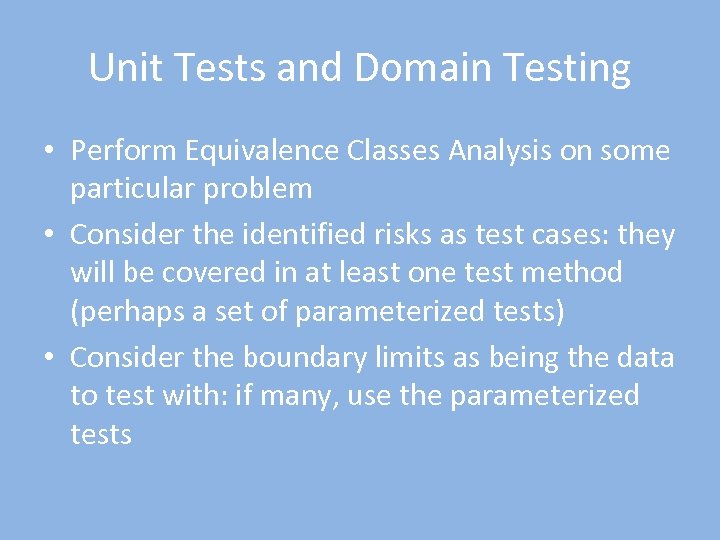

Unit Tests and Domain Testing
• Perform equivalence class analysis on the particular problem
• Treat the identified risks as test cases: each is covered by at least one test method (perhaps a set of parameterized tests)
• Use the boundary values as the data to test with: if there are many, use parameterized tests
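Applied to the GCD example, the analysis might produce the partitions below. This is a sketch under assumptions: the chosen classes (zero arguments, equal arguments, coprime arguments, arguments with a common divisor, negative arguments) are one reasonable split, and the unit under test is a hypothetical remainder-based `EuclidGcd`, used instead of the slides' subtraction-based `GCD` so that the zero cases terminate. In JUnit, each row would become one parameterized test case.

```java
// Hypothetical unit under test: Euclid's algorithm with the remainder operator.
final class EuclidGcd {
    private EuclidGcd() {
    }

    static long compute(long first, long second) {
        if (first < 0 || second < 0) {
            throw new IllegalArgumentException("Negative argument");
        }
        while (second != 0) {
            long remainder = first % second;
            first = second;
            second = remainder;
        }
        return first;
    }
}

class GcdDomainTests {
    // One row per test case: {first, second, expected}, grouped by equivalence class.
    static final long[][] VALID_CASES = {
        {0, 0, 0},                      // both arguments zero (boundary)
        {0, 7, 7}, {7, 0, 7}, {0, 1, 1}, // exactly one argument zero
        {2, 2, 2},                      // equal arguments
        {1, 2, 1}, {3, 2, 1}, {7, 4, 1}, // coprime, including the boundary value 1
        {4, 12, 4}, {12, 8, 4},         // common divisor greater than 1
    };

    // Invalid class, tested at its boundary value -1 in each position.
    static final long[][] INVALID_CASES = {{-1, 2}, {2, -1}};

    static void runAll() {
        for (long[] row : VALID_CASES) {
            long actual = EuclidGcd.compute(row[0], row[1]);
            if (actual != row[2]) {
                throw new AssertionError("gcd(" + row[0] + ", " + row[1] + ") expected "
                        + row[2] + " but was " + actual);
            }
        }
        for (long[] row : INVALID_CASES) {
            try {
                EuclidGcd.compute(row[0], row[1]);
                throw new AssertionError("no exception for " + row[0] + ", " + row[1]);
            } catch (IllegalArgumentException expected) {
                // the invalid class is rejected, as specified
            }
        }
    }
}
```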

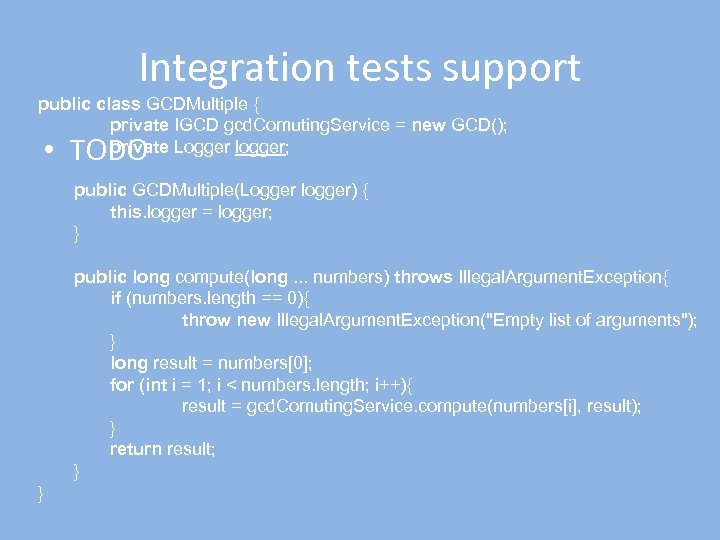

Integration tests support

    import java.util.logging.Logger;

    public class GCDMultiple {
        private IGCD gcdComutingService = new GCD();
        private Logger logger;
        // TODO

        public GCDMultiple(Logger logger) {
            this.logger = logger;
        }

        public long compute(long... numbers) throws IllegalArgumentException {
            // Also reject a null array, so that compute(null) throws
            // IllegalArgumentException instead of NullPointerException
            if (numbers == null || numbers.length == 0) {
                throw new IllegalArgumentException("Empty list of arguments");
            }
            long result = numbers[0];
            for (int i = 1; i < numbers.length; i++) {
                result = gcdComutingService.compute(numbers[i], result);
            }
            return result;
        }
    }

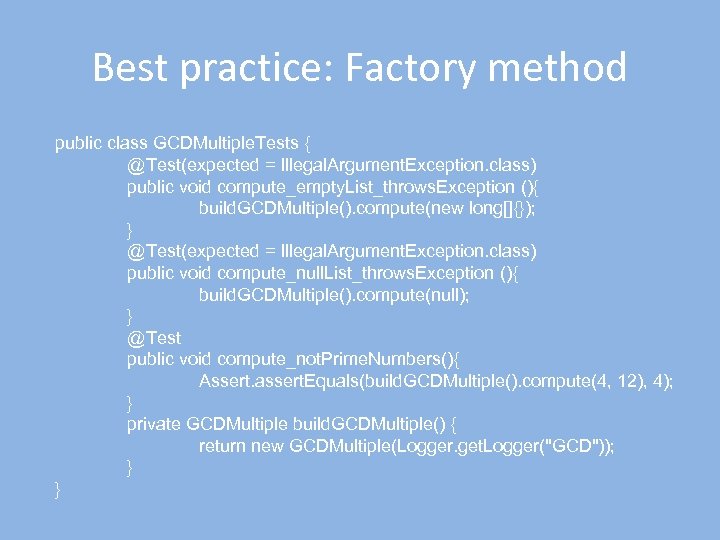

Best practice: Factory method

    import java.util.logging.Logger;
    import org.junit.Assert;
    import org.junit.Test;

    public class GCDMultipleTests {
        @Test(expected = IllegalArgumentException.class)
        public void compute_emptyList_throwsException() {
            buildGCDMultiple().compute(new long[]{});
        }

        @Test(expected = IllegalArgumentException.class)
        public void compute_nullList_throwsException() {
            buildGCDMultiple().compute(null);
        }

        @Test
        public void compute_notPrimeNumbers() {
            Assert.assertEquals(4, buildGCDMultiple().compute(4, 12));
        }

        // Factory method: the only place that knows how GCDMultiple is constructed
        private GCDMultiple buildGCDMultiple() {
            return new GCDMultiple(Logger.getLogger("GCD"));
        }
    }

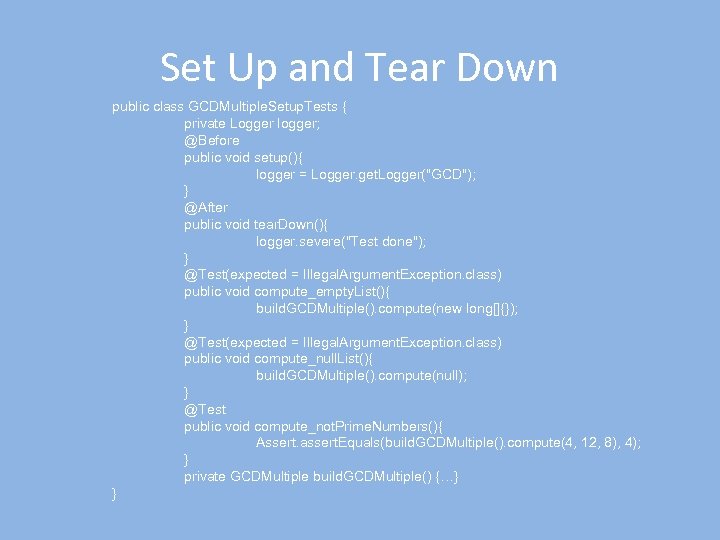

Set Up and Tear Down

    import java.util.logging.Logger;
    import org.junit.After;
    import org.junit.Assert;
    import org.junit.Before;
    import org.junit.Test;

    public class GCDMultipleSetupTests {
        private Logger logger;

        @Before // runs before each test
        public void setup() {
            logger = Logger.getLogger("GCD");
        }

        @After // runs after each test
        public void tearDown() {
            logger.severe("Test done");
        }

        @Test(expected = IllegalArgumentException.class)
        public void compute_emptyList() {
            buildGCDMultiple().compute(new long[]{});
        }

        @Test(expected = IllegalArgumentException.class)
        public void compute_nullList() {
            buildGCDMultiple().compute(null);
        }

        @Test
        public void compute_notPrimeNumbers() {
            Assert.assertEquals(4, buildGCDMultiple().compute(4, 12, 8));
        }

        private GCDMultiple buildGCDMultiple() {…}
    }

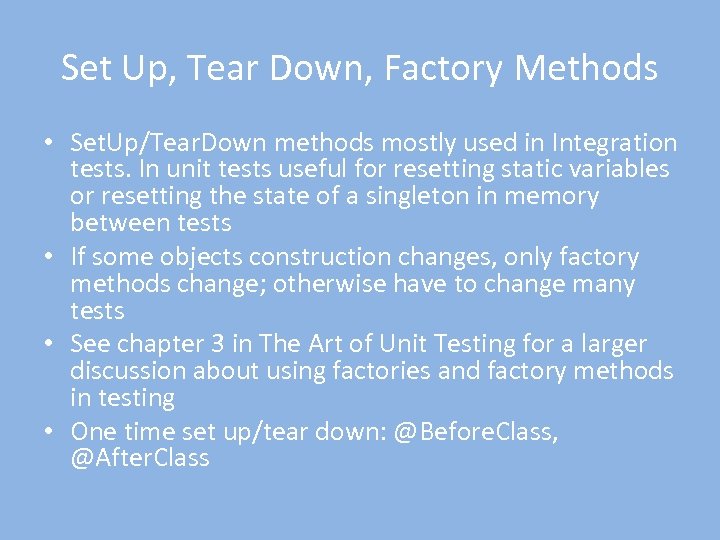

Set Up, Tear Down, Factory Methods
• SetUp/TearDown methods are mostly used in integration tests. In unit tests, they are useful for resetting static variables or the state of an in-memory singleton between tests
• If the way some objects are constructed changes, only the factory methods change; otherwise many tests would have to change
• See chapter 3 of The Art of Unit Testing for a larger discussion of using factories and factory methods in testing
• One-time set up/tear down: @BeforeClass, @AfterClass

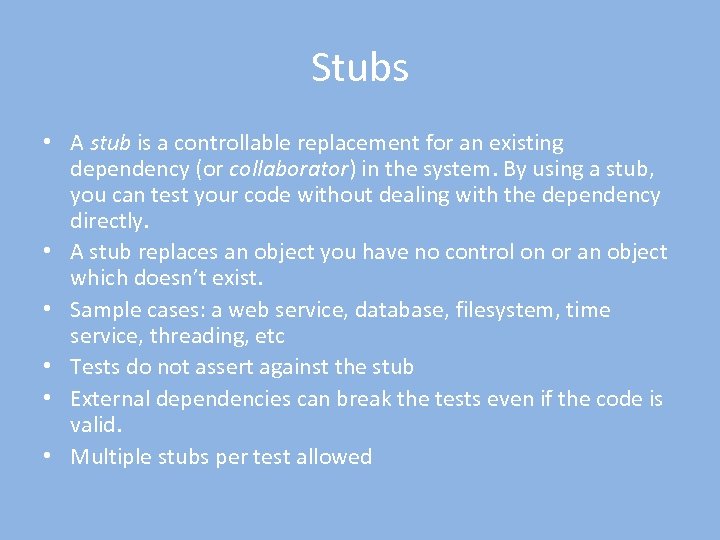

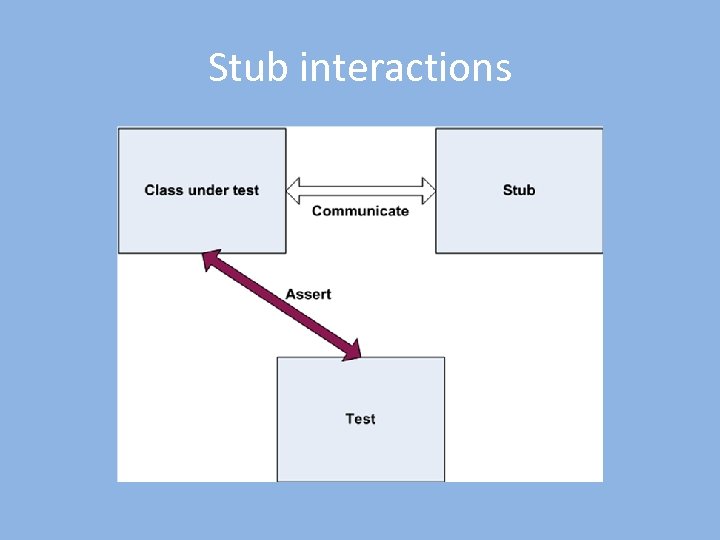

Stubs
• A stub is a controllable replacement for an existing dependency (or collaborator) in the system. By using a stub, you can test your code without dealing with the dependency directly.
• A stub replaces an object you have no control over, or an object that doesn't exist.
• Sample cases: a web service, database, filesystem, time service, threading, etc.
• Tests do not assert against the stub
• Without stubs, external dependencies can break the tests even if the code is valid.
• Multiple stubs per test are allowed
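A minimal stub for one of the sample cases, a time service. The names `TimeProvider`, `FixedTimeStub`, and `Greeter` are hypothetical. The production implementation reads the real clock, which a test cannot control; the stub returns a fixed time chosen by the test. The test then asserts against `Greeter`'s result, not against the stub.

```java
import java.time.LocalTime;

// Abstraction over the external dependency (the system clock).
interface TimeProvider {
    LocalTime now();
}

// Production implementation: tests have no control over its result.
class SystemTimeProvider implements TimeProvider {
    @Override
    public LocalTime now() {
        return LocalTime.now();
    }
}

// Stub: a controllable replacement that returns a fixed, test-chosen time.
class FixedTimeStub implements TimeProvider {
    private final LocalTime fixed;

    FixedTimeStub(LocalTime fixed) {
        this.fixed = fixed;
    }

    @Override
    public LocalTime now() {
        return fixed;
    }
}

// Hypothetical unit under test.
class Greeter {
    private final TimeProvider time;

    Greeter(TimeProvider time) {
        this.time = time;
    }

    String greet() {
        return time.now().isBefore(LocalTime.NOON) ? "Good morning" : "Good afternoon";
    }
}
```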

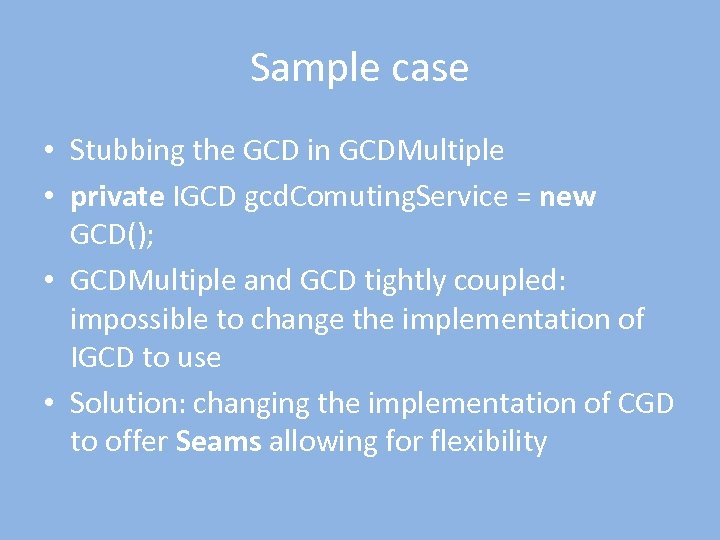

Sample case
• Stubbing the GCD in GCDMultiple
• private IGCD gcdComutingService = new GCD();
• GCDMultiple and GCD are tightly coupled: it is impossible to change which IGCD implementation is used
• Solution: change the implementation of GCDMultiple to offer seams that allow for flexibility

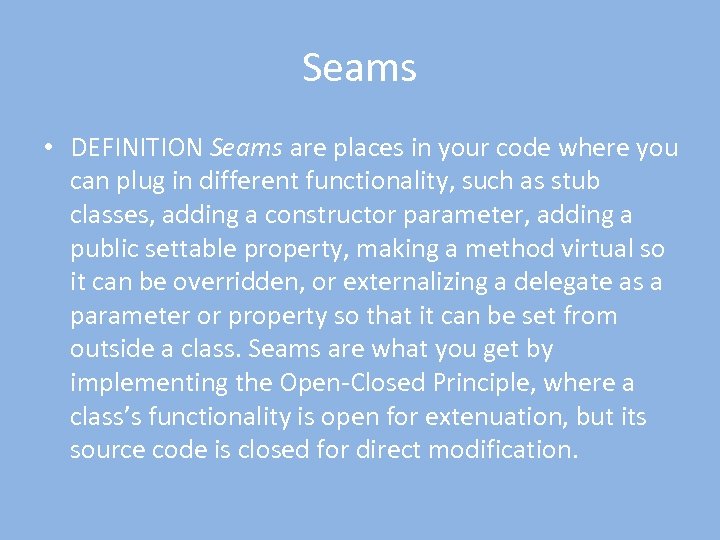

Seams
• Definition: seams are places in your code where you can plug in different functionality, such as stub classes, by adding a constructor parameter, adding a public settable property, making a method virtual (in Java: not final) so it can be overridden, or externalizing a delegate as a parameter or property so that it can be set from outside a class. Seams are what you get by implementing the Open-Closed Principle, where a class's functionality is open for extension, but its source code is closed for direct modification.

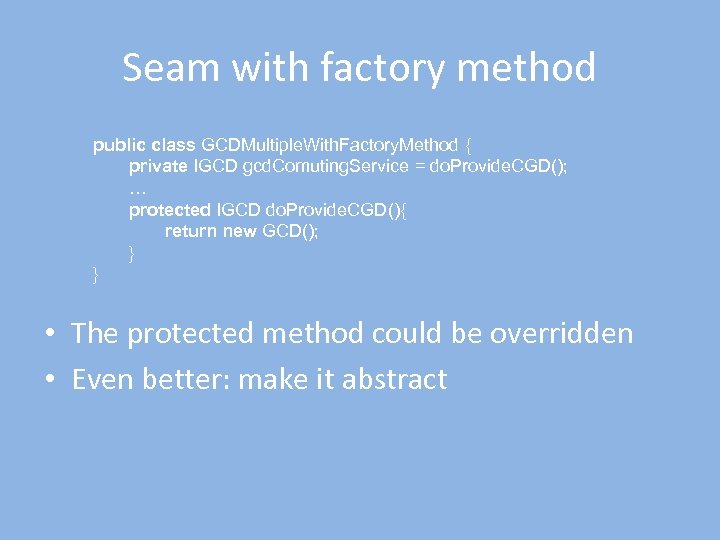

Seam with factory method

    public class GCDMultipleWithFactoryMethod {
        private IGCD gcdComutingService = doProvideCGD();
        …
        // Factory method: the seam that a test subclass can override
        protected IGCD doProvideCGD() {
            return new GCD();
        }
    }

• The protected method can be overridden
• Even better: make it abstract

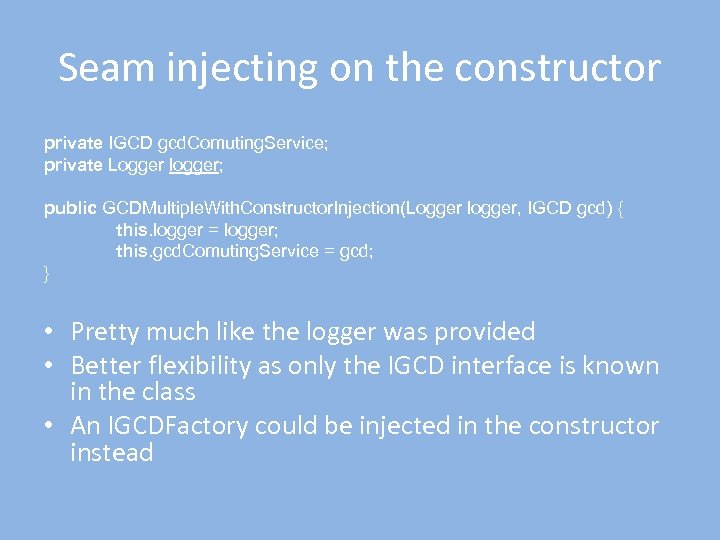

Seam: injection through the constructor

    private IGCD gcdComutingService;
    private Logger logger;

    public GCDMultipleWithConstructorInjection(Logger logger, IGCD gcd) {
        this.logger = logger;
        this.gcdComutingService = gcd;
    }

• The IGCD is provided the same way as the logger
• Better flexibility, as only the IGCD interface is known in the class
• An IGCDFactory could be injected in the constructor instead
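A self-contained sketch of the constructor seam in use. The declaration of `IGCD` does not appear in the slides, so its single `compute(long, long)` method is an assumption inferred from `GCDStub`; `CannedGcdStub` is a hypothetical stand-in with the same canned answers. Because the collaborator arrives through the constructor, the test needs no subclass: it simply passes the stub in.

```java
import java.util.logging.Logger;

// Assumed shape of IGCD, inferred from the GCDStub slide.
interface IGCD {
    long compute(long first, long second);
}

class GCDMultipleWithConstructorInjection {
    private final IGCD gcdComutingService;
    private final Logger logger;

    // The seam: the collaborator is supplied from outside the class
    GCDMultipleWithConstructorInjection(Logger logger, IGCD gcd) {
        this.logger = logger;
        this.gcdComutingService = gcd;
    }

    long compute(long... numbers) {
        if (numbers == null || numbers.length == 0) {
            throw new IllegalArgumentException("Empty list of arguments");
        }
        long result = numbers[0];
        for (int i = 1; i < numbers.length; i++) {
            result = gcdComutingService.compute(numbers[i], result);
        }
        logger.fine("GCD computed: " + result);
        return result;
    }
}

// Hypothetical stub: canned answers only, no real GCD is computed.
class CannedGcdStub implements IGCD {
    @Override
    public long compute(long first, long second) {
        if (first == 12 && second == 4) {
            return 4;
        }
        if (first == 8 && second == 4) {
            return 4;
        }
        return 0;
    }
}
```

Compared with the factory-method seam, nothing has to be overridden; the trade-off is that every caller must now supply an `IGCD`.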

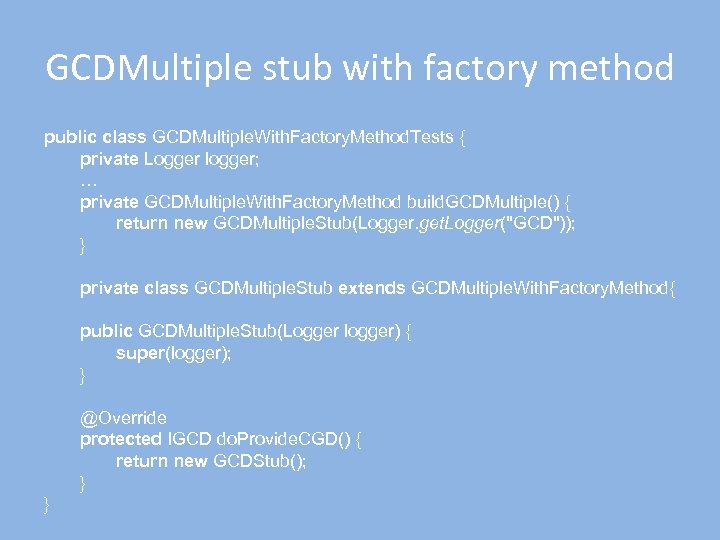

GCDMultiple stub with factory method

    public class GCDMultipleWithFactoryMethodTests {
        private Logger logger;
        …
        private GCDMultipleWithFactoryMethod buildGCDMultiple() {
            return new GCDMultipleStub(Logger.getLogger("GCD"));
        }

        // Test-only subclass that overrides the factory method to plug in the stub
        private class GCDMultipleStub extends GCDMultipleWithFactoryMethod {
            public GCDMultipleStub(Logger logger) {
                super(logger);
            }

            @Override
            protected IGCD doProvideCGD() {
                return new GCDStub();
            }
        }
    }

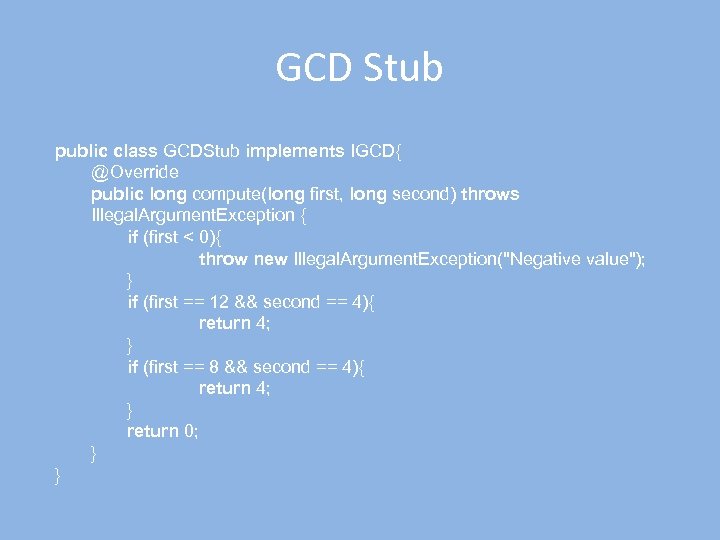

GCD Stub

    // Returns canned answers for the inputs used by the tests; no real GCD is computed
    public class GCDStub implements IGCD {
        @Override
        public long compute(long first, long second) throws IllegalArgumentException {
            if (first < 0) {
                throw new IllegalArgumentException("Negative value");
            }
            if (first == 12 && second == 4) {
                return 4;
            }
            if (first == 8 && second == 4) {
                return 4;
            }
            return 0;
        }
    }

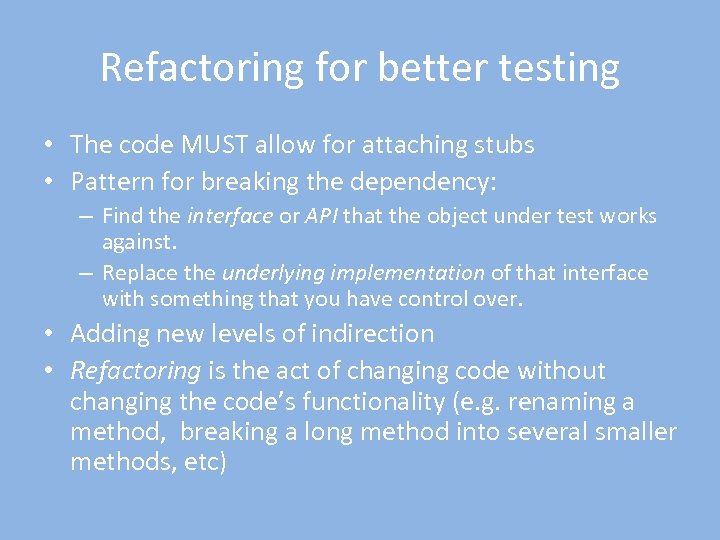

Refactoring for better testing
• The code MUST allow for attaching stubs
• Pattern for breaking the dependency:
  – Find the interface or API that the object under test works against.
  – Replace the underlying implementation of that interface with something that you have control over.
• Adding new levels of indirection
• Refactoring is the act of changing code without changing its functionality (e.g., renaming a method, breaking a long method into several smaller methods, etc.)

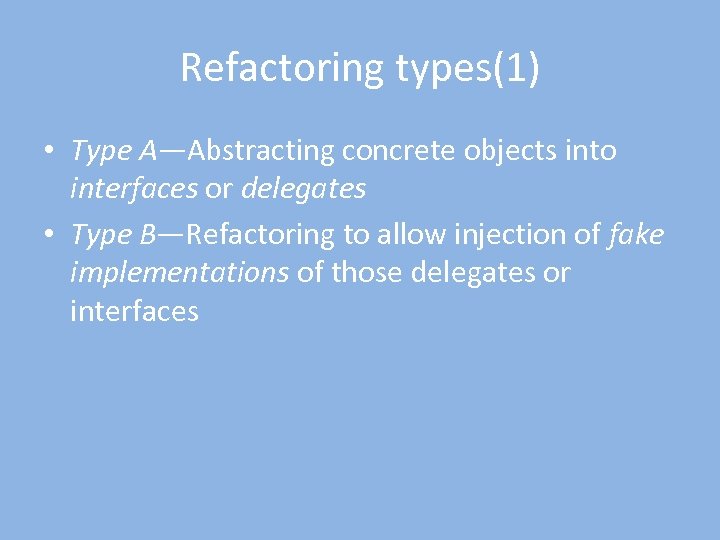

Refactoring types (1)
• Type A: abstracting concrete objects into interfaces or delegates
• Type B: refactoring to allow injection of fake implementations of those delegates or interfaces

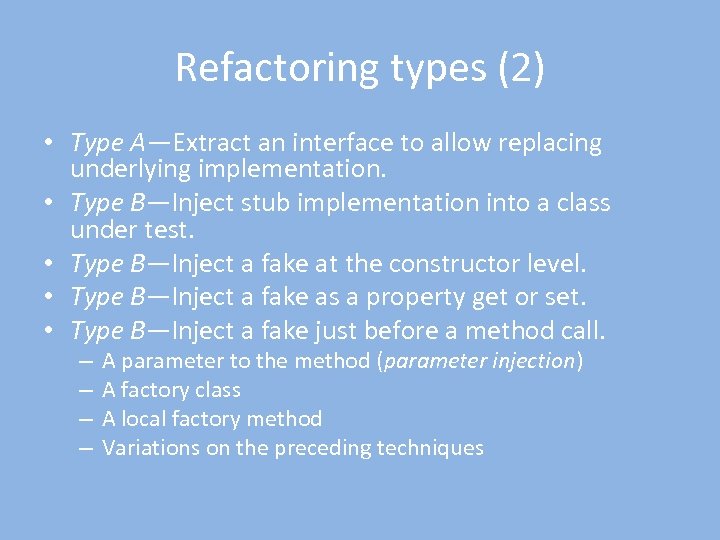

Refactoring types (2)
• Type A: extract an interface to allow replacing the underlying implementation.
• Type B: inject a stub implementation into a class under test.
• Type B: inject a fake at the constructor level.
• Type B: inject a fake as a property get or set.
• Type B: inject a fake just before a method call, using:
  – A parameter to the method (parameter injection)
  – A factory class
  – A local factory method
  – Variations on the preceding techniques
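Two of the Type B variants, property injection and parameter injection, can be sketched on the same GCD theme. `IGCD` is again assumed to declare only `compute(long, long)`, and `RealGcd` and `GcdCalculator` are hypothetical names. Since `IGCD` then has a single abstract method, a test can supply a stub as a lambda.

```java
// Assumed shape of IGCD, inferred from the GCDStub slide.
interface IGCD {
    long compute(long first, long second);
}

// Hypothetical production implementation (no argument validation, illustration only).
class RealGcd implements IGCD {
    @Override
    public long compute(long first, long second) {
        while (second != 0) {
            long remainder = first % second;
            first = second;
            second = remainder;
        }
        return first;
    }
}

class GcdCalculator {
    // Property injection: a default is used unless a test replaces it
    private IGCD gcd = new RealGcd();

    void setGcd(IGCD gcd) {
        this.gcd = gcd;
    }

    long computeWithProperty(long a, long b) {
        return gcd.compute(a, b);
    }

    // Parameter injection: the caller passes the dependency with the call
    static long computeWithParameter(IGCD gcd, long a, long b) {
        return gcd.compute(a, b);
    }
}
```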

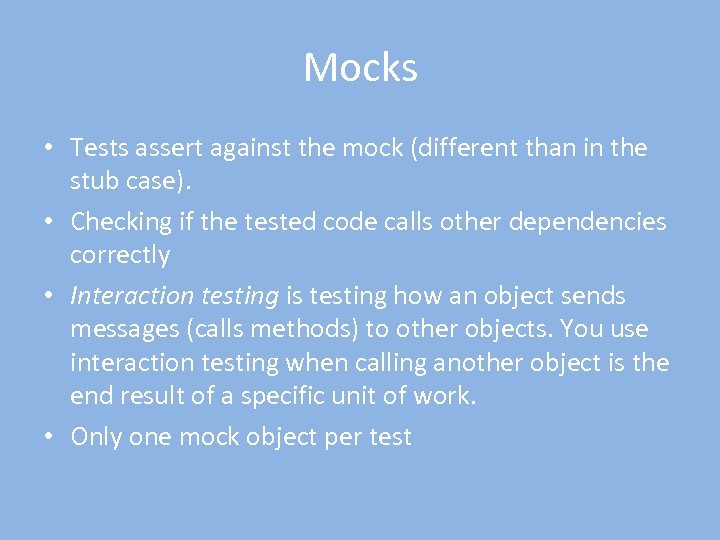

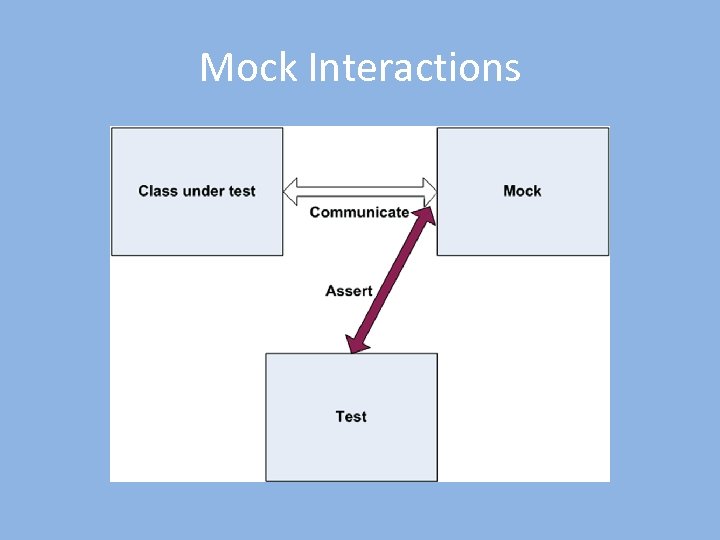

Mocks
• Tests assert against the mock (unlike the stub case).
• Mocks check whether the tested code calls its dependencies correctly
• Interaction testing is testing how an object sends messages (calls methods) to other objects. You use interaction testing when calling another object is the end result of a specific unit of work.
• Only one mock object per test
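A hand-written mock, unlike a stub, records how it was called so that the test can assert against it. The sketch assumes a hypothetical `IWebService` dependency and a hypothetical `LogAnalyzer` that must report file names shorter than 8 characters; the single assertion checks the interaction recorded by the one mock in the test.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical external dependency whose calls we want to verify.
interface IWebService {
    void logError(String message);
}

// Hand-written mock: records every call so the test can assert against it.
class WebServiceMock implements IWebService {
    final List<String> receivedMessages = new ArrayList<>();

    @Override
    public void logError(String message) {
        receivedMessages.add(message);
    }
}

// Hypothetical unit under test: calling the web service is its end result.
class LogAnalyzer {
    private final IWebService service;

    LogAnalyzer(IWebService service) {
        this.service = service;
    }

    void analyze(String fileName) {
        if (fileName.length() < 8) {
            service.logError("Filename too short: " + fileName);
        }
    }
}

class LogAnalyzerTests {
    static void analyze_tooShortFileName_callsWebService() {
        WebServiceMock mock = new WebServiceMock();
        LogAnalyzer analyzer = new LogAnalyzer(mock);

        analyzer.analyze("abc.ext");

        // The assertion is made against the mock, not against the analyzer
        List<String> expected = Collections.singletonList("Filename too short: abc.ext");
        if (!mock.receivedMessages.equals(expected)) {
            throw new AssertionError("unexpected calls: " + mock.receivedMessages);
        }
    }
}
```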

Stub interactions

Mock Interactions

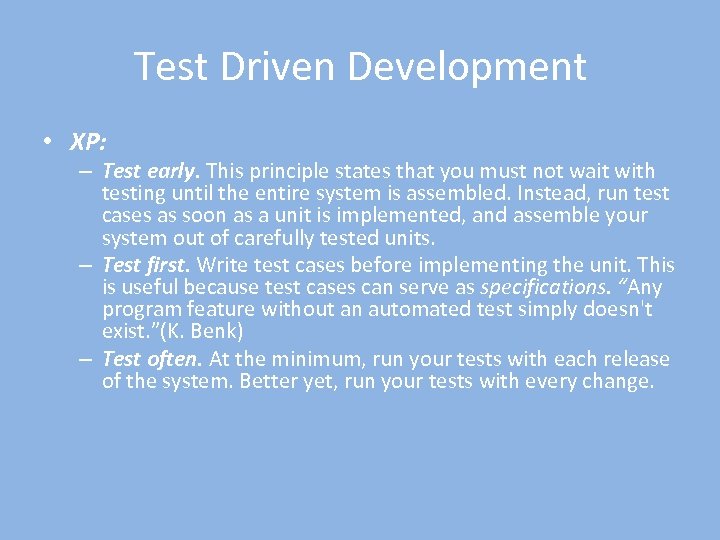

Test-Driven Development
• XP:
  – Test early. This principle states that you must not wait until the entire system is assembled before testing. Instead, run test cases as soon as a unit is implemented, and assemble your system out of carefully tested units.
  – Test first. Write test cases before implementing the unit. This is useful because test cases can serve as specifications. "Any program feature without an automated test simply doesn't exist." (K. Beck)
  – Test often. At a minimum, run your tests with each release of the system. Better yet, run your tests with every change.

When to test: classical (The Art of Unit Testing, 2nd edition)

Test-Driven Development
• Alerts the programmer to mistakes as soon as possible
• A programmer taking a TDD approach refuses to write a new function until there is first a test that fails because that function isn't present
• If it's worth building, it's worth testing.
• If it's not worth testing, why are you wasting your time working on it?
• You communicate your intentions twice, stating the same idea in different ways: first with a test, then with production code. When they match, it's likely they were both coded correctly. If they don't, there's a mistake somewhere.

When to test: alternative (The Art of Unit Testing, 2nd edition)

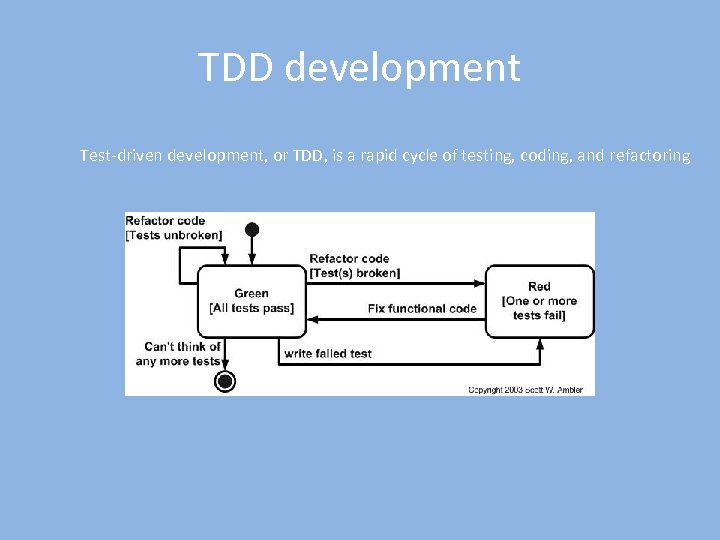

TDD cycle
Test-driven development, or TDD, is a rapid cycle of testing, coding, and refactoring.

TDD steps
• In TDD, the tests are written from the perspective of a class's public interface. They focus on the class's behavior, not its implementation.
• After TDD is finished, the tests remain. They're checked in with the rest of the code, and they act as living documentation of the code. More importantly, programmers run all the tests with (nearly) every build, ensuring that the code continues to work as originally intended. If someone accidentally changes the code's behavior (for example, with a misguided refactoring), the tests fail, signaling the mistake.

Step 1: Think of tests
• Your first step is to engage in a rather odd thought process. Imagine what behavior you want your code to have, then think of a small increment that will require fewer than five lines of code. Next, think of a test (also a few lines of code) that will fail unless that behavior is present.
• In other words, think of a test that will force you to add the next few lines of production code. This is the hardest part of TDD, because the concept of tests driving your code seems backward and because it can be difficult to think in small increments.

Step 2: Write failing tests (Red stage)
• In the first few tests, this often means you write your test to use method and class names that don't exist yet. This is intentional: it forces you to design your class's interface from the perspective of a user of the class, not as its implementer.
• After the test is coded, run your entire suite of tests and watch the new test fail. In most TDD testing tools, this will result in a red progress bar.
• Troubleshoot unexpected successes and unexpected failures.

Step 3: Write production code (Green stage)
• Next, write just enough production code to get the test to pass. Again, you should usually need fewer than five lines of code. Don't worry about design purity or conceptual elegance; just do what you need to do to make the test pass.
• Run your tests again, and watch all the tests pass. This will result in a green progress bar.
• If the test fails, get back to known-good code as quickly as you can and look for the errors in the newly written code.
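One Red/Green pass can be sketched with a hypothetical `isLeapYear` function. The tests are written first (they would not compile until `LeapYear` exists), and the production code is only the minimum that makes them pass. The full leap-year rule is intentionally missing: a later cycle would start with a new failing test, for example for the year 1900.

```java
// Red: written first; these tests do not compile until LeapYear exists.
class LeapYearTests {
    static void isLeapYear_divisibleByFour_returnsTrue() {
        if (!LeapYear.isLeapYear(2024)) {
            throw new AssertionError("2024 should be a leap year");
        }
    }

    static void isLeapYear_notDivisibleByFour_returnsFalse() {
        if (LeapYear.isLeapYear(2023)) {
            throw new AssertionError("2023 should not be a leap year");
        }
    }
}

// Green: just enough production code to make the tests above pass.
// The century rule is deliberately absent until a failing test demands it.
final class LeapYear {
    private LeapYear() {
    }

    static boolean isLeapYear(int year) {
        return year % 4 == 0;
    }
}
```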

Step 4: Refactor
• Review the code and look for possible improvements
• Don't anticipate future needs, and certainly don't add new behavior.
• Refactorings aren't supposed to change behavior. New behavior requires a failing test.

Step 5: Repeat the process
• Each time you finish the TDD cycle, you add a tiny bit of well-tested, well-designed code.
• The key to success with TDD is small increments. Typically, you'll run through several cycles very quickly, then spend more time on refactoring for a cycle or two, then speed up again.
• Do not skip refactoring and design; they are too important.
• Take very small steps, run the tests frequently, and minimize the time you spend with a red bar.
