7d6a7ac6f50966d6b4e5060ed27da7a2.ppt

- Number of slides: 11

SLICE: Simulation for LHCb and Integrated Control Environment. Gennady Kuznetsov & Glenn Patrick (RAL). Cosener’s House Workshop, 23rd May 2002.

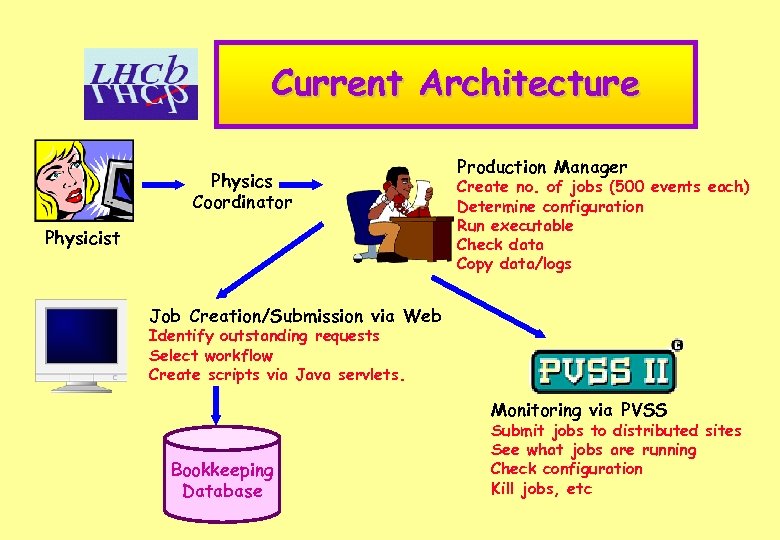

Current Architecture

- Physics Coordinator
- Physicist
- Production Manager
- Create no. of jobs (500 events each)
- Determine configuration
- Run executable
- Check data
- Copy data/logs
- Job Creation/Submission via Web
- Identify outstanding requests
- Select workflow
- Create scripts via Java servlets
- Monitoring via PVSS
- Bookkeeping Database
- Submit jobs to distributed sites
- See what jobs are running
- Check configuration
- Kill jobs, etc.
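
The job size quoted above (500 events each) fixes how many jobs a request turns into. A minimal sketch of that arithmetic follows; the function name and the rounding-up of a final partial job are assumptions, not something the slides state.

```python
EVENTS_PER_JOB = 500  # job size quoted on the slide


def jobs_needed(requested_events: int, events_per_job: int = EVENTS_PER_JOB) -> int:
    """Return how many jobs cover a request (hypothetical helper).

    Assumes a final partial job is still submitted, so the count rounds up.
    """
    if requested_events < 0:
        raise ValueError("requested_events must be non-negative")
    if events_per_job <= 0:
        raise ValueError("events_per_job must be positive")
    # Integer ceiling division without floating point.
    return -(-requested_events // events_per_job)


if __name__ == "__main__":
    print(jobs_needed(100_000))
```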

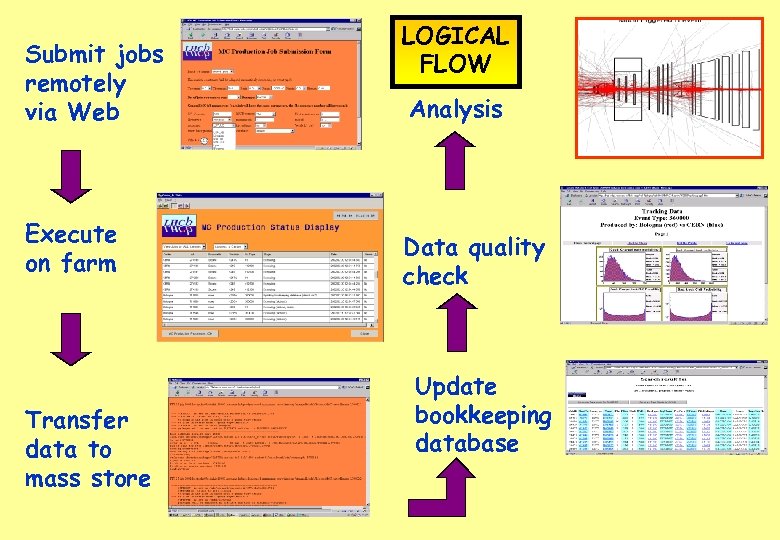

Logical Flow

- Submit jobs remotely via Web
- Execute on farm
- Transfer data to mass store
- Analysis
- Data quality check
- Update bookkeeping database

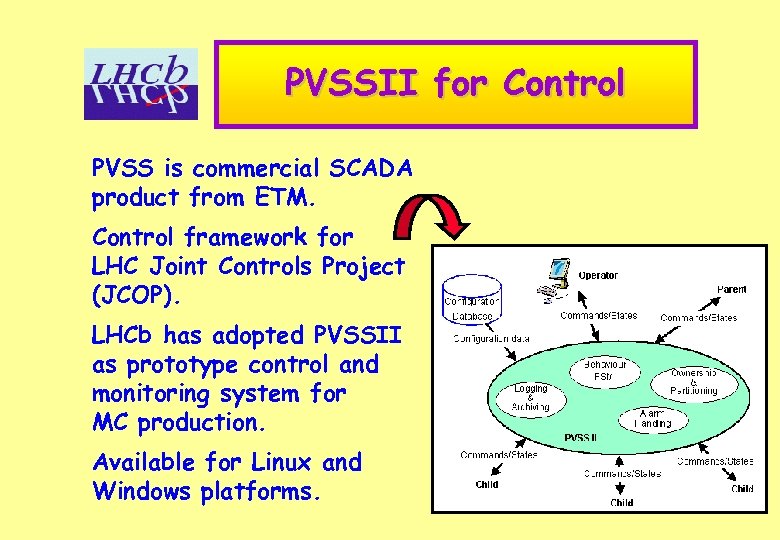

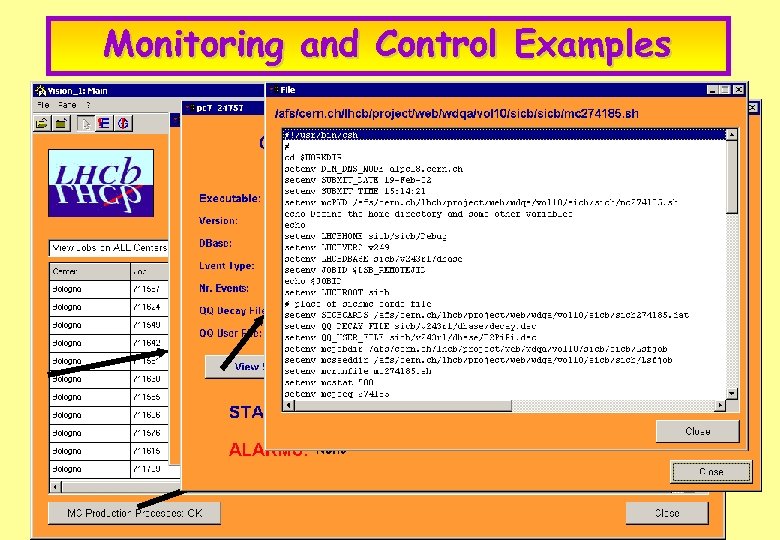

PVSSII for Control

- PVSS is a commercial SCADA product from ETM.
- Control framework for the LHC Joint Controls Project (JCOP).
- LHCb has adopted PVSSII as the prototype control and monitoring system for MC production.
- Available for Linux and Windows platforms.

Monitoring and Control Examples

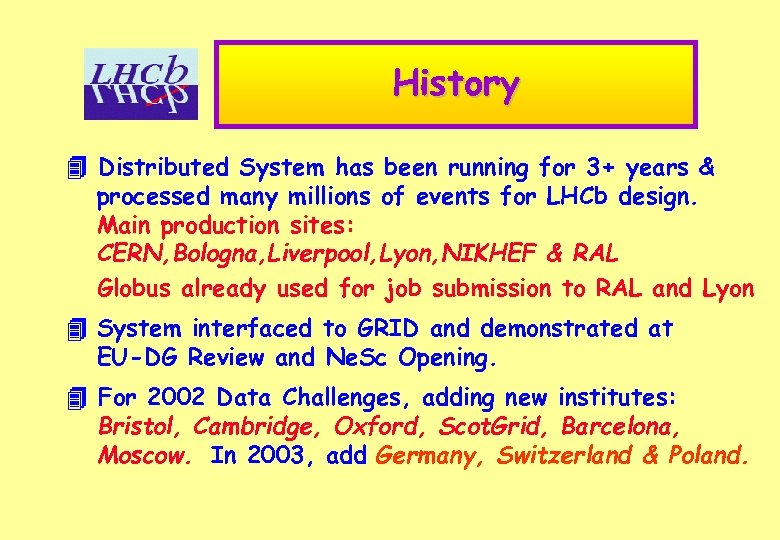

History

- The distributed system has been running for 3+ years and has processed many millions of events for the LHCb design.
- Main production sites: CERN, Bologna, Liverpool, Lyon, NIKHEF & RAL.
- Globus is already used for job submission to RAL and Lyon.
- System interfaced to GRID and demonstrated at the EU-DG Review and the NeSC Opening.
- For the 2002 Data Challenges, new institutes are being added: Bristol, Cambridge, Oxford, ScotGrid, Barcelona, Moscow.
- In 2003, add Germany, Switzerland & Poland.

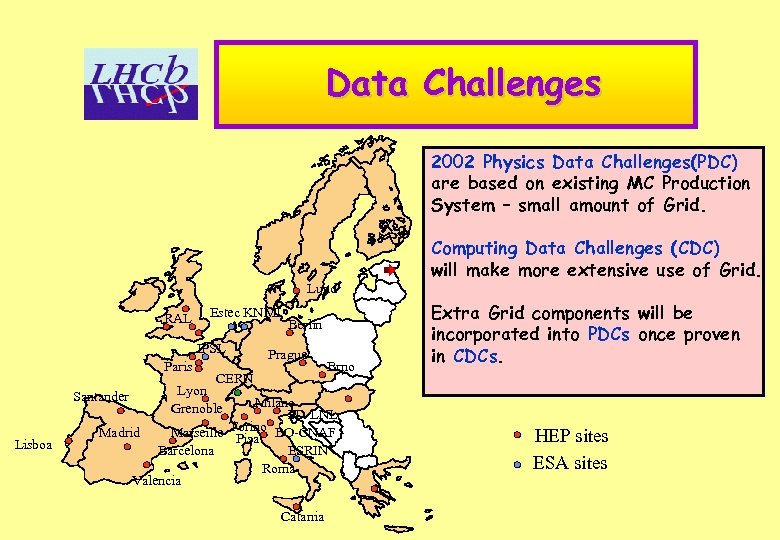

Data Challenges 2002

- Physics Data Challenges (PDC) are based on the existing MC Production System, with a small amount of Grid.
- Computing Data Challenges (CDC) will make more extensive use of Grid.
- Extra Grid components will be incorporated into PDCs once proven in CDCs.

Map labels: (>40); Lund, RAL, Estec, KNMI, IPSL, Paris, Santander, Lisboa, Berlin, Prague, CERN, Brno, Dubna, Moscow, Lyon, Grenoble, Milano, PD-LNL, Torino, BO-CNAF, Madrid, Marseille, Pisa, Barcelona, ESRIN, Roma, Valencia, Catania. Legend: HEP sites, ESA sites.

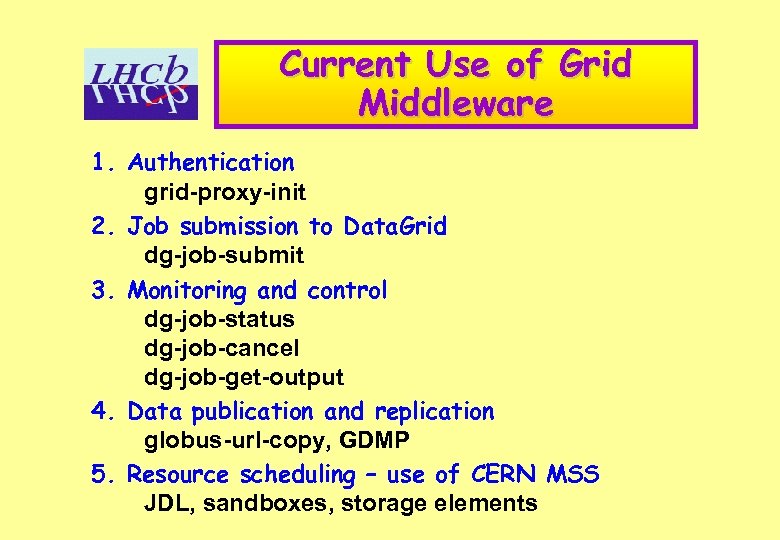

Current Use of Grid Middleware

1. Authentication: grid-proxy-init
2. Job submission to DataGrid: dg-job-submit
3. Monitoring and control: dg-job-status, dg-job-cancel, dg-job-get-output
4. Data publication and replication: globus-url-copy, GDMP
5. Resource scheduling (use of CERN MSS): JDL, sandboxes, storage elements
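
The order in which these middleware commands would be used for one job can be sketched as below. The code only assembles argument lists and runs nothing. The helper names are hypothetical, the `-o` option on `dg-job-submit` is copied from the job submission example in this deck, and passing the job identifier as a single positional argument to the status, cancel and output commands is an assumption.

```python
import shlex
from typing import List


def workflow_commands(jdl_path: str, log_dir: str, job_id: str) -> List[List[str]]:
    """Build, in order, the command lines for one job (hypothetical helper)."""
    return [
        ["grid-proxy-init"],                          # 1. authentication
        ["dg-job-submit", jdl_path, "-o", log_dir],   # 2. job submission
        ["dg-job-status", job_id],                    # 3. monitoring (assumed positional id)
        ["dg-job-get-output", job_id],                # 3. retrieve the output sandbox
    ]


def cancel_command(job_id: str) -> List[str]:
    """Command line to cancel a job (assumed positional id)."""
    return ["dg-job-cancel", job_id]


def copy_command(source_url: str, destination_url: str) -> List[str]:
    """4. Data transfer: globus-url-copy takes a source URL and a destination URL."""
    return ["globus-url-copy", source_url, destination_url]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    for argv in workflow_commands("job.jdl", "logs/", "JOB_ID"):
        print(shlex.join(argv))
```

Keeping each command as an argument list rather than a single string avoids shell quoting problems if the lists are later handed to `subprocess.run`.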

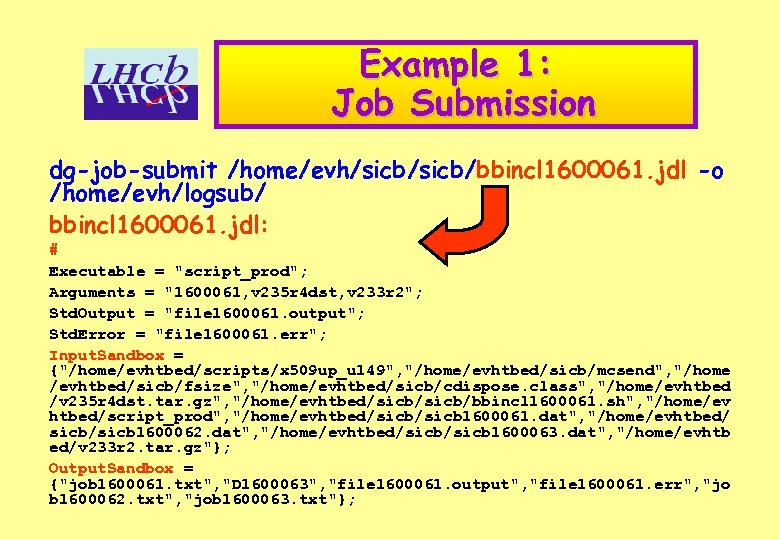

Example 1: Job Submission

    dg-job-submit /home/evh/sicb/bbincl1600061.jdl -o /home/evh/logsub/

bbincl1600061.jdl:

    #
    Executable = "script_prod";
    Arguments = "1600061,v235r4dst,v233r2";
    StdOutput = "file1600061.output";
    StdError = "file1600061.err";
    InputSandbox = {"/home/evhtbed/scripts/x509up_u149",
        "/home/evhtbed/sicb/mcsend",
        "/home/evhtbed/sicb/fsize",
        "/home/evhtbed/sicb/cdispose.class",
        "/home/evhtbed/v235r4dst.tar.gz",
        "/home/evhtbed/sicb/bbincl1600061.sh",
        "/home/evhtbed/script_prod",
        "/home/evhtbed/sicb1600061.dat",
        "/home/evhtbed/sicb/sicb1600062.dat",
        "/home/evhtbed/sicb1600063.dat",
        "/home/evhtbed/v233r2.tar.gz"};
    OutputSandbox = {"job1600061.txt", "D1600063",
        "file1600061.output", "file1600061.err",
        "job1600062.txt", "job1600063.txt"};
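
A JDL file like the one above is a flat list of `Name = value;` attributes, with sandboxes written as brace-delimited lists of quoted strings. The sketch below shows one way such a file might be generated per job number. `render_jdl` and `example_job` are hypothetical helpers, the attribute values are a shortened subset of the slide's example, and quote escaping inside values is not handled.

```python
from typing import Dict, List, Union

JdlValue = Union[str, List[str]]


def render_jdl(attributes: Dict[str, JdlValue]) -> str:
    """Render attributes as 'Name = "value";' lines (hypothetical helper).

    Lists become brace-delimited, comma-separated quoted strings.
    Values containing double quotes are not escaped in this sketch.
    """
    lines = []
    for name, value in attributes.items():
        if isinstance(value, list):
            body = "{" + ", ".join(f'"{item}"' for item in value) + "}"
        else:
            body = f'"{value}"'
        lines.append(f"{name} = {body};")
    return "\n".join(lines) + "\n"


def example_job(job_number: int) -> Dict[str, JdlValue]:
    """Attributes for one job, following the naming pattern on the slide."""
    return {
        "Executable": "script_prod",
        "StdOutput": f"file{job_number}.output",
        "StdError": f"file{job_number}.err",
        "OutputSandbox": [f"job{job_number}.txt", f"file{job_number}.output"],
    }


if __name__ == "__main__":
    print(render_jdl(example_job(1600061)), end="")
```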

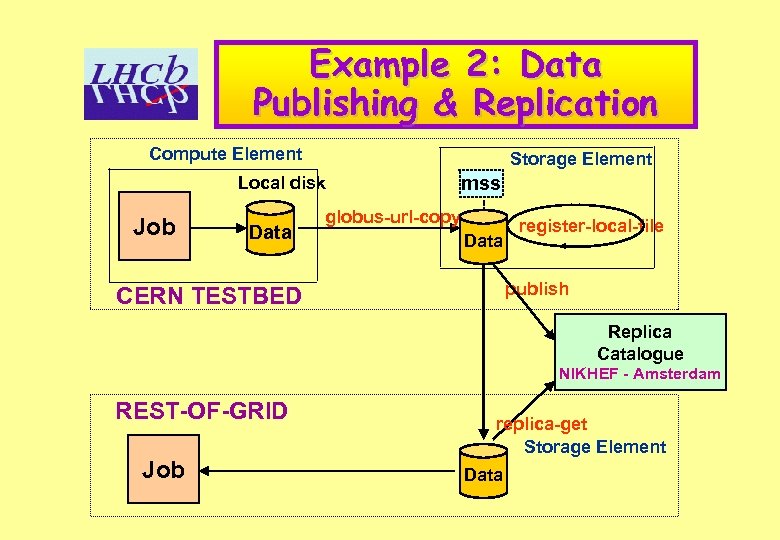

Example 2: Data Publishing & Replication

Diagram labels: Compute Element, Storage Element, local disk, Job, Data, MSS, Replica Catalogue. Sites: CERN TESTBED, NIKHEF - Amsterdam, REST-OF-GRID. Operations: globus-url-copy, register-local-file, publish, replica-get.
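
The idea behind the replica catalogue in this example is a mapping from a logical file name to the storage elements that hold a copy. The toy in-memory model below illustrates registration and replication only. It is not the GDMP or Replica Catalogue API, and the class, method and storage element names are all hypothetical.

```python
from typing import Dict, List, Set


class ReplicaCatalogue:
    """Toy model: logical file name -> set of storage elements holding a copy."""

    def __init__(self) -> None:
        self._replicas: Dict[str, Set[str]] = {}

    def register(self, logical_name: str, storage_element: str) -> None:
        """Record that a storage element holds a copy (cf. register-local-file)."""
        self._replicas.setdefault(logical_name, set()).add(storage_element)

    def locations(self, logical_name: str) -> List[str]:
        """Return the known locations, sorted for reproducible output."""
        return sorted(self._replicas.get(logical_name, set()))

    def replicate(self, logical_name: str, target: str) -> str:
        """Record a new copy at target and return the source chosen (cf. replica-get).

        The actual file transfer is outside this sketch; only the catalogue changes.
        """
        sources = self.locations(logical_name)
        if not sources:
            raise KeyError(f"no replica registered for {logical_name!r}")
        self.register(logical_name, target)
        return sources[0]


if __name__ == "__main__":
    catalogue = ReplicaCatalogue()
    catalogue.register("D1600063", "cern-se")       # hypothetical element name
    catalogue.replicate("D1600063", "nikhef-se")    # hypothetical element name
    print(catalogue.locations("D1600063"))
```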

The Future?

- The Gaudi framework will eventually be used for all LHCb software applications.
- GANGA will be used to interface to Grid services.
- A new LHCb Data Management System is being designed (Marcus Frank et al).

Have to consider how the MC Control System interacts with:

- Monitoring, configuration & production databases.
- Meta-data of production files.
- Output of running jobs.
- User Interface, etc.
