94a5fe8a5fd23a4c1a39af759f696c15.ppt

- Number of slides: 31

SLA Decomposition: Translating Service Level Objectives to System Level Thresholds

Yuan Chen, Subu Iyer, Xue Liu, Dejan Milojicic, Akhil Sahai

Enterprise Systems and Software Lab, Hewlett Packard Labs

Introduction
• Service Level Agreements (SLAs)
  − service behavior guarantees, e.g., performance, availability, reliability, security
  − penalties in case the guarantees are violated
• The ability to deliver according to pre-defined SLAs is a key to success
• SLA management
  − capturing the guarantees between a service provider and a customer
  − meeting these service level agreements by designing systems/services accordingly
  − monitoring for violations of agreed SLAs
  − enforcing SLAs in case of violations

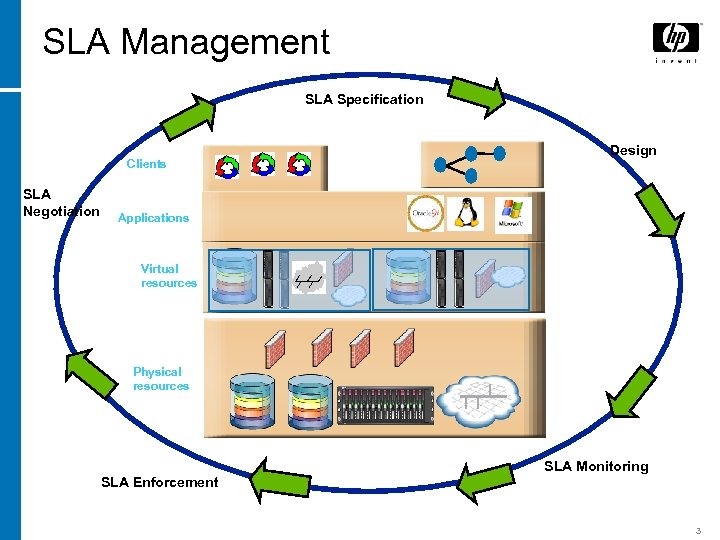

SLA Management
[Diagram labels: SLA Specification, SLA Negotiation, Design, SLA Monitoring, SLA Enforcement; Clients, Applications, Virtual resources, Physical resources]

Design
• Services/systems need to be designed to meet the agreed SLAs
  − to ensure that the system/service behaves satisfactorily before putting it in production
• Enterprise systems and services comprise multiple subcomponents
  − each subsystem or component potentially affects the overall behavior
  − any high level goal specified for a service in an SLA potentially relates to low level system components
• Traditional designs usually involve domain experts
  − manual and ad hoc
  − costly, time-consuming, and often inflexible

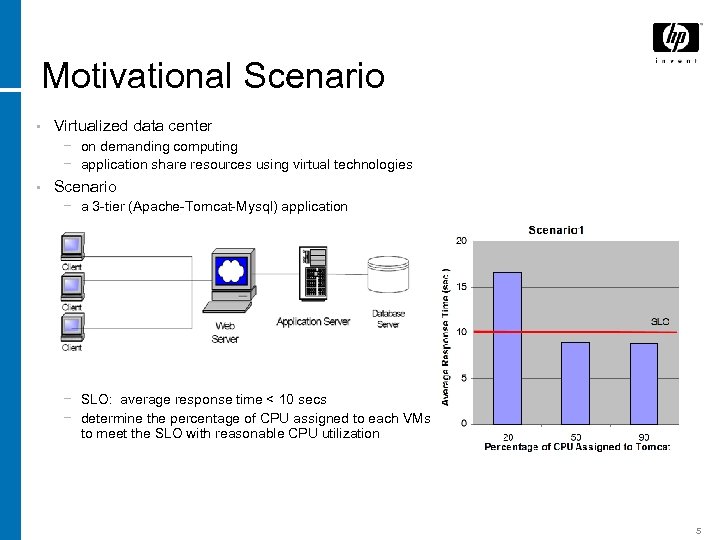

Motivational Scenario
• Virtualized data center
  − on-demand computing
  − applications share resources using virtualization technologies
• Scenario
  − a 3-tier (Apache-Tomcat-MySQL) application
  − SLO: average response time < 10 secs
  − determine the percentage of CPU assigned to each VM to meet the SLO with reasonable CPU utilization

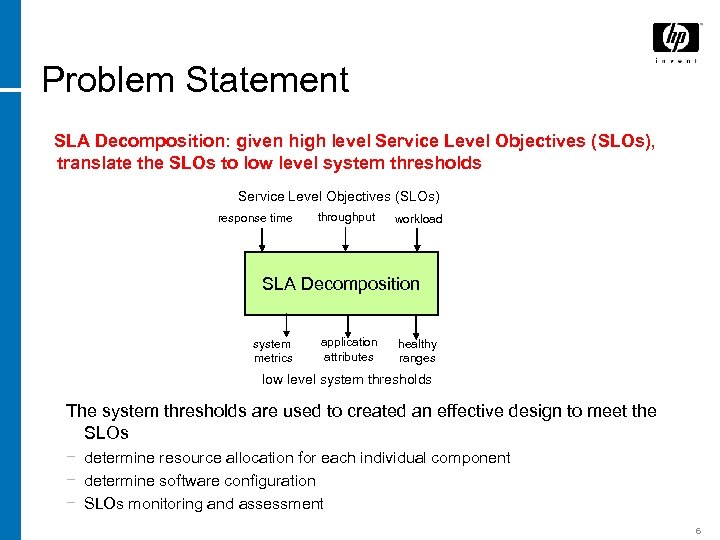

Problem Statement
SLA Decomposition: given high level Service Level Objectives (SLOs), translate the SLOs to low level system thresholds
[Diagram labels: Service Level Objectives (SLOs): response time, throughput, workload; SLA Decomposition; low level system thresholds: system metrics, application attributes, healthy ranges]
The system thresholds are used to create an effective design to meet the SLOs
  − determine resource allocation for each individual component
  − determine software configuration
  − SLO monitoring and assessment

Challenges
• The decomposition problem requires domain experts to be involved, which makes the process manual, complex, costly and time consuming
• Complex and dynamic behavior of multi-component applications
  − components interact with each other in a complex manner
  − multi-thread/multi-server, various configurations, cache & optimization
  − various workloads
  − different software architectures, e.g., 2- vs 3-tier, 3-tier Servlet vs 3-tier EJB
  − different kinds of software components and performance behaviors, e.g., Microsoft IIS, Apache, JBoss, WebLogic, WebSphere; Oracle, MySQL, Microsoft SQL Server
• Impact of virtualization and application sharing, e.g., Xen, VMware
  − granular allocation of resources
  − environments are dynamic
• Different kinds of SLOs, e.g., performance, availability, security, …

Goal
Develop an SLA decomposition approach for multi-component applications that translates high level SLOs to the state of each component involved in providing the service
  − Effective: ensures that the overall SLO goals are met reasonably well
  − Automated: eliminates the involvement of domain experts
  − Extensible: applicable to commonly used multi-component applications
  − Flexible: easily adapts to changes in SLOs, application topology, software configuration and infrastructure

Outline
• Problem Statement and Challenges
• Our Approach
  − Overview
  − Analytical Model for Multi-tier Applications
  − Component Profile Creation
  − SLA Decomposition
• Validation
• Related Work
• Summary and Future Work

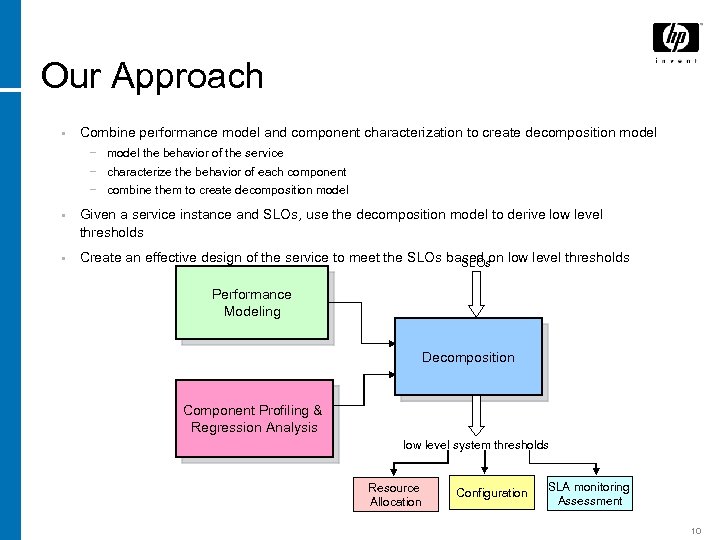

Our Approach
• Combine performance model and component characterization to create a decomposition model
  − model the behavior of the service
  − characterize the behavior of each component
  − combine them to create the decomposition model
• Given a service instance and SLOs, use the decomposition model to derive low level thresholds
• Create an effective design of the service to meet the SLOs based on the low level thresholds
[Diagram labels: SLOs; Performance Modeling; Decomposition; Component Profiling & Regression Analysis; low level system thresholds; Resource Allocation; Configuration; SLA Monitoring; Assessment]

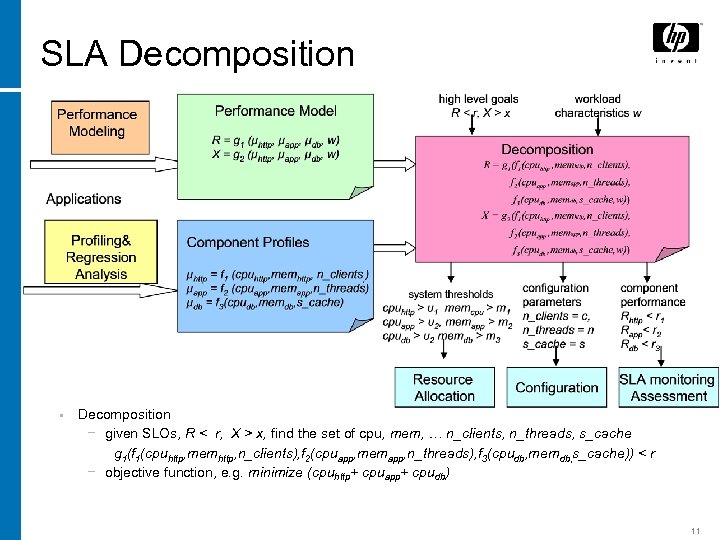

SLA Decomposition
• Decomposition
  − given SLOs $R < r$, $X > x$, find the set of cpu, mem, …, n_clients, n_threads, s_cache such that
    $g_1(f_1(cpu_{http}, mem_{http}, n\_clients),\ f_2(cpu_{app}, mem_{app}, n\_threads),\ f_3(cpu_{db}, mem_{db}, s\_cache)) < r$
  − objective function, e.g., minimize $(cpu_{http} + cpu_{app} + cpu_{db})$


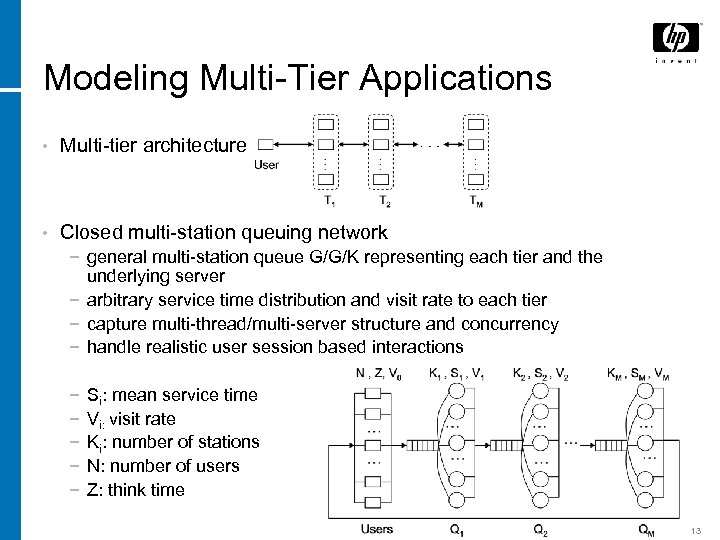

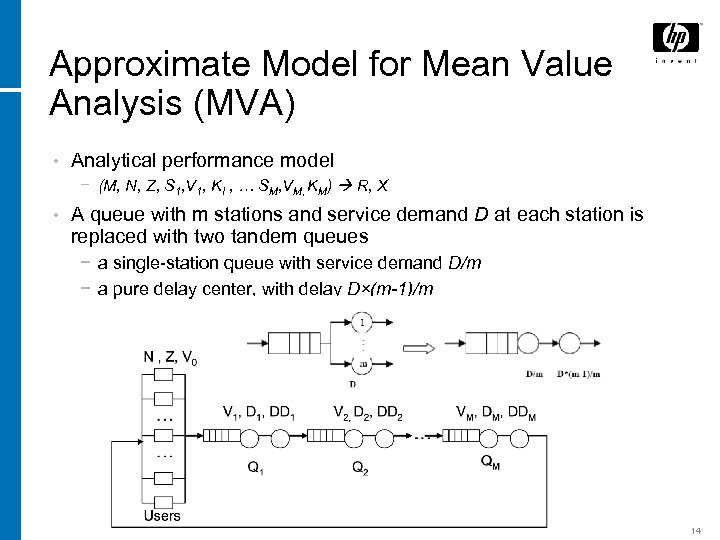

Modeling Multi-Tier Applications
• Multi-tier architecture
• Closed multi-station queuing network
  − general multi-station queue G/G/K representing each tier and the underlying server
  − arbitrary service time distribution and visit rate to each tier
  − capture multi-thread/multi-server structure and concurrency
  − handle realistic user session based interactions
  − $S_i$: mean service time
  − $V_i$: visit rate
  − $K_i$: number of stations
  − $N$: number of users
  − $Z$: think time

Approximate Model for Mean Value Analysis (MVA)
• Analytical performance model
  − $(M, N, Z, S_1, V_1, K_1, \ldots, S_M, V_M, K_M) \rightarrow (R, X)$
• A queue with $m$ stations and service demand $D$ at each station is replaced with two tandem queues
  − a single-station queue with service demand $D/m$
  − a pure delay center with delay $D \times (m-1)/m$
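The replacement rule on this slide can be sketched in a few lines of Python. The function name `split_multi_station` and the example values are hypothetical, chosen only for illustration; the two formulas are the ones stated above.

```python
def split_multi_station(demand, stations):
    """Split an m-station queue into a single-station queue plus a pure delay.

    Returns (queue_demand, delay) where queue_demand = D/m and
    delay = D*(m-1)/m, so the two parts always add up to D.
    """
    if stations < 1:
        raise ValueError("stations must be >= 1")
    queue_demand = demand / stations
    delay = demand * (stations - 1) / stations
    return queue_demand, delay


# Hypothetical example: a tier with demand 0.12 s served by 4 stations.
q, d = split_multi_station(demand=0.12, stations=4)
print(q, d)
```

With a single station the delay part is zero and the tier behaves as an ordinary queue, which is a quick sanity check on the rule.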

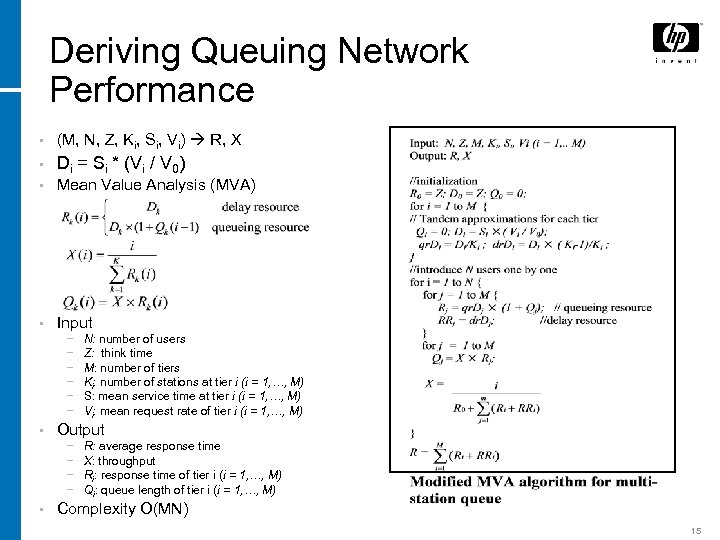

Deriving Queuing Network Performance
• $(M, N, Z, K_i, S_i, V_i) \rightarrow (R, X)$
• $D_i = S_i \times (V_i / V_0)$
• Mean Value Analysis (MVA)
• Input
  − $N$: number of users
  − $Z$: think time
  − $M$: number of tiers
  − $K_i$: number of stations at tier $i$ ($i = 1, \ldots, M$)
  − $S_i$: mean service time at tier $i$ ($i = 1, \ldots, M$)
  − $V_i$: mean request rate of tier $i$ ($i = 1, \ldots, M$)
• Output
  − $R$: average response time
  − $X$: throughput
  − $R_i$: response time of tier $i$ ($i = 1, \ldots, M$)
  − $Q_i$: queue length of tier $i$ ($i = 1, \ldots, M$)
• Complexity: $O(MN)$
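A minimal sketch of how these inputs could be turned into $R$ and $X$, assuming the textbook single-class MVA recursion combined with the queue-plus-delay split for multi-station tiers. The slides do not list the exact algorithm, so the function `mva`, its argument names, and the example numbers are illustrative assumptions. `visit_ratios` stands for $V_i / V_0$.

```python
def mva(n_users, think_time, service_times, visit_ratios, stations):
    """Approximate MVA for a closed network of multi-station tiers.

    Each tier's demand D_i = S_i * (V_i / V_0) is split into a queueing
    part D_i / K_i and a pure delay D_i * (K_i - 1) / K_i.
    Requires n_users >= 1. Runs in O(M * N) time.
    """
    demands = [s * v for s, v in zip(service_times, visit_ratios)]
    queue_d = [d / k for d, k in zip(demands, stations)]
    delay_d = [d * (k - 1) / k for d, k in zip(demands, stations)]
    queue_len = [0.0] * len(demands)  # jobs at each tier's queueing station
    resp, throughput, tier_resp = 0.0, 0.0, []
    for n in range(1, n_users + 1):
        queue_resp = [qd * (1.0 + q) for qd, q in zip(queue_d, queue_len)]
        tier_resp = [rq + dd for rq, dd in zip(queue_resp, delay_d)]
        resp = sum(tier_resp)
        throughput = n / (think_time + resp)  # Little's law on the whole loop
        queue_len = [throughput * rq for rq in queue_resp]
    return resp, throughput, tier_resp


# Hypothetical 3-tier example (values are not measurements from the deck).
r, x, tiers = mva(50, 3.5, [0.01, 0.02, 0.03], [1, 2, 1], [4, 8, 2])
print(r, x, tiers)
```

By construction the result satisfies $X \times (R + Z) = N$, which is a convenient consistency check when plugging in measured parameters.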

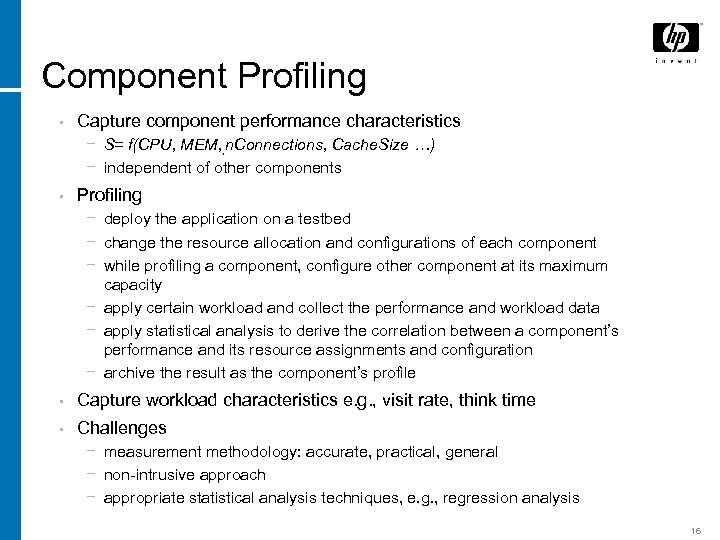

Component Profiling
• Capture component performance characteristics
  − $S = f(CPU, MEM, nConnections, CacheSize, \ldots)$
  − independent of other components
• Profiling
  − deploy the application on a testbed
  − change the resource allocation and configuration of each component
  − while profiling a component, configure the other components at their maximum capacity
  − apply a given workload and collect the performance and workload data
  − apply statistical analysis to derive the correlation between a component's performance and its resource assignments and configuration
  − archive the result as the component's profile
• Capture workload characteristics, e.g., visit rate, think time
• Challenges
  − measurement methodology: accurate, practical, general
  − non-intrusive approach
  − appropriate statistical analysis techniques, e.g., regression analysis
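The regression step could look roughly like the sketch below. The slides do not state the functional form of $f$, so the model $S = a / CPU + b$ is purely an assumption for illustration, and the data points are synthetic rather than measurements. The fit is ordinary least squares on the transformed variable $1/CPU$.

```python
def fit_inverse_cpu(cpu_shares, service_times):
    """Least-squares fit of S = a / cpu + b (an assumed, illustrative model)."""
    xs = [1.0 / c for c in cpu_shares]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(service_times) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, service_times))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


# Synthetic profile: CPU shares 10%..60% in 5% steps, generated from
# S = 0.004 / cpu + 0.010 so the fit can be checked against known values.
shares = [p / 100.0 for p in range(10, 61, 5)]
samples = [0.004 / c + 0.010 for c in shares]
a, b = fit_inverse_cpu(shares, samples)
print(a, b)
```

The fitted pair `(a, b)` plays the role of the archived component profile: given a candidate CPU share, it returns an estimated service time that a performance model can consume.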

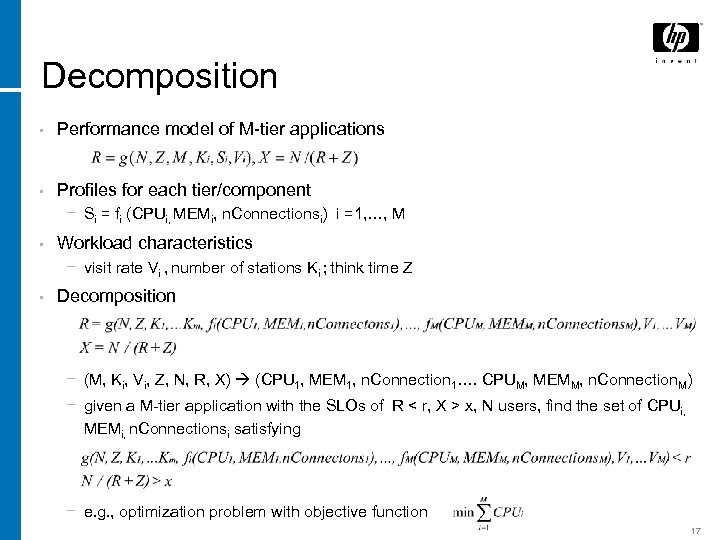

Decomposition
• Performance model of M-tier applications
• Profiles for each tier/component
  − $S_i = f_i(CPU_i, MEM_i, nConnections_i)$, $i = 1, \ldots, M$
• Workload characteristics
  − visit rate $V_i$, number of stations $K_i$, think time $Z$
• Decomposition
  − $(M, K_i, V_i, Z, N, R, X) \rightarrow (CPU_1, MEM_1, nConnections_1, \ldots, CPU_M, MEM_M, nConnections_M)$
  − given an M-tier application with SLOs $R < r$, $X > x$ and $N$ users, find the set of $CPU_i$, $MEM_i$, $nConnections_i$ satisfying the SLOs
  − e.g., formulated as an optimization problem with an objective function
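One way to read the decomposition step is as a search over candidate CPU shares, using the per-tier profiles to obtain service times and the queueing model to predict $R$ and $X$. The sketch below uses exhaustive grid search and minimizes total CPU. The search strategy, the profile form $S_i = a_i / CPU_i + b_i$, the helper names, and all numbers are assumptions for illustration, not the authors' solver. A compact MVA routine is repeated so the block runs on its own.

```python
from itertools import product


def mva(n_users, think_time, demands, stations):
    """Approximate MVA: returns (response_time, throughput)."""
    queue_d = [d / k for d, k in zip(demands, stations)]
    delay_d = [d * (k - 1) / k for d, k in zip(demands, stations)]
    queue_len = [0.0] * len(demands)
    resp = throughput = 0.0
    for n in range(1, n_users + 1):
        queue_resp = [qd * (1.0 + q) for qd, q in zip(queue_d, queue_len)]
        resp = sum(queue_resp) + sum(delay_d)
        throughput = n / (think_time + resp)
        queue_len = [throughput * rq for rq in queue_resp]
    return resp, throughput


def decompose(profiles, visits, stations, n_users, think_time,
              max_resp, min_tput, shares=range(10, 101, 10)):
    """Return (cpu_percent_per_tier, R, X) with minimal total CPU, or None."""
    best = None
    for combo in product(shares, repeat=len(profiles)):
        # Assumed profile form: S_i = a_i / cpu_i + b_i, demand = S_i * V_i.
        demands = [(a / (p / 100.0) + b) * v
                   for (a, b), p, v in zip(profiles, combo, visits)]
        resp, tput = mva(n_users, think_time, demands, stations)
        if resp < max_resp and tput > min_tput:
            if best is None or sum(combo) < sum(best[0]):
                best = (combo, resp, tput)
    return best


# Hypothetical profiles (a_i, b_i) for three tiers.
PROFILES = [(0.002, 0.001), (0.004, 0.002), (0.003, 0.001)]
best = decompose(PROFILES, visits=[1, 1, 1], stations=[1, 1, 1],
                 n_users=50, think_time=3.5, max_resp=0.1, min_tput=12.0)
print(best)
```

Grid search is only practical for a few tiers and coarse steps; for larger problems a constraint solver or a smarter optimizer would be a more natural fit.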

Outline
• Problem Statement and Challenges
• Our Approach
  − Overview
  − Analytical Model for Multi-tier Applications
  − Component Profile Creation
  − Decomposition
• Validation
  − Performance Model Validation
  − Component Profile Creation
  − SLA Decomposition Validation
• Related Work
• Summary and Future Work

Virtualized Data Center Testbed
• Setup
  − a cluster of HP ProLiant servers with Xen virtual machines (VMs)
  − each server node has two processors, 4 GB of RAM, and 1 G Ethernet interfaces
  − each runs Fedora 4, kernel 2.6.12, and Xen 3.0-testing
  − TPC-W and RUBiS
  − VMs hosting different tiers run on different server nodes
• Estimate component service time
  − TS1: when an idle thread is assigned or when a new thread is created
  − TS2: when a thread is returned to the thread pool or destroyed
  − T = TS2 - TS1, S = T - waiting time
  − fine grained; works well for both light load and overload conditions
• Estimate number of stations
  − Max clients for Apache, Max threads for Tomcat
  − MySQL: average number of running threads
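The service time estimate above reduces to simple arithmetic per request. The sketch below assumes each sample carries the two timestamps and an already measured waiting time. How the waiting time is obtained is not described on the slide, so that field, the function name, and the sample values are hypothetical.

```python
from statistics import mean


def mean_service_time(samples):
    """Average S = (TS2 - TS1) - waiting_time over (ts1, ts2, waiting) tuples."""
    return mean((ts2 - ts1) - waiting for ts1, ts2, waiting in samples)


# Hypothetical samples in seconds: (TS1, TS2, waiting time).
samples = [(0.0, 0.5, 0.25), (1.0, 1.75, 0.25)]
s = mean_service_time(samples)
print(s)
```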

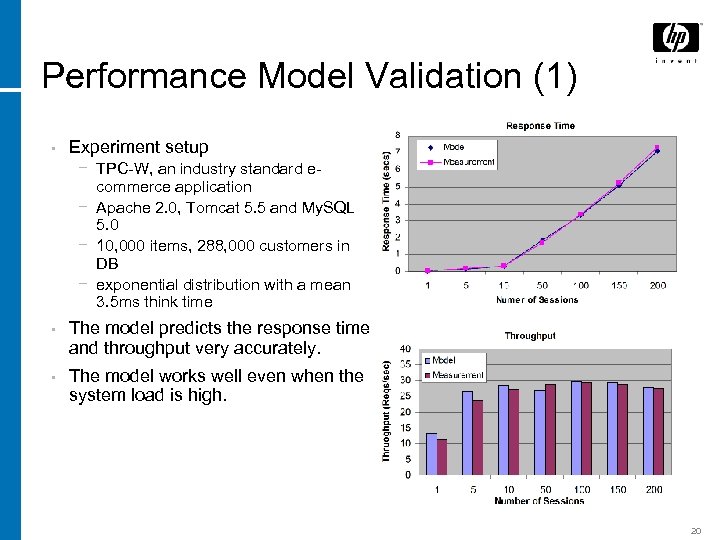

Performance Model Validation (1)
• Experiment setup
  − TPC-W, an industry standard e-commerce application
  − Apache 2.0, Tomcat 5.5 and MySQL 5.0
  − 10,000 items, 288,000 customers in DB
  − think time exponentially distributed with a mean of 3.5 ms
• The model predicts the response time and throughput very accurately.
• The model works well even when the system load is high.

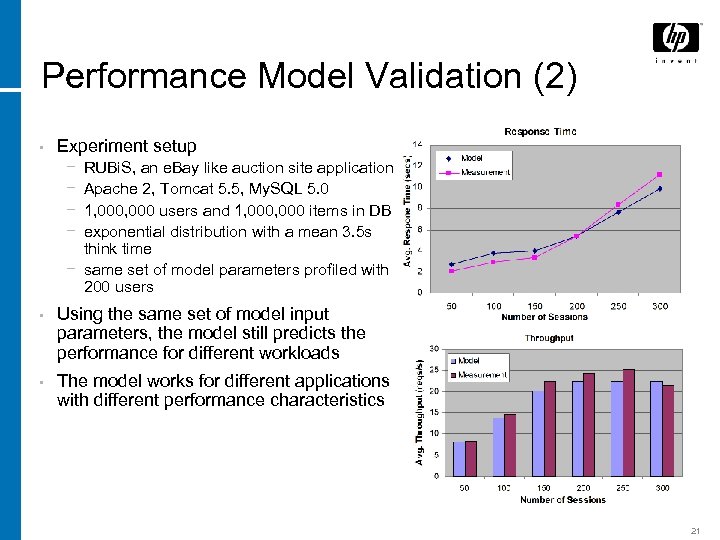

Performance Model Validation (2)
• Experiment setup
  − RUBiS, an eBay-like auction site application
  − Apache 2, Tomcat 5.5, MySQL 5.0
  − 1,000 users and 1,000 items in DB
  − think time exponentially distributed with a mean of 3.5 s
  − same set of model parameters profiled with 200 users
• Using the same set of model input parameters, the model still predicts the performance for different workloads
• The model works for different applications with different performance characteristics

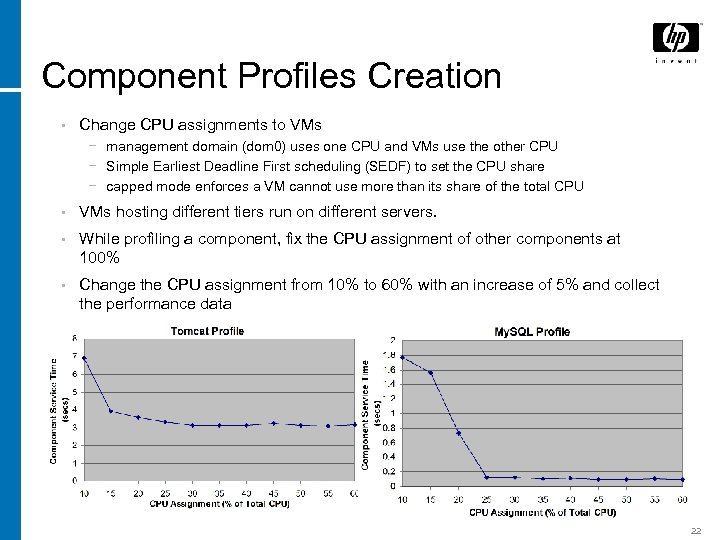

Component Profiles Creation
• Change CPU assignments to VMs
  − management domain (dom0) uses one CPU and VMs use the other CPU
  − Simple Earliest Deadline First (SEDF) scheduling to set the CPU share
  − capped mode ensures that a VM cannot use more than its share of the total CPU
• VMs hosting different tiers run on different servers.
• While profiling a component, fix the CPU assignment of the other components at 100%
• Change the CPU assignment from 10% to 60% in increments of 5% and collect the performance data
• Derive the component service time (workload independent) from the measurements
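The sweep described on this slide can be written down as a list of experiment configurations. This sketch only enumerates the CPU assignments and does not invoke any scheduler. The function name, the dictionary layout, and the tier names are illustrative assumptions.

```python
def profiling_plan(tiers, start=10, stop=60, step=5, fixed=100):
    """List CPU assignments (percent): sweep one tier, pin the others at `fixed`."""
    plan = []
    for target in tiers:
        for share in range(start, stop + 1, step):
            plan.append({t: (share if t == target else fixed) for t in tiers})
    return plan


plan = profiling_plan(["apache", "tomcat", "mysql"])
print(len(plan), plan[0])
```

Each tier gets 11 runs (10%, 15%, ..., 60%), so a 3-tier application needs 33 profiling runs per workload under this scheme.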

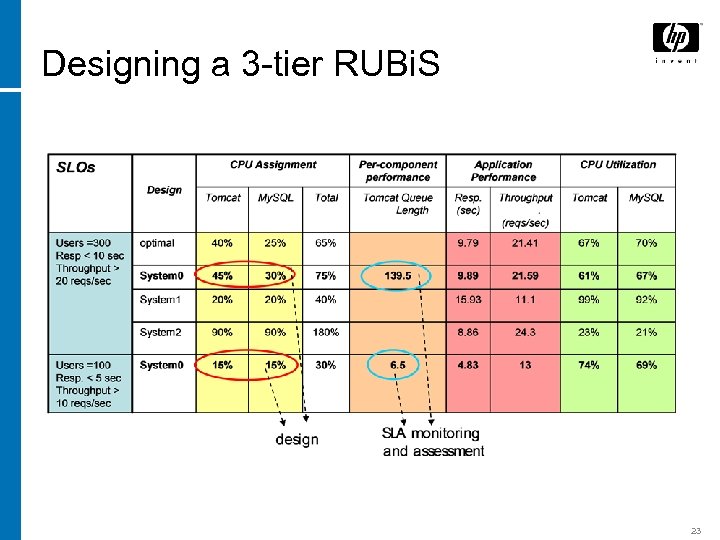

Designing a 3-tier RUBiS

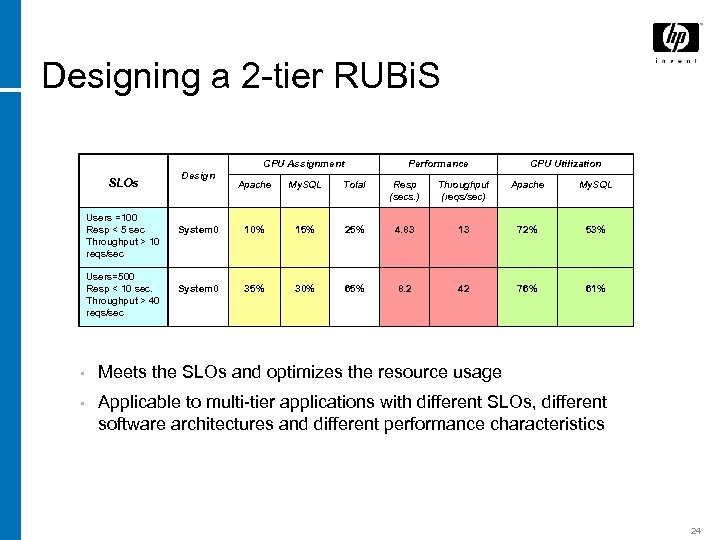

Designing a 2-tier RUBiS

| SLOs | Design | CPU assignment: Apache | CPU assignment: MySQL | CPU assignment: Total | Resp (secs) | Throughput (reqs/sec) | CPU utilization: Apache | CPU utilization: MySQL |
|---|---|---|---|---|---|---|---|---|
| Users = 100, Resp < 5 sec, Throughput > 10 reqs/sec | System 0 | 10% | 15% | 25% | 4.83 | 13 | 72% | 53% |
| Users = 500, Resp < 10 sec, Throughput > 40 reqs/sec | System 0 | 35% | 30% | 65% | 8.2 | 42 | 76% | 61% |

• Meets the SLOs and optimizes the resource usage
• Applicable to multi-tier applications with different SLOs, different software architectures and different performance characteristics
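As a small worked check, the two designs reported on this slide can be compared against their SLOs programmatically. The numbers are taken from the slide; the record layout and the helper `meets_slo` are hypothetical.

```python
# Values copied from the 2-tier RUBiS slide; field names are illustrative.
designs = [
    {"users": 100, "max_resp": 5.0, "min_tput": 10.0,
     "cpu": {"apache": 10, "mysql": 15}, "resp": 4.83, "tput": 13.0},
    {"users": 500, "max_resp": 10.0, "min_tput": 40.0,
     "cpu": {"apache": 35, "mysql": 30}, "resp": 8.2, "tput": 42.0},
]


def meets_slo(design):
    """True when measured response time and throughput satisfy the SLO."""
    return (design["resp"] < design["max_resp"]
            and design["tput"] > design["min_tput"])


for d in designs:
    total_cpu = sum(d["cpu"].values())
    print(d["users"], total_cpu, meets_slo(d))
```

Both designs pass, and the summed CPU shares match the Total column of the table (25% and 65%).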


Related Work
• Using queuing theory models for provisioning
  − C. Stewart and K. Shen, NSDI 2005
  − B. Urgaonkar et al., ICAC 2005
  − A. Zhang, P. Santos, D. Beyer, and H. Tang, HPL-2002-301
• Performance models for multi-tier applications
  − B. Urgaonkar et al., SIGMETRICS 2005
  − T. Kelly, WORLDS 2005
  − U. Herzog and J. Rolia, layered queueing model
• Classification-based decomposition
  − Y. Udupi, A. Sahai and S. Singhal, IM 2007
• ACTS: Automated Control and Tuning of Systems

Summary
• Proposed a systematic approach that combines performance modeling and component profiling to derive low level system thresholds from performance oriented SLOs
  − create an effective design (e.g., resource selection and allocation, software configuration) to ensure SLAs
  − SLA monitoring and assessment
• Presented an effective analytical performance model for multi-tier applications
  − accurately predicts the performance
  − works well for applications with different software architectures, workloads and performance characteristics
• Validated the proposed approach for multi-tier applications in a virtualized environment
  − designs the system to meet the given SLOs with reasonable resource usage
  − works for common multi-tier applications with different SLO goals and software architectures
  − easy to adapt to changes in applications and environments

Open Issues
• Extensions to other parameters such as memory and configuration parameters
  − "nice" regression function?
• Non-stationary workload
  − multi-class queueing network, layered queueing model
  − combine regression model and queueing model
• Profiling and measurement
  − tools and technologies from Mercury Interactive
  − non-intrusive approach: derive model parameters via regression model
• Long running transactions
  − e.g., HPC applications, complex composed services
• Non-performance based SLOs
  − e.g., availability goals
  − tradeoff analysis
• Complex and large scale systems
  − advanced constraint solving and optimization algorithms required

Future Work
• Extend profiling to other parameters
  − other system resources in addition to CPU, e.g., memory, I/O, cache
  − software configuration parameters
  − apply regression analysis on the profiling results
  − general and practical measurement methodology
• Apply the approach to realistic applications and workloads
  − non-stationary workload: multi-class queueing network model
  − non-intrusive profiling and measurement
  − enterprise applications, HPC applications, and composed services
  − non-performance SLOs, e.g., availability
  − non-traditional SLOs, e.g., represented as utility functions
• Use advanced constraint solving and optimization algorithms for complex and large scale problems
• Integrate SLA decomposition into SLA life-cycle management
  − integrate with tradeoff analysis, SLA monitoring and SLA assessment

Papers
• SLA Decomposition: Translating Service Level Objectives (SLOs) to Low-level System Thresholds. Yuan Chen, Subu Iyer, Xue Liu, Dejan Milojicic, and Akhil Sahai. To appear in Proceedings of the 4th IEEE International Conference on Autonomic Computing (ICAC 2007), June 2007.
• HP Technical Report: http://www.hpl.hp.com/techreports/2007/HPL-2007-17.html

Thank you!
