c960284000ac179ae58380d4cd7cf066.ppt

- Number of slides: 42

Situation Models and Embodied Language Processes

Franz Schmalhofer, University of Osnabrück, Germany

1) Memory and Situation Models
2) Computational Modeling of Inferences
3) What Memory and Language are for
4) Neural Correlates
5) Integration of Behavioral Experiments and Neural Correlates (ERP, fMRI) by Formal Models

Text comprehension
- Mary heard the ice-cream van coming.
- She remembered the pocket money.
- She rushed into the house.

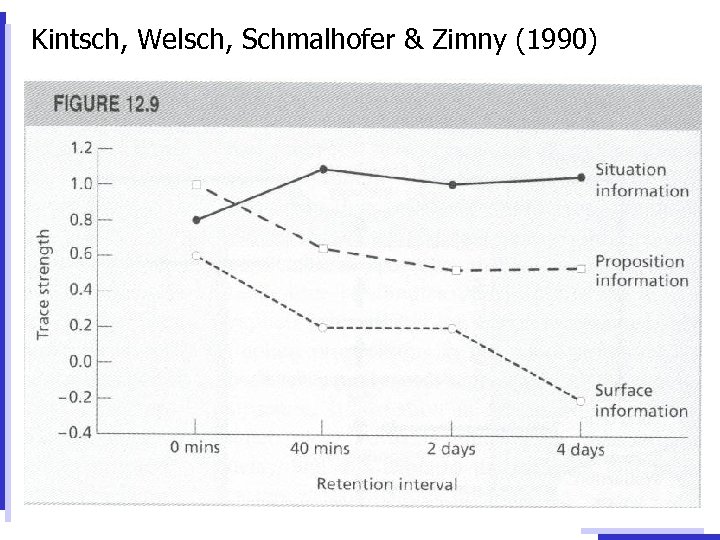

Kintsch, Welsch, Schmalhofer & Zimny (1990)

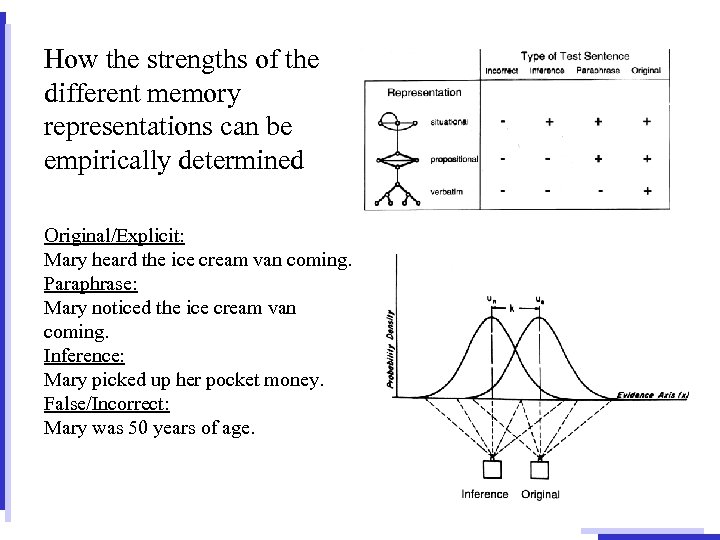

How the strengths of the different memory representations can be empirically determined:
- Original/explicit: Mary heard the ice-cream van coming.
- Paraphrase: Mary noticed the ice-cream van coming.
- Inference: Mary picked up her pocket money.
- False/incorrect: Mary was 50 years of age.
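One way to turn recognition responses to these four probe types into strength estimates is to compare the "yes, this was in the text" rates of adjacent probe types with the signal-detection measure d′. The sketch below assumes that comparison scheme (surface: original vs. paraphrase; propositional: paraphrase vs. inference; situation model: inference vs. false). The helper names and the response rates are hypothetical placeholders, not data from Kintsch et al. (1990).

```python
from statistics import NormalDist


def d_prime(p_yes_a: float, p_yes_b: float) -> float:
    """Discriminability of probe type A from probe type B: z(A) - z(B)."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(p_yes_a) - z(p_yes_b)


def representation_strengths(p_yes: dict) -> dict:
    """Strength of each representation level under the assumed comparison scheme."""
    return {
        # surface (verbatim) level: originals accepted more often than paraphrases
        "surface": d_prime(p_yes["original"], p_yes["paraphrase"]),
        # propositional level: paraphrases accepted more often than inferences
        "propositional": d_prime(p_yes["paraphrase"], p_yes["inference"]),
        # situation model: inferences accepted more often than false statements
        "situation": d_prime(p_yes["inference"], p_yes["false"]),
    }


# Hypothetical "yes" rates for the four probe types (illustration only)
rates = {"original": 0.85, "paraphrase": 0.70, "inference": 0.55, "false": 0.10}
strengths = representation_strengths(rates)
for level, value in strengths.items():
    print(f"{level}: {value:.2f}")
```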

Propositional Representations

Many cognitive science theories assume that knowledge and/or the meaning of sentences is represented by propositions, semantic nets, and similar structures (e.g., Anderson, 1976; Kintsch, 1974; Collins & Quillian, 1969; Schank, 1975; Schank & Abelson, 1978).
- Example: the propositional representation of the sentence "George loves Sally" is LOVES(GEORGE, SALLY).
- Compare: two-word sentences of children during language learning; protolanguage (Bickerton, 1981, 1995).
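A proposition can be stored as a predicate plus an ordered tuple of arguments, where an argument may itself be a proposition. The `Proposition` class below is our own illustration, not software from any of the cited theories; it prints the slide's example and a hypothetical, simplified encoding of a sentence from the ice-cream-van text.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Proposition:
    """A predicate applied to an ordered tuple of arguments (atoms or embedded propositions)."""
    predicate: str
    arguments: Tuple[Union[str, "Proposition"], ...]

    def __str__(self) -> str:
        # Render in the PREDICATE(ARGUMENT, ...) notation used on the slide
        return f"{self.predicate}({', '.join(str(arg) for arg in self.arguments)})"


# The slide's example
loves = Proposition("LOVES", ("GEORGE", "SALLY"))
print(loves)  # LOVES(GEORGE, SALLY)

# Hypothetical encoding with an embedded proposition as the second argument
heard = Proposition("HEAR", ("MARY", Proposition("COME", ("ICE-CREAM-VAN",))))
print(heard)  # HEAR(MARY, COME(ICE-CREAM-VAN))
```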

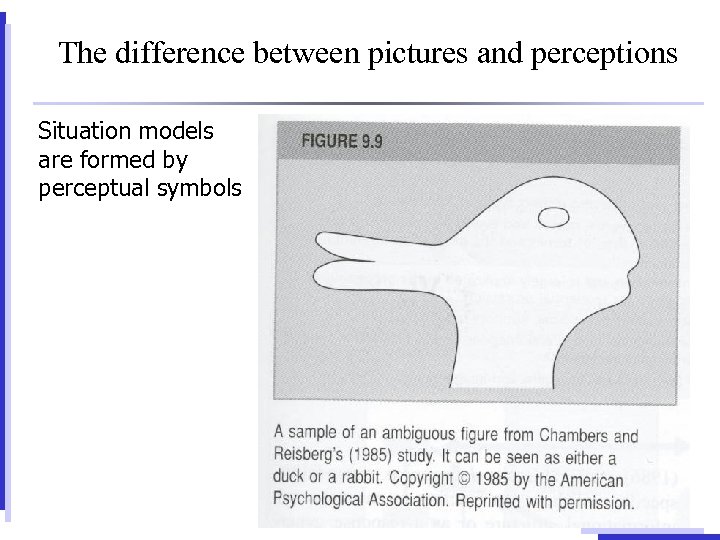

The difference between pictures and perceptions

Situation models are formed by perceptual symbols.

Comprehension includes a large range of topics in cognitive psychology:
- pattern recognition
- knowledge representation
- working memory
- recognition and recall
- learning
- problem solving and decision making

Kintsch, W. (1998). Comprehension: A Paradigm for Cognition.

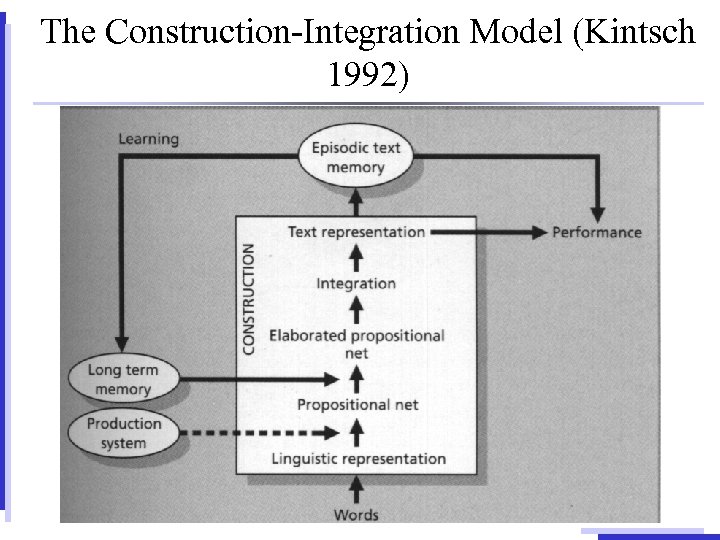

The Construction-Integration Model (Kintsch, 1992)

Comprehension is a two-phase process:
- Construction: constructing mental units and interconnecting them in a network
- Integration: integrating the constructed units via a context-sensitive process
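The integration phase is commonly described as spreading activation through the constructed network until the activation pattern settles. This sketch assumes a symmetric connection matrix, self-connections of 1, negative activations clipped to zero, and normalization by the maximum after every step (one of several possible normalization choices). The unit labels and link strengths are hypothetical, chosen only to show a well-connected inference staying active while a loosely connected associate fades.

```python
def integrate(nodes, links, max_iter=200, tolerance=1e-6):
    """Settle activation in a construction-integration style network.

    nodes: unit labels produced by the construction phase.
    links: dict mapping (label_a, label_b) to a symmetric connection strength.
    Returns the final activation of every unit (the most active unit has 1.0).
    """
    n = len(nodes)
    index = {label: i for i, label in enumerate(nodes)}
    # Symmetric connection matrix; self-connections of 1 let units keep their activation
    weights = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for (a, b), strength in links.items():
        i, j = index[a], index[b]
        weights[i][j] = weights[j][i] = strength

    activation = [1.0] * n  # all constructed units start equally active
    for _ in range(max_iter):
        # Spread activation, clip negative values to zero, normalize by the maximum
        spread = [max(0.0, sum(weights[i][j] * activation[j] for j in range(n)))
                  for i in range(n)]
        top = max(spread) or 1.0
        updated = [value / top for value in spread]
        settled = max(abs(new - old) for new, old in zip(updated, activation)) < tolerance
        activation = updated
        if settled:
            break
    return dict(zip(nodes, activation))


# Hypothetical network: three text propositions, one inference, one loose associate
units = ["HEAR(MARY, VAN)", "REMEMBER(MARY, MONEY)", "RUSH(MARY, HOUSE)",
         "GET(MARY, MONEY)", "DRIVE(DRIVER, VAN)"]
network_links = {
    ("HEAR(MARY, VAN)", "REMEMBER(MARY, MONEY)"): 0.5,
    ("REMEMBER(MARY, MONEY)", "RUSH(MARY, HOUSE)"): 0.5,
    ("REMEMBER(MARY, MONEY)", "GET(MARY, MONEY)"): 0.5,
    ("RUSH(MARY, HOUSE)", "GET(MARY, MONEY)"): 0.5,
    ("HEAR(MARY, VAN)", "DRIVE(DRIVER, VAN)"): 0.3,
}
result = integrate(units, network_links)
for unit, value in sorted(result.items(), key=lambda kv: -kv[1]):
    print(f"{value:.2f}  {unit}")
```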

The Construction-Integration Model (Kintsch 1992)

Text Comprehension

Up to the 1980s, language comprehension was mostly viewed as representing the meaning of the text itself (focus on propositional representations). Now language is viewed as a set of processing instructions (Zwaan, 2004) on how to construct a mental representation of the described situation, a mental model or situation model (Johnson-Laird, 1983; van Dijk & Kintsch, 1983).

Situation Models as Event Indices (Zwaan & Radvansky, 1998)

The event-indexing model of Zwaan & Radvansky (1998) suggests that, while reading stories, readers monitor five indices (aspects) of the evolving situation model:
- protagonist
- temporality
- causality
- spatiality
- intentionality

More generally: space, time, causes, agents, and intentions; in other words, everything that is relevant for planning actions and predicting future perceptions.
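One simple way to picture this monitoring is to tag each story event on the five indices and flag the indices that change from one event to the next. This is an assumption-laden illustration: the `Event` fields, the string labels, and the boolean treatment of causality are our own simplifications, not the model's formal definition.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """A story event tagged on the five indices (labels are illustrative simplifications)."""
    protagonist: str
    time: str
    place: str
    goal: str              # intentionality index
    causally_linked: bool  # causality index: does the event follow from the previous one?


def discontinuities(previous: Event, current: Event) -> List[str]:
    """Return the indices on which `current` breaks continuity with `previous`."""
    changed = [name for name in ("protagonist", "time", "place", "goal")
               if getattr(previous, name) != getattr(current, name)]
    if not current.causally_linked:
        changed.append("causality")
    return changed


# Hypothetical coding of the ice-cream-van story
heard = Event("Mary", "t1", "outside", "buy ice cream", causally_linked=True)
remembered = Event("Mary", "t1", "outside", "buy ice cream", causally_linked=True)
rushed = Event("Mary", "t1", "house", "buy ice cream", causally_linked=True)

print(discontinuities(heard, remembered))   # []
print(discontinuities(remembered, rushed))  # ['place']
```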

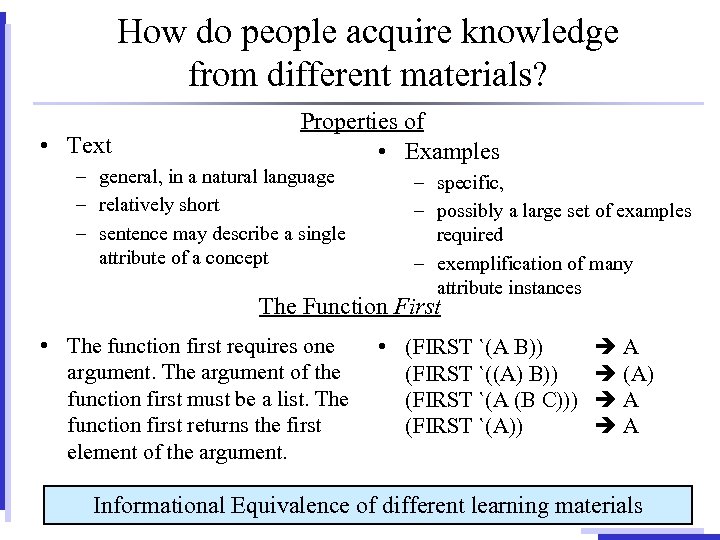

How do people acquire knowledge from different materials?

Properties of text:
- general, in a natural language
- relatively short
- a sentence may describe a single attribute of a concept

Properties of examples:
- specific
- possibly a large set of examples required
- exemplification of many attribute instances

The function FIRST (text):
- The function FIRST requires one argument. The argument of the function FIRST must be a list. The function FIRST returns the first element of the argument.

The function FIRST (examples):
- (FIRST '(A B)) → A
- (FIRST '((A) B)) → (A)
- (FIRST '(A (B C))) → A
- (FIRST '(A)) → A

Informational equivalence of different learning materials
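The informational equivalence of the two materials can be checked mechanically: a rule written from the text description should reproduce every input/output pair in the examples. In this sketch, Lisp lists are written as Python lists and symbols as strings; `first` is a hypothetical stand-in for the Lisp function, not its actual implementation.

```python
def first(argument):
    """Rule from the text: one argument, which must be a list; return its first element."""
    if not isinstance(argument, list):
        raise TypeError("the argument of FIRST must be a list")
    return argument[0]


# Examples from the slide, e.g. (FIRST '((A) B)) -> (A), with Lisp lists as Python lists
examples = [
    (["A", "B"], "A"),
    ([["A"], "B"], ["A"]),
    (["A", ["B", "C"]], "A"),
    (["A"], "A"),
]

# The materials carry the same information here if the rule reproduces every example
for argument, expected in examples:
    assert first(argument) == expected, (argument, expected)
print("text rule reproduces all examples")
```

Unlike Common Lisp, where (FIRST '()) returns NIL, this sketch raises IndexError on an empty list; neither learning material covers that case.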

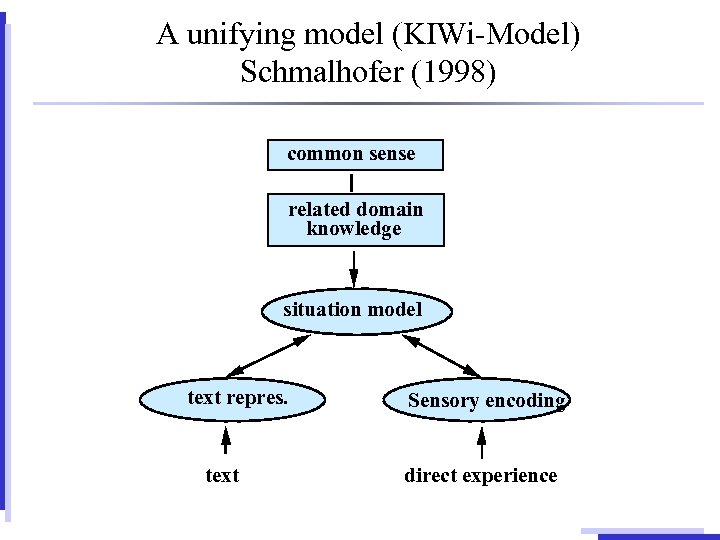

A unifying model (KIWi-Model; Schmalhofer, 1998)

[Figure components: common sense; related domain knowledge; situation model; text representation; text; sensory encoding; direct experience]

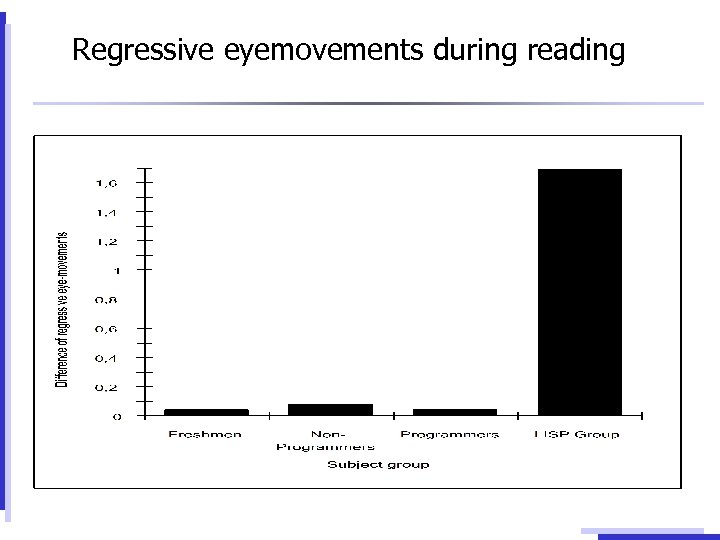

Regressive eye movements during reading

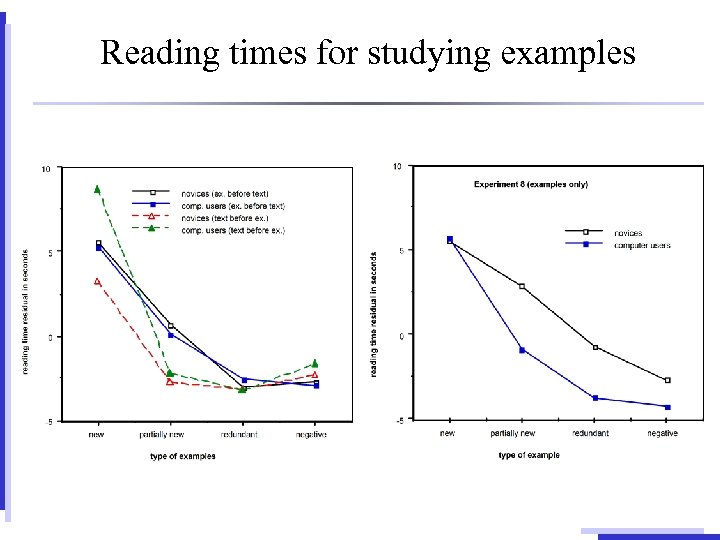

Reading times for studying examples

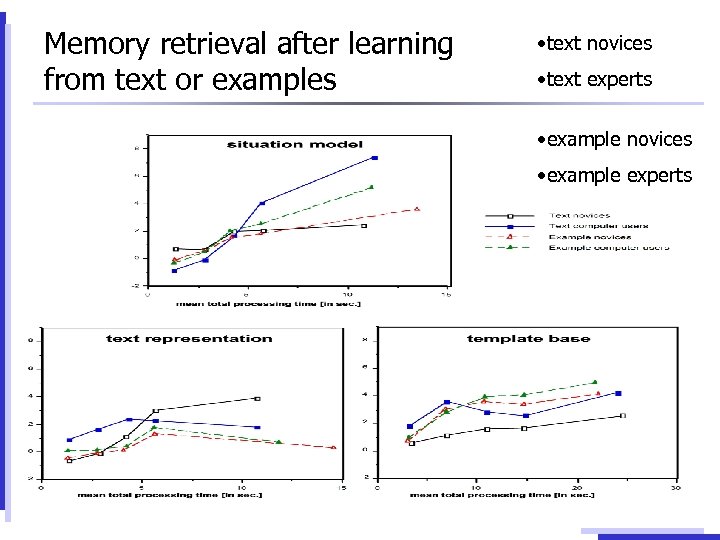

Memory retrieval after learning from text or examples
- text novices
- text experts
- example novices
- example experts

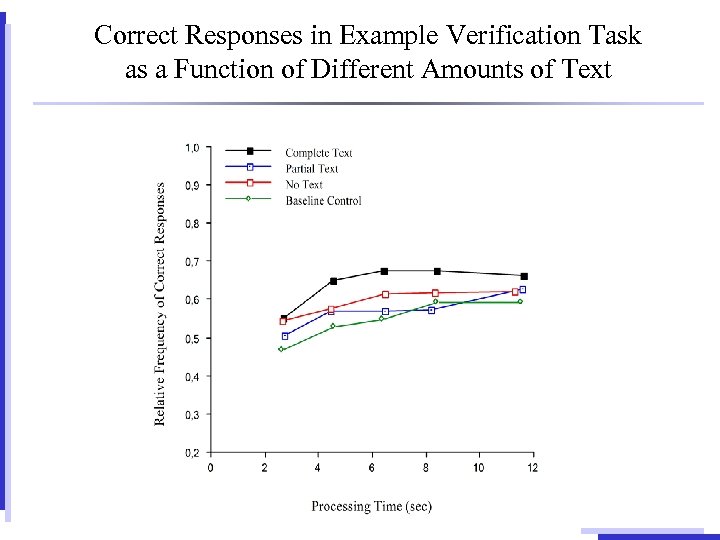

Correct Responses in Example Verification Task as a Function of Different Amounts of Text

Summary of the tested predictions of the KIWi-Model
- Knowledge acquisition (KA) is a goal-driven process consisting of construction and integration phases.
- Text and examples can be equated for informational content.
- The material-related representations are constructed by general heuristics; the situation model depends on domain knowledge.
- Experts construct and use deep knowledge (situation model); novices rely on material-related representations.
- KA integrates perception, language, and memory instead of being dominated by one source.

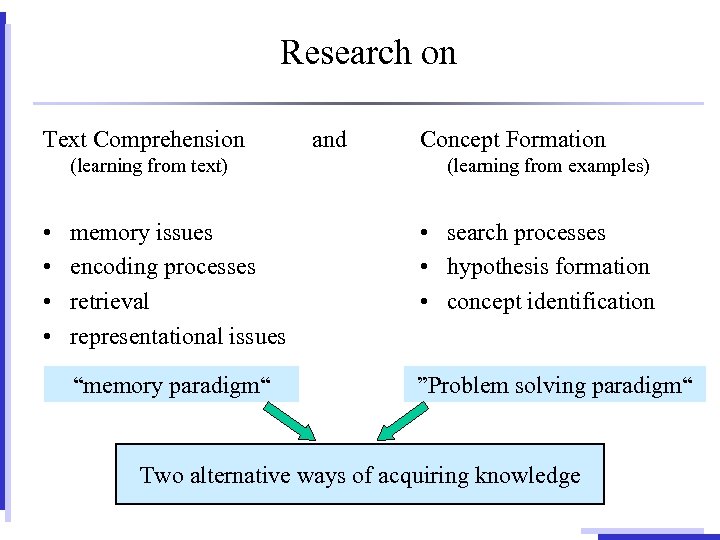

Two alternative ways of acquiring knowledge

Research on text comprehension (learning from text): the "memory paradigm"
- memory issues
- encoding processes
- retrieval
- representational issues

Research on concept formation (learning from examples): the "problem-solving paradigm"
- search processes
- hypothesis formation
- concept identification

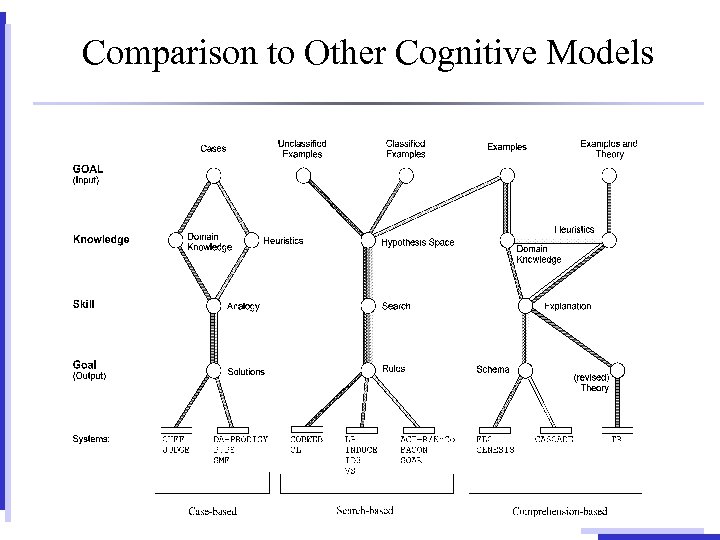

Comparison to Other Cognitive Models

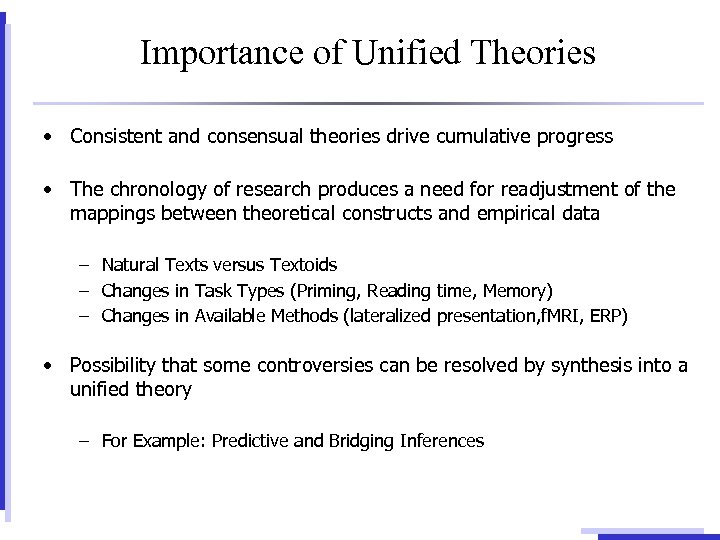

Importance of Unified Theories
- Consistent and consensual theories drive cumulative progress.
- The chronology of research creates a need to readjust the mappings between theoretical constructs and empirical data:
  - natural texts versus textoids
  - changes in task types (priming, reading time, memory)
  - changes in available methods (lateralized presentation, fMRI, ERP)
- Some controversies may be resolved by synthesis into a unified theory:
  - for example, predictive and bridging inferences

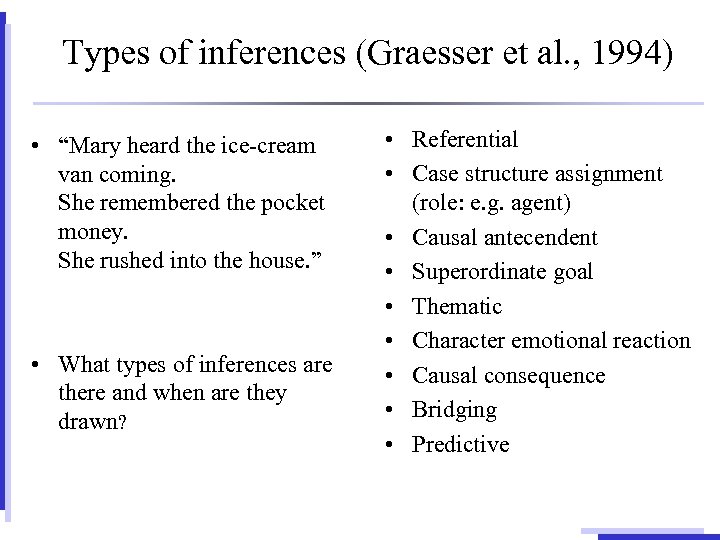

Types of inferences (Graesser et al., 1994)
- "Mary heard the ice-cream van coming. She remembered the pocket money. She rushed into the house."
- What types of inferences are there, and when are they drawn?
  - referential
  - case structure assignment (role, e.g., agent)
  - causal antecedent
  - superordinate goal
  - thematic
  - character emotional reaction
  - causal consequence
  - bridging
  - predictive

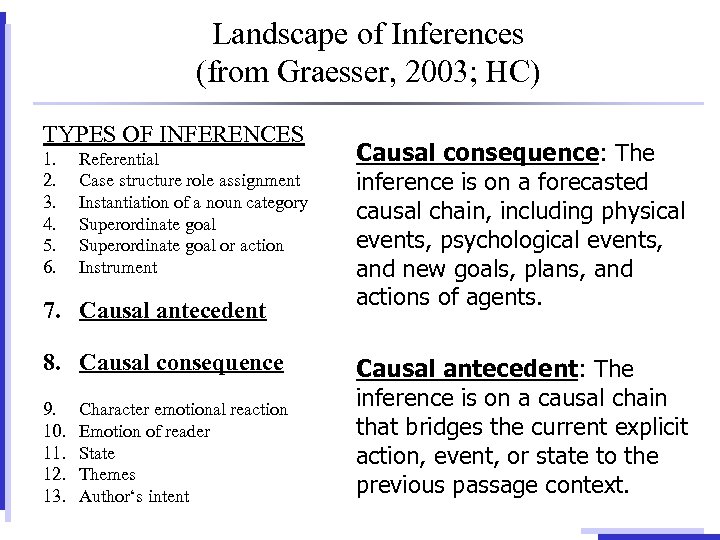

Landscape of Inferences (from Graesser, 2003; HC)

Types of inferences:
- referential
- case structure role assignment
- instantiation of a noun category
- superordinate goal or action
- instrument
- causal antecedent
- causal consequence
- character emotional reaction
- emotion of reader
- state
- themes
- author's intent

Causal consequence: the inference is on a forecasted causal chain, including physical events, psychological events, and new goals, plans, and actions of agents.

Causal antecedent: the inference is on a causal chain that bridges the current explicit action, event, or state to the previous passage context.

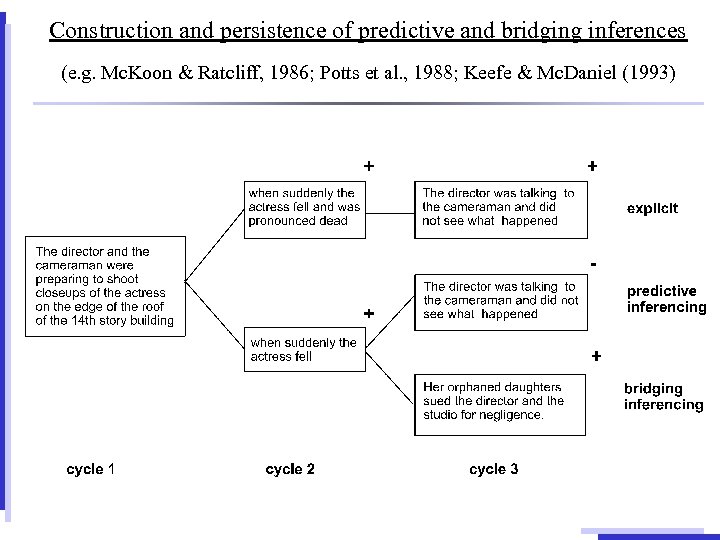

Construction and persistence of predictive and bridging inferences (e.g., McKoon & Ratcliff, 1986; Potts et al., 1988; Keefe & McDaniel, 1993)

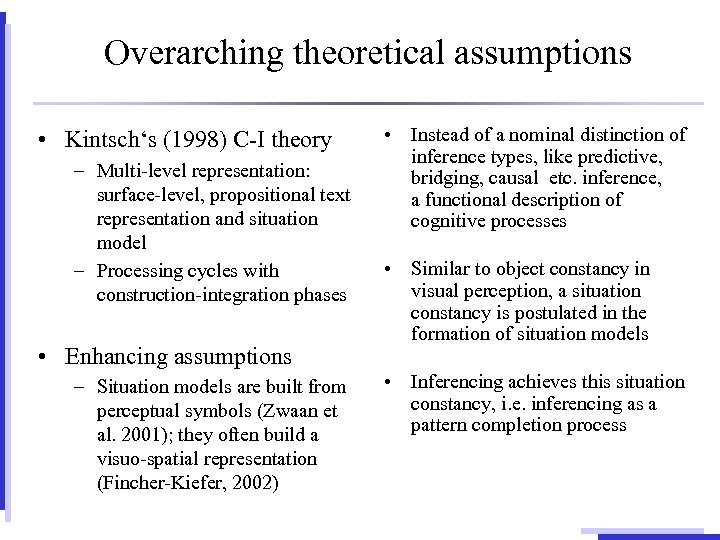

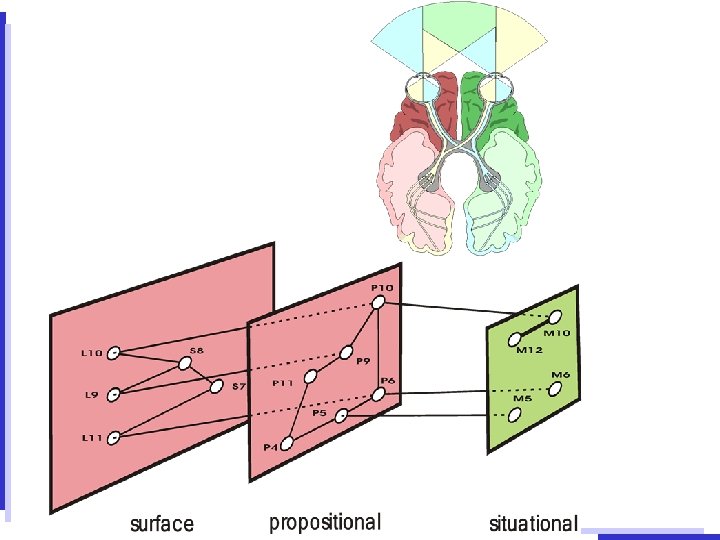

Overarching theoretical assumptions
- Kintsch's (1998) C-I theory
  - multi-level representation: surface level, propositional text representation, and situation model
  - processing cycles with construction and integration phases
- Enhancing assumptions
  - Situation models are built from perceptual symbols (Zwaan et al., 2001); they often take the form of a visuo-spatial representation (Fincher-Kiefer, 2002).
- Instead of a nominal distinction between inference types (predictive, bridging, causal, etc.), a functional description of the underlying cognitive processes is given.
- Similar to object constancy in visual perception, a situation constancy is postulated in the formation of situation models.
- Inferencing achieves this situation constancy, i.e., inferencing is a pattern-completion process.
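Pattern completion can be illustrated with a small Hopfield-style autoassociative network: stored situation patterns are encoded in Hebbian weights, and a cue with missing features settles into the stored pattern it matches. This is a swapped-in textbook technique used only to illustrate inferencing as pattern completion; it is not the author's model, and the ±1 feature vectors are hypothetical.

```python
def train(patterns):
    """Hebbian weights for a Hopfield-style autoassociator over +1/-1 feature vectors."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def complete(weights, cue, max_steps=10):
    """Fill in unknown features (coded 0) by synchronous updates until the state is stable."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = []
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            # Take the sign of the net input; a zero input keeps the current value
            new_state.append(1 if field > 0 else -1 if field < 0 else state[i])
        if new_state == state:
            break
        state = new_state
    return state


# Two hypothetical stored situation patterns (8 binary features each)
situation_a = [1, 1, 1, 1, -1, -1, -1, -1]
situation_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([situation_a, situation_b])

# Only the first five features of situation A are stated; the rest are unknown (0)
cue = [1, 1, 1, 1, -1, 0, 0, 0]
print(complete(weights, cue) == situation_a)
```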

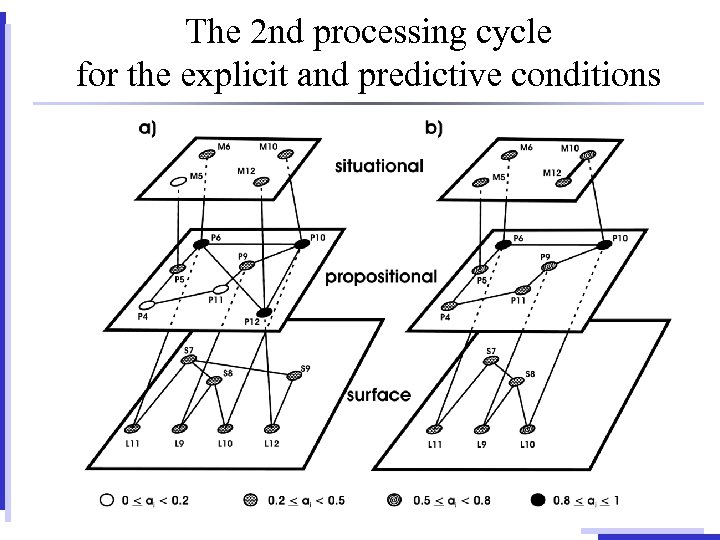

The 2nd processing cycle for the explicit and predictive conditions

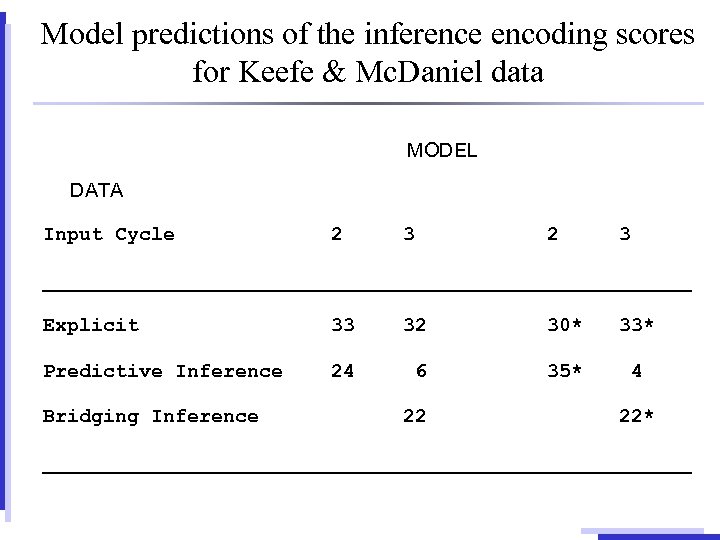

Model predictions of the inference encoding scores for the Keefe & McDaniel (1993) data (model: input cycles 2 and 3; data: observed values)
- Explicit: model 33, 32; data 30*, 33*
- Predictive inference: model 24, 6; data 35*, 4
- Bridging inference: model 22; data 22*

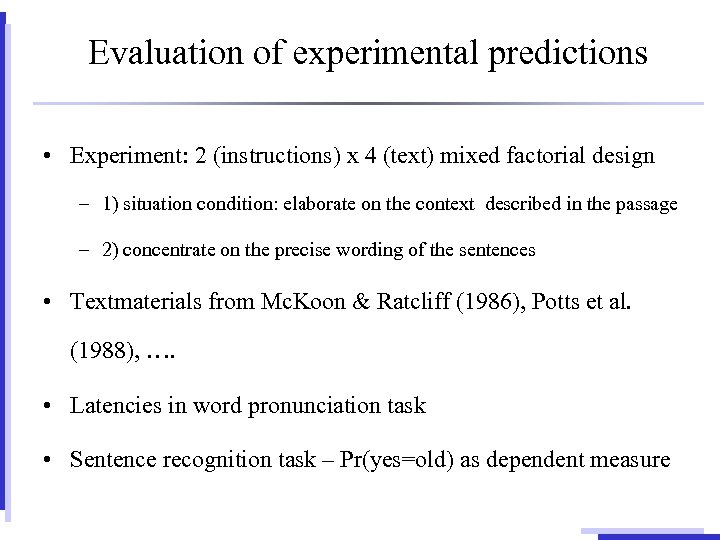

Evaluation of experimental predictions
- Experiment: 2 (instructions) × 4 (text type) mixed factorial design
  - 1) situation condition: elaborate on the context described in the passage
  - 2) word condition: concentrate on the precise wording of the sentences
- Text materials from McKoon & Ratcliff (1986), Potts et al. (1988), …
- Latencies in a word pronunciation task
- Sentence recognition task: Pr(yes = old) as the dependent measure

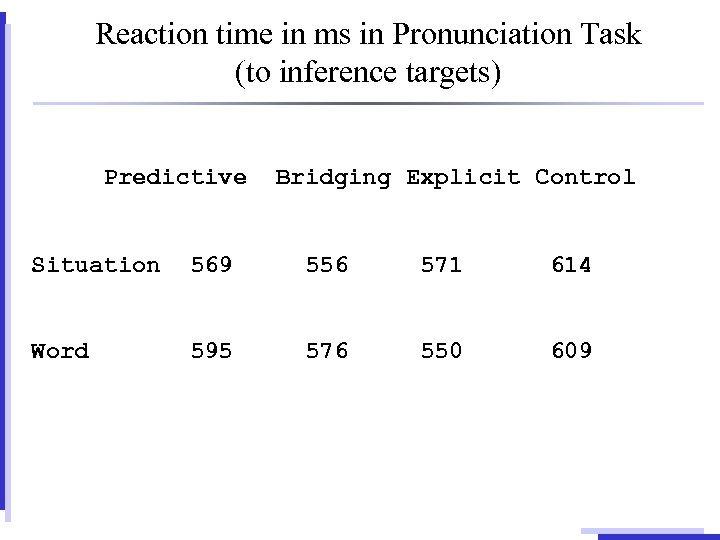

Reaction time (ms) in the pronunciation task (to inference targets)

| Instruction | Predictive | Bridging | Explicit | Control |
|---|---|---|---|---|
| Situation | 569 | 556 | 571 | 614 |
| Word | 595 | 576 | 550 | 609 |
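Reading the pronunciation latencies as facilitation relative to the control condition (control latency minus target latency; larger values mean faster naming) makes the instruction effect easier to see. This scoring is our assumption about how to summarize the table; the arithmetic uses only the latencies shown.

```python
# Naming latencies (ms) from the table: instruction -> text type -> mean reaction time
rt = {
    "situation": {"predictive": 569, "bridging": 556, "explicit": 571, "control": 614},
    "word": {"predictive": 595, "bridging": 576, "explicit": 550, "control": 609},
}


def facilitation(latencies):
    """Priming per text type: control latency minus target latency (positive = faster)."""
    baseline = latencies["control"]
    return {text_type: baseline - value
            for text_type, value in latencies.items() if text_type != "control"}


for instruction, latencies in rt.items():
    print(instruction, facilitation(latencies))
```

With these numbers, situation instructions yield 45 ms (predictive), 58 ms (bridging), and 43 ms (explicit) of facilitation, versus 14, 33, and 59 ms under word instructions; the same values appear as data in the table for text- and situation-focused reading (3rd processing cycle).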

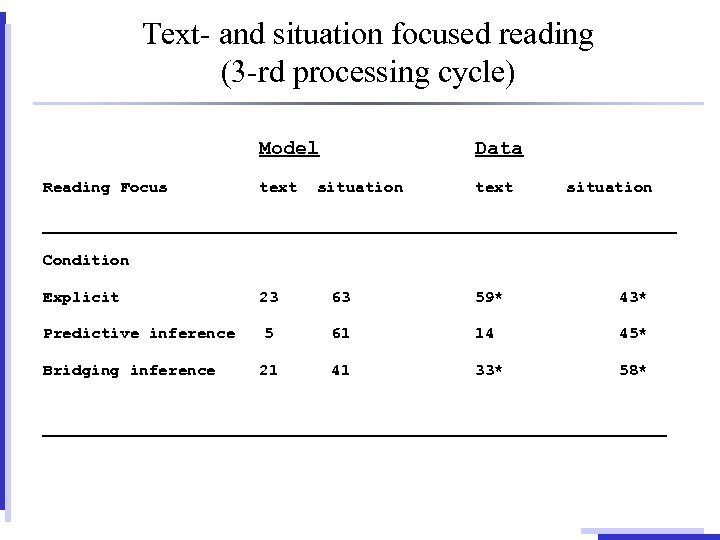

Text- and situation-focused reading (3rd processing cycle)

| Condition | Model: text focus | Model: situation focus | Data: text focus | Data: situation focus |
|---|---|---|---|---|
| Explicit | 23 | 63 | 59* | 43* |
| Predictive inference | 5 | 61 | 14 | 45* |
| Bridging inference | 41 | 21 | 33* | 58* |

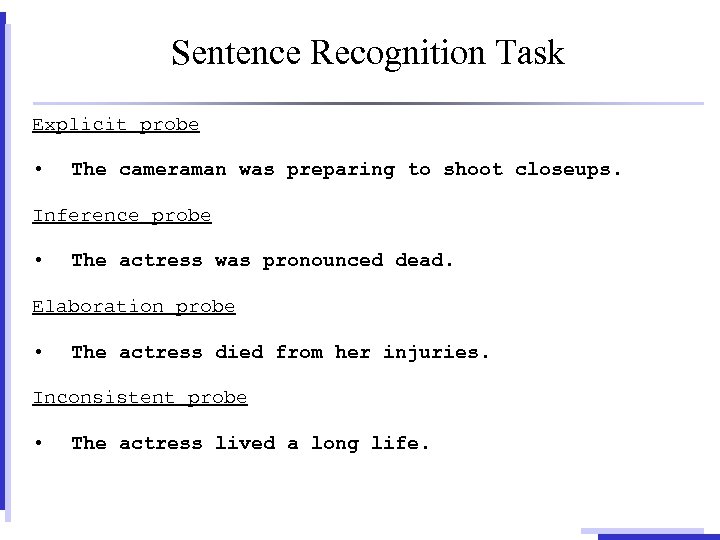

Sentence Recognition Task
- Explicit probe: The cameraman was preparing to shoot close-ups.
- Inference probe: The actress was pronounced dead.
- Elaboration probe: The actress died from her injuries.
- Inconsistent probe: The actress lived a long life.

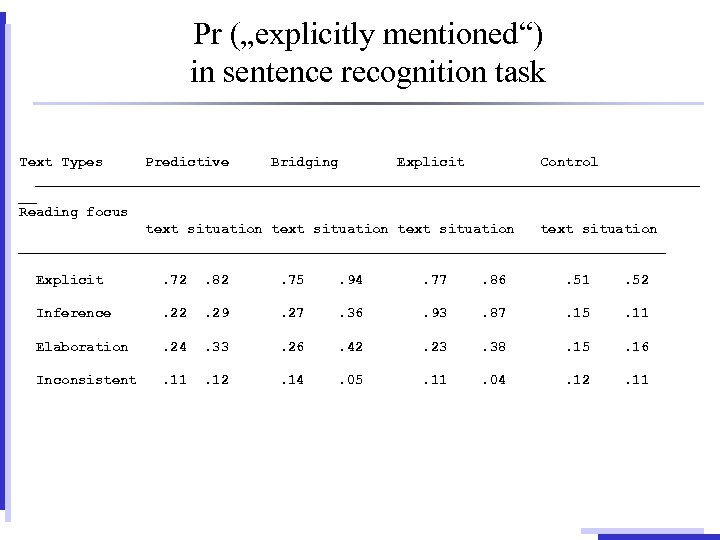

Pr("explicitly mentioned") in the sentence recognition task, by text type and reading focus

| Probe | Predictive, text | Predictive, situation | Bridging, text | Bridging, situation | Explicit, text | Explicit, situation | Control, text | Control, situation |
|---|---|---|---|---|---|---|---|---|
| Explicit | .72 | .82 | .75 | .94 | .77 | .86 | .51 | .52 |
| Inference | .22 | .29 | .27 | .36 | .93 | .87 | .15 | .11 |
| Elaboration | .24 | .33 | .26 | .42 | .23 | .38 | .15 | .16 |
| Inconsistent | .11 | .12 | .14 | .05 | .11 | .04 | .12 | .11 |

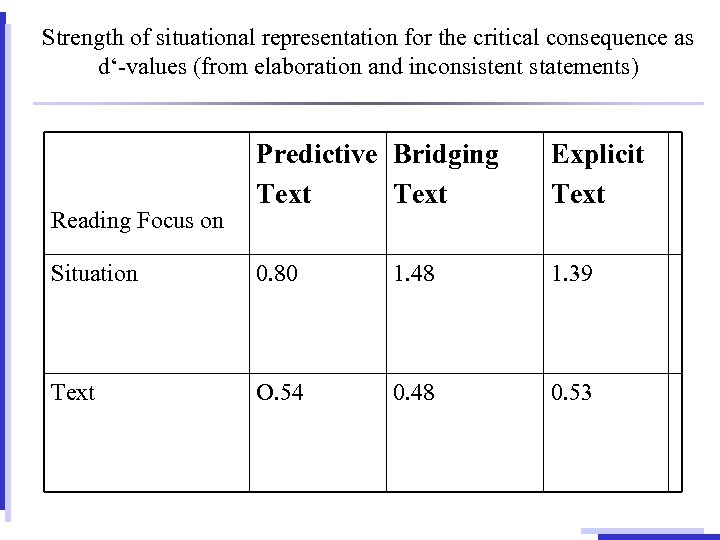

Strength of the situational representation for the critical consequence as d′ values (from elaboration and inconsistent statements)

| Reading focus on | Predictive | Bridging | Explicit text |
|---|---|---|---|
| Situation | 0.80 | 1.48 | 1.39 |
| Text | 0.54 | 0.48 | 0.53 |
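Assuming that "from elaboration and inconsistent statements" means treating "yes" responses to elaboration probes as hits and "yes" responses to inconsistent probes as false alarms, d′ = z(hit rate) − z(false-alarm rate) can be recomputed from the recognition table. Working from the rounded two-decimal proportions gives values close to, but not identical with, the reported ones (for example 0.52 instead of 0.54 for predictive texts under text focus), with the same pattern of higher d′ under situation focus.

```python
from statistics import NormalDist

# Pr("explicitly mentioned") from the recognition table: text type -> reading focus -> rate
elaboration = {
    "predictive": {"text": 0.24, "situation": 0.33},
    "bridging": {"text": 0.26, "situation": 0.42},
    "explicit": {"text": 0.23, "situation": 0.38},
}
inconsistent = {
    "predictive": {"text": 0.11, "situation": 0.12},
    "bridging": {"text": 0.14, "situation": 0.05},
    "explicit": {"text": 0.11, "situation": 0.04},
}


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """d' = z(hit rate) - z(false-alarm rate), z being the inverse standard normal CDF."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)


recomputed = {
    text_type: {focus: d_prime(elaboration[text_type][focus], inconsistent[text_type][focus])
                for focus in ("text", "situation")}
    for text_type in elaboration
}
for text_type, by_focus in recomputed.items():
    print(text_type, {focus: round(value, 2) for focus, value in by_focus.items()})
```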

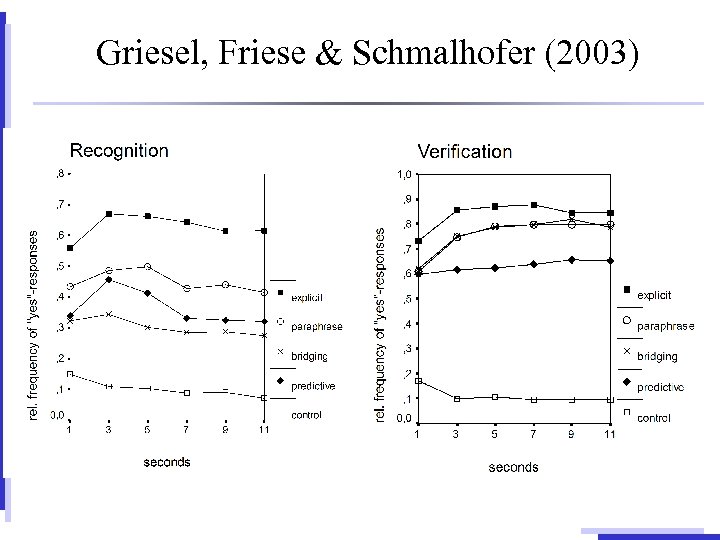

Griesel, Friese & Schmalhofer (2003)

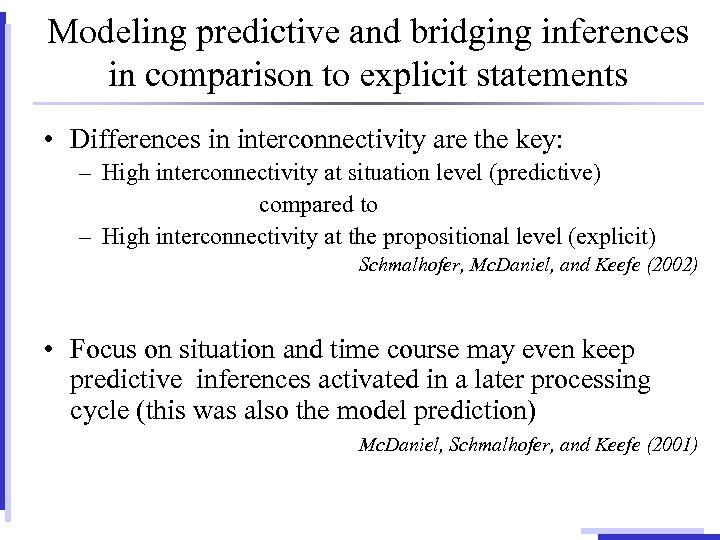

Modeling predictive and bridging inferences in comparison to explicit statements
- Differences in interconnectivity are the key (Schmalhofer, McDaniel, & Keefe, 2002):
  - high interconnectivity at the situation level (predictive), compared to
  - high interconnectivity at the propositional level (explicit)
- A focus on the situation, together with the time course, may even keep predictive inferences activated in a later processing cycle; this was also the model prediction (McDaniel, Schmalhofer, & Keefe, 2001).

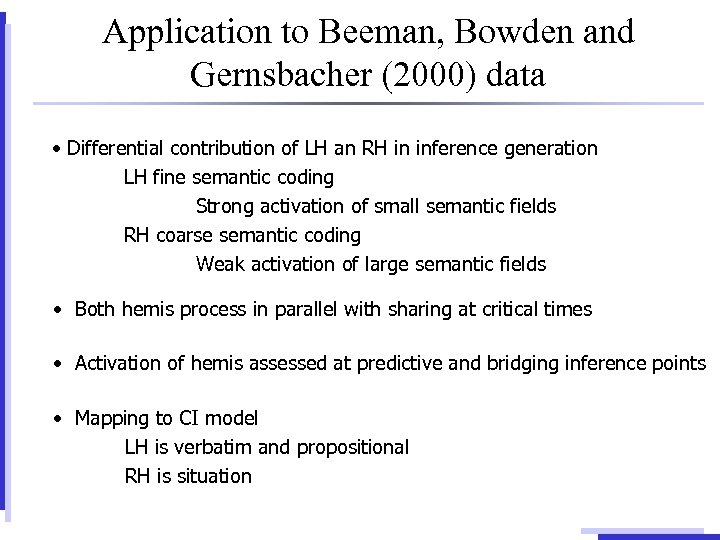

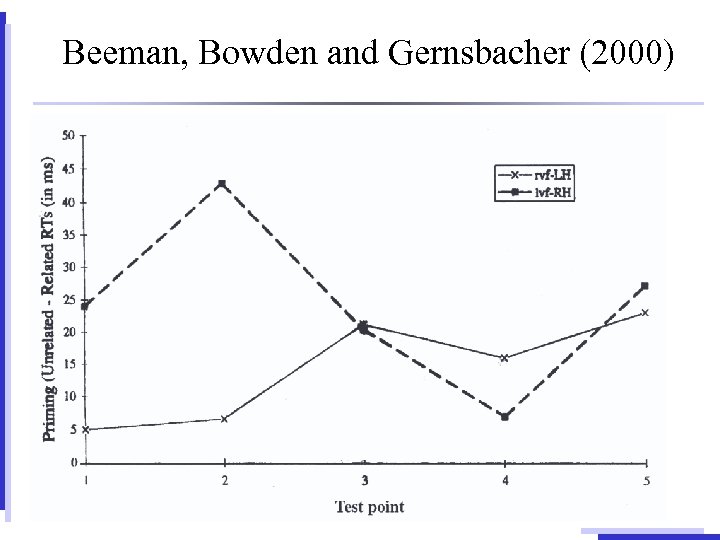

Application to the Beeman, Bowden, and Gernsbacher (2000) data
- Differential contribution of the left hemisphere (LH) and right hemisphere (RH) in inference generation:
  - LH: fine semantic coding; strong activation of small semantic fields
  - RH: coarse semantic coding; weak activation of large semantic fields
- Both hemispheres process in parallel, sharing information at critical times.
- The activation of each hemisphere was assessed at predictive and bridging inference points.
- Mapping to the CI model: LH corresponds to the verbatim and propositional levels, RH to the situation model.
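The contrast between fine and coarse semantic coding can be pictured as two activation profiles over semantic distance from an input word. The Gaussian profile, the abstract distance scale, and the peak and width parameters below are hypothetical choices for illustration only, not values from Beeman et al. (2000); they merely reproduce the qualitative claim that LH activation is strong but narrow while RH activation is weak but broad.

```python
import math


def activation(distance, peak, width):
    """Activation of a concept at a given semantic distance from the input (Gaussian profile)."""
    return peak * math.exp(-distance ** 2 / (2 * width ** 2))


# Hypothetical parameters: LH = strong, narrow field; RH = weak, broad field
LH = {"peak": 1.0, "width": 1.0}
RH = {"peak": 0.4, "width": 3.0}

for d in (0, 1, 2, 4):
    print(f"distance {d}: LH {activation(d, **LH):.2f}  RH {activation(d, **RH):.2f}")
```

With these settings, closely related concepts are more active on the LH side, whereas distantly related concepts, such as a remote consequence relevant to a predictive inference, remain weakly active only on the RH side.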

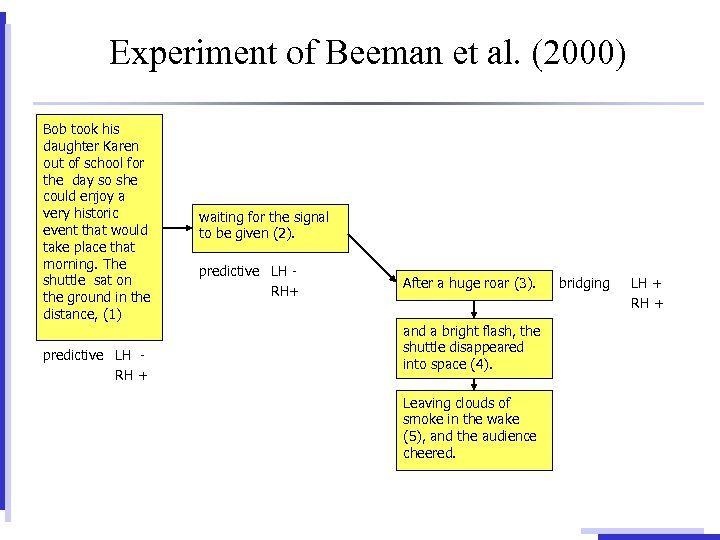

Experiment of Beeman et al. (2000)

Bob took his daughter Karen out of school for the day so she could enjoy a very historic event that would take place that morning. The shuttle sat on the ground in the distance (1), waiting for the signal to be given (2). After a huge roar (3) and a bright flash, the shuttle disappeared into space (4), leaving clouds of smoke in the wake (5), and the audience cheered.
- Test point (1), predictive: RH +
- Test point (2), predictive: RH +
- Bridging: LH +, RH +

Beeman, Bowden and Gernsbacher (2000)

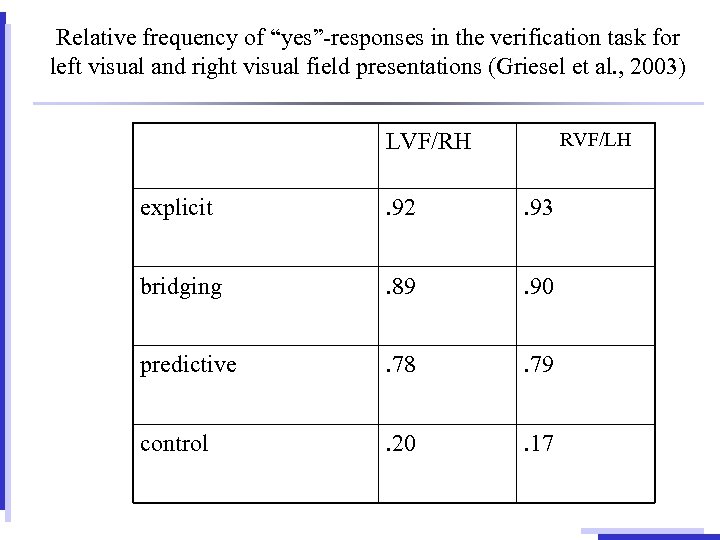

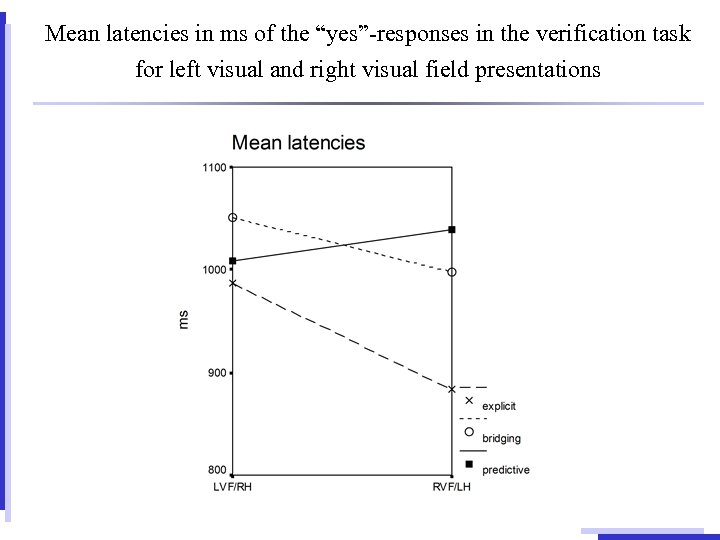

Relative frequency of "yes" responses in the verification task for left and right visual field presentations (Griesel et al., 2003)

| Condition | RVF/LH | LVF/RH |
|---|---|---|
| Explicit | .92 | .93 |
| Bridging | .89 | .90 |
| Predictive | .78 | .79 |
| Control | .20 | .17 |

Mean latencies in ms of the “yes”-responses in the verification task for left visual and right visual field presentations

Summary
- Experimentation for differentiation; theorizing for integration.
- Theories of text comprehension can be instantiated to simulate data from multiple experiments in detail:
  - systematic relation of dependent and independent variables to the different conceptual entities in models
- Integration of existing data and theories is exciting, especially in view of ERP and new brain-imaging data related to inferencing.
