91129f7718115916a8be0711b76c2915.ppt

- Number of slides: 59

Simulation-based Testing of Emerging Defense Information Systems

Bernard P. Zeigler
Professor, Electrical and Computer Engineering, University of Arizona
Co-Director, Arizona Center for Integrative Modeling and Simulation (ACIMS)
and Joint Interoperability Test Command (JITC), Fort Huachuca, Arizona
www.acims.arizona.edu

Overview
• Modeling and simulation methodology is attaining core-technology status for standards conformance testing of information-technology-based defense systems
• We discuss the development of automated test case generators for testing new defense systems for conformance to military tactical data link standards
• The DEVS discrete event simulation formalism has proved capable of capturing the information-processing complexities underlying the MIL-STD-6016C standard for message exchange and collaboration among diverse radar sensors
• DEVS is being used in distributed simulation to evaluate the performance of an emerging approach to the formation of single integrated air pictures (SIAP)

Arizona Center for Integrative Modeling and Simulation (ACIMS)
• Mandated by the Arizona Board of Regents in 2001
• Mission: advance modeling and simulation through
 – Research
 – Education
 – Technology transfer
• Spans the University of Arizona and Arizona State University
• Maintains links with graduates through research and technology collaborations

Unique ACIMS/NGIT Relationship
• A long-term contractual relationship between Northrop Grumman IT (NGIT) and ACIMS
• Employs faculty, graduate and undergraduate students, and others
• Performs M&S tasks for/at Fort Huachuca
• Challenges:
 – Rationalize different ways of doing business
 – Deliver quality services on time and on budget
• Benefits:
 – NGIT gains access to well-trained, creative talent
 – Students gain experience with real-world technical requirements and work environments
 – ACIMS technology is transferred

Genesis
• The Joint Interoperability Test Command (JITC) has the mission of standards compliance and interoperability certification
• Traditional test methods require modernization to address
 – increasing complexity,
 – rapid change and development, and
 – agility of modern C4ISR systems
• Simulation-based acquisition and net-centricity
 – pose challenges to the JITC and the Department of Defense
 – require redefining the scope, thoroughness and process of conformance testing

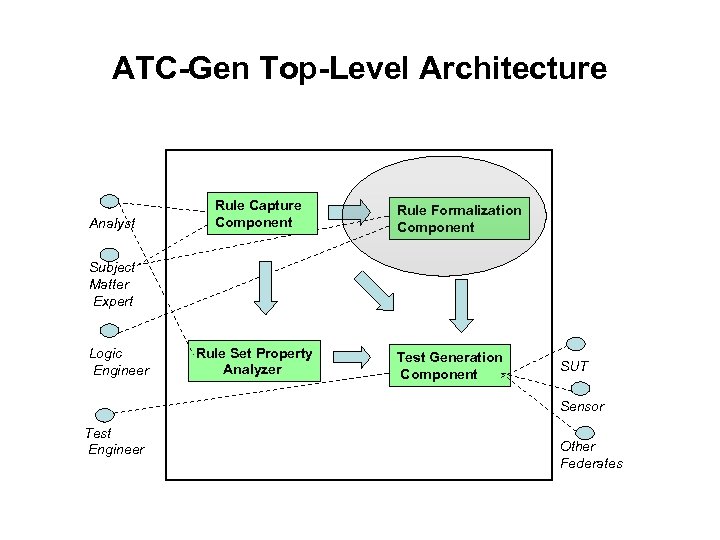

Response: ATC-Gen
• JITC has taken the initiative to integrate modeling and simulation into the automation of the testing process
• JITC funded the development of the Automated Test Case Generator (ATC-Gen), led by ACIMS
• Two years of R&D proved the feasibility and set the general direction
• The requirements have evolved to a practical implementation level, with help from conventional testing personnel
• ATC-Gen was deployed at JITC in 2005 for testing SIAP systems and evaluated for its advantages over conventional methods

The ATC-Gen Development Team (NGIT & ACIMS) was selected as the winner in the Cross-Function category of the 2004/2005 M&S Awards presented by the National Training Systems Association (NTSA).

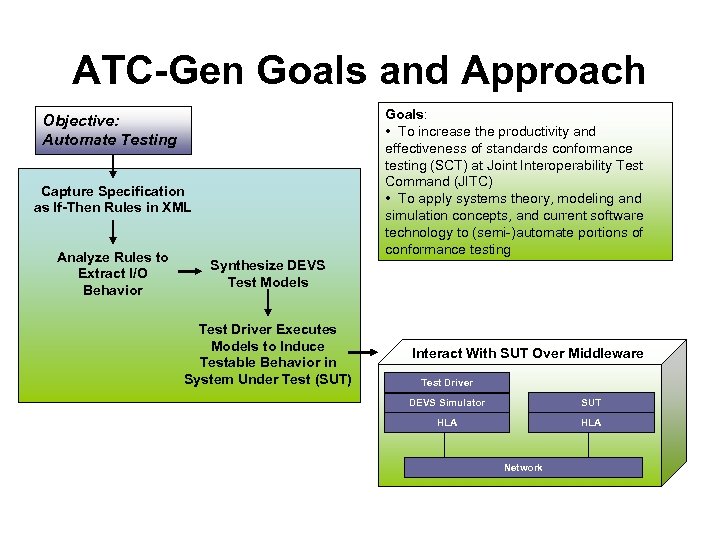

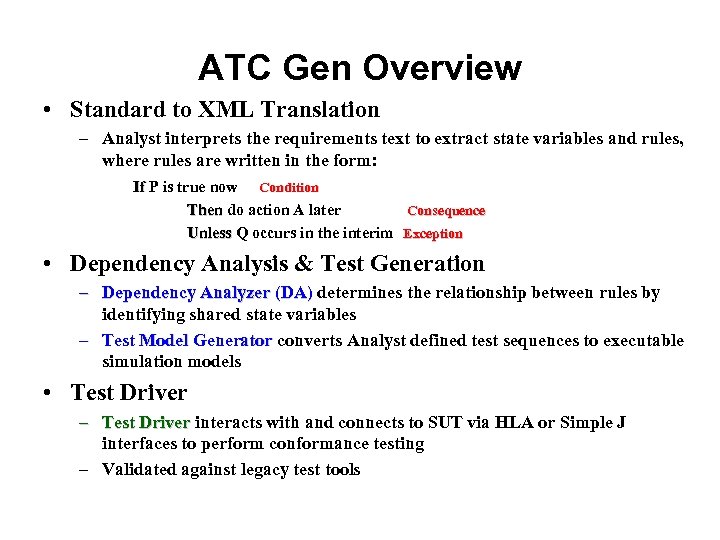

ATC-Gen Goals and Approach
Goals:
• To increase the productivity and effectiveness of standards conformance testing (SCT) at the Joint Interoperability Test Command (JITC)
• To apply systems theory, modeling and simulation concepts, and current software technology to (semi-)automate portions of conformance testing
Objective: Automate Testing
• Capture the specification as If-Then rules in XML
• Analyze the rules to extract I/O behavior
• Synthesize DEVS test models
• The Test Driver executes the models to induce testable behavior in the System Under Test (SUT)
• Interact with the SUT over middleware
[Figure: Test Driver (DEVS Simulator) connected to the SUT over an HLA network]

Link-16: The Nut to Crack
• The Joint Single Link Implementation Requirements Specification (JSLIRS) is an evolving standard (MIL-STD-6016C) for tactical data link information exchange and networked command/control of radar systems
• It presents significant challenges to automated conformance testing:
 – The specification document states requirements in natural language, open to ambiguous interpretations
 – The document is voluminous, with many interdependent chapters and appendixes
 – Interpreting it is labor intensive and prone to error
 – The specification is potentially incomplete and inconsistent
• Problem: how to ensure that a certification test procedure
 – is traceable back to the specification
 – completely covers the requirements
 – can be consistently replicated across numerous contexts: military services, international partners, and commercial companies

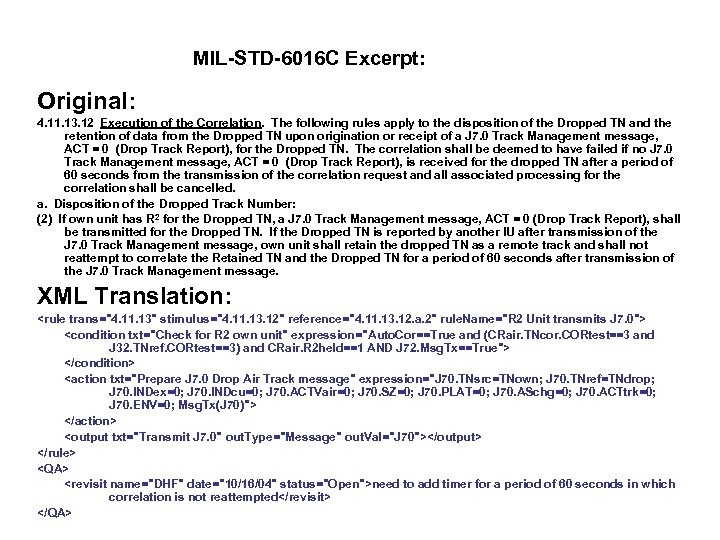

MIL-STD-6016C Excerpt

Original:
4.11.13.12 Execution of the Correlation. The following rules apply to the disposition of the Dropped TN and the retention of data from the Dropped TN upon origination or receipt of a J7.0 Track Management message, ACT = 0 (Drop Track Report), for the Dropped TN. The correlation shall be deemed to have failed if no J7.0 Track Management message, ACT = 0 (Drop Track Report), is received for the dropped TN after a period of 60 seconds from the transmission of the correlation request and all associated processing for the correlation shall be cancelled.
a. Disposition of the Dropped Track Number:
(2) If own unit has R2 for the Dropped TN, a J7.0 Track Management message, ACT = 0 (Drop Track Report), shall be transmitted for the Dropped TN. If the Dropped TN is reported by another IU after transmission of the J7.0 Track Management message, own unit shall retain the dropped TN as a remote track and shall not reattempt to correlate the Retained TN and the Dropped TN for a period of 60 seconds after transmission of the J7.0 Track Management message.

XML Translation:

    <rule trans="4.11.13" stimulus="4.11.13.12" reference="4.11.13.12.a.2" ruleName="R2 Unit transmits J7.0">
      <condition txt="Check for R2 own unit" expression="AutoCor==True and (CRair.TNcor.CORtest==3 and J32.TNref.CORtest==3) and CRair.R2held==1 AND J72.Msg.Tx==True">
      </condition>
      <action txt="Prepare J7.0 Drop Air Track message" expression="J70.TNsrc=TNown; J70.TNref=TNdrop; J70.INDex=0; J70.INDcu=0; J70.ACTVair=0; J70.SZ=0; J70.PLAT=0; J70.ASchg=0; J70.ACTtrk=0; J70.ENV=0; Msg.Tx(J70)">
      </action>
      <output txt="Transmit J7.0" outType="Message" outVal="J70"></output>
    </rule>
    <QA>
      <revisit name="DHF" date="10/16/04" status="Open">need to add timer for a period of 60 seconds in which correlation is not reattempted</revisit>
    </QA>
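The excerpt contains two 60-second windows. The first is the correlation-failure timeout. The second is the no-reattempt period that the QA note says still needs a timer. Both can be sketched as a small state holder. This is a minimal C++ illustration under stated assumptions:
• times are in seconds;
• a report arriving at exactly 60 s still counts;
• every name is hypothetical rather than taken from ATC-Gen or the standard.

```cpp
#include <cassert>
#include <optional>

// Hypothetical names throughout; this is not ATC-Gen or MIL-STD-6016C code.
enum class CorrelationStatus { Idle, Pending, Succeeded, Failed };

class CorrelationTracker {
public:
    static constexpr double kWindowSec = 60.0;  // both windows in the excerpt

    // Own unit transmits the correlation request for droppedTN at time t.
    void requestSent(double t, int droppedTN) {
        requestTime_ = t;
        droppedTN_ = droppedTN;
        status_ = CorrelationStatus::Pending;
    }

    // A J7.0 Drop Track Report (ACT = 0) for tn is received at time t.
    void dropReportReceived(double t, int tn) {
        expire(t);  // a report after the window cannot rescue the correlation
        if (status_ == CorrelationStatus::Pending && tn == droppedTN_)
            status_ = CorrelationStatus::Succeeded;
    }

    // Own unit (holding R2) transmits J7.0 for the dropped TN at time t.
    void dropReportTransmitted(double t) { lastJ70Tx_ = t; }

    // Correlation may be reattempted only 60 s after own unit's J7.0.
    bool reattemptAllowed(double t) const {
        return !lastJ70Tx_ || t - *lastJ70Tx_ >= kWindowSec;
    }

    CorrelationStatus status(double t) {
        expire(t);
        return status_;
    }

private:
    // No J7.0 within the window: the correlation has failed and is cancelled.
    void expire(double t) {
        if (status_ == CorrelationStatus::Pending && t - requestTime_ > kWindowSec)
            status_ = CorrelationStatus::Failed;
    }

    double requestTime_ = 0.0;
    int droppedTN_ = -1;
    std::optional<double> lastJ70Tx_;
    CorrelationStatus status_ = CorrelationStatus::Idle;
};
```

Keeping the no-reattempt lockout separate from the correlation status mirrors the excerpt. The lockout starts from own unit's J7.0 transmission, not from the correlation request.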

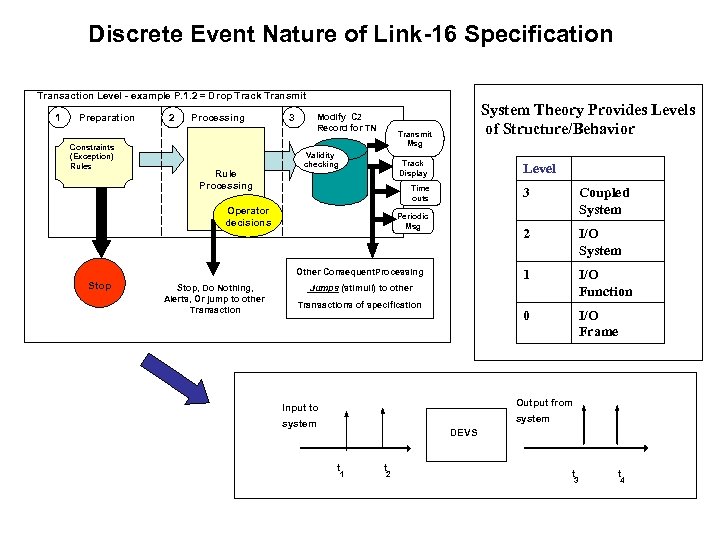

Discrete Event Nature of Link-16 Specification
[Figure: transaction-level example P.1.2 = Drop Track Transmit, organized by the levels of structure/behavior that systems theory provides (I/O Frame, I/O Function, I/O System, Coupled System). Stages: 1 Preparation, 2 Processing, 3 Other Consequent Processing. Labels include Constraints (Exception) Rules, Rule Processing, Modify C2 Record for TN, Transmit Msg, Validity Checking, Track Display, Time-outs, Operator Decisions, Periodic Msg, and "Stop, Do Nothing, Alerts, or Jump to Other Transaction". Jumps (stimuli) lead to other transactions of the specification. A DEVS timeline (t1 to t4) shows input to and output from the system.]

ATC-Gen Top-Level Architecture
[Figure: components Rule Capture Component, Rule Formalization Component, Rule Set Property Analyzer and Test Generation Component; roles Analyst, Subject Matter Expert, Logic Engineer and Test Engineer; the Test Generation Component connects to the SUT Sensor and Other Federates]

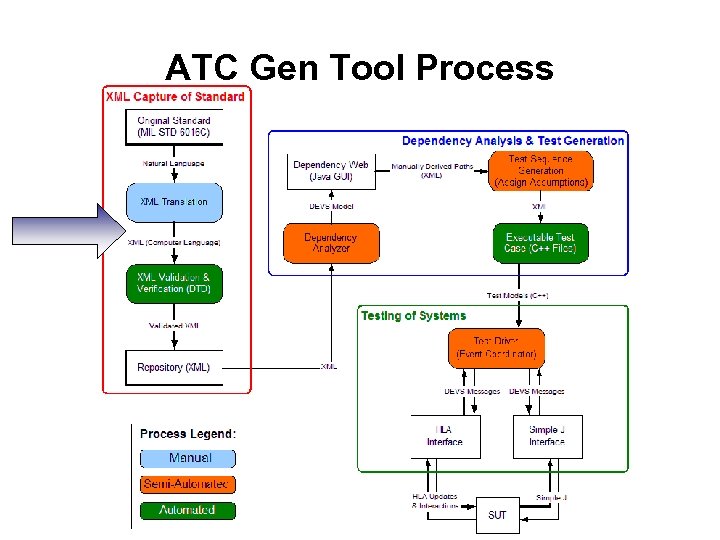

ATC Gen Overview
• Standard to XML Translation
 – The Analyst interprets the requirements text to extract state variables and rules, where rules are written in the form:
   If P is true now (Condition)
   Then do action A later (Consequence)
   Unless Q occurs in the interim (Exception)
• Dependency Analysis & Test Generation
 – The Dependency Analyzer (DA) determines the relationships between rules by identifying shared state variables
 – The Test Model Generator converts Analyst-defined test sequences into executable simulation models
• Test Driver
 – The Test Driver connects to and interacts with the SUT via HLA or Simple J interfaces to perform conformance testing
 – It has been validated against legacy test tools
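The Condition / Consequence / Exception rule form above has a direct operational reading. When P holds, A is scheduled for later. If Q becomes true before A is due, A is cancelled. The C++ sketch below is an illustration only, under these assumptions:
• the state is a map of named integers;
• "later" is a fixed delay per rule;
• a rule is not rescheduled while its consequence is already pending.

The names `State`, `TimedRule` and `RuleEngine` are hypothetical, not ATC-Gen's.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical state model: named integer variables.
using State = std::map<std::string, int>;

// "If P is true now, then do action A later, unless Q occurs in the interim."
struct TimedRule {
    std::string name;
    std::function<bool(const State&)> condition;  // P
    double delay;                                 // how much later A happens
    std::function<void(State&)> action;           // A, the consequence
    std::function<bool(const State&)> exception;  // Q, cancels a pending A
};

class RuleEngine {
public:
    explicit RuleEngine(std::vector<TimedRule> rules) : rules_(std::move(rules)) {}

    // Call after every state change at time t (e.g. when a message arrives).
    void onStateChange(State& s, double t) {
        fireDue(s, t);
        for (auto& p : pending_)  // Q occurred in the interim: cancel A
            if (!p.done && rules_[p.rule].exception(s)) p.done = true;
        for (std::size_t i = 0; i < rules_.size(); ++i)  // P true now: schedule A
            if (!isPending(i) && rules_[i].condition(s))
                pending_.push_back({i, t + rules_[i].delay, false});
    }

    // Execute every pending consequence whose due time has been reached.
    void fireDue(State& s, double t) {
        for (auto& p : pending_)
            if (!p.done && p.due <= t) {
                rules_[p.rule].action(s);
                p.done = true;
            }
    }

private:
    struct Pending { std::size_t rule; double due; bool done; };
    bool isPending(std::size_t i) const {
        for (const auto& p : pending_)
            if (p.rule == i && !p.done) return true;
        return false;
    }
    std::vector<TimedRule> rules_;
    std::vector<Pending> pending_;
};
```

A test model would call `onStateChange` whenever a message arrives and `fireDue` as simulated time advances.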

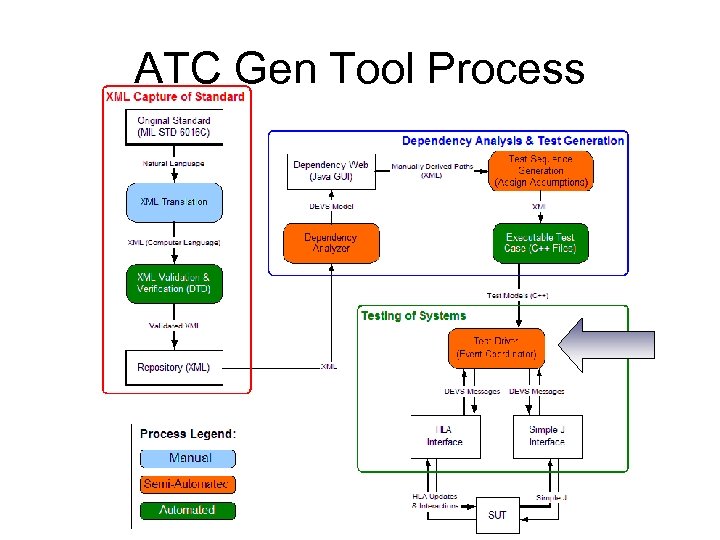

ATC Gen Tool Process

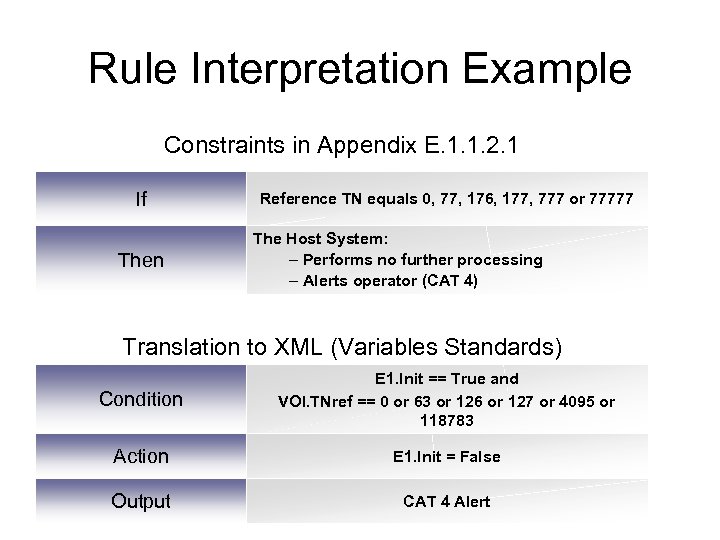

Rule Interpretation Example
Constraints in Appendix E.1.1.2.1
• If: Reference TN equals 0, 77, 176, 177, 777 or 77777
• Then the host system:
 – performs no further processing
 – alerts the operator (CAT 4)
Translation to XML (Variables Standards)
• Condition: E1.Init == True and (VOI.TNref == 0 or VOI.TNref == 63 or VOI.TNref == 126 or VOI.TNref == 127 or VOI.TNref == 4095 or VOI.TNref == 118783)
• Action: E1.Init = False
• Output: CAT 4 Alert
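The XML condition replaces the track-number strings with integers. A plausible explanation is a packed binary encoding. The sketch below assumes a five-character TN whose first two characters take 5 bits each and whose last three octal digits take 3 bits each (19 bits in total). Only the digits 0 to 7 are handled. Letters in the leading positions would need the standard's own code table, which is not reproduced here.

Under this assumption, 77, 176, 177 and 77777 map to 63, 126, 127 and 118783, as listed. However, 777 maps to 511, whereas 4095 corresponds to 07777, so that one entry may be worth checking against the standard.

The rule check also spells out each comparison. `TNref == 0 or 63 or ...`, read literally, would be true for every value. All function names are hypothetical.

```cpp
#include <cassert>
#include <set>
#include <stdexcept>
#include <string>

// Assumed packing: chars 1-2 use 5 bits each, chars 3-5 use 3 bits each.
int encodeTN(const std::string& tn) {
    if (tn.size() > 5) throw std::invalid_argument("TN longer than 5 characters");
    std::string s = std::string(5 - tn.size(), '0') + tn;  // "77" -> "00077"
    int value = 0;
    for (int i = 0; i < 5; ++i) {
        int d = s[i] - '0';
        if (d < 0 || d > 7) throw std::invalid_argument("only octal digits handled");
        value = (value << (i < 2 ? 5 : 3)) | d;
    }
    return value;
}

// Condition of the E.1.1.2.1 constraint, one comparison per reserved value.
bool isReservedReferenceTN(int tnRef) {
    static const std::set<int> reserved = {0, 63, 126, 127, 4095, 118783};
    return reserved.count(tnRef) > 0;
}

struct RuleResult { bool fired; bool cat4Alert; };

// Action: E1.Init = False (no further processing); Output: CAT 4 alert.
RuleResult applyReservedTNConstraint(bool& e1Init, int voiTNref) {
    if (e1Init && isReservedReferenceTN(voiTNref)) {
        e1Init = false;
        return {true, true};
    }
    return {false, false};
}
```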

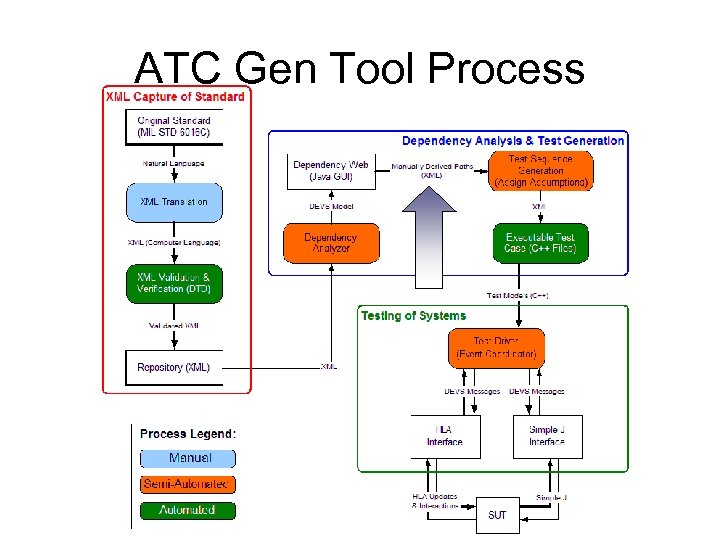

ATC Gen Tool Process

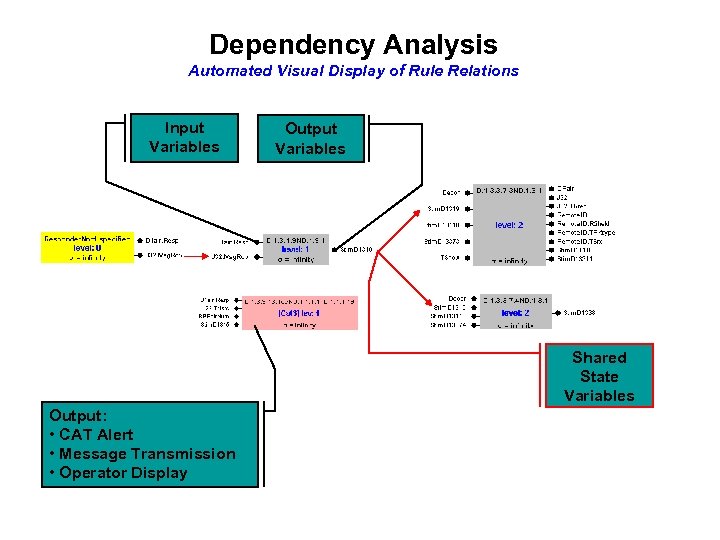

Dependency Analysis: Automated Visual Display of Rule Relations
[Figure legend: Input Variables, Output Variables, Shared State Variables]
Outputs:
• CAT Alert
• Message Transmission
• Operator Display
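One simple way to relate rules through shared state variables is to draw an edge from rule a to rule b whenever a writes a variable that b reads. In that case, firing a can enable or disable b. The C++ sketch below assumes each rule is already summarized by its read and write sets. It is an illustration of the idea, not necessarily the Dependency Analyzer's actual algorithm, and `RuleVars` and `dependencies` are hypothetical names.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical rule summary: variables read in the condition, written in the action.
struct RuleVars {
    std::string name;
    std::set<std::string> reads;
    std::set<std::string> writes;
};

// Edge (a -> b): rule a writes a state variable that rule b reads.
std::vector<std::pair<std::string, std::string>>
dependencies(const std::vector<RuleVars>& rules) {
    std::vector<std::pair<std::string, std::string>> edges;
    for (const auto& a : rules)
        for (const auto& b : rules) {
            if (&a == &b) continue;  // ignore self-dependencies
            for (const auto& v : a.writes)
                if (b.reads.count(v)) {  // one shared variable is enough
                    edges.emplace_back(a.name, b.name);
                    break;
                }
        }
    return edges;
}
```

The resulting edge list is the kind of data a visual display of rule relations could be drawn from.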

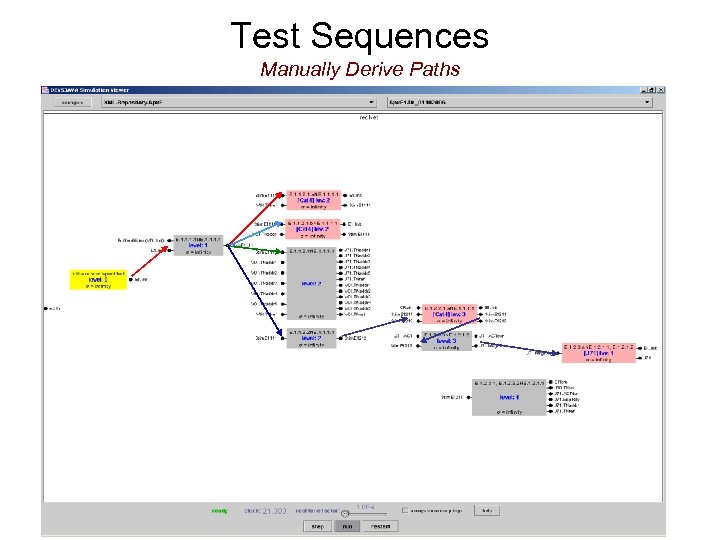

Test Sequences: Manually Derive Paths

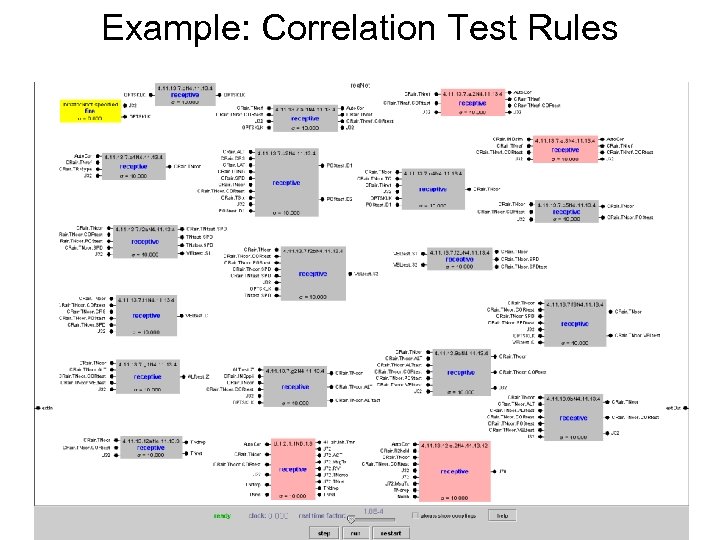

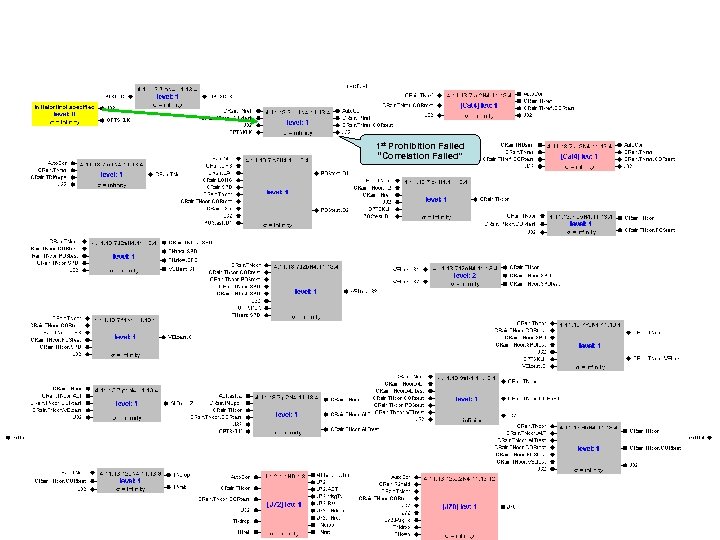

Example: Correlation Test Rules

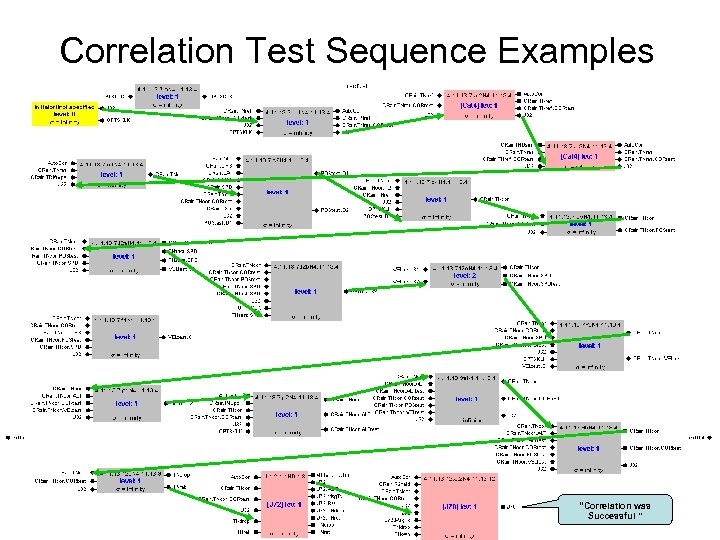

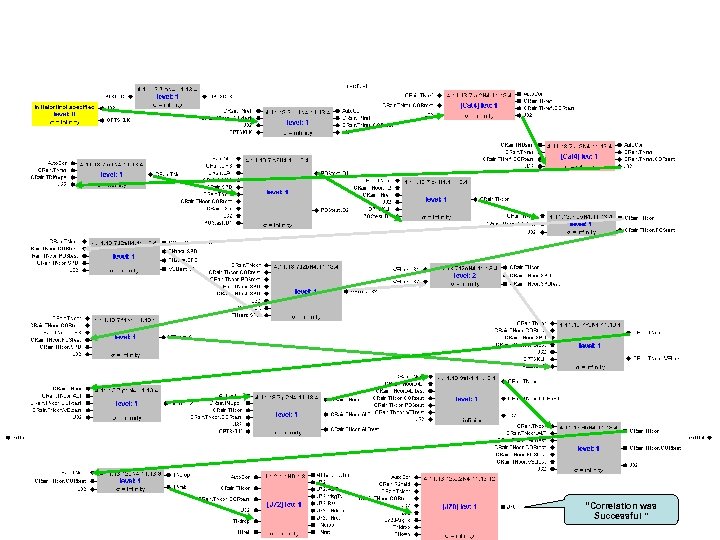

Correlation Test Sequence Examples: “Correlation was Successful”


1st Prohibition Failed: “Correlation Failed”

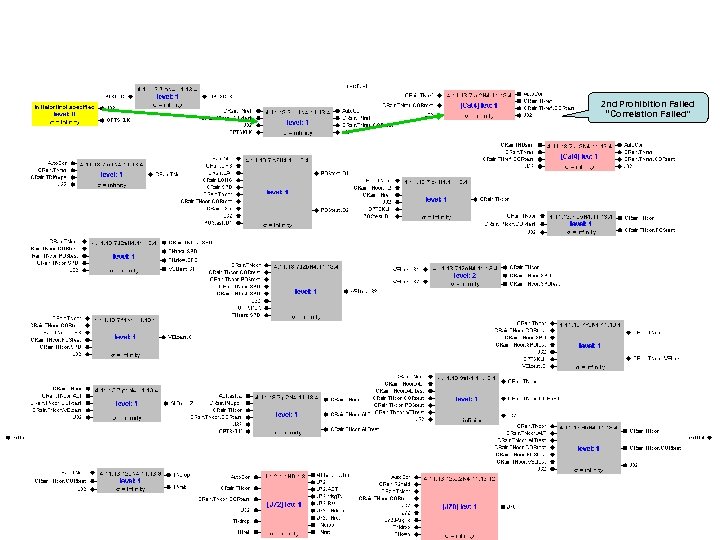

2nd Prohibition Failed: “Correlation Failed”

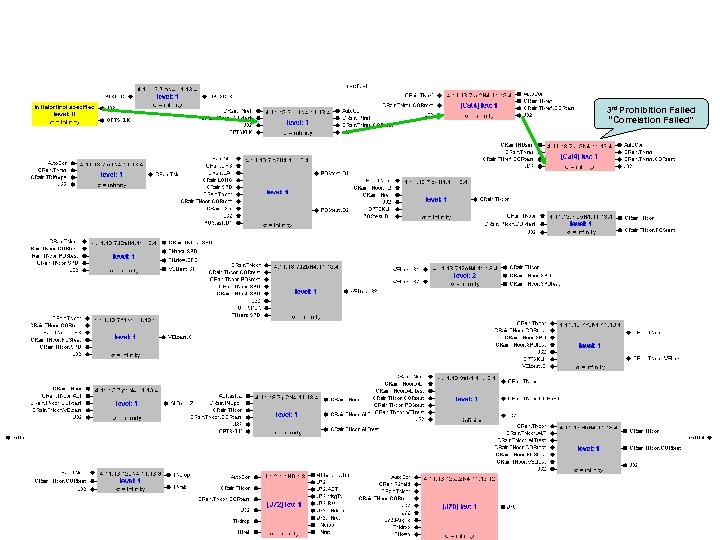

3rd Prohibition Failed: “Correlation Failed”

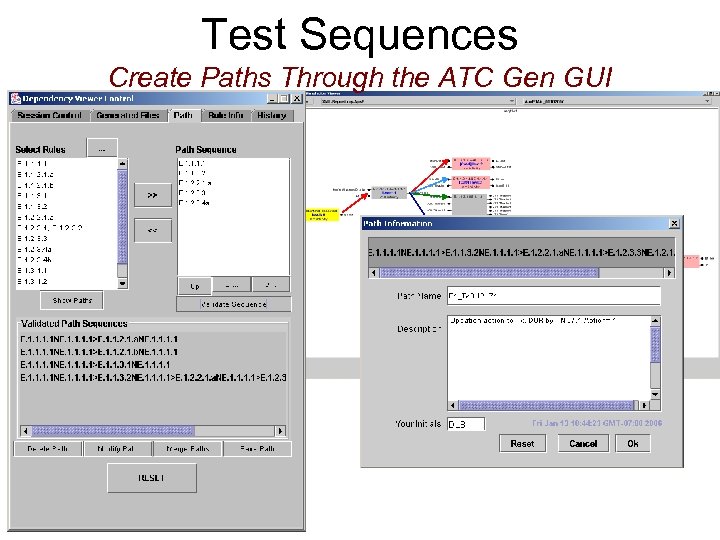

Test Sequences: Create Paths Through the ATC Gen GUI

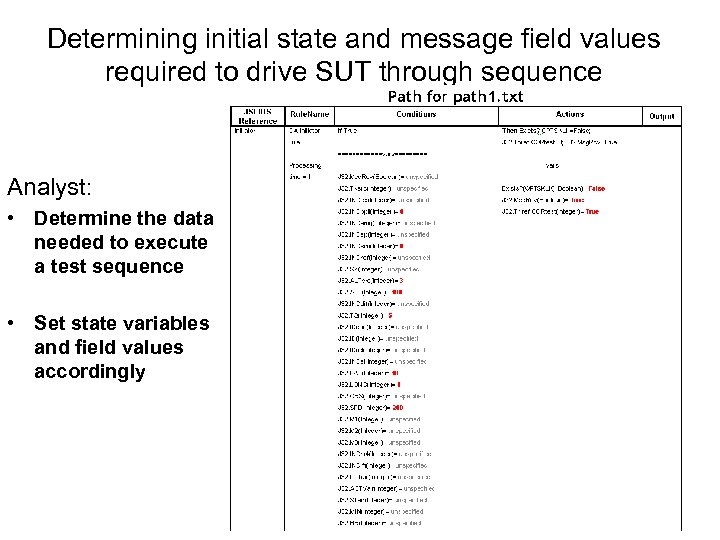

Determining the Initial State and Message Field Values Required to Drive the SUT Through a Sequence
Analyst:
• Determines the data needed to execute a test sequence
• Sets state variables and field values accordingly

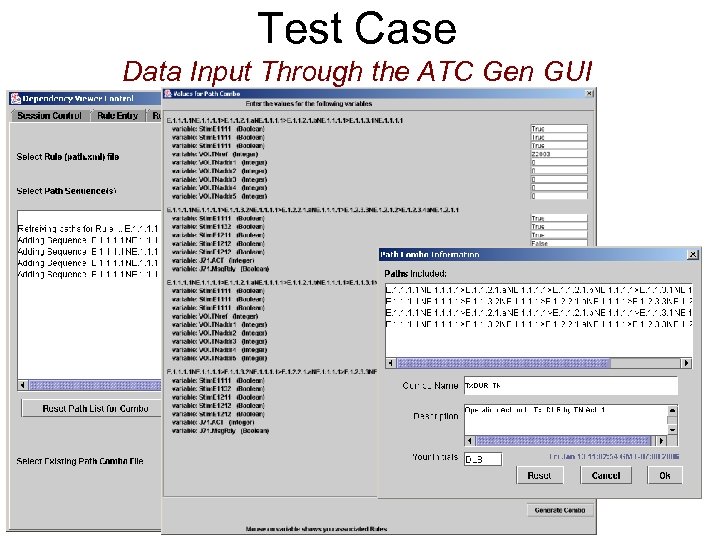

Test Case Data Input Through the ATC Gen GUI

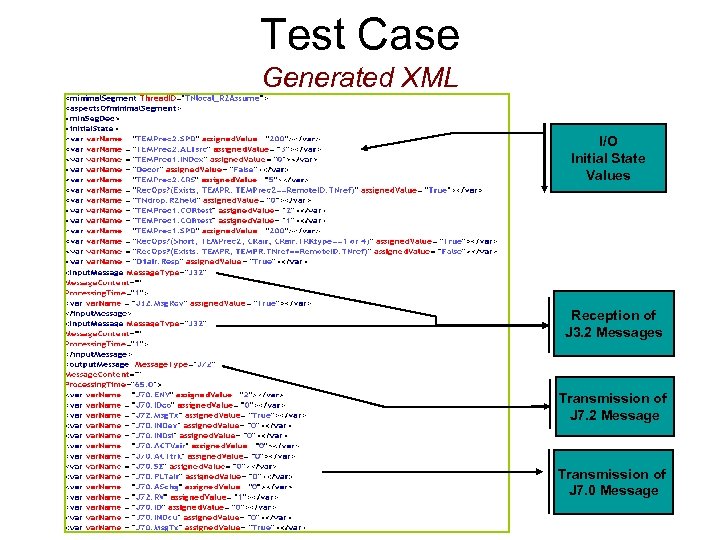

Test Case Generated in XML
I/O:
• Initial state values
• Reception of J3.2 messages
• Transmission of J7.2 message
• Transmission of J7.0 message

ATC Gen Tool Process

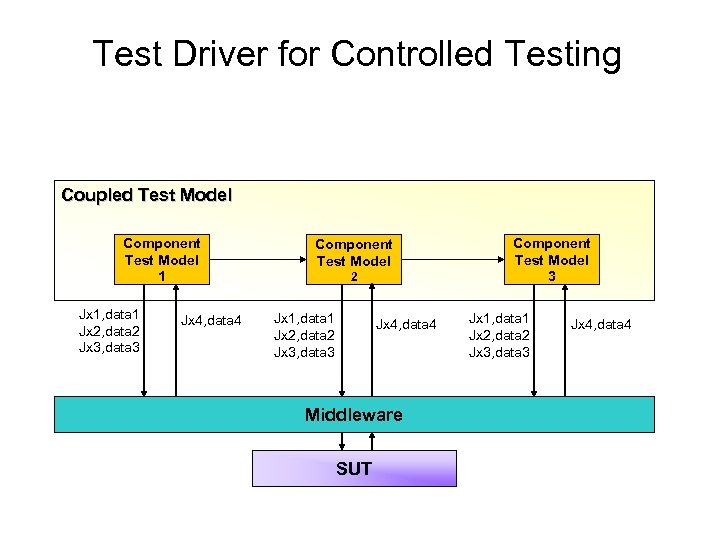

Test Driver for Controlled Testing
[Figure: a Coupled Test Model made up of Component Test Models 1, 2 and 3. Each holds a message sequence (Jx1, data1), (Jx2, data2), (Jx3, data3), (Jx4, data4) and connects to the SUT through middleware.]

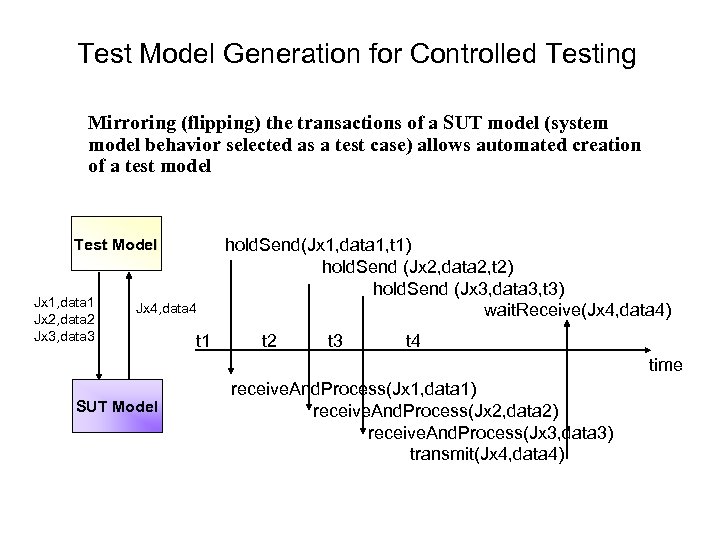

Test Model Generation for Controlled Testing
Mirroring (flipping) the transactions of a SUT model (the system model behavior selected as a test case) allows automated creation of a test model:

| Time | Test Model | SUT Model |
|---|---|---|
| t1 | holdSend(Jx1, data1, t1) | receiveAndProcess(Jx1, data1) |
| t2 | holdSend(Jx2, data2, t2) | receiveAndProcess(Jx2, data2) |
| t3 | holdSend(Jx3, data3, t3) | receiveAndProcess(Jx3, data3) |
| t4 | waitReceive(Jx4, data4) | transmit(Jx4, data4) |
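The mirroring step is a one-for-one mapping. Whatever the SUT model receives and processes, the test model must send at that time. Whatever the SUT model transmits, the test model must wait to receive. The C++ sketch below shows just that flip. The step structs and enum names are hypothetical, and message fields are reduced to strings.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One step of the SUT model's selected behavior.
enum class SutOp { ReceiveAndProcess, Transmit };
// One step of the generated test model.
enum class TestOp { HoldSend, WaitReceive };

struct SutStep  { SutOp op;  std::string msg; std::string data; double t; };
struct TestStep { TestOp op; std::string msg; std::string data; double t; };

// Flip each transaction: SUT input becomes a timed send by the test model,
// SUT output becomes an expected reception.
std::vector<TestStep> mirror(const std::vector<SutStep>& sut) {
    std::vector<TestStep> test;
    for (const auto& s : sut)
        test.push_back({s.op == SutOp::ReceiveAndProcess ? TestOp::HoldSend
                                                          : TestOp::WaitReceive,
                        s.msg, s.data, s.t});
    return test;
}
```

Because the mapping is purely structural, any behavior path selected in the GUI can be turned into a test model without hand coding.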

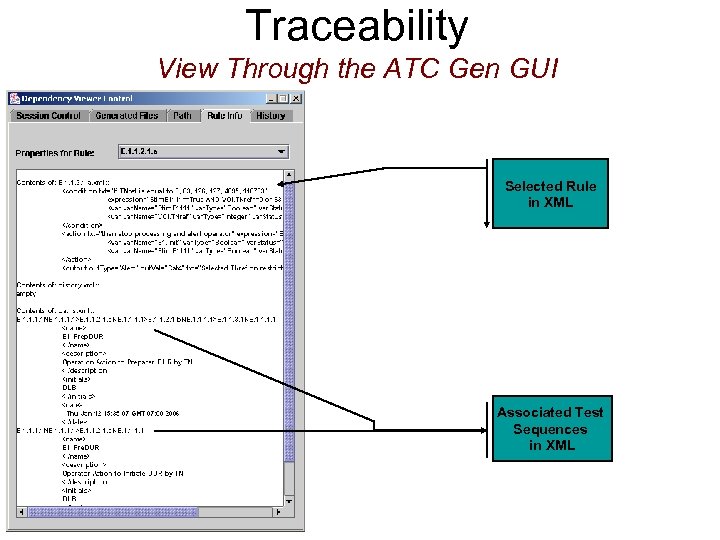

Traceability View Through the ATC Gen GUI
[Figure panels: Selected Rule in XML; Associated Test Sequences in XML]

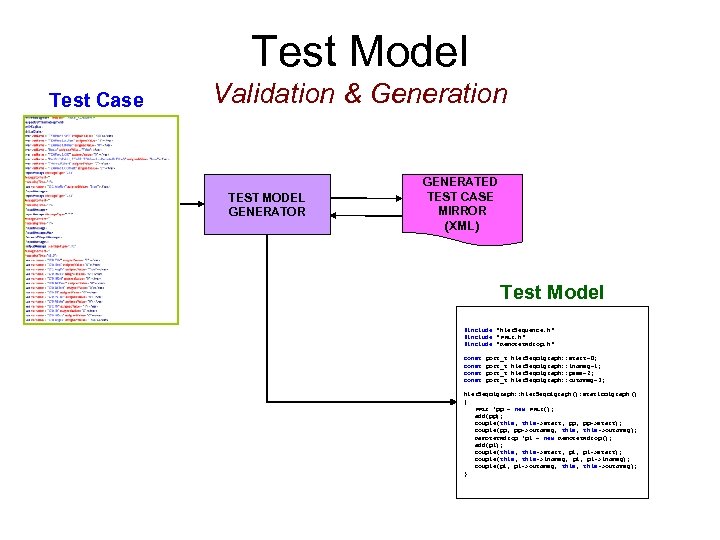

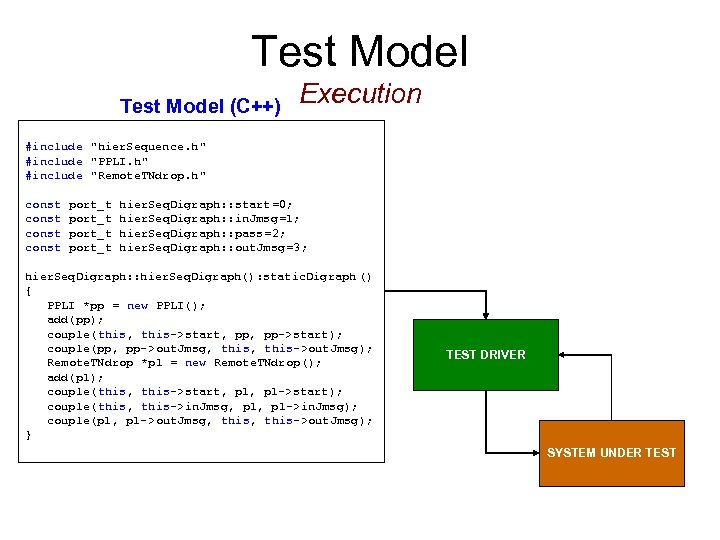

Test Model: Test Case Validation & Generation
[Figure: the Test Model Generator mirrors the generated test case (XML) into a test model]
Test Model:

    #include "hierSequence.h"
    #include "PPLI.h"
    #include "RemoteTNdrop.h"

    // Port identifiers of the coupled test model
    const port_t hierSeqDigraph::start = 0;
    const port_t hierSeqDigraph::inJmsg = 1;
    const port_t hierSeqDigraph::pass = 2;
    const port_t hierSeqDigraph::outJmsg = 3;

    hierSeqDigraph::hierSeqDigraph() : staticDigraph() {
        // PPLI component: started by the test's start port; its J-message
        // output is coupled to the test model's output
        PPLI *pp = new PPLI();
        add(pp);
        couple(this, this->start, pp->start);
        couple(pp, pp->outJmsg, this->outJmsg);

        // RemoteTNdrop component: also started by the start port; it receives
        // the J-messages coming into the test model and emits its own
        RemoteTNdrop *p1 = new RemoteTNdrop();
        add(p1);
        couple(this, this->start, p1->start);
        couple(this, this->inJmsg, p1->inJmsg);
        couple(p1, p1->outJmsg, this->outJmsg);
    }

Test Model (C++) Execution
[Figure labels: Test Driver; System Under Test]
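The generated coupled model wires components together. Each component then behaves like a DEVS atomic model that steps through a mirrored sequence. As a hedged sketch, the class below hand-rolls the four DEVS functions for such a sequence: time advance, output, internal transition and external transition. It does not use the DEVS-C++ library API. The names `Step` and `TestModelAtomic`, and the policy of failing on any unexpected message, are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <string>
#include <utility>
#include <vector>

// send = true models holdSend(msg, t); send = false models waitReceive(msg).
struct Step { bool send; std::string msg; double t; };

class TestModelAtomic {
public:
    explicit TestModelAtomic(std::vector<Step> steps) : steps_(std::move(steps)) {}

    // ta: time until the next holdSend fires; infinity while waiting passively.
    double timeAdvance() const {
        if (done()) return kInf;
        const Step& s = steps_[i_];
        return s.send ? s.t - clock_ : kInf;
    }
    // lambda: message emitted when ta expires (only called when ta is finite).
    std::string output() const { return steps_[i_].msg; }
    // delta_int: the scheduled send has happened; advance to the next step.
    void internalTransition() { clock_ = steps_[i_].t; ++i_; }
    // delta_ext: a message from the SUT arrives after `elapsed` time units.
    void externalTransition(double elapsed, const std::string& msg) {
        clock_ += elapsed;
        if (done() || steps_[i_].send || msg != steps_[i_].msg) { failed_ = true; return; }
        ++i_;
    }
    bool passed() const { return done() && !failed_; }
    bool failed() const { return failed_; }

private:
    bool done() const { return failed_ || i_ >= steps_.size(); }
    static constexpr double kInf = std::numeric_limits<double>::infinity();
    std::vector<Step> steps_;
    std::size_t i_ = 0;
    double clock_ = 0.0;
    bool failed_ = false;
};
```

A simulator would repeatedly take the smallest time advance, call `output` and `internalTransition` when it expires, and call `externalTransition` when the SUT responds first.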

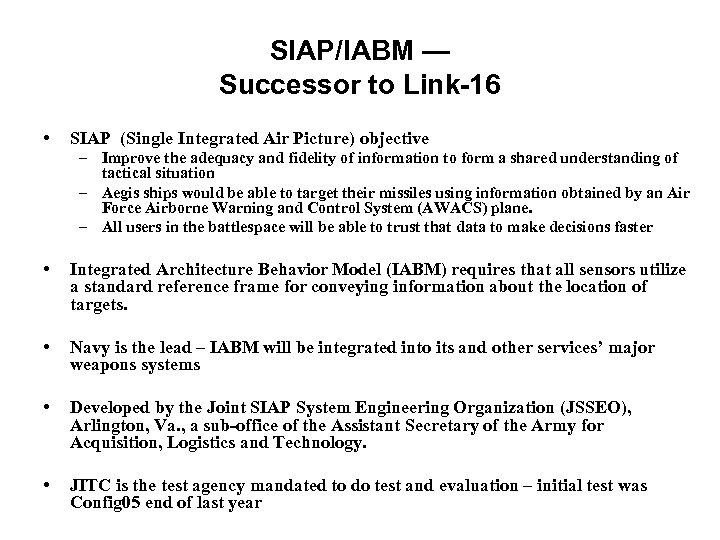

SIAP/IABM: Successor to Link-16
• SIAP (Single Integrated Air Picture) objective
 – Improve the adequacy and fidelity of information to form a shared understanding of the tactical situation
 – Aegis ships would be able to target their missiles using information obtained by an Air Force Airborne Warning and Control System (AWACS) plane
 – All users in the battlespace will be able to trust that data to make decisions faster
• The Integrated Architecture Behavior Model (IABM) requires that all sensors use a standard reference frame for conveying information about the location of targets
• The Navy is the lead; IABM will be integrated into its own and other services' major weapons systems
• Developed by the Joint SIAP System Engineering Organization (JSSEO), Arlington, Va., a sub-office of the Assistant Secretary of the Army for Acquisition, Logistics and Technology
• JITC is the test agency mandated to do test and evaluation; the initial test was Config 05, at the end of last year

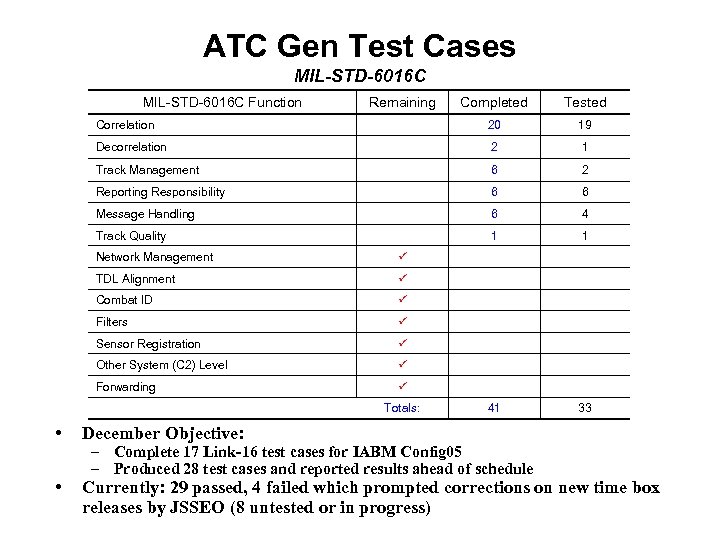

ATC Gen Test Cases

| MIL-STD-6016C Function | Completed | Tested |
|---|---|---|
| Correlation | 20 | 19 |
| Decorrelation | 2 | 1 |
| Track Management | 6 | 2 |
| Reporting Responsibility | 6 | 6 |
| Message Handling | 6 | 4 |
| Track Quality | 1 | 1 |
| Totals | 41 | 33 |

Remaining functions: Network Management, TDL Alignment, Combat ID, Filters, Sensor Registration, Other System (C2) Level, Forwarding

• December objective:
 – Complete 17 Link-16 test cases for IABM Config 05
 – Produced 28 test cases and reported results ahead of schedule
• Currently: 29 passed and 4 failed, which prompted corrections in new time-box releases by JSSEO (8 untested or in progress)

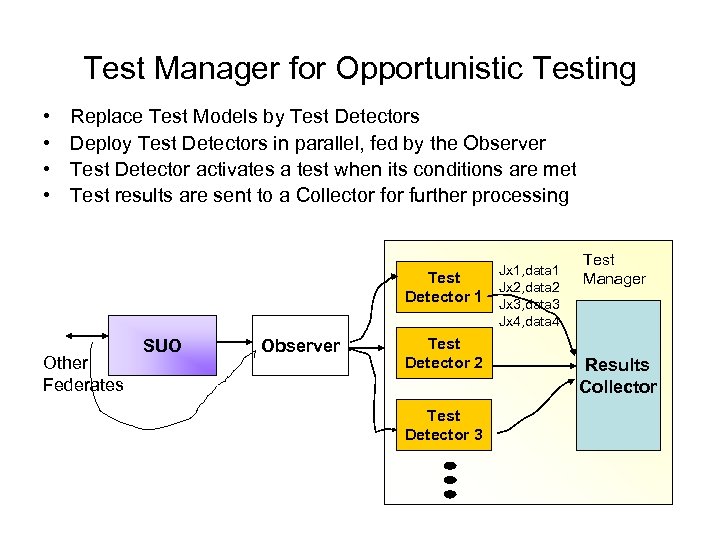

Test Manager for Opportunistic Testing
• Replace Test Models by Test Detectors
• Deploy Test Detectors in parallel, fed by the Observer
• A Test Detector activates a test when its conditions are met
• Test results are sent to a Collector for further processing
[Figure: an Observer taps the SUO, which also interacts with other federates, and feeds Test Detectors 1, 2 and 3 inside the Test Manager. Each detector watches a sequence (Jx1, data1) to (Jx4, data4), and results go to the Results Collector.]

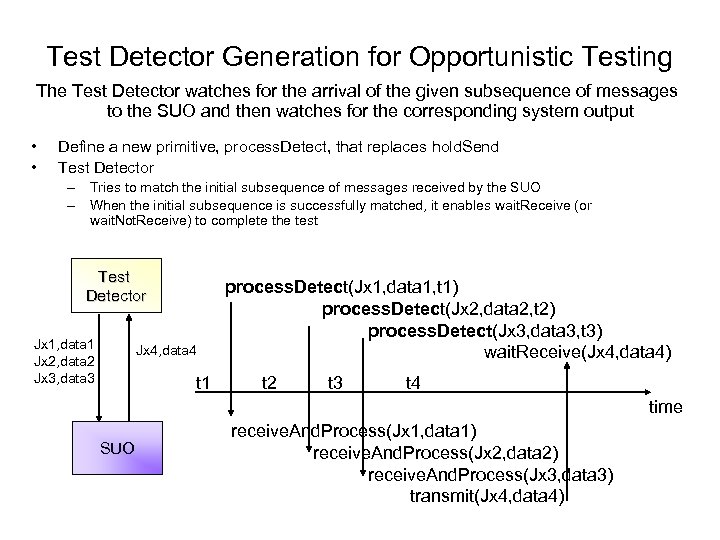

Test Detector Generation for Opportunistic Testing
The Test Detector watches for the arrival of the given subsequence of messages to the SUO and then watches for the corresponding system output.
• Define a new primitive, processDetect, that replaces holdSend
• Test Detector
 – tries to match the initial subsequence of messages received by the SUO
 – when the initial subsequence is successfully matched, enables waitReceive (or waitNotReceive) to complete the test

| Time | Test Detector | SUO |
|---|---|---|
| t1 | processDetect(Jx1, data1, t1) | receiveAndProcess(Jx1, data1) |
| t2 | processDetect(Jx2, data2, t2) | receiveAndProcess(Jx2, data2) |
| t3 | processDetect(Jx3, data3, t3) | receiveAndProcess(Jx3, data3) |
| t4 | waitReceive(Jx4, data4) | transmit(Jx4, data4) |
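A test detector differs from a test model only in that it listens instead of sending. The processDetect steps match messages observed going into the SUO. Once all of them have matched, the waitReceive step is armed and watches the SUO's outputs.

The C++ sketch below omits data fields and time stamps. It assumes that unrelated traffic between the matched messages is simply ignored; a stricter detector could reset instead. `TestDetector` and `Verdict` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

enum class Verdict { Watching, Armed, Pass };

class TestDetector {
public:
    TestDetector(std::vector<std::string> inputs, std::string expectedOutput)
        : inputs_(std::move(inputs)), expected_(std::move(expectedOutput)) {
        if (inputs_.empty()) verdict_ = Verdict::Armed;
    }
    // The observer reports a message received by the SUO (processDetect steps).
    void observeInput(const std::string& msg) {
        if (verdict_ == Verdict::Watching && msg == inputs_[next_] &&
            ++next_ == inputs_.size())
            verdict_ = Verdict::Armed;  // whole subsequence matched
    }
    // The observer reports a message transmitted by the SUO (waitReceive step).
    void observeOutput(const std::string& msg) {
        if (verdict_ == Verdict::Armed && msg == expected_) verdict_ = Verdict::Pass;
    }
    Verdict verdict() const { return verdict_; }

private:
    std::vector<std::string> inputs_;
    std::string expected_;
    std::size_t next_ = 0;
    Verdict verdict_ = Verdict::Watching;
};
```

An output seen before the detector is armed does not count. This is what distinguishes opportunistic observation from simply scanning for the expected message.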

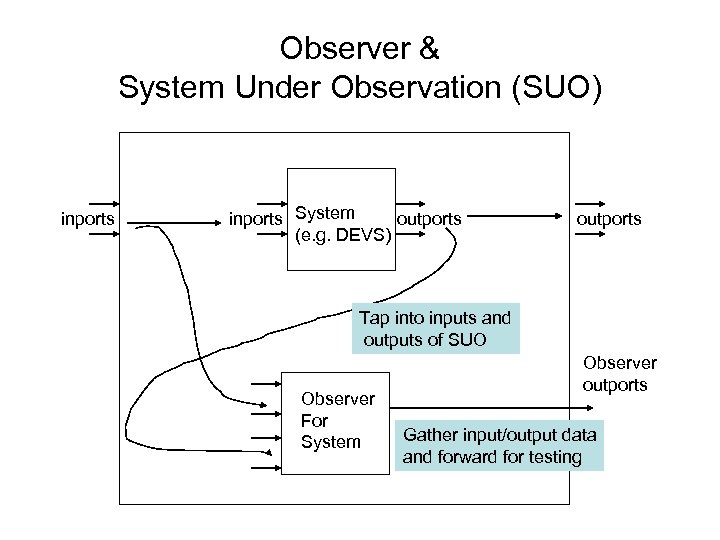

Observer & System Under Observation (SUO)
[Figure: the Observer for a system (e.g., a DEVS model) taps into the inports and outports of the SUO, gathers the input/output data, and forwards it through the Observer's outports for testing]
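The observer's job is to pass traffic through unchanged while copying every input and output to whoever is testing. For brevity, the C++ sketch below models the SUO as a function from one input message to the messages it emits in response. This synchronous request/response shape is a simplifying assumption; a DEVS SUO would respond over time. `Observer`, `Direction` and `Tap` are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

enum class Direction { In, Out };
using Tap = std::function<void(Direction, const std::string&)>;
using Suo = std::function<std::vector<std::string>(const std::string&)>;

class Observer {
public:
    explicit Observer(Suo suo) : suo_(std::move(suo)) {}
    void subscribe(Tap t) { taps_.push_back(std::move(t)); }

    // Deliver a message to the SUO, copying input and outputs to every tap.
    std::vector<std::string> deliver(const std::string& msg) {
        notify(Direction::In, msg);
        std::vector<std::string> outs = suo_(msg);
        for (const auto& o : outs) notify(Direction::Out, o);
        return outs;  // forwarded to the rest of the federation unchanged
    }

private:
    void notify(Direction d, const std::string& m) {
        for (auto& t : taps_) t(d, m);
    }
    Suo suo_;
    std::vector<Tap> taps_;
};
```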

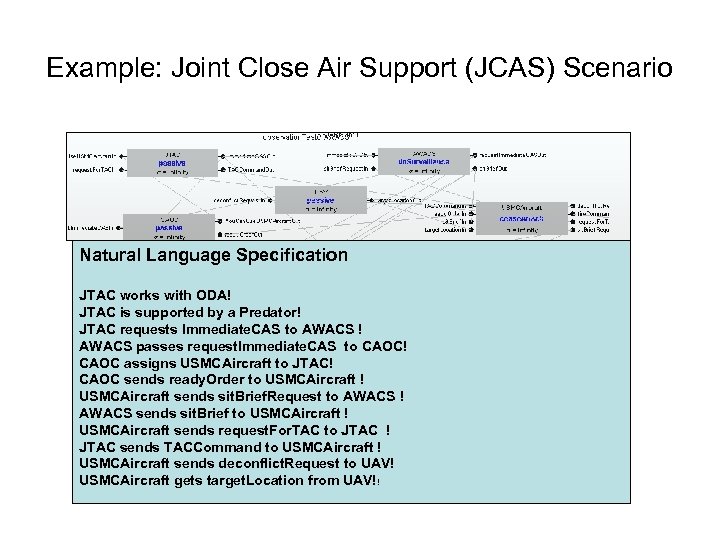

Example: Joint Close Air Support (JCAS) Scenario
Natural Language Specification:
JTAC works with ODA!
JTAC is supported by a Predator!
JTAC requests ImmediateCAS to AWACS!
AWACS passes requestImmediateCAS to CAOC!
CAOC assigns USMCAircraft to JTAC!
CAOC sends readyOrder to USMCAircraft!
USMCAircraft sends sitBriefRequest to AWACS!
AWACS sends sitBrief to USMCAircraft!
USMCAircraft sends requestForTAC to JTAC!
JTAC sends TACCommand to USMCAircraft!
USMCAircraft sends deconflictRequest to UAV!
USMCAircraft gets targetLocation from UAV!

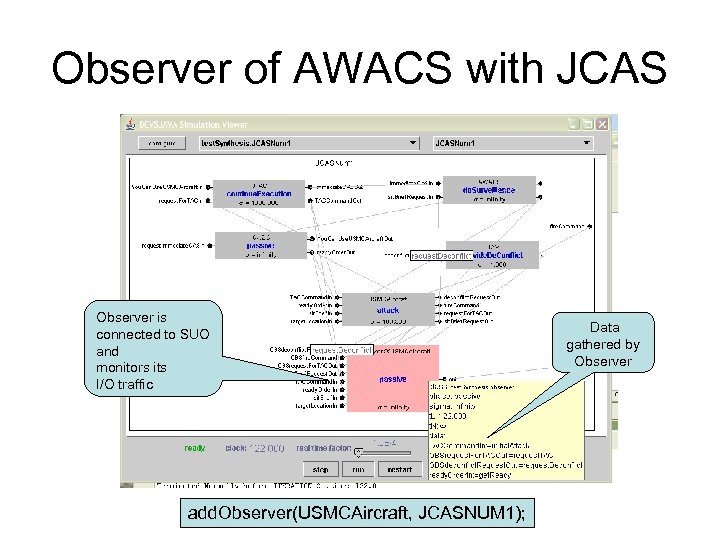

Observer of AWACS with JCAS
The Observer is connected to the SUO and monitors its I/O traffic:
  addObserver(USMCAircraft, JCASNUM1);
[Figure: data gathered by the Observer]

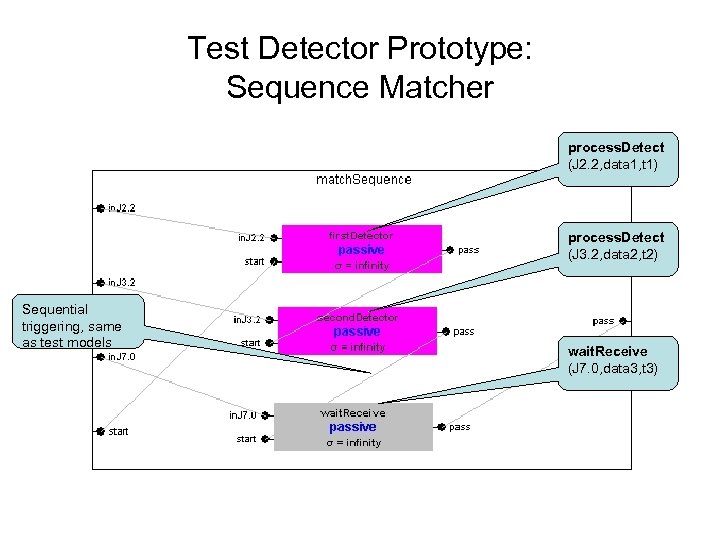

Test Detector Prototype: Sequence Matcher
• processDetect(J2.2, data1, t1)
• processDetect(J3.2, data2, t2)
• waitReceive(J7.0, data3, t3)
Sequential triggering, same as in test models

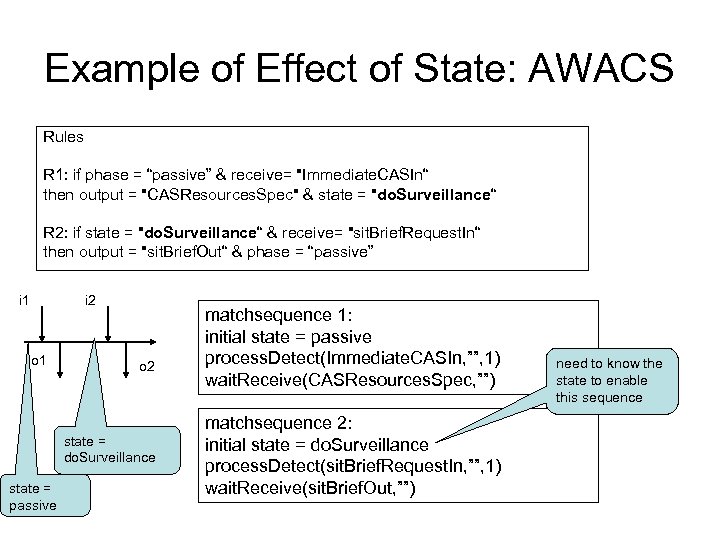

Example of Effect of State: AWACS Rules
R1: if phase = "passive" & receive = "ImmediateCASIn" then output = "CASResourcesSpec" & state = "doSurveillance"
R2: if state = "doSurveillance" & receive = "sitBriefRequestIn" then output = "sitBriefOut" & phase = "passive"
[Figure: inputs i1, i2 and outputs o1, o2; states passive and doSurveillance]
matchsequence1: initial state = passive
  processDetect(ImmediateCASIn, "", 1)
  waitReceive(CASResourcesSpec, "")
matchsequence2: initial state = doSurveillance (the state must be known to enable this sequence)
  processDetect(sitBriefRequestIn, "", 1)
  waitReceive(sitBriefOut, "")

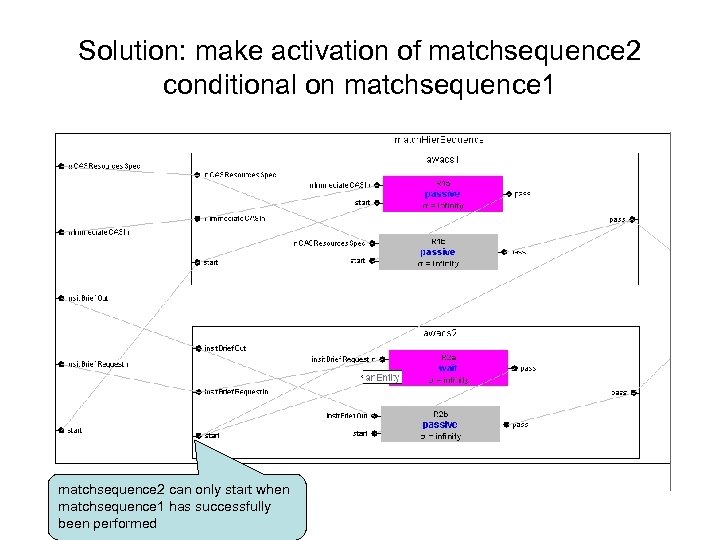

Solution: make activation of matchsequence2 conditional on matchsequence1; matchsequence2 can only start once matchsequence1 has been performed successfully.
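The conditional activation can be sketched by gating the second match sequence on the first one's success. The first success is the only evidence that the AWACS is in state doSurveillance. The C++ below encodes rules R1 and R2 as a toy AWACS, treating "phase" and "state" as one variable, and runs both match sequences against it. `Awacs`, `MatchSequence` and `ChainedTest` are hypothetical names for illustration.

```cpp
#include <cassert>
#include <string>

// Toy AWACS implementing rules R1 and R2 from the slide.
struct Awacs {
    std::string state = "passive";
    std::string receive(const std::string& in) {
        if (state == "passive" && in == "ImmediateCASIn") {
            state = "doSurveillance";
            return "CASResourcesSpec";  // R1
        }
        if (state == "doSurveillance" && in == "sitBriefRequestIn") {
            state = "passive";
            return "sitBriefOut";  // R2
        }
        return "";  // no rule applies, no output
    }
};

// One processDetect/waitReceive pair; `enabled` gates whether it may start.
struct MatchSequence {
    std::string input, expected;
    bool enabled = false, armed = false, passed = false;
    void observeIn(const std::string& m) { if (enabled && !passed && m == input) armed = true; }
    void observeOut(const std::string& m) {
        if (armed && m == expected) { passed = true; armed = false; }
    }
};

// matchsequence2 is enabled only after matchsequence1 has passed.
struct ChainedTest {
    MatchSequence seq1{"ImmediateCASIn", "CASResourcesSpec", true};
    MatchSequence seq2{"sitBriefRequestIn", "sitBriefOut"};
    void observe(const std::string& in, const std::string& out) {
        seq1.observeIn(in);
        seq2.observeIn(in);
        seq1.observeOut(out);
        seq2.observeOut(out);
        if (seq1.passed) seq2.enabled = true;
    }
};
```

In the usage below, a situation brief request that arrives before any CAS request is ignored by matchsequence2. That request finds the AWACS passive and would otherwise be judged against the wrong state.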

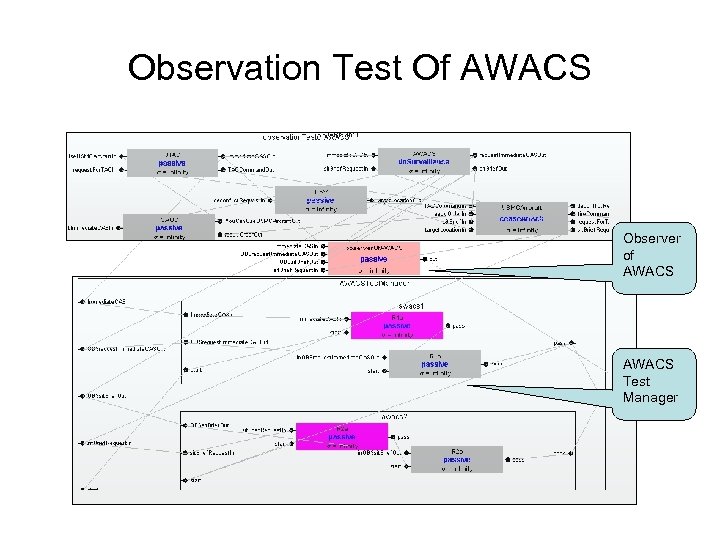

Observation Test of AWACS
[Figure: Observer of AWACS feeding the Test Manager]

Problem with a Fixed Set of Test Detectors
• After a test detector has been started, a message may arrive that requires it to be re-initialized
• The parallel search and processing required by the fixed presence of multiple test detectors under the test manager may limit the processing and/or the number of monitor points
• A fixed set does not allow changing from one test focus to another in real time, e.g., going from format testing to correlation testing once the format test has been satisfied
Solution
• On-demand inclusion of test detector instances
• Removal of a detector when it is known to be "finished"
• Employ DEVS variable-structure capabilities
• Requires intelligence to decide inclusion and removal

Dynamic Test Suite: Features
• Test Detectors are inserted into the Test Suite by Test Control
• Test Control uses a table to select Detectors based on the incoming message
• Test Control passes on the just-received message and starts up the Test Detector
• Each Detector stage starts up the next stage and removes itself from the Test Suite as soon as the result of its test is known
 – If the outcome is a pass (the test is successful), the next stage is started up
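The features above amount to a small control loop:
1. Look up the triggering message in a table.
2. Insert a fresh detector.
3. Hand it the message that triggered it.
4. Drop the detector once its last stage has passed.

The C++ sketch below models a detector as a chain of expected messages, where advancing past a stage stands in for that stage removing itself. It uses plain containers rather than DEVS variable-structure operations, and all names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <vector>

enum class Dir { In, Out };
struct Expect { Dir dir; std::string msg; };

// A detector is a chain of stages; each matched stage hands over to the next.
struct Detector {
    std::string name;
    std::vector<Expect> stages;
    std::size_t current = 0;
    bool finished() const { return current == stages.size(); }
    void observe(Dir d, const std::string& m) {
        if (!finished() && stages[current].dir == d && stages[current].msg == m) ++current;
    }
};

class TestManager {
public:
    // Test Control's selection table: triggering input message -> detector factory.
    std::map<std::string, std::function<Detector()>> table;
    std::vector<std::string> results;  // what the results collector receives

    void observe(Dir d, const std::string& m) {
        if (d == Dir::In) {  // Test Control: on-demand inclusion
            auto it = table.find(m);
            if (it != table.end()) suite_.push_back(it->second());
        }
        for (auto& det : suite_) det.observe(d, m);  // includes the trigger itself
        for (auto it = suite_.begin(); it != suite_.end();) {
            if (it->finished()) {  // result known: report and remove
                results.push_back(it->name + ": pass");
                it = suite_.erase(it);
            } else {
                ++it;
            }
        }
    }
    std::size_t active() const { return suite_.size(); }

private:
    std::vector<Detector> suite_;  // the Active Test Suite, initially empty
};
```

The usage below replays the AWACS walkthrough from the following slides. The suite starts empty, grows when the CAS request is seen, and is empty again once the situation brief output has passed.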

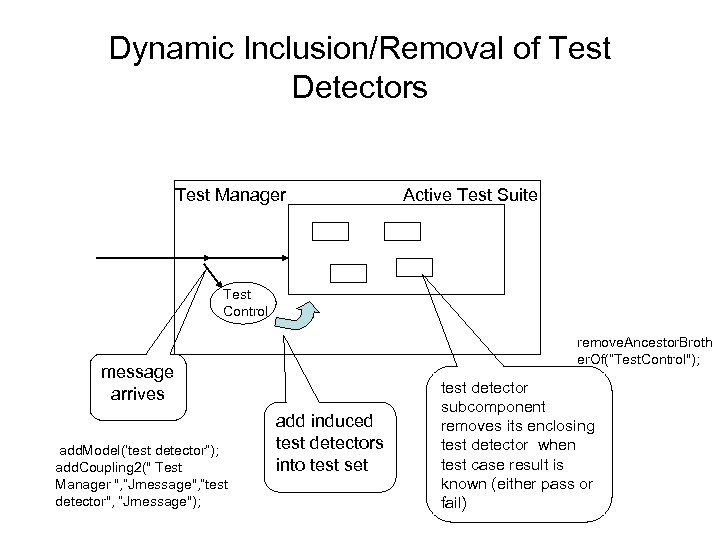

Dynamic Inclusion/Removal of Test Detectors
[Figure: Test Manager containing Test Control and the Active Test Suite]
• When a message arrives, Test Control adds the induced test detectors into the test set:
  addModel("test detector");
  addCoupling2("Test Manager", "Jmessage", "test detector", "Jmessage");
• A test detector subcomponent removes its enclosing test detector when the test case result is known (either pass or fail):
  removeAncestorBrotherOf("TestControl");

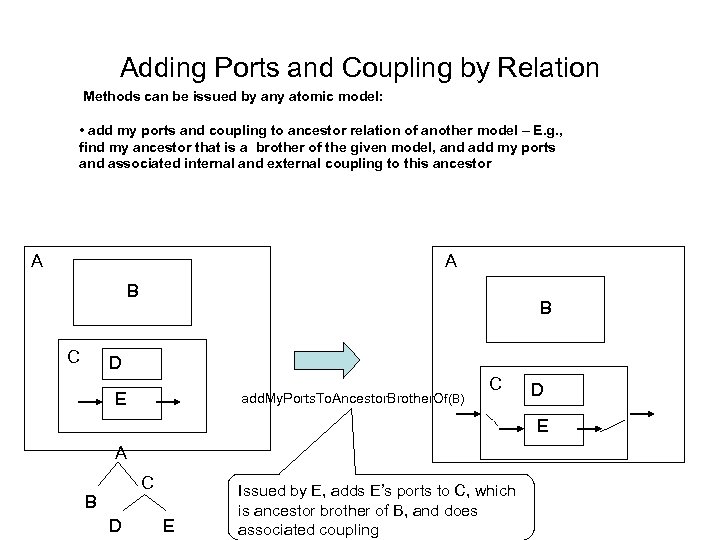

Adding Ports and Coupling by Relation
Methods can be issued by any atomic model:
• Add my ports and coupling to an ancestor related to another model
 – e.g., find my ancestor that is a brother of the given model, and add my ports and the associated internal and external coupling to this ancestor
[Figure: hierarchy in which A contains B and C, and C contains D and E. addMyPortsToAncestorBrotherOf(B), issued by E, adds E's ports to C, which is the ancestor brother of B, and does the associated coupling.]
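The "ancestor that is a brother of a given model" relation is a short walk up the hierarchy. Starting from the issuing model's parent, return the first ancestor that shares a parent with the given model. The C++ sketch below shows that lookup and the port-copying half of addMyPortsToAncestorBrotherOf; the coupling half is omitted. The `Model` tree is a hypothetical stand-in for a DEVS hierarchy, not the DEVSJAVA or DEVS-C++ class.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model hierarchy node (atomic if it has no children).
struct Model {
    std::string name;
    Model* parent = nullptr;
    std::vector<std::unique_ptr<Model>> children;
    std::vector<std::string> inports, outports;

    Model* add(std::string n) {
        children.push_back(std::make_unique<Model>());
        children.back()->name = std::move(n);
        children.back()->parent = this;
        return children.back().get();
    }
};

// First ancestor of `self` that has the same parent as `other`, or nullptr.
Model* ancestorBrotherOf(Model* self, const Model* other) {
    for (Model* a = self->parent; a != nullptr; a = a->parent)
        if (a != other && a->parent != nullptr && a->parent == other->parent) return a;
    return nullptr;
}

// Copy self's ports onto that ancestor (internal/external coupling omitted).
bool addMyPortsToAncestorBrotherOf(Model* self, const Model* other) {
    Model* target = ancestorBrotherOf(self, other);
    if (!target) return false;
    target->inports.insert(target->inports.end(), self->inports.begin(), self->inports.end());
    target->outports.insert(target->outports.end(), self->outports.begin(), self->outports.end());
    return true;
}
```

Addressing models by relation means that the issuing atomic model never needs to know C's name.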

Adding Ports and Coupling (cont’d)
Methods can be issued by any atomic model:
• Add output ports and coupling from source to destination: adds the outports of the source as inports of the destination and couples them
• Add input ports and coupling from source to destination: adds the inports of the source as outports of the destination and couples them
[Figure: addOutportsNcoupling(C, B) and addInportsNcoupling(C, B), issued by E, allow E to communicate with B through ports in1 and out1]

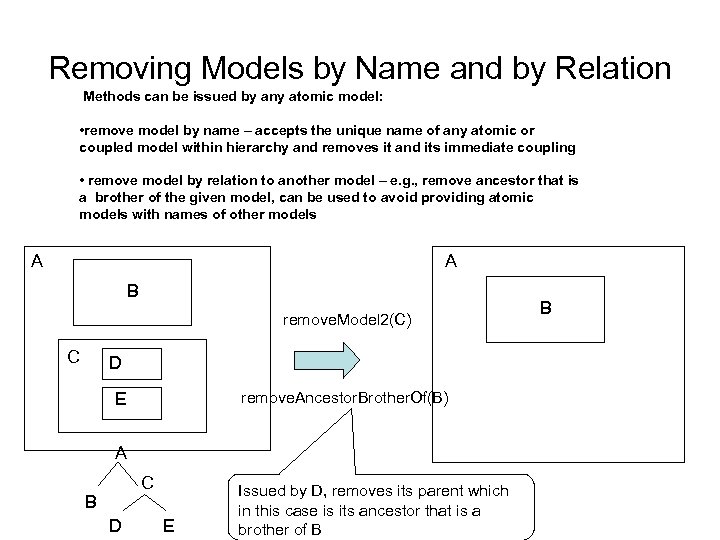

Removing Models by Name and by Relation
Methods can be issued by any atomic model:
• Remove model by name: accepts the unique name of any atomic or coupled model within the hierarchy and removes it and its immediate coupling
• Remove model by relation to another model: e.g., remove the ancestor that is a brother of the given model; this avoids having to provide atomic models with the names of other models
[Figure: removeModel2(C) and removeAncestorBrotherOf(B). Issued by D, the latter removes D's parent C, which in this case is its ancestor that is a brother of B.]
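Both removal methods reduce to detaching a node from its parent together with any coupling that names it. The C++ sketch below implements removal by name and by the ancestor-brother relation over a hypothetical `Model` tree, with couplings stored as plain records in the enclosing coupled model.

In a real DEVS simulator, structure changes are applied between transitions. Here, removal is immediate, so a model that removes its own ancestor must not be used afterwards. All names are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct Coupling { std::string from, fromPort, to, toPort; };

struct Model {
    std::string name;
    Model* parent = nullptr;
    std::vector<std::unique_ptr<Model>> children;
    std::vector<Coupling> couplings;  // declared in this coupled model

    Model* add(std::string n) {
        children.push_back(std::make_unique<Model>());
        children.back()->name = std::move(n);
        children.back()->parent = this;
        return children.back().get();
    }
};

// Remove `victim` (and its subtree) from its parent, plus its immediate coupling.
void detach(Model* victim) {
    Model* p = victim->parent;
    auto& cs = p->couplings;
    cs.erase(std::remove_if(cs.begin(), cs.end(), [&](const Coupling& c) {
                 return c.from == victim->name || c.to == victim->name;
             }), cs.end());
    auto& kids = p->children;
    kids.erase(std::remove_if(kids.begin(), kids.end(), [&](const std::unique_ptr<Model>& k) {
                   return k.get() == victim;
               }), kids.end());
}

Model* findByName(Model* root, const std::string& n) {
    if (root->name == n) return root;
    for (auto& c : root->children)
        if (Model* m = findByName(c.get(), n)) return m;
    return nullptr;
}

// Remove by unique name (the root itself cannot be removed).
bool removeModel(Model* root, const std::string& n) {
    Model* m = findByName(root, n);
    if (!m || !m->parent) return false;
    detach(m);
    return true;
}

// Remove the ancestor of `self` that is a brother of `other`.
bool removeAncestorBrotherOf(Model* self, const Model* other) {
    for (Model* a = self->parent; a != nullptr; a = a->parent)
        if (a != other && a->parent && a->parent == other->parent) {
            detach(a);  // `self` is destroyed too if it lies under `a`
            return true;
        }
    return false;
}
```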

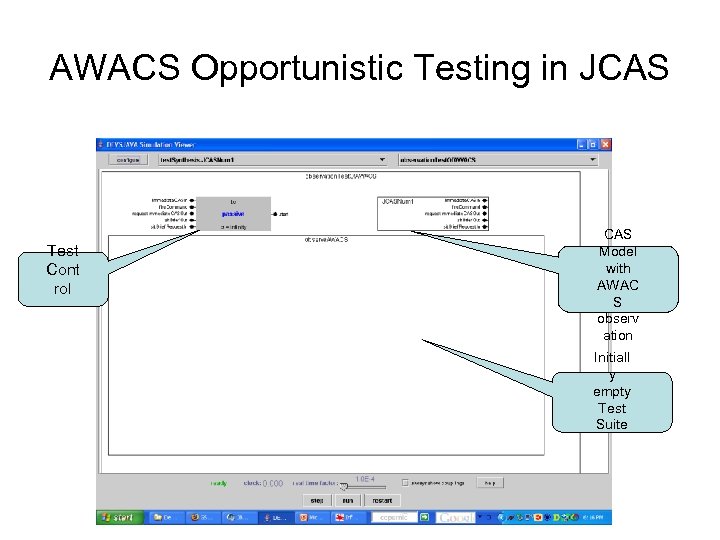

AWACS Opportunistic Testing in JCAS
[Figure: Test Control; CAS model with AWACS observation; initially empty Test Suite]

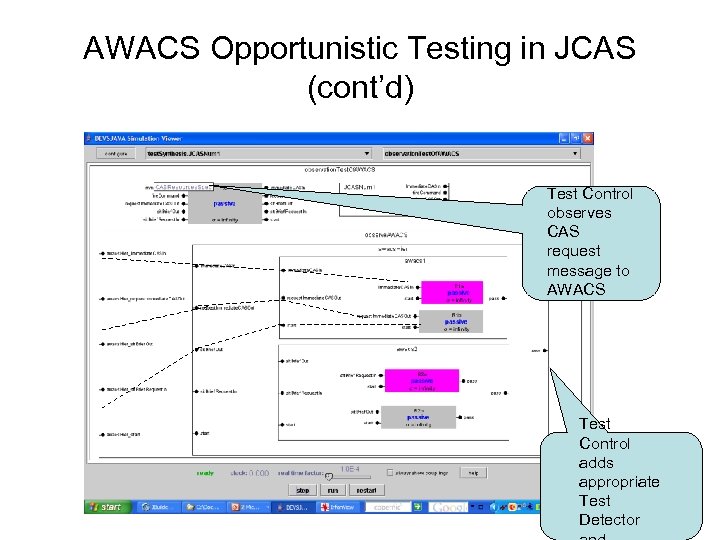

AWACS Opportunistic Testing in JCAS (cont’d)
• Test Control observes the CAS request message to AWACS
• Test Control adds the appropriate Test Detector

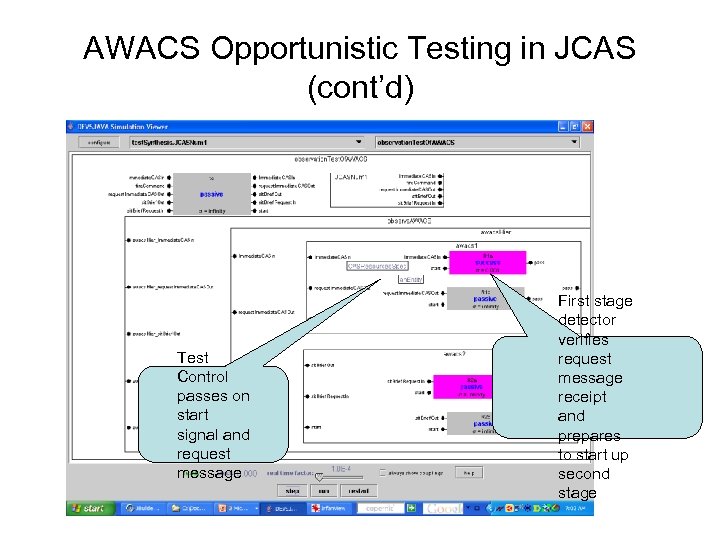

AWACS Opportunistic Testing in JCAS (cont’d)
• Test Control passes on the start signal and the request message
• The first-stage detector verifies receipt of the request message and prepares to start up the second stage

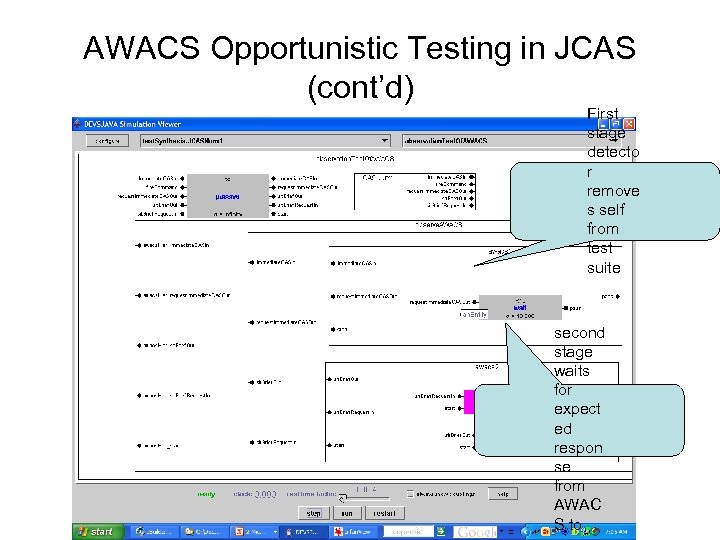

AWACS Opportunistic Testing in JCAS (cont’d)
• The first-stage detector removes itself from the test suite
• The second stage waits for the expected response from AWACS

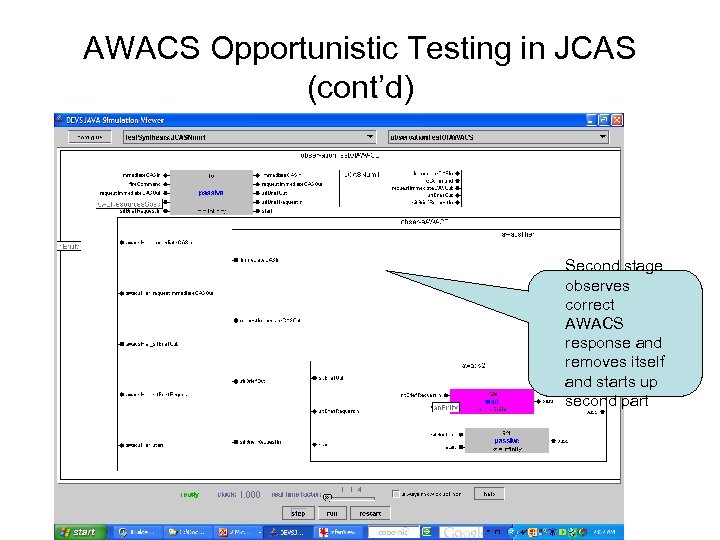

AWACS Opportunistic Testing in JCAS (cont’d)
• The second stage observes the correct AWACS response, removes itself, and starts up the second part

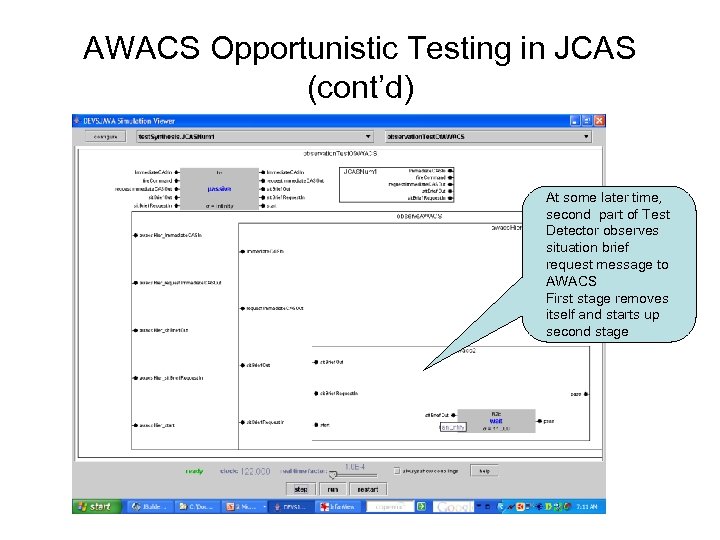

AWACS Opportunistic Testing in JCAS (cont’d)
• At some later time, the second part of the Test Detector observes the situation brief request message to AWACS
• Its first stage removes itself and starts up its second stage

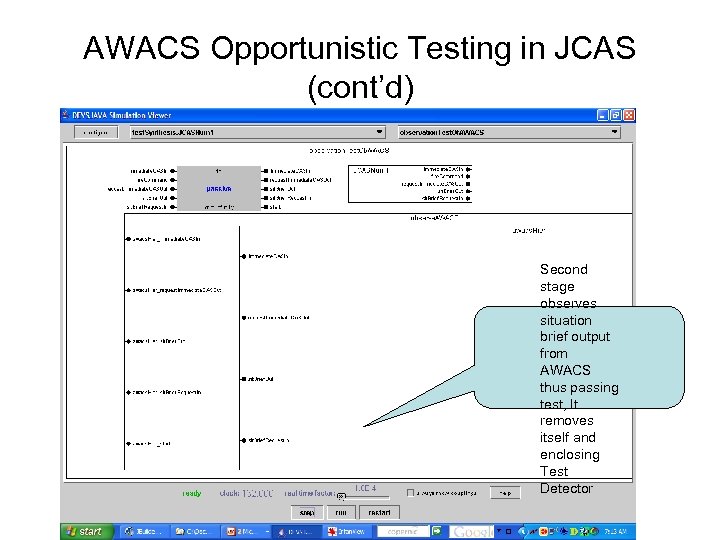

AWACS Opportunistic Testing in JCAS (cont’d)
• The second stage observes the situation brief output from AWACS, thus passing the test; it removes itself and the enclosing Test Detector

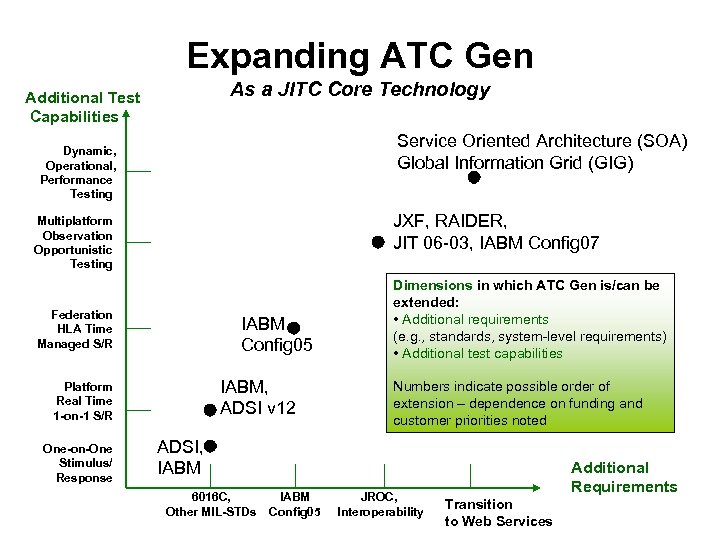

Expanding ATC Gen As a JITC Core Technology
Dimensions in which ATC Gen is/can be extended:
• Additional requirements (e.g., standards, system-level requirements)
• Additional test capabilities
[Figure: a chart of possible extensions. Numbers indicate a possible order of extension, dependent on funding and customer priorities.
Test capabilities shown: One-on-One Stimulus/Response (Platform, Real Time 1-on-1 S/R); Federation (HLA Time Managed S/R); Multiplatform Observation (Opportunistic Testing); Dynamic, Operational, Performance Testing (Service Oriented Architecture (SOA), Global Information Grid (GIG)).
Requirements shown: 6016C and other MIL-STDs; ADSI; IABM; JROC, Interoperability; Transition to Web Services.
Programs shown: IABM Config 05, IABM Config 07, ADSI v12, JXF, RAIDER, JIT 06-03.]

Summary
• Systems theory and DEVS were critical to the successful development of the automated test case generator
• ATC-Gen is being integrated into JITC testing as a core technology
• The role of ACIMS at JITC is expanding to web services and data engineering
• Model continuity and mixed virtual/real environments support an integrated development and testing process
