
- Number of slides: 20

Simplifying Parallel Programming with Incomplete Parallel Languages
Laxmikant Kale
Computer Science
http://charm.cs.uiuc.edu


Requirements
• Composability and interoperability
• Respect for locality
• Dealing with heterogeneity
• Dealing with the memory wall
• Dealing with dynamic resource variation
  – E.g., a machine running 2 parallel apps on 64 cores needs to run a third one
  – Shrink and expand the sets of cores assigned to a job
• Dealing with static resource variation
  – I.e., a parallel app should run unchanged on the next-generation manycore with twice as many cores
• Above all: simplicity

August 2008, www.upcrc.illinois.edu


How to Simplify Parallel Programming
• The question to ask is: what to automate?
  – Not what can be done relatively easily by the programmer
    • E.g., deciding what to do in parallel
  – Strive for a good balance between the “system” and the programmer
    • The balance evolves toward more automation
• This talk:
  – A sequence of ideas, building on each other
  – Developed over a long period of time


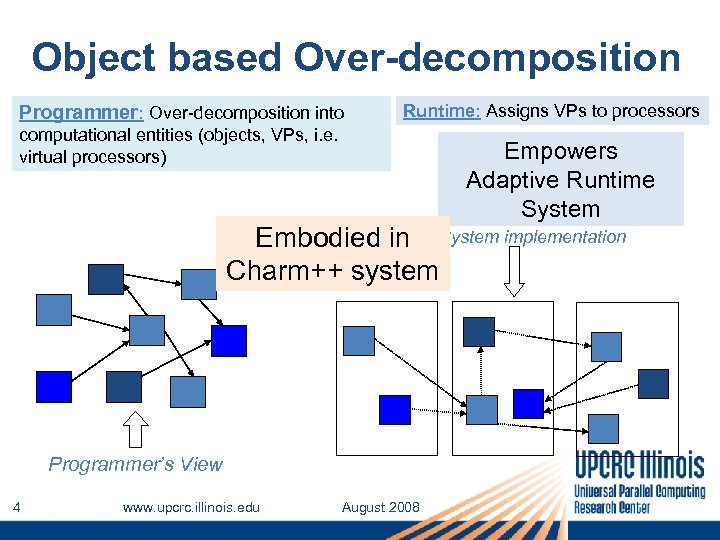

Object-based Over-decomposition
• Programmer: over-decomposition into computational entities (objects, VPs, i.e., virtual processors)
• Runtime: assigns VPs to processors
• Empowers the adaptive runtime system
• Embodied in the Charm++ system
[Figure: Programmer’s View vs. System implementation]
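The division of labor can be made concrete with a minimal C++ sketch. This is not the Charm++ API: `mapVPsToProcessors` is a hypothetical stand-in for the runtime's mapping step, and the round-robin policy is an assumption chosen for brevity (an adaptive runtime would also migrate VPs later). The programmer picks the number of VPs from the application's logic; only the runtime looks at the number of processors.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical runtime mapping step (not the Charm++ API).
// numVPs comes from the application's decomposition; numProcs from the machine.
// Round-robin placement is an assumed policy, used here only for illustration.
std::vector<std::size_t> mapVPsToProcessors(std::size_t numVPs,
                                            std::size_t numProcs) {
    assert(numProcs > 0);
    std::vector<std::size_t> home(numVPs);
    for (std::size_t vp = 0; vp < numVPs; ++vp) {
        home[vp] = vp % numProcs;  // VP number vp is hosted on processor vp mod P
    }
    return home;
}

// Count how many VPs each processor received under a mapping.
std::vector<std::size_t> vpsPerProcessor(const std::vector<std::size_t>& home,
                                         std::size_t numProcs) {
    std::vector<std::size_t> counts(numProcs, 0);
    for (std::size_t p : home) {
        ++counts[p];
    }
    return counts;
}
```

With 10 VPs on 4 processors, processors 0 and 1 host three VPs each and processors 2 and 3 host two; moving to 8 processors changes only `numProcs`, not the application's 10 objects.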


Some benefits of the Charm++ model
• Software engineering
  – Number of VPs to match application logic (not physical cores)
  – I.e., “programming model is independent of number of processors”
  – Separate VPs for different modules
• Dynamic mapping
  – Heterogeneity
    • Vacate, adjust to speed, share
  – Change the set of processors used
  – Dynamic load balancing
• Message-driven execution
  – Compositionality
  – Predictability
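Dynamic mapping is what lets the runtime rebalance load or shrink the set of processors. The sketch below uses a classic greedy heuristic (heaviest object first, onto the currently least-loaded processor) purely as an illustrative assumption; Charm++ has its own load-balancing strategies, and `greedyRemap`, `maxLoad`, and the per-object load vector are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <queue>
#include <utility>
#include <vector>

// Greedy remapping (illustrative, not a Charm++ strategy): place objects in
// order of decreasing measured load, each onto the least-loaded processor.
// Returns the processor chosen for each object.
std::vector<std::size_t> greedyRemap(const std::vector<double>& load,
                                     std::size_t numProcs) {
    assert(numProcs > 0);
    std::vector<std::size_t> order(load.size());
    std::iota(order.begin(), order.end(), 0);
    // Heaviest objects first; stable_sort keeps ties in index order.
    std::stable_sort(order.begin(), order.end(),
                     [&](std::size_t a, std::size_t b) { return load[a] > load[b]; });

    // Min-heap of (current load, processor id).
    using Entry = std::pair<double, std::size_t>;
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> procs;
    for (std::size_t p = 0; p < numProcs; ++p) procs.push(Entry(0.0, p));

    std::vector<std::size_t> home(load.size());
    for (std::size_t obj : order) {
        Entry least = procs.top();
        procs.pop();
        home[obj] = least.second;
        procs.push(Entry(least.first + load[obj], least.second));
    }
    return home;
}

// Largest per-processor load under a given mapping.
double maxLoad(const std::vector<double>& load,
               const std::vector<std::size_t>& home, std::size_t numProcs) {
    assert(numProcs > 0);
    std::vector<double> perProc(numProcs, 0.0);
    for (std::size_t i = 0; i < load.size(); ++i) perProc[home[i]] += load[i];
    return *std::max_element(perProc.begin(), perProc.end());
}
```

Vacating a processor amounts to calling `greedyRemap` again with a smaller `numProcs`: the objects move, while the application code stays unchanged.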


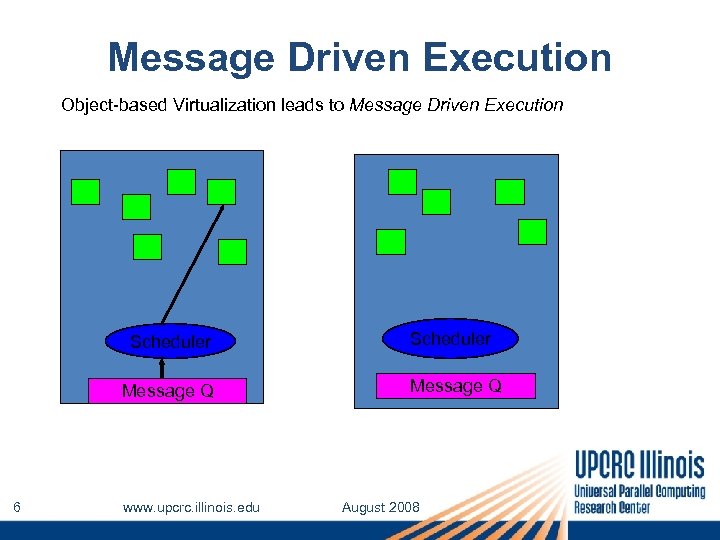

Message-Driven Execution
Object-based virtualization leads to message-driven execution.
[Figure: on each processor, a Scheduler picks messages from a Message Q]
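The scheduler-plus-message-queue picture can be sketched in a few lines of C++. This is a single-processor toy under stated assumptions, not Charm++'s scheduler: `Message`, `Scheduler`, and the `Counter` object are hypothetical. Each message packages an entry-method invocation on some object; the scheduler simply runs whichever message is next, so no object blocks waiting for data.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// A message: an entry-method invocation on some object, packaged as a callable.
struct Message {
    std::string tag;                // recorded in the trace, for illustration
    std::function<void()> deliver;  // runs the entry method on its target object
};

// One scheduler per processor: repeatedly take the next message and run it.
class Scheduler {
public:
    void send(Message m) { queue_.push_back(std::move(m)); }

    // Run until the queue is empty; entry methods may enqueue more messages.
    std::vector<std::string> run() {
        std::vector<std::string> trace;
        while (!queue_.empty()) {
            Message m = std::move(queue_.front());
            queue_.pop_front();
            trace.push_back(m.tag);
            m.deliver();
        }
        return trace;
    }

private:
    std::deque<Message> queue_;
};

// Hypothetical object whose entry method sends itself another message
// until its count runs out.
struct Counter {
    Scheduler* sched;
    std::string name;
    int remaining;
    void tick() {  // entry method
        if (--remaining > 0) {
            sched->send({name, [this] { tick(); }});
        }
    }
};
```

Seeding the queue with one message for a `Counter` named A (count 2) and one for B (count 3) yields the trace A, B, A, B, B: the two objects, which could just as well belong to different modules, interleave on one processor without either explicitly yielding to the other.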


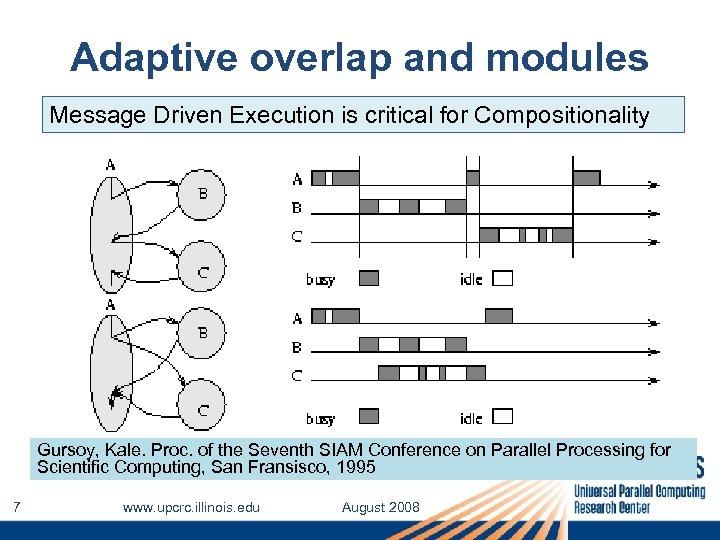

Adaptive Overlap and Modules
Message-driven execution is critical for compositionality.
Gursoy, Kale. Proc. of the Seventh SIAM Conference on Parallel Processing for Scientific Computing, San Francisco, 1995


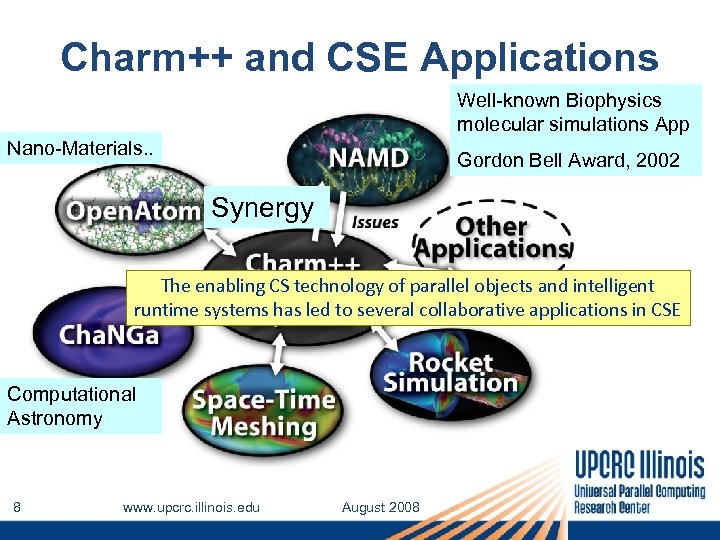

Charm++ and CSE Applications
Synergy: the enabling CS technology of parallel objects and intelligent runtime systems has led to several collaborative applications in CSE:
• A well-known biophysics molecular simulations app (Gordon Bell Award, 2002)
• Nano-materials
• Computational astronomy


CSE to ManyCore
• The Charm++ model has succeeded in CSE/HPC
  – 15% of cycles at NCSA, and 20% at PSC, were used on Charm++ apps in a one-year period
• Because of:
  – Resource management, …
• In spite of:
  – Being based on C++, not Fortran; the message-driven model; …
• But it is an even better fit for desktop programmers
  – C++, event-driven execution
  – Predictability of data/code accesses


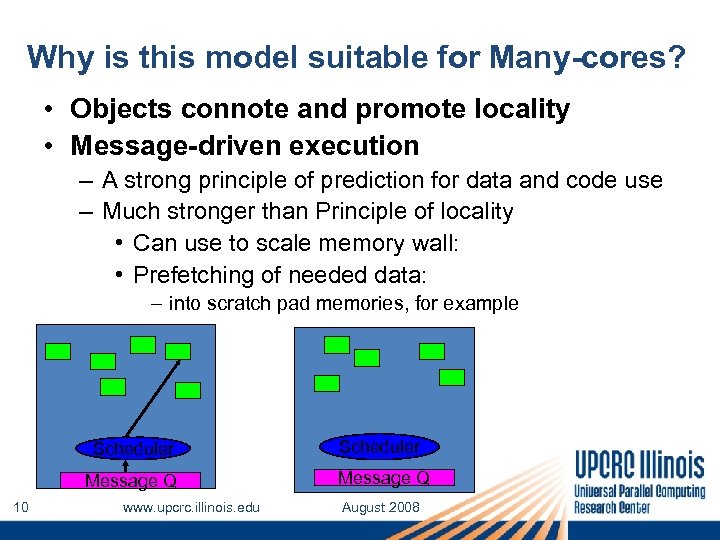

Why is this model suitable for many-cores?
• Objects connote and promote locality
• Message-driven execution
  – A strong principle of prediction for data and code use
  – Much stronger than the principle of locality
    • Can be used to scale the memory wall
    • Prefetching of needed data, e.g., into scratch-pad memories
[Figure: per-processor Scheduler and Message Q]


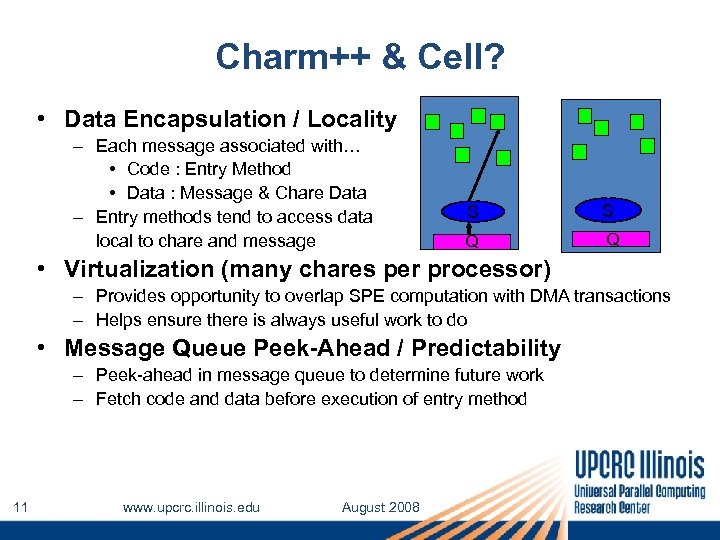

Charm++ & Cell?
• Data encapsulation / locality
  – Each message is associated with:
    • Code: entry method
    • Data: message & chare data
  – Entry methods tend to access data local to the chare and message
• Virtualization (many chares per processor)
  – Provides opportunity to overlap SPE computation with DMA transactions
  – Helps ensure there is always useful work to do
• Message queue peek-ahead / predictability
  – Peek ahead in the message queue to determine future work
  – Fetch code and data before execution of the entry method
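Peek-ahead can be illustrated with a scheduler that, before running one message, starts fetching the data needed by the next one. The sketch is a toy built on assumptions: `PeekAheadScheduler` and `QueuedMsg` are hypothetical, and `prefetch` only logs which object's data would be fetched, standing in for an asynchronous DMA into an SPE's local store.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <vector>

// A queued message names the object (chare) whose data its entry method uses.
struct QueuedMsg {
    std::size_t targetObject;
};

// Toy scheduler with one-message peek-ahead (illustrative only).
class PeekAheadScheduler {
public:
    void send(QueuedMsg m) { queue_.push_back(m); }

    // Returns an event log of "fetch k" and "run k" entries.
    std::vector<std::string> run() {
        std::vector<std::string> log;
        if (!queue_.empty()) prefetch(queue_.front().targetObject, log);
        while (!queue_.empty()) {
            QueuedMsg current = queue_.front();
            queue_.pop_front();
            // Peek ahead: issue the fetch for the next message's data before
            // running the current one, so the transfer can overlap computation.
            if (!queue_.empty()) prefetch(queue_.front().targetObject, log);
            log.push_back("run " + std::to_string(current.targetObject));
        }
        return log;
    }

private:
    // Stand-in for an asynchronous fetch (e.g., a DMA into local store).
    void prefetch(std::size_t obj, std::vector<std::string>& log) {
        log.push_back("fetch " + std::to_string(obj));
    }

    std::deque<QueuedMsg> queue_;
};
```

For messages to objects 3, 7 and 1, the log reads fetch 3, fetch 7, run 3, fetch 1, run 7, run 1: each fetch is issued one step before its run. With many chares per processor there is usually a next message to prefetch for, which is the virtualization benefit the slide lists.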


So, I expect Charm++ to be a strong contender for manycore models.
BUT: what about the quest for simplicity?
Charm++ is powerful, but not much simpler than, say, MPI.


How to Attain Simplicity?
• Parallel programming is much too complex
  – In part because of resource management issues:
    • Handled by adaptive runtime systems
  – In part because of lack of support for common patterns
  – In a larger part, because of unintended nondeterminacy
    • Race conditions
• Clearly, we need simple models
  – But what are we willing to give up? (No free lunch)
  – Give up “completeness”?!
  – Maybe one can design a language that is simple to use, but not expressive enough to capture all needs


Incomplete Models?
• A collection of “incomplete” languages, backed by a (few) complete ones, will do the trick
  – As long as they are interoperable, and
  – support parallel composition
• Recall:
  – Message-driven objects promote exactly such interoperability
  – Different modules, written in different languages/paradigms, can overlap in time and on processors, without the programmer having to worry about this explicitly


Simplicity
• Where does simplicity come from?
  – Support common patterns of parallel behavior
  – Outlaw non-determinacy!
  – Deterministic, simple, parallel programming models
    • With Marc Snir, Vikram Adve, …
  – Are there examples of such paradigms?
    • Multiphase Shared Arrays: [LCPC ’04]
    • Charisma++: [LCR ’04, HPDC ’07]


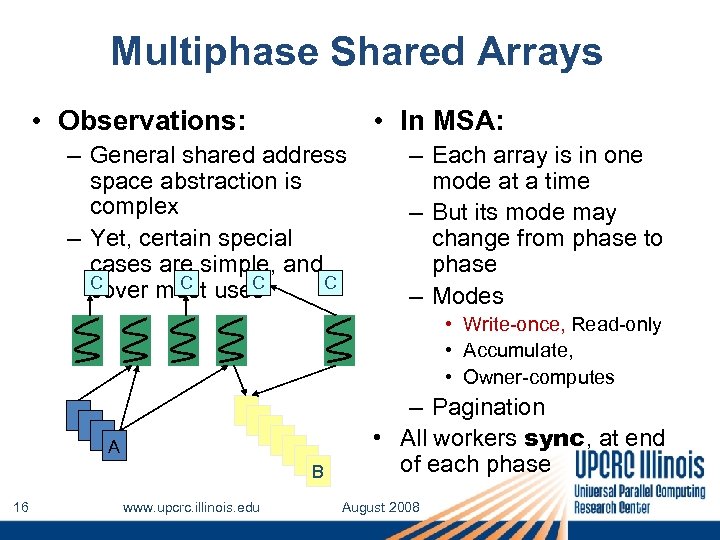

Multiphase Shared Arrays
• Observations:
  – A general shared address space abstraction is complex
  – Yet certain special cases are simple, and cover most uses
• In MSA:
  – Each array is in one mode at a time
  – But its mode may change from phase to phase
  – Modes:
    • Write-once, Read-only
    • Accumulate
    • Owner-computes
  – Pagination
  – All workers sync at the end of each phase
[Figure: arrays A, B and C]
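The one-mode-per-phase discipline can be sketched as a small single-process C++ class. This is not the MSA library's interface: `PhasedArray`, its `Mode` values, and `sync(next)` are hypothetical names chosen to mirror the slide, and owner-computes mode and pagination are left out. Reads are allowed only in read-only mode, each element may be written at most once in write-once mode, accumulate mode permits only additive updates, and `sync` ends the phase and sets the next mode.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

enum class Mode { ReadOnly, WriteOnce, Accumulate };

// Hypothetical phase-moded array; single process, for illustration only.
class PhasedArray {
public:
    PhasedArray(std::size_t n, Mode initial)
        : data_(n, 0.0), written_(n, false), mode_(initial) {}

    double read(std::size_t i) const {
        if (mode_ != Mode::ReadOnly) throw std::logic_error("read outside ReadOnly phase");
        return data_.at(i);
    }

    void write(std::size_t i, double v) {
        if (mode_ != Mode::WriteOnce) throw std::logic_error("write outside WriteOnce phase");
        if (written_.at(i)) throw std::logic_error("element written twice in one phase");
        written_[i] = true;
        data_[i] = v;
    }

    void accumulate(std::size_t i, double v) {
        if (mode_ != Mode::Accumulate) throw std::logic_error("accumulate outside Accumulate phase");
        data_.at(i) += v;  // only additive updates are permitted in this phase
    }

    // End of phase: in the real model, all workers would synchronize here.
    void sync(Mode next) {
        mode_ = next;
        written_.assign(written_.size(), false);
    }

    Mode mode() const { return mode_; }

private:
    std::vector<double> data_;
    std::vector<bool> written_;
    Mode mode_;
};
```

Because every access is checked against the current phase's mode, a second write to the same element, or an accumulate during a read-only phase, is reported as an error instead of silently producing an order-dependent result.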


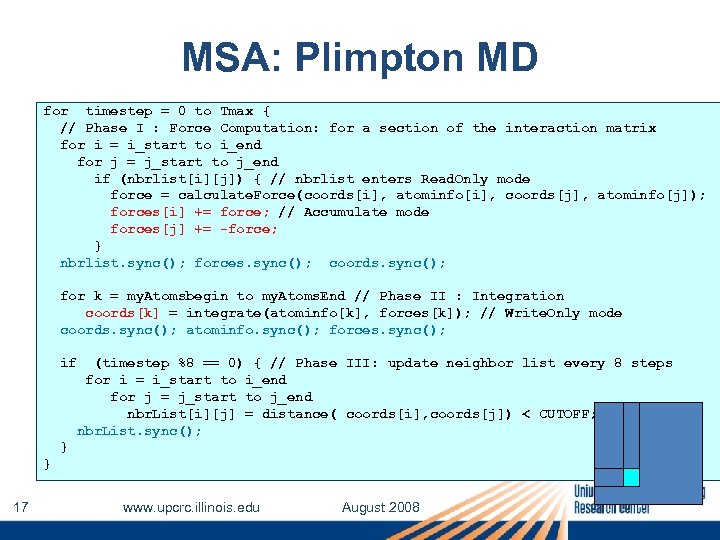

MSA: Plimpton MD

    for timestep = 0 to Tmax {
      // Phase I: force computation, for a section of the interaction matrix
      for i = i_start to i_end
        for j = j_start to j_end
          if (nbrlist[i][j]) {                 // nbrlist enters ReadOnly mode
            force = calculateForce(coords[i], atominfo[i], coords[j], atominfo[j]);
            forces[i] += force;                // Accumulate mode
            forces[j] += -force;
          }
      nbrlist.sync(); forces.sync(); coords.sync();

      // Phase II: integration
      for k = myAtomsBegin to myAtomsEnd
        coords[k] = integrate(atominfo[k], forces[k]);   // WriteOnly mode
      coords.sync(); atominfo.sync(); forces.sync();

      // Phase III: update neighbor list every 8 steps
      if (timestep % 8 == 0) {
        for i = i_start to i_end
          for j = j_start to j_end
            nbrlist[i][j] = distance(coords[i], coords[j]) < CUTOFF;
        nbrlist.sync();
      }
    }
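Phase I can be exercised on its own with a sequential C++ sketch. Plain `std::vector`s stand in for the MSA arrays, `calculateForce` here is a hypothetical 1D spring force with a different signature from the pseudocode's, and the neighbor list is assumed to mark each pair once (i < j). As in the pseudocode, each marked pair adds `+force` to atom i and `-force` to atom j, so the order in which row sections ("workers") run does not change the accumulated forces (exactly so in this small-integer example; in general floating-point sums may differ by rounding).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical pairwise force in 1D: a linear spring pulling atom i toward j.
double calculateForce(double xi, double xj) { return xj - xi; }

// Phase I for the section [iBegin, iEnd) x [jBegin, jEnd) of the interaction
// matrix. coords and nbrlist are only read (ReadOnly mode in MSA terms);
// forces is only added to (Accumulate mode).
void computeForces(const std::vector<double>& coords,
                   const std::vector<std::vector<bool>>& nbrlist,
                   std::size_t iBegin, std::size_t iEnd,
                   std::size_t jBegin, std::size_t jEnd,
                   std::vector<double>& forces) {
    for (std::size_t i = iBegin; i < iEnd; ++i) {
        for (std::size_t j = jBegin; j < jEnd; ++j) {
            if (nbrlist[i][j]) {
                double force = calculateForce(coords[i], coords[j]);
                forces[i] += force;   // equal and opposite contributions
                forces[j] += -force;
            }
        }
    }
}
```

With atoms at 0, 1 and 3 and all three pairs marked, processing rows [1, 3) before rows [0, 1) gives forces 4, 1 and -5, which sum to zero.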


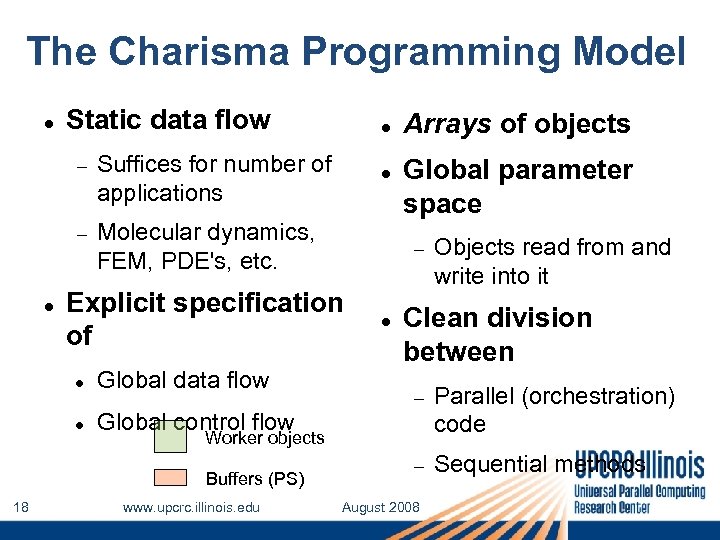

The Charisma Programming Model
• Static data flow
  – Suffices for a number of applications: molecular dynamics, FEM, PDEs, etc.
• Explicit specification of:
  – Global data flow
  – Global control flow
• Arrays of objects (worker objects)
• Global parameter space (PS)
  – Objects read from and write into it (buffers)
• Clean division between parallel (orchestration) code and sequential methods


Charisma++ example (Simple)

    while (e > threshold)
      forall i in J
        <+e, lb[i], rb[i]> := J[i].compute(rb[i-1], lb[i+1]);
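The meaning of that orchestration statement can be pinned down with a sequential C++ emulation. It rests on assumptions the slide does not state: each `J[i]` is a hypothetical `Worker` owning one unknown of a 1D Jacobi relaxation, `lb[i]` and `rb[i]` are the values it publishes to its left and right neighbors, the ends of the array see fixed boundary values, and every read in a sweep sees the previous sweep's parameters. It shows only the data flow the statement expresses, not how Charisma++ executes it in parallel.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Output of one compute() call: the tuple <+e, lb[i], rb[i]>.
struct ComputeResult {
    double e;   // contribution to the +e reduction
    double lb;  // value published on the left boundary
    double rb;  // value published on the right boundary
};

// Hypothetical worker object J[i]: owns one unknown of a 1D Jacobi relaxation.
struct Worker {
    double x = 0.0;
    ComputeResult compute(double leftNeighborRb, double rightNeighborLb) {
        double next = 0.5 * (leftNeighborRb + rightNeighborLb);
        double change = std::fabs(next - x);
        x = next;
        return {change, x, x};
    }
};

// Sequential emulation of:
//   while (e > threshold)
//     forall i in J
//       <+e, lb[i], rb[i]> := J[i].compute(rb[i-1], lb[i+1]);
// All calls in a sweep read parameters produced by the previous sweep, so the
// order of the forall iterations cannot affect the result.
// leftBoundary and rightBoundary stand in for rb[-1] and lb[n].
std::size_t runCharismaLoop(std::vector<Worker>& J, double leftBoundary,
                            double rightBoundary, double threshold) {
    const std::size_t n = J.size();
    assert(n > 0);
    std::vector<double> lb(n, 0.0), rb(n, 0.0);
    double e = threshold + 1.0;  // force at least one sweep
    std::size_t sweeps = 0;
    while (e > threshold) {
        std::vector<double> newLb(n), newRb(n);
        e = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            double left = (i == 0) ? leftBoundary : rb[i - 1];
            double right = (i + 1 == n) ? rightBoundary : lb[i + 1];
            ComputeResult r = J[i].compute(left, right);
            e += r.e;  // the "+e" sum reduction
            newLb[i] = r.lb;
            newRb[i] = r.rb;
        }
        lb.swap(newLb);  // publish this sweep's parameters
        rb.swap(newRb);
        ++sweeps;
    }
    return sweeps;
}
```

With four workers and boundary values 0 and 1, the loop settles near 0.2, 0.4, 0.6 and 0.8, the linear profile a 1D Jacobi relaxation converges to.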


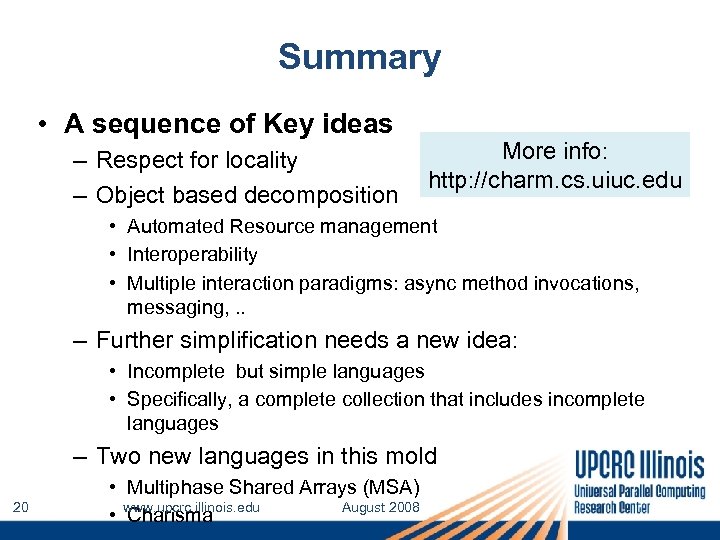

Summary
• A sequence of key ideas
  – Respect for locality
  – Object-based decomposition
    • Automated resource management
    • Interoperability
    • Multiple interaction paradigms: async method invocations, messaging, …
  – Further simplification needs a new idea:
    • Incomplete but simple languages
    • Specifically, a complete collection that includes incomplete languages
  – Two new languages in this mold:
    • Multiphase Shared Arrays (MSA)
    • Charisma
More info: http://charm.cs.uiuc.edu
