- Number of slides: 32

Similarity & Recommendation

Arjen P. de Vries, arjen@cwi.nl

CWI Scientific Meeting, September 27th, 2013

Recommendation

- Informally: search for information “without a query”
- Three types:
  - Content-based recommendation
  - Collaborative filtering (CF)
    - Memory-based
    - Model-based
  - Hybrid approaches

Recommendation

- Informally: search for information “without a query”
- Three types:
  - Content-based recommendation
  - Collaborative filtering
    - Memory-based
    - Model-based
  - Hybrid approaches

Today’s focus!

Collaborative Filtering

- Collaborative filtering (originally introduced by Pattie Maes as “social information filtering”):
  1. Compare user judgments
  2. Recommend differences between similar users
- Leading principle: people’s tastes are not randomly distributed
  - A.k.a. “You are what you buy”
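
The two steps on this slide can be sketched in a few lines. This is only an illustration under assumptions that are not in the talk: judgments are reduced to sets of liked items, users are compared with Jaccard overlap, and the names `jaccard`, `recommend_differences` and the toy data are hypothetical.

```python
def jaccard(a, b):
    """Overlap between two sets of liked items (0 = disjoint, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_differences(target, likes):
    """Step 1: compare judgments. Step 2: recommend what the most similar
    user liked that the target user has not judged yet."""
    others = [u for u in likes if u != target]
    nearest = max(others, key=lambda u: jaccard(likes[target], likes[u]))
    return nearest, sorted(likes[nearest] - likes[target])


# Toy data (assumed for illustration only).
likes = {
    "ann": {"jazz", "blues", "soul"},
    "bob": {"jazz", "blues", "funk"},
    "cat": {"metal", "punk"},
}
nearest, suggestions = recommend_differences("ann", likes)
print(nearest, suggestions)
```

Here the only user who shares tastes with "ann" is "bob", so the item "bob" liked and "ann" has not seen becomes the recommendation.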

Collaborative Filtering

- Benefits over the content-based approach:
  - Overcomes problems with finding suitable features to represent e.g. art, music
  - Serendipity
  - Implicit mechanism for qualitative aspects like style
- Problems: large groups, broad domains

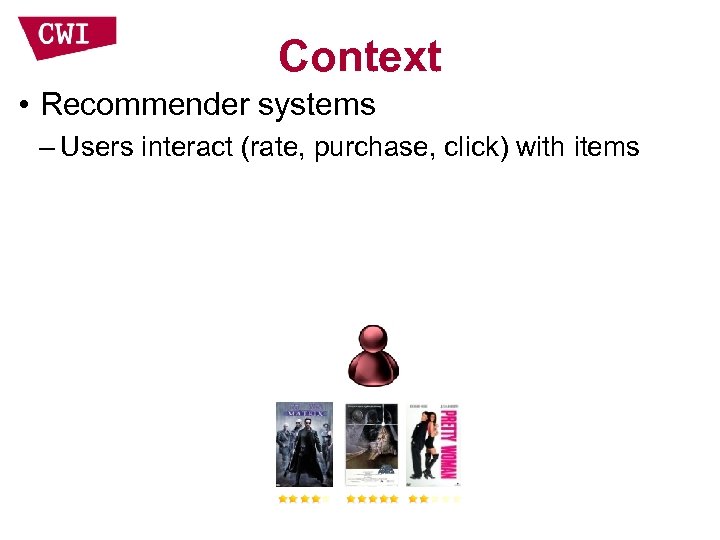

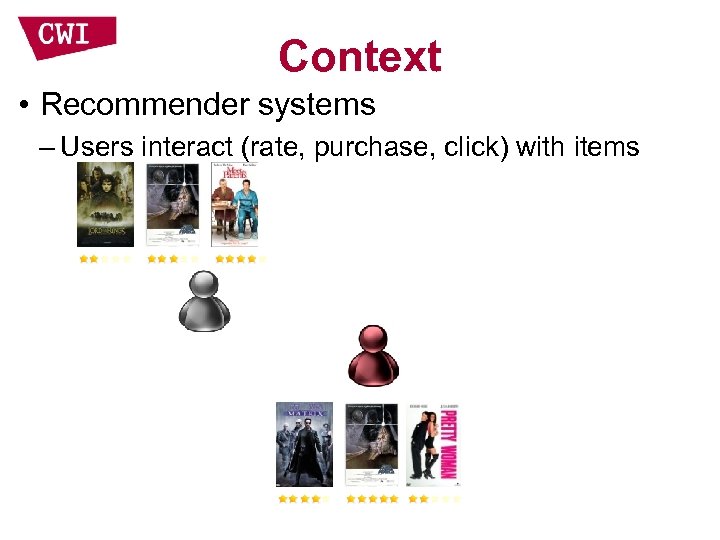

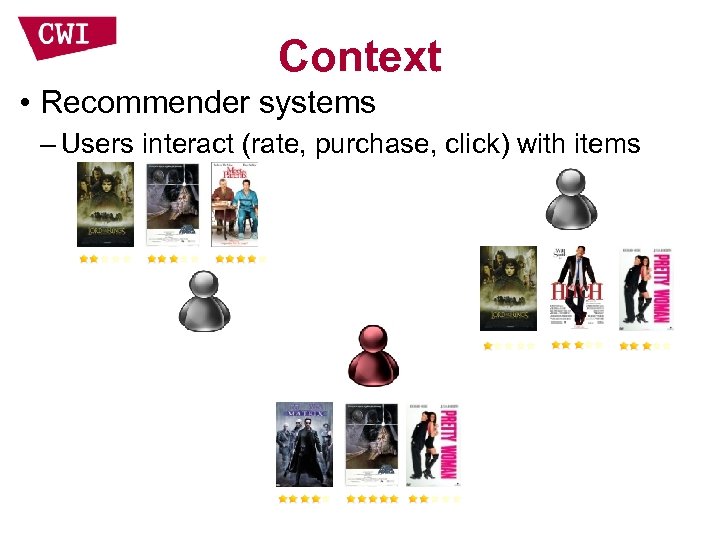

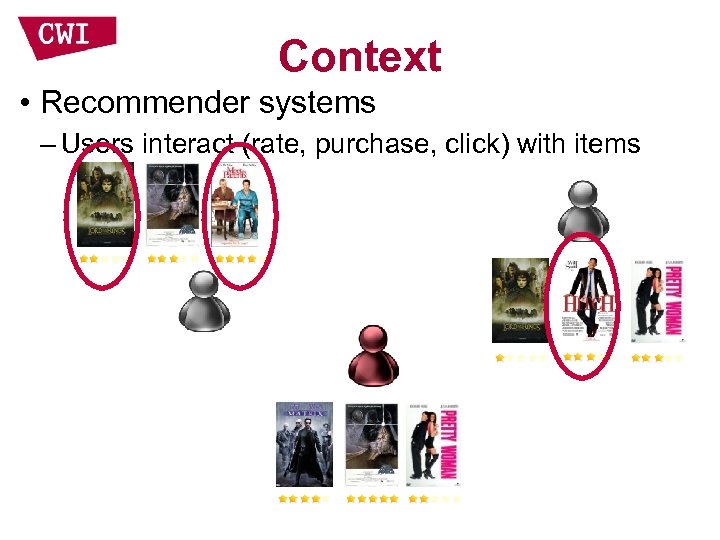

Context

- Recommender systems
  - Users interact (rate, purchase, click) with items

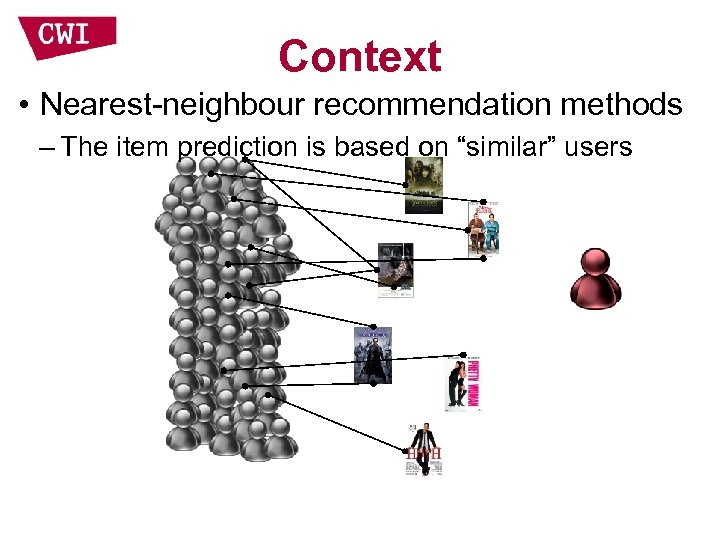

Context

- Nearest-neighbour recommendation methods
  - The item prediction is based on “similar” users

Similarity

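Similarity

$$s(u, i) = \sum_{v \in N(u)} \mathrm{sim}(u, v)\, s(v, i)$$

where $u$ is the target user, $i$ an item, and $N(u)$ the neighbourhood of users $v$ similar to $u$.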
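
The slide's formula scores an item for a user as a similarity-weighted sum of the scores given by similar users. A minimal sketch of that idea follows, assuming sparse rating dictionaries, cosine as the similarity, and a top-k neighbourhood; the function names, the parameter `k` and the toy ratings are illustrative assumptions, not taken from the talk.

```python
import math


def cosine(a, b):
    """Cosine similarity between two sparse rating dicts (item -> rating)."""
    dot = sum(a[i] * b[i] for i in a.keys() & b.keys())
    norm = math.sqrt(sum(x * x for x in a.values())) * math.sqrt(
        sum(x * x for x in b.values())
    )
    return dot / norm if norm else 0.0


def score(user, item, ratings, k=2, sim=cosine):
    """Similarity-weighted sum of the ratings that the k most similar
    users gave to `item` (neighbours without a rating contribute nothing)."""
    sims = {v: sim(ratings[user], ratings[v]) for v in ratings if v != user}
    neighbours = sorted(sims, key=sims.get, reverse=True)[:k]
    return sum(sims[v] * ratings[v][item] for v in neighbours if item in ratings[v])


# Toy ratings (assumed for illustration only).
ratings = {
    "u1": {"a": 5, "b": 3},
    "u2": {"a": 5, "b": 3, "c": 4},
    "u3": {"a": 1, "b": 5, "c": 2},
    "u4": {"d": 5},
}
predicted = score("u1", "c", ratings, k=2)
print(round(predicted, 3))
```

The `sim` argument is deliberately pluggable: swapping in a different similarity function changes which users end up in the neighbourhood and how much each one counts.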

Research Question

- How does the choice of similarity measure determine the quality of the recommendations?

Sparseness

- Too many items exist, so many ratings will be missing
- A user’s neighbourhood is likely to extend to include “not-so-similar” users and/or items

“Best” similarity?

- Consider cosine similarity vs. Pearson similarity
- Most existing studies report that Pearson correlation leads to superior recommendation accuracy
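
To make the contrast concrete, here is a small sketch of both measures on sparse rating dictionaries. It assumes one common variant of each: cosine over full profiles with unrated items counted as zero, and Pearson over co-rated items with means taken over those items. Function names and data are illustrative.

```python
import math


def cosine_sim(a, b):
    """Cosine over full profiles; items rated by only one user add nothing to the dot product."""
    dot = sum(a[i] * b[i] for i in a.keys() & b.keys())
    na = math.sqrt(sum(x * x for x in a.values()))
    nb = math.sqrt(sum(x * x for x in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def pearson_sim(a, b):
    """Pearson correlation over co-rated items, centred on each user's mean over those items."""
    common = sorted(a.keys() & b.keys())
    if len(common) < 2:
        return 0.0
    mean_a = sum(a[i] for i in common) / len(common)
    mean_b = sum(b[i] for i in common) / len(common)
    cov = sum((a[i] - mean_a) * (b[i] - mean_b) for i in common)
    var_a = sum((a[i] - mean_a) ** 2 for i in common)
    var_b = sum((b[i] - mean_b) ** 2 for i in common)
    return cov / math.sqrt(var_a * var_b) if var_a and var_b else 0.0


# Two users who disagree about which item is best (assumed toy data).
alice = {"x": 4, "y": 5, "z": 4}
bob = {"x": 2, "y": 1, "z": 2}
print(cosine_sim(alice, bob), pearson_sim(alice, bob))
```

On this pair cosine is high because all ratings are positive, while Pearson is strongly negative because the users' preferences move in opposite directions once each user's mean is removed.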

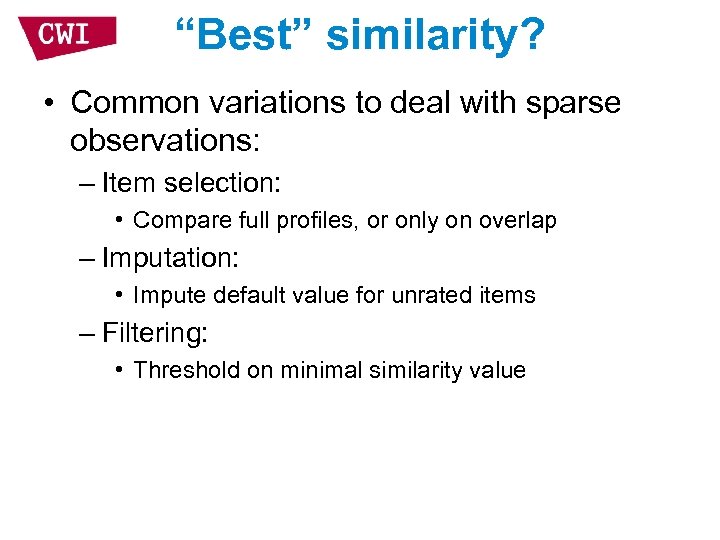

“Best” similarity?

- Common variations to deal with sparse observations:
  - Item selection: compare full profiles, or only the overlap
  - Imputation: impute a default value for unrated items
  - Filtering: threshold on a minimal similarity value
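
The three variations can be expressed as switches on a single similarity routine. The sketch below uses cosine as the base measure; the parameter names `overlap_only`, `default` and `min_sim`, and the toy profiles, are assumptions made for illustration.

```python
import math


def cosine_variant(a, b, overlap_only=False, default=None):
    """Cosine similarity with two of the variations:
    - overlap_only: compare only co-rated items instead of full profiles
    - default: value imputed for items a user has not rated (0 if None)
    """
    items = (a.keys() & b.keys()) if overlap_only else (a.keys() | b.keys())
    fill = 0.0 if default is None else float(default)
    va = [a.get(i, fill) for i in items]
    vb = [b.get(i, fill) for i in items]
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(y * y for y in vb))
    return dot / norm if norm else 0.0


def filter_neighbours(sims, min_sim):
    """Third variation: keep only neighbours whose similarity reaches the threshold."""
    return {v: s for v, s in sims.items() if s >= min_sim}


# Toy profiles with one co-rated item (assumed for illustration).
a = {"x": 5, "y": 4}
b = {"x": 5, "z": 1}
full = cosine_variant(a, b)
overlap = cosine_variant(a, b, overlap_only=True)
imputed = cosine_variant(a, b, default=3)
print(full, overlap, imputed)
print(filter_neighbours({"b": full, "c": 0.1}, min_sim=0.5))
```

The same pair of users receives three different similarity values depending on the setting, which is one reason why reported comparisons between measures are hard to reconcile.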

“Best” similarity?

- Cosine superior (!), but not for all settings
  - No consistent results

Analysis

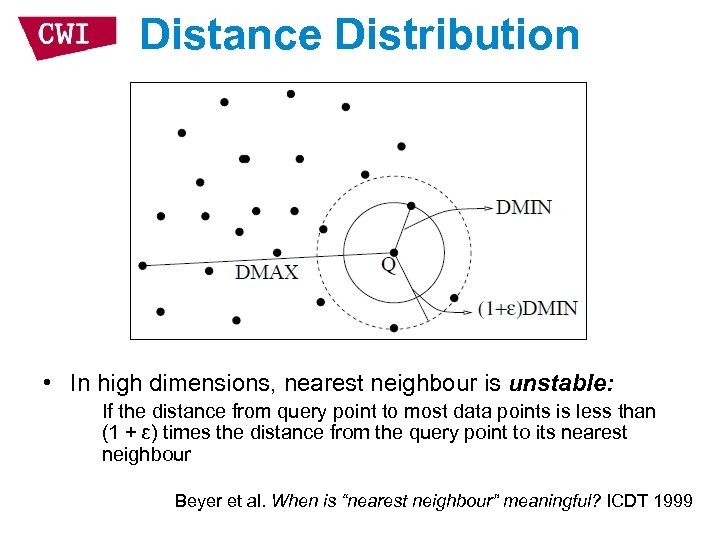

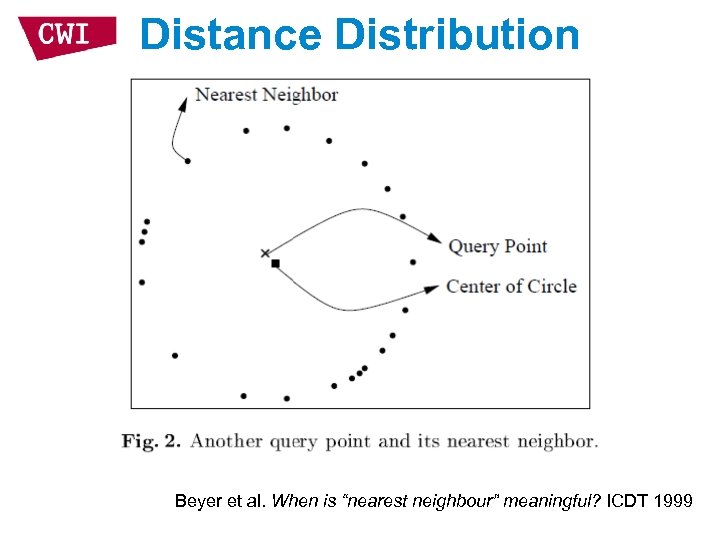

Distance Distribution

- In high dimensions, nearest neighbour is unstable: a query is unstable if the distance from the query point to most data points is less than (1 + ε) times the distance from the query point to its nearest neighbour

Beyer et al. When is “nearest neighbour” meaningful? ICDT 1999
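
The instability condition is easy to observe on synthetic data. The following sketch measures, for one random query, the fraction of points that lie within (1 + ε) times the nearest-neighbour distance. Uniform random points in the unit cube, Euclidean distance, and the chosen sizes are assumptions for illustration only; they are not the data used in the talk.

```python
import math
import random


def unstable_fraction(dim, n_points=200, eps=0.5, seed=0):
    """Fraction of data points whose distance to a random query is less than
    (1 + eps) times the distance from the query to its nearest neighbour."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    nearest = min(dists)
    return sum(d < (1 + eps) * nearest for d in dists) / n_points


low = unstable_fraction(dim=2)
high = unstable_fraction(dim=500)
print(low, high)
```

In two dimensions only a handful of points fall inside the (1 + ε) radius, whereas in 500 dimensions distances concentrate so strongly that nearly every point does, which is the sense in which the nearest neighbour stops being distinctive.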

Distance Distribution

Beyer et al. When is “nearest neighbour” meaningful? ICDT 1999

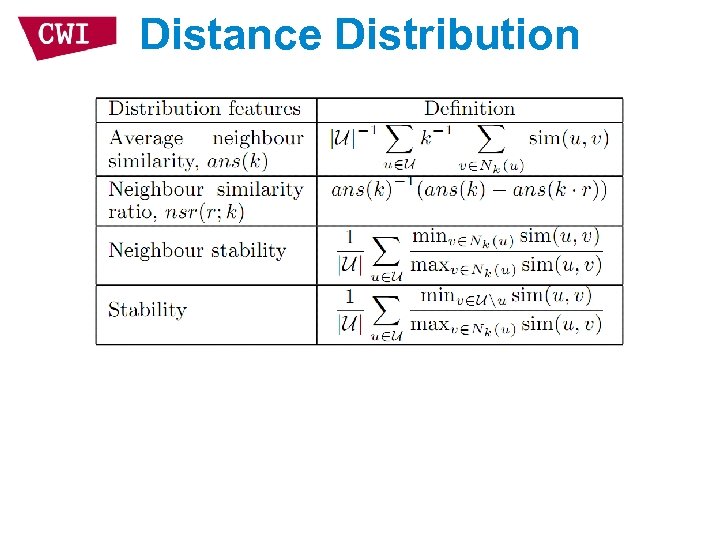

Distance Distribution

- Quality q(n, f): fraction of users for which the similarity function has ranked at least n percent of the user community within a factor f of the nearest neighbour’s similarity value (or rather, its corresponding distance)
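
A possible reading of q(n, f) in code is given below. It assumes that a similarity is turned into a distance as `1 - sim` and that "within a factor f" means `distance <= f * nearest_distance`; both choices, along with the helper `symmetric` and the toy similarities, are assumptions rather than the definition used in the underlying study.

```python
def symmetric(pairs):
    """Build a nested dict sim[u][v] from unordered user pairs."""
    matrix = {}
    for (u, v), s in pairs.items():
        matrix.setdefault(u, {})[v] = s
        matrix.setdefault(v, {})[u] = s
    return matrix


def quality(sim_matrix, n, f):
    """q(n, f): fraction of users for whom at least n percent of the other
    users lie within a factor f of the nearest neighbour's distance."""
    users = list(sim_matrix)
    hits = 0
    for u in users:
        dists = [1.0 - sim_matrix[u][v] for v in users if v != u]
        nearest = min(dists)
        within = sum(d <= f * nearest for d in dists)
        if 100.0 * within / len(dists) >= n:
            hits += 1
    return hits / len(users)


# Toy similarities (assumed for illustration only).
S = symmetric({
    ("a", "b"): 0.75, ("a", "c"): 0.5, ("a", "d"): 0.0,
    ("b", "c"): 0.5, ("b", "d"): 0.125, ("c", "d"): 0.25,
})
print(quality(S, n=100, f=3), quality(S, n=50, f=1))
```

A high q(n, f) for small f means that, for many users, a large share of the community is almost as close as the nearest neighbour, so the similarity function discriminates poorly.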

Distance Distribution

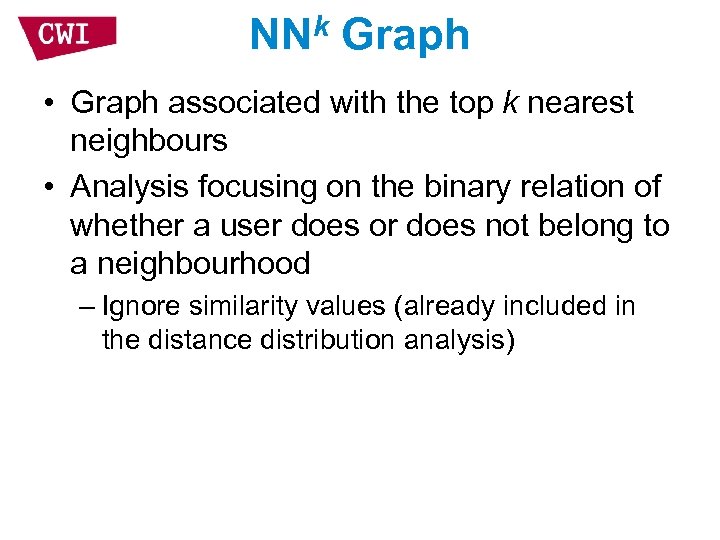

NNk Graph

- Graph associated with the top k nearest neighbours
- Analysis focuses on the binary relation of whether a user does or does not belong to a neighbourhood
  - Ignores similarity values (already included in the distance distribution analysis)
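
A sketch of how such a graph could be built: each user points to their top k most similar users, and the similarity values are then discarded. The in-degree computed afterwards is just one example of a purely structural feature; which graph features the analysis actually used is not stated on the slide, and the names and data here are assumptions.

```python
def nnk_graph(sim_matrix, k):
    """Directed graph: an edge u -> v exists when v is among u's k most
    similar users. Ties are broken by user name to keep the result stable."""
    graph = {}
    for u, sims in sim_matrix.items():
        ranked = sorted((v for v in sims if v != u), key=lambda v: (-sims[v], v))
        graph[u] = set(ranked[:k])
    return graph


def in_degree(graph):
    """Number of neighbourhoods each user belongs to."""
    counts = {u: 0 for u in graph}
    for neighbours in graph.values():
        for v in neighbours:
            counts[v] += 1
    return counts


# Toy similarities sim[u][v] (assumed for illustration only).
sim = {
    "a": {"b": 0.75, "c": 0.5, "d": 0.0},
    "b": {"a": 0.75, "c": 0.5, "d": 0.125},
    "c": {"a": 0.5, "b": 0.5, "d": 0.25},
    "d": {"a": 0.0, "b": 0.125, "c": 0.25},
}
graph = nnk_graph(sim, k=1)
print(graph)
print(in_degree(graph))
```

Note that the relation is not symmetric: "d" has "c" as nearest neighbour, but no user has "d" in their own neighbourhood.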

NNk Graph

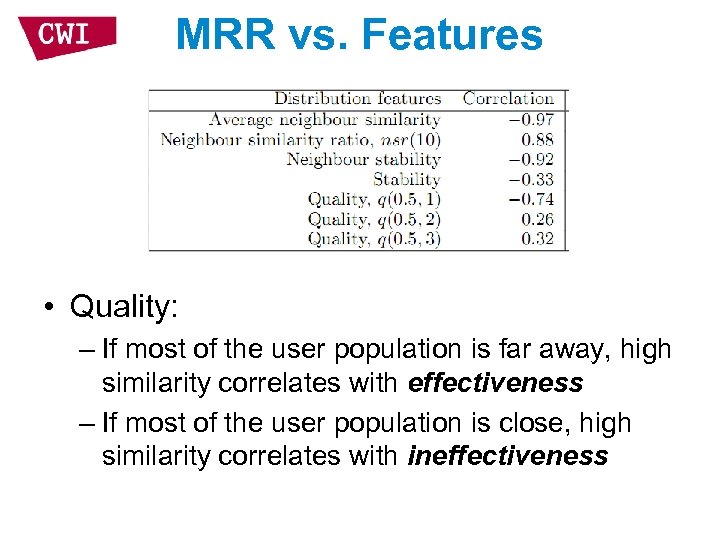

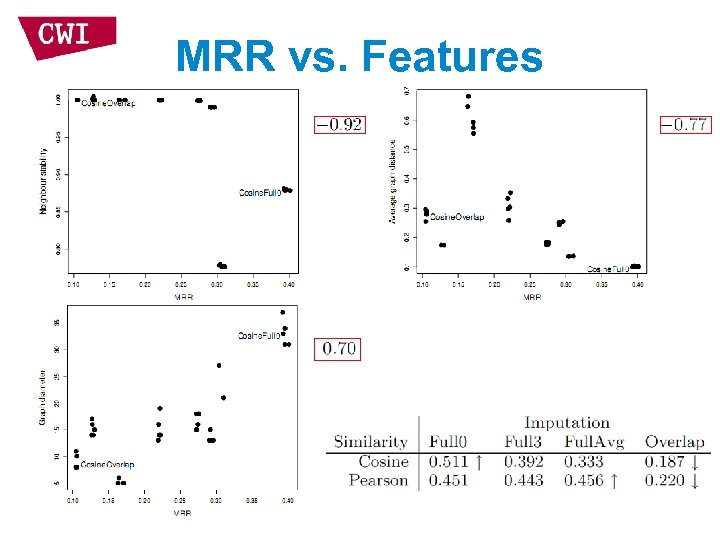

MRR vs. Features

- Quality:
  - If most of the user population is far away, high similarity correlates with effectiveness
  - If most of the user population is close, high similarity correlates with ineffectiveness
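
For reference, MRR (mean reciprocal rank) is the effectiveness measure in the slide title: for each user, take the reciprocal of the rank at which the first relevant item appears, then average over users. The sketch below follows that standard definition; the function name, data layout and toy rankings are assumptions.

```python
def mean_reciprocal_rank(rankings, relevant):
    """Average over users of 1 / (rank of the first relevant item);
    a user with no relevant item in the ranking contributes 0."""
    if not rankings:
        return 0.0
    total = 0.0
    for user, ranked in rankings.items():
        wanted = relevant.get(user, set())
        for position, item in enumerate(ranked, start=1):
            if item in wanted:
                total += 1.0 / position
                break
    return total / len(rankings)


# Toy recommendation lists and ground truth (assumed for illustration only).
rankings = {"u1": ["a", "b", "c"], "u2": ["b", "a", "c"], "u3": ["c", "a"]}
relevant = {"u1": {"a"}, "u2": {"a"}, "u3": {"z"}}
mrr = mean_reciprocal_rank(rankings, relevant)
print(mrr)
```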

MRR vs. Features

Conclusions (so far)

- “Similarity features” correlate with recommendation effectiveness
  - “Stability” of a metric (as defined in the database literature on k-NN search in high dimensions) is related to its ability to discriminate between good and bad neighbours

Future Work

- How can this knowledge be exploited to improve recommender systems?

News Recommendation Challenge

Thanks

- Alejandro Bellogín, ERCIM fellow in the Information Access group

Details: Bellogín and De Vries, ICTIR 2013.
