d34291457959721a4a88c4a4f100b973.ppt

- Number of slides: 41

Sharing Your Court’s Successes: The Importance of Program Evaluation and Data Entry
Kevin Baldwin, Ph.D.
Applied Research Services, Inc.
Atlanta, GA

Today’s Objectives
• Provide “Evaluation 101” - the basics
• Describe a generic logic model
• Use the logic model as a starting point to develop goals, objectives, and measurable indicators
• Develop a basic evaluation plan
• Discuss data, data systems, and tools
• Discuss importance of continuous evaluation, quality improvement

What is Evaluation?
• The systematic collection and analysis of information (data), often for the purpose of making decisions
• “eVALUEation – value is our middle name” – this implies that we are assigning worth to something

Why Evaluate?
• To answer critical questions about the court
• To document the court’s processes and demonstrate outcomes
• To assess Fidelity of Implementation
• To comply with funders’ mandates
• To provide information and feedback for Continuous Quality Improvement

Logic Models – An Indispensable Tool
• A logic model is a graphical representation of your program that describes what problems you are addressing, how you are addressing them, and what you hope to accomplish in the short, medium, and long term
• While it describes your program, it also serves as a basis for your evaluation, as it links what you are doing to what you hope to accomplish

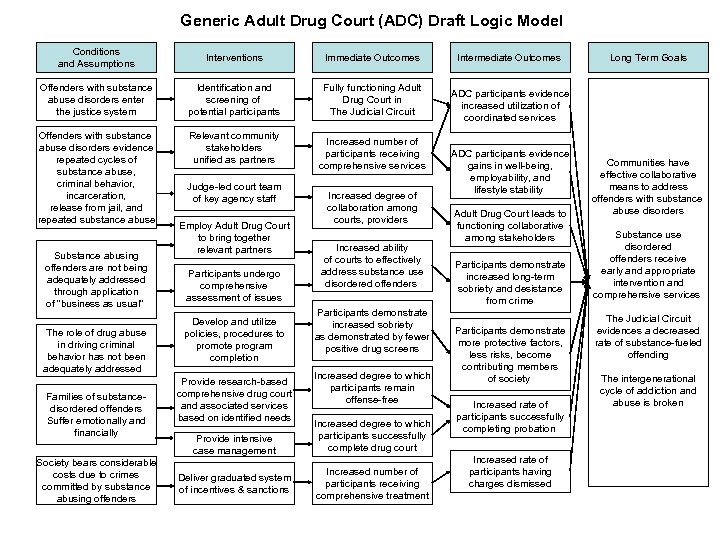

Generic Adult Drug Court (ADC) Draft Logic Model
Conditions and Assumptions | Interventions | Immediate Outcomes
• Offenders with substance abuse disorders enter the justice system
• Identification and screening of potential participants
• Fully functioning Adult Drug Court in The Judicial Circuit
• Offenders with substance abuse disorders evidence repeated cycles of substance abuse, criminal behavior, incarceration, release from jail, and repeated substance abuse
• Relevant community stakeholders unified as partners
• Substance abusing offenders are not being adequately addressed through application of “business as usual”
• The role of drug abuse in driving criminal behavior has not been adequately addressed
• Families of substance-disordered offenders suffer emotionally and financially
• Society bears considerable costs due to crimes committed by substance abusing offenders
• Judge-led court team of key agency staff
• Employ Adult Drug Court to bring together relevant partners
• Participants undergo comprehensive assessment of issues
• Develop and utilize policies, procedures to promote program completion
• Provide research-based comprehensive drug court and associated services based on identified needs
• Provide intensive case management
• Deliver graduated system of incentives & sanctions
• Increased number of participants receiving comprehensive services
• Increased degree of collaboration among courts, providers
• Increased ability of courts to effectively address substance use disordered offenders
• Participants demonstrate increased sobriety as demonstrated by fewer positive drug screens
• Increased degree to which participants remain offense-free
• Increased degree to which participants successfully complete drug court
• Increased number of participants receiving comprehensive treatment
Intermediate Outcomes | Long Term Goals
• ADC participants evidence increased utilization of coordinated services
• ADC participants evidence gains in well-being, employability, and lifestyle stability
• Adult Drug Court leads to functioning collaborative among stakeholders
• Participants demonstrate increased long-term sobriety and desistance from crime
• Participants demonstrate more protective factors, less risks, become contributing members of society
• Increased rate of participants successfully completing probation
• Increased rate of participants having charges dismissed
• Communities have effective collaborative means to address offenders with substance abuse disorders
• Substance use disordered offenders receive early and appropriate intervention and comprehensive services
• The Judicial Circuit evidences a decreased rate of substance-fueled offending
• The intergenerational cycle of addiction and abuse is broken

Measurable Indicators
• Using your logic model, develop a series of measurable indicators that are tied to your goals and objectives
• It’s not enough to say that “things will change” – we need to specify and measure what changes occur, in what direction, compared to whom, and in what amount

Evaluation Components
• Formative Evaluation – assesses the start-up activities of a program or initiative
• Process Evaluation – assesses the “who, what, where, when, how, and how much” associated with delivery of a program or initiative

Evaluation Components, cont.
• Fidelity of Implementation – assesses the degree to which a program or initiative is delivered as designed
• Outcome Evaluation – assesses the degree to which a program or initiative achieves its stated objectives – the “so what?” aspect

Evaluation Components, cont.
• Cost Benefit Analysis – assesses the costs of your program and compares them (typically per participant) to the cost of not having the program. Drug courts have typically demonstrated significant cost savings, which helps with sustainability.
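
The per-participant comparison described above can be sketched in a few lines of Python. This is only an illustration, not a full cost benefit method: the class name `CostInputs`, the function names, and every dollar figure are hypothetical and would be replaced by your court's own numbers.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    """Per-person cost figures. All values used below are hypothetical."""
    program_cost_per_participant: float  # e.g. treatment, drug testing, staff time
    alternative_cost_per_person: float   # cost of not having the program
    participants: int


def net_savings_per_participant(c: CostInputs) -> float:
    """Cost avoided per person minus what the program spends per person."""
    return c.alternative_cost_per_person - c.program_cost_per_participant


def total_net_savings(c: CostInputs) -> float:
    """Per-participant savings scaled to the whole caseload."""
    return net_savings_per_participant(c) * c.participants


def benefit_cost_ratio(c: CostInputs) -> float:
    """Dollars of avoided cost for each dollar spent on the program."""
    if c.program_cost_per_participant <= 0:
        raise ValueError("program cost per participant must be positive")
    return c.alternative_cost_per_person / c.program_cost_per_participant


# Made-up figures for illustration only.
example = CostInputs(
    program_cost_per_participant=6000.0,
    alternative_cost_per_person=15000.0,
    participants=40,
)
print(net_savings_per_participant(example))
print(total_net_savings(example))
print(benefit_cost_ratio(example))
```

A ratio above 1 means the avoided costs exceed program costs under the stated assumptions; the result is only as good as the cost estimates fed into it.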

Evaluation Data
Evaluation involves the systematic measurement and collection of data, the specific types of which depend on the types of questions you are asking and the types of evaluation components you have included in your evaluation plan.

Evaluation Data, cont.
• Basically, we need to measure certain meaningful things that can tell us how our program is working (process evaluation), whether it is working as designed (fidelity of implementation), whether it is having the desired effects (outcome evaluation), and at what relative cost (cost benefit analysis).

Evaluation Data, cont.
• The data to be collected derive directly from the questions we are asking. It helps therefore to craft questions of a directional nature:
  – Do graduates have reduced (↓) rates of recidivism?
  – Do participants have increased (↑) employment skills?

Evaluation Data, cont.
• However, we often cannot simply report that something increased or decreased. We need to say how much, and compared to whom. Therefore we need benchmarks and/or control and/or comparison groups.

Evaluation Data, cont.
Comparing our results to a benchmark means saying, for example, that our participants had a 5% positive urinalysis rate, compared with the 25% rate observed in non-participants.
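
As a rough sketch of that arithmetic, the Python below computes a positive-test rate and compares it with a benchmark. The 5% and 25% rates come from the slide; the test counts (10 positives out of 200 tests) and the function names are assumptions made up for the example.

```python
def positive_rate(positive_tests: int, total_tests: int) -> float:
    """Share of administered drug tests that came back positive."""
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    return positive_tests / total_tests


def compare_to_benchmark(rate: float, benchmark: float) -> dict:
    """Report how far a rate sits from a benchmark, in absolute and relative terms."""
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    difference = rate - benchmark
    return {
        "absolute_difference": difference,         # in proportion points
        "relative_change": difference / benchmark  # as a share of the benchmark
    }


# Hypothetical counts chosen so the rate matches the 5% on the slide.
participant_rate = positive_rate(positive_tests=10, total_tests=200)
result = compare_to_benchmark(participant_rate, benchmark=0.25)
print(f"participants: {participant_rate:.0%}")
print(f"difference vs. benchmark: {result['absolute_difference']:+.0%}")
print(f"relative change: {result['relative_change']:+.0%}")
```

Reporting both the absolute gap and the relative change answers the "how much, and compared to whom" question directly.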

Evaluation Data, cont.
A comparison group is a group of people similar to our intervention sample, but receiving some other type of intervention.
A control group is a group of people similar to our intervention sample, but receiving no intervention at all.

Evaluation Planning
• Your evaluation should be structured in such a way as to obtain a clear understanding of the court and its objectives from many perspectives
• Determine all potential audiences for your evaluation findings, and determine what they would like to know about the court

Evaluation Planning, cont.
• Develop a list of all potential questions to be answered by an evaluation
• Survey all means and methods currently in place to collect and report on data
• Match the list of questions to the list of data currently being collected, and identify the gaps
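
The matching step above amounts to a set difference: for each question, list the data it needs and subtract what is already collected. The sketch below assumes hypothetical field names and a hypothetical mapping from questions to fields; only the first two questions are taken from the slides.

```python
# Data elements each evaluation question would need (field names are hypothetical).
questions_to_data = {
    "Do graduates have reduced rates of recidivism?": {
        "graduation_date",
        "re_arrest_date",
    },
    "Do participants have increased employment skills?": {
        "employment_status_at_entry",
        "employment_status_at_exit",
    },
    "How many participants enrolled?": {"enrollment_date"},
}

# Data elements the court already records (also hypothetical).
collected = {"enrollment_date", "graduation_date", "employment_status_at_entry"}


def find_gaps(questions: dict, available: set) -> dict:
    """Return, per question, the needed data elements that are not yet collected."""
    gaps = {}
    for question, needed in questions.items():
        missing = needed - available
        if missing:
            gaps[question] = sorted(missing)
    return gaps


for question, missing in find_gaps(questions_to_data, collected).items():
    print(f"{question} -> missing: {', '.join(missing)}")
```

Questions that do not appear in the result can already be answered from existing data; the rest show where collection needs to start.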

Evaluation Planning, cont.
• Bring together a group of stakeholders interested in data and evaluation to form a data or evaluation team (also consider bringing on a professional evaluator)
• Develop an evaluation plan that tracks the logic model, addresses the court’s stated objectives, and answers stakeholders’ questions

Evaluation Planning, cont.
• A good evaluation plan describes:
  – what data is to be collected
  – when data is collected
  – in what format data is collected
  – where and how the data is obtained
  – who is responsible for collecting it

Evaluation Planning, cont.
• A good evaluation plan will also include an automated means of recording, storing, and reporting data. This is a critical but often overlooked aspect of evaluation. Paper-based systems are not sufficient.

The ’80s are calling – they want their paper-based data system back

Evaluation Planning, cont.
• Always be thinking of ways to measure, document, and communicate your impacts – the differences your program makes. What would your community look like if your program suddenly went away?

Internet-based Court Case Management Systems
• The state has asked courts to choose between two systems:
  – Connexis Cloud
  – FivePoint Solutions Accountability Court Case Management (ACCM)
• If you are not using one of these, use another electronic means of storing and retrieving your court and participant data

Internet-based Court Case Management Systems
• It is not enough to simply have one of these systems – you and your team members need to use the system to the point that you come to rely on it
• If you are reliant on the system, the data must adhere to the three Cs:
  – Complete
  – Current
  – Correct
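
One way to make the three Cs routine is a simple automated check run against exported records. The sketch below is an assumption-laden illustration: the field names, the 30-day freshness window, and the single plausibility rule are all hypothetical, and it does not use the API of any particular case management system. Note that "correct" can only be approximated by plausibility rules; true correctness still requires checking against source documents.

```python
from datetime import date, timedelta

# Hypothetical required fields for a participant record.
REQUIRED_FIELDS = ("participant_id", "enrollment_date", "last_updated")


def check_three_cs(record: dict, today: date, max_age_days: int = 30) -> dict:
    """Flag whether a record looks complete, current, and (plausibly) correct."""
    # Complete: every required field is present and non-empty.
    complete = all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)

    # Current: the record was updated within the allowed window.
    last_updated = record.get("last_updated")
    current = (
        isinstance(last_updated, date)
        and today - last_updated <= timedelta(days=max_age_days)
    )

    # Correct (plausibility only): enrollment cannot be in the future.
    enrollment = record.get("enrollment_date")
    correct = isinstance(enrollment, date) and enrollment <= today

    return {"complete": complete, "current": current, "correct": correct}


# Made-up record for illustration.
sample = {
    "participant_id": "P-001",
    "enrollment_date": date(2017, 1, 15),
    "last_updated": date(2017, 8, 20),
}
print(check_three_cs(sample, today=date(2017, 9, 1)))
```

Running a check like this on a schedule and reviewing the failures at team meetings keeps data problems small and visible.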

Internet-based Court Case Management Systems
GIGO (garbage in, garbage out)

The Importance of Fidelity
You could have the most powerful intervention ever devised, but it is worthless if it is not delivered as its developers intended. For example, Excedrin® works wonders for headaches, but not when applied directly to the forehead.

Fidelity in the Real World

Qualitative Data
• This refers to data that is generally non-numeric in nature. It is obtained from interviews, focus groups, observations, and other forms of interaction between and among people. Often this provides rich narrative “stories” that inform and expand upon numerical data.

Quantitative Data
• This refers to data that is numeric in nature. It is obtained by counting or measuring things or constructs, and is generally what people think of when they hear the word “data.” These data can be abstracted, and statistical tests can be applied to them to answer very specific questions.

Mixed Methods Design
• It is a good idea to collect a broad range of both qualitative and quantitative data; doing so is referred to as a mixed methods evaluation design. This provides the necessary numerical (“quantifiable”) data, while also providing the qualitative stories that people can really connect with.

Evaluation Data Examples
• Process data examples
  – Number of referrals
  – Number enrolled
  – Number of classes attended
  – Types of classes attended
  – Reasons for leaving (e.g., completion, dropped out)
  – Number of drug tests administered
  – Number of drug tests refused/failed

Evaluation Data Examples, cont.
• Outcome data examples
  – Number of graduates
  – Number of re-arrests
  – Percentage who relapse
  – Number of subsequent DFCS cases
  – Number of subsequent hospitalizations
  – Number of subsequent ER admissions
  – Days clean
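
A few of these outcome measures can be tallied directly from participant records. The sketch below assumes a deliberately simplified record layout with one yes/no flag per participant, so it counts participants who were re-arrested rather than individual arrests; the record structure and all values are hypothetical.

```python
# Made-up participant records; a real export would carry many more fields.
participants = [
    {"id": "P1", "graduated": True, "re_arrested": False, "relapsed": False},
    {"id": "P2", "graduated": True, "re_arrested": False, "relapsed": True},
    {"id": "P3", "graduated": False, "re_arrested": True, "relapsed": True},
    {"id": "P4", "graduated": True, "re_arrested": False, "relapsed": False},
]


def summarize_outcomes(records: list) -> dict:
    """Tally graduates, re-arrested participants, and the percentage who relapsed."""
    total = len(records)
    if total == 0:
        raise ValueError("no participant records to summarize")
    return {
        "participants": total,
        "graduates": sum(1 for r in records if r["graduated"]),
        "participants_re_arrested": sum(1 for r in records if r["re_arrested"]),
        "percent_relapsed": 100.0 * sum(1 for r in records if r["relapsed"]) / total,
    }


summary = summarize_outcomes(participants)
for measure, value in summary.items():
    print(f"{measure}: {value}")
```

Counts like these become meaningful only once they are set against a benchmark or a comparison group.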

Evaluation Data Examples, cont.
• Cost Benefit Analysis data examples
  – Cost of treatment per participant
  – Cost of a day in jail
  – Cost of foster care per child
  – Cost of adjudicating one felony drug offense
  – Cost of a DFCS case investigation

Basic Principles
• Respect the privacy & confidentiality of those with whom you are working
• Federal laws (e.g., 42 CFR Part 2, HIPAA) govern the use of substance abuse & health information

Basic Principles
• Respect and take into account the cultural, racial, ethnic and gender differences of your clients & their families
• Use results responsibly and ethically – don’t go beyond the intended use of the measures

Evaluation Results
• Ultimately your stakeholders and funders will ask these questions:
  – Did it work? Was there an impact?
  – How well did it work? How much of an impact did you observe?
  – How does this impact compare with results of alternative models?

Using Evaluation Findings
• Describe your court and its participants
• Describe your court’s processes and procedures
• Continually improve your court’s functioning (CQI)
• Document your impacts and outcomes (sustainability)

Questions?

Kevin Baldwin, Ph.D.
Applied Research Services, Inc.
Voice: 404-881-1120
kbaldwin@ars-corp.com
www.ars-corp.com
Presented at: 2017 CACJ Accountability Court Conference – Athens, GA

Visit our web site at www.ars-corp.com or call (404) 881-1120
CACJ 2017 Accountability Court Conference – September, 2017
