f6d4a9f930aa175a3f462a95c3599100.ppt

- Количество слайдов: 36

Session id: The Oracle 9 i Multi-Terabyte Data Warehouse Jeff Parker Manager Data Warehouse Development Amazon. com

The Challenges • • • Rapidly evolving business Growing data volumes Do more with less

The Challenges • Rapidly evolving business – New international markets – Continual innovation of features on Amazon • Buy it used • Magazine subscriptions • • – Marketplace Partnerships – Toys R Us, Target Growing data volumes Do more with less

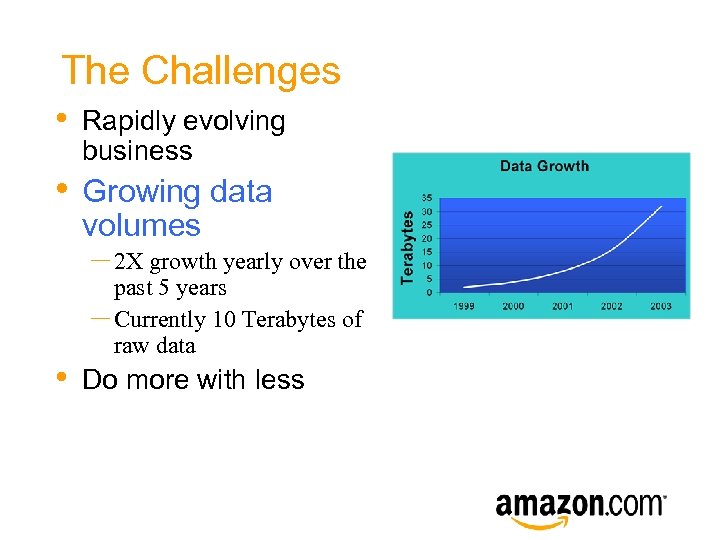

The Challenges • • Rapidly evolving business Growing data volumes – 2 X growth yearly over the • past 5 years – Currently 10 Terabytes of raw data Do more with less

The Challenges • • Rapidly evolving business Growing data volumes • Do more with less – Innovative use of technology and resources – Throwing money and people at the problem is not an option – Leverage existing investment in Oracle

Addressing the issues • Rapidly evolving business –Denormalize only for performance reasons –Create a solution that allows new datasets to be brought in rapidly to the DW, but without high maintenance costs • • Growing data volumes Do more with less

Addressing the issues • Rapidly evolving business • Growing data volumes – Dual database approach to ETL • Staging database for efficient transformation of large datasets. • SQL and hash-joins allow transforms to scale in a non-linear fashion Second database optimized for analytics – Oracle as an API • • Simplifies ETL architecture • Better scalability than traditional ETL tools Do more with less

Addressing the issues • Rapidly evolving business • Growing data volumes • Do more with less –One DW schema supports all countries –Cut costs by eliminating unneeded software –Data driven Load functionality

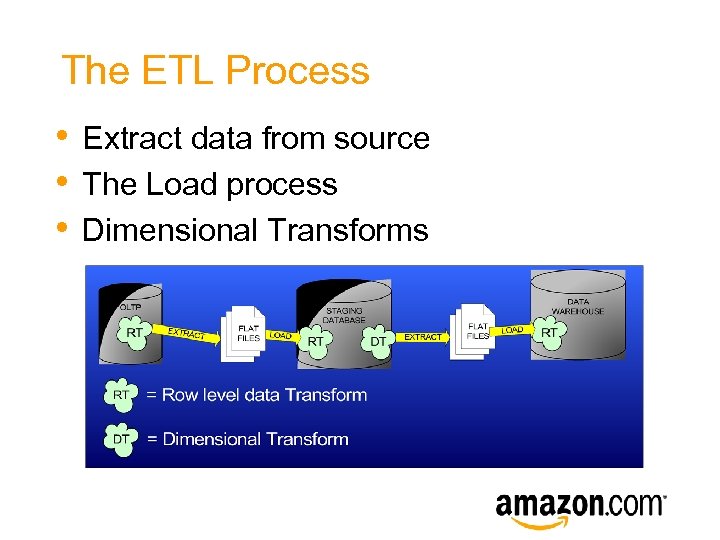

The ETL Process • • • Extract data from source The Load process Dimensional Transforms

The ETL Process • Extract data from source –Can create one or more files to be loaded –Must produce Metadata upon which the Load process can depend • • The Load Process Dimensional Transforms

Extract produced Metadata • Describes each field in database type • • terms Changes as the dataset changes Can reference multiple files Very reliable No additional overhead

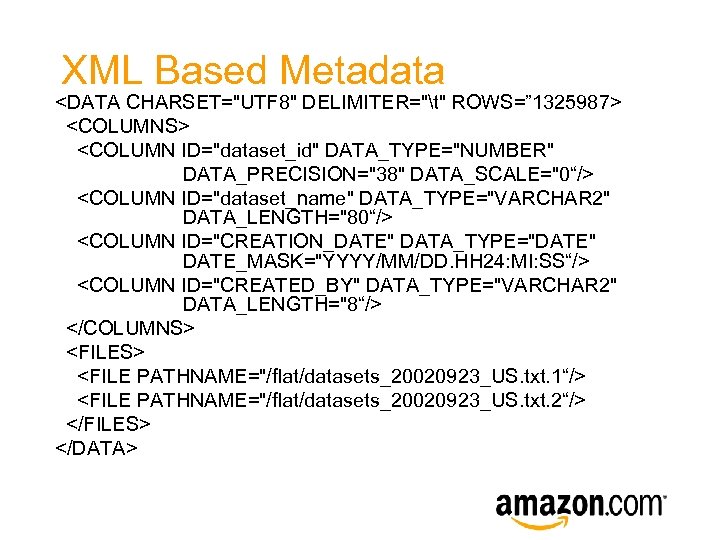

XML Based Metadata <DATA CHARSET="UTF 8" DELIMITER="t" ROWS=” 1325987> <COLUMNS> <COLUMN ID="dataset_id" DATA_TYPE="NUMBER" DATA_PRECISION="38" DATA_SCALE="0“/> <COLUMN ID="dataset_name" DATA_TYPE="VARCHAR 2" DATA_LENGTH="80“/> <COLUMN ID="CREATION_DATE" DATA_TYPE="DATE" DATE_MASK="YYYY/MM/DD. HH 24: MI: SS“/> <COLUMN ID="CREATED_BY" DATA_TYPE="VARCHAR 2" DATA_LENGTH="8“/> </COLUMNS> <FILE PATHNAME="/flat/datasets_20020923_US. txt. 1“/> <FILE PATHNAME="/flat/datasets_20020923_US. txt. 2“/> </FILES> </DATA>

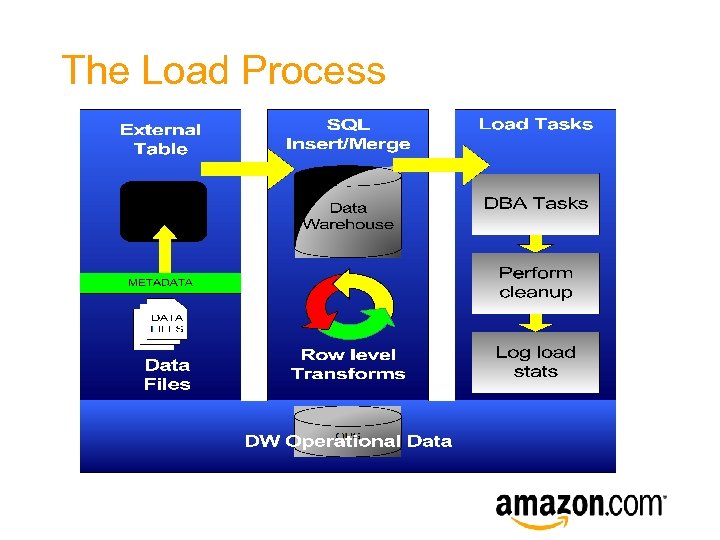

The ETL Process • Extract data from source • The Load Process • –Makes extensive use of External Tables –MERGE and Bulk Insert –Contains integrated DBA tasks –Every load is tracked in an operational database Dimensional Transforms

The Load Process

The Load Process • External Tables • • –access to files on the operating system –Is a building block in a broader ETL process MERGE & Bulk Insert Integrated DBA tasks

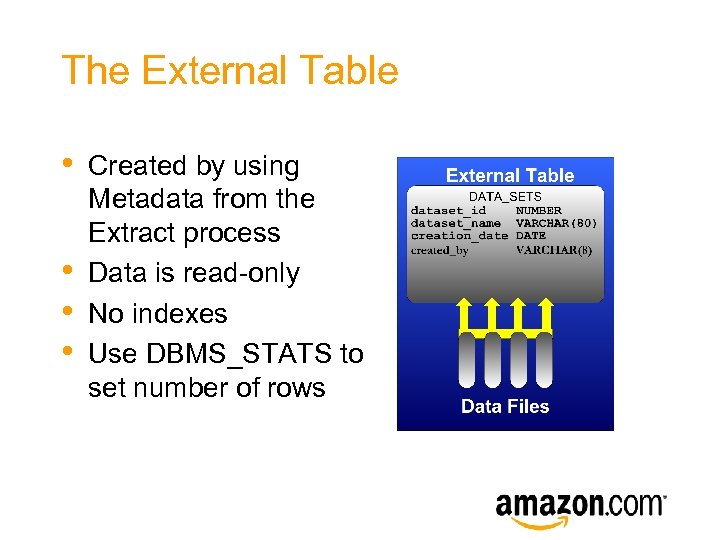

The External Table • • Created by using Metadata from the Extract process Data is read-only No indexes Use DBMS_STATS to set number of rows

Example External Table 1. Copy the data to the database server Ÿ Data must reside in a file system location specified by the DBA’s. - create directory DAT_DIR as ‘/stage/flat’

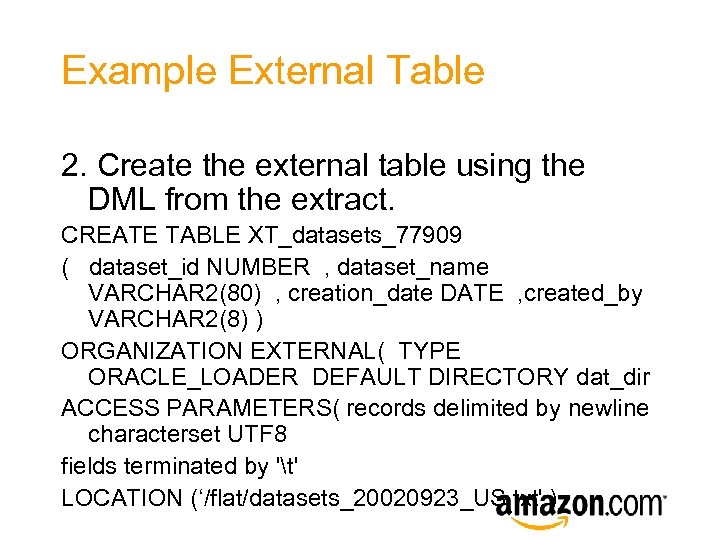

Example External Table 2. Create the external table using the DML from the extract. CREATE TABLE XT_datasets_77909 ( dataset_id NUMBER , dataset_name VARCHAR 2(80) , creation_date DATE , created_by VARCHAR 2(8) ) ORGANIZATION EXTERNAL( TYPE ORACLE_LOADER DEFAULT DIRECTORY dat_dir ACCESS PARAMETERS( records delimited by newline characterset UTF 8 fields terminated by 't' LOCATION (‘/flat/datasets_20020923_US. txt' )

The External Table • • No pre-staging of data Ability to describe a flat file to Oracle Handles horizontally partitioned files Good error messaging

The Load Process • External Tables • MERGE –Can be run in parallel –Combined with external table provides a powerful set of ETL tools • Integrated DBA tasks

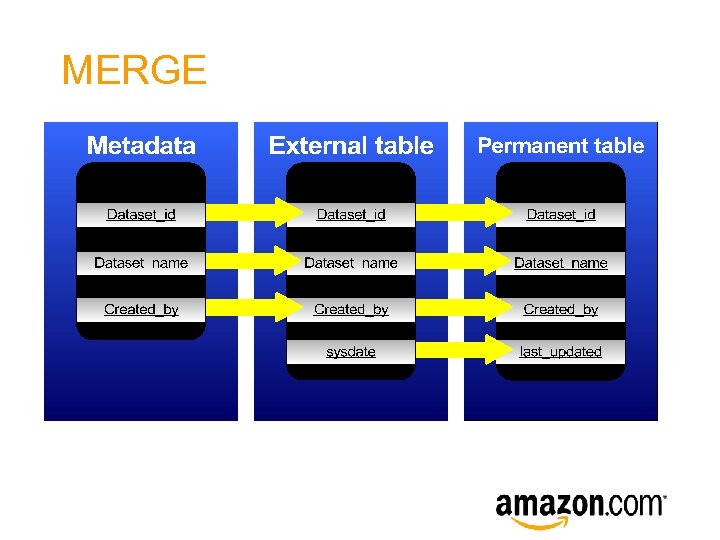

MERGE • Allows for update or insert in a single statement –If key value already exists • • • Yes, update row • No, insert row MERGE statement is auto-generated Row level column transforms are supported

MERGE

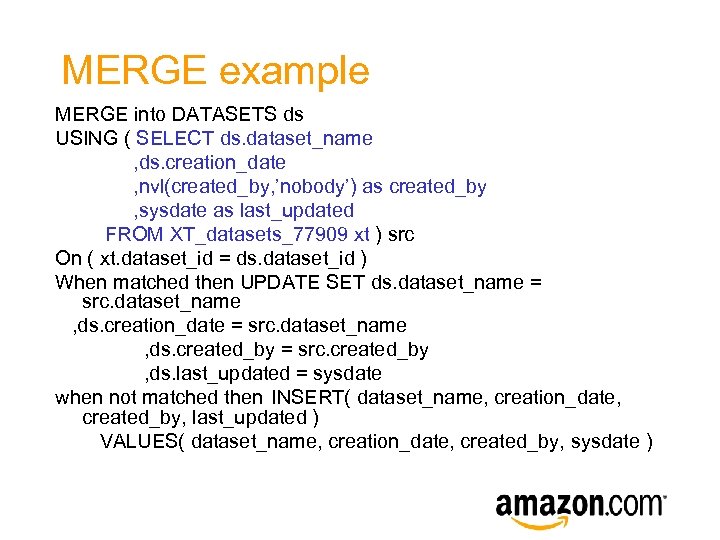

MERGE example MERGE into DATASETS ds USING ( SELECT ds. dataset_name , ds. creation_date , nvl(created_by, ’nobody’) as created_by , sysdate as last_updated FROM XT_datasets_77909 xt ) src On ( xt. dataset_id = ds. dataset_id ) When matched then UPDATE SET ds. dataset_name = src. dataset_name , ds. creation_date = src. dataset_name , ds. created_by = src. created_by , ds. last_updated = sysdate when not matched then INSERT( dataset_name, creation_date, created_by, last_updated ) VALUES( dataset_name, creation_date, created_by, sysdate )

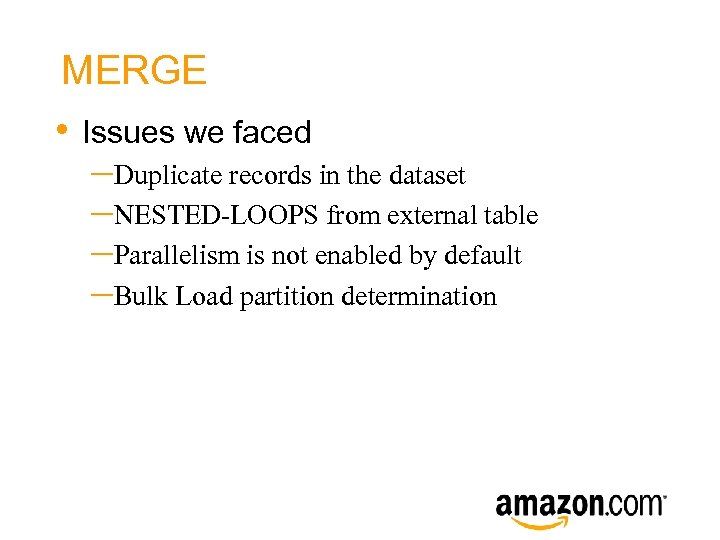

MERGE • Issues we faced –Duplicate records in the dataset –NESTED-LOOPS from external table –Parallelism is not enabled by default –Bulk Load partition determination

The Load Process • • External Tables MERGE • Integrated DBA tasks –Reduces workload required by the DBA team –Streamlines the load process –Eliminates human error

Integrated DBA Tasks • Provided by the DBA team –Managed by the DBA team –ETL team does not need special knowledge of table layout

Integrated DBA Tasks • Truncate Partition developer makes call truncate_partition( ‘TABLE-NAME’, partition-key 1, partition-key 2, partition-key 3 ) DBA utility translates this and executes alter table TABLE-NAME drop partition dbi 20020930_101;

Integrated DBA Tasks • Analyze Partition developer makes call analyze_partition( ‘TABLE-NAME’, partition-key 1, partition-key 2, partition-key 3 ) DBA utility translates this and executes dbms_stats. gather_table_stats(ownn ame , tabname , partname , cascade , estimate_percent, granularity);

Integrated DBA Tasks • Return Partition Name developer makes call get_partition_name( ‘TABLE-NAME’, partition-key 1, partition-key 2, partition-key 3 ) DBA utility translates this and returns the appropriate name of the partition. This is very useful when bulk loading tables.

Integrated DBA Tasks • Partitioning utilities –Helps to streamline the process –Reduces workload of DBA team –Helps to eliminate the problem of double loads for Snapshot tables and partitions

The Load Process • External Tables • MERGE • Integrated DBA tasks • Loads are repeatable processes – Provides access to flat files outside the database – Parallel “upsert” simplifies ETL – Row level transforms can be performed in SQL – Reduces workload required by the DBA team – Streamlines the load process – Eliminates human error

Summary • • • Reduction in time to integrate new subject areas Oracle parallelism scales well Eliminated unneeded software

Summary • Oracle has delivered on the DW promise –Oracle External table combined with MERGE is a viable alternative to other ETL tools –ETL tools are ready today

& QUESTIONS ANSWERS

Reminder – please complete the Oracle. World session survey Thank you.

f6d4a9f930aa175a3f462a95c3599100.ppt