- Number of slides: 62

SERVOGrid and Grids for Realtime and Streaming Applications
Grid School, Vico Equense, July 21 2005
Geoffrey Fox
Computer Science, Informatics, Physics
Pervasive Technology Laboratories
Indiana University, Bloomington IN 47401
http://grids.ucs.indiana.edu/ptliupages/presentations/GridSchool2005/
gcf@indiana.edu
http://www.infomall.org

Thank you
• SERVOGrid and iSERVO are major collaborations
• In the USA, JPL leads a project involving UC Davis, UC Irvine, USC and Indiana University
• Australia, China, Japan and USA are current international partners
• This talk takes material from talks by
  • Andrea Donnellan
  • Marlon Pierce
  • John Rundle
• Thank you!

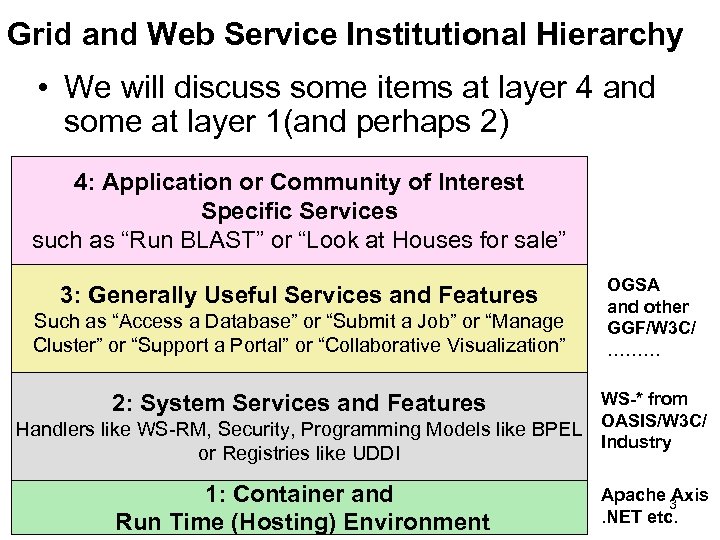

Grid and Web Service Institutional Hierarchy
• We will discuss some items at layer 4 and some at layer 1 (and perhaps 2)
4: Application or Community of Interest Specific Services such as “Run BLAST” or “Look at Houses for sale”
3: Generally Useful Services and Features such as “Access a Database” or “Submit a Job” or “Manage Cluster” or “Support a Portal” or “Collaborative Visualization” (OGSA and other GGF/W3C/…)
2: System Services and Features: Handlers like WS-RM, Security; Programming Models like BPEL; or Registries like UDDI (WS-* from OASIS/W3C/Industry)
1: Container and Run Time (Hosting) Environment: Apache Axis, .NET etc.

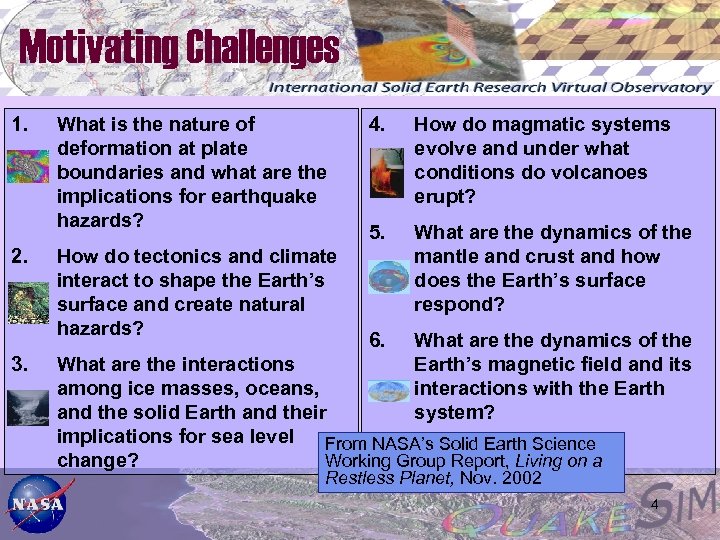

Motivating Challenges
1. What is the nature of deformation at plate boundaries and what are the implications for earthquake hazards?
2. How do tectonics and climate interact to shape the Earth’s surface and create natural hazards?
3. What are the interactions among ice masses, oceans, and the solid Earth and their implications for sea level change?
4. How do magmatic systems evolve and under what conditions do volcanoes erupt?
5. What are the dynamics of the mantle and crust and how does the Earth’s surface respond?
6. What are the dynamics of the Earth’s magnetic field and its interactions with the Earth system?
From NASA’s Solid Earth Science Working Group Report, Living on a Restless Planet, Nov. 2002

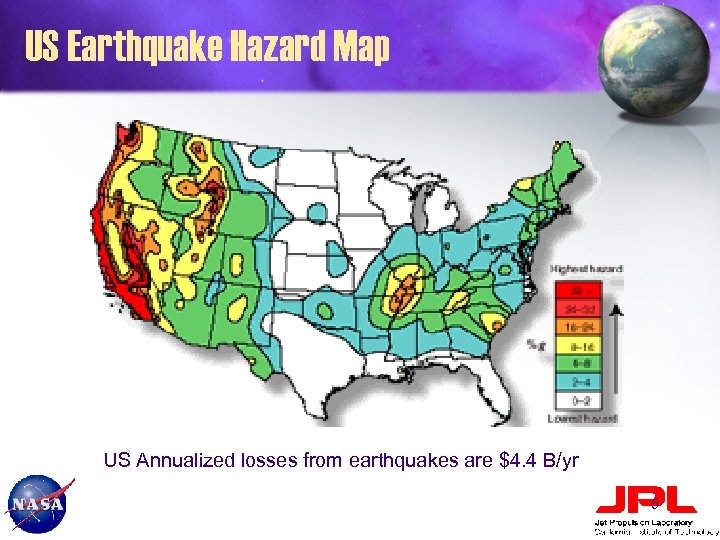

US Earthquake Hazard Map
US annualized losses from earthquakes are $4.4 B/yr

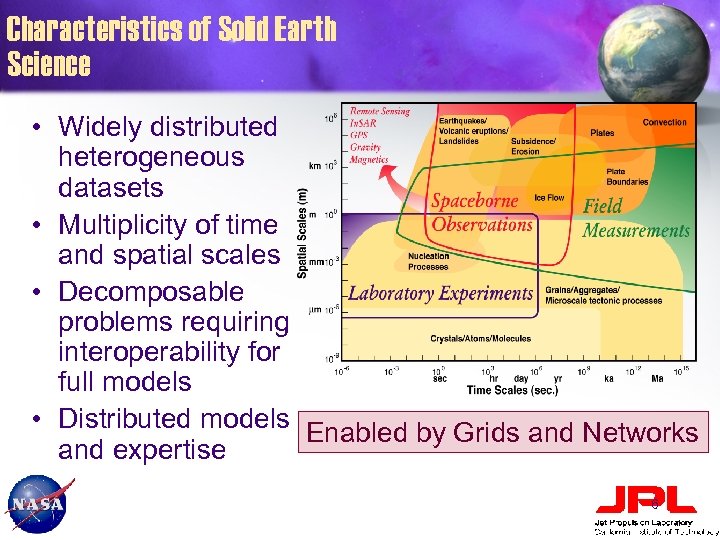

Characteristics of Solid Earth Science
• Widely distributed heterogeneous datasets
• Multiplicity of time and spatial scales
• Decomposable problems requiring interoperability for full models
• Distributed models and expertise
Enabled by Grids and Networks

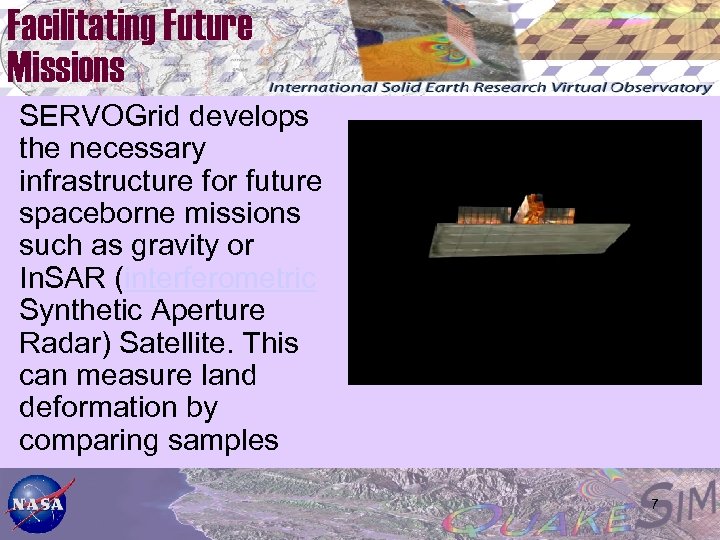

Facilitating Future Missions
SERVOGrid develops the necessary infrastructure for future spaceborne missions such as gravity or InSAR (interferometric Synthetic Aperture Radar) satellites. InSAR can measure land deformation by comparing samples.

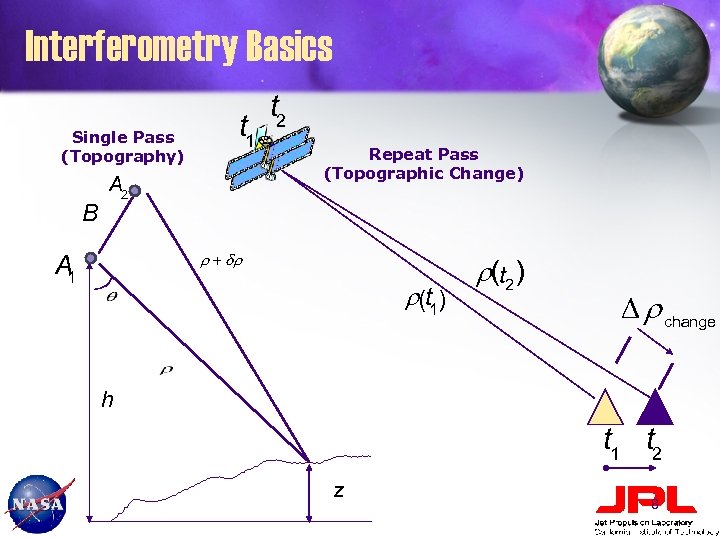

Interferometry Basics
[Figure with two panels: Single Pass (Topography) and Repeat Pass (Topographic Change). Labels: A1, A2, B, r, r + dr, r(t1), r(t2), Δr change, h, z, t1, t2]

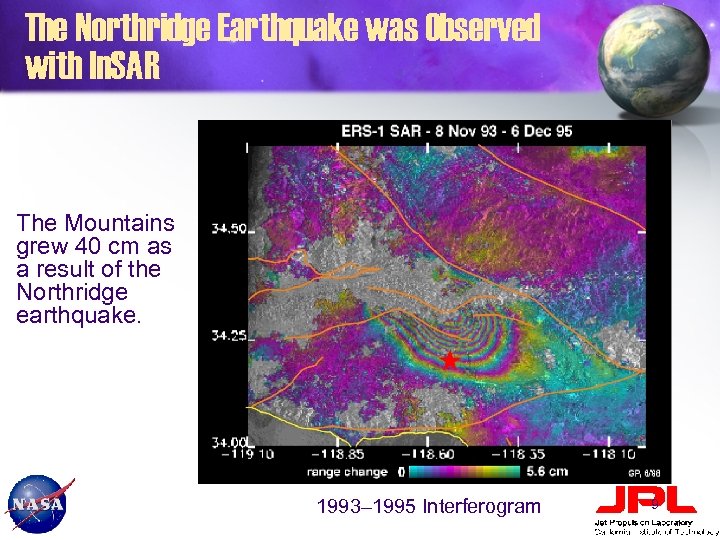

The Northridge Earthquake was Observed with InSAR
The mountains grew 40 cm as a result of the Northridge earthquake.
1993–1995 Interferogram

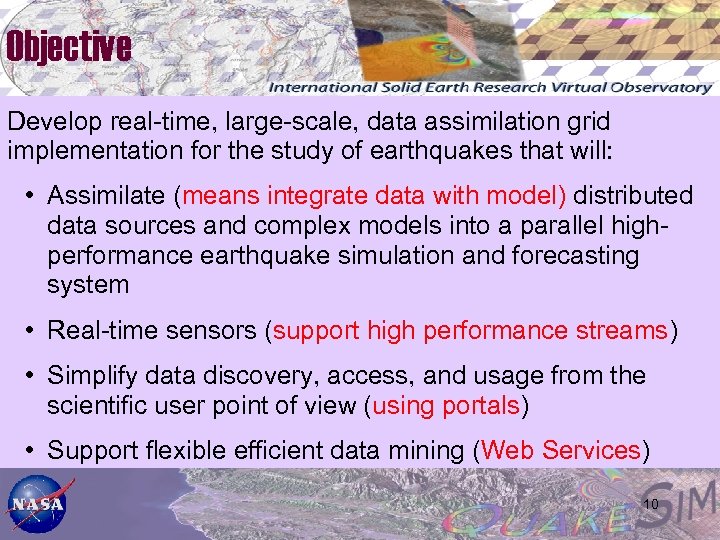

Objective
Develop a real-time, large-scale, data assimilation grid implementation for the study of earthquakes that will:
• Assimilate (that is, integrate data with models) distributed data sources and complex models into a parallel high-performance earthquake simulation and forecasting system
• Support real-time sensors (high performance streams)
• Simplify data discovery, access, and usage from the scientific user point of view (using portals)
• Support flexible, efficient data mining (Web Services)

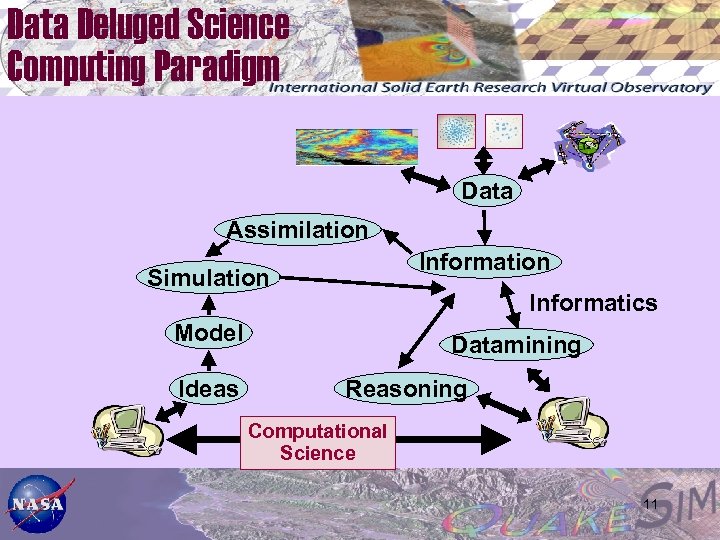

Data Deluged Science Computing Paradigm
[Diagram labels: Data, Assimilation, Information, Simulation, Informatics, Model, Ideas, Datamining, Reasoning, Computational Science]

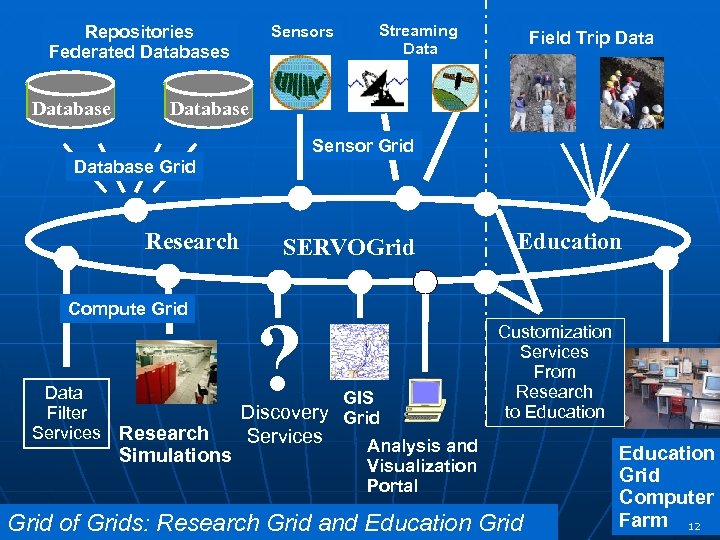

Grid of Grids: Research Grid and Education Grid
[Diagram labels, in extraction order: Repositories, Federated Databases, Database, Sensors, Streaming Data, Field Trip, Database, Sensor Grid, Database Grid, Research, Compute Grid, Data Filter Services, Research Simulations, SERVOGrid, GIS, Discovery, Grid Services, Education, Customization Services, From Research to Education, Analysis and Visualization Portal, Computer Farm]

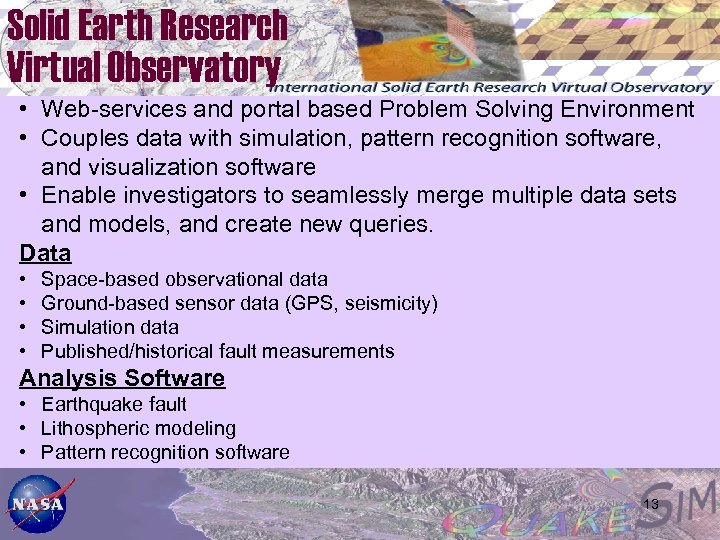

Solid Earth Research Virtual Observatory
• Web-services and portal based Problem Solving Environment
• Couples data with simulation, pattern recognition software, and visualization software
• Enables investigators to seamlessly merge multiple data sets and models, and create new queries
Data
• Space-based observational data
• Ground-based sensor data (GPS, seismicity)
• Simulation data
• Published/historical fault measurements
Analysis Software
• Earthquake fault
• Lithospheric modeling
• Pattern recognition software

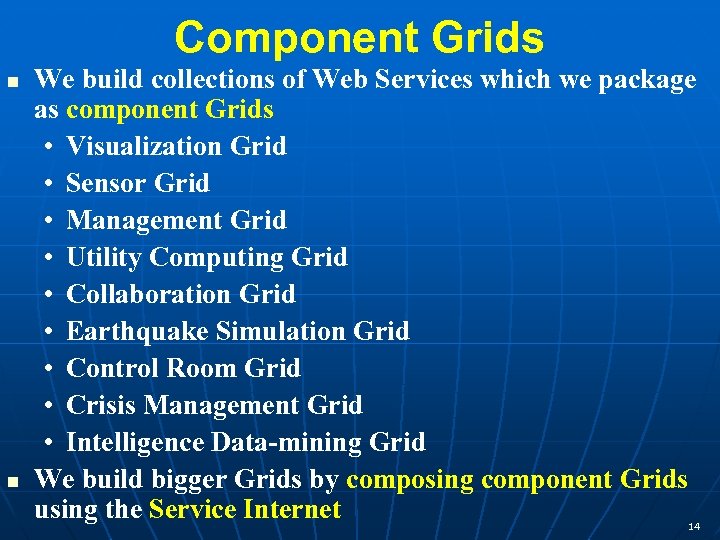

Component Grids
We build collections of Web Services which we package as component Grids
• Visualization Grid
• Sensor Grid
• Management Grid
• Utility Computing Grid
• Collaboration Grid
• Earthquake Simulation Grid
• Control Room Grid
• Crisis Management Grid
• Intelligence Data-mining Grid
We build bigger Grids by composing component Grids using the Service Internet

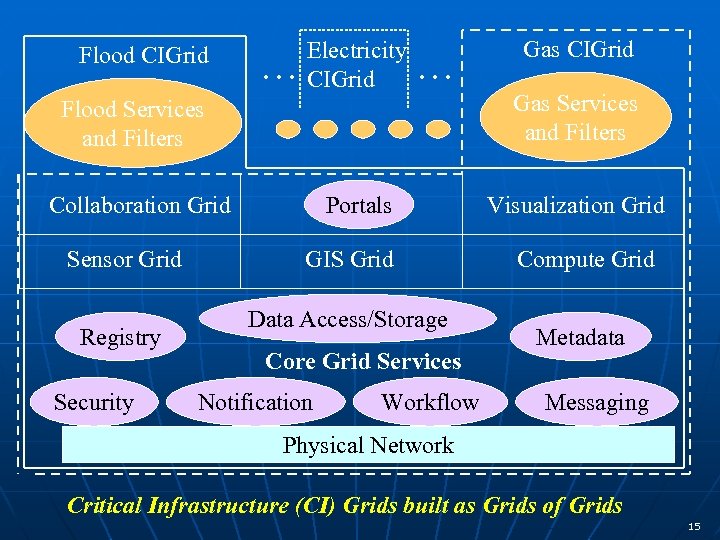

Critical Infrastructure (CI) Grids built as Grids of Grids
[Diagram labels: Flood CIGrid, Electricity CIGrid, Gas CIGrid, Flood Services and Filters, Gas Services and Filters, Collaboration Grid, Sensor Grid, GIS Grid, Visualization Grid, Compute Grid, Core Grid Services, Registry, Security, Portals, Data Access/Storage, Notification, Workflow, Metadata, Messaging, Physical Network]

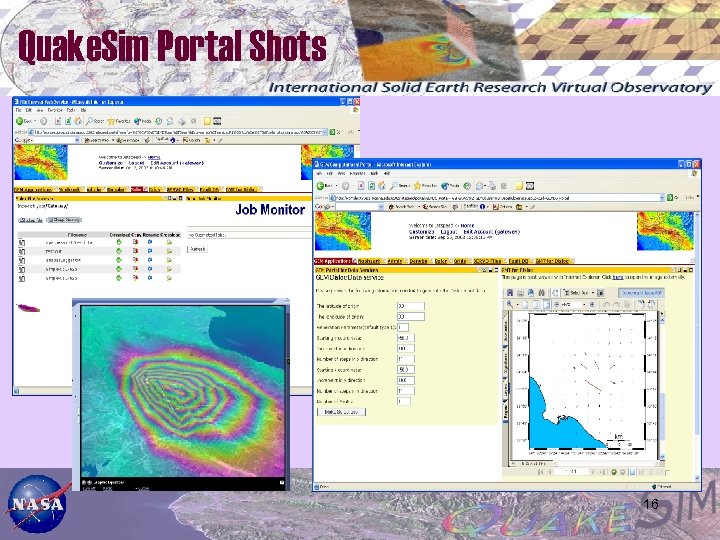

QuakeSim Portal Shots

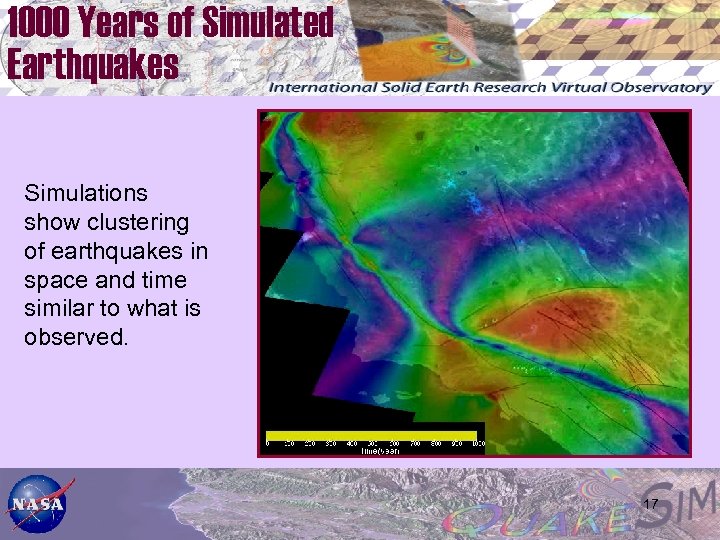

1000 Years of Simulated Earthquakes
Simulations show clustering of earthquakes in space and time similar to what is observed.

SERVOGrid Apps and Their Data
GeoFEST: Three-dimensional viscoelastic finite element model for calculating nodal displacements and tractions. Allows for realistic fault geometry and characteristics, material properties, and body forces.
• Relies upon fault models with geometric and material properties.
Virtual California: Program to simulate interactions between vertical strike-slip faults using an elastic layer over a viscoelastic half-space.
• Relies upon fault and fault friction models.
Pattern Informatics: Calculates regions of enhanced probability for future seismic activity based on the seismic record of the region.
• Uses seismic data archives.
RDAHMM: Time series analysis program based on Hidden Markov Modeling. Produces feature vectors and probabilities for transitioning from one class to another.
• Used to analyze GPS and seismic catalog archives.
• Can be adapted to detect state change events in real time.

Pattern Informatics (PI)
PI is a technique developed by John Rundle at University of California, Davis for analyzing earthquake seismic records to forecast regions with high future seismic activity.
• They have correctly forecast the locations of 15 of the last 16 earthquakes with magnitude > 5.0 in California.
See Tiampo, K. F., Rundle, J. B., McGinnis, S. A., & Klein, W. Pattern dynamics and forecast methods in seismically active regions. Pure Appl. Geophys. 159, 2429-2467 (2002).
• http://citebase.eprints.org/cgi-bin/fulltext?format=application/pdf&identifier=oai%3AarXiv.org%3Acond-mat%2F0102032
PI is being applied to other regions of the world, and has gotten a lot of press.
• Google “John Rundle UC Davis Pattern Informatics”

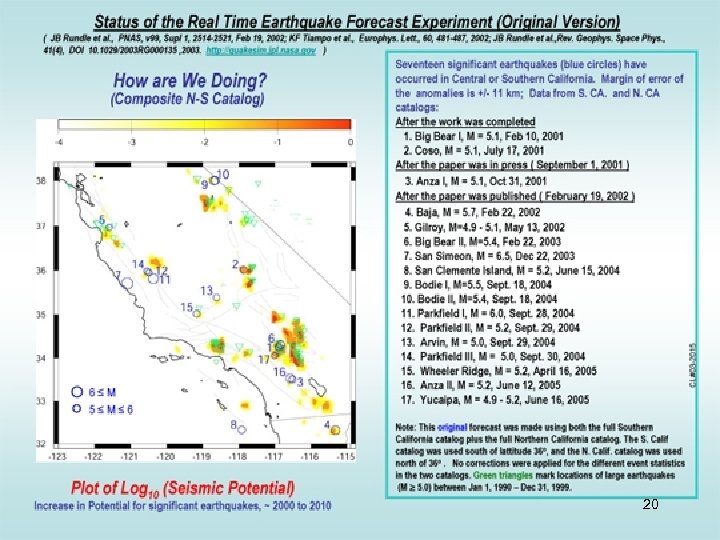

Real-time Earthquake Forecast
Plot of Log10 P(x): potential for large earthquakes, M ≥ 5, ~2000 to 2010
Seven large events with M ≥ 5 have occurred on anomalies, or within the margin of error:
1. Big Bear I, M = 5.1, Feb 10, 2001
2. Coso, M = 5.1, July 17, 2001
3. Anza, M = 5.1, Oct 31, 2001
4. Baja, M = 5.7, Feb 22, 2002
5. Gilroy, M = 4.9 - 5.1, May 13, 2002
6. Big Bear II, M = 5.4, Feb 22, 2003
7. San Simeon, M = 6.5, Dec 22, 2003
JB Rundle, KF Tiampo, W. Klein, JSS Martins, PNAS, v99, Suppl 1, 2514-2521, Feb 19, 2002; KF Tiampo, JB Rundle, S. McGinnis, S. Gross and W. Klein, Europhys. Lett., 60, 481-487, 2002

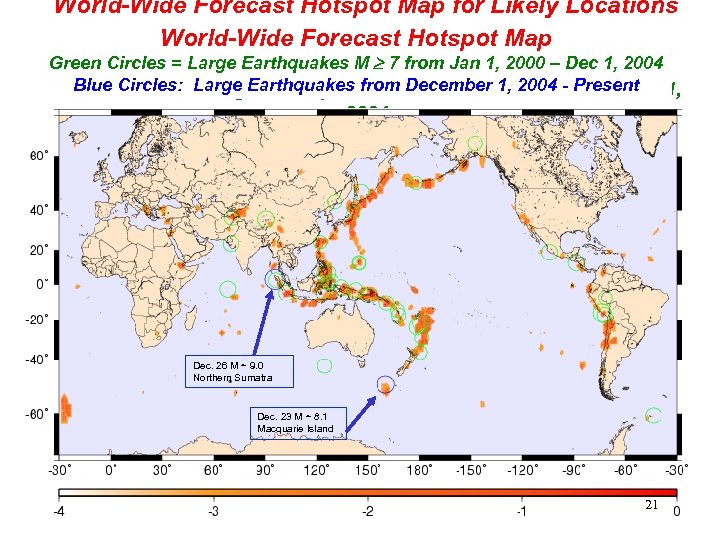

World-Wide Forecast Hotspot Map for Likely Locations of Great Earthquakes M ≥ 7.0 for the Decade 2000-2010
• World-wide earthquakes, M > 5, 1965-2000
• Green circles: large earthquakes M ≥ 7 from Jan 1, 2000 to Dec 1, 2004
• Blue circles: large earthquakes M ≥ 7 from December 1, 2004 to present
• Dec. 26, M ~ 9.0, Northern Sumatra
• Dec. 23, M ~ 8.1, Macquarie Island

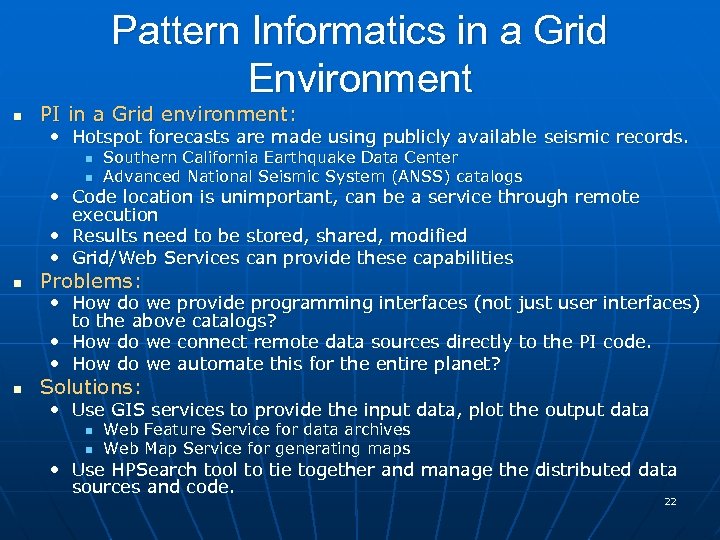

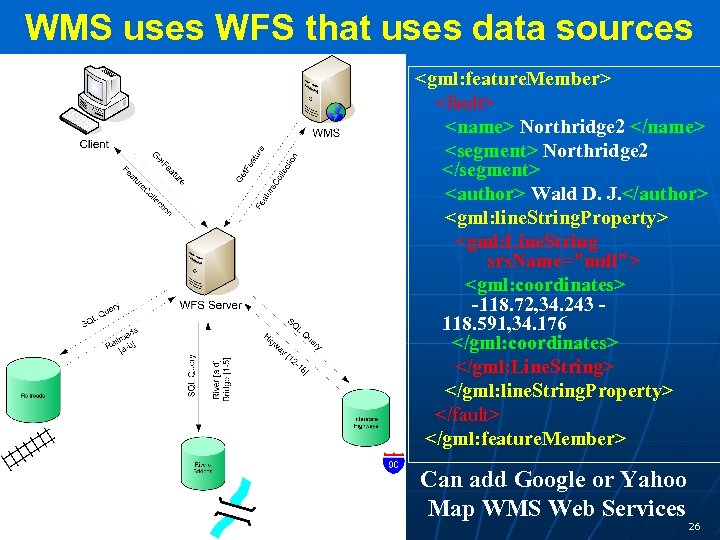

Pattern Informatics in a Grid Environment
PI in a Grid environment:
• Hotspot forecasts are made using publicly available seismic records.
  • Southern California Earthquake Data Center
  • Advanced National Seismic System (ANSS) catalogs
• Code location is unimportant; it can be a service through remote execution
• Results need to be stored, shared, modified
• Grid/Web Services can provide these capabilities
Problems:
• How do we provide programming interfaces (not just user interfaces) to the above catalogs?
• How do we connect remote data sources directly to the PI code?
• How do we automate this for the entire planet?
Solutions:
• Use GIS services to provide the input data and plot the output data
  • Web Feature Service for data archives
  • Web Map Service for generating maps
• Use the HPSearch tool to tie together and manage the distributed data sources and code.
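
As a rough illustration of what a programming interface to a seismic catalog could look like, the sketch below builds an OGC Web Feature Service `GetFeature` request in key-value-pair form. The endpoint `http://example.org/wfs` and the feature type name `seismic:events` are hypothetical placeholders, not SERVOGrid addresses; only the standard WFS 1.0.0 query parameters are assumed.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def wfs_getfeature_url(base_url, type_name, bbox, max_features=None):
    """Build a WFS 1.0.0 GetFeature URL using key-value-pair encoding."""
    params = {
        "service": "WFS",
        "version": "1.0.0",
        "request": "GetFeature",
        "typeName": type_name,
        # bbox is (min_x, min_y, max_x, max_y) in the layer's coordinates
        "bbox": ",".join(str(v) for v in bbox),
    }
    if max_features is not None:
        params["maxFeatures"] = str(max_features)
    return base_url + "?" + urlencode(params)


# Hypothetical endpoint and feature type; the box roughly covers California.
url = wfs_getfeature_url(
    "http://example.org/wfs", "seismic:events",
    (-125.0, 32.0, -114.0, 42.0), max_features=500,
)
query = parse_qs(urlsplit(url).query)
print(url)
```

A client such as the PI code could fetch this URL and parse the returned GML instead of reading catalog files by hand.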

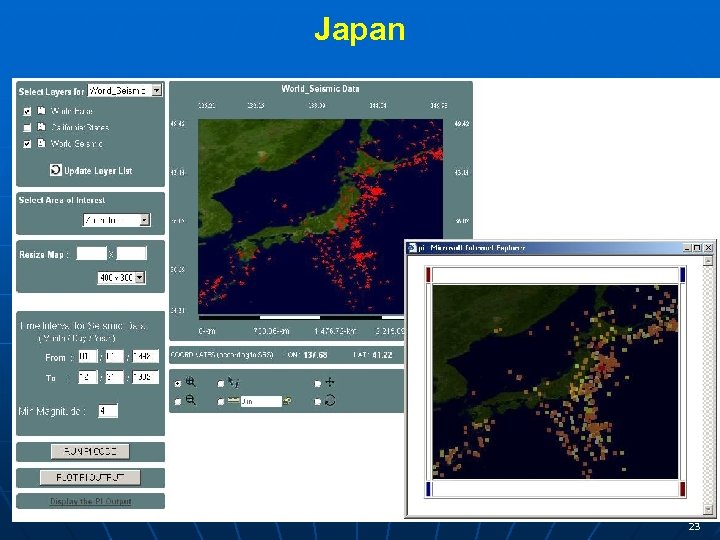

Japan

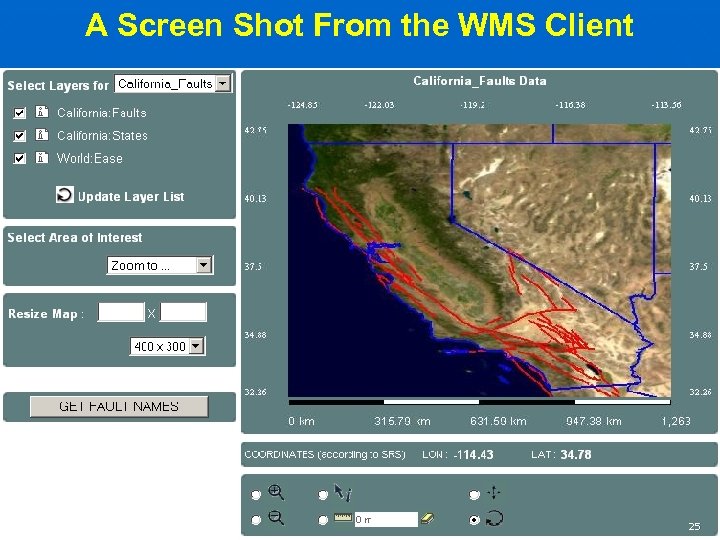

GIS and Sensor Grids
• OGC has defined a suite of data structures and services to support Geographical Information Systems and Sensors
• GML (Geography Markup Language) defines the specification of geo-referenced data
• SensorML and O&M (Observation and Measurements) define meta-data and data structure for sensors
• Services like Web Map Service, Web Feature Service, Sensor Collection Service define service interfaces to access GIS and sensor information
• Grid workflow links services that are designed to support streaming input and output messages
• We are building Grid (Web) service implementations of these specifications for NASA’s SERVOGrid

A Screen Shot From the WMS Client

WMS uses WFS that uses data sources

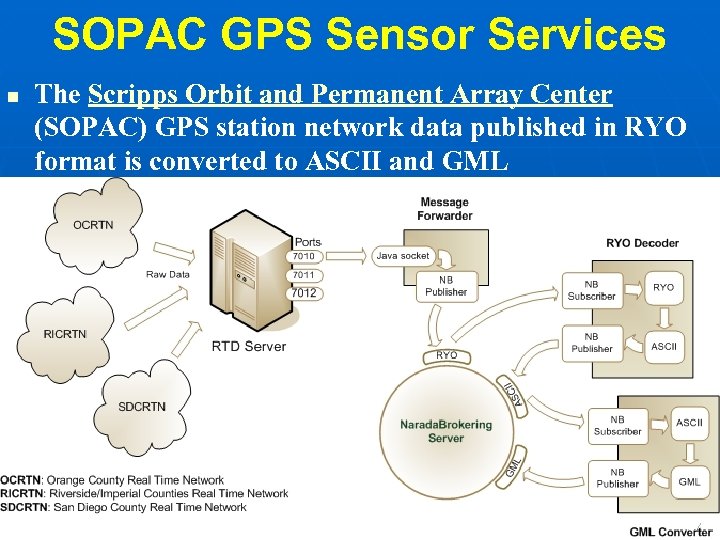

SOPAC GPS Sensor Services
The Scripps Orbit and Permanent Array Center (SOPAC) GPS station network data published in RYO format is converted to ASCII and GML

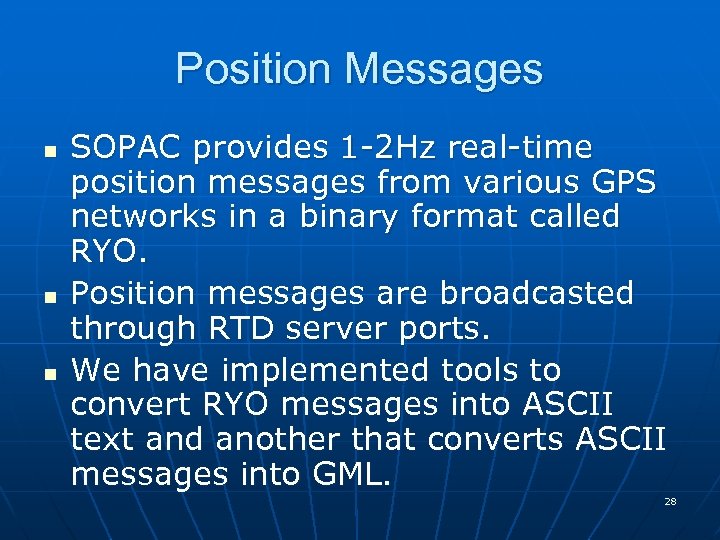

Position Messages
• SOPAC provides 1-2 Hz real-time position messages from various GPS networks in a binary format called RYO.
• Position messages are broadcast through RTD server ports.
• We have implemented one tool that converts RYO messages into ASCII text and another that converts ASCII messages into GML.
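
The ASCII-to-GML step can be sketched as follows. The input layout (`station latitude longitude height` on one line), the wrapper element `PositionMessage`, and the station name `P001` are assumptions made for illustration; the actual SOPAC ASCII format and the GML schema used by the project are not given in these slides.

```python
import xml.etree.ElementTree as ET

GML_NS = "http://www.opengis.net/gml"
ET.register_namespace("gml", GML_NS)


def ascii_to_gml(line):
    """Convert one whitespace-separated position line into a GML point.

    Assumed (hypothetical) field order: station, latitude, longitude, height.
    """
    station, lat, lon, height = line.split()
    feature = ET.Element("PositionMessage", {"station": station})
    point = ET.SubElement(feature, f"{{{GML_NS}}}Point", {"srsName": "EPSG:4326"})
    coords = ET.SubElement(point, f"{{{GML_NS}}}coordinates")
    # gml:coordinates holds comma-separated values in x, y, z order
    coords.text = f"{float(lon)},{float(lat)},{float(height)}"
    return ET.tostring(feature, encoding="unicode")


gml_text = ascii_to_gml("P001 32.87 -117.25 45.3")
print(gml_text)
```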

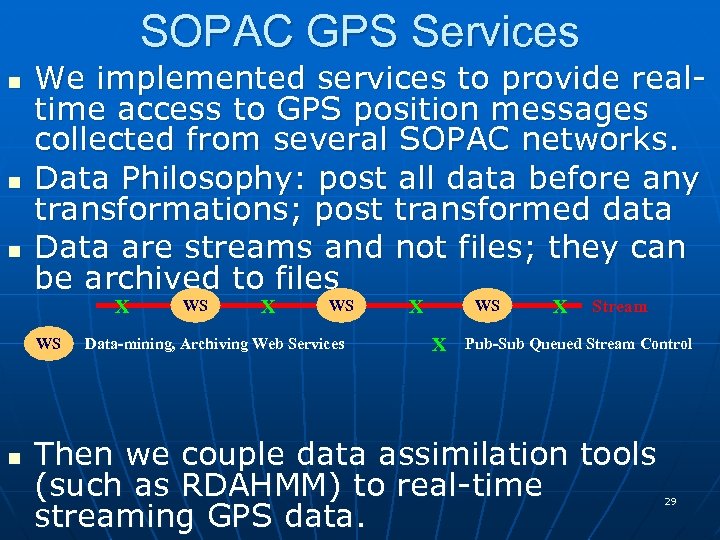

SOPAC GPS Services
• We implemented services to provide real-time access to GPS position messages collected from several SOPAC networks.
• Data philosophy: post all data before any transformations; post transformed data
• Data are streams and not files; they can be archived to files
• Then we couple data assimilation tools (such as RDAHMM) to real-time streaming GPS data.
[Diagram labels: WS, X, Data-mining, Archiving Web Services, Stream, Pub-Sub Queued Stream Control]

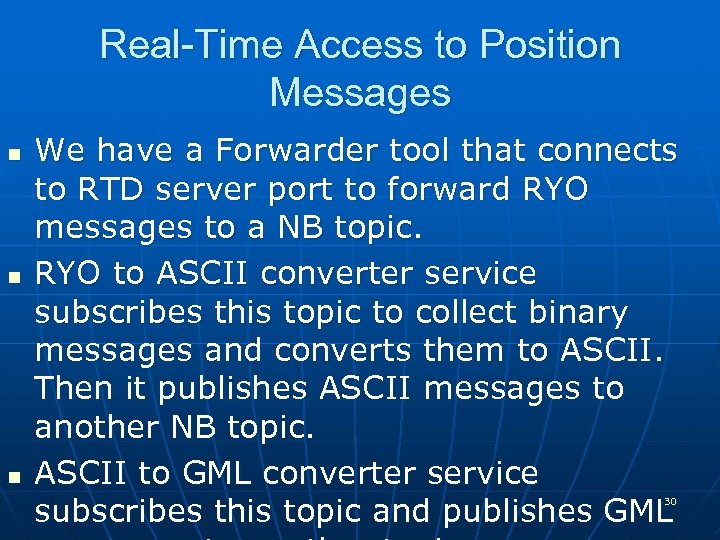

Real-Time Access to Position Messages
• We have a Forwarder tool that connects to the RTD server port to forward RYO messages to an NB topic.
• The RYO to ASCII converter service subscribes to this topic to collect binary messages and converts them to ASCII.
• Then it publishes ASCII messages to another NB topic.
• The ASCII to GML converter service subscribes to this topic and publishes GML.
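
The chain of topics described here can be mimicked with a tiny in-memory publish-subscribe broker. This is only a sketch of the pattern: the `Broker` class is not the NaradaBrokering API, delivery is synchronous rather than asynchronous, the topic names are invented, and the 28-byte binary record layout is a hypothetical stand-in for the real RYO format.

```python
import struct
from collections import defaultdict


class Broker:
    """Minimal in-memory topic broker (illustrative, synchronous delivery)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in list(self._subscribers[topic]):
            callback(message)


# Hypothetical binary record: 4-byte station id, then lat, lon, height.
RECORD = struct.Struct("<4sddd")


def ryo_to_ascii(broker, in_topic, out_topic):
    """Subscribe to binary messages and republish them as ASCII lines."""
    def on_message(raw):
        station, lat, lon, height = RECORD.unpack(raw)
        line = f"{station.decode('ascii')} {lat:.6f} {lon:.6f} {height:.3f}"
        broker.publish(out_topic, line)
    broker.subscribe(in_topic, on_message)


def ascii_to_gml(broker, in_topic, out_topic):
    """Subscribe to ASCII lines and republish them as GML points."""
    def on_message(line):
        station, lat, lon, height = line.split()
        gml = ('<gml:Point xmlns:gml="http://www.opengis.net/gml" '
               'srsName="EPSG:4326">'
               f"<gml:coordinates>{lon},{lat},{height}</gml:coordinates>"
               "</gml:Point>")
        broker.publish(out_topic, gml)
    broker.subscribe(in_topic, on_message)


broker = Broker()
ryo_to_ascii(broker, "gps/ryo", "gps/ascii")
ascii_to_gml(broker, "gps/ascii", "gps/gml")

received = []
broker.subscribe("gps/gml", received.append)

# The Forwarder role: push one binary record onto the first topic.
broker.publish("gps/ryo", RECORD.pack(b"P001", 32.87, -117.25, 45.3))
print(received[0])
```

Because each stage only knows its input and output topics, another consumer (an archiver or a data-mining service, for example) can subscribe to any intermediate topic without changing the converters.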

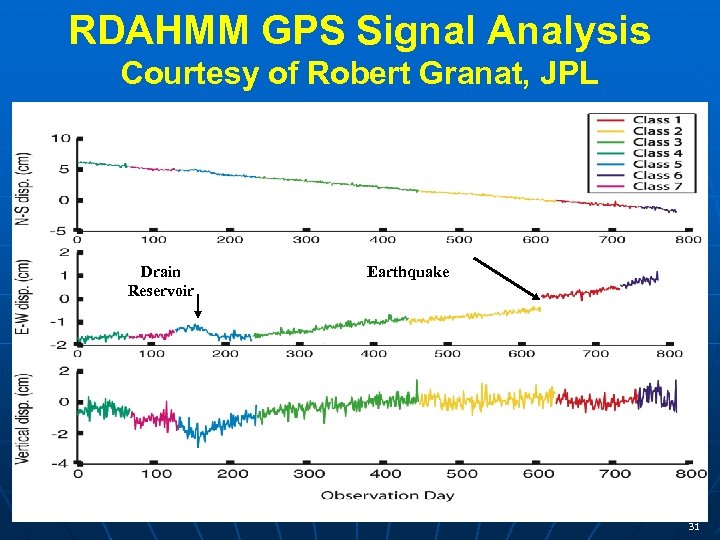

RDAHMM GPS Signal Analysis
Courtesy of Robert Granat, JPL
[Figure annotations: Drain Reservoir, Earthquake]

Handling Streams in Web Services
• Do not open a socket – hand the message to the messaging system
• Use Publish-Subscribe, as its overhead is negligible
• Model is totally asynchronous and event based
• Messaging system is a distributed set of “SOAP Intermediaries” (message brokers) which manage distributed queues and subscriptions
• Streams are ordered sets of messages whose common processing is both necessary and an opportunity for efficiency
• Manage messages and streams to ensure reliable delivery, fast replay, transmission through firewalls, multicast, custom transformations

Different ways of Thinking
• Services and Messages – NOT Jobs and Files
• Service Internet: Packets replaced by Messages
• The BitTorrent view of Files
  • Files are chunked into messages which are scattered around the Grid
  • Chunks are re-assembled into contiguous files
• Streams replace files by message queues
• Queues are labeled by topics
  • System MIGHT choose to back up queues to disk but you just think of messages on distributed queues
• Note typical time to worry about is a millisecond
• Schedule stream-based services NOT jobs
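
The "files are chunked into messages" idea can be sketched in a few lines. The message fields (`seq`, `total`, `payload`) and the chunk size are illustrative choices, not a format defined in the talk.

```python
import random


def chunk(data, size):
    """Split a byte string into numbered messages of at most `size` bytes."""
    total = (len(data) + size - 1) // size
    return [
        {"seq": i, "total": total, "payload": data[i * size:(i + 1) * size]}
        for i in range(total)
    ]


def reassemble(messages):
    """Rebuild the original bytes from messages received in any order."""
    ordered = sorted(messages, key=lambda m: m["seq"])
    if not ordered or len(ordered) != ordered[0]["total"]:
        raise ValueError("missing chunks")
    return b"".join(m["payload"] for m in ordered)


data = bytes(range(256)) * 4          # 1024 bytes standing in for a file
messages = chunk(data, 100)
random.shuffle(messages)              # messages may arrive out of order
restored = reassemble(messages)
print(len(messages), restored == data)
```

The sequence number is what lets the receiver treat the stream as an ordered set of messages even when the transport scatters them.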

DoD Data Strategy
• Only Handle Information Once (OHIO) – Data is posted in a manner that facilitates re-use without the need for replicating source data. Focus on re-use of existing data repositories.
• Smart Pull (vice Smart Push) – Applications encourage discovery; users can pull data directly from the net or use value added discovery services (search agents and other “smart pull” techniques). Focus on data sharing, with data stored in accessible shared space and advertised (tagged) for discovery.
• Post at once in Parallel – Process owners make their data available on the net as soon as it is created. Focus on data being tagged and posted before processing (and after processing).

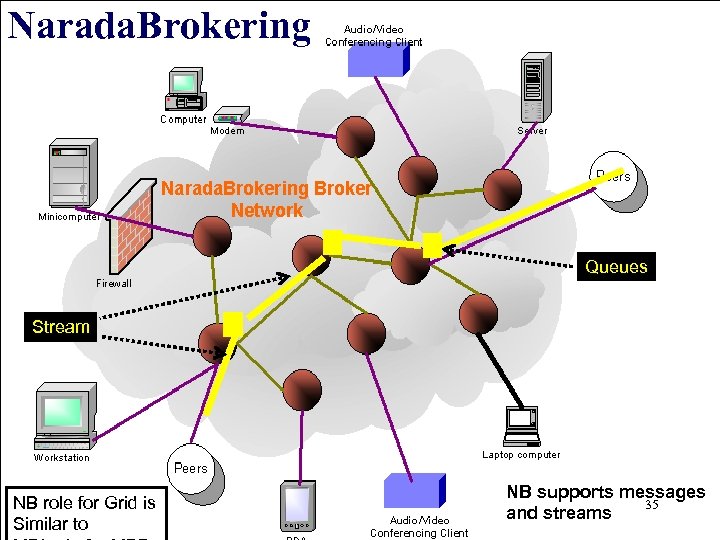

NaradaBrokering
NB supports messages and streams
[Diagram labels: Queues, Stream, “NB role for Grid is Similar to” (remainder of label not recoverable)]

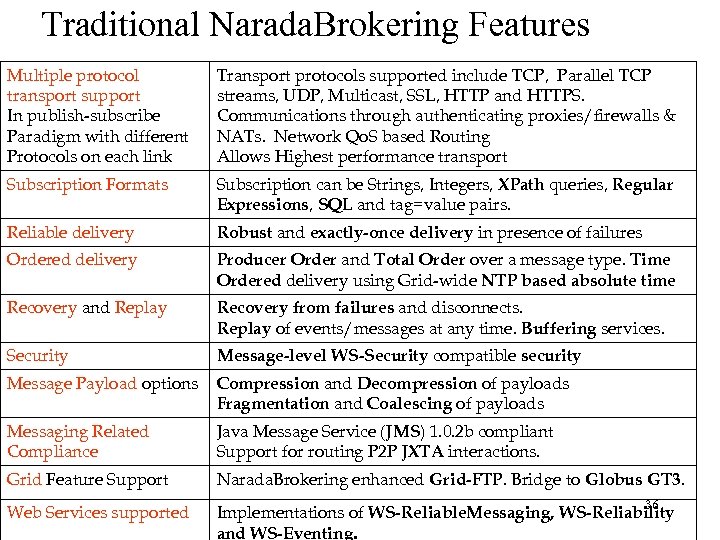

Traditional NaradaBrokering Features
• Multiple protocol transport support: In publish-subscribe paradigm with different protocols on each link. Transport protocols supported include TCP, Parallel TCP streams, UDP, Multicast, SSL, HTTP and HTTPS. Communications through authenticating proxies/firewalls & NATs. Network QoS based routing. Allows highest performance transport.
• Subscription formats: Subscription can be Strings, Integers, XPath queries, Regular Expressions, SQL and tag=value pairs.
• Reliable delivery: Robust and exactly-once delivery in presence of failures.
• Ordered delivery: Producer Order and Total Order over a message type. Time Ordered delivery using Grid-wide NTP based absolute time.
• Recovery and Replay: Recovery from failures and disconnects. Replay of events/messages at any time. Buffering services.
• Security: Message-level WS-Security compatible security.
• Message payload options: Compression and decompression of payloads. Fragmentation and coalescing of payloads.
• Messaging related compliance: Java Message Service (JMS) 1.0.2b compliant. Support for routing P2P JXTA interactions.
• Grid feature support: NaradaBrokering enhanced Grid-FTP. Bridge to Globus GT3.
• Web Services supported: Implementations of WS-ReliableMessaging, WS-Reliability and WS-Eventing.
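
To make the idea of several subscription formats concrete, here is a small sketch that matches one published message against string, regular-expression and tag=value subscriptions. The `(kind, value)` tuples, topic names and tags are invented for illustration and do not reflect how NaradaBrokering represents subscriptions internally.

```python
import re


def matches(subscription, topic, tags):
    """Return True if a published message satisfies one subscription."""
    kind, value = subscription
    if kind == "string":    # exact topic name
        return topic == value
    if kind == "regex":     # regular expression over the whole topic name
        return re.fullmatch(value, topic) is not None
    if kind == "tags":      # every tag=value pair must be on the message
        return all(tags.get(key) == val for key, val in value.items())
    raise ValueError(f"unknown subscription kind: {kind}")


subscriptions = [
    ("string", "gps/sopac/ascii"),
    ("regex", r"gps/.+/gml"),
    ("tags", {"network": "sopac", "format": "gml"}),
]

# One published message: a topic name plus descriptive tags.
topic = "gps/sopac/gml"
tags = {"network": "sopac", "format": "gml", "station": "P001"}

matched = [sub[0] for sub in subscriptions if matches(sub, topic, tags)]
print(matched)
```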

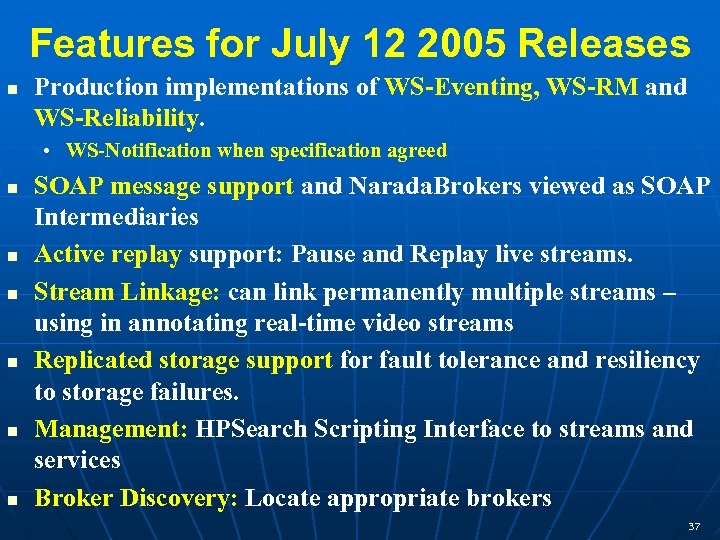

Features for July 12 2005 Releases
• Production implementations of WS-Eventing, WS-RM and WS-Reliability.
  • WS-Notification when specification agreed
• SOAP message support and NaradaBrokers viewed as SOAP Intermediaries
• Active replay support: Pause and Replay live streams.
• Stream Linkage: can permanently link multiple streams – used in annotating real-time video streams
• Replicated storage support for fault tolerance and resiliency to storage failures.
• Management: HPSearch Scripting Interface to streams and services
• Broker Discovery: Locate appropriate brokers

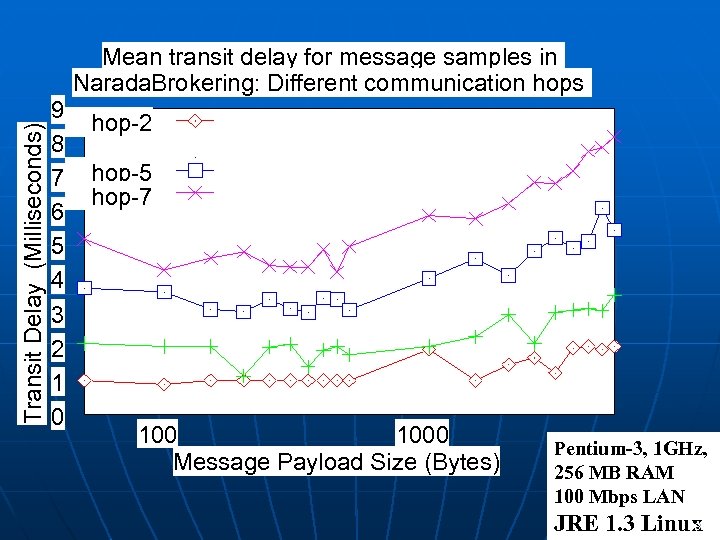

Mean transit delay for message samples in NaradaBrokering: Different communication hops
[Plot: y axis Transit Delay (Milliseconds), 0 to 9; x axis Message Payload Size (Bytes); series hop-2, hop-3, hop-5, hop-7. Test setup: Pentium-3, 1 GHz, 256 MB RAM, 100 Mbps LAN, JRE 1.3, Linux]

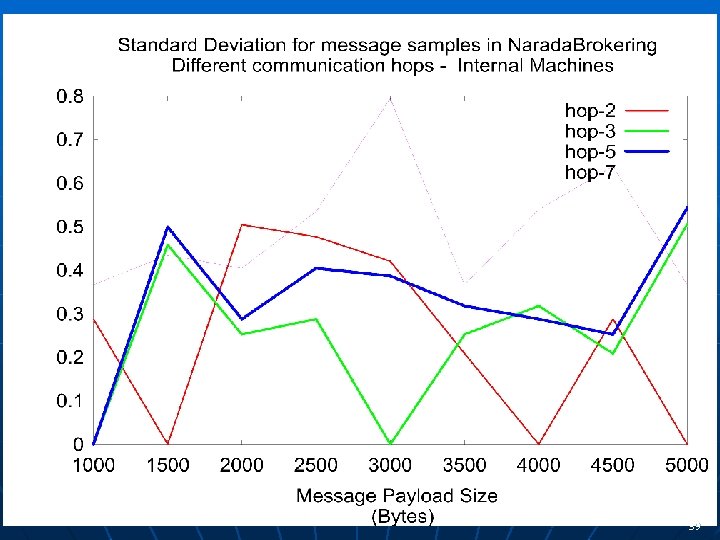

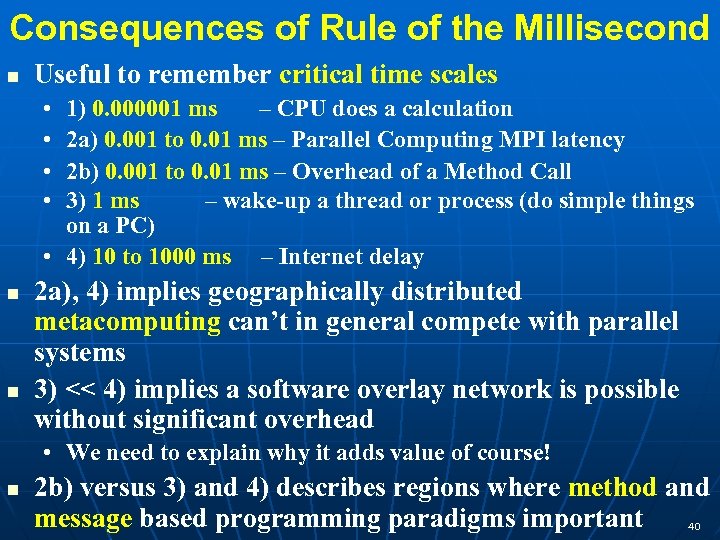

Consequences of Rule of the Millisecond
Useful to remember critical time scales
• 1) 0.000001 ms – CPU does a calculation
• 2a) 0.001 to 0.01 ms – Parallel Computing MPI latency
• 2b) 0.001 to 0.01 ms – Overhead of a Method Call
• 3) 1 ms – wake-up a thread or process (do simple things on a PC)
• 4) 10 to 1000 ms – Internet delay
2a), 4) implies geographically distributed metacomputing can’t in general compete with parallel systems
3) << 4) implies a software overlay network is possible without significant overhead
• We need to explain why it adds value of course!
2b) versus 3) and 4) describes regions where method and message based programming paradigms are important
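
The arithmetic behind these comparisons is simple enough to check directly. The sketch below takes the time scales from the slide (in milliseconds) and computes how many times slower one scale is than another; the dictionary keys and the helper `ratio_range` are names made up for this example.

```python
import math

# (low, high) estimates in milliseconds, taken from the list above
TIMESCALES_MS = {
    "cpu_calculation": (1e-6, 1e-6),
    "mpi_latency": (1e-3, 1e-2),
    "method_call": (1e-3, 1e-2),
    "thread_wakeup": (1.0, 1.0),
    "internet_delay": (10.0, 1000.0),
}


def ratio_range(slower, faster):
    """Return (smallest, largest) ratio of the slower scale to the faster."""
    slow_lo, slow_hi = TIMESCALES_MS[slower]
    fast_lo, fast_hi = TIMESCALES_MS[faster]
    return slow_lo / fast_hi, slow_hi / fast_lo


for slower, faster in [("internet_delay", "mpi_latency"),
                       ("internet_delay", "thread_wakeup")]:
    lo, hi = ratio_range(slower, faster)
    print(f"{slower} / {faster}: "
          f"10^{math.log10(lo):.0f} to 10^{math.log10(hi):.0f}")
```

Internet delay comes out three to six orders of magnitude above MPI latency, which is why tightly coupled parallel codes do not spread well across wide-area links. The same delay is only one to three orders of magnitude above a thread wake-up, so a broker hop of about a millisecond adds little to an Internet path.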

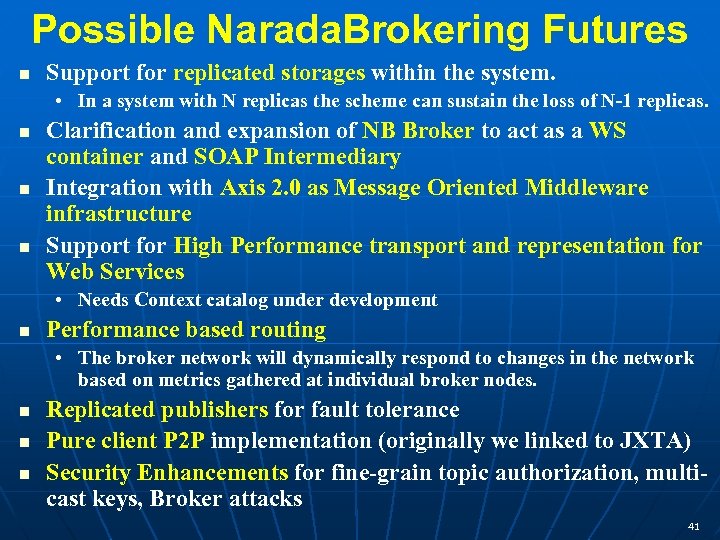

Possible NaradaBrokering Futures
Support for replicated storage within the system.
• In a system with N replicas the scheme can sustain the loss of N-1 replicas.
Clarification and expansion of the NB broker to act as a WS container and SOAP intermediary
Integration with Axis 2.0 as Message Oriented Middleware infrastructure
Support for high-performance transport and representation for Web Services
• Needs the Context catalog under development
Performance-based routing
• The broker network will dynamically respond to changes in the network based on metrics gathered at individual broker nodes.
Replicated publishers for fault tolerance
Pure client P2P implementation (originally we linked to JXTA)
Security enhancements for fine-grain topic authorization, multicast keys, broker attacks
41
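The N-1 claim for replicated storage can be illustrated with a toy model. This is a minimal sketch assuming the simplest possible scheme (every write goes to every live replica, reads use any survivor); the `ReplicatedStore` class is hypothetical and is not the NaradaBrokering storage API.

```python
class ReplicatedStore:
    """Toy replicated store: every write goes to all live replicas."""

    def __init__(self, n_replicas):
        # Each replica is a plain dict; None marks a lost replica.
        self.replicas = [dict() for _ in range(n_replicas)]

    def put(self, key, value):
        for replica in self.replicas:
            if replica is not None:
                replica[key] = value

    def fail(self, index):
        """Simulate the loss of one replica."""
        self.replicas[index] = None

    def get(self, key):
        """Read from the first surviving replica."""
        for replica in self.replicas:
            if replica is not None:
                return replica[key]
        raise RuntimeError("all replicas lost")
```

With four replicas, three calls to `fail` still leave `get` working; only the loss of the last replica makes the data unavailable.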


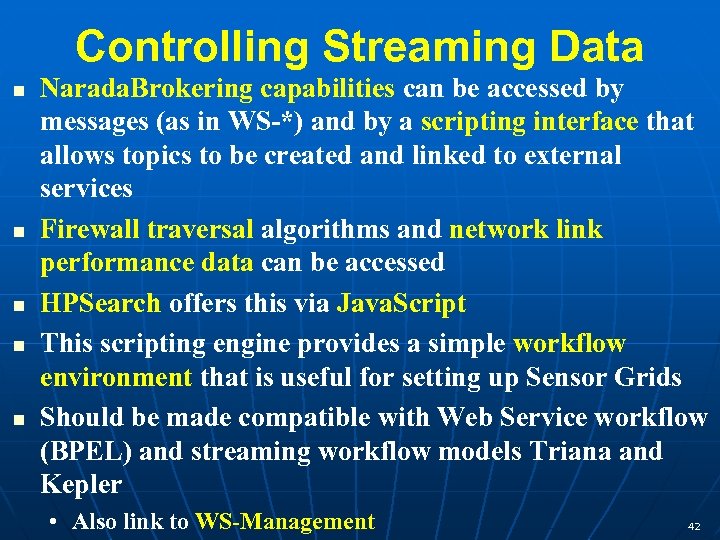

Controlling Streaming Data
NaradaBrokering capabilities can be accessed by messages (as in WS-*) and by a scripting interface that allows topics to be created and linked to external services
Firewall traversal algorithms and network link performance data can be accessed
HPSearch offers this via JavaScript
This scripting engine provides a simple workflow environment that is useful for setting up Sensor Grids
Should be made compatible with Web Service workflow (BPEL) and the streaming workflow models Triana and Kepler
• Also link to WS-Management
42


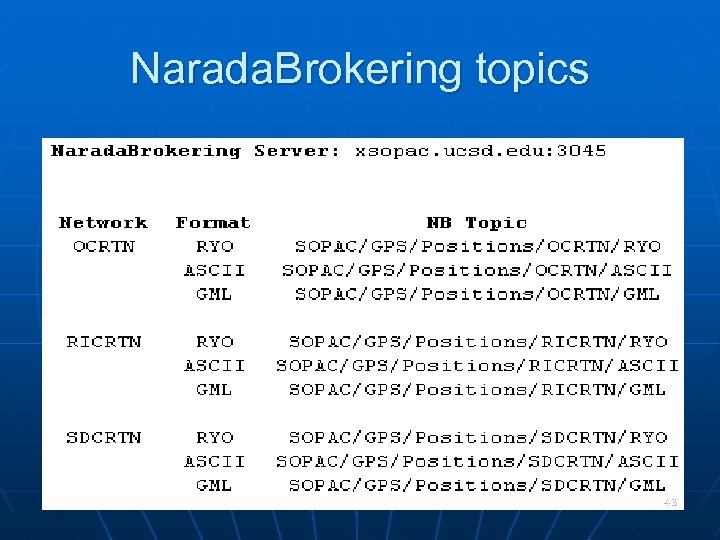

NaradaBrokering topics
43


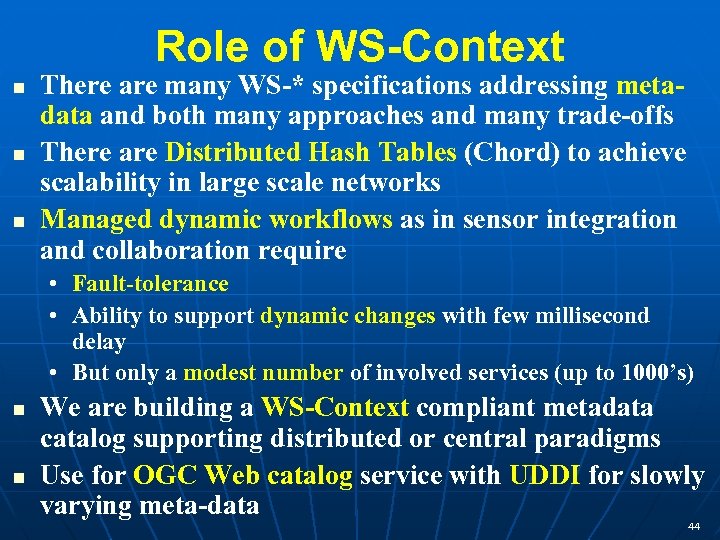

Role of WS-Context
There are many WS-* specifications addressing metadata, with many approaches and many trade-offs
There are Distributed Hash Tables (Chord) to achieve scalability in large-scale networks
Managed dynamic workflows, as in sensor integration and collaboration, require
• Fault tolerance
• Ability to support dynamic changes with a delay of a few milliseconds
• But only a modest number of involved services (up to thousands)
We are building a WS-Context compliant metadata catalog supporting distributed or central paradigms
Use for OGC Web catalog service, with UDDI for slowly varying metadata
44


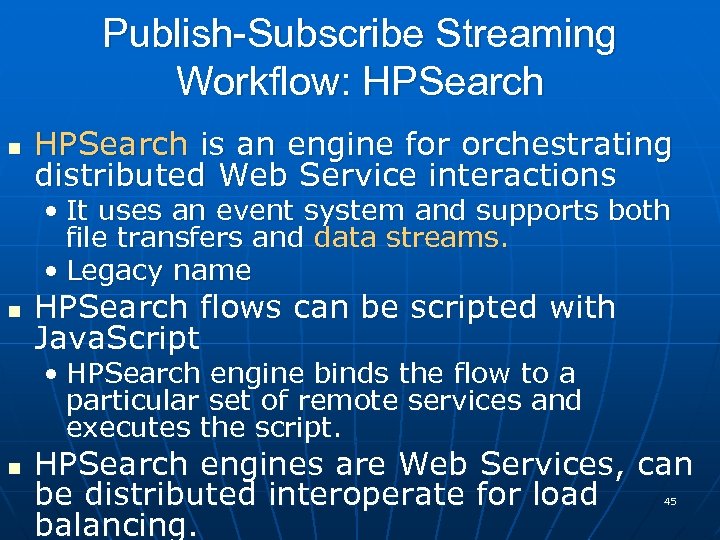

Publish-Subscribe Streaming Workflow: HPSearch is an engine for orchestrating distributed Web Service interactions
• It uses an event system and supports both file transfers and data streams.
• Legacy name
HPSearch flows can be scripted with JavaScript
• The HPSearch engine binds the flow to a particular set of remote services and executes the script.
HPSearch engines are Web Services and can be distributed and interoperate for load balancing.
45
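The idea of binding a flow to a set of services over publish-subscribe topics can be sketched in a few lines. This is an illustration in Python rather than HPSearch's JavaScript scripting, and `Broker`, `bind_flow` and the topic names are assumptions made for this example, not the HPSearch or NaradaBrokering API.

```python
from collections import defaultdict


class Broker:
    """Minimal in-process publish-subscribe broker (stand-in for a broker network)."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, event):
        for callback in list(self.subscribers[topic]):
            callback(event)


def bind_flow(broker, source_topic, stages, sink_topic):
    """Chain services: each stage reads one topic and publishes its result to the next."""
    intermediate = [f"{source_topic}/stage{i}" for i in range(1, len(stages))]
    topics = [source_topic] + intermediate + [sink_topic]
    for service, in_topic, out_topic in zip(stages, topics, topics[1:]):
        # Default arguments freeze the per-stage values inside the closure.
        def on_event(event, service=service, out_topic=out_topic):
            broker.publish(out_topic, service(event))
        broker.subscribe(in_topic, on_event)
```

A flow of two stages, for instance a filter followed by a format conversion, is bound with `bind_flow(broker, "raw", [filter_fn, convert_fn], "gml")`; any subscriber on the sink topic then receives the converted events as they stream through.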


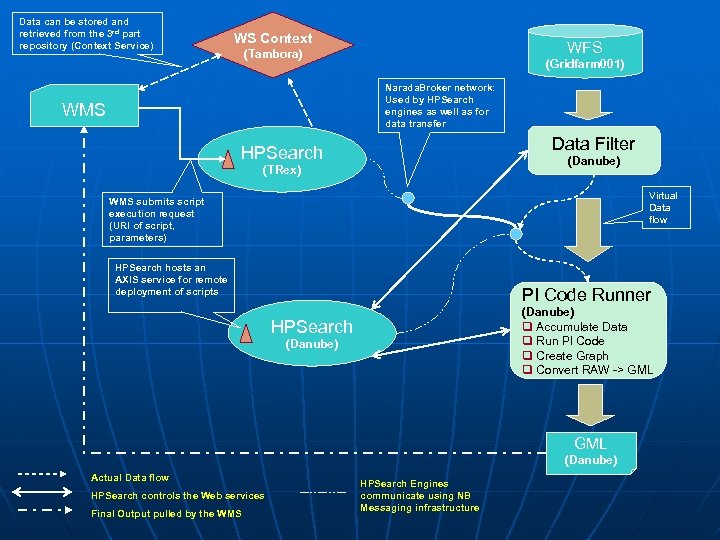

[Figure: HPSearch workflow diagram. Components (host): WS-Context (Tambora), WFS (Gridfarm001), Data Filter (Danube), HPSearch (TRex), PI Code Runner (Danube), HPSearch (Danube), GML (Danube), WMS. Legend: virtual data flow, actual data flow.]
Data can be stored in and retrieved from the third-party repository (Context Service)
NaradaBroker network: used by HPSearch engines as well as for data transfer
WMS submits a script execution request (URI of script, parameters)
HPSearch hosts an AXIS service for remote deployment of scripts
• Accumulate data
• Run PI code
• Create graph
• Convert RAW -> GML
HPSearch controls the Web services
Final output pulled by the WMS
HPSearch engines communicate using the NB messaging infrastructure


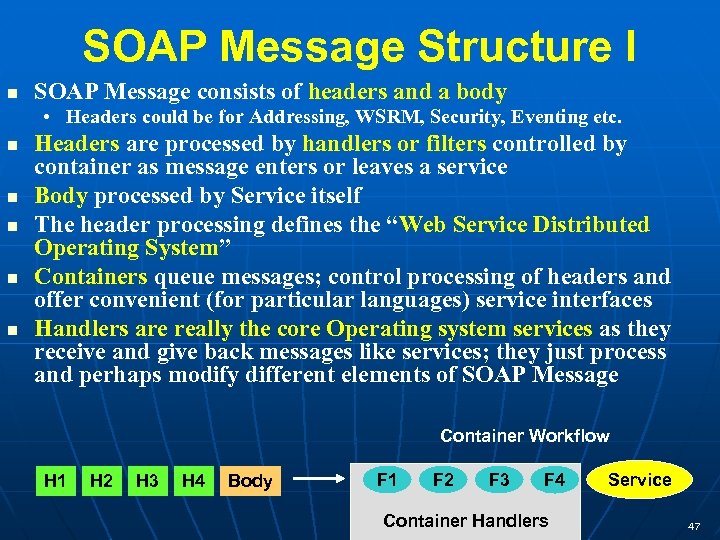

SOAP Message Structure I
A SOAP message consists of headers and a body
• Headers could be for Addressing, WSRM, Security, Eventing etc.
Headers are processed by handlers or filters controlled by the container as the message enters or leaves a service
Body is processed by the service itself
The header processing defines the “Web Service Distributed Operating System”
Containers queue messages, control processing of headers and offer convenient (for particular languages) service interfaces
Handlers are really the core operating system services, as they receive and give back messages like services; they just process and perhaps modify different elements of the SOAP message
[Figure: a SOAP message with headers H1 to H4 and a body passes through container handlers F1 to F4 before reaching the service; labels: Container, Workflow, Handlers, Service]
47
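The division of labour between container, handlers and service can be sketched as a simple handler chain. This is a minimal illustration, not the Axis handler API: the `SoapMessage`, `Container` and handler names are hypothetical, and headers are modelled as a plain dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class SoapMessage:
    headers: dict = field(default_factory=dict)  # header name -> content
    body: str = ""


def addressing_handler(msg):
    # Consumes the (hypothetical) "Addressing" header and records the target.
    msg.headers["routed_to"] = msg.headers.pop("Addressing", None)
    return msg


def security_handler(msg):
    # Rejects messages that carry no (hypothetical) "Security" header.
    if "Security" not in msg.headers:
        raise PermissionError("missing Security header")
    return msg


class Container:
    """Runs each handler over the headers, then hands only the body to the service."""

    def __init__(self, handlers, service):
        self.handlers = handlers
        self.service = service

    def deliver(self, msg):
        for handler in self.handlers:
            msg = handler(msg)
        return self.service(msg.body)
```

Each handler receives a message and gives one back, exactly like a service would, but touches only the headers; the service function never sees them.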


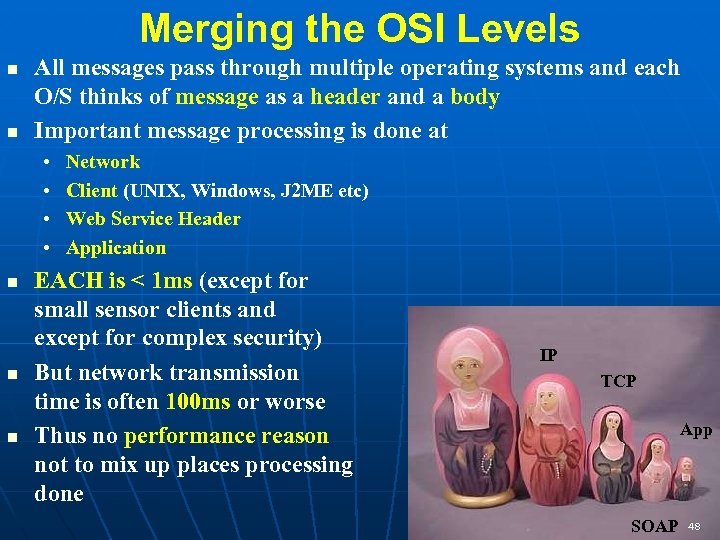

Merging the OSI Levels
All messages pass through multiple operating systems, and each O/S thinks of a message as a header and a body
Important message processing is done at
• Network
• Client (UNIX, Windows, J2ME etc.)
• Web Service header
• Application
EACH is < 1 ms (except for small sensor clients and except for complex security)
But network transmission time is often 100 ms or worse
Thus there is no performance reason not to mix up the places where processing is done
[Figure: message layers labelled IP, TCP, App, SOAP]
48


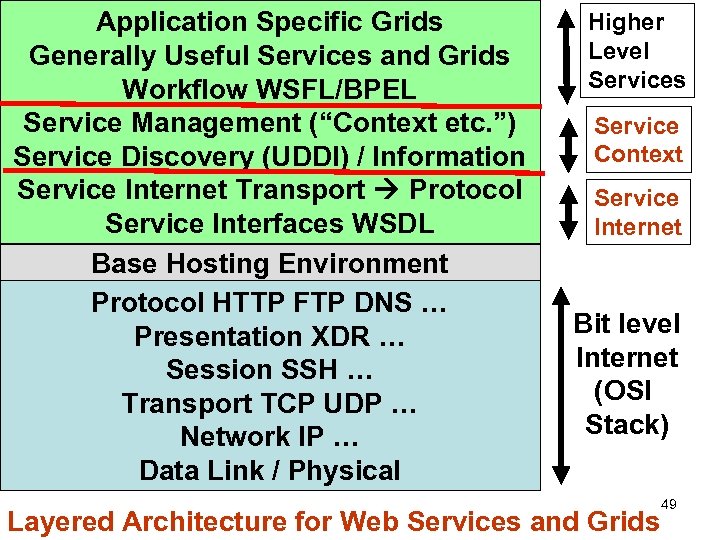

Layered Architecture for Web Services and Grids
Higher Level Services
• Application Specific Grids
• Generally Useful Services and Grids
• Workflow WSFL/BPEL
Service Context
• Service Management (“Context etc.”)
• Service Discovery (UDDI) / Information
Service Internet
• Service Internet Transport Protocol
• Service Interfaces WSDL
Base Hosting Environment
Bit level Internet (OSI Stack)
• Protocol: HTTP FTP DNS …
• Presentation: XDR …
• Session: SSH …
• Transport: TCP UDP …
• Network: IP …
• Data Link / Physical
49


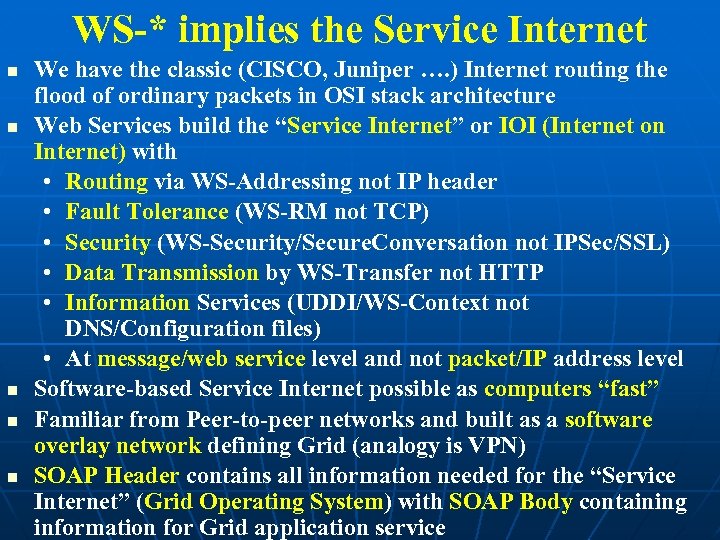

WS-* implies the Service Internet
We have the classic (CISCO, Juniper …) Internet routing the flood of ordinary packets in the OSI stack architecture
Web Services build the “Service Internet” or IOI (Internet on Internet) with
• Routing via WS-Addressing, not the IP header
• Fault tolerance (WS-RM, not TCP)
• Security (WS-Security/SecureConversation, not IPSec/SSL)
• Data transmission by WS-Transfer, not HTTP
• Information services (UDDI/WS-Context, not DNS/configuration files)
• At the message/web service level and not the packet/IP address level
A software-based Service Internet is possible as computers are “fast”
Familiar from peer-to-peer networks and built as a software overlay network defining the Grid (analogy is VPN)
The SOAP header contains all information needed for the “Service Internet” (Grid Operating System), with the SOAP body containing information for the Grid application service
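To make "routing via WS-Addressing, not the IP header" concrete, the sketch below builds a SOAP envelope whose routing data sits entirely in the header and then makes a routing decision without reading the body. It uses only the Python standard library; the namespaces are those of SOAP 1.1 and WS-Addressing 1.0, while the `SensorReading` payload element, the endpoint URI and the function names are made up for this example.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
WSA_NS = "http://www.w3.org/2005/08/addressing"        # WS-Addressing 1.0 namespace


def build_envelope(to, action, message_id, payload_text):
    """Build a SOAP envelope whose routing data lives entirely in the header."""
    ET.register_namespace("soap", SOAP_NS)
    ET.register_namespace("wsa", WSA_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    header = ET.SubElement(envelope, f"{{{SOAP_NS}}}Header")
    for tag, text in (("To", to), ("Action", action), ("MessageID", message_id)):
        ET.SubElement(header, f"{{{WSA_NS}}}{tag}").text = text
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    # Hypothetical application payload element, not taken from any real service.
    ET.SubElement(body, "SensorReading").text = payload_text
    return envelope


def route(envelope):
    """Service-level routing decision: read wsa:To and ignore the body."""
    return envelope.find(f"{{{SOAP_NS}}}Header/{{{WSA_NS}}}To").text


if __name__ == "__main__":
    env = build_envelope("http://example.org/services/filter",
                         "urn:example:publish", "urn:uuid:0001", "42")
    print(ET.tostring(env, encoding="unicode"))
    print("route to:", route(env))
```

An intermediary that only calls `route` behaves like a router in the "Service Internet": it forwards on the header alone and leaves the body to the application service.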


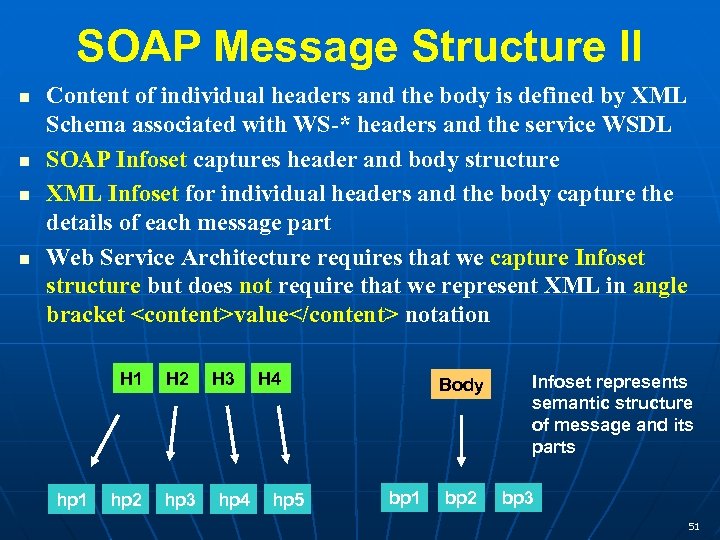

SOAP Message Structure II
Content of individual headers and the body is defined by XML Schema associated with WS-* headers and the service WSDL
SOAP Infoset captures header and body structure
XML Infosets for individual headers and the body capture the details of each message part
Web Service Architecture requires that we capture Infoset structure but does not require that we represent XML in angle brackets


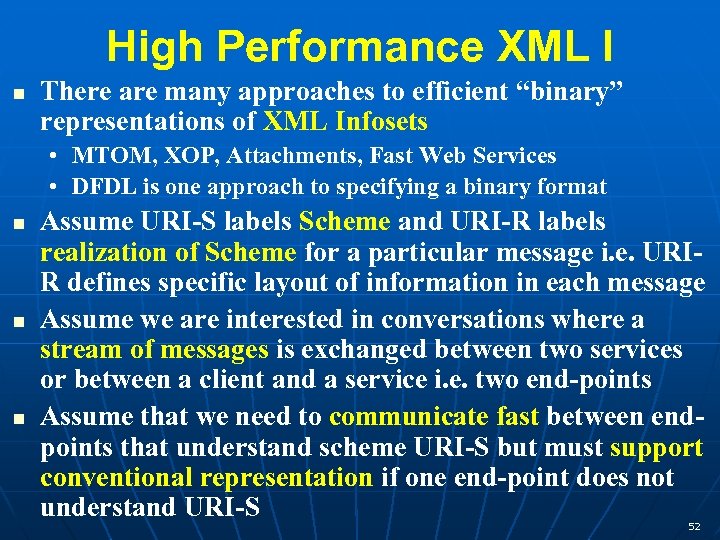

High Performance XML I
There are many approaches to efficient “binary” representations of XML Infosets
• MTOM, XOP, Attachments, Fast Web Services
• DFDL is one approach to specifying a binary format
Assume URI-S labels the scheme and URI-R labels the realization of the scheme for a particular message, i.e. URI-R defines the specific layout of information in each message
Assume we are interested in conversations where a stream of messages is exchanged between two services or between a client and a service, i.e. two end-points
Assume that we need to communicate fast between end-points that understand scheme URI-S but must support the conventional representation if one end-point does not understand URI-S
52


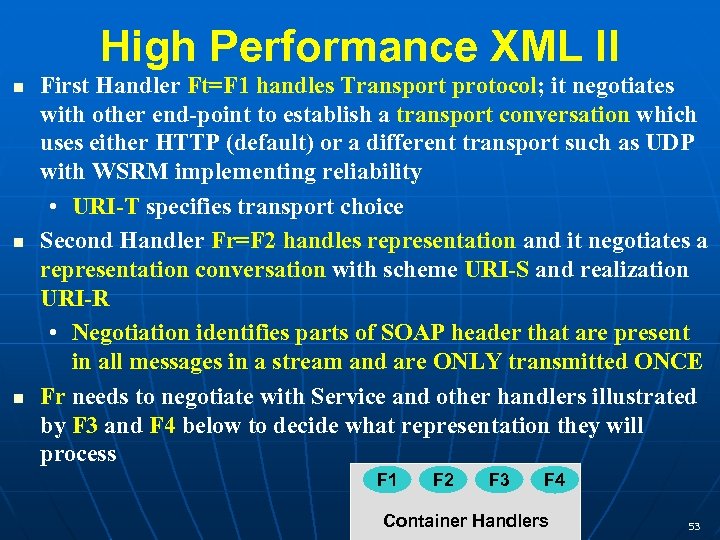

High Performance XML II
First handler Ft=F1 handles the transport protocol; it negotiates with the other end-point to establish a transport conversation which uses either HTTP (default) or a different transport such as UDP with WSRM implementing reliability
• URI-T specifies the transport choice
Second handler Fr=F2 handles representation, and it negotiates a representation conversation with scheme URI-S and realization URI-R
• Negotiation identifies parts of the SOAP header that are present in all messages in a stream and are ONLY transmitted ONCE
Fr needs to negotiate with the service and other handlers, illustrated by F3 and F4 below, to decide what representation they will process
[Figure: container handlers F1, F2, F3, F4]
53
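The two negotiations described here, choosing a transport or representation both end-points understand with a fallback to the default, and finding header parts that need to be sent only once, can be sketched as follows. This is an illustration under simple assumptions: headers are dictionaries, capabilities are sets of URIs, and the URIs and function names are hypothetical.

```python
CONVENTIONAL = "text/xml"  # stand-in label for ordinary angle-bracket SOAP


def negotiate(preferred, local_supported, remote_supported, default):
    """Pick the first preferred URI both end-points support, else the default."""
    for uri in preferred:
        if uri in local_supported and uri in remote_supported:
            return uri
    return default


def split_constant_headers(messages):
    """Return (headers identical in every message, per-message remainder).

    `messages` is a non-empty list of header dictionaries from one stream.
    """
    first, *rest = messages
    constant = {k: v for k, v in first.items()
                if all(k in m and m[k] == v for m in rest)}
    varying = [{k: v for k, v in m.items() if k not in constant} for m in messages]
    return constant, varying
```

If the remote end-point does not list the binary scheme, `negotiate` returns the conventional representation, which matches the requirement on the previous slide; `split_constant_headers` gives the part of the header that can be transmitted once and the part that still travels with each message.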


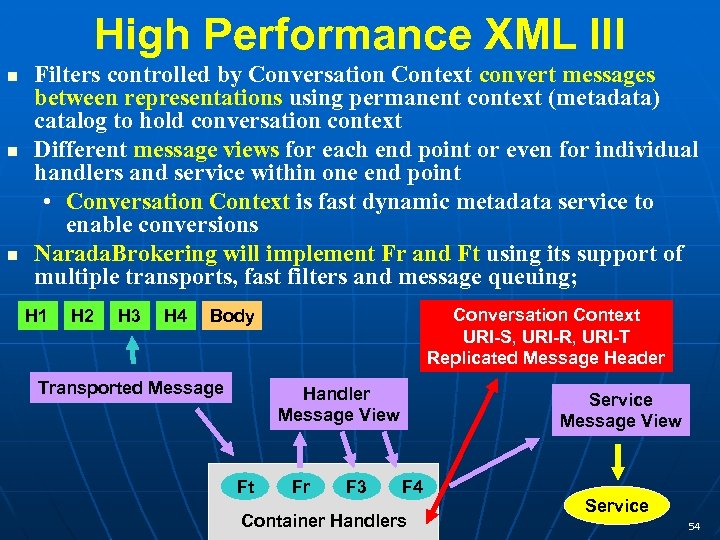

High Performance XML III
Filters controlled by the Conversation Context convert messages between representations, using a permanent context (metadata) catalog to hold the conversation context
Different message views for each end-point or even for individual handlers and the service within one end-point
• Conversation Context is a fast dynamic metadata service to enable conversions
NaradaBrokering will implement Fr and Ft using its support of multiple transports, fast filters and message queuing
[Figure: the Conversation Context (URI-S, URI-R, URI-T, replicated message header) controls handlers Ft, Fr, F3 and F4 in the container; headers H1 to H4 and the body appear as the transported message, the handler message view and the service message view]
54
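A filter pair driven by a conversation context might look like the sketch below: the outgoing filter strips headers that the context already holds, and the incoming filter rebuilds the full view for handlers and service. The `ConversationContext` class, its field names and the message layout are assumptions for illustration, not the WS-Context interface.

```python
class ConversationContext:
    """Toy metadata catalog keyed by a conversation id (hypothetical layout)."""

    def __init__(self):
        self._entries = {}

    def open(self, conversation_id, uri_s, uri_r, uri_t, replicated_headers):
        self._entries[conversation_id] = {
            "URI-S": uri_s, "URI-R": uri_r, "URI-T": uri_t,
            "replicated_headers": dict(replicated_headers),
        }

    def lookup(self, conversation_id):
        return self._entries[conversation_id]


def to_transported(context, conversation_id, headers, body):
    """Outgoing filter: drop headers the context already holds with the same value."""
    replicated = context.lookup(conversation_id)["replicated_headers"]
    slim = {k: v for k, v in headers.items()
            if k not in replicated or replicated[k] != v}
    return {"conversation": conversation_id, "headers": slim, "body": body}


def to_service_view(context, transported):
    """Incoming filter: rebuild the full header set for handlers and service."""
    replicated = context.lookup(transported["conversation"])["replicated_headers"]
    return {"headers": {**replicated, **transported["headers"]},
            "body": transported["body"]}
```

The transported message and the service message view differ, but the round trip through the two filters restores every header, so handlers and the service can keep working with the conventional view.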


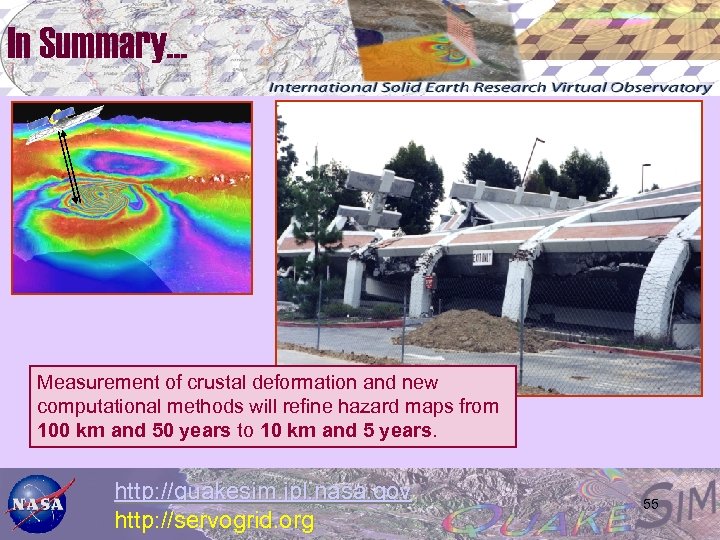

In Summary…
Measurement of crustal deformation and new computational methods will refine hazard maps from 100 km and 50 years to 10 km and 5 years.
http://quakesim.jpl.nasa.gov
http://servogrid.org
55


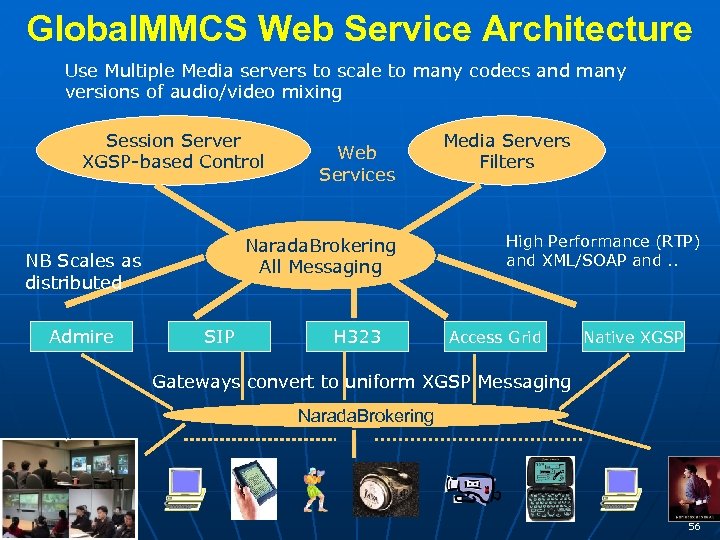

GlobalMMCS Web Service Architecture
[Figure: architecture diagram with the following labels]
• Use multiple media servers to scale to many codecs and many versions of audio/video mixing
• Session Server: XGSP-based control
• NaradaBrokering: all messaging
• NB scales as distributed Web Services
• Media Servers, Filters
• Gateways (Admire, SIP, H323, Access Grid, Native XGSP) convert to uniform XGSP messaging
• High performance (RTP) and XML/SOAP and ..
56


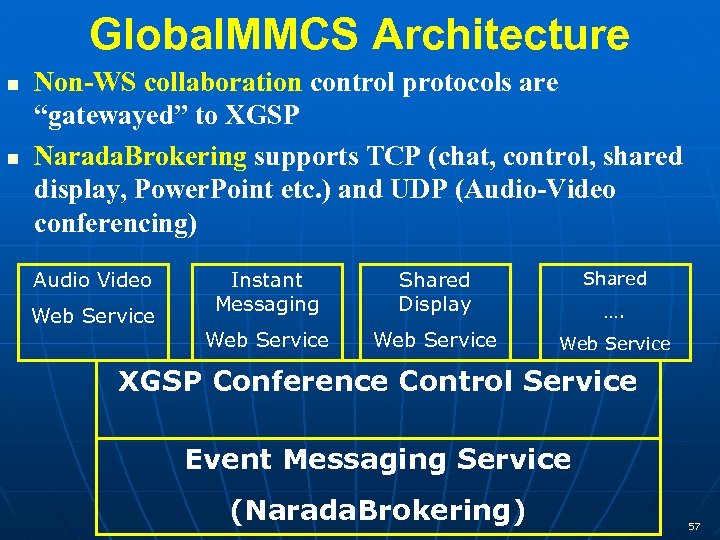

GlobalMMCS Architecture
Non-WS collaboration control protocols are “gatewayed” to XGSP
NaradaBrokering supports TCP (chat, control, shared display, PowerPoint etc.) and UDP (audio-video conferencing)
[Figure: layered services. Top: Audio Video Web Service, Instant Messaging, Shared Display, Shared Web Service, …; middle: XGSP Conference Control Service; bottom: Event Messaging Service (NaradaBrokering)]
57


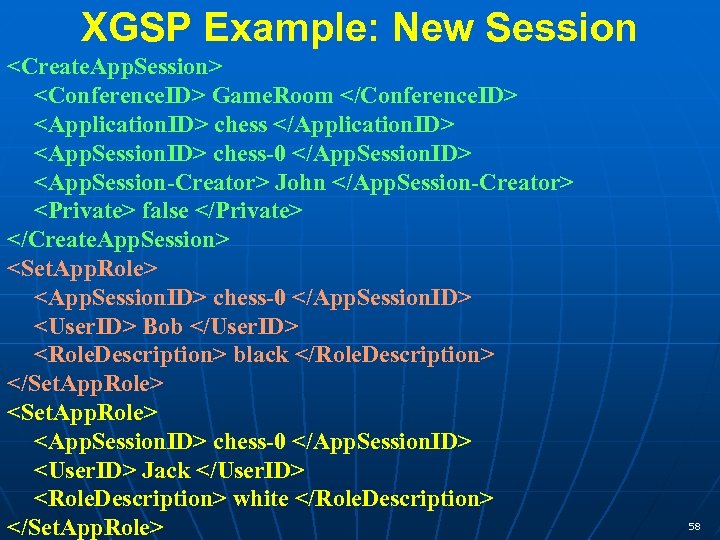

XGSP Example: New Session


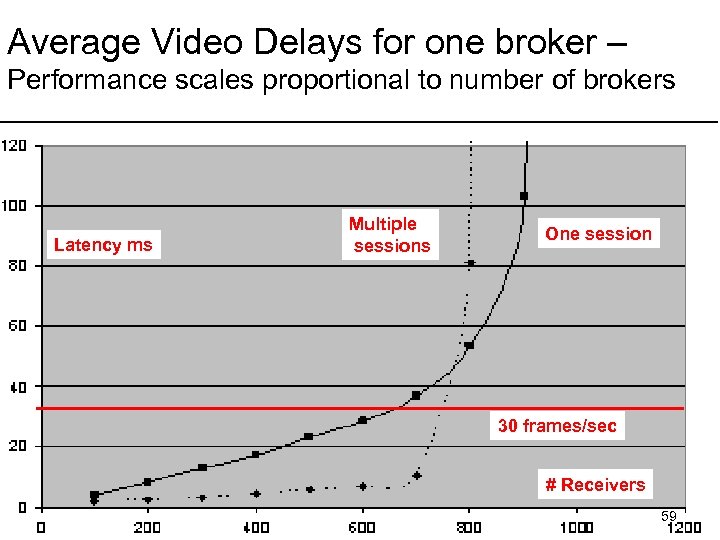

Average Video Delays for one broker – Performance scales proportional to number of brokers
[Figure: latency (ms) versus number of receivers, for multiple sessions and for one session, at 30 frames/sec]
59


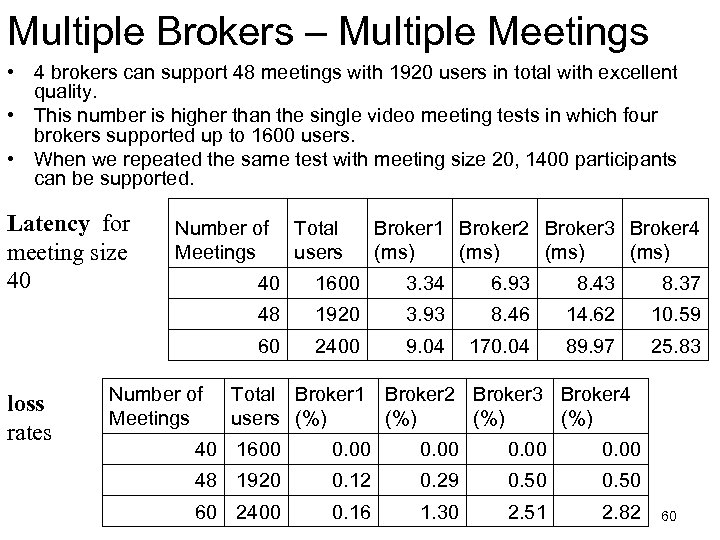

Multiple Brokers – Multiple Meetings
• 4 brokers can support 48 meetings with 1920 users in total with excellent quality.
• This number is higher than in the single video meeting tests, in which four brokers supported up to 1600 users.
• When we repeated the same test with meeting size 20, 1400 participants could be supported.

Latency (ms) for meeting size 40

| Number of Meetings | Total users | Broker 1 | Broker 2 | Broker 3 | Broker 4 |
|---|---|---|---|---|---|
| 40 | 1600 | 3.34 | 6.93 | 8.43 | 8.37 |
| 48 | 1920 | 3.93 | 8.46 | 14.62 | 10.59 |
| 60 | 2400 | 9.04 | 170.04 | 89.97 | 25.83 |

Loss rates (%) for meeting size 40, Broker 1 to Broker 4 (some values missing)
• 40 meetings, 1600 users: 0.00
• 48 meetings, 1920 users: 0.12, 0.29, 0.50
• 60 meetings, 2400 users: 0.16, 1.30, 2.51, 2.82
60
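The totals and the jump in latency at 60 meetings can be checked directly from the latency figures on this slide. The sketch below copies those figures into a dictionary; the function names are chosen for this example, and the assignment of rows to meeting counts follows the order of the slide data.

```python
MEETING_SIZE = 40

# Number of meetings -> latency in ms at Broker 1..4, copied from the slide.
LATENCY_MS = {
    40: (3.34, 6.93, 8.43, 8.37),
    48: (3.93, 8.46, 14.62, 10.59),
    60: (9.04, 170.04, 89.97, 25.83),
}


def total_users(meetings, meeting_size=MEETING_SIZE):
    """Total users is simply meetings times users per meeting."""
    return meetings * meeting_size


def mean_latency_ms(meetings):
    """Mean latency over the four brokers for a given number of meetings."""
    values = LATENCY_MS[meetings]
    return sum(values) / len(values)


def worst_broker(meetings):
    """Return (1-based broker number, latency in ms) of the slowest broker."""
    values = LATENCY_MS[meetings]
    index = max(range(len(values)), key=values.__getitem__)
    return index + 1, values[index]


if __name__ == "__main__":
    for meetings in LATENCY_MS:
        print(meetings, total_users(meetings),
              round(mean_latency_ms(meetings), 2), worst_broker(meetings))
```

The 48-meeting case gives 48 × 40 = 1920 users with every broker under 15 ms, while at 60 meetings one broker exceeds 170 ms, which is consistent with the slide's choice of 48 meetings as the supported load.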


PDA Download video (using 4-way video mixer service)
[Figure: screenshots labelled Desktop and PDA]
61


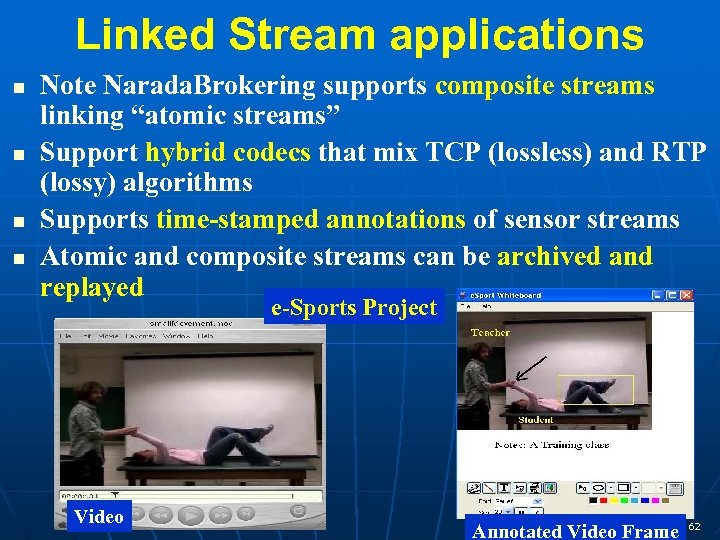

Linked Stream applications
Note NaradaBrokering supports composite streams linking “atomic streams”
Supports hybrid codecs that mix TCP (lossless) and RTP (lossy) algorithms
Supports time-stamped annotations of sensor streams
Atomic and composite streams can be archived and replayed
[Figure: e-Sports project, showing a video frame and an annotated video frame]
62
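Linking atomic streams into a composite stream by timestamp, and replaying a slice of the archive, can be sketched with the standard library. This is an illustration only: events are modelled as `(timestamp, source, payload)` tuples, each atomic stream is assumed to be already time-ordered, and the function names are not part of NaradaBrokering.

```python
import heapq


def composite_stream(*atomic_streams):
    """Merge time-ordered atomic streams of (timestamp, source, payload) events."""
    return heapq.merge(*atomic_streams, key=lambda event: event[0])


def replay(archive, start, end):
    """Replay archived events whose timestamp lies in [start, end]."""
    return [event for event in archive if start <= event[0] <= end]


if __name__ == "__main__":
    video = [(0.000, "video", "frame0"), (0.033, "video", "frame1"),
             (0.066, "video", "frame2")]
    notes = [(0.040, "annotation", "player enters frame")]
    archive = list(composite_stream(video, notes))
    for event in replay(archive, 0.030, 0.050):
        print(event)
```

Because the merge is driven by timestamps, an annotation made at 0.040 s lands between the video frames at 0.033 s and 0.066 s, and replaying the archived composite stream reproduces that interleaving.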
