064fd8ee1675c2b94ffa87ae529755ef.ppt

- Number of slides: 48

Service Configuration and Management: The Workload Management System (RB&WMS&LB)

D. Cesini (INFN-CNAF)

Scuola Amministratori Grid, Martina Franca (Taranto), 05/11/2007

www.ccr.infn.it | http://grid.infn.it/


Summary

- WMS overview
- Job state machine
- Installing, configuring and managing a WMS (LB)
- Debugging job failures and troubleshooting


Workload Management System (WMS)

- Is the Grid component that allows users to submit jobs.
- Performs all the tasks required to execute jobs.
- Comprises a set of Grid middleware components responsible for the distribution and management of tasks across Grid resources.
- Hides the complexity of the Grid from the user.


LCG-RB vs. gLite WMS (3.1)

- LCG-RB
  - Uses the Network Server (NS) as the interface to users
  - Allows submission only to LCG CEs
  - Allows only push mode
  - Contacts the BDII for every job submission
  - Allows only single-job submission
  - Is slower but very stable
- gLite WMS
  - Uses the web-services-based WMProxy as the interface to users
  - Allows submission to gLite, CREAM and LCG CEs
  - Allows both push and pull mode
  - Maintains a local cache of the BDII: the ISM
  - Allows new job types (e.g. collection and parametric jobs)
  - Is faster, but not yet as stable, although it is improving quickly


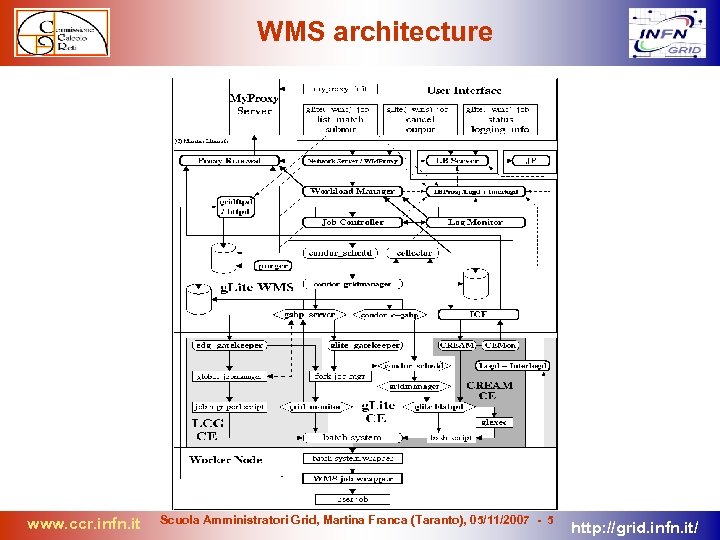

WMS architecture


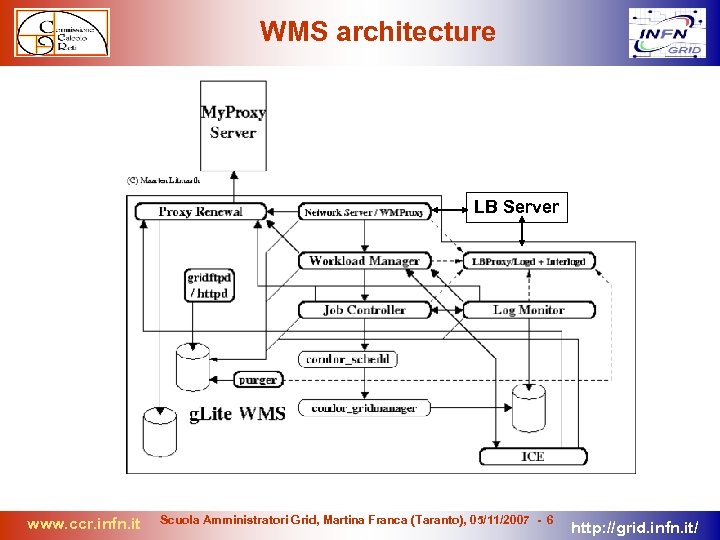

WMS architecture: LB Server


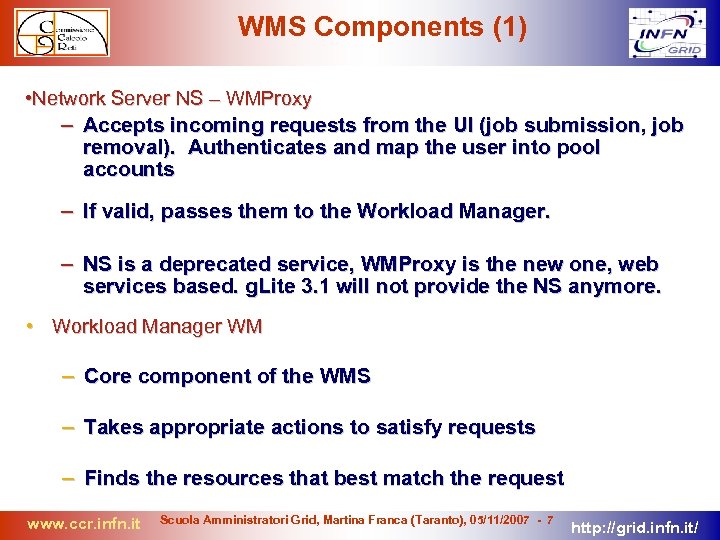

WMS Components (1)

- Network Server (NS) / WMProxy
  - Accepts incoming requests from the UI (job submission, job removal). Authenticates the user and maps them to a pool account.
  - If the request is valid, passes it to the Workload Manager.
  - The NS is a deprecated service; WMProxy is its web-services-based replacement. gLite 3.1 will no longer provide the NS.
- Workload Manager (WM)
  - Core component of the WMS
  - Takes the appropriate actions to satisfy requests
  - Finds the resources that best match the request


WMS Components (2)

Since the initial design, two submission models have been provided:

- Eager scheduling ("push" model): a job is bound to a resource as soon as possible. Once the decision has been taken, the job is passed to the selected resource for execution.
- Lazy scheduling ("pull" model): the job is held by the WM until a resource becomes available. When this happens, the resource is matched against the submitted job.

At the moment only the push model is used, for backward compatibility. The pull model requires the new gLite CE.
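The difference between the two models can be sketched as a toy simulation in Python. This is not WMS code: `push_model` and `pull_model` are hypothetical names, CEs are plain labels with a free-slot count, and requirement matching is reduced to "any CE will do".

```python
from collections import deque


def push_model(jobs, ce_free_slots):
    """Eager ("push") scheduling: bind every job to a CE at submission time.

    Each job goes to the CE that currently advertises the most free slots
    and then waits in that CE's queue, even if the CE fills up later.
    """
    free = dict(ce_free_slots)             # snapshot of the (possibly stale) information
    queues = {ce: [] for ce in free}
    for job in jobs:
        ce = max(free, key=free.get)       # decision taken once, immediately
        queues[ce].append(job)
        free[ce] -= 1                      # the bound job consumes a slot (may go negative)
    return queues


def pull_model(jobs, availability_events):
    """Lazy ("pull") scheduling: jobs stay in the WM until a CE asks for work.

    `availability_events` is the sequence of CEs announcing a free slot;
    each announcement is matched against the oldest pending job.
    """
    pending = deque(jobs)
    placed = []
    for ce in availability_events:
        if not pending:
            break
        placed.append((pending.popleft(), ce))   # the match happens only now
    return placed, list(pending)


if __name__ == "__main__":
    print(push_model(["j1", "j2", "j3"], {"ceA": 2, "ceB": 1}))
    print(pull_model(["j1", "j2", "j3"], ["ceB", "ceA"]))
```

In the push sketch the decision relies on a snapshot, so a job can end up queued at a CE that is already full; in the pull sketch no binding happens until a resource actually reports a free slot, and unmatched jobs simply stay pending in the WM.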


WMS Components (3)

- Job Controller (JC)
  - Is responsible for making the final touches to the JDL expression for a job before it is passed to CondorC for the actual submission
- Condor
  - Responsible for performing the actual job management operations:
    - job submission to the CE
    - job removal


WMS Components (4)

- Log Monitor (LM)
  - Is responsible for watching the CondorC log file, intercepting interesting events concerning active jobs
- Proxy Renewal Service
  - Is responsible for ensuring that a valid user proxy exists within the WMS for the whole lifetime of a job; the MyProxy server is contacted to renew the user's credential
- LB Proxy (gLite WMS only)
  - A local cache of the LB


LB Components

- Logging & Bookkeeping (LB) is responsible for:
  - storing the events generated by the various Grid components (UI, WMS, WN, ...)
  - providing this information on user request (job-status, job-logging-info)


ICE is the component responsible for submitting jobs to the new (not yet in production) CREAM CE. It will replace Condor completely.


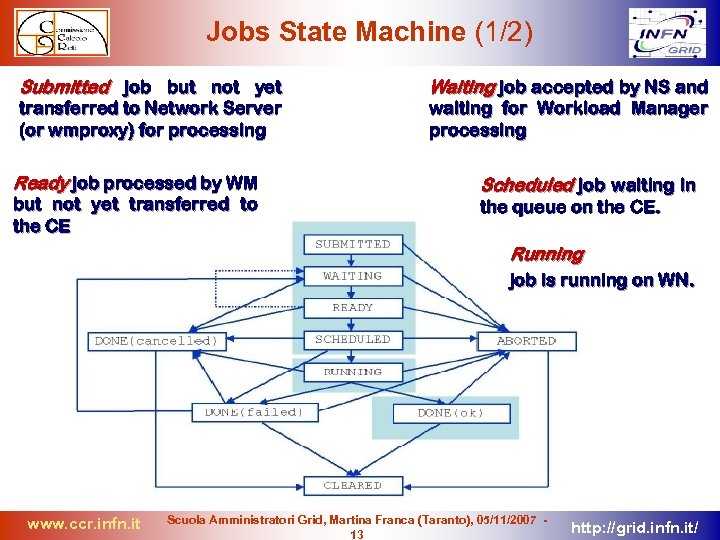

Jobs State Machine (1/2)

- Submitted: job submitted but not yet transferred to the Network Server (or WMProxy) for processing
- Waiting: job accepted by the NS and waiting for Workload Manager processing
- Ready: job processed by the WM but not yet transferred to the CE
- Scheduled: job waiting in the queue on the CE
- Running: job is running on a WN


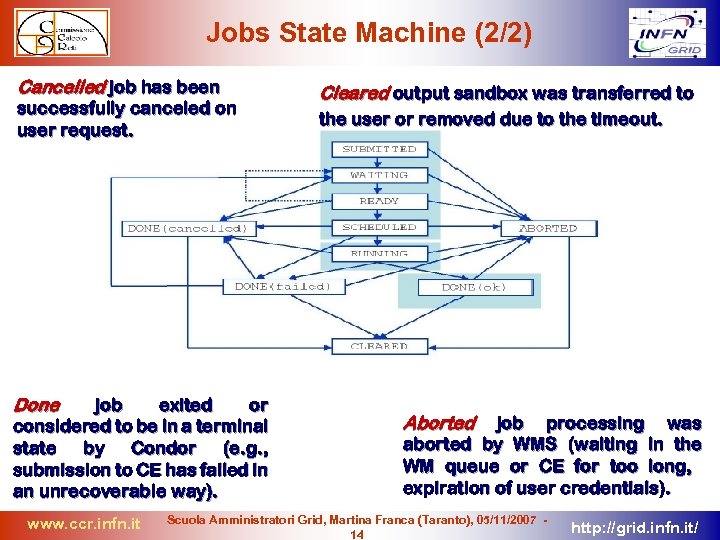

Jobs State Machine (2/2)

- Cancelled: job has been successfully cancelled on user request
- Done: job exited, or is considered to be in a terminal state by Condor (e.g. submission to the CE has failed in an unrecoverable way)
- Cleared: output sandbox was transferred to the user or removed due to the timeout
- Aborted: job processing was aborted by the WMS (waiting in the WM queue or at the CE for too long, expiration of user credentials)
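The states on these two slides can be encoded as a transition table, which is handy when reading a job's history from `job-logging-info`. The table below is a simplified assumption assembled from the slides only (forward path plus abort/cancel exits, Cleared reachable from Done); the real LB state machine has more edges, for example for resubmission. `TRANSITIONS`, `first_illegal_step` and `is_terminal` are hypothetical names.

```python
# Simplified transition table assembled from the two slides above. This is an
# assumption: the real LB state machine has more edges (e.g. resubmission paths).
TRANSITIONS = {
    "Submitted": {"Waiting", "Aborted", "Cancelled"},
    "Waiting":   {"Ready", "Aborted", "Cancelled"},
    "Ready":     {"Scheduled", "Aborted", "Cancelled"},
    "Scheduled": {"Running", "Aborted", "Cancelled"},
    "Running":   {"Done", "Aborted", "Cancelled"},
    "Done":      {"Cleared"},   # output sandbox retrieved or purged after a timeout
    "Aborted":   set(),
    "Cancelled": set(),
    "Cleared":   set(),
}


def first_illegal_step(history):
    """Return the first (from, to) pair not allowed by TRANSITIONS, or None."""
    for current, nxt in zip(history, history[1:]):
        if nxt not in TRANSITIONS.get(current, set()):
            return (current, nxt)
    return None


def is_terminal(state):
    """A state is terminal when no transition leaves it."""
    return not TRANSITIONS[state]
```

A history that skips a state (for example Submitted straight to Ready) is reported as the offending pair, which is a quick way to spot missing events in the LB.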


Installing/configuring a WMS (LB)

- Using YAIM is by far the easiest way to install a WMS
- gLite YAIM supports the following profiles:
  - glite-WMS
  - glite-LB
  - glite-WMSLB (now obsolete, do not use)
  - lcg-RB

If the machines are expected to be heavily loaded, it is strongly advised to use the separate profiles on different machines.

Important site.def parameters:

- WMS_HOST (RB_HOST)
- LB_HOST
- PX_HOST
- BDII_HOST
- all the usual users/groups.conf, Java, domain, VO… settings
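Before running YAIM it can be worth checking that the parameters listed above are actually set. The sketch assumes site.def uses shell-style `NAME=value` assignments (YAIM configuration files are sourced by the shell) and only checks for non-empty values; it does not expand variables or handle `export NAME=value`. The function names and the sample host names are hypothetical. On an LCG RB, check `RB_HOST` instead of `WMS_HOST`.

```python
import re

# Parameters named on the slide; on an LCG RB use RB_HOST instead of WMS_HOST.
REQUIRED = ("WMS_HOST", "LB_HOST", "PX_HOST", "BDII_HOST")

# NAME=value at the start of a line (shell-style assignment).
ASSIGNMENT = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def parse_site_def(text):
    """Return {NAME: value} for the simple assignments in a site.def-style file."""
    values = {}
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue                                  # skip comments
        match = ASSIGNMENT.match(line)
        if match:
            # Strip surrounding quotes; no variable expansion is attempted.
            values[match.group(1)] = match.group(2).strip().strip("'\"")
    return values


def missing_parameters(text, required=REQUIRED):
    """List the required parameters that are absent or empty."""
    values = parse_site_def(text)
    return [name for name in required if not values.get(name)]
```

For example, a file where `PX_HOST` is commented out and `BDII_HOST=` is left empty yields `["PX_HOST", "BDII_HOST"]`.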


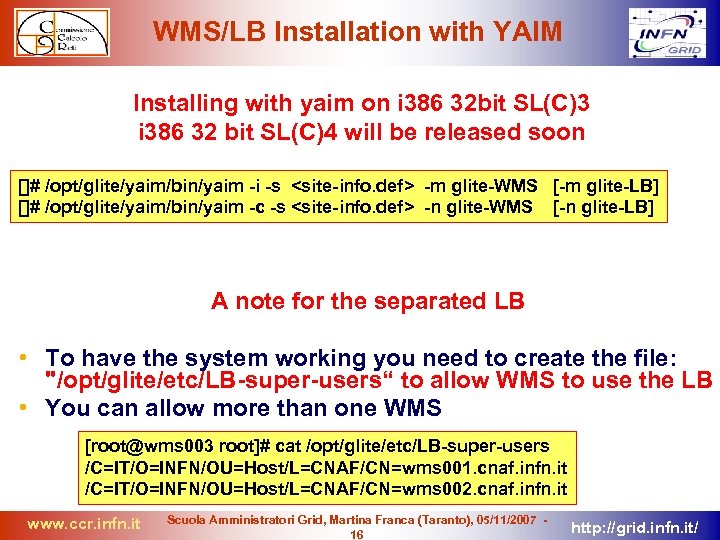

WMS/LB Installation with YAIM

Installing with YAIM on i386 32-bit SL(C)3 (i386 32-bit SL(C)4 will be released soon):

    []# /opt/glite/yaim/bin/yaim -i -s


![Running services (1/2)](https://present5.com/presentation/064fd8ee1675c2b94ffa87ae529755ef/image-17.jpg)

Running services (1/2)

On a WMS node:

    [root@wms002 root]# service gLite status
    glite-wms-in.ftpd (pid 10509 10162) is running...
    glite-lb-proxy running as 10204
    glite-lb-logd running
    glite-lb-interlogd running
    /opt/glite/bin/glite-wms-workload_manager (pid 7006) is running...
    JobController running in pid: 10376
    CondorG master running in pid: 10416
    CondorG schedd running in pid: 10423
    Logmonitor running...
    glite-proxy-renewd running
    WMProxy httpd listening on port 7443
    httpd (pid 22571 9091 10668 10560 10559 10558 10557 10556 10555 10552) is running..
    === WMProxy Server running instances:
    UID     PID   PPID  C STIME TTY  TIME  CMD
    glite 13988  10555  0 10:13 ?    00:00 /opt/glite/bin/glite_wms_wmproxy_server
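When checking a node by hand, it helps to compare the `service gLite status` output against the daemons expected on that node type. The sketch below is a plain text comparison: the expected names are copied from the WMS listing above, and it assumes that a line mentioning a daemon together with "running" or "listening" (but not "not running") means the daemon is up. How the real script reports a stopped daemon is not shown on the slide, so a daemon missing from the output is also treated as down. `down_daemons` and `collect_status` are hypothetical helpers.

```python
import subprocess

# Daemon names as they appear in the WMS-node listing on the slide.
EXPECTED_WMS = (
    "glite-wms-in.ftpd",
    "glite-lb-proxy",
    "glite-lb-logd",
    "glite-lb-interlogd",
    "glite-wms-workload_manager",
    "JobController",
    "CondorG master",
    "CondorG schedd",
    "Logmonitor",
    "glite-proxy-renewd",
    "WMProxy httpd",
)


def down_daemons(status_output, expected=EXPECTED_WMS):
    """Return the expected daemons that no 'running'/'listening' line mentions."""
    up_lines = [
        line for line in status_output.splitlines()
        if ("running" in line or "listening" in line) and "not running" not in line
    ]
    return [name for name in expected if not any(name in line for line in up_lines)]


def collect_status():
    """Run the gLite wrapper script (needs root on the service node)."""
    result = subprocess.run(["service", "gLite", "status"],
                            capture_output=True, text=True, check=False)
    return result.stdout
```

On the WMS node, `print(down_daemons(collect_status()))` lists whatever still needs a restart; for an LB node, pass a different `expected` tuple (for example `glite-lb-notif-interlogd`, `glite-lb-bkserverd`).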


![Running services (2/2)](https://present5.com/presentation/064fd8ee1675c2b94ffa87ae529755ef/image-18.jpg)

Running services (2/2)

On an LB node:

    [root@wms003 root]# service gLite status
    glite-lb-notif-interlogd running
    glite-lb-bkserverd running as 29931

You need MySQL running:

    mysql> show databases;
    +------------+
    | Database   |
    +------------+
    | lbserver20 |
    | mysql      |
    | test       |
    +------------+

PAY ATTENTION: you need MySQL on a WMS-only node as well, because of the LB proxy:

    mysql> show databases;
    +----------+
    | Database |
    +----------+
    | lbproxy  |
    | mysql    |
    | test     |
    +----------+
    3 rows in set (0.00 sec)


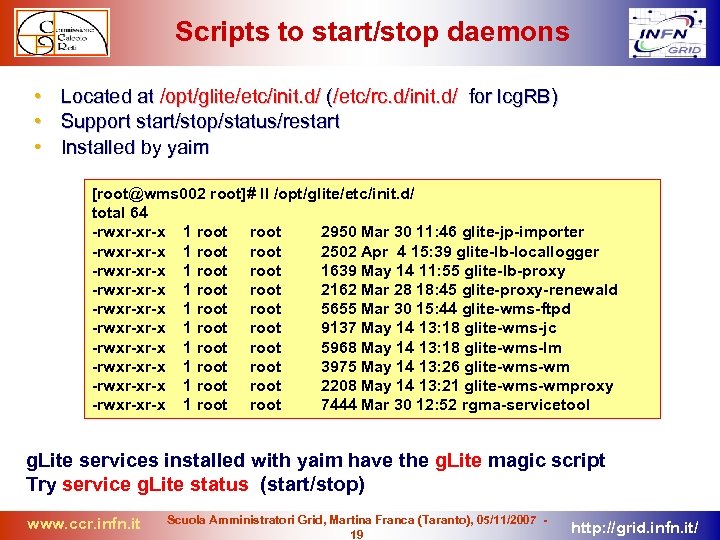

Scripts to start/stop daemons

- Located in /opt/glite/etc/init.d/ (/etc/rc.d/init.d/ for the LCG RB)
- Support start/stop/status/restart
- Installed by YAIM

Listing on a WMS node:

    [root@wms002 root]# ll /opt/glite/etc/init.d/
    total 64
    -rwxr-xr-x 1 root 2950 Mar 30 11:46 glite-jp-importer
    -rwxr-xr-x 1 root 2502 Apr  4 15:39 glite-lb-locallogger
    -rwxr-xr-x 1 root 1639 May 14 11:55 glite-lb-proxy
    -rwxr-xr-x 1 root 2162 Mar 28 18:45 glite-proxy-renewald
    -rwxr-xr-x 1 root 5655 Mar 30 15:44 glite-wms-ftpd
    -rwxr-xr-x 1 root 9137 May 14 13:18 glite-wms-jc
    -rwxr-xr-x 1 root 5968 May 14 13:18 glite-wms-lm
    -rwxr-xr-x 1 root 3975 May 14 13:26 glite-wms-wm
    -rwxr-xr-x 1 root 2208 May 14 13:21 glite-wms-wmproxy
    -rwxr-xr-x 1 root 7444 Mar 30 12:52 rgma-servicetool

gLite services installed with YAIM also get the gLite "magic" script: try `service gLite status` (or start/stop).


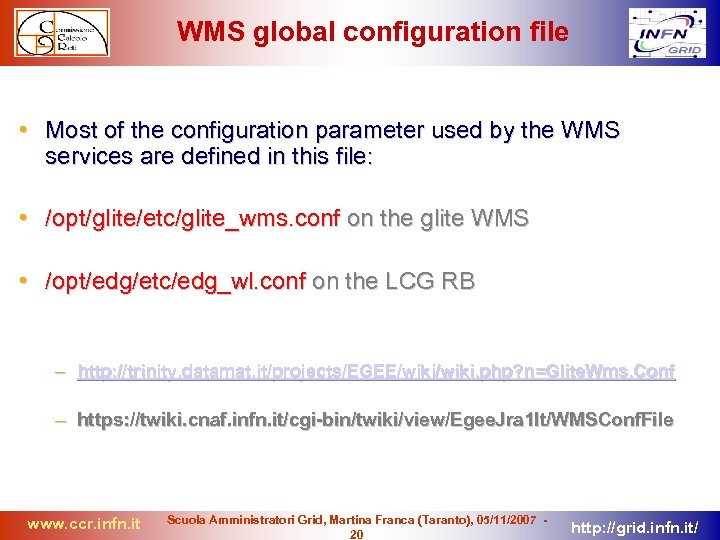

WMS global configuration file

- Most of the configuration parameters used by the WMS services are defined in this file:
  - /opt/glite/etc/glite_wms.conf on the gLite WMS
  - /opt/edg/etc/edg_wl.conf on the LCG RB
- Documentation:
  - http://trinity.datamat.it/projects/EGEE/wiki.php?n=Glite.WmsConf
  - https://twiki.cnaf.infn.it/cgi-bin/twiki/view/EgeeJra1It/WMSConfFile


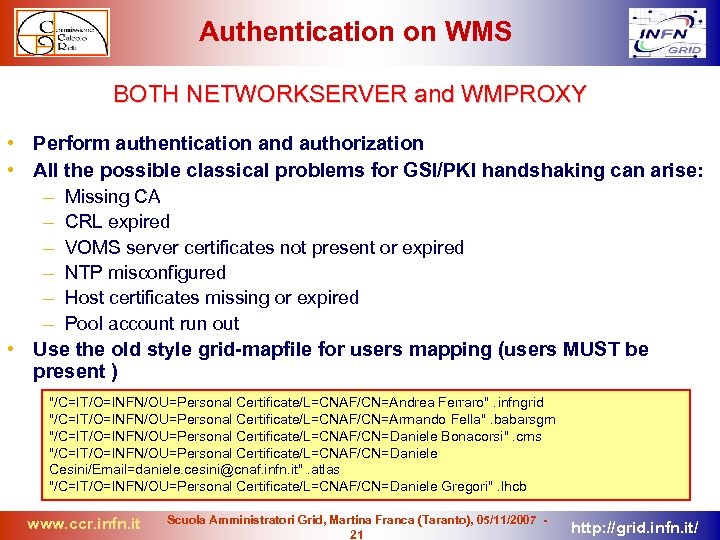

Authentication on WMS: both Network Server and WMProxy

- Perform authentication and authorization
- All the classic GSI/PKI handshake problems can arise:
  - missing CA
  - expired CRL
  - VOMS server certificates not present or expired
  - misconfigured NTP
  - host certificates missing or expired
  - pool accounts run out
- Use the old-style grid-mapfile for user mapping (users MUST be present)

Example grid-mapfile entries:

    "/C=IT/O=INFN/OU=Personal Certificate/L=CNAF/CN=Andrea Ferraro" .infngrid
    "/C=IT/O=INFN/OU=Personal Certificate/L=CNAF/CN=Armando Fella" .babarsgm
    "/C=IT/O=INFN/OU=Personal Certificate/L=CNAF/CN=Daniele Bonacorsi" .cms
    "/C=IT/O=INFN/OU=Personal Certificate/L=CNAF/CN=Daniele Cesini/Email=daniele.cesini@cnaf.infn.it" .atlas
    "/C=IT/O=INFN/OU=Personal Certificate/L=CNAF/CN=Daniele Gregori" .lhcb
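The grid-mapfile entries above follow the usual convention: a quoted certificate DN followed by a target, where a leading dot (`.infngrid`, `.atlas`) means "map to a pool account of that group" rather than to a fixed local user. The sketch parses such lines and hands out pool accounts. The `<prefix>001`...`<prefix>NNN` naming, the `PoolAllocator` class and the sample DNs are assumptions for illustration, but exhausting the free names reproduces the "pool accounts run out" failure listed above.

```python
import shlex


def parse_grid_mapfile(text):
    """Map each DN to ("pool", group) for '.group' targets or ("static", user)."""
    mapping = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        dn, target = shlex.split(line)[:2]   # shlex handles the quoted DN with spaces
        if target.startswith("."):
            mapping[dn] = ("pool", target[1:])
        else:
            mapping[dn] = ("static", target)
    return mapping


class PoolAllocator:
    """Hypothetical pool-account allocator with accounts <prefix>001..<prefix>NNN."""

    def __init__(self, size):
        self.size = size
        self.leases = {}                     # DN -> leased account name

    def account_for(self, dn, prefix):
        if dn in self.leases:
            return self.leases[dn]           # a DN keeps the account it already holds
        used = set(self.leases.values())
        for i in range(1, self.size + 1):
            candidate = f"{prefix}{i:03d}"
            if candidate not in used:
                self.leases[dn] = candidate
                return candidate
        raise RuntimeError(f"pool accounts for '{prefix}' have run out")
```

With a pool of two accounts, the first two distinct DNs get `atlas001` and `atlas002`, a returning DN keeps its lease, and a third DN triggers the "run out" error.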


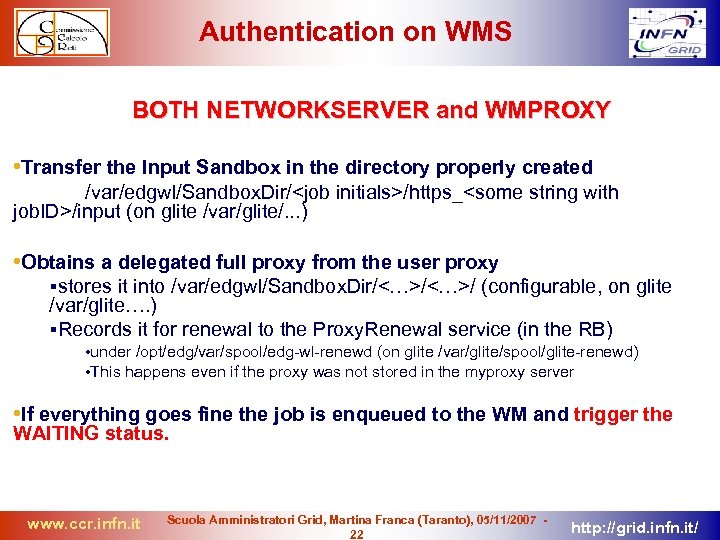

Authentication on WMS: both Network Server and WMProxy

- Transfer the input sandbox into the directory created for this purpose: /var/edgwl/SandboxDir/


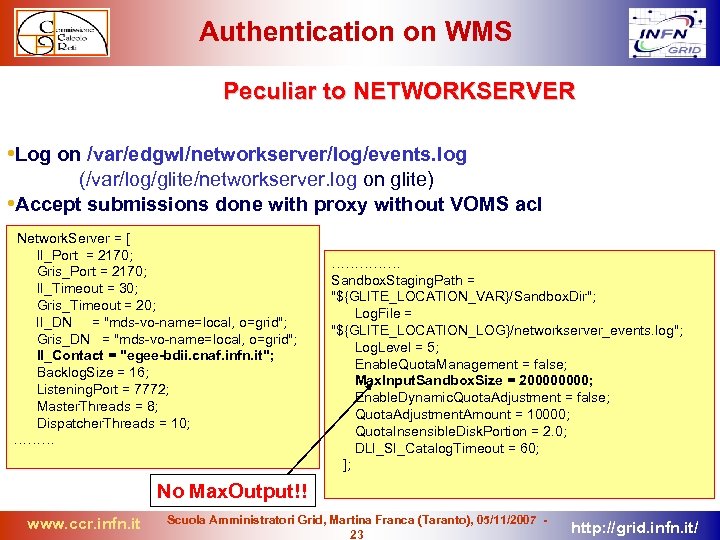

Authentication on WMS: peculiar to the Network Server

- Logs to /var/edgwl/networkserver/log/events.log (/var/log/glite/networkserver.log on gLite)
- Accepts submissions made with a proxy without VOMS extensions

Configuration section:

    NetworkServer = [
      II_Port = 2170;
      Gris_Port = 2170;
      II_Timeout = 30;
      Gris_Timeout = 20;
      II_DN = "mds-vo-name=local, o=grid";
      Gris_DN = "mds-vo-name=local, o=grid";
      II_Contact = "egee-bdii.cnaf.infn.it";
      BacklogSize = 16;
      ListeningPort = 7772;
      MasterThreads = 8;
      DispatcherThreads = 10;
      ……………
      SandboxStagingPath = "${GLITE_LOCATION_VAR}/SandboxDir";
      LogFile = "${GLITE_LOCATION_LOG}/networkserver_events.log";
      LogLevel = 5;
      EnableQuotaManagement = false;
      MaxInputSandboxSize = 20000;
      EnableDynamicQuotaAdjustment = false;
      QuotaAdjustmentAmount = 10000;
      QuotaInsensibleDiskPortion = 2.0;
      DLI_SI_CatalogTimeout = 60;
    ];

Note: there is a limit on the input sandbox size only; no MaxOutput setting!


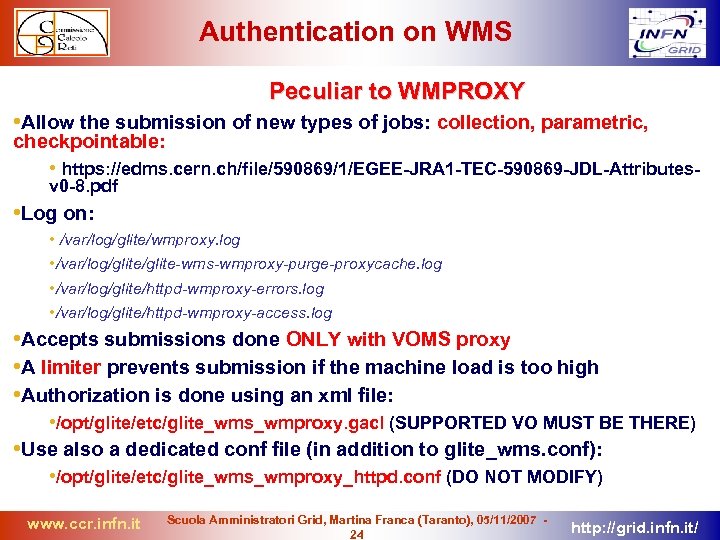

Authentication on WMS: peculiar to WMProxy

- Allows the submission of new job types: collection, parametric, checkpointable:
  - https://edms.cern.ch/file/590869/1/EGEE-JRA1-TEC-590869-JDL-Attributesv0-8.pdf
- Logs to:
  - /var/log/glite/wmproxy.log
  - /var/log/glite-wms-wmproxy-purge-proxycache.log
  - /var/log/glite/httpd-wmproxy-errors.log
  - /var/log/glite/httpd-wmproxy-access.log
- Accepts ONLY submissions made with a VOMS proxy
- A limiter prevents submission if the machine load is too high
- Authorization is done using an XML file:
  - /opt/glite/etc/glite_wms_wmproxy.gacl (SUPPORTED VOs MUST BE LISTED THERE)
- Also uses a dedicated configuration file (in addition to glite_wms.conf):
  - /opt/glite/etc/glite_wms_wmproxy_httpd.conf (DO NOT MODIFY)


![The .gacl file](https://present5.com/presentation/064fd8ee1675c2b94ffa87ae529755ef/image-25.jpg)

The .gacl file

    [root@wms002 root]# cat /opt/glite/etc/glite_wms_wmproxy.gacl
    <gacl version='0.0.1'>
    <entry>
    …
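The file contents are not reproduced on the slide. Assuming the common GACL layout, where each `<entry>` holds one or more `<voms><fqan>` elements and an `<allow>` block containing `<exec/>`, a quick check that every supported VO is present could look like the sketch below. The layout of `SAMPLE`, the function names and the VO list in the usage note are assumptions; `<deny>` blocks are ignored.

```python
import xml.etree.ElementTree as ET


def allowed_fqans(gacl_text):
    """Return the FQANs of entries that grant <exec/> (assumed GACL layout)."""
    root = ET.fromstring(gacl_text)
    fqans = []
    for entry in root.findall("entry"):
        allow = entry.find("allow")
        if allow is None or allow.find("exec") is None:
            continue                          # entry does not grant submission rights
        for fqan in entry.findall("voms/fqan"):
            if fqan.text:
                fqans.append(fqan.text.strip())
    return fqans


def missing_vos(gacl_text, vos):
    """Supported VOs that no exec-granting FQAN refers to."""
    present = {fqan.strip("/").split("/")[0] for fqan in allowed_fqans(gacl_text)}
    return [vo for vo in vos if vo not in present]


# Hypothetical file content in the assumed layout.
SAMPLE = """<gacl version='0.0.1'>
  <entry>
    <voms><fqan>/atlas</fqan></voms>
    <allow><exec/></allow>
  </entry>
  <entry>
    <voms><fqan>/cms/Role=lcgadmin</fqan></voms>
    <allow><exec/></allow>
  </entry>
</gacl>"""
```

Here `missing_vos(SAMPLE, ["atlas", "cms", "lhcb"])` points at `lhcb`, the kind of omission that makes WMProxy refuse submissions from an otherwise valid VOMS proxy.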


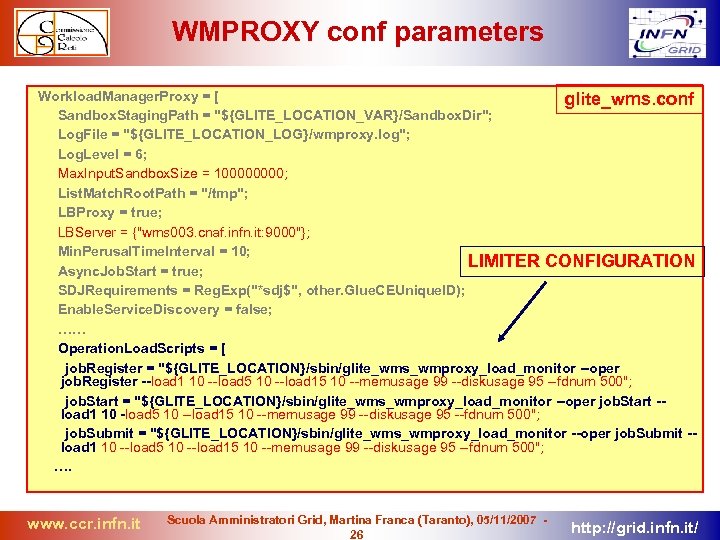

WMPROXY conf parameters (glite_wms.conf)

    WorkloadManagerProxy = [
      SandboxStagingPath = "${GLITE_LOCATION_VAR}/SandboxDir";
      LogFile = "${GLITE_LOCATION_LOG}/wmproxy.log";
      LogLevel = 6;
      MaxInputSandboxSize = 10000;
      ListMatchRootPath = "/tmp";
      LBProxy = true;
      LBServer = {"wms003.cnaf.infn.it:9000"};
      MinPerusalTimeInterval = 10;
      AsyncJobStart = true;
      SDJRequirements = RegExp("*sdj$", other.GlueCEUniqueID);
      EnableServiceDiscovery = false;
      ……
      // LIMITER CONFIGURATION
      OperationLoadScripts = [
        jobRegister = "${GLITE_LOCATION}/sbin/glite_wms_wmproxy_load_monitor --oper jobRegister --load1 10 --load5 10 --load15 10 --memusage 99 --diskusage 95 --fdnum 500";
        jobStart = "${GLITE_LOCATION}/sbin/glite_wms_wmproxy_load_monitor --oper jobStart --load1 10 --load5 10 --load15 10 --memusage 99 --diskusage 95 --fdnum 500";
        jobSubmit = "${GLITE_LOCATION}/sbin/glite_wms_wmproxy_load_monitor --oper jobSubmit --load1 10 --load5 10 --load15 10 --memusage 99 --diskusage 95 --fdnum 500";
        ….
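The limiter runs a load-monitor command before each operation, with the thresholds passed on the command line. The Python sketch below extracts those thresholds from a command string like the jobSubmit one above and compares them with measured values. Reading every option as "refuse the operation when the measured value exceeds this number" is an assumption about `glite_wms_wmproxy_load_monitor`, the function names are hypothetical, and only the load averages are measured here (via `os.getloadavg`, Unix only); memory, disk and file-descriptor figures would have to be supplied by the caller.

```python
import os
import shlex


def parse_thresholds(command):
    """Extract {option: value} pairs such as --load1 10 from a load-monitor command."""
    tokens = shlex.split(command)
    thresholds = {}
    for flag, value in zip(tokens, tokens[1:]):
        if flag.startswith("--") and flag != "--oper":
            thresholds[flag[2:]] = float(value)
    return thresholds


def current_load():
    """1-, 5- and 15-minute load averages of this machine (Unix only)."""
    load1, load5, load15 = os.getloadavg()
    return {"load1": load1, "load5": load5, "load15": load15}


def exceeded(metrics, thresholds):
    """Names of the metrics that are above their threshold (sorted)."""
    return sorted(name for name, limit in thresholds.items()
                  if name in metrics and metrics[name] > limit)


def accept_operation(metrics, thresholds):
    """True if no measured metric exceeds its threshold."""
    return not exceeded(metrics, thresholds)
```

Note that a misspelled option such as `-load 1` would not be recognised by this parser, which is one reason to keep the double-dash form consistent across the three operations.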


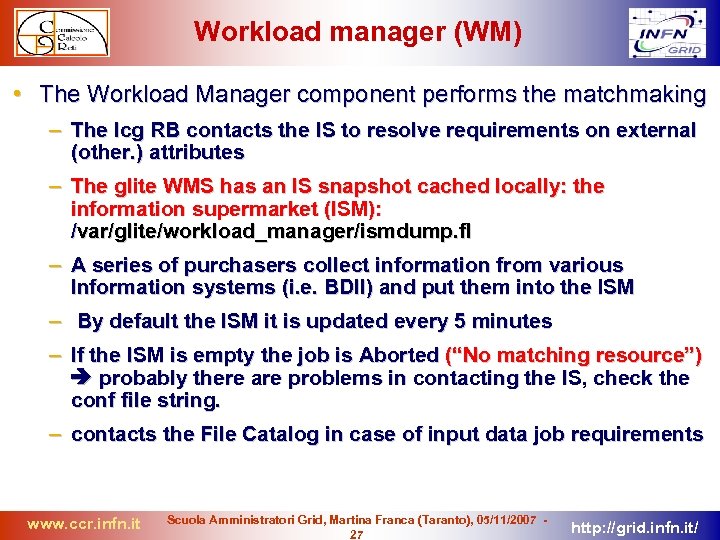

Workload Manager (WM)

- The Workload Manager component performs the matchmaking:
  - The LCG RB contacts the IS to resolve requirements on external (other.) attributes
  - The gLite WMS keeps a local snapshot of the IS, the Information SuperMarket (ISM): /var/glite/workload_manager/ismdump.fl
  - A series of purchasers collect information from various information systems (e.g. the BDII) and put it into the ISM
  - By default the ISM is updated every 5 minutes
  - If the ISM is empty, the job is Aborted ("No matching resource"): there are probably problems contacting the IS, so check the IS contact settings in the configuration file
  - The WM contacts the File Catalog when the job has input data requirements
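Since an empty or outdated ISM leads directly to "No matching resource" aborts, a simple check on the dump file is a reasonable first step. The sketch assumes the file at `IsmDump` is rewritten at every ISM update, so its modification time reflects the last update; the "older than two update periods" staleness rule and the `ism_status` name are illustrative choices, not WMS behaviour.

```python
import os
import time


def ism_status(dump_path, update_rate, now=None):
    """Classify the ISM dump file as 'missing', 'empty', 'stale' or 'ok'.

    update_rate is the ISM update period in seconds (IsmUpdateRate in glite_wms.conf).
    """
    if not os.path.exists(dump_path):
        return "missing"
    if os.path.getsize(dump_path) == 0:
        return "empty"
    now = time.time() if now is None else now
    age = now - os.path.getmtime(dump_path)
    # Tolerate one missed update before calling the dump stale (arbitrary margin).
    return "stale" if age > 2 * update_rate else "ok"
```

Usage on a gLite WMS would be along the lines of `ism_status("/var/glite/workload_manager/ismdump.fl", 300)`, with the period matching the node's configuration.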


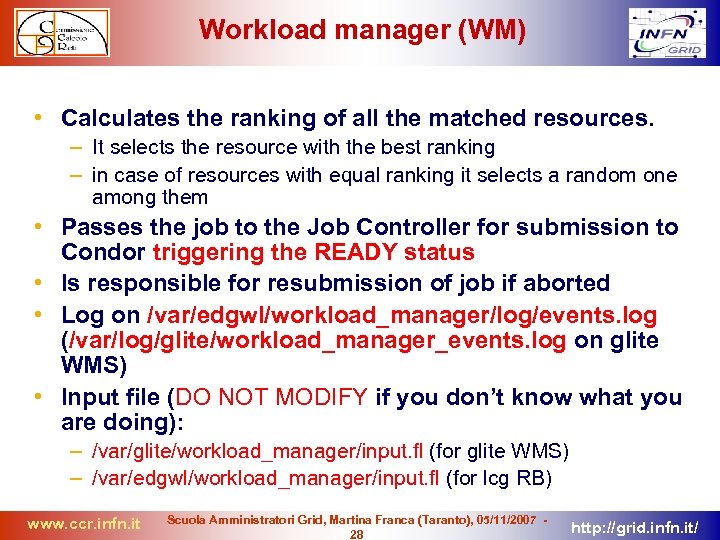

Workload Manager (WM)

- Calculates the rank of all the matched resources:
  - it selects the resource with the best rank
  - among resources with equal rank, it selects one at random
- Passes the job to the Job Controller for submission to Condor, triggering the READY status
- Is responsible for resubmitting the job if it is aborted
- Logs to /var/edgwl/workload_manager/log/events.log (/var/log/glite/workload_manager_events.log on the gLite WMS)
- Input file (DO NOT MODIFY unless you know what you are doing):
  - /var/glite/workload_manager/input.fl (gLite WMS)
  - /var/edgwl/workload_manager/input.fl (LCG RB)
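The selection rule described above (best rank wins, ties broken at random) fits in a few lines. In this sketch the ranks are assumed to be already computed, one number per matched CE, with higher meaning better as with the JDL `Rank` expression; `select_ce` is a hypothetical name, and passing a seeded `random.Random` makes the tie-break reproducible.

```python
import random


def select_ce(ranks, rng=random):
    """Return the CE with the highest rank, choosing uniformly among ties.

    ranks: {ce_id: rank} for the CEs that passed matchmaking.
    """
    if not ranks:
        raise ValueError("no matching resources")   # nothing passed matchmaking
    best = max(ranks.values())
    tied = sorted(ce for ce, rank in ranks.items() if rank == best)
    return rng.choice(tied)
```

Sorting the tied CEs before the random choice keeps the result deterministic for a given seed, independently of dictionary order.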


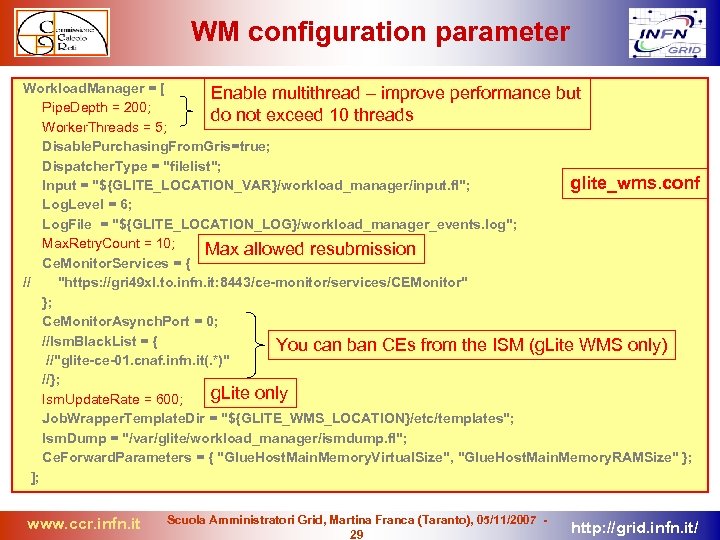

WM configuration parameters (glite_wms.conf)

    WorkloadManager = [
      PipeDepth = 200;
      WorkerThreads = 5;          // enables multithreading: improves performance, but do not exceed 10 threads
      DisablePurchasingFromGris = true;
      DispatcherType = "filelist";
      Input = "${GLITE_LOCATION_VAR}/workload_manager/input.fl";
      LogLevel = 6;
      LogFile = "${GLITE_LOCATION_LOG}/workload_manager_events.log";
      MaxRetryCount = 10;         // maximum number of allowed resubmissions
      CeMonitorServices = {
        // "https://gri49xl.to.infn.it:8443/ce-monitor/services/CEMonitor"
      };
      CeMonitorAsynchPort = 0;
      // You can ban CEs from the ISM (gLite WMS only):
      //IsmBlackList = {
      //  "glite-ce-01.cnaf.infn.it(.*)"
      //};
      IsmUpdateRate = 600;        // gLite only
      JobWrapperTemplateDir = "${GLITE_WMS_LOCATION}/etc/templates";
      IsmDump = "/var/glite/workload_manager/ismdump.fl";
      CeForwardParameters = {
        "GlueHostMainMemoryVirtualSize",
        "GlueHostMainMemoryRAMSize"
      };
    ];
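The commented-out `IsmBlackList` entry is a regular expression matched against CE identifiers. A sketch of the filtering, assuming a pattern must match the whole CE ID (the exact matching semantics used by the WMS are not stated on the slide); both CE IDs in the usage example are hypothetical, the first one simply reusing the host name from the configuration. An unescaped `.` matches any character, so `\.` is stricter when writing your own patterns. The small `wm_warnings` helper (also hypothetical) encodes the "do not exceed 10 threads" advice.

```python
import re


def filter_blacklisted(ce_ids, blacklist):
    """Drop every CE ID that fully matches one of the blacklist patterns."""
    patterns = [re.compile(p) for p in blacklist]
    return [ce for ce in ce_ids if not any(p.fullmatch(ce) for p in patterns)]


def wm_warnings(settings):
    """Flag WorkloadManager values that contradict the advice on the slide."""
    warnings = []
    if settings.get("WorkerThreads", 1) > 10:
        warnings.append("WorkerThreads should not exceed 10")
    return warnings


if __name__ == "__main__":
    ces = [
        "glite-ce-01.cnaf.infn.it:2119/blah-pbs-long",        # hypothetical CE ID
        "ce02.example.org:2119/jobmanager-lcgpbs-short",      # hypothetical CE ID
    ]
    print(filter_blacklisted(ces, ["glite-ce-01.cnaf.infn.it(.*)"]))
    print(wm_warnings({"WorkerThreads": 5}))
```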


![The Job Controller](https://present5.com/presentation/064fd8ee1675c2b94ffa87ae529755ef/image-30.jpg)

The Job Controller

glite_wms.conf:

    JobController = [
        […]
        CondorSubmitDag = "${CONDORG_INSTALL_PATH}/bin/condor_submit_dag";
        CondorRelease = "${CONDORG_INSTALL_PATH}/bin/condor_release";
        SubmitFileDir = "${EDG_WL_TMP}/jobcontrol/submit";
        OutputFileDir = "${EDG_WL_TMP}/jobcontrol/cond";
        Input = "${EDG_WL_TMP}/jobcontrol/queue.fl";
        LockFile = "${EDG_WL_TMP}/jobcontrol/lock";
        LogFile = "${EDG_WL_TMP}/jobcontrol/log/events.log";
        […]
    ];

The Job Controller:
• Creates the directory for the Condor job – this is where Condor stdout and stderr will be stored
• Creates the job wrapper – a shell script around the user executable
• Creates the Condor submit file – from the JDL string representation
• Converts the Condor submit file into a ClassAd – understood by Condor
• Submits the job to the CondorG cluster (as a job of type "Grid") – via the Condor scheduler
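
The first JobController steps can be illustrated with a simplified Python sketch: create a per-job directory, then write a Condor submit description for a grid-universe job whose executable is the job wrapper. The helper name, the file names (`job.submit`, `StandardOutput`, `StandardError`, `condor.log`) and the `gt2` grid_resource line are assumptions for illustration; the real JobController derives its submit file from the JDL and also converts it into a ClassAd, which this sketch does not attempt.

```python
import os
import tempfile


def prepare_condor_job(base_dir, job_name, wrapper_path, grid_resource):
    """Create a job directory and a minimal Condor-G submit file in it.

    Hypothetical helper: it only mirrors the order of the JobController steps.
    """
    job_dir = os.path.join(base_dir, job_name)
    os.makedirs(job_dir, exist_ok=True)  # Condor stdout/stderr will live here

    submit_lines = [
        "universe = grid",                       # job of type "Grid"
        f"grid_resource = gt2 {grid_resource}",  # CE gatekeeper contact
        f"executable = {wrapper_path}",          # the job wrapper, not the user binary
        f"output = {os.path.join(job_dir, 'StandardOutput')}",
        f"error = {os.path.join(job_dir, 'StandardError')}",
        f"log = {os.path.join(job_dir, 'condor.log')}",
        "queue",
    ]
    submit_path = os.path.join(job_dir, "job.submit")
    with open(submit_path, "w") as fh:
        fh.write("\n".join(submit_lines) + "\n")
    return submit_path


if __name__ == "__main__":
    base = tempfile.mkdtemp()
    path = prepare_condor_job(base, "job_0001", "/tmp/JobWrapper.sh",
                              "lxb0701.cern.ch:2119/jobmanager-torque")
    with open(path) as fh:
        print(fh.read())
```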

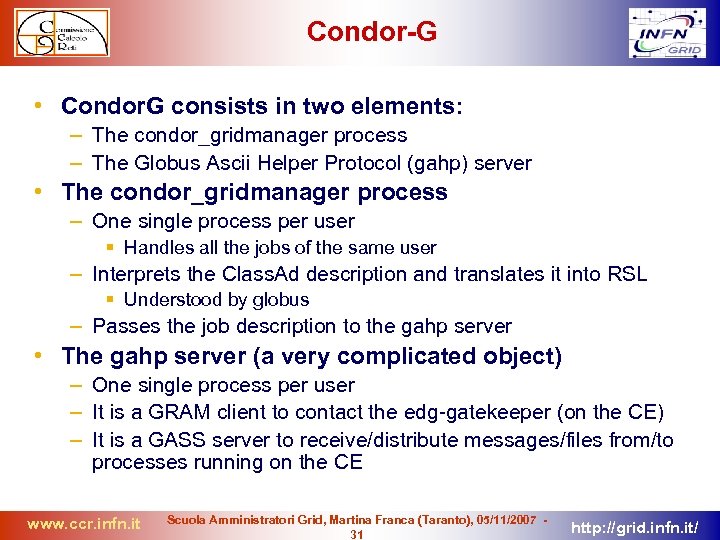

Condor-G
• CondorG consists of two elements:
  – the condor_gridmanager process
  – the Grid ASCII Helper Protocol (GAHP) server
• The condor_gridmanager process
  – One single process per user: it handles all the jobs of the same user
  – Interprets the ClassAd description and translates it into RSL, which is understood by Globus
  – Passes the job description to the GAHP server
• The GAHP server (a very complicated object)
  – One single process per user
  – It is a GRAM client that contacts the edg-gatekeeper (on the CE)
  – It is a GASS server that receives/distributes messages and files from/to the processes running on the CE

![Condor-G on the Resource Broker](https://present5.com/presentation/064fd8ee1675c2b94ffa87ae529755ef/image-32.jpg)

Condor-G
On the Resource Broker:

    [root@lxb0704 GridLogs]# ps -auxgww | grep -i condor
    edguser 10622 0.0 0.3 4676 1752 ? S Jan04 12:31 /opt/condor/sbin/condor_master
    edguser 10627 0.0 0.6 6396 3228 ? S Jan04 1:16 condor_schedd -f
    edguser 19688 0.0 0.4 5540 2532 ? S 11:11 0:00 condor_gridmanager -f -C (Owner=?="edguser"&&x509userproxysubject=?="/C=CH/O=CERN/OU=GRID/CN=Simone_Campana_7461_-_ATLAS/CN=proxy") -S /tmp/condor_g_scratch.0x843b310.10627
    edguser 19690 0.1 0.4 4124 2516 ? S 11:11 0:02 /opt/condor/sbin/gahp_server
    edguser 20630 0.1 0.4 5480 2520 ? S 11:44 0:00 condor_gridmanager -f -C (Owner=?="edguser"&&x509userproxysubject=?="/C=CH/O=CERN/OU=GRID/CN=Simone_Campana_7461/CN=proxy") -S /tmp/condor_g_scratch.0x845da30.10627
    edguser 20632 1.2 0.4 4028 2404 ? S 11:44 0:00 /opt/condor/sbin/gahp_server

• The GRAM client sends the Condor job to the CE for execution
  – It contacts the edg-gatekeeper
  – The GRAM sandbox is shipped to the CE; it contains the job wrapper, a delegation of the user proxy, information for the GASS server, etc.
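
The `ps` listing shows one condor_gridmanager (each with its own gahp_server) per user proxy subject. A small, hypothetical Python helper can group the gridmanager PIDs by proxy subject, which may help spot a user whose gridmanager is missing or duplicated. It assumes the `x509userproxysubject=?="..."` constraint appears on the command line exactly as in the listing above.

```python
import re
import sys
from collections import defaultdict

# The proxy subject appears in the gridmanager's -C constraint, as in the listing.
_SUBJECT = re.compile(r'x509userproxysubject=\?="([^"]+)"')


def gridmanagers_by_subject(ps_lines):
    """Map each user proxy subject to the PIDs of its condor_gridmanager processes."""
    result = defaultdict(list)
    for line in ps_lines:
        if "condor_gridmanager" not in line:
            continue
        fields = line.split()
        match = _SUBJECT.search(line)
        if match and len(fields) > 1:
            result[match.group(1)].append(int(fields[1]))  # column 2 is the PID
    return dict(result)


if __name__ == "__main__":
    # e.g. ps -auxgww | grep -i condor | python3 this_script.py
    for subject, pids in gridmanagers_by_subject(sys.stdin).items():
        print(len(pids), subject)
```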

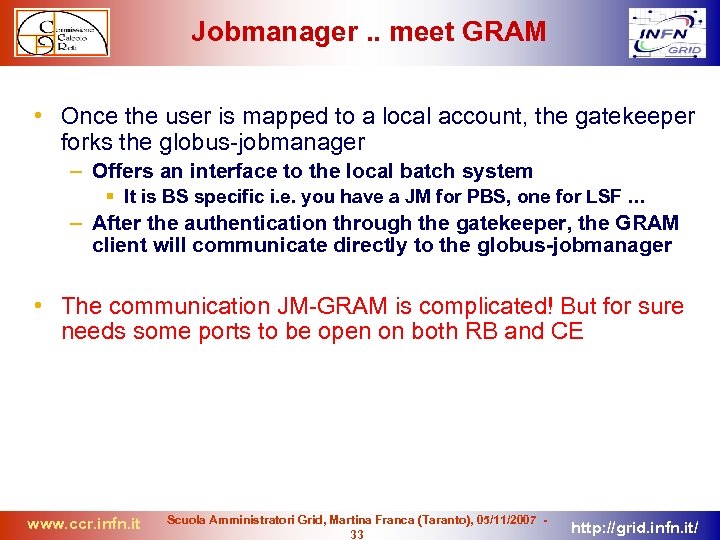

Jobmanager... meet GRAM
• Once the user is mapped to a local account, the gatekeeper forks the globus-jobmanager
  – It offers an interface to the local batch system and is batch-system specific, i.e. there is one JM for PBS, one for LSF, …
  – After authentication through the gatekeeper, the GRAM client communicates directly with the globus-jobmanager
• The JM–GRAM communication is complicated, but it certainly needs some ports to be open on both the RB and the CE

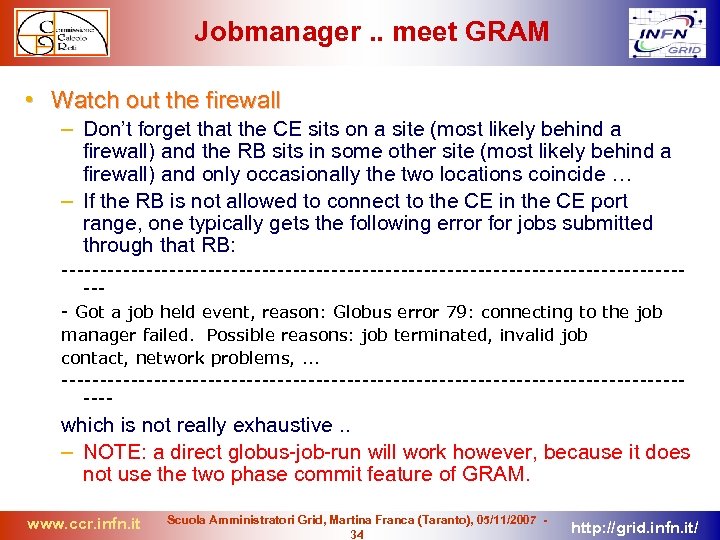

Jobmanager... meet GRAM
• Watch out for the firewall
  – Don't forget that the CE sits at one site (most likely behind a firewall) and the RB sits at some other site (most likely behind a firewall too); only occasionally do the two locations coincide…
  – If the RB is not allowed to connect to the CE in the CE port range, one typically gets the following error for jobs submitted through that RB: "Got a job held event, reason: Globus error 79: connecting to the job manager failed. Possible reasons: job terminated, invalid job contact, network problems, ...", which is not really exhaustive…
  – NOTE: a direct globus-job-run will work, however, because it does not use the two-phase commit feature of GRAM.
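
Because Globus error 79 says little about its cause, a quick TCP check from the RB towards the CE can help separate firewall problems from other failures. The Python sketch below only tests whether a TCP connection can be opened. The host and ports in the usage line are examples taken from the logs in this presentation (gatekeeper port 2119, JM-Contact port 20002); a refused connection on a jobmanager port may simply mean that no jobmanager is listening there at that moment. It checks the RB-to-CE direction only, while the slide notes that ports must also be open on the RB side.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port is accepted within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure or timeout
        return False


def reachable_ports(host, ports, timeout=3.0):
    """Return the ports on host that accepted a TCP connection."""
    return [port for port in ports if can_connect(host, port, timeout)]


if __name__ == "__main__":
    # Example values only: run this on the RB, pointing at the CE under test.
    print(reachable_ports("lxb0701.cern.ch", [2119, 20002]))
```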

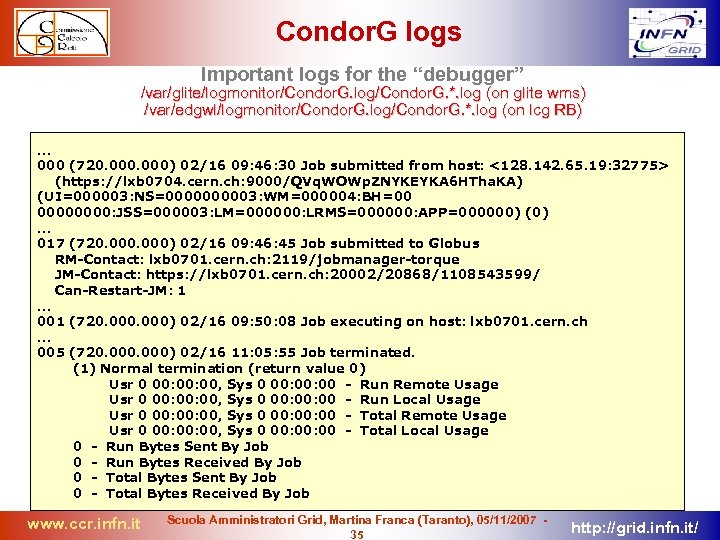

CondorG logs
Important logs for the "debugger":
• /var/glite/logmonitor/CondorG.log/CondorG.*.log (on the gLite WMS)
• /var/edgwl/logmonitor/CondorG.log/CondorG.*.log (on the LCG RB)

    ...
    000 (720.000) 02/16 09:46:30 Job submitted from host: <128.142.65.19:32775>
        (https://lxb0704.cern.ch:9000/QVqWOWpZNYKEYKA6HThaKA) (UI=000003:NS=000003:WM=000004:BH=000000:JSS=000003:LM=000000:LRMS=000000:APP=000000) (0)
    ...
    017 (720.000) 02/16 09:46:45 Job submitted to Globus
        RM-Contact: lxb0701.cern.ch:2119/jobmanager-torque
        JM-Contact: https://lxb0701.cern.ch:20002/20868/1108543599/
        Can-Restart-JM: 1
    ...
    001 (720.000) 02/16 09:50:08 Job executing on host: lxb0701.cern.ch
    ...
    005 (720.000) 02/16 11:05:55 Job terminated.
        (1) Normal termination (return value 0)
            Usr 0 00:00, Sys 0 00:00  -  Run Remote Usage
            Usr 0 00:00, Sys 0 00:00  -  Run Local Usage
            Usr 0 00:00, Sys 0 00:00  -  Total Remote Usage
            Usr 0 00:00, Sys 0 00:00  -  Total Local Usage
        0  -  Run Bytes Sent By Job
        0  -  Run Bytes Received By Job
        0  -  Total Bytes Sent By Job
        0  -  Total Bytes Received By Job
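
Each event in a CondorG log starts with a header line: a three-digit event code, the Condor job ID in parentheses, a timestamp and a short message. A minimal Python sketch for splitting such headers follows; the code-to-name table covers only the five event codes visible in the excerpt above, and the header format is inferred from that excerpt.

```python
import re

# Event codes that appear in the excerpt (000, 001, 005, 012, 017).
EVENT_NAMES = {
    0: "submitted",
    1: "executing",
    5: "terminated",
    12: "held",
    17: "submitted to Globus",
}

# e.g. "005 (720.000) 02/16 11:05:55 Job terminated."
_HEADER = re.compile(
    r"^(\d{3}) \((\d+)\.(\d+)(?:\.\d+)?\) (\d\d/\d\d \d\d:\d\d:\d\d) (.*)$")


def parse_header(line):
    """Split an event header line into its parts, or return None."""
    m = _HEADER.match(line.strip())
    if not m:
        return None  # continuation line such as "(1) Normal termination"
    code = int(m.group(1))
    return {
        "code": code,
        "name": EVENT_NAMES.get(code, "other"),
        "cluster": int(m.group(2)),
        "proc": int(m.group(3)),
        "time": m.group(4),
        "text": m.group(5),
    }
```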

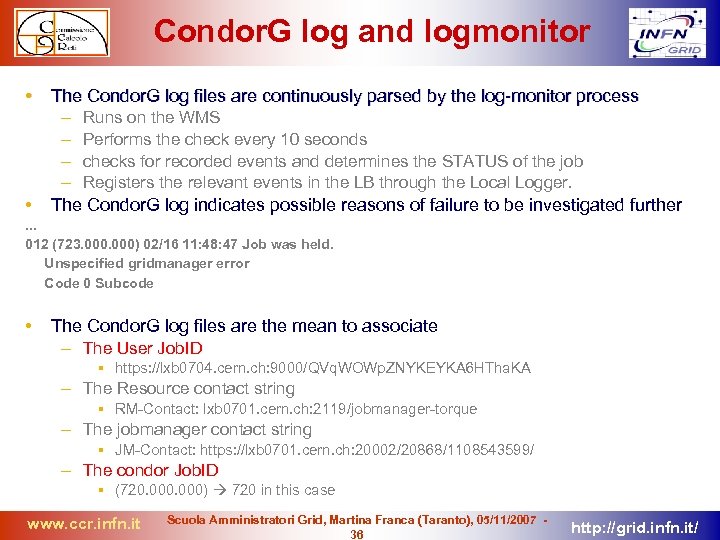

CondorG log and logmonitor
• The CondorG log files are continuously parsed by the log-monitor process, which
  – runs on the WMS
  – performs the check every 10 seconds
  – checks for recorded events and determines the STATUS of the job
  – registers the relevant events in the LB through the Local Logger
• The CondorG log indicates possible reasons of failure to be investigated further…

    012 (723.000) 02/16 11:48:47 Job was held.
        Unspecified gridmanager error
        Code 0 Subcode

• The CondorG log files are the means to associate
  – the user JobID: https://lxb0704.cern.ch:9000/QVqWOWpZNYKEYKA6HThaKA
  – the resource contact string: RM-Contact: lxb0701.cern.ch:2119/jobmanager-torque
  – the jobmanager contact string: JM-Contact: https://lxb0701.cern.ch:20002/20868/1108543599/
  – the Condor JobID: (720.000), i.e. 720 in this case
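
The four identifiers listed above can be pulled out of a CondorG log excerpt with a few regular expressions. The Python sketch below is a hypothetical helper (`associate`) whose patterns were written against the excerpt in this presentation rather than against a formal log specification; it keeps the first match of each identifier.

```python
import re

# Patterns written against the CondorG log excerpt shown earlier (assumption).
_PATTERNS = {
    "grid_job_id": re.compile(r"\((https://[^)\s]+)\)"),
    "rm_contact": re.compile(r"RM-Contact:\s*(\S+)"),
    "jm_contact": re.compile(r"JM-Contact:\s*(\S+)"),
    "condor_id": re.compile(r"^\d{3} \((\d+)\."),  # event header at column 0
}


def associate(log_text):
    """Return the first grid JobID, RM/JM contacts and Condor ID found in log_text."""
    found = {}
    for line in log_text.splitlines():
        for key, pattern in _PATTERNS.items():
            if key not in found:
                match = pattern.search(line)
                if match:
                    found[key] = match.group(1)
    if "condor_id" in found:
        found["condor_id"] = int(found["condor_id"])
    return found
```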

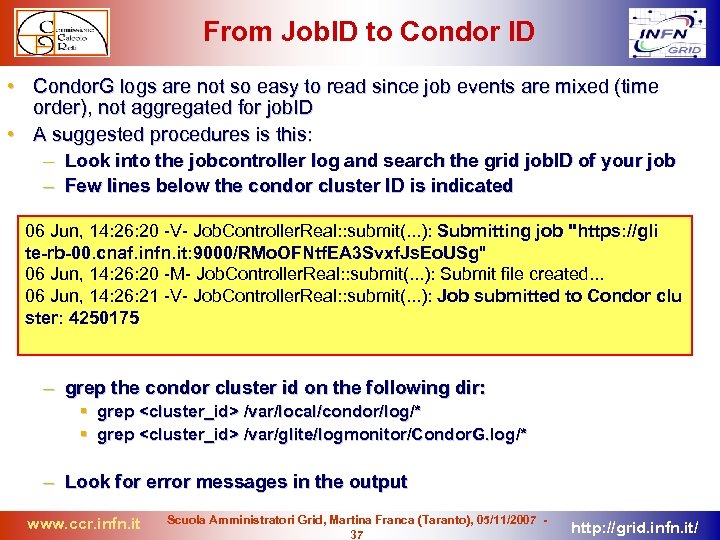

From JobID to Condor ID
• CondorG logs are not so easy to read, since job events are mixed (in time order) rather than aggregated by JobID
• A suggested procedure is the following:
  – Look into the jobcontroller log and search for the grid JobID of your job
  – A few lines below, the Condor cluster ID is indicated:

    06 Jun, 14:26:20 -V- JobControllerReal::submit(...): Submitting job "https://glite-rb-00.cnaf.infn.it:9000/RMoOFNtfEA3SvxfJsEoUSg"
    06 Jun, 14:26:20 -M- JobControllerReal::submit(...): Submit file created...
    06 Jun, 14:26:21 -V- JobControllerReal::submit(...): Job submitted to Condor cluster: 4250175

  – grep the Condor cluster ID in the following dir: grep
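
The first steps of this procedure can be automated. The Python sketch below scans jobcontroller log lines for the `Submitting job "<grid JobID>"` message and returns the Condor cluster ID reported shortly after it. It returns a list because a resubmitted job (see MaxRetryCount) may get more than one cluster. The message formats are copied from the excerpt above, and the 10-line look-ahead window is an arbitrary assumption.

```python
import re

_SUBMITTING = re.compile(r'Submitting job "([^"]+)"')
_CLUSTER = re.compile(r"Job submitted to Condor cluster: (\d+)")


def condor_clusters_for(log_lines, grid_job_id, window=10):
    """Return the Condor cluster IDs logged after each submission of grid_job_id."""
    clusters = []
    for i, line in enumerate(log_lines):
        m = _SUBMITTING.search(line)
        if not (m and m.group(1) == grid_job_id):
            continue
        for follow in log_lines[i + 1 : i + 1 + window]:
            if _SUBMITTING.search(follow):
                break  # another submission began before a cluster ID appeared
            c = _CLUSTER.search(follow)
            if c:
                clusters.append(int(c.group(1)))
                break
    return clusters
```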

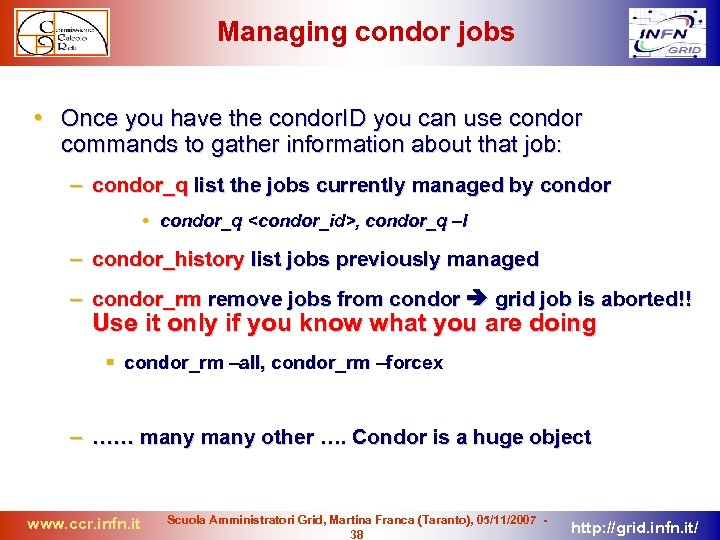

Managing condor jobs
• Once you have the Condor ID, you can use Condor commands to gather information about that job:
  – condor_q lists the jobs currently managed by Condor
    • condor_q
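
As a hedged sketch, the condor_q call for a single cluster can be wrapped in Python. It assumes only that `condor_q` is on the PATH of the WMS node with the Condor environment already set up, and it uses the `-long` option, which prints the full ClassAd of the job. The helper names are hypothetical.

```python
import shutil
import subprocess


def condor_q_command(cluster_id, long_format=True):
    """Build the condor_q argument list for one Condor cluster."""
    cmd = ["condor_q"]
    if long_format:
        cmd.append("-long")  # print the job's full ClassAd
    cmd.append(str(cluster_id))
    return cmd


def run_condor_q(cluster_id, long_format=True):
    """Run condor_q on the WMS node and return its standard output."""
    if shutil.which("condor_q") is None:
        raise RuntimeError("condor_q is not on the PATH of this machine")
    completed = subprocess.run(condor_q_command(cluster_id, long_format),
                               capture_output=True, text=True, check=False)
    return completed.stdout
```

For example, `run_condor_q(4250175)` would query the cluster found in the jobcontroller excerpt.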

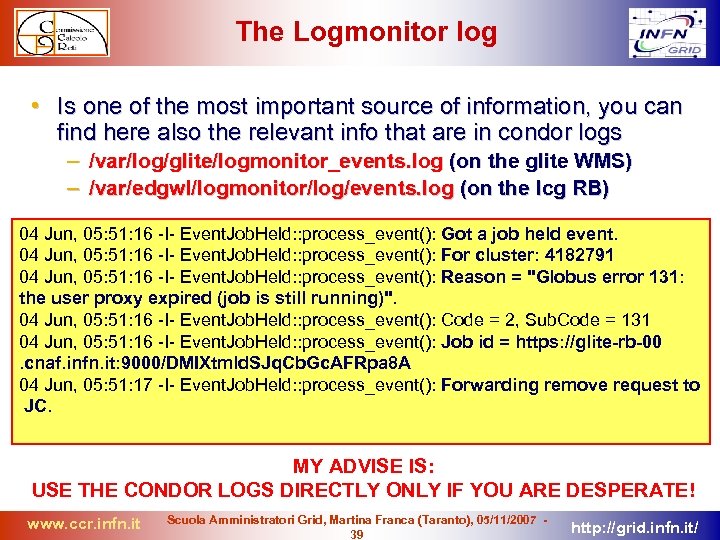

The Logmonitor log
• It is one of the most important sources of information; here you can also find the relevant information contained in the Condor logs
  – /var/log/glite/logmonitor_events.log (on the gLite WMS)
  – /var/edgwl/logmonitor/log/events.log (on the LCG RB)

    04 Jun, 05:51:16 -I- EventJobHeld::process_event(): Got a job held event.
    04 Jun, 05:51:16 -I- EventJobHeld::process_event(): For cluster: 4182791
    04 Jun, 05:51:16 -I- EventJobHeld::process_event(): Reason = "Globus error 131: the user proxy expired (job is still running)".
    04 Jun, 05:51:16 -I- EventJobHeld::process_event(): Code = 2, SubCode = 131
    04 Jun, 05:51:16 -I- EventJobHeld::process_event(): Job id = https://glite-rb-00.cnaf.infn.it:9000/DMIXtmldSJqCbGcAFRpa8A
    04 Jun, 05:51:17 -I- EventJobHeld::process_event(): Forwarding remove request to JC.

MY ADVICE IS: USE THE CONDOR LOGS DIRECTLY ONLY IF YOU ARE DESPERATE!
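
Held-job events in the LogMonitor log are spread over several lines. This hypothetical Python helper (`held_events`) groups them into one record per event, using field labels copied from the excerpt above; the exact format may differ between middleware versions. For the excerpt, it reports cluster 4182791 with Code 2 and SubCode 131 (an expired user proxy).

```python
import re

# Field labels as they appear in the EventJobHeld lines of the excerpt.
_FIELDS = {
    "cluster": re.compile(r"For cluster: (\d+)"),
    "reason": re.compile(r'Reason = "([^"]*)"'),
    "code": re.compile(r"Code = (\d+), SubCode = (\d+)"),
    "job_id": re.compile(r"Job id = (\S+)"),
}


def held_events(lines):
    """Group the lines following each 'Got a job held event' into one dict."""
    events, current = [], None
    for line in lines:
        if "Got a job held event" in line:
            current = {}
            events.append(current)
            continue
        if current is None:
            continue  # lines before the first held event are ignored
        for key, pattern in _FIELDS.items():
            m = pattern.search(line)
            if not m:
                continue
            if key == "code":
                current["code"] = int(m.group(1))
                current["subcode"] = int(m.group(2))
            elif key == "cluster":
                current["cluster"] = int(m.group(1))
            else:
                current[key] = m.group(1)
    return events
```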

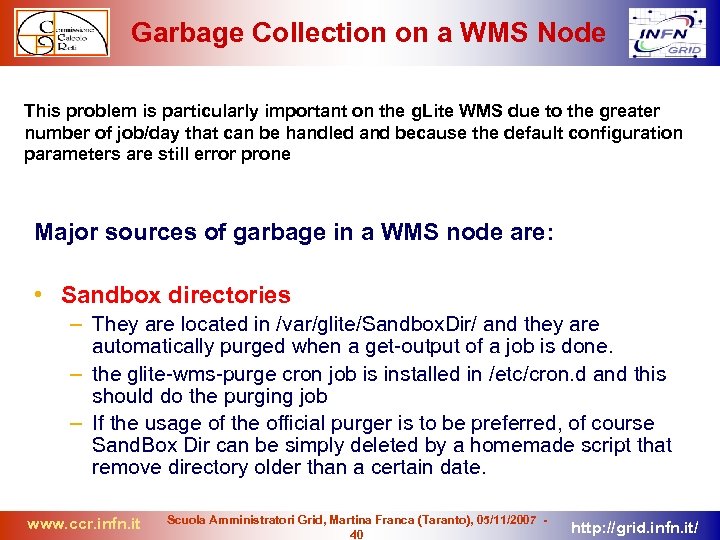

Garbage Collection on a WMS Node
This problem is particularly important on the gLite WMS, because of the greater number of jobs per day it can handle and because the default configuration parameters are still error-prone.
Major sources of garbage in a WMS node are:
• Sandbox directories
  – They are located in /var/glite/SandboxDir/ and are automatically purged when a get-output of a job is done.
  – The glite-wms-purge cron job is installed in /etc/cron.d and should do the purging job.
  – Although the official purger is to be preferred, the SandboxDir can of course simply be cleaned by a homemade script that removes directories older than a certain date.
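
A homemade cleaner of the kind described above might look like the following Python sketch. It lists directories older than a given number of days at a chosen depth below the sandbox root and only reports them unless `dry_run=False`. The depth parameter exists because the exact SandboxDir layout (job directories directly under the root, or under intermediate prefix directories) is an assumption here and should be checked on the node before deleting anything.

```python
import os
import shutil
import tempfile
import time


def dirs_at_depth(root, depth):
    """Yield real directories (not symlinks) exactly `depth` levels below root."""
    if depth == 0:
        yield root
        return
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                yield from dirs_at_depth(entry.path, depth - 1)


def old_dirs(root, max_age_days, depth=1, now=None):
    """Return directories at `depth` whose mtime is older than max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    return sorted(p for p in dirs_at_depth(root, depth)
                  if os.stat(p).st_mtime < cutoff)


def purge(paths, dry_run=True):
    """Remove the given directory trees, or just report them in dry-run mode."""
    for path in paths:
        if dry_run:
            print("would remove", path)
        else:
            shutil.rmtree(path)


if __name__ == "__main__":
    demo = tempfile.mkdtemp()
    os.makedirs(os.path.join(demo, "ab", "old_job"))
    os.utime(os.path.join(demo, "ab", "old_job"), (0, 0))  # pretend it is from 1970
    purge(old_dirs(demo, max_age_days=30, depth=2))
```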

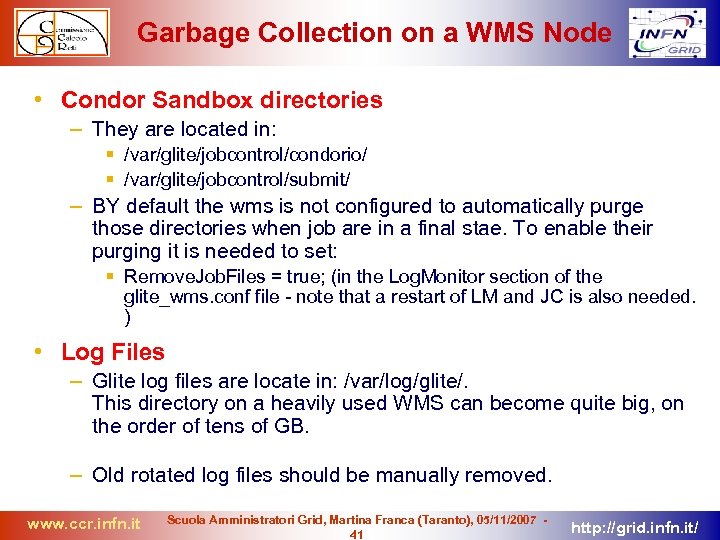

Garbage Collection on a WMS Node
• Condor sandbox directories
  – They are located in /var/glite/jobcontrol/condorio/ and /var/glite/jobcontrol/submit/
  – By default the WMS is not configured to automatically purge those directories when jobs are in a final state. To enable their purging, set RemoveJobFiles = true; in the LogMonitor section of the glite_wms.conf file (note that a restart of the LM and JC is also needed).
• Log files
  – gLite log files are located in /var/log/glite/. On a heavily used WMS this directory can become quite big, on the order of tens of GB.
  – Old rotated log files should be removed manually.
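
For the rotated log files, a similar hedged Python sketch can list candidates under /var/log/glite/, together with their total size, before anything is deleted. The file-name pattern for rotated logs (`name.log.N`, optionally `.gz`, or `name.log-YYYYMMDD`) is an assumption about the local logrotate setup, not something the slide specifies.

```python
import os
import re
import time

# Assumed rotated-log names: events.log.1, events.log.2.gz, events.log-20071105
ROTATED = re.compile(r"\.log(\.\d+|-\d{8})(\.gz)?$")


def rotated_logs(log_dir, max_age_days, now=None):
    """Return rotated log files under log_dir older than max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    hits = []
    for dirpath, _dirnames, filenames in os.walk(log_dir):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if ROTATED.search(name) and os.path.getmtime(path) < cutoff:
                hits.append(path)
    return sorted(hits)


def total_bytes(paths):
    """Sum the sizes of the given files."""
    return sum(os.path.getsize(p) for p in paths)


if __name__ == "__main__":
    candidates = rotated_logs("/var/log/glite", max_age_days=30)
    print(len(candidates), "files,", total_bytes(candidates), "bytes")
```

The active `events.log` never matches the pattern, so only rotated copies are reported.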

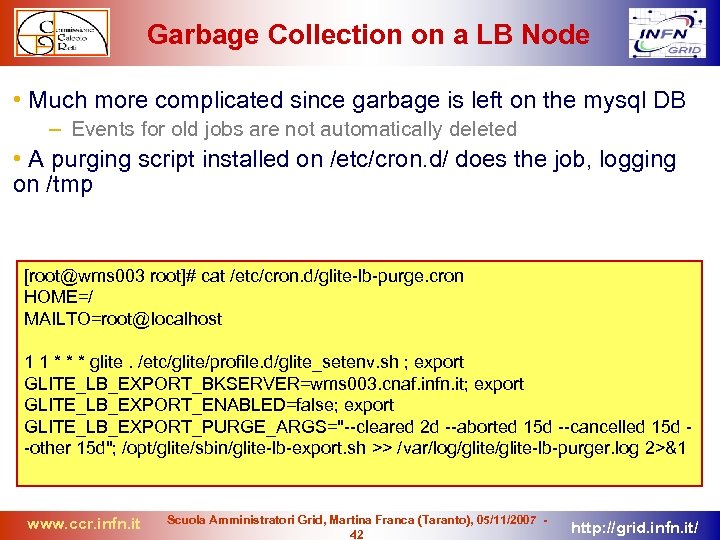

Garbage Collection on an LB Node
• Much more complicated, since garbage is left in the MySQL DB
  – Events for old jobs are not automatically deleted
• A purging script installed in /etc/cron.d/ does the job, logging to /tmp

    [root@wms003 root]# cat /etc/cron.d/glite-lb-purge.cron
    HOME=/
    MAILTO=root@localhost
    1 1 * * * glite . /etc/glite/profile.d/glite_setenv.sh; export GLITE_LB_EXPORT_BKSERVER=wms003.cnaf.infn.it; export GLITE_LB_EXPORT_ENABLED=false; export GLITE_LB_EXPORT_PURGE_ARGS="--cleared 2d --aborted 15d --cancelled 15d --other 15d"; /opt/glite/sbin/glite-lb-export.sh >> /var/log/glite-lb-purger.log 2>&1
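
To see what the GLITE_LB_EXPORT_PURGE_ARGS value in this crontab means, the Python sketch below turns it into per-state age limits and decides whether a job of a given state and age would be purged. It assumes the `--state NNN[smhd]` syntax visible in the crontab and that `--other` covers every state without its own limit; both are inferences for illustration, not taken from the glite-lb-purge documentation. A malformed flag such as `-other` is rejected instead of being silently accepted.

```python
import re

_UNITS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def parse_purge_args(args):
    """Turn '--cleared 2d --aborted 15d ...' into {state: seconds}."""
    tokens = args.split()
    if len(tokens) % 2:
        raise ValueError("flags and values must come in pairs")
    limits = {}
    for flag, value in zip(tokens[0::2], tokens[1::2]):
        m = re.fullmatch(r"(\d+)([smhd]?)", value)
        if not flag.startswith("--") or not m:
            raise ValueError(f"unexpected token pair: {flag} {value}")
        limits[flag[2:]] = int(m.group(1)) * _UNITS[m.group(2) or "s"]
    return limits


def would_purge(limits, state, age_seconds):
    """Return True if a job in `state` is older than the limit for that state."""
    key = state if state in limits else "other"
    return key in limits and age_seconds > limits[key]
```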

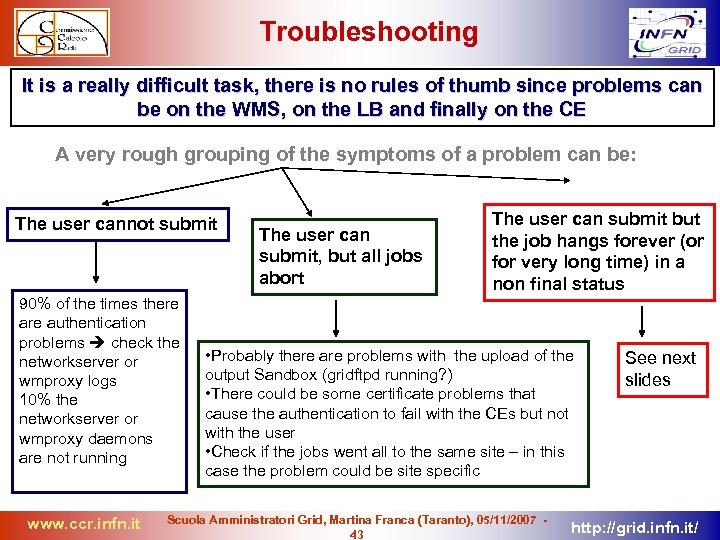

Troubleshooting
It is a really difficult task and there are no rules of thumb, since problems can be on the WMS, on the LB and finally on the CE. A very rough grouping of the symptoms of a problem is:
• The user cannot submit
  – 90% of the time there are authentication problems: check the NetworkServer or WMProxy logs
  – 10% of the time the NetworkServer or WMProxy daemons are not running
• The user can submit, but all jobs abort
  – Probably there are problems with the upload of the output sandbox (is gridftpd running?)
  – There could be certificate problems that cause authentication to fail with the CEs but not with the user
  – Check whether all the jobs went to the same site: in this case the problem could be site specific
• The user can submit, but the job hangs forever (or for a very long time) in a non-final status
  – See the next slides

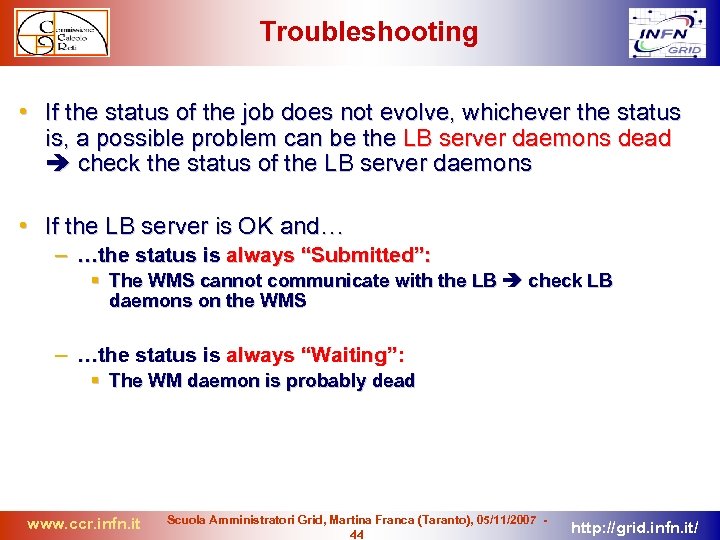

Troubleshooting
• If the status of the job does not evolve, whatever the status is, a possible cause is that the LB server daemons are dead: check the status of the LB server daemons
• If the LB server is OK and…
  – …the status is always "Submitted": the WMS cannot communicate with the LB; check the LB daemons on the WMS
  – …the status is always "Waiting": the WM daemon is probably dead

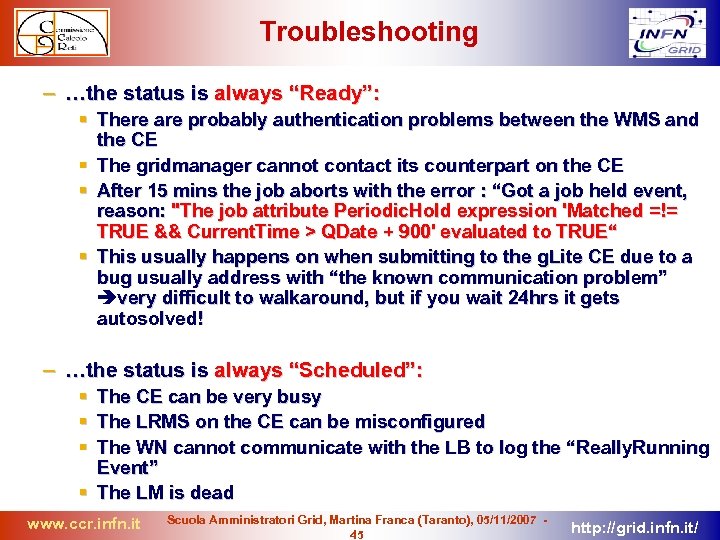

Troubleshooting
  – …the status is always "Ready": there are probably authentication problems between the WMS and the CE, and the gridmanager cannot contact its counterpart on the CE. After 15 minutes the job aborts with the error: “Got a job held event, reason: "The job attribute PeriodicHold expression 'Matched =!= TRUE && CurrentTime > QDate + 900' evaluated to TRUE"”. This usually happens when submitting to the gLite CE, due to a bug usually referred to as “the known communication problem”: it is very difficult to work around, but if you wait 24 hours it resolves itself!
  – …the status is always "Scheduled": the CE may be very busy; the LRMS on the CE may be misconfigured; the WN cannot communicate with the LB to log the "ReallyRunning" event; the LM is dead
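
The hold expression in that error can be read as a worked check. `=!=` is the ClassAd "is not identical to" operator, so the condition is true whenever Matched is anything other than TRUE (including undefined), and 900 seconds after QDate (the time the job was queued) is exactly the 15 minutes mentioned above. The Python sketch below reproduces only this boolean logic, modelling an undefined Matched as None; which component sets Matched is not stated on the slide.

```python
def periodic_hold(matched, current_time, qdate, grace_seconds=900):
    """Evaluate 'Matched =!= TRUE && CurrentTime > QDate + 900'.

    `matched` may be True, False or None (None stands for UNDEFINED).
    `=!=` never yields UNDEFINED: it is True unless matched is exactly True.
    """
    not_matched = matched is not True
    return not_matched and current_time > qdate + grace_seconds
```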

Troubleshooting
  – …the status is always "Running": the LRMS on the CE may be misconfigured; the WN cannot communicate with the WMS to upload the output sandbox; the LM is dead
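
The symptom-to-cause hints of these troubleshooting slides can be kept as a small lookup table, for example in a local helper script. The Python sketch below simply transcribes them (the table and `hints_for` are illustrative, not a tool shipped with the middleware) and, as the slides suggest, points at the LB server first when it is not known to be healthy.

```python
# Likely causes for a job stuck in a given status, transcribed from the slides.
STUCK_STATUS_HINTS = {
    "Submitted": ["WMS cannot communicate with the LB: check the LB daemons on the WMS"],
    "Waiting": ["WM daemon probably dead"],
    "Ready": [
        "authentication problems between the WMS and the CE",
        "gridmanager cannot contact its counterpart on the CE",
    ],
    "Scheduled": [
        "CE very busy",
        "LRMS on the CE misconfigured",
        "WN cannot log the ReallyRunning event to the LB",
        "LM dead",
    ],
    "Running": [
        "LRMS on the CE misconfigured",
        "WN cannot upload the output sandbox to the WMS",
        "LM dead",
    ],
}


def hints_for(status, lb_server_ok):
    """Return hints for a job whose status no longer evolves."""
    if not lb_server_ok:
        # The slides suggest checking the LB server daemons first.
        return ["LB server daemons may be dead: check them first"]
    return STUCK_STATUS_HINTS.get(status, ["no hint recorded for this status"])
```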

(My personal) Conclusion on debugging
• Debugging a job failure is a complicated task
  – The WMS middleware is very complicated
  – Error messages are often not exhaustive
  – Most likely you will not have access to all the resources
• It is impossible to create an exhaustive list of failures
  – Too many different types
  – Too many different boundary conditions
• The advice
  – Try to understand the architecture as much as you can: understanding the job flow is essential to understand what went wrong and when… (and why…)
  – Start debugging… you will gain the expertise bit by bit…

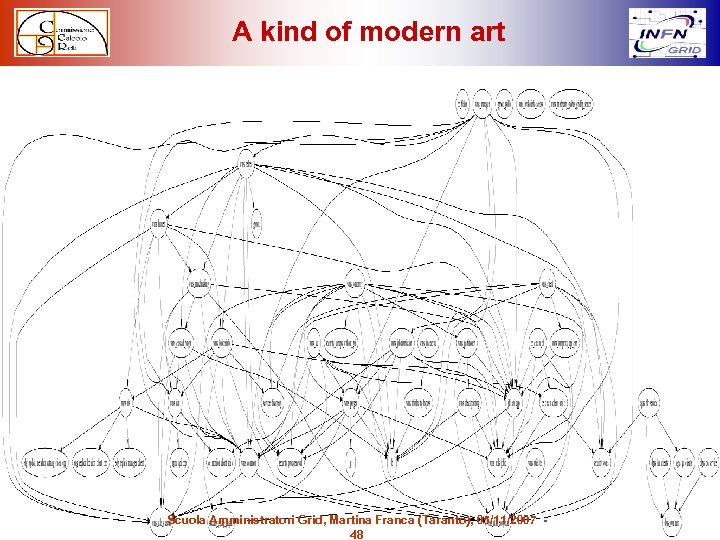

A kind of modern art
