2e62421ad852d0bcd2018c040cf3e836.ppt

- Number of slides: 27

Semantic Smoothing and Fabrication of Phrase Pairs for SMT
Boxing Chen, Roland Kuhn and George Foster
Dec. 9, 2011

Motivation
Phrase-based SMT depends on the phrase table, containing co-occurrence counts #(s, t), where s = source phrase and t = target phrase.
Typically, use two Relative Frequency (RF) features: P_RF(s|t) = #(s, t)/#t, P_RF(t|s) = #(s, t)/#s.
Smoothing of RF features
• Lexical weighting – uses probabilities relating words inside s and t.
• Black box phrase table smoothing & discounting techniques – depend only on #s, #t, and #(s, t) (Chen et al., 2011; Foster et al., 2006).
• Semantic Smoothing (SS) uses info about phrases that are semantically similar to s and t to smooth P(s|t) and P(t|s).
  – (Kuhn et al., 2010) give a “phrase cluster” version of SS where phrases are vectors in a bilingual space: in each language, put phrases into hard clusters & pool P(s|t) and P(t|s) estimates in each quasi-paraphrase cluster.
  – (Max 2010) smooths P(t|s) with information from paraphrases of s that have similar context in source-language training data.
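
The two RF features above can be illustrated with a small sketch. It assumes the marginal counts #s and #t are obtained by summing #(s, t) over the table, which is a simplification; the function name `relative_frequencies` and the toy counts are hypothetical, not taken from the slides.

```python
from collections import Counter

def relative_frequencies(pair_counts):
    """Return (P_RF(s|t), P_RF(t|s)) as dicts keyed by (s, t).

    Assumption: #s and #t are the sums of #(s, t) over the table.
    """
    src_totals = Counter()
    tgt_totals = Counter()
    for (s, t), c in pair_counts.items():
        src_totals[s] += c
        tgt_totals[t] += c
    p_s_given_t = {(s, t): c / tgt_totals[t] for (s, t), c in pair_counts.items()}
    p_t_given_s = {(s, t): c / src_totals[s] for (s, t), c in pair_counts.items()}
    return p_s_given_t, p_t_given_s

# Toy co-occurrence counts (illustrative only).
toy_counts = {("chat", "cat"): 8, ("chat", "kitty"): 2, ("matou", "cat"): 2}
p_s_t, p_t_s = relative_frequencies(toy_counts)
```

With so few observations for ("matou", "cat"), its P_RF(t|s) is 1.0, which is the kind of overconfident estimate that smoothing is meant to correct.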

Phrase clustering*
English-French example

| | mort | est mort | décédé | chat | … |
|---|---|---|---|---|---|
| dead | 1,000 | 100 | 100 | 0 | … 0 |
| kicked the bucket | 3 | 0 | 1 | 0 | … 0 |
| deceased | 15 | 6 | 10 | 0 | … 0 |
| Pooled (sum) | 1,018 | 106 | 111 | 0 | … 0 |

Only 4 observations for “kicked the bucket”: P_RF(* | “kicked the bucket”) is poorly estimated.
The pooled vector is used for P(*| “dead”), P(*| “kicked the bucket”), & P(*| “deceased”).

* This was the version of SS we described in (Kuhn et al., 2010).
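
The pooling in this example can be checked with a few lines of code. This is only a sketch: it keeps the four French phrases named on the slide and drops the elided zero-count dimensions, and the helper names `pool` and `normalize` are hypothetical.

```python
# Count vectors over the French phrases named on the slide.
french = ["mort", "est mort", "décédé", "chat"]
counts = {
    "dead": [1000, 100, 100, 0],
    "kicked the bucket": [3, 0, 1, 0],
    "deceased": [15, 6, 10, 0],
}

def pool(vectors):
    """Sum count vectors dimension by dimension."""
    return [sum(column) for column in zip(*vectors)]

def normalize(vec):
    """Turn a count vector into a probability distribution."""
    total = sum(vec)
    return [c / total for c in vec] if total else [0.0] * len(vec)

pooled = pool(counts.values())            # shared by all three phrases
own = normalize(counts["kicked the bucket"])  # unsmoothed, from 4 observations
shared = normalize(pooled)                # cluster-based estimate
```

The unsmoothed estimate for “kicked the bucket” puts probability 0.75 on “mort” from only four observations, while the pooled estimate draws on 1,235 observations.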

Phrase clustering
• But how did we decide to put “dead”, “kicked the bucket”, and “deceased” into the same hard cluster?
• In (Kuhn et al., 2010) & this paper, we only use information endogenous to the phrase table: no external info! (unless you count edit distance between same-language strings). This was an aesthetic choice; we could have used external info.
• The main endogenous info we use is the distance between vectors of co-occurrence counts (massaged with a weird form of tf-idf). Each English phrase is represented as a vector of counts of French phrases, & vice versa.

Phrase clustering
• Clustering phrases (Kuhn et al., 2010):
  – Compute distance (in lang. 2 space) for each pair of phrases in lang. 1.
  – Merge lang. 1 phrases into the same cluster if their distance is less than a predetermined threshold.
  – Result: set of clusters in lang. 1, each a group of quasi-paraphrases.
• Do same in lang. 2 using count vectors based on lang. 1 clusters.
• Go back to lang. 1 (using lang. 2 clusters); iterate until tired.
• Compute cluster-based P(s|t) & P(t|s) (don’t care if s = lang. 1 or 2 …); use as extra features for SMT.
[Figure: phrases “dead”, “kicked the bucket”, “deceased”, “lion”, “cat” before and after clustering]
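
One pass of the threshold-based merge might look like the sketch below. Several things here are assumptions rather than the method of (Kuhn et al., 2010): plain cosine distance stands in for the tf-idf-like distance, merging is transitive (single-link, via union-find), and the count vectors for “cat” and “lion” are invented for illustration.

```python
import math
from itertools import combinations

def cosine_distance(u, v):
    """1 - cosine similarity; a stand-in for the slides' distance score."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 1.0
    return 1.0 - dot / (norm_u * norm_v)

def threshold_clusters(vectors, threshold):
    """Merge phrases whose pairwise distance is below the threshold."""
    parent = {p: p for p in vectors}

    def find(p):
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path compression
            p = parent[p]
        return p

    for a, b in combinations(vectors, 2):
        if cosine_distance(vectors[a], vectors[b]) < threshold:
            parent[find(a)] = find(b)

    clusters = {}
    for p in vectors:
        clusters.setdefault(find(p), set()).add(p)
    return sorted(sorted(c) for c in clusters.values())

# English phrases as vectors of French co-occurrence counts (toy data).
vectors = {
    "dead": [1000, 100, 100, 0, 0],
    "kicked the bucket": [3, 0, 1, 0, 0],
    "deceased": [15, 6, 10, 0, 0],
    "cat": [0, 0, 0, 50, 0],
    "lion": [0, 0, 0, 0, 40],
}
clusters = threshold_clusters(vectors, threshold=0.2)
```

The alternation between the two languages described above would rerun this step with count vectors rebuilt over the other language's clusters; that loop is not shown.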

Related work
• Work on paraphrasing is somewhat related
  – Paraphrases are alternative ways of conveying the same information.
• SMT helps paraphrasing
  – Using SMT techniques to extract paraphrases from bilingual parallel corpora (Bannard and Callison-Burch, 2005)
• Paraphrasing helps SMT*
  – Paraphrase unseen words or phrases in the test set using additional parallel corpora (Callison-Burch et al., 2006)
  – Generate near-paraphrases of the source-side training sentences, then pair each paraphrased sentence with the target translation to enlarge the training data (Nakov 2008)
  – Paraphrase test source sentence to generate a lattice, then decode with the lattice (Du et al., 2010)
* Difference: these researchers are interested in finding new phrase pairs, not in smoothing probabilities.

Related work
(Max 2010) is much more closely related to our work:
• Extract all possible paraphrases p for a phrase f in the test set (this means it’s an online method) by pivot:
  – All target language phrases e aligned to f are first extracted
  – All source language phrases p aligned to e are extracted
  – (Could use data from other parallel data, even other language pairs, to get the paraphrases)
[Figure: pivot diagram linking f, target phrases e1 to e4, and paraphrases p1 to p4]
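
The pivot step can be sketched as two set lookups over a list of aligned phrase pairs. This is an illustration of the idea only, not Max's implementation; the function name `pivot_paraphrases` and the toy French-English pairs are hypothetical, and the heuristic filtering that follows in (Max 2010) is omitted.

```python
def pivot_paraphrases(f, phrase_pairs):
    """Return source phrases that share at least one target phrase with f.

    phrase_pairs: iterable of (source_phrase, target_phrase) tuples.
    """
    pairs = list(phrase_pairs)
    # Step 1: all target phrases e aligned to f.
    targets = {e for s, e in pairs if s == f}
    # Step 2: all source phrases p aligned to any such e (excluding f itself).
    return sorted({s for s, e in pairs if e in targets and s != f})

# Toy aligned pairs (illustrative only).
toy_pairs = [
    ("mort", "dead"),
    ("décédé", "dead"),
    ("décédé", "deceased"),
    ("chat", "cat"),
]
paraphrases = pivot_paraphrases("mort", toy_pairs)
```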

Related work
• (Max 2010) applies heuristic filtering to keep good paraphrases.
• Measures similarity between a source phrase f and its possible paraphrase p_i via context similarity; from this, gets a smoothed probability P_para(e_i|f).
• Context similarity is based on the presence of common n-grams in the immediate vicinity of two phrases (i.e., on the similarity of the context of f in the sentence being decoded with all the contexts where p_i was seen in the training data).
* NOTE: (Max 2010) also has a clever P_cont() feature unrelated to our IWSLT paper.

Related work
• (Max 2010) smoothing formula:
• sim_para(C(f), C(p_k)) is the similarity between the context of f in the current test sentence & the context of an observation of p_k in training data
• p_k is a paraphrase of f (same language)
• <p_k, e_i> is a biphrase from the training corpus, such that e_i is also a translation of f
• e_j is any possible translation of f
The bottom line: Max smooths P(e_i|f) = P(t|s) with information from paraphrases of f that have similar contexts to current f (= s).

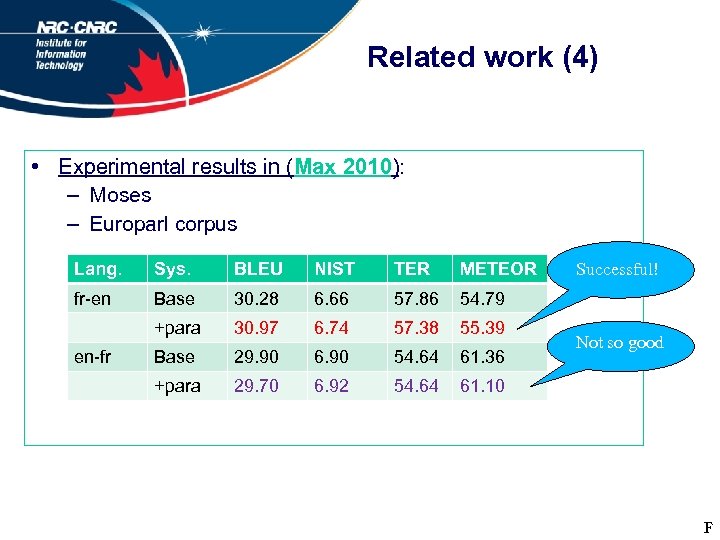

Related work (4)
• Experimental results in (Max 2010):
  – Moses
  – Europarl corpus

| Lang. | Sys. | BLEU | NIST | TER | METEOR |
|---|---|---|---|---|---|
| fr-en | Base | 30.28 | 6.66 | 57.86 | 54.79 |
| fr-en | +para | 30.97 | 6.74 | 57.38 | 55.39 |
| en-fr | Base | 29.90 | 6.90 | 54.64 | 61.36 |
| en-fr | +para | 29.70 | 6.92 | 54.64 | 61.10 |

fr-en: Successful! en-fr: Not so good.

Comparison: Max vs. NRC (2010 & 2011)
• Similarities:
  – Both smooth phrase pair conditional probabilities with information from paraphrases.
  – Both use only endogenous information: training data for system (Max) or system’s phrase table (NRC researchers).
• Differences:
  – Max implicitly distinguishes between senses of a phrase (a great advantage over NRC!)
  – For Max, once a paraphrase of f is chosen, its goodness doesn’t matter (personal comm.); NRC 2011 has rank discounting.
  – Max approach is online, NRC approach is at training time.
  – NRC uses edit distance between phrases as well as info in phrase table to determine paraphrases.
  – Max’s P_para() only smooths P(t|s); NRC smooths P(t|s) and P(s|t).
  – Max has no FPs.
  – A minor difference: NRC applies weird analogue of tf-idf to count vectors prior to hard or soft clustering.

IWSLT 2011 techniques (NN smoothing & FPs)
• Three main improvements compared with (Kuhn et al., 2010)
  – Using nearest neighbours (NNs) instead of phrase clusters (PC) to define the semantically similar phrase pairs. With NN, each phrase is smoothed with information from a different set of other phrases, i.e., soft clustering instead of hard clustering.
  – Rank-based discounting of information: assigns less importance to information from neighbours that are far away from the given phrase.
  – “Fabricating” phrase pairs that were not seen in training data but which are assigned high probability by the SS model, and using them for decoding. We call these pairs “FPs”.

NN smoothing
• Nearest neighbour (NN) vs. phrase cluster (PC)
[Figure: phrases plotted in the bilingual space, bigger font = more counts. With PC, “c” statistics are powerful in cluster 1 and “f” statistics dominate cluster 2. With NN, when smoothing “g”, the NNs are ranked: 1. “g” 2. “i” 3. “h” 4. “f”. The higher the rank number, the greater the discount, so “f” statistics don’t dominate here.]
• Estimates found by NN are more accurate than those found by PC, because PC is more likely to cluster together unrelated phrases.
• For NN, we weigh neighbours by distance rank: phrases with lots of observations that aren’t very close have less influence.
• Less computation than hard clustering: for PC, “rolling snowball” alg.

NN smoothing
• Distance score for phrase count vectors as in (Kuhn et al., 2010):
  – Tf-idf-like transform that emphasizes bursty phrases
  – As distance function, either (1 - cosine)*Edit*Dice or MAPL*Edit*Dice (where MAPL = a probabilistic function)
• Two parameters for choosing the “nearest neighbours” (NNs) that form the soft cluster around the current phrase p:
  – We only consider the closest M neighbours of p
  – Among those, only choose those that are less than a threshold distance F away from p
  – We did some tuning of M & F on held-out data; ended up being very conservative (M=10, but most phrases are not smoothed)
• We don’t iterate back & forth between source & target language as we did in the 2010 paper: the training algorithm is 1-pass.
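
The two-parameter neighbour selection can be sketched as follows, assuming the distances from p to the candidate phrases have already been computed with one of the distance functions above. The function name `nearest_neighbours` and the toy distances are hypothetical; only the roles of M and F come from the slide.

```python
def nearest_neighbours(distances, M, F):
    """Pick the soft cluster around a phrase p.

    distances: dict mapping each candidate phrase to its distance from p.
    M: consider only the M closest candidates.
    F: among those, keep only candidates closer than F.
    Returns the kept phrases, nearest first.
    """
    ranked = sorted(distances.items(), key=lambda item: item[1])
    return [phrase for phrase, d in ranked[:M] if d < F]

# Toy distances from a phrase "g" to four other phrases (illustrative only).
toy_distances = {"f": 0.30, "h": 0.20, "i": 0.10, "z": 0.90}
kept = nearest_neighbours(toy_distances, M=3, F=0.25)
```

A small F leaves many phrases with an empty neighbour list, which matches the remark that most phrases end up not being smoothed.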

NN smoothing
• Conditional Probability Estimation
  – Similar to what we used in (Kuhn et al., 2010)
  – Except that we’re using rank-based discounted counts instead of real counts, so the formulas are more complicated. In them, e.g., r(N(s_i)) is a count vector computed around phrase s_i from the vector for s_i and its rank-discounted NNs.

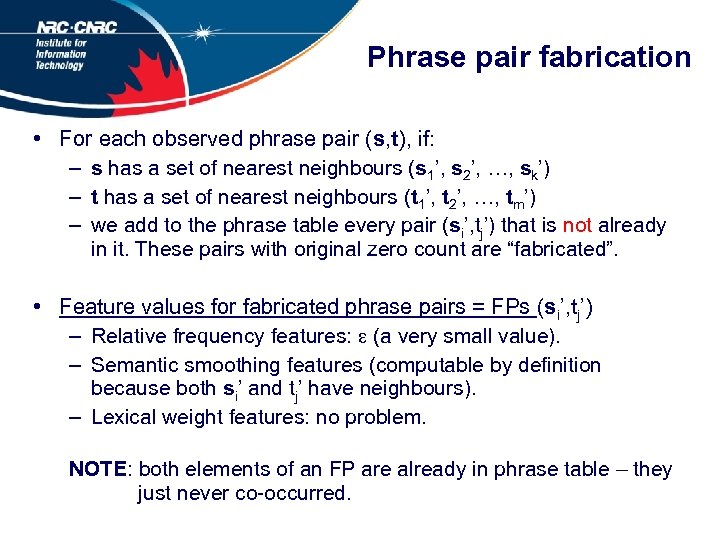
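
A rank-discounted count vector of the kind described for r(N(s_i)) could be built as in the sketch below. The discount function used here, 1/rank, is purely an assumption for illustration; the slides do not give the actual discount in text form, and the function names are hypothetical.

```python
def rank_discounted_counts(ranked_vectors, discount=lambda rank: 1.0 / rank):
    """Combine count vectors, down-weighting by neighbour rank.

    ranked_vectors: count vectors ordered nearest first; rank 1 is the
    phrase itself. The default 1/rank discount is a placeholder assumption.
    """
    pooled = [0.0] * len(ranked_vectors[0])
    for rank, vec in enumerate(ranked_vectors, start=1):
        weight = discount(rank)
        for k, count in enumerate(vec):
            pooled[k] += weight * count
    return pooled

def to_probabilities(vec):
    """Normalize a (possibly fractional) count vector."""
    total = sum(vec)
    return [c / total for c in vec] if total > 0 else [0.0] * len(vec)

# Phrase itself, then its 2nd- and 3rd-ranked neighbours (toy counts).
ranked = [[4, 0], [2, 2], [0, 9]]
smoothed = rank_discounted_counts(ranked)
probs = to_probabilities(smoothed)
```

Passing a different `discount` callable changes how quickly distant neighbours lose influence without touching the rest of the computation.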

Phrase pair fabrication
• For each observed phrase pair (s, t), if:
  – s has a set of nearest neighbours (s1’, s2’, …, sk’)
  – t has a set of nearest neighbours (t1’, t2’, …, tm’)
  – we add to the phrase table every pair (si’, tj’) that is not already in it. These pairs with original zero count are “fabricated”.
• Feature values for fabricated phrase pairs = FPs (si’, tj’)
  – Relative frequency features: (a very small value).
  – Semantic smoothing features (computable by definition because both si’ and tj’ have neighbours).
  – Lexical weight features: no problem.
NOTE: both elements of an FP are already in the phrase table; they just never co-occurred.
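
The fabrication rule can be sketched as a triple loop over observed pairs and neighbour lists. This follows the slide's description literally; the function name `fabricate_pairs`, the data layout, and the toy neighbour lists are hypothetical, and feature computation for the new pairs is not shown.

```python
def fabricate_pairs(observed, src_nn, tgt_nn):
    """Return phrase pairs (s', t') that never co-occurred in training.

    observed: set of (s, t) pairs in the phrase table.
    src_nn / tgt_nn: dicts mapping a phrase to its list of nearest neighbours;
    phrases without neighbours are simply absent.
    """
    fabricated = set()
    for s, t in observed:
        for s_prime in src_nn.get(s, ()):
            for t_prime in tgt_nn.get(t, ()):
                if (s_prime, t_prime) not in observed:
                    fabricated.add((s_prime, t_prime))
    return fabricated

# Toy phrase table and neighbour lists (illustrative only).
observed = {("dead", "mort"), ("dead", "est mort"), ("deceased", "décédé")}
src_nn = {"dead": ["deceased"]}
tgt_nn = {"mort": ["décédé", "est mort"]}
fps = fabricate_pairs(observed, src_nn, tgt_nn)
```

In this toy case both “deceased” and “est mort” already occur in the table, but never together, so their pairing is the only fabricated entry.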

Experiments: CE
– NRC’s Portage system, trained on NIST Chinese-English (CE) data (& extra LM from Eng. Gigaword)
– Test set: NIST 08
CE BLEU improvement vs. training data size

| Training (#sent.) | Baseline | +SS | +SS+FP |
|---|---|---|---|
| 25 K | 15.87 | 16.00 | 16.08 |
| 50 K | 17.88 | 18.08 | 18.12 |
| 100 K | 19.64 | 19.83 | 20.06 |
| 200 K | 21.27 | 21.61 | 21.72 |
| 400 K | 22.66 | 22.97 | 23.05 |
| 800 K | 23.91 | 24.13 | 24.23 |
| 1.6 M | 25.10 | 25.30* | |
| 3.3 M | 26.47 | 26.59* | |

* Only two scores are listed for this training size.

Experiments: FE
– NRC’s Portage system, trained on Europarl French-English (FE) data (& extra LM from Giga. Fr. En)
– Test set: WMT Newstest 2010
FE BLEU improvement vs. training data size

| Training (#sent.) | Baseline | +SS | +SS+FP |
|---|---|---|---|
| 25 K | 20.67 | 20.79 | 20.81 |
| 50 K | 22.13 | 22.37 | 22.41 |
| 100 K | 23.36 | 23.60 | 23.67 |
| 200 K | 24.23 | 24.54 | 24.59 |
| 400 K | 25.15 | 25.34 | 25.39 |
| 800 K | 25.8 | 25.92 | 25.94 |
| 1.6 M | 26.58 | 26.71* | |

* Only two scores are listed for this training size.

Experiments: PC vs. NN vs. (NN + FP)
• Our current method (NN + FP) and the hard-clustering PC one in (Kuhn et al., 2010) had a similar pattern: best results for medium amount of training data.
• But which is better?
• BLEU results below are encouraging, but not conclusive. We prefer our current approach anyway: it’s more elegant & less computationally intensive.

| Training data | Test set | Baseline | +PC | +NN | +NN+FP |
|---|---|---|---|---|---|
| FBIS, CE | NIST 08 | 23.11 | 23.59 | 23.70 | 23.85 |
| 400 K, CE | NIST 08 | 22.66 | 22.90 | 22.97 | 23.05 |
| 200 K, FE | Newstest 2010 | 24.23 | 24.40 | 24.54 | 24.59 |

Analysis
• Only a small proportion of the phrases are involved in NN semantic smoothing: most don’t have useful neighbours.
• Statistics for test data (200 K CE system, 200 K FE system)
  – About 15% of phrase pairs used in CE decoding are smoothed
  – About 17% of phrase pairs used in FE decoding are smoothed
  – Even fewer of all phrase pairs in phrase table are smoothed
[Figures: CE, number of NNs per decoding phrase; FE, number of NNs per decoding phrase]

Analysis
• Fabricated pairs: only a tiny number of FPs is ever used for decoding, & the number used goes down as the amount of training data increases. E.g., 185 FPs were used for decoding by the 25 K CE system, but only 31 by the 200 K CE system.
[Figure: % of decoding pairs that are fabricated vs. #training sent.]

FE Examples
English translation and impact (Ref = reference, BL = baseline, SS = semantic smoothing)
• Example 1, impact: QUAL+ BLEU+
  – Ref: … a smartphone with two operating systems.
  – BL: … a smartphone equipped with two systems of exploitation.
  – SS: … a smartphone equipped with two operating systems.
• Example 2, impact: QUAL= BLEU-
  – Ref BL SS: access to many web sites is restricted. access to many websites is limited.
• Example 3, impact: QUAL+ BLEU+
  – Ref: it is not for want of trying.
  – BL: this is no fault to try.
  – SS: this is not without trying.
• Example 4, impact: QUAL BLEU-
  – Ref: a nice evening , but tomorrow the work would continue.
  – BL: he had spent a pleasant evening but tomorrow should resume work.
  – SS: he had spent a pleasant evening but tomorrow we should go back to work.
NOTE: SS tends to increase fluency, because it encourages use of generally frequent target-language phrases. Sometimes, this may hurt adequacy.

Discussion
• New ideas compared to (Kuhn et al., 2010):
  1. NNs = soft clustering
  2. rank-based discounting
  3. decode with Fabricated Pairs (FPs)
• Why is the improvement due to PC, NN and NN + FP over the “no SS” baseline always biggest for medium amounts of data? Suggestion: if a phrase has too few observations, we will assign the wrong neighbours to it & SS won’t work well. If a phrase has many observations, it doesn’t need smoothing! So SS works best when many phrases have a moderate # of observations.
• Fabrication only has a modest effect on BLEU. However, the idea of « filling in » holes in the phrase table will make more & more sense as we get more & more information sources to vouch for unseen phrase pairs: lexical weights + paraphrases + syntax + …
• Both NN and NN + FP may increase fluency (probably not adequacy).

Discussion
• SS and SS + FP both only have a modest effect on BLEU. Should we give up?
• NO. Analysis shows only a small fraction of phrases are affected by SS; for these, it clearly helps a lot. We need to find a way of applying SS to most phrases.
• Our work’s biggest flaw: we don’t distinguish between different senses of a phrase. E.g., it would be crazy to smooth « red » with « scarlet » or « red » with « Marxist »; we should smooth one sense of « red » with « scarlet » and another sense of « red » with « Marxist ».
• Max’s work does take multiple senses into account via source-language context. But he doesn’t use bilingual space closeness, and he smooths P(t|s) but not P(s|t). The two approaches should be hybridized!

Discussion
• Could also improve SS by using exogenous as well as endogenous (phrase table only) information. Lots of techniques in paraphrasing literature: data from other language pairs, patterns, etc.
• We have a vector space for each language. Why not look for hidden structure via PCA or LSA?
• Why not reformulate SS as a feature-based model, so best weights for neighbours could be tuned automatically (e.g., as function of different types of vector distances, distance rank, counts, string distances, contexts in test corpus …)?

References (1)
C. Bannard and C. Callison-Burch. “Paraphrasing with Bilingual Parallel Corpora”. Proc. ACL, pp. 597-604, Ann Arbor, USA, June 2005.
C. Callison-Burch, P. Koehn, and M. Osborne. “Improved Statistical Machine Translation Using Paraphrases”. Proc. HLT/NAACL, pp. 17-24, New York City, USA, June 2006.
B. Chen, R. Kuhn, G. Foster, and H. Johnson. “Unpacking and transforming feature functions: new ways to smooth phrase tables”. MT Summit XIII, Xiamen, China, Sept. 2011.
G. Foster, R. Kuhn, and H. Johnson. “Phrasetable smoothing for statistical machine translation”. Proc. EMNLP, pp. 53-61, Sydney, Australia, July 2006.
N. Habash. “REMOOV: A Tool for Online Handling of Out-of-Vocabulary Words in Machine Translation”. Proc. 2nd Int. Conf. on Arabic Language Resources and Tools (MEDAR), Cairo, Egypt, 2009.

References (2)
H. Johnson, J. Martin, G. Foster, and R. Kuhn. “Improving Translation Quality by Discarding Most of the Phrasetable”. Proc. EMNLP, Prague, Czech Republic, June 28-30, 2007.
R. Kuhn, B. Chen, G. Foster and E. Stratford. “Phrase Clustering for Smoothing TM Probabilities – or, How to Extract Paraphrases from Phrase Tables”. Proc. COLING, pp. 608-616, Beijing, China, August 2010.
Y. Marton, C. Callison-Burch, and P. Resnik. “Improved Statistical Machine Translation Using Monolingually-Derived Paraphrases”. Proc. EMNLP, pp. 381-390, Singapore, August 2009.
A. Max. “Example-Based Paraphrasing for Improved Phrase-Based Statistical Machine Translation”. Proc. EMNLP, pp. 656-666, MIT, Massachusetts, USA, October 2010.
