14a3d0b187254b0720481715e572bf75.ppt

- Number of slides: 37

SEE-GRID-SCI
SA1 – Infrastructure Operations: Overview and Achievements
www.see-grid-sci.eu
PSC05 Meeting, Dubrovnik, 9-11 September 2009
Antun Balaz, SA1 Leader, Institute of Physics Belgrade, antun@ipb.ac.rs
The SEE-GRID-SCI initiative is co-funded by the European Commission under the FP7 Research Infrastructures contract no. 211338

Overview

- SA1 objectives, metrics, activities
- SA1 deliverables status
- SA1 milestones status
- Infrastructure development
- Infrastructure management
- Service Level Agreement
- Infrastructure usage
- Network link to Moldova status
- Collaboration/Interoperation
- Action points

SA1 objectives and metrics

Objective 2: Providing infrastructure for new communities

- O2.1: Expand the current infrastructure
  - MTSA1.1: Increase in the number of computing and storage resources (tables given in DoW)
- O2.2: Inclusion of Armenia and Georgia
  - MTSA1.2: Number of Grid sites and processing and storage resources (tables given in DoW)
- O2.3: Achieve high reliability, availability and automation
  - MTSA1.3: Increase of the average overall Grid site availability (M01 >= 70%, M12 >= 75%, M24 >= 81%)
  - MTSA1.4: Number of successful jobs run as % of total jobs (M01 >= 50%, M12 >= 55%, M24 >= 60%)
  - MTSA1.5: Number of management tools expanded or developed (plus achieving tools integration and automation)
- O2.4: Provision of the network link to Moldova

SA1 activities

- SA1.1: Implementation of the advanced SEE-GRID infrastructure
  - SA1.1.1: Expand the existing SEE-GRID infrastructure and deploy Grid middleware components and OS in SEE Resource Centers
  - SA1.1.2: Operate the SEE-GRID infrastructure
  - SA1.1.3: Deploy and operate the core services for new VOs
  - SA1.1.4: Catch-all CA and deployment and operational support for new and emerging Grid CAs
  - SA1.1.5: Certify and migrate SEE-GRID sites from regional to global production-level eInfrastructure
- SA1.2: Resource Centre SLA monitoring and enforcement
  - SA1.2.1: SLA detailed specification, identification and deployment of operational tools relevant for SLA monitoring
  - SA1.2.2: Monitoring, assessment and enforcement of RC conformance to SLA
- SA1.3: Network Resource Provision
  - SA1.3.1: Network resource provision and liaison with regional eInfrastructure networking projects
  - SA1.3.2: Procurement of a link between Moldova and GEANT

SA1 deliverables status

- DSA1.1a: Infrastructure Deployment Plan (M04)
  - CERN, Editor: D. Stojiljkovic
- DSA1.2: SLA detailed specification and related monitoring tools (M05)
  - UOBL, Editor: M. Savic
- DSA1.3a: Infrastructure overview and assessment (M12)
  - UKIM, Editor: B. Jakimovski
- DSA1.1b: Infrastructure Deployment Plan (M14)
  - UOB-IPB, Editor: A. Balaz
- DSA1.3b: Infrastructure overview and assessment (M23)
  - UKIM, Editor: B. Jakimovski

SA1 milestones status

- MSA1.1: Infrastructure deployment plan defined (M04)
  - CERN (verified by DSA1.1a)
- MSA1.2: SLA structure and enforcement plan defined (M05)
  - UoBL (verified by DSA1.2)
- MSA1.3: Network link for Moldova established (M23)
  - RENAM (verified by the operational link to MD and DSA1.3b)
- MSA1.4: Infrastructure performance and usage assessed (M23)
  - UKIM (verified by DSA1.3b)

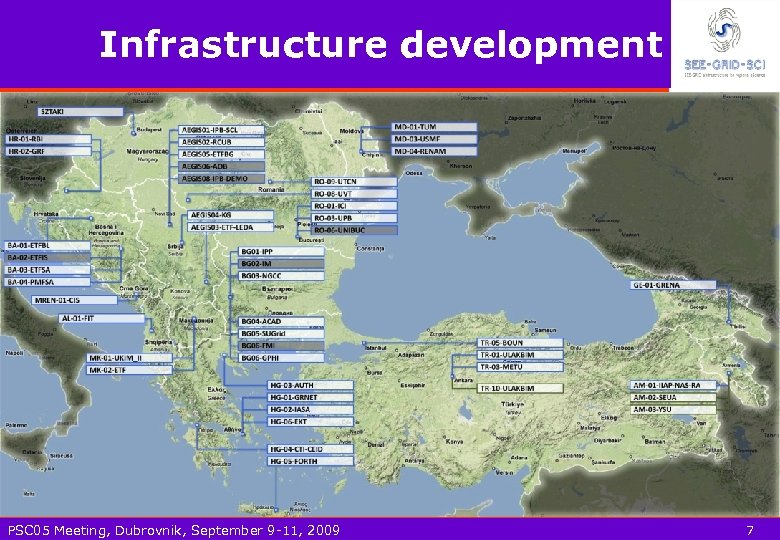

Infrastructure development

Core services

- Catch-all Certification Authority
  - Enables regional sites to obtain user and host certificates
- Virtual Organisation Management Service (VOMS)
  - For each scientific community, deployed in two instances for failover
  - Supports groups and roles
- Workload management service (glite-WMS/LB) and Information Services (BDII)
  - For each scientific community, deployed in several instances for failover
- Logical File Catalogue (LFC)
  - For each scientific community, deployed in several instances for failover
- MyProxy
  - Supports certificate renewal for all deployed WMS/RB services
  - For each scientific community, deployed in several instances for failover
- File Transfer Service (FTS)
  - Used in production
- Relational Grid Monitoring Architecture (R-GMA), Registry and Schema
  - SEE-GRID accounting publisher, with support for MPI job accounting
- AMGA Metadata Catalogue

Core services map

Certificate authorities map: M01
Map legend: Catch All CA, Candidate CA, Established CA, Training CA, New CA, RA

Certificate authorities map: M12
Map legend: Catch All CA, Candidate CA, Established CA, Training CA, New CA, RA

Infrastructure expansion (1)

- The SEE-GRID-SCI infrastructure currently contains the following resources:
  - Dedicated CPUs: 1086 total (increase in Y1: 318 CPUs)
  - Storage: 288 TB (237 TB more than planned at the end of Y1)
- 40 sites in SEE-GRID-SCI production (increase in Y1: 6 sites)
- Typical machine configuration: dual or quad-core CPUs, with 1 GB of RAM per CPU core; many sites with 64-bit architecture
- All sites on gLite-3.1; Scientific Linux 4.x used as a base OS, but others also present (CentOS, Debian)
- Metric MTSA1.1 generally fulfilled
- Armenia and Georgia have deployed new Grid sites and joined the SEE-GRID infrastructure – MTSA1.2
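The Y1 increments quoted on this slide imply the starting values. A minimal sketch of that arithmetic follows. It assumes the quoted totals are end-of-Y1 figures (the slide only says "currently"), and the variable names are illustrative.

```python
# Totals and Y1 increments as quoted on the slide.
cpus_total, cpus_added = 1086, 318
sites_total, sites_added = 40, 6
storage_tb, storage_above_plan_tb = 288, 237

# Assumption: the totals are end-of-Y1 values, so baseline = total - increase.
cpus_start = cpus_total - cpus_added
sites_start = sites_total - sites_added
# Storage is quoted relative to the plan, not to the start of Y1.
storage_planned_tb = storage_tb - storage_above_plan_tb

# Relative growth over Y1, in percent, rounded to one decimal place.
cpu_growth_pct = round(100 * cpus_added / cpus_start, 1)
site_growth_pct = round(100 * sites_added / sites_start, 1)

print(f"CPUs at start of Y1: {cpus_start} (growth {cpu_growth_pct}%)")
print(f"Sites at start of Y1: {sites_start} (growth {site_growth_pct}%)")
print(f"Storage planned for end of Y1: {storage_planned_tb} TB")
```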

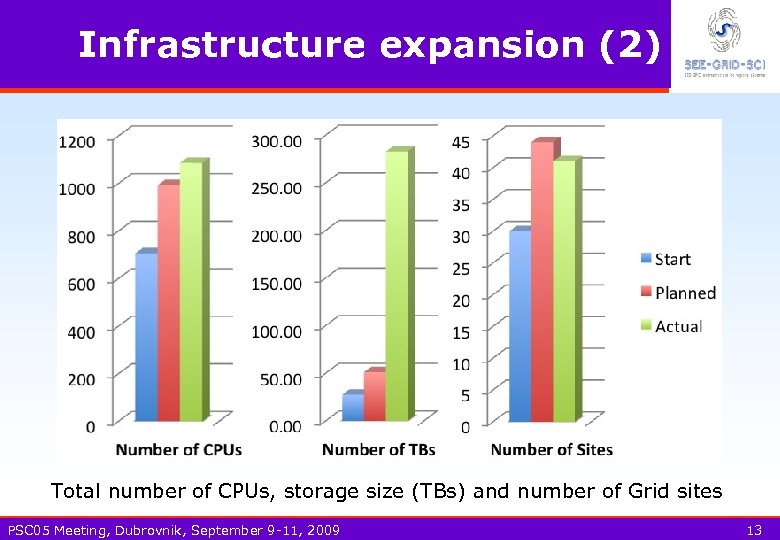

Infrastructure expansion (2)
Total number of CPUs, storage size (TB) and number of Grid sites

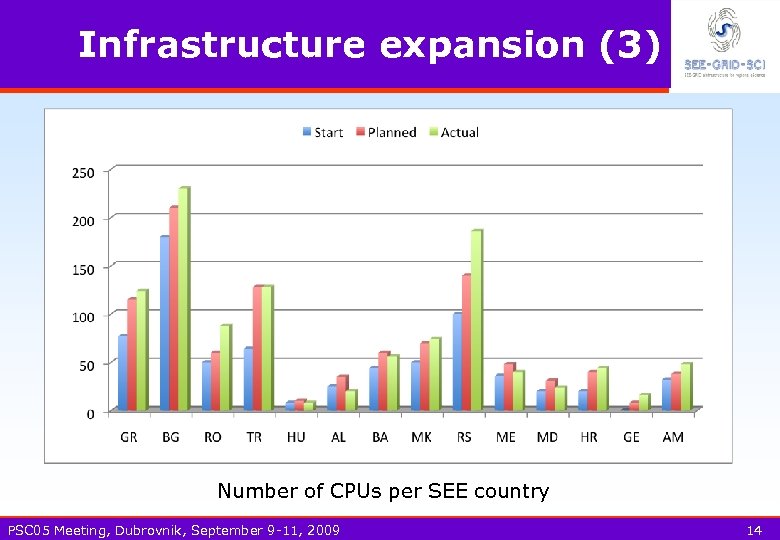

Infrastructure expansion (3)
Number of CPUs per SEE country

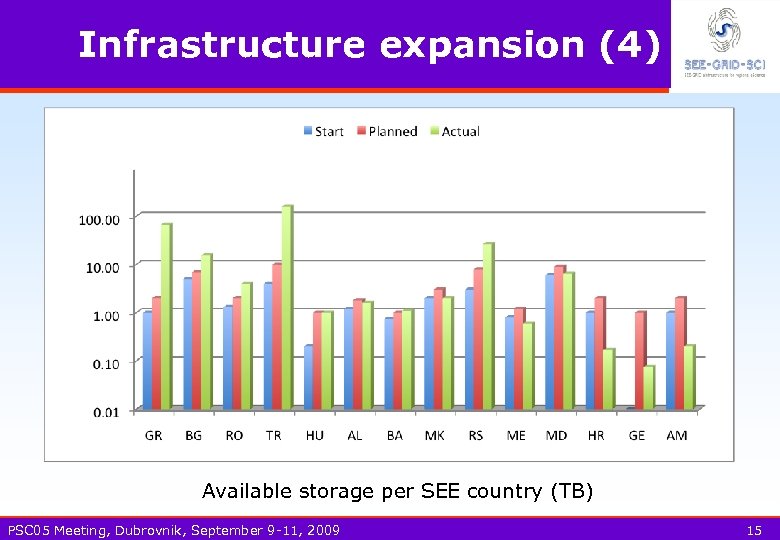

Infrastructure expansion (4)
Available storage per SEE country (TB)

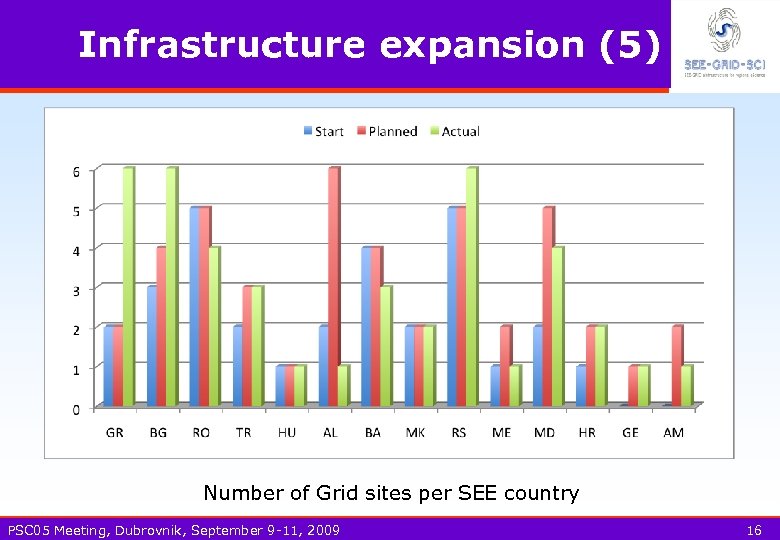

Infrastructure expansion (5)
Number of Grid sites per SEE country

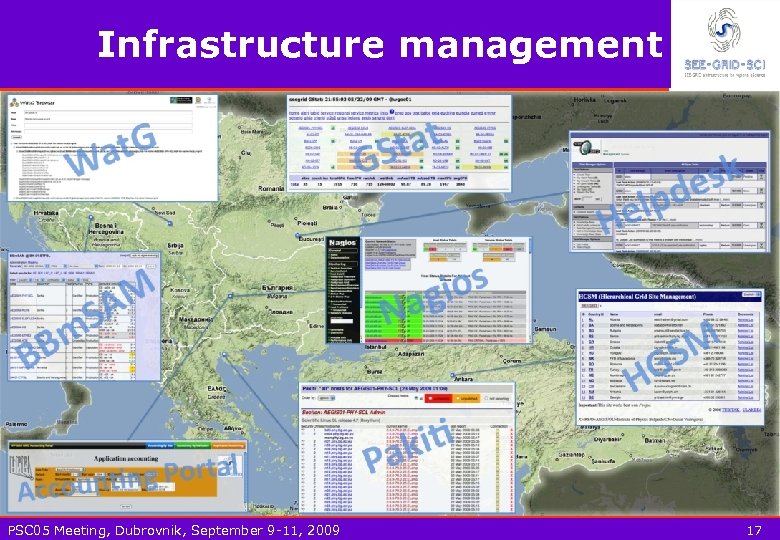

Infrastructure management

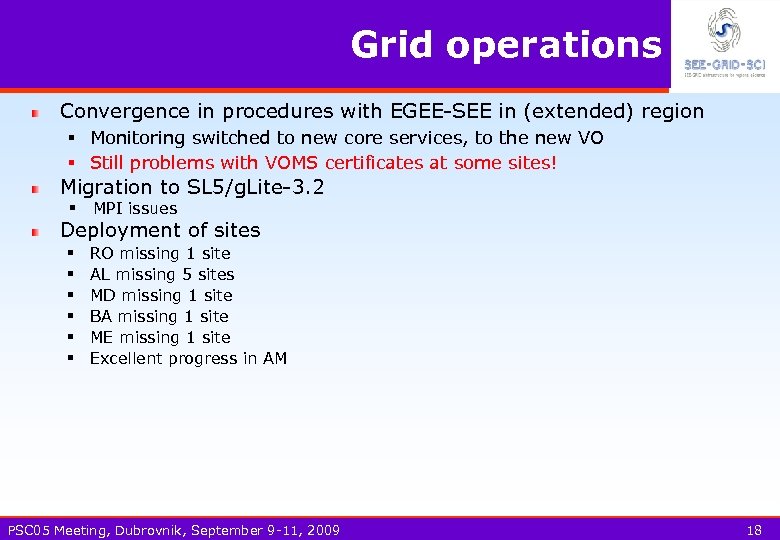

Grid operations

- Convergence in procedures with EGEE-SEE in (extended) region
  - Monitoring switched to new core services, to the new VO
  - Still problems with VOMS certificates at some sites!
- Migration to SL5/gLite-3.2
  - MPI issues
- Deployment of sites
  - RO missing 1 site
  - AL missing 5 sites
  - MD missing 1 site
  - BA missing 1 site
  - ME missing 1 site
  - Excellent progress in AM

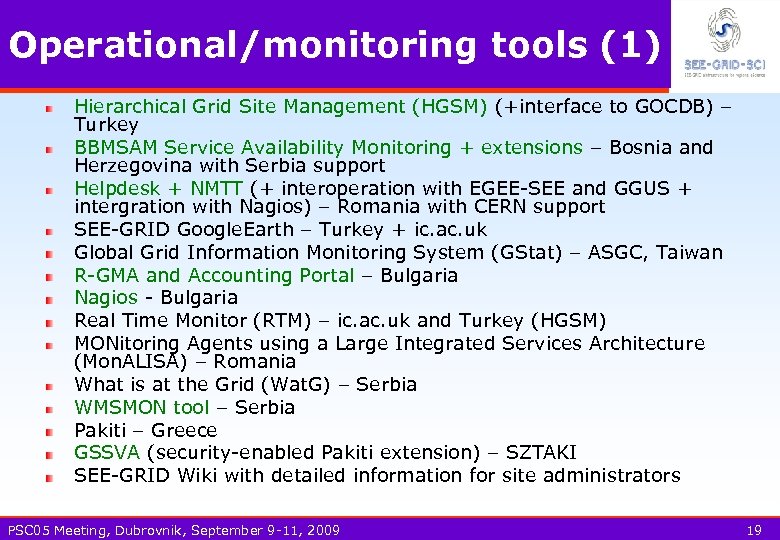

Operational/monitoring tools (1)

- Hierarchical Grid Site Management (HGSM) (+ interface to GOCDB) – Turkey
- BBmSAM Service Availability Monitoring + extensions – Bosnia and Herzegovina with Serbia support
- Helpdesk + NMTT (+ interoperation with EGEE-SEE and GGUS + integration with Nagios) – Romania with CERN support
- SEE-GRID GoogleEarth – Turkey + ic.ac.uk
- Global Grid Information Monitoring System (GStat) – ASGC, Taiwan
- R-GMA and Accounting Portal – Bulgaria
- Nagios – Bulgaria
- Real Time Monitor (RTM) – ic.ac.uk and Turkey (HGSM)
- MONitoring Agents using a Large Integrated Services Architecture (MonALISA) – Romania
- What is at the Grid (WatG) – Serbia
- WMSMON tool – Serbia
- Pakiti – Greece
- GSSVA (security-enabled Pakiti extension) – SZTAKI
- SEE-GRID Wiki with detailed information for site administrators

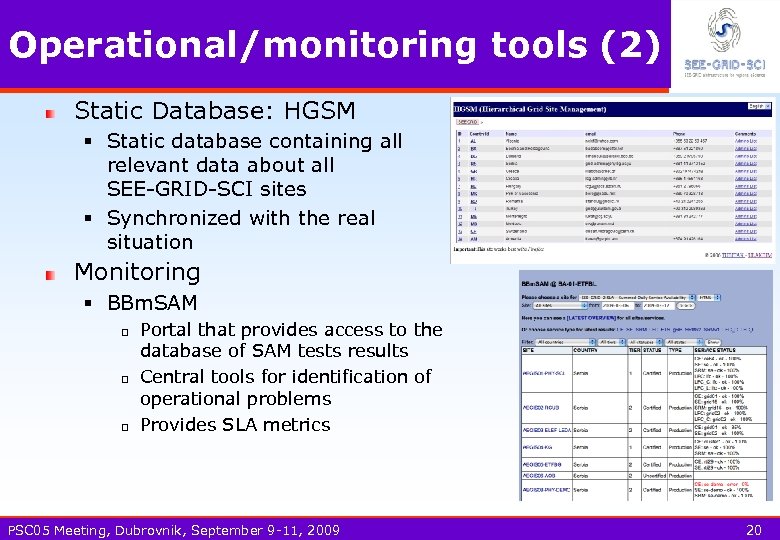

Operational/monitoring tools (2)

- Static Database: HGSM
  - Static database containing all relevant data about all SEE-GRID-SCI sites
  - Synchronized with the real situation
- Monitoring
  - BBmSAM
    - Portal that provides access to the database of SAM test results
    - Central tool for identification of operational problems
    - Provides SLA metrics

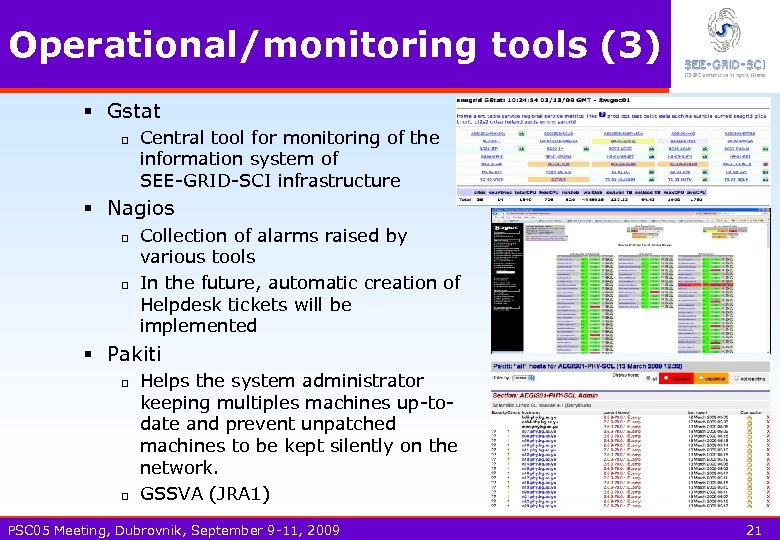

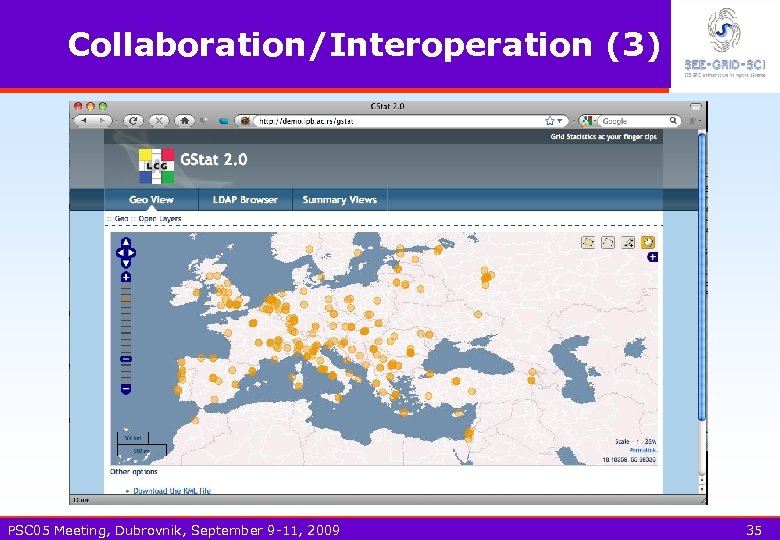

Operational/monitoring tools (3)

- GStat
  - Central tool for monitoring the information system of the SEE-GRID-SCI infrastructure
- Nagios
  - Collection of alarms raised by various tools
  - In the future, automatic creation of Helpdesk tickets will be implemented
- Pakiti
  - Helps the system administrator keep multiple machines up to date and prevents unpatched machines from staying silently on the network
  - GSSVA (JRA1)

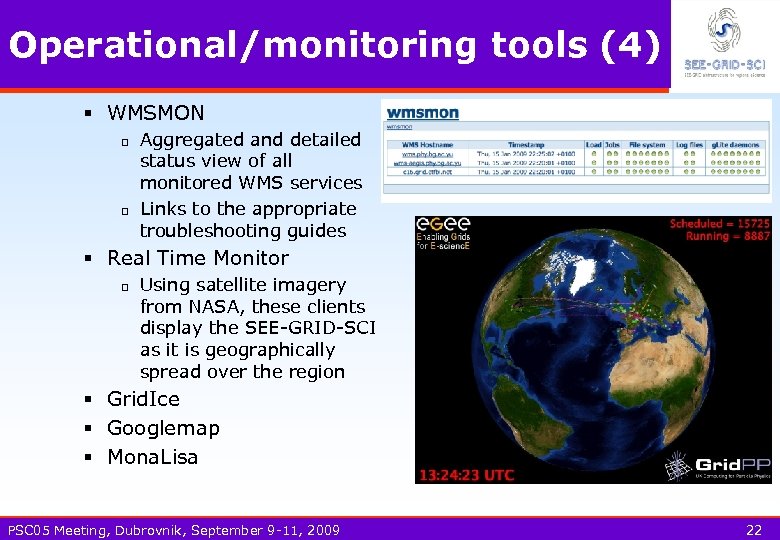

Operational/monitoring tools (4)

- WMSMON
  - Aggregated and detailed status view of all monitored WMS services
  - Links to the appropriate troubleshooting guides
- Real Time Monitor
  - Using satellite imagery from NASA, the RTM clients display the SEE-GRID-SCI infrastructure as it is geographically spread over the region
- GridIce
- Googlemap
- MonALISA

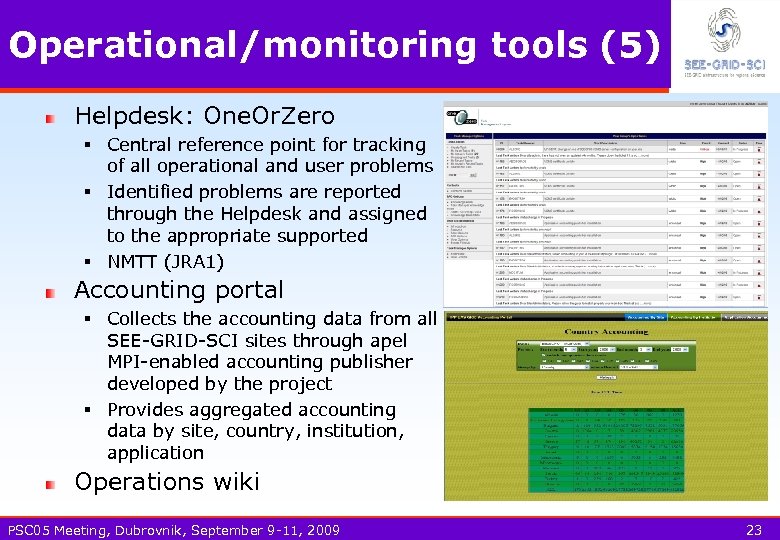

Operational/monitoring tools (5)

- Helpdesk: OneOrZero
  - Central reference point for tracking all operational and user problems
  - Identified problems are reported through the Helpdesk and assigned to the appropriate supporter
  - NMTT (JRA1)
- Accounting portal
  - Collects the accounting data from all SEE-GRID-SCI sites through the APEL MPI-enabled accounting publisher developed by the project
  - Provides aggregated accounting data by site, country, institution, application
- Operations wiki
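The aggregation performed by the accounting portal can be pictured as a group-by over per-job records. The sketch below is only an illustration. The record fields, site and institution names, and numbers are invented, and it does not reflect the portal's actual schema or the APEL publisher format.

```python
from collections import defaultdict

# Hypothetical per-job accounting records (all values invented for illustration).
records = [
    {"site": "SITE-A", "country": "RS", "institution": "INST-1",
     "application": "app-1", "cpu_hours": 120.0},
    {"site": "SITE-A", "country": "RS", "institution": "INST-1",
     "application": "app-2", "cpu_hours": 30.0},
    {"site": "SITE-B", "country": "BG", "institution": "INST-2",
     "application": "app-1", "cpu_hours": 50.0},
]


def aggregate(records, key):
    """Sum job counts and CPU hours, grouped by the given record field."""
    totals = defaultdict(lambda: {"jobs": 0, "cpu_hours": 0.0})
    for record in records:
        bucket = totals[record[key]]
        bucket["jobs"] += 1
        bucket["cpu_hours"] += record["cpu_hours"]
    return dict(totals)


# The four views named on the slide.
by_site = aggregate(records, "site")
by_country = aggregate(records, "country")
by_institution = aggregate(records, "institution")
by_application = aggregate(records, "application")

print(by_site)
```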

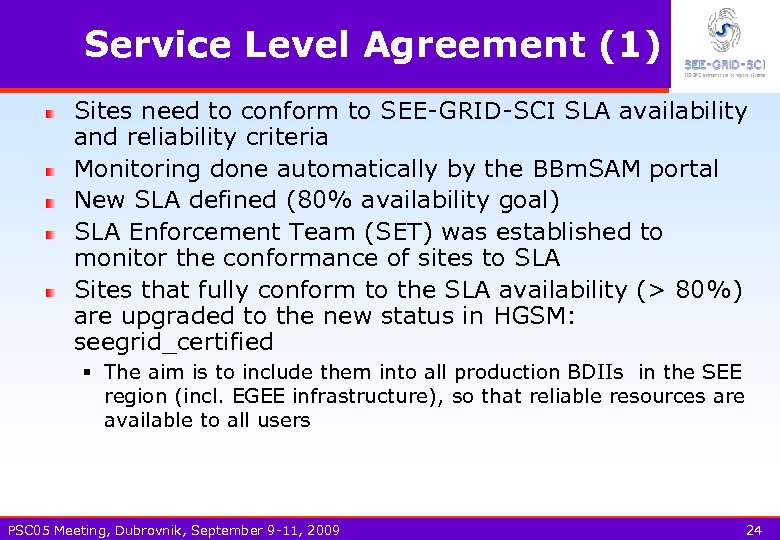

Service Level Agreement (1)

- Sites need to conform to SEE-GRID-SCI SLA availability and reliability criteria
- Monitoring done automatically by the BBmSAM portal
- New SLA defined (80% availability goal)
- SLA Enforcement Team (SET) was established to monitor the conformance of sites to SLA
- Sites that fully conform to the SLA availability (> 80%) are upgraded to the new status in HGSM: seegrid_certified
  - The aim is to include them into all production BDIIs in the SEE region (incl. EGEE infrastructure), so that reliable resources are available to all users
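The certification rule on this slide is a simple threshold test. A minimal sketch follows, assuming the comparison is strict ("> 80%" as written). The site names and availability figures are hypothetical, and the function is not part of BBmSAM or HGSM.

```python
SLA_AVAILABILITY_THRESHOLD = 80.0  # percent, the SLA availability goal on the slide


def conforms_to_sla(availability_pct: float) -> bool:
    """Return True when availability is strictly above the SLA threshold."""
    return availability_pct > SLA_AVAILABILITY_THRESHOLD


# Hypothetical measured availabilities per site (invented values).
site_availability = {"SITE-A": 91.2, "SITE-B": 80.0, "SITE-C": 64.5}

# Sites that would qualify for the seegrid_certified status under this rule.
certified = sorted(
    site for site, pct in site_availability.items() if conforms_to_sla(pct)
)
print(certified)
```

Under the strict reading a site at exactly 80% does not qualify. If the SLA treats the goal as inclusive, the comparison would be `>=` instead.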

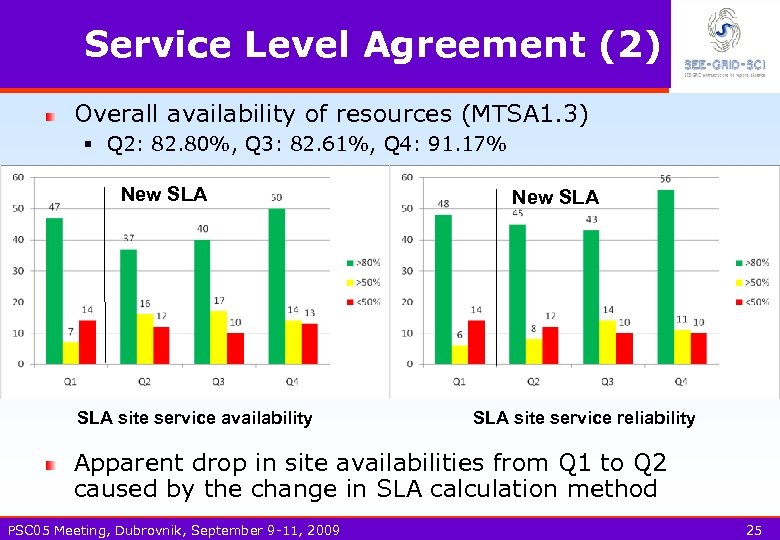

Service Level Agreement (2)

- Overall availability of resources (MTSA1.3)
  - Q2: 82.80%, Q3: 82.61%, Q4: 91.17%
- Charts: New SLA site service availability; New SLA site service reliability
- Apparent drop in site availabilities from Q1 to Q2 caused by the change in SLA calculation method

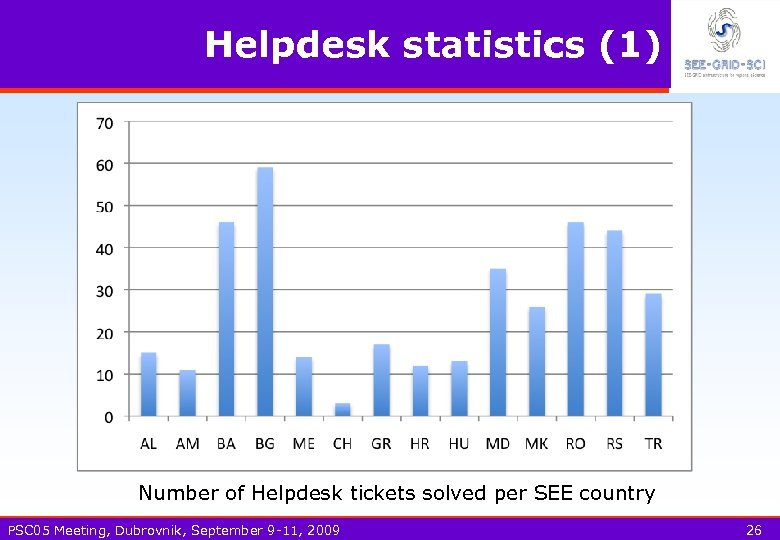

Helpdesk statistics (1)
Number of Helpdesk tickets solved per SEE country

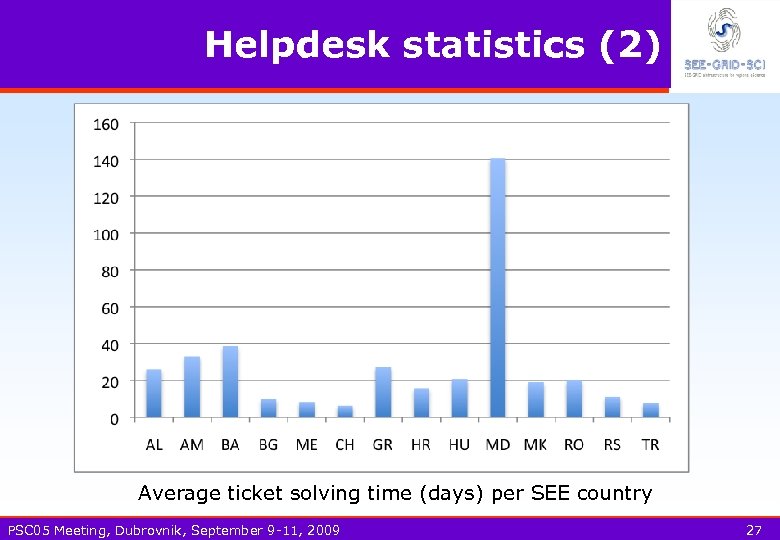

Helpdesk statistics (2)
Average ticket solving time (days) per SEE country

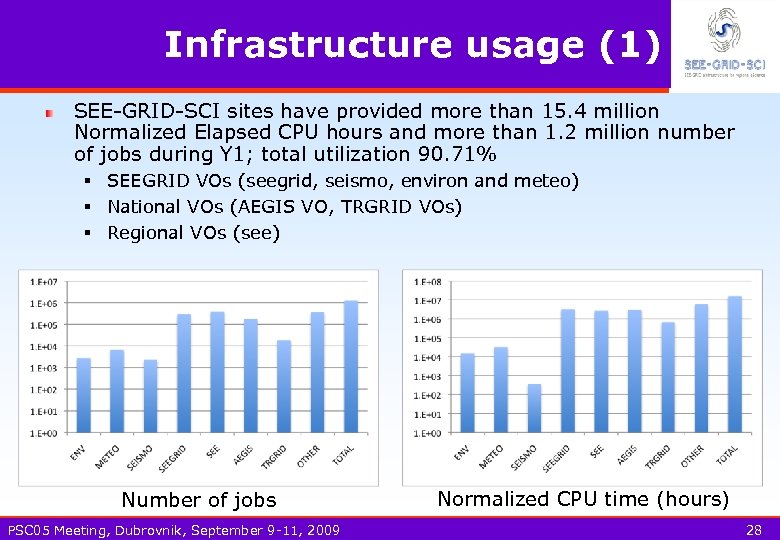

Infrastructure usage (1)

- SEE-GRID-SCI sites provided more than 15.4 million normalized elapsed CPU hours and ran more than 1.2 million jobs during Y1; total utilization 90.71%
  - SEEGRID VOs (seegrid, seismo, environ and meteo)
  - National VOs (AEGIS VO, TRGRID VOs)
  - Regional VOs (see)
- Charts: Number of jobs; Normalized CPU time (hours)

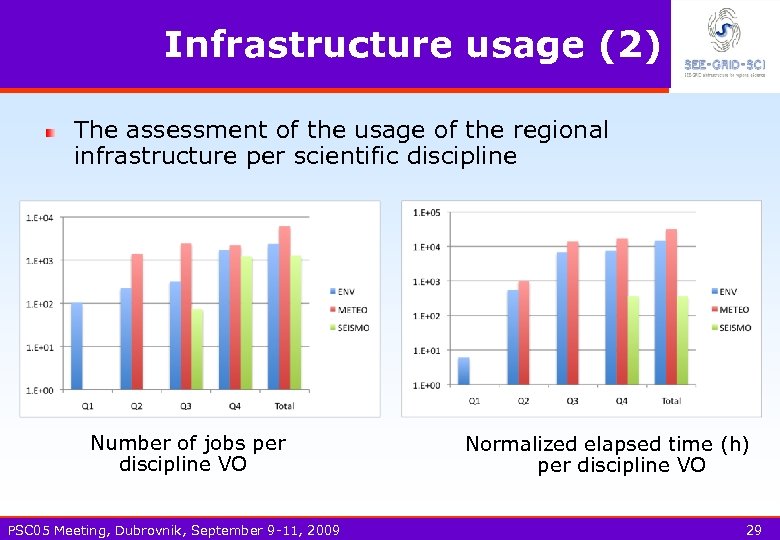

Infrastructure usage (2)

- The assessment of the usage of the regional infrastructure per scientific discipline
- Charts: Number of jobs per discipline VO; Normalized elapsed time (h) per discipline VO

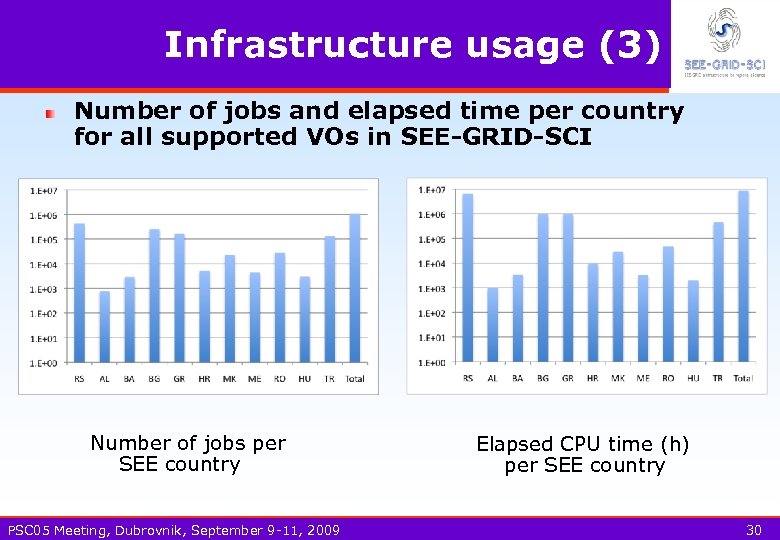

Infrastructure usage (3)

- Number of jobs and elapsed time per country for all supported VOs in SEE-GRID-SCI
- Charts: Number of jobs per SEE country; Elapsed CPU time (h) per SEE country

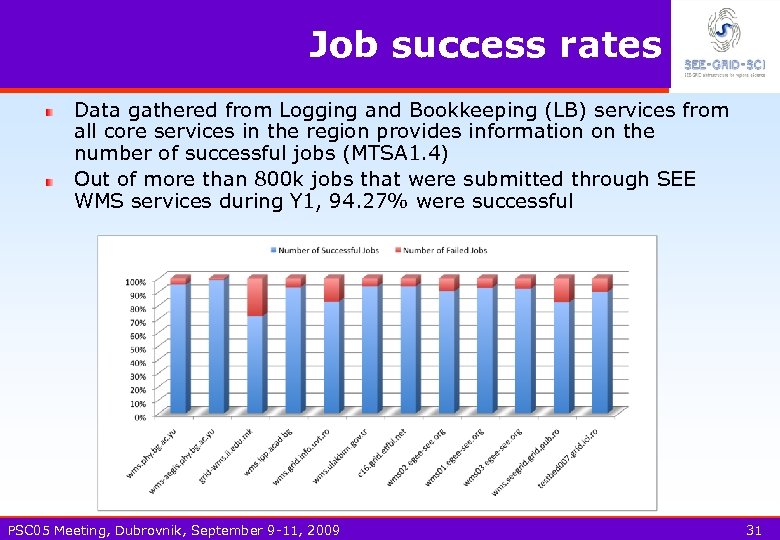

Job success rates

- Data gathered from the Logging and Bookkeeping (LB) services of all core services in the region provide information on the number of successful jobs (MTSA1.4)
- Out of more than 800k jobs that were submitted through SEE WMS services during Y1, 94.27% were successful
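The reported rate can be set against the MTSA1.4 targets from the objectives slide (M01 >= 50%, M12 >= 55%, M24 >= 60%). The sketch below assumes the metric is simply successful jobs divided by total jobs. The function names are illustrative and the job counts in the usage line are made up to reproduce the quoted percentage.

```python
# MTSA1.4 targets: minimum percentage of successful jobs at each project month.
MTSA14_TARGETS = {"M01": 50.0, "M12": 55.0, "M24": 60.0}


def success_rate(successful: int, total: int) -> float:
    """Percentage of successful jobs; assumes rate = successful / total."""
    if total <= 0:
        raise ValueError("total number of jobs must be positive")
    return 100.0 * successful / total


def targets_met(rate_pct: float) -> dict:
    """Map each milestone month to whether the given rate meets its target."""
    return {month: rate_pct >= target for month, target in MTSA14_TARGETS.items()}


y1_rate = 94.27  # Y1 success rate quoted on the slide
met = targets_met(y1_rate)
print(met)

# Illustrative counts only: 9427 successes out of 10000 jobs gives the same rate.
example_rate = success_rate(9427, 10000)
```

With the quoted 94.27%, the Y1 result is above all three targets, including the one set for M24.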

Network link to Moldova status

- Two stages
  - Upgrade of the existing radio-relay link Chisinau-Iasi: this is the currently implemented approach, which restricts prospective growth; the operational connection to RoEduNet has 2 x 155 Mbps capacity
  - Provision of the direct Dark Fiber link Chisinau-Iasi; contract signed in February 2009
- In November 2008 an updated proposal was submitted to NATO for co-funding of the Dark Fiber link
  - NATO Science for Peace Committee authorities positively evaluated the updated proposal, and in February 2009 the NATO project co-director received confirmation that the revised proposal was accepted

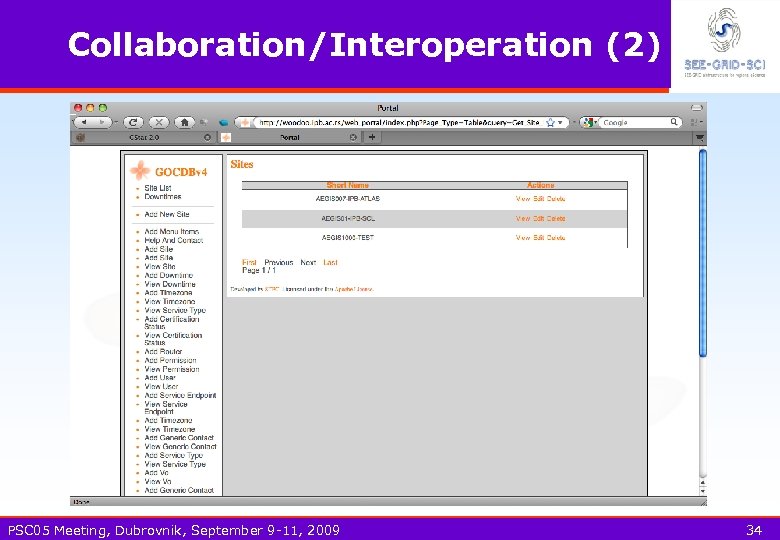

Collaboration/Interoperation (1)

- NA4: support of discipline VOs (core services and resources) and apps
- JRA1: developing and deploying OTs
- NA3: providing training infrastructure (core services and resources)
- NA2: inputs to/implementation of policy documents
- Infrastructure fully interoperable with EGEE and a number of other regional Grid infrastructures
- Active participation in EGEE Operations Automation Team (OAT)
  - Joint work and development of Nagios solutions for Grid resources
  - Interoperation of HGSM and GOCDB; regionalization and testing of GOCDB
  - Testing of GStat 2.0
- Grid-Operator-on-Duty experiences communicated to EGEE
  - Basis for regionalization of COD
- Sharing of tools with other projects/infrastructures: WMSMON, WatG
- Collaboration with the EDGeS project on establishing interoperability with infrastructures based on desktop Grids

Collaboration/Interoperation (2)

Collaboration/Interoperation (3)

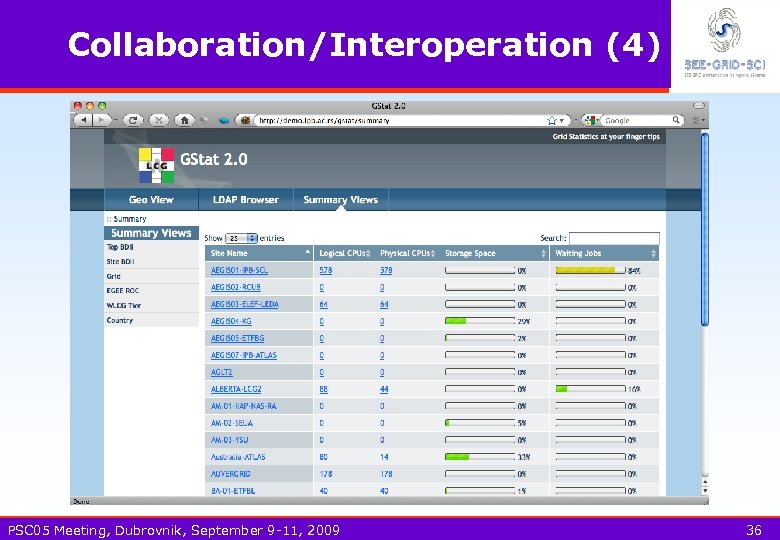

Collaboration/Interoperation (4)

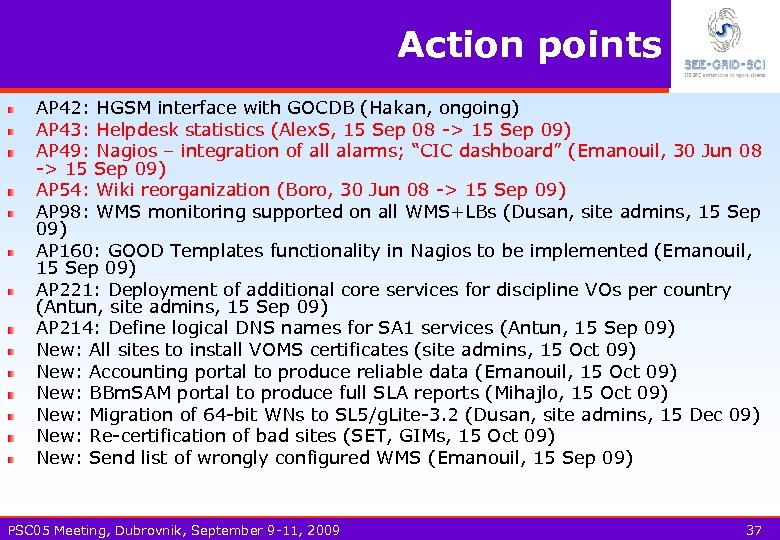

Action points

- AP 42: HGSM interface with GOCDB (Hakan, ongoing)
- AP 43: Helpdesk statistics (AlexS, 15 Sep 08 -> 15 Sep 09)
- AP 49: Nagios – integration of all alarms; "CIC dashboard" (Emanouil, 30 Jun 08 -> 15 Sep 09)
- AP 54: Wiki reorganization (Boro, 30 Jun 08 -> 15 Sep 09)
- AP 98: WMS monitoring supported on all WMS+LBs (Dusan, site admins, 15 Sep 09)
- AP 160: GOOD Templates functionality in Nagios to be implemented (Emanouil, 15 Sep 09)
- AP 221: Deployment of additional core services for discipline VOs per country (Antun, site admins, 15 Sep 09)
- AP 214: Define logical DNS names for SA1 services (Antun, 15 Sep 09)
- New: All sites to install VOMS certificates (site admins, 15 Oct 09)
- New: Accounting portal to produce reliable data (Emanouil, 15 Oct 09)
- New: BBmSAM portal to produce full SLA reports (Mihajlo, 15 Oct 09)
- New: Migration of 64-bit WNs to SL5/gLite-3.2 (Dusan, site admins, 15 Dec 09)
- New: Re-certification of bad sites (SET, GIMs, 15 Oct 09)
- New: Send list of wrongly configured WMS (Emanouil, 15 Sep 09)