c31854d1830bd85220559830b39cccef.ppt

- Number of slides: 33

Search Applications: Machine Translation

- Next time: Constraint Satisfaction
- Reading for today: See “Machine Translation Paper” under links
- Reading for next time: Chapter 5

Homework Questions?

Agenda

- Introduction to machine translation
  - Statistical approaches
  - Use of parallel data
  - Alignment
- What functions must be optimized?
- Comparison of A* and greedy local search (hill climbing) algorithms for translation
  - How they work
  - Their performance

Approach to Statistical MT

- Translate from past experience
- Observe how words, phrases, and sentences are translated
- Given new sentences in the source language, choose the most probable translation in the target language
- Data: large corpus of parallel text
  - E.g., Canadian Parliamentary proceedings

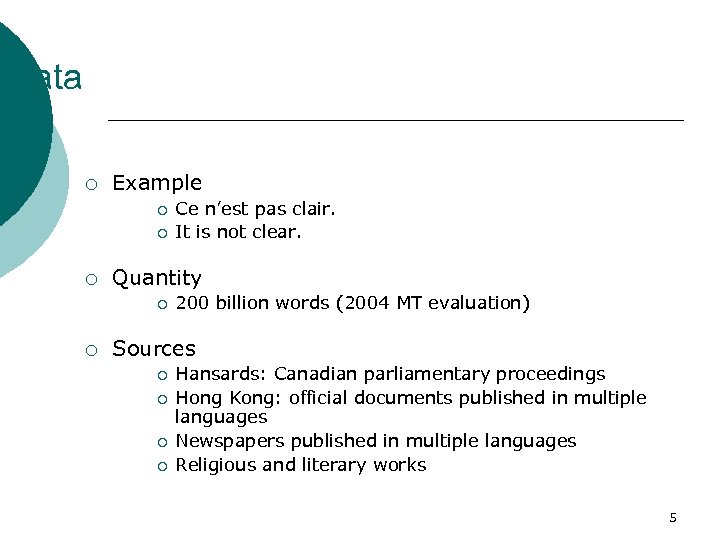

Data

- Example
  - Ce n’est pas clair.
  - It is not clear.
- Quantity
  - 200 billion words (2004 MT evaluation)
- Sources
  - Hansards: Canadian parliamentary proceedings
  - Hong Kong: official documents published in multiple languages
  - Newspapers published in multiple languages
  - Religious and literary works

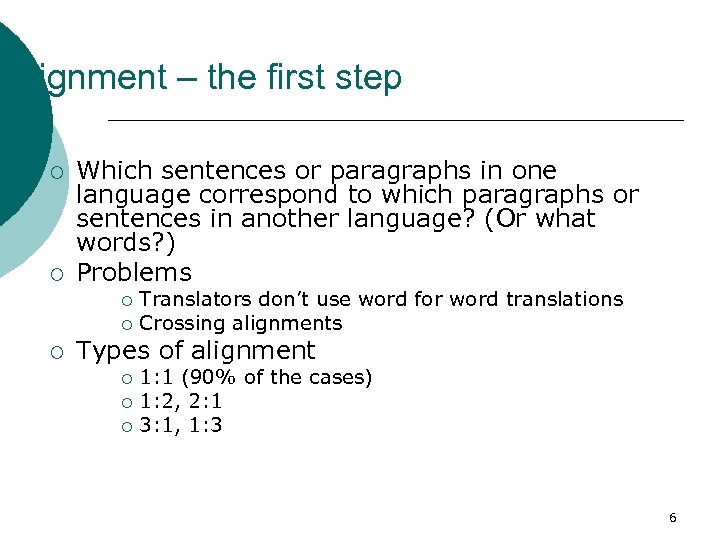

Alignment: the first step

- Which sentences or paragraphs in one language correspond to which paragraphs or sentences in another language? (Or what words?)
- Problems
  - Translators don’t use word-for-word translations
  - Crossing alignments
- Types of alignment
  - 1:1 (90% of the cases)
  - 1:2, 2:1
  - 3:1, 1:3

![An example of 2:2 alignment](https://present5.com/presentation/c31854d1830bd85220559830b39cccef/image-7.jpg)

An example of 2:2 alignment

- French: Quant aux (les) eaux minérales et aux limonades, elles rencontrent toujours plus d’adeptes. En effet notre sondage fait ressortir des ventes nettement supérieures à celles de 1987, pour les boissons à base de cola notamment.
- Word-for-word gloss: With regard to the mineral waters and the lemonades (soft drinks), they encounter still more users. Indeed our survey makes stand out the sales clearly superior to those in 1987 for cola-based drinks especially.
- English translation: According to our survey, 1988 sales of mineral water and soft drinks were much higher than in 1987, reflecting the growing popularity of these products. Cola drink manufacturers in particular achieved above average growth rates.

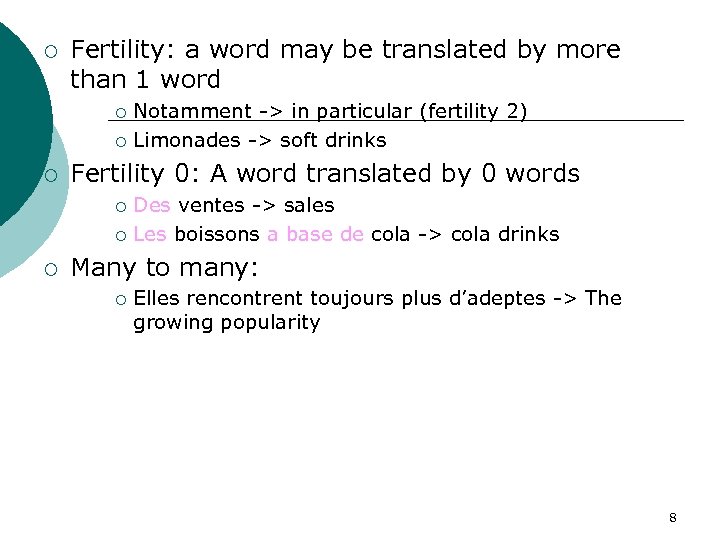

- Fertility: a word may be translated by more than 1 word
  - Notamment -> in particular (fertility 2)
  - Limonades -> soft drinks
- Fertility 0: a word translated by 0 words
  - Des ventes -> sales
  - Les boissons à base de cola -> cola drinks
- Many to many:
  - Elles rencontrent toujours plus d’adeptes -> The growing popularity
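One way to make fertility concrete is to store a word alignment as a mapping from each source word to the target words it produces. The sketch below is only illustrative: the dictionary layout and the choice to align "des" to nothing are assumptions made here, based on the "Des ventes -> sales" example.

```python
# Hypothetical word alignment: each French word maps to the English words it generates.
alignment = {
    "notamment": ["in", "particular"],   # fertility 2
    "limonades": ["soft", "drinks"],     # fertility 2
    "des": [],                           # fertility 0 (assumed: "des ventes" -> "sales")
    "ventes": ["sales"],                 # fertility 1
}

# Fertility of a source word = number of target words aligned to it.
fertility = {source: len(targets) for source, targets in alignment.items()}

for source, count in fertility.items():
    print(f"{source}: fertility {count}")
```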

Bead for sentence alignment

- A group of sentences in one language that corresponds in content to some group of sentences in the other language
  - Either group can be empty
- How much content has to overlap between sentences to count it as alignment?
  - An overlapping clause can be sufficient

Methods for alignment

- Length based
- Offset alignment
- Word based
- Anchors (e.g., cognates)

Word Based Alignment

- Assume the first and last sentences of the texts align (anchors).
- Then, until most sentences are aligned:
  - Form an envelope of alignments from the Cartesian product of the lists of sentences
    - Exclude alignments if they cross anchors or are too distant
  - Choose pairs of words that tend to occur in alignments
  - Find pairs of source and target sentences which contain many possible lexical correspondences
    - The most reliable pairs augment the set of anchors

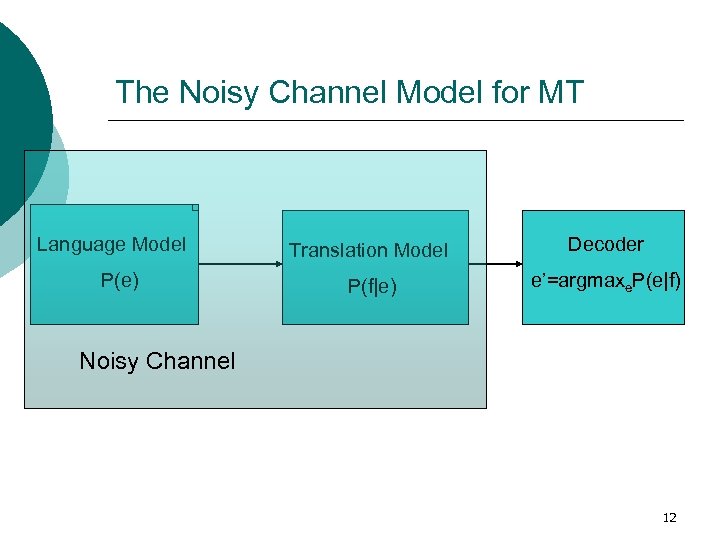

The Noisy Channel Model for MT

- Language model: $P(e)$
- Translation model (the noisy channel): $P(f \mid e)$
- Decoder: $e' = \arg\max_e P(e \mid f)$
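The decoder objective combines the two models through Bayes' rule. A short derivation, using $e$ for the English sentence and $f$ for the French sentence as on the slide:

```latex
\begin{aligned}
e' &= \arg\max_{e} P(e \mid f) \\
   &= \arg\max_{e} \frac{P(e)\, P(f \mid e)}{P(f)} \\
   &= \arg\max_{e} P(e)\, P(f \mid e)
\end{aligned}
```

The last step holds because $P(f)$ does not depend on $e$, so dropping it does not change which $e$ maximizes the expression.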

The problem

- Language model constructed from a large corpus of English
  - Bigram model: probability of word pairs
  - Trigram model: probability of 3 words in a row
  - From these, compute sentence probability
- Translation model can be derived from alignment
  - For any pair of English/French words, what is the probability that the pair is a translation?
- Decoding is the problem: given an unseen French sentence, how do we determine the translation?

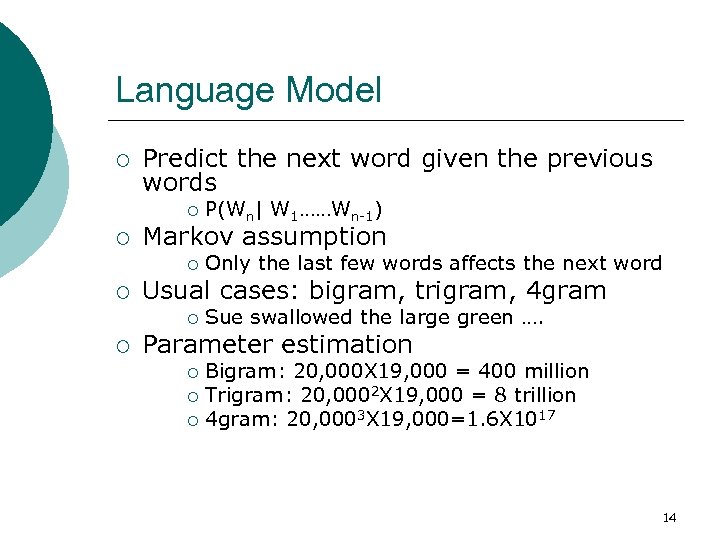

Language Model

- Predict the next word given the previous words
  - $P(w_n \mid w_1, \dots, w_{n-1})$
  - Sue swallowed the large green ...
- Markov assumption
  - Only the last few words affect the next word
  - Usual cases: bigram, trigram, 4-gram
- Parameter estimation
  - Bigram: $20{,}000 \times 19{,}999 \approx 400$ million
  - Trigram: $20{,}000^2 \times 19{,}999 \approx 8$ trillion
  - 4-gram: $20{,}000^3 \times 19{,}999 \approx 1.6 \times 10^{17}$
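A minimal sketch of a bigram language model may help here. It assumes maximum-likelihood estimates with no smoothing, sentence boundary markers `<s>` and `</s>`, and a two-sentence toy corpus; all of these are illustrative choices, not part of the lecture. The helper `free_parameters` assumes a vocabulary of V words gives V^(n-1) × (V-1) free parameters for an n-gram model.

```python
from collections import Counter

def train_bigram(sentences):
    """Return maximum-likelihood bigram probabilities P(w2 | w1)."""
    history_counts = Counter()
    bigram_counts = Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for w1, w2 in zip(tokens, tokens[1:]):
            history_counts[w1] += 1
            bigram_counts[(w1, w2)] += 1
    return {pair: count / history_counts[pair[0]]
            for pair, count in bigram_counts.items()}

def sentence_probability(model, sentence):
    """Multiply bigram probabilities; unseen bigrams get probability 0 (no smoothing)."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for pair in zip(tokens, tokens[1:]):
        prob *= model.get(pair, 0.0)
    return prob

def free_parameters(vocab_size, n):
    """Free parameters of an n-gram model: V^(n-1) histories, V-1 free values each."""
    return vocab_size ** (n - 1) * (vocab_size - 1)

toy_corpus = ["it is not clear", "it is clear"]
model = train_bigram(toy_corpus)

print(sentence_probability(model, "it is clear"))
print(sentence_probability(model, "clear is it"))
print(free_parameters(20_000, 2))
```

The zero probability for the unseen word order shows why real systems smooth their estimates, and the parameter count shows why models beyond trigrams are hard to estimate from limited data.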

Translation Model

- For a particular word alignment, multiply the m translation probabilities:
  - P(Jean aime Marie | John loves Mary)
  - P(Jean|John) × P(aime|loves) × P(Marie|Mary)
- Then sum the probabilities of all alignments
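The two steps (multiply along one alignment, then sum over all alignments) can be sketched as follows. This is a simplified illustration, not the model from the reading: the probability table is made up, unseen pairs get an assumed floor of 0.01, and the normalization constant and NULL word that a full model such as IBM Model 1 includes are left out.

```python
from itertools import product
from math import isclose, prod

# Hypothetical word translation probabilities t(f | e); the numbers are made up.
T = {("Jean", "John"): 0.9, ("aime", "loves"): 0.8, ("Marie", "Mary"): 0.9}

def t_prob(f_word, e_word):
    """Look up t(f | e), with a small assumed floor for unseen pairs."""
    return T.get((f_word, e_word), 0.01)

def alignment_score(french, english, alignment):
    """Product of the m translation probabilities for one alignment.

    alignment[j] is the index of the English word that generates french[j].
    """
    return prod(t_prob(f, english[i]) for f, i in zip(french, alignment))

def sum_over_alignments(french, english):
    """Sum the scores of every possible alignment (exponentially many)."""
    positions = range(len(english))
    return sum(alignment_score(french, english, a)
               for a in product(positions, repeat=len(french)))

def factored_sum(french, english):
    """Same quantity, computed as a product of per-word sums."""
    return prod(sum(t_prob(f, e) for e in english) for f in french)

french = ["Jean", "aime", "Marie"]
english = ["John", "loves", "Mary"]
print(alignment_score(french, english, (0, 1, 2)))
print(sum_over_alignments(french, english), factored_sum(french, english))
```

Because each French word picks its English source independently in this simplified setting, the exponential sum factors into a product of small sums, which is why the two printed totals agree.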

Decoding is NP-complete

- When considering any word reordering
  - Swapped words
  - Words with fertility > n (insertions)
  - Words with fertility 0 (deletions)
- Usual strategy: examine a subset of likely possibilities and choose from that
- Search error: decoder returns e’ but there exists some e s.t. P(e|f) > P(e’|f)

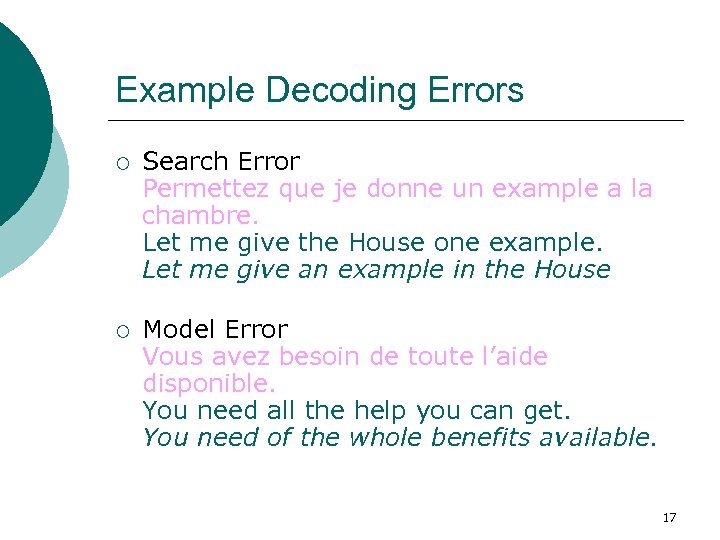

Example Decoding Errors

- Search Error
  - Permettez que je donne un exemple à la chambre.
  - Let me give the House one example.
  - Let me give an example in the House
- Model Error
  - Vous avez besoin de toute l’aide disponible.
  - You need all the help you can get.
  - You need of the whole benefits available.

Search

- Traditional decoding method: stack decoder
  - A* algorithm
  - Deeply explore each hypothesis
- Fast greedy algorithm
  - Much faster than A*
  - How often does it fail?
- Integer Programming Method
  - Transform to Traveling Salesman (see paper)
  - Very slow
  - Guaranteed to find the best choice

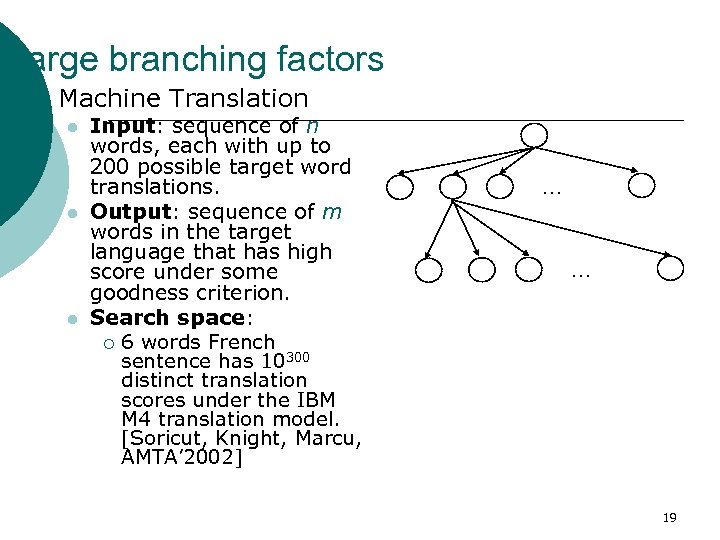

Large branching factors

- Machine Translation
  - Input: sequence of n words, each with up to 200 possible target word translations.
  - Output: sequence of m words in the target language that has a high score under some goodness criterion.
  - Search space: a 6-word French sentence has $10^{300}$ distinct translation scores under the IBM Model 4 translation model. [Soricut, Knight, Marcu, AMTA 2002]

Stack decoder: A*

- Initialize the stack with an empty hypothesis
- Loop
  - Pop h, the best hypothesis, off the stack
  - If h is a complete sentence, output h and terminate
  - For each possible next word w, extend h by adding w and push the resulting hypothesis onto the stack
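The loop above can be sketched with a priority queue. This is a deliberately reduced version under several assumptions made here for illustration: translation is monotone (left to right), every source word has fertility 1, the heuristic is zero (so this is uniform-cost search, a special case of A*), and the option and bigram tables are made up.

```python
import heapq
import math

def stack_decode(source, options, bigram, unseen=1e-4):
    """Best-first search over monotone, word-by-word translations.

    Cost is the negative log probability, so the cheapest hypothesis is the
    most probable one and costs never decrease as words are added.
    """
    heap = [(0.0, ())]                      # (cost, target words so far)
    while heap:
        cost, hyp = heapq.heappop(heap)     # pop the best hypothesis
        if len(hyp) == len(source):         # complete sentence: output it
            return list(hyp), math.exp(-cost)
        src_word = source[len(hyp)]
        prev = hyp[-1] if hyp else "<s>"
        for tgt_word, t_prob in options[src_word].items():
            lm_prob = bigram.get((prev, tgt_word), unseen)
            step_cost = -math.log(t_prob * lm_prob)
            heapq.heappush(heap, (cost + step_cost, hyp + (tgt_word,)))
    return None, 0.0

# Hypothetical translation options t(e | f-word) and bigram LM probabilities.
options = {
    "Jean": {"John": 0.9, "Jean": 0.1},
    "aime": {"loves": 0.6, "likes": 0.4},
    "Marie": {"Mary": 0.9, "Marie": 0.1},
}
bigram = {
    ("<s>", "John"): 0.5, ("John", "loves"): 0.5, ("loves", "Mary"): 0.5,
    ("John", "likes"): 0.2, ("likes", "Mary"): 0.3,
}

translation, probability = stack_decode(["Jean", "aime", "Marie"], options, bigram)
print(translation, probability)
```

With a zero heuristic the first complete hypothesis popped is optimal for this toy model, but many short, cheap hypotheses are expanded along the way; a real stack decoder has to handle reordering and fertility as well.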

Complications

- It’s not a simple left-to-right translation
- Because we multiply probabilities as we add words, shorter hypotheses will always win
  - Use multiple stacks, one for each length
- Given fertility possibilities, when we add a new target word for an input source word, how many do we add?
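The length bias and the multiple-stack remedy can be seen in a few lines. The hypotheses and their probabilities below are invented for illustration; the point is only that a product of probabilities shrinks with every added word, so hypotheses are compared fairly only against others of the same length.

```python
from collections import defaultdict

# Hypothetical partial translations with made-up probabilities.
hypotheses = [
    (("let",), 0.20),
    (("give",), 0.05),
    (("let", "me"), 0.08),
    (("let", "me", "give"), 0.03),
]

# One shared stack: the shortest hypothesis has the highest probability.
best_single = max(hypotheses, key=lambda h: h[1])

# One stack per length: each hypothesis competes only with peers of equal length.
stacks = defaultdict(list)
for words, prob in hypotheses:
    stacks[len(words)].append((words, prob))
best_per_length = {length: max(stack, key=lambda h: h[1])
                   for length, stack in stacks.items()}

print(best_single)
print(best_per_length)
```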

Example

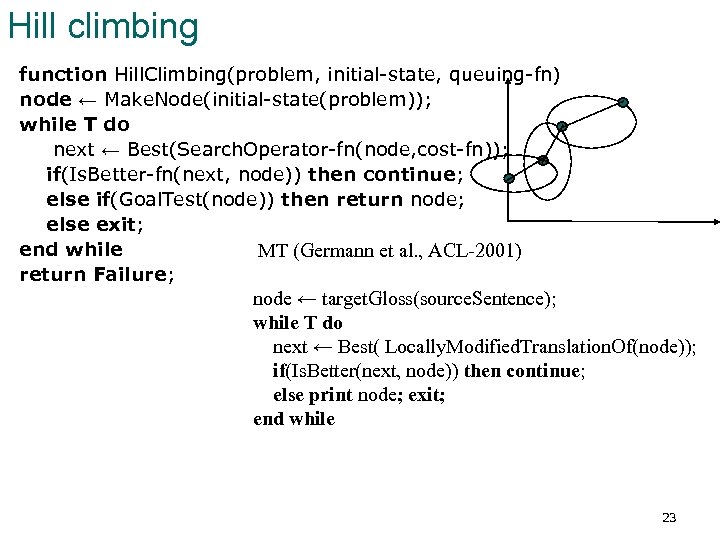

Hill climbing

    function HillClimbing(problem, initial-state, queuing-fn)
      node ← MakeNode(initial-state(problem));
      while T do
        next ← Best(SearchOperator-fn(node, cost-fn));
        if (IsBetter-fn(next, node)) then continue;
        else if (GoalTest(node)) then return node;
        else exit;
      end while
      return Failure;

MT (Germann et al., ACL-2001)

    node ← targetGloss(sourceSentence);
    while T do
      next ← Best(LocallyModifiedTranslationOf(node));
      if (IsBetter(next, node)) then continue;
      else print node; exit;
    end while
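A runnable sketch of the same greedy loop, with the move to the better neighbor written out explicitly. Everything concrete here is an assumption for illustration: the neighborhood is "swap two adjacent words" rather than the operators from the paper, and the score simply counts bigrams from a small hand-picked set as a stand-in for the real model probability.

```python
def hill_climb(initial, neighbors, score):
    """Greedy local search: move to the best neighbor while it improves the score."""
    node = initial
    while True:
        best = max(neighbors(node), key=score, default=node)
        if score(best) > score(node):
            node = best       # accept the improving move and keep climbing
        else:
            return node       # no neighbor is better: a (possibly local) maximum

# Toy stand-in for the model score: number of "good" bigrams in the sentence.
GOOD_BIGRAMS = {("let", "me"), ("me", "give"), ("give", "an"), ("an", "example")}

def toy_score(words):
    return sum(pair in GOOD_BIGRAMS for pair in zip(words, words[1:]))

def adjacent_swaps(words):
    """All sentences reachable by swapping one pair of adjacent words."""
    result = []
    for i in range(len(words) - 1):
        swapped = list(words)
        swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
        result.append(tuple(swapped))
    return result

reached_goal = hill_climb(("let", "me", "give", "example", "an"), adjacent_swaps, toy_score)
stuck = hill_climb(("an", "example", "let", "me", "give"), adjacent_swaps, toy_score)
print(reached_goal, toy_score(reached_goal))
print(stuck, toy_score(stuck))
```

The second start point is already a local maximum: every adjacent swap lowers the score, so the search stops at score 3 even though an ordering with score 4 exists. That is the kind of failure the question "How often does it fail?" refers to.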

Types of changes

- Translate one or two words (j1, e1, j2, e2)
- Translate and insert (j, e1, e2)
- Remove word of fertility 0 (i)
- Swap segments (i1, i2, j1, j2)
- Join words (i1, i2)

Example

- Total of 77,421 possible translations attempted

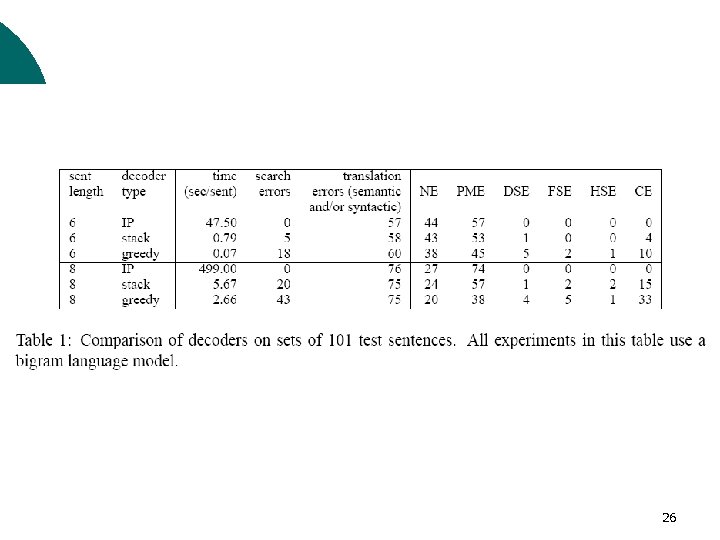

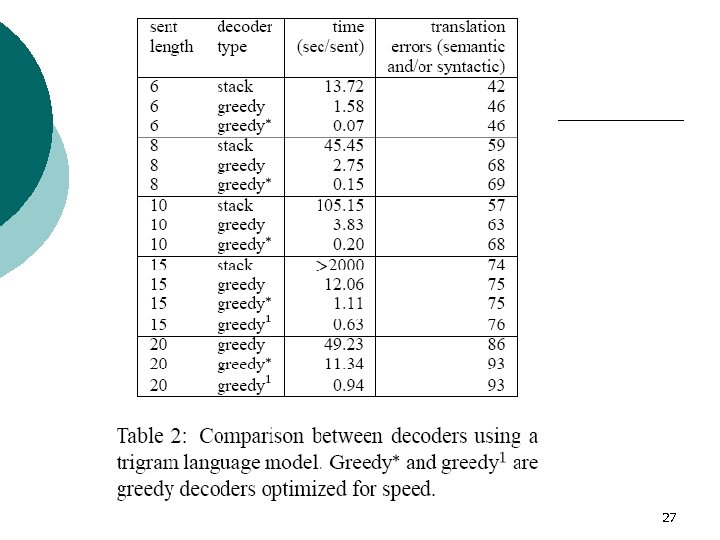

How to search better?

- MakeNode(initial-state(problem))
- RemoveFront(Q)
- SearchOperator-fn(node, cost-fn);
- queuing-fn(problem, Q, (Next, Cost));

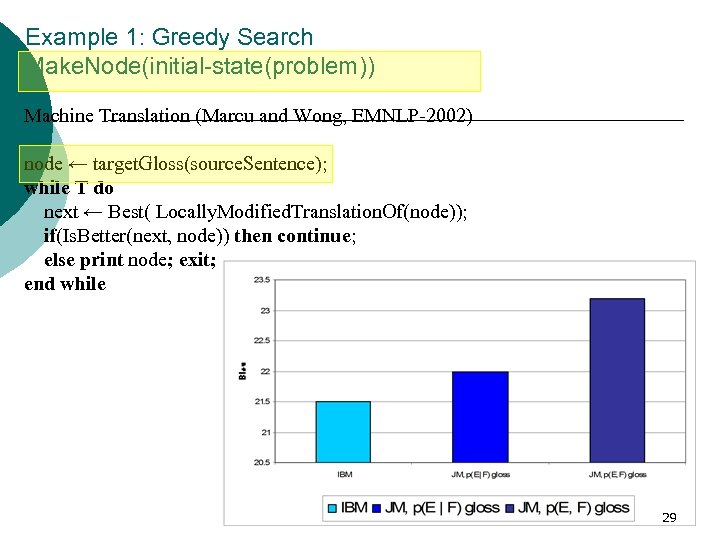

Example 1: Greedy Search

MakeNode(initial-state(problem))

Machine Translation (Marcu and Wong, EMNLP-2002)

    node ← targetGloss(sourceSentence);
    while T do
      next ← Best(LocallyModifiedTranslationOf(node));
      if (IsBetter(next, node)) then continue;
      else print node; exit;
    end while

Climbing the wrong peak

- What sentence is more grammatical? (Model validation)
  1. better bart than madonna , i say
  2. i say better than bart madonna ,
- Can you make a sentence with these words? (Model stress-testing)
  - a and apparently as be could dissimilar firing identical neural really so things thought two

Language-model stress-testing

- Input: bag of words
- Output: best sequence according to a linear combination of
  - an n-gram LM
  - a syntax-based LM (Collins, 1997)
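A toy version of this stress test: enumerate every ordering of a small bag of words, pick the one the model likes best, and compare it with the original word order. The bigram cost table, the default cost for unseen bigrams, and the `diagnose` helper are all assumptions made for this sketch; costs are treated as "lower is better", and exhaustive enumeration is only feasible for very short inputs.

```python
from itertools import permutations

# Hypothetical bigram costs (lower is better); unseen bigrams get DEFAULT_COST.
BIGRAM_COST = {("i", "say"): 1.0, ("say", "so"): 1.5, ("so", "i"): 2.0}
DEFAULT_COST = 5.0

def cost(words):
    """Total cost of a word sequence under the toy bigram model."""
    return sum(BIGRAM_COST.get(pair, DEFAULT_COST) for pair in zip(words, words[1:]))

def best_order(bag):
    """Exhaustively search all orderings of the bag for the cheapest one."""
    return min(permutations(bag), key=cost)

def diagnose(original, found):
    """Compare the search result with the original order under the model."""
    if cost(found) < cost(original):
        return "model error"    # the model prefers a different order than the original
    if cost(found) > cost(original):
        return "search error"   # a better-scoring order existed but was not found
    return "ok"

found = best_order(("say", "so", "i"))
print(found, cost(found))
print(diagnose(("so", "i", "say"), found))
```

If the searched ordering scores better than the original sentence, the search did its job and the fault lies with the model, which is the situation "climbing the wrong peak" describes.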

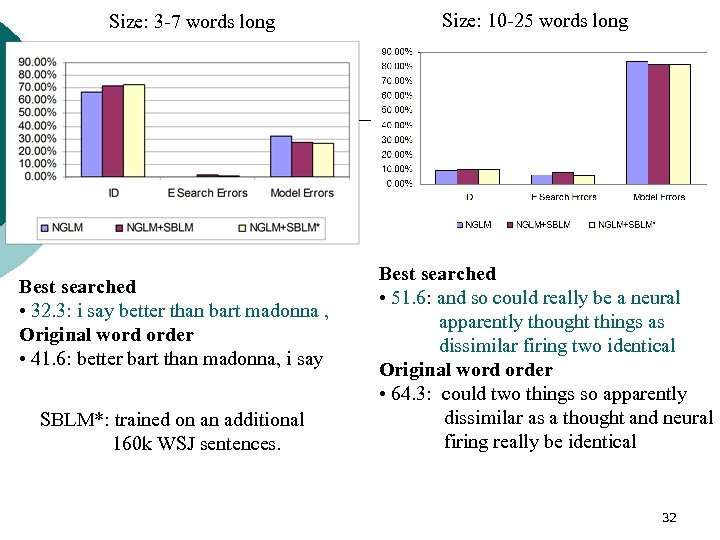

Size: 3-7 words long

- Best searched: 32.3: i say better than bart madonna ,
- Original word order: 41.6: better bart than madonna, i say

SBLM*: trained on an additional 160k WSJ sentences.

Size: 10-25 words long

- Best searched: 51.6: and so could really be a neural apparently thought things as dissimilar firing two identical
- Original word order: 64.3: could two things so apparently dissimilar as a thought and neural firing really be identical

End of Class

Questions
