- Number of slides: 38

SCRAM: Schedule Compliance Risk Assessment Methodology
CSSE ARR, March 2012, Los Angeles, USA
Adrian Pitman
Director Materiel Acquisition Improvement
Defence Materiel Organisation
Australian Dept. of Defence

What does SCRAM mean?
- Shoo! Go away!
- Secure Continuous Remote Alcohol Monitoring
  - As modeled here by Lindsay Lohan
- Schedule Compliance Risk Assessment Methodology

SCRAM: Schedule Compliance Risk Assessment Methodology
- Collaborative effort:
  - Australian Department of Defence, Defence Materiel Organisation
  - Systems and Software Quality Institute, Brisbane, Australia
  - Software Metrics Inc., Haymarket, VA

DMO SCRAM Usage
- SCRAM has been sponsored by the Australian Defence Materiel Organisation (DMO)
  - To improve our project schedule performance in response to Government concern
- DMO equips and sustains the Australian Defence Force (ADF)
  - Manages 230+ concurrent Major Capital Equipment projects and 100 Minor (<$20M) defence projects

DMO SCRAM Usage (cont.)
- SCRAM has evolved from our reviews of troubled programs:
  - Schedule is almost always the primary concern of program stakeholders
  - SCRAM is a technical Schedule Risk Analysis methodology that can identify and quantify risk to schedule compliance (to focus risk mitigation)
  - SCRAM is also used to identify the root causes of schedule slippage to allow remediation action

What SCRAM Is Not
- Not a technical assessment of design feasibility
- Not an assessment of process capability
  - However, process problems may be identified and treated as an issue if process performance is found to be contributing to schedule slippage

Topics
- Overview of SCRAM
  - SCRAM Components
    - Process Reference and Assessment Model (ISO 15504 compliant)
    - Root Cause Analysis of Schedule Slippage (RCASS) Model
    - SCRAM Assessment Process
- Experiences using SCRAM
- Benefits of Using SCRAM

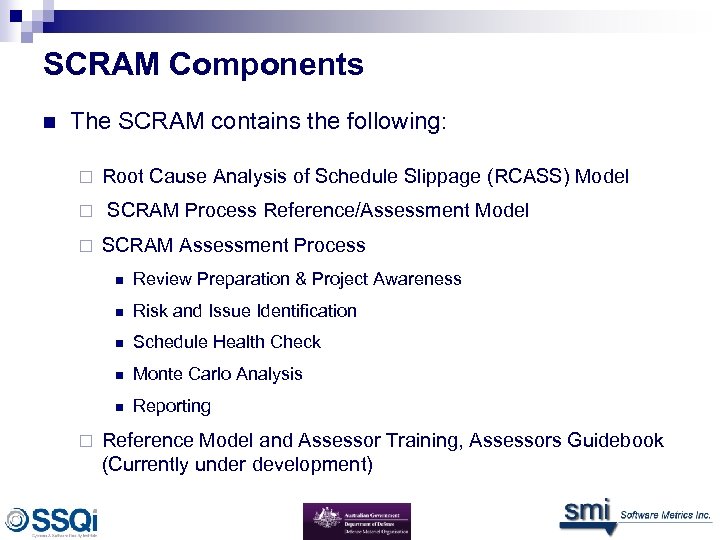

SCRAM Components
- SCRAM contains the following:
  - Root Cause Analysis of Schedule Slippage (RCASS) Model
  - SCRAM Process Reference/Assessment Model
  - SCRAM Assessment Process
    - Review Preparation & Project Awareness
    - Risk and Issue Identification
    - Schedule Health Check
    - Monte Carlo Analysis
    - Reporting
  - Reference Model and Assessor Training, Assessors Guidebook (currently under development)

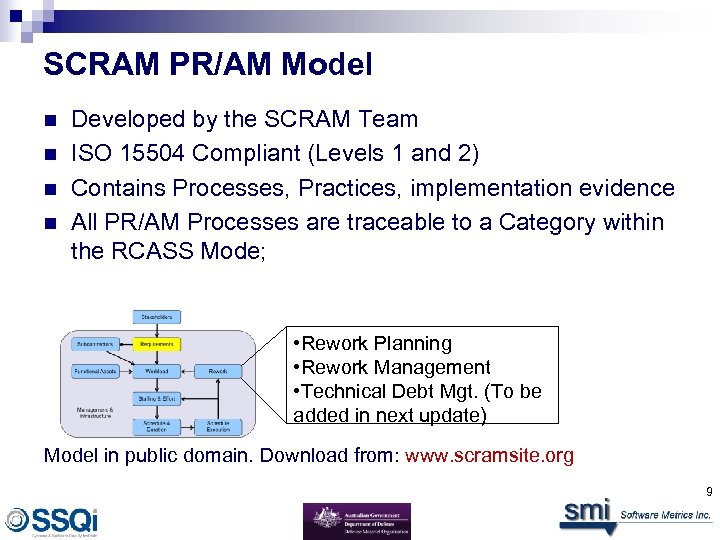

SCRAM PR/AM Model
- Developed by the SCRAM Team
- ISO 15504 compliant (Levels 1 and 2)
- Contains processes, practices, and implementation evidence
- All PR/AM processes are traceable to a category within the RCASS Model
  - Rework Planning
  - Rework Management
  - Technical Debt Mgt. (to be added in next update)
- Model in public domain. Download from: www.scramsite.org

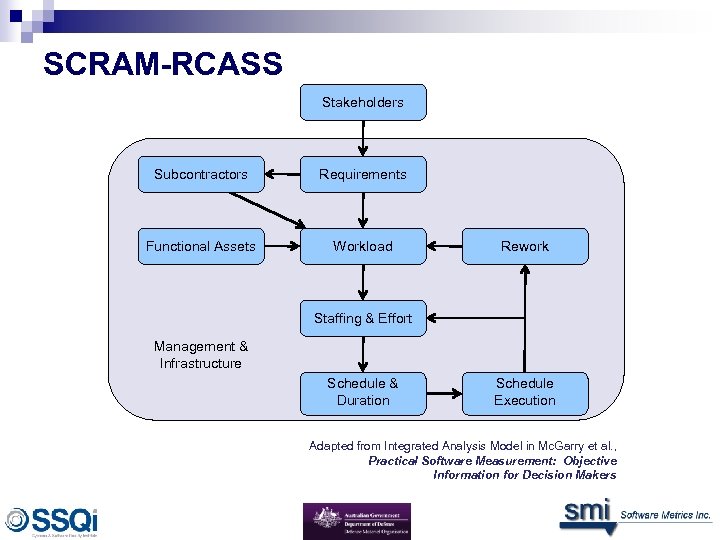

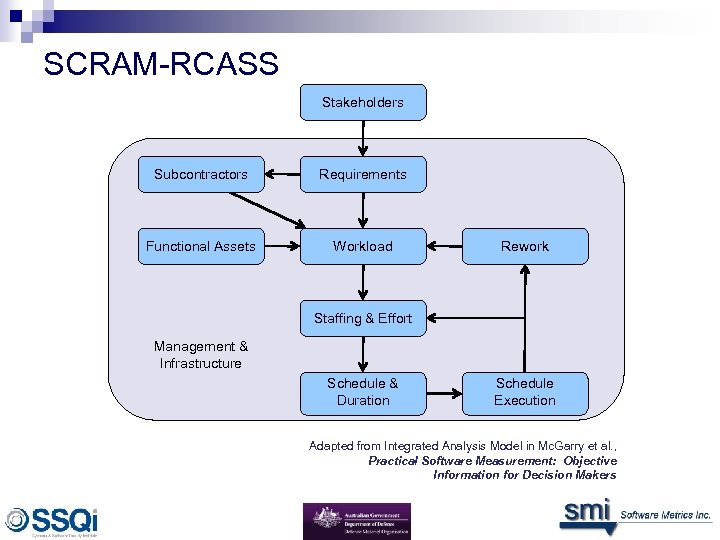

SCRAM-RCASS
[Diagram: RCASS categories: Stakeholders, Subcontractors, Requirements, Functional Assets, Workload, Rework, Staffing & Effort, Management & Infrastructure, Schedule & Duration, Schedule Execution]
Adapted from the Integrated Analysis Model in McGarry et al., Practical Software Measurement: Objective Information for Decision Makers

Root Cause Analysis of Schedule Slippage (RCASS)
- Model evolved with experience on SCRAM assessments
- Used as guidance for:
  - Questions during assessments
  - Categorizing the wealth of data and details gathered during an assessment
  - Highlighting missing information
  - Determining the root causes of slippage
  - Recommending a going-forward plan
  - Recommending measures to serve as leading indicators
    - For visibility and tracking in those areas where there are risks and problems
- Similar to the use of the Structured Analysis Model in PSM to guide categorization of issues and risks via issue identification workshops
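
As a rough illustration of using RCASS to categorize assessment data and highlight missing information, the sketch below files observations under the ten RCASS categories listed on the SCRAM-RCASS slide and reports the categories that have no evidence yet. The `Observation` record and the `categorize` function are hypothetical; SCRAM does not prescribe a data format, so treat this as a sketch under that assumption.

```python
from collections import defaultdict
from dataclasses import dataclass

# The ten RCASS categories as listed on the SCRAM-RCASS slide.
RCASS_CATEGORIES = (
    "Stakeholders", "Subcontractors", "Requirements", "Functional Assets",
    "Workload", "Rework", "Staffing & Effort", "Management & Infrastructure",
    "Schedule & Duration", "Schedule Execution",
)

@dataclass
class Observation:
    # Hypothetical record of one assessment observation.
    category: str
    text: str

def categorize(observations):
    """Group observations by RCASS category.

    Returns (groups, missing): groups maps category -> list of observation
    texts; missing lists categories with no observations so far.
    """
    groups = defaultdict(list)
    for obs in observations:
        if obs.category not in RCASS_CATEGORIES:
            raise ValueError(f"unknown RCASS category: {obs.category!r}")
        groups[obs.category].append(obs.text)
    # Empty categories point to information the assessment still has to gather.
    missing = [c for c in RCASS_CATEGORIES if c not in groups]
    return dict(groups), missing

if __name__ == "__main__":
    groups, missing = categorize([
        Observation("Workload", "Identical estimates in four software areas"),
        Observation("Rework", "No rework contingency in the schedule"),
    ])
    print(groups)
    print("No evidence yet for:", missing)
```

Keeping the category list fixed (and rejecting unknown labels) is a deliberate choice here: it makes gaps in coverage visible rather than letting ad hoc categories hide them.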

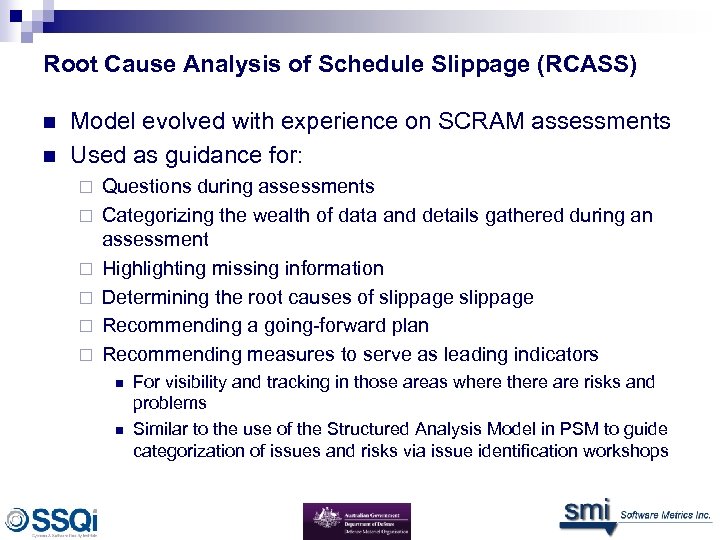

SCRAM Assessment Process
1.0 Assessment Preparation
2.0 Project Awareness
3.0 Project Risk / Issue Identification
4.0 Project Schedule Validation
5.0 Data Consolidation & Validation
6.0 Schedule Compliance Risk Analysis (output: Schedule Compliance Risk Quantified)
7.0 Observation & Reporting

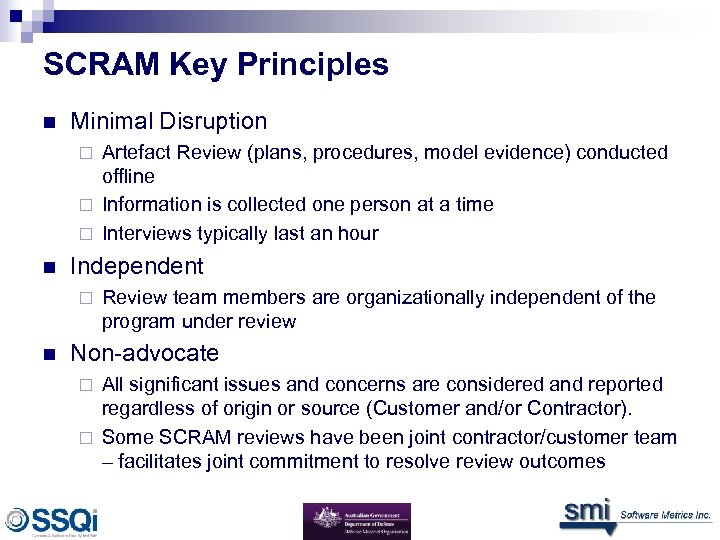

SCRAM Key Principles
- Minimal disruption
  - Artefact review (plans, procedures, model evidence) conducted offline
  - Information is collected one person at a time
  - Interviews typically last an hour
- Independent
  - Review team members are organizationally independent of the program under review
- Non-advocate
  - All significant issues and concerns are considered and reported regardless of origin or source (Customer and/or Contractor)
  - Some SCRAM reviews have been conducted by a joint contractor/customer team, which facilitates joint commitment to resolve review outcomes

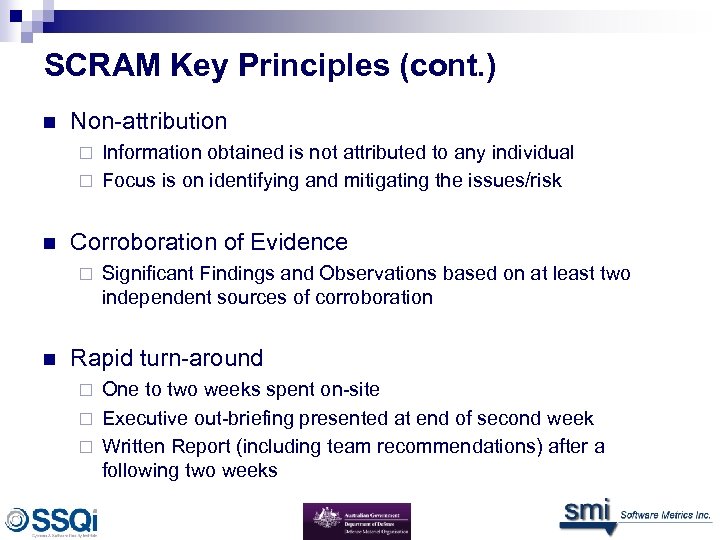

SCRAM Key Principles (cont.)
- Non-attribution
  - Information obtained is not attributed to any individual
  - Focus is on identifying and mitigating the issues/risks
- Corroboration of evidence
  - Significant findings and observations are based on at least two independent sources of corroboration
- Rapid turn-around
  - One to two weeks spent on-site
  - Executive out-briefing presented at the end of the second week
  - Written report (including team recommendations) delivered two weeks later
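
The corroboration principle reduces to a simple rule: a finding is significant only when at least two independent sources support it. The sketch below encodes that rule; the `Finding` class is hypothetical, and it assumes (a simplification) that two sources are independent whenever their identifiers differ. Using anonymised source codes rather than names also keeps the record consistent with the non-attribution principle.

```python
from dataclasses import dataclass, field

# SCRAM principle: significant findings need at least two independent sources.
MIN_INDEPENDENT_SOURCES = 2

@dataclass
class Finding:
    statement: str
    # Source identifiers, e.g. an anonymised interview code or an artefact name.
    # Simplifying assumption: distinct identifiers count as independent sources.
    sources: set = field(default_factory=set)

    def add_source(self, source_id: str) -> None:
        self.sources.add(source_id)  # a set ignores repeat mentions by one source

    def is_corroborated(self) -> bool:
        return len(self.sources) >= MIN_INDEPENDENT_SOURCES

def significant_findings(findings):
    """Return only the findings that meet the corroboration threshold."""
    return [f for f in findings if f.is_corroborated()]

if __name__ == "__main__":
    f = Finding("Schedule not available to program staff")
    f.add_source("interview-07")
    f.add_source("interview-07")  # same source again: still one source
    print(f.is_corroborated())    # False
    f.add_source("artefact:schedule-access-log")
    print(f.is_corroborated())    # True
```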

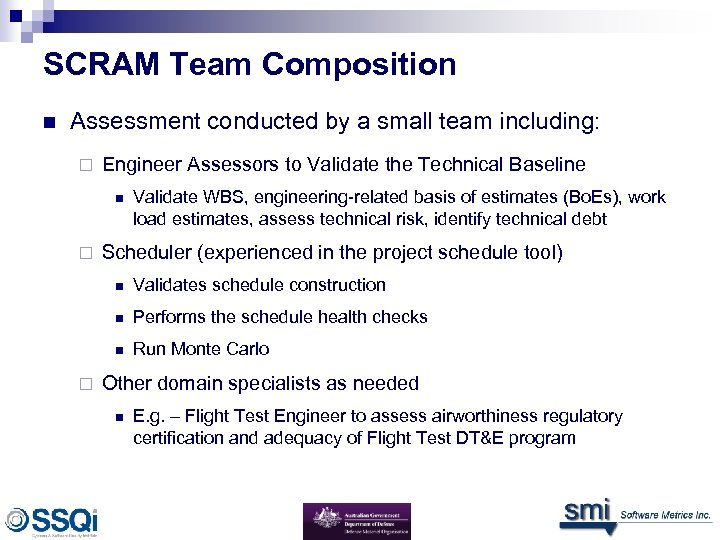

SCRAM Team Composition
- Assessment conducted by a small team including:
  - Engineer Assessors to validate the technical baseline
    - Validate the WBS, engineering-related basis of estimates (BoEs), and workload estimates; assess technical risk; identify technical debt
  - Scheduler (experienced in the project schedule tool)
    - Validates schedule construction
    - Performs the schedule health checks
    - Runs the Monte Carlo analysis
  - Other domain specialists as needed
    - E.g., a Flight Test Engineer to assess airworthiness regulatory certification and the adequacy of the Flight Test DT&E program

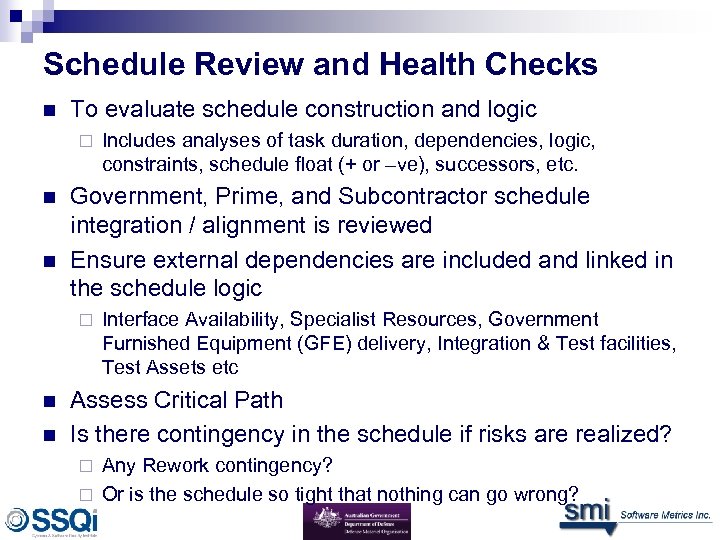

Schedule Review and Health Checks
- To evaluate schedule construction and logic
  - Includes analyses of task duration, dependencies, logic, constraints, schedule float (positive or negative), successors, etc.
- Government, Prime, and Subcontractor schedule integration/alignment is reviewed
- Ensure external dependencies are included and linked in the schedule logic
  - Interface availability, specialist resources, Government Furnished Equipment (GFE) delivery, Integration & Test facilities, test assets, etc.
- Assess the critical path
- Is there contingency in the schedule if risks are realized? Any rework contingency?
  - Or is the schedule so tight that nothing can go wrong?
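
Two of the health-check items, schedule float and the critical path, come from the standard critical path method (CPM): a forward pass gives earliest dates, a backward pass gives latest dates, and total float is late start minus early start. The minimal sketch below assumes finish-to-start links only, integer durations in working days, and an optional imposed finish date (which is how negative float arises); real schedule tools also handle calendars, lags, and other link types. The `cpm` function and the task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def cpm(durations, preds, required_finish=None):
    """Critical path method over a finish-to-start network.

    durations: {task: duration in working days}
    preds: {task: [predecessor tasks]}
    required_finish: optional imposed finish; if earlier than the computed
        finish, tasks on the driving path get negative total float.
    Returns {task: (early_start, early_finish, late_start, late_finish, total_float)}.
    """
    graph = {t: preds.get(t, []) for t in durations}
    order = list(TopologicalSorter(graph).static_order())  # CycleError on logic loops

    es, ef = {}, {}
    for t in order:  # forward pass: earliest start/finish
        es[t] = max((ef[p] for p in graph[t]), default=0)
        ef[t] = es[t] + durations[t]

    end = max(ef.values()) if required_finish is None else required_finish
    succs = {t: [] for t in durations}
    for t, ps in graph.items():
        for p in ps:
            succs[p].append(t)

    ls, lf = {}, {}
    for t in reversed(order):  # backward pass: latest start/finish
        lf[t] = min((ls[s] for s in succs[t]), default=end)
        ls[t] = lf[t] - durations[t]

    return {t: (es[t], ef[t], ls[t], lf[t], ls[t] - es[t]) for t in order}

if __name__ == "__main__":
    durations = {"design": 10, "build": 20, "test_rig": 5, "integrate": 8}
    preds = {"build": ["design"], "test_rig": ["design"], "integrate": ["build", "test_rig"]}
    for task, (es, ef, ls, lf, tf) in cpm(durations, preds, required_finish=35).items():
        flag = "critical" if tf <= 0 else ""
        print(f"{task:10} ES={es:3} EF={ef:3} float={tf:3} {flag}")
```

In this example the computed finish is day 38, so imposing day 35 leaves design, build, and integrate with -3 days of float, while the test rig keeps 12 days: the kind of "schedule so tight that nothing can go wrong" signal the health check looks for.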

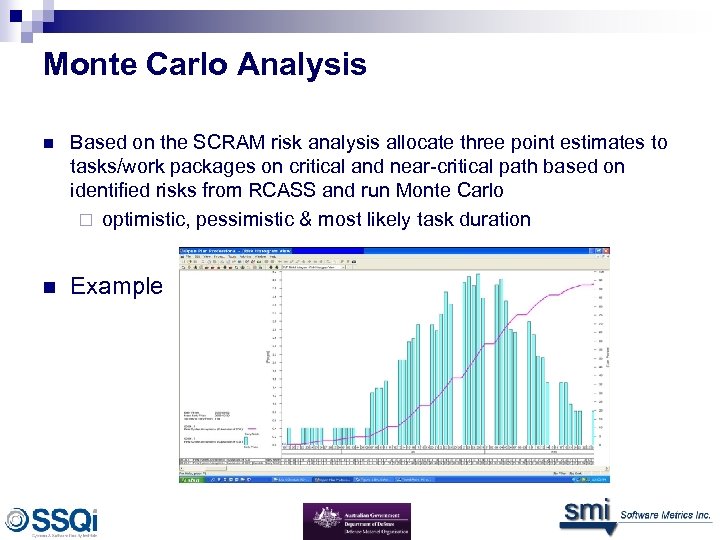

Monte Carlo Analysis
- Based on the SCRAM risk analysis, allocate three-point estimates (optimistic, most likely, and pessimistic task durations) to tasks/work packages on the critical and near-critical paths, reflecting the risks identified through RCASS, and run a Monte Carlo simulation
- Example
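
A minimal sketch of the Monte Carlo step follows. It assumes each three-point estimate is modelled as a triangular distribution (a common choice; PERT-beta is another) and that the milestone is driven by whichever of a few listed paths is longest in each iteration, which is a simplification of a full network simulation. It also reports a criticality index: the share of iterations in which each path drove the milestone, which shows when a near-critical path can take over. The `simulate` function, task names, and estimates are hypothetical.

```python
import random
from collections import Counter

def simulate(estimates, paths, iterations=10_000, seed=1):
    """Monte Carlo over three-point estimates on paths to one milestone.

    estimates: {task: (optimistic, most_likely, pessimistic)} durations.
    paths: {path_name: [task, ...]} for the critical and near-critical paths.
    Returns (milestone durations, criticality index per path).
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    durations, longest = [], Counter()
    for _ in range(iterations):
        # Sample each task once so tasks shared by several paths stay consistent.
        # random.triangular takes (low, high, mode).
        d = {t: rng.triangular(o, p, m) for t, (o, m, p) in estimates.items()}
        lengths = {name: sum(d[t] for t in tasks) for name, tasks in paths.items()}
        driver = max(lengths, key=lengths.get)
        longest[driver] += 1
        durations.append(lengths[driver])
    # Criticality index: share of iterations in which each path drove the milestone.
    criticality = {name: longest[name] / iterations for name in paths}
    return durations, criticality

if __name__ == "__main__":
    estimates = {  # (optimistic, most likely, pessimistic) in working days
        "design": (8, 10, 15),
        "build": (15, 20, 35),
        "test_rig": (4, 5, 20),
        "integrate": (6, 8, 16),
    }
    paths = {
        "critical": ["design", "build", "integrate"],
        "near-critical": ["design", "test_rig", "integrate"],
    }
    durations, crit = simulate(estimates, paths)
    print(f"most-likely plan: {10 + 20 + 8} days")
    print(f"simulated mean:   {sum(durations) / len(durations):.1f} days")
    print("criticality index:", crit)
```

Because the pessimistic values sit further from the most-likely values than the optimistic ones, the simulated mean lands well above the 38-day plan built from most-likely durations alone.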

Topics
- Overview of SCRAM
  - SCRAM Components
    - RCASS Model
    - Assessment Process
- Experiences using SCRAM
- Benefits of Using SCRAM

Some Statistics
- Number of SCRAM assessments to date
  - 10 DMO project assessments conducted using SCRAM or SCRAM principles (i.e., before SCRAM was formalised)
- Number of years
  - First review conducted in 2007
- Time on site
  - Typically two weeks: one week of interviews/data collection, one week of data consolidation, analysis, and out-brief
- Typical assessment team size
  - SCRAM Lead plus 4 or 5 assessors
- Team experience: typically 150 to 200 years of combined project experience

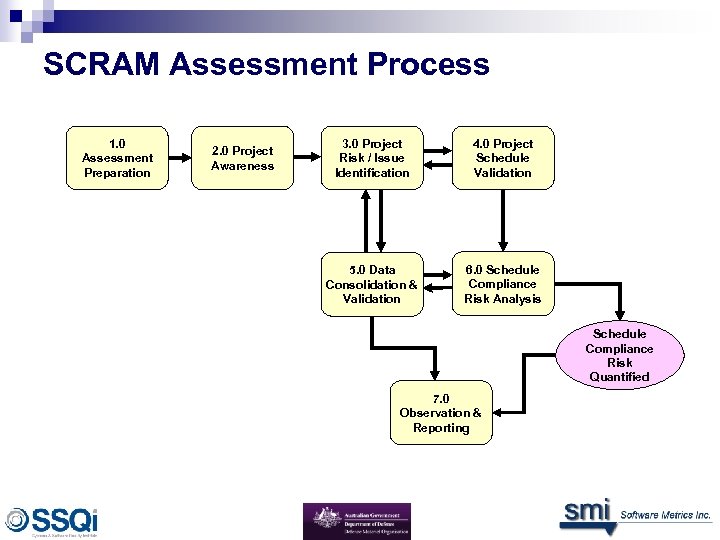

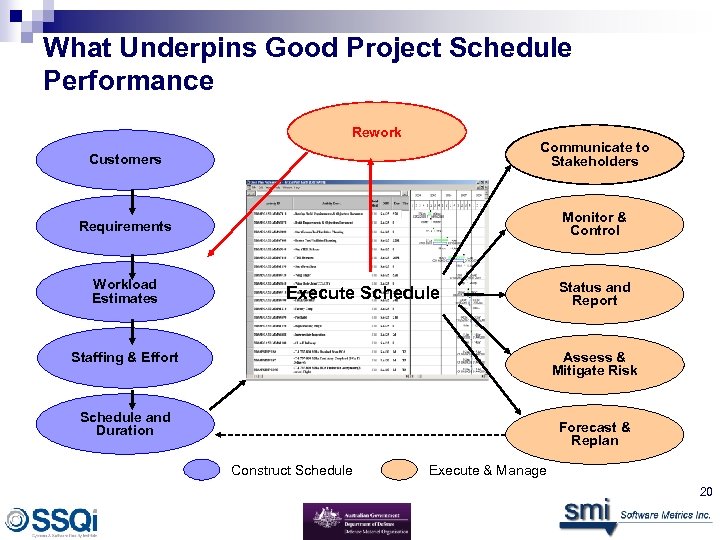

What Underpins Good Project Schedule Performance
[Diagram: Construct Schedule (Customers, Requirements, Workload Estimates, Staffing & Effort, Schedule and Duration, Rework); Execute & Manage (Communicate to Stakeholders, Monitor & Control, Baseline & Execute Schedule, Status and Report, Assess & Mitigate Risk, Forecast & Replan)]

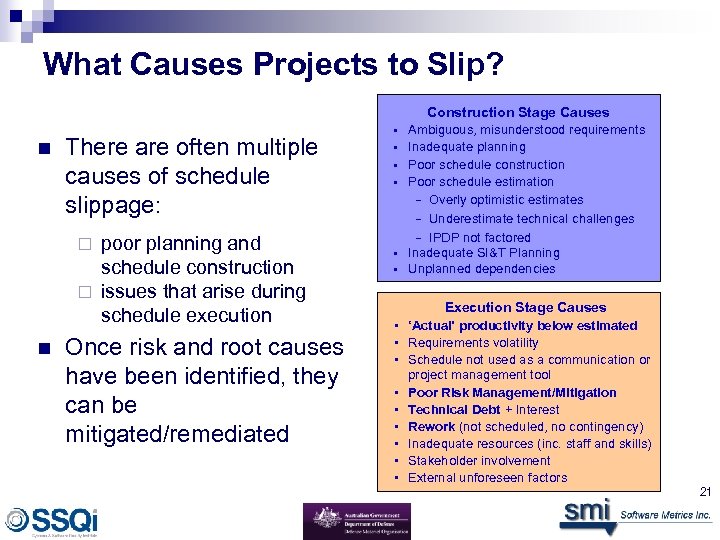

What Causes Projects to Slip?
- There are often multiple causes of schedule slippage:
  - poor planning and schedule construction
  - issues that arise during schedule execution
- Once risks and root causes have been identified, they can be mitigated/remediated
- Construction stage causes:
  - Ambiguous, misunderstood requirements
  - Inadequate planning
  - Poor schedule construction
  - Poor schedule estimation
    - Overly optimistic estimates
    - Underestimated technical challenges
    - IPDP not factored in
  - Inadequate SI&T planning
  - Unplanned dependencies
- Execution stage causes:
  - 'Actual' productivity below estimated
  - Requirements volatility
  - Schedule not used as a communication or project management tool
  - Poor risk management/mitigation
  - Technical debt + interest
  - Rework (not scheduled, no contingency)
  - Inadequate resources (incl. staff and skills)
  - Stakeholder involvement
  - External unforeseen factors

SCRAM-RCASS
[Diagram: RCASS categories: Stakeholders, Subcontractors, Requirements, Functional Assets, Workload, Rework, Staffing & Effort, Management & Infrastructure, Schedule & Duration, Schedule Execution]
Adapted from the Integrated Analysis Model in McGarry et al., Practical Software Measurement: Objective Information for Decision Makers

Stakeholders
- “Our stakeholders are like a 100-headed hydra: everyone can say ‘no’ and no one can say ‘yes’.”
- Experiences
  - A critical stakeholder (the customer) added one condition for acceptance that removed months from the development schedule
  - Failed organisational relationship: key stakeholders were not talking to each other (even though they were in the same facility)

Requirements
- What was that thing you wanted?
- Experiences
  - Misinterpretation of a communication standard led to an additional 3,000 requirements to implement the standard
  - A large ERP project had two system specifications, one with the sponsor/customer and a different specification under contract with the developer. Would this be a problem?

Subcontractor
- If the subcontractor doesn't perform, additional work is required by the Prime
- Subcontractor management is essential
- Experiences
  - Subcontractor omitting processes in order to make delivery deadlines led to integration problems with other system components
  - Prime and subcontractor schedules not aligned

Functional Assets
- Commercial-off-the-shelf (COTS) products that do not work as advertised, resulting in additional work
- Experiences
  - COTS product required a “technology refresh” because the project was years late (cost the project $8M)
  - IP issues with the COTS product resulted in additional development effort
  - Over 200 instantiations of open source code resulted in a breach of the indemnity requirements

Workload
- Overly optimistic estimates
- Inadequate BoEs
  - Source lines of code grossly underestimated
  - Contract data deliverables (CDRLs) workload often underestimated by both contractor and customer
- Experiences
  - Identical estimates in four different areas of software development (cut-and-paste estimation)
  - Re-plan based on twice the historic productivity with no basis for improvement
  - Five delivery iterations before CDRL approval
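
The re-plan experience above is simple arithmetic: duration = size ÷ (productivity × staff), so assuming twice the historic productivity quietly halves the planned duration. The sketch below compares a re-plan's assumed productivity with the demonstrated rate and flags it when the gap exceeds a tolerance. The functions, the SLOC-per-staff-month units, the 10% tolerance, and the example figures are all hypothetical assumptions, not SCRAM rules.

```python
def planned_months(size, productivity_per_staff_month, staff):
    """Duration implied by a size estimate and an assumed productivity."""
    return size / (productivity_per_staff_month * staff)

def check_replan(size, staff, historic_rate, planned_rate, tolerance=0.10):
    """Compare a re-plan's assumed productivity with demonstrated productivity.

    Returns (ratio, months_planned, months_at_historic_rate, flagged).
    The 10% tolerance is an illustrative assumption, not a SCRAM rule.
    """
    ratio = planned_rate / historic_rate
    months_planned = planned_months(size, planned_rate, staff)
    months_historic = planned_months(size, historic_rate, staff)
    # Flag re-plans that assume a productivity jump with no stated basis.
    flagged = ratio > 1 + tolerance
    return ratio, months_planned, months_historic, flagged

if __name__ == "__main__":
    # Hypothetical figures: 60,000 SLOC remaining, 10 staff,
    # demonstrated 300 SLOC/staff-month, re-plan assumes 600.
    ratio, planned, historic, flagged = check_replan(60_000, 10, 300, 600)
    print(ratio, planned, historic, flagged)  # 2.0 10.0 20.0 True
```

A flag is a prompt for a question ("what has changed to justify this rate?") rather than a verdict; the re-plan may be sound if the basis for improvement is documented in the BoE.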

Staffing & Effort
- High turnover, especially among experienced staff
- Experiences
  - Generally, most projects had a lack of human resources
  - Parent company sacking 119 associated workers
  - Remaining workers went on a “go slow”
  - Scheduling staff for 12-hour days (to recover schedule)

Schedule & Duration
- Area of primary interest
- Experiences
  - No effective integrated master schedule to provide an overall understanding of the project completion date
    - 13 subordinate schedules
  - Critical path went subterranean!

Schedule Execution
- Using the schedule to communicate project status and plans
- Experiences
  - The schedule was not available to program staff or stakeholders
  - Undergoing a schedule tool transition for approx. 2 years

Rework
- Often underestimated or not planned for (e.g., defect correction during integration and test)
- Experiences
  - No contingency built into the schedule for rework
  - Clarification of a requirement resulted in an additional 7 software releases (not originally planned or scheduled)

Management & Infrastructure
- Contention for critical resources
- Processes
  - Risk management, configuration management
- Experiences
  - Lack of adequate SI&T facilities (in terms of fidelity or capacity) concertinaed the schedule, causing a major blow-out

Topics
- Overview of SCRAM
  - SCRAM Components
    - RCASS Model
    - Assessment Process
- Experiences using SCRAM
- Benefits of Using SCRAM

SCRAM Benefits
- The SCRAM root-cause analysis model (RCASS) is useful in communicating the status of programs to all key stakeholders
  - Particularly senior executives (they get it!)
- Identifies root causes of schedule slippage and permits remediation action
- Identifies risks and focuses risk mitigation action
- Provides guidance for the collection of measures
  - Provides visibility and tracking for those areas where there is residual risk
- Provides greater confidence in the schedule

SCRAM Benefits (cont.)
- SCRAM can be used to validate a schedule before project execution (based on SCRAM-identified systemic schedule management issues)
- Widely applicable
  - SCRAM can be applied at any point in the program life cycle
  - SCRAM can be applied to any major system engineering activity or phase
- Examples
  - System Integration & Test (often, as this is typically when the sea of green turns to a sea of red)
  - Aircraft flight testing
  - Installation/integration of systems on ship
  - Logistics Enterprise Resource Planning (ERP) application roll-out readiness

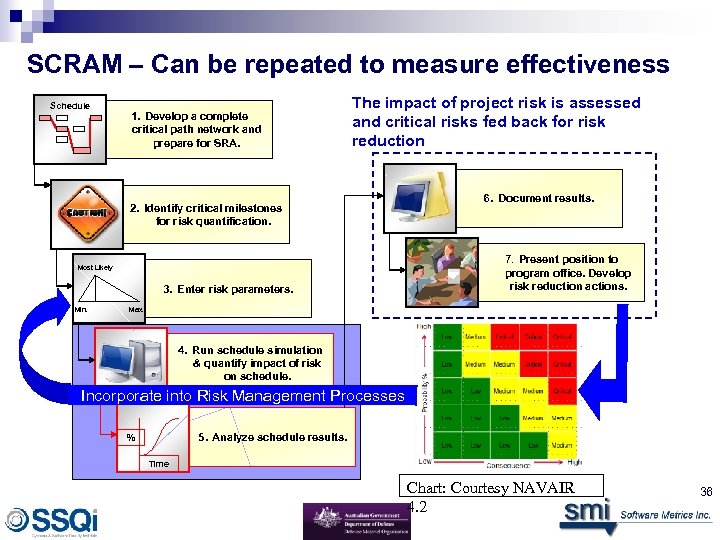

SCRAM: Can Be Repeated to Measure Effectiveness
1. Develop a complete critical path network and prepare for SRA.
2. Identify critical milestones for risk quantification.
3. Enter risk parameters (Min., Most Likely, Max.).
4. Run schedule simulation and quantify the impact of risk on schedule.
5. Analyze schedule results.
6. Document results.
7. Present position to program office. Develop risk reduction actions.
- The impact of project risk is assessed and critical risks are fed back for risk reduction
- Incorporate into risk management processes
[Chart: % vs. Time; courtesy NAVAIR 4.2]
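
Steps 4 and 5 typically end in a cumulative probability curve: the chance of reaching the milestone by each date. Given simulated milestone durations from any Monte Carlo run, the sketch below computes the confidence of meeting a target and the duration at a chosen confidence level; comparing those figures between two assessment rounds is one way to express the "repeat to measure effectiveness" idea. The function names, the 80% level, and the sample values are hypothetical, and real runs would use thousands of samples rather than ten.

```python
import bisect
import math

def confidence_of_meeting(samples, target):
    """Fraction of simulated outcomes finishing on or before `target`."""
    s = sorted(samples)
    return bisect.bisect_right(s, target) / len(s)

def duration_at_confidence(samples, level):
    """Smallest simulated duration d with P(duration <= d) >= level (0 < level <= 1)."""
    s = sorted(samples)
    k = max(0, math.ceil(level * len(s)) - 1)
    return s[k]

def s_curve(samples, points):
    """(duration, cumulative probability) pairs, e.g. for plotting."""
    return [(p, confidence_of_meeting(samples, p)) for p in points]

if __name__ == "__main__":
    # Hypothetical simulated milestone durations (days) from two assessment rounds.
    before = [40, 42, 45, 47, 50, 52, 55, 60, 65, 70]
    after = [38, 40, 41, 43, 44, 46, 48, 50, 53, 58]
    print(confidence_of_meeting(before, 50), confidence_of_meeting(after, 50))  # 0.5 0.8
    print(duration_at_confidence(before, 0.8), duration_at_confidence(after, 0.8))  # 60 50
```

Reading both numbers matters: the confidence of hitting a fixed date and the date at a fixed confidence each tell stakeholders something different about whether risk reduction actions have worked.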

SCRAM QUESTIONS
For further information on SCRAM contact: www.scramsite.org
- Govt/Govt to Govt: Adrian Pitman, adrian.pitman@defence.gov.au
- In Australia: Angela Tuffley, a.tuffley@ssqi.org.au
- In USA: Betsy Clark, betsy@software-metrics.com
- In USA: Brad Clark, brad@software-metrics.com

Acronyms
- ANAO – Australian National Audit Office
- BoE – Basis of Estimate
- COTS/MOTS – Commercial off the Shelf/Modified or Military off the Shelf
- DMO – Defence Materiel Organisation (Australia)
- GFE – Government Furnished Equipment
- ISO/IEC – International Organization for Standardization/International Electrotechnical Commission
- ISO/IEC 15504 – Information Technology, Process Assessment Framework
- RCASS – Root Cause Analysis of Schedule Slippage
- SCRAM – Schedule Compliance Risk Assessment Methodology
- SMI – Software Metrics Inc. (United States)
- SSQi – Systems & Software Quality Institute (Australia)
