dd8484e9e4b05430edb7285c35b3e517.ppt

- Number of slides: 54

Scientific Data Management Center – Scientific Process Automation

Implementing Scientific Process Automation - from Art to Commodity
Mladen A. Vouk and Terence Critchlow

1/AHM/Raleigh/Oct 05/v 3 a

Overview
- From Art to Commodity
- Component-based System Engineering
- Kepler vs. CCA vs. ???
- Domain Specific
- Virtualization and Service-based System Engineering

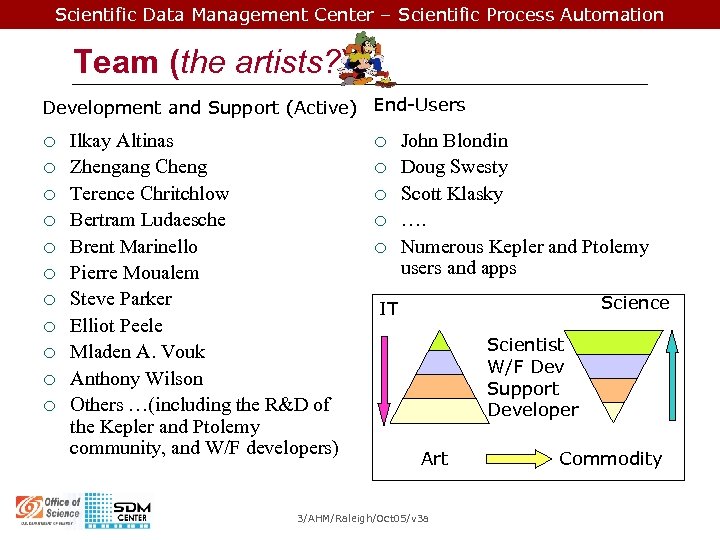

Team (the artists?)

Development and Support:
- Ilkay Altintas
- Zhengang Cheng
- Terence Critchlow
- Bertram Ludaescher
- Brent Marinello
- Pierre Moualem
- Steve Parker
- Elliot Peele
- Mladen A. Vouk
- Anthony Wilson
- Others… (including the R&D of the Kepler and Ptolemy community, and W/F developers)

(Active) End-Users:
- John Blondin
- Doug Swesty
- Scott Klasky
- …
- Numerous Kepler and Ptolemy users and apps

[Figure: roles (Scientist, Science IT, W/F Dev Support, Developer) placed on a spectrum from Art to Commodity]

Cyberinfrastructure

“Cyberinfrastructure makes applications dramatically easier to [use,] develop and deploy, thus expanding the feasible scope of applications possible within budget and organizational constraints, and shifting the [educator’s,] scientist’s and engineer’s effort away from information technology (development) and concentrating it on [knowledge transfer, and] scientific and engineering research. Cyberinfrastructure also increases efficiency, quality, and reliability by capturing commonalities among application needs, and facilitates the efficient sharing of equipment and services.”

(From the Appendix of the Report of the National Science Foundation Blue-Ribbon Advisory Panel on Cyberinfrastructure, Jan 2003)

Rising Expectations

Delivery of Service with Minimization of Information Technology (IT) Overhead
- Move away from the specific resources (e.g., h/w, s/w, net, storage, and apps) toward the ability to achieve one’s basic mission (learning, teaching, research, outreach, administration, etc.).
- Utility-like (appliance-like) on-demand access to needed IT. Use of IT-based solutions moves from a fixed location (such as a specific lab) and fixed resources (e.g., a particular operating system) to a scientist’s (mobile) personal access device (e.g., laptop, PDA, or cell phone) and service-based delivery.
- The business model behind IT virtualization and services needs to conform to the mission of the institution, as well as to realistic resource and/or personnel constraints.

Component-Based System Engineering (*)
- Composition of systems (e.g., practical workflows) from (existing) components
  - Systems as assemblies of components
  - Development of components as reusable units
  - Facilitation of the maintenance and evolution of systems by customizing and replacing their components
- Methods and tools for support of different aspects of the component-based approach
- Open-source and process issues, organizational and management issues, coupling, domain, technologies (e.g., component models), component composition issues, tools…

(*) Building Reliable Component-Based Software Systems, Ivica Crnkovic and Magnus Larsson (editors), Artech House Publishers, ISBN 1-58053-327-2, http://www.idt.mdh.se/cbse-book/

Advantages
- Business: shorter time-to-market, lower development and maintenance costs
- Technical: increased understandability of (complex) systems; increased usability, interoperability, flexibility, adaptability, dependability…
- Strategic: increasing (software) market share
- Scope: Web- and Internet-based applications, desktop and office applications, mathematical and other libraries, graphical tools, GUI-based applications, etc.
- Practical: de facto standards, e.g., MS COM, .NET, Sun EJB, J2EE, CORBA Component Model…
- Focus on functionality

Some Issues
- Standards
- Non-functional requirements
  - Performance, including timing
  - Resource management
  - Dependability, fault tolerance
- Domain-specific requirements?
- Skill level needed and IT overhead
- Changing expectations and scalability…
- Coupling and synchronization (synchronous vs. asynchronous processing, loose vs. tight coupling, parallelization, data movement, bottlenecks…)
- Provisioning / security challenges
- Marketing hype / over-expectations
- Customer confusion / skepticism
- Service quality / control issues
- Demonstrating benefits / ROI
- Software licensing
- Vendor factor
- Other…

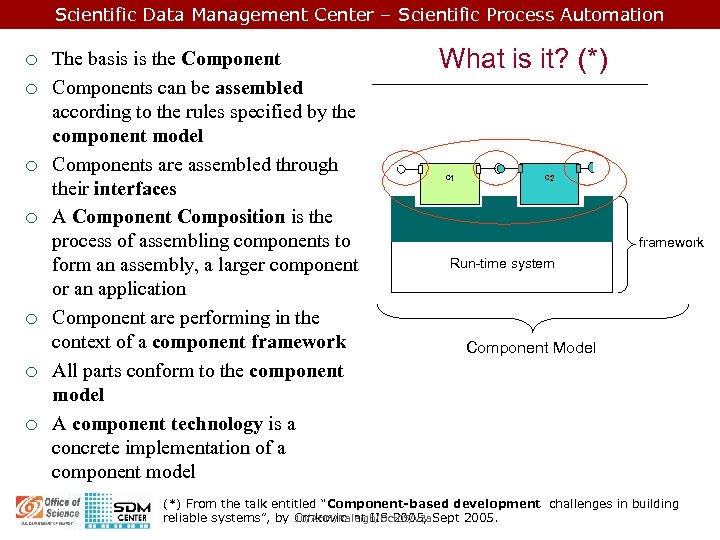

What is it? (*)
- The basis is the component model.
- Components can be assembled according to the rules specified by the component model.
- Components are assembled through their interfaces.
- A component composition is the process of assembling components to form an assembly, a larger component, or an application.
- Components perform in the context of a component framework.
- All parts conform to the component model.
- A component technology is a concrete implementation of a component model.

[Figure: components c1 and c2, a middleware framework, and a run-time system, all within a component model]

(*) From the talk entitled “Component-based development challenges in building reliable systems”, by Crnkovic at IIS 2005, Sept 2005.
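The composition rules above can be made concrete with a minimal sketch, assuming Python's `typing.Protocol` plays the role of an interface defined by the component model. Everything here (`DataSource`, `Simulation`, `Averager`, `Framework.connect`) is a hypothetical illustration rather than any real component technology: two components conform to one interface, and a toy framework assembles them through that interface into a larger unit.

```python
from typing import Dict, List, Optional, Protocol


class DataSource(Protocol):
    """Interface defined by this toy component model: produce a list of floats."""

    def read(self) -> List[float]: ...


class Simulation:
    """Component c1: provides data through the DataSource interface."""

    def __init__(self, n: int) -> None:
        self.n = n

    def read(self) -> List[float]:
        return [float(i) for i in range(self.n)]


class Averager:
    """Component c2: depends on some DataSource, never on a concrete class."""

    def __init__(self) -> None:
        self.source: Optional[DataSource] = None

    def run(self) -> float:
        if self.source is None:
            raise RuntimeError("Averager has no connected DataSource")
        values = self.source.read()
        return sum(values) / len(values)


class Framework:
    """Hypothetical run-time framework: holds components and wires them together."""

    def __init__(self) -> None:
        self.components: Dict[str, object] = {}

    def add(self, name: str, component: object) -> None:
        self.components[name] = component

    def connect(self, user: str, slot: str, provider: str) -> None:
        # Composition goes through the interface only: the user never constructs its provider.
        setattr(self.components[user], slot, self.components[provider])


fw = Framework()
fw.add("c1", Simulation(5))
fw.add("c2", Averager())
fw.connect("c2", "source", "c1")
assembly_result = fw.components["c2"].run()
print(assembly_result)  # 2.0
```

Because `Averager` only knows the `DataSource` interface, any other conforming component could be connected in place of `Simulation` without modifying either class.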

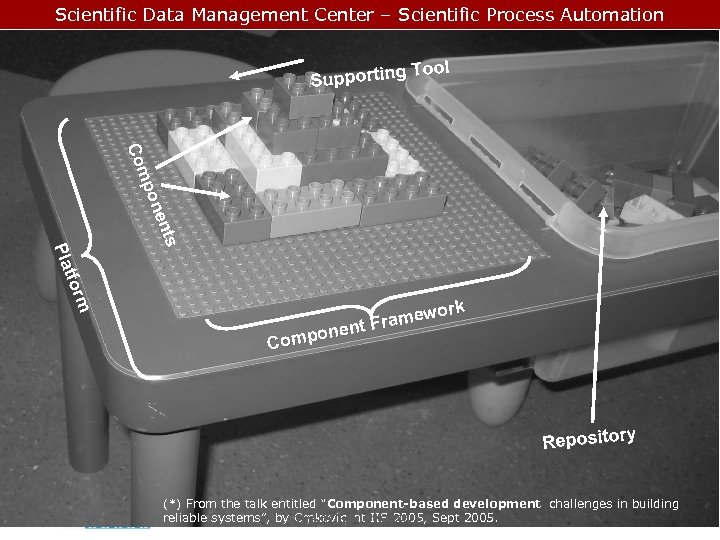

[Figure: Supporting Tool, Components, Platform, Component Framework, Repository] (*)

(*) From the talk entitled “Component-based development challenges in building reliable systems”, by Crnkovic at IIS 2005, Sept 2005.

Component
- A unit of composition
  - Contractually specified interfaces
  - Explicit context dependencies (only?)
- Can be deployed independently
- Subject to composition by third parties
  - Conforms to a component model, which defines interaction and composition standards
  - Composed without modification according to a composition standard
- Specified as (a) black-box (signal/response), (b) gray-box (access to internal states), (c) white-box (access to all internals), or (d) display-box (can see internals, but cannot touch them)

Principles
- Reusability (docs, re-use process, architecture, framework, V&V, …)
- Substitutability (alternative implementations, functional equivalence, equivalence on other issues, very precise interfaces and specs, runtime replacement mechanism, V&V, …)
- Extensibility (extending the system component pool, increasing capabilities of individual components, extensible architecture, resource and new-functionality discovery, V&V, …)
- Composability (functional, extra-functional, reasoning about compositions, V&V, …)
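A minimal sketch of substitutability and extensibility, assuming a plain Python dictionary serves as the component pool. The `register` decorator, the `substitutable` check, and the tolerance are hypothetical. The example shows why substitution is paired with V&V: two mean implementations agree on everyday inputs but diverge on an ill-conditioned one, so "functionally equivalent" only holds over the inputs that were actually checked.

```python
import math
from typing import Callable, Dict, List

Mean = Callable[[List[float]], float]

# Hypothetical component pool: alternative implementations of one interface
# ("return the mean of a non-empty list of floats").
REGISTRY: Dict[str, Mean] = {}


def register(name: str) -> Callable[[Mean], Mean]:
    """Extensibility: new implementations join the pool without editing existing code."""

    def decorator(fn: Mean) -> Mean:
        REGISTRY[name] = fn
        return fn

    return decorator


@register("naive")
def naive_mean(xs: List[float]) -> float:
    total = 0.0
    for x in xs:  # plain left-to-right accumulation
        total += x
    return total / len(xs)


@register("compensated")
def fsum_mean(xs: List[float]) -> float:
    return math.fsum(xs) / len(xs)  # math.fsum avoids losing low-order bits


def substitutable(a: Mean, b: Mean, cases: List[List[float]], rel_tol: float = 1e-9) -> bool:
    """Crude V&V check: do two implementations agree on every test case?"""
    return all(math.isclose(a(c), b(c), rel_tol=rel_tol) for c in cases)


everyday = [[1.0, 2.0, 3.0], [0.1] * 10]
extreme = [[1e16, 1.0, -1e16]]
print(substitutable(REGISTRY["naive"], REGISTRY["compensated"], everyday))  # True
print(substitutable(REGISTRY["naive"], REGISTRY["compensated"], extreme))   # False
```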

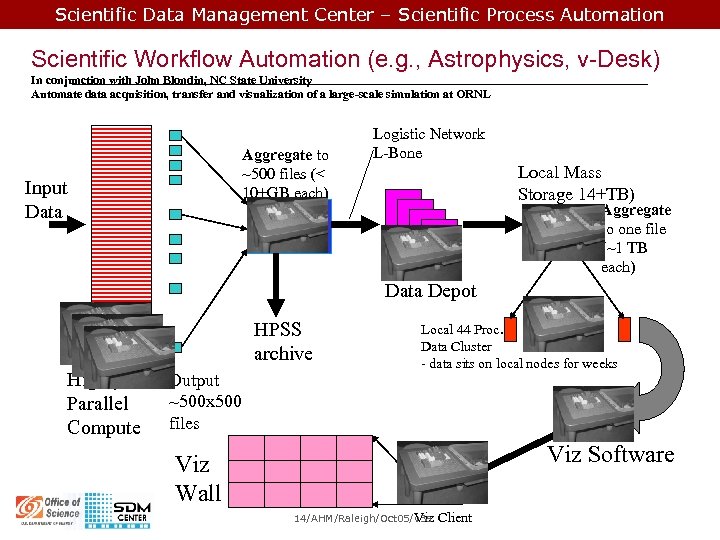

Scientific Workflow Automation (e.g., Astrophysics, v-Desk)
In conjunction with John Blondin, NC State University
Automate data acquisition, transfer and visualization of a large-scale simulation at ORNL

[Figure: data-flow diagram with labels: Input Data; Highly Parallel Compute; Output ~500 x 500 files; Aggregate to ~500 files (<10+ GB each); HPSS archive; Data Depot; Logistic Network (L-Bone); Local Mass Storage (14+ TB); Aggregate to one file (~1 TB each); Local 44 Proc. Data Cluster (data sits on local nodes for weeks); Viz Software; Viz Wall; Viz Client]
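The aggregation steps in the diagram (many per-process output files merged into fewer, larger files) can be sketched as follows. This is an illustration under stated assumptions, not the workflow's actual code: the file-naming scheme (`stepNNNN_rankNNNN.dat`) and plain byte concatenation are hypothetical, and a real simulation output format would likely need format-aware merging.

```python
import re
import shutil
import tempfile
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Tuple

# Hypothetical naming scheme: one file per (timestep, rank), e.g. "step0003_rank0127.dat".
PATTERN = re.compile(r"step(\d+)_rank(\d+)\.dat")


def aggregate(src: Path, dst: Path) -> List[Path]:
    """Concatenate the per-rank files of each timestep into one file per timestep."""
    groups: Dict[int, List[Tuple[int, Path]]] = defaultdict(list)
    for f in src.iterdir():
        m = PATTERN.fullmatch(f.name)
        if m:
            groups[int(m.group(1))].append((int(m.group(2)), f))
    dst.mkdir(parents=True, exist_ok=True)
    merged: List[Path] = []
    for step in sorted(groups):
        out = dst / f"step{step:04d}.dat"
        with out.open("wb") as w:
            for _, part in sorted(groups[step]):  # rank order keeps the output deterministic
                with part.open("rb") as r:
                    shutil.copyfileobj(r, w)  # streams, so large parts need not fit in memory
        merged.append(out)
    return merged


with tempfile.TemporaryDirectory() as tmp:
    src, dst = Path(tmp, "raw"), Path(tmp, "merged")
    src.mkdir()
    for step in range(2):
        for rank in range(3):
            (src / f"step{step:04d}_rank{rank:04d}.dat").write_bytes(bytes([rank]))
    outputs = aggregate(src, dst)
    merged_names = [p.name for p in outputs]
    first_contents = outputs[0].read_bytes()

print(merged_names, first_contents)
```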

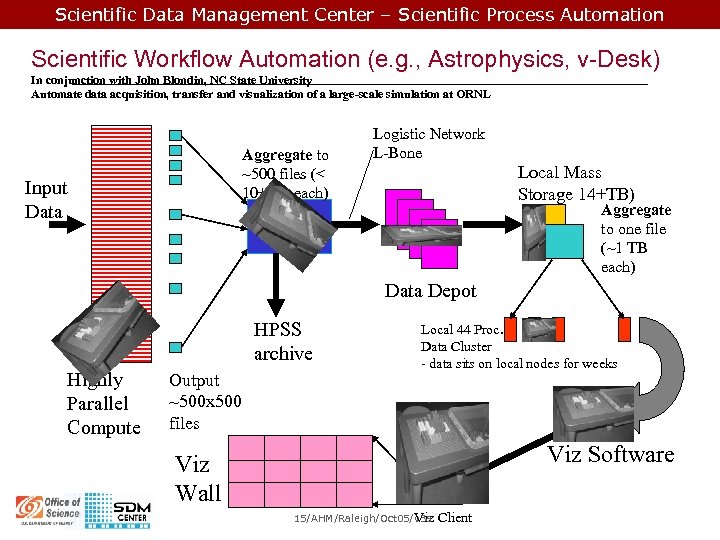

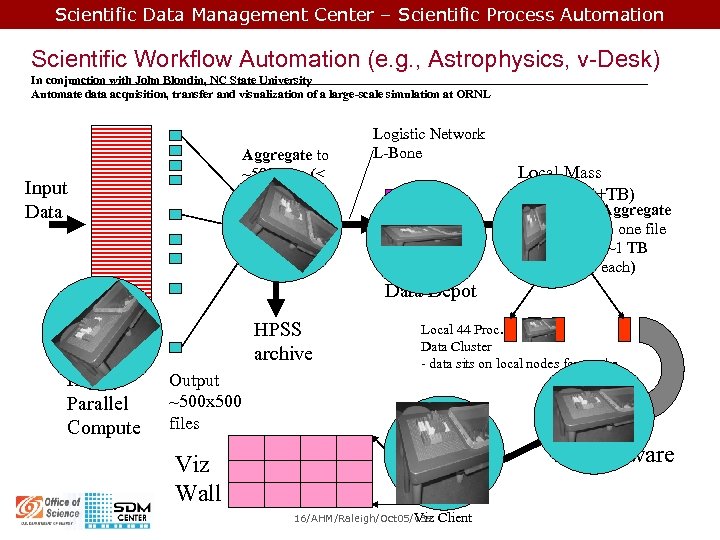

CCA and Kepler
- CCA is probably more suitable for tightly coupled applications, especially when all the components reside on a single machine or a local cluster.
  - CCA focuses on high-performance parallel and distributed processing.
- Kepler is probably more suitable for loosely coupled and diverse components.
  - The components can reside on different and widely separated machines.
  - It is well suited to services and data that reside with their owners and are exposed as services.
  - Kepler focuses on process orchestration and control.
  - It is more conducive to IP protection.

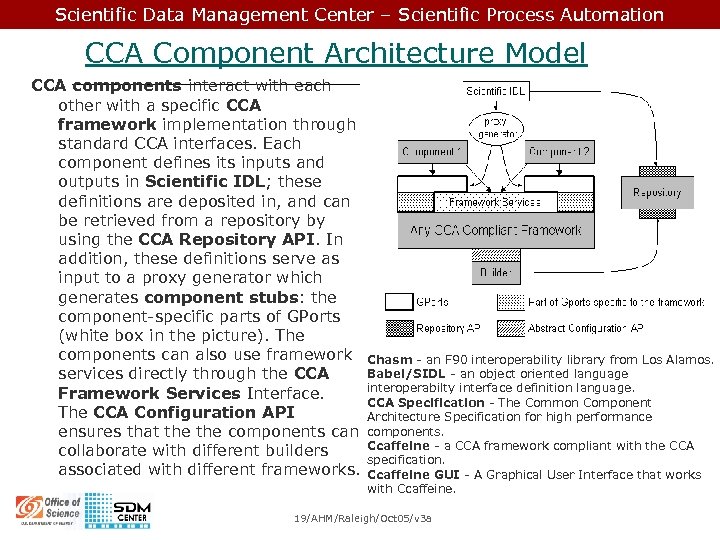

CCA Component Architecture Model

CCA components interact with each other within a specific CCA framework implementation through standard CCA interfaces. Each component defines its inputs and outputs in Scientific IDL (SIDL); these definitions are deposited in, and can be retrieved from, a repository by using the CCA Repository API. In addition, these definitions serve as input to a proxy generator, which generates component stubs: the component-specific parts of GPorts (white box in the picture). The components can also use framework services directly through the CCA Framework Services Interface. The CCA Configuration API ensures that the components can collaborate with different builders associated with different frameworks.

- CCA Specification: the Common Component Architecture specification for high-performance components.
- Babel/SIDL: an object-oriented language interoperability interface definition language.
- Chasm: an F90 interoperability library from Los Alamos.
- Ccaffeine: a CCA framework compliant with the CCA specification.
- Ccaffeine GUI: a graphical user interface that works with Ccaffeine.
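CCA components connect through ports: a component "provides" ports that other components "use", and the framework binds the two. The Python below is a hypothetical, much-simplified imitation of that pattern, not the CCA specification's API or Babel-generated bindings; `Services`, `add_provides_port`, `register_uses_port`, `get_port`, and `connect` are illustrative names only.

```python
from typing import Dict, Optional, Protocol, cast


class IntegratorPort(Protocol):
    """A port type; in CCA this interface would be declared in SIDL."""

    def integrate(self, lo: float, hi: float, n: int) -> float: ...


class Services:
    """Toy per-component handle that the framework gives each component."""

    def __init__(self) -> None:
        self.provides: Dict[str, object] = {}
        self.uses: Dict[str, Optional[object]] = {}

    def add_provides_port(self, name: str, port: object) -> None:
        self.provides[name] = port

    def register_uses_port(self, name: str) -> None:
        self.uses[name] = None  # declared, but not yet connected

    def get_port(self, name: str) -> object:
        port = self.uses.get(name)
        if port is None:
            raise LookupError(f"uses-port {name!r} is not connected")
        return port


class MidpointIntegrator:
    """Provider component: offers an IntegratorPort (integrates x**2, for brevity)."""

    def set_services(self, svc: Services) -> None:
        svc.add_provides_port("integrator", self)

    def integrate(self, lo: float, hi: float, n: int) -> float:
        h = (hi - lo) / n
        return h * sum((lo + (i + 0.5) * h) ** 2 for i in range(n))


class Driver:
    """User component: declares that it needs an integrator and fetches it at run time."""

    def set_services(self, svc: Services) -> None:
        self.svc = svc
        svc.register_uses_port("integrator")

    def go(self) -> float:
        port = cast(IntegratorPort, self.svc.get_port("integrator"))
        return port.integrate(0.0, 1.0, 1000)


def connect(user: Services, uses: str, provider: Services, provides: str) -> None:
    """The framework/builder's job: bind a uses-port to a matching provides-port."""
    user.uses[uses] = provider.provides[provides]


driver_svc, integrator_svc = Services(), Services()
driver, integrator = Driver(), MidpointIntegrator()
driver.set_services(driver_svc)
integrator.set_services(integrator_svc)
connect(driver_svc, "integrator", integrator_svc, "integrator")
result = driver.go()
print(result)
```

Neither component refers to the other's class; swapping in a different integrator only changes the `connect` call, which is the role a builder plays.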

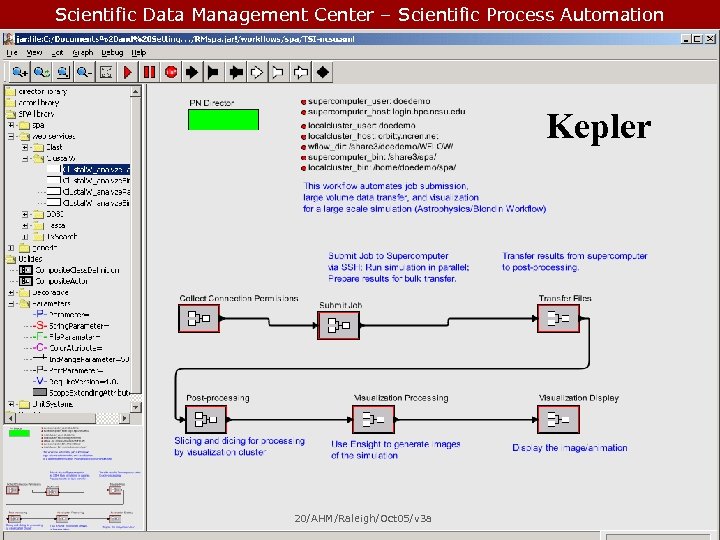

Kepler

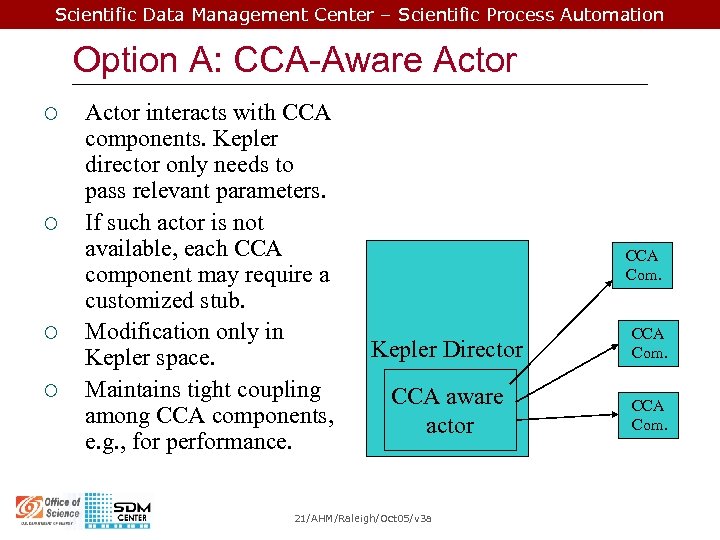

Option A: CCA-Aware Actor
- The actor interacts with CCA components; the Kepler director only needs to pass relevant parameters.
  - If such an actor is not available, each CCA component may require a customized stub.
- Modification only in Kepler space.
  - Maintains tight coupling among CCA components, e.g., for performance.

[Figure: Kepler Director and a CCA-aware actor connected to several CCA components]
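A schematic of Option A, assuming plain Python callables stand in for CCA components. Real Kepler actors and directors are Java classes built on Ptolemy II; `CcaAwareActor` and `SequentialDirector` here are hypothetical names chosen only to show the division of labor: the director passes parameters, while the actor drives the components directly and keeps them tightly coupled.

```python
from typing import Any, Callable, Dict, List

# Stand-ins for tightly coupled CCA components: plain in-process callables.
CcaComponent = Callable[[Any], Any]


class CcaAwareActor:
    """Hypothetical actor that knows how to drive a chain of CCA components.

    The director passes it only parameters; the components hand data to each
    other directly and in-process, so their tight coupling is preserved.
    """

    def __init__(self, components: List[CcaComponent]) -> None:
        self.components = components

    def fire(self, params: Dict[str, Any]) -> Any:
        data = params["input"]
        for component in self.components:
            data = component(data)
        return data


class SequentialDirector:
    """Toy director: fires each actor once, in order, feeding outputs forward."""

    def __init__(self, actors: List[CcaAwareActor]) -> None:
        self.actors = actors

    def run(self, initial: Any) -> Any:
        token = initial
        for actor in self.actors:
            token = actor.fire({"input": token})
        return token


def scale(xs: List[float]) -> List[float]:
    return [2 * x for x in xs]


def total(xs: List[float]) -> float:
    return sum(xs)


director = SequentialDirector([CcaAwareActor([scale, total])])
print(director.run([1, 2, 3]))  # 12
```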

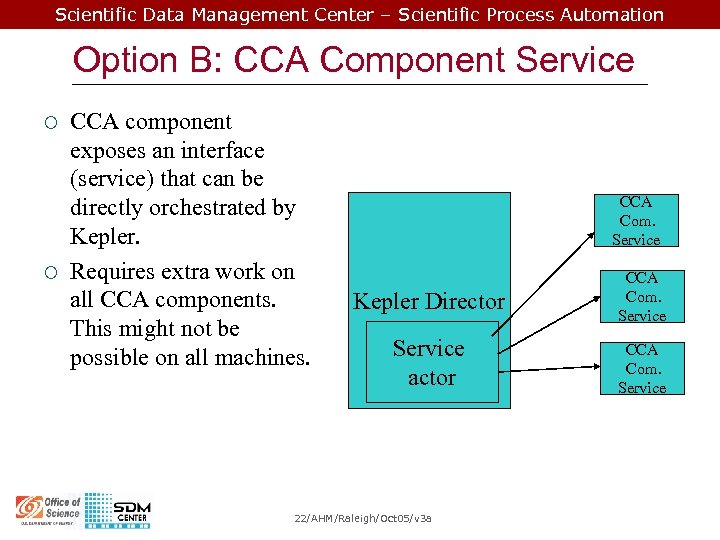

Option B: CCA Component Service
- Each CCA component exposes an interface (service) that can be directly orchestrated by Kepler.
- Requires extra work on all CCA components; this might not be possible on all machines.

[Figure: Kepler Director and a service actor invoking several CCA components, each wrapped as a service]
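Option B can be sketched by wrapping each component behind a uniform request/response interface so that a generic service actor can call it. This is a hypothetical, in-process illustration: JSON strings stand in for whatever service protocol would actually be used, and `expose_as_service`, `ServiceActor`, and `interpolate` are invented names. Every component needs its own wrapper, which is the extra work noted above.

```python
import json
from typing import Any, Callable, Dict


def expose_as_service(component: Callable[..., Any]) -> Callable[[str], str]:
    """Wrap one component behind a JSON-in/JSON-out interface.

    In a real deployment the returned handler would sit behind a network
    endpoint; here it is called in-process so the sketch stays self-contained.
    """

    def handler(request: str) -> str:
        args: Dict[str, Any] = json.loads(request)
        try:
            return json.dumps({"ok": True, "result": component(**args)})
        except Exception as exc:  # report failures to the orchestrator instead of crashing
            return json.dumps({"ok": False, "error": str(exc)})

    return handler


class ServiceActor:
    """Generic actor: knows nothing about CCA, only how to call a JSON service."""

    def __init__(self, endpoint: Callable[[str], str]) -> None:
        self.endpoint = endpoint

    def fire(self, **params: Any) -> Any:
        reply = json.loads(self.endpoint(json.dumps(params)))
        if not reply["ok"]:
            raise RuntimeError(reply["error"])
        return reply["result"]


def interpolate(x0: float, x1: float, t: float) -> float:
    """Toy stand-in for a CCA component's functionality."""
    return x0 + t * (x1 - x0)


actor = ServiceActor(expose_as_service(interpolate))
print(actor.fire(x0=0.0, x1=10.0, t=0.25))  # 2.5
```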

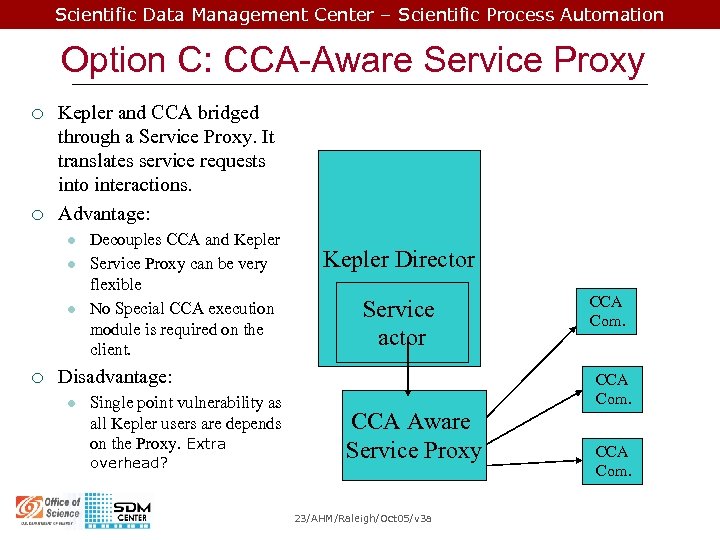

Option C: CCA-Aware Service Proxy
- Kepler and CCA are bridged through a service proxy, which translates service requests into CCA component interactions.
- Advantages:
  - Decouples CCA and Kepler
  - The service proxy can be very flexible
  - No special CCA execution module is required on the client
- Disadvantages:
  - Single point of vulnerability, as all Kepler users depend on the proxy
  - Extra overhead?

[Figure: Kepler Director and a service actor calling a CCA-aware service proxy, which drives several CCA components]
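A sketch of Option C, assuming a single proxy object that maps coarse service operations onto sequences of component calls. `CcaAwareServiceProxy`, its `define`/`handle` methods, and the `available` flag are hypothetical; the flag is there only to make the single point of vulnerability visible, since every client fails together when the proxy is down.

```python
from typing import Any, Callable, Dict, List

Step = Callable[[Any], Any]


class CcaAwareServiceProxy:
    """Hypothetical proxy translating coarse service requests into CCA-side interactions.

    Clients (e.g. Kepler service actors) send only an operation name and a payload,
    so no CCA-specific module is needed on the client side.
    """

    def __init__(self) -> None:
        self.operations: Dict[str, List[Step]] = {}
        self.available = True  # every client depends on this single proxy

    def define(self, operation: str, steps: List[Step]) -> None:
        """Map one service operation onto a sequence of component interactions."""
        self.operations[operation] = steps

    def handle(self, operation: str, payload: Any) -> Any:
        if not self.available:
            raise ConnectionError("service proxy is down: all clients are affected")
        if operation not in self.operations:
            raise KeyError(f"unknown operation {operation!r}")
        for step in self.operations[operation]:
            payload = step(payload)
        return payload


def load(n: int) -> List[int]:
    return list(range(n))


def square(xs: List[int]) -> List[int]:
    return [x * x for x in xs]


def reduce_sum(xs: List[int]) -> int:
    return sum(xs)


proxy = CcaAwareServiceProxy()
proxy.define("sum_of_squares", [load, square, reduce_sum])
print(proxy.handle("sum_of_squares", 4))  # 14
```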

Domain Specific
- Klasky FSP W/F
- Swesty TSI W/F
- Coleman W/F
- Chem. Info W/F
- SCIRun
- Blondin TSI W/F
- Other…

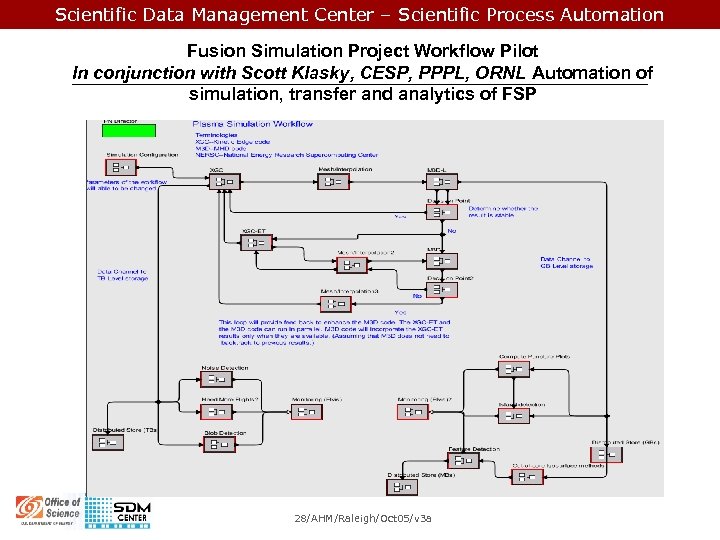

Fusion Simulation Project Workflow Pilot
In conjunction with Scott Klasky, CESP, PPPL, ORNL
Automation of simulation, transfer, and analytics of FSP

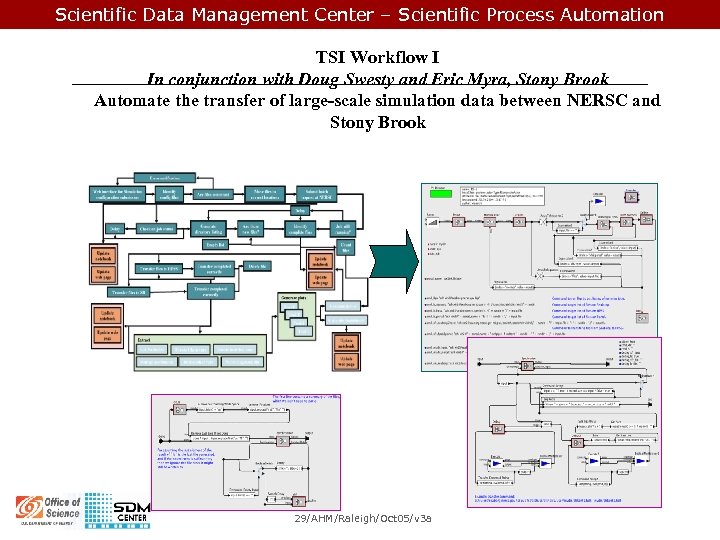

TSI Workflow I
In conjunction with Doug Swesty and Eric Myra, Stony Brook
Automate the transfer of large-scale simulation data between NERSC and Stony Brook

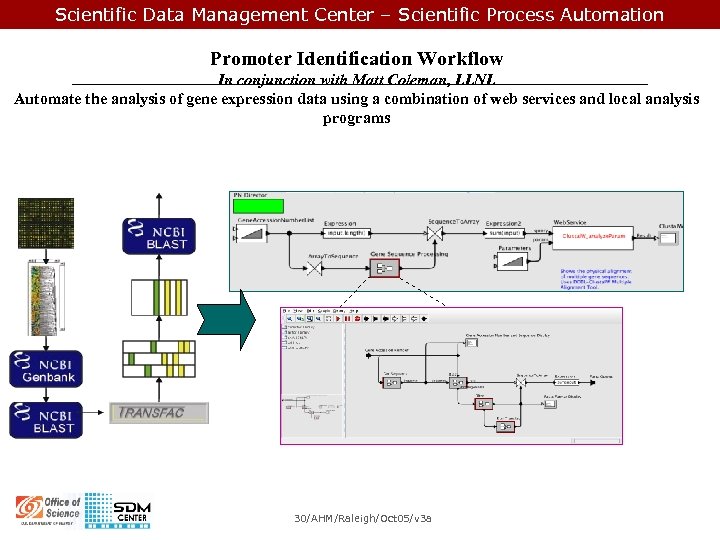

Promoter Identification Workflow
In conjunction with Matt Coleman, LLNL
Automate the analysis of gene expression data using a combination of web services and local analysis programs

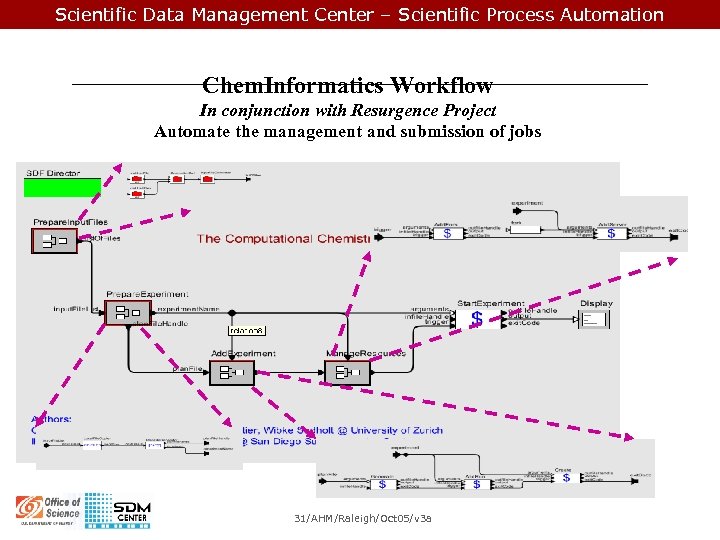

Chem. Informatics Workflow
In conjunction with the Resurgence Project
Automate the management and submission of jobs

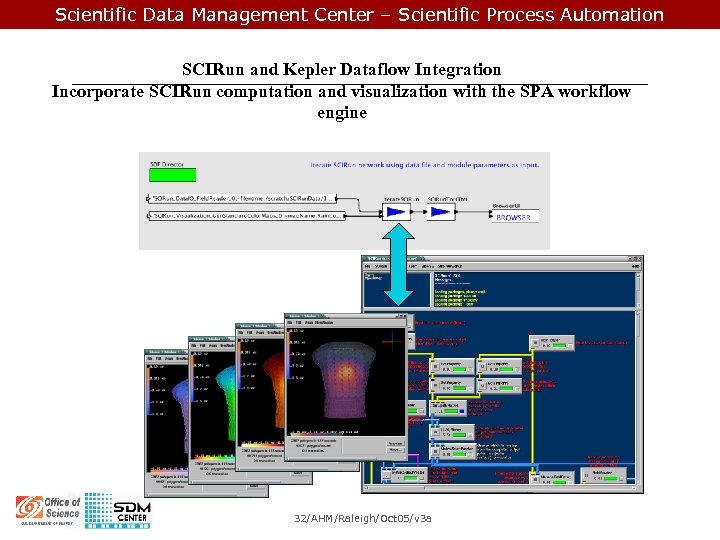

SCIRun and Kepler Dataflow Integration
Incorporate SCIRun computation and visualization with the SPA workflow engine

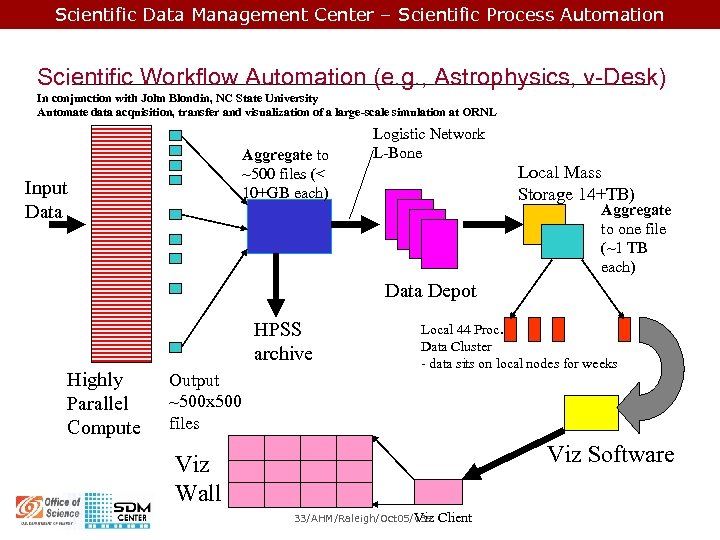

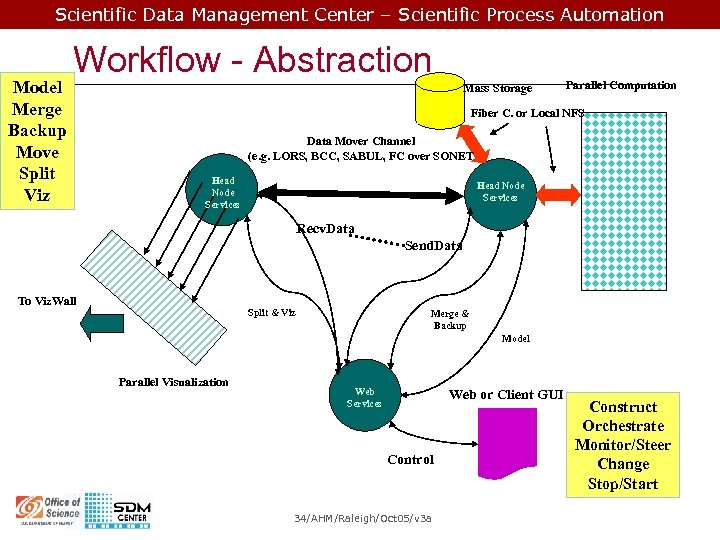

Workflow - Abstraction
Steps: Model, Merge, Backup, Move, Split, Viz

[Figure: abstract workflow with labels: Mass Storage; Parallel Computation; Fiber C. or Local NFS; Data Mover Channel (e.g., LORS, BCC, SABUL, FC over SONET); Head Node Services; Send Data; Recv. Data; To Viz Wall; Split & Viz; Merge & Backup; Model; Parallel Visualization; Web Services; Web or Client GUI]

Control: Construct, Orchestrate, Monitor/Steer, Change, Stop/Start
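The control operations listed above (Construct, Orchestrate, Monitor/Steer, Change, Stop/Start) can be illustrated with a toy engine. This is a hypothetical sketch, not the SPA/Kepler implementation: steps are plain Python functions that pass a state dictionary along, and a monitor callback acts as the steering hook that can request a stop.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Step = Callable[[dict], dict]
Monitor = Callable[[str, dict], None]


@dataclass
class Workflow:
    """Toy engine for an abstract pipeline such as Model, Merge, Backup, Move, Split, Viz."""

    steps: Dict[str, Step] = field(default_factory=dict)  # insertion order = run order
    log: List[str] = field(default_factory=list)
    stop_requested: bool = False
    next_index: int = 0

    def construct(self, name: str, step: Step) -> None:
        self.steps[name] = step

    def change(self, name: str, step: Step) -> None:
        """Replace the implementation of an existing step without moving it."""
        if name not in self.steps:
            raise KeyError(name)
        self.steps[name] = step

    def orchestrate(self, state: dict, monitor: Optional[Monitor] = None) -> dict:
        """Run the remaining steps in order; honor stop requests between steps."""
        names = list(self.steps)
        while self.next_index < len(names):
            if self.stop_requested:
                self.log.append("stopped")
                break
            name = names[self.next_index]
            state = self.steps[name](state)
            self.log.append(name)
            self.next_index += 1
            if monitor is not None:
                monitor(name, state)  # monitor/steer hook: may inspect state or request a stop
        return state


def tag(label: str) -> Step:
    """Stand-in step that only records that it ran."""

    def run(state: dict) -> dict:
        return {**state, "trail": state["trail"] + [label]}

    return run


wf = Workflow()
for step_name in ["Model", "Merge", "Backup", "Move", "Split", "Viz"]:
    wf.construct(step_name, tag(step_name))


def stop_after_backup(name: str, state: dict) -> None:
    if name == "Backup":
        wf.stop_requested = True


state = wf.orchestrate({"trail": []}, monitor=stop_after_backup)  # runs up to Backup, then stops
wf.change("Viz", tag("Viz-v2"))  # change a later step while the run is paused
wf.stop_requested = False        # start again from where it stopped
state = wf.orchestrate(state)
print(state["trail"])  # ['Model', 'Merge', 'Backup', 'Move', 'Split', 'Viz-v2']
print(wf.log)          # ['Model', 'Merge', 'Backup', 'stopped', 'Move', 'Split', 'Viz']
```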

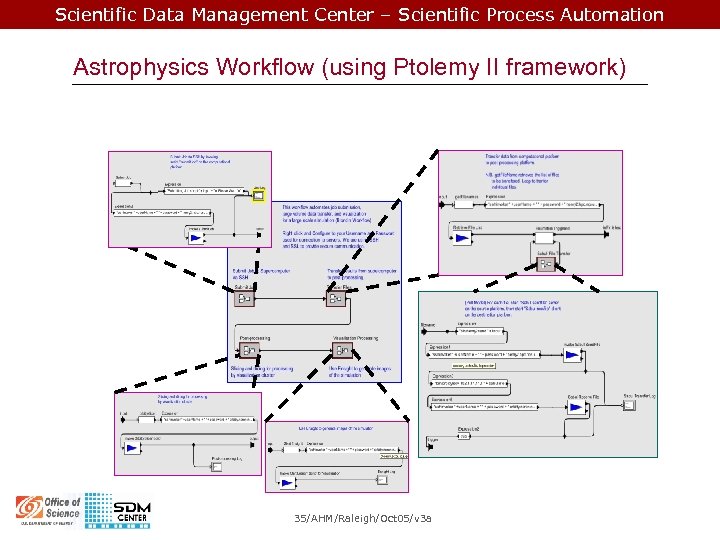

Astrophysics Workflow (using Ptolemy II framework)

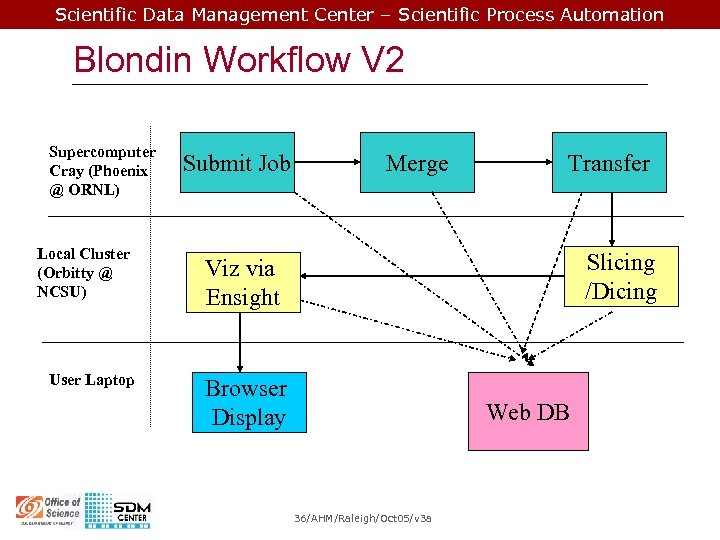

Scientific Data Management Center – Scientific Process Automation
Blondin Workflow V2
Diagram labels: Supercomputer, Cray (Phoenix @ ORNL); Local Cluster (Orbitty @ NCSU); User Laptop. Steps: Submit Job, Merge, Transfer, Slicing/Dicing, Viz via EnSight, Browser Display; Web DB.
36/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Basic

- A number of processing steps
- Range of data transfer rates
- Parallelism
- Speedup?
- Implementation (e.g., scripts? app?)
- Ease of use (e.g., LORS)
- Tracking (e.g., DB, Web, provenance)
- Fault tolerance
- Distributed (for the most part)

37/AHM/Raleigh/Oct 05/v 3 a
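
To make "range of data transfer rates" and "speedup?" concrete, here is back-of-the-envelope arithmetic for moving one ~1 TB aggregated file (the size from the astrophysics slide). The rates are hypothetical, and the parallel-stream model is an idealization (k streams, each capped at a per-stream rate, all sharing one link); real tools and networks rarely scale this cleanly.

```python
def transfer_seconds(size_bytes, rate_bits_per_s):
    """Idealized transfer time: payload bits divided by the sustained rate."""
    return size_bytes * 8 / rate_bits_per_s


def parallel_speedup(size_bytes, per_stream_bps, link_bps, streams):
    """Speedup of `streams` parallel streams over a single stream (idealized model)."""
    one = transfer_seconds(size_bytes, min(per_stream_bps, link_bps))
    many = transfer_seconds(size_bytes, min(streams * per_stream_bps, link_bps))
    return one / many


TB = 10**12  # decimal terabyte

for rate in (100e6, 1e9, 10e9):  # hypothetical sustained rates: 100 Mb/s, 1 Gb/s, 10 Gb/s
    print(f"{rate / 1e9:>5.1f} Gb/s -> {transfer_seconds(TB, rate) / 3600:6.2f} h")
```

Under these assumptions 1 TB takes about 22 hours at 100 Mb/s, about 2.2 hours at 1 Gb/s, and about 13 minutes at 10 Gb/s. With 100 Mb/s streams on a 1 Gb/s link, 4 streams give a 4x speedup, but 20 streams are capped at 10x by the link.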

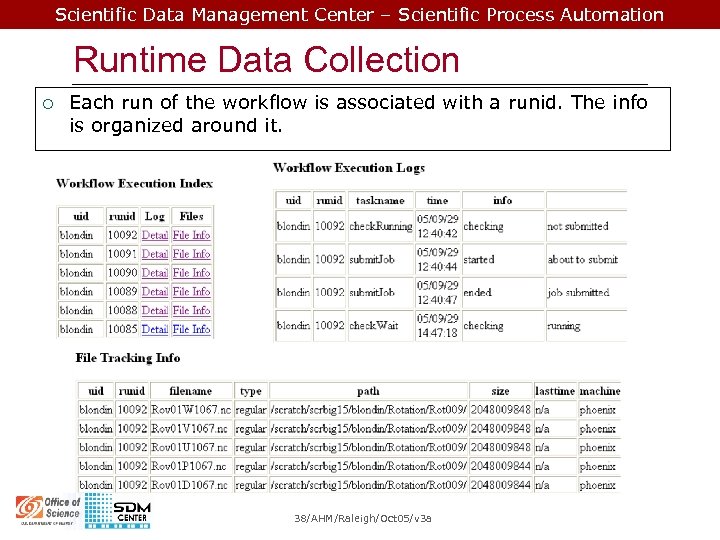

Scientific Data Management Center – Scientific Process Automation
Runtime Data Collection

- Each run of the workflow is associated with a run ID (runid), and all collected runtime information is organized around it.

38/AHM/Raleigh/Oct 05/v 3 a
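
A minimal way to organize runtime information around a run ID is sketched below with Python's built-in `sqlite3`. The two-table schema and the column names are hypothetical; the slide only says that everything is keyed by the runid, not how it is stored.

```python
import sqlite3
import time
import uuid

SCHEMA = """
CREATE TABLE IF NOT EXISTS runs (
    runid   TEXT PRIMARY KEY,
    started REAL NOT NULL
);
CREATE TABLE IF NOT EXISTS events (
    runid  TEXT NOT NULL REFERENCES runs(runid),
    step   TEXT NOT NULL,
    status TEXT NOT NULL,
    ts     REAL NOT NULL
);
"""


def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def new_run(conn):
    """Create a run and return its runid; every other record is keyed on it."""
    runid = uuid.uuid4().hex
    with conn:  # commits on success, rolls back on error
        conn.execute("INSERT INTO runs (runid, started) VALUES (?, ?)", (runid, time.time()))
    return runid


def log_event(conn, runid, step, status):
    with conn:
        conn.execute(
            "INSERT INTO events (runid, step, status, ts) VALUES (?, ?, ?, ?)",
            (runid, step, status, time.time()),
        )


def run_history(conn, runid):
    """All (step, status) events of one run, in insertion order."""
    cur = conn.execute("SELECT step, status FROM events WHERE runid = ? ORDER BY rowid", (runid,))
    return cur.fetchall()
```

Because every event carries its runid, a web page or log viewer can show one run's history with a single query, even while other runs are in progress.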

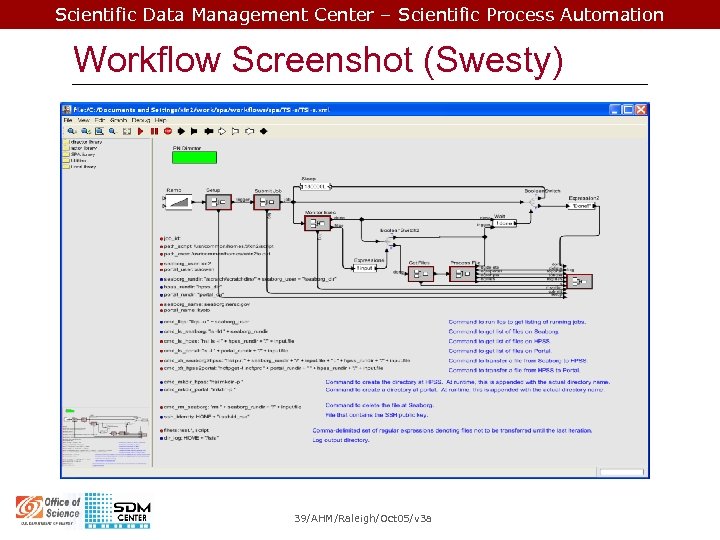

Scientific Data Management Center – Scientific Process Automation Workflow Screenshot (Swesty) 39/AHM/Raleigh/Oct 05/v 3 a

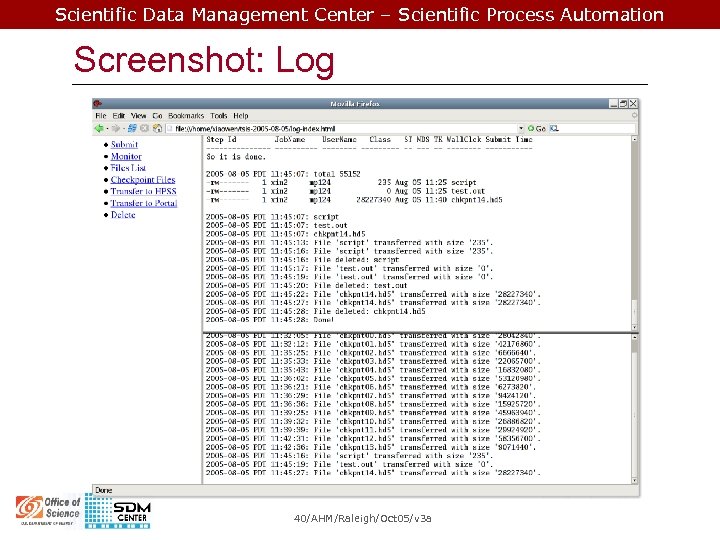

Scientific Data Management Center – Scientific Process Automation Screenshot: Log 40/AHM/Raleigh/Oct 05/v 3 a

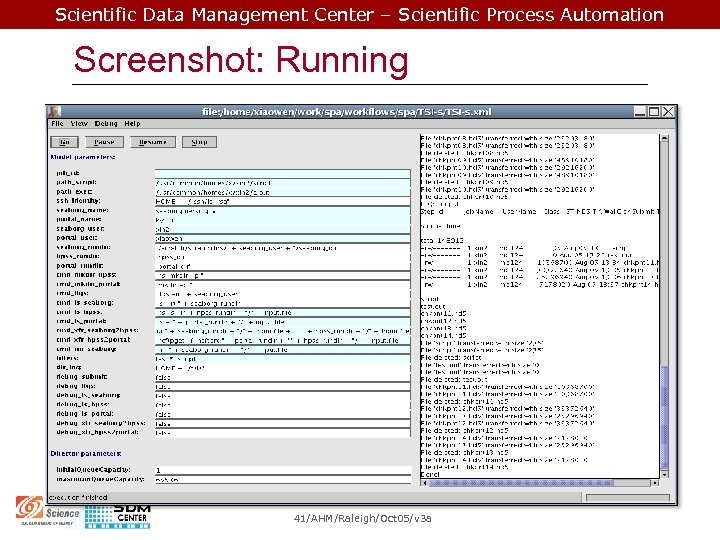

Scientific Data Management Center – Scientific Process Automation Screenshot: Running 41/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Generic Actors (Swesty W/F)

- This workflow uses a small number of actor types repeatedly to deliver complex behavior:
  - 160 actor instances
  - 18 different types of actors
  - ~100 Expression actor instances
  - 13 Boolean switch actor instances
  - 13 array manipulation actor instances
  - 8 ssh actor instances
  - < 30 instances of other actors (e.g., sleep, file I/O)

42/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Work in Progress

- Validation of input data
  - llsubmit script and config files need to be consistent
- Distributed infrastructure
  - Start this from a web page
  - Monitor and control the workflow from a remote site
- Incorporation of data analysis
  - A small workflow driven by the specific simulation results and how Doug wants to visualize the data

43/AHM/Raleigh/Oct 05/v 3 a
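
For the input-validation item, a consistency check can be as simple as parsing the job script's LoadLeveler directives (lines of the form `# @ keyword = value`) and comparing them with the workflow's own configuration. The sketch below assumes the configuration has already been loaded into a Python dict; the config key names and their mapping to LoadLeveler keywords are hypothetical and would have to match the real files.

```python
import re
from typing import Dict, List

# Matches LoadLeveler-style directives such as "# @ node = 4" (not "# @ queue").
DIRECTIVE = re.compile(r"^#\s*@\s*(\w+)\s*=\s*(.+?)\s*$")

# Hypothetical mapping: workflow config key -> job-script keyword it must agree with.
CONSISTENCY = {"nodes": "node", "tasks_per_node": "tasks_per_node", "wall_clock": "wall_clock_limit"}


def parse_directives(script_text: str) -> Dict[str, str]:
    out = {}
    for line in script_text.splitlines():
        m = DIRECTIVE.match(line)
        if m:
            out[m.group(1)] = m.group(2)
    return out


def check_consistency(script_text: str, config: dict) -> List[str]:
    """Return human-readable mismatches; an empty list means the two files agree."""
    directives = parse_directives(script_text)
    problems = []
    for cfg_key, ll_key in CONSISTENCY.items():
        if cfg_key not in config:
            continue
        if ll_key not in directives:
            problems.append(f"{ll_key}: missing from script (config says {config[cfg_key]})")
        elif directives[ll_key] != str(config[cfg_key]):
            problems.append(f"{ll_key}: script says {directives[ll_key]}, config says {config[cfg_key]}")
    return problems
```

Running such a check before submission catches a mismatched node count up front, instead of after a long queue wait.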

Scientific Data Management Center – Scientific Process Automation
Notes

- A complex workflow that is typical of many scientific workflows
  - Follows the "run simulation, move data, analyze data" paradigm
- Much can be done with a set of generic actors:
  - Expression actor
  - Ssh2Exec actor
  - BooleanSwitch & Array actors
- Configuration parameters allow simple adaptation to different environments

44/AHM/Raleigh/Oct 05/v 3 a
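
The point that a few generic, parameterized actors plus configuration go a long way can be illustrated outside Kepler. The Python sketch below mimics an Expression actor, a BooleanSwitch, and an ssh exec actor as plain classes; it is an analogy, not Kepler's actor API, and the configuration keys, host names, and paths are hypothetical. Only the configuration dict changes between environments.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class Expression:
    """Generic actor: applies a parameterized function to each input token."""
    fn: Callable[[Any], Any]

    def fire(self, token: Any) -> Any:
        return self.fn(token)


@dataclass
class BooleanSwitch:
    """Generic actor: routes a token to its 'true' or 'false' output port."""
    predicate: Callable[[Any], bool]

    def fire(self, token: Any) -> Tuple[str, Any]:
        return ("true" if self.predicate(token) else "false", token)


@dataclass
class RemoteExec:
    """Stand-in for an ssh exec actor: builds (does not run) an ssh command line."""
    host: str

    def fire(self, command: str) -> List[str]:
        return ["ssh", self.host, command]


def build_actors(config: dict):
    """Instantiate the same generic actors for whatever environment `config` describes."""
    finished = BooleanSwitch(lambda step: step >= config["last_step"])
    step_file = Expression(lambda step: f"{config['scratch']}/step{step:04d}.bin")
    remote = RemoteExec(config["host"])
    return finished, step_file, remote


# Hypothetical environments: only the parameters differ, the actors are identical.
CLUSTER_A = {"host": "login.cluster-a.example", "scratch": "/scratch/run1", "last_step": 3}
CLUSTER_B = {"host": "login.cluster-b.example", "scratch": "/tmp/run1", "last_step": 10}
```

The returned command list could be handed to `subprocess.run` in a real deployment; keeping it as data here makes the sketch testable without a remote host.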

Scientific Data Management Center – Scientific Process Automation
Notes (Blondin)
The W/F support system needs to be:

1. Flexible: everything changes often! If such tools are to be used by application scientists, they need to be easy to reconfigure.
2. Detachable: for one reason or another, one component may not work (network unusable, disks full on the local end), so one would like to fire up individual parts of the workflow as needed.
3. Fault-tolerant: ideally, the software itself can recognize some faults and correct them (e.g., re-attempt a file upload).

45/AHM/Raleigh/Oct 05/v 3 a
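
The fault-tolerance item ("re-attempt file upload") is commonly implemented as retry with exponential backoff. A Python sketch follows; the retried operation, attempt count, and delays are placeholder assumptions, and `sleep` is injectable so the behavior can be tested without actually waiting.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(op: Callable[[], T],
          attempts: int = 3,
          base_delay: float = 1.0,
          retry_on: Tuple[Type[BaseException], ...] = (OSError,),
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call op(); on a listed exception wait base_delay * 2**k seconds and retry.

    After the last attempt the exception propagates, so the surrounding workflow
    can still see (and report) faults that retrying could not fix.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for k in range(attempts):
        try:
            return op()
        except retry_on:
            if k == attempts - 1:
                raise
            sleep(base_delay * 2 ** k)
    raise AssertionError("unreachable")
```

Usage would look like `retry(lambda: upload(path), attempts=5)`, where `upload` is a hypothetical transfer function. `ConnectionError` and `TimeoutError` are subclasses of `OSError`, so typical network faults are retried by default while programming errors are not.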

Scientific Data Management Center – Scientific Process Automation
Key Issue

- It is very important to distinguish between a custom-made workflow solution and a more canonical set of operations, methods, and solutions that can be composed into a scientific workflow.
- Complexity, skill level needed to implement, usability, maintainability, “standardization”
  - e.g., sort, uniq, grep, ftp, ssh on Unix boxes
  - SAS (that can do sorting), home-made sort
  - LORS, SABUL, bbcp (free, but not standard), etc.

46/AHM/Raleigh/Oct 05/v 3 a
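
The contrast between a custom solution and composable canonical operations is the Unix pipeline idea (`grep PATTERN | sort | uniq -c`). The Python sketch below rebuilds those three operations as small functions and composes them over a hypothetical workflow log; it illustrates the composition style only and is not a replacement for the real tools.

```python
import re
from typing import Iterable, Iterator, List, Tuple


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Like `grep PATTERN`: keep lines that contain a regex match."""
    rx = re.compile(pattern)
    return (line for line in lines if rx.search(line))


def sort(lines: Iterable[str]) -> List[str]:
    """Like `sort`: lexical order."""
    return sorted(lines)


def uniq_c(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Like `uniq -c`: count runs of adjacent identical lines (hence sort first)."""
    counts: List[Tuple[int, str]] = []
    for line in lines:
        if counts and counts[-1][1] == line:
            counts[-1] = (counts[-1][0] + 1, line)
        else:
            counts.append((1, line))
    return counts


# Hypothetical workflow log lines.
log = ["FAIL transfer", "ok merge", "FAIL viz", "FAIL transfer", "ok viz"]
summary = uniq_c(sort(grep(r"^FAIL", log)))
```

Here `summary` is `[(2, 'FAIL transfer'), (1, 'FAIL viz')]`. Each piece is trivial on its own; the value comes from being able to recombine them without writing a new custom tool.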

Scientific Data Management Center – Scientific Process Automation
Overview

- From Art to Commodity
- Component-based System Engineering
- Kepler vs. CCA vs. ???
- Domain Specific
- Virtualization and Service-based System Engineering

47/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Make everything into a Service

- Network-based and on-demand
- Complements an access/communication unit of choice
- Utility-like: ubiquitous, reliable, available, maintainable
- Nearly device-independent
- May be application-level or of smaller granularity (e.g., functions, web services, grid services)

48/AHM/Raleigh/Oct 05/v 3 a

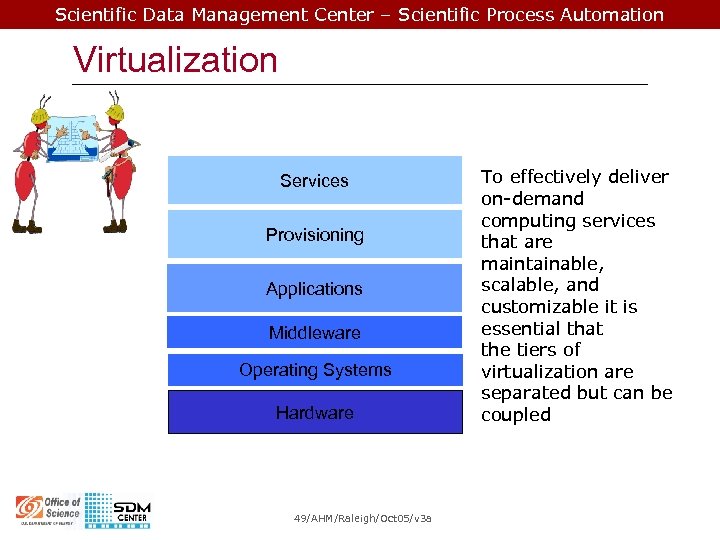

Scientific Data Management Center – Scientific Process Automation
Virtualization
Tiers (diagram): Services Provisioning, Applications, Middleware, Operating Systems, Hardware.
To effectively deliver on-demand computing services that are maintainable, scalable, and customizable, it is essential that the tiers of virtualization be kept separate but remain able to be coupled.
49/AHM/Raleigh/Oct 05/v 3 a

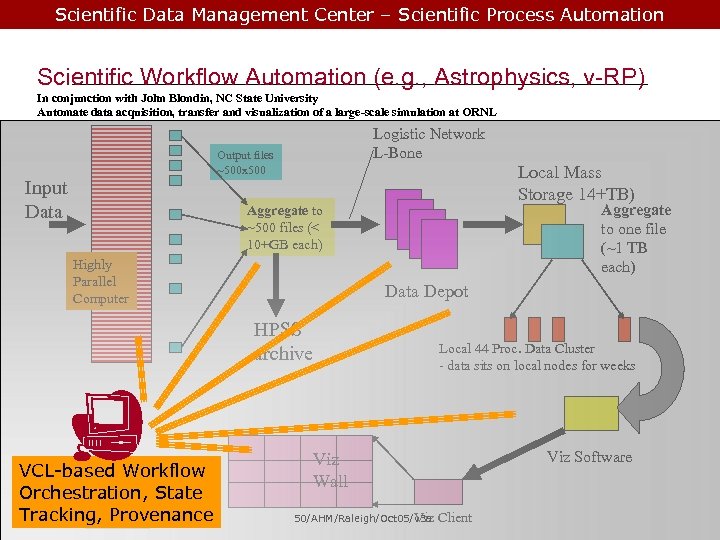

Scientific Data Management Center – Scientific Process Automation
Scientific Workflow Automation (e.g., Astrophysics, v-RP)
In conjunction with John Blondin, NC State University. Automate data acquisition, transfer, and visualization of a large-scale simulation at ORNL.
Diagram labels: Input Data; Highly Parallel Computer; Output files ~500 x 500; Aggregate to ~500 files (< 10+ GB each); Aggregate to one file (~1 TB each); Logistic Network L-Bone; Local Mass Storage (14+ TB); Data Depot; HPSS archive; VCL-based Workflow Orchestration, State Tracking, Provenance; Local 44 Proc. Data Cluster (data sits on local nodes for weeks); Viz Software; Viz Wall; Viz Client.
50/AHM/Raleigh/Oct 05/v 3 a
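
One common ingredient of state tracking and provenance is a per-step record of parameters plus content hashes of inputs and outputs, so a later reader can tell exactly which data a step consumed and produced. The sketch below uses `hashlib.sha256` and an append-only JSON Lines log; the record layout is a hypothetical example, not the VCL or Kepler provenance format.

```python
import hashlib
import json
import time
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so very large files are never read into memory at once."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            h.update(block)
    return h.hexdigest()


def provenance_record(step, params, inputs, outputs):
    """Build a JSON-serializable record for one workflow step (hypothetical layout)."""
    return {
        "step": step,
        "time": time.time(),
        "params": params,
        "inputs": {str(p): sha256_of(p) for p in inputs},
        "outputs": {str(p): sha256_of(p) for p in outputs},
    }


def append_record(log_path, record):
    """Append one record per line (JSON Lines) to an append-only provenance log."""
    with Path(log_path).open("a") as log:
        log.write(json.dumps(record) + "\n")
```

Hashing a ~1 TB file takes real time, so in practice a workflow might hash once at creation and reuse the digest at each later step.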

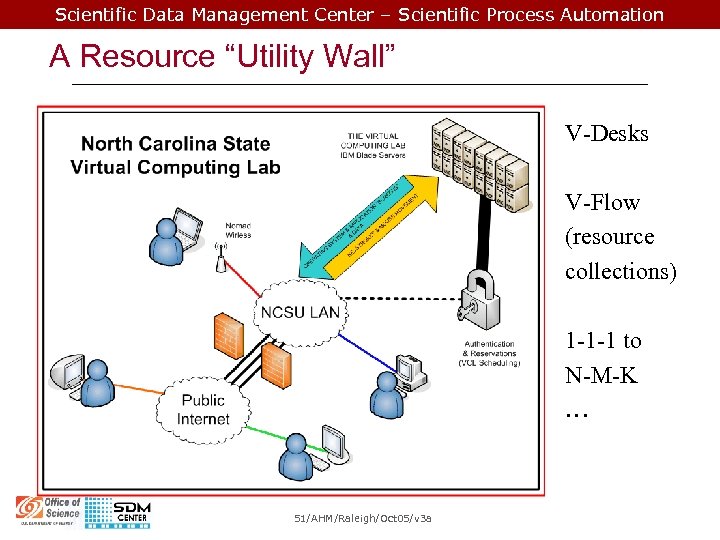

Scientific Data Management Center – Scientific Process Automation
A Resource “Utility Wall”
V-Desks, V-Flow (resource collections): 1-1-1 to N-M-K …
51/AHM/Raleigh/Oct 05/v 3 a

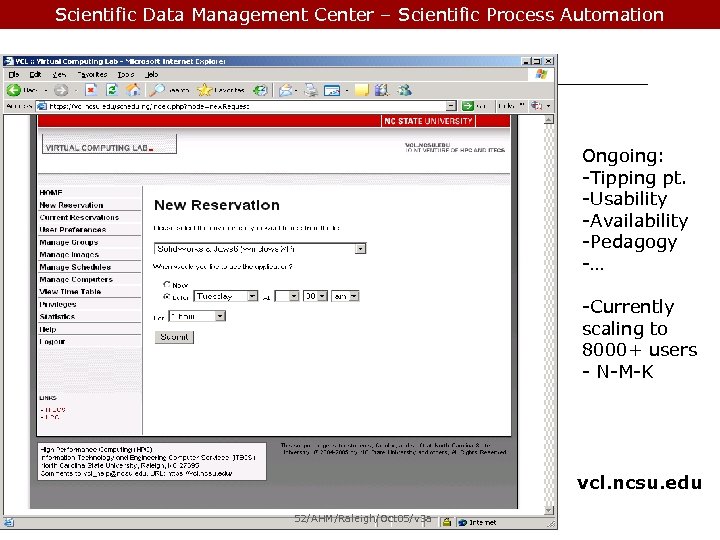

Scientific Data Management Center – Scientific Process Automation
Ongoing:

- Tipping point
- Usability
- Availability
- Pedagogy
- …
- Currently scaling to 8000+ users
- N-M-K

vcl.ncsu.edu
52/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Advantages (2)

- Easy-to-use remote access from one's own desktop or mobile computer in homes, offices, or the local coffee house, bringing the "lab" to you
- Full access to a dedicated computing resource (some scheduling choices include monitored root or administrator access); this access is the same as, or greater than, what is possible in a physical computing laboratory
- Vendor-standard remote access protocols and client software; eliminates the need for specialized customization of one's own computer and eases updates and maintenance
- Platform agnostic (Macs, Win, Linux, …)
- Extensible to any remotely accessible desktop systems in specialized campus labs; departments can bring their lab to their students
- Protection of intellectual property
- Data provenance and tracking
- Fault tolerance
- Higher security

53/AHM/Raleigh/Oct 05/v 3 a

Scientific Data Management Center – Scientific Process Automation
Issues (1)

- Communication coupling (loose, tight, very tight, code-level) and granularity (fine, medium?, coarse)
- Communication methods (e.g., ssh tunnels, xmlrpc, snmp, web/grid services); e.g., apparently poor support for Cray
- Storage issues (e.g., p-netcdf support, bandwidth)
- Direct and indirect data flows (functionality, throughput, delays, other QoS parameters)
- End-to-end performance
- Level of abstraction
- Workflow description language(s) and exchange issues: interoperability
- “Standard” scientific computing “W/F functions”

54/AHM/Raleigh/Oct 05/v 3 a
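
As an example of one listed communication method, Python's standard library includes an XML-RPC server and client. The sketch below exposes two hypothetical workflow-control functions (`status`, `stop`) at function granularity. It binds to 127.0.0.1, so a remote site would need something like an ssh tunnel to reach it, and it provides no authentication of its own.

```python
import threading
from xmlrpc.server import SimpleXMLRPCServer

_state = {"status": "running"}  # hypothetical in-process workflow state


def status():
    return _state["status"]


def stop():
    _state["status"] = "stopped"
    return True


def start_control_server(port=0):
    """Serve status/stop over XML-RPC on localhost in a background thread.

    port=0 lets the OS pick a free port; read it back from server.server_address.
    """
    server = SimpleXMLRPCServer(("127.0.0.1", port), logRequests=False)
    server.register_function(status)
    server.register_function(stop)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

A client calls `xmlrpc.client.ServerProxy("http://127.0.0.1:<port>").status()`; this is a loose, coarse-to-medium-grained coupling compared with linking workflow code directly.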

Scientific Data Management Center – Scientific Process Automation
Issues (2)

- The problem is currently similar to old-time punched-card job submissions (long turn-around time; can be expensive due to the front-end computational resource I/O bottleneck): up-front verification and validation are needed, and things will change
- Back-end bottleneck due to hierarchical storage issues (e.g., retrieval from HPSS)
- Long-term workflow state preservation: needed
- Recovery (transfers, other failures): more needed
- Tracking data and files, provenance
- Who maintains equipment, storage, data, scripts, workflow elements?
- Elegant solutions may not be good solutions from the perspective of autonomy.
- EXTREMELY IMPORTANT!!! We are trying to get out of the business of totally custom-made solutions.

55/AHM/Raleigh/Oct 05/v 3 a
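
For long-term workflow state preservation and recovery, a minimal pattern is a checkpoint file that records completed steps. It is written atomically so a crash never leaves it half-written, and a restarted run skips whatever already finished. The Python sketch below assumes steps are idempotent named callables and uses a hypothetical JSON state file; it does not handle partial progress inside a step (e.g., a half-finished transfer).

```python
import json
import os
from pathlib import Path
from typing import Callable, List, Tuple


class Checkpoint:
    """Persist the names of completed steps so a restarted workflow can resume."""

    def __init__(self, path):
        self.path = Path(path)
        self.done = set()
        if self.path.exists():
            self.done = set(json.loads(self.path.read_text())["done"])

    def mark_done(self, step: str) -> None:
        self.done.add(step)
        tmp = self.path.with_name(self.path.name + ".tmp")
        tmp.write_text(json.dumps({"done": sorted(self.done)}))
        os.replace(tmp, self.path)  # atomic on POSIX: readers never see a half-written file


def run_with_checkpoint(steps: List[Tuple[str, Callable[[], None]]], ckpt: Checkpoint) -> List[str]:
    """Run steps in order, skipping those already recorded as done; return what ran now."""
    executed = []
    for name, action in steps:
        if name in ckpt.done:
            continue
        action()  # an exception here stops the run; completed steps stay recorded
        ckpt.mark_done(name)
        executed.append(name)
    return executed
```

If the transfer step fails, rerunning with a fresh `Checkpoint` on the same file resumes at the transfer instead of repeating the merge.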

Scientific Data Management Center – Scientific Process Automation 56/AHM/Raleigh/Oct 05/v 3 a