
- Number of slides: 21

SCARIe / FABRIC: A pilot study of distributed correlation

Huib Jan van Langevelde, Ruud Oerlemans, Nico Kruithof, Sergei Pogrebenko and many others…

What correlators do…

- Synthesis imaging simulates a very large telescope
  - by measuring Fourier components of the sky brightness
  - on each baseline pair
- Sensitivity is proportional to √bandwidth
  - optimal use of the available recording bandwidth
  - by sampling 2 bits (4 levels) at the Nyquist rate
- The correlator calculates ½N(N−1) baseline outputs
  - after compensating for the geometry of the array
- It integrates the output signal down to something relatively slow
  - and samples it with delay/frequency resolution

huib, 02/11/06, GigaPort meeting SURF, Utrecht, 2 Nov 2006
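The chain on this slide can be illustrated with a toy simulation: compensate the geometry, correlate every pair, then integrate. This is a minimal sketch under stated assumptions, not the JIVE implementation. It assumes a white-noise sky signal and integer-sample geometric delays that are known exactly. It also assumes a 4-level quantizer with levels ±1/±3 and a threshold of 1.0, and it keeps only the zero-lag product of each baseline. All function names are hypothetical.

```python
import itertools
import math
import random

def quantize_2bit(x, threshold=1.0):
    """4-level (2-bit) quantizer. Levels -3/-1/+1/+3 and the threshold are assumptions."""
    if x >= 0:
        return 3 if x > threshold else 1
    return -3 if x < -threshold else -1

def simulate_stations(delays, n_samples, signal_amplitude=1.0, seed=1):
    """Every station records the same white 'sky' signal, arriving d samples late,
    plus its own independent receiver noise, and 2-bit samples the sum."""
    rng = random.Random(seed)
    max_delay = max(delays)
    sky = [rng.gauss(0.0, 1.0) for _ in range(n_samples + max_delay)]
    return [
        [quantize_2bit(signal_amplitude * sky[i + max_delay - d] + rng.gauss(0.0, 1.0))
         for i in range(n_samples)]
        for d in delays
    ]

def correlate(streams, delays, compensate=True):
    """Zero-lag normalized correlation, integrated over the whole stream,
    for each of the N(N-1)/2 baselines."""
    n_out = len(streams[0]) - max(delays)
    shifts = delays if compensate else [0] * len(delays)
    # Geometry compensation: advance each stream by its (known) delay.
    aligned = [s[k:k + n_out] for s, k in zip(streams, shifts)]
    result = {}
    for a, b in itertools.combinations(range(len(streams)), 2):
        xa, xb = aligned[a], aligned[b]
        acc = sum(p * q for p, q in zip(xa, xb))  # integrate the product
        norm = math.sqrt(sum(p * p for p in xa) * sum(q * q for q in xb))
        result[(a, b)] = acc / norm
    return result

delays = [0, 3, 7, 12]  # hypothetical geometric delays, in samples
streams = simulate_stations(delays, n_samples=20000)
compensated = correlate(streams, delays)
uncompensated = correlate(streams, delays, compensate=False)
for baseline, rho in compensated.items():
    print(baseline, round(rho, 3), round(uncompensated[baseline], 3))
```

With 4 stations this gives 6 baselines. The delay-compensated coefficients come out clearly positive, while the uncompensated ones scatter around zero. Without the geometric model, the common signal simply does not line up.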

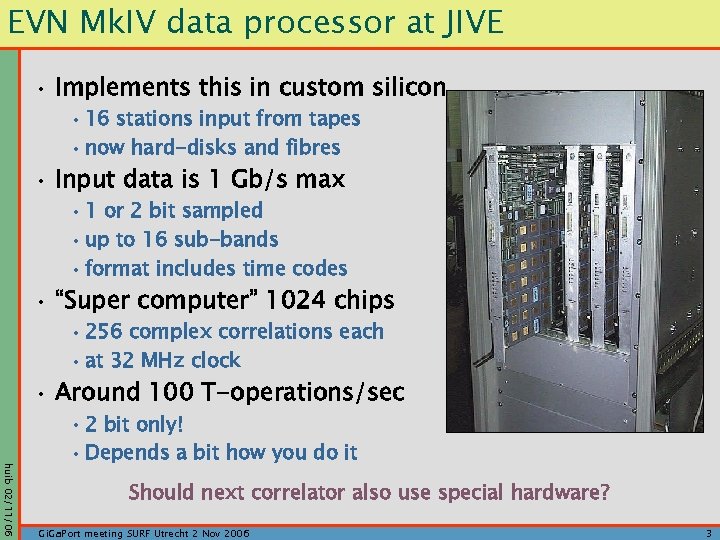

EVN Mk IV data processor at JIVE

- Implements this in custom silicon
- 16 station inputs from tapes
  - now hard disks and fibres
- Input data is 1 Gb/s max
  - 1- or 2-bit sampled
  - up to 16 sub-bands
  - format includes time codes
- "Super computer" of 1024 chips
  - 256 complex correlations each
  - at 32 MHz clock
- Around 100 T-operations/sec
  - 2 bit only!
  - Depends a bit how you do it

Should the next correlator also use special hardware?
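The figures on this slide can be combined into a throughput number. The sketch only multiplies the quoted values. The "operations per complex correlation" it derives is simply whatever makes the quoted ~100 T-operations/s come out. It is not a documented property of the chip; as the slide says, it depends on how you count.

```python
CHIPS = 1024
CORRELATIONS_PER_CHIP = 256  # complex correlations per chip (from the slide)
CLOCK_HZ = 32_000_000        # 32 MHz clock

complex_correlations_per_s = CHIPS * CORRELATIONS_PER_CHIP * CLOCK_HZ
quoted_ops_per_s = 100e12    # "around 100 T-operations/sec"
implied_ops_per_correlation = quoted_ops_per_s / complex_correlations_per_s

print(f"{complex_correlations_per_s:.3e} complex correlations/s")
print(f"~{implied_ops_per_correlation:.1f} operations per complex correlation implied")
```

This gives about 8.4 × 10¹² complex correlations per second. The quoted 100 T-operations/s therefore corresponds to roughly a dozen 2-bit operations per complex correlation.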

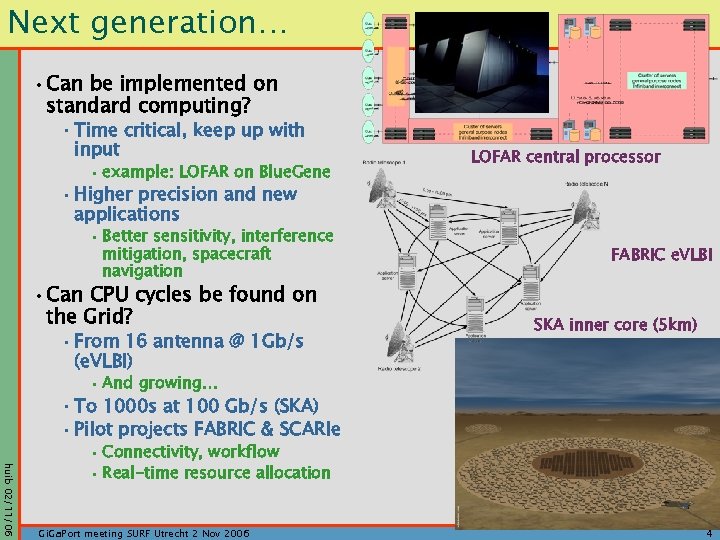

Next generation…

- Can it be implemented on standard computing?
  - Time critical: must keep up with the input
  - example: LOFAR on BlueGene
- Higher precision and new applications
  - Better sensitivity, interference mitigation, spacecraft navigation
- Can CPU cycles be found on the Grid?
- From 16 antennas @ 1 Gb/s (e-VLBI)
  - And growing…
  - To 1000s at 100 Gb/s (SKA)
- Pilot projects FABRIC & SCARIe
  - Connectivity, workflow
  - Real-time resource allocation

(Figure labels: LOFAR central processor; FABRIC; e-VLBI; SKA inner core (5 km))

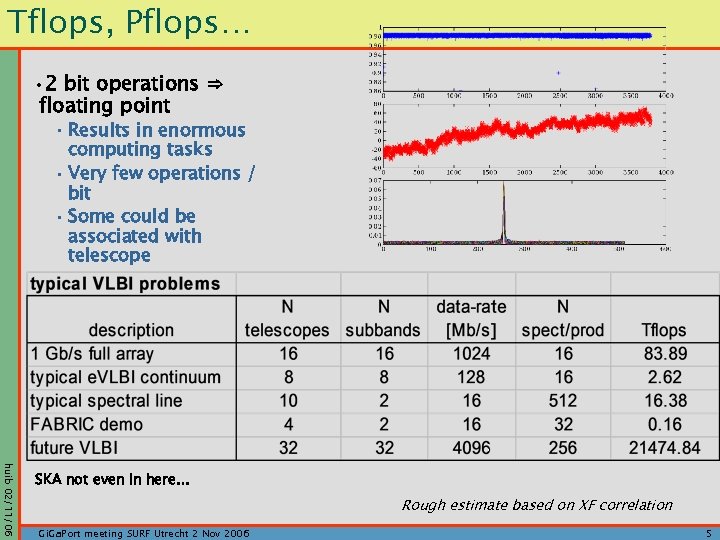

Tflops, Pflops…

- 2-bit operations ⇒ floating point
- Results in enormous computing tasks
  - Very few operations per bit
  - Some could be associated with the telescope

Rough estimate based on XF correlation; SKA not even in here…
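Here is a rough XF cost model in the spirit of this slide. In an XF (lag) correlator, every baseline needs one multiply-accumulate per lag per sample. The station counts, data rates and 2-bit sampling come from the previous slides. The 1024 lags, counting a multiply-accumulate as 2 operations, and ignoring autocorrelations are assumptions for illustration only.

```python
def n_baselines(n_stations):
    """Number of cross-correlation products, N(N-1)/2."""
    return n_stations * (n_stations - 1) // 2

def xf_ops_per_second(n_stations, data_rate_bps, bits_per_sample, n_lags, ops_per_mac=2):
    """Rough XF estimate: baselines x lags x samples/s x operations per multiply-accumulate."""
    samples_per_s = data_rate_bps // bits_per_sample
    return n_baselines(n_stations) * n_lags * samples_per_s * ops_per_mac

N_LAGS = 1024  # hypothetical spectral resolution

scenarios = {
    # name: (stations, data rate per station in bit/s)
    "e-VLBI today": (16, 10**9),
    "SKA-like": (1000, 100 * 10**9),
}
estimates = {}
for name, (n, rate) in scenarios.items():
    estimates[name] = xf_ops_per_second(n, rate, bits_per_sample=2, n_lags=N_LAGS)
    print(f"{name}: input {n * rate / 1e9:,.0f} Gb/s, {n_baselines(n):,} baselines, "
          f"~{estimates[name]:.2e} ops/s")
```

Under these assumptions the e-VLBI case lands at about 1.2 × 10¹⁴ operations/s, and the SKA-like case at about 5 × 10¹⁹. Because the baseline count grows as N², most of that jump comes from the station count rather than the data rate.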

SCARIe / FABRIC

- EC-funded project EXPReS (03/2006)
  - To turn e-VLBI into an operational system
  - Plus a Joint Research Activity: FABRIC
    - Future Arrays of Broadband Radio-telescopes on Internet Computing
    - One work package on 4 Gb/s data acquisition and transport (Jodrell Bank, Metsahovi, Onsala, Bonn, ASTRON)
    - One work package on distributed correlation (JIVE, PSNC Poznan)
- Dutch NWO-funded project SCARIe (10/2006)
  - Software Correlator Architecture Research and Implementation for e-VLBI
  - Collaboration with SARA and UvA
  - Use the Dutch Grid with configurable high connectivity: StarPlane
  - Software correlation with data originating from JIVE
- Complementary projects with matching funding
  - International and national expertise from other partners
  - Total of 9 man-years at JIVE, plus some matching from staff
  - plus a similar amount at partners

Aim of the project

- Research the possibility of distributed correlation
  - Using the Grid for getting the CPU cycles
  - Can it be employed for next-generation VLBI correlation?
- Exercise the advantages of software correlation
  - Using floating-point accuracy and special filtering
- Explore (push) the boundaries of the Grid paradigm
  - "Real-time" applications, data transfer limitations
- To lead to a modest-size demo
  - With some possible real applications:
    - Monitoring EVN network performance
    - Continuously available e-VLBI network with few telescopes
    - Monitoring transient sources
    - Astrometry, possibly of spectral line sources
    - Special correlator modes: spacecraft navigation, pulsar gating
    - Test bed for broadband e-VLBI research

Something to try on the roadmap for the next-generation correlator, even if you do not believe it is the solution…

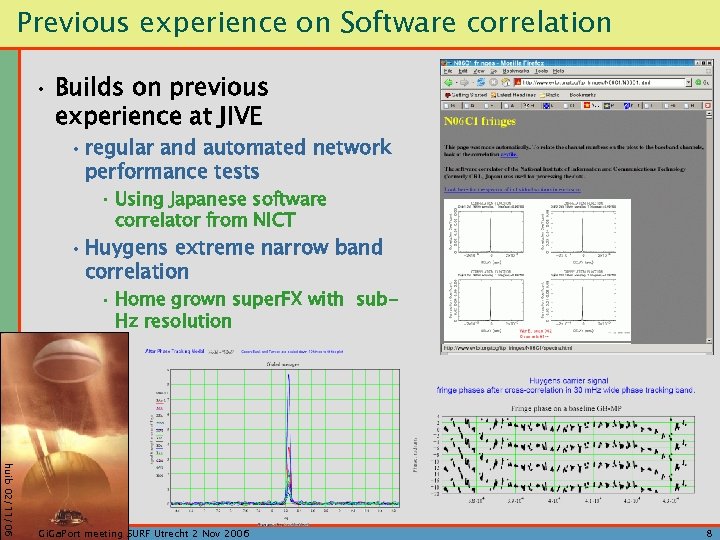

Previous experience with software correlation

- Builds on previous experience at JIVE
- Regular and automated network performance tests
  - Using the Japanese software correlator from NICT
- Huygens extreme narrow-band correlation
  - Home-grown superFX with sub-Hz resolution

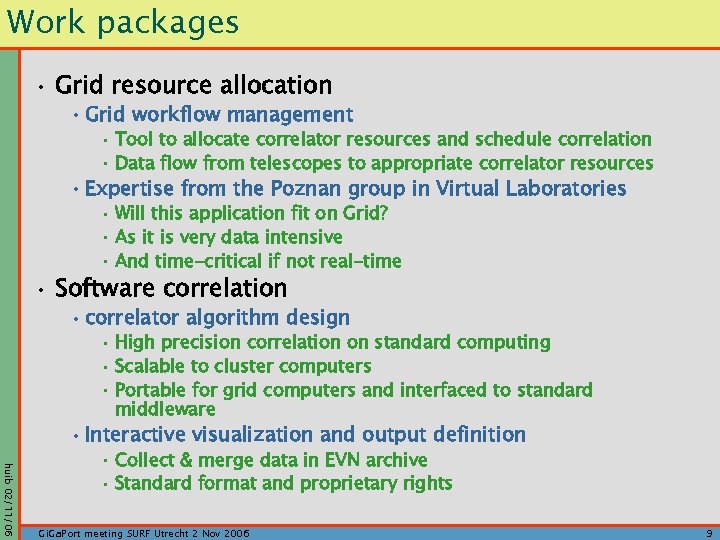

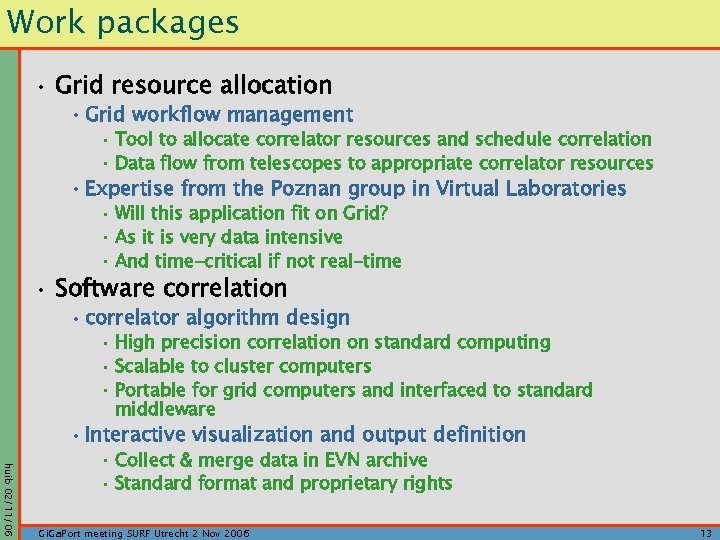

Work packages

- Grid resource allocation
  - Grid workflow management
  - Tool to allocate correlator resources and schedule correlation
  - Data flow from telescopes to appropriate correlator resources
  - Expertise from the Poznan group in Virtual Laboratories
  - Will this application fit on the Grid?
    - As it is very data intensive
    - And time-critical, if not real-time
- Software correlation
  - Correlator algorithm design
  - High-precision correlation on standard computing
  - Scalable to cluster computers
  - Portable to grid computers and interfaced to standard middleware
  - Interactive visualization and output definition
- Collect & merge data in the EVN archive
  - Standard format and proprietary rights

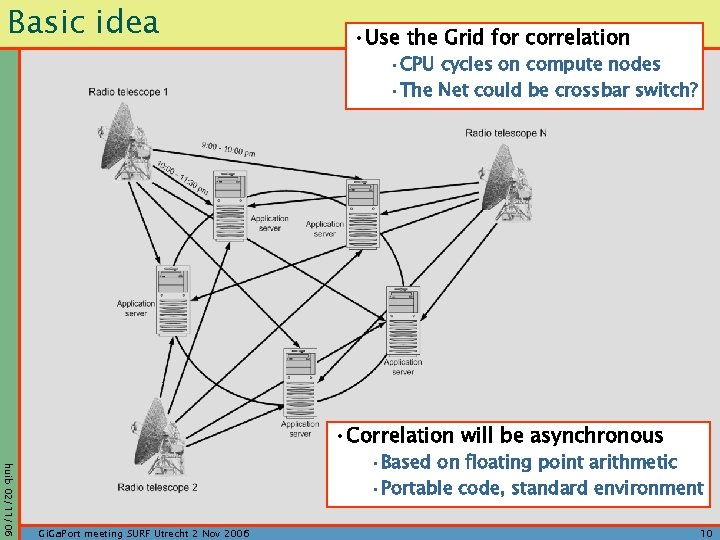

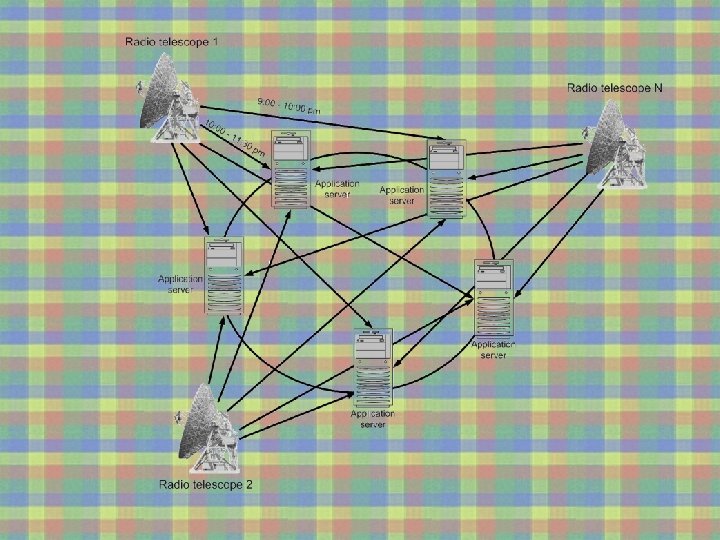

Basic idea

- Use the Grid for correlation
  - CPU cycles on compute nodes
  - Could the Net be the crossbar switch?
- Correlation will be asynchronous
  - Based on floating-point arithmetic
  - Portable code, standard environment

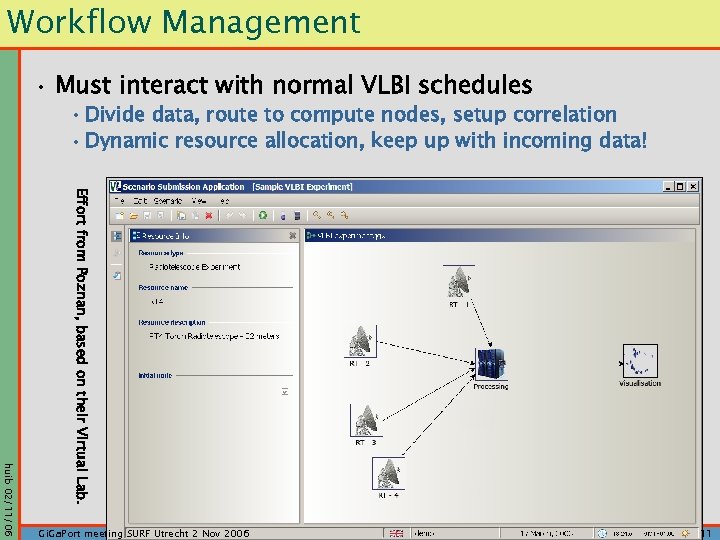

Workflow management

- Must interact with normal VLBI schedules
- Divide data, route to compute nodes, set up correlation
- Dynamic resource allocation: keep up with incoming data!

Effort from Poznan, based on their Virtual Lab.

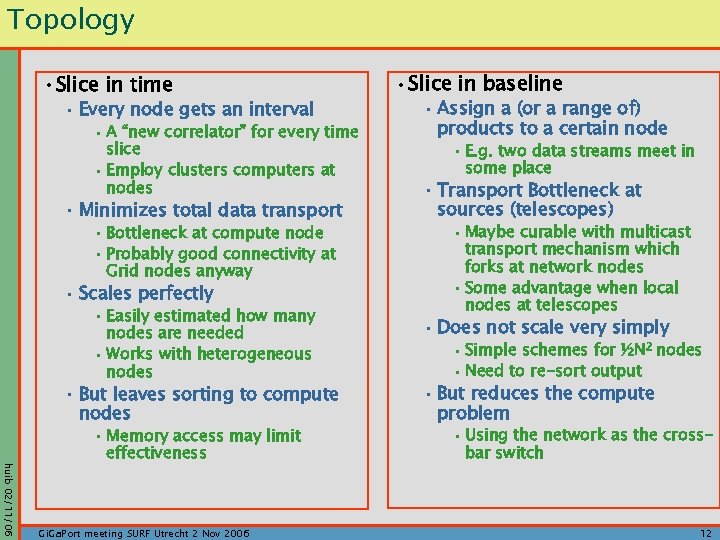

Topology

Slice in time
- Every node gets an interval
  - A "new correlator" for every time slice
  - Employ cluster computers at nodes
- Minimizes total data transport
  - Bottleneck at the compute node
  - Probably good connectivity at Grid nodes anyway
- Scales perfectly
  - Easy to estimate how many nodes are needed
  - Works with heterogeneous nodes
- But leaves sorting to the compute nodes
  - Memory access may limit effectiveness

Slice in baseline
- Assign one product (or a range of products) to a certain node
  - E.g. two data streams meet in some place
- Transport bottleneck at the sources (telescopes)
  - Maybe curable with a multicast transport mechanism that forks at network nodes
  - Some advantage when there are local nodes at the telescopes
- Does not scale very simply
  - Simple schemes for ½N² nodes
  - Need to re-sort the output
- But reduces the compute problem
  - Using the network as the crossbar switch
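The two topologies can be compared with a small planning sketch. It assumes round-robin assignment (time slices to nodes, or baselines to nodes) and a known processing time per slice. Neither the scheduling policy nor the function names come from the project; they are hypothetical. The fan-out count makes the "transport bottleneck at the sources" concrete. With time slicing, each station's data for a slice goes to exactly one node. With baseline slicing, it goes to every node that holds one of its baselines.

```python
import itertools
import math

def nodes_needed(slice_seconds, processing_seconds_per_slice):
    """Time slicing: with round-robin dispatch a node gets a new slice every
    n * slice_seconds, so real time needs ceil(processing / slice length) nodes."""
    return math.ceil(processing_seconds_per_slice / slice_seconds)

def assign_time_slices(n_slices, n_nodes):
    """Round-robin: slice i (all stations, one interval) goes to node i mod n_nodes."""
    return {i: i % n_nodes for i in range(n_slices)}

def assign_baselines(n_stations, n_nodes):
    """Baseline slicing: spread the N(N-1)/2 products round-robin over the nodes."""
    plan = {node: [] for node in range(n_nodes)}
    for k, baseline in enumerate(itertools.combinations(range(n_stations), 2)):
        plan[k % n_nodes].append(baseline)
    return plan

def station_fanout(plan, n_stations):
    """How many nodes each station must send its full data stream to."""
    fanout = [0] * n_stations
    for baselines in plan.values():
        for station in {s for bl in baselines for s in bl}:
            fanout[station] += 1
    return fanout

print(nodes_needed(slice_seconds=1.0, processing_seconds_per_slice=7.5))  # 8 nodes
print(assign_time_slices(n_slices=10, n_nodes=4))
print(station_fanout(assign_baselines(16, 8), 16))
print(station_fanout(assign_baselines(16, 120), 16))  # one baseline per node: N-1 copies each
```

With one baseline per node (the ½N² scheme), every station has to ship its stream N−1 times. Time slicing ships it once. That is the trade-off between the compute bottleneck and the transport bottleneck listed above.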


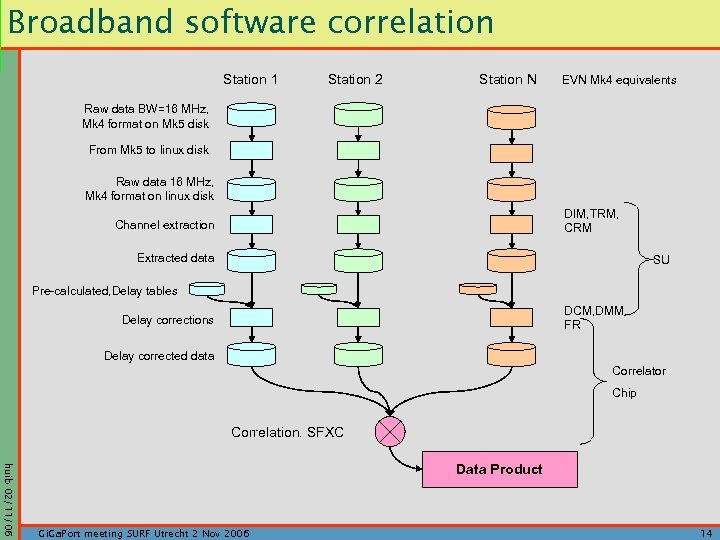

Broadband software correlation

Processing chain, run for each of Station 1, Station 2, … Station N (EVN Mk4 hardware equivalents in parentheses):

- Raw data: BW = 16 MHz, Mk4 format on Mk5 disk
- Copy from Mk5 to Linux disk → raw data: 16 MHz, Mk4 format on Linux disk
- Channel extraction (DIM, TRM, CRM) → extracted data (SU)
- Delay corrections using pre-calculated delay tables (DCM, DMM, FR) → delay-corrected data
- Correlation in SFXC (correlator chip) → data product
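The chain above ends in an FX-style correlation: delay-correct, Fourier-transform segments, cross-multiply, and integrate. The sketch below shows that generic FX scheme in plain Python; it is not SFXC's actual code. It assumes a single, known integer-sample delay standing in for the pre-calculated delay tables. It skips channel extraction and sub-sample delay correction, and uses a hand-written radix-2 FFT so it needs only the standard library. Transforming the integrated cross-spectrum back to the lag domain shows where the correlation peak sits before and after the delay correction.

```python
import cmath
import random

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(spectrum):
    """Inverse FFT via the conjugation identity."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([s.conjugate() for s in spectrum])]

def fx_correlate(a, b, n_fft):
    """F then X: FFT each segment, multiply A * conj(B), integrate over segments."""
    acc = [0j] * n_fft
    for start in range(0, min(len(a), len(b)) - n_fft + 1, n_fft):
        spec_a = fft(a[start:start + n_fft])
        spec_b = fft(b[start:start + n_fft])
        for k in range(n_fft):
            acc[k] += spec_a[k] * spec_b[k].conjugate()
    return acc

def peak_lag(cross_spectrum):
    """Signed lag (in samples) of the strongest peak in the lag domain."""
    lags = ifft(cross_spectrum)
    n = len(lags)
    m = max(range(n), key=lambda i: abs(lags[i]))
    return m if m <= n // 2 else m - n

rng = random.Random(7)
N_FFT, DELAY, N_SAMPLES = 64, 5, 64 * 100  # hypothetical values
sky = [rng.gauss(0.0, 1.0) for _ in range(N_SAMPLES + DELAY)]
station_a = [sky[i + DELAY] + rng.gauss(0.0, 1.0) for i in range(N_SAMPLES)]
station_b = [sky[i] + rng.gauss(0.0, 1.0) for i in range(N_SAMPLES)]  # same signal, DELAY samples later

raw_lag = peak_lag(fx_correlate(station_a, station_b, N_FFT))
# Delay correction from a (here trivial) delay model: advance station_b by DELAY samples.
corrected_lag = peak_lag(fx_correlate(station_a[:-DELAY], station_b[DELAY:], N_FFT))
print(raw_lag, corrected_lag)
```

Without correction the peak sits at the geometric delay. After correction it moves to zero lag, which is what the delay-correction stage must achieve before integration. A real correlator must also handle sub-sample and time-varying delays, which this sketch leaves out.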

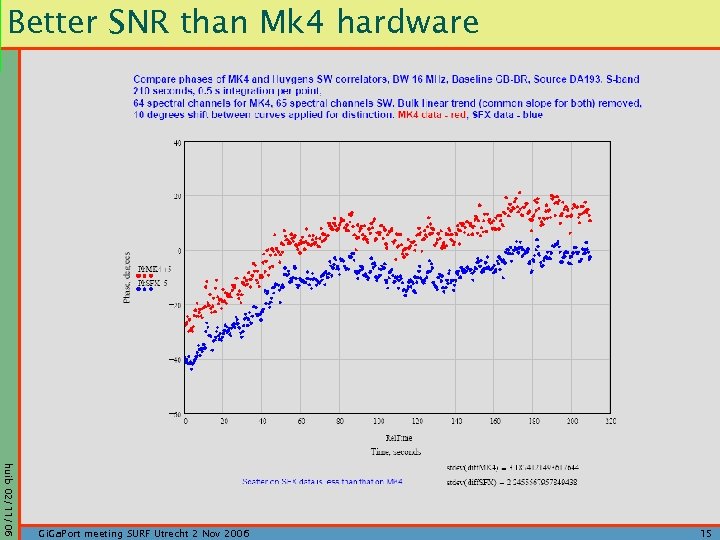

Better SNR than Mk4 hardware

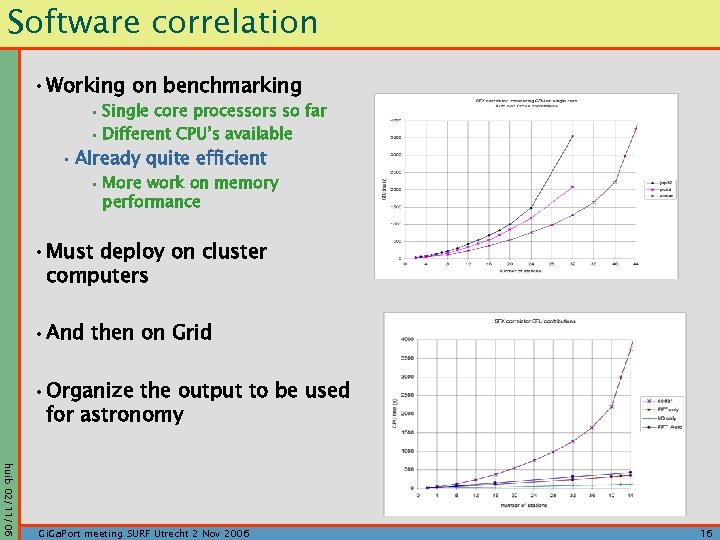

Software correlation

- Working on benchmarking
  - Single-core processors so far
  - Different CPUs available
- Already quite efficient
  - More work on memory performance
- Must deploy on cluster computers
  - And then on the Grid
- Organize the output to be used for astronomy

Side step: Data-intensive processing

- Radio astronomy can be extreme
  - User data sets can be large
    - A few to 100 GB now
    - Larger: LOFAR, e-VLBI, APERTIF, SKA
  - All data enter imaging
    - Iterative calibration schemes
    - Few operations per byte
- Parallel computing: not obviously suited for messaging systems
  - Task (data-oriented) parallelization
- Processing traditionally done interactively on the user platform
  - More and more pipeline approaches
- Addressed in RadioNet
  - Project ALBUS
    - resulted in Python for AIPS
  - Looking for extension in FP7
    - Interoperability with ALMA, LOFAR
    - But for the user domain

NRI e-Sciences, 2 Nov 2006

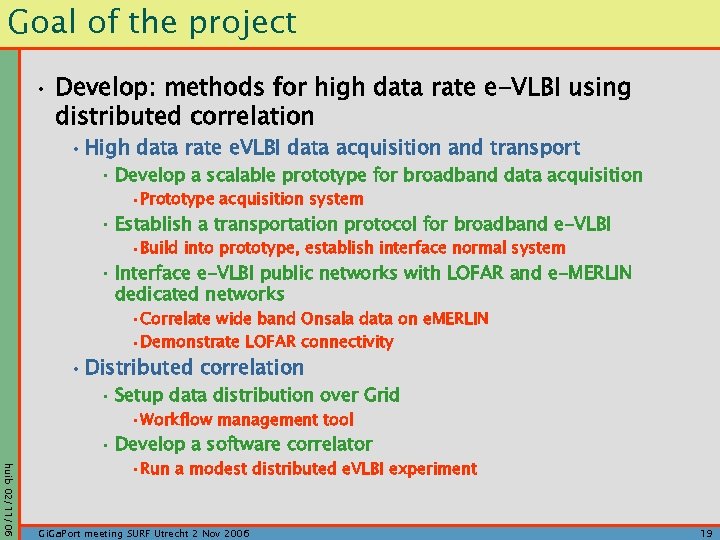

Goal of the project

- Develop methods for high-data-rate e-VLBI using distributed correlation
- High-data-rate e-VLBI data acquisition and transport
  - Develop a scalable prototype for broadband data acquisition
    - Prototype acquisition system
  - Establish a transport protocol for broadband e-VLBI
    - Build it into the prototype, establish an interface to the normal system
  - Interface e-VLBI public networks with LOFAR and e-MERLIN dedicated networks
    - Correlate wide-band Onsala data on e-MERLIN
    - Demonstrate LOFAR connectivity
- Distributed correlation
  - Set up data distribution over the Grid
  - Workflow management tool
  - Develop a software correlator
  - Run a modest distributed e-VLBI experiment

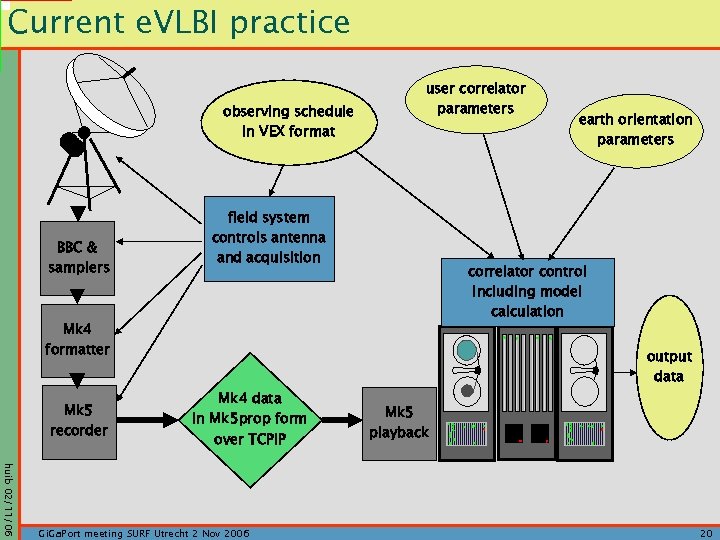

Current e-VLBI practice (diagram)

- Observing schedule in VEX format
- Field system controls antenna and acquisition
- BBC & samplers → Mk4 formatter → Mk5 recorder
- Mk4 data in Mk5 proprietary form over TCP/IP
- Mk5 playback
- Correlator control, including model calculation, with user correlator parameters and earth orientation parameters
- Output data

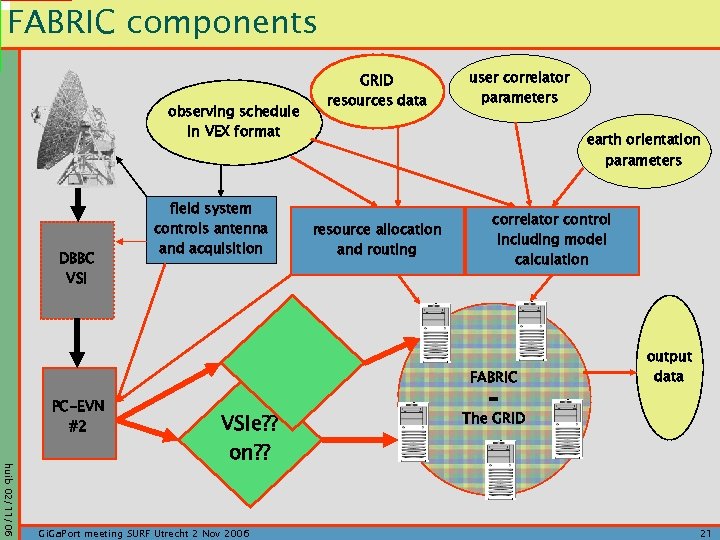

FABRIC components (diagram)

- Observing schedule in VEX format
- Field system controls antenna and acquisition
- DBBC, VSI, PC-EVN #2
- VSIe? ?? on ??
- GRID resources: data; resource allocation and routing
- Correlator control, including model calculation, with user correlator parameters and earth orientation parameters
- Output data

FABRIC = the GRID
