- Number of slides: 12

Scaling for the Future
Katherine Yelick, U.C. Berkeley, EECS
http://iram.cs.berkeley.edu/{istore}
http://www.cs.berkeley.edu/projects/titanium

Two Independent Problems
• Building a reliable, scalable infrastructure
  – Scalable processor, cluster, and wide-area systems
    » IRAM, ISTORE, and OceanStore
• One example application for the infrastructure
  – Microscale simulation of biological systems
    » Model signals from cell membrane to nucleus
    » Useful for understanding disease and for pharmacological and BioMEMS-mediated therapy

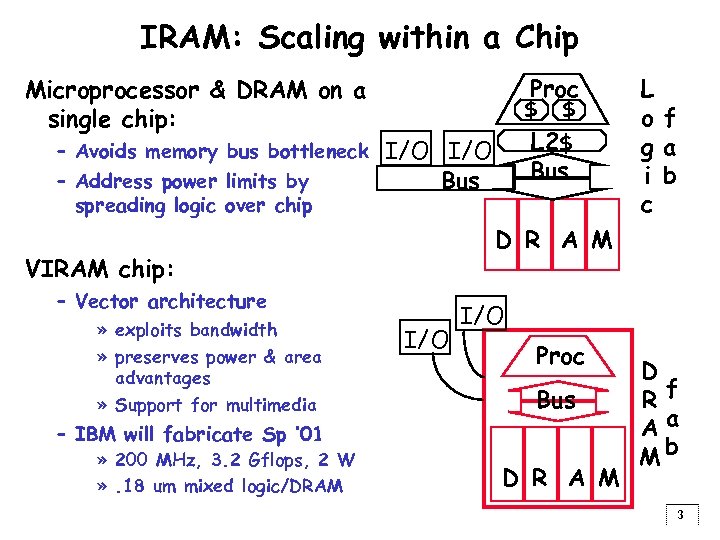

IRAM: Scaling within a Chip
• Microprocessor & DRAM on a single chip:
  – Avoids the memory bus bottleneck
  – Addresses power limits by spreading logic over the chip
• VIRAM chip:
  – Vector architecture
    » Exploits bandwidth
    » Preserves power & area advantages
    » Support for multimedia
  – IBM will fabricate in spring 2001
    » 200 MHz, 3.2 Gflops, 2 W
    » 0.18 µm mixed logic/DRAM
[Figure: Proc, $, L2$, Bus, I/O and DRAM on separate logic-fab and DRAM-fab chips, versus Proc, $, Bus, I/O and DRAM on one IRAM chip]
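The slide's VIRAM numbers imply two derived figures that it does not state. The arithmetic below assumes the quoted 3.2 Gflops is a peak rate reached at the full 200 MHz clock within the 2 W budget:

```python
# Peak VIRAM figures quoted on the slide.
CLOCK_HZ = 200_000_000        # 200 MHz
PEAK_FLOPS = 3_200_000_000    # 3.2 Gflops
POWER_W = 2                   # 2 W

flops_per_cycle = PEAK_FLOPS / CLOCK_HZ        # work the vector unit completes each cycle
gflops_per_watt = PEAK_FLOPS / 1e9 / POWER_W   # energy efficiency at peak

print(f"{flops_per_cycle:.0f} flops/cycle, {gflops_per_watt:.1f} Gflops/W")
# 16 flops/cycle, 1.6 Gflops/W
```

Sixteen operations per cycle is only reachable with wide vector units fed directly from on-chip DRAM, which is the bandwidth argument the slide makes.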

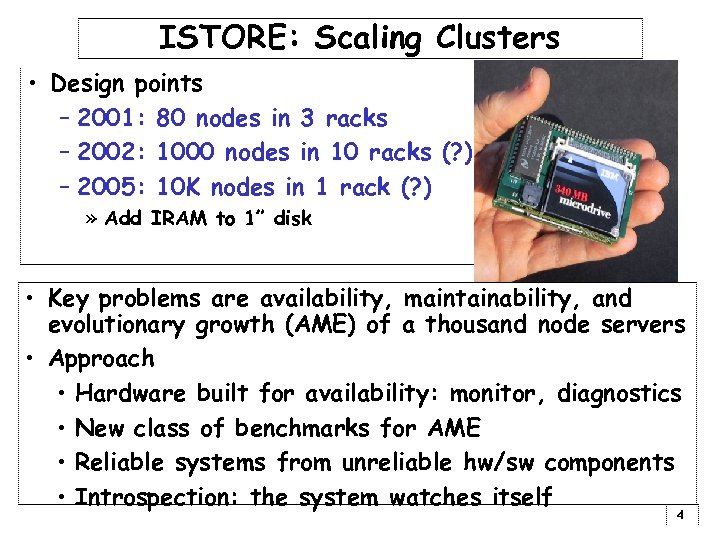

ISTORE: Scaling Clusters
• Design points
  – 2001: 80 nodes in 3 racks
  – 2002: 1000 nodes in 10 racks (?)
  – 2005: 10K nodes in 1 rack (?)
    » Add IRAM to a 1-inch disk
• Key problems are availability, maintainability, and evolutionary growth (AME) of thousand-node servers
• Approach
  – Hardware built for availability: monitor, diagnostics
  – New class of benchmarks for AME
  – Reliable systems from unreliable hw/sw components
  – Introspection: the system watches itself
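"Introspection: the system watches itself" is not specified further here. As a minimal sketch under assumptions, one common building block for it is a heartbeat-based failure detector: each node reports periodically, and any node silent for longer than a timeout is suspected. The class name, timeout, and node names are hypothetical and do not describe ISTORE's actual monitoring hardware or software.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class HeartbeatMonitor:
    """Hypothetical introspection sketch: suspect nodes that stop reporting."""
    timeout_s: float
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, node: str, now: Optional[float] = None) -> None:
        # Record when `node` last reported itself alive (monotonic clock by default).
        self.last_seen[node] = time.monotonic() if now is None else now

    def suspected_failed(self, now: Optional[float] = None) -> List[str]:
        # A node is suspected once it has been silent for longer than the timeout.
        now = time.monotonic() if now is None else now
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout_s)


monitor = HeartbeatMonitor(timeout_s=5.0)
monitor.heartbeat("node-01", now=0.0)
monitor.heartbeat("node-02", now=0.0)
monitor.heartbeat("node-01", now=8.0)      # node-02 stays silent
print(monitor.suspected_failed(now=10.0))  # ['node-02']
```

A timeout only yields suspicion, not proof of failure: a slow or overloaded node looks the same as a dead one, which is why such detectors are usually paired with diagnostics before a node is taken out of service.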

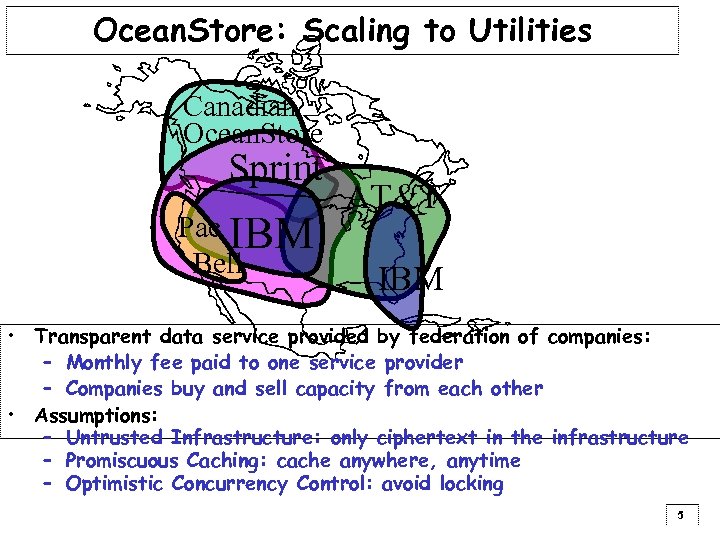

OceanStore: Scaling to Utilities
[Figure: OceanStore as a federation of providers: Canadian OceanStore, Sprint, Pac Bell, AT&T, IBM]
• Transparent data service provided by a federation of companies:
  – Monthly fee paid to one service provider
  – Companies buy and sell capacity from each other
• Assumptions:
  – Untrusted infrastructure: only ciphertext in the infrastructure
  – Promiscuous caching: cache anywhere, anytime
  – Optimistic concurrency control: avoid locking
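Optimistic concurrency control avoids locking by letting writers proceed and detecting conflicts at commit time. A minimal sketch of the generic version-check form follows; it is not OceanStore's actual update mechanism, and `VersionedStore`, `ConflictError`, and the key names are hypothetical.

```python
from typing import Dict, Tuple


class ConflictError(Exception):
    """Raised when a write was based on a version that is no longer current."""


class VersionedStore:
    """Hypothetical sketch of optimistic concurrency control: writers take no
    locks; each write names the version it read and is rejected if stale."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[int, str]] = {}

    def read(self, key: str) -> Tuple[int, str]:
        # Unknown keys start at version 0 with empty contents.
        return self._data.get(key, (0, ""))

    def write(self, key: str, based_on: int, value: str) -> int:
        current, _ = self.read(key)
        if based_on != current:
            # Another writer committed first; the caller must re-read and retry.
            raise ConflictError(f"{key}: read v{based_on}, now v{current}")
        self._data[key] = (current + 1, value)
        return current + 1


store = VersionedStore()
v, _ = store.read("doc")          # clients A and B both read version 0
store.write("doc", v, "A")        # A commits first -> version 1
try:
    store.write("doc", v, "B")    # B's write is based on stale version 0
except ConflictError:
    v, text = store.read("doc")   # B re-reads and retries on top of A's change
    store.write("doc", v, text + "B")
print(store.read("doc"))          # (2, 'AB')
```

This pays off when conflicts are rare, as with widely cached data that is mostly read. The sketch runs in one process; in a distributed store the version check and commit must themselves happen atomically at whichever replica serializes updates.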

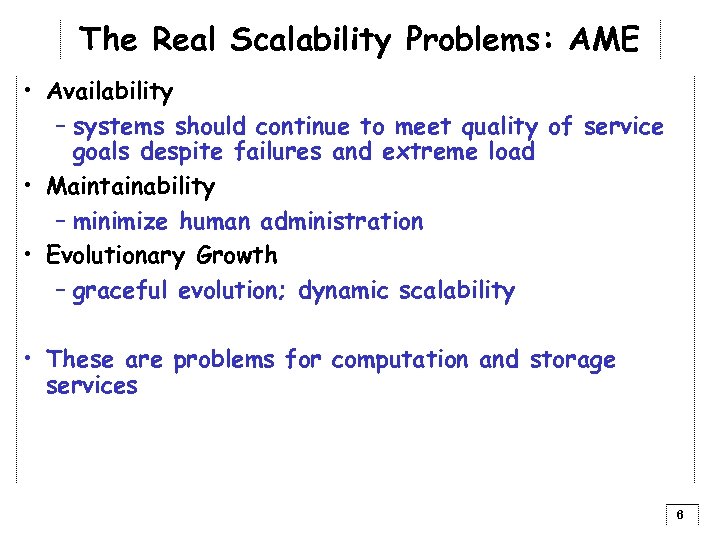

The Real Scalability Problems: AME
• Availability
  – Systems should continue to meet quality-of-service goals despite failures and extreme load
• Maintainability
  – Minimize human administration
• Evolutionary growth
  – Graceful evolution; dynamic scalability
• These are problems for both computation and storage services

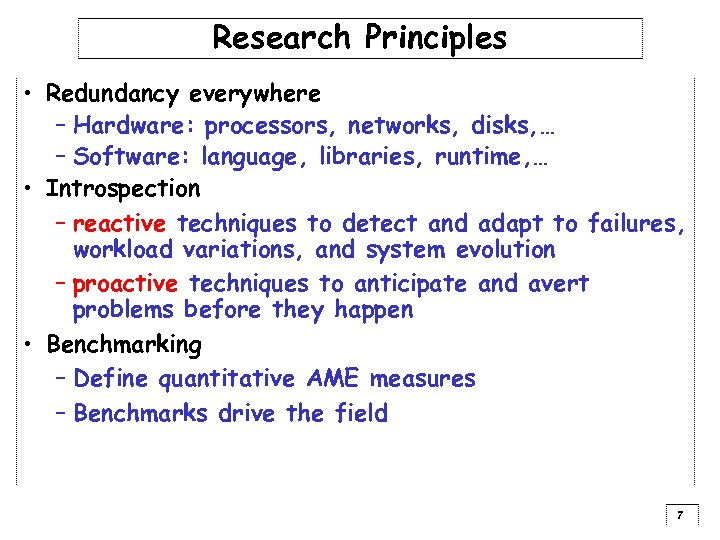

Research Principles
• Redundancy everywhere
  – Hardware: processors, networks, disks, …
  – Software: language, libraries, runtime, …
• Introspection
  – Reactive techniques to detect and adapt to failures, workload variations, and system evolution
  – Proactive techniques to anticipate and avert problems before they happen
• Benchmarking
  – Define quantitative AME measures
  – Benchmarks drive the field
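One classic way to build a reliable result from redundant, unreliable components is N-modular redundancy: run the same computation on several replicas and accept the answer a strict majority agrees on. The sketch below illustrates that general technique only; the replica functions and the injected error are hypothetical, and the slide does not say which redundancy schemes the project uses.

```python
from collections import Counter
from typing import Callable, List, Sequence


def majority_vote(replicas: Sequence[Callable[[int], int]], x: int) -> int:
    """Hypothetical N-modular redundancy sketch: run every replica on `x` and
    return the result that a strict majority of replicas agree on."""
    results: List[int] = [replica(x) for replica in replicas]
    answer, votes = Counter(results).most_common(1)[0]
    if votes * 2 <= len(results):
        raise RuntimeError(f"no majority among {results}")
    return answer


def good(x: int) -> int:
    return x * x


def faulty(x: int) -> int:
    return x * x + 1  # simulated silent data corruption


print(majority_vote([good, faulty, good], 7))  # 49
```

With three replicas, any single faulty one is outvoted; with two, a disagreement can be detected but not resolved, which is why the function raises instead of guessing.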

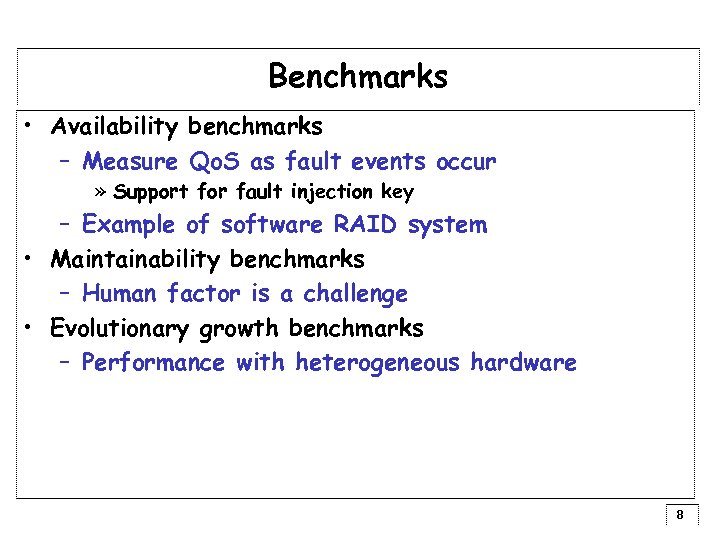

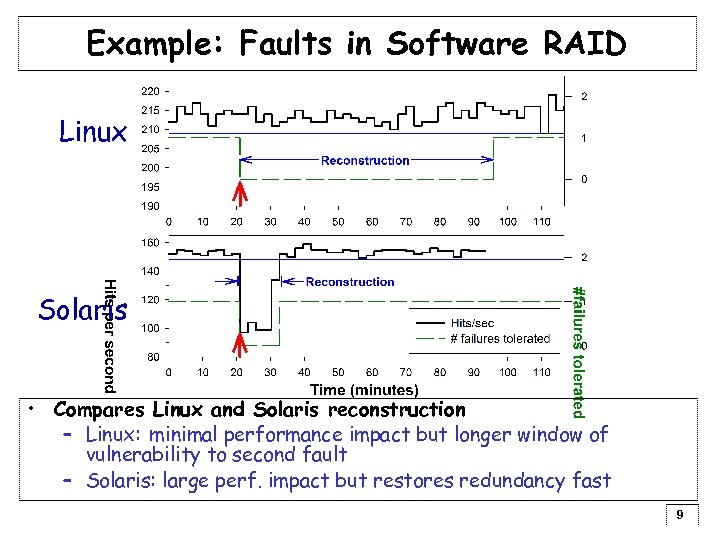

Benchmarks
• Availability benchmarks
  – Measure QoS as fault events occur
    » Support for fault injection is key
  – Example: software RAID system
• Maintainability benchmarks
  – The human factor is a challenge
• Evolutionary growth benchmarks
  – Performance with heterogeneous hardware
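An availability benchmark in this style records a quality-of-service metric over time while faults are injected, then summarizes how much of the run met the QoS target. A minimal sketch, assuming throughput is the QoS metric and samples are evenly spaced; the function name, trace format, and numbers are hypothetical.

```python
from typing import List, Tuple


def availability_summary(samples: List[Tuple[float, float]], qos_min: float) -> Tuple[float, float]:
    """Summarize a fault-injection run.

    samples: (time_s, throughput) pairs taken at a fixed interval while faults
             are injected into the system under test (hypothetical format).
    qos_min: lowest throughput that still meets the quality-of-service goal.
    Returns (fraction of samples meeting QoS, seconds spent below QoS).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to infer the interval")
    interval = samples[1][0] - samples[0][0]
    degraded = sum(1 for _, tput in samples if tput < qos_min)
    return 1 - degraded / len(samples), degraded * interval


# Hypothetical trace: throughput every 10 s, a disk fault injected just before t = 30 s.
trace = [(0, 100), (10, 102), (20, 99), (30, 40), (40, 55), (50, 85), (60, 98), (70, 101)]
print(availability_summary(trace, qos_min=90))  # (0.625, 30)
```

A single fraction hides the shape of the degradation, so real availability benchmarks usually keep the full time series as well; the summary is only useful for comparing runs under the same fault load.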

Example: Faults in Software RAID
[Figure: reconstruction after a disk fault, Linux and Solaris panels]
• Compares Linux and Solaris reconstruction
  – Linux: minimal performance impact, but a longer window of vulnerability to a second fault
  – Solaris: large performance impact, but restores redundancy quickly
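The Linux/Solaris contrast is a tradeoff in how much disk bandwidth reconstruction takes. A toy model, assuming a fixed-bandwidth disk that is split between rebuild and application traffic, shows why: the vulnerability window shrinks as the rebuild share grows, while applications lose bandwidth. The disk size, bandwidth, and the two share values are hypothetical, not measurements of either system.

```python
def rebuild_tradeoff(capacity_mb: float, disk_mb_s: float, rebuild_share: float):
    """Toy model: reconstruction gets `rebuild_share` of the disk bandwidth and
    applications keep the rest. Returns (vulnerability window in minutes,
    MB/s left for applications during the rebuild)."""
    if not 0 < rebuild_share <= 1:
        raise ValueError("rebuild_share must be in (0, 1]")
    rebuild_mb_s = disk_mb_s * rebuild_share
    window_min = capacity_mb / rebuild_mb_s / 60  # time until redundancy is restored
    app_mb_s = disk_mb_s * (1 - rebuild_share)
    return window_min, app_mb_s


# Hypothetical 36,000 MB disk with 20 MB/s of bandwidth.
for label, share in [("yield to applications", 0.1), ("rebuild aggressively", 0.9)]:
    window, app = rebuild_tradeoff(36_000, 20, share)
    print(f"{label}: window {window:.0f} min, {app:.1f} MB/s left for applications")
```

Under these assumptions the gentle policy leaves the array exposed for 300 minutes and the aggressive one for about 33, which is the qualitative gap the slide describes.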

Simulating Microscale Biological Systems
• Large-scale simulation is useful for
  – Fundamental biological questions: cell behavior
  – Design of treatments, including BioMEMS
• Simulations are limited in part by
  – Machine complexity, e.g., memory hierarchies
  – Algorithmic complexity, e.g., adaptation
• Old software model:
  – Hide the machine from the users
    » Implicit parallelism, hardware-controlled caching
  – Results were unusable
    » Witness the success of MPI

New Model for Scalable High-Confidence Computing
• Domain-specific language that judiciously exposes machine structure
  – Explicit parallelism, load balancing, and locality control
  – Allows construction of complex, distributed data structures
• Current
  – Demonstration on higher-level models
  – Heart simulation
• Future plans
  – Algorithms and software that adapt to faults
  – Microscale systems
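Exposing locality and load balancing means the programmer decides which processor owns which part of a data structure. A minimal sketch of the simplest such decision, a static block partition of a 1-D grid in which block sizes differ by at most one cell, is shown below. It is plain Python, not the domain-specific language the slide refers to, and `block_partition` and `owner` are hypothetical names.

```python
from typing import List, Tuple


def block_partition(n_cells: int, n_procs: int) -> List[Tuple[int, int]]:
    """Split cells 0..n_cells-1 into n_procs contiguous [start, end) blocks
    whose sizes differ by at most one (a simple static load balance)."""
    base, extra = divmod(n_cells, n_procs)
    blocks = []
    start = 0
    for p in range(n_procs):
        size = base + (1 if p < extra else 0)  # first `extra` procs get one more cell
        blocks.append((start, start + size))
        start += size
    return blocks


def owner(cell: int, blocks: List[Tuple[int, int]]) -> int:
    # Explicit locality: every cell has exactly one owning processor.
    for p, (lo, hi) in enumerate(blocks):
        if lo <= cell < hi:
            return p
    raise IndexError(cell)


blocks = block_partition(10, 4)
print(blocks)             # [(0, 3), (3, 6), (6, 8), (8, 10)]
print(owner(7, blocks))   # 2
```

Contiguous blocks keep neighboring cells on the same processor, so only block boundaries need communication; adaptive simulations would need to repartition as the work per cell changes.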

Conclusions
• Scaling at all levels
  – Processors, clusters, wide area
• Application challenges
  – Both storage- and compute-intensive
• Key challenges for future infrastructure:
  – Availability and reliability
  – Complexity of the machine