8a9568196f635274a410dfcaddf53dc3.ppt

- Number of slides: 51

Scaling and stabilizing large server-side infrastructure
Yoshinori Matsunobu
Principal Infrastructure Architect, DeNA

Who am I
● 2006-2010: Lead MySQL Consultant at MySQL AB (now Oracle)
● Since Sep 2010: Database and infrastructure engineer at DeNA
  ● Eliminating single points of failure
  ● Establishing no-downtime operations
  ● Performance optimizations, reducing the total number of servers
  ● Avoiding sudden performance stalls
  ● Many more
● Speaking at many conferences such as mysqlconf and OSCON
● Oracle ACE Director since 2011

DeNA: Company Background
● One of the largest social game providers in Japan
  ● Social game platform "Mobage" and many social game titles
  ● Subsidiary ngmoco:) in San Francisco
● Japan-localized mobile phone, smartphone, and PC games
● Operating thousands of servers in multiple datacenters
  ● 2-3 billion page views per day
  ● 35+ million users
● Received the O'Reilly MySQL Award in 2011
Note: This is not a sponsored session, so the talk and slides are neutral

Agenda
● Scaling strategies
● Cloud vs real servers
● Performance practices
● Administration practices

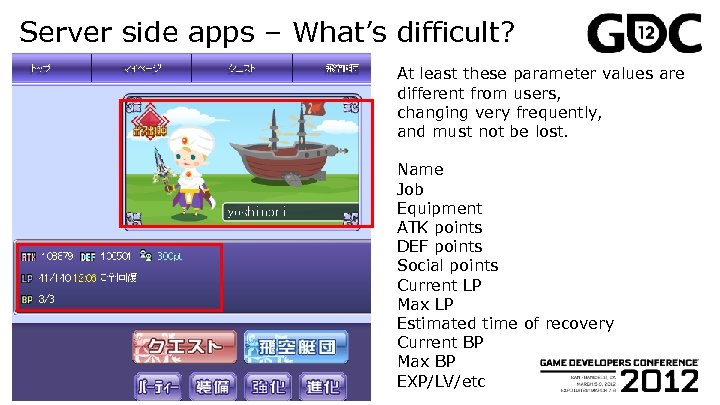

Server-side apps: What's difficult?
At least these parameter values differ from user to user, change very frequently, and must not be lost:
● Name, Job, Equipment
● ATK points, DEF points, Social points
● Current LP, Max LP, Estimated time of recovery
● Current BP, Max BP
● EXP/LV/etc.

Server-side apps: What's difficult?
● Dynamic data must not be lost
  ● Should be stored in (stable) databases
● Should be highly available
  ● Some kind of "data redundancy" is needed
● Very frequently selected and updated
  ● The top page is the most frequently accessed; all of the latest values need to be fetched
  ● Name does not change, but LP/EXP change very frequently
  ● Caching (read scaling) and write tuning strategies should be planned
● Data size can be huge
  ● 100 KB per user x 100 M users = 10 TB in total
  ● Some kind of "data distribution" strategy might be needed
● Focus on "text" (images/movies are read-only and can be located on static content servers, so they are easy from the server-side point of view)
● Not much different between online games and general web services
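
The sizing estimate above is simple arithmetic; a short sketch makes the units explicit. It assumes decimal units (1 KB = 10^3 bytes, 1 TB = 10^12 bytes); the function name is illustrative.

```python
# Back-of-the-envelope estimate of total game data size.
# Assumption: decimal units, 1 KB = 10**3 bytes and 1 TB = 10**12 bytes.
KB = 10**3
TB = 10**12


def total_data_size_tb(bytes_per_user: int, users: int) -> float:
    """Return the total data size in TB for a given per-user footprint."""
    return bytes_per_user * users / TB


print(f"{total_data_size_tb(100 * KB, 100_000_000):.0f} TB")  # prints 10 TB
```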

Characteristics of online/social games
● It is very difficult to predict growth
  ● Social games grow (or shrink) rapidly
  ● e.g. estimating 10 million page views per day before going live...
    ● Good case: 100 million page views/day
    ● Bad case: only 10K page views/day
● It is necessary to prepare enough servers to handle the traffic
  ● It takes time to purchase, ship, and set up 100 physical servers
  ● Too many unused servers might kill your company
● For smaller companies
  ● Using cloud services (AWS, Rackspace, etc.) is much less risky
● For larger companies
  ● Servers in stock can probably be used

Scaling strategy
1. Single server
2. Multiple-tier servers
3. Scaling reads / database redundancy
4. Horizontal partitioning / sharding
5. Distributing across regions

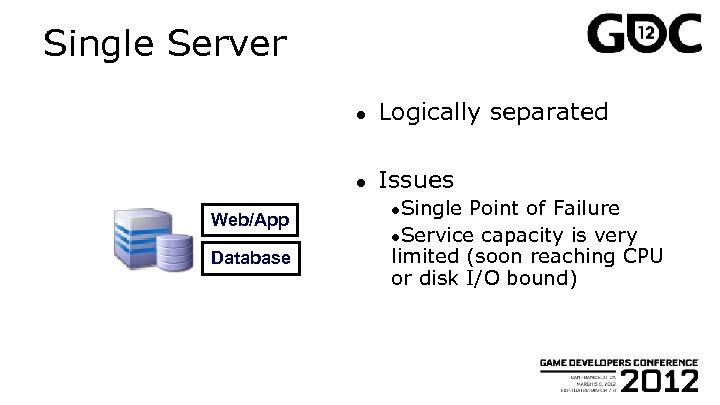

Single Server
● Web/App and database on one machine, logically separated
● Issues
  ● Single point of failure
  ● Service capacity is very limited (the server soon becomes CPU or disk I/O bound)

H/W failure often happens
● At DeNA, we run thousands of machines in production
  ● ~N,000 web servers
  ● ~1,000 database (mainly MySQL) servers
  ● ~100 caching (memcached, etc.) servers
● Most server failures are caused by H/W problems
  ● Disk I/O errors, memory corruption, etc.
● Mature middleware is stable enough
  ● MySQL has not crashed because of its own bugs for years
● Do not be too afraid of upgrading middleware (but prepare)
  ● Older software (CentOS 4, MySQL 5.0, etc.) has lots of bugs that will never be fixed

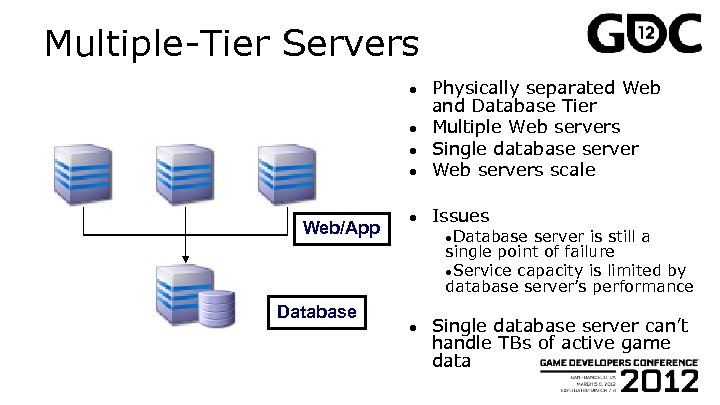

Multiple-Tier Servers
● Physically separated web and database tiers
  ● Multiple web servers
  ● Single database server
  ● Web servers scale
● Issues
  ● The database server is still a single point of failure
  ● Service capacity is limited by the database server's performance
  ● A single database server can't handle TBs of active game data

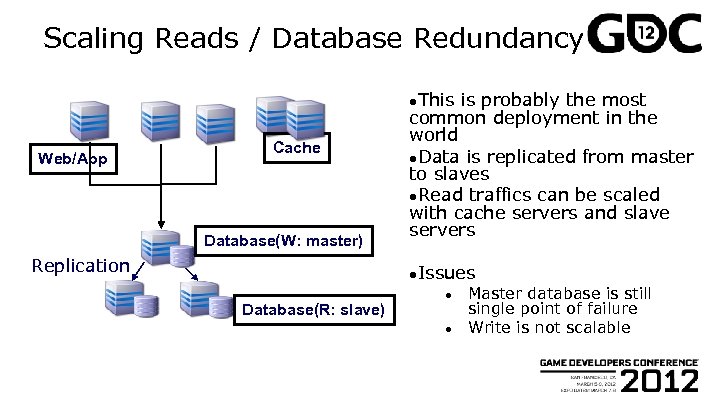

Scaling Reads / Database Redundancy
(Diagram: Web/App and Cache; Database (W: master) replicating to Database (R: slave))
● This is probably the most common deployment in the world
● Data is replicated from master to slaves
● Read traffic can be scaled with cache servers and slave servers
● Issues
  ● The master database is still a single point of failure
  ● Writes are not scalable

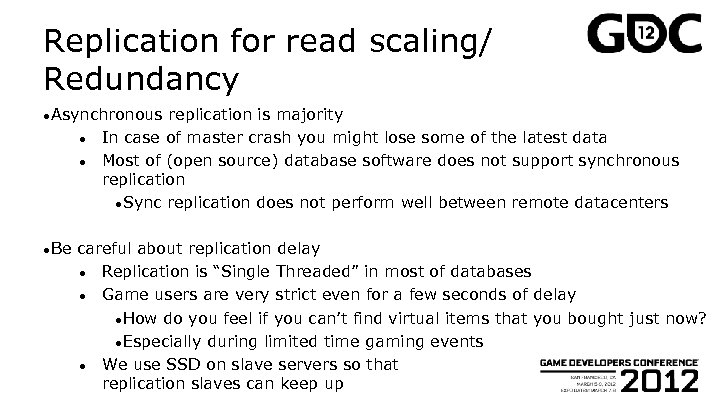

Replication for read scaling / redundancy
● Asynchronous replication is the majority
  ● In case of a master crash, you might lose some of the latest data
  ● Most (open source) database software does not support synchronous replication
  ● Sync replication does not perform well between remote datacenters
● Be careful about replication delay
  ● Replication is "single threaded" in most databases
  ● Game users are very strict even about a few seconds of delay
    ● How would you feel if you couldn't find virtual items that you bought just now?
    ● Especially during limited-time gaming events
  ● We use SSD on slave servers so that replication slaves can keep up

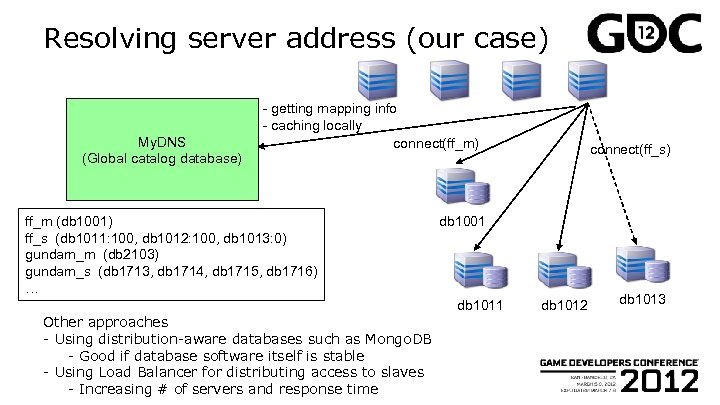

Resolving server address (our case)
(Diagram: connect(ff_m) → db1001; connect(ff_s) → db1011, db1012, db1013)
● MyDNS (global catalog database)
  ● Getting mapping info
  ● Caching locally
  ● ff_m (db1001)
  ● ff_s (db1011: 100, db1012: 100, db1013: 0)
  ● gundam_m (db2103)
  ● gundam_s (db1713, db1714, db1715, db1716)
  ● …
● Other approaches
  ● Using distribution-aware databases such as MongoDB
    ● Good if the database software itself is stable
  ● Using a load balancer for distributing access to slaves
    ● Increases the number of servers and response time
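
The catalog above can be read as a small routing table. A minimal sketch, assuming the numbers after each ff_s slave (100, 100, 0) are relative read weights and that weight 0 takes a slave out of rotation; equal weights for gundam_s are assumed since the slide lists none. The `CATALOG` layout and `resolve()` helper are hypothetical, not the actual MyDNS schema.

```python
import random
from typing import Dict, Optional

# Hypothetical local cache of the global catalog (MyDNS on the slide).
# Each logical name maps physical hosts to a relative weight.
CATALOG: Dict[str, Dict[str, int]] = {
    "ff_m": {"db1001": 1},
    "ff_s": {"db1011": 100, "db1012": 100, "db1013": 0},
    "gundam_m": {"db2103": 1},
    "gundam_s": {"db1713": 1, "db1714": 1, "db1715": 1, "db1716": 1},
}


def resolve(logical_name: str, rng: Optional[random.Random] = None) -> str:
    """Pick a physical host for a logical name by weighted random choice."""
    rng = rng or random.Random()
    # Skip zero-weight hosts, e.g. a slave taken out of rotation.
    candidates = [(host, w) for host, w in CATALOG[logical_name].items() if w > 0]
    if not candidates:
        raise LookupError(f"no available host for {logical_name}")
    hosts, weights = zip(*candidates)
    return rng.choices(hosts, weights=weights, k=1)[0]


print(resolve("ff_m"))  # prints db1001
```

Because the catalog is cached locally, resolving a name costs no extra network round trip; changing a weight in the catalog is enough to drain a slave.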

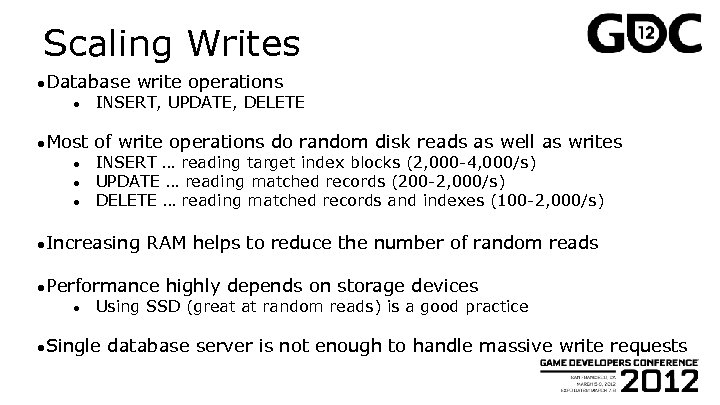

Scaling Writes
● Database write operations
  ● INSERT, UPDATE, DELETE
● Most write operations do random disk reads as well as writes
  ● INSERT … reading target index blocks (2,000-4,000/s)
  ● UPDATE … reading matched records (200-2,000/s)
  ● DELETE … reading matched records and indexes (100-2,000/s)
● Increasing RAM helps to reduce the number of random reads
● Performance highly depends on storage devices
  ● Using SSD (great at random reads) is a good practice
● A single database server is not enough to handle massive write requests

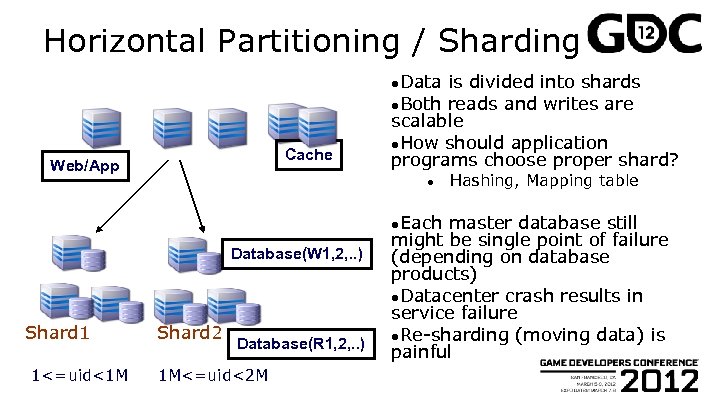

Horizontal Partitioning / Sharding
(Diagram: Web/App and Cache in front of Shard 1 (1 <= uid < 1M) and Shard 2 (1M <= uid < 2M), each with Database (W1, 2, ...) and Database (R1, 2, ...))
● Data is divided into shards
● Both reads and writes are scalable
● How should application programs choose the proper shard?
  ● Hashing, mapping table
● Each master database still might be a single point of failure (depending on database products)
● A datacenter crash results in service failure
● Re-sharding (moving data) is painful
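
Both shard-selection methods named on the slide fit in a few lines. A sketch assuming the 1M-uid ranges from the diagram plus a hypothetical open-ended shard 3; `RANGE_STARTS`, `shard_by_range()` and `shard_by_hash()` are illustrative names.

```python
import bisect
import zlib

# Range partitioning: shard SHARD_IDS[k] holds uids in
# [RANGE_STARTS[k], RANGE_STARTS[k + 1]); the last shard is open-ended here.
RANGE_STARTS = [1, 1_000_000, 2_000_000]
SHARD_IDS = [1, 2, 3]


def shard_by_range(uid: int) -> int:
    """Return the shard whose uid range contains uid."""
    if uid < RANGE_STARTS[0]:
        raise ValueError(f"uid {uid} is below the first range")
    # bisect_right finds the first range start greater than uid; step back one.
    idx = bisect.bisect_right(RANGE_STARTS, uid) - 1
    return SHARD_IDS[idx]


def shard_by_hash(uid: int, num_shards: int) -> int:
    """Return a shard id in 1..num_shards from a stable hash of the uid."""
    # crc32 gives the same result in every process, unlike str hash().
    return zlib.crc32(str(uid).encode()) % num_shards + 1


print(shard_by_range(999_999), shard_by_range(1_000_000))  # prints 1 2
```

Range mapping makes adding capacity cheap, since new uid ranges can go to new shards. Modulo hashing spreads load evenly, but changing `num_shards` reassigns most uids, which is one reason re-sharding is painful.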

Approaches for Sharding
● Developing an application framework
  ● Creating a catalog (mapping) table (e.g. user_id=1..1M => shard 1)
  ● Looking up the catalog table (and caching it), then accessing the proper shard
  ● So far many people have taken this approach
  ● Once you create a framework, you can use it for many other games
  ● Re-sharding (moving data between shards) is difficult
● Using sharding-aware database products
  ● The database client library automatically selects the proper shard
  ● Many recent distributed NoSQL databases support it
  ● MongoDB, Membase, HBase (and many more) are popular
  ● Newer databases tend to have lots of bugs and stability problems
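
The framework approach (look up the catalog, cache the result, then access the shard) can be sketched as a small cache in front of the catalog query. `fetch_shard` below is a hypothetical stand-in for the real catalog query, and the 60-second TTL is an arbitrary illustrative value.

```python
import time
from typing import Callable, Dict, Tuple


class CatalogCache:
    """Caches user_id -> shard lookups read from a catalog (mapping) table."""

    def __init__(self, fetch: Callable[[int], int], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch        # queries the catalog table on a miss
        self._ttl = ttl_seconds    # how long a cached mapping is trusted
        self._clock = clock
        self._cache: Dict[int, Tuple[int, float]] = {}  # user_id -> (shard, cached_at)

    def shard_for(self, user_id: int) -> int:
        now = self._clock()
        hit = self._cache.get(user_id)
        if hit is not None and now - hit[1] < self._ttl:
            return hit[0]          # fresh cached mapping: no catalog query
        shard = self._fetch(user_id)
        self._cache[user_id] = (shard, now)
        return shard


# Hypothetical stand-in for a query against the catalog table.
def fetch_shard(user_id: int) -> int:
    return 1 if user_id < 1_000_000 else 2


cache = CatalogCache(fetch_shard)
print(cache.shard_for(42), cache.shard_for(1_500_000))  # prints 1 2
```

The TTL bounds how long a web server keeps using a stale mapping after a user's data has been moved to another shard.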

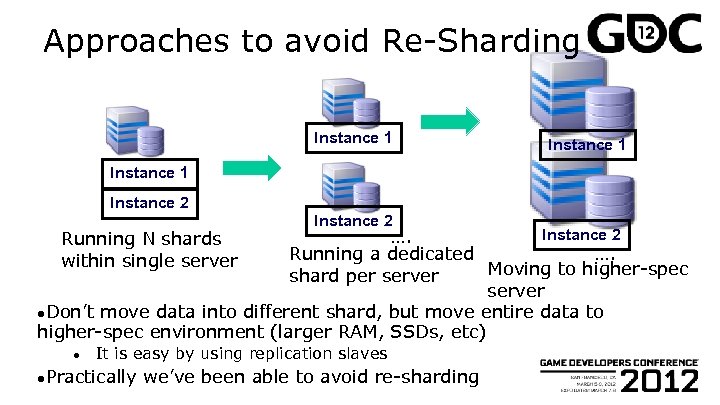

Approaches to avoid Re-Sharding
(Diagram: Instance 1, Instance 2, … on one server; moving a shard to a higher-spec server)
● Running a dedicated shard per server
  ● Don't move data into a different shard; instead move the entire shard to a higher-spec environment (larger RAM, SSDs, etc.)
  ● This is easy by using replication slaves
● Running N shards within a single server
● In practice we've been able to avoid re-sharding

Handling rapidly growing users
● On one of our most popular online games...
  ● At first we started with 2 database shards + 3 slaves per shard
  ● The number of registered users in the first two days after launch was much higher than expected
  ● We added two more shards dynamically, then mapped all new users to the new shards
● We have heavily used "range partitioning" for removing older data
  ● Older data can be dropped very quickly (within milliseconds)
  ● This has helped a lot to reduce the total data size (less than 250 GB database size per shard)

Mitigating Replication delay
● For older games using HDD on slaves...
  ● Even 1,000+ updates per second cause replication delay
  ● Replacing HDD with SATA SSD on slaves is a good practice
● Many updates (4,000+ updates per second)
  ● Some slaves with SSD got behind the master
● Increasing RAM
  ● Increasing RAM from 32 GB to 64 GB per shard
● Reducing the total write volume
  ● innodb-doublewrite=0 helps in InnoDB (MySQL)
● Avoiding sudden short stalls
  ● Using the xfs filesystem instead of ext3
● We migrated to higher-spec servers without downtime. We haven't needed re-sharding so far

Cloud vs Physical servers
● DeNA uses physical servers (N+ thousands)
● Some of our subsidiaries use AWS / App Engine

Advantages of cloud servers
● Initial costs are very small
  ● You don't need to buy 10 servers (which may cost more than $50,000) for a new game that might be closed within a few months
● No lead time to increase servers
  ● It is not uncommon to take 1 month to get new physical H/W components
● No penalty to decrease servers
  ● If unused physical servers can't be used anywhere else, they just waste money

Disadvantages of Cloud servers
● It takes longer to analyze problems
  ● Network configurations are a black box
  ● Storage devices are a black box
  ● Problems caused by disks and network often happen, but it takes longer to find root causes
  ● This really matters for games that generate $10M/month
● Limited choices for performance optimizations
  ● What I want:
    ● Using direct-attached PCIe SSD for handling massive reads/writes
    ● Customizing Linux to reduce TCP connection timeouts (3 seconds to 0.5-1 second)
    ● Updating device drivers
  ● Per-server performance tends to be (much) lower than on physical servers
● More expensive than physical servers when your system becomes large

Distributing across DC/regions
● Availability
  ● A single datacenter crash should not result in service failure
● Latency / response time
  ● Round trip time (RTT) between Tokyo and San Francisco exceeds 100 ms
  ● 10+ round trips within 1 HTTP request will take more than 1 second
  ● If you plan to release games in Japan/APAC, I don't recommend sending all content from the US
    ● Use a CDN (to send static content from the APAC region) or run servers in APAC
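
The latency claim follows from multiplying round trips by RTT. A sketch assuming the round trips happen sequentially and ignoring server processing time, so the result is a lower bound.

```python
def min_request_latency_ms(round_trips: int, rtt_ms: float) -> float:
    """Network time alone for sequential round trips within one HTTP request."""
    return round_trips * rtt_ms


# Tokyo <-> San Francisco RTT from the slide: 100+ ms.
print(f"{min_request_latency_ms(10, 100):.0f} ms")  # prints 1000 ms
```

Inside one datacenter, where RTT is usually well under 1 ms, the same 10 round trips add only a few milliseconds, which is why batching or co-locating web and DB matters mainly across regions.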

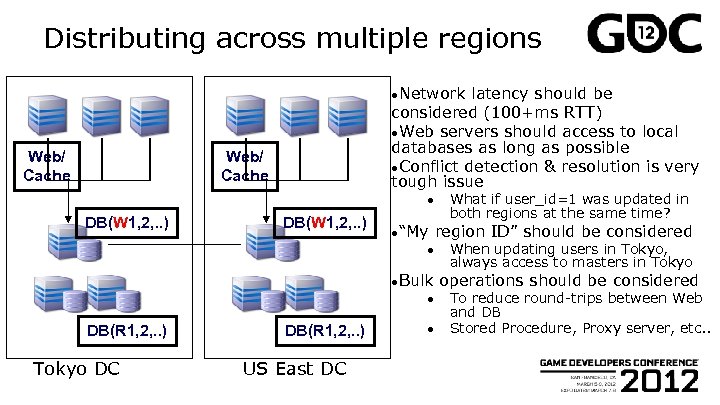

Distributing across multiple regions
(Diagram: Tokyo DC with Web/Cache, DB (W1, 2, ...) and DB (R1, 2, ...); US East DC with Web/Cache and DB (R1, 2, ...))
● Network latency should be considered (100+ ms RTT)
● Web servers should access local databases as much as possible
● Conflict detection & resolution is a very tough issue
  ● What if user_id=1 was updated in both regions at the same time?
● "My region ID" should be considered
  ● When updating users in Tokyo, always access the masters in Tokyo
● Bulk operations should be considered
  ● To reduce round trips between web and DB
  ● Stored procedures, proxy server, etc.
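
One way to apply the "my region ID" rule is to record each user's home region and send that user's writes only to the home region's master, while reads stay on local replicas. A sketch under stated assumptions: every region holds replicas of all users' data, each region owns a master for its own users, and the host names and `TOPOLOGY` table are hypothetical. This avoids conflicts by never writing one user's data in two regions, rather than detecting and resolving them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionHosts:
    master: str   # writable master for users homed in this region
    slave: str    # local read-only replica


# Hypothetical topology; host names are placeholders.
TOPOLOGY = {
    "tokyo": RegionHosts(master="tokyo-db-w1", slave="tokyo-db-r1"),
    "us_east": RegionHosts(master="useast-db-w1", slave="useast-db-r1"),
}


def route(user_home_region: str, local_region: str, is_write: bool) -> str:
    """Choose a database host for a request served in local_region."""
    if is_write:
        # Writes always go to the user's home-region master, even when this
        # costs a cross-region round trip, so two regions never write the same row.
        return TOPOLOGY[user_home_region].master
    # Reads stay local to avoid cross-region latency (may be slightly stale).
    return TOPOLOGY[local_region].slave


print(route("tokyo", "us_east", is_write=True))   # prints tokyo-db-w1
print(route("tokyo", "us_east", is_write=False))  # prints useast-db-r1
```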

Monitoring and Performance Practices
● Monitoring
● Fighting against stalls
● Improving per-server performance
● Consolidating servers

Monitoring
● Server reachable via ping, http, mysql, etc.
● H/W activity and OS errors
  ● Memory usage (to avoid running out of memory)
  ● Disk failure, disk block failure
  ● RAID controller failure
  ● Network errors (sometimes caused by bad switches)
  ● Clock time

Monitoring Server Performance
● Resource utilization per second
  ● Load average (not perfect, but better than nothing)
  ● Concurrency (the number of running threads: useful to detect stalls)
  ● Disk busy rate (svctm, %iowait)
  ● CPU utilization (especially on web servers)
  ● Network traffic
  ● Free memory (swap is bad / be careful about memory leaks)
● Web servers
  ● HTTP response time
  ● The number of processes/threads that can accept new HTTP connections
● Database servers
  ● Queries per second
  ● Bad queries
  ● Long-running transactions (blocking other clients)
  ● Replication delay

Best practices for minimizing replication delay
● Application side
  ● Continuously monitor bad queries
    ● Especially when deploying new modules
  ● Do not run massive updates in a single DML statement
    ● LOAD DATA…; ALTER TABLE…
  ● Reduce the number of DML statements
    ● INSERT+UPDATE+DELETE -> single UPDATE
● Infrastructure side
  ● Using SSD on slave servers
  ● Using larger RAM
  ● Using the xfs filesystem
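
Splitting one massive DML statement into small batches keeps each replicated transaction short, so the single-threaded replication on slaves is never stuck on one huge statement. A sketch using Python's built-in sqlite3 as a stand-in for MySQL; the `user_log` table, its `created_at` column, and the batch size are hypothetical. MySQL supports `DELETE ... LIMIT n` directly, but default SQLite builds do not, so each batch is first selected by primary key and then deleted with `IN (...)`.

```python
import sqlite3
import time


def delete_in_batches(conn: sqlite3.Connection, before_ts: int,
                      batch_size: int = 500, pause_s: float = 0.0) -> int:
    """Delete user_log rows older than before_ts in small transactions."""
    total = 0
    while True:
        # Select the next batch of primary keys to delete.
        ids = [row[0] for row in conn.execute(
            "SELECT id FROM user_log WHERE created_at < ? ORDER BY id LIMIT ?",
            (before_ts, batch_size))]
        if not ids:
            return total
        placeholders = ",".join("?" * len(ids))
        with conn:  # commits one short transaction per batch
            conn.execute(f"DELETE FROM user_log WHERE id IN ({placeholders})", ids)
        total += len(ids)
        time.sleep(pause_s)  # optional pause so replicas can catch up


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user_log (id INTEGER PRIMARY KEY, created_at INTEGER)")
conn.executemany("INSERT INTO user_log (created_at) VALUES (?)",
                 [(ts,) for ts in range(2500)])
conn.commit()
print(delete_in_batches(conn, before_ts=2000))  # prints 2000
```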

Performance analysis
● Identifying the reasons why the server is so slow or resource-consuming
● Web server
  ● Profiling functions that take long elapsed time (e.g. using NYTProf for Perl-based applications)
● Database server
  ● Bad queries (full table scans, etc.) / indexing
  ● Only a few query patterns consume more than 90% of the time
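
Finding the few query patterns that dominate database time usually means normalizing queries into patterns and summing their execution time. A simplified sketch: the regex normalization only replaces quoted and numeric literals and is much cruder than dedicated tools such as pt-query-digest; the input format of (query text, seconds) pairs is an assumption.

```python
import re
from collections import defaultdict
from typing import Iterable, List, Tuple


def normalize(sql: str) -> str:
    """Replace literals so queries differing only in values share a pattern."""
    sql = re.sub(r"'(?:[^'\\]|\\.)*'", "?", sql)  # quoted strings
    sql = re.sub(r"\b\d+\b", "?", sql)            # standalone numbers
    return re.sub(r"\s+", " ", sql).strip().lower()


def top_patterns(log: Iterable[Tuple[str, float]],
                 share: float = 0.9) -> List[Tuple[str, float]]:
    """Return the fewest patterns that together use `share` of total time."""
    totals = defaultdict(float)
    for sql, seconds in log:
        totals[normalize(sql)] += seconds
    grand_total = sum(totals.values())
    result, running = [], 0.0
    for pattern, t in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        result.append((pattern, t))
        running += t
        if running >= share * grand_total:
            break
    return result


log = [
    ("SELECT * FROM user WHERE id = 1", 0.5),
    ("SELECT * FROM user WHERE id = 2", 0.5),
    ("SELECT * FROM item WHERE name = 'sword'", 0.05),
    ("UPDATE user SET lp = 10 WHERE id = 3", 0.05),
]
for pattern, seconds in top_patterns(log):
    print(f"{seconds:.2f}s {pattern}")  # prints 1.00s select * from user where id = ?
```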

Thundering Herd / C10K
● Most middleware can't handle 100K+ concurrent requests per server
● Work hard for stable request processing
  ● A sudden cache miss (CDN bug, memcached bug, etc.) will result in burst requests toward backend servers
  ● Case: memcached bug (recently fixed)
    ● memcached crashed when thousands of persistent connections were established
    ● All requests to memcached went to database servers (cache miss)
    ● Backend database servers couldn't handle 10x more queries -> service down
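
A common mitigation for a thundering herd on cache miss is request coalescing ("single flight"): when many requests miss the same key at once, only one of them queries the backend and the others wait for its result. The slide does not describe this technique; it is a threading sketch where `loader` stands in for a hypothetical database query.

```python
import threading
from typing import Any, Callable, Dict


class SingleFlightCache:
    """Cache where concurrent misses on the same key trigger only one load."""

    def __init__(self, loader: Callable[[str], Any]):
        self._loader = loader                          # e.g. a database query
        self._values: Dict[str, Any] = {}
        self._inflight: Dict[str, threading.Event] = {}
        self._lock = threading.Lock()

    def get(self, key: str) -> Any:
        with self._lock:
            if key in self._values:
                return self._values[key]
            event = self._inflight.get(key)
            leader = event is None
            if leader:
                event = threading.Event()
                self._inflight[key] = event
        if leader:
            try:
                value = self._loader(key)              # only the leader hits the backend
                with self._lock:
                    self._values[key] = value
            finally:
                with self._lock:
                    del self._inflight[key]
                event.set()                            # wake up waiting followers
            return value
        event.wait()
        with self._lock:
            if key in self._values:
                return self._values[key]
        return self.get(key)                           # leader failed: try again
```

If 20 threads call `get("user:1")` at the same moment, the loader runs once; the value is stored before the in-flight marker is removed, so no second thread can become a leader for the same key.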

Stalls
● Cause bad response time and unstable resource usage
  ● In bad cases, web servers or database servers will go down
● Serious if they happen on database servers
  ● All web servers are affected
● Identifying stalls is important, but difficult
  ● Very often root causes are inside the source code of the middleware
  ● I often take stack traces and dig into the MySQL source code

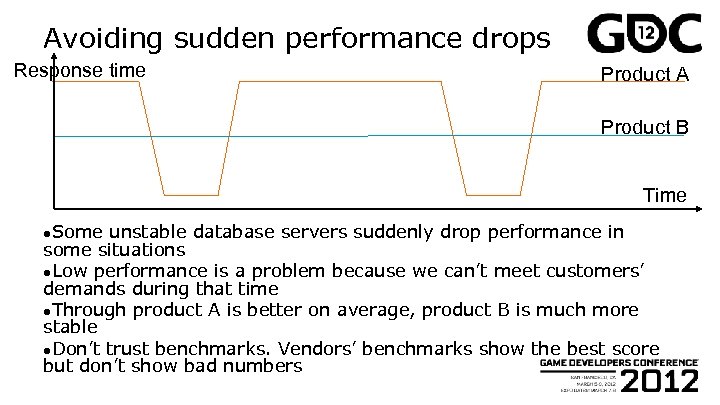

Avoiding sudden performance drops
(Chart: response time over time for Product A and Product B)
● Some unstable database servers suddenly drop performance in some situations
● Low performance is a problem because we can't meet customers' demands during that time
● Though product A is better on average, product B is much more stable
● Don't trust benchmarks. Vendors' benchmarks show the best scores but don't show bad numbers

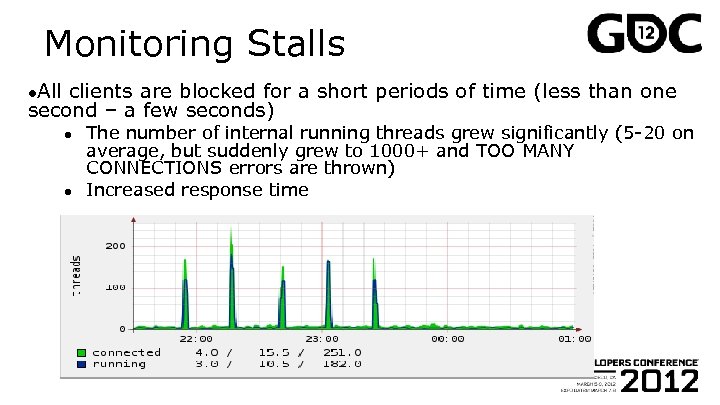

Monitoring Stalls
● All clients are blocked for short periods of time (from less than one second to a few seconds)
  ● The number of internal running threads grew significantly (5-20 on average, but it suddenly grew to 1000+ and TOO MANY CONNECTIONS errors were thrown)
  ● Increased response time
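
The symptom described here (running threads normally 5-20, suddenly 1000+) can be caught by comparing each sample with a recent baseline. A sketch assuming per-second samples of a running-thread counter (for MySQL, the `Threads_running` status variable); the window size, the 10x factor, and the 100-thread floor are illustrative, not tuned values.

```python
from collections import deque
from statistics import median
from typing import Deque, Iterable, List


def detect_stalls(samples: Iterable[int], window: int = 60,
                  factor: float = 10.0, min_threads: int = 100) -> List[int]:
    """Return indexes of samples that spike far above the recent median."""
    history: Deque[int] = deque(maxlen=window)
    stalls = []
    for i, running in enumerate(samples):
        if history:
            baseline = median(history)
            # Flag when concurrency jumps well above its usual level.
            if running >= min_threads and running >= factor * max(baseline, 1):
                stalls.append(i)
        history.append(running)
    return stalls


print(detect_stalls([10] * 30 + [1200, 1500] + [12] * 10))  # prints [30, 31]
```

Using the median rather than the mean keeps one short spike from inflating the baseline for the following samples.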

Avoiding Stalls
● Avoid holding locks for a long time
  ● Other clients that need the locks are blocked
  ● Establishing a new TCP connection … sometimes takes 3 seconds because of SYN retry
● Prohibiting cheats from malicious users
  ● e.g. massive requests from the same user id
● Be careful when choosing database products
  ● Most newly announced database products don't care about stalls
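
To stop massive requests from the same user id before they reach the database, a per-user rate limiter can reject requests above a budget. A token-bucket sketch; the slides do not say which mechanism DeNA uses, and the rate and burst values are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class PerUserRateLimiter:
    """Token bucket per user id: `rate` requests/second, bursts up to `burst`."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self._rate = rate
        self._burst = burst
        self._clock = clock
        self._buckets: Dict[int, Tuple[float, float]] = {}  # user_id -> (tokens, last_ts)

    def allow(self, user_id: int) -> bool:
        now = self._clock()
        tokens, last = self._buckets.get(user_id, (float(self._burst), now))
        # Refill for the elapsed time, capped at the burst size.
        tokens = min(self._burst, tokens + (now - last) * self._rate)
        if tokens >= 1.0:
            self._buckets[user_id] = (tokens - 1.0, now)
            return True
        self._buckets[user_id] = (tokens, now)
        return False


limiter = PerUserRateLimiter(rate=2.0, burst=3)
print([limiter.allow(1) for _ in range(4)])  # prints [True, True, True, False]
```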

Improving per-server performance
● To handle 1 million queries per second...
  ● 1,000 queries/sec per server: 1,000 servers in total
  ● 10,000 queries/sec per server: 100 servers in total
● The additional 900 servers will cost $10M initially, plus $1M every year
● If you can increase per-server throughput, you can reduce the total number of servers and TCO
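
The server counts above follow from dividing total load by per-server throughput. A sketch assuming load spreads evenly and ignoring headroom for failures and peaks.

```python
def servers_needed(total_qps: int, qps_per_server: int) -> int:
    """Number of servers needed to serve total_qps, rounded up."""
    return (total_qps + qps_per_server - 1) // qps_per_server  # integer ceiling


slow = servers_needed(1_000_000, 1_000)   # 1,000 servers
fast = servers_needed(1_000_000, 10_000)  # 100 servers
print(slow - fast)  # prints 900
```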

Recent H/W trends
● 64-bit Linux + large RAM
  ● 60-128 GB RAM is quite common
● SSD
  ● Database performance can be N times faster
  ● Using SSD on MySQL slaves is a good practice to eliminate replication delay
● Network, CPU
  ● Don't use 100 Mbps
  ● CPU speed matters: there is still a lot of single-threaded code inside middleware

Consolidating Web/DB servers
● How do you handle unpopular games?
  ● Running a small game on high-end servers is not cost effective
● Recent H/W is fast
  ● Running N DB instances on a single server is not uncommon
  ● DeNA consolidates 2-10 games in a single database server

Performance is not everything
● High availability, disaster recovery (backups), security, etc.
● Be careful about malicious users
  ● Bots / repeated access via tools
  ● Duplicating items / real money trades
  ● Illegal logins, etc.

Administration practices
● Automating setups
● Operations without downtime
● Automating failover

Automation
● The number of dev/ops engineers cannot grow as quickly as the number of servers
● Automation is important to reduce operational costs
● At DeNA:
  ● Hundreds of servers are managed per devops engineer
  ● Initial server setups (installing the OS, setting up the web server) can be done within 30 minutes
  ● Servers can be added to / removed from services in seconds to minutes
● Do not automate (in production) what you do not understand
  ● How do you restart a failover when automated failover stops with errors in the middle?
  ● Without in-depth understanding it is very hard to recover

Automating installation/setup
● Automate software installation / filesystem partitioning / etc.
  ● Kickstart + local yum repository
  ● Cloning the OS
    ● Copying the entire OS image (including software packages and conf files) from a read-only base server
    ● We use this approach
● Automate initial configuration
  ● Not all servers are identical
    ● Hostname, IP addr, disk size
    ● Some parameters (server-id in MySQL)
  ● Use a configuration manager like Chef/Puppet (we use a similar internal tool)
● Automate continuous configuration checking
  ● Sometimes people change configurations temporarily and forget to set them back
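
Continuous configuration checking comes down to comparing each server's running settings with the expected ones and reporting the differences. A sketch with hypothetical parameter dictionaries; in practice the actual values might come from `SHOW GLOBAL VARIABLES` on MySQL and the expected ones from the configuration manager. Per-server values such as `server_id` are skipped because they legitimately differ.

```python
from typing import Dict, Iterable, List, Tuple


def config_drift(expected: Dict[str, str], actual: Dict[str, str],
                 ignore: Iterable[str] = ("server_id", "hostname")
                 ) -> List[Tuple[str, str, str]]:
    """Return (name, expected, actual) for every parameter that differs."""
    skip = set(ignore)
    drift = []
    for name in sorted(expected):
        if name in skip:
            continue  # per-server values are expected to differ
        have = actual.get(name, "<missing>")
        if have != expected[name]:
            drift.append((name, expected[name], have))
    return drift


expected = {"max_connections": "3000", "innodb_doublewrite": "OFF", "server_id": "1"}
actual = {"max_connections": "5000", "innodb_doublewrite": "OFF", "server_id": "42"}
print(config_drift(expected, actual))  # prints [('max_connections', '3000', '5000')]
```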

Moving Servers - Games are expanding rapidly
● For expanding games
  ● Adding more web servers
  ● Adding more replication slaves or cache servers (for read scaling)
  ● Adding more shards (for write scaling)
    ● DeNA has a Perl-based sharding framework on the application side so that we can add new shards without stopping services
  ● Scaling up the master's H/W, or upgrading the MySQL version
    ● More RAM, HDD->SSD/PCI-E SSD, faster CPU, etc.

Moving Servers - Games are shrinking gradually
● For shrinking games
  ● Decreasing web servers
  ● Decreasing replication slaves
  ● Migrating servers to lower-spec machines
  ● Consolidating a few servers into a single machine

Moving database servers
● For many databases (MySQL etc.), there is only one master server (replication master) per shard
● Moving the master is not trivial
  ● Many people allocate scheduled maintenance downtime
● We want to move master servers more frequently
  ● Scaling up or scaling down (online games have many more opportunities than non-game web services)
  ● Upgrading the MySQL version / updating non-dynamic parameters
  ● Working around power outages: moving games to a remote datacenter

Desire for easier operations
● In many cases people do not want to allocate a maintenance window
  ● Announcing to users, coordinating with customer support, etc.
  ● Longer downtime reduces revenue and hurts brands
  ● Operations staff will be exhausted by too much midnight work
● Reducing maintenance time is important to manage hundreds or thousands of servers

Our case
● Previous
  ● Allocating a 30+ minute maintenance window after 2:00 am
  ● Announcing it on the top page of the game
  ● Coordinating with the customer support team
  ● Couldn't do this often because it's painful
● Current
  ● Migrating to a new server within 0.5-3 seconds gracefully with an online MySQL master switching tool ("MHA for MySQL": OSS)
  ● Not allocating a maintenance window: can be done in the daytime
● Note: Be very careful about error handling when using databases that support "automated master switch"
  ● Killing database sessions might result in data inconsistency
  ● If it waits a long time to disconnect, downtime will be longer

Automating Failover
● The master database server is usually a single point of failure, and failover is difficult
● Bleeding-edge databases that support automated failover often don't work as expected
  ● Split-brain, false positives, etc.
● In our case: using MHA for automated MySQL failover
  ● Takes 9-12 seconds to detect failure, 0.5-N seconds to complete failover
  ● Failovers are mostly triggered by H/W failure
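
Requiring several consecutive failed health checks before declaring the master dead is one way to limit false positives, since a single lost ping should not trigger failover. This is a sketch of that idea only, not MHA's actual algorithm; the 3-second interval and threshold of 4 are hypothetical values chosen so that detection falls roughly in the 9-12 second range quoted above.

```python
import time
from typing import Callable


class FailureDetector:
    """Declare a host dead only after `threshold` consecutive failed checks."""

    def __init__(self, check: Callable[[], bool], threshold: int = 4):
        self._check = check        # returns True if the host answered
        self._threshold = threshold
        self._failures = 0

    def poll(self) -> bool:
        """Run one health check; return True once the host is considered dead."""
        if self._check():
            self._failures = 0     # any success resets the streak
        else:
            self._failures += 1
        return self._failures >= self._threshold


def wait_until_dead(detector: FailureDetector, interval_s: float = 3.0,
                    sleep: Callable[[float], None] = time.sleep) -> None:
    """Poll every interval_s seconds; return when the host is declared dead."""
    while not detector.poll():
        sleep(interval_s)
```

With these values, four failed checks spaced 3 seconds apart mean detection takes between about 9 and 12 seconds, depending on when the master dies relative to the last successful check.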

Manual, Simple failover
● In extreme crash scenarios, automated failover is dangerous
  ● e.g. datacenter failure
● Identifying the root cause should be the first priority
● But failover should be done by a simple enough command
  ● Just one command

Summary
● Understanding scaling solutions is important to provide large/growing social games
● Stable performance is important to avoid sudden service outages. Do not trust sales talks. Many middleware products still have many stall problems
● Online games tend to grow or shrink rapidly. Solutions for setting up/migrating servers (including master databases) are important

Thank you!
● Yoshinori.Matsunobu@gmail.com
● Yoshinori.Matsunobu on Facebook
● @matsunobu on Twitter
