2a24f8d5d2a2500d9157f8975ab44fd0.ppt

- Number of slides: 66

Scalable peer-to-peer substrates: A new foundation for distributed applications? Peter Druschel, Rice University Antony Rowstron, Microsoft Research Cambridge, UK Collaborators: Miguel Castro, Anne-Marie Kermarrec, MSR Cambridge Y. Charlie Hu, Sitaram Iyer, Animesh Nandi, Atul Singh, Dan Wallach, Rice University

Outline • Background • Pastry • proximity routing • PAST • SCRIBE • Conclusions

Background: Peer-to-peer systems • distribution • decentralized control • self-organization • symmetry (communication, node roles)

Peer-to-peer applications • Pioneers: Napster, Gnutella, FreeNet • File sharing: CFS, PAST [SOSP’01] • Network storage: FarSite [Sigmetrics’00], OceanStore [ASPLOS’00], PAST [SOSP’01] • Web caching: Squirrel [PODC’02] • Event notification/multicast: Herald [HotOS’01], Bayeux [NOSSDAV’01], CAN-multicast [NGC’01], SCRIBE [NGC’01], SplitStream [submitted] • Anonymity: Crowds [CACM’99], Onion routing [JSAC’98] • Censorship-resistance: Tangler [CCS’02]

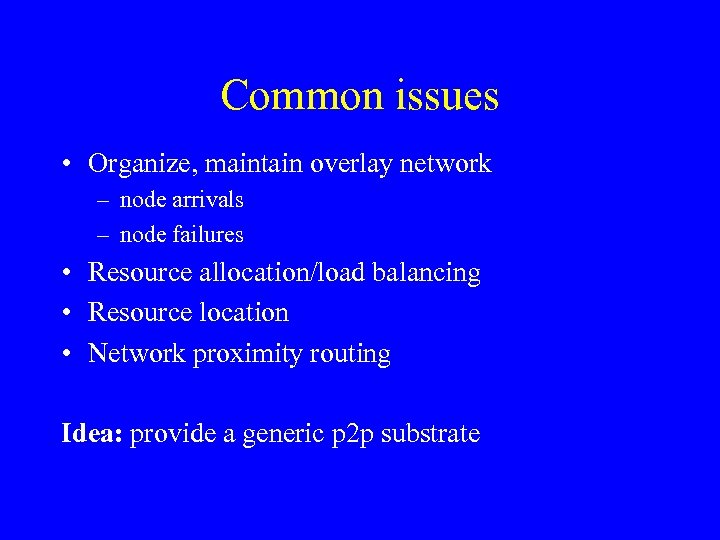

Common issues • Organize, maintain overlay network – node arrivals – node failures • Resource allocation/load balancing • Resource location • Network proximity routing Idea: provide a generic p2p substrate

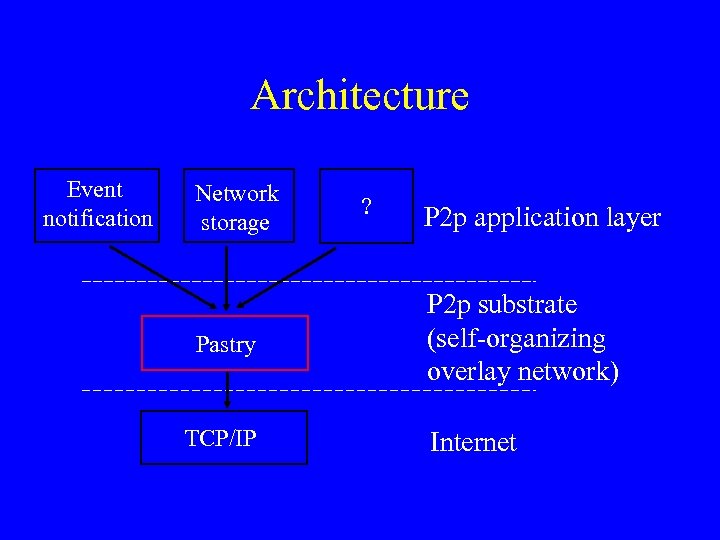

Architecture • P2p application layer: event notification, network storage, ? • P2p substrate (self-organizing overlay network): Pastry • Internet: TCP/IP

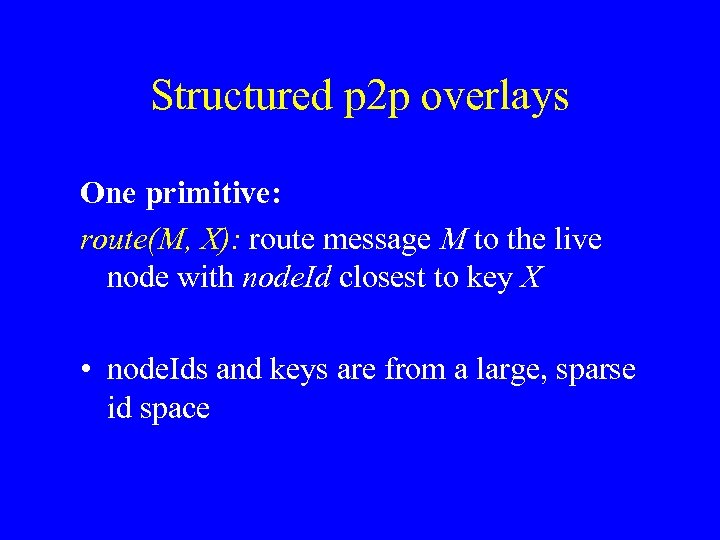

Structured p2p overlays One primitive: route(M, X): route message M to the live node with nodeId closest to key X • nodeIds and keys are from a large, sparse id space

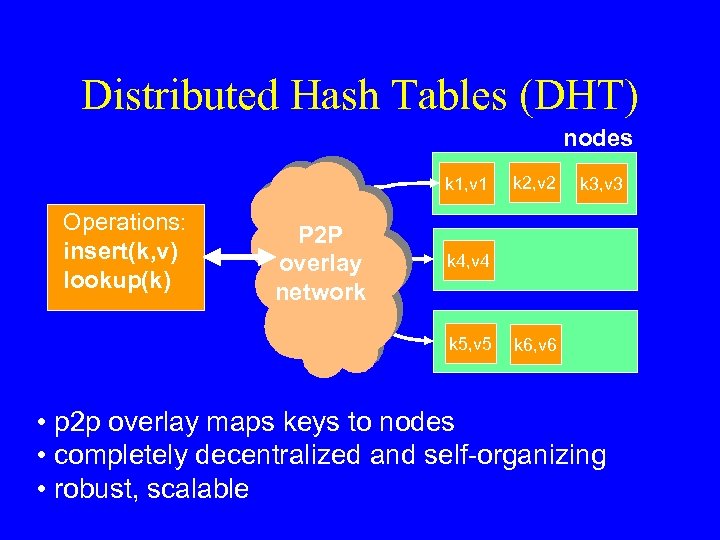

Distributed Hash Tables (DHT) Operations: insert(k, v), lookup(k) [Diagram: nodes in a P2P overlay network holding the pairs (k1, v1) through (k6, v6)] • p2p overlay maps keys to nodes • completely decentralized and self-organizing • robust, scalable

Why structured p2p overlays? • Leverage pooled resources (storage, bandwidth, CPU) • Leverage resource diversity (geographic, ownership) • Leverage existing shared infrastructure • Scalability • Robustness • Self-organization

Outline • Background • Pastry • proximity routing • PAST • SCRIBE • Conclusions

Pastry: Related work • Chord [Sigcomm’01] • CAN [Sigcomm’01] • Tapestry [TR UCB/CSD-01-1141] • PNRP [unpub.] • Viceroy [PODC’02] • Kademlia [IPTPS’02] • Small World [Kleinberg ’99, ’00] • Plaxton Trees [Plaxton et al. ’97]

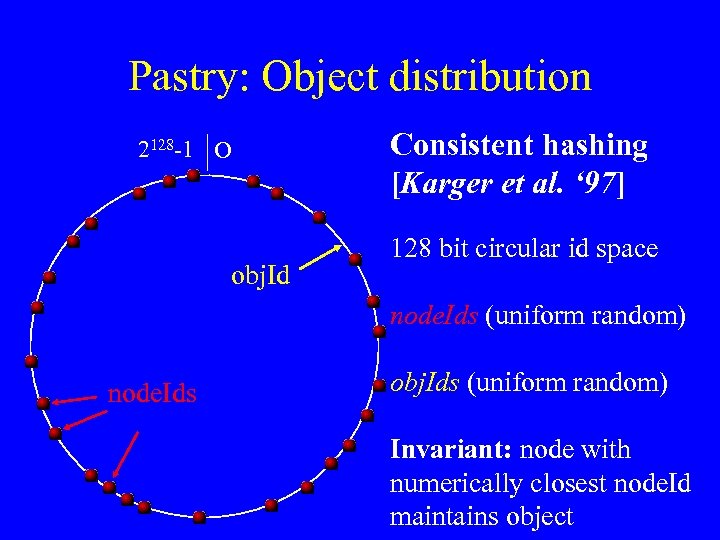

Pastry: Object distribution • 128-bit circular id space, from 0 to $2^{128}-1$ • nodeIds (uniform random) • objIds (uniform random) • Consistent hashing [Karger et al. ’97] • Invariant: node with numerically closest nodeId maintains object
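The invariant on this slide can be illustrated with a small sketch. It assumes that "numerically closest" is measured around the ring (so that ids near 0 and ids near the top of the space are neighbors); the function names and the tie-breaking rule are illustrative choices, not taken from the slides.

```python
ID_BITS = 128
ID_SPACE = 1 << ID_BITS  # ids live in [0, 2**128 - 1]


def circular_distance(a: int, b: int) -> int:
    """Shortest distance between two ids on the ring, in either direction."""
    d = abs(a - b) % ID_SPACE
    return min(d, ID_SPACE - d)


def responsible_node(obj_id: int, node_ids: list[int]) -> int:
    """Return the nodeId closest to obj_id (assumed tie-break: smaller id wins)."""
    return min(node_ids, key=lambda n: (circular_distance(n, obj_id), n))


# Hypothetical node population, including one node just below the wrap-around point.
nodes = [10, 1000, ID_SPACE - 5]
owner_of_2 = responsible_node(2, nodes)
owner_of_600 = responsible_node(600, nodes)
```

In this toy population the object with id 2 lands on the node just below the wrap-around point, because that node is 7 ids away while node 10 is 8 away.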

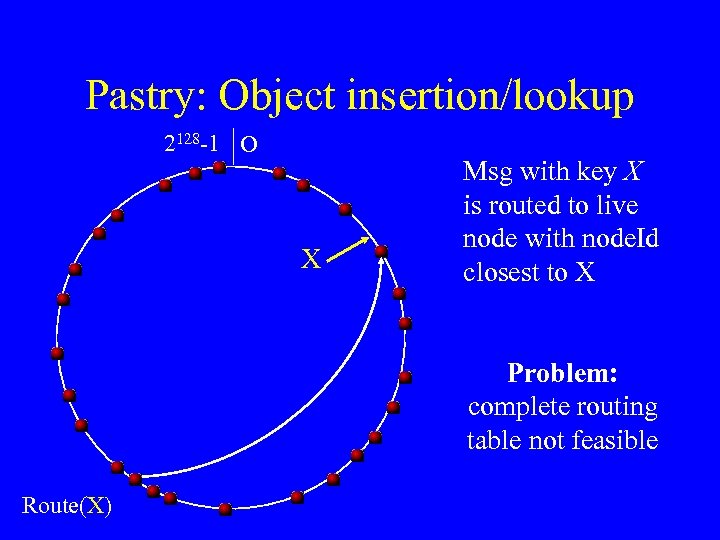

Pastry: Object insertion/lookup [Figure: Route(X) on the circular id space from 0 to $2^{128}-1$] Msg with key X is routed to live node with nodeId closest to X Problem: complete routing table not feasible

Pastry: Routing Tradeoff • O(log N) routing table size • O(log N) message forwarding steps

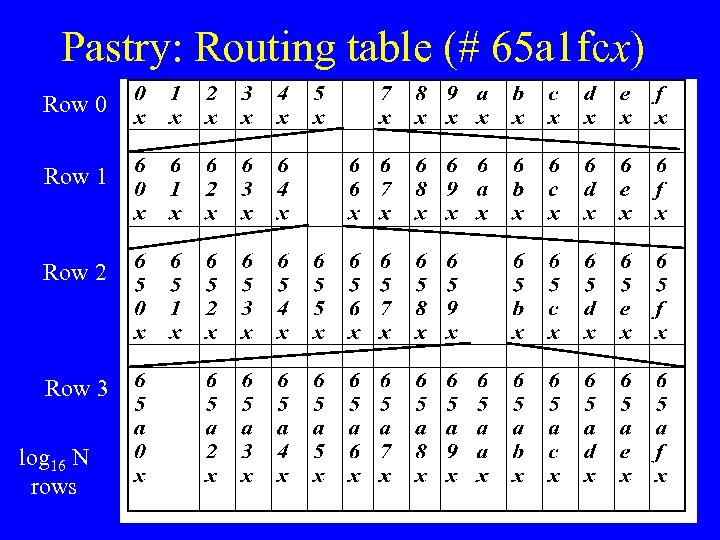

Pastry: Routing table (#65a1fcx) [Figure: routing table rows, Row 0 to Row 3] $\log_{16} N$ rows

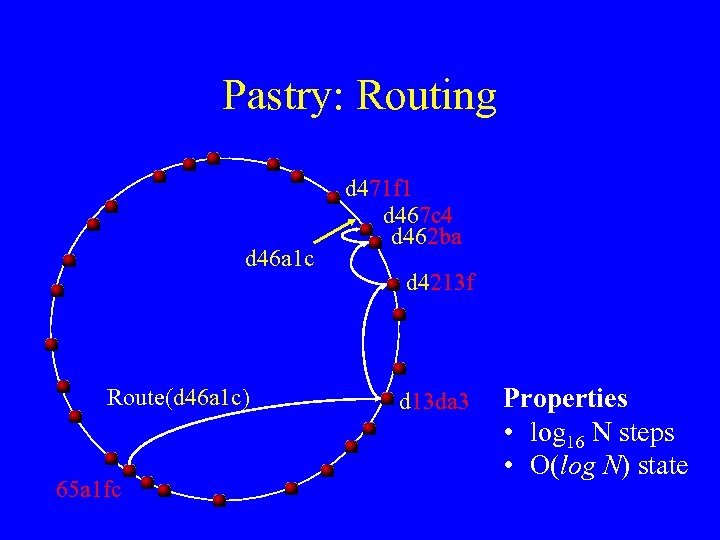

Pastry: Routing [Figure: Route(d46a1c); key d46a1c; nodes 65a1fc, d13da3, d4213f, d462ba, d467c4, d471f1] Properties • $\log_{16} N$ steps • O(log N) state
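A short sketch of why prefix routing takes about one step per digit: each hop shares a longer prefix with the key than the previous one. The node labels come from the slide; the hop order used here (65a1fc, d13da3, d4213f, d462ba) is an assumption read off the figure labels, and `shared_prefix_len` is an illustrative helper.

```python
def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading hex digits that two ids have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


KEY = "d46a1c"
# Assumed hop order for Route(d46a1c), starting at node 65a1fc.
ROUTE = ["65a1fc", "d13da3", "d4213f", "d462ba"]

prefix_lengths = [shared_prefix_len(hop, KEY) for hop in ROUTE]
```

With base-16 digits, every hop fixes at least one more digit of the key, which is where the $\log_{16} N$ step count comes from.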

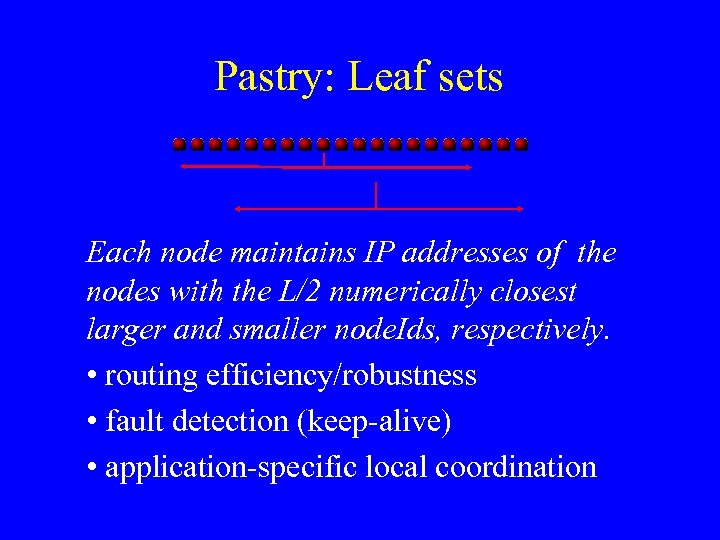

Pastry: Leaf sets Each node maintains IP addresses of the nodes with the L/2 numerically closest larger and smaller nodeIds, respectively. • routing efficiency/robustness • fault detection (keep-alive) • application-specific local coordination
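As a rough illustration of the leaf set definition, the sketch below picks the L/2 neighbors on each side of a node from a global list of ids. A real node never sees the global list; wrap-around at the ends of the id space and the function name are assumptions made for this example.

```python
def leaf_set(node_id: int, all_ids: list[int], size: int) -> tuple[list[int], list[int]]:
    """Return (smaller-side, larger-side) neighbors, size // 2 on each side.

    Neighbors are taken along the sorted ring, so the lists wrap around
    at the ends of the id space (an assumption of this sketch).
    """
    ring = sorted(set(all_ids) | {node_id})
    i = ring.index(node_id)
    # Never take more neighbors per side than half of the other nodes.
    half = min(size // 2, (len(ring) - 1) // 2)
    smaller = [ring[(i - k) % len(ring)] for k in range(1, half + 1)]
    larger = [ring[(i + k) % len(ring)] for k in range(1, half + 1)]
    return smaller, larger


ids = [10, 20, 30, 40, 50, 60]
middle = leaf_set(30, ids, size=4)
edge = leaf_set(10, ids, size=4)
```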

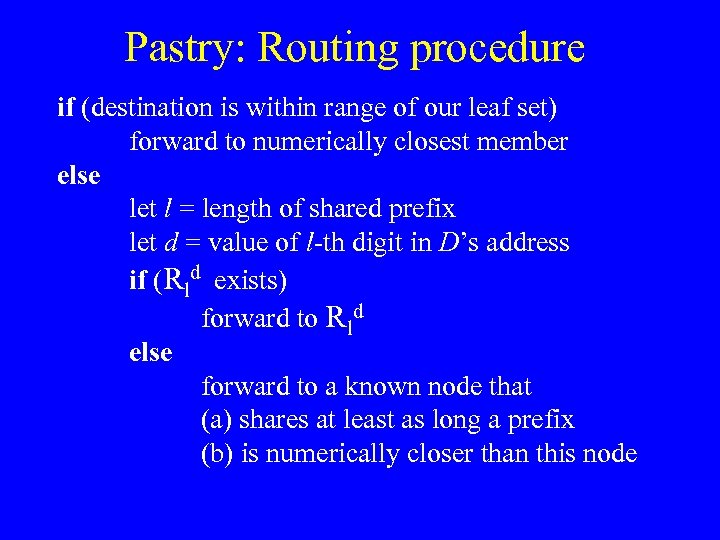

Pastry: Routing procedure

    if (destination D is within range of our leaf set)
        forward to numerically closest member
    else
        let l = length of shared prefix
        let d = value of l-th digit in D’s address
        if (R[l][d] exists)
            forward to R[l][d]
        else
            forward to a known node that
            (a) shares at least as long a prefix
            (b) is numerically closer than this node

Here R[l][d] is the routing table entry in row l, column d.
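The procedure above can be sketched in Python roughly as follows. This is a simplified illustration under several assumptions: ids are equal-length lowercase hex strings, the routing table is a dict keyed by (row, digit), wrap-around of the id space is ignored, and `next_hop` returning the local id means "deliver here". None of these representations come from the slides.

```python
def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading hex digits that two ids have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def next_hop(self_id: str, key: str, leaf_set: list[str],
             routing_table: dict[tuple[int, str], str]) -> str:
    def num(x: str) -> int:
        return int(x, 16)

    def dist(x: str) -> int:
        return abs(num(x) - num(key))

    # Case 1: the key falls within the range covered by the leaf set.
    members = leaf_set + [self_id]
    if min(map(num, members)) <= num(key) <= max(map(num, members)):
        return min(members, key=dist)

    # Case 2: use the routing table entry for row l and digit d.
    l = shared_prefix_len(self_id, key)
    d = key[l]
    entry = routing_table.get((l, d))
    if entry is not None:
        return entry

    # Case 3 (rare): any known node with an equally long prefix that is closer.
    known = leaf_set + list(routing_table.values())
    closer = [n for n in known
              if shared_prefix_len(n, key) >= l and dist(n) < dist(self_id)]
    return min(closer, key=dist) if closer else self_id


hop_table = next_hop("65a1fc", "d46a1c", ["65a0ff", "65a200"], {(0, "d"): "d13da3"})
hop_leaf = next_hop("d462ba", "d46a1c", ["d4213f", "d467c4", "d471f1"], {})
hop_rare = next_hop("d462ba", "d46a1c", ["d46200", "d46300"], {(2, "7"): "d471f1"})
```

The three calls exercise the routing table case, the leaf set case, and the fallback case in turn.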

Pastry: Performance Integrity of overlay/message delivery: • guaranteed unless L/2 simultaneous failures of nodes with adjacent nodeIds Number of routing hops: • No failures: < $\log_{16} N$ expected, 128/b + 1 max • During failure recovery: – O(N) worst case, average case much better
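To make the hop bounds concrete, here is a small calculation. It assumes b = 4 bits per digit, which is what the base-16 logarithm on the slide implies; the function names are illustrative.

```python
import math


def expected_hops_bound(n_nodes: int, b: int = 4) -> float:
    """Upper bound on the expected hop count without failures: log base 2**b of N."""
    return math.log(n_nodes, 2 ** b)


def max_hops(b: int = 4, id_bits: int = 128) -> int:
    """Worst-case hop count without failures, 128/b + 1 as stated on the slide."""
    return id_bits // b + 1


bound_100k = expected_hops_bound(100_000)
worst_case = max_hops()
```

For 100,000 nodes the expected bound works out to roughly 4.15 hops, while the worst case without failures is 33.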

Pastry: Self-organization Initializing and maintaining routing tables and leaf sets • Node addition • Node departure (failure)

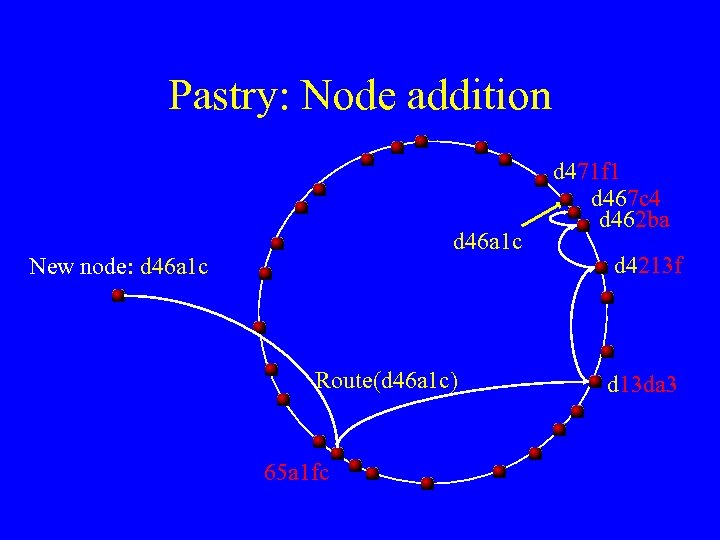

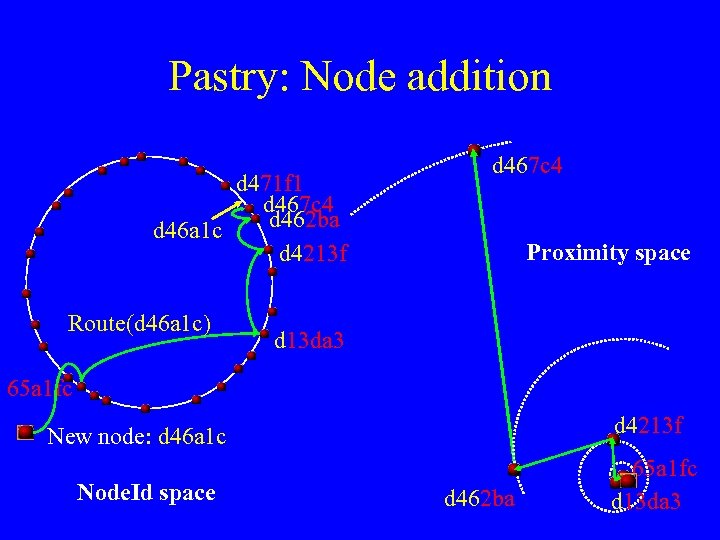

Pastry: Node addition New node: d46a1c [Figure: Route(d46a1c); nodes 65a1fc, d13da3, d4213f, d462ba, d467c4, d471f1]

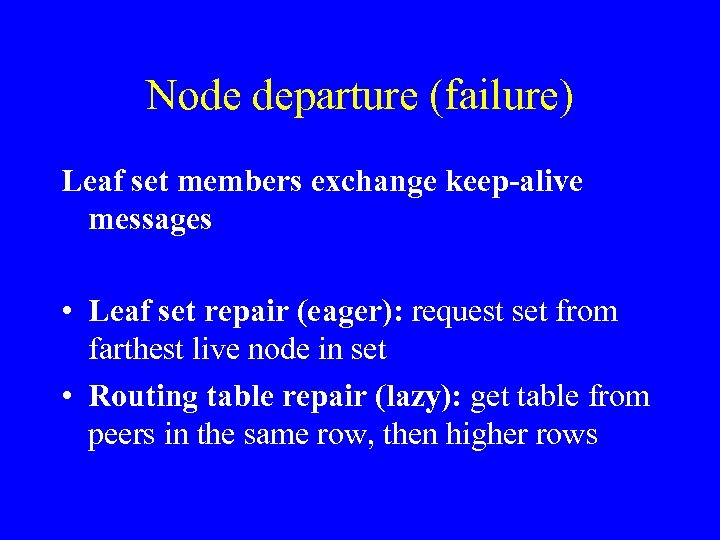

Node departure (failure) Leaf set members exchange keep-alive messages • Leaf set repair (eager): request set from farthest live node in set • Routing table repair (lazy): get table from peers in the same row, then higher rows

Pastry: Experimental results Prototype • implemented in Java • emulated network • deployed testbed (currently ~25 sites worldwide)

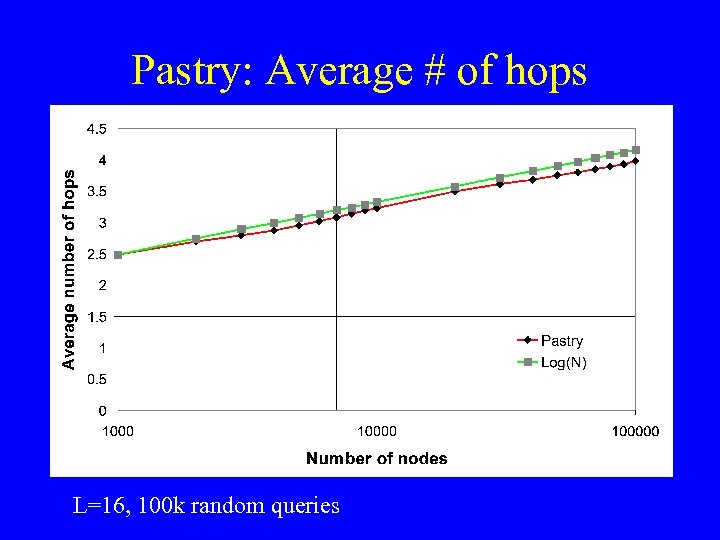

Pastry: Average # of hops L=16, 100k random queries

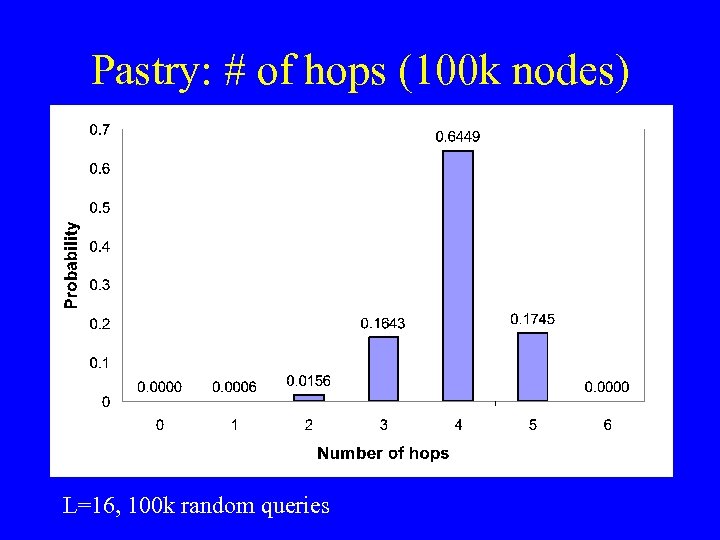

Pastry: # of hops (100k nodes) L=16, 100k random queries

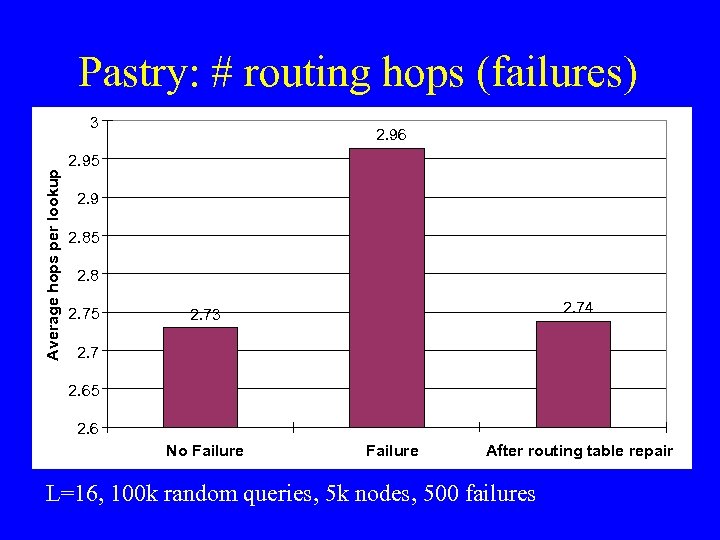

Pastry: # routing hops (failures) [Bar chart: average hops per lookup, y-axis from 2.6 to 3; bar labels 2.96, 2.74, 2.73; categories include No Failure and After routing table repair] L=16, 100k random queries, 5k nodes, 500 failures

Outline • Background • Pastry • proximity routing • PAST • SCRIBE • Conclusions

Pastry: Proximity routing Assumption: scalar proximity metric • e.g. ping delay, # IP hops • a node can probe distance to any other node Proximity invariant: Each routing table entry refers to a node close to the local node (in the proximity space), among all nodes with the appropriate nodeId prefix.

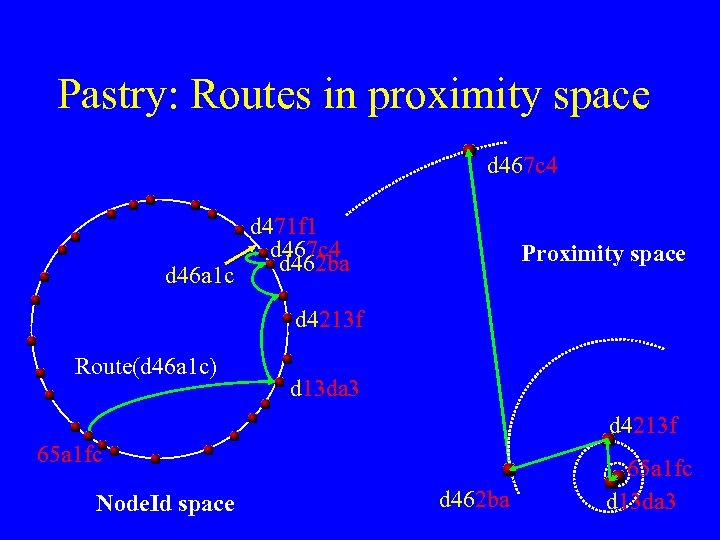

Pastry: Routes in proximity space [Figure: Route(d46a1c) shown in the proximity space and in the nodeId space; key d46a1c; nodes 65a1fc, d13da3, d4213f, d462ba, d467c4, d471f1]

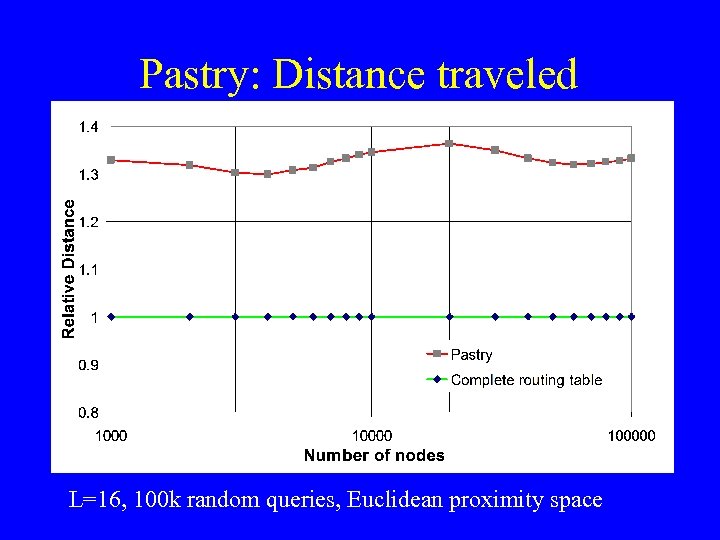

Pastry: Distance traveled L=16, 100k random queries, Euclidean proximity space

Pastry: Locality properties 1) Expected distance traveled by a message in the proximity space is within a small constant of the minimum 2) Routes of messages sent by nearby nodes with same keys converge at a node near the source nodes 3) Among k nodes with nodeIds closest to the key, message likely to reach the node closest to the source node first

Pastry: Node addition New node: d46a1c [Figure: Route(d46a1c) shown in the proximity space and in the nodeId space; nodes 65a1fc, d13da3, d4213f, d462ba, d467c4, d471f1]

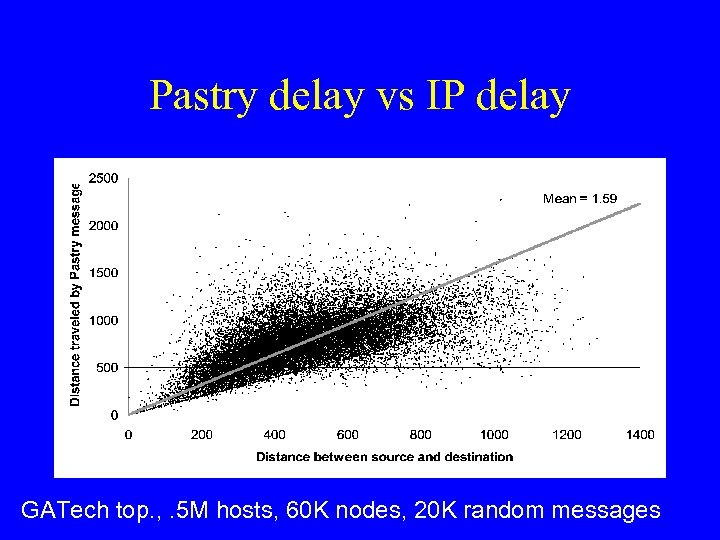

Pastry delay vs IP delay GATech topology, 0.5M hosts, 60K nodes, 20K random messages

Pastry: API • route(M, X): route message M to node with nodeId numerically closest to X • deliver(M): deliver message M to application • forwarding(M, X): message M is being forwarded towards key X • newLeaf(L): report change in leaf set L to application
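One way to picture this API is as a set of callbacks the application implements plus a `route` call it invokes. The sketch below is hypothetical: the class names, Python signatures, and the single-node stand-in for the overlay are assumptions for illustration and do not reflect the prototype's actual (Java) interface.

```python
from abc import ABC, abstractmethod


class PastryApplication(ABC):
    """Callbacks an application registers with its local node (names follow the slide)."""

    @abstractmethod
    def deliver(self, msg):
        """Called on the node whose nodeId is numerically closest to the key."""

    def forwarding(self, msg, key):
        """Called on each intermediate node; the default does nothing."""

    def new_leaf(self, leaf_set):
        """Called when the local leaf set changes; the default does nothing."""


class SingleNodeOverlay:
    """Hypothetical stand-in for an overlay with one node: every key maps to it."""

    def __init__(self, app: PastryApplication):
        self.app = app

    def route(self, msg, key):
        # With a single node there are no intermediate hops, so deliver directly.
        self.app.deliver(msg)


class Recorder(PastryApplication):
    """Toy application that remembers every delivered message."""

    def __init__(self):
        self.received = []

    def deliver(self, msg):
        self.received.append(msg)


app = Recorder()
SingleNodeOverlay(app).route("hello", key=0xD46A1C)
```

Applications such as PAST and SCRIBE would put their logic in `deliver` and `forwarding`; the overlay stays unaware of what the messages mean.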

Pastry: Security • Secure nodeId assignment • Secure node join protocols • Randomized routing • Byzantine fault-tolerant leaf set membership protocol

Pastry: Summary • Generic p2p overlay network • Scalable, fault resilient, self-organizing, secure • O(log N) routing steps (expected) • O(log N) routing table size • Network proximity routing

Outline • Background • Pastry • proximity routing • PAST • SCRIBE • Conclusions

PAST: Cooperative, archival file storage and distribution • Layered on top of Pastry • Strong persistence • High availability • Scalability • Reduced cost (no backup) • Efficient use of pooled resources

PAST API • Insert - store replica of a file at k diverse storage nodes • Lookup - retrieve file from a nearby live storage node that holds a copy • Reclaim - free storage associated with a file Files are immutable

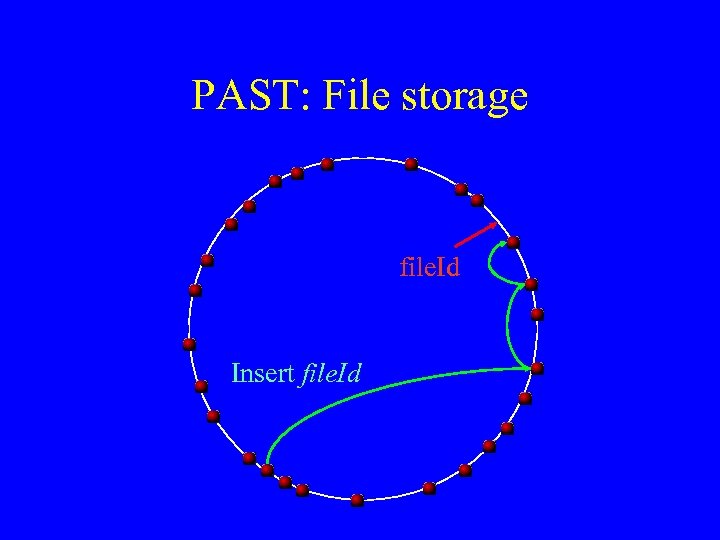

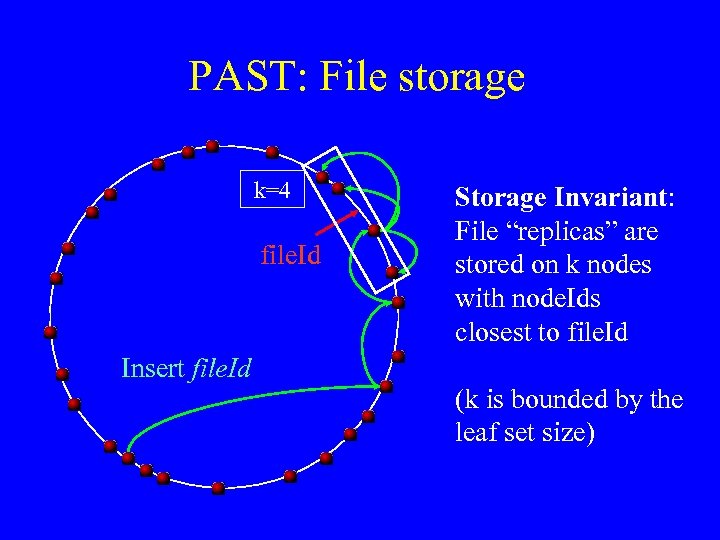

PAST: File storage [Figure: Insert fileId]

PAST: File storage [Figure: Insert fileId, k=4] Storage invariant: file “replicas” are stored on the k nodes with nodeIds closest to fileId (k is bounded by the leaf set size)
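A minimal sketch of the storage invariant: given a global view of nodeIds (which a real node does not have), pick the k ids closest to the fileId. Distance around the ring, the tie-break, and the function name are assumptions of this example.

```python
def replica_nodes(file_id: int, node_ids: list[int], k: int, id_bits: int = 128) -> list[int]:
    """Return the k nodeIds closest to file_id, nearest first."""
    space = 1 << id_bits

    def ring_distance(n: int) -> int:
        d = abs(n - file_id) % space
        return min(d, space - d)

    # Assumed tie-break: the smaller nodeId wins when distances are equal.
    return sorted(node_ids, key=lambda n: (ring_distance(n), n))[:k]


# Hypothetical small id values, chosen only to keep the example readable.
nodes = [5, 20, 40, 41, 90, 200]
replicas = replica_nodes(42, nodes, k=4)
```

Because these k nodes are adjacent in the id space, they all appear in each other's leaf sets, which is why k is bounded by the leaf set size.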

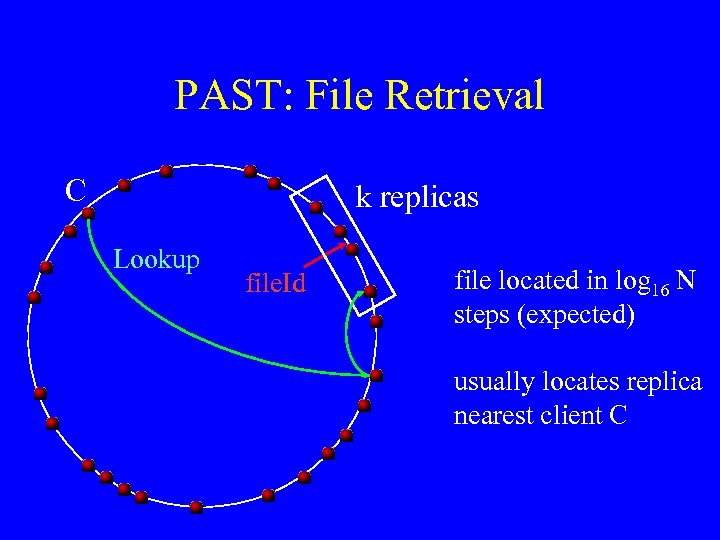

PAST: File Retrieval [Figure: client C issues Lookup fileId; k replicas] • file located in $\log_{16} N$ steps (expected) • usually locates replica nearest client C

PAST: Exploiting Pastry • Random, uniformly distributed nodeIds – replicas stored on diverse nodes • Uniformly distributed fileIds – e.g. SHA-1(filename, public key, salt) – approximate load balance • Pastry routes to closest live nodeId – availability, fault-tolerance
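The fileId computation mentioned above might look like the following sketch. The slide only names the three inputs; how they are encoded and combined (UTF-8 filename, length-prefixed fields) is an assumption made here so that the hash input is unambiguous.

```python
import hashlib


def file_id(filename: str, public_key: bytes, salt: bytes) -> int:
    """Derive a 160-bit fileId from SHA-1 over the three fields."""
    h = hashlib.sha1()
    for part in (filename.encode("utf-8"), public_key, salt):
        # Assumed encoding: a 4-byte length prefix keeps field boundaries unambiguous.
        h.update(len(part).to_bytes(4, "big"))
        h.update(part)
    return int.from_bytes(h.digest(), "big")


# Placeholder inputs for illustration only.
fid_a = file_id("report.txt", b"owner-public-key", b"salt-1")
fid_b = file_id("report.txt", b"owner-public-key", b"salt-2")
```

Changing only the salt yields an unrelated fileId, so the same file can be mapped to a different set of nodes.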

PAST: Storage management • Maintain storage invariant • Balance free space when global utilization is high – statistical variation in assignment of files to nodes (fileId/nodeId) – file size variations – node storage capacity variations • Local coordination only (leaf sets)

Experimental setup • Web proxy traces from NLANR – 18.7 Gbytes, 10.5K mean, 1.4K median, 0 min, 138 MB max • Filesystem – 166.6 Gbytes, 88K mean, 4.5K median, 0 min, 2.7 GB max • 2250 PAST nodes (k = 5) – truncated normal distributions of node storage sizes, mean = 27/270 MB

Need for storage management • No diversion (tpri = 1, tdiv = 0): – max utilization 60.8% – 51.1% inserts failed • Replica/file diversion (tpri = 0.1, tdiv = 0.05): – max utilization > 98% – < 1% inserts failed

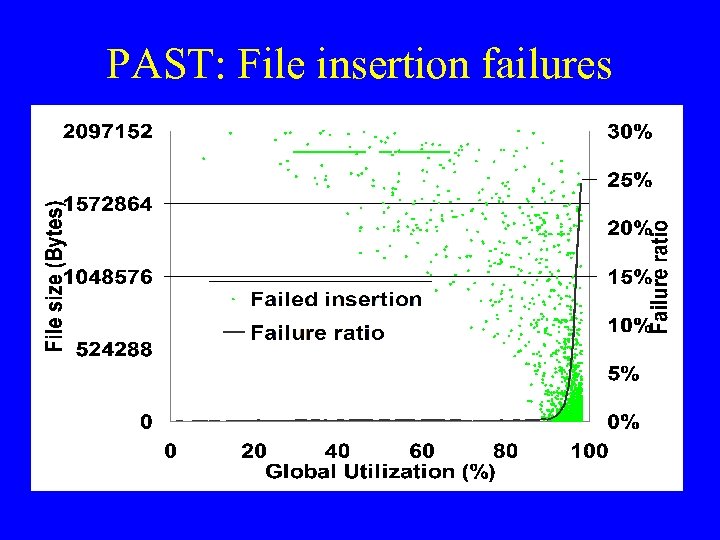

PAST: File insertion failures

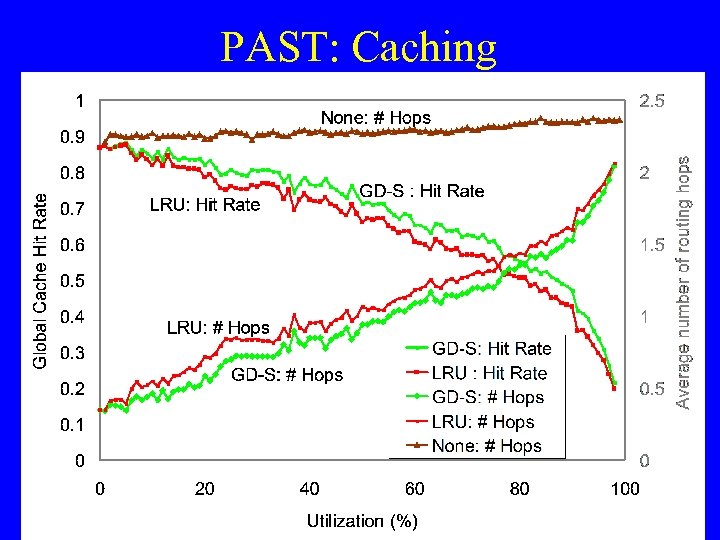

PAST: Caching • Nodes cache files in the unused portion of their allocated disk space • Files are cached on nodes along the route of lookup and insert messages Goals: • maximize query throughput for popular documents • balance query load • improve client latency

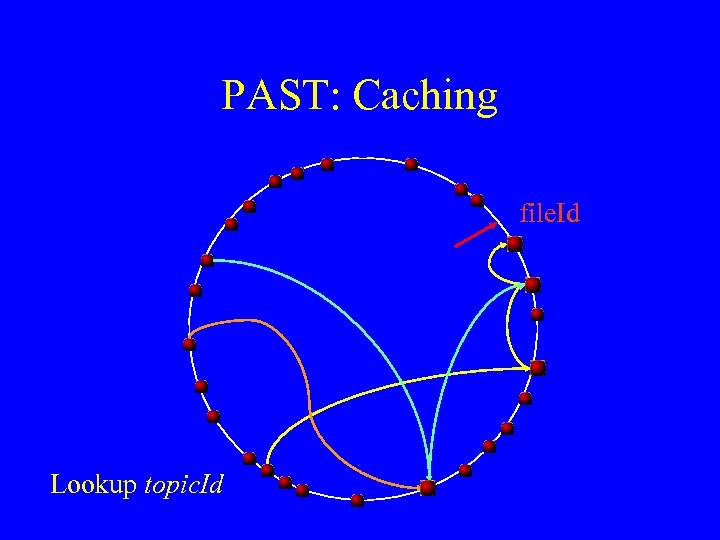

PAST: Caching [Figure labels: fileId, Lookup, topicId]

PAST: Caching

PAST: Security • No read access control; users may encrypt content for privacy • File authenticity: file certificates • System integrity: nodeIds, fileIds non-forgeable, sensitive messages signed • Routing randomized

PAST: Storage quotas Balance storage supply and demand • user holds smartcard issued by brokers – hides user private key, usage quota – debits quota upon issuing file certificate • storage nodes hold smartcards – advertise supply quota – storage nodes subject to random audits within leaf sets

PAST: Related Work • CFS [SOSP’01] • OceanStore [ASPLOS 2000] • FarSite [Sigmetrics 2000]

Outline • Background • Pastry • locality properties • PAST • SCRIBE • Conclusions

SCRIBE: Large-scale, decentralized multicast • Infrastructure to support topic-based publish-subscribe applications • Scalable: large numbers of topics, subscribers, wide range of subscribers/topic • Efficient: low delay, low link stress, low node overhead

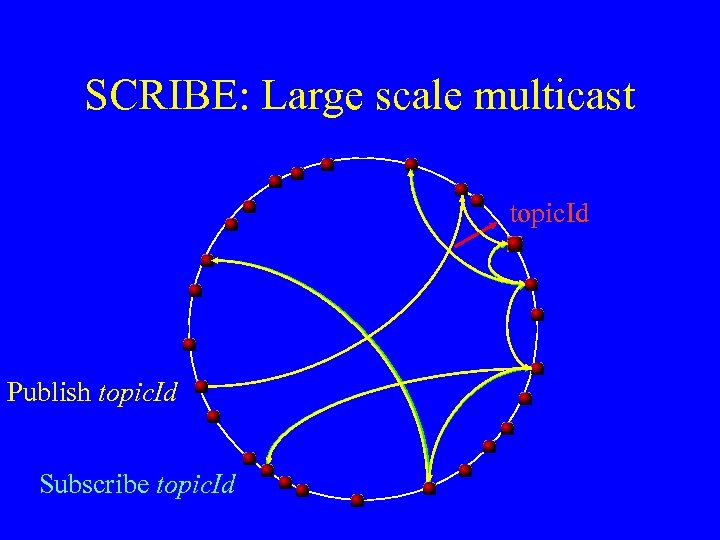

SCRIBE: Large scale multicast [Figure labels: topicId, Publish topicId, Subscribe topicId]

Scribe: Results • Simulation results • Comparison with IP multicast: delay, node stress and link stress • Experimental setup – Georgia Tech Transit-Stub model – 100,000 nodes randomly selected out of 0.5M – Zipf-like subscription distribution, 1500 topics

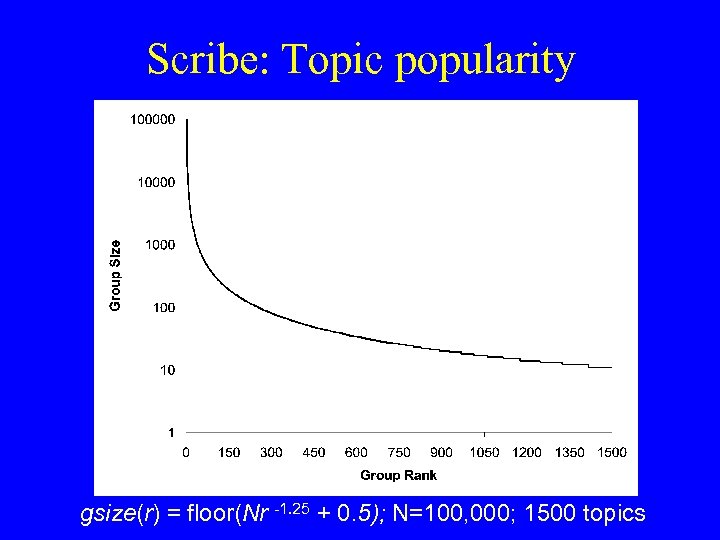

Scribe: Topic popularity $\mathrm{gsize}(r) = \lfloor N r^{-1.25} + 0.5 \rfloor$; N = 100,000; 1500 topics
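The group size formula is easy to evaluate directly. The sketch below assumes r is the topic's popularity rank, starting at 1, and uses N = 100,000 and 1500 topics from the slide; the variable names are illustrative.

```python
import math


def gsize(rank: int, n: int = 100_000) -> int:
    """Number of subscribers of the topic with the given popularity rank."""
    return math.floor(n * rank ** -1.25 + 0.5)


sizes = [gsize(r) for r in range(1, 1501)]
largest, smallest = sizes[0], sizes[-1]
```

Under this formula the most popular topic has every one of the 100,000 nodes subscribed, while the least popular of the 1500 topics has only 11 subscribers.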

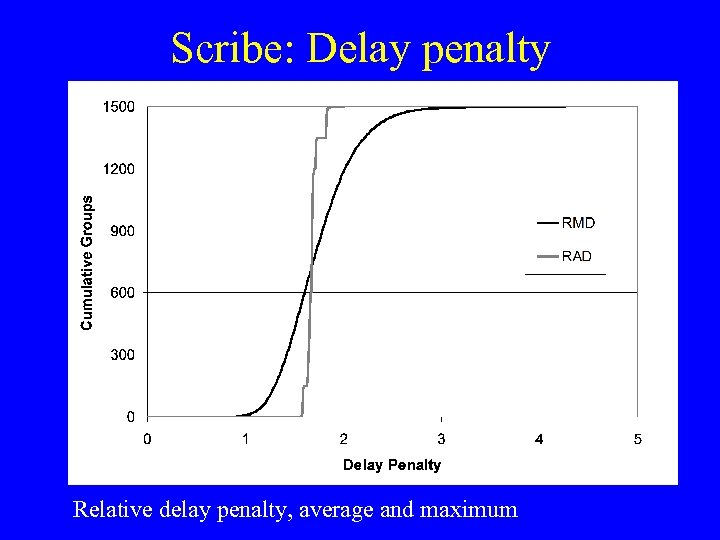

Scribe: Delay penalty Relative delay penalty, average and maximum

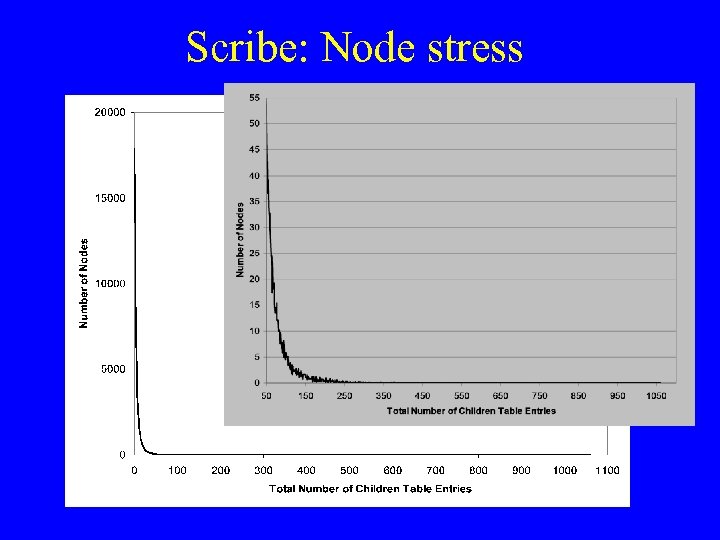

Scribe: Node stress

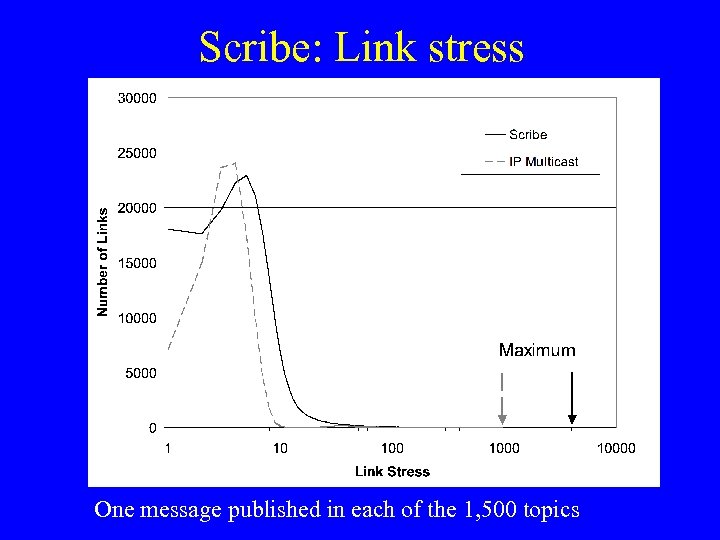

Scribe: Link stress One message published in each of the 1,500 topics

Related works • Narada • Bayeux/Tapestry • CAN-Multicast

Summary Self-configuring P2P framework for topic-based publish-subscribe • Scribe achieves reasonable performance when compared to IP multicast – Scales to a large number of subscribers – Scales to a large number of topics – Good distribution of load

Status Functional prototypes • Pastry [Middleware 2001] • PAST [HotOS-VIII, SOSP’01] • SCRIBE [NGC 2001, IEEE JSAC] • SplitStream [submitted] • Squirrel [PODC’02] http://www.cs.rice.edu/CS/Systems/Pastry

Current Work • Security – secure routing/overlay maintenance/nodeId assignment – quota system • Keyword search capabilities • Support for mutable files in PAST • Anonymity/Anti-censorship • New applications • Free software releases

Conclusion For more information: http://www.cs.rice.edu/CS/Systems/Pastry