37cd4984652c2382157cad14c492d38f.ppt

- Number of slides: 44

Scalable Group Communication for the Internet • Idit Keidar • MIT Lab for Computer Science, Theory of Distributed Systems Group • The main part of this talk is joint work with Sussman, Marzullo and Dolev

Collaborators • Tal Anker • Ziv Bar-Joseph • Gregory Chockler • Danny Dolev • Alan Fekete • Nabil Huleihel • Kyle Ingols • Roger Khazan • Carl Livadas • Nancy Lynch • Keith Marzullo • Yoav Sasson • Jeremy Sussman • Alex Shvartsman • Igor Tarashchanskiy • Roman Vitenberg • Esti Yeger-Lotem

Outline • Motivation • Group communication - background • A novel architecture for scalable group communication services in WAN • A new scalable group membership algorithm – Specification – Algorithm – Implementation – Performance • Conclusions

Modern Distributed Applications (in WANs) • Highly available servers – Web – Video-on-Demand • Collaborative computing – Shared white-board, shared editor, etc. – Military command control – On-line strategy games • Stock market

Important Issues in Building Distributed Applications • Consistency of view – Same picture of game, same shared file • Fault tolerance, high availability • Performance – Conflicts with consistency? • Scalability – Topology - WAN, long unpredictable delays – Number of participants

Generic Primitives - Middleware, “Building Blocks” • E.g., total order, group communication • Abstract away difficulties, e.g., – Total order - a basis for replication – Mask failures • Important issues: – Well specified semantics - complete – Performance

Research Approach • Rigorous modeling, specification, proofs, performance analysis • Implementation and performance tuning • Services Applications • Specific examples General observations

Group Communication [Diagram: Send(G) to group G] • Group abstraction - a group of processes is one logical… • Dynamic Groups (join, leave, crash) • Systems: Ensemble, Horus, ISIS, Newtop, Psync, Sphyn…


Virtual Synchrony [Birman, Joseph 87] • Group members all see events in same order – Events: messages, process crash/join • Powerful abstraction for replication • Framework for fault tolerance, high availability • Basic component: group membership – Reports changes in set of group members


Example: Highly Available VoD [Anker, Dolev, Keidar ICDCS 1999] • Dynamic set of servers • Clients talk to “abstract” service • Server can crash, client shouldn’t know

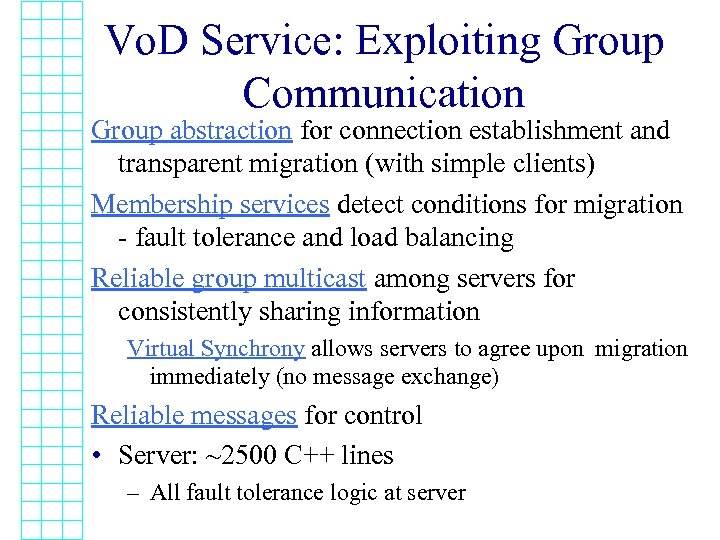

VoD Service: Exploiting Group Communication • Group abstraction for connection establishment and transparent migration (with simple clients) • Membership services detect conditions for migration - fault tolerance and load balancing • Reliable group multicast among servers for consistently sharing information • Virtual Synchrony allows servers to agree upon migration immediately (no message exchange) • Reliable messages for control • Server: ~2500 C++ lines – All fault tolerance logic at server

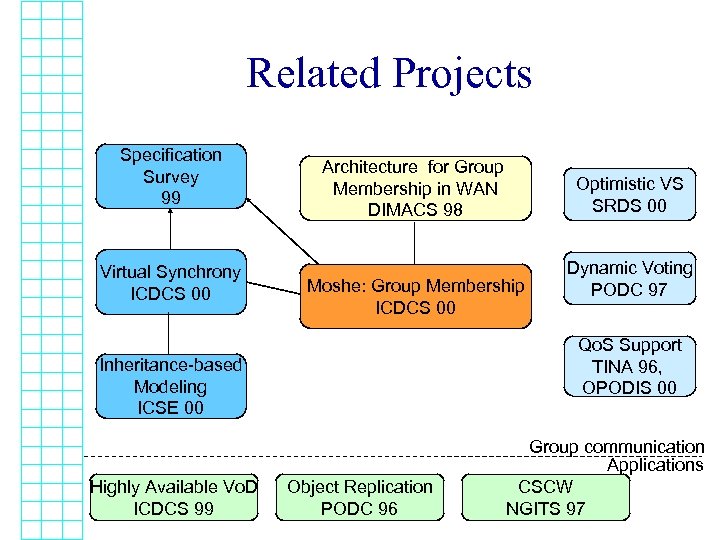

Related Projects • Specification Survey (99) • Virtual Synchrony (ICDCS 00) • Architecture for Group Membership in WAN (DIMACS 98) • Moshe: Group Membership (ICDCS 00) • Dynamic Voting (PODC 97) • QoS Support (TINA 96, OPODIS 00) • Inheritance-based Modeling (ICSE 00) • Highly Available VoD (ICDCS 99) • Optimistic VS (SRDS 00) • Object Replication (PODC 96) • CSCW (NGITS 97) [Diagram labels: Group communication, Applications]

A Scalable Architecture for Group Membership in WANs Tal Anker, Gregory Chockler, Danny Dolev, Idit Keidar DIMACS Workshop 1998

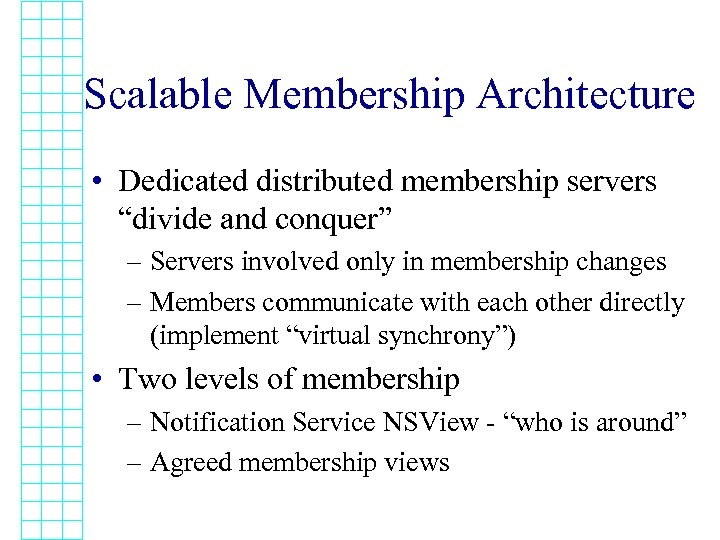

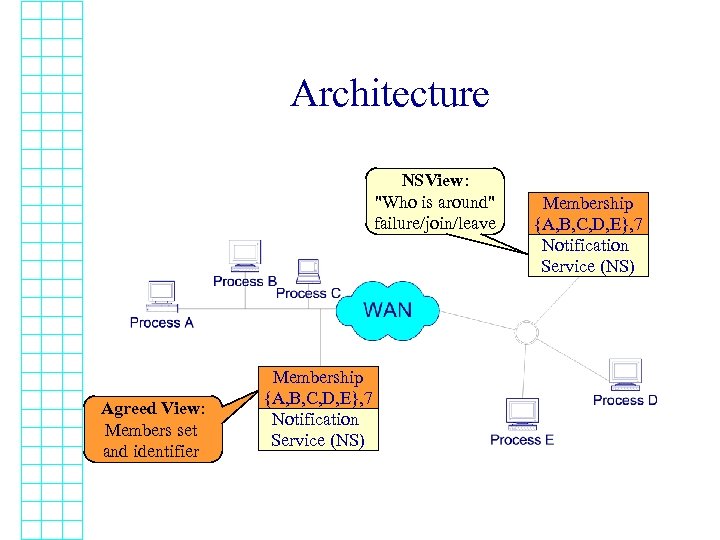

Scalable Membership Architecture • Dedicated distributed membership servers “divide and conquer” – Servers involved only in membership changes – Members communicate with each other directly (implement “virtual synchrony”) • Two levels of membership – Notification Service NSView - “who is around” – Agreed membership views

Architecture [Diagram: Membership layer above the Notification Service (NS)] • NSView: "Who is around" (failure/join/leave) • Agreed View: members set and identifier, e.g., {A, B, C, D, E}, 7

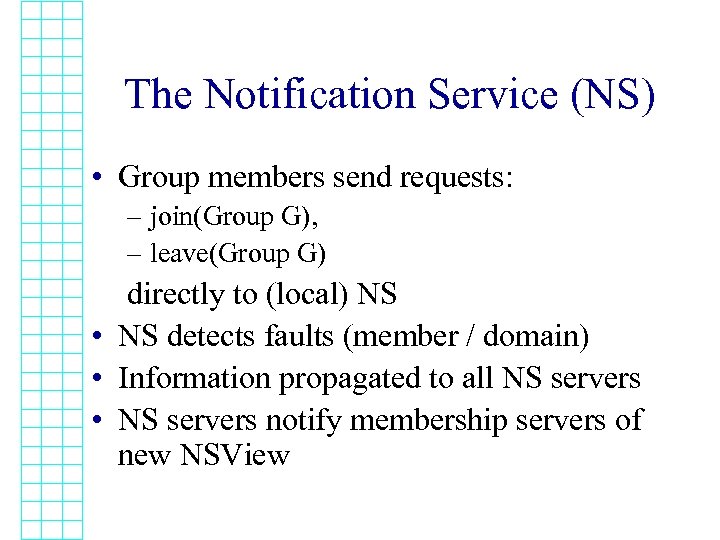

The Notification Service (NS) • Group members send requests: – join(Group G), – leave(Group G) directly to (local) NS • NS detects faults (member / domain) • Information propagated to all NS servers • NS servers notify membership servers of new NSView
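The request-and-notify flow on this slide can be pictured with a toy, single-process stand-in. This is only a sketch: the class name `NotificationService`, its methods, and the callback signature are hypothetical and not taken from the talk or from CONGRESS, fault detection is modeled as an explicit `suspect` call, and propagation between NS servers is omitted.

```python
from collections import defaultdict
from typing import Callable, Dict, FrozenSet, List, Set

NSView = FrozenSet[str]  # "who is around" for one group


class NotificationService:
    """Toy stand-in for the NS (hypothetical API, not the real system)."""

    def __init__(self) -> None:
        self._members: Dict[str, Set[str]] = defaultdict(set)
        self._listeners: List[Callable[[str, NSView], None]] = []

    def subscribe(self, listener: Callable[[str, NSView], None]) -> None:
        # A membership server registers to be told about new NSViews.
        self._listeners.append(listener)

    def join(self, group: str, member: str) -> None:
        self._change(group, member, present=True)

    def leave(self, group: str, member: str) -> None:
        self._change(group, member, present=False)

    def suspect(self, group: str, member: str) -> None:
        # Assumption: a detected fault is reported like a removal.
        self._change(group, member, present=False)

    def _change(self, group: str, member: str, present: bool) -> None:
        current = self._members[group]
        if (member in current) == present:
            return  # nothing changed, so no new NSView is reported
        if present:
            current.add(member)
        else:
            current.discard(member)
        view = frozenset(current)
        for listener in self._listeners:
            listener(group, view)
```

A membership server would call `subscribe` once and then react to each reported NSView.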

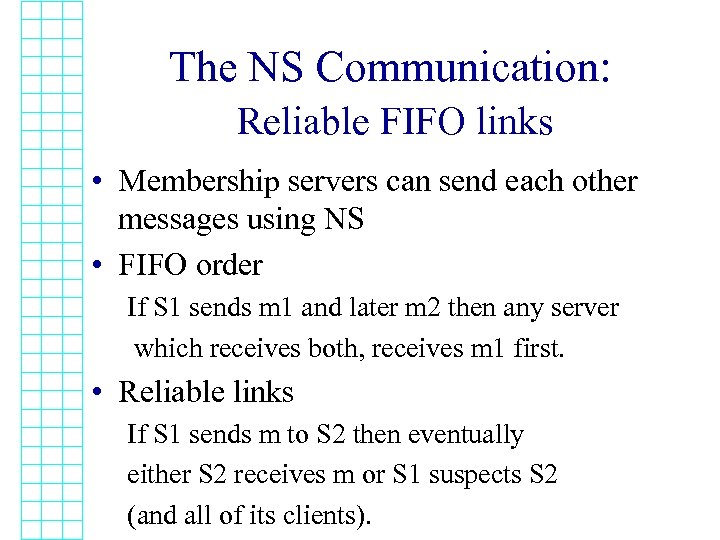

The NS Communication: Reliable FIFO links • Membership servers can send each other messages using NS • FIFO order: if S1 sends m1 and later m2, then any server which receives both receives m1 first. • Reliable links: if S1 sends m to S2, then eventually either S2 receives m or S1 suspects S2 (and all of its clients).
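The FIFO property can be stated as a check over one server's receive log. The sketch below assumes each message carries a hypothetical per-sender sequence number reflecting send order (the slide does not say how order is tracked); gaps are allowed, since the property only constrains messages that are both received.

```python
from typing import Dict, Iterable, Tuple


def respects_fifo(received: Iterable[Tuple[str, int]]) -> bool:
    """Check a receive log of (sender, seq) pairs, listed in receipt order.

    seq is an assumed non-negative per-sender send counter. The log is FIFO
    if, for every sender, sequence numbers appear in strictly increasing order.
    """
    last_seen: Dict[str, int] = {}
    for sender, seq in received:
        if seq <= last_seen.get(sender, -1):
            return False  # an earlier message arrived after a later one
        last_seen[sender] = seq
    return True
```

For example, `respects_fifo([("S1", 1), ("S2", 1), ("S1", 2)])` holds, while a log that lists `("S1", 2)` before `("S1", 1)` does not.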

Moshe: A Group Membership Algorithm for WANs Idit Keidar, Jeremy Sussman Keith Marzullo, Danny Dolev ICDCS 2000

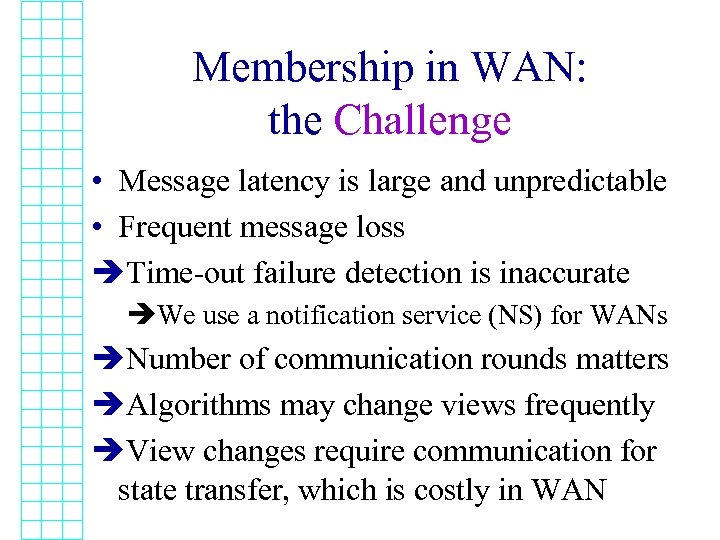

Membership in WAN: the Challenge • Message latency is large and unpredictable • Frequent message loss ⇒ Time-out failure detection is inaccurate ⇒ We use a notification service (NS) for WANs ⇒ Number of communication rounds matters ⇒ Algorithms may change views frequently ⇒ View changes require communication for state transfer, which is costly in WAN

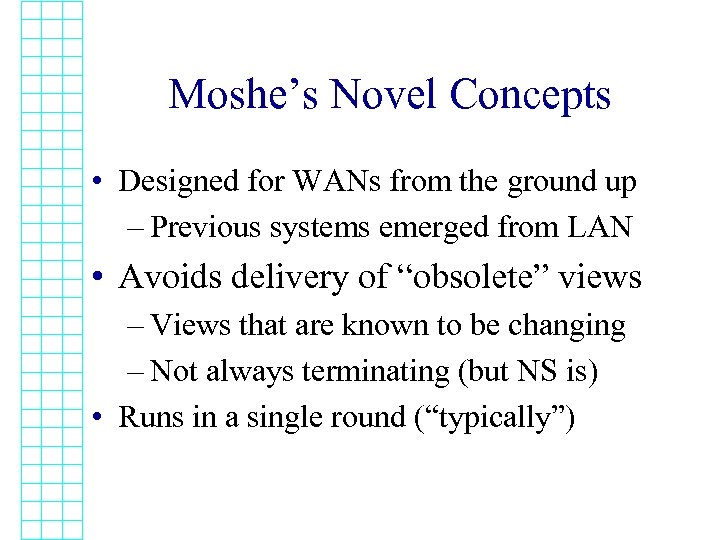

Moshe’s Novel Concepts • Designed for WANs from the ground up – Previous systems emerged from LAN • Avoids delivery of “obsolete” views – Views that are known to be changing – Not always terminating (but NS is) • Runs in a single round (“typically”)

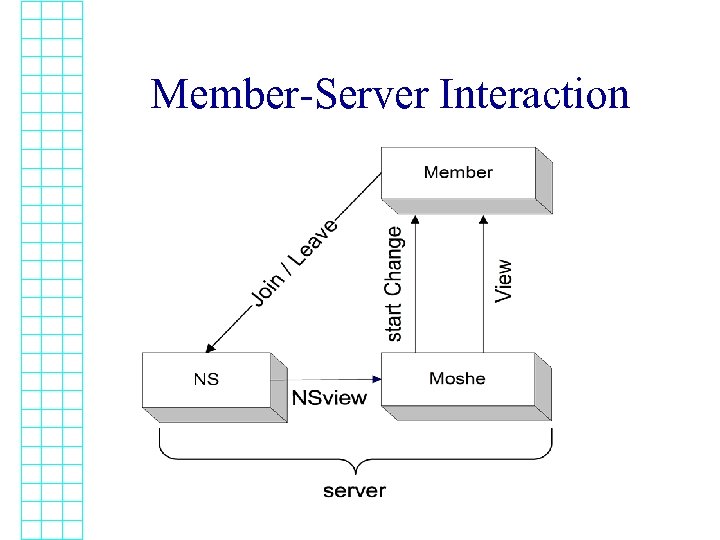

Member-Server Interaction

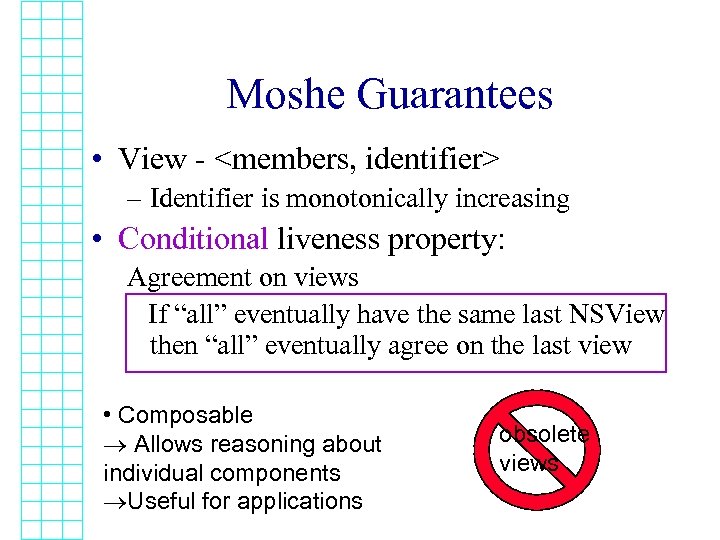

Moshe Guarantees • View - <members, identifier> – Identifier is monotonically increasing • Conditional liveness property: Agreement on views – If “all” eventually have the same last NSView then “all” eventually agree on the last view • Composable ⇒ Allows reasoning about individual components ⇒ Useful for applications obsolete views
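The view structure and the identifier guarantee can be written down as a small check. This is a sketch, not the paper's formal specification: the `View` type and the function name are hypothetical, and "monotonically increasing" is assumed here to mean strictly increasing at each member.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class View:
    members: FrozenSet[str]
    identifier: int


def identifiers_increase(delivered: Iterable[View]) -> bool:
    """True if the views delivered to one member carry strictly increasing identifiers."""
    previous: Optional[int] = None
    for view in delivered:
        if previous is not None and view.identifier <= previous:
            return False
        previous = view.identifier
    return True
```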

Moshe Operation: Typical Case • In response to new NSView (members), – send proposal to other servers with NSView – send startChange to local members (clients) • Once proposals from all servers of NSView members arrive, deliver view to local members: – members: NSView – identifier higher than all previous
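A minimal sketch of the one-round case follows. It makes several simplifying assumptions that are not from the talk: the NSView is represented directly as the set of server names, each proposal carries one more than the sender's last delivered identifier, the view identifier is the maximum over all proposals, and startChange messages and out-of-sync proposals are ignored. The class and method names are hypothetical.

```python
from typing import Dict, FrozenSet, List, Optional, Tuple

Proposal = Tuple[str, FrozenSet[str], int]  # (sender, NSView, proposed number)


class MosheServerSketch:
    """One-round case only; out-of-sync proposals are simply dropped."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.last_id = 0  # highest identifier delivered so far
        self.ns_view: Optional[FrozenSet[str]] = None
        self.proposals: Dict[str, int] = {}
        self.delivered: List[Tuple[FrozenSet[str], int]] = []

    def on_ns_view(self, servers: FrozenSet[str]) -> Proposal:
        # New NSView: start collecting proposals and return our own,
        # which the caller is expected to send to the other servers.
        self.ns_view = servers
        self.proposals = {}
        proposal = (self.name, servers, self.last_id + 1)
        self.on_proposal(proposal)  # count our own proposal too
        return proposal

    def on_proposal(self, proposal: Proposal) -> None:
        sender, servers, number = proposal
        if self.ns_view is None or servers != self.ns_view:
            return  # out-of-sync case, not handled in this sketch
        self.proposals[sender] = number
        if set(self.proposals) == set(self.ns_view):
            # Proposals from all servers arrived: deliver the view.
            view_id = max(self.proposals.values())
            self.last_id = view_id
            self.delivered.append((self.ns_view, view_id))
            self.ns_view = None
            self.proposals = {}
```

Because every server proposes a number above its own last identifier, taking the maximum yields an identifier higher than any view previously delivered at any participating server.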

Goal: Self Stabilizing • Once the same last NSView is received by all servers: – All send proposals for this NSView – All the proposals reach all the servers – All servers use these proposals to deliver the same view And they live happily ever after!

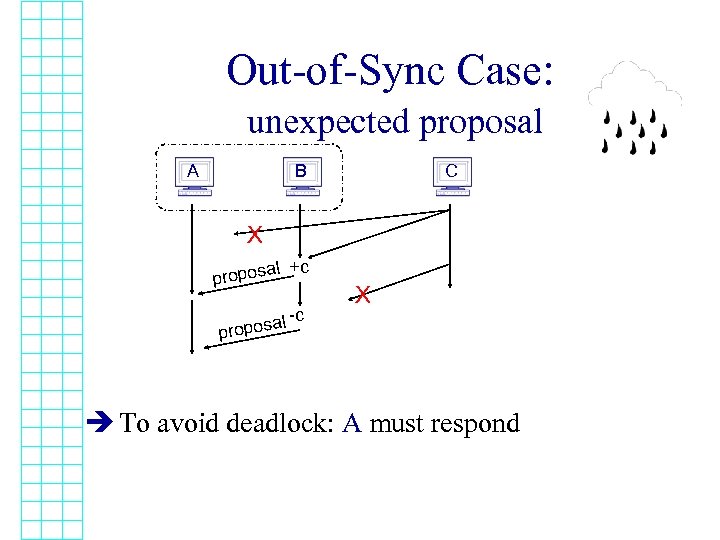

Out-of-Sync Case: unexpected proposal [Diagram: servers A, B, C; NS events +C and -C; two proposal messages, with X marks] ⇒ To avoid deadlock: A must respond

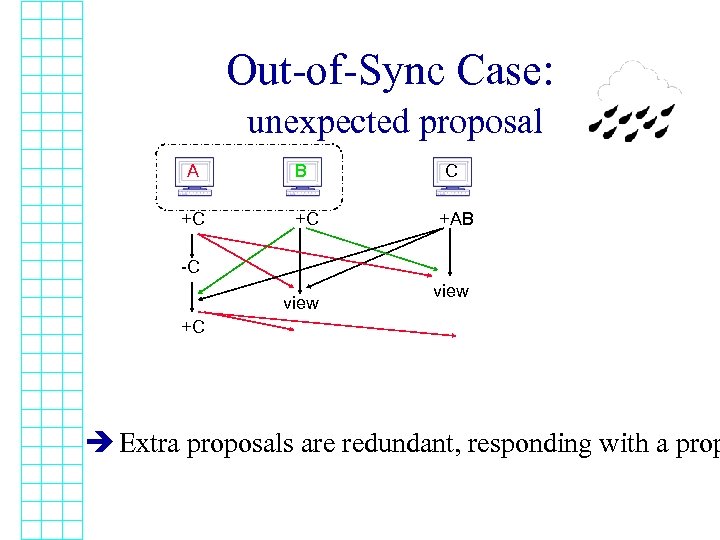

Out-of-Sync Case: unexpected proposal [Diagram: A: +C; B: +C; C: +AB; then -C, view, +C] ⇒ Extra proposals are redundant, responding with a prop…

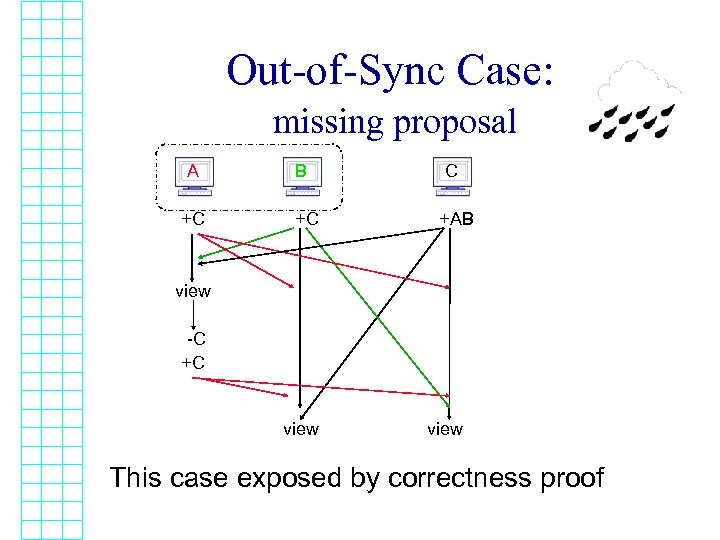

Out-of-Sync Case: missing proposal [Diagram: A: +C; B: +C; C: +AB; then view, -C, +C, view] • This case exposed by correctness proof

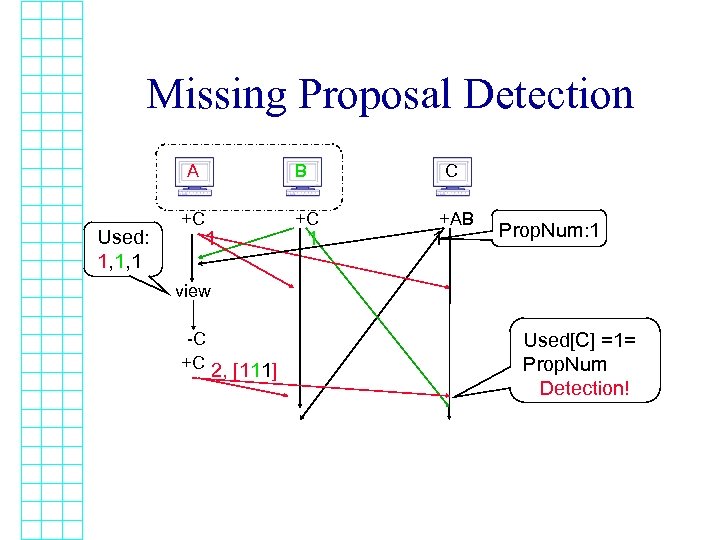

Missing Proposal Detection [Diagram: A: +C, proposal 1; B: +C, proposal 1; C: +AB, proposal 1, PropNum: 1; then -C, +C; view 2 with Used: [1, 1, 1]] • Used[C] = 1 = PropNum: Detection!

Handling Out-of-Sync Cases: “Slow Agreement” • Also sends proposals, tagged “SA” • Invoked upon blocking detection or upon receipt of “SA” proposal • Upon receipt of “SA” proposal with bigger number than PropNum, respond with same number • Deliver view only with “full house” of same number proposals
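The "full house" rule can be illustrated with a toy synchronous simulation. The sketch assumes lossless lockstep rounds, a fixed set of servers, and no further NSView changes while it runs; the function name and the round model are illustrative and not part of Moshe itself.

```python
from typing import Dict, Tuple


def slow_agreement(prop_nums: Dict[str, int]) -> Tuple[int, int]:
    """Simulate SA proposals in lockstep rounds.

    prop_nums maps each server to its current PropNum.
    Returns (agreed number, rounds used).
    """
    if not prop_nums:
        raise ValueError("need at least one server")
    current = dict(prop_nums)
    rounds = 0
    while True:
        rounds += 1
        sent = dict(current)  # every server sends an SA proposal to all
        if len(set(sent.values())) == 1:
            # Full house: all proposals carry the same number.
            return next(iter(sent.values())), rounds
        # Servers that saw a bigger number respond with that number.
        highest = max(sent.values())
        current = {name: highest for name in sent}
```

Under these assumptions the loop ends after one round when all servers already hold the same PropNum, and after two rounds otherwise.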

How Typical is the “typical” Case? • Depends on the notification service (NS) – Classify NS good behaviors: symmetric and transitive perception of failures • Transitivity depends on logical topology, how suspicions propagate • Typical case should be very common • Need to measure


Implementation • Use CONGRESS [Anker et al] – NS for WAN – Always symmetric, can be non-transitive – Logical topology can be configured • Moshe servers extend CONGRESS servers • Socket interface with processes

The Experiment • Run over the Internet – In the US: MIT, Cornell (CU), UCSD – In Taiwan: NTU – In Israel: HUJI • Run for 10 days in one configuration, 2.5 days in another • 10 clients at each location – continuously join/leave 10 groups

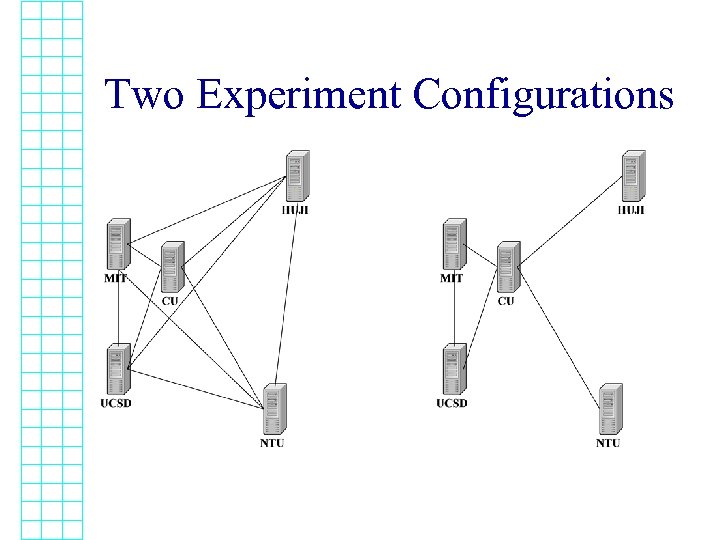

Two Experiment Configurations

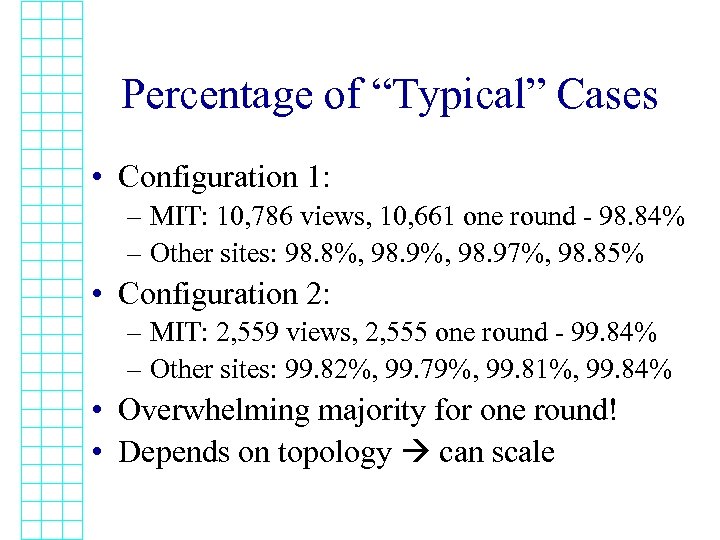

Percentage of “Typical” Cases • Configuration 1: – MIT: 10,786 views, 10,661 one round - 98.84% – Other sites: 98.8%, 98.97%, 98.85% • Configuration 2: – MIT: 2,559 views, 2,555 one round - 99.84% – Other sites: 99.82%, 99.79%, 99.81%, 99.84% • Overwhelming majority for one round! • Depends on topology ⇒ can scale
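The MIT percentages follow directly from the view counts on the slide. A short check, using a hypothetical helper name:

```python
def one_round_percentage(views: int, one_round: int) -> float:
    """Share of views delivered by the one-round algorithm, in percent."""
    return round(100.0 * one_round / views, 2)


config1_mit = one_round_percentage(10_786, 10_661)  # 98.84
config2_mit = one_round_percentage(2_559, 2_555)    # 99.84
```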

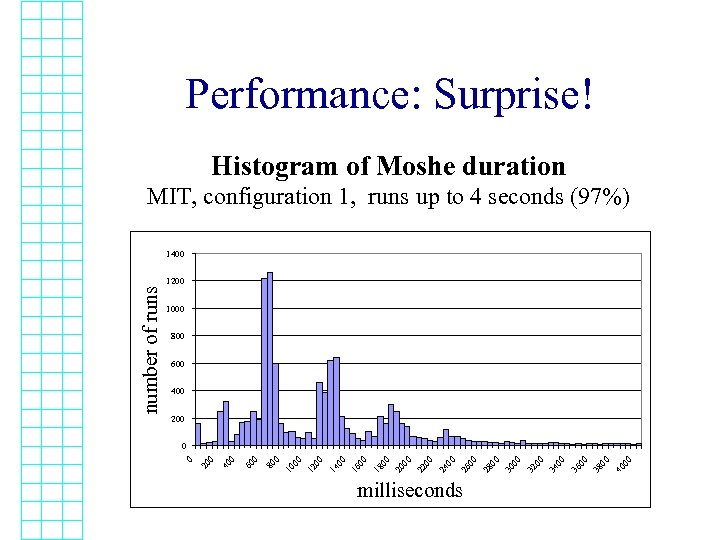

Performance: Surprise! Histogram of Moshe duration: MIT, configuration 1, runs up to 4 seconds (97%). [Histogram: x-axis milliseconds, 0 to 4000; y-axis number of runs, 0 to 1400]

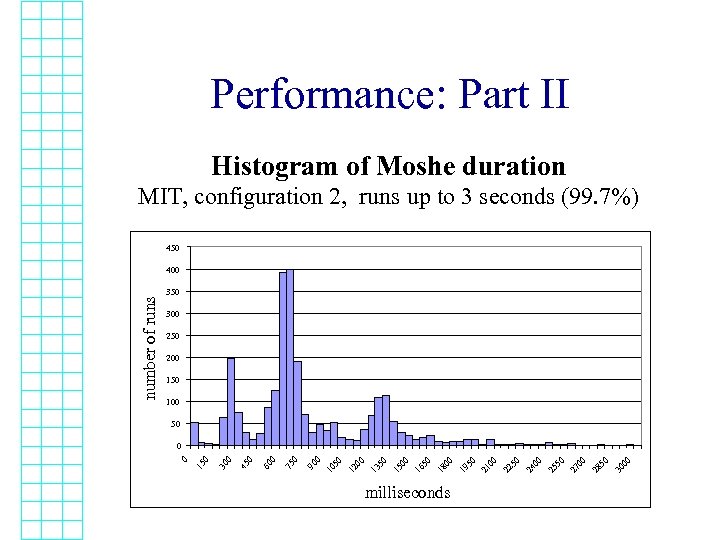

Performance: Part II Histogram of Moshe duration: MIT, configuration 2, runs up to 3 seconds (99.7%). [Histogram: x-axis milliseconds, 0 to 3000; y-axis number of runs, 0 to 450]

Performance over the Internet: What is Going on? • Without message loss, running time is close to biggest round-trip-time, ~650 ms. – As expected • Message loss has a big impact • Configuration 2 has much less loss ⇒ more cases of good performance

“Slow” versus “Typical” • Slow can take 1 or 2 rounds once it is run – Depending on PropNum • Slow after NE – One-round is run first, then detection, and slow – Without loss - 900 ms., 40% more than usual • Slow without NE – Detection by unexpected proposal – Only slow algorithm is run – Runs less time than one-round

Unstable Periods: No Obsolete Views • “Unstable” = – constant changes; or – connected processes differ in failure detection • Configuration 1: – 379 of the 10,786 views > 4 seconds, 3.5% – 167 > 20 seconds, 1.5% – Longest running time 32 minutes • Configuration 2: – 14 of 2,559 views > 4 seconds, 0.5% – Longest running time 31 seconds

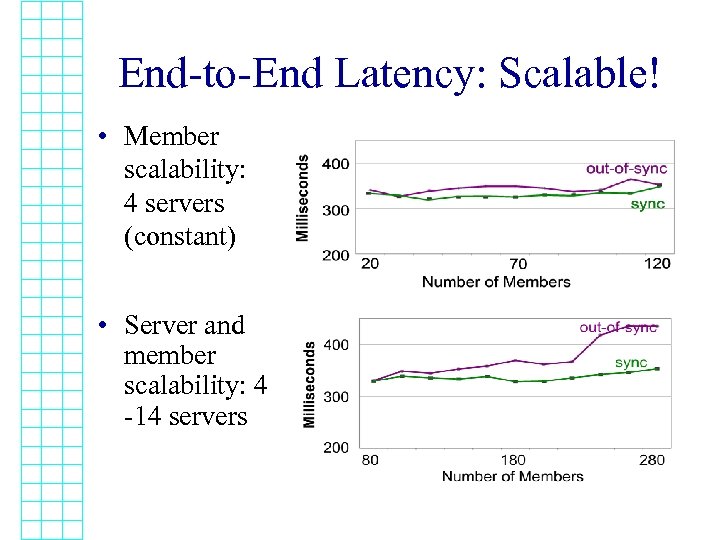

Scalability Measurements • Controlled experiment at MIT and UCSD – Prototype NS, based on TCP/IP (Sasson) – Inject faults to test “slow” case • Vary number of members, servers • Measure end-to-end latencies at member, from join/leave/suspicion to corresponding view • Average of 150 (50 slow) runs

End-to-End Latency: Scalable! • Member scalability: 4 servers (constant) • Server and member scalability: 4-14 servers

Conclusion: Moshe Features • Avoiding obsolete views • A single round – 98% of the time in one configuration – 99.8% of the time in another • Using a notification service for WANs – Good abstraction – Flexibility to configure multiple ways – Future work: configure more ways • Scalable “divide and conquer” architecture

Retrospective: Role of Theory • Specification – Possible to implement – Useful for applications (composable) • Specification can be met in one round “typically” (unlike Consensus) • Correctness proof exposes subtleties – Need to avoid live-lock – Two types of detection mechanisms needed

Future Work: The QoS Challenge • Some distributed applications require QoS – Guaranteed available bandwidth – Bounded delay, bounded jitter • Membership algorithm terminates in one round under certain circumstances – Can we leverage that to guarantee QoS under certain assumptions? • Can other primitives guarantee QoS?
