ba8e3d5ce828e22c25097a5c059c8e5e.ppt

- Number of slides: 22

Scalable-Grain Pipeline Parallelization Method for Multi-Core Systems
Peng Liu, Chunming Huang, Jun Guo, Yang Geng, Weidong Wang, and Mei Yang
Zhejiang University

Motivation

- The enormous number of transistors available on a single chip enables the integration of tens or hundreds of processing cores on MPSoCs.
- To use the parallel resources of MPSoCs efficiently, one challenge is how to parallelize legacy sequential programs.
- Existing efforts to exploit pipeline parallelism in C programs are mostly fine-grained.
  - Partitioning individual instructions across processors: DOALL [1], DOACROSS [2], HELIX [3], DSWP [4].
  - Integration of parallelization techniques: speculative DSWP [5], parallel-stage DSWP [6], etc.
- For streaming applications these techniques are not sufficient, because the pipeline parallelism in streaming applications is coarse-grained and more complex.

[1] Allen, R., et al., Optimizing Compilers for Modern Architectures. Morgan Kaufmann, 2001.
[2] Cytron, R., Doacross: Beyond Vectorization for Multiprocessors. ICPP 1986.
[3] Campanoni, S., et al., HELIX: Automatic Parallelization of Irregular Programs for Chip Multiprocessing. CGO 2012.
[4] Ottoni, G., et al., Automatic Thread Extraction with Decoupled Software Pipelining. MICRO 2005.
[5] Vachharajani, N., et al., Speculative Decoupled Software Pipelining. PACT 2007.
[6] Raman, S., Parallel-stage Decoupled Software Pipelining. CGO 2008.

Motivation

- Currently no method extracts coarse-grained pipeline parallelism well.
  - MAPS [1]: semi-automatic parallelization with support for parallel dataflow programming.
  - Paralax [2]: a compiler-based parallelization framework.
  - The language-extension approach proposed by Thies et al. [3].
- Streaming applications represent a large set of MPSoC applications, such as video, audio, and cryptographic processing.
  - These programs are characterized by heavy use of pointers, multi-layer loop structures, and streaming data input.
  - The parallelism within these programs is usually implicit.
- Our focus is on exploiting coarse-grained pipeline parallelism in the C programs of streaming applications, which are characterized by multi-layer loop structures with intricate dependence relations and fixed data flow.

[1] Ceng, J., et al., MAPS: An Integrated Framework for MPSoC Application Parallelization. DAC 2008.
[2] Vandierendonck, H., The Paralax Infrastructure: Automatic Parallelization with a Helping Hand. PACT 2010.
[3] Thies, W., A Practical Approach to Exploiting Coarse-grained Pipeline Parallelism in C Programs. MICRO 2007.

Outline

- Motivation
- Problem formulation
- Framework
- Experimental results
- Summary

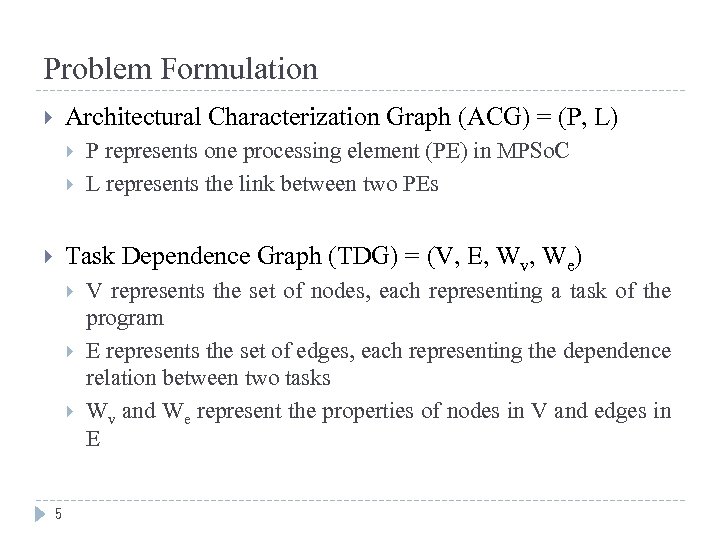

Problem Formulation

- Architectural Characterization Graph: ACG = (P, L)
  - Each node in P represents one processing element (PE) in the MPSoC.
  - Each edge in L represents the link between two PEs.
- Task Dependence Graph: TDG = (V, E, Wv, We)
  - V is the set of nodes, each representing a task of the program.
  - E is the set of edges, each representing the dependence relation between two tasks.
  - Wv and We represent the properties of the nodes in V and the edges in E.
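The two graphs can be held in very small containers. The sketch below is only an illustration: the class layout, the field names, and the property keys (`cost`, `kind`, `bytes`) are assumptions, not the data structures used by the authors.

```python
from dataclasses import dataclass, field


@dataclass
class ACG:
    """Architectural characterization graph (P, L)."""
    pes: set[str] = field(default_factory=set)                # P: processing elements
    links: set[tuple[str, str]] = field(default_factory=set)  # L: links between PEs


@dataclass
class TDG:
    """Task dependence graph (V, E, Wv, We)."""
    tasks: set[str] = field(default_factory=set)                          # V
    deps: set[tuple[str, str]] = field(default_factory=set)               # E
    task_props: dict[str, dict] = field(default_factory=dict)             # Wv
    dep_props: dict[tuple[str, str], dict] = field(default_factory=dict)  # We


# Hypothetical two-PE platform and two-task program.
acg = ACG(pes={"pe0", "pe1"}, links={("pe0", "pe1")})
tdg = TDG(
    tasks={"read", "decode"},
    deps={("read", "decode")},
    task_props={"read": {"cost": 10}, "decode": {"cost": 40}},
    dep_props={("read", "decode"): {"kind": "data", "bytes": 256}},
)
```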

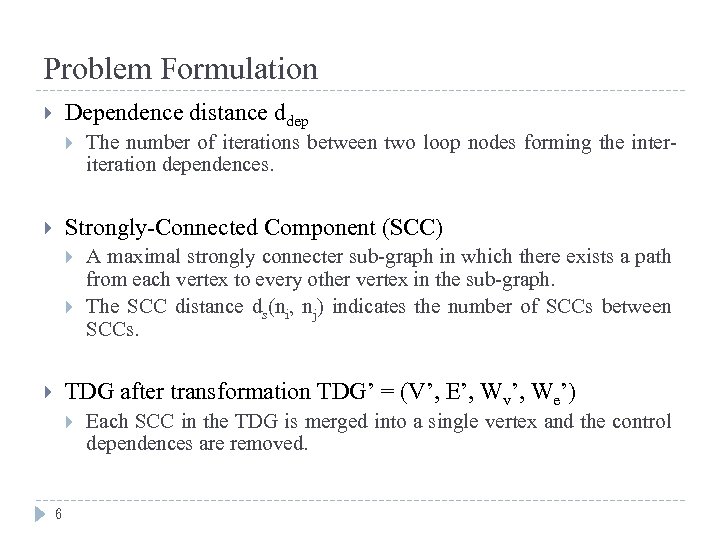

Problem Formulation

- Dependence distance ddep
  - The number of iterations between two loop nodes that form an inter-iteration dependence.
- Strongly-Connected Component (SCC)
  - A maximal strongly connected sub-graph in which there exists a path from each vertex to every other vertex in the sub-graph.
  - The SCC distance ds(ni, nj) indicates the number of SCCs between the SCCs that contain ni and nj.
- TDG after transformation: TDG’ = (V’, E’, Wv’, We’)
  - Each SCC in the TDG is merged into a single vertex and the control dependences are removed.
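The SCC-merging part of the transformation can be sketched with Tarjan's algorithm. This is a generic condensation routine, not the authors' implementation; it covers only the merge step (control-dependence removal is not modeled), and the function names and the toy graph are assumptions.

```python
def strongly_connected_components(nodes, edges):
    """Tarjan's algorithm; returns a list of SCCs, each a list of nodes."""
    succ = {n: [] for n in nodes}
    for u, v in edges:
        succ[u].append(v)
    index, low, on_stack = {}, {}, set()
    stack, sccs = [], []

    def visit(u):
        index[u] = low[u] = len(index)   # discovery order of u
        stack.append(u)
        on_stack.add(u)
        for v in succ[u]:
            if v not in index:
                visit(v)
                low[u] = min(low[u], low[v])
            elif v in on_stack:
                low[u] = min(low[u], index[v])
        if low[u] == index[u]:           # u is the root of an SCC
            comp = []
            while True:
                w = stack.pop()
                on_stack.discard(w)
                comp.append(w)
                if w == u:
                    break
            sccs.append(comp)

    for n in nodes:
        if n not in index:
            visit(n)
    return sccs


def condense(nodes, edges):
    """Merge every SCC into one vertex; returns (scc_of, dag_edges)."""
    sccs = strongly_connected_components(nodes, edges)
    scc_of = {n: i for i, comp in enumerate(sccs) for n in comp}
    dag_edges = {(scc_of[u], scc_of[v]) for u, v in edges if scc_of[u] != scc_of[v]}
    return scc_of, dag_edges


# Toy graph: b and c form a cycle, so they collapse into one vertex.
nodes = ["a", "b", "c", "d"]
edges = [("a", "b"), ("b", "c"), ("c", "b"), ("c", "d")]
scc_of, dag_edges = condense(nodes, edges)
```

The recursive form is fine for small task graphs; a very deep graph would need an iterative variant to stay within Python's recursion limit.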

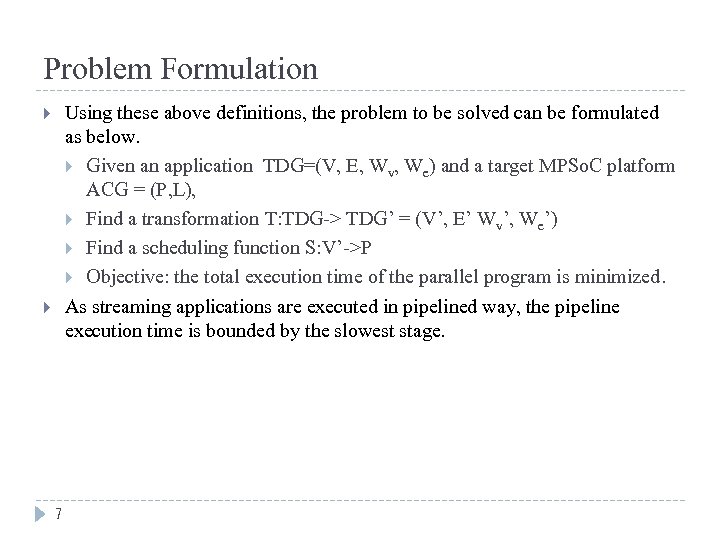

Problem Formulation

Using the above definitions, the problem to be solved can be formulated as follows.

- Given an application TDG = (V, E, Wv, We) and a target MPSoC platform ACG = (P, L):
  - Find a transformation T: TDG -> TDG’ = (V’, E’, Wv’, We’)
  - Find a scheduling function S: V’ -> P
- Objective: the total execution time of the parallel program is minimized.
  - As streaming applications are executed in a pipelined way, the pipeline execution time is bounded by the slowest stage.
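Why the slowest stage bounds the execution time can be seen with an idealized pipeline model. The model below (no communication cost, one item per stage at a time) and the stage times are assumptions chosen only for illustration.

```python
def pipeline_time(stage_times, n_items):
    """Idealized pipeline: fill latency plus one bottleneck interval per extra item."""
    return sum(stage_times) + (n_items - 1) * max(stage_times)


# Same total work per item (100 units), different balance across four stages.
balanced = pipeline_time([25, 25, 25, 25], n_items=1000)
skewed = pipeline_time([10, 10, 10, 70], n_items=1000)
```

With many items the fill latency becomes negligible and the total time is dominated by `max(stage_times)`, which is why the objective reduces to minimizing the slowest stage.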

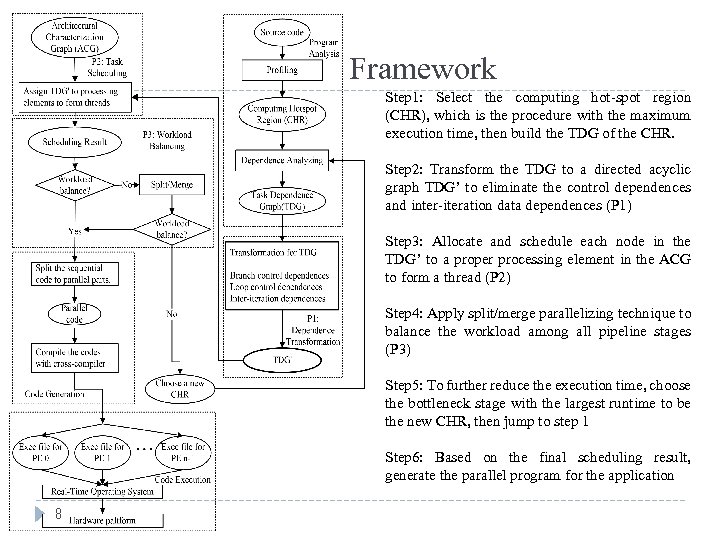

Framework

- Step 1: Select the computing hot-spot region (CHR), which is the procedure with the maximum execution time, then build the TDG of the CHR.
- Step 2: Transform the TDG into a directed acyclic graph TDG’ to eliminate the control dependences and inter-iteration data dependences (P1).
- Step 3: Allocate and schedule each node in the TDG’ to a proper processing element in the ACG to form a thread (P2).
- Step 4: Apply the split/merge parallelizing technique to balance the workload among all pipeline stages (P3).
- Step 5: To further reduce the execution time, choose the bottleneck stage with the largest runtime to be the new CHR, then jump to Step 1.
- Step 6: Based on the final scheduling result, generate the parallel program for the application.

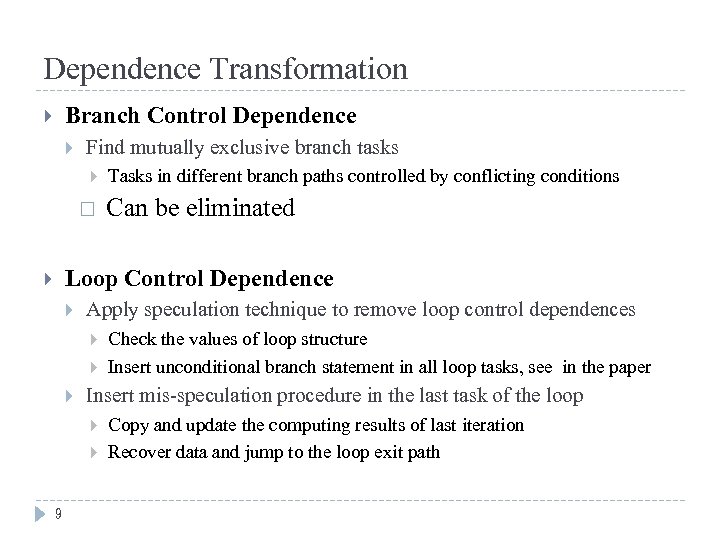

Dependence Transformation

- Branch control dependence
  - Find mutually exclusive branch tasks.
  - Tasks in different branch paths controlled by conflicting conditions can be eliminated.
- Loop control dependence
  - Apply a speculation technique to remove loop control dependences.
  - Insert a mis-speculation procedure in the last task of the loop.
  - Check the values of the loop structure.
  - Insert an unconditional branch statement in all loop tasks (see the paper).
  - Copy and update the computing results of the last iteration.
  - Recover data and jump to the loop exit path.

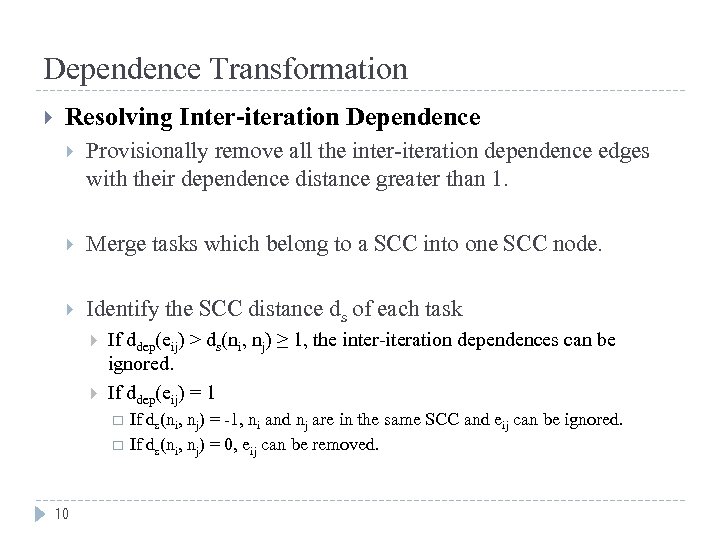

Dependence Transformation

Resolving inter-iteration dependence:

- Provisionally remove all the inter-iteration dependence edges whose dependence distance is greater than 1.
- Merge the tasks that belong to an SCC into one SCC node.
- Identify the SCC distance ds of each task.
- If ddep(eij) > ds(ni, nj) ≥ 1, the inter-iteration dependence can be ignored.
- If ddep(eij) = 1:
  - If ds(ni, nj) = -1, ni and nj are in the same SCC and eij can be ignored.
  - If ds(ni, nj) = 0, eij can be removed.
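The case analysis above can be written as a small decision function. The function name and the returned labels are assumptions; the slide does not say what happens outside the listed cases, so the `"unresolved"` fallback is a placeholder rather than part of the method.

```python
def classify_inter_iteration_edge(d_dep, d_s):
    """Apply the slide's rules to one edge e_ij.

    d_dep: dependence distance ddep(e_ij)
    d_s:   SCC distance ds(n_i, n_j); -1 means both tasks are in the same SCC
    """
    if d_s >= 1 and d_dep > d_s:
        return "ignore"
    if d_dep == 1:
        if d_s == -1:
            return "ignore"   # n_i and n_j were merged into the same SCC node
        if d_s == 0:
            return "remove"
    return "unresolved"       # assumption: case not covered by the slide


decisions = [
    classify_inter_iteration_edge(3, 1),
    classify_inter_iteration_edge(1, -1),
    classify_inter_iteration_edge(1, 0),
    classify_inter_iteration_edge(1, 1),
]
```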

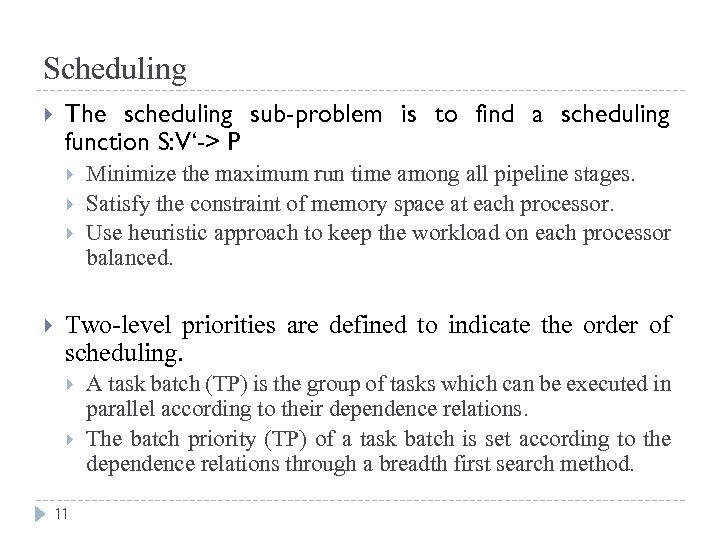

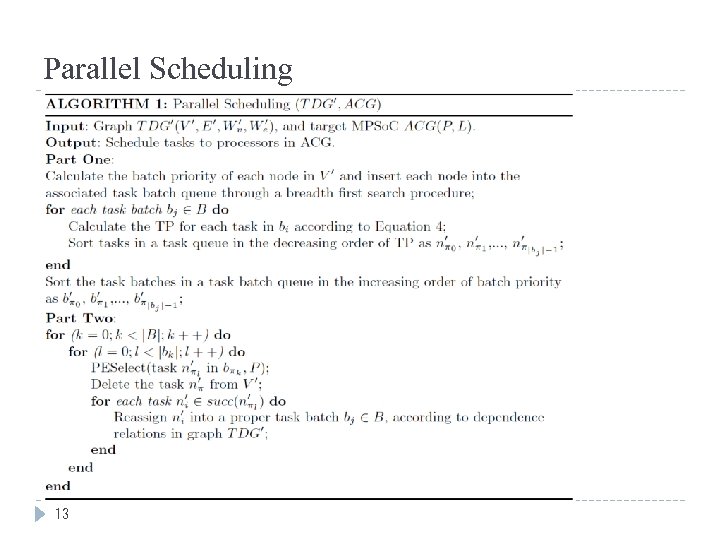

Scheduling

- The scheduling sub-problem is to find a scheduling function S: V’ -> P that
  - minimizes the maximum run time among all pipeline stages;
  - satisfies the memory-space constraint at each processor.
- A heuristic approach keeps the workload on each processor balanced.
- Two-level priorities are defined to indicate the order of scheduling.
  - A task batch is the group of tasks that can be executed in parallel according to their dependence relations.
  - The batch priority of a task batch is set according to the dependence relations through a breadth-first search method.
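One way to form task batches by breadth-first search is a level-by-level traversal of the DAG, where each level contains tasks whose predecessors have all been placed in earlier levels. This is a sketch under that assumption; the slide does not give the exact batching rule, and the function name and example graph are hypothetical.

```python
def task_batches(nodes, edges):
    """Group DAG nodes into batches by breadth-first (level-order) traversal."""
    succ = {n: [] for n in nodes}
    indeg = {n: 0 for n in nodes}
    for u, v in edges:
        succ[u].append(v)
        indeg[v] += 1

    frontier = [n for n in nodes if indeg[n] == 0]  # tasks with no predecessors
    batches = []
    while frontier:
        batches.append(frontier)
        nxt = []
        for u in frontier:
            for v in succ[u]:
                indeg[v] -= 1
                if indeg[v] == 0:   # all predecessors of v are already batched
                    nxt.append(v)
        frontier = nxt
    return batches


batches = task_batches(
    ["a", "b", "c", "d"],
    [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")],
)
```

In this example `b` and `c` land in the same batch because neither depends on the other, so the position of a batch in the list can serve as its priority.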

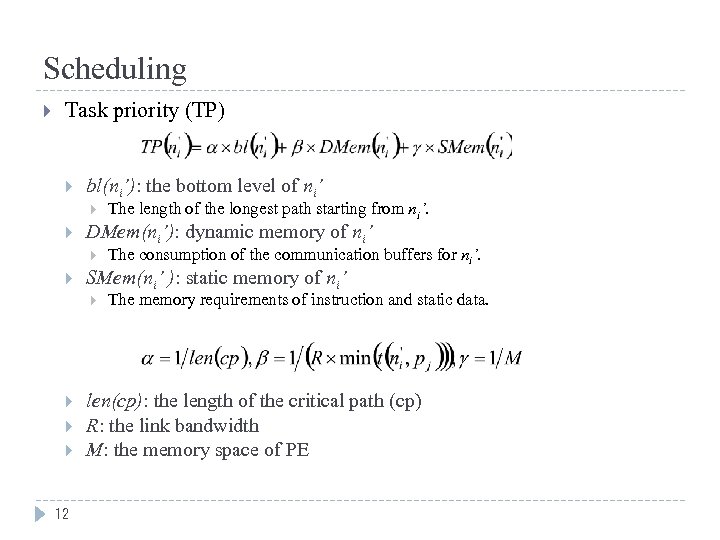

Scheduling

Task priority (TP) is defined in terms of the following quantities:

- bl(ni’): the bottom level of ni’, that is, the length of the longest path starting from ni’.
- DMem(ni’): the dynamic memory of ni’, that is, the consumption of the communication buffers for ni’.
- SMem(ni’): the static memory of ni’, that is, the memory requirements of instructions and static data.
- len(cp): the length of the critical path (cp).
- R: the link bandwidth.
- M: the memory space of a PE.
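The bottom level can be computed with a memoized longest-path recursion over the DAG. The sketch below counts only node costs along the path; whether the authors also include edge (communication) costs is not stated, so that choice, the function name, and the example costs are assumptions. The task-priority formula itself is not reproduced here.

```python
from functools import lru_cache


def bottom_levels(cost, edges):
    """Return bl(n) for every node: cost of the longest path starting at n."""
    succ = {n: [] for n in cost}
    for u, v in edges:
        succ[u].append(v)

    @lru_cache(maxsize=None)
    def bl(n):
        # A sink node's bottom level is its own cost.
        return cost[n] + max((bl(s) for s in succ[n]), default=0)

    return {n: bl(n) for n in cost}


cost = {"a": 2, "b": 3, "c": 5, "d": 1}
edges = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")]
levels = bottom_levels(cost, edges)
critical_path_length = max(levels.values())  # len(cp) under this cost model
```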

Parallel Scheduling

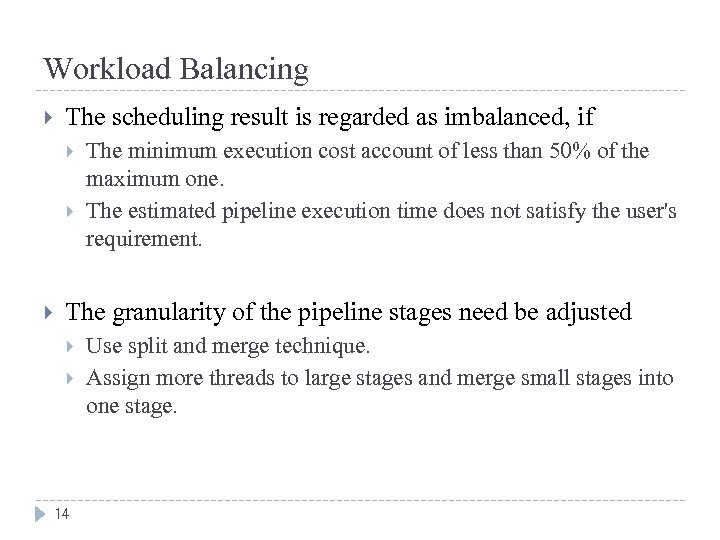

Workload Balancing

- The scheduling result is regarded as imbalanced if
  - the minimum stage execution cost is less than 50% of the maximum one, or
  - the estimated pipeline execution time does not satisfy the user's requirement.
- The granularity of the pipeline stages then needs to be adjusted.
  - Use the split and merge technique.
  - Assign more threads to large stages and merge small stages into one stage.
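The imbalance test can be sketched as a simple predicate. Treating the bottleneck stage cost as the estimated pipeline execution time is an assumption made for this example, as are the function name and the parameter names.

```python
def is_imbalanced(stage_costs, required_time=None, ratio=0.5):
    """Return True if the schedule should be rebalanced.

    stage_costs:   estimated execution cost of each pipeline stage
    required_time: optional user requirement on the bottleneck stage (assumption)
    ratio:         minimum allowed min/max cost ratio (50% on the slide)
    """
    lowest, highest = min(stage_costs), max(stage_costs)
    if lowest < ratio * highest:
        return True
    return required_time is not None and highest > required_time


checks = [
    is_imbalanced([10, 10, 10, 70]),
    is_imbalanced([30, 30, 40]),
    is_imbalanced([30, 30, 40], required_time=35),
]
```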

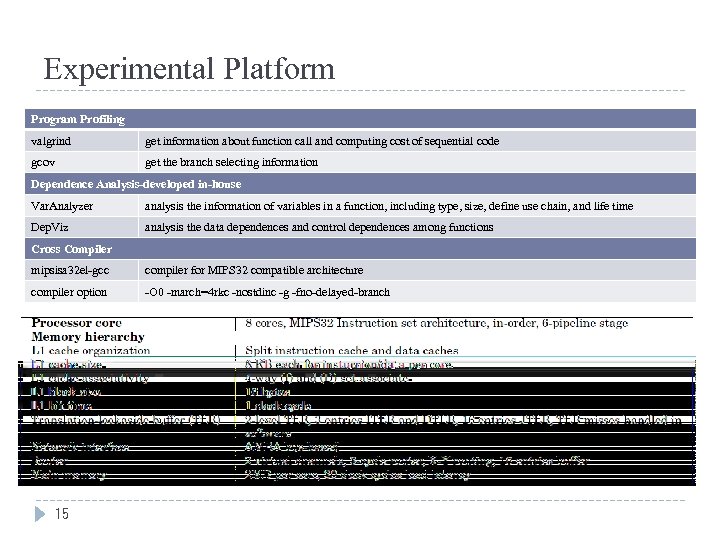

Experimental Platform

- Program profiling
  - valgrind: collects function-call information and the computing cost of the sequential code.
  - gcov: collects branch-selection information.
- Dependence analysis (developed in-house)
  - VarAnalyzer: analyzes the variables in a function, including type, size, define-use chain, and lifetime.
  - DepViz: analyzes the data dependences and control dependences among functions.
- Cross compiler
  - mipsisa32el-gcc: compiler for MIPS32-compatible architectures.
  - Compiler options: -O0 -march=4rkc -nostdinc -g -fno-delayed-branch

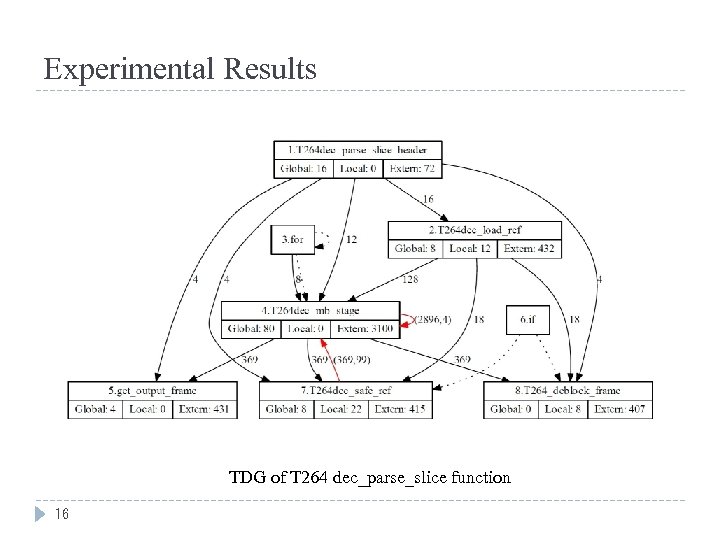

Experimental Results

TDG of the T264 dec_parse_slice function

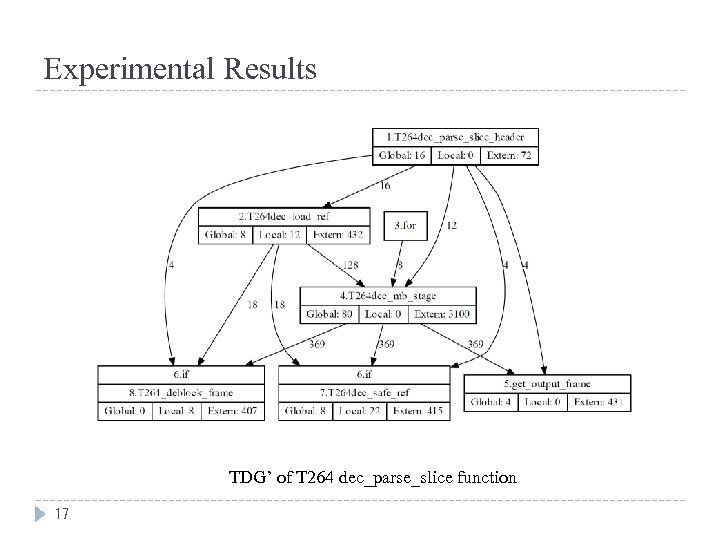

Experimental Results

TDG’ of the T264 dec_parse_slice function

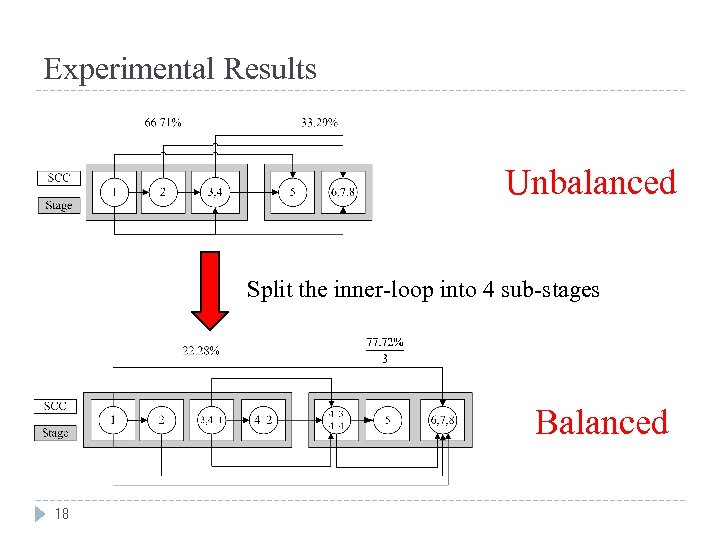

Experimental Results

- Unbalanced
- Split the inner loop into 4 sub-stages
- Balanced

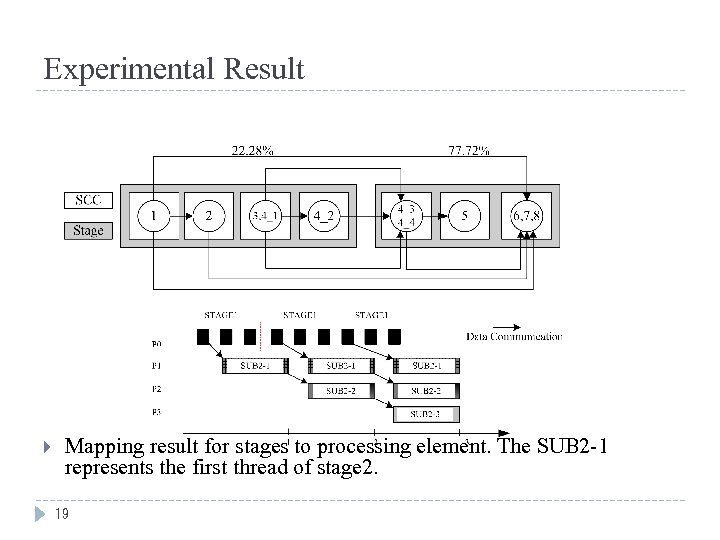

Experimental Results

Mapping of stages to processing elements. SUB2-1 represents the first thread of stage 2.

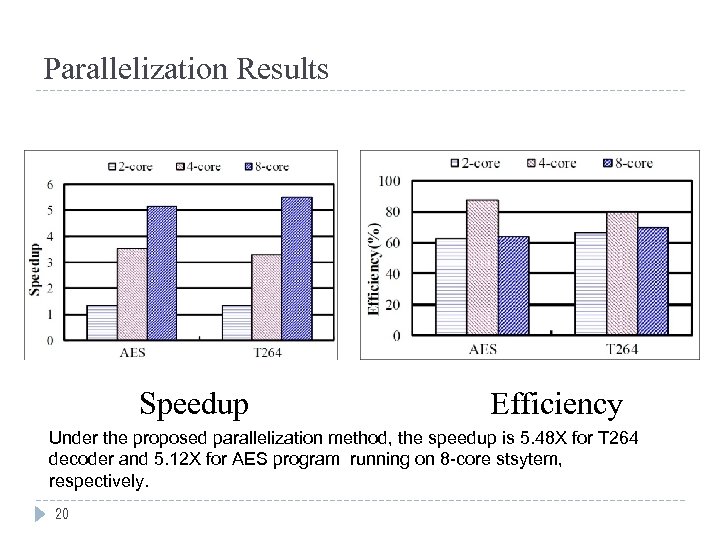

Parallelization Results

- Charts: speedup and efficiency.
- Under the proposed parallelization method, the speedup is 5.48X for the T264 decoder and 5.12X for the AES program, both running on an 8-core system.
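The extracted text does not define efficiency, so the sketch below assumes the conventional definition, speedup divided by the number of cores, and applies it to the two reported speedups. The values it produces are derived from that assumption and are not figures taken from the slides.

```python
def parallel_efficiency(speedup, n_cores):
    """Conventional parallel efficiency: speedup per core (assumed definition)."""
    return speedup / n_cores


# Reported speedups on the 8-core system.
t264_efficiency = parallel_efficiency(5.48, 8)
aes_efficiency = parallel_efficiency(5.12, 8)
```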

Summary

- We have proposed a method to exploit the coarse-grained pipeline parallelism hidden in multi-layer loops.
  - Transform the task dependence graph of a streaming application to resolve intricate dependences.
  - Schedule tasks to the target MPSoC with the objective of minimizing the maximal execution time of all pipeline stages.
  - Adjust the granularity of pipeline stages heuristically to balance the workload among all stages and further improve performance.
- The proposed method can be applied to parallelizing other embedded applications on multi-core embedded systems.

