91befce1d20b81f9c275dfd53811eade.ppt

- Number of slides: 45

SAT and CSP competitions & benchmark libraries: some lessons learnt? Toby Walsh NICTA & UNSW Sydney, Australia

What’s the best way to benchmark systems?

Outline » Benchmark libraries » Founding CSPLib.org » Competitions » SAT competition judge » TPTP competition judge » …

Why? » Why did I set up CSPLib.org? » I needed problems against which to benchmark my latest inference techniques » Zebra and random problems don’t cut it! » I thought it would help unify and advance the CP community

Random problems » +ve » Easy to generate » Hard (if chosen from the phase transition) » Impossible to cheat » If you can solve 1000-variable random 3-SAT problems at l/n = 4.2, I’ll be impressed
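To make the challenge concrete, here is a minimal Python sketch of the fixed-clause-length model commonly used to generate random 3-SAT: each clause picks three distinct variables uniformly at random and negates each with probability 1/2, and the number of clauses is the ratio l/n times n. The names `random_3sat` and `to_dimacs` are hypothetical, and this is an assumption about how such instances are typically produced, not the generator behind any particular benchmark set.

```python
import random


def random_3sat(n_vars, ratio=4.2, seed=None):
    """Generate a uniform random 3-SAT instance (fixed-clause-length model).

    Clauses are lists of nonzero ints in DIMACS convention:
    v means variable v is true, -v means it is false.
    """
    rng = random.Random(seed)
    n_clauses = round(ratio * n_vars)
    clauses = []
    for _ in range(n_clauses):
        # three distinct variables, each negated with probability 1/2
        variables = rng.sample(range(1, n_vars + 1), 3)
        clauses.append([v if rng.random() < 0.5 else -v for v in variables])
    return clauses


def to_dimacs(n_vars, clauses):
    """Serialise clauses as a DIMACS CNF string."""
    lines = [f"p cnf {n_vars} {len(clauses)}"]
    lines += [" ".join(map(str, c)) + " 0" for c in clauses]
    return "\n".join(lines)


cnf = random_3sat(1000, ratio=4.2, seed=0)
print(len(cnf))                              # 4200
print(to_dimacs(1000, cnf).splitlines()[0])  # p cnf 1000 4200
```

Fixing the seed makes an instance reproducible, which matters if others are to run the same problem.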

Random problems » -ve » Lack the structure found in the real world » Unrepresentative » E.g. random 3-SAT instances either have many solutions or none » Different methods work well on them » Random SAT: forward-looking algorithms » Industrial SAT: backward-looking algorithms

Why? » Thesis: every mature field has a benchmark library » Deduction started in the 1960s » TPTP set up in 1993 » SAT started in the 1960s » SAT DIMACS challenge in 1992 » SATLib set up in 1999 » CP started in the 1970s » CSPLib set up in 1998

Why? » Thesis: every mature field has a benchmark library » Spatial and temporal reasoning started in the early 80s (or before?) » It’s been approximately 30 years, so it’s about time you guys set one up!

Benchmark libraries » CSPLib.org » Over 35k unique visitors » Still not everything I’d want it to be » But the state of the art for experimentation is now much better than it was » I haven’t seen a zebra for a very long time

An ideal library » Desiderata taken from: » CSPLib: a benchmark library for constraints, Proc. CP-99

An ideal library » Location » On the web and easy to find » TPTP.org » CSPLib.org » SATLib.org » QBFLib.org » … » http://elib.zib.de/pub/mptestdata/tsplib/tsplib.html » http://mat.gsia.cmu.edu/COLOR/instances.html

An ideal library » Easy to use » Tools to make benchmarking as painless as possible » tptp2X, … » Diverse » To help prevent over-fitting

An ideal library » Large » Growing continuously » Again helps to prevent over-fitting » Extensible » To new problems or domains

An ideal library » Complete » One stop for your problems » Topical » For instance, it should report current best solutions found

An ideal library » Independent » Not tied to a particular solver or proprietary input language » Mix of difficulties » Hard and easy problems » Solved and open problems » With perhaps even a difficulty index?

An ideal library » Accurate » It should be trusted » Used » A valued resource for the community

Problem format » Lo-tech or hi-tech?

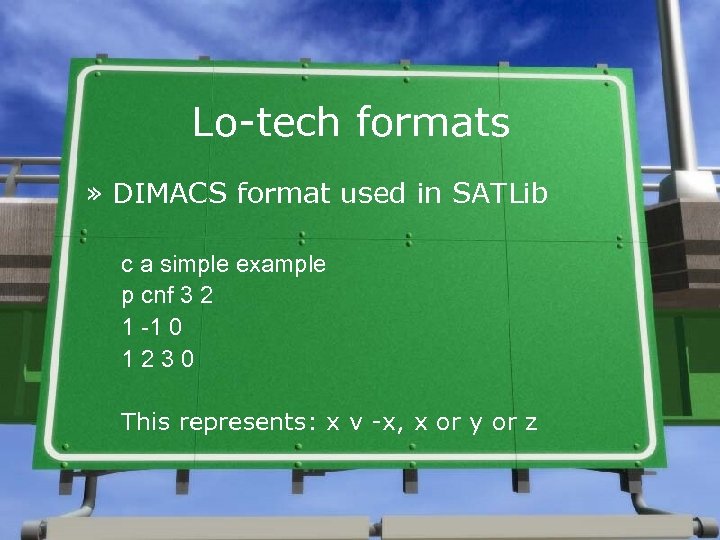

Lo-tech formats » DIMACS format used in SATLib » `c a simple example` » `p cnf 3 2` » `1 -1 0` » `1 2 3 0` » This represents the clauses x v -x and x v y v z

Lo-tech formats » DIMACS format used in SATLib » +ve » All programming languages can read integers! » Small amount of extensibility built in (e.g. QBF) » -ve » Larger extensions are problematic (e.g. beyond CNF to arbitrary Boolean circuits)
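As an illustration of how little code the lo-tech format needs, here is a minimal Python sketch of a DIMACS CNF reader, applied to the example above. It handles comment lines, the `p cnf` problem line and clauses that span several lines. `parse_dimacs` is a hypothetical helper, not a library function, and it deliberately ignores extensions such as QBF quantifier lines.

```python
def parse_dimacs(text):
    """Parse a DIMACS CNF string into (n_vars, clauses).

    Comment lines start with 'c'; the problem line is 'p cnf <vars> <clauses>'.
    Clauses are runs of nonzero integers terminated by 0 and may span lines,
    so literals are read as one token stream.
    """
    n_vars = n_clauses = None
    clauses, current = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("c"):
            continue
        if line.startswith("p"):
            _, fmt, v, c = line.split()
            if fmt != "cnf":
                raise ValueError(f"unsupported format: {fmt}")
            n_vars, n_clauses = int(v), int(c)
            continue
        for tok in line.split():
            lit = int(tok)
            if lit == 0:
                clauses.append(current)
                current = []
            else:
                current.append(lit)
    if current:
        clauses.append(current)  # tolerate a missing final 0
    if n_vars is None:
        raise ValueError("missing 'p cnf' line")
    if len(clauses) != n_clauses:
        raise ValueError(f"expected {n_clauses} clauses, got {len(clauses)}")
    return n_vars, clauses


example = """c a simple example
p cnf 3 2
1 -1 0
1 2 3 0
"""
print(parse_dimacs(example))  # (3, [[1, -1], [1, 2, 3]])
```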

Hi-tech formats » CP competition `<instance> <presentation name="4-queens" description="This problem involves placing 4 queens on a chessboard" nbSolutions="at least 1" format="XCSP 1.1 (XML CSP Representation 1.1)" /> <domains nbDomains="1"> <domain name="dom0" nbValues="4" values="1..4" /> </domains> <variables nbVariables="4"> <variable name="X0" domain="dom0"/> … </variables> <relations nbRelations="3"> <relation name="rel0" domain="dom0" nbConflicts="10" conflicts="(1,1)(1,2)(2,1)(2,2)(2,3)(3,2)(3,3)(3,4)(4,3)(4,4)" /> … </relations> <constraints nbConstraints="6"> <constraint name="C0" scope="X0 X1" relation="rel0"/> …`

Hi-tech formats » XML » +ve » Easy to extend » Parsing tools can be provided » -ve » Complex and verbose » Computers can parse terse structures easily
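To illustrate that a generic XML parser does most of the work, here is a minimal sketch using Python’s standard `xml.etree.ElementTree` on a cut-down fragment modelled on the 4-queens slide above, keeping only X0, X1 and the constraint C0 between them. Element and attribute names copy the slide; the fragment is not a complete or validated XCSP 1.1 document, and the helpers `load` and `satisfies` are hypothetical. Only the value encodings (`1..4` ranges and `(a,b)` conflict lists) need hand-written code.

```python
import re
import xml.etree.ElementTree as ET

# Cut-down fragment modelled on the slide: only X0, X1 and constraint C0.
# Not a complete or validated XCSP 1.1 document.
XML = """
<instance>
  <domains nbDomains="1">
    <domain name="dom0" nbValues="4" values="1..4"/>
  </domains>
  <variables nbVariables="2">
    <variable name="X0" domain="dom0"/>
    <variable name="X1" domain="dom0"/>
  </variables>
  <relations nbRelations="1">
    <relation name="rel0" domain="dom0" nbConflicts="10"
              conflicts="(1,1)(1,2)(2,1)(2,2)(2,3)(3,2)(3,3)(3,4)(4,3)(4,4)"/>
  </relations>
  <constraints nbConstraints="1">
    <constraint name="C0" scope="X0 X1" relation="rel0"/>
  </constraints>
</instance>
"""


def parse_range(spec):
    """Turn a 'lo..hi' string into the list of integers lo..hi inclusive."""
    lo, hi = spec.split("..")
    return list(range(int(lo), int(hi) + 1))


def load(xml_text):
    """Return ({variable: domain values}, [(scope, set of conflicting pairs)])."""
    root = ET.fromstring(xml_text.strip())
    domains = {d.get("name"): parse_range(d.get("values")) for d in root.iter("domain")}
    variables = {v.get("name"): domains[v.get("domain")] for v in root.iter("variable")}
    relations = {}
    for r in root.iter("relation"):
        # binary relations only: extract each "(a,b)" pair
        pairs = re.findall(r"\((\d+),\s*(\d+)\)", r.get("conflicts"))
        conflicts = {(int(a), int(b)) for a, b in pairs}
        if len(conflicts) != int(r.get("nbConflicts")):
            raise ValueError(f"relation {r.get('name')}: conflict count mismatch")
        relations[r.get("name")] = conflicts
    constraints = [(c.get("scope").split(), relations[c.get("relation")])
                   for c in root.iter("constraint")]
    return variables, constraints


def satisfies(assignment, constraints):
    """True if no constraint's scope takes one of its conflicting tuples."""
    return all(tuple(assignment[x] for x in scope) not in conflicts
               for scope, conflicts in constraints)


variables, constraints = load(XML)
print(variables["X0"])                             # [1, 2, 3, 4]
print(satisfies({"X0": 1, "X1": 3}, constraints))  # True
print(satisfies({"X0": 2, "X1": 3}, constraints))  # False
```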

No-tech formats » CSPLib » Problems are specified in natural language » No agreement at that time on an input language » One focus was on how you model a problem » Today there is more consensus on modelling languages like Zinc

No-tech formats » CSPLib » Problems are specified in natural language » But you can still provide in one place » Input data » Results » Code » Parsers …

Getting problems » Submit them yourself » Initially, you must do this so the library has some critical mass the first time people look at it » But it becomes tiresome and unrepresentative to do so continually » Ask at every talk » Tried for several years but it (almost) never worked

Getting problems » Need some incentive » Offer money? » Price of entry for the competition? » If you have a competition, users will submit problems that their solver is good at?

Competitions

Libraries + Competitions » You can have a library without a competition » But you can’t have a competition without a library

Libraries + Competitions » Libraries then competition » TPTP then CASC » Easy and safe! » Libraries and competition » Planning » RoboCup » …

Increasing complexity » Constraints » 1st year, binary extensional » 2nd year, limited number of globals » 3rd year, unlimited » Planning » Increasing complexity » Time, metrics, uncertainty, …

Benefits » Gets ideas implemented » Rewards engineering » Progress needs both science and engineering! » Puts it all together

Benefits » Gives greater importance to important low-level issues » In SAT: » Watched literals » VSIDS » …
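VSIDS is a good example of such a low-level detail. Below is a minimal Python sketch of variable-activity bookkeeping in the style popularised by MiniSAT (Chaff’s original VSIDS kept per-literal counters and periodically halved them): variables in each learnt clause are bumped, and instead of decaying every score after a conflict, the bump increment grows by 1/decay. The class and method names are hypothetical, the decay value 0.95 is an assumption, and real solvers keep activities in a heap rather than scanning linearly.

```python
class VSIDS:
    """Hypothetical sketch of MiniSAT-style VSIDS variable activities."""

    def __init__(self, n_vars, decay=0.95):
        self.activity = [0.0] * (n_vars + 1)  # index 0 unused (DIMACS numbering)
        self.inc = 1.0      # current bump amount
        self.decay = decay  # assumed value; tuned per solver in practice

    def on_conflict(self, learnt_clause):
        """Bump every variable in the learnt clause, then 'decay' all scores."""
        for lit in learnt_clause:
            v = abs(lit)
            self.activity[v] += self.inc
            if self.activity[v] > 1e100:
                self._rescale()
        # Growing the increment is equivalent to multiplying every existing
        # activity by `decay`, but costs O(1) instead of O(n).
        self.inc /= self.decay

    def _rescale(self):
        """Scale everything down to avoid floating-point overflow."""
        self.activity = [a * 1e-100 for a in self.activity]
        self.inc *= 1e-100

    def pick_branch_var(self, assigned):
        """Return the unassigned variable with the highest activity, or None."""
        best = None
        for v in range(1, len(self.activity)):
            if v in assigned:
                continue
            if best is None or self.activity[v] > self.activity[best]:
                best = v
        return best


h = VSIDS(3)
h.on_conflict([1, -2])
h.on_conflict([-2, 3])
print(h.pick_branch_var(set()))  # 2
print(h.pick_branch_var({2}))    # 3
```

Variable 3 beats variable 1 despite both being bumped once, because the later bump used a larger increment: recent conflicts count for more.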

Benefits » Witness the progress in SAT » 1985, 10s of vars » 1995, 100s of vars » 2005, 1000s of vars » … » Not just Moore’s law at play!

Pitfalls » Competitions require lots of work » Organizers get limited (academic) reward » One solution is to also organize special issues devoted to the competition

Pitfalls » Competitions encourage incremental improvements » Don’t have them too often! » You may discover a local minimum » E.g. HMMs for speech recognition » Give out a best new solver prize?

The Chaff story » Industrial problems, SAT & UNSAT instances, 1st place » 2008: MiniSAT (son of zChaff) » 2007: RSAT (son of MiniSAT) » 2006: MiniSAT » 2005: SatELite GTI (MiniSAT + preprocessor) » 2004: zChaff (Forklift from 2003 was better) » 2003: Forklift » 2002: zChaff

Other issues » Man-power » Organizers » One is not enough? » Judges » All rules need interpretation » Compute-power » Find a friendly cluster

Other issues » Multiple tracks » SAT/UNSAT » Random/industrial/crafted » … » Certified/uncertified

Other issues » Holding problems back if possible » Release some problems so competitors can ensure solver compliance » But hold most back so competition is blind!

Other issues » Multiple phases » Too many solvers for all to compete with long timeouts » First phase to test correctness » Second phase to throw out the slow solvers (which cost you many timeouts) » Third phase to differentiate between the better solvers
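The phase structure can be sketched as a simple filter. In this minimal Python sketch each phase is a hypothetical `(benchmarks, timeout, keep)` tuple: any wrong answer disqualifies a solver, and survivors are ranked by problems solved and then total solve time, keeping the top `keep` (or all, when `keep` is `None`). The `run` callback is an assumption standing in for whatever harness actually executes a solver under the timeout and checks its answer.

```python
def run_phases(solvers, phases, run):
    """Filter solvers through successive competition phases.

    phases: list of (benchmarks, timeout, keep) tuples (hypothetical format).
    run(solver, benchmark, timeout) -> (answer_correct, solved, seconds).
    """
    alive = list(solvers)
    for benchmarks, timeout, keep in phases:
        scores = {}
        for solver in alive:
            results = [run(solver, b, timeout) for b in benchmarks]
            if not all(correct for correct, _, _ in results):
                continue  # any wrong answer disqualifies the solver
            solved = sum(1 for _, ok, _ in results if ok)
            seconds = sum(t for _, ok, t in results if ok)
            scores[solver] = (-solved, seconds)  # more solved first, then faster
        ranked = sorted(scores, key=scores.get)
        alive = ranked if keep is None else ranked[:keep]
    return alive


def fake_run(solver, bench, timeout):
    """Made-up results for illustration only."""
    table = {
        ("A", "p1"): (True, True, 1.0), ("B", "p1"): (False, True, 1.0),
        ("C", "p1"): (True, True, 2.0),
        ("A", "p2"): (True, True, 50.0), ("C", "p2"): (True, True, 10.0),
        ("A", "p3"): (True, False, timeout), ("C", "p3"): (True, True, 20.0),
    }
    return table[(solver, bench)]


phases = [(["p1"], 10, None),       # phase 1: correctness only
          (["p2", "p3"], 100, 1)]   # later phase: keep the best solver
print(run_phases(["A", "B", "C"], phases, fake_run))  # ['C']
```

Solver B is dropped in the first phase for a wrong answer; A then loses to C by failing to solve p3 within the timeout.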

Other issues » Reward function » <#completed, average time, …> » solution purse + speed purse » Points for each problem divided between those solvers that solve it » Getting buy in from competitors » It will (and should) evolve over time!
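Two of these reward functions are easy to state in code. This minimal Python sketch takes hypothetical results of the form `{solver: {problem: seconds or None}}` (None meaning unsolved) and computes a lexicographic ranking on <#completed, average time> and a solution purse in which each problem’s points are split equally among the solvers that solve it. The purse of 1000 points per problem is an arbitrary assumption, and the speed purse is not modelled because its exact definition varies between competitions.

```python
def lexicographic_ranking(results):
    """Rank solvers by most problems solved, then lowest average time on them."""
    def key(solver):
        times = [t for t in results[solver].values() if t is not None]
        avg = sum(times) / len(times) if times else float("inf")
        return (-len(times), avg)
    return sorted(results, key=key)


def solution_purse(results, purse=1000.0):
    """Split a fixed purse per problem equally among the solvers that solve it."""
    scores = {solver: 0.0 for solver in results}
    problems = sorted({p for per_solver in results.values() for p in per_solver})
    for p in problems:
        winners = [s for s, r in results.items() if r.get(p) is not None]
        for s in winners:
            scores[s] += purse / len(winners)
    return scores


# Made-up results for illustration only.
results = {
    "A": {"p1": 1.0, "p2": 5.0, "p3": None},
    "B": {"p1": 2.0, "p2": None, "p3": 30.0},
    "C": {"p1": 0.5, "p2": None, "p3": 10.0},
}
print(lexicographic_ranking(results))  # ['A', 'C', 'B']
print(solution_purse(results))
```

Under the purse, B and C tie even though C is faster, while A’s full 1000 points for p2, which nobody else solved, put it ahead: the purse rewards solving what others cannot rather than raw speed.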

Other issues » Prizes » Give out many! » Good for people’s CVs » Good motivator future years

Other issues » Open or closed source? » Open to share progress » Closed to get the best » Last year’s winner » Condition of entry » To see progress is being made!

Other issues » Smallest unsolved problem » Give a prize! » Timing » Run during the conference » Creates a buzz so people enter next year » Get a slot in the program to discuss results » Get a slot at the banquet to give out prizes

Conclusions » Benchmark libraries » When an area is several decades old, why wouldn’t you have one? » Competitions » Designed well, held not too frequently, & with buy-in from the community, why wouldn’t you?

Questions » Disagreements » Other opinions » Different experiences » …
