- Number of slides: 56

Santa Fe 6/18/03 Timothy L. Thomas 1

“UCF” Computing Capabilities at UNM HPC

Timothy L. Thomas, UNM Dept of Physics and Astronomy

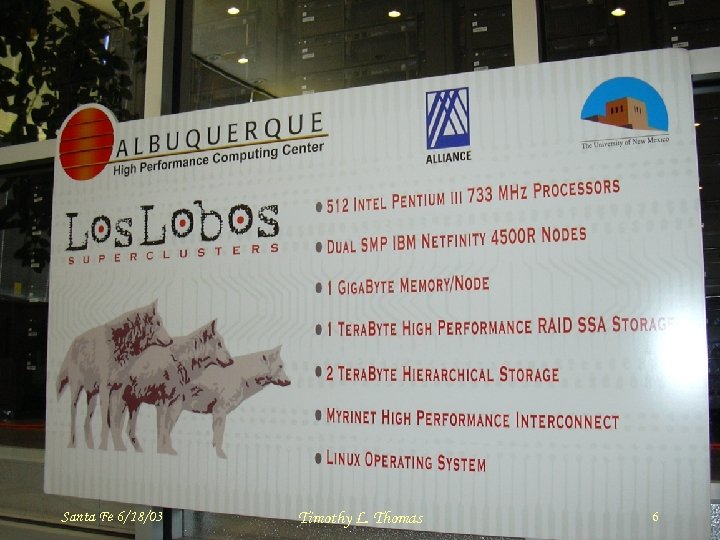

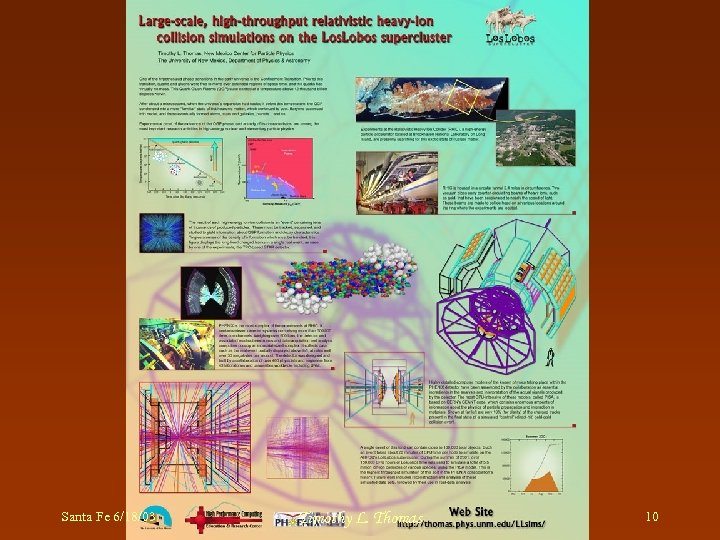

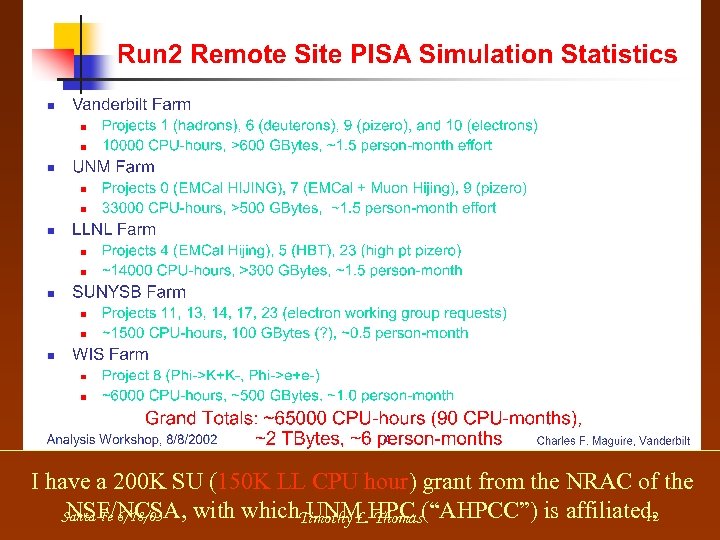

I have a 200K SU (150K LL CPU hour) grant from the NRAC of the NSF/NCSA, with which UNM HPC (“AHPCC”) is affiliated.

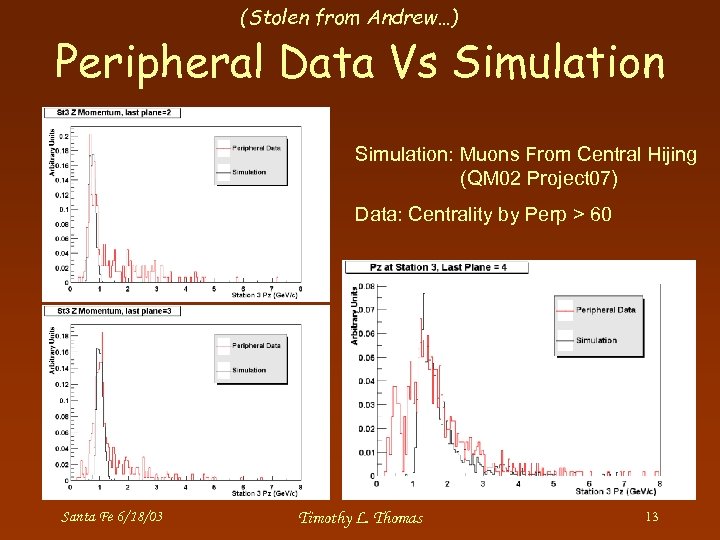

(Stolen from Andrew…) Peripheral Data vs. Simulation: Muons from Central HIJING (QM02 Project 07). Data: Centrality by Perp > 60

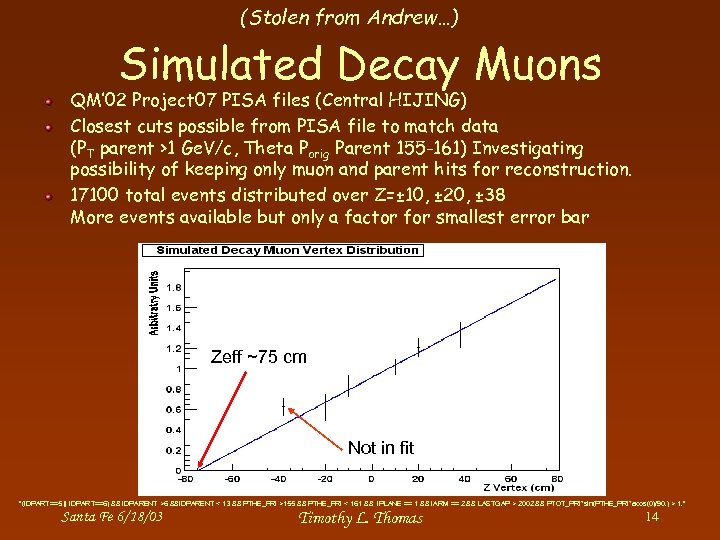

(Stolen from Andrew…) Simulated Decay Muons

- QM’02 Project 07 PISA files (Central HIJING)
- Closest cuts possible from PISA file to match data (PT parent > 1 GeV/c, Theta Porig Parent 155-161)
- Investigating possibility of keeping only muon and parent hits for reconstruction.
- 17100 total events distributed over Z = ±10, ±20, ±38
- More events available but only a factor for smallest error bar
- Zeff ~75 cm
- Not in fit

"(IDPART==5 || IDPART==6) && IDPARENT > 6 && IDPARENT < 13 && PTHE_PRI > 155 && PTHE_PRI < 161 && IPLANE == 1 && IARM == 2 && LASTGAP > 2002 && PTOT_PRI*sin(PTHE_PRI*acos(0)/90.) > 1."
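
A minimal Python sketch of the cut string above, assuming each PISA hit has been read into a dict keyed by the ntuple variable names (the dict layout and the function name `passes_decay_muon_cut` are illustrative, not part of PISA). The factor acos(0)/90 equals π/180, so the last term is the parent transverse momentum, PTOT_PRI·sin(θ), with PTHE_PRI in degrees. If the IDs follow the GEANT3 numbering, 5 and 6 are μ+ and μ−, and 7 through 12 are pions and kaons.

```python
import math

# Degrees-to-radians factor written the same way as in the cut string:
# acos(0) = pi/2, so acos(0)/90 = pi/180.
DEG_TO_RAD = math.acos(0.0) / 90.0


def passes_decay_muon_cut(hit):
    """Return True if a hit (dict of ntuple variables) passes the slide's cut."""
    # Transverse momentum of the primary parent, theta given in degrees.
    pt_parent = hit["PTOT_PRI"] * math.sin(hit["PTHE_PRI"] * DEG_TO_RAD)
    return (
        hit["IDPART"] in (5, 6)            # particle is a muon (assumed GEANT3 IDs)
        and 6 < hit["IDPARENT"] < 13       # parent ID between 7 and 12
        and 155 < hit["PTHE_PRI"] < 161    # parent polar angle window, degrees
        and hit["IPLANE"] == 1
        and hit["IARM"] == 2
        and hit["LASTGAP"] > 2002
        and pt_parent > 1.0                # PT parent > 1 GeV/c
    )


# Hypothetical hit values, for illustration only.
example_hit = {
    "IDPART": 5, "IDPARENT": 8, "PTHE_PRI": 158.0, "PTOT_PRI": 3.0,
    "IPLANE": 1, "IARM": 2, "LASTGAP": 2003,
}
print(passes_decay_muon_cut(example_hit))
```

Writing the cut as a function makes it easy to apply the same selection to both the simulated and the real sample.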

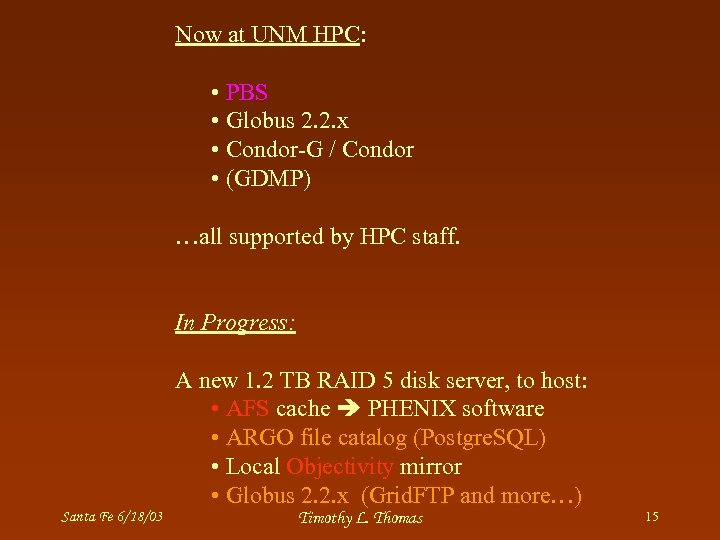

Now at UNM HPC:
- PBS
- Globus 2.2.x
- Condor-G / Condor
- (GDMP)

…all supported by HPC staff.

In Progress: A new 1.2 TB RAID 5 disk server, to host:
- AFS cache PHENIX software
- ARGO file catalog (PostgreSQL)
- Local Objectivity mirror
- Globus 2.2.x (GridFTP and more…)

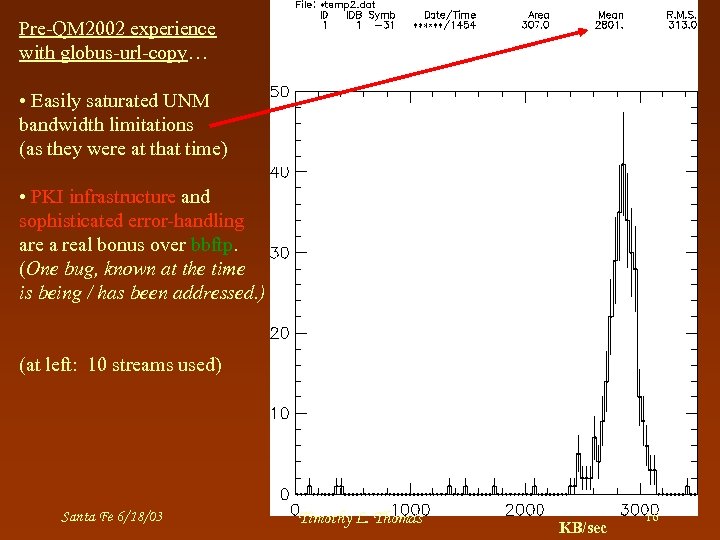

Pre-QM 2002 experience with globus-url-copy…
- Easily saturated UNM bandwidth limitations (as they were at that time).
- PKI infrastructure and sophisticated error-handling are a real bonus over bbftp. (One bug, known at the time, is being / has been addressed.)

(Plot at left: transfer rate in KB/sec; 10 streams used.)

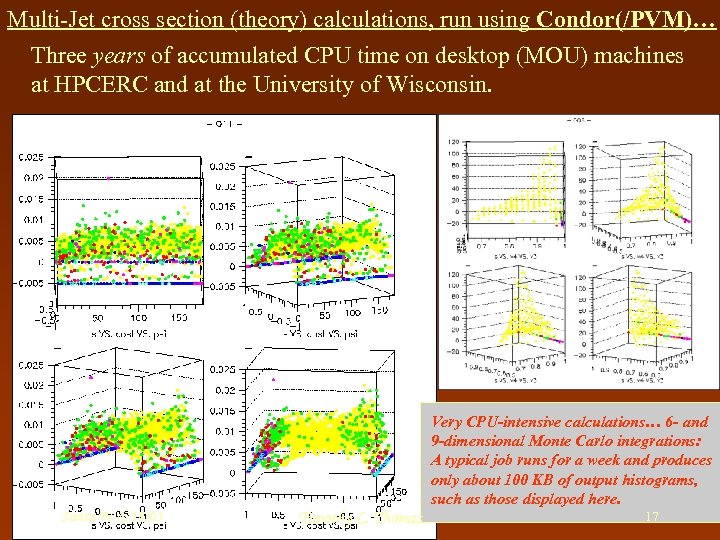

Multi-Jet cross section (theory) calculations, run using Condor(/PVM)…

Three years of accumulated CPU time on desktop (MOU) machines at HPCERC and at the University of Wisconsin.

Very CPU-intensive calculations… 6- and 9-dimensional Monte Carlo integrations: A typical job runs for a week and produces only about 100 KB of output histograms, such as those displayed here.
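
To make "6-dimensional Monte Carlo integration" concrete, here is a toy sketch in Python. The integrand is a stand-in (the product of the coordinates over the unit hypercube, whose exact integral is (1/2)^6), not the multi-jet cross section; the function names are illustrative. It shows why such jobs are CPU-bound with tiny output: the statistical error falls only as 1/sqrt(N), while the result is a handful of numbers.

```python
import math
import random


def mc_integrate(f, dim, n_samples, seed=0):
    """Plain Monte Carlo estimate of the integral of f over the unit hypercube.

    Returns (estimate, standard_error).
    """
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_samples):
        x = [rng.random() for _ in range(dim)]
        value = f(x)
        total += value
        total_sq += value * value
    mean = total / n_samples
    variance = max(total_sq / n_samples - mean * mean, 0.0)
    return mean, math.sqrt(variance / n_samples)


def toy_integrand(x):
    """Stand-in integrand: product of the coordinates (exact integral 0.5**dim)."""
    return math.prod(x)


estimate, std_err = mc_integrate(toy_integrand, dim=6, n_samples=20000, seed=1)
print(f"estimate = {estimate:.5f} +/- {std_err:.5f}, exact = {0.5 ** 6:.5f}")
```

Independent seeds give independent estimates that can be averaged afterwards, which is presumably what makes this kind of work a natural fit for cycle-scavenging on desktop machines.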

LLDIMU.HPC.UNM.EDU

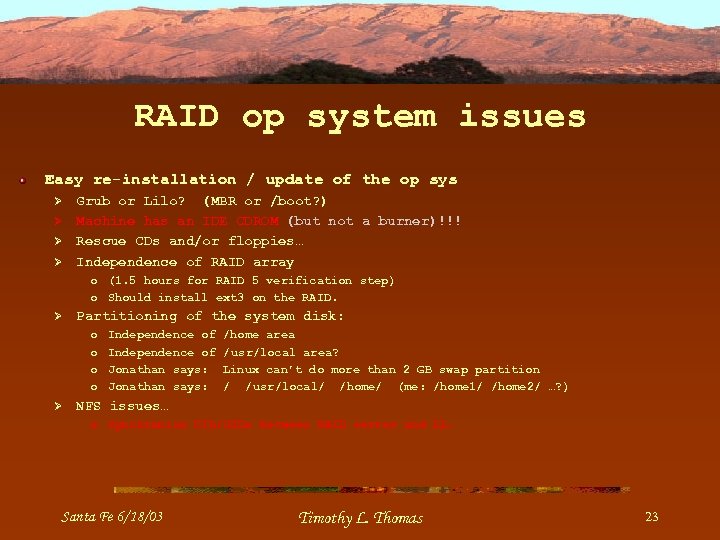

RAID op system issues

Easy re-installation / update of the op sys
- Grub or Lilo? (MBR or /boot?)
- Machine has an IDE CDROM (but not a burner)!!! Rescue CDs and/or floppies…

Independence of RAID array
- (1.5 hours for RAID 5 verification step)
- Should install ext3 on the RAID.

Partitioning of the system disk:
- Jonathan says: Linux can’t do more than 2 GB swap partition
- / /usr/local/ /home/ (me: /home1/ /home2/ …?)
- Independence of /home area, /usr/local area?

NFS issues…
- Synchronize UID/GIDs between RAID server and LL.

RAID op system issues

Compilers and glibc…

RAID op system issues

File systems…
- What quotas?
- Ext3? (Quotas working OK?)
- ReiserFS? (Need special kernel modules for this?)

RAID op system issues

Support for the following apps:
- RAID software
- Globus…
- PHENIX application software
- Objectivity
  - gcc 2.95.3
- PostgreSQL
- OpenAFS, Kerberos 4

RAID op system issues

Security issues…
- IP#: fixed or DHCP?
- What services to run or avoid?
  - NFS…
- Tripwire or equiv…
- Kerberos (for OpenAFS)…
- Globus…
- ipchains firewall rules; /etc/services; /etc/ xinetd config; etc…

RAID op system issues

Application-level issues…
- Which framework? Both?
- Who maintains framework and how can this job be properly divided up among locals?
- SHOULD THE RAID ARRAY BE PARTITIONED, a la the PHENIX counting house buffer boxes’ /a and /b file systems?

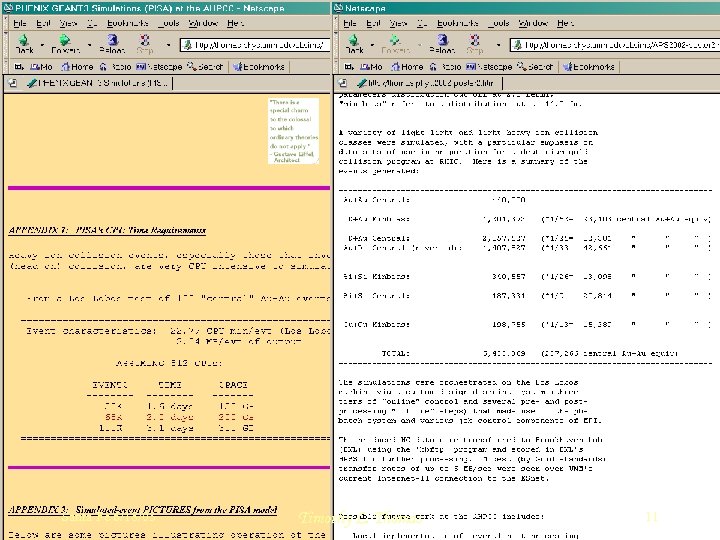

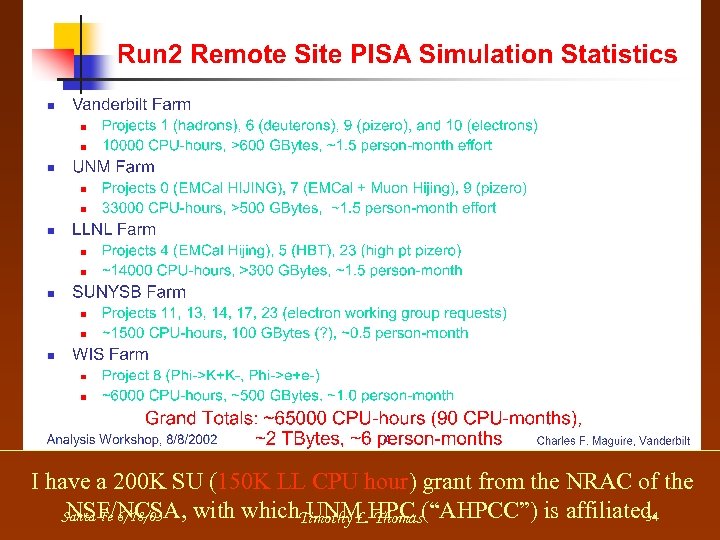

![Resources](https://present5.com/presentation/21bb4e84db1828107f9ee33582fca852/image-29.jpg)

Resources

Assume 90 KByte/event and 0.1 GByte/hour/CPU

| Trigger | Lumi [nb^-1] | #Event [M] | Size [GByte] | CPU [hour] | 100 CPU [day] | Signal flags (mu-mu, mu, e-mu) |
|---|---|---|---|---|---|---|
| ERT_electron | 193 | 13.0 | | 11700 | 4.9 | 1 |
| MUIDN_1D_&BBCLL1 | 238 | 34.0 | | 30600 | 12.8 | 1 1 1 |
| MUIDN_1D&MUIDS_1D&BBCLL1 | 59 | 0.2 | 18 | 180 | 0.1 | 1 |
| MUIDN_1D1S&BBCL1 | 254 | 4.8 | | 4320 | 1.8 | 1 1 1 |
| MUIDN_1D1S&NTCN | 230 | 18.0 | | 16200 | 6.8 | 1 |
| MUIDS_1D&BBCLL1 | 274 | 10.7 | | 9630 | 4.0 | 1 1 1 |
| MUIDS_1D1S&BBCLL1 | 293 | 1.3 | | 1170 | 0.5 | 1 |
| MUIDS_1D1S&NTCS | 278 | 5.0 | | 4500 | 1.9 | 1 |
| ALL PRDF | 350 | 6600.0 | 33,000 | 330,000 | 137.5 | |

Filtered events can be analyzed, but not ALL PRDF events.

Many triggers overlap.
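
The filtered-trigger rows follow directly from the two stated assumptions (90 KByte/event and 0.1 GByte/hour/CPU). A minimal Python sketch of that arithmetic, with illustrative function and constant names and decimal units (1 GByte = 10^6 KByte) assumed:

```python
EVENT_SIZE_KB = 90.0        # stated assumption: 90 KByte/event
GB_PER_CPU_HOUR = 0.1       # stated assumption: 0.1 GByte/hour/CPU
N_CPUS = 100                # the "100 CPU [day]" column


def resources(n_events_millions):
    """Return (size in GByte, CPU hours, days on N_CPUS) for a trigger sample."""
    # 1e6 events * KByte/event = GByte (decimal units assumed).
    size_gb = n_events_millions * EVENT_SIZE_KB
    cpu_hours = size_gb / GB_PER_CPU_HOUR
    days = cpu_hours / N_CPUS / 24.0
    return size_gb, cpu_hours, days


# Event counts taken from the table above (in millions of events).
for trigger, n_events in [("ERT_electron", 13.0),
                          ("MUIDN_1D1S&NTCN", 18.0),
                          ("MUIDS_1D1S&NTCS", 5.0)]:
    size_gb, cpu_hours, days = resources(n_events)
    print(f"{trigger:18s} {size_gb:8.0f} GB {cpu_hours:8.0f} CPU-h {days:5.1f} days")
```

For ERT_electron this gives 11700 CPU hours and about 4.9 days on 100 CPUs, matching the table. The ALL PRDF row does not follow from 90 KByte/event, so it presumably rests on a different size estimate.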

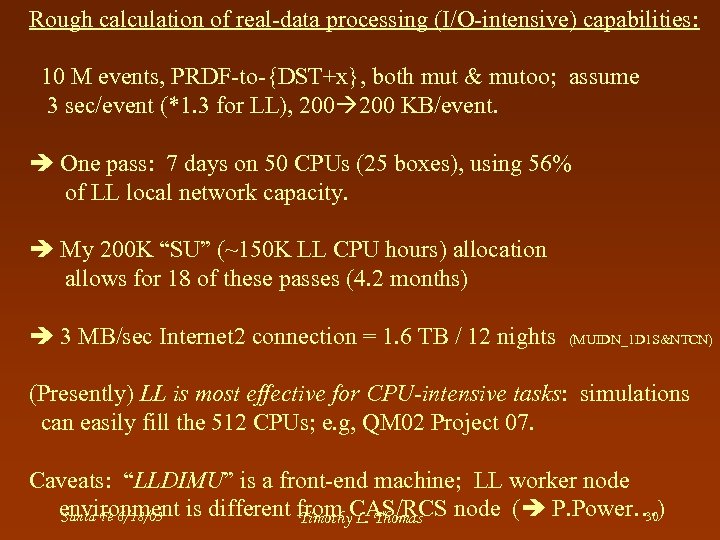

Rough calculation of real-data processing (I/O-intensive) capabilities:

10 M events, PRDF-to-{DST+x}, both mut & mutoo; assume 3 sec/event (*1.3 for LL), 200 KB/event.

One pass: 7 days on 50 CPUs (25 boxes), using 56% of LL local network capacity.

My 200K “SU” (~150K LL CPU hours) allocation allows for 18 of these passes (4.2 months).

3 MB/sec Internet2 connection = 1.6 TB / 12 nights (MUIDN_1D1S&NTCN)

(Presently) LL is most effective for CPU-intensive tasks: simulations can easily fill the 512 CPUs; e.g., QM02 Project 07.

Caveats: “LLDIMU” is a front-end machine; LL worker node environment is different from CAS/RCS node (P. Power…)
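
The rough calculation above can be reproduced in a few lines of Python. This is a sketch with illustrative names. The quoted 7 days and 18 passes come out when 3 sec/event is used as is; applying the 1.3 LL factor in the same formula gives roughly 9 days and 14 passes instead. Decimal units (1 TB = 10^6 MB) are assumed for the transfer estimate.

```python
N_EVENTS = 10_000_000          # 10 M events per pass
SEC_PER_EVENT = 3.0            # assumed reconstruction time per event
LL_FACTOR = 1.3                # "*1.3 for LL"
N_CPUS = 50                    # 25 dual-CPU boxes
ALLOCATION_CPU_HOURS = 150_000 # ~150K LL CPU hours


def pass_cost(sec_per_event):
    """Return (CPU hours, wall-clock days on N_CPUS) for one pass."""
    cpu_hours = N_EVENTS * sec_per_event / 3600.0
    wall_days = cpu_hours / N_CPUS / 24.0
    return cpu_hours, wall_days


cpu_hours, wall_days = pass_cost(SEC_PER_EVENT)
n_passes = ALLOCATION_CPU_HOURS / cpu_hours
cpu_hours_ll, wall_days_ll = pass_cost(SEC_PER_EVENT * LL_FACTOR)
n_passes_ll = ALLOCATION_CPU_HOURS / cpu_hours_ll

# Transfer estimate: 1.6 TB at 3 MB/sec, spread over 12 nights.
TRANSFER_TB = 1.6
RATE_MB_PER_SEC = 3.0
NIGHTS = 12
hours_per_night = TRANSFER_TB * 1e6 / RATE_MB_PER_SEC / 3600.0 / NIGHTS

print(f"one pass: {cpu_hours:.0f} CPU-h, {wall_days:.1f} days, {n_passes:.0f} passes")
print(f"with LL factor: {wall_days_ll:.1f} days, {n_passes_ll:.1f} passes")
print(f"transfer: {hours_per_night:.1f} hours per night")
```

The transfer line works out to a bit over 12 hours per night, which is consistent with moving 1.6 TB in 12 nights at 3 MB/sec.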

On UNM Grid activities

T. L. Thomas

I have a 200K SU (150K LL CPU hour) grant from the NRAC of the NSF/NCSA, with which UNM HPC (“AHPCC”) is affiliated.

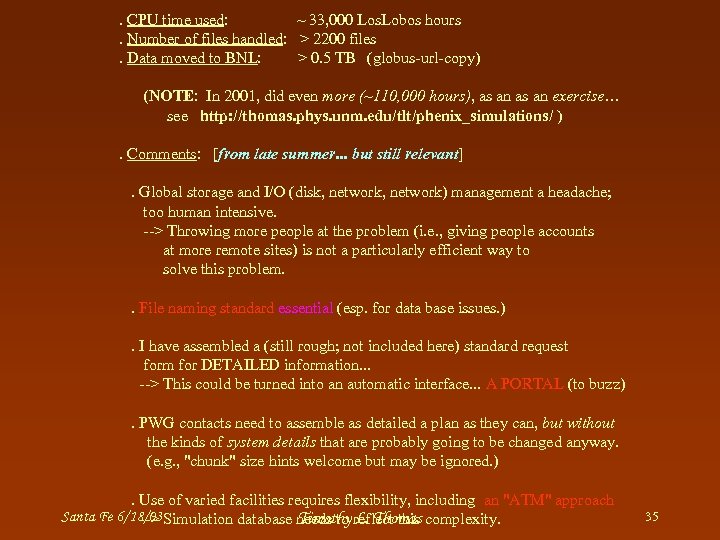

- CPU time used: ~33,000 LosLobos hours
- Number of files handled: > 2200 files
- Data moved to BNL: > 0.5 TB (globus-url-copy)

(NOTE: In 2001, did even more (~110,000 hours), as an exercise… see http://thomas.phys.unm.edu/tlt/phenix_simulations/ )

Comments: [from late summer... but still relevant]
- Global storage and I/O (disk, network) management a headache; too human intensive.
  --> Throwing more people at the problem (i.e., giving people accounts at more remote sites) is not a particularly efficient way to solve this problem.
- File naming standard essential (esp. for data base issues).
- I have assembled a (still rough; not included here) standard request form for DETAILED information...
  --> This could be turned into an automatic interface... A PORTAL (to buzz).
- PWG contacts need to assemble as detailed a plan as they can, but without the kinds of system details that are probably going to be changed anyway. (E.g., "chunk" size hints welcome but may be ignored.)
- Use of varied facilities requires flexibility, including an "ATM" approach.
  --> Simulation database needs to reflect this complexity.

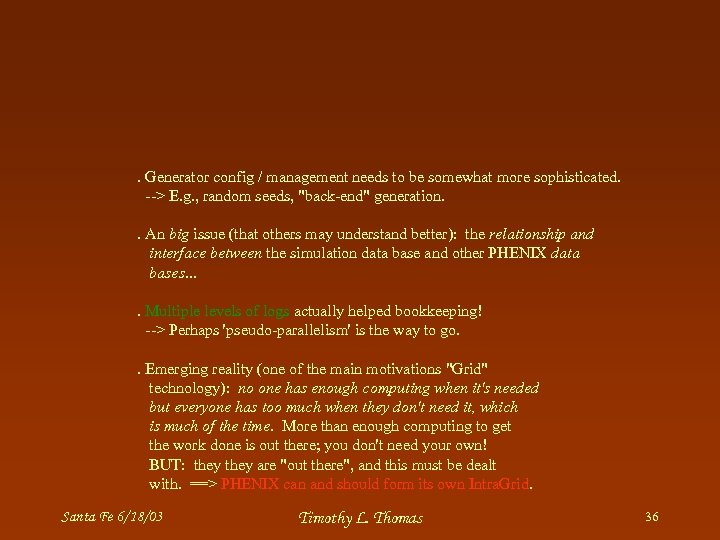

- Generator config / management needs to be somewhat more sophisticated.
  --> E.g., random seeds, "back-end" generation.
- A big issue (that others may understand better): the relationship and interface between the simulation data base and other PHENIX data bases.
- Multiple levels of logs actually helped bookkeeping!
  --> Perhaps 'pseudo-parallelism' is the way to go.
- Emerging reality (one of the main motivations for "Grid" technology): no one has enough computing when it's needed but everyone has too much when they don't need it, which is much of the time. More than enough computing to get the work done is out there; you don't need your own! BUT: they are "out there", and this must be dealt with.
  ==> PHENIX can and should form its own IntraGrid.
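
On the random-seed point: one possible approach (an assumption for illustration, not what was actually used for these productions) is to derive each job's generator seed deterministically from a production tag and a job index, so that seeds are reproducible from the bookkeeping alone and do not depend on which site ran the job. A minimal Python sketch, with the hypothetical names `job_seed` and `production_tag`:

```python
import hashlib


def job_seed(production_tag, job_index, n_bits=63):
    """Derive a reproducible seed from a production tag and a job index.

    The same (tag, index) pair always gives the same seed; n_bits should be
    reduced if the event generator only accepts smaller seeds.
    """
    key = f"{production_tag}:{job_index}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % (2 ** n_bits)


# Hypothetical production tag, for illustration only.
seeds = [job_seed("example_production", i) for i in range(5)]
print(seeds)
```

Because the seed depends only on the tag and index, a lost or failed job can be regenerated identically anywhere, and the seed never needs to be shipped around by hand.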

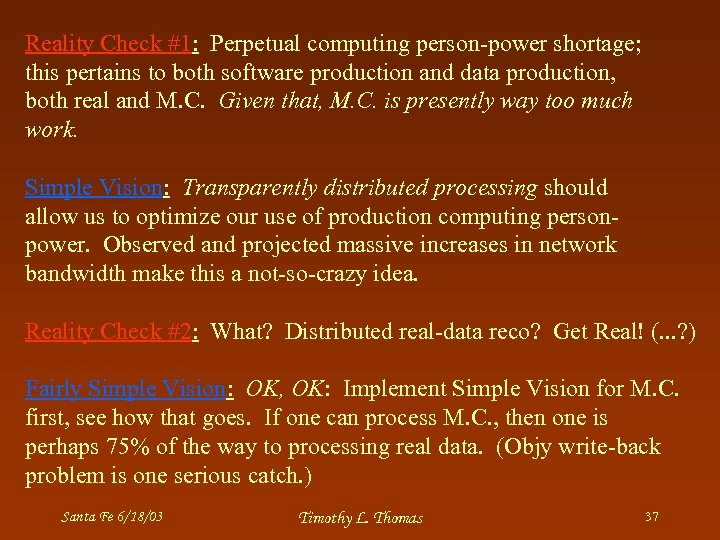

Reality Check #1: Perpetual computing person-power shortage; this pertains to both software production and data production, both real and M.C. Given that, M.C. is presently way too much work.

Simple Vision: Transparently distributed processing should allow us to optimize our use of production computing person-power. Observed and projected massive increases in network bandwidth make this a not-so-crazy idea.

Reality Check #2: What? Distributed real-data reco? Get Real! (...?)

Fairly Simple Vision: OK, OK: Implement Simple Vision for M.C. first, see how that goes. If one can process M.C., then one is perhaps 75% of the way to processing real data. (Objy write-back problem is one serious catch.)

(The following slides are from a presentation that I was invited to give to the UNM-led multi-institutional “Internet2 Day” this past March…)

Internet2 and the Grid: The Future of Computing for Big Science at UNM

Timothy L. Thomas, UNM Dept of Physics and Astronomy

Grokking The Grid

Grok, v.: To perceive a subject so deeply that one no longer knows it, but rather understands it on a fundamental level. Coined by Robert Heinlein in his 1961 novel, Stranger in a Strange Land.

(Quotes from a colleague of mine…)
- Feb 2002: “This grid stuff is garbage.”
- Dec 2002: “Hey, these grid visionaries are serious!”

So what is a “Grid”?

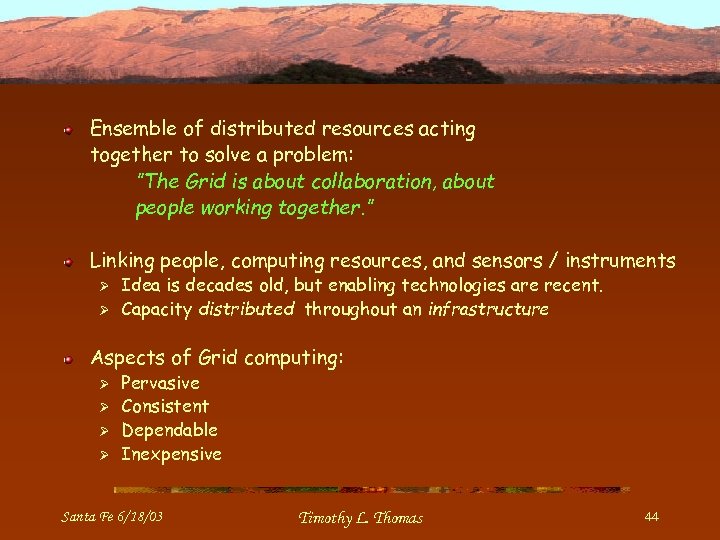

Ensemble of distributed resources acting together to solve a problem:

“The Grid is about collaboration, about people working together.”

Linking people, computing resources, and sensors / instruments
- Idea is decades old, but enabling technologies are recent.
- Capacity distributed throughout an infrastructure

Aspects of Grid computing:
- Pervasive
- Consistent
- Dependable
- Inexpensive

Virtual Organizations (VOs)
- Security implications

Ian Foster’s Three Requirements:
- VOs that span multiple administrative domains
- Participant services based on open standards
- Delivery of serious Quality of Service

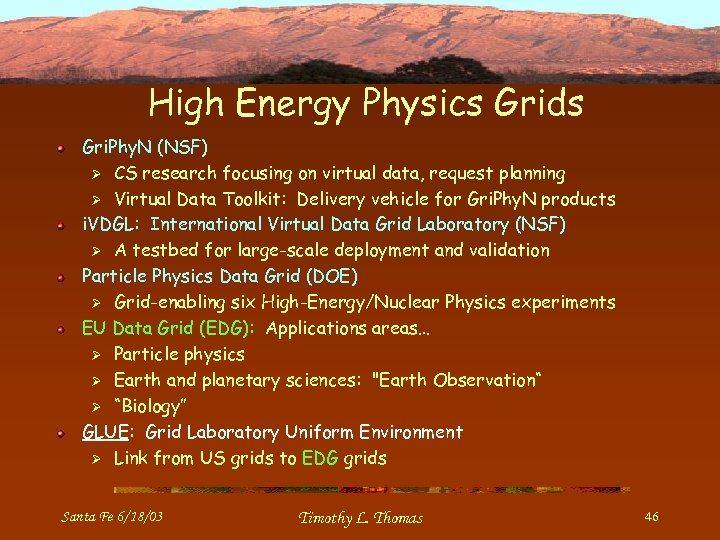

High Energy Physics Grids

GriPhyN (NSF)
- CS research focusing on virtual data, request planning
- Virtual Data Toolkit: Delivery vehicle for GriPhyN products

iVDGL: International Virtual Data Grid Laboratory (NSF)
- A testbed for large-scale deployment and validation

Particle Physics Data Grid (DOE)
- Grid-enabling six High-Energy/Nuclear Physics experiments

EU Data Grid (EDG): Application areas…
- Particle physics
- Earth and planetary sciences: “Earth Observation”
- “Biology”

GLUE: Grid Laboratory Uniform Environment
- Link from US grids to EDG grids

<<< Grid Hype >>> (“Grids: Grease or Glue?”)

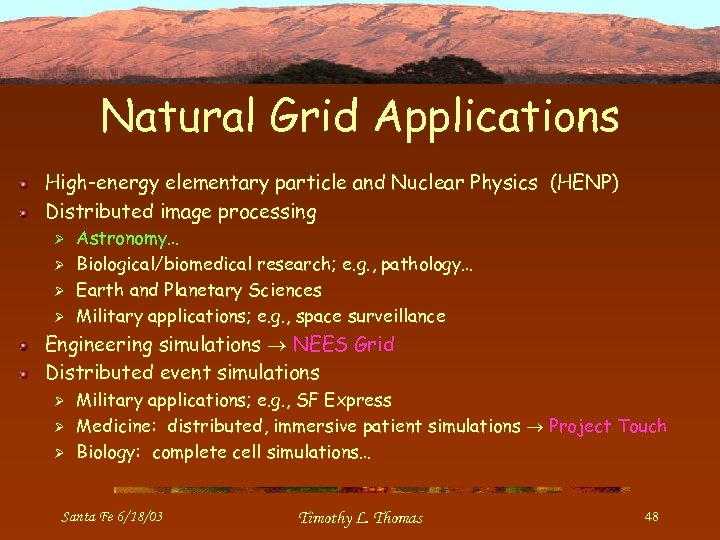

Natural Grid Applications

High-energy elementary particle and Nuclear Physics (HENP)

Distributed image processing
- Astronomy…
- Biological/biomedical research; e.g., pathology…
- Earth and Planetary Sciences
- Military applications; e.g., space surveillance

Engineering simulations
- NEES Grid

Distributed event simulations
- Military applications; e.g., SF Express
- Medicine: distributed, immersive patient simulations
  - Project Touch
- Biology: complete cell simulations…

Processing requirements: Two examples

Example 1: High-energy Nuclear Physics
- 10’s of petabytes of data per year
- 10’s of teraflops of distributed CPU power
  - Comparable to today’s largest supercomputers…

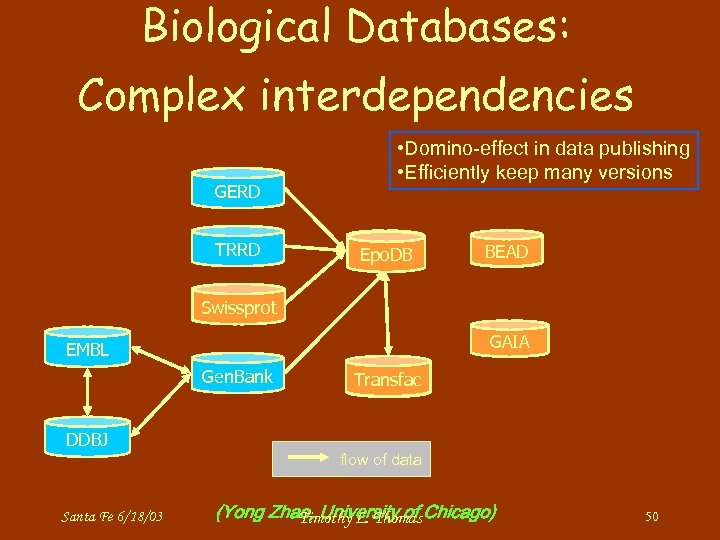

Biological Databases: Complex interdependencies
- Domino-effect in data publishing
- Efficiently keep many versions

(Figure: flow of data among GERD, TRRD, EpoDB, BEAD, Swissprot, GAIA, EMBL, GenBank, Transfac, DDBJ.)

(Yong Zhao, University of Chicago)

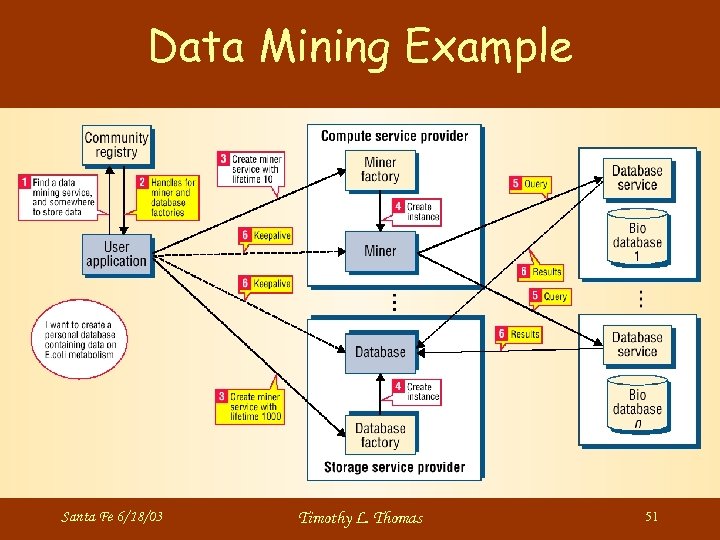

Data Mining Example

…and the role of Internet2.

It is clear that advanced networking will play a critical role in the development of an intergrid and its eventual evolution into The Grid…
- Broadband capacity
- Advanced networking protocols
- Well-defined, finely graded, clearly-costed high Qualities of Service

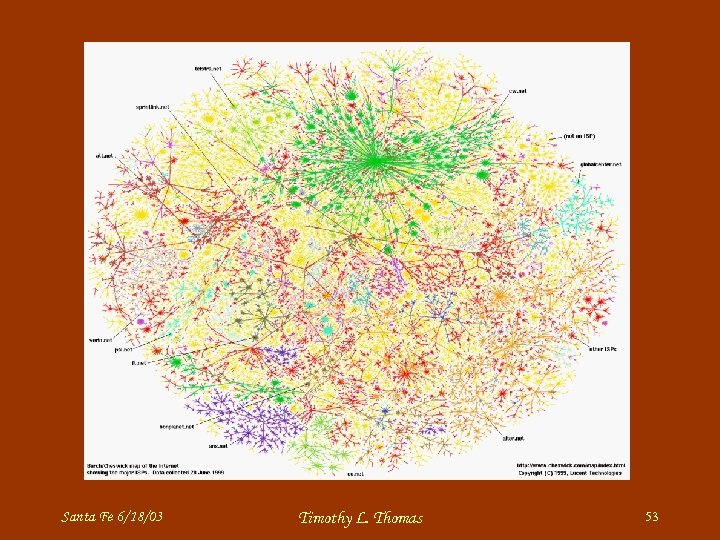

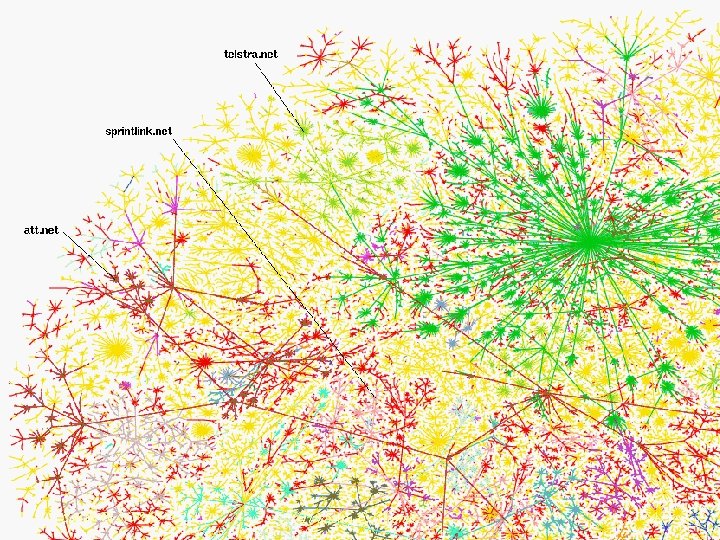

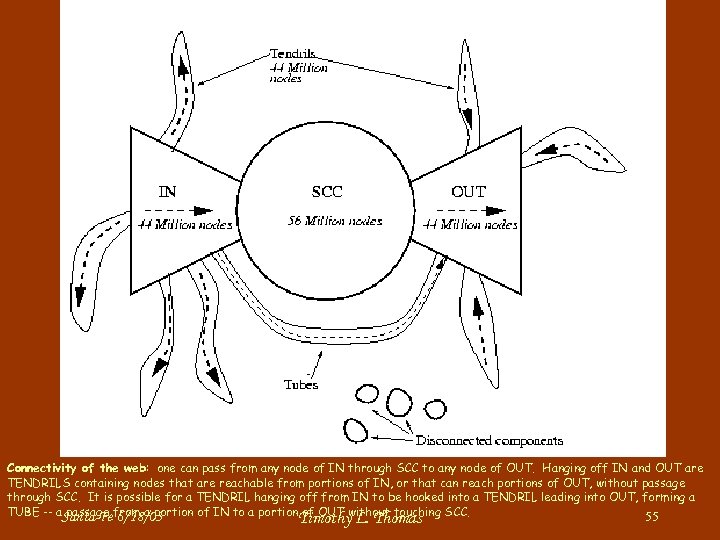

Connectivity of the web: one can pass from any node of IN through SCC to any node of OUT. Hanging off IN and OUT are TENDRILS containing nodes that are reachable from portions of IN, or that can reach portions of OUT, without passage through SCC. It is possible for a TENDRIL hanging off from IN to be hooked into a TENDRIL leading into OUT, forming a TUBE: a passage from a portion of IN to a portion of OUT without touching SCC.
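
The IN / SCC / OUT picture can be computed for any directed graph with two breadth-first searches from a node known to lie in the core. A minimal Python sketch on a toy graph follows; the function names and the example graph are illustrative assumptions. Everything outside the three main pieces (tendrils, tubes, disconnected parts) is lumped into "OTHER" here.

```python
from collections import deque


def reachable(graph, start):
    """Return the set of nodes reachable from start by following edges."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def reverse(graph):
    """Return the graph with every edge reversed (all nodes appear as keys)."""
    rev = {}
    for src, dsts in graph.items():
        rev.setdefault(src, set())
        for dst in dsts:
            rev.setdefault(dst, set()).add(src)
    return rev


def bow_tie(graph, core_node):
    """Split the nodes into SCC, IN, OUT and OTHER relative to core_node."""
    rev = reverse(graph)
    forward = reachable(graph, core_node)    # nodes the core can reach
    backward = reachable(rev, core_node)     # nodes that can reach the core
    scc = forward & backward
    all_nodes = set(rev)
    return {
        "SCC": scc,
        "IN": backward - scc,
        "OUT": forward - scc,
        "OTHER": all_nodes - forward - backward,
    }


# Toy graph: a -> {b, c} <-> each other -> d, plus a tube a -> t -> d.
toy_graph = {"a": {"b", "t"}, "b": {"c"}, "c": {"b", "d"}, "t": {"d"}}
parts = bow_tie(toy_graph, "b")
print(parts)
```

In the toy graph, node t is reachable from IN and leads to OUT without touching the core, so it plays the role of a TUBE.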

…In other words: barely predictable.

But no doubt inevitable, disruptive, transformative, …and very exciting!
