- Number of slides: 63

SAN Disk Metrics Measured on Sun Ultra & HP PA-RISC Servers, StorageWorks MAs & EVAs, using iozone V3.152

Current Situation

- UNIX External Storage has migrated to SAN
- Oracle Data File Sizes: 1 to 36 GB (R&D)
- Oracle Servers are predominantly Sun “Entry Level”
- HPQ StorageWorks: 24 MAs, 2 EVAs
- 2Q03 SAN LUN restructuring using RAID 5 only
- Oracle DBAs continue to request RAID 1+0
- Roadmap for future: needed

Purpose Of Filesystem Benchmarks

- Find Best Performance
  - Storage, Server, HW options, OS, and Filesystem
- Find Best Price/Performance
  - Restrain Costs
- Replace “Opinions” with Factual Analysis
- Continue Abbott UNIX Benchmarks
  - Filesystems, Disks, and SAN
    - Benchmarking began in 1999

Goals

- Measure Current Capabilities
- Find Bottlenecks
- Find Best Price/Performance
- Set Cost Expectations For Customers
  - Provide a Menu of Configurations
- Find Simplest Configuration
- Satisfy Oracle DBA Expectations
  - Harmonize Abbott Oracle Filesystem Configuration
- Create a Road Map for Data Storage

Preconceptions

- UNIX SysAdmins
  - RAID 1+0 does not vastly outperform RAID 5
  - Distribute Busy Filesystems among LUNs
  - At least 3+ LUNs should be used for Oracle
- DBAs
  - RAID 1+0 is Required for Production
  - I Paid For It, So I Should Get It
  - Filesystem Expansion On Demand

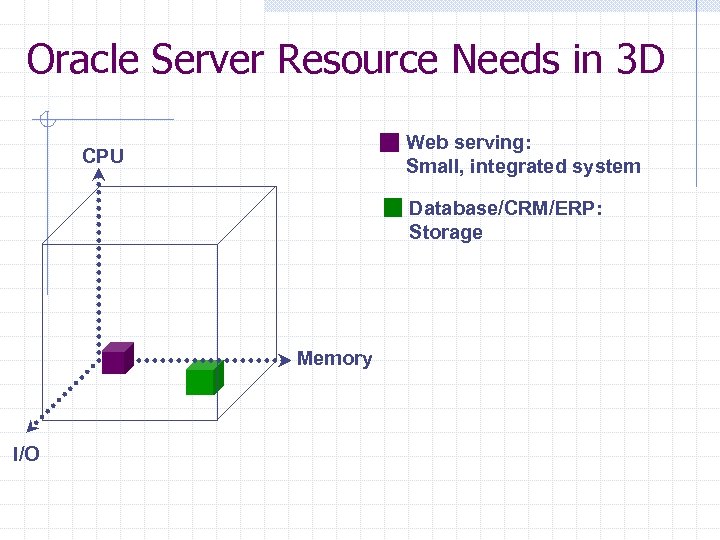

Oracle Server Resource Needs in 3D

- Web serving: Small, integrated system
- CPU
- Database/CRM/ERP: Storage
- Memory
- I/O

Sun Servers for Oracle Databases

- Sun UltraSPARC UPA Bus Entry Level Servers
  - Ultra 2, 2 x 300 MHz UltraSPARC-II, SBus, 2 GB
  - 220R, 2 x 450 MHz UltraSPARC-II, PCI, 2 GB
  - 420R, 4 x 450 MHz UltraSPARC-II, PCI, 4 GB
- Enterprise Class Sun UPA Bus Servers
  - E3500, 4 x 400 MHz UltraSPARC-II, UPA, SBus, 8 GB
- Sun UltraSPARC Fireplane (Safari) Entry Level Servers
  - 280R, 2 x 750 MHz UltraSPARC-III, Fireplane, PCI, 8 GB
  - 480R, 4 x 900 MHz UltraSPARC-III, Fireplane, PCI, 32 GB
  - V880, 8 x 900 MHz UltraSPARC-III, Fireplane, PCI, 64 GB
- Other UNIX
  - HP L1000, 2 x 450 PA-RISC, Astro, PCI, 1024 MB

Oracle UNIX Filesystems

- Cooperative Standard between UNIX and R&D DBAs
- 8 Filesystems in 3 LUNs
  - /exp/array.1/oracle/

StorageWorks SAN Storage Nodes

- StorageWorks: DEC -> Compaq -> HPQ
  - A traditional DEC Shop
- Initial SAN equipment vendor
  - Brocade Switches resold under StorageWorks label
- Only vendor with complete UNIX coverage (2000)
  - Sun, HP, SGI, Tru64 UNIX, Linux
  - EMC, Hitachi, etc. could not match UNIX coverage
- Enterprise Modular Array (MA): “Stone Soup” SAN
  - Buy the controller, then 2 to 6 disk shelves, then disks
  - 2-3 disk shelf configs led to problem RAIDsets, which were finally reconfigured in 2Q 2003
- Enterprise Virtual Array (EVA): Next Generation

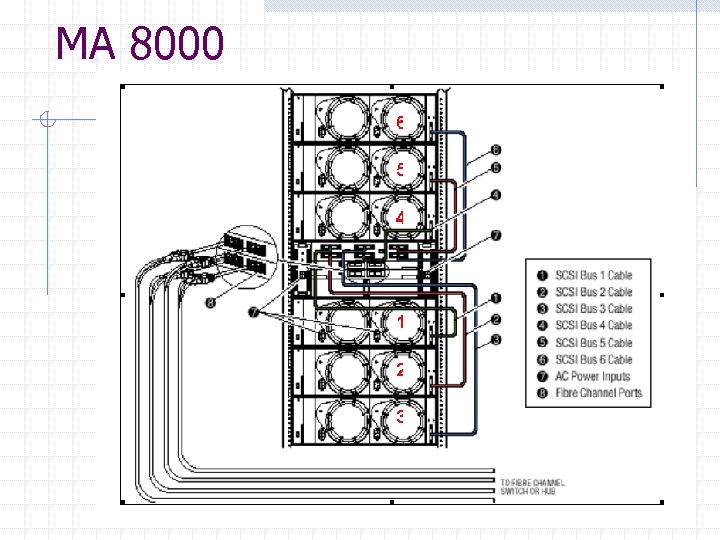

MA8000

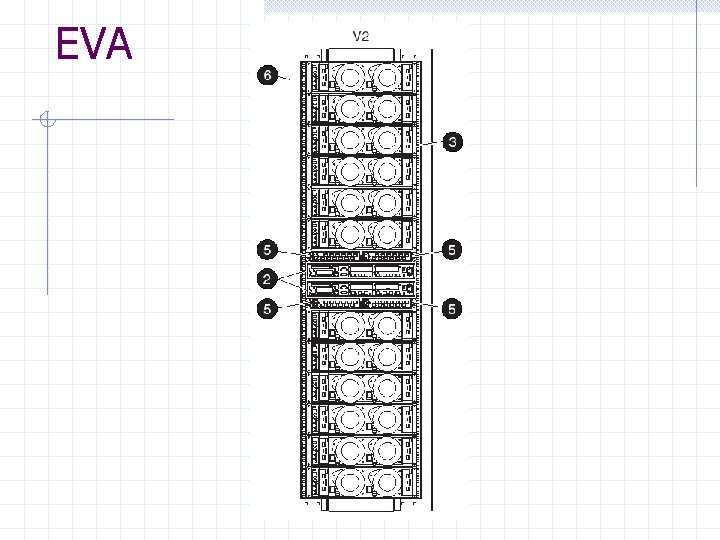

EVA

2Q03 LUN Restructuring – 2nd Gen SAN

- “Far” LUNs pulled back to “near” Data Center
- 6 disk, 6 shelf MA RAID 5 RAIDsets
- LUNs are partitioned from RAIDsets
- LUNs are sized as multiples of disk size
- Multiple LUNs from different RAIDsets
- Busy filesystems are distributed among LUNs
- Server and Storage Node SAN Fabric Connections mated to common switch

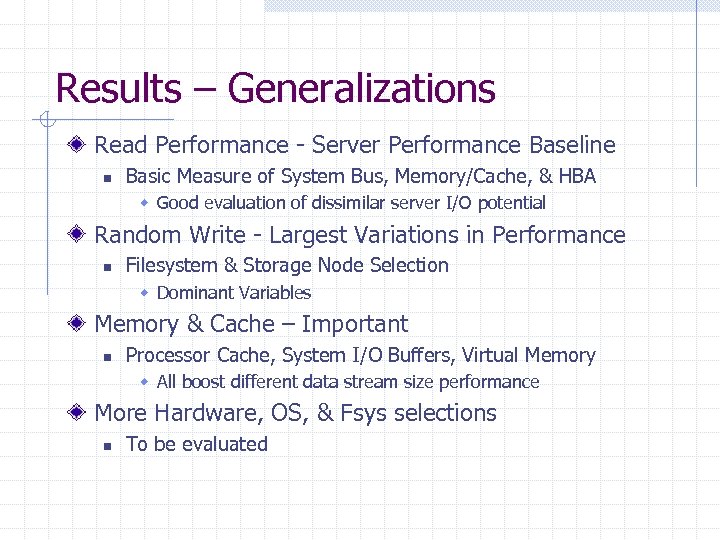

Results – Generalizations

- Read Performance: Server Performance Baseline
  - Basic Measure of System Bus, Memory/Cache, & HBA
    - Good evaluation of dissimilar server I/O potential
- Random Write: Largest Variations in Performance
  - Filesystem & Storage Node Selection
    - Dominant Variables
- Memory & Cache: Important
  - Processor Cache, System I/O Buffers, Virtual Memory
    - All boost different data stream size performance
- More Hardware, OS, & Fsys selections
  - To be evaluated

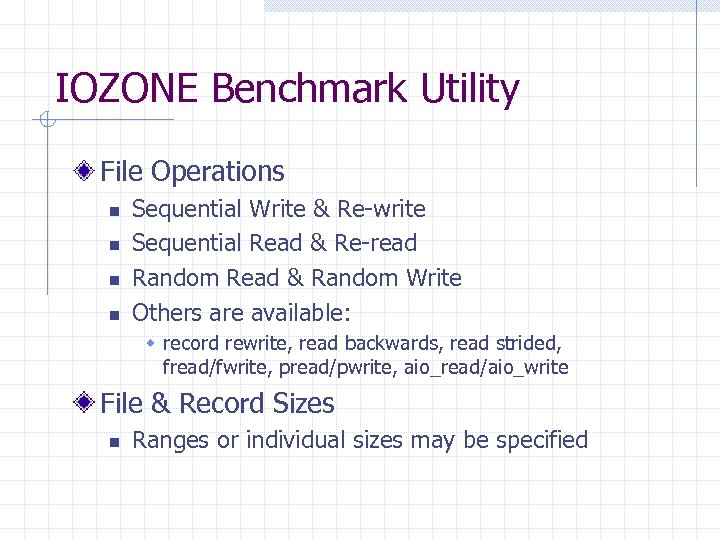

IOZONE Benchmark Utility

- File Operations
  - Sequential Write & Re-write
  - Sequential Read & Re-read
  - Random Read & Random Write
  - Others are available:
    - record rewrite, read backwards, read strided, fread/fwrite, pread/pwrite, aio_read/aio_write
- File & Record Sizes
  - Ranges or individual sizes may be specified
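
As a rough illustration of how the file operations and size ranges above map onto an invocation, the sketch below assembles an iozone command line in Python. The flags shown (`-a`, `-n`, `-g`, `-s`, `-r`, `-i`, `-f`) are standard iozone options, but the helper name `build_iozone_command`, the target path, and the sizes are hypothetical; the slides do not record the exact command used for these measurements.

```python
def build_iozone_command(target_file, tests=(0, 1, 2),
                         file_size_kb=None, record_size_kb=None,
                         min_file_kb=None, max_file_kb=None):
    """Return an argv list for iozone (hypothetical helper, not from the slides).

    tests: iozone test numbers; 0 = write/re-write, 1 = read/re-read,
           2 = random read/random write.
    If file_size_kb is None, automatic mode (-a) sweeps a range of file and
    record sizes, optionally bounded by min_file_kb / max_file_kb.
    Otherwise a single file size (and optional record size) is used.
    """
    cmd = ["iozone"]
    if file_size_kb is None:
        cmd.append("-a")                         # automatic mode: sweep sizes
        if min_file_kb is not None:
            cmd += ["-n", str(min_file_kb)]      # smallest file size, in KB
        if max_file_kb is not None:
            cmd += ["-g", str(max_file_kb)]      # largest file size, in KB
    else:
        cmd += ["-s", str(file_size_kb)]         # one file size, in KB
        if record_size_kb is not None:
            cmd += ["-r", str(record_size_kb)]   # one record size, in KB
    for test in tests:
        cmd += ["-i", str(test)]                 # select each file operation
    cmd += ["-f", target_file]                   # temporary file on the filesystem under test
    return cmd


if __name__ == "__main__":
    # Hypothetical path on the filesystem being measured.
    print(" ".join(build_iozone_command("/mnt/test/iozone.tmp",
                                        min_file_kb=64, max_file_kb=1048576)))
```

The returned list can be passed to `subprocess.run` on a host where iozone is installed.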

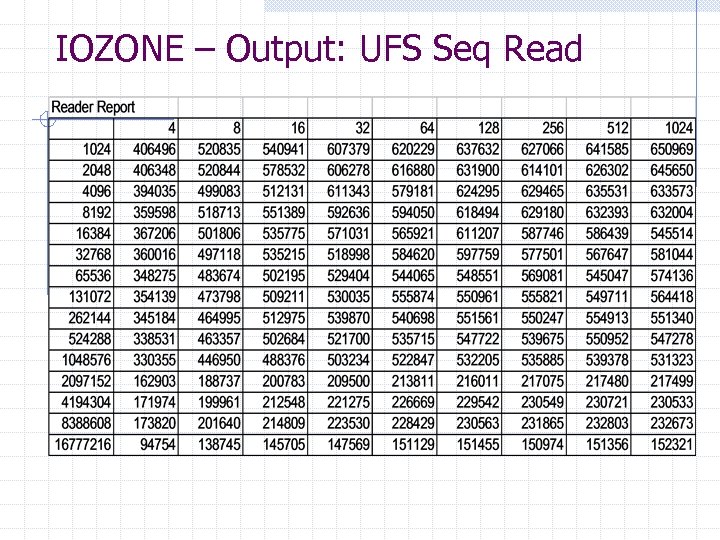

IOZONE – Output: UFS Seq Read

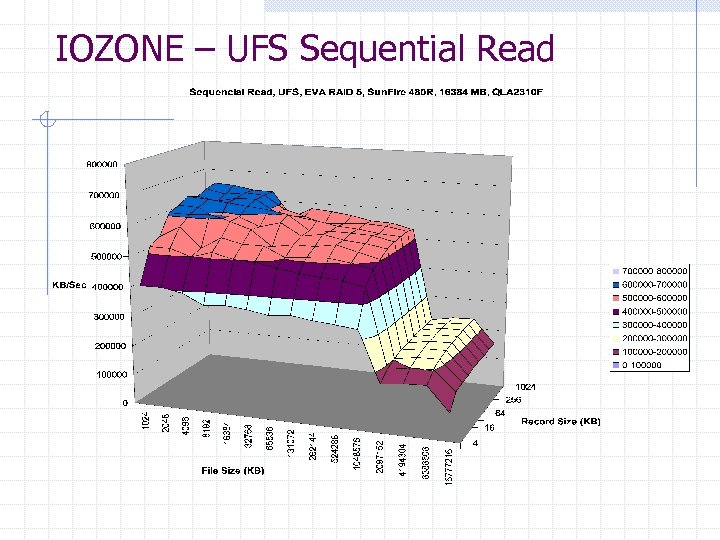

IOZONE – UFS Sequential Read

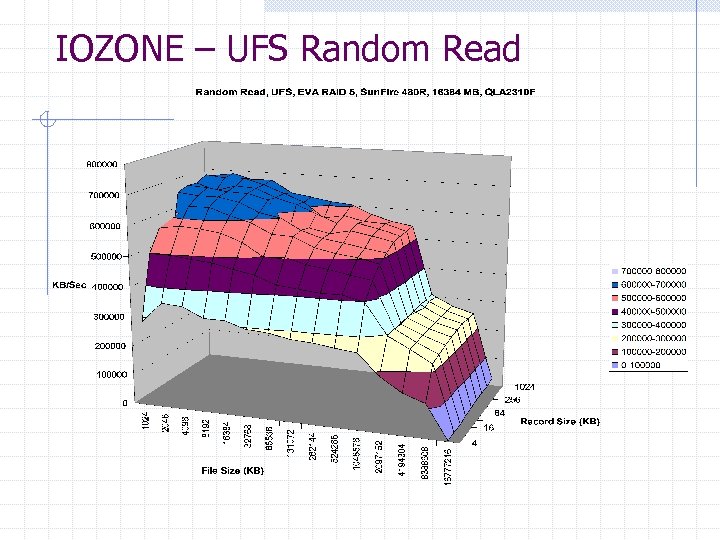

IOZONE – UFS Random Read

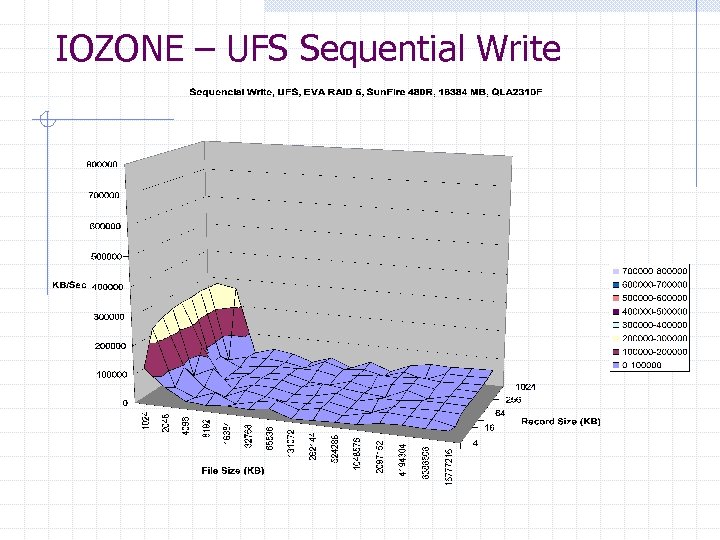

IOZONE – UFS Sequential Write

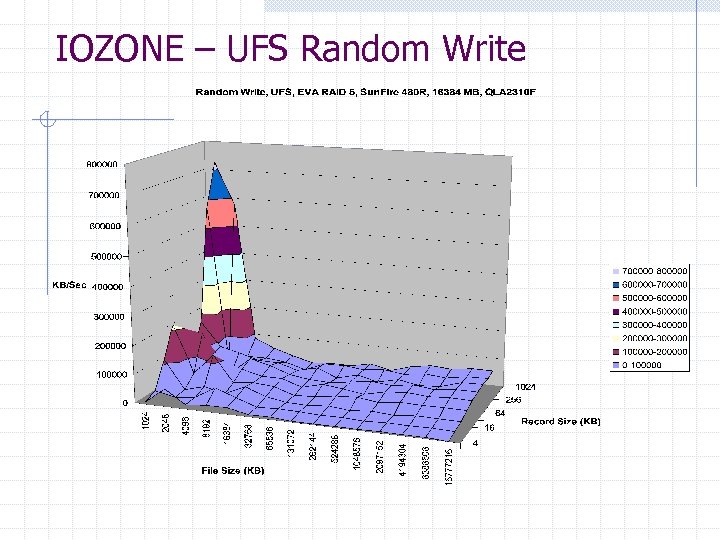

IOZONE – UFS Random Write

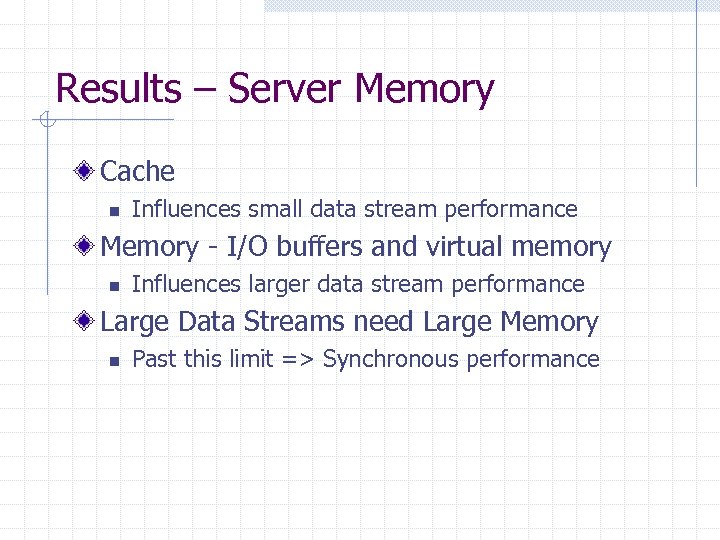

Results – Server Memory

- Cache
  - Influences small data stream performance
- Memory: I/O buffers and virtual memory
  - Influences larger data stream performance
- Large Data Streams need Large Memory
  - Past this limit => Synchronous performance

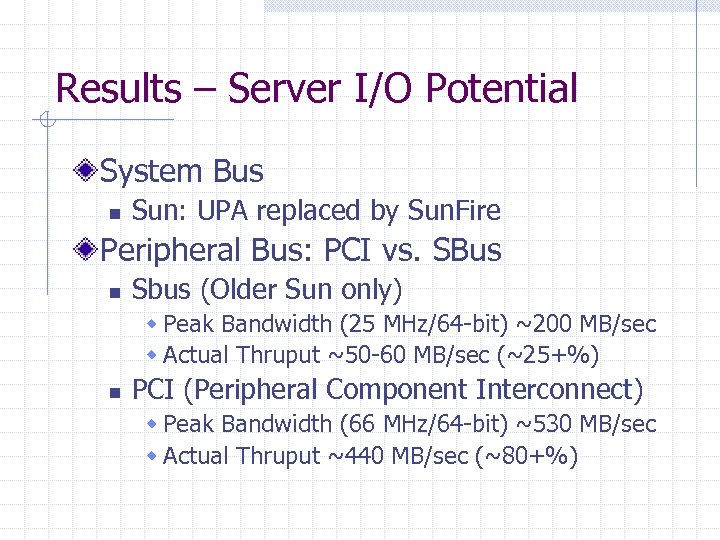

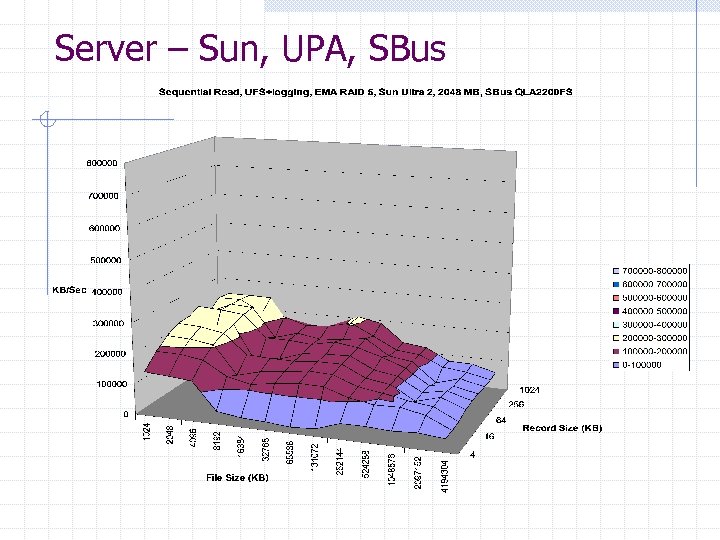

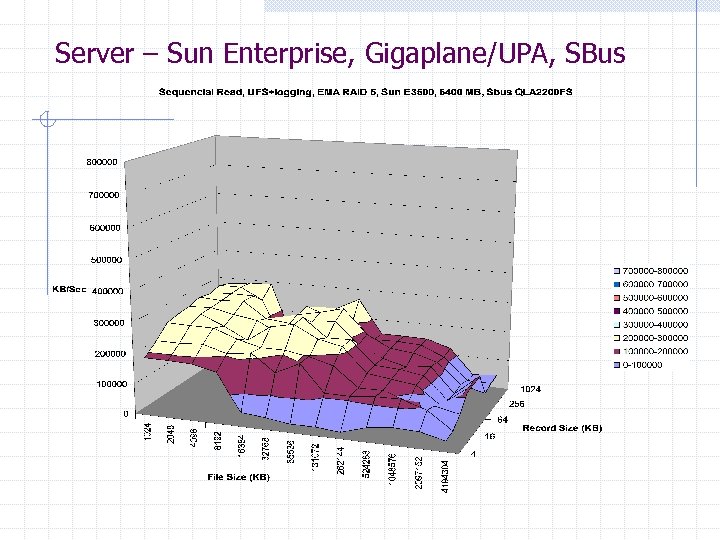

Results – Server I/O Potential

- System Bus
  - Sun: UPA replaced by SunFire
- Peripheral Bus: PCI vs. SBus
  - SBus (Older Sun only)
    - Peak Bandwidth (25 MHz/64-bit) ~200 MB/sec
    - Actual Thruput ~50-60 MB/sec (~25+%)
  - PCI (Peripheral Component Interconnect)
    - Peak Bandwidth (66 MHz/64-bit) ~530 MB/sec
    - Actual Thruput ~440 MB/sec (~80+%)
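
The peak figures above follow from clock rate times bus width, and the percentages from dividing measured throughput by that peak. A minimal sketch of the arithmetic, assuming one transfer per clock cycle and taking 55 MB/sec as the midpoint of the quoted 50-60 MB/sec SBus range (the function names are illustrative only):

```python
def peak_bandwidth_mb_s(clock_mhz, width_bits):
    """Theoretical peak in MB/sec, assuming one transfer per clock cycle."""
    return clock_mhz * width_bits / 8


def efficiency(actual_mb_s, peak_mb_s):
    """Fraction of the theoretical peak that was actually achieved."""
    return actual_mb_s / peak_mb_s


sbus_peak = peak_bandwidth_mb_s(25, 64)   # 200.0 MB/sec
pci_peak = peak_bandwidth_mb_s(66, 64)    # 528.0 MB/sec, quoted as ~530

sbus_eff = efficiency(55, sbus_peak)      # midpoint of the 50-60 MB/sec range
pci_eff = efficiency(440, pci_peak)

if __name__ == "__main__":
    print(f"SBus: peak {sbus_peak:.0f} MB/sec, efficiency {sbus_eff:.0%}")
    print(f"PCI:  peak {pci_peak:.0f} MB/sec, efficiency {pci_eff:.0%}")
```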

Server – Sun, UPA, SBus

Server – Sun Enterprise, Gigaplane/UPA, SBus

Server – Sun, UPA, PCI

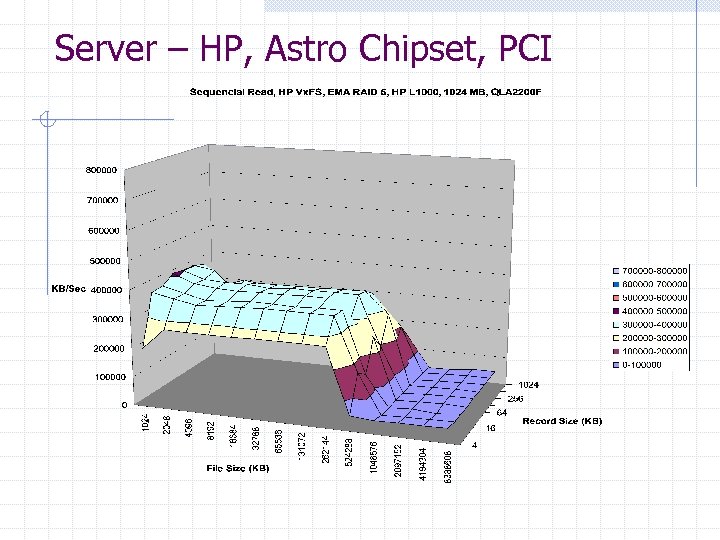

Server – HP, Astro Chipset, PCI

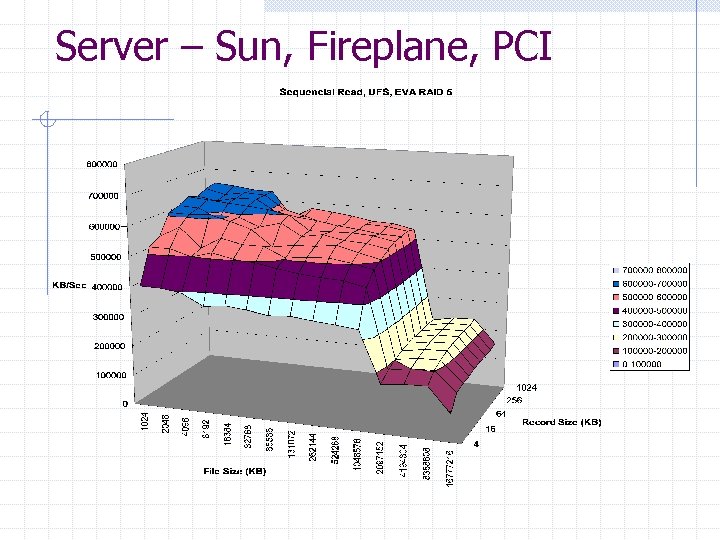

Server – Sun, Fireplane, PCI

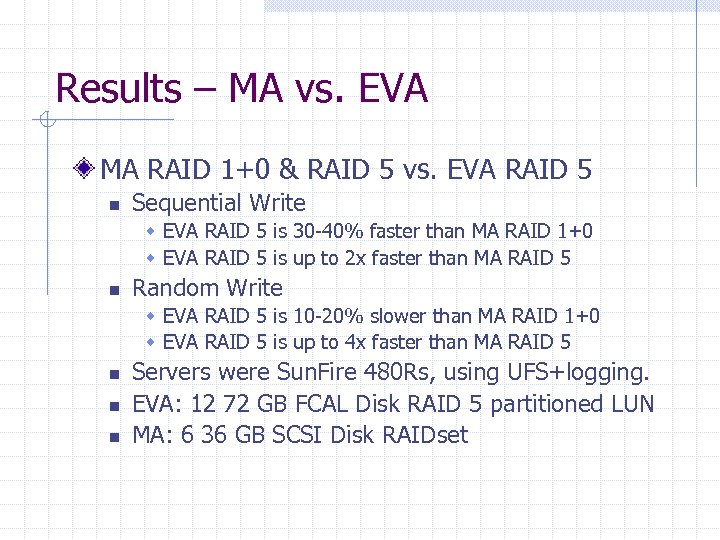

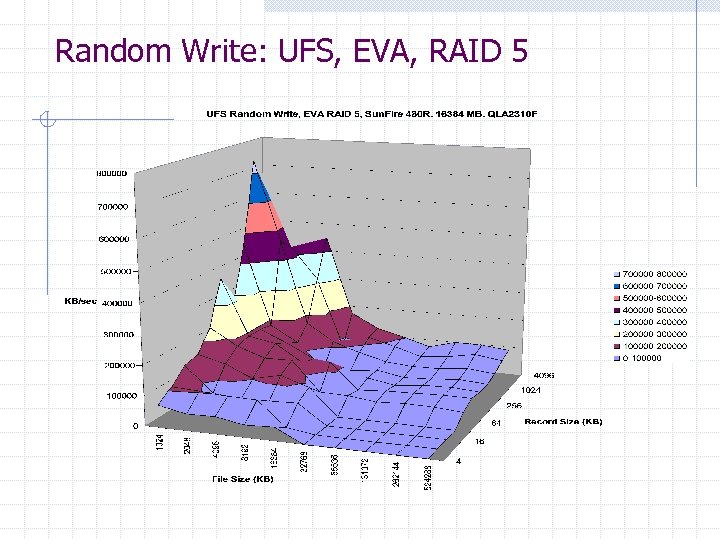

Results – MA vs. EVA

- MA RAID 1+0 & RAID 5 vs. EVA RAID 5
  - Sequential Write
    - EVA RAID 5 is 30-40% faster than MA RAID 1+0
    - EVA RAID 5 is up to 2x faster than MA RAID 5
  - Random Write
    - EVA RAID 5 is 10-20% slower than MA RAID 1+0
    - EVA RAID 5 is up to 4x faster than MA RAID 5
- Servers were SunFire 480Rs, using UFS+logging.
- EVA: 12 x 72 GB FCAL Disk RAID 5 partitioned LUN
- MA: 6 x 36 GB SCSI Disk RAIDset

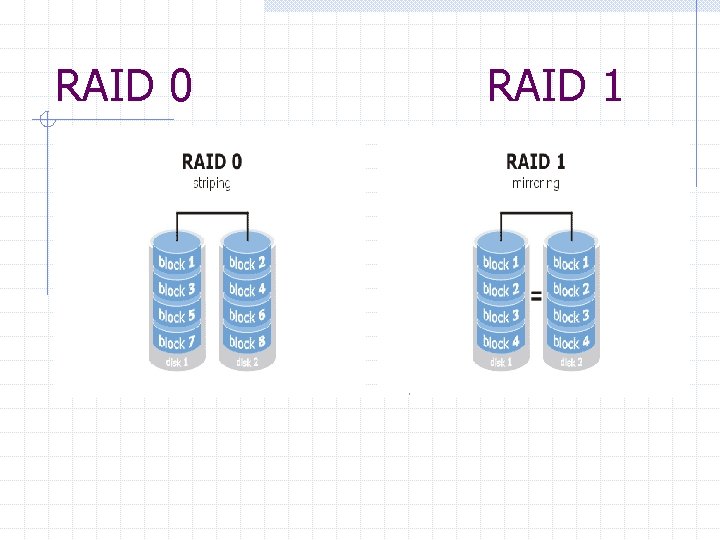

RAID 0 RAID 1

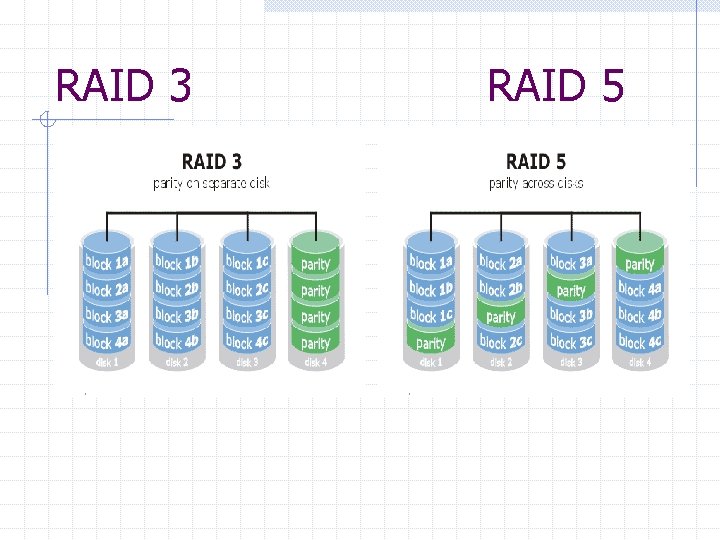

RAID 3 RAID 5

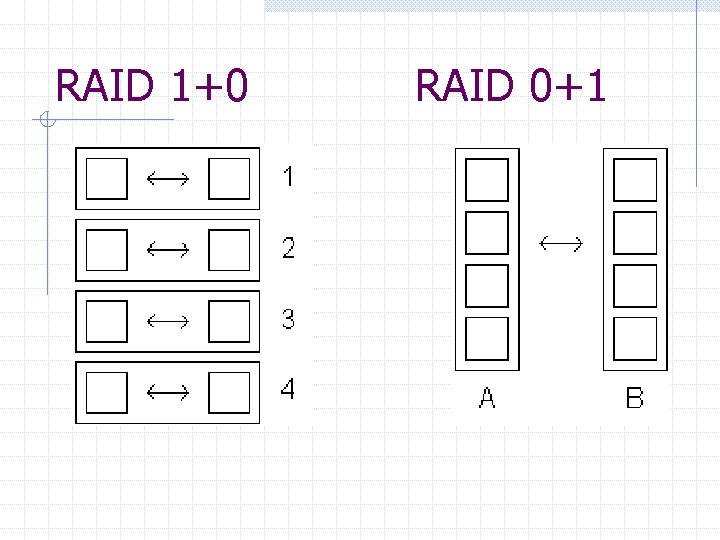

RAID 1+0 RAID 0+1

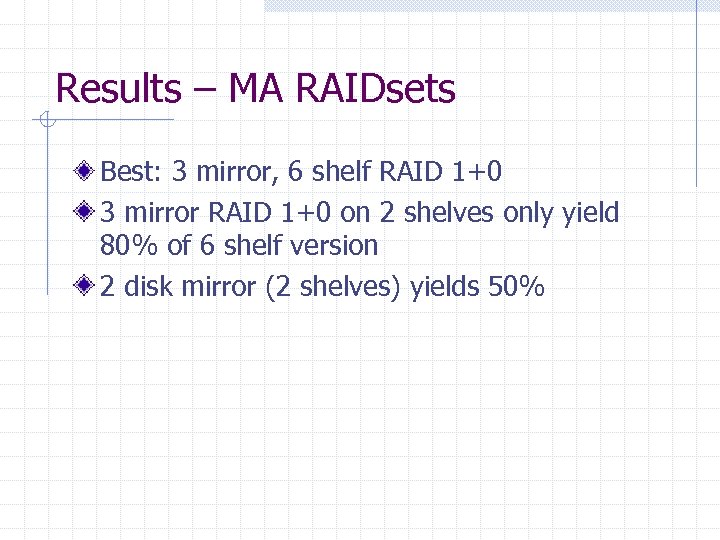

Results – MA RAIDsets

- Best: 3 mirror, 6 shelf RAID 1+0
- 3 mirror RAID 1+0 on 2 shelves yields only 80% of the 6 shelf version
- 2 disk mirror (2 shelves) yields 50%

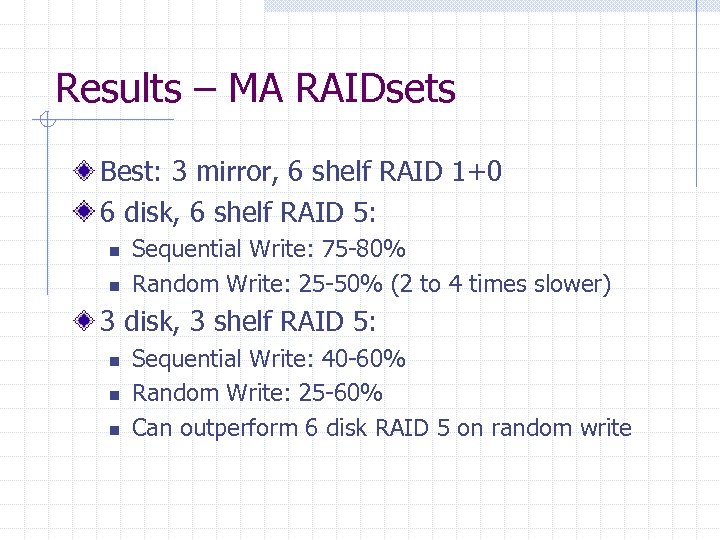

Results – MA RAIDsets

- Best: 3 mirror, 6 shelf RAID 1+0 (percentages below are relative to this configuration)
- 6 disk, 6 shelf RAID 5:
  - Sequential Write: 75-80%
  - Random Write: 25-50% (2 to 4 times slower)
- 3 disk, 3 shelf RAID 5:
  - Sequential Write: 40-60%
  - Random Write: 25-60%
  - Can outperform 6 disk RAID 5 on random write

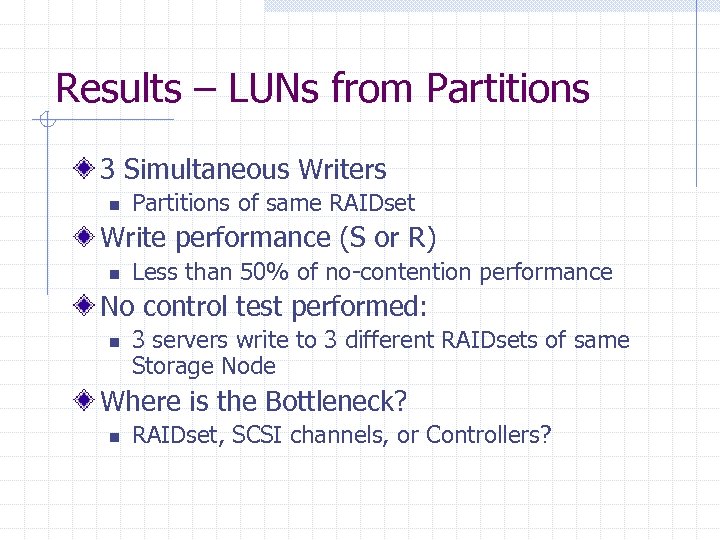

Results – LUNs from Partitions

- 3 Simultaneous Writers
  - Partitions of same RAIDset
- Write performance (sequential or random)
  - Less than 50% of no-contention performance
- No control test performed:
  - 3 servers write to 3 different RAIDsets of same Storage Node
- Where is the Bottleneck?
  - RAIDset, SCSI channels, or Controllers?

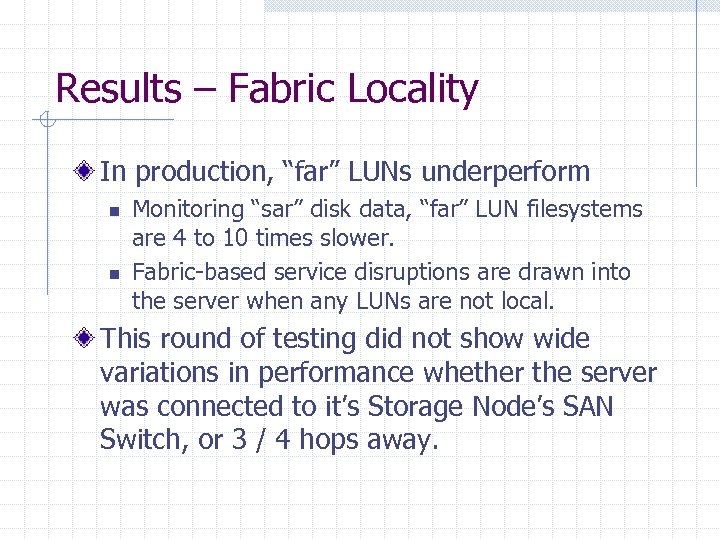

Results – Fabric Locality

- In production, “far” LUNs underperform
  - Monitoring “sar” disk data, “far” LUN filesystems are 4 to 10 times slower.
  - Fabric-based service disruptions are drawn into the server when any LUNs are not local.
- This round of testing did not show wide variations in performance whether the server was connected to its Storage Node’s SAN Switch, or 3/4 hops away.

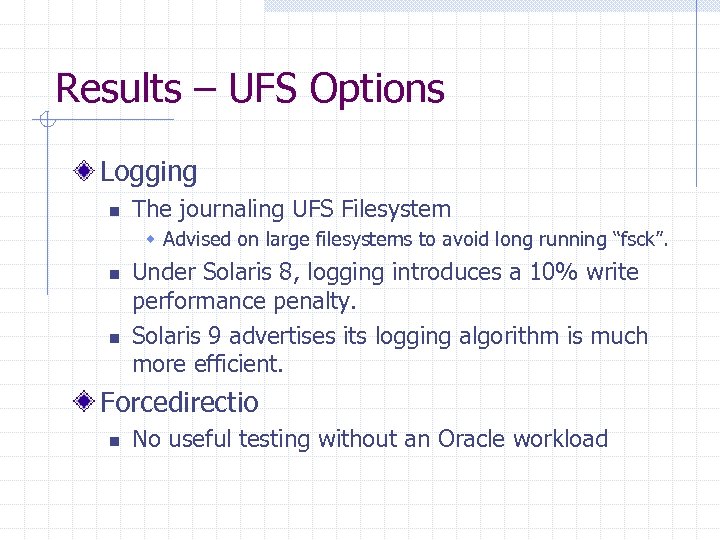

Results – UFS Options

- Logging
  - The journaling UFS Filesystem
    - Advised on large filesystems to avoid long running “fsck”.
  - Under Solaris 8, logging introduces a 10% write performance penalty.
  - Solaris 9 advertises its logging algorithm is much more efficient.
- Forcedirectio
  - No useful testing without an Oracle workload

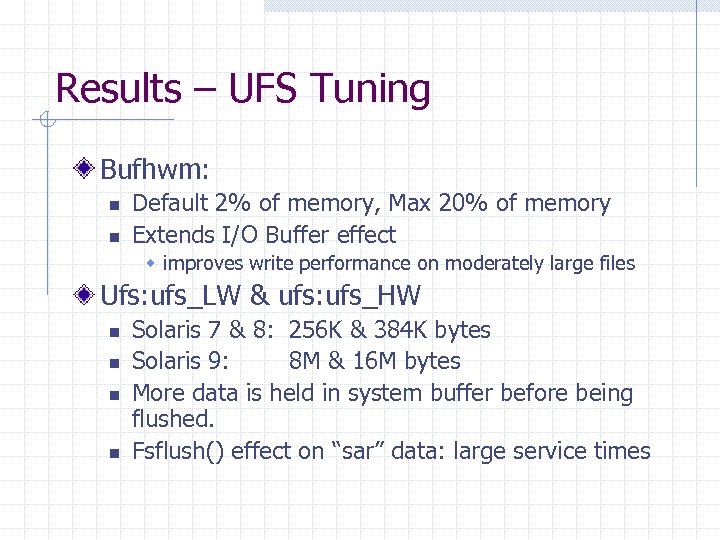

Results – UFS Tuning

- bufhwm:
  - Default 2% of memory, Max 20% of memory
  - Extends I/O Buffer effect
    - improves write performance on moderately large files
- ufs:ufs_LW & ufs:ufs_HW
  - Solaris 7 & 8: 256K & 384K bytes
  - Solaris 9: 8M & 16M bytes
- More data is held in system buffer before being flushed.
- Fsflush() effect on “sar” data: large service times
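
To make the tunables above concrete, the sketch below generates candidate `/etc/system` lines from a server's memory size. It is an illustration only: it assumes `bufhwm` is expressed in kilobytes (as in the Solaris tunable parameters documentation; verify for your release), the helper names are hypothetical, and the slides do not state which values were actually set on the test servers.

```python
def bufhwm_kb(physical_memory_mb, percent=2):
    """bufhwm value in KB as a percentage of physical memory.

    The slide gives 2% as the default and 20% as the maximum.
    """
    if not 0 < percent <= 20:
        raise ValueError("bufhwm must be between 0% and 20% of memory")
    return physical_memory_mb * 1024 * percent // 100


def etc_system_lines(physical_memory_mb, percent, ufs_lw_bytes, ufs_hw_bytes):
    """Return /etc/system 'set' lines for the three tunables on the slide."""
    if ufs_lw_bytes >= ufs_hw_bytes:
        raise ValueError("ufs_LW (low water mark) must be below ufs_HW")
    return [
        f"set bufhwm={bufhwm_kb(physical_memory_mb, percent)}",
        f"set ufs:ufs_LW={ufs_lw_bytes}",
        f"set ufs:ufs_HW={ufs_hw_bytes}",
    ]


if __name__ == "__main__":
    # Example: an 8 GB server, bufhwm at the 20% maximum, and the
    # Solaris 9 water marks (8 MB and 16 MB) quoted on the slide.
    for line in etc_system_lines(8192, 20, 8 * 1024 * 1024, 16 * 1024 * 1024):
        print(line)
```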

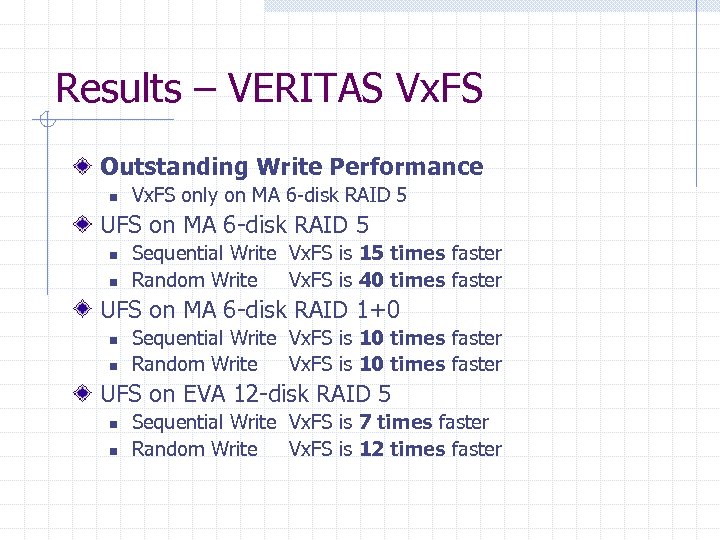

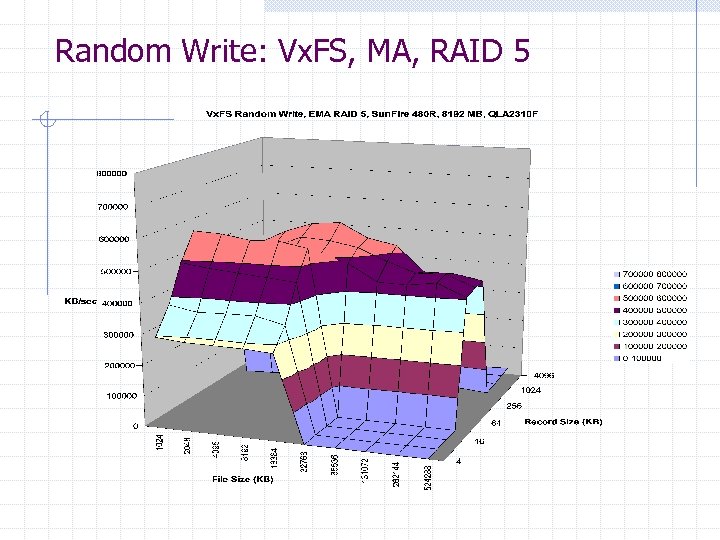

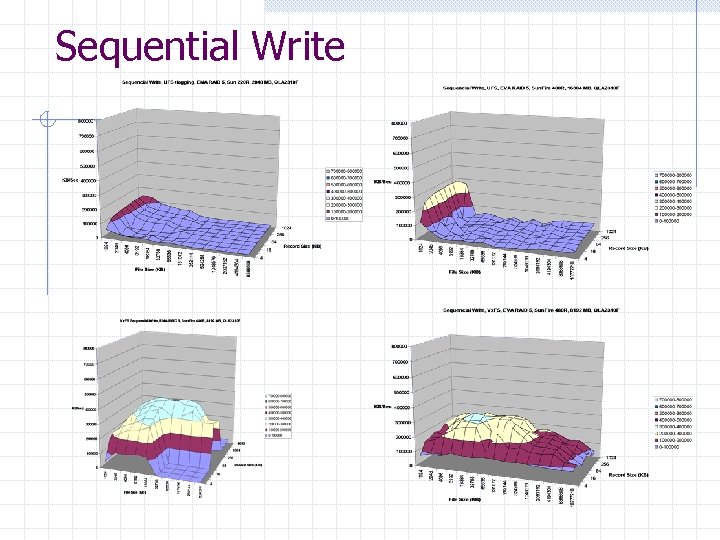

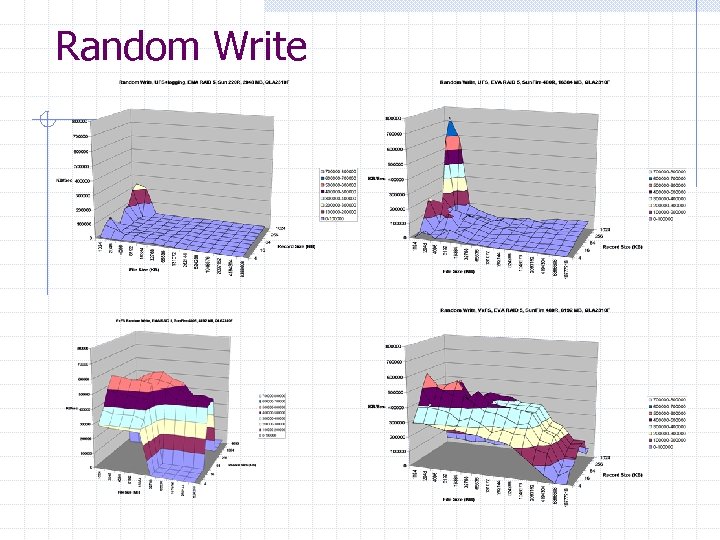

Results – VERITAS VxFS

- Outstanding Write Performance
  - VxFS only on MA 6-disk RAID 5
- vs. UFS on MA 6-disk RAID 5
  - Sequential Write: VxFS is 15 times faster
  - Random Write: VxFS is 40 times faster
- vs. UFS on MA 6-disk RAID 1+0
  - Sequential Write: VxFS is 10 times faster
  - Random Write: VxFS is 10 times faster
- vs. UFS on EVA 12-disk RAID 5
  - Sequential Write: VxFS is 7 times faster
  - Random Write: VxFS is 12 times faster

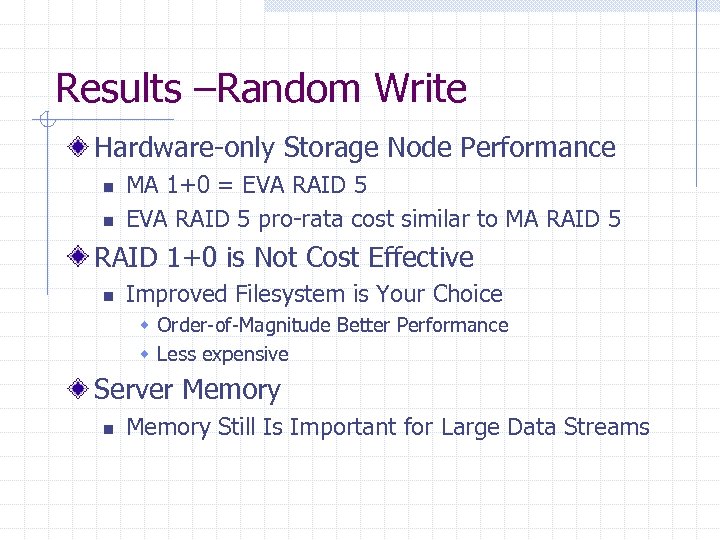

Results – Random Write

- Hardware-only Storage Node Performance
  - MA 1+0 = EVA RAID 5
  - Pro-rata cost similar to MA RAID 5
- RAID 1+0 is Not Cost Effective
  - Improved Filesystem is Your Choice
    - Order-of-Magnitude Better Performance
    - Less expensive
- Server Memory
  - Memory Still Is Important for Large Data Streams

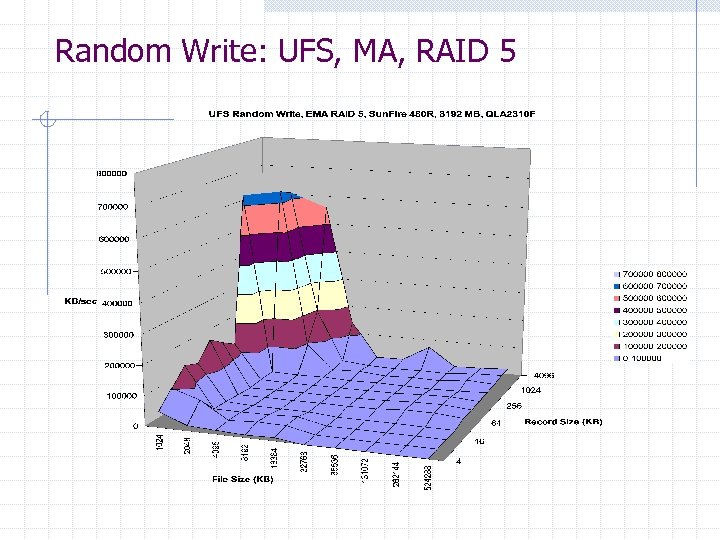

Random Write: UFS, MA, RAID 5

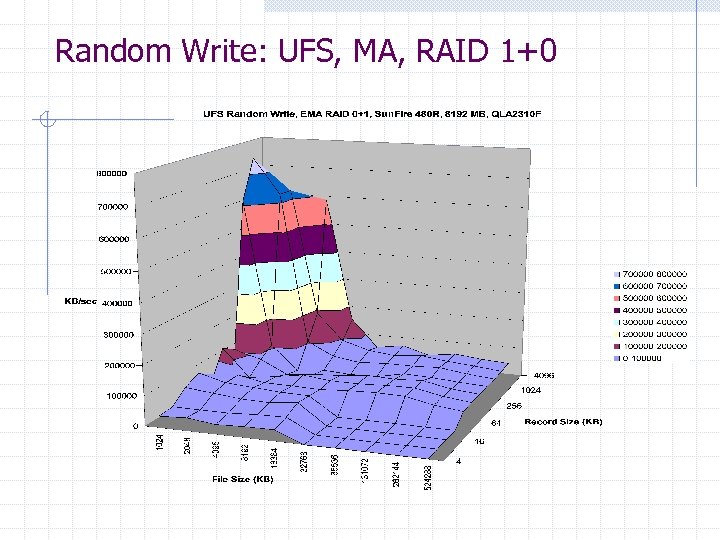

Random Write: UFS, MA, RAID 1+0

Random Write: UFS, EVA, RAID 5

Random Write: VxFS, MA, RAID 5

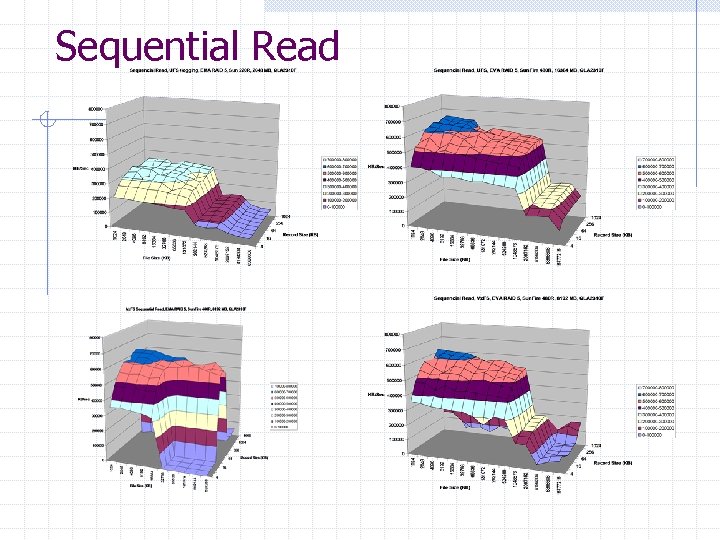

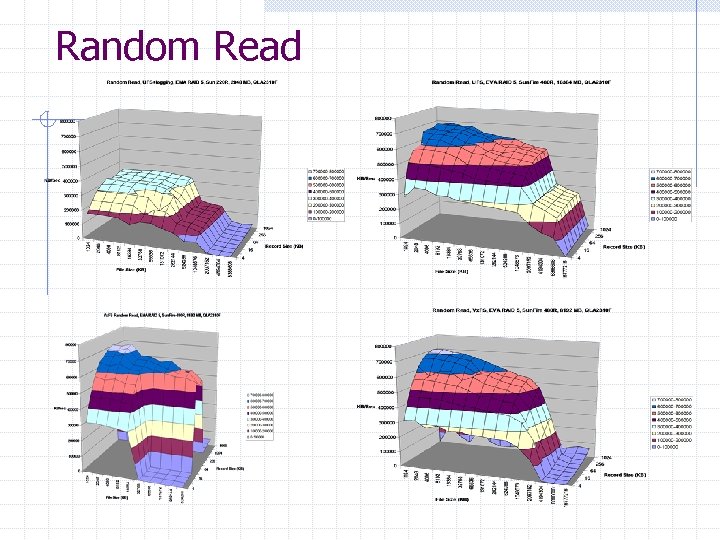

Closer Look: VxFS vs. UFS

- Graphical Comparison:
  - Sun Servers provided with RAID 5 LUNs
    - UFS EMA
    - VxFS EMA
  - File Operations
    - Sequential Read
    - Random Read
    - Sequential Write
    - Random Write

UFS, VxFS, EVA

Sequential Read

Random Read

Sequential Write

Random Write

Results – VERITAS VxFS

- Biggest Performance gains
  - Everything else is of secondary importance
- Memory Overhead for VxFS
  - Dominates Sequential Write of small files
  - Needs further investigation
- VxFS & EVA RAID 1+0 not measured
  - Don’t mention what you don’t want to sell

Implications – VERITAS VxFS

- Where is the Bottleneck?
  - Changes at Storage Node
    - Modest Increases in Performance
  - Changes within Server
    - Dramatically Increase Performance
- The Bottleneck is in the Server, not the SAN
  - The relative cost is just good fortune
    - Changing the filesystem is much less expensive

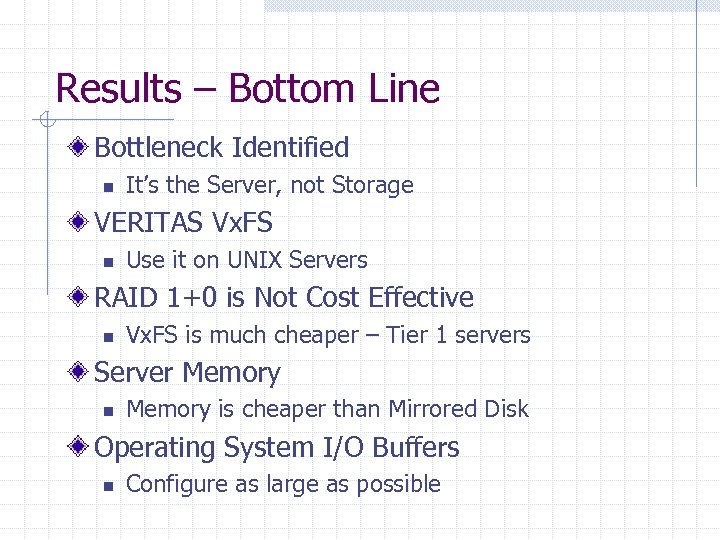

Results – Bottom Line

- Bottleneck Identified
  - It’s the Server, not Storage
- VERITAS VxFS
  - Use it on UNIX Servers
- RAID 1+0 is Not Cost Effective
  - VxFS is much cheaper – Tier 1 servers
- Server Memory
  - Memory is cheaper than Mirrored Disk
- Operating System I/O Buffers
  - Configure as large as possible

Price & Performance

- Cost Of Computing
  - Hardware
  - Software
  - One time costs
  - Ongoing costs
- How Much Does VxFS Cost?
- How Much Do RAID 1+0 / 5 Cost?

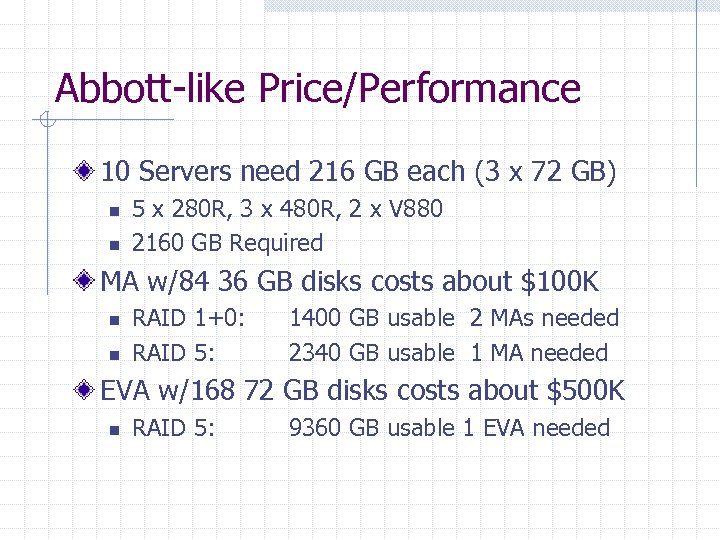

Abbott-like Price/Performance

- 10 Servers need 216 GB each (3 x 72 GB)
  - 5 x 280R, 3 x 480R, 2 x V880
  - 2160 GB Required
- MA w/84 36 GB disks costs about $100K
  - RAID 1+0: 1400 GB usable, 2 MAs needed
  - RAID 5: 2340 GB usable, 1 MA needed
- EVA w/168 72 GB disks costs about $500K
  - RAID 5: 9360 GB usable, 1 EVA needed
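
The "needed" counts above are the total requirement divided by usable capacity per array, rounded up to whole arrays. A small sketch of that arithmetic, using only the figures from the slide (the function name is illustrative):

```python
import math


def arrays_needed(required_gb, usable_gb_per_array):
    """Whole storage arrays required to hold `required_gb`."""
    return math.ceil(required_gb / usable_gb_per_array)


REQUIRED_GB = 10 * 216   # 10 servers at 216 GB each = 2160 GB

ma_raid10 = arrays_needed(REQUIRED_GB, 1400)   # MA, RAID 1+0
ma_raid5 = arrays_needed(REQUIRED_GB, 2340)    # MA, RAID 5
eva_raid5 = arrays_needed(REQUIRED_GB, 9360)   # EVA, RAID 5

if __name__ == "__main__":
    print(ma_raid10, ma_raid5, eva_raid5)
```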

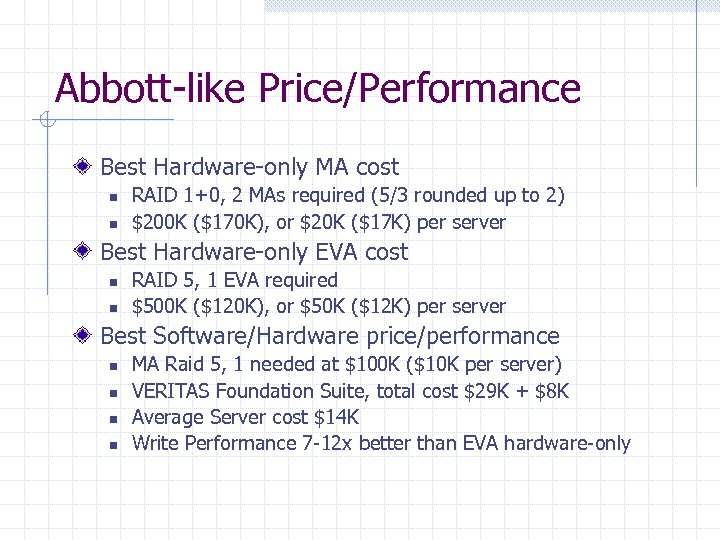

Abbott-like Price/Performance

- Best Hardware-only MA cost
  - RAID 1+0, 2 MAs required (5/3 rounded up to 2)
  - $200K ($170K), or $20K ($17K) per server
- Best Hardware-only EVA cost
  - RAID 5, 1 EVA required
  - $500K ($120K), or $50K ($12K) per server
- Best Software/Hardware price/performance
  - MA RAID 5, 1 needed at $100K ($10K per server)
  - VERITAS Foundation Suite, total cost $29K + $8K
  - Average Server cost $14K
  - Write Performance 7-12x better than EVA hardware-only
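
The slide does not say how the parenthesized figures were derived. One reading that comes close to them, offered here as an assumption, is a pro-rata cost: count how many whole 216 GB requests fit in one array's usable capacity, then charge the 10 servers for their share of the array. The usable capacities and prices below are the ones quoted for the MA and EVA elsewhere in this deck; the function names are illustrative.

```python
def requests_per_array(usable_gb, request_gb):
    """Whole storage requests that fit in one array's usable capacity."""
    return usable_gb // request_gb


def pro_rata_cost_k(array_cost_k, usable_gb, request_gb, servers):
    """Cost in $K of the share of an array consumed by `servers` requests."""
    return array_cost_k * servers / requests_per_array(usable_gb, request_gb)


SERVERS = 10
REQUEST_GB = 216

# MA RAID 1+0: 6 requests fit in 1400 GB, so 10 servers use 10/6 = 5/3 of an MA.
ma_raid10_k = pro_rata_cost_k(100, 1400, REQUEST_GB, SERVERS)   # about 166.7, slide: $170K

# EVA RAID 5: 43 requests fit in 9360 GB.
eva_raid5_k = pro_rata_cost_k(500, 9360, REQUEST_GB, SERVERS)   # about 116.3, slide: $120K

# MA RAID 5 plus VERITAS: one whole MA plus the two VERITAS figures on the slide.
ma_raid5_vxfs_per_server_k = (100 + 29 + 8) / SERVERS           # 13.7, slide: $14K

if __name__ == "__main__":
    print(round(ma_raid10_k, 1), round(eva_raid5_k, 1), ma_raid5_vxfs_per_server_k)
```

Under this reading the 5/3 on the slide is 10 requests divided by 6 requests per MA, and the slide's figures are these results rounded up to the nearest $10K (or $1K per server).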

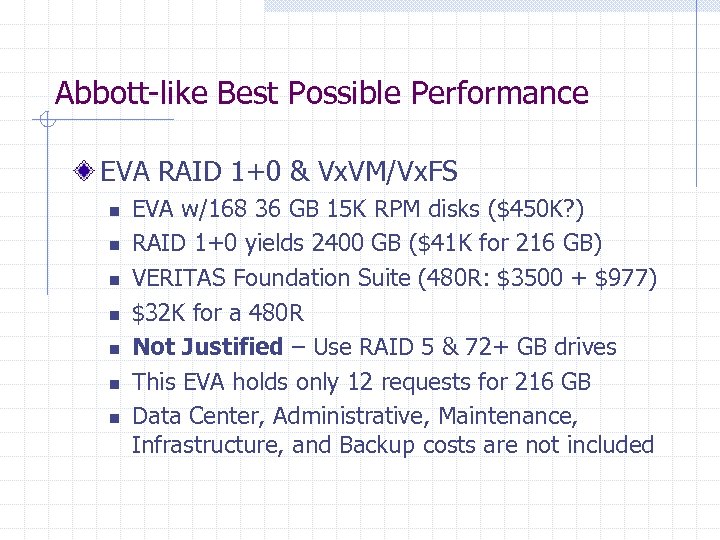

Abbott-like Best Possible Performance

- EVA RAID 1+0 & VxVM/VxFS
  - EVA w/168 36 GB 15K RPM disks ($450K?)
  - RAID 1+0 yields 2400 GB ($41K for 216 GB)
  - VERITAS Foundation Suite (480R: $3500 + $977)
  - $32K for a 480R
- Not Justified – Use RAID 5 & 72+ GB drives
- This EVA holds only 12 requests for 216 GB
- Data Center, Administrative, Maintenance, Infrastructure, and Backup costs are not included

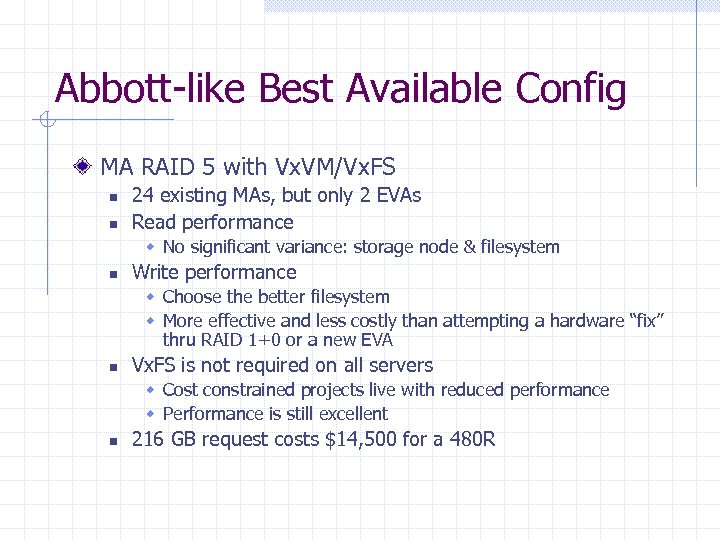

Abbott-like Best Available Config

- MA RAID 5 with VxVM/VxFS
  - 24 existing MAs, but only 2 EVAs
  - Read performance
    - No significant variance: storage node & filesystem
  - Write performance
    - Choose the better filesystem
    - More effective and less costly than attempting a hardware “fix” thru RAID 1+0 or a new EVA
  - VxFS is not required on all servers
    - Cost constrained projects live with reduced performance
    - Performance is still excellent
  - 216 GB request costs $14,500 for a 480R

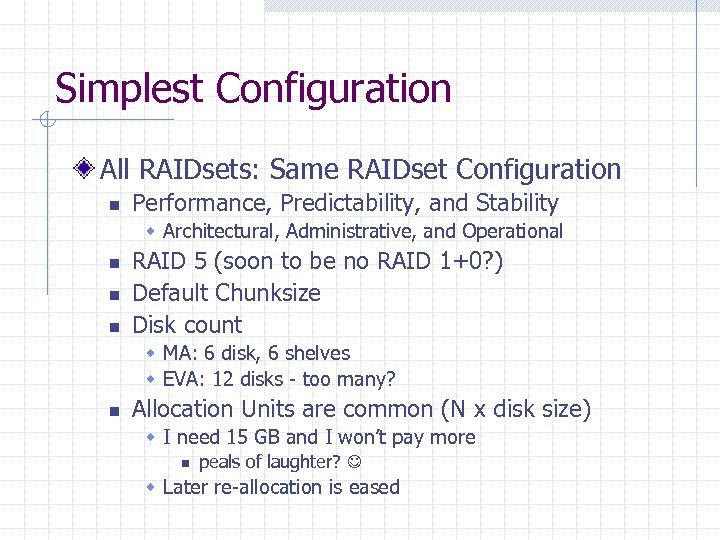

Simplest Configuration

- All RAIDsets: Same RAIDset Configuration
  - Performance, Predictability, and Stability
    - Architectural, Administrative, and Operational
  - RAID 5 (soon to be no RAID 1+0?)
  - Default Chunksize
  - Disk count
    - MA: 6 disk, 6 shelves
    - EVA: 12 disks - too many?
  - Allocation Units are common (N x disk size)
    - I need 15 GB and I won’t pay more
      - peals of laughter?
    - Later re-allocation is eased
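
The "N x disk size" allocation rule means every request is rounded up to a whole number of disk-sized units, which is why a 15 GB request still consumes a full unit. A minimal sketch, assuming the 36 GB and 72 GB disk sizes mentioned elsewhere in the deck (the function name is illustrative):

```python
def allocated_gb(request_gb, disk_gb):
    """Round a storage request up to a whole number of disk-sized units."""
    units = -(-request_gb // disk_gb)   # ceiling division on integers
    return units * disk_gb


if __name__ == "__main__":
    print(allocated_gb(15, 36))    # a 15 GB request still takes one 36 GB unit
    print(allocated_gb(216, 72))   # 3 x 72 GB, the standard request in this deck
```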

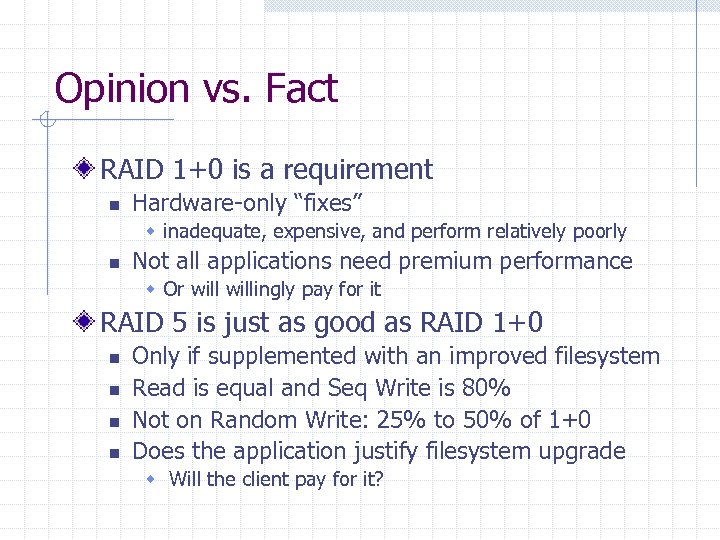

Opinion vs. Fact

- RAID 1+0 is a requirement
  - Hardware-only “fixes”
    - inadequate, expensive, and perform relatively poorly
  - Not all applications need premium performance
    - Or willingly pay for it
- RAID 5 is just as good as RAID 1+0
  - Only if supplemented with an improved filesystem
  - Read is equal and Seq Write is 80%
  - Not on Random Write: 25% to 50% of 1+0
  - Does the application justify filesystem upgrade?
    - Will the client pay for it?

Yet To Be Tested: Wish List

- Oracle Workload
- Other Solaris Servers
  - Larger Sun Servers: E4800, E10K, E15K
    - Multiple/Max I/O Channels – Is Scaling Linear?
  - New Sun Entry Level J-Bus Servers: V240, V440
  - Fujitsu Servers: Much Faster System Bus
    - New Sun/Fujitsu Alliance
- Other UNIX Servers: IBM, Alpha, Intel Linux, etc.
- Other HBAs (Emulex, JNI?)
- EVA RAID 1+0
- Raw Filesystems
- iSCSI

Roadmap

- RAID 5 configs on all SAN Storage Nodes
- Client may supplement with VxFS
- UFS remains on system drives
  - No mirrors for system drives
    - Contingency root filesystem on 2nd internal disk
- Use 32K Oracle db_block_size (8K default)

Metrics Data

- /da/adm/rcsupport/sys/admin/metrics
  - bonnie
    - Y2000 & Y2001 data
  - iozone
    - bin
    - output
      - Date Stamped Directories
    - scripts

References

- Configuration and Capacity Planning for Solaris Servers
  - Brian L Wong, Prentice Hall, 1997
- Solaris System Performance Management
  - SA-400, Sun Educational Services
- The Sun Fireplane System Interconnect
  - Alan Charlesworth
    - http://www.sc2001.org/papers/pap.pap150.pdf

References

- Iozone Source & Documentation
  - Author: William Norcott (wnorcott@us.oracle.com)
    - http://www.iozone.org/

Questions
