- Number of slides: 45

Safety Critical Computer Systems - Open Questions and Approaches

Andreas Gerstinger, Institut für Computertechnik (ICT, Institute of Computer Technology)

February 16, 2007

Agenda

- Safety-Critical Systems
- Project Partners
- Three research topics
  - Safety Engineering
  - Diversity
  - Software Metrics
- Conclusion and Outlook

Safety-Critical Systems

Safety Critical Systems

- A safety-critical computer system is a computer system whose failure may cause injury or death to human beings or damage to the environment
- Examples:
  - Aircraft control system (fly-by-wire, ...)
  - Nuclear power station control system
  - Control systems in cars (anti-lock brakes, ...)
  - Health systems (heart pacemakers, ...)
  - Railway control systems
  - Communication systems
  - Wireless Sensor Networks Applications?

SYSARI Project

- SYSARI = SYstem SAfety Research in Industry
- Goal of the project
  - to conduct and promote the research in system safety engineering and safety-critical system design and development
- Close cooperation between ICT and Industry
  - One "shared" Employee (me)
  - Students conducting practical Diploma Theses
  - Ph.D. Theses

What is Safety?

"The avoidance of death, injury or poor health to customers, employees, contractors and the general public; also avoidance of damage to property and the environment"

Safety is also defined as "freedom from unacceptable risk of harm"

A basic concept in System Safety Engineering is the avoidance of "hazards"

Safety is NOT an absolute quantity!

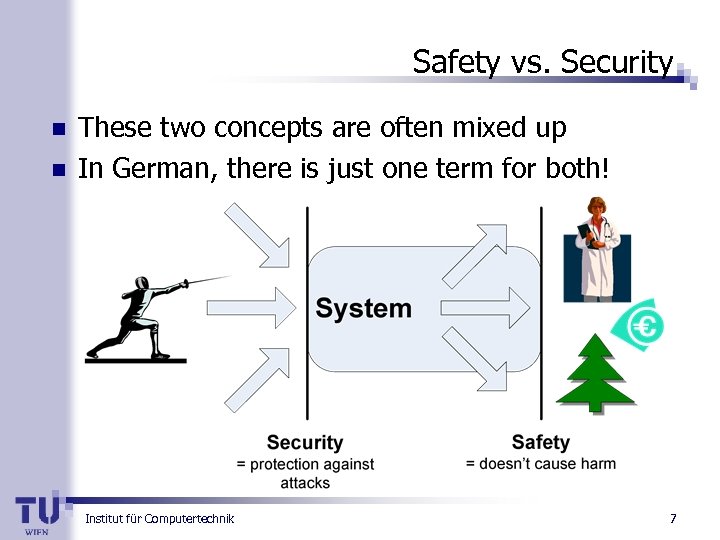

Safety vs. Security

- These two concepts are often mixed up
- In German, there is just one term for both!

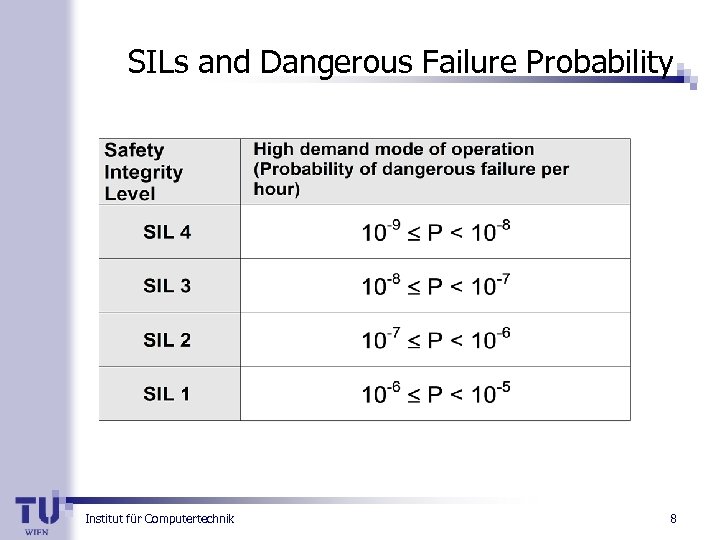

SILs and Dangerous Failure Probability
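
The table on this slide did not survive extraction. As a hedged illustration only, the sketch below maps a dangerous failure rate per hour to a Safety Integrity Level using the bands that IEC 61508 gives for high-demand or continuous operation. Whether the slide showed exactly these bands is an assumption, and the name `sil_for_failure_rate` is hypothetical.

```python
from typing import Optional

# (SIL, inclusive lower bound, exclusive upper bound) in dangerous failures per hour.
# Bands as given in IEC 61508 for high-demand / continuous mode of operation.
SIL_BANDS = [
    (4, 1e-9, 1e-8),
    (3, 1e-8, 1e-7),
    (2, 1e-7, 1e-6),
    (1, 1e-6, 1e-5),
]


def sil_for_failure_rate(failures_per_hour: float) -> Optional[int]:
    """Return the SIL whose band contains the rate, or None if no band applies."""
    for sil, low, high in SIL_BANDS:
        if low <= failures_per_hour < high:
            return sil
    return None  # rate lies outside the four defined bands


if __name__ == "__main__":
    for rate in (5e-9, 5e-7, 1e-4):
        print(rate, sil_for_failure_rate(rate))
```

Rates outside the four bands return `None` rather than being clamped, so that a caller has to decide explicitly how to treat them.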

Project Partners

Project Partner: Frequentis

- Austrian High Tech company
- World leader in air traffic control communication systems
- 700 employees, company based in Vienna, customers all over the world
- http://www.frequentis.com

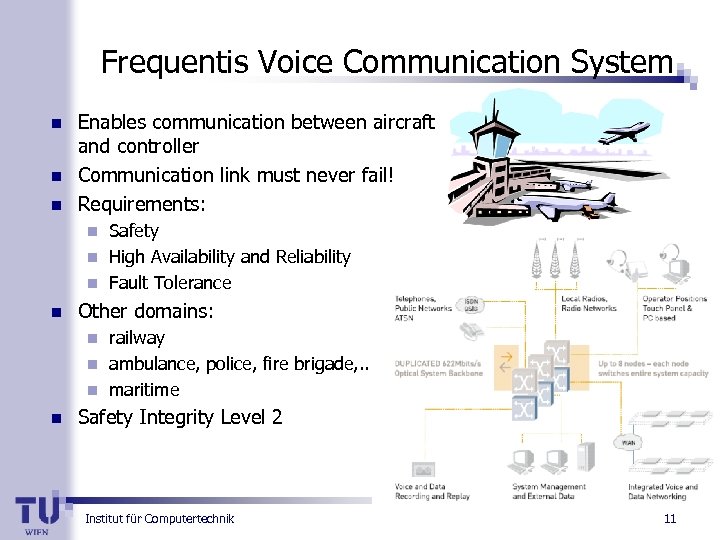

Frequentis Voice Communication System

- Enables communication between aircraft and controller
- Communication link must never fail!
- Requirements:
  - Safety
  - High Availability and Reliability
  - Fault Tolerance
- Other domains:
  - railway
  - ambulance, police, fire brigade, ...
  - maritime
- Safety Integrity Level 2

Project Partner: Thales

- French company
- 68000 employees worldwide
- Mission critical information systems
- 25000 researchers
- Nobel Prize in Physics 2007 awarded to Albert Fert, scientific director of Thales research lab
- http://www.thalesgroup.com

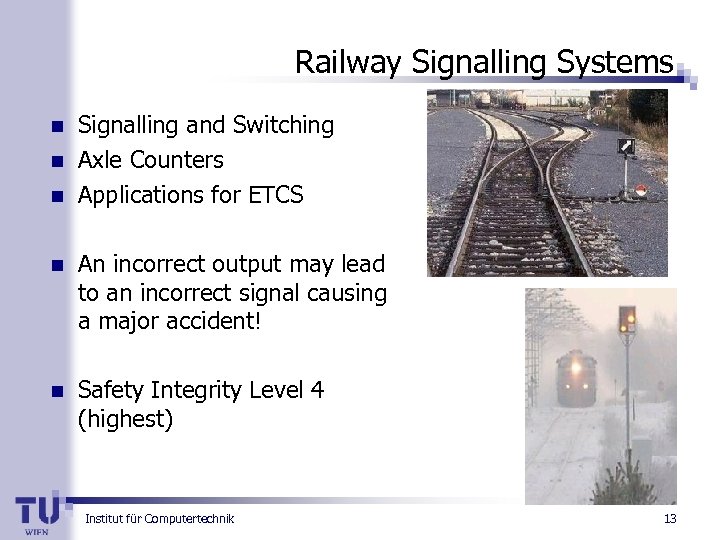

Railway Signalling Systems

- Signalling and Switching
- Axle Counters
- Applications for ETCS
- An incorrect output may lead to an incorrect signal causing a major accident!
- Safety Integrity Level 4 (highest)

(Old) Interlocking Systems

- Mechanical / Electromechanical Systems

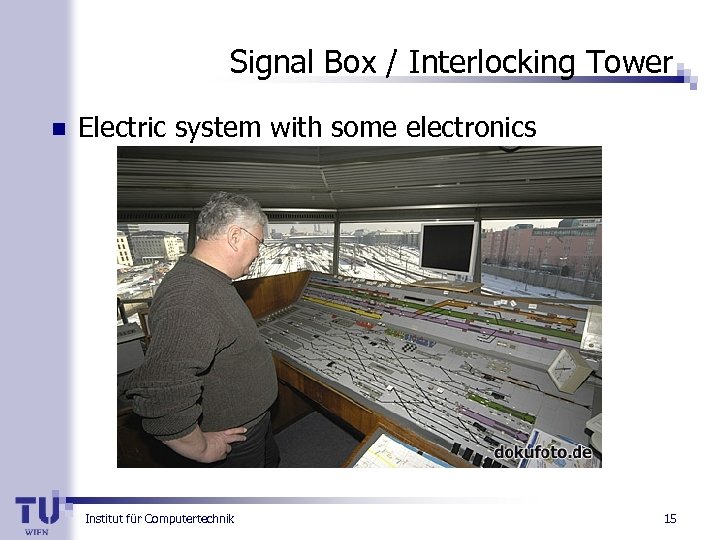

Signal Box / Interlocking Tower

- Electric system with some electronics

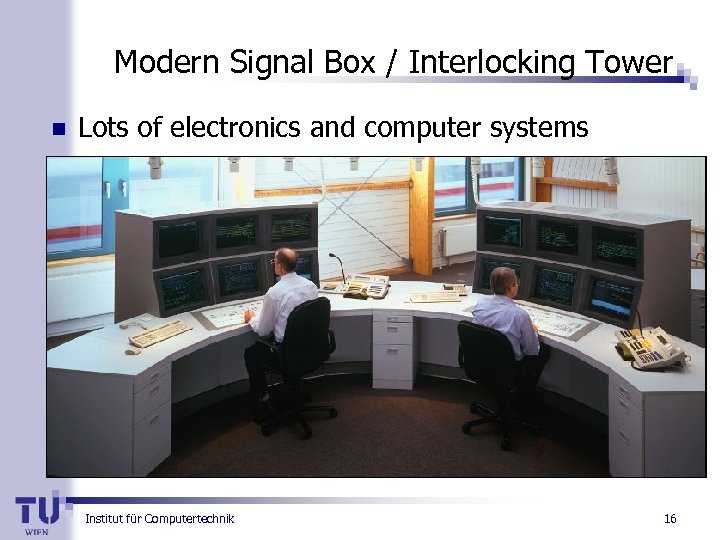

Modern Signal Box / Interlocking Tower

- Lots of electronics and computer systems

Safety Engineering

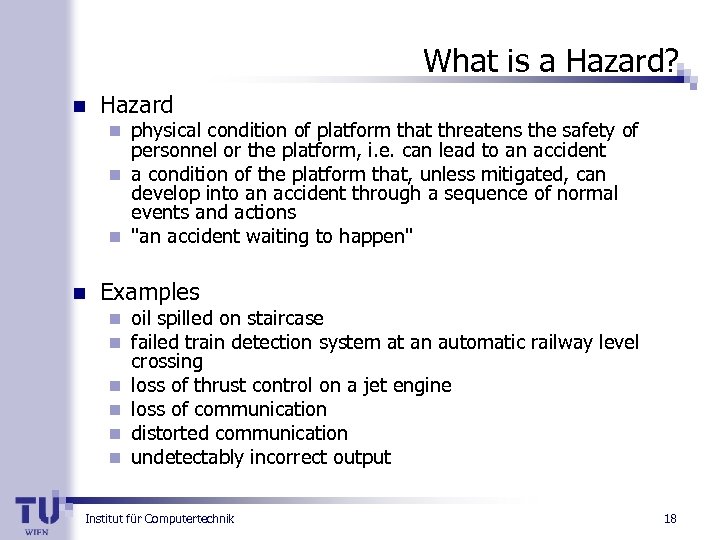

What is a Hazard?

- Hazard
  - physical condition of platform that threatens the safety of personnel or the platform, i.e. can lead to an accident
  - a condition of the platform that, unless mitigated, can develop into an accident through a sequence of normal events and actions
  - "an accident waiting to happen"
- Examples
  - oil spilled on staircase
  - failed train detection system at an automatic railway level crossing
  - loss of thrust control on a jet engine
  - loss of communication
  - distorted communication
  - undetectably incorrect output

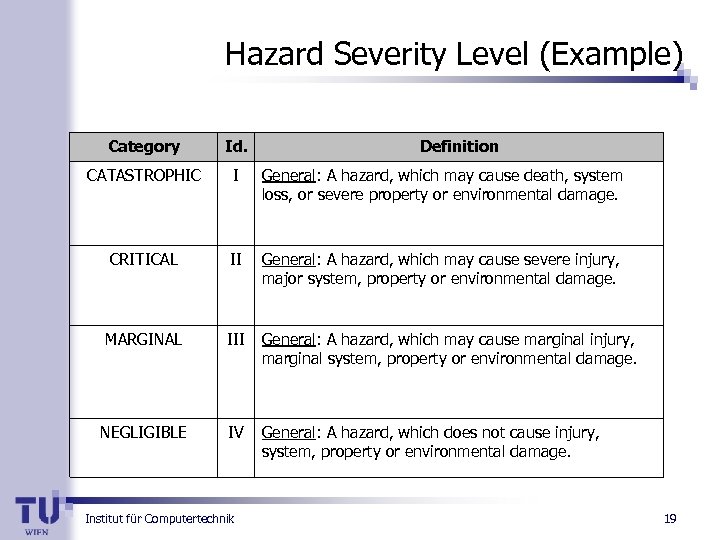

Hazard Severity Level (Example)

| Category | Id. | Definition |
| --- | --- | --- |
| CATASTROPHIC | I | General: A hazard, which may cause death, system loss, or severe property or environmental damage. |
| CRITICAL | II | General: A hazard, which may cause severe injury, major system, property or environmental damage. |
| MARGINAL | III | General: A hazard, which may cause marginal injury, marginal system, property or environmental damage. |
| NEGLIGIBLE | IV | General: A hazard, which does not cause injury, system, property or environmental damage. |

Hazard Probability Level (Example)

| Level | Probability [h^-1] | Definition | Occurrences per year |
| --- | --- | --- | --- |
| Frequent | P ≥ 10^-3 | may occur several times a month | More than 10 |
| Probable | 10^-3 > P ≥ 10^-4 | likely to occur once a year | 1 to 10 |
| Occasional | 10^-4 > P ≥ 10^-5 | likely to occur in the life of the system | 10^-1 to 1 |
| Remote | 10^-5 > P ≥ 10^-6 | unlikely but possible to occur in the life of the system | 10^-2 to 10^-1 |
| Improbable | 10^-6 > P ≥ 10^-7 | very unlikely to occur | 10^-3 to 10^-2 |
| Incredible | P < 10^-7 | extremely unlikely, if not inconceivable to occur | Less than 10^-3 |
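
The example probability levels can be sketched as a simple threshold lookup. This is a minimal illustration of the slide's example bands, not a normative scheme; the names `probability_level` and `occurrences_per_year` are hypothetical, and the conversion assumes continuous operation (8760 hours per year).

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the system operates around the clock

# (level name, inclusive lower bound of the hourly probability), highest first.
LEVELS = [
    ("Frequent", 1e-3),
    ("Probable", 1e-4),
    ("Occasional", 1e-5),
    ("Remote", 1e-6),
    ("Improbable", 1e-7),
]


def probability_level(p_per_hour: float) -> str:
    """Return the example hazard probability level for an hourly probability."""
    for name, lower_bound in LEVELS:
        if p_per_hour >= lower_bound:
            return name
    return "Incredible"  # everything below 1e-7 per hour


def occurrences_per_year(p_per_hour: float) -> float:
    """Convert an hourly rate into expected occurrences per year."""
    return p_per_hour * HOURS_PER_YEAR


if __name__ == "__main__":
    for p in (2e-3, 5e-4, 5e-8):
        print(p, probability_level(p), occurrences_per_year(p))
```

A rate of 10^-3 per hour gives about 8.76 occurrences per year, which the table rounds to the order of magnitude "10".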

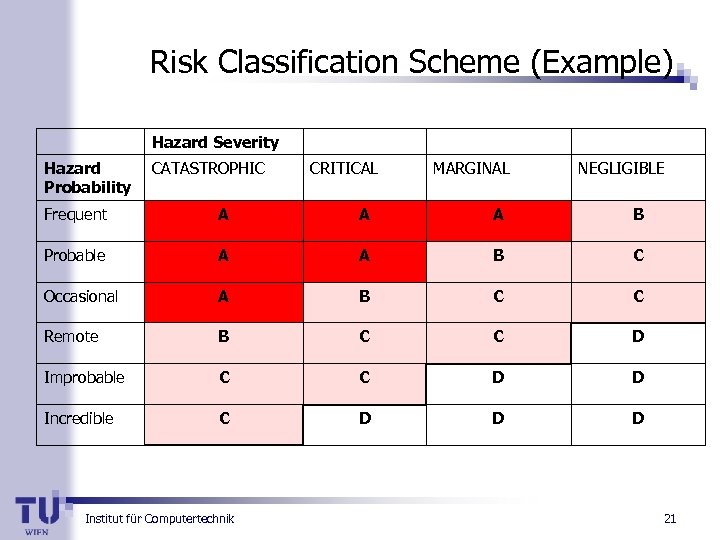

Risk Classification Scheme (Example)

| Hazard Probability \ Hazard Severity | CATASTROPHIC | CRITICAL | MARGINAL | NEGLIGIBLE |
| --- | --- | --- | --- | --- |
| Frequent | A | A | A | B |
| Probable | A | A | B | C |
| Occasional | A | B | C | C |
| Remote | B | C | C | D |
| Improbable | C | C | D | D |
| Incredible | C | D | D | D |

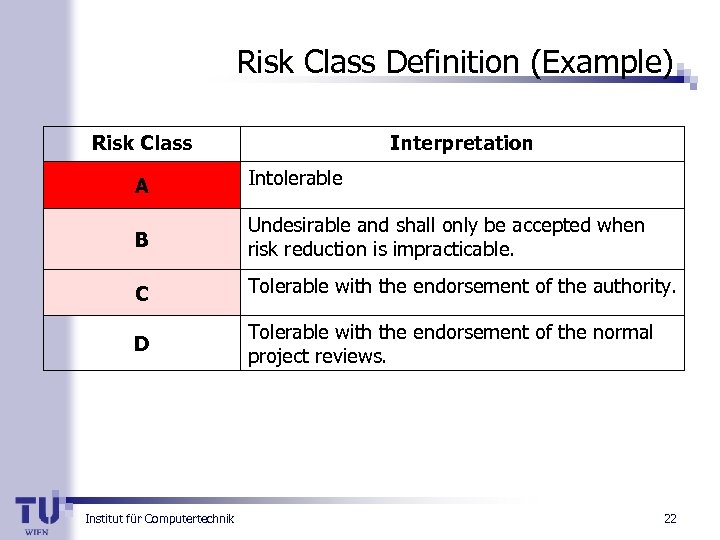

Risk Class Definition (Example)

| Risk Class | Interpretation |
| --- | --- |
| A | Intolerable |
| B | Undesirable and shall only be accepted when risk reduction is impracticable. |
| C | Tolerable with the endorsement of the authority. |
| D | Tolerable with the endorsement of the normal project reviews. |
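
The example risk classification scheme and the risk class definitions combine into one lookup: a probability level and a severity category select a class, and the class selects an interpretation. The sketch below encodes the example values from the slides; the function and variable names are hypothetical.

```python
SEVERITIES = ["CATASTROPHIC", "CRITICAL", "MARGINAL", "NEGLIGIBLE"]

# One string per probability level; character i is the class for SEVERITIES[i].
RISK_MATRIX = {
    "Frequent":   "AAAB",
    "Probable":   "AABC",
    "Occasional": "ABCC",
    "Remote":     "BCCD",
    "Improbable": "CCDD",
    "Incredible": "CDDD",
}

INTERPRETATION = {
    "A": "Intolerable",
    "B": "Undesirable and shall only be accepted when risk reduction is impracticable.",
    "C": "Tolerable with the endorsement of the authority.",
    "D": "Tolerable with the endorsement of the normal project reviews.",
}


def risk_class(probability: str, severity: str) -> str:
    """Look up the example risk class for a probability level and severity category."""
    return RISK_MATRIX[probability][SEVERITIES.index(severity)]


if __name__ == "__main__":
    cls = risk_class("Remote", "CATASTROPHIC")
    print(cls, "-", INTERPRETATION[cls])
```

Unknown level or category names raise `KeyError` or `ValueError` here instead of falling back to a default, which seems the safer behaviour for a classification step.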

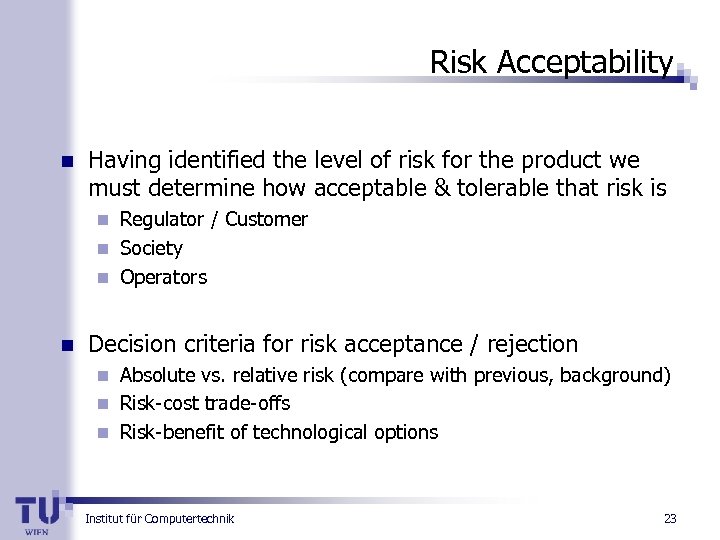

Risk Acceptability

- Having identified the level of risk for the product we must determine how acceptable & tolerable that risk is
  - Regulator / Customer
  - Society
  - Operators
- Decision criteria for risk acceptance / rejection
  - Absolute vs. relative risk (compare with previous, background)
  - Risk-cost trade-offs
  - Risk-benefit of technological options

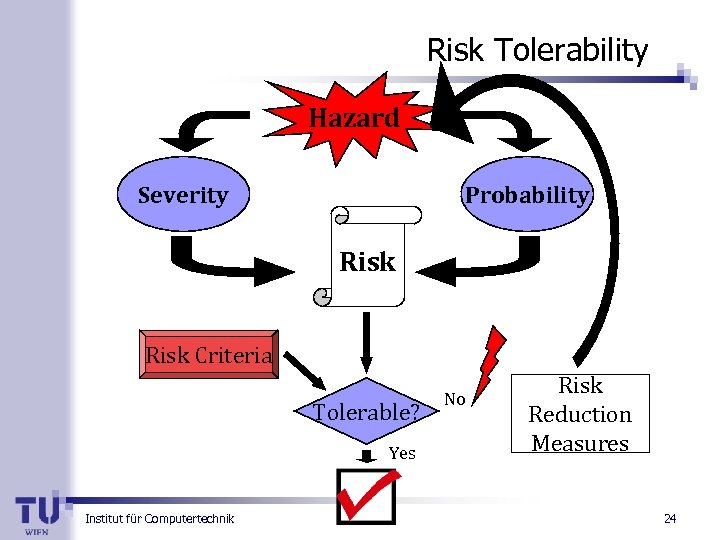

Risk Tolerability

[Flowchart: Hazard -> Severity, Probability -> Risk -> Criteria -> Tolerable? (Yes / No -> Risk Reduction Measures)]

Diversity

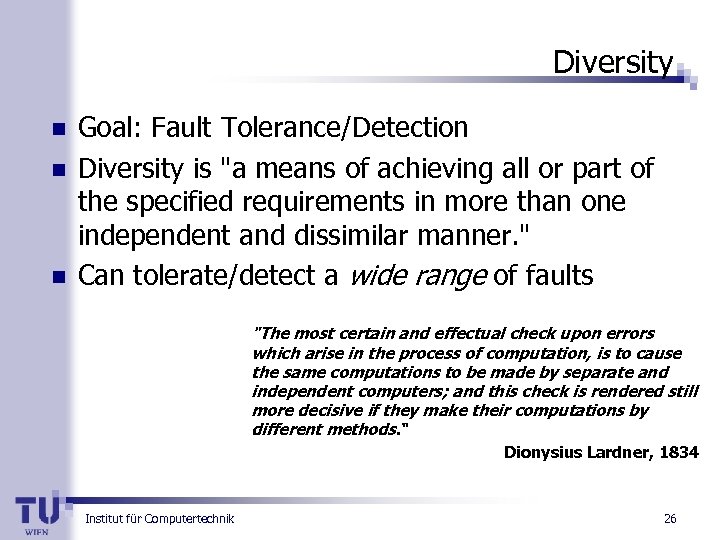

Diversity

- Goal: Fault Tolerance/Detection
- Diversity is "a means of achieving all or part of the specified requirements in more than one independent and dissimilar manner."
- Can tolerate/detect a wide range of faults

"The most certain and effectual check upon errors which arise in the process of computation, is to cause the same computations to be made by separate and independent computers; and this check is rendered still more decisive if they make their computations by different methods." (Dionysius Lardner, 1834)

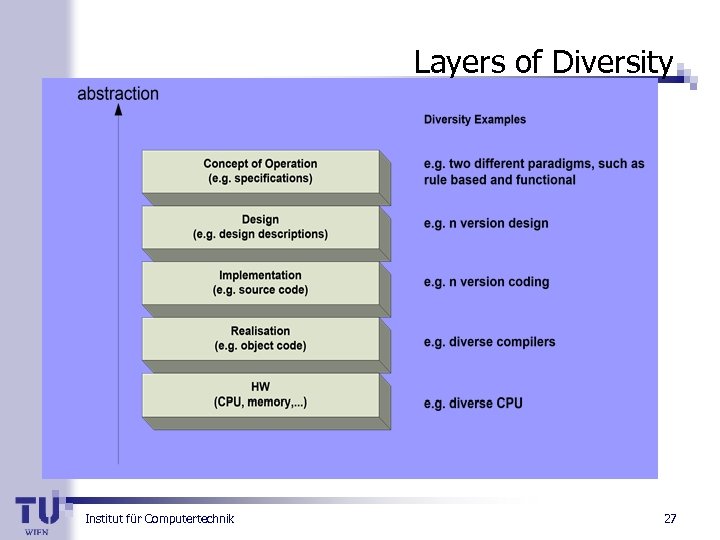

Layers of Diversity

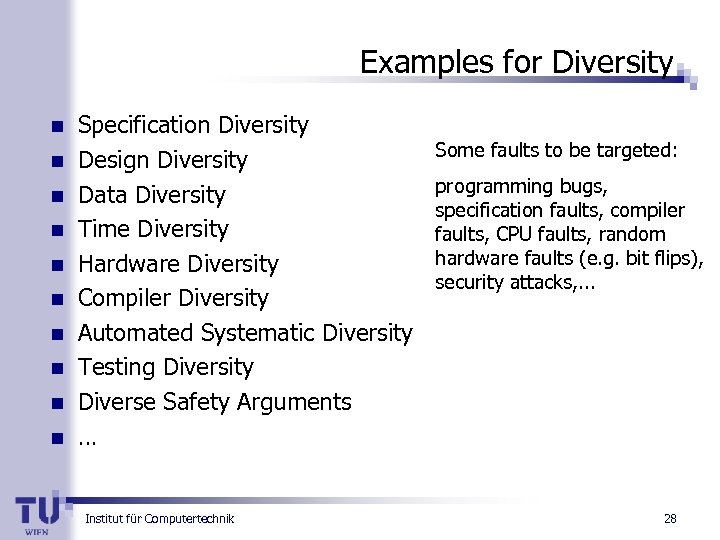

Examples for Diversity

- Specification Diversity
- Design Diversity
- Data Diversity
- Time Diversity
- Hardware Diversity
- Compiler Diversity
- Automated Systematic Diversity
- Testing Diversity
- Diverse Safety Arguments
- …

Some faults to be targeted: programming bugs, specification faults, compiler faults, CPU faults, random hardware faults (e.g. bit flips), security attacks, ...

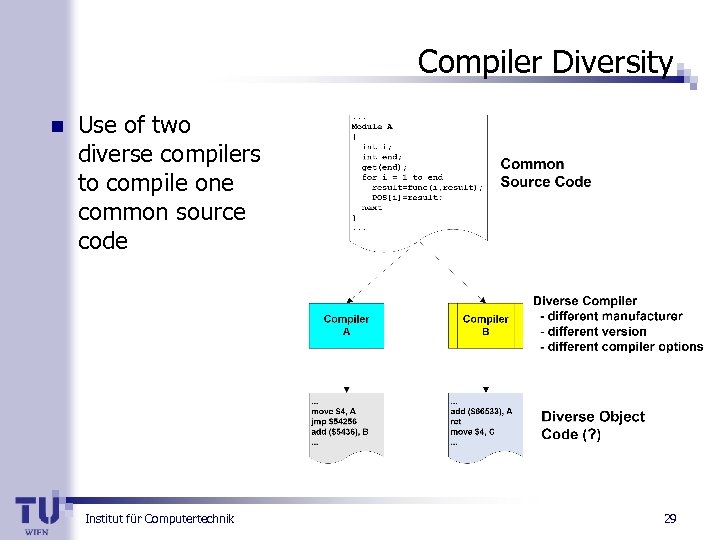

Compiler Diversity

- Use of two diverse compilers to compile one common source code

Compiler Diversity: Issues

- Targeted Faults:
  - Systematic compiler faults
  - Some Heisenbugs
  - Some systematic and permanent hardware faults (if executed on one board)
- Issues:
  - To some degree possible with one compiler and different compile options (optimization on/off, …)
  - If compilers from different manufacturers are taken, independence must be ensured

Systematic Automatic Diversity

- Artificial introduction of diversity to tolerate HW Faults
- (Automatic) Transformation of program P to a semantically equivalent program P' which uses the HW differently
  - e.g. different memory areas, different registers, different comparisons, ...

| P | P' |
| --- | --- |
| if A=B then | if A-B = 0 then |
| A or B | not (not A and not B) |
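
The two rewrites named on this slide (`A = B` as `A - B = 0`, and `A or B` as `not (not A and not B)`) can be checked for semantic equivalence and executed side by side. The sketch below only illustrates the idea: in Python the interpreter decides how the hardware is used, so it demonstrates the comparison of P and P', not real hardware fault coverage. All function names are hypothetical.

```python
from itertools import product


def equal_p(a: int, b: int) -> bool:
    """Variant P: direct comparison."""
    return a == b


def equal_p_prime(a: int, b: int) -> bool:
    """Variant P': the same predicate expressed through a subtraction."""
    return a - b == 0


def or_p(a: bool, b: bool) -> bool:
    """Variant P: plain disjunction."""
    return a or b


def or_p_prime(a: bool, b: bool) -> bool:
    """Variant P': disjunction rewritten with De Morgan's law."""
    return not (not a and not b)


def run_diverse(variant, variant_prime, *args):
    """Run both variants and report a fault if their results differ."""
    result, result_prime = variant(*args), variant_prime(*args)
    if result != result_prime:
        raise RuntimeError("diverse variants disagree: possible fault")
    return result


if __name__ == "__main__":
    # Exhaustive check for booleans, sampled check for small integers.
    for a, b in product([False, True], repeat=2):
        run_diverse(or_p, or_p_prime, a, b)
    for a, b in product(range(-3, 4), repeat=2):
        run_diverse(equal_p, equal_p_prime, a, b)
    print("variants agree on all tested inputs")
```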

Systematic Automatic Diversity

- What can be "diversified":
  - memory usage
  - execution sequence
  - statement structures
  - array references
  - data coding
  - register usage
  - addressing modes
  - pointers
  - mathematical and logic rules

Systematic Automatic Diversity: Issues

- Targeted Faults:
  - Systematic hardware faults
  - Permanent random hardware faults
- Issues:
  - Can be performed on source code or assembler level
  - If performed on source code level, it must be ensured that compiler does not "cancel out" diversity
  - (Software) Fault injection experiments showed an improvement of a factor ~100 regarding HW faults

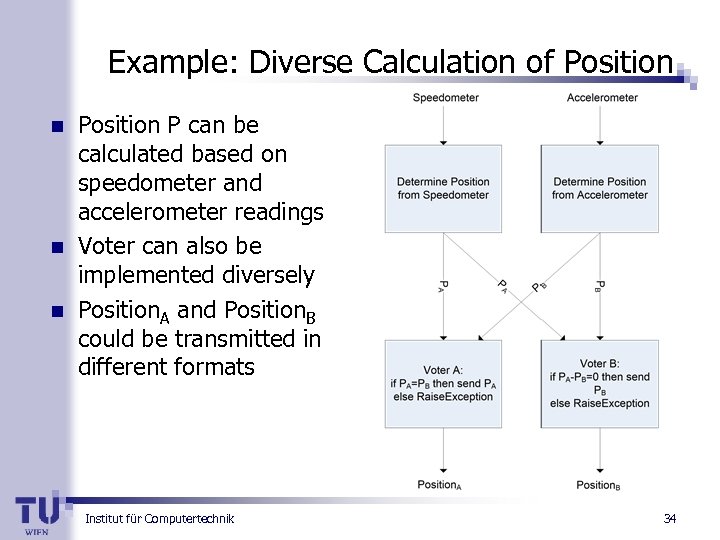

Example: Diverse Calculation of Position

- Position P can be calculated based on speedometer and accelerometer readings
- Voter can also be implemented diversely
- PositionA and PositionB could be transmitted in different formats
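
A minimal sketch of the position example: one channel integrates speedometer readings, the other integrates accelerometer readings twice, and a voter compares the two results. The sampling scheme, the tolerance, and all names are assumptions made for illustration; the slide does not specify them.

```python
from typing import Optional, Sequence


def position_from_speed(speeds: Sequence[float], dt: float, p0: float = 0.0) -> float:
    """Channel A: integrate speed samples (m/s) over fixed time steps dt (s)."""
    position = p0
    for v in speeds:
        position += v * dt
    return position


def position_from_acceleration(accels: Sequence[float], dt: float,
                               v0: float, p0: float = 0.0) -> float:
    """Channel B: integrate acceleration samples (m/s^2) twice, starting at speed v0."""
    speed, position = v0, p0
    for a in accels:
        speed += a * dt
        position += speed * dt
    return position


def vote(position_a: float, position_b: float, tolerance: float) -> Optional[float]:
    """Return the mean if both channels agree within tolerance, else None."""
    if abs(position_a - position_b) > tolerance:
        return None  # disagreement: the caller should enter a safe state
    return (position_a + position_b) / 2


if __name__ == "__main__":
    dt = 1.0
    pos_a = position_from_speed([10.0] * 5, dt)
    pos_b = position_from_acceleration([0.0] * 5, dt, v0=10.0)
    print(vote(pos_a, pos_b, tolerance=0.5))
```

Returning `None` on disagreement keeps the voter itself simple; what the safe reaction is depends on the application.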

Open Issues

- How can diversity be used most efficiently?
- Can diversity be introduced automatically?
- Which faults are detected/tolerated to which extent?
- How can the quality of the diversity be measured?
- Can diversity also be used to detect security intrusions?

Software Metrics

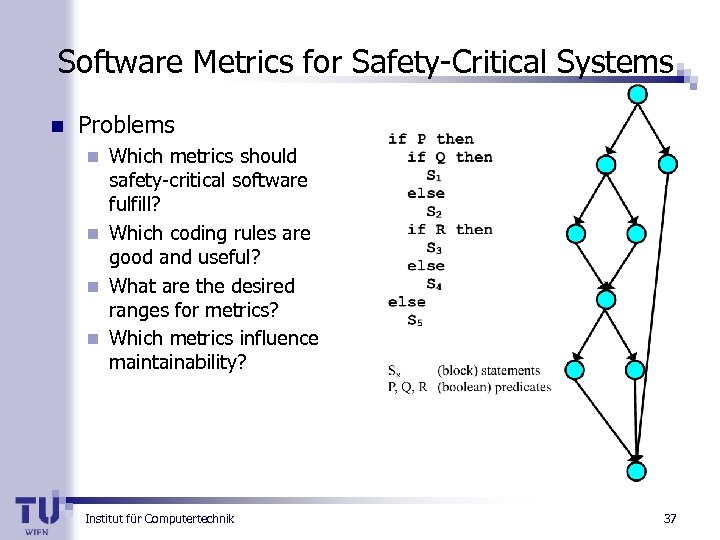

Software Metrics for Safety-Critical Systems

- Problems
  - Which metrics should safety-critical software fulfill?
  - Which coding rules are good and useful?
  - What are the desired ranges for metrics?
  - Which metrics influence maintainability?

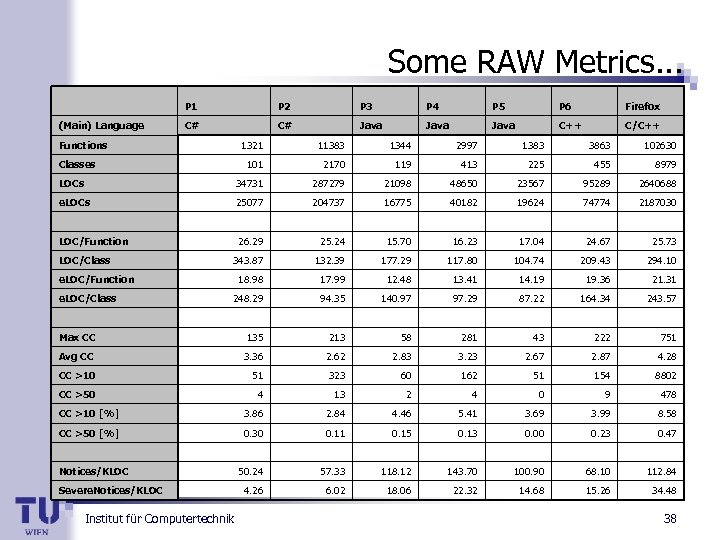

Some RAW Metrics...

| Metric | P1 | P2 | P3 | P4 | P5 | P6 | Firefox (Main) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Functions | 1321 | 11383 | 1344 | 2997 | 1383 | 3863 | 102630 |
| Classes | 101 | 2170 | 119 | 413 | 225 | 455 | 8979 |
| LOCs | 34731 | 287279 | 21098 | 48650 | 23567 | 95289 | 2640688 |
| eLOCs | 25077 | 204737 | 16775 | 40182 | 19624 | 74774 | 2187030 |
| LOC/Function | 26.29 | 25.24 | 15.70 | 16.23 | 17.04 | 24.67 | 25.73 |
| LOC/Class | 343.87 | 132.39 | 177.29 | 117.80 | 104.74 | 209.43 | 294.10 |
| eLOC/Function | 18.98 | 17.99 | 12.48 | 13.41 | 14.19 | 19.36 | 21.31 |
| eLOC/Class | 248.29 | 94.35 | 140.97 | 97.29 | 87.22 | 164.34 | 243.57 |
| Max CC | 135 | 213 | 58 | 281 | 43 | 222 | 751 |
| Avg CC | 3.36 | 2.62 | 2.83 | 3.23 | 2.67 | 2.87 | 4.28 |
| CC >10 | 51 | 323 | 60 | 162 | 51 | 154 | 8802 |
| CC >50 | 4 | 13 | 2 | 4 | 0 | 9 | 478 |
| CC >10 [%] | 3.86 | 2.84 | 4.46 | 5.41 | 3.69 | 3.99 | 8.58 |
| CC >50 [%] | 0.30 | 0.11 | 0.15 | 0.13 | 0.00 | 0.23 | 0.47 |
| Notices/KLOC | 50.24 | 57.33 | 118.12 | 143.70 | 100.90 | 68.10 | 112.84 |
| SevereNotices/KLOC | 4.26 | 6.02 | 18.06 | 22.32 | 14.68 | 15.26 | 34.48 |

Language row as extracted (only five values survive, so the assignment to the seven columns is unclear): C#, C#, Java, C++, C/C++
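
The ratio rows of the metrics table follow from the raw counts. The sketch below recomputes them for project P1 as a plausibility check; the function name `derived_metrics` is hypothetical, and rounding to two decimals is an assumption that happens to reproduce the printed values.

```python
def derived_metrics(functions: int, classes: int, locs: int, elocs: int,
                    cc_over_10: int, cc_over_50: int) -> dict:
    """Compute the per-function, per-class and percentage rows from raw counts."""
    return {
        "LOC/Function": round(locs / functions, 2),
        "LOC/Class": round(locs / classes, 2),
        "eLOC/Function": round(elocs / functions, 2),
        "eLOC/Class": round(elocs / classes, 2),
        "CC >10 [%]": round(100 * cc_over_10 / functions, 2),
        "CC >50 [%]": round(100 * cc_over_50 / functions, 2),
    }


if __name__ == "__main__":
    # Raw counts for project P1, taken from the metrics table.
    p1 = derived_metrics(functions=1321, classes=101, locs=34731, elocs=25077,
                         cc_over_10=51, cc_over_50=4)
    for name, value in p1.items():
        print(f"{name}: {value}")
```

The percentages are relative to the number of functions, which is what the P1 column implies (51 of 1321 functions have a cyclomatic complexity above 10).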

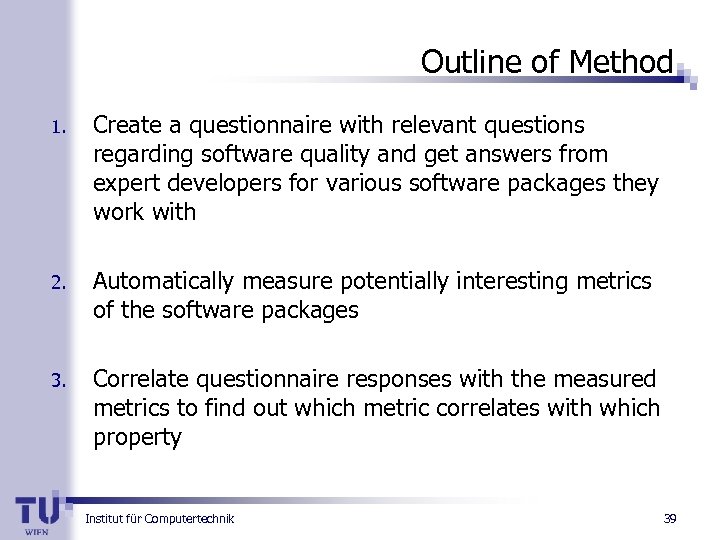

Outline of Method

1. Create a questionnaire with relevant questions regarding software quality and get answers from expert developers for various software packages they work with
2. Automatically measure potentially interesting metrics of the software packages
3. Correlate questionnaire responses with the measured metrics to find out which metric correlates with which property
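
Step 3 can be sketched as a rank correlation between questionnaire scores and one measured metric. The slides do not say which correlation measure was used, so Spearman's coefficient is an assumption here, and the numbers in the usage part are invented purely for illustration.

```python
from math import sqrt
from typing import List, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 upward; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


if __name__ == "__main__":
    # Hypothetical data: one value per software package.
    comment_density = [0.05, 0.12, 0.20, 0.31, 0.40]
    perceived_quality = [2.0, 2.5, 3.5, 3.0, 4.5]  # questionnaire score
    print(spearman(comment_density, perceived_quality))
```

A rank-based measure is a natural fit for questionnaire answers because they are ordinal, but with only a handful of packages any coefficient should be read with caution.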

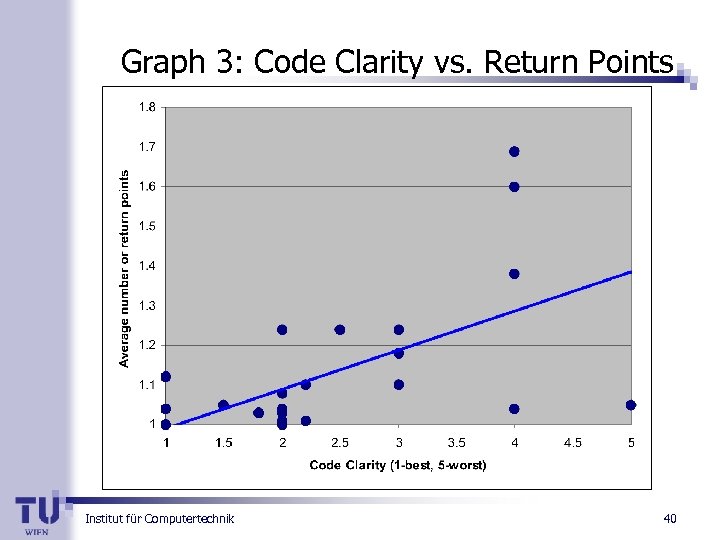

Graph 3: Code Clarity vs. Return Points

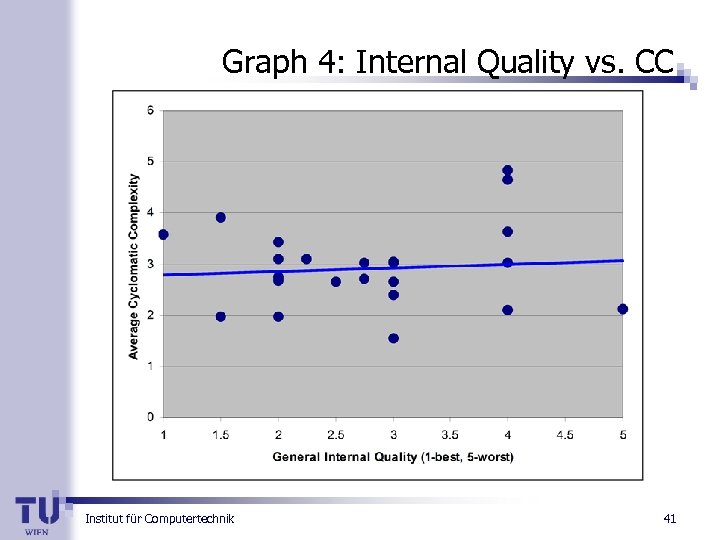

Graph 4: Internal Quality vs. CC

Summary of Results

- Strongest correlation with perceived internal quality:
  - Comment density
  - Control Flow Anomalies
- No correlation with perceived internal quality:
  - Cyclomatic Complexity
  - Average Method Size
  - Average File Size
  - ...

Conclusion and Outlook

Further Related Topics

- Agile Methods in Safety Critical Development
- Hazard Analysis Methods
- Safety Standards
- Safety of Operating Systems
- COTS Components for Safety-Critical Systems
- Safety Aspects of Modern Programming Languages (Java, C#.NET)
- Fault Detection, Correction and Tolerance
- Safety and Security Harmonisation
- Linux in Safety-Critical Environments
- Online Tests to detect hardware faults

Conclusion

- Many open issues in this field...
- All research activities in SYSARI project practically motivated
- Number of safety-critical systems increases
- International Standards play a vital role (e.g. IEC 61508)

Contact: Andreas Gerstinger: gerstinger@ict.tuwien.ac.at
