101452e2dfe085d90481c2b0e829d0cf.ppt

- Number of slides: 13

S6 Data Production/LSC Data Grid Plans: RDS HOFT LDG
March 2008 LSC/DASWG F2F Meetings
Gregory Mendell, LIGO Hanford Observatory
LIGO-Gxxxxx-00-W LIGO-G050374-00-W LIGO-G050635-00-W

S5 Reduced Data Sets (RDS)

- RDS frames are subsets of the raw data, with some channels downsampled.
- Level 1 RDS (/archive/frames/S5/L1; type == RDS_R_L1): separate frames for LHO and LLO.
  - LHO: 131 H1 channels and 143 H2 channels
  - LLO: 181 L1 channels
- Level 3 RDS (/archive/frames/S5/L3; type == RDS_R_L3): DARM_ERR, STATE_VECTOR, and SEGNUM; separate frames for LHO and LLO.
- Level 4 RDS (/archive/frames/S5/L4; type == [H1|H2|L1]_RDS_R_L4): DARM_ERR downsampled by a factor of 4, STATE_VECTOR, and SEGNUM; separate frames for H1, H2, and L1 data.

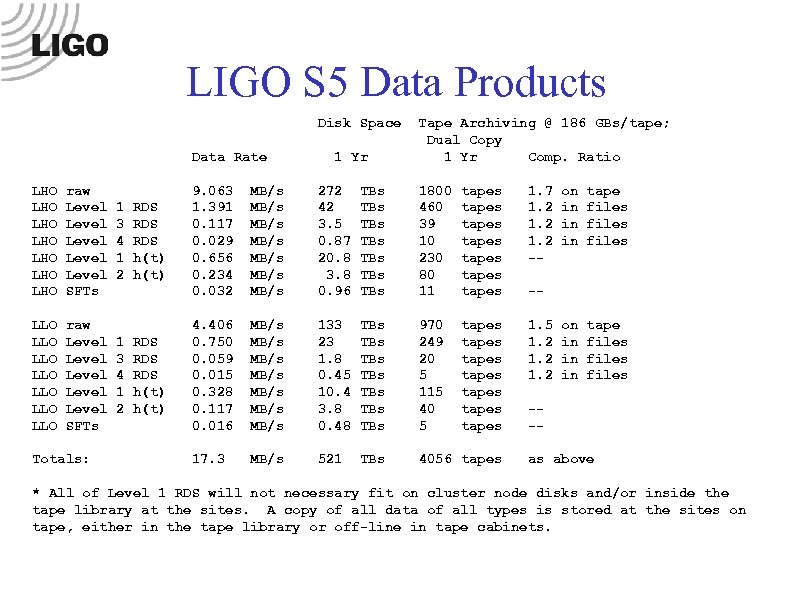

LIGO S5 Data Products

| Site | Data product | Data rate (MB/s) | 1 yr disk space (TBs) | 1 yr tape archiving (tapes) @ 186 GBs/tape; dual copy | Comp. ratio |
|---|---|---|---|---|---|
| LHO | raw | 9.063 | 272 | 1800 | 1.7 (on tape) |
| LHO | Level 1 RDS | 1.391 | 42 | 460 | 1.2 (in files) |
| LHO | Level 3 RDS | 0.117 | 3.5 | 39 | -- |
| LHO | Level 4 RDS | 0.029 | 0.87 | 10 | -- |
| LHO | Level 1 h(t) | 0.656 | 20.8 | 230 | -- |
| LHO | Level 2 h(t) | 0.234 | 3.8 | 80 | -- |
| LHO | SFTs | 0.032 | 0.96 | 11 | -- |
| LLO | raw | 4.406 | 133 | 970 | 1.5 (as above) |
| LLO | Level 1 RDS | 0.750 | 23 | 249 | 1.2 (as above) |
| LLO | Level 3 RDS | 0.059 | 1.8 | 20 | -- |
| LLO | Level 4 RDS | 0.015 | 0.45 | 5 | -- |
| LLO | Level 1 h(t) | 0.328 | 10.4 | 115 | -- |
| LLO | Level 2 h(t) | 0.117 | 3.8 | 40 | -- |
| LLO | SFTs | 0.016 | 0.48 | 5 | -- |
| Totals | | 17.3 | 521 | 4056 | |

*Not all of the Level 1 RDS data will necessarily fit on cluster node disks and/or inside the tape library at the sites. A copy of all data of all types is stored at the sites on tape, either in the tape library or off-line in tape cabinets.
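
The disk and tape columns appear to follow from the data rates by simple arithmetic. The sketch below is one way to reproduce them, assuming a 365-day year, binary units (1 TB = 1024 GB = 1024² MB), and that a tape-level compression ratio reduces the tape count only for raw data. These conventions are inferred from the numbers rather than stated on the slide, and the function names are illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: 365-day year
MB_PER_TB = 1024 * 1024             # assumption: binary units
GB_PER_TB = 1024                    # assumption: binary units
TAPE_CAPACITY_GB = 186              # from the slide
COPIES = 2                          # "dual copy", from the slide


def disk_tb_per_year(rate_mb_per_s):
    """Disk space in TB needed to store one year of data at the given rate."""
    return rate_mb_per_s * SECONDS_PER_YEAR / MB_PER_TB


def tapes_per_year(disk_tb, tape_compression_ratio=1.0):
    """Number of tapes for one year of data, including the second copy.

    tape_compression_ratio models compression applied on the tape itself
    (assumed here to apply to raw data only).
    """
    return disk_tb * GB_PER_TB / TAPE_CAPACITY_GB * COPIES / tape_compression_ratio


if __name__ == "__main__":
    print(f"LHO raw: {disk_tb_per_year(9.063):.1f} TB/yr")
    print(f"LHO Level 1 RDS: {disk_tb_per_year(1.391):.1f} TB/yr")
    print(f"LHO Level 1 RDS tapes: {tapes_per_year(42):.0f}")
    print(f"LLO raw tapes: {tapes_per_year(133, 1.5):.0f}")
```

Under these assumptions the LHO raw rate of 9.063 MB/s gives roughly 273 TB per year, and 42 TB of Level 1 RDS gives roughly 462 tapes, close to the 272 TBs and 460 tapes listed in the table.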

A5/S6 Change to RDS compression

- The compression of the RDS frames has changed from gzip to zero_suppress_int_float_otherwise_gzip in the A5 Astrowatch data. This will continue with the S6 RDS data.
- The new compression method is already in the C and C++ frame libraries, but not in the fast frame library used by dtt. A request to add this has been sent to Daniel Sigg.
- Tests show that zero_suppress_int_float_otherwise_gzip reduces the size of the Level 1 RDS frames by 30% and speeds up output (and probably input) by around 20%.

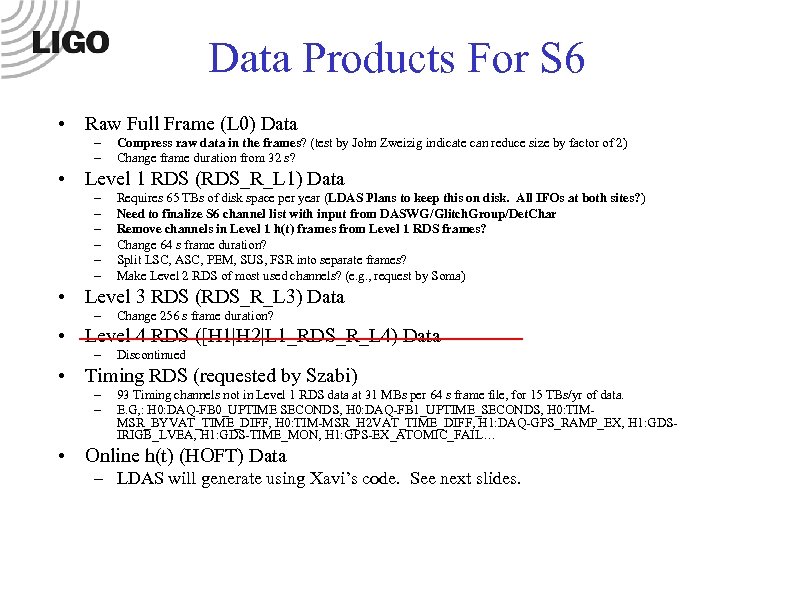

Data Products For S6

- Raw Full Frame (L0) Data
  - Compress raw data in the frames? (Tests by John Zweizig indicate this can reduce the size by a factor of 2.)
  - Change frame duration from 32 s?
- Level 1 RDS (RDS_R_L1) Data
  - Requires 65 TBs of disk space per year. (LDAS plans to keep this on disk. All IFOs at both sites?)
  - Need to finalize the S6 channel list with input from DASWG/Glitch Group/DetChar.
  - Remove channels in Level 1 h(t) frames from Level 1 RDS frames?
  - Change 64 s frame duration?
  - Split LSC, ASC, PEM, SUS, FSR into separate frames?
  - Make Level 2 RDS of most used channels? (E.g., request by Soma.)
- Level 3 RDS (RDS_R_L3) Data
  - Change 256 s frame duration?
- Level 4 RDS ([H1|H2|L1]_RDS_R_L4) Data
  - Discontinued
- Timing RDS (requested by Szabi)
  - 93 timing channels not in Level 1 RDS data, at 31 MBs per 64 s frame file, for 15 TBs/yr of data.
  - E.g.: H0:DAQ-FB0_UPTIME_SECONDS, H0:DAQ-FB1_UPTIME_SECONDS, H0:TIM-MSR_BYVAT_TIME_DIFF, H0:TIM-MSR_H2VAT_TIME_DIFF, H1:DAQ-GPS_RAMP_EX, H1:GDS-IRIGB_LVEA, H1:GDS-TIME_MON, H1:GPS-EX_ATOMIC_FAIL…
- Online h(t) (HOFT) Data
  - LDAS will generate using Xavi's code. See next slides.
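
The 15 TBs/yr figure for the Timing RDS can be checked from the frame size and duration quoted on the slide. This is a minimal sketch, assuming a 365-day year of continuous data and binary units (1 TB = 1024² MB). Neither convention is stated on the slide, and the function name is illustrative.

```python
FRAME_SIZE_MB = 31                  # from the slide: one timing frame file
FRAME_DURATION_S = 64               # from the slide
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: 365-day year, no downtime
MB_PER_TB = 1024 * 1024             # assumption: binary units


def timing_rds_tb_per_year(frame_size_mb=FRAME_SIZE_MB,
                           frame_duration_s=FRAME_DURATION_S):
    """Yearly volume in TB for frames of the given size and duration."""
    rate_mb_per_s = frame_size_mb / frame_duration_s
    return rate_mb_per_s * SECONDS_PER_YEAR / MB_PER_TB


if __name__ == "__main__":
    print(f"Timing RDS: {timing_rds_tb_per_year():.1f} TB/yr")
```

With these assumptions the result is a little under 15 TB per year, in line with the figure quoted above.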

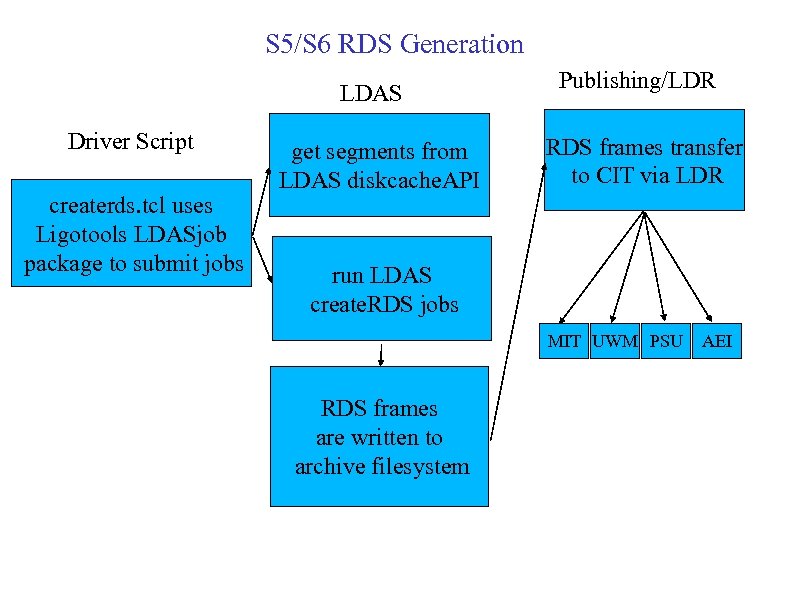

S5/S6 RDS Generation

- LDAS driver script: createrds.tcl uses the Ligotools LDASjob package to submit jobs.
- Get segments from LDAS diskcacheAPI.
- Run LDAS createRDS jobs.
- RDS frames are written to the archive filesystem.
- Publishing/LDR: RDS frames transfer to CIT via LDR.
- Other sites shown: MIT, UWM, PSU, AEI.

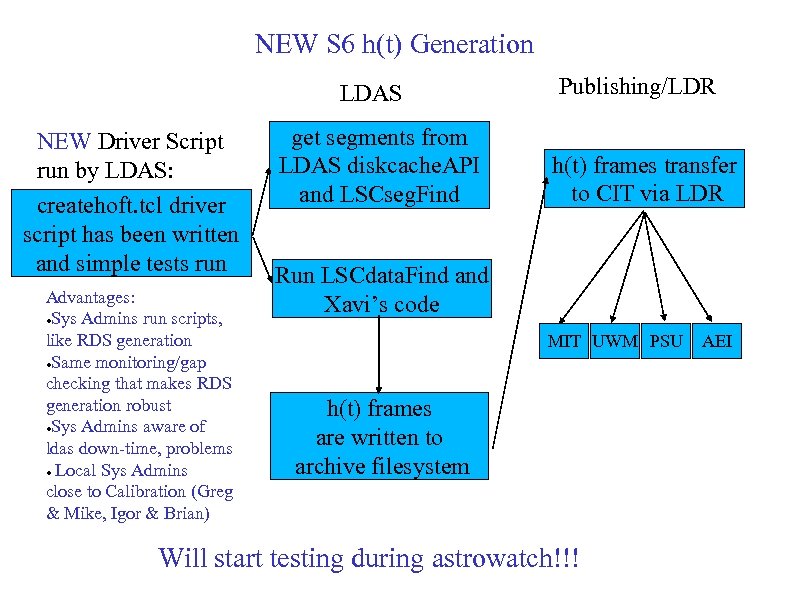

NEW S6 h(t) Generation

- NEW driver script run by LDAS: the createhoft.tcl driver script has been written and simple tests run.
- Advantages:
  - Sys admins run scripts, like RDS generation.
  - Same monitoring/gap checking that makes RDS generation robust.
  - Sys admins aware of LDAS downtime and problems.
  - Local sys admins close to Calibration (Greg & Mike, Igor & Brian).
- Get segments from LDAS diskcacheAPI and LSCsegFind.
- Run LSCdataFind and Xavi's code.
- h(t) frames are written to the archive filesystem.
- Publishing/LDR: h(t) frames transfer to CIT via LDR.
- Other sites shown: MIT, UWM, PSU, AEI.
- Will start testing during Astrowatch!
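
The gap checking mentioned above can be illustrated with a short sketch. It assumes frame files follow the usual Site-Type-GPSstart-Duration.gwf naming and that the list of file names is already in hand. The function names and the example file names are hypothetical, and this is not the logic of createrds.tcl or createhoft.tcl.

```python
import re

# Assumed naming convention: <site>-<frame type>-<GPS start>-<duration>.gwf
FRAME_NAME = re.compile(
    r"^(?P<site>[A-Z]+)-(?P<type>\w+)-(?P<start>\d+)-(?P<dur>\d+)\.gwf$"
)


def parse_frame_name(name):
    """Return (gps_start, gps_end) for a frame file name."""
    match = FRAME_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized frame file name: {name}")
    start = int(match.group("start"))
    return start, start + int(match.group("dur"))


def find_gaps(names, seg_start, seg_end):
    """Return the [start, end) GPS intervals of a segment that no frame covers."""
    intervals = sorted(parse_frame_name(name) for name in names)
    gaps = []
    cursor = seg_start  # earliest GPS time not yet known to be covered
    for start, end in intervals:
        if end <= cursor:
            continue            # frame lies entirely before the cursor
        if start >= seg_end:
            break               # frame lies entirely after the segment
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < seg_end:
        gaps.append((cursor, seg_end))
    return gaps


if __name__ == "__main__":
    # Made-up file names: the 64 s frame starting at 815155328 is missing.
    frames = [
        "H-RDS_R_L1-815155200-64.gwf",
        "H-RDS_R_L1-815155264-64.gwf",
        "H-RDS_R_L1-815155392-64.gwf",
    ]
    print(find_gaps(frames, 815155200, 815155456))
```

Because the check depends only on file names, the same routine could in principle be pointed at h(t) frames as well as RDS frames.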

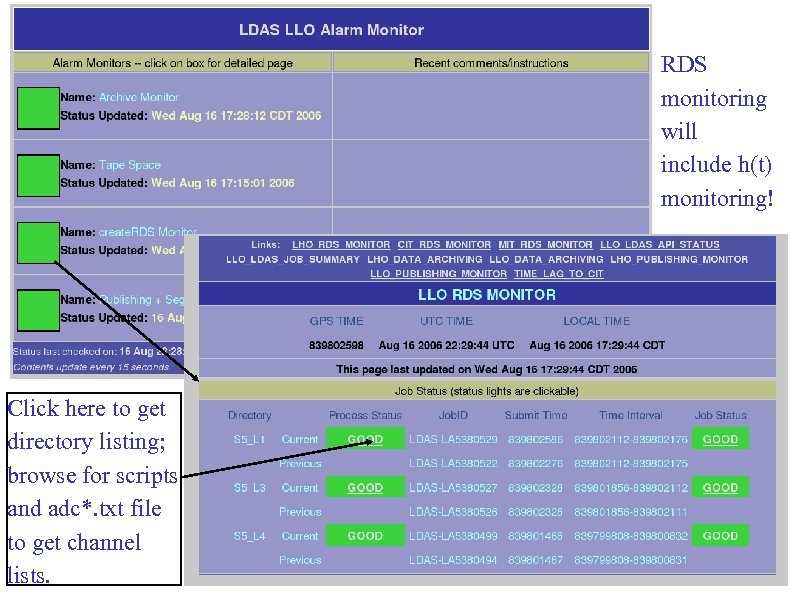

RDS monitoring will include h(t) monitoring! Click here to get directory listing; browse for scripts and adc*.txt files to get channel lists.

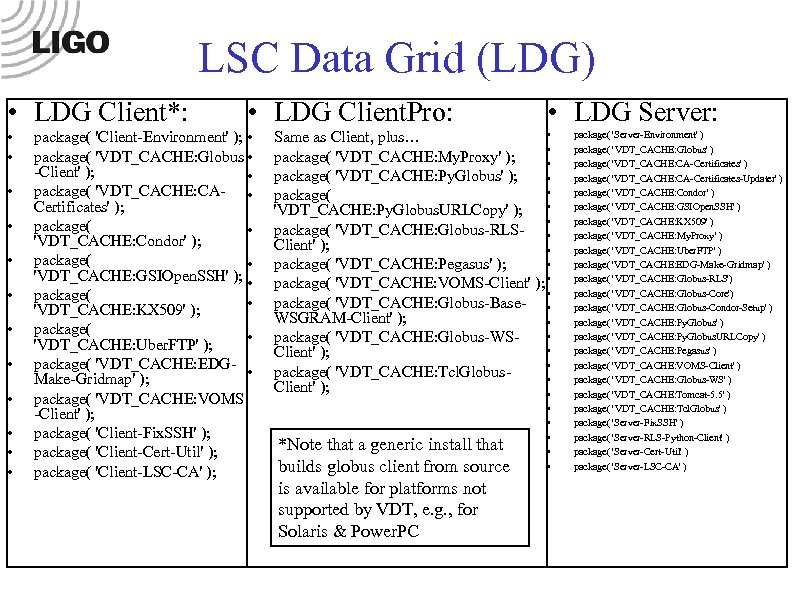

LSC Data Grid (LDG)

- LDG Client*:
  - package( 'Client-Environment' );
  - package( 'VDT_CACHE:Globus-Client' );
  - package( 'VDT_CACHE:CA-Certificates' );
  - package( 'VDT_CACHE:Condor' );
  - package( 'VDT_CACHE:GSIOpenSSH' );
  - package( 'VDT_CACHE:KX509' );
  - package( 'VDT_CACHE:UberFTP' );
  - package( 'VDT_CACHE:EDG-Make-Gridmap' );
  - package( 'VDT_CACHE:VOMS-Client' );
  - package( 'Client-FixSSH' );
  - package( 'Client-Cert-Util' );
  - package( 'Client-LSC-CA' );
- LDG ClientPro: same as Client, plus…
  - package( 'VDT_CACHE:MyProxy' );
  - package( 'VDT_CACHE:PyGlobus' );
  - package( 'VDT_CACHE:PyGlobusURLCopy' );
  - package( 'VDT_CACHE:Globus-RLS-Client' );
  - package( 'VDT_CACHE:Pegasus' );
  - package( 'VDT_CACHE:VOMS-Client' );
  - package( 'VDT_CACHE:Globus-Base-WSGRAM-Client' );
  - package( 'VDT_CACHE:Globus-WS-Client' );
  - package( 'VDT_CACHE:TclGlobus-Client' );
- LDG Server:
  - package( 'Server-Environment' )
  - package( 'VDT_CACHE:Globus' )
  - package( 'VDT_CACHE:CA-Certificates-Updater' )
  - package( 'VDT_CACHE:Condor' )
  - package( 'VDT_CACHE:GSIOpenSSH' )
  - package( 'VDT_CACHE:KX509' )
  - package( 'VDT_CACHE:MyProxy' )
  - package( 'VDT_CACHE:UberFTP' )
  - package( 'VDT_CACHE:EDG-Make-Gridmap' )
  - package( 'VDT_CACHE:Globus-RLS' )
  - package( 'VDT_CACHE:Globus-Core' )
  - package( 'VDT_CACHE:Globus-Condor-Setup' )
  - package( 'VDT_CACHE:PyGlobusURLCopy' )
  - package( 'VDT_CACHE:Pegasus' )
  - package( 'VDT_CACHE:VOMS-Client' )
  - package( 'VDT_CACHE:Globus-WS' )
  - package( 'VDT_CACHE:Tomcat-5.5' )
  - package( 'VDT_CACHE:TclGlobus' )
  - package( 'Server-FixSSH' )
  - package( 'Server-RLS-Python-Client' )
  - package( 'Server-Cert-Util' )
  - package( 'Server-LSC-CA' )

*Note that a generic install that builds the Globus client from source is available for platforms not supported by VDT, e.g., Solaris and PowerPC.

LDG Plans

- Support for CentOS 5 (done) and Debian Etch (next release)
- Further support for Mac OS X on Intel (Leopard)
- Most frequent user requests:
  - Make it work on my platform. Problems:
    - Missing dependencies, so should work towards improved dependency checking
    - Minor bug with workaround (so should document fixes in an easy-to-find location)
  - Lite version that just installs GSI-enabled ssh and certificate management tools
  - Help getting/renewing certificates (see Authentication Committee's work)

LDG Middleware Packaging

Email from Stuart:

As suggested by Scott in the Feb 28 CompComm meeting I would like to initiate the CompComm Middleware Packaging Group to address how LIGO should manage the distribution of grid middleware. The initial group is to consist of Greg and Scott, though they are encouraged to recruit other expertise and opinions as needed. The primary questions to address are:

1) Should the LDG server bundle be replaced with the OSG stack? If so, what about sites, such as the Observatories, that are not running OSG jobs?
2) If we stick with LDG as a rebundle of the VDT:
   a) Do we have the right split between client, clientpro, and server (more or less splits?)
   b) How should the Server bundle be enhanced to effectively support WS-GRAM services (aka GT4), e.g., VDT enhancement to support distinct LDG_SERVER_LOCATION and VDT_LOCATION, or modify LDG to make these equal?
   c) Should we actively push for the replacement of Pacman? And if so, what should we request, e.g., RPM, Solaris packages, DEB, ...
3) What LIGO applications should be modified to use standard LDG middleware installations? E.g., LDAS is currently investigating the feasibility of using TclGlobus and Globus from LDG Server rather than a parallel install in /ldcg; what about LDR, others?

END

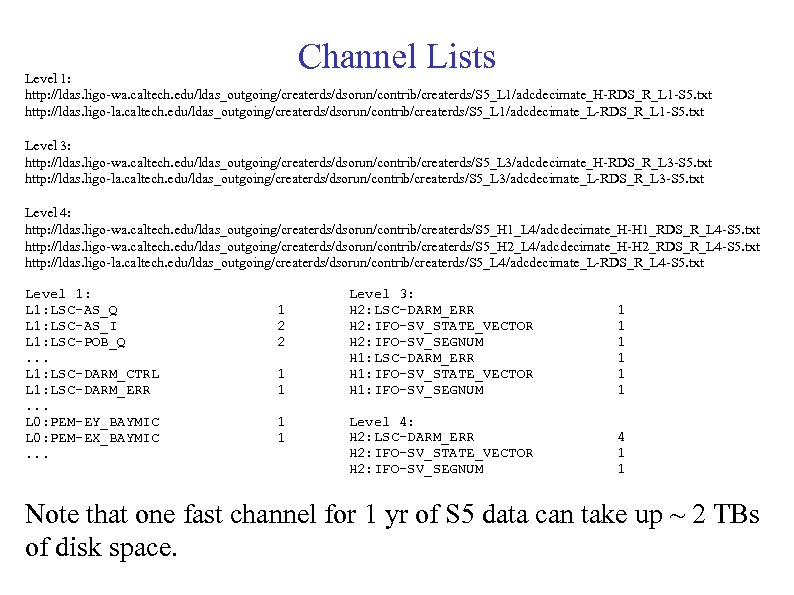

Channel Lists

- Level 1:
  - http://ldas.ligo-wa.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_L1/adcdecimate_H-RDS_R_L1-S5.txt
  - http://ldas.ligo-la.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_L1/adcdecimate_L-RDS_R_L1-S5.txt
- Level 3:
  - http://ldas.ligo-wa.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_L3/adcdecimate_H-RDS_R_L3-S5.txt
  - http://ldas.ligo-la.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_L3/adcdecimate_L-RDS_R_L3-S5.txt
- Level 4:
  - http://ldas.ligo-wa.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_H1_L4/adcdecimate_H-H1_RDS_R_L4-S5.txt
  - http://ldas.ligo-wa.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_H2_L4/adcdecimate_H-H2_RDS_R_L4-S5.txt
  - http://ldas.ligo-la.caltech.edu/ldas_outgoing/createrds/dsorun/contrib/createrds/S5_L4/adcdecimate_L-RDS_R_L4-S5.txt

Example entries:

- Level 1: LSC-AS_Q, L1:LSC-AS_I, L1:LSC-POB_Q, ..., L1:LSC-DARM_CTRL, L1:LSC-DARM_ERR, ..., L0:PEM-EY_BAYMIC, L0:PEM-EX_BAYMIC, ...; values shown: 1 2 2 1 1
- Level 3: H2:LSC-DARM_ERR, H2:IFO-SV_STATE_VECTOR, H2:IFO-SV_SEGNUM, H1:LSC-DARM_ERR, H1:IFO-SV_STATE_VECTOR, H1:IFO-SV_SEGNUM; values shown: 1 1 1
- Level 4: H2:LSC-DARM_ERR, H2:IFO-SV_STATE_VECTOR, H2:IFO-SV_SEGNUM; values shown: 4 1 1

Note that one fast channel for 1 yr of S5 data can take up ~2 TBs of disk space.
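
The ~2 TBs figure is consistent with a quick estimate. This is a minimal sketch, assuming a fast channel sampled at 16384 Hz with 4-byte samples, a 365-day year, no compression, and binary terabytes. None of these values is stated on the slide, and the function name is illustrative.

```python
SAMPLE_RATE_HZ = 16384              # assumption: fast-channel sample rate
BYTES_PER_SAMPLE = 4                # assumption: 4-byte samples
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: 365-day year, no downtime
BYTES_PER_TB = 1024 ** 4            # assumption: binary units


def channel_tb_per_year(decimation_factor=1):
    """Uncompressed yearly volume in TB for one channel after downsampling."""
    samples_per_s = SAMPLE_RATE_HZ / decimation_factor
    return samples_per_s * BYTES_PER_SAMPLE * SECONDS_PER_YEAR / BYTES_PER_TB


if __name__ == "__main__":
    print(f"full rate: {channel_tb_per_year():.2f} TB/yr")
    print(f"downsampled by 4: {channel_tb_per_year(4):.2f} TB/yr")
```

Under these assumptions a full-rate channel comes to a little under 2 TB per year, and downsampling by a factor of 4, as done for DARM_ERR in the Level 4 RDS, cuts that to a quarter.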
