- Number of slides: 19

Running Scientific Workflow Applications on the Amazon EC2 Cloud

- Bruce Berriman, NASA Exoplanet Science Institute, IPAC
- Gideon Juve, Ewa Deelman, Karan Vahi, Gaurang Mehta, Information Sciences Institute, USC
- Benjamin Berman, USC Epigenome Center
- Phil Maechling, Southern California Earthquake Center

Clouds (Utility Computing)

- Pay for what you use rather than purchasing compute and storage resources that end up underutilized.
- Analogous to household utilities.
- Originated in the business domain to provide services for small companies that did not want to maintain an IT department.
- Provided by data centers built on compute and storage virtualization technologies.
- Clouds are built with commodity hardware. They are a "new purchasing paradigm" rather than a new technology.

Benefits and Concerns

Benefits:
- Pay only for what you need.
- Elasticity: increase or decrease capacity within minutes.
- Ease strain on the local physical plant.
- Control local system administration costs.

Concerns:
- What if they become oversubscribed and users cannot increase capacity on demand?
- How will the cost structure change with time?
- If we become dependent on them, will we be at the cloud providers' mercy?
- Are clouds secure?
- Are they up to the demands of science applications?

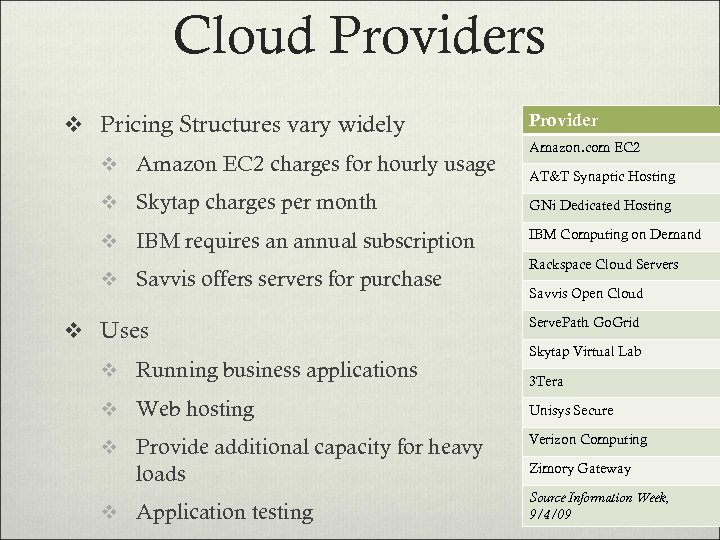

Cloud Providers

- Pricing structures vary widely:
  - Amazon EC2 charges for hourly usage.
  - Skytap charges per month.
  - IBM requires an annual subscription.
  - Savvis offers servers for purchase.
- Uses:
  - Running business applications
  - Web hosting
  - Providing additional capacity for heavy loads
  - Application testing

Providers: Amazon.com EC2, AT&T Synaptic Hosting, GNi Dedicated Hosting, IBM Computing on Demand, Rackspace Cloud Servers, Savvis Open Cloud, ServePath GoGrid, Skytap Virtual Lab, 3Tera, Unisys Secure, Verizon Computing, Zimory Gateway.

Source: InformationWeek, 9/4/09

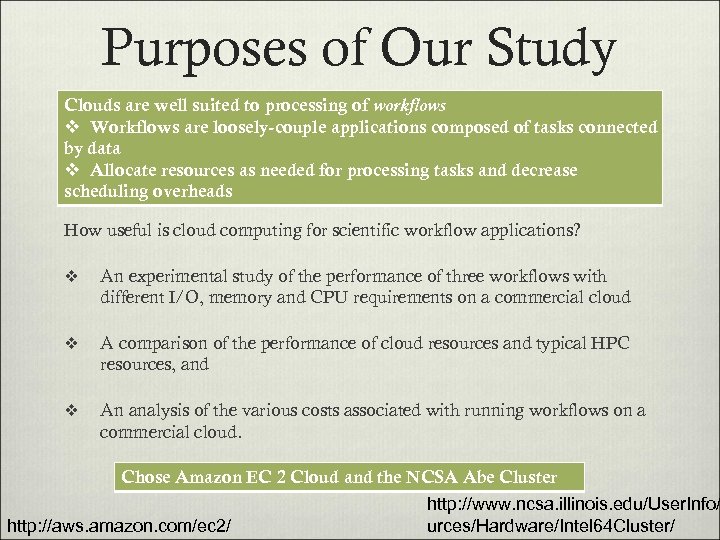

Purposes of Our Study

- Clouds are well suited to processing workflows:
  - Workflows are loosely coupled applications composed of tasks connected by data.
  - Resources can be allocated as needed for processing tasks, which decreases scheduling overheads.
- How useful is cloud computing for scientific workflow applications? We provide:
  - An experimental study of the performance of three workflows with different I/O, memory, and CPU requirements on a commercial cloud;
  - A comparison of the performance of cloud resources and typical HPC resources; and
  - An analysis of the various costs associated with running workflows on a commercial cloud.
- We chose the Amazon EC2 cloud and the NCSA Abe cluster:
  - http://aws.amazon.com/ec2/
  - http://www.ncsa.illinois.edu/UserInfo/ urces/Hardware/Intel64Cluster/

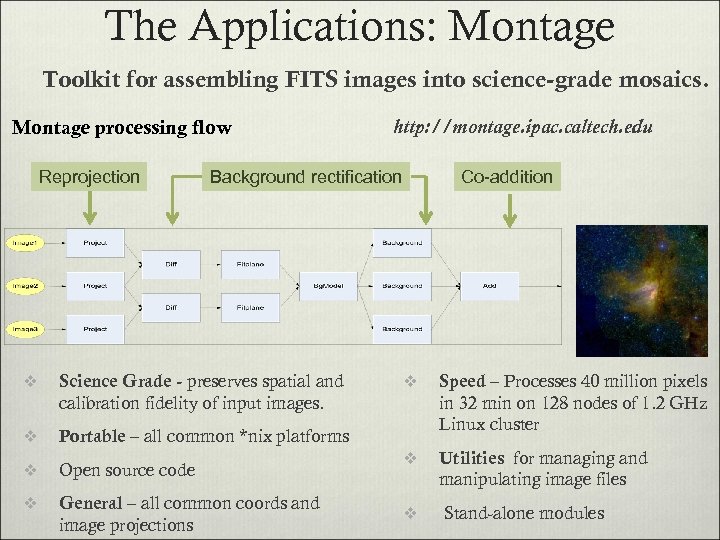

The Applications: Montage

Toolkit for assembling FITS images into science-grade mosaics (http://montage.ipac.caltech.edu).

Montage processing flow: Reprojection → Background rectification → Co-addition

- Science grade: preserves the spatial and calibration fidelity of input images.
- Portable: runs on all common *nix platforms.
- Open source code.
- General: supports all common coordinate systems and image projections.
- Speed: processes 40 million pixels in 32 min on 128 nodes of a 1.2 GHz Linux cluster.
- Utilities for managing and manipulating image files.
- Stand-alone modules.
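To put the speed figure in per-node terms, the short Python sketch below divides the quoted 40 million pixels by the 32-minute runtime and by the 128 nodes. It assumes perfect load balance across nodes, which a real Montage run will not achieve, so the per-node figure is an idealized average rather than a measured rate.

```python
# Figures quoted on the Montage slide (not re-measured here).
PIXELS = 40_000_000  # pixels processed
MINUTES = 32         # wall-clock time
NODES = 128          # nodes of a 1.2 GHz Linux cluster


def throughput(pixels: int, minutes: float, nodes: int) -> tuple[float, float]:
    """Return (aggregate pixels/s, pixels/s per node).

    Assumes the work is spread evenly over all nodes (an idealization).
    """
    seconds = minutes * 60
    aggregate = pixels / seconds
    return aggregate, aggregate / nodes


if __name__ == "__main__":
    total, per_node = throughput(PIXELS, MINUTES, NODES)
    print(f"{total:,.0f} pixels/s overall, {per_node:,.1f} pixels/s per node")
```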

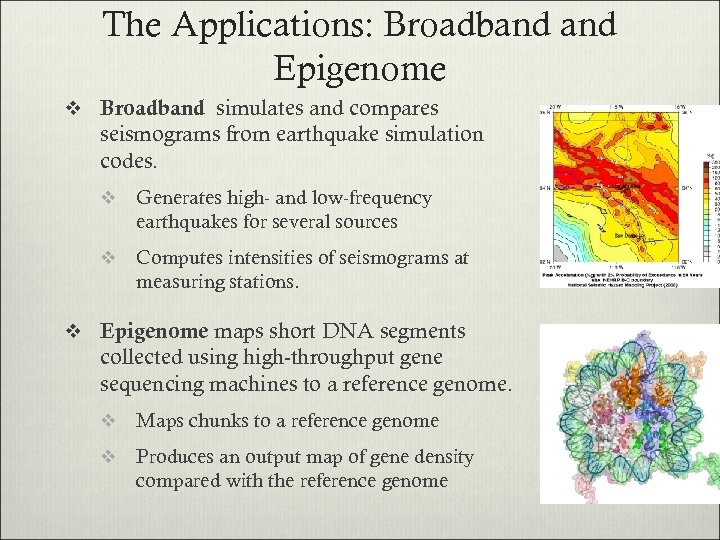

The Applications: Broadband and Epigenome

- Broadband simulates and compares seismograms from earthquake simulation codes.
  - Generates high- and low-frequency earthquakes for several sources.
  - Computes intensities of seismograms at measuring stations.
- Epigenome maps short DNA segments collected using high-throughput gene sequencing machines to a reference genome.
  - Maps chunks to a reference genome.
  - Produces an output map of gene density compared with the reference genome.

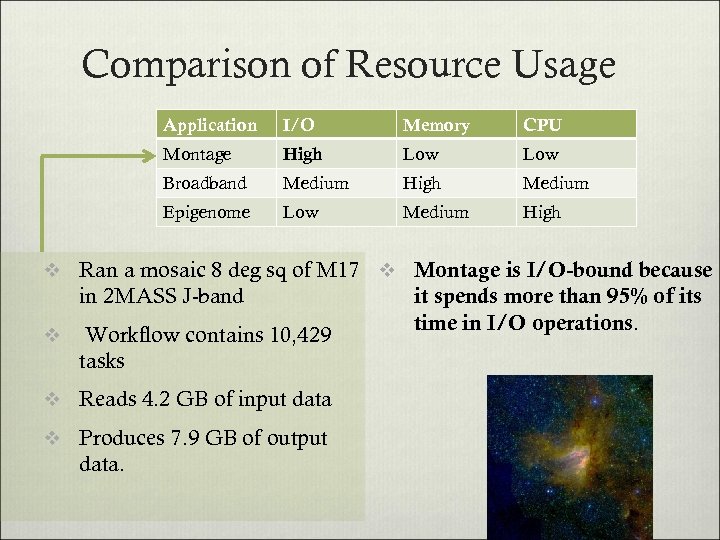

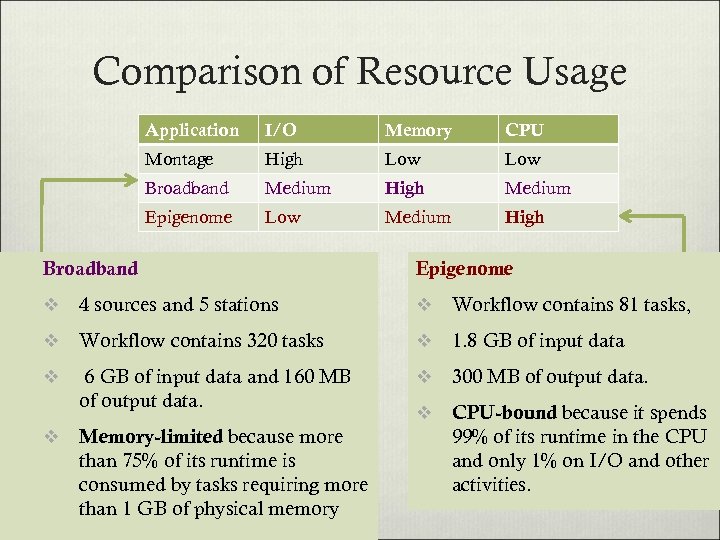

Comparison of Resource Usage

| Application | I/O | Memory | CPU |
|---|---|---|---|
| Montage | High | Low | Low |
| Broadband | Medium | High | Medium |
| Epigenome | Low | Medium | High |

Montage:
- Ran an 8 deg sq mosaic of M17 in 2MASS J-band.
- The workflow contains 10,429 tasks.
- Reads 4.2 GB of input data.
- Produces 7.9 GB of output data.
- Montage is I/O-bound because it spends more than 95% of its time in I/O operations.

Comparison of Resource Usage

Broadband:
- 4 sources and 5 stations.
- The workflow contains 320 tasks.
- 6 GB of input data and 160 MB of output data.
- Memory-limited, because more than 75% of its runtime is consumed by tasks requiring more than 1 GB of physical memory.

Epigenome:
- The workflow contains 81 tasks.
- 1.8 GB of input data and 300 MB of output data.
- CPU-bound, because it spends 99% of its runtime in the CPU and only 1% on I/O and other activities.

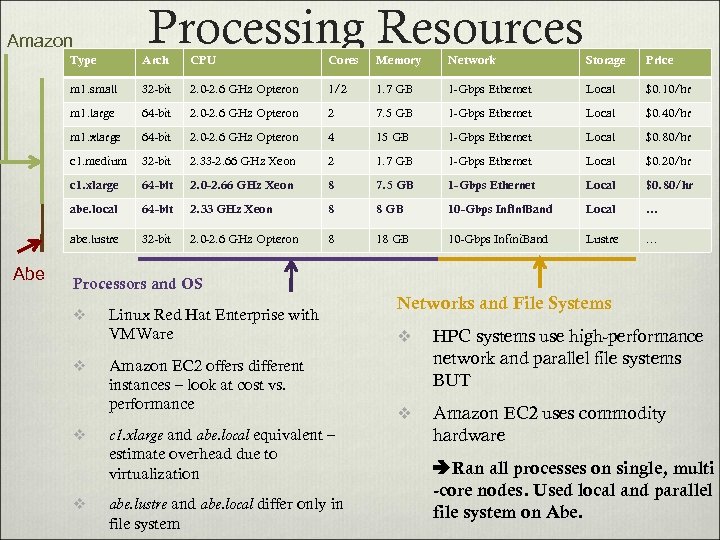

Processing Resources

| Type | Arch | CPU | Cores | Memory | Network | Storage | Price |
|---|---|---|---|---|---|---|---|
| m1.small | 32-bit | 2.0–2.6 GHz Opteron | 1/2 | 1.7 GB | 1-Gbps Ethernet | Local | $0.10/hr |
| m1.large | 64-bit | 2.0–2.6 GHz Opteron | 2 | 7.5 GB | 1-Gbps Ethernet | Local | $0.40/hr |
| m1.xlarge | 64-bit | 2.0–2.6 GHz Opteron | 4 | 15 GB | 1-Gbps Ethernet | Local | $0.80/hr |
| c1.medium | 32-bit | 2.33–2.66 GHz Xeon | 2 | 1.7 GB | 1-Gbps Ethernet | Local | $0.20/hr |
| c1.xlarge | 64-bit | 2.0–2.66 GHz Xeon | 8 | 7.5 GB | 1-Gbps Ethernet | Local | $0.80/hr |
| abe.local | 64-bit | 2.33 GHz Xeon | 8 | 8 GB | 10-Gbps InfiniBand | Local | … |
| abe.lustre | 64-bit | 2.33 GHz Xeon | 8 | 8 GB | 10-Gbps InfiniBand | Lustre | … |

The m1.* and c1.* types are Amazon EC2 instances; abe.local and abe.lustre are Abe nodes.

Processors and OS:
- Red Hat Enterprise Linux with VMWare.
- Amazon EC2 offers different instance types, so we look at cost vs. performance.
- c1.xlarge and abe.local are equivalent, which lets us estimate the overhead due to virtualization.
- abe.lustre and abe.local differ only in file system.

Networks and File Systems:
- HPC systems use high-performance networks and parallel file systems, BUT
- Amazon EC2 uses commodity hardware.

We ran all processes on single, multi-core nodes, and used both the local and the parallel file system on Abe.
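One way to read "cost vs. performance" from the table is to normalize each EC2 price by core count and to look at memory per core. The Python sketch below does only that arithmetic on the table values. It omits m1.small because its core count is listed as "1/2", and it says nothing about actual runtime, which the performance slides address.

```python
# Instance data copied from the Processing Resources table above.
# m1.small is omitted because its core count is listed as "1/2".
INSTANCES = {
    "m1.large": {"cores": 2, "mem_gb": 7.5, "usd_per_hr": 0.40},
    "m1.xlarge": {"cores": 4, "mem_gb": 15.0, "usd_per_hr": 0.80},
    "c1.medium": {"cores": 2, "mem_gb": 1.7, "usd_per_hr": 0.20},
    "c1.xlarge": {"cores": 8, "mem_gb": 7.5, "usd_per_hr": 0.80},
}


def per_core(name: str) -> tuple[float, float]:
    """Return (dollars per core-hour, GB of memory per core) for an instance type."""
    spec = INSTANCES[name]
    return spec["usd_per_hr"] / spec["cores"], spec["mem_gb"] / spec["cores"]


if __name__ == "__main__":
    for name in INSTANCES:
        price, mem = per_core(name)
        print(f"{name:10s} ${price:.2f}/core-hr  {mem:.2f} GB/core")
```

By this measure the c1 types cost half as much per core-hour as the m1 types ($0.10 vs. $0.20), but they also have far less memory per core. The memory difference matters for memory-hungry workloads.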

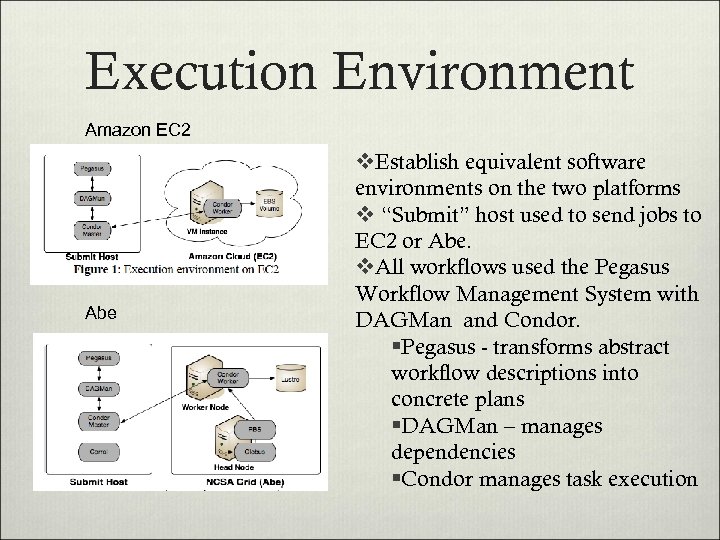

Execution Environment: Amazon EC2 and Abe

- Establish equivalent software environments on the two platforms.
- A "submit" host is used to send jobs to EC2 or Abe.
- All workflows used the Pegasus Workflow Management System with DAGMan and Condor:
  - Pegasus transforms abstract workflow descriptions into concrete plans.
  - DAGMan manages dependencies.
  - Condor manages task execution.
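The dependency management that DAGMan provides in this setup comes down to releasing a task only once all of its parents have finished. The Python sketch below illustrates that idea with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It does not use DAGMan, Pegasus, or Condor. The task names borrow Montage module names, but the graph itself is a hypothetical, heavily simplified Montage-style workflow.

```python
from graphlib import TopologicalSorter

# Hypothetical, simplified Montage-style workflow:
# each key maps a task to the set of tasks it depends on.
WORKFLOW = {
    "mProject_1": set(),
    "mProject_2": set(),
    "mDiffFit_1_2": {"mProject_1", "mProject_2"},
    "mBgModel": {"mDiffFit_1_2"},
    "mBackground_1": {"mProject_1", "mBgModel"},
    "mBackground_2": {"mProject_2", "mBgModel"},
    "mAdd": {"mBackground_1", "mBackground_2"},
}


def release_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves whose parents have all finished.

    Every task in a wave could be handed to the executor concurrently.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in the wave succeeded
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(release_waves(WORKFLOW), start=1):
        print(i, wave)
```

The projections run first. The background model waits on the difference fits, and co-addition (`mAdd`) runs last. Real DAGMan also handles retries and failures, which this sketch ignores.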

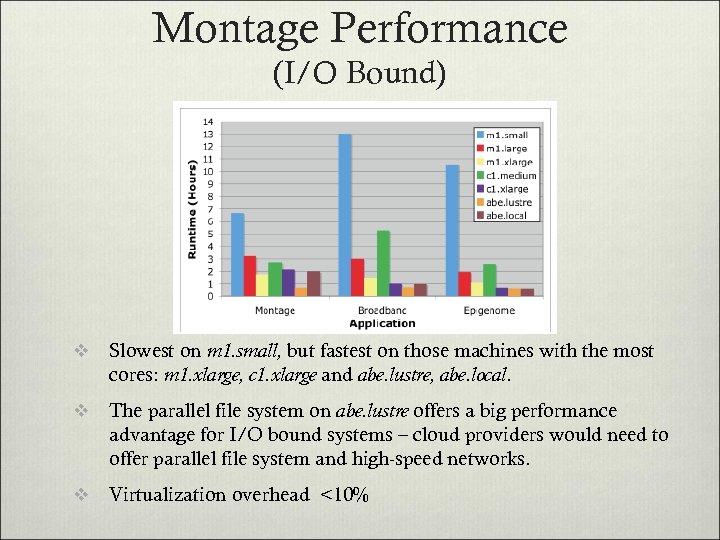

Montage Performance (I/O-Bound)

- Slowest on m1.small, but fastest on the machines with the most cores: m1.xlarge, c1.xlarge, abe.lustre, and abe.local.
- The parallel file system on abe.lustre offers a big performance advantage for I/O-bound applications; cloud providers would need to offer parallel file systems and high-speed networks.
- Virtualization overhead is less than 10%.
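The overhead figures on these slides come from comparing the equivalent c1.xlarge and abe.local nodes. The slides do not give the exact formula, so the Python sketch below assumes the common definition of overhead as relative slowdown, (T_virtual − T_native) / T_native. The runtimes in the example are hypothetical and are not measurements from the study.

```python
def virtualization_overhead(t_virtual: float, t_native: float) -> float:
    """Fractional slowdown of a run on a virtualized node (e.g. c1.xlarge)
    relative to an equivalent native node (e.g. abe.local)."""
    if t_native <= 0:
        raise ValueError("t_native must be positive")
    return (t_virtual - t_native) / t_native


if __name__ == "__main__":
    # Hypothetical runtimes in seconds, not measurements from the study.
    overhead = virtualization_overhead(t_virtual=5400.0, t_native=5000.0)
    print(f"overhead = {overhead:.1%}")
```

This comparison is only meaningful when the two nodes differ in virtualization alone. That is why the slides pair c1.xlarge with abe.local, which uses local disk, rather than with abe.lustre.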

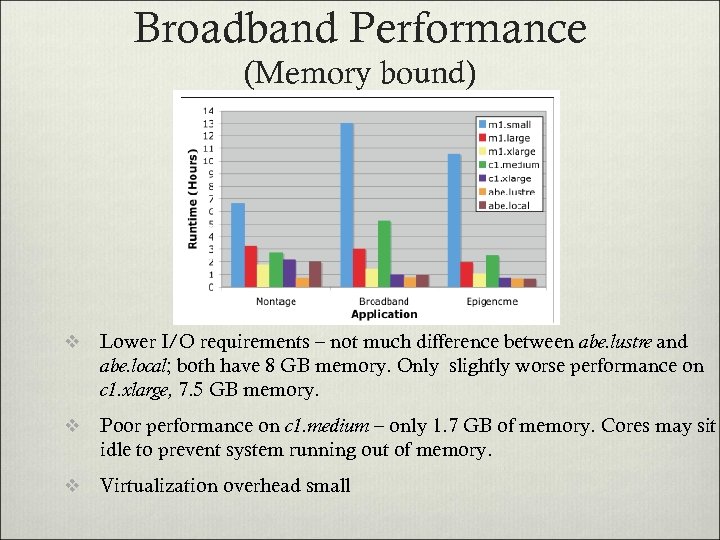

Broadband Performance (Memory-Bound)

- Lower I/O requirements: there is not much difference between abe.lustre and abe.local, and both have 8 GB of memory. Performance is only slightly worse on c1.xlarge, which has 7.5 GB of memory.
- Poor performance on c1.medium, which has only 1.7 GB of memory. Cores may sit idle to prevent the system from running out of memory.
- Virtualization overhead is small.
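The "idle cores" effect follows from simple arithmetic. The number of tasks that can run at once is capped by both the core count and the number of task-sized slices of physical memory. The Python sketch below uses a hypothetical 1.2 GB task footprint (the slides only say "more than 1 GB") and ignores memory used by the OS, so its numbers are illustrative only.

```python
import math


def usable_slots(cores: int, mem_gb: float, task_mem_gb: float) -> int:
    """How many tasks can run at once without oversubscribing physical memory.

    Simplification: ignores memory used by the OS and other processes.
    """
    if task_mem_gb <= 0:
        raise ValueError("task_mem_gb must be positive")
    return min(cores, math.floor(mem_gb / task_mem_gb))


if __name__ == "__main__":
    TASK_MEM_GB = 1.2  # hypothetical footprint of a memory-hungry Broadband task
    for name, cores, mem in [("c1.medium", 2, 1.7), ("c1.xlarge", 8, 7.5), ("abe.local", 8, 8.0)]:
        slots = usable_slots(cores, mem, TASK_MEM_GB)
        print(f"{name}: {slots} of {cores} cores usable, {cores - slots} idle")
```

Under these assumptions, c1.medium can run only one such task at a time, which leaves half its cores idle.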

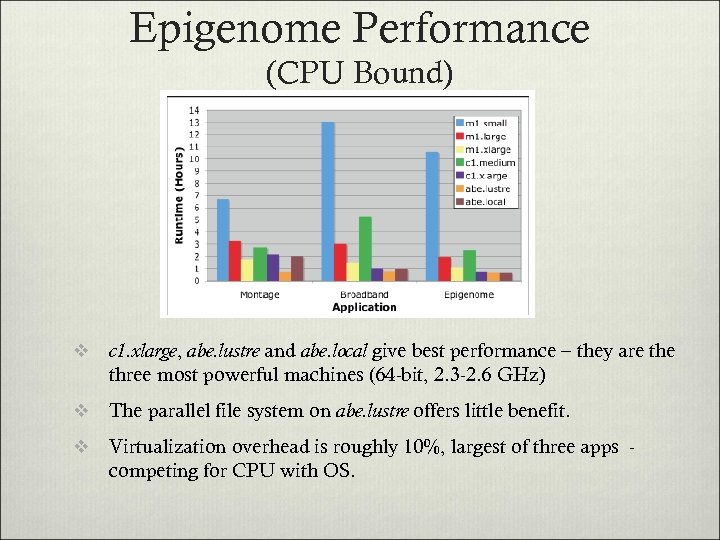

Epigenome Performance (CPU-Bound)

- c1.xlarge, abe.lustre, and abe.local give the best performance; they are the three most powerful machines (64-bit, 2.3–2.6 GHz).
- The parallel file system on abe.lustre offers little benefit.
- Virtualization overhead is roughly 10%, the largest of the three applications, because Epigenome competes with the OS for the CPU.

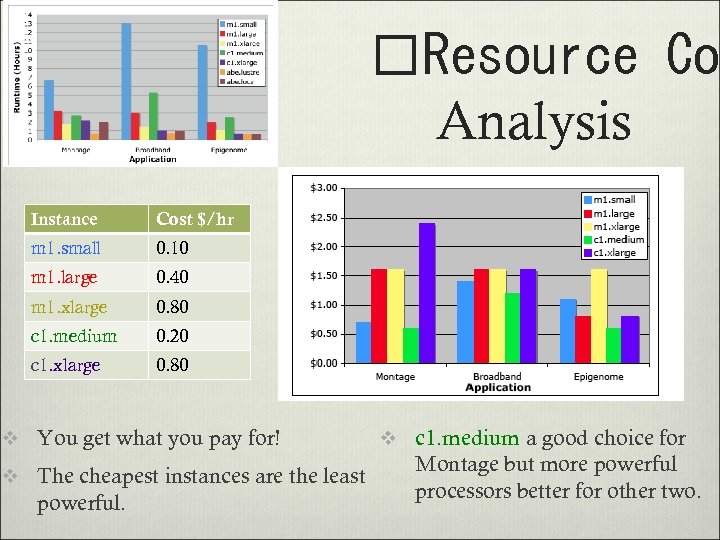

Resource Cost Analysis

| Instance | Cost ($/hr) |
|---|---|
| m1.small | 0.10 |
| m1.large | 0.40 |
| m1.xlarge | 0.80 |
| c1.medium | 0.20 |
| c1.xlarge | 0.80 |

- You get what you pay for!
- The cheapest instances are the least powerful.
- c1.medium is a good choice for Montage, but more powerful processors are better for the other two applications.
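Because EC2 charges by the hour, the processing cost of a run depends on both the hourly price and how runtime is rounded. The Python sketch below assumes that every started hour is billed as a full hour, and it also shows the idealized pro-rated cost for comparison. The 2.5-hour runtime is hypothetical and is not taken from the study.

```python
import math

# On-demand prices ($/hr) from the Resource Cost Analysis table above.
PRICES = {"m1.small": 0.10, "m1.large": 0.40, "m1.xlarge": 0.80,
          "c1.medium": 0.20, "c1.xlarge": 0.80}


def run_cost(instance: str, runtime_hours: float, round_up: bool = True) -> float:
    """Processing cost of one run on one instance.

    round_up=True bills every started hour as a full hour (assumed hourly billing);
    round_up=False gives the idealized pro-rated cost.
    """
    hours = math.ceil(runtime_hours) if round_up else runtime_hours
    return round(hours * PRICES[instance], 2)


if __name__ == "__main__":
    # Hypothetical 2.5-hour run; runtimes are not taken from the study.
    for name in PRICES:
        print(name, run_cost(name, 2.5), run_cost(name, 2.5, round_up=False))
```

With whole-hour billing, a faster but pricier instance can sometimes cost less per run if it finishes within fewer billed hours.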

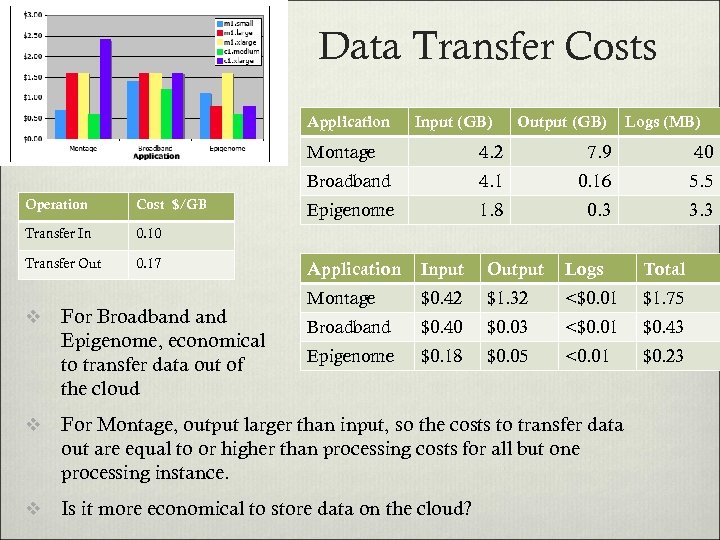

Data Transfer Costs

| Application | Input (GB) | Output (GB) | Logs (MB) |
|---|---|---|---|
| Montage | 4.2 | 7.9 | 40 |
| Broadband | 4.1 | 0.16 | 5.5 |
| Epigenome | 1.8 | 0.3 | 3.3 |

| Operation | Cost ($/GB) |
|---|---|
| Transfer In | 0.10 |
| Transfer Out | 0.17 |

| Application | Input | Output | Logs | Total |
|---|---|---|---|---|
| Montage | $0.42 | $1.32 | <$0.01 | $1.75 |
| Broadband | $0.40 | $0.03 | <$0.01 | $0.43 |
| Epigenome | $0.18 | $0.05 | <$0.01 | $0.23 |

- For Broadband and Epigenome, it is economical to transfer data out of the cloud.
- For Montage, the output is larger than the input, so the cost to transfer data out is equal to or higher than the processing cost for all but one processing instance.
- Is it more economical to store data on the cloud?
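The per-application transfer costs are essentially data volume times the per-GB rate. The Python sketch below recomputes them at flat rates of $0.10/GB in and $0.17/GB out. It assumes the logs are shipped out along with the output and treats 1 GB as 1000 MB. Both are assumptions made for illustration.

```python
RATE_IN_PER_GB = 0.10   # $/GB transferred into EC2 (rate table above)
RATE_OUT_PER_GB = 0.17  # $/GB transferred out of EC2

# (input GB, output GB, logs MB) per workflow run, from the table above.
WORKFLOWS = {
    "Montage": (4.2, 7.9, 40.0),
    "Broadband": (4.1, 0.16, 5.5),
    "Epigenome": (1.8, 0.3, 3.3),
}


def transfer_costs(input_gb: float, output_gb: float, logs_mb: float) -> dict[str, float]:
    """Flat-rate transfer costs; logs are assumed to be shipped out with the output."""
    costs = {
        "input": input_gb * RATE_IN_PER_GB,
        "output": output_gb * RATE_OUT_PER_GB,
        "logs": logs_mb / 1000 * RATE_OUT_PER_GB,  # decimal MB -> GB assumed
    }
    costs["total"] = sum(costs.values())
    return costs


if __name__ == "__main__":
    for name, sizes in WORKFLOWS.items():
        c = transfer_costs(*sizes)
        print(f"{name:9s} in ${c['input']:.2f}  out ${c['output']:.2f}  "
              f"logs ${c['logs']:.3f}  total ${c['total']:.2f}")
```

The flat-rate estimate lands within a few cents of the table. For example, it gives $1.34 vs. $1.32 for Montage output and $0.41 vs. $0.40 for Broadband input. The slide does not say what accounts for the small differences.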

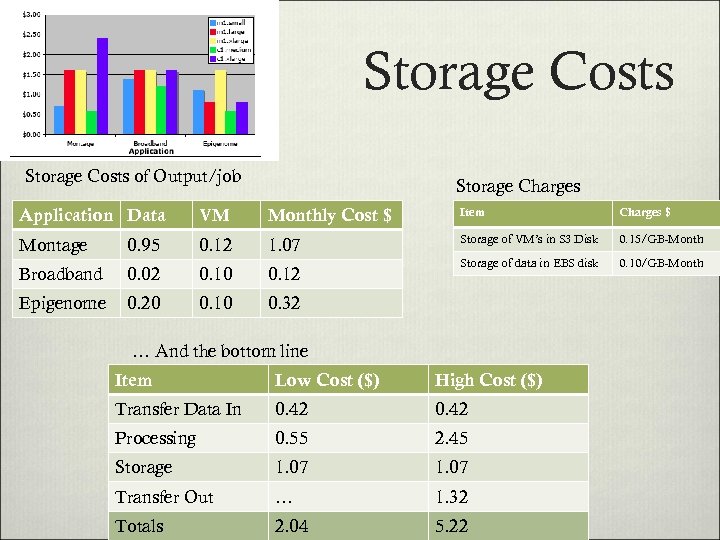

Storage Costs of Output per Job

| Application | Data ($) | VM ($) | Monthly Cost ($) |
|---|---|---|---|
| Montage | 0.95 | 0.12 | 1.07 |
| Broadband | 0.02 | 0.10 | 0.12 |
| Epigenome | 0.20 | 0.10 | 0.32 |

Storage charges:

| Item | Charges ($) |
|---|---|
| Storage of VMs in S3 disk | 0.15/GB-month |
| Storage of data in EBS disk | 0.10/GB-month |

… And the bottom line:

| Item | Low Cost ($) | High Cost ($) |
|---|---|---|
| Transfer Data In | 0.42 | 0.42 |
| Processing | 0.55 | 2.45 |
| Storage | 1.07 | 1.07 |
| Transfer Out | … | 1.32 |
| Totals | 2.04 | 5.22 |
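Storage is a recurring monthly charge, whereas transfer is paid once. The Python sketch below computes the storage side from the two per-GB-month rates above. The sizes and the 12-month horizon in the example are hypothetical and are not taken from the study.

```python
EBS_DATA_RATE = 0.10  # $/GB-month for data on EBS (storage charges above)
S3_VM_RATE = 0.15     # $/GB-month for VM images in S3


def storage_cost(data_gb: float, vm_image_gb: float, months: float = 1.0) -> float:
    """Cost of keeping output data on EBS and a VM image in S3 for `months` months."""
    return round((data_gb * EBS_DATA_RATE + vm_image_gb * S3_VM_RATE) * months, 2)


if __name__ == "__main__":
    # Hypothetical sizes: 5 GB of output kept for 12 months, 2 GB VM image.
    print(storage_cost(5.0, 2.0, months=12))
```

Applying the same recurring-vs-one-time comparison to the Montage row above shows the trade-off. Keeping Montage's output data costs $0.95 per month, which overtakes the one-time $1.32 transfer-out charge within two months.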

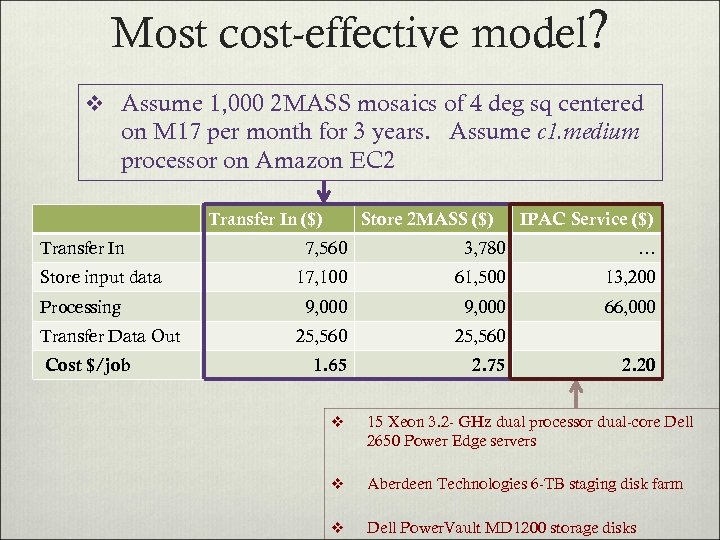

Most cost-effective model?

- Assume 1,000 2MASS mosaics of 4 deg sq centered on M17 per month for 3 years.
- Assume a c1.medium processor on Amazon EC2.

Options compared: Transfer In ($), Store 2MASS ($), and IPAC Service ($). Cost items: Transfer In, Store input data, Processing, Transfer Data Out, and Cost $/job. Table values ($): 7,560; 3,780; …; 17,100; 61,500; 13,200; 9,000; 66,000; 25,560. Cost per job: $1.65 (Transfer In), $2.75 (Store 2MASS), $2.20 (IPAC Service).

IPAC service hardware:
- 15 Xeon 3.2-GHz dual-processor, dual-core Dell PowerEdge 2650 servers
- Aberdeen Technologies 6-TB staging disk farm
- Dell PowerVault MD1200 storage disks
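The scenario fixes the job volume (1,000 mosaics per month for 3 years), so each option's total spend is its per-job cost times 36,000 jobs. The Python sketch below does that multiplication. Pairing each per-job cost with an option follows the column order of the extracted table and is an assumption. The sketch also treats cost per job as constant over the three years, which a real service would not guarantee.

```python
MOSAICS_PER_MONTH = 1_000
MONTHS = 3 * 12  # three years

# Per-job costs from the slide. Pairing each value with an option follows the
# column order of the extracted table (an assumption).
COST_PER_JOB = {"Transfer In": 1.65, "Store 2MASS": 2.75, "IPAC Service": 2.20}


def total_cost(cost_per_job: float, per_month: int = MOSAICS_PER_MONTH,
               months: int = MONTHS) -> float:
    """Total spend for a steady job stream at a fixed cost per job."""
    return round(cost_per_job * per_month * months, 2)


if __name__ == "__main__":
    print(f"{MOSAICS_PER_MONTH * MONTHS:,} jobs")
    for option, cost in COST_PER_JOB.items():
        print(f"{option:12s} ${total_cost(cost):,.0f}")
```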

Conclusions

- Clouds can be used effectively and fairly efficiently for scientific applications. The virtualization overhead is low.
- The high-speed networks and parallel file systems of HPC clusters give them a significant performance advantage over cloud computing for I/O-bound applications.
- On Amazon EC2, the primary cost for Montage is data transfer; processing is the primary cost for Broadband and Epigenome.
- Amazon EC2 offers no dramatic cost benefit over a locally mounted image-mosaic service.

Reference: G. Juve, E. Deelman, K. Vahi, G. Mehta, B. Berriman, B. P. Berman, and P. Maechling, "Scientific Workflow Applications on Amazon EC2," in Cloud Computing Workshop in Conjunction with e-Science, Oxford, UK: IEEE, 2009.
