e910502582b5eafb85a43aff9e1947a7.ppt

- Number of slides: 53

Rules of Thumb in Data Engineering

Jim Gray, Microsoft
Storage Lunch, 10 July 2001
Gray@Microsoft.com, http://research.Microsoft.com/~Gray/Talks/

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb

Meta-Message: Technology Ratios Matter

- Price and performance change.
- If everything changes in the same way, then nothing really changes.
- If some things get much cheaper/faster than others, then that is real change.
- Some things are not changing much:
  - Cost of people
  - Speed of light
  - …
- And some things are changing a LOT.

Trends: Moore’s Law

- Performance/price doubles every 18 months.
- 100x per decade.
- Progress in the next 18 months = ALL previous progress:
  - New storage = sum of all old storage (ever).
  - New processing = sum of all old processing.
- E. coli doubles every 20 minutes!
- Figure label: 15 years ago
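The arithmetic behind "doubles every 18 months" and "100x per decade" can be checked with a small sketch. The function name `growth_factor` is illustrative and not from the talk; the only input taken from the slide is the 18-month doubling period.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Improvement factor after `years` if capability doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


per_decade = growth_factor(10)       # roughly 100x, as the slide states
one_period = growth_factor(1.5)      # one 18-month period is a single doubling

# After one doubling, the newly added capability equals what existed before it.
new_over_old = one_period - 1.0

print(f"10 years: {per_decade:.0f}x, 18 months: {one_period:.0f}x")
```

Changing `doubling_months` to 12 or 28 reproduces the growth rates of the other phases mentioned in the talk.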

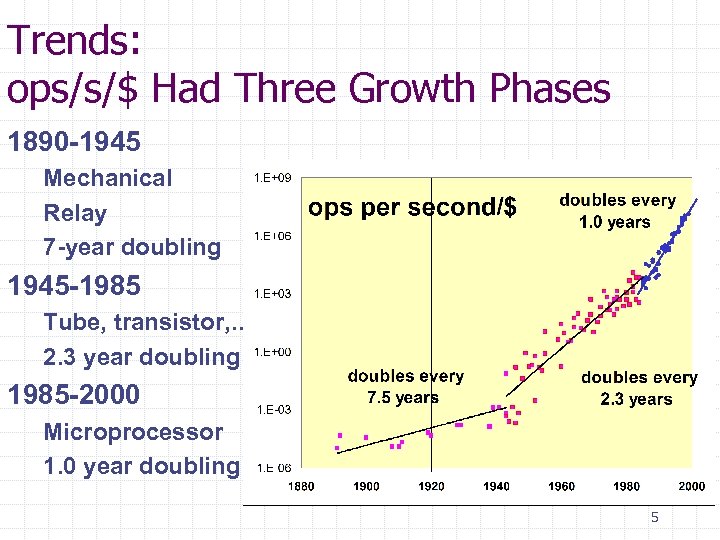

Trends: ops/s/$ Had Three Growth Phases

- 1890-1945: mechanical, relay; 7-year doubling
- 1945-1985: tube, transistor, …; 2.3-year doubling
- 1985-2000: microprocessor; 1.0-year doubling

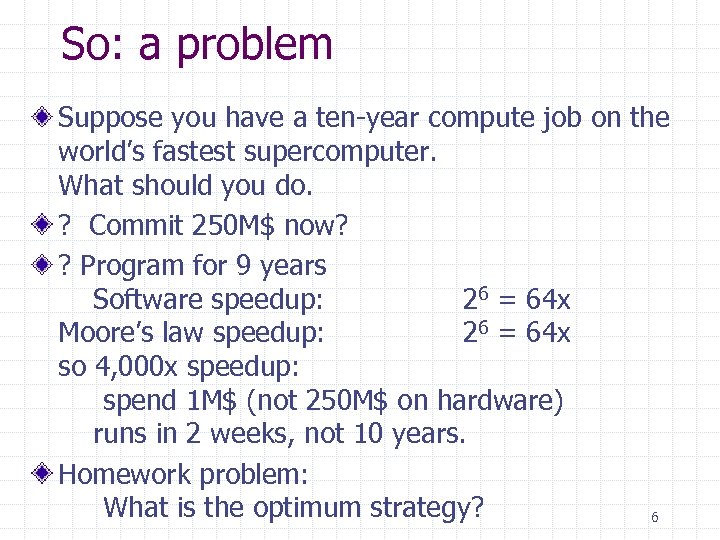

So: a problem

- Suppose you have a ten-year compute job on the world’s fastest supercomputer. What should you do?
- Commit 250 M$ now?
- Program for 9 years?
  - Software speedup: 2^6 = 64x
  - Moore’s law speedup: 2^6 = 64x
  - So 4,000x speedup: spend 1 M$ (not 250 M$) on hardware; runs in 2 weeks, not 10 years.
- Homework problem: What is the optimum strategy?

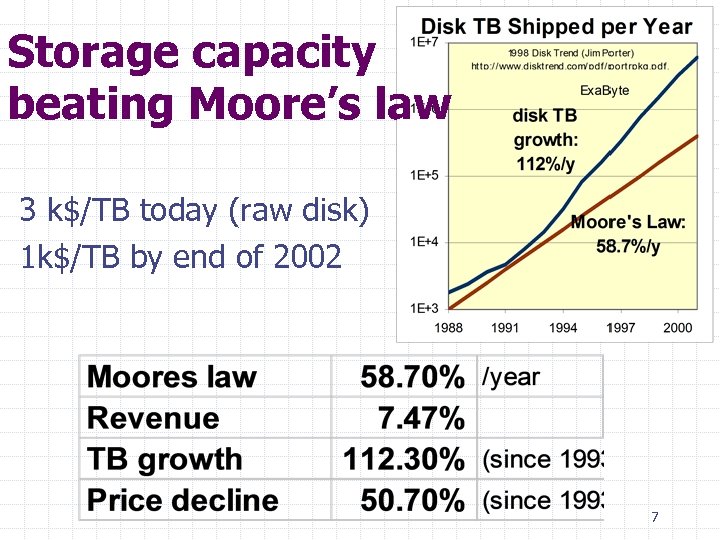

Storage capacity beating Moore’s law

- 3 k$/TB today (raw disk)
- 1 k$/TB by end of 2002

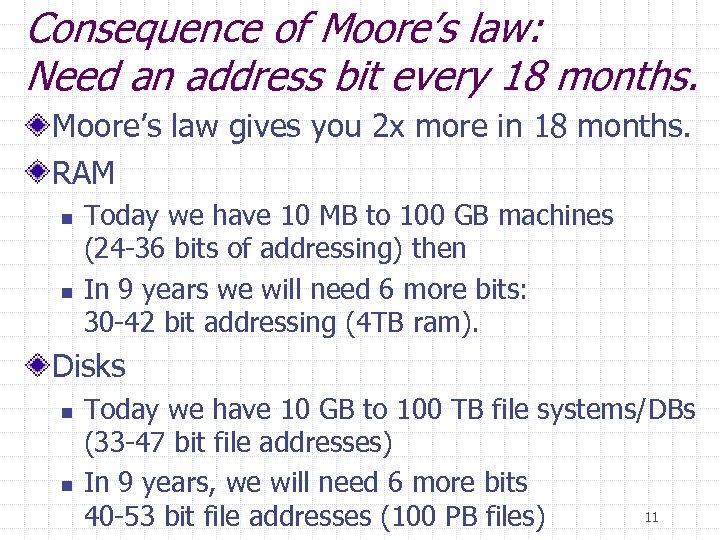

Consequence of Moore’s law: Need an address bit every 18 months

- Moore’s law gives you 2x more in 18 months.
- RAM
  - Today we have 10 MB to 100 GB machines (24-36 bits of addressing).
  - In 9 years we will need 6 more bits: 30-42 bit addressing (4 TB RAM).
- Disks
  - Today we have 10 GB to 100 TB file systems/DBs (33-47 bit file addresses).
  - In 9 years we will need 6 more bits: 40-53 bit file addresses (100 PB files).
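A minimal sketch of the address-bit arithmetic follows. The helper names are illustrative, and decimal units (1 MB = 10^6 bytes) are an assumption. The slide rounds its bit counts loosely, so the computed values can differ from the slide by one bit.

```python
import math

MB, GB, TB = 10**6, 10**9, 10**12  # decimal units, an assumption of this sketch


def address_bits(size_bytes: int) -> int:
    """Smallest number of address bits that can name every byte of `size_bytes`."""
    return math.ceil(math.log2(size_bytes))


def extra_bits(years: float, months_per_bit: float = 18.0) -> float:
    """Address bits consumed by growth: one bit per doubling period."""
    return years * 12.0 / months_per_bit


print(address_bits(10 * MB), address_bits(100 * GB))   # RAM range today
print(address_bits(10 * GB), address_bits(100 * TB))   # file-system range today
print(extra_bits(9))                                   # bits needed over 9 years
```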

Architecture could change this

- 1-level store:
  - System/38 and AS/400 have a 1-level store.
  - Never re-uses an address.
  - Needs 96-bit addressing today.
- NUMAs and clusters:
  - Willing to buy a 100 M$ computer?
  - Then add 6 more address bits.
- Only 1-level store pushes us beyond 64 bits.
- Still, these are “logical” addresses; 64-bit physical will last many years.

Trends: Gilder’s Law: 3x bandwidth/year for 25 more years

- Today:
  - 40 Gbps per channel (λ)
  - 12 channels per fiber (WDM): 500 Gbps
  - 32 fibers/bundle = 16 Tbps/bundle
- In lab: 3 Tbps/fiber (400x WDM)
- In theory: 25 Tbps per fiber (1 fiber = 25 Tbps)
- 1 Tbps = USA 1996 WAN bisection bandwidth
- Aggregate bandwidth doubles every 8 months!
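The link between "3x per year" and "doubles every 8 months" is a change of base. A short sketch, with an illustrative function name:

```python
import math


def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double when a quantity grows by `annual_factor` each year."""
    return 12.0 * math.log(2.0) / math.log(annual_factor)


gilder = doubling_time_months(3.0)           # a bit under 8 months
moore = doubling_time_months(100 ** 0.1)     # 100x per decade is about 18 months

print(f"Gilder: {gilder:.1f} months, Moore: {moore:.1f} months")
```

The same function shows why ratios matter: bandwidth growing at 3x per year outpaces a quantity that grows 100x per decade by more than a factor of two in doubling time.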

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb

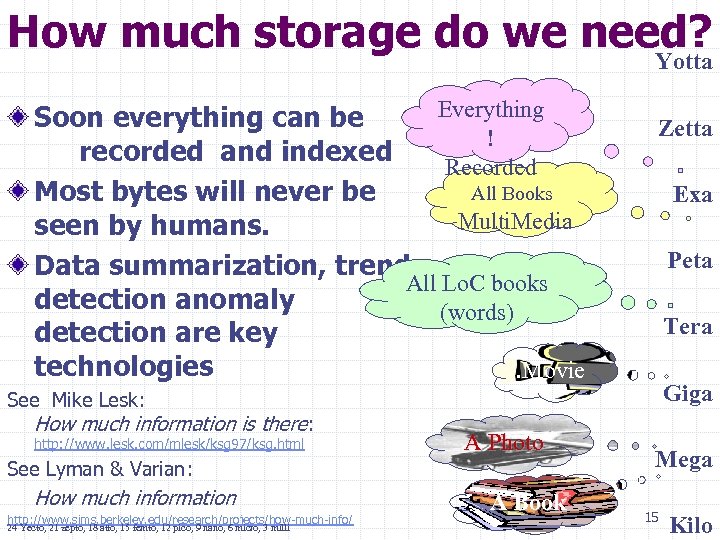

How much storage do we need?

- Soon everything can be recorded and indexed.
- Most bytes will never be seen by humans.
- Data summarization, trend detection, and anomaly detection are key technologies.
- See Mike Lesk: How much information is there: http://www.lesk.com/mlesk/ksg97/ksg.html
- See Lyman & Varian: How much information: http://www.sims.berkeley.edu/research/projects/how-much-info/
- Figure scale, smallest to largest: Kilo, Mega, Giga, Tera, Peta, Exa, Zetta, Yotta.
- Figure examples along the scale: A Book, A Photo, Movie, All LoC books (words), All Books MultiMedia, Everything Recorded.
- Small prefixes (negative powers of ten): 24 yocto, 21 zepto, 18 atto, 15 femto, 12 pico, 9 nano, 6 micro, 3 milli.

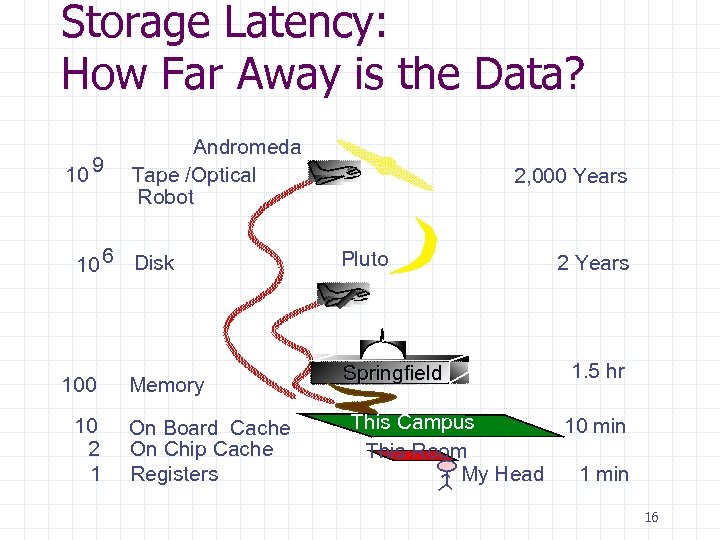

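Storage Latency: How Far Away is the Data?

| Storage level | Clock ticks | Analogy: where | Analogy: how long |
|---|---|---|---|
| Registers | 1 | My Head | 1 min |
| On Chip Cache | 2 | This Room | |
| On Board Cache | 10 | This Campus | 10 min |
| Memory | 100 | Springfield | 1.5 hr |
| Disk | 10^6 | Pluto | 2 Years |
| Tape / Optical Robot | 10^9 | Andromeda | 2,000 Years |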
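The analogy on this slide appears to scale one clock tick to one minute of human time. The sketch below works under that assumption, which is inferred from the numbers and not stated on the slide. The tick counts are taken from the slide.

```python
MINUTES_PER_YEAR = 60 * 24 * 365

# Access latencies in clock ticks, as listed on the slide.
LATENCY_TICKS = {
    "registers": 1,
    "on-chip cache": 2,
    "on-board cache": 10,
    "memory": 100,
    "disk": 10**6,
    "tape/optical robot": 10**9,
}


def human_minutes(ticks: int, minutes_per_tick: float = 1.0) -> float:
    """Scale a latency in clock ticks to 'human' minutes (assumed 1 tick = 1 minute)."""
    return ticks * minutes_per_tick


def human_years(ticks: int, minutes_per_tick: float = 1.0) -> float:
    """Same scaling, expressed in years."""
    return human_minutes(ticks, minutes_per_tick) / MINUTES_PER_YEAR


for level, ticks in LATENCY_TICKS.items():
    print(f"{level:20s} {human_minutes(ticks):>15,.0f} min {human_years(ticks):>10.2f} years")
```

Under this scaling, disk comes out at about two years and tape at about two thousand years, which matches the slide's Pluto and Andromeda entries.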

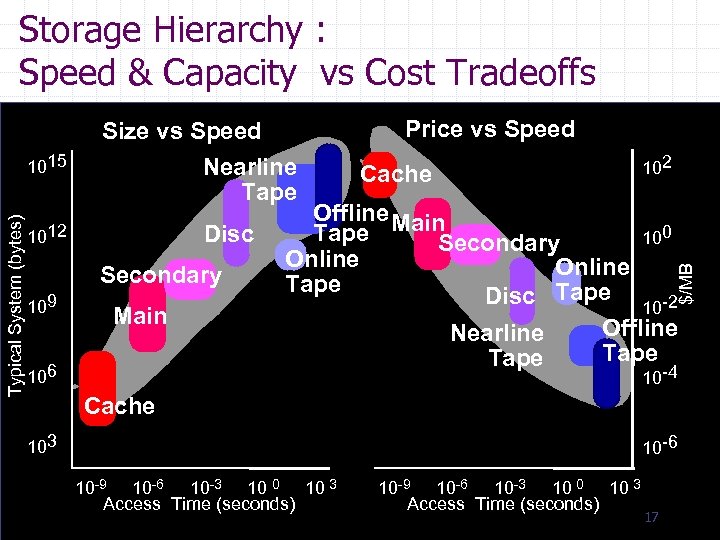

Storage Hierarchy: Speed & Capacity vs Cost Tradeoffs

- Figure, two log-log plots against access time (seconds, 10^-9 to 10^3):
  - Size vs Speed: typical system size (bytes, 10^3 to 10^15)
  - Price vs Speed: $/MB (10^-4 to 10^2)
- Levels plotted: cache, main, secondary (disc), online tape, nearline tape, offline tape.

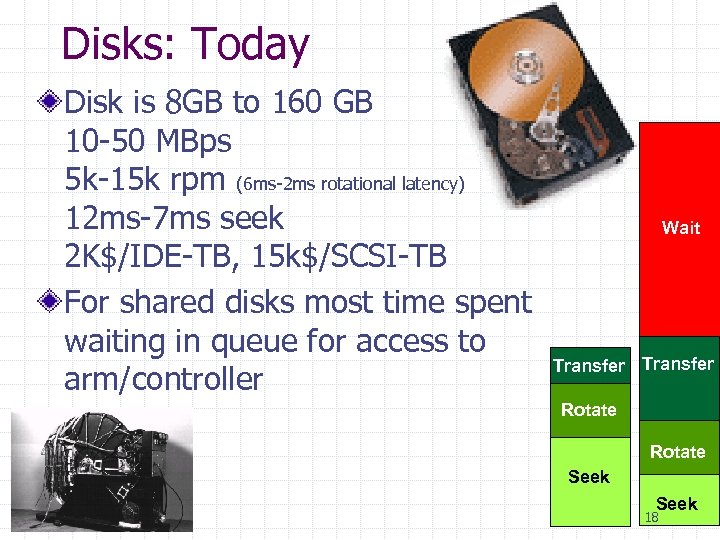

Disks: Today

- Disk is 8 GB to 160 GB
- 10-50 MBps
- 5k-15k rpm (6 ms-2 ms rotational latency)
- 12 ms-7 ms seek
- 2 k$/IDE-TB, 15 k$/SCSI-TB
- For shared disks, most time is spent waiting in queue for access to the arm/controller.
- Figure labels: Wait, Transfer, Rotate, Seek
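The rotational latencies quoted above follow from the spindle speed: on average the head waits half a revolution. A minimal sketch, with an illustrative function name:

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


for rpm in (5_000, 10_000, 15_000):
    print(f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.1f} ms")
```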

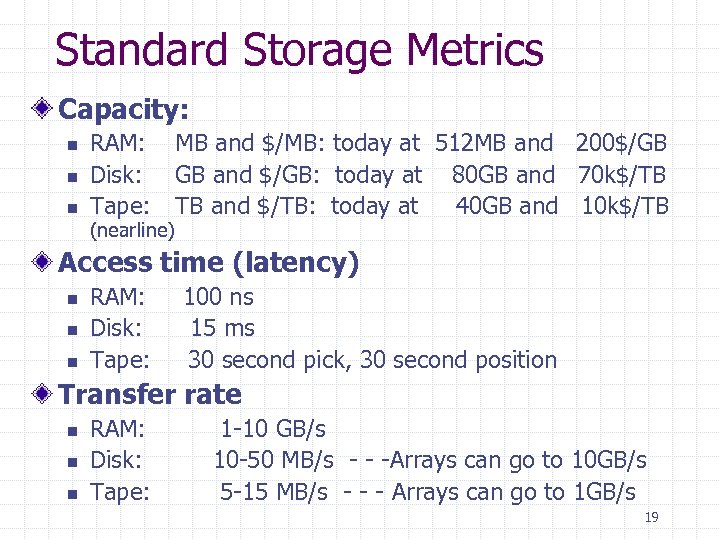

Standard Storage Metrics

- Capacity:
  - RAM: MB and $/MB: today at 512 MB and 200$/GB
  - Disk: GB and $/GB: today at 80 GB and 70 k$/TB
  - Tape: TB and $/TB: today at 40 GB and 10 k$/TB (nearline)
- Access time (latency):
  - RAM: 100 ns
  - Disk: 15 ms
  - Tape: 30 second pick, 30 second position
- Transfer rate:
  - RAM: 1-10 GB/s
  - Disk: 10-50 MB/s; arrays can go to 10 GB/s
  - Tape: 5-15 MB/s; arrays can go to 1 GB/s

New Storage Metrics: Kaps, Maps, SCAN

- Kaps: how many kilobyte objects served per second
  - The file server, transaction processing metric
  - This is the OLD metric.
- Maps: how many megabyte objects served per second
  - The multimedia metric
- SCAN: how long to scan all the data
  - The data mining and utility metric
- And: Kaps/$, Maps/$, TBscan/$
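As a rough illustration of the three metrics, the sketch below models one disk with a fixed access time plus transfer time. The simple model and the function names are assumptions of this sketch. The example figures (15 ms access, 30 MB/s, 100 GB) are borrowed from other slides in the talk.

```python
def objects_per_second(object_bytes: float, access_time_s: float, bytes_per_s: float) -> float:
    """Objects served per second: each access pays positioning time plus transfer time."""
    return 1.0 / (access_time_s + object_bytes / bytes_per_s)


def scan_seconds(capacity_bytes: float, bytes_per_s: float) -> float:
    """Time to read the whole device sequentially."""
    return capacity_bytes / bytes_per_s


ACCESS_S = 0.015          # 15 ms disk access time
RATE = 30e6               # 30 MB/s sequential transfer
CAPACITY = 100e9          # 100 GB disk

kaps = objects_per_second(1e3, ACCESS_S, RATE)    # kilobyte accesses per second
maps = objects_per_second(1e6, ACCESS_S, RATE)    # megabyte accesses per second
scan = scan_seconds(CAPACITY, RATE)               # seconds for a full scan

print(f"Kaps={kaps:.0f}  Maps={maps:.0f}  SCAN={scan / 60:.0f} min")
```

Kaps is dominated by positioning time and Maps by transfer time, which is why the two metrics respond differently to technology changes.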

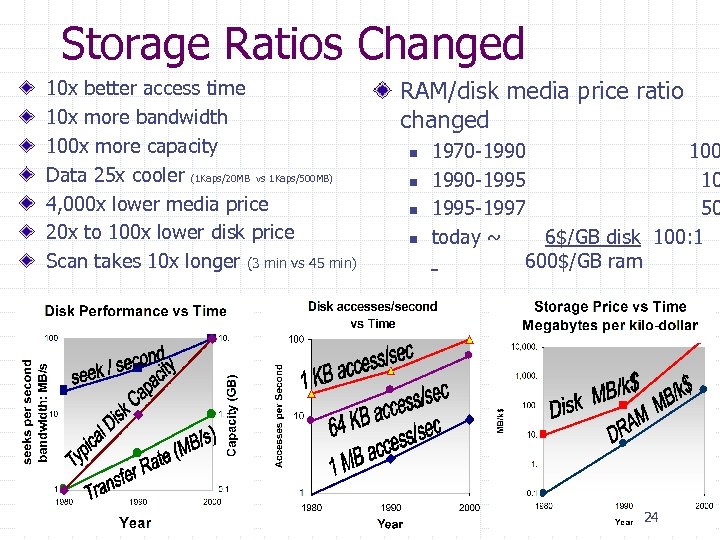

Storage Ratios Changed

- 10x better access time
- 10x more bandwidth
- 100x more capacity
- Data 25x cooler (1 Kaps/20 MB vs 1 Kaps/500 MB)
- 4,000x lower media price
- 20x to 100x lower disk price
- Scan takes 10x longer (3 min vs 45 min)
- RAM/disk media price ratio changed:
  - 1970-1990: 100:1
  - 1990-1995: 10:1
  - 1995-1997: 50:1
  - today: ~6$/GB disk, 600$/GB RAM: 100:1

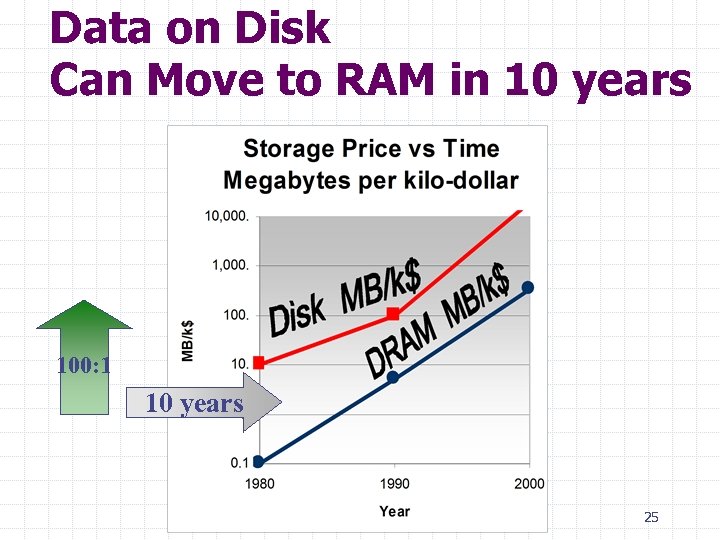

Data on Disk Can Move to RAM in 10 years

- Figure labels: 100:1, 10 years

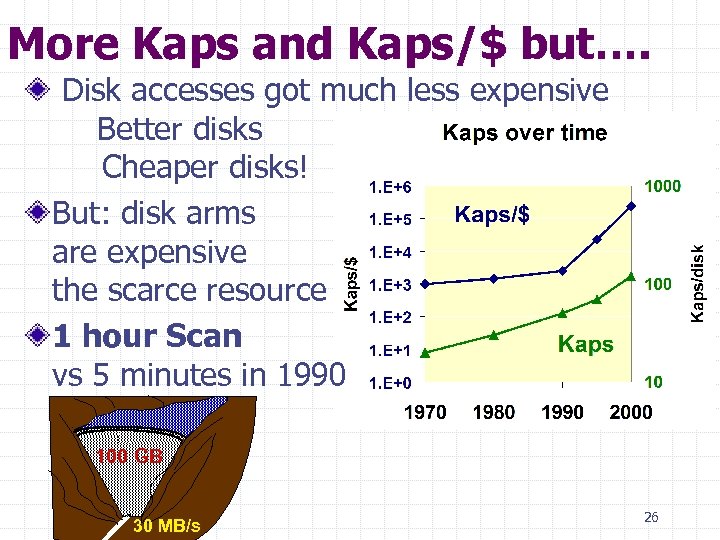

More Kaps and Kaps/$ but…

- Disk accesses got much less expensive: better disks, cheaper disks!
- But: disk arms are the expensive, scarce resource.
- 1 hour scan vs 5 minutes in 1990.
- Figure labels: 100 GB, 30 MB/s

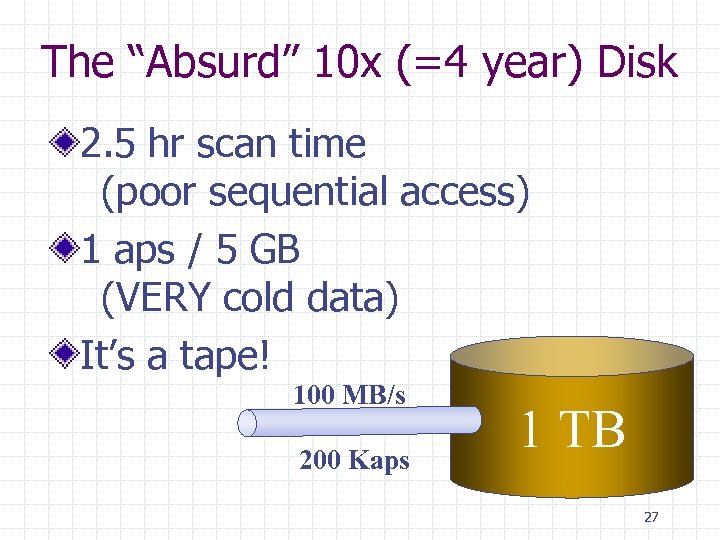

The “Absurd” 10x (= 4 year) Disk

- 2.5 hr scan time (poor sequential access)
- 1 aps / 5 GB (VERY cold data)
- It’s a tape!
- Figure labels: 1 TB, 100 MB/s, 200 Kaps
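The two headline numbers for this hypothetical disk can be recomputed from the figure labels (1 TB, 100 MB/s, 200 Kaps). This is a sketch with illustrative variable names. The computed scan time lands slightly above the slide's rounded 2.5 hours.

```python
CAPACITY_BYTES = 1e12      # 1 TB
TRANSFER_BPS = 100e6       # 100 MB/s
KAPS = 200                 # kilobyte accesses per second

scan_hours = CAPACITY_BYTES / TRANSFER_BPS / 3600.0    # full sequential scan
gb_per_access_per_s = (CAPACITY_BYTES / 1e9) / KAPS    # how "cold" the data is

print(f"scan: {scan_hours:.1f} hours, {gb_per_access_per_s:.0f} GB per access/second")
```

With only one access per second available for every 5 GB stored, most of the data can be touched only rarely, which is the sense in which the slide calls it a tape.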

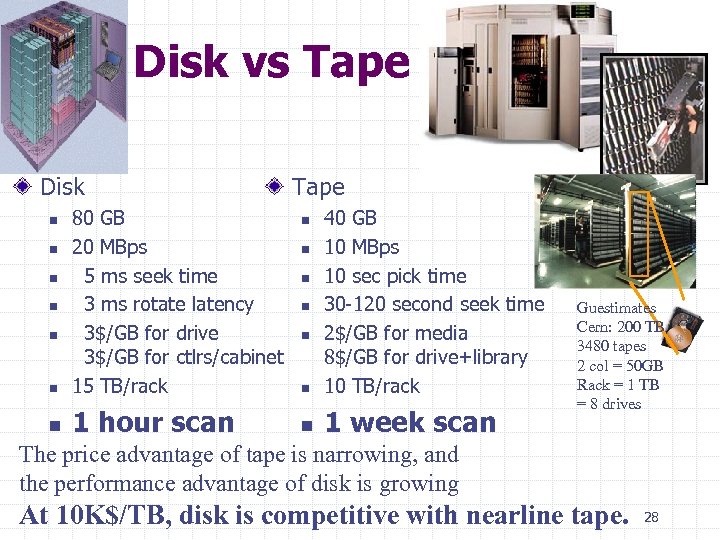

Disk vs Tape

- Disk
  - 80 GB
  - 20 MBps
  - 5 ms seek time
  - 3 ms rotate latency
  - 3$/GB for drive, 3$/GB for ctlrs/cabinet
  - 15 TB/rack
  - 1 hour scan
- Tape
  - 40 GB
  - 10 MBps
  - 10 sec pick time
  - 30-120 second seek time
  - 2$/GB for media, 8$/GB for drive+library
  - 10 TB/rack
  - 1 week scan
- Guesstimates (CERN): 200 TB; 3480 tapes; 2 col = 50 GB; rack = 1 TB = 8 drives
- The price advantage of tape is narrowing, and the performance advantage of disk is growing.
- At 10 k$/TB, disk is competitive with nearline tape.

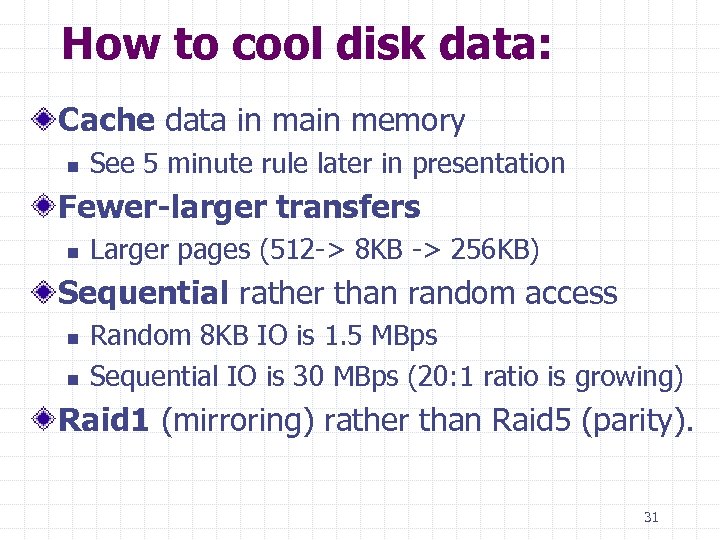

How to cool disk data

- Cache data in main memory
  - See the 5 minute rule later in the presentation
- Fewer, larger transfers
  - Larger pages (512 -> 8 KB -> 256 KB)
- Sequential rather than random access
  - Random 8 KB IO is 1.5 MBps
  - Sequential IO is 30 MBps (20:1 ratio is growing)
- RAID 1 (mirroring) rather than RAID 5 (parity)

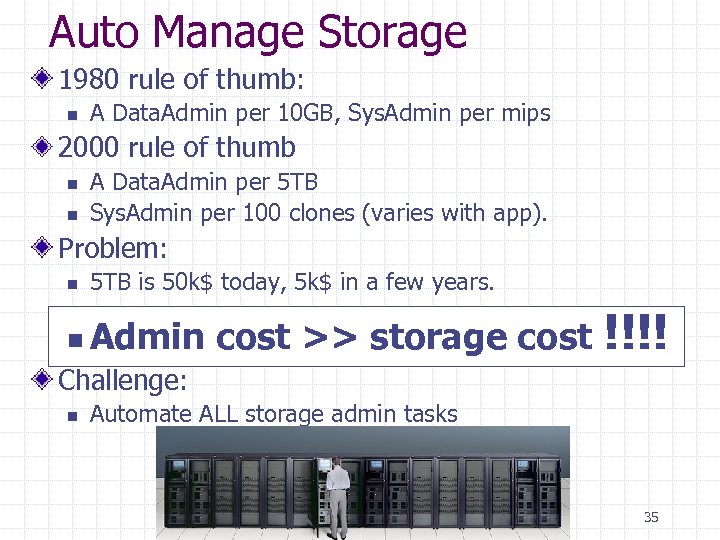

Auto Manage Storage

- 1980 rule of thumb:
  - A DataAdmin per 10 GB, SysAdmin per MIPS
- 2000 rule of thumb:
  - A DataAdmin per 5 TB
  - SysAdmin per 100 clones (varies with app)
- Problem:
  - 5 TB is 50 k$ today, 5 k$ in a few years.
  - Admin cost >> storage cost!
- Challenge:
  - Automate ALL storage admin tasks

Summarizing storage rules of thumb (1)

- Moore’s law: 4x every 3 years, 100x more per decade.
- Implies 2 bits of addressing every 3 years.
- Storage capacities increase 100x/decade.
- Storage costs drop 100x per decade.
- Storage throughput increases 10x/decade.
- Data cools 10x/decade.
- Disk page sizes increase 5x per decade.

Summarizing storage rules of thumb (2)

- RAM:Disk and Disk:Tape cost ratios are 100:1 and 3:1.
- So, in 10 years, disk data can move to RAM, since prices decline 100x per decade.
- A person can administer a million dollars of disk storage: that is 1 TB to 100 TB today.
- Disks are replacing tapes as backup devices.
- You can’t backup/restore a petabyte quickly, so geoplex it.
- Mirroring rather than parity, to save disk arms.

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb

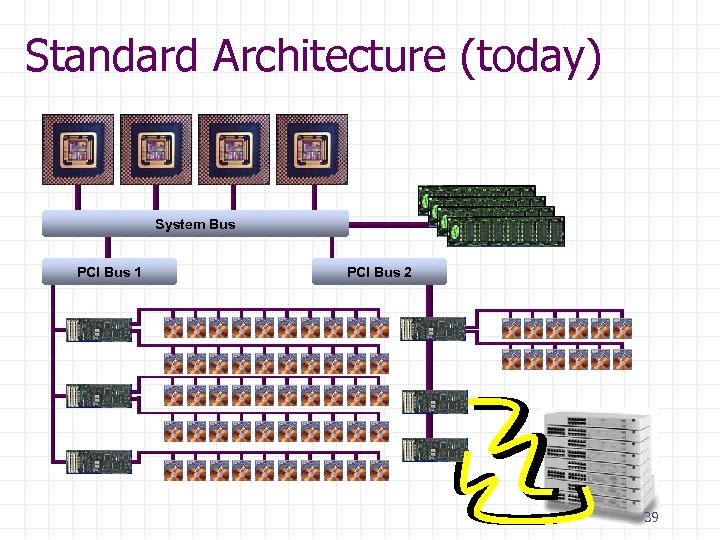

Standard Architecture (today)

- Figure labels: System Bus, PCI Bus 1, PCI Bus 2

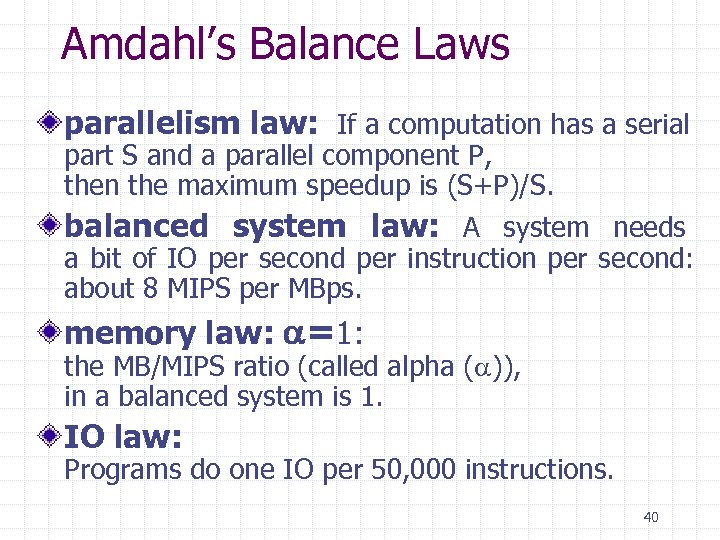

Amdahl’s Balance Laws

- Parallelism law: If a computation has a serial part S and a parallel component P, then the maximum speedup is (S+P)/S.
- Balanced system law: A system needs a bit of IO per second per instruction per second: about 8 MIPS per MBps.
- Memory law: α = 1: the MB/MIPS ratio (called alpha, α) in a balanced system is 1.
- IO law: Programs do one IO per 50,000 instructions.
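The four laws translate directly into arithmetic. The sketch below uses illustrative function names; the constants (1 bit of IO per instruction, alpha = 1, 50,000 instructions per IO) are the ones stated on the slide.

```python
def max_speedup(serial: float, parallel: float) -> float:
    """Parallelism law: best case is (S + P) / S, when P is spread over unlimited processors."""
    return (serial + parallel) / serial


def balanced_io_mbps(mips: float) -> float:
    """Balanced system law: 1 bit of IO per instruction, i.e. 1 MBps per 8 MIPS."""
    return mips / 8.0


def balanced_memory_mb(mips: float, alpha: float = 1.0) -> float:
    """Memory law: alpha megabytes of memory per MIPS."""
    return alpha * mips


def ios_per_second(mips: float, instructions_per_io: float = 50_000) -> float:
    """IO law: one IO per 50,000 instructions."""
    return mips * 1e6 / instructions_per_io


print(max_speedup(1, 9))          # a job that is 10% serial
print(balanced_io_mbps(1000))     # IO bandwidth for a 1,000 MIPS processor
print(balanced_memory_mb(1000))   # memory for the same processor
print(ios_per_second(1000))       # IO rate for the same processor
```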

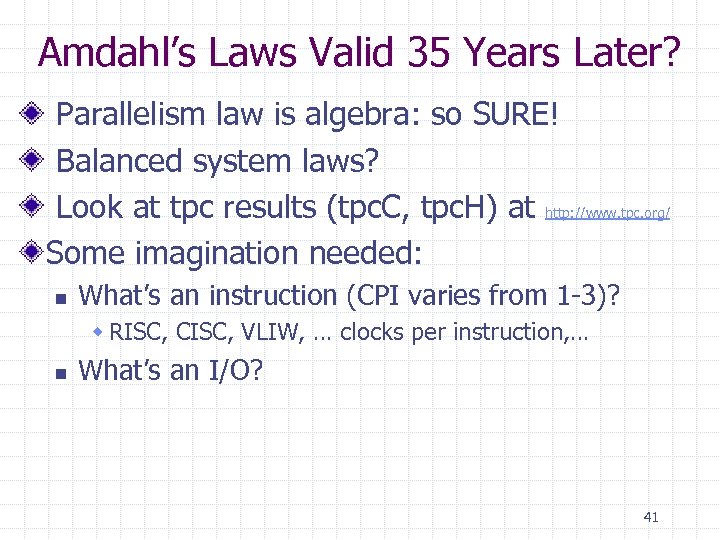

Amdahl’s Laws Valid 35 Years Later?

- Parallelism law is algebra: so SURE!
- Balanced system laws?
- Look at TPC results (tpcC, tpcH) at http://www.tpc.org/
- Some imagination needed:
  - What’s an instruction (CPI varies from 1-3)?
    - RISC, CISC, VLIW, … clocks per instruction, …
  - What’s an I/O?

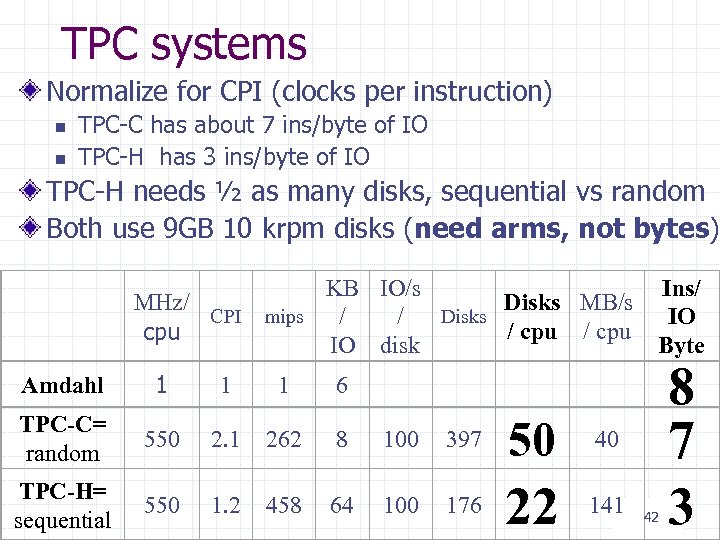

TPC systems

- Normalize for CPI (clocks per instruction):
  - TPC-C has about 7 ins/byte of IO
  - TPC-H has 3 ins/byte of IO
- TPC-H needs ½ as many disks, sequential vs random.
- Both use 9 GB 10 krpm disks (need arms, not bytes).

| | MHz/cpu | CPI | mips | KB/IO | IO/s/disk | Disks | Disks/cpu | MB/s/cpu | Ins/IO Byte |
|---|---|---|---|---|---|---|---|---|---|
| Amdahl | 1 | 1 | 1 | 6 | | | | | 8 |
| TPC-C = random | 550 | 2.1 | 262 | 8 | 100 | 397 | 50 | 40 | 7 |
| TPC-H = sequential | 550 | 1.2 | 458 | 64 | 100 | 176 | 22 | 141 | 3 |
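The derived columns of the table can be recomputed from the measured ones. This sketch assumes that mips = MHz / CPI, that MB/s per cpu = disks per cpu × IO/s per disk × KB per IO, and that instructions per byte = mips / (MB/s). These relationships are inferred from the numbers and not spelled out on the slide.

```python
def mips(mhz: float, cpi: float) -> float:
    """Instruction rate from clock rate and clocks per instruction."""
    return mhz / cpi


def mb_per_s_per_cpu(disks_per_cpu: float, ios_per_disk: float, kb_per_io: float) -> float:
    """IO bandwidth per cpu, with KB converted to MB by dividing by 1000."""
    return disks_per_cpu * ios_per_disk * kb_per_io / 1000.0


def ins_per_byte(mips_value: float, mbps: float) -> float:
    """Instructions executed per byte of IO."""
    return mips_value / mbps


tpcc_mips = mips(550, 2.1)
tpcc_mbps = mb_per_s_per_cpu(50, 100, 8)
tpch_mips = mips(550, 1.2)
tpch_mbps = mb_per_s_per_cpu(22, 100, 64)

print(f"TPC-C: {tpcc_mips:.0f} mips, {tpcc_mbps:.0f} MB/s, {ins_per_byte(tpcc_mips, tpcc_mbps):.1f} ins/byte")
print(f"TPC-H: {tpch_mips:.0f} mips, {tpch_mbps:.0f} MB/s, {ins_per_byte(tpch_mips, tpch_mbps):.1f} ins/byte")
```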

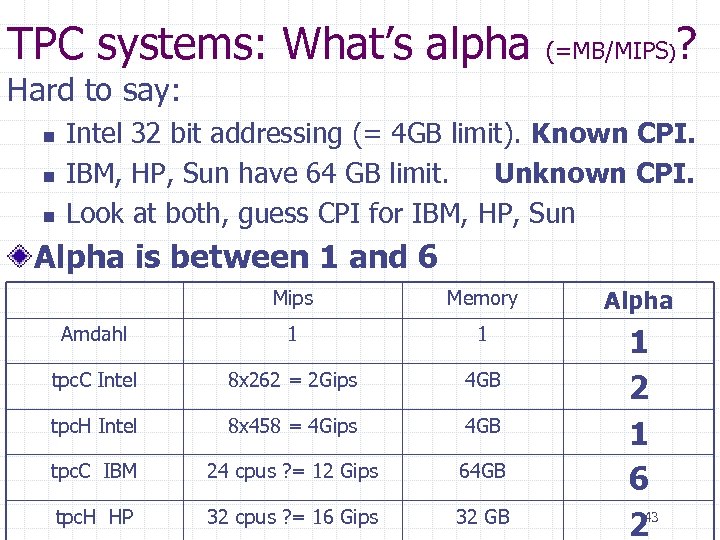

TPC systems: What’s alpha (= MB/MIPS)?

- Hard to say:
  - Intel: 32-bit addressing (= 4 GB limit). Known CPI.
  - IBM, HP, Sun have 64 GB limit. Unknown CPI.
  - Look at both, guess CPI for IBM, HP, Sun.
- Alpha is between 1 and 6.

| | Mips | Memory | Alpha |
|---|---|---|---|
| Amdahl | 1 | 1 | 1 |
| tpcC Intel | 8 x 262 = 2 Gips | 4 GB | 2 |
| tpcH Intel | 8 x 458 = 4 Gips | 4 GB | 1 |
| tpcC IBM 24 cpus | ? = 12 Gips | 64 GB | 6 |
| tpcH HP 32 cpus | ? = 16 Gips | 32 GB | 2 |

Instructions per IO?

- We know 8 MIPS per MBps of IO.
- So, an 8 KB page is 64 K instructions,
- and a 64 KB page is 512 K instructions.
- But sequential has fewer instructions/byte (3 vs 7 in tpcH vs tpcC).
- So, a 64 KB page is 200 K instructions.
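The page-size arithmetic above is a single multiplication: 8 MIPS per MBps means 8 instructions per byte. A sketch, assuming binary kilobytes (1 KB = 1024 bytes) and an illustrative function name:

```python
KB = 1024  # binary kilobyte, an assumption of this sketch


def instructions_per_io(page_bytes: int, ins_per_byte: float) -> float:
    """Instructions a balanced system executes for each IO of `page_bytes`."""
    return page_bytes * ins_per_byte


random_8k = instructions_per_io(8 * KB, 8)        # Amdahl's 8 instructions per byte
naive_64k = instructions_per_io(64 * KB, 8)       # same ratio applied to a 64 KB page
sequential_64k = instructions_per_io(64 * KB, 3)  # the measured sequential ratio

print(random_8k / KB, naive_64k / KB, sequential_64k / KB)  # in K instructions
```

The sequential result is 192 K instructions, which the slide rounds to 200 K.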

Amdahl’s Balance Laws Revised

- Laws right, just need “interpretation” (imagination?).
- Balanced system law: A system needs 8 MIPS/MBps of IO, but the instruction rate must be measured on the workload.
  - Sequential workloads have low CPI (clocks per instruction);
  - random workloads tend to have higher CPI.
- Alpha (the MB/MIPS ratio) is rising from 1 to 6. This trend will likely continue.
- One random IO per 50 k instructions.
- Sequential IOs are larger: one sequential IO per 200 k instructions.

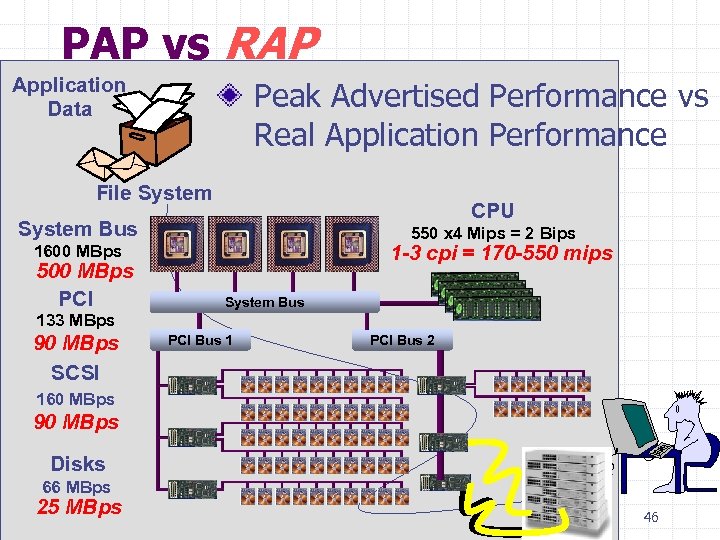

PAP vs RAP

Peak Advertised Performance vs Real Application Performance

| Component | PAP | RAP |
|---|---|---|
| CPU | 550 x 4 Mips = 2 Bips | 1-3 cpi = 170-550 mips |
| System Bus | 1600 MBps | 500 MBps |
| PCI | 133 MBps | 90 MBps |
| SCSI | 160 MBps | 90 MBps |
| Disks | 66 MBps | 25 MBps |

- Other figure labels: Application Data, File System, PCI Bus 1, PCI Bus 2

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb

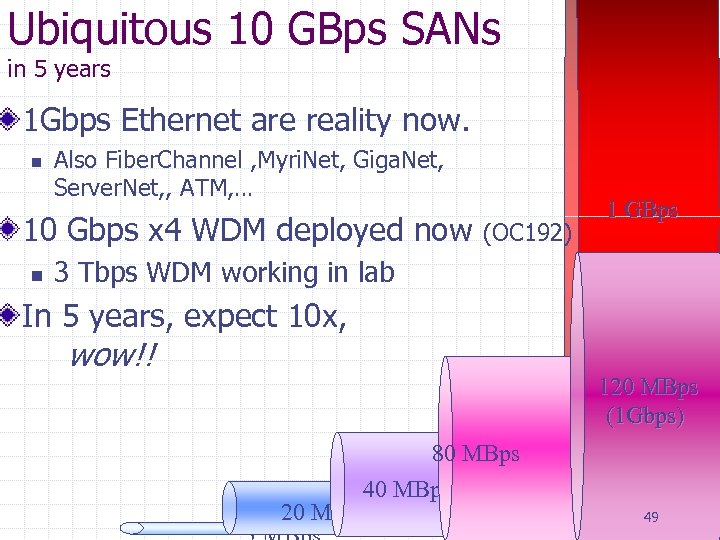

Ubiquitous 10 GBps SANs in 5 years

- 1 Gbps Ethernet is a reality now.
  - Also FiberChannel, MyriNet, GigaNet, ServerNet, ATM, …
- 10 Gbps x4 WDM deployed now (OC192)
  - 3 Tbps WDM working in lab
- In 5 years, expect 10x, wow!!
- Figure labels: 1 GBps, 120 MBps (1 Gbps), 80 MBps, 40 MBps, 20 MBps

Networking

- WANs are getting faster than LANs: G8 = OC192 = 8 Gbps is “standard”.
- Link bandwidth improves 4x per 3 years.
- Speed of light (60 ms round trip in US).
- Software stacks have always been the problem:
  - Time = SenderCPU + ReceiverCPU + bytes/bandwidth
  - The CPU terms have been the problem.
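The timing formula on this slide can be sketched as follows. The CPU overheads in the example (100 µs on each side) are hypothetical values chosen only to illustrate the point; they are not figures from the talk.

```python
def transfer_time_s(nbytes: float, bytes_per_s: float,
                    sender_cpu_s: float, receiver_cpu_s: float) -> float:
    """Time = SenderCPU + ReceiverCPU + bytes/bandwidth."""
    return sender_cpu_s + receiver_cpu_s + nbytes / bytes_per_s


GBPS_LINK = 1e9 / 8            # 1 Gbps expressed in bytes per second
CPU_OVERHEAD_S = 100e-6        # hypothetical per-message software cost on each side

total = transfer_time_s(1000, GBPS_LINK, CPU_OVERHEAD_S, CPU_OVERHEAD_S)
wire = 1000 / GBPS_LINK
cpu_share = (total - wire) / total

print(f"total {total * 1e6:.0f} us, wire {wire * 1e6:.0f} us, software share {cpu_share:.0%}")
```

For small messages the wire term is a few microseconds, so a faster link barely changes the total unless the software overhead shrinks as well.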

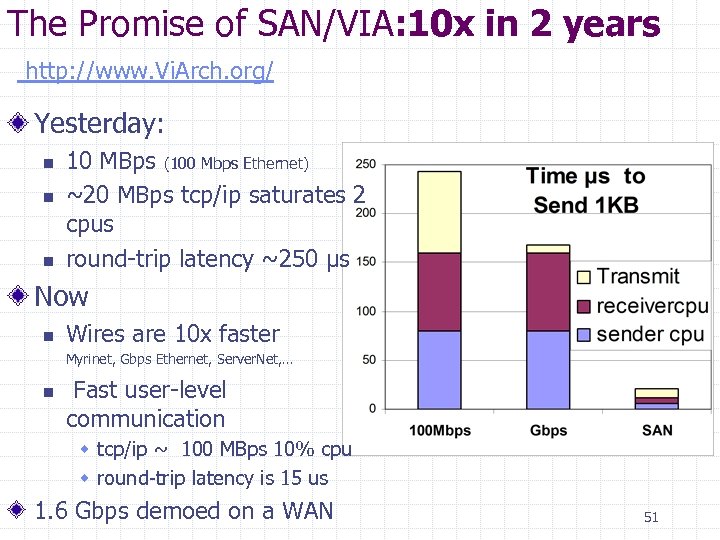

The Promise of SAN/VIA: 10x in 2 years (http://www.ViArch.org/)

- Yesterday:
  - 10 MBps (100 Mbps Ethernet)
  - ~20 MBps tcp/ip saturates 2 cpus
  - round-trip latency ~250 µs
- Now:
  - Wires are 10x faster: Myrinet, Gbps Ethernet, ServerNet, …
  - Fast user-level communication
    - tcp/ip ~100 MBps at 10% cpu
    - round-trip latency is 15 µs
- 1.6 Gbps demoed on a WAN

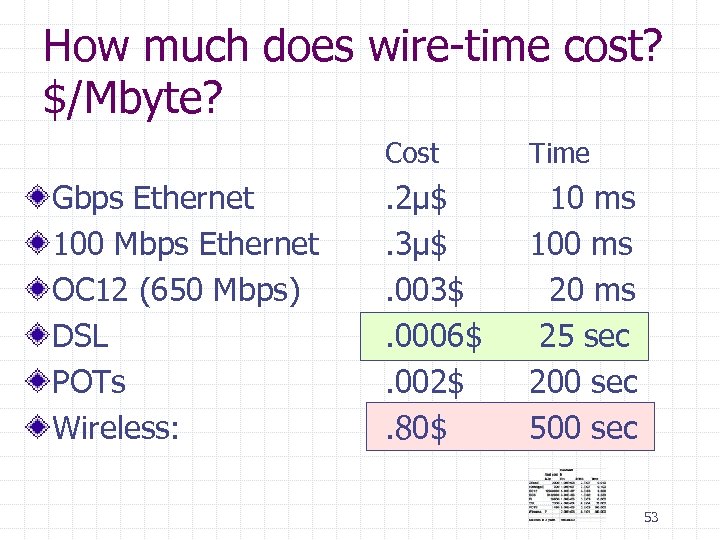

How much does wire-time cost? $/Mbyte?

| Link | Cost | Time |
|---|---|---|
| Gbps Ethernet | .2 µ$ | 10 ms |
| 100 Mbps Ethernet | .3 µ$ | 100 ms |
| OC12 (650 Mbps) | .003 $ | |
| DSL | .0006 $ | 25 sec |
| POTs | .002 $ | 200 sec |
| Wireless | .80 $ | |

Data delivery costs 1$/GB today

- Rent for “big” customers: 300$/megabit per second per month.
- Improved 3x in last 6 years (!).
- That translates to 1$/GB you send.
- You can mail a 160 GB disk for 20$.
  - 3 x 160 GB ~ ½ TB
  - That’s 16x cheaper.
  - If overnight, it’s 3 MBps.
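The step from "300$ per megabit per second per month" to "1$/GB" can be reproduced by counting how many gigabytes a fully used 1 Mbps link carries in a month. A sketch, assuming a 30-day month and decimal gigabytes:

```python
SECONDS_PER_MONTH = 30 * 24 * 3600   # assumes a 30-day month


def dollars_per_gb(rent_per_mbps_month: float) -> float:
    """Cost per gigabyte sent over a link rented by the Mbps-month and kept fully busy."""
    bytes_per_month = (1e6 / 8) * SECONDS_PER_MONTH   # 1 Mbps sustained for a month
    gb_per_month = bytes_per_month / 1e9
    return rent_per_mbps_month / gb_per_month


cost = dollars_per_gb(300.0)
print(f"{cost:.2f} $/GB")
```

The figure assumes 100% link utilization, so the real cost per delivered gigabyte would be higher on a lightly used link.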

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb

The Five Minute Rule

- Trade DRAM for disk accesses.
- Cost of an access: Drive_Cost / Access_per_second
- Cost of a DRAM page: $/MB / pages_per_MB
- Break even has two terms: a technology term and an economic term.
- Grew page size to compensate for changing ratios.
- Now at 5 minutes for random, 10 seconds sequential.

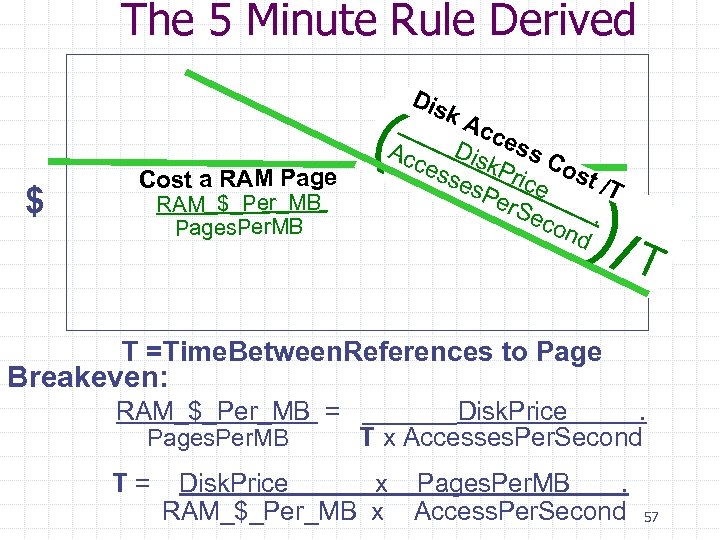

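The 5 Minute Rule Derived

- T = TimeBetweenReferences to a page
- Cost of a RAM page:

$$\frac{\text{RAM\_\$\_Per\_MB}}{\text{PagesPerMB}}$$

- Disk access cost, spread over the time T between references:

$$\frac{\text{DiskAccessCost}}{T} = \frac{\text{DiskPrice}}{\text{AccessesPerSecond} \times T}$$

- Breakeven:

$$\frac{\text{RAM\_\$\_Per\_MB}}{\text{PagesPerMB}} = \frac{\text{DiskPrice}}{T \times \text{AccessesPerSecond}}$$

$$T = \frac{\text{DiskPrice} \times \text{PagesPerMB}}{\text{RAM\_\$\_Per\_MB} \times \text{AccessesPerSecond}}$$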

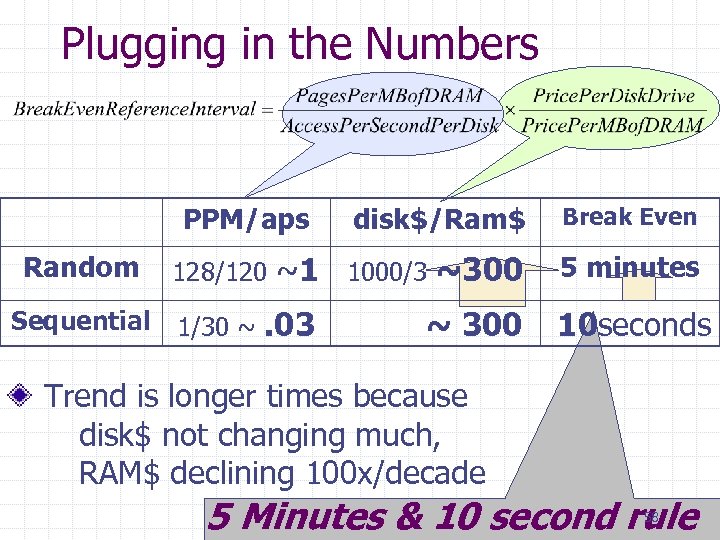

Plugging in the Numbers

| | PPM/aps | disk$/RAM$ | Break even |
|---|---|---|---|
| Random | 128/120 ~ 1 | 1000/3 ~ 300 | 5 minutes |
| Sequential | 1/30 ~ .03 | ~ 300 | 10 seconds |

- Trend is longer times, because disk$ is not changing much while RAM$ is declining 100x/decade.
- 5 minute & 10 second rule
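The break-even interval is the product of the technology term (pages per MB over accesses per second) and the economic term (disk price over RAM price per MB). The sketch below plugs in the slide's numbers. Reading "1000/3" as a 1,000$ disk and 3$ per MB of RAM is an interpretation, and the function name is illustrative.

```python
def break_even_seconds(pages_per_mb: float, accesses_per_second: float,
                       disk_price: float, ram_price_per_mb: float) -> float:
    """Keep a page in RAM if it is re-referenced more often than this interval."""
    technology_term = pages_per_mb / accesses_per_second
    economic_term = disk_price / ram_price_per_mb
    return technology_term * economic_term


# Random IO: 8 KB pages (128 per MB) at 120 accesses per second.
random_t = break_even_seconds(128, 120, 1000, 3)
# Sequential IO: 1 MB transfers (1 per MB) at 30 per second.
sequential_t = break_even_seconds(1, 30, 1000, 3)

print(f"random: {random_t / 60:.1f} minutes, sequential: {sequential_t:.0f} seconds")
```

Without rounding the intermediate terms, the results come out near six minutes and eleven seconds, which the slide summarizes as the 5 minute and 10 second rules.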

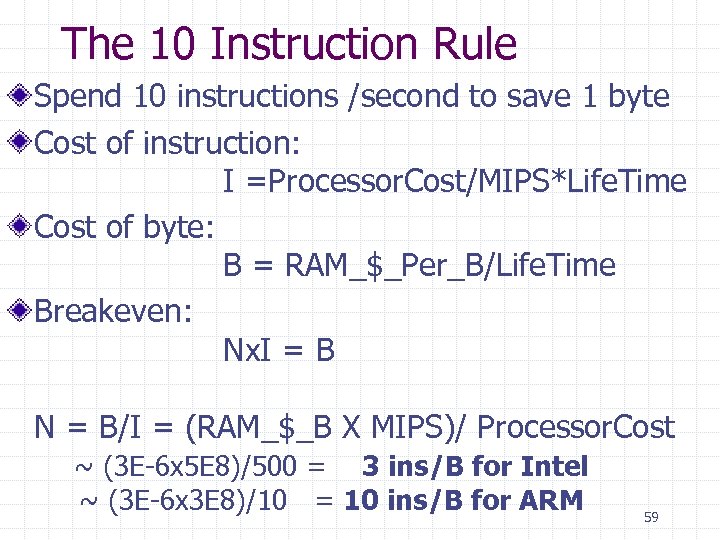

The 10 Instruction Rule

- Spend 10 instructions/second to save 1 byte.
- Cost of instruction: I = ProcessorCost / (MIPS × LifeTime)
- Cost of byte: B = RAM_$_Per_B / LifeTime
- Breakeven: N × I = B, so N = B/I = (RAM_$_Per_B × MIPS) / ProcessorCost
  - ~ (3E-6 × 5E8)/500 = 3 ins/B for Intel
  - ~ (3E-6 × 3E7)/10 = 10 ins/B for ARM
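The break-even formula N = (RAM cost per byte × instruction rate) / processor cost can be evaluated directly. The sketch below uses the Intel figures from the slide (3E-6 $ per byte of RAM, 5E8 instructions per second, a 500$ processor); the function name is illustrative.

```python
def breakeven_instructions_per_byte(ram_dollars_per_byte: float,
                                    instructions_per_second: float,
                                    processor_cost: float) -> float:
    """Instructions per second worth spending to save one byte of RAM (lifetime cancels out)."""
    return ram_dollars_per_byte * instructions_per_second / processor_cost


intel = breakeven_instructions_per_byte(3e-6, 5e8, 500)
print(f"Intel: about {intel:.0f} instructions/second per byte")
```

The equipment lifetime appears in both the instruction cost and the byte cost, so it drops out of the ratio.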

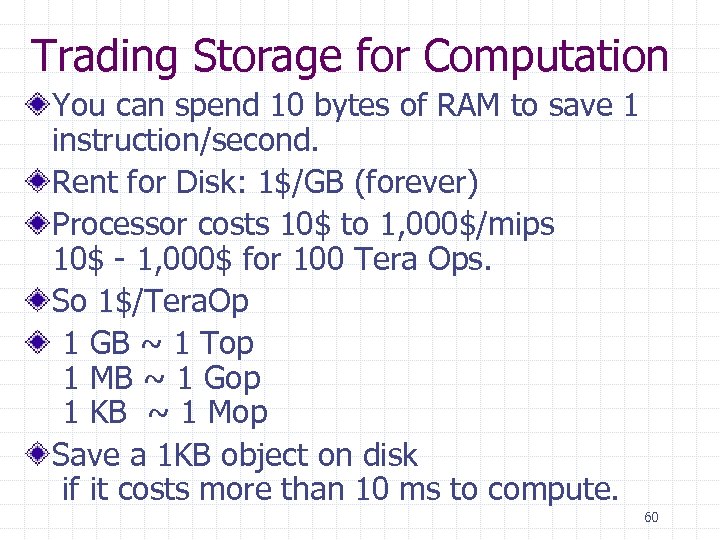

Trading Storage for Computation

- You can spend 10 bytes of RAM to save 1 instruction/second.
- Rent for disk: 1$/GB (forever).
- Processor costs 10$ to 1,000$/mips: 10$-1,000$ for 100 Tera Ops.
- So 1$/TeraOp.
- 1 GB ~ 1 Top, 1 MB ~ 1 Gop, 1 KB ~ 1 Mop.
- Save a 1 KB object on disk if it costs more than 10 ms to compute.

When to Cache Web Pages

- Caching saves user time.
- Caching saves wire time.
- Caching costs storage.
- Caching only works sometimes:
  - New pages are a miss.
  - Stale pages are a miss.

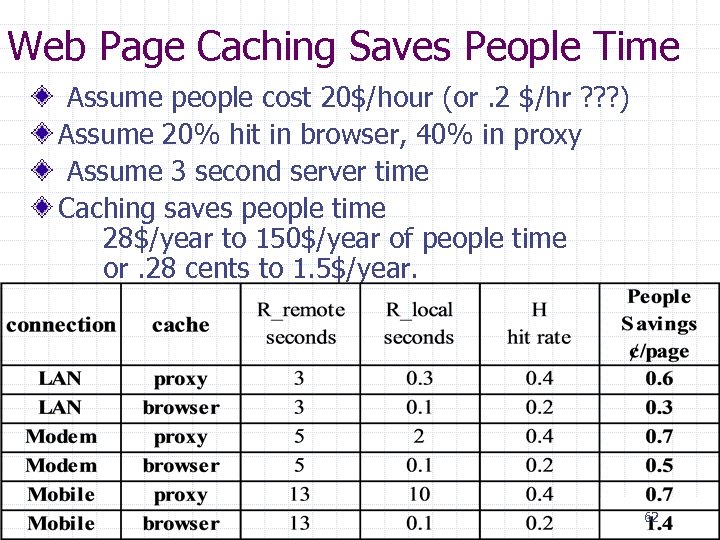

Web Page Caching Saves People Time

- Assume people cost 20$/hour (or .2$/hr ???).
- Assume 20% hit in browser, 40% in proxy.
- Assume 3 second server time.
- Caching saves 28$/year to 150$/year of people time, or 28 cents to 1.5$/year.

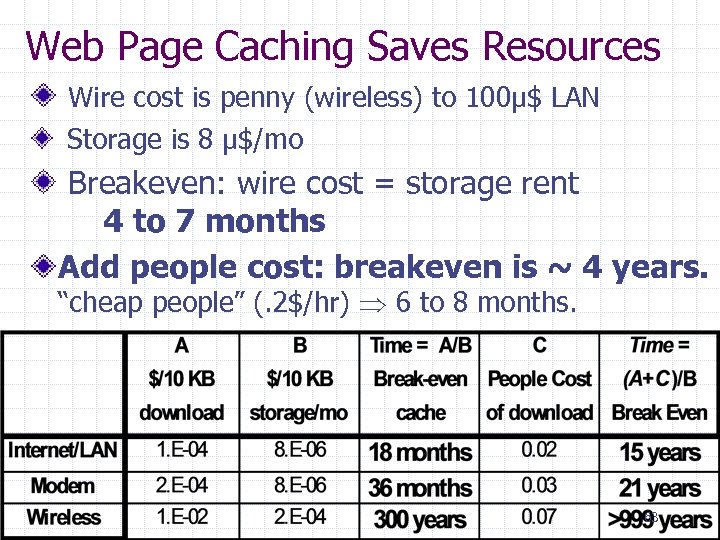

Web Page Caching Saves Resources

- Wire cost is a penny (wireless) to 100 µ$ (LAN).
- Storage is 8 µ$/mo.
- Breakeven, wire cost = storage rent: 4 to 7 months.
- Add people cost: breakeven is ~4 years.
- “Cheap people” (.2$/hr): 6 to 8 months.

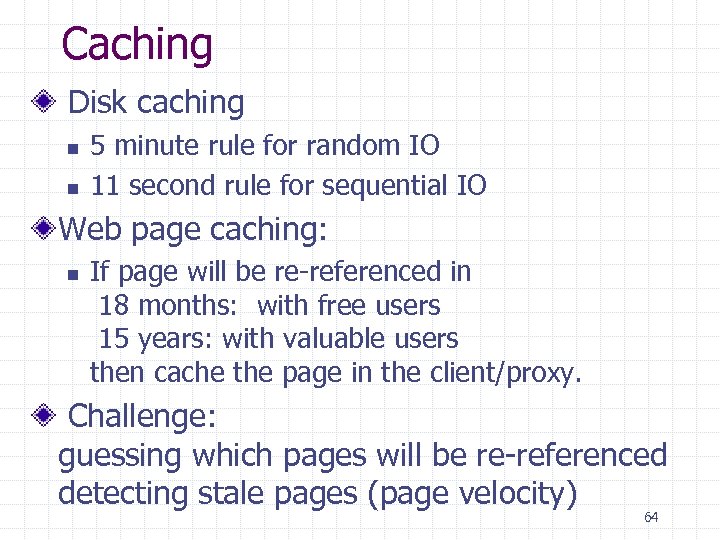

Caching

- Disk caching:
  - 5 minute rule for random IO
  - 11 second rule for sequential IO
- Web page caching:
  - If the page will be re-referenced within 18 months (with free users) or 15 years (with valuable users), then cache the page in the client/proxy.
- Challenge:
  - guessing which pages will be re-referenced
  - detecting stale pages (page velocity)

Meta-Message: Technology Ratios Matter

- Price and performance change.
- If everything changes in the same way, then nothing really changes.
- If some things get much cheaper/faster than others, then that is real change.
- Some things are not changing much:
  - Cost of people
  - Speed of light
  - …
- And some things are changing a LOT.

Outline

- Moore’s Law and consequences
- Storage rules of thumb
- Balanced systems rules revisited
- Networking rules of thumb
- Caching rules of thumb
