bafbef196a8554c243cc4889b61a31a1.ppt

- Number of slides: 34

Router-assisted congestion control Lecture 8 CS 653, Fall 2010

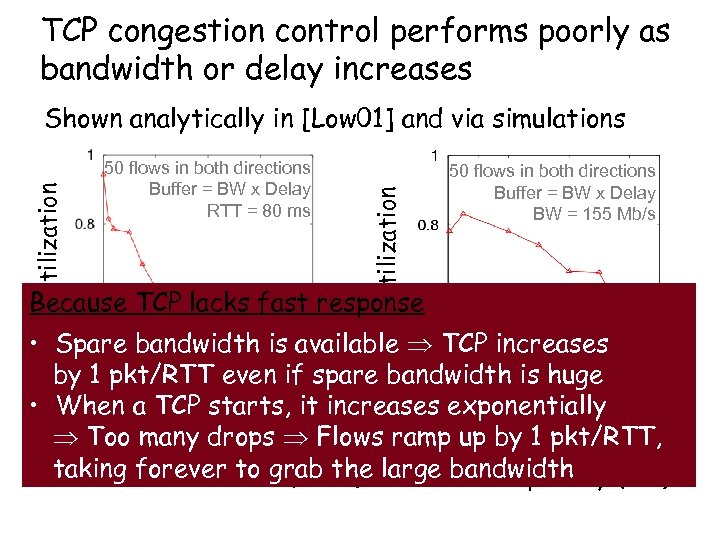

TCP congestion control performs poorly as bandwidth or delay increases
[Figure: Avg. TCP Utilization vs. Bottleneck Bandwidth (Mb/s), with RTT = 80 ms, and vs. Round Trip Delay (sec), with BW = 155 Mb/s. Both: 50 flows in both directions, Buffer = BW x Delay.]
Shown analytically in [Low 01] and via simulations.
Because TCP lacks fast response:
• When spare bandwidth is available, TCP increases by only 1 pkt/RTT, even if the spare bandwidth is huge. Flows ramp up by 1 pkt/RTT and take forever to grab the large bandwidth.
• When a TCP flow starts, it increases exponentially, which causes too many drops.
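
Some rough arithmetic shows why "1 pkt/RTT" is too slow on the 155 Mb/s, 80 ms link from the figure. The following is a minimal sketch under assumptions that are not on the slide: 1500-byte packets, a single flow that starts at half the bandwidth-delay product (as after a loss halves cwnd), and exactly +1 packet per RTT until the pipe is full.

```python
"""Back-of-the-envelope TCP ramp-up time under additive increase.

Assumptions (not from the slides): 1500-byte packets, one flow starting at
half the bandwidth-delay product, and exactly +1 packet per RTT.
"""


def additive_ramp_up(bw_bps: float, rtt_s: float,
                     pkt_bytes: int = 1500, start_fraction: float = 0.5):
    """Return (pipe size in packets, RTTs needed, seconds needed)."""
    pipe_pkts = bw_bps * rtt_s / (pkt_bytes * 8)   # bandwidth-delay product
    rtts = pipe_pkts * (1.0 - start_fraction)      # +1 pkt/RTT closes the gap
    return pipe_pkts, rtts, rtts * rtt_s


if __name__ == "__main__":
    pipe, rtts, secs = additive_ramp_up(155e6, 0.080)
    print(f"pipe = {pipe:.0f} pkts, ramp-up = {rtts:.0f} RTTs = {secs:.1f} s")
```

Under these assumptions the pipe holds about 1033 packets, so refilling the missing half takes roughly 517 RTTs, or about 41 seconds.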

Proposed Solution: Decouple Congestion Control from Fairness
• Congestion control: high utilization; small queues; few drops
• Fairness: bandwidth allocation policy

Proposed Solution: Decouple Congestion Control from Fairness
The two are coupled because a single mechanism controls both. Example: in TCP, Additive-Increase Multiplicative-Decrease (AIMD) controls both.
How does decoupling solve the problem?
1. To control congestion: use MIMD, which shows fast response.
2. To control fairness: use AIMD, which converges to fairness.
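
A toy simulation can show why AIMD is assigned to fairness and MIMD to congestion control. Under additive increase, the gap between two flows stays the same, and every multiplicative decrease halves it, so AIMD converges toward equal shares. Under MIMD, both rates are always scaled by the same factor, so their ratio never changes. This is a sketch in the style of the classic Chiu-Jain analysis. The synchronous binary feedback, the capacity of 100 units and the constants are all assumptions, not values from the lecture.

```python
"""Two flows sharing one link: AIMD vs. MIMD (toy synchronous model).

Assumptions (not from the lecture): both flows see the same congestion
signal every step, capacity is 100 units, and the constants below.
"""

CAPACITY = 100.0  # hypothetical link capacity (units per step)


def simulate(x, y, increase, decrease=0.5, steps=200):
    """Run the control loop and return the final (x, y) rates.

    `increase` maps a rate to its new value while the link is underloaded;
    on congestion (x + y >= CAPACITY) both rates are multiplied by `decrease`.
    """
    for _ in range(steps):
        if x + y < CAPACITY:
            x, y = increase(x), increase(y)
        else:
            x, y = x * decrease, y * decrease
    return x, y


def additive(rate):
    return rate + 1.0      # AIMD increase: +1 unit per step


def multiplicative(rate):
    return rate * 1.1      # MIMD increase: +10% per step


if __name__ == "__main__":
    x0, y0 = 10.0, 50.0
    ax, ay = simulate(x0, y0, additive)
    mx, my = simulate(x0, y0, multiplicative)
    print(f"AIMD: gap {y0 - x0:.2f} -> {ay - ax:.2f}")    # gap shrinks
    print(f"MIMD: ratio {y0 / x0:.2f} -> {my / mx:.2f}")  # ratio unchanged
```

MIMD reaches and releases capacity quickly but never corrects an unfair split. AIMD corrects the split but adds only one unit per step. Decoupling lets each mechanism do the job it is good at.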

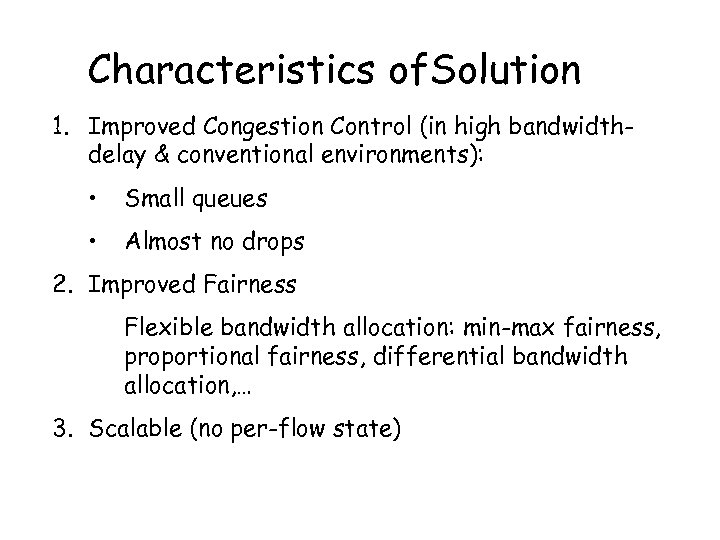

Characteristics of Solution
1. Improved congestion control (in high bandwidth-delay and conventional environments):
   • Small queues
   • Almost no drops
2. Improved fairness: flexible bandwidth allocation (max-min fairness, proportional fairness, differential bandwidth allocation, …)
3. Scalable (no per-flow state)

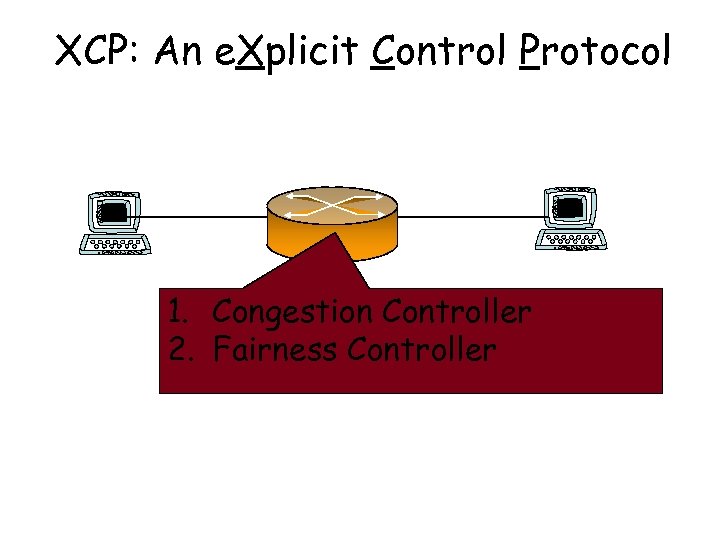

XCP: An eXplicit Control Protocol
1. Congestion Controller
2. Fairness Controller

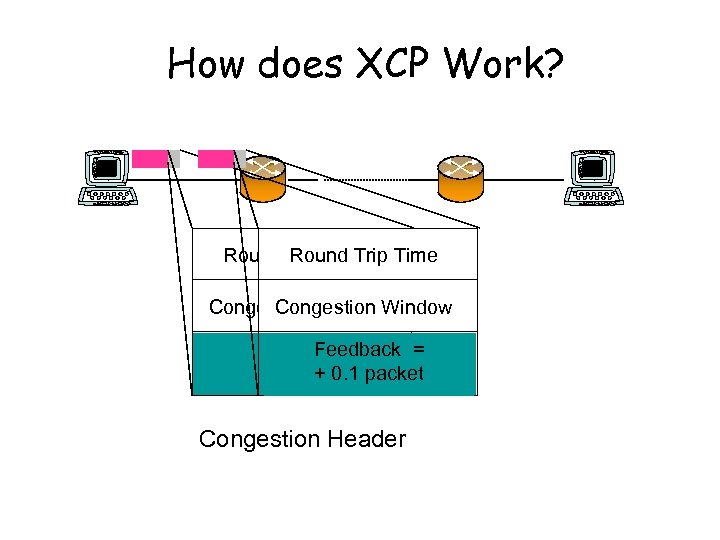

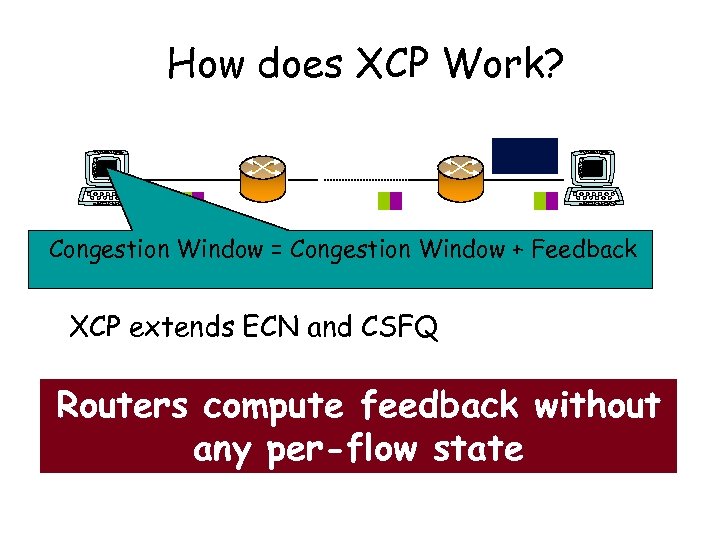

How does XCP Work?
Each packet carries a Congestion Header with three fields: Round Trip Time, Congestion Window, and Feedback. In the slide's example, the Feedback field is set to +0.1 packet.

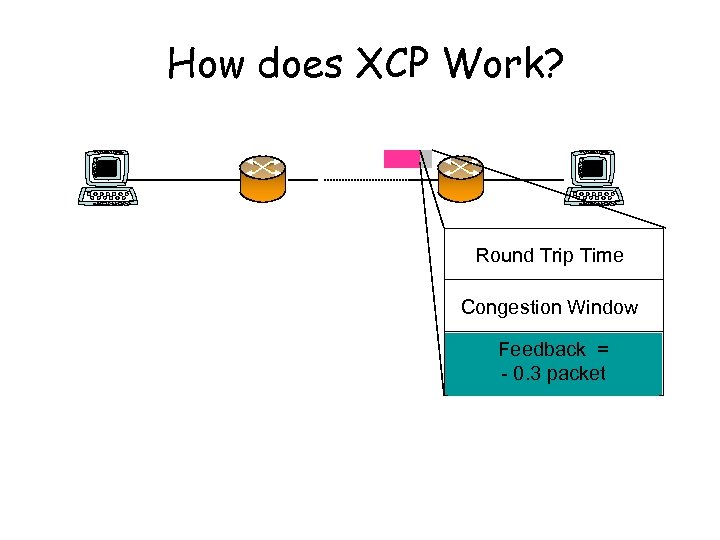

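How does XCP Work?
Routers along the path can change the Feedback field in the Congestion Header (Round Trip Time, Congestion Window, Feedback). In the slide's example, it goes from +0.3 packet to -0.1 packet.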

How does XCP Work?
Congestion Window = Congestion Window + Feedback
• XCP extends ECN and CSFQ
• Routers compute feedback without any per-flow state
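
The following traces the header round trip. The field names come from the slides. Three points are assumptions in this sketch: each router only ever lowers the Feedback field, so the sender ends up with the bottleneck's value; the receiver echoes the field back in the ACK; and the window is floored at one packet. The per-router feedback values in the usage line are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CongestionHeader:
    """Fields named on the slides; the units are assumptions."""
    rtt: float        # sender's RTT estimate (seconds)
    cwnd: float       # sender's congestion window (packets)
    feedback: float   # requested window change (packets)


def forward(header: CongestionHeader, router_feedback: list[float]) -> CongestionHeader:
    """Assumed rule: a router may only lower the feedback, so the bottleneck wins."""
    for fb in router_feedback:
        header.feedback = min(header.feedback, fb)
    return header


def on_ack(cwnd: float, feedback: float, min_cwnd: float = 1.0) -> float:
    """Congestion Window = Congestion Window + Feedback (floored at one packet)."""
    return max(cwnd + feedback, min_cwnd)


if __name__ == "__main__":
    hdr = CongestionHeader(rtt=0.08, cwnd=10.0, feedback=0.3)
    forward(hdr, [0.5, -0.1, 0.2])   # hypothetical per-router feedback values
    print(hdr.feedback, on_ack(hdr.cwnd, hdr.feedback))
```

The only state here lives in the packet header. The routers in this sketch keep nothing per flow.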

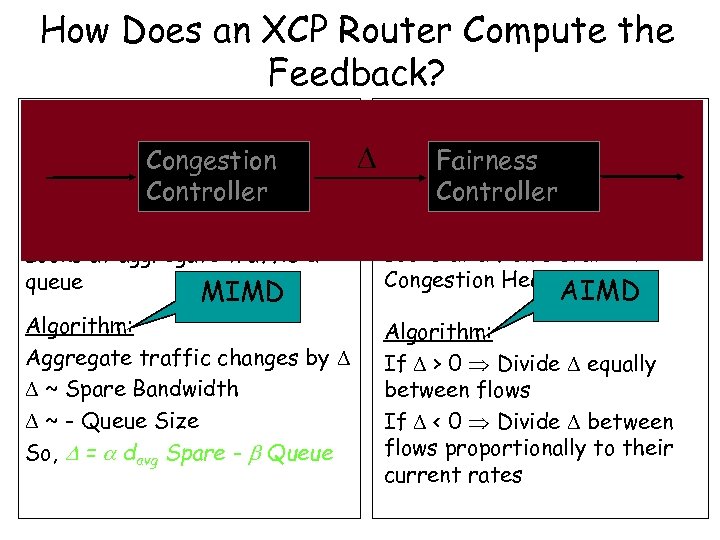

How Does an XCP Router Compute the Feedback?
Congestion Controller
• Goal: match input traffic to link capacity and drain the queue
• Looks at aggregate traffic and queue
• Algorithm: aggregate traffic changes by $\Delta$, where $\Delta \propto$ Spare Bandwidth and $\Delta \propto -$Queue Size. So
  $\Delta = \alpha \, d_{avg} \, \text{Spare} - \beta \, \text{Queue}$
• MIMD
Fairness Controller
• Goal: divide $\Delta$ between flows to converge to fairness
• Looks at a flow's state in the Congestion Header
• Algorithm: if $\Delta > 0$, divide it equally between flows; if $\Delta < 0$, divide it between flows proportionally to their current rates
• AIMD
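
Both controllers fit in a few lines. The gains α = 0.4 and β = 0.226 are the values reported in the XCP paper and are treated as assumptions here. Units are also assumed: rates in bytes/s, queue in bytes, so Δ is in bytes per control interval. The fairness split is idealized because it takes per-flow rates as input. A real XCP router avoids that per-flow state by working from the header fields of each packet.

```python
ALPHA = 0.4     # gain reported in the XCP paper; an assumption here
BETA = 0.226    # gain reported in the XCP paper; an assumption here


def aggregate_feedback(capacity: float, input_rate: float, queue: float,
                       d_avg: float, alpha: float = ALPHA, beta: float = BETA) -> float:
    """Delta = alpha * d_avg * Spare - beta * Queue.

    capacity, input_rate: bytes/s; queue: bytes; d_avg: average RTT in seconds.
    """
    spare = capacity - input_rate
    return alpha * d_avg * spare - beta * queue


def split_feedback(delta: float, rates: list[float]) -> list[float]:
    """Idealized fairness controller (assumes per-flow rates are visible).

    Delta >= 0: equal shares (additive increase).
    Delta < 0: shares proportional to current rate (multiplicative decrease).
    """
    if not rates:
        return []
    if delta >= 0:
        return [delta / len(rates)] * len(rates)
    total = sum(rates)
    return [delta * r / total for r in rates]
```

For example, `split_feedback(10.0, [1.0, 3.0])` gives both flows +5.0, while `split_feedback(-8.0, [1.0, 3.0])` gives -2.0 and -6.0. The slow flow gains as much as the fast one and loses less, which is what pushes the allocation toward fairness.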

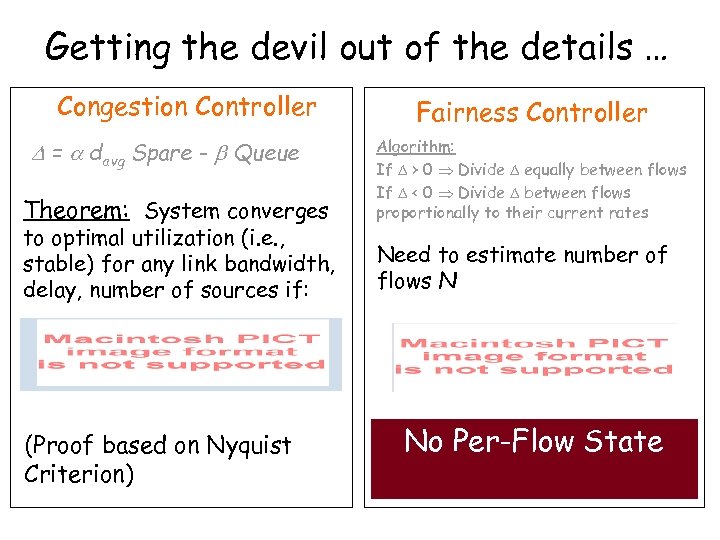

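Getting the devil out of the details …
Congestion Controller
• $\Delta = \alpha \, d_{avg} \, \text{Spare} - \beta \, \text{Queue}$
• Theorem: the system converges to optimal utilization (i.e., is stable) for any link bandwidth, delay, and number of sources if
  $0 < \alpha < \frac{\pi}{4\sqrt{2}}$ and $\beta = \alpha^2 \sqrt{2}$
  (proof based on the Nyquist criterion)
Fairness Controller
• Algorithm: if $\Delta > 0$, divide it equally between flows; if $\Delta < 0$, divide it between flows proportionally to their current rates
• Need to estimate the number of flows $N$ with no per-flow state:
  $N = \sum_{\text{pkts in } T} \frac{RTT_{pkt}}{T \cdot Cwnd_{pkt}}$
  where $RTT_{pkt}$ is the Round Trip Time in the header, $Cwnd_{pkt}$ is the Congestion Window in the header, and $T$ is the counting interval.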
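
The flow-count estimate needs no per-flow state because each flow contributes about 1 to the sum. A flow sends roughly $T \cdot Cwnd/RTT$ packets in the interval, and each of those packets adds $RTT/(T \cdot Cwnd)$. The sketch below checks that arithmetic on hypothetical traffic and also tests a pair of gains against the stability condition. The traffic mix and the 1% tolerance on β are assumptions.

```python
import math


def estimate_num_flows(packets, interval_s):
    """Estimate N from header fields alone (no per-flow state).

    packets: (rtt_pkt, cwnd_pkt) pairs, one per packet seen in interval_s.
    """
    return sum(rtt / (interval_s * cwnd) for rtt, cwnd in packets)


def stable_gains(alpha, beta, rel_tol=0.01):
    """Check 0 < alpha < pi/(4*sqrt(2)) and beta ~= alpha**2 * sqrt(2)."""
    return (0 < alpha < math.pi / (4 * math.sqrt(2))
            and math.isclose(beta, alpha ** 2 * math.sqrt(2), rel_tol=rel_tol))


if __name__ == "__main__":
    # Hypothetical traffic over T = 1 s: flow A (RTT 0.1 s, cwnd 10) sends
    # 100 packets; flow B (RTT 0.2 s, cwnd 4) sends 20 packets.
    pkts = [(0.1, 10.0)] * 100 + [(0.2, 4.0)] * 20
    print(round(estimate_num_flows(pkts, 1.0), 6))
    print(stable_gains(0.4, 0.226), stable_gains(0.6, 0.509))
```

The router keeps only one running sum per interval, updated once per packet. That is consistent with the "few multiplications & additions per packet" claim.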

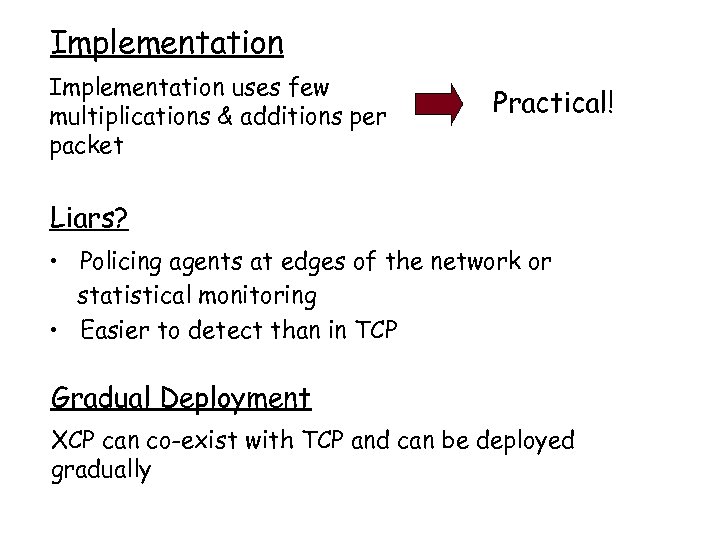

Implementation uses a few multiplications and additions per packet: practical!
Liars?
• Policing agents at the edges of the network, or statistical monitoring
• Easier to detect than in TCP
Gradual Deployment
• XCP can co-exist with TCP and can be deployed gradually

Performance

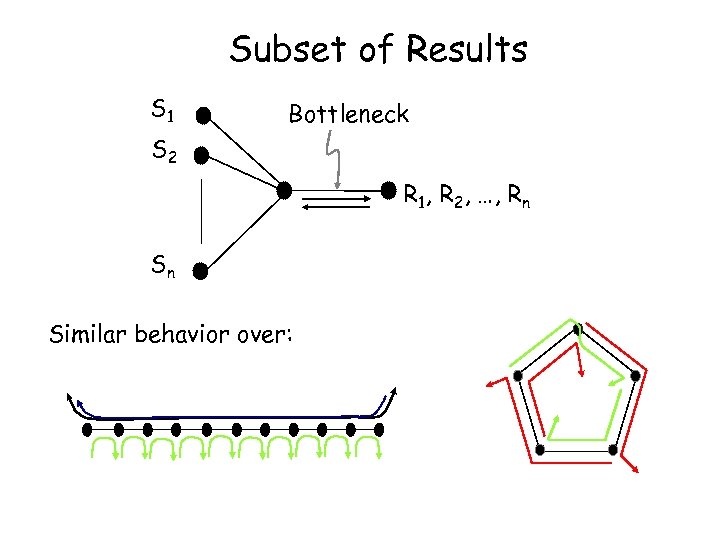

Subset of Results
[Figure: dumbbell topology; senders S1, S2, …, Sn share one bottleneck link to receivers R1, R2, …, Rn]
Similar behavior over:

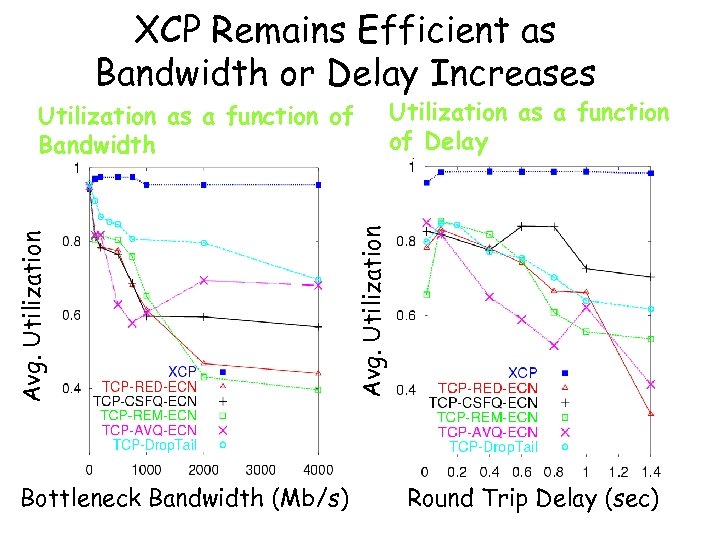

XCP Remains Efficient as Bandwidth or Delay Increases
[Figure: Avg. Utilization as a function of Bandwidth (x-axis: Bottleneck Bandwidth (Mb/s)); Utilization as a function of Delay (x-axis: Round Trip Delay (sec))]

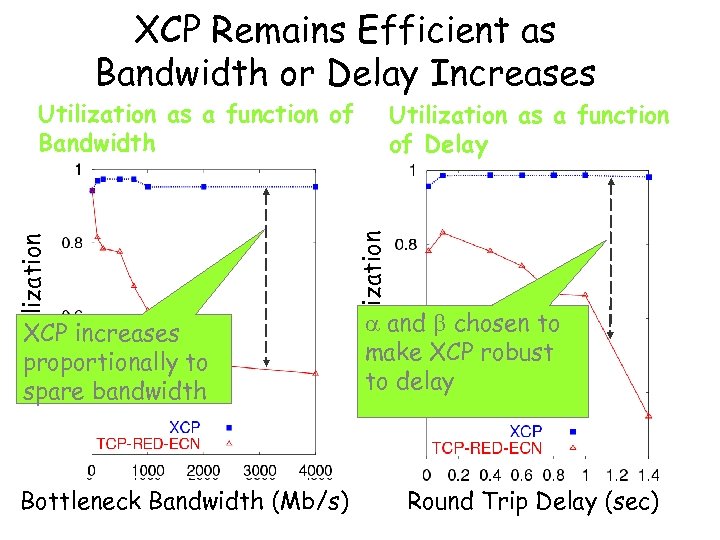

XCP Remains Efficient as Bandwidth or Delay Increases
[Figure: Avg. Utilization as a function of Bandwidth (x-axis: Bottleneck Bandwidth (Mb/s)); Utilization as a function of Delay (x-axis: Round Trip Delay (sec))]
• XCP increases proportionally to spare bandwidth
• α and β are chosen to make XCP robust to delay

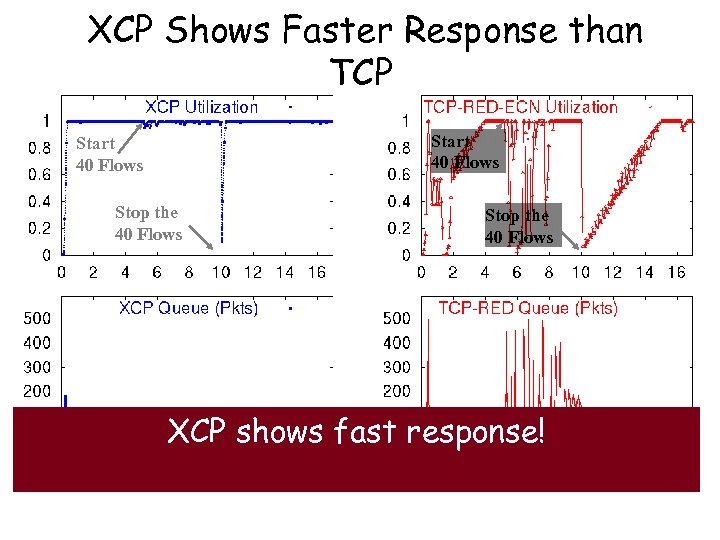

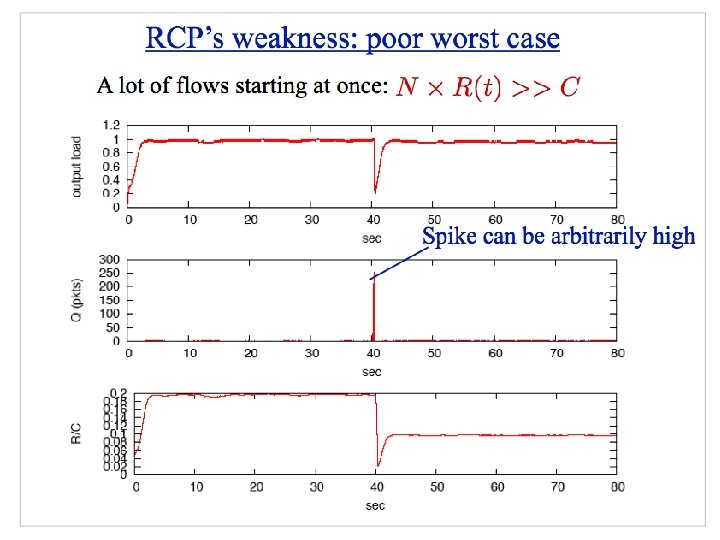

XCP Shows Faster Response than TCP
[Figure: response when 40 flows start and when the 40 flows stop]
XCP shows fast response!

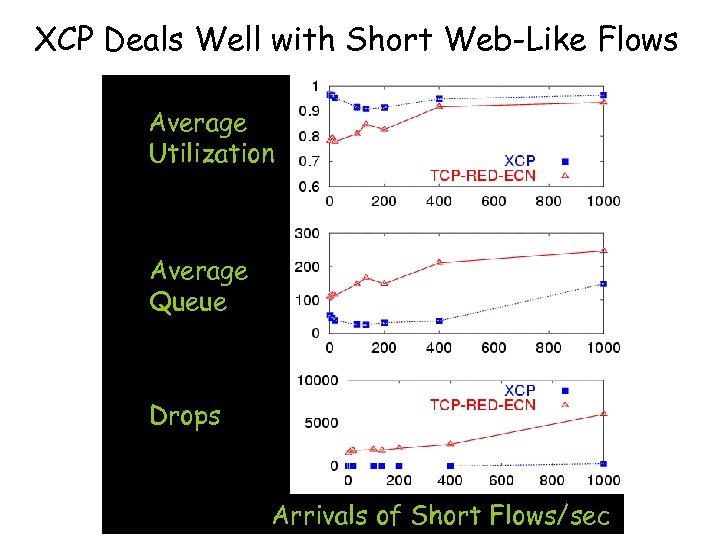

XCP Deals Well with Short Web-Like Flows
[Figure panels: Average Utilization, Average Queue, and Drops, each vs. Arrivals of Short Flows/sec]

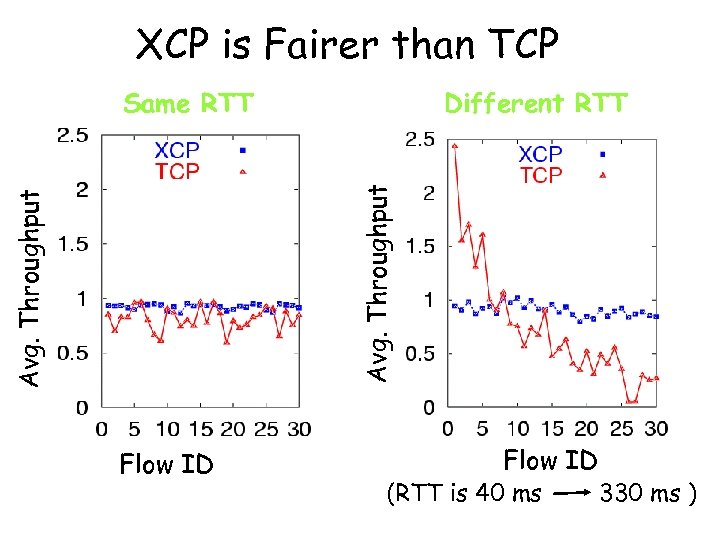

XCP is Fairer than TCP
[Figure panels: Same RTT; Different RTT (RTTs from 40 ms to 330 ms). y-axis: Avg. Throughput; x-axis: Flow ID]

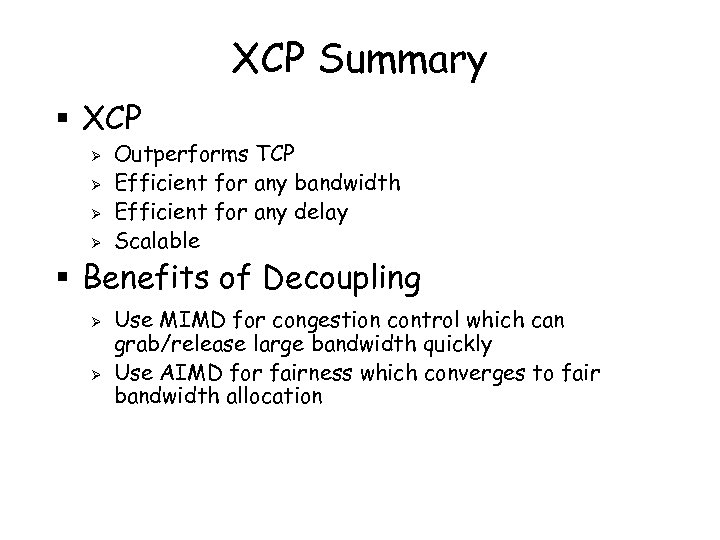

XCP Summary
• XCP
  - Outperforms TCP
  - Efficient for any bandwidth
  - Efficient for any delay
  - Scalable
• Benefits of Decoupling
  - Use MIMD for congestion control, which can grab/release large bandwidth quickly
  - Use AIMD for fairness, which converges to fair bandwidth allocation

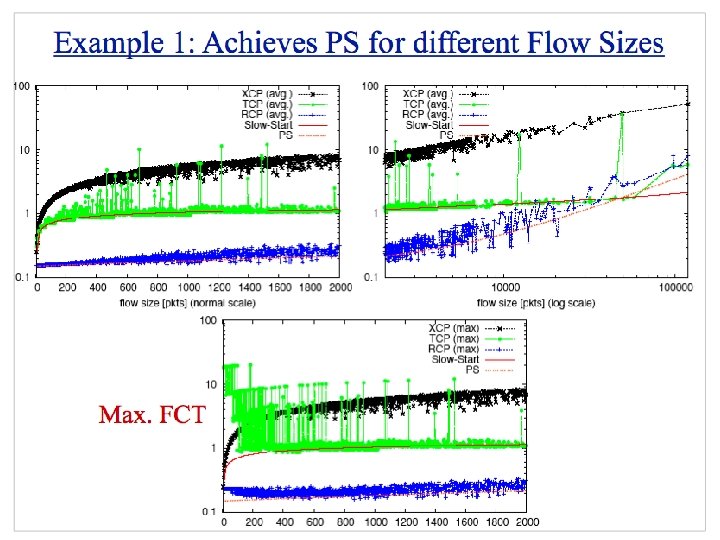

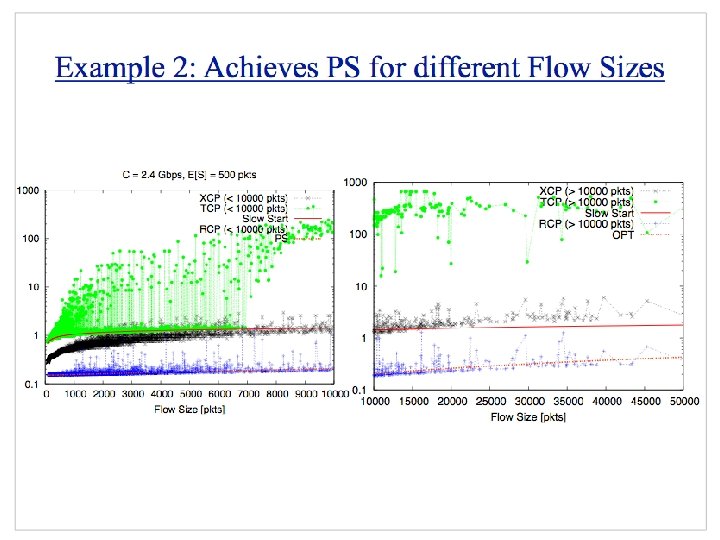

XCP Pros and Cons
• Long-lived flows: works well
  - Convergence to fair-share rates, high link utilization, small queue, low loss
• Mix of flow lengths: deviates from processor sharing
  - Non-trivial convergence time
  - Flow durations longer

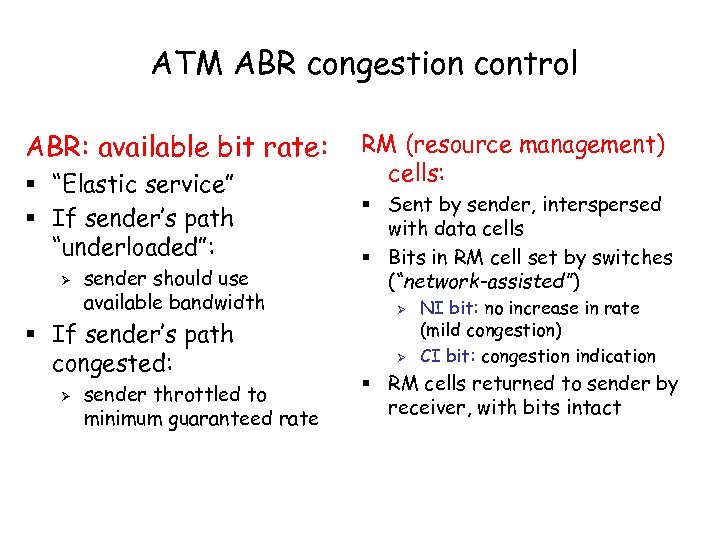

ATM ABR congestion control
ABR: available bit rate:
• “Elastic service”
• If sender’s path is “underloaded”: sender should use available bandwidth
• If sender’s path is congested: sender is throttled to minimum guaranteed rate
RM (resource management) cells:
• Sent by sender, interspersed with data cells
• Bits in RM cell set by switches (“network-assisted”)
  - NI bit: no increase in rate (mild congestion)
  - CI bit: congestion indication
• RM cells returned to sender by receiver, with bits intact
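
Here is one way a sender might react to the NI and CI bits of a returned RM cell. The sketch borrows the ATM Forum parameter names ACR (allowed cell rate), PCR (peak cell rate), MCR (minimum cell rate), RIF (rate increase factor) and RDF (rate decrease factor), but the logic is simplified. The default factors are hypothetical and not taken from the standard.

```python
from dataclasses import dataclass


@dataclass
class RMCell:
    ni: bool = False   # NI: no increase in rate (mild congestion)
    ci: bool = False   # CI: congestion indication


def update_rate(acr: float, cell: RMCell, pcr: float, mcr: float,
                rif: float = 1 / 16, rdf: float = 1 / 16) -> float:
    """Return the sender's new allowed rate after a returned RM cell.

    acr: current allowed rate; pcr: peak rate; mcr: minimum guaranteed rate.
    rif, rdf: hypothetical increase/decrease factors.
    """
    if cell.ci:
        acr -= acr * rdf        # congestion: multiplicative decrease
    elif not cell.ni:
        acr += rif * pcr        # path underloaded: additive increase
    # NI set and CI clear: hold the current rate
    return min(max(acr, mcr), pcr)   # stay within [minimum guaranteed, peak]
```

The clamp to `mcr` reflects the slide's point that a congested sender is throttled to its minimum guaranteed rate, never below it.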

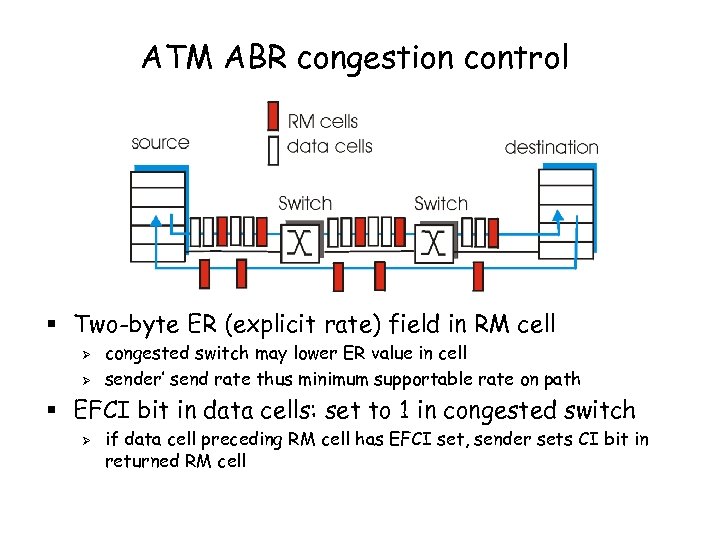

ATM ABR congestion control
• Two-byte ER (explicit rate) field in RM cell
  - A congested switch may lower the ER value in the cell
  - The sender’s send rate is thus the minimum of the rates supportable along the path (the bottleneck rate)
• EFCI bit in data cells: set to 1 by a congested switch
  - If the data cell preceding an RM cell has EFCI set, the receiver sets the CI bit in the returned RM cell
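
The sketch below follows one data cell and the RM cell behind it along a path of switches. It shows how ER ends up at the bottleneck rate and how EFCI turns into CI at the receiver. The `Switch` type, its supportable rate and its congestion flag are hypothetical stand-ins for whatever a real switch measures. The ER field is modeled as a plain float rather than its on-the-wire encoding.

```python
from dataclasses import dataclass


@dataclass
class Switch:
    supportable_rate: float   # hypothetical: rate this switch can offer the VC
    congested: bool           # hypothetical: e.g. queue above a threshold


def round_trip(switches: list[Switch], requested_er: float) -> tuple[float, bool]:
    """Send a data cell then an RM cell along the path; return (ER, CI)."""
    efci = False          # EFCI bit of the data cell preceding the RM cell
    er = requested_er     # ER field, modeled as a float
    for sw in switches:
        if sw.congested:
            efci = True                      # congested switch sets EFCI
        er = min(er, sw.supportable_rate)    # a switch may only lower ER
    ci = efci             # receiver sets CI in the returned RM cell
    return er, ci
```

With switches offering 100, 40 and 70 and the middle one congested, a request of 150 comes back as ER = 40 with CI set.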

ATM ERICA Switch Algorithm
ERICA (Explicit Rate Indication for Congestion Avoidance) goals:
• Utilization: allocate all available capacity to ABR flows
• Queueing delay: keep queue small
• Fairness: max-min, “sought only after utilization achieved” (decoupled from utilization?)
• Stability, i.e., reaches steady state, and robustness, i.e., graceful degradation when there is no steady state

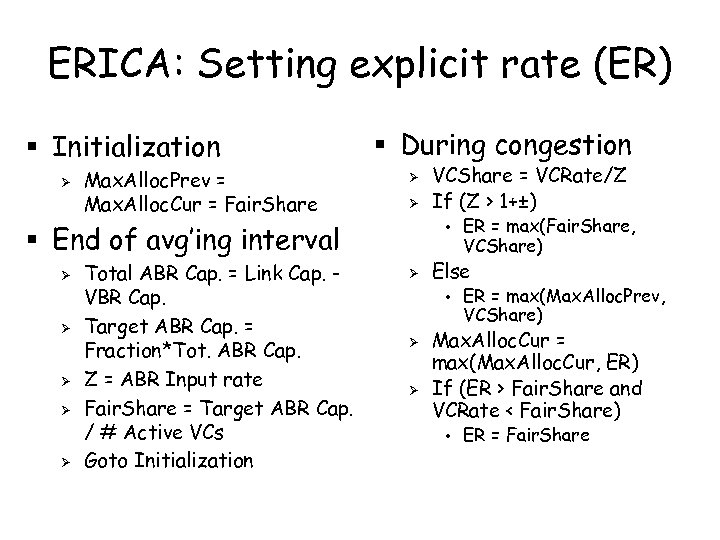

ERICA: Setting explicit rate (ER)
• Initialization
  - MaxAllocPrev = MaxAllocCur = FairShare
• End of averaging interval
  - Total ABR Cap. = Link Cap. - VBR Cap.
  - Target ABR Cap. = Fraction * Total ABR Cap.
  - Z = ABR Input Rate / Target ABR Cap.
  - FairShare = Target ABR Cap. / # Active VCs
  - Goto Initialization
• During congestion
  - VCShare = VCRate / Z
  - If (Z > 1 + δ): ER = max(FairShare, VCShare)
  - Else: ER = max(MaxAllocPrev, VCShare)
  - MaxAllocCur = max(MaxAllocCur, ER)
  - If (ER > FairShare and VCRate < FairShare): ER = FairShare
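
The ER computation above translates almost line for line into code. This sketch follows the slide literally, including "Goto Initialization" at the end of each averaging interval. The defaults Fraction = 0.9 and δ = 0.1 are hypothetical. The floor on Z is an added guard against an idle interval and is not part of the slide.

```python
class EricaPort:
    """ERICA explicit-rate computation for one output port, following the slide."""

    def __init__(self, link_cap: float, fraction: float = 0.9, delta: float = 0.1):
        self.link_cap = link_cap      # link capacity (any rate unit)
        self.fraction = fraction      # hypothetical target utilization
        self.delta = delta            # hypothetical overload tolerance
        self.z = 1.0
        self.fair_share = 0.0
        self.max_alloc_prev = self.max_alloc_cur = 0.0

    def end_of_interval(self, vbr_cap: float, abr_input_rate: float,
                        active_vcs: int) -> None:
        total_abr_cap = self.link_cap - vbr_cap
        target_abr_cap = self.fraction * total_abr_cap
        # Overload factor Z; floored so an idle interval cannot cause 0-division.
        self.z = max(abr_input_rate / target_abr_cap, 1e-9)
        self.fair_share = target_abr_cap / max(active_vcs, 1)
        # "Goto Initialization"
        self.max_alloc_prev = self.max_alloc_cur = self.fair_share

    def compute_er(self, vc_rate: float) -> float:
        vc_share = vc_rate / self.z
        if self.z > 1 + self.delta:           # overloaded
            er = max(self.fair_share, vc_share)
        else:                                  # at or below target (within delta)
            er = max(self.max_alloc_prev, vc_share)
        self.max_alloc_cur = max(self.max_alloc_cur, er)
        if er > self.fair_share and vc_rate < self.fair_share:
            er = self.fair_share               # don't push a slow VC past FairShare
        return er
```

For example, with link capacity 250, VBR load 50, Fraction 0.5, ABR input 200 and 4 active VCs, Z = 2 and FairShare = 25. A VC sending at 100 is then told ER = 50, and one sending at 20 is told ER = 25.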

ABR vs. XCP or RCP?
• Similarities?
• Differences?
