e5f4c7fdb1d6fadc7a461fd205c0e958.ppt

- Number of slides: 47

Robust Web Extraction; Domain-Centric Extraction. Philip Bohannon, 5.19.2010. Automatic Knowledge Base Construction Workshop, Grenoble, France, May 17-20, 2010.

Yahoo! Presentation, Confidential 2 3/16/2018


Robust Web Extraction: A Principled Approach. Philip Bohannon, 5.19.2010. Joint work with Nilesh Dalvi and Fei Sha. Published at SIGMOD 2009.

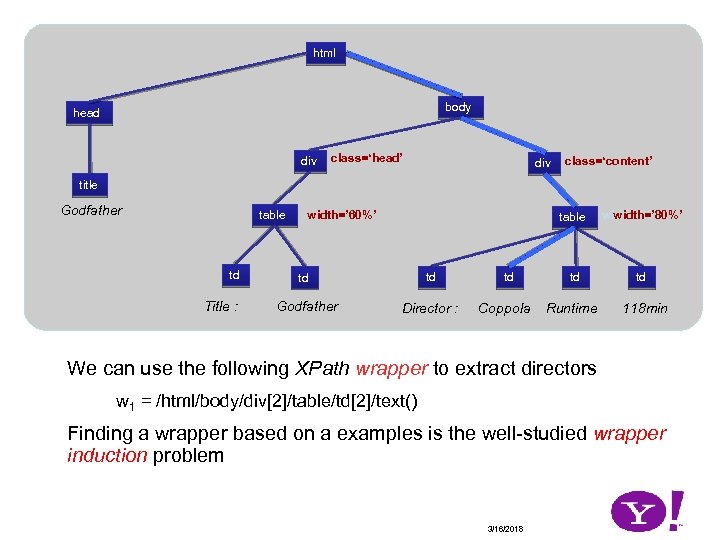

[Figure: DOM tree of a movie page. Recoverable labels: html, head, title ("Godfather"), body, div class='head', div class='content', tables with width='60%' and width='80%', and td cells "Title :", "Godfather", "Director :", "Coppola", "Runtime", "118 min".]

We can use the following XPath wrapper to extract directors: w1 = /html/body/div[2]/table/td[2]/text()

Finding a wrapper based on examples is the well-studied wrapper induction problem.
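
As a rough illustration of what applying a wrapper like w1 means, here is a minimal sketch using Python's standard `xml.etree.ElementTree`. The page markup is a simplified, hypothetical stand-in for the slide's DOM tree (the exact nesting on the slide is not recoverable), and since ElementTree supports only a subset of XPath, the path is written relative to the root element and `.text` replaces `text()`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified page modeled loosely on the slide's DOM tree.
PAGE = """<html>
  <head><title>Godfather</title></head>
  <body>
    <div class="head">
      <table width="60%"><td>Title :</td><td>Godfather</td></table>
    </div>
    <div class="content">
      <table width="80%"><td>Director :</td><td>Coppola</td></table>
    </div>
  </body>
</html>"""


def w1(root):
    """ElementTree counterpart of /html/body/div[2]/table/td[2]/text().

    `root` is the <html> element, so the path starts at its children.
    """
    return [td.text for td in root.findall("body/div[2]/table/td[2]")]


directors = w1(ET.fromstring(PAGE))
```

The wrapper depends on the position of the second div under body, which is exactly the kind of structural assumption that later slides argue is fragile.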

![Background: Wrapper Induction](https://present5.com/presentation/e5f4c7fdb1d6fadc7a461fd205c0e958/image-6.jpg)

Background: Wrapper Induction

[Diagram: labeled data feeds a wrapper generator, which outputs a wrapper (e.g. /html/div[2]/, /html//table) that is applied to test data.]

- Goal: based on a few labeled examples, maximize precision and recall on test data
- Challenge: test data is structurally similar to the labeled data, but not the same

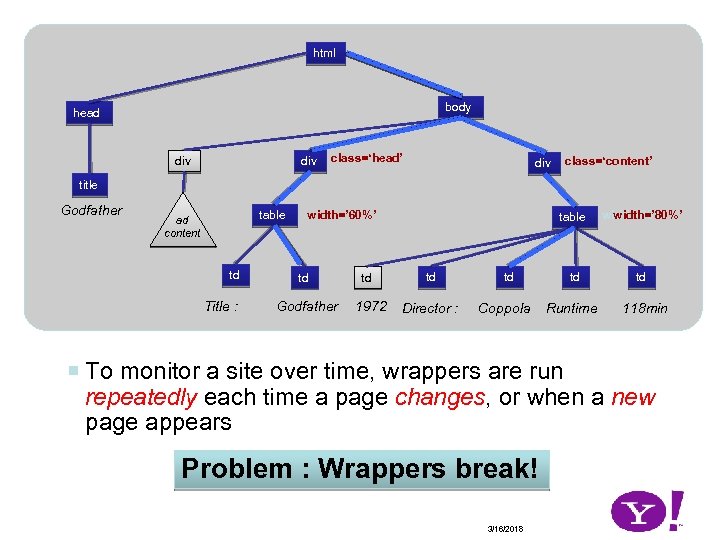

[Figure: the movie page's DOM tree after a page change. New relative to the earlier tree: an "ad content" node and a td cell "1972".]

To monitor a site over time, wrappers are run repeatedly: each time a page changes, or when a new page appears.

Problem: wrappers break!

![Background: Wrapper Repair](https://present5.com/presentation/e5f4c7fdb1d6fadc7a461fd205c0e958/image-8.jpg)

Background: Wrapper Repair

[Diagram: old labeled data, the old wrapper (/html/div[2]/, /html//table) and new test data (which overlaps the old) feed wrapper repair, which outputs a new wrapper (/html/div[3]/, /html//table).]

Goal: based on old labeled data, the old wrapper and old results, produce a new wrapper [Lerman 2003] [Meng 2003], etc.

Problems with repair:
1. Some breakage is hard to detect, especially for values that change frequently (e.g. price)
2. Wrapper repair is offline, and thus breakage impacts production until repair is effected

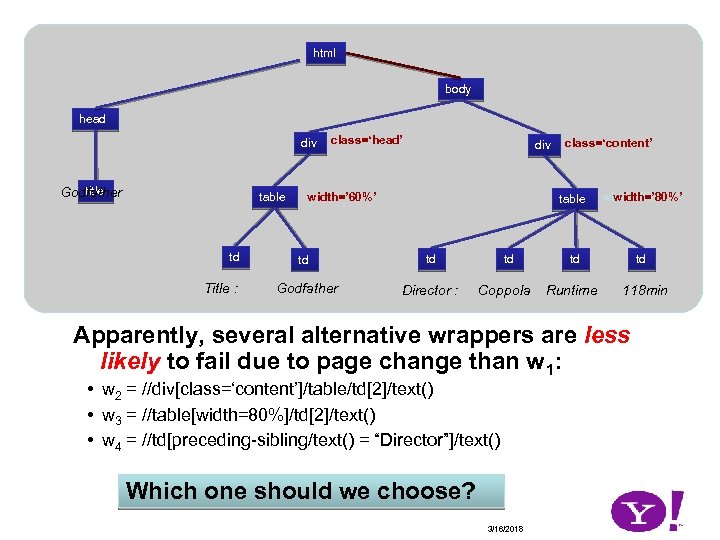

[Figure: DOM tree of the movie page (div class='head', div class='content', tables with width='60%' and width='80%', td cells for Title, Director and Runtime).]

Apparently, several alternative wrappers are less likely than w1 to fail due to page change:
- w2 = //div[class='content']/table/td[2]/text()
- w3 = //table[width=80%]/td[2]/text()
- w4 = //td[preceding-sibling/text() = "Director"]/text()

Which one should we choose?
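
To make the contrast between the wrappers concrete, the sketch below runs ElementTree counterparts of all four on a hypothetical page before and after an ad div is inserted at the top of body. Everything here is an illustrative assumption: the markup is a simplified stand-in for the slide's tree, attribute tests are written with `@`, and because ElementTree has no preceding-sibling axis, w4 is emulated in plain Python by checking the immediately preceding sibling with a prefix match on "Director".

```python
import xml.etree.ElementTree as ET


def make_page(with_ad):
    """Build a simplified, hypothetical version of the movie page."""
    ad = '<div class="ad">ad content</div>' if with_ad else ""
    return ET.fromstring(
        "<html><head><title>Godfather</title></head><body>"
        + ad
        + '<div class="head"><table width="60%">'
        "<td>Title :</td><td>Godfather</td></table></div>"
        '<div class="content"><table width="80%">'
        "<td>Director :</td><td>Coppola</td>"
        "<td>Runtime</td><td>118 min</td></table></div>"
        "</body></html>"
    )


def texts(elements):
    return [e.text for e in elements]


def w1(root):  # positional path from the root
    return texts(root.findall("body/div[2]/table/td[2]"))


def w2(root):  # anchored on the div's class attribute
    return texts(root.findall(".//div[@class='content']/table/td[2]"))


def w3(root):  # anchored on the table's width attribute
    return texts(root.findall(".//table[@width='80%']/td[2]"))


def w4(root):  # anchored on the label in the previous cell (emulated)
    found = []
    for parent in root.iter():
        kids = list(parent)
        for prev, cur in zip(kids, kids[1:]):
            label = (prev.text or "").strip()
            if cur.tag == "td" and label.startswith("Director"):
                found.append(cur.text)
    return found


before, after = make_page(False), make_page(True)
results = {w.__name__: (w(before), w(after)) for w in (w1, w2, w3, w4)}
```

On this toy page all four wrappers agree before the change, while after the change only w1 shifts to a different cell, because the inserted div changes which element is the second div under body.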

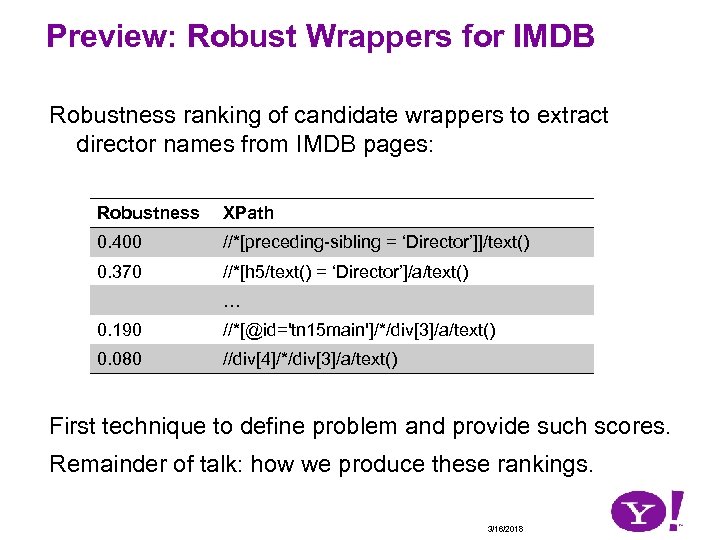

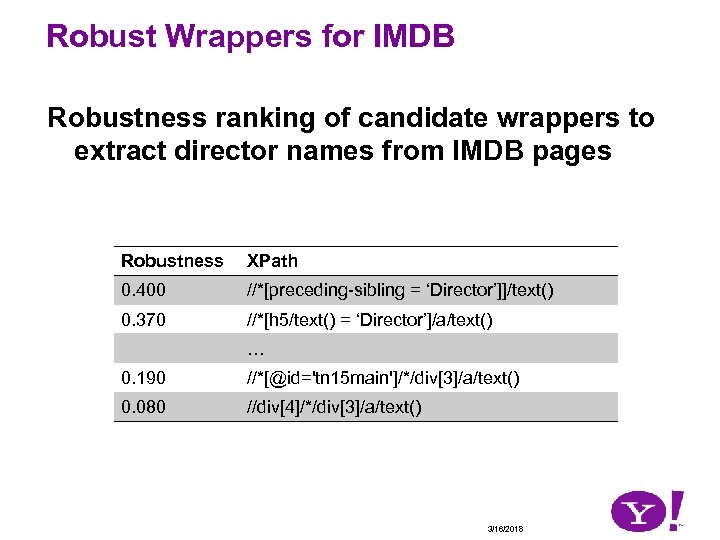

Preview: Robust Wrappers for IMDB

Robustness ranking of candidate wrappers to extract director names from IMDB pages (robustness: XPath):
- 0.400: //*[preceding-sibling = 'Director']/text()
- 0.370: //*[h5/text() = 'Director']/a/text()
- …
- 0.190: //*[@id='tn15main']/*/div[3]/a/text()
- 0.080: //div[4]/*/div[3]/a/text()

First technique to define the problem and provide such scores. Remainder of talk: how we produce these rankings.

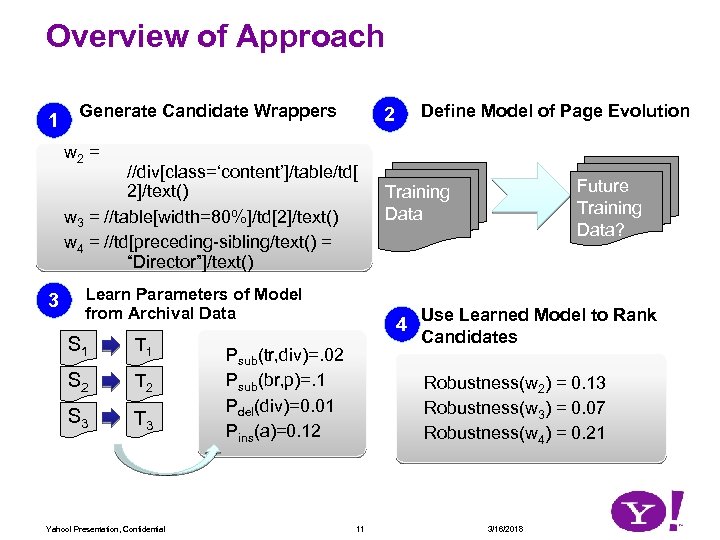

Overview of Approach

1. Generate candidate wrappers:
   - w2 = //div[class='content']/table/td[2]/text()
   - w3 = //table[width=80%]/td[2]/text()
   - w4 = //td[preceding-sibling/text() = "Director"]/text()
2. Define a model of page evolution (training data; future training data?)
3. Learn parameters of the model from archival data, i.e. snapshot pairs (S1, T1), (S2, T2), (S3, T3): Psub(tr, div) = 0.02, Psub(br, p) = 0.1, Pdel(div) = 0.01, Pins(a) = 0.12
4. Use the learned model to rank candidates: Robustness(w2) = 0.13, Robustness(w3) = 0.07, Robustness(w4) = 0.21

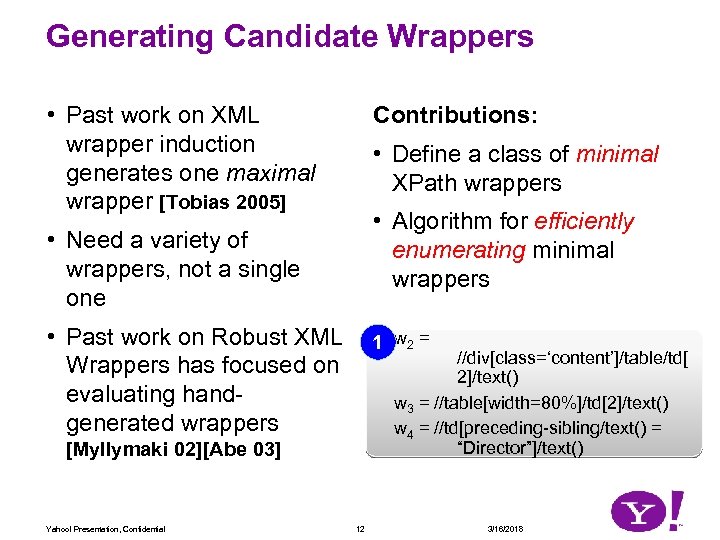

Generating Candidate Wrappers (step 1)

- Past work on XML wrapper induction generates one maximal wrapper [Tobias 2005]
- Need a variety of wrappers, not a single one
- Past work on robust XML wrappers has focused on evaluating hand-generated wrappers [Myllymaki 02][Abe 03]

Contributions:
- Define a class of minimal XPath wrappers
- Algorithm for efficiently enumerating minimal wrappers

Example candidates:
- w2 = //div[class='content']/table/td[2]/text()
- w3 = //table[width=80%]/td[2]/text()
- w4 = //td[preceding-sibling/text() = "Director"]/text()

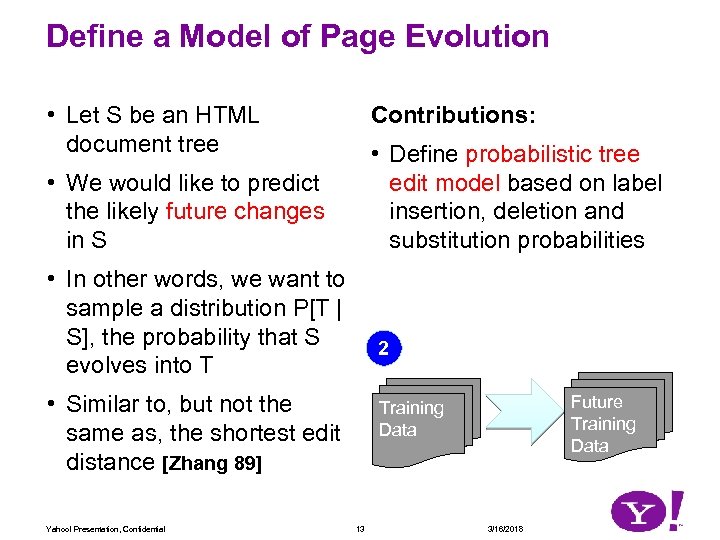

Define a Model of Page Evolution (step 2)

- Let S be an HTML document tree
- We would like to predict the likely future changes in S
- In other words, we want to sample a distribution P[T | S], the probability that S evolves into T
- Similar to, but not the same as, the shortest edit distance [Zhang 89]

Contributions:
- Define a probabilistic tree edit model based on label insertion, deletion and substitution probabilities

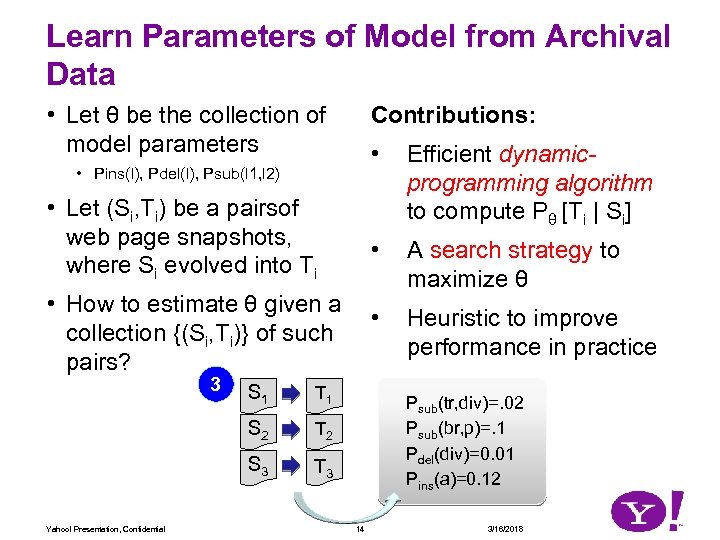

Learn Parameters of Model from Archival Data (step 3)

- Let θ be the collection of model parameters: Pins(l), Pdel(l), Psub(l1, l2)
- Let (Si, Ti) be a pair of web page snapshots, where Si evolved into Ti
- How to estimate θ given a collection {(Si, Ti)} of such pairs?

Contributions:
- A search strategy to maximize θ
- Efficient dynamic-programming algorithm to compute Pθ[Ti | Si]
- Heuristic to improve performance in practice

Example learned parameters: Psub(tr, div) = 0.02, Psub(br, p) = 0.1, Pdel(div) = 0.01, Pins(a) = 0.12

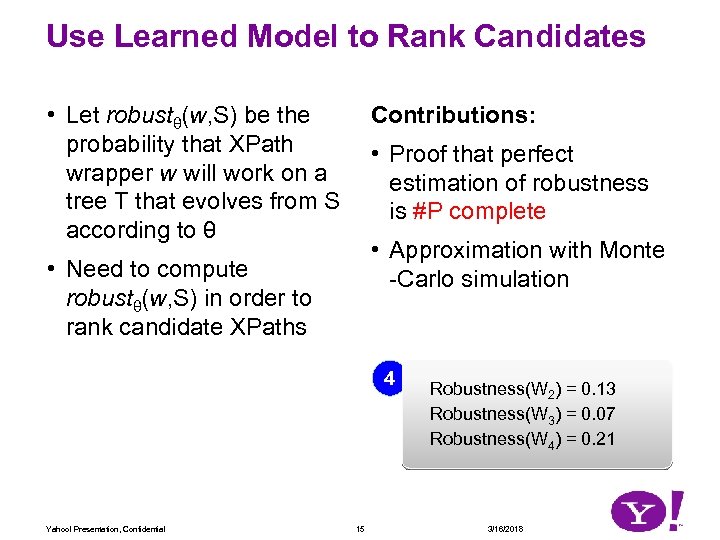

Use Learned Model to Rank Candidates (step 4)

- Let robustθ(w, S) be the probability that XPath wrapper w will work on a tree T that evolves from S according to θ
- Need to compute robustθ(w, S) in order to rank candidate XPaths

Contributions:
- Proof that perfect estimation of robustness is #P-complete
- Approximation with Monte Carlo simulation

Example: Robustness(w2) = 0.13, Robustness(w3) = 0.07, Robustness(w4) = 0.21
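
The Monte Carlo idea can be sketched as follows: sample many evolved copies of S, run the wrapper on each, and report the fraction on which it still returns what it returned on S. This is a toy stand-in, not the model from the talk: `evolve` applies only independent per-node insertions and deletions (no substitutions), "works" is approximated as "extracts the same text as before", and the page, the probability values and all function names are assumptions made for illustration.

```python
import copy
import random
import xml.etree.ElementTree as ET

# Hypothetical, simplified page.
PAGE = ET.fromstring(
    "<html><head><title>Godfather</title></head><body>"
    '<div class="head"><table><td>Title :</td><td>Godfather</td></table></div>'
    '<div class="content"><table><td>Director :</td><td>Coppola</td></table></div>'
    "</body></html>"
)


def evolve(tree, p_del, p_ins, rng):
    """Return a randomly edited copy of `tree` under a toy edit model."""
    root = copy.deepcopy(tree)

    def edit(parent):
        new_children = []
        for child in list(parent):
            edit(child)  # edit the subtree first
            # With probability p_ins[label], insert an empty node before child.
            for label, p in p_ins.items():
                if rng.random() < p:
                    new_children.append(ET.Element(label))
            if rng.random() < p_del.get(child.tag, 0.0):
                # Delete the node and promote its children to the parent.
                new_children.extend(list(child))
            else:
                new_children.append(child)
        parent[:] = new_children

    edit(root)
    return root


def robustness(wrapper, tree, p_del, p_ins, trials=2000, seed=0):
    """Estimate the fraction of evolved trees on which the output is unchanged."""
    rng = random.Random(seed)
    target = wrapper(tree)
    hits = sum(
        wrapper(evolve(tree, p_del, p_ins, rng)) == target
        for _ in range(trials)
    )
    return hits / trials


def w1(root):  # positional wrapper
    return [e.text for e in root.findall("body/div[2]/table/td[2]")]


def w2(root):  # attribute-anchored wrapper
    return [e.text for e in root.findall(".//div[@class='content']/table/td[2]")]
```

Calling `robustness(w1, PAGE, {}, {"div": 0.2})` and the same for `w2` ranks the attribute-anchored wrapper above the positional one under this toy model, since inserted div nodes shift positions but carry no class attribute.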

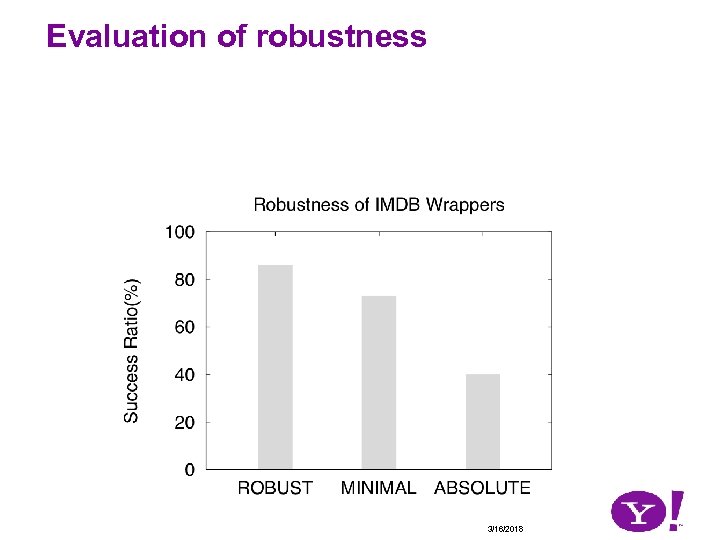

Datasets

- Faculty: a set of faculty homepages monitored over the last 5 years
- NOAA: webpages from www.noaa.gov, a public website on environmental information, over the last 5 years
- IMDB: webpages from www.imdb.com, monitored over the last 5 years

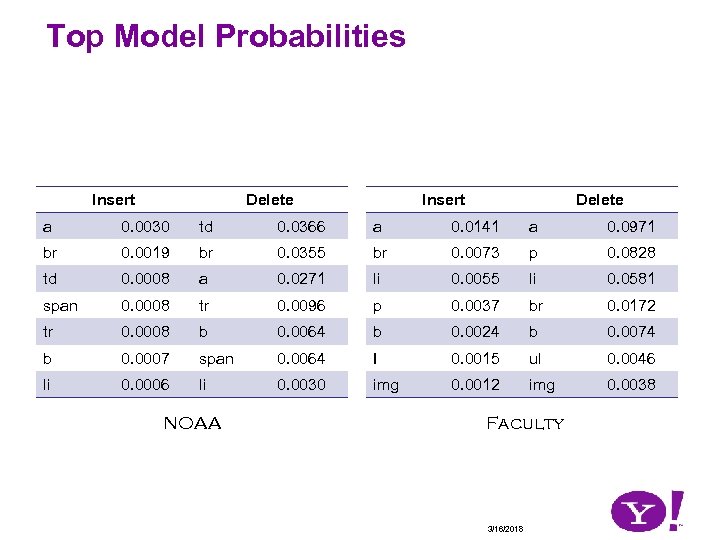

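Top Model Probabilities

Four (tag, probability) columns, each sorted by decreasing probability. Labels on the slide: column headers Insert and Delete; datasets NOAA and Faculty (the likely reading, left to right, is NOAA Insert, NOAA Delete, Faculty Insert, Faculty Delete).

| Column 1 | Column 2 | Column 3 | Column 4 |
| --- | --- | --- | --- |
| a 0.0030 | td 0.0366 | a 0.0141 | a 0.0971 |
| br 0.0019 | br 0.0355 | br 0.0073 | p 0.0828 |
| td 0.0008 | a 0.0271 | li 0.0055 | li 0.0581 |
| span 0.0008 | tr 0.0096 | p 0.0037 | br 0.0172 |
| tr 0.0008 | b 0.0064 | b 0.0024 | b 0.0074 |
| b 0.0007 | span 0.0064 | i 0.0015 | ul 0.0046 |
| li 0.0006 | li 0.0030 | img 0.0012 | img 0.0038 |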

Robust Wrappers for IMDB

Robustness ranking of candidate wrappers to extract director names from IMDB pages (robustness: XPath):
- 0.400: //*[preceding-sibling = 'Director']/text()
- 0.370: //*[h5/text() = 'Director']/a/text()
- …
- 0.190: //*[@id='tn15main']/*/div[3]/a/text()
- 0.080: //div[4]/*/div[3]/a/text()

Evaluation of robustness


Domain-Centric Extraction (work in progress). Philip Bohannon, 5.19.2010. Joint work with Ashwin Machanavajjhala, Keerthi Selvaraj, Nilesh Dalvi, Anish Das Sarma, Srujana Merugu, Raghu Ramakrishnan, Srinivasan Sengamedu, Cong Yu.

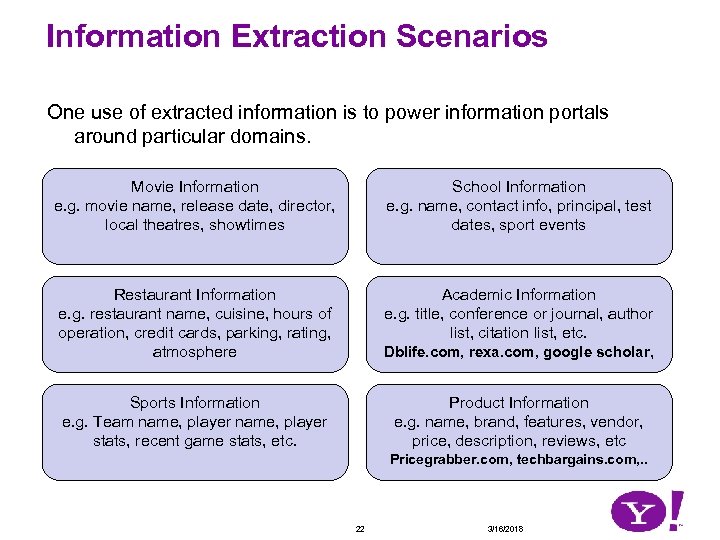

Information Extraction Scenarios

One use of extracted information is to power information portals around particular domains.

- Movie information, e.g. movie name, release date, director, local theatres, showtimes
- School information, e.g. name, contact info, principal, test dates, sport events
- Restaurant information, e.g. restaurant name, cuisine, hours of operation, credit cards, parking, rating, atmosphere
- Academic information, e.g. title, conference or journal, author list, citation list, etc. (dblife.com, rexa.com, Google Scholar)
- Sports information, e.g. team name, player stats, recent game stats, etc.
- Product information, e.g. name, brand, features, vendor, price, description, reviews, etc. (pricegrabber.com, techbargains.com, ...)

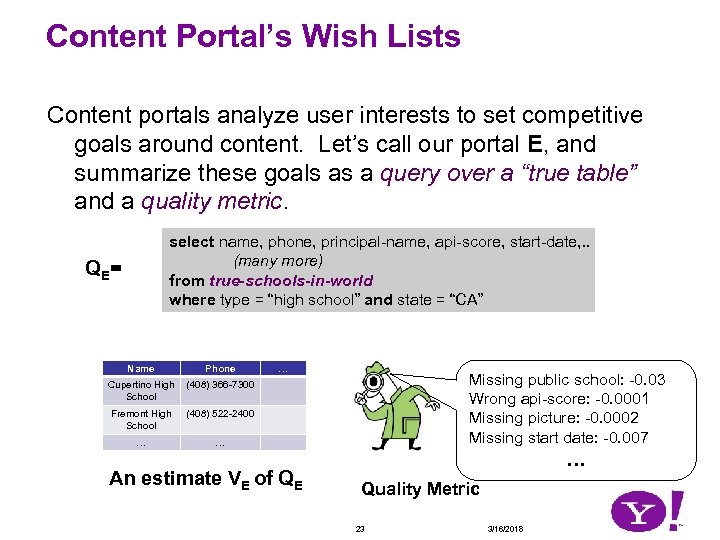

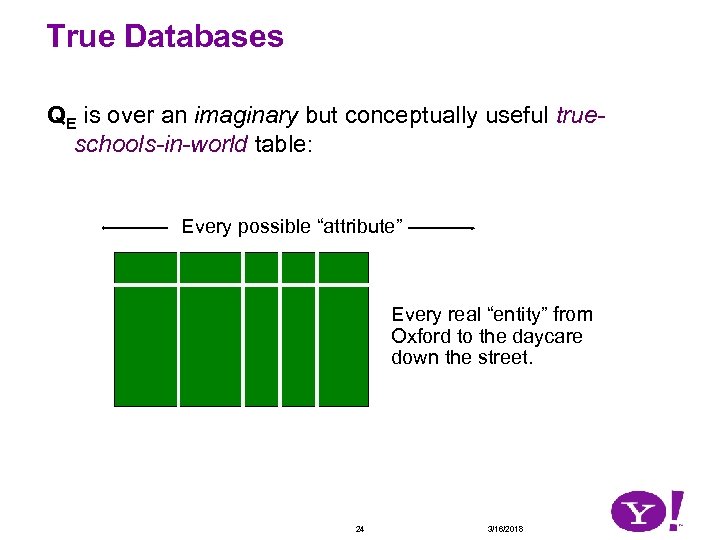

Content Portal’s Wish Lists

Content portals analyze user interests to set competitive goals around content. Let’s call our portal E, and summarize these goals as a query over a “true table” and a quality metric.

QE = select name, phone, principal-name, api-score, start-date, ... (many more) from true-schools-in-world where type = “high school” and state = “CA”

An estimate VE of QE:

| Name | Phone |
| --- | --- |
| Cupertino High School | (408) 366-7300 |
| Fremont High School | (408) 522-2400 |
| … | … |

Quality metric:
- Missing public school: -0.03
- Wrong api-score: -0.0001
- Missing picture: -0.0002
- Missing start date: -0.007
- …
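
One way to read the quality metric is as a set of per-error penalties applied to the estimate VE. The sketch below assumes the penalties simply add up per occurrence, which the slide does not state; the penalty values are the ones listed on the slide, while the key names, the `quality` function and the error counts are hypothetical.

```python
# Penalty per error occurrence, taken from the slide's examples.
PENALTIES = {
    "missing_public_school": -0.03,
    "wrong_api_score": -0.0001,
    "missing_picture": -0.0002,
    "missing_start_date": -0.007,
}


def quality(error_counts, penalties=PENALTIES):
    """Sum penalty * count over all error kinds (additivity is an assumption)."""
    return sum(penalties[kind] * count for kind, count in error_counts.items())


# Hypothetical error counts for some estimate VE.
score = quality({"missing_public_school": 2, "missing_start_date": 10})
```

Under this reading, two missing public schools cost about as much as nine missing start dates, which is the kind of trade-off a portal would use to decide where extraction effort goes.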

True Databases

QE is over an imaginary but conceptually useful true-schools-in-world table:
- Every possible “attribute”
- Every real “entity”, from Oxford to the daycare down the street

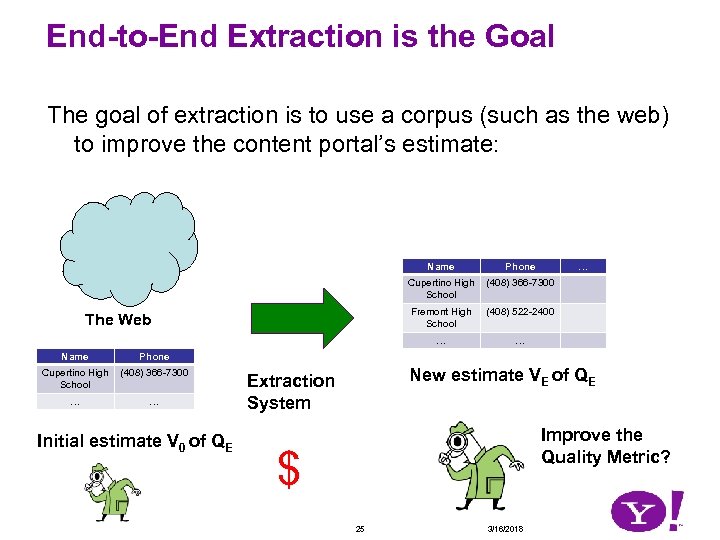

End-to-End Extraction is the Goal

The goal of extraction is to use a corpus (such as the web) to improve the content portal’s estimate.

[Diagram: the web and an initial estimate V0 of QE (a Name/Phone table listing schools such as Cupertino High School) feed an extraction system, which produces a new estimate VE of QE (a Name/Phone table that also lists Fremont High School). Question posed on the slide: improve the quality metric? ($)]

What’s new with this perspective?

Observations:
1. Quality metrics change: we need the quality of the final database, not of each extraction
2. Must account for the full cost of supervision: if using unsupervised extraction, then pay to supervise integration
3. As competition continues, the number of sites needed to further improve coverage grows

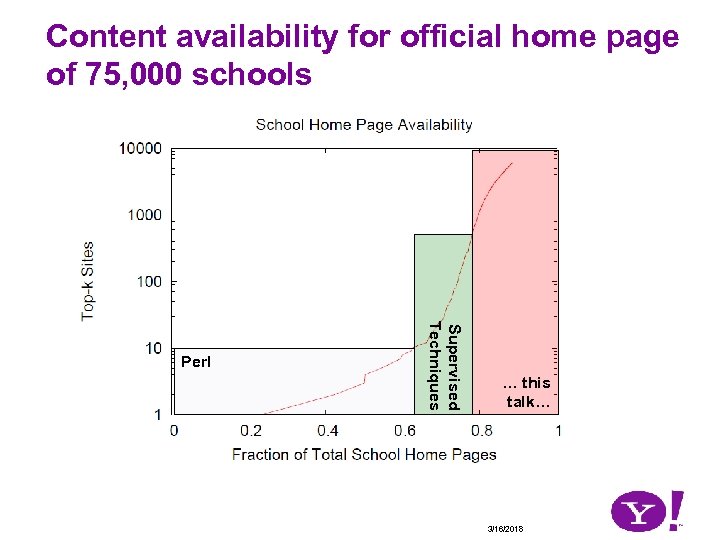

Competitive Extraction: an Illustrative Experiment

Observation: as competition continues, the number of sites needed to further improve coverage grows.

Experiment: study the distribution of the “homepage” attribute of schools:
- Start with a list of 75,000 schools and their official home page (OHP) URLs from Yahoo! Local
- Find in-links for each URL, and group by host (i.e. for this attribute, the webmap tells us where it can be extracted)
- Order hosts (other than Yahoo! Local) by the number of OHPs they know (decreasing)
- Now, to get a particular coverage (on the x-axis), how many sites must we extract from (y-axis)?
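
The experiment's last two steps can be sketched as a small function: order hosts by how many official home pages they link to, then walk down the list until the union of covered schools reaches the target. The input mapping, the host names and the function name are hypothetical, and the sketch assumes the in-link grouping has already been computed.

```python
def sites_needed(host_to_schools, total_schools, target_coverage):
    """Number of top hosts (by OHP count) needed to reach the target coverage.

    host_to_schools maps a host name to the set of school ids whose official
    home page it links to. Returns None if the target is never reached.
    """
    ordered = sorted(host_to_schools.values(), key=len, reverse=True)
    covered = set()
    for n, schools in enumerate(ordered, start=1):
        covered |= schools
        if len(covered) / total_schools >= target_coverage:
            return n
    return None


# Toy data: 10 schools, 4 hosts with overlapping knowledge.
hosts = {
    "a.example": {1, 2, 3, 4},
    "b.example": {3, 4, 5},
    "c.example": {6},
    "d.example": {1},
}
curve = [sites_needed(hosts, 10, c) for c in (0.4, 0.5, 0.6, 0.7)]
```

Even in this toy data the pattern from the slide shows up: the first host buys 40% coverage, while each further step of coverage needs another host that contributes fewer new schools.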

Content availability for official home page of 75,000 schools

[Figure: chart with regions labeled "Supervised Techniques", "Perl", …, "this talk".]

Surfacing

- Clearly, constructing an interesting corpus is not trivial, but neither is it usually considered an academic contribution
- Pleasant exceptions include a variety of Deep Web work, Webtables, and [Blanco, JUCS Journal, 2008] and citations
- Automatically and rapidly finding a good corpus for extracting tail information in a domain (last rectangle) is far from easy
- How to scale to domain after domain, attribute after attribute?
- This needs definition as a formal problem, and attention from the community!

Story so far…

- Goals: end-to-end extraction, improve quality metrics
- Challenge: surfacing gets harder over time
- Challenge: so many sites to extract from

[Figure labels: "Supervised Techniques", "Perl", …, "this talk".]

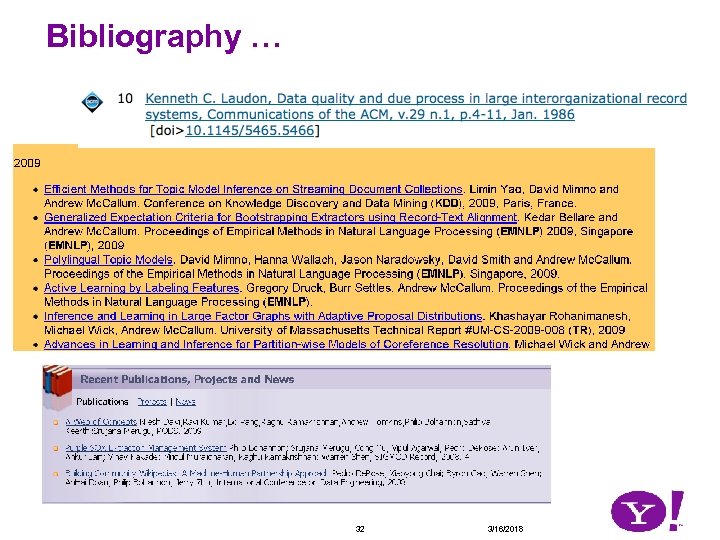

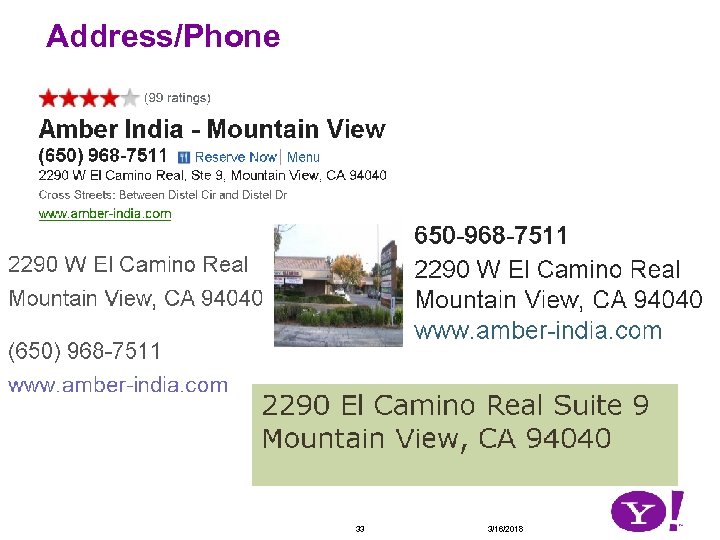

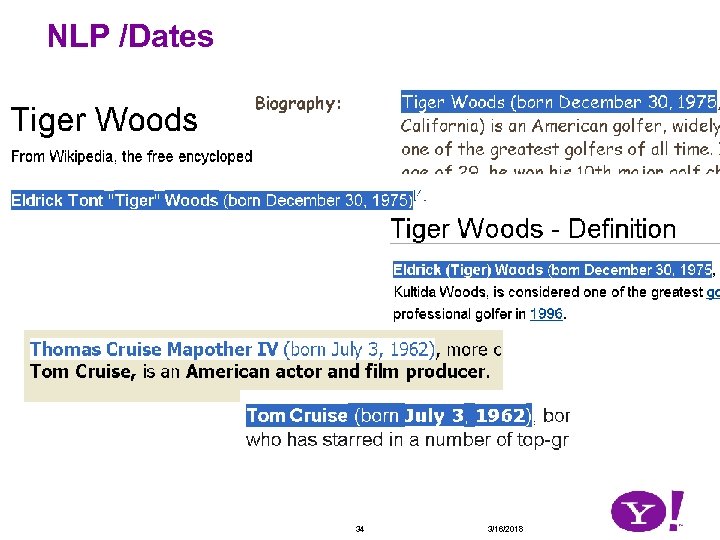

Domains with Strong Cross-Site Formatting Conventions

- Bibliographic records (DBLife, REXA, Google Scholar)
- NLP-pattern extraction (Knowitall)
- Address/phone/date extractors (regex/CRF)

The success of techniques for such domains comes from identifying and leveraging cross-site redundancies in the way information is embedded in web sites.

Bibliography …

Address/Phone

NLP/Dates

Cross-site signal does not always work

- Attributes that can be extracted across sites with domain-specific techniques are exceptionally valuable, but limited
- There are many attributes without such strong formatting conventions (e.g. principal’s name, school sports team name, etc.)
- In addition to different formatting, there is different nesting, choices of attributes, etc.

Content Diversity in the Tail

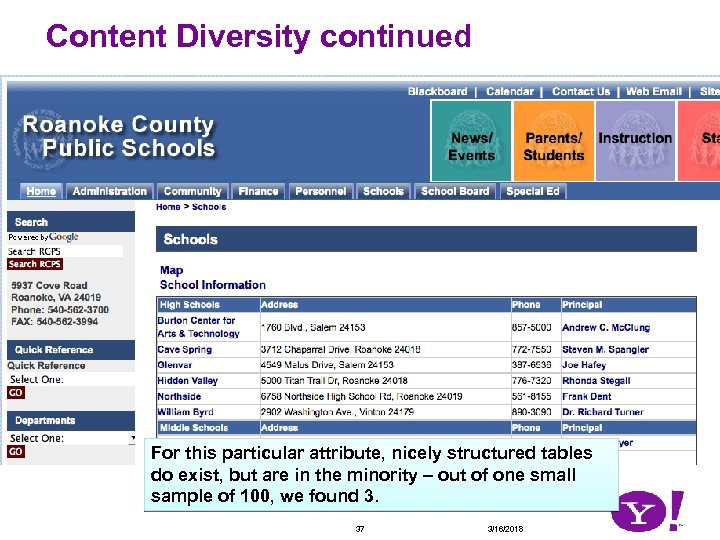

Content Diversity continued

For this particular attribute, nicely structured tables do exist, but are in the minority: out of one small sample of 100, we found 3.

Story so far…

- Goal: end-to-end extraction, improve quality metrics
- Challenge: surfacing gets harder over time
- Challenge: content diversity
- These challenges will outlast particular techniques

Time to switch gears: now a brief introduction to principles of our approach, “domain-centric extraction”.

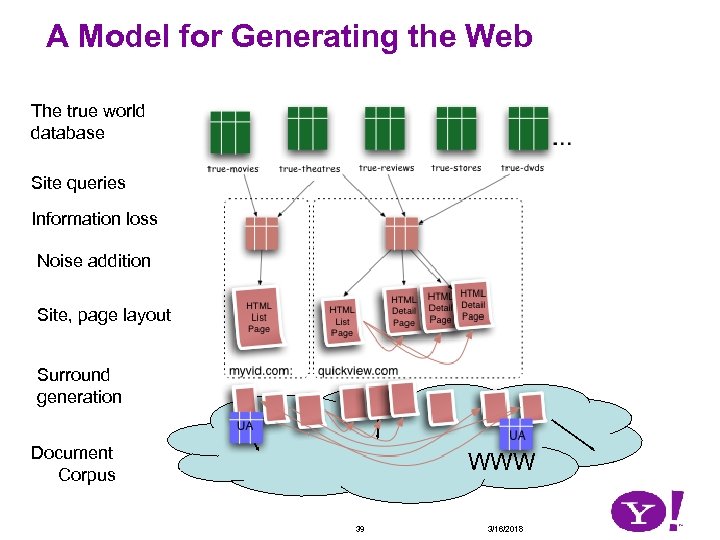

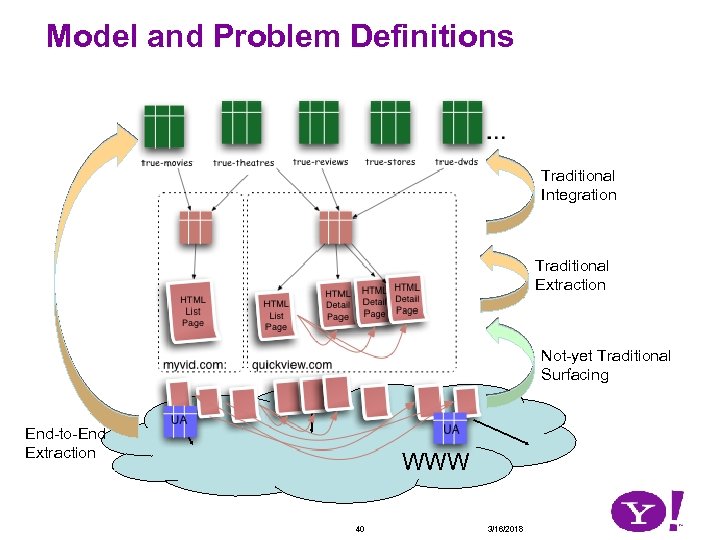

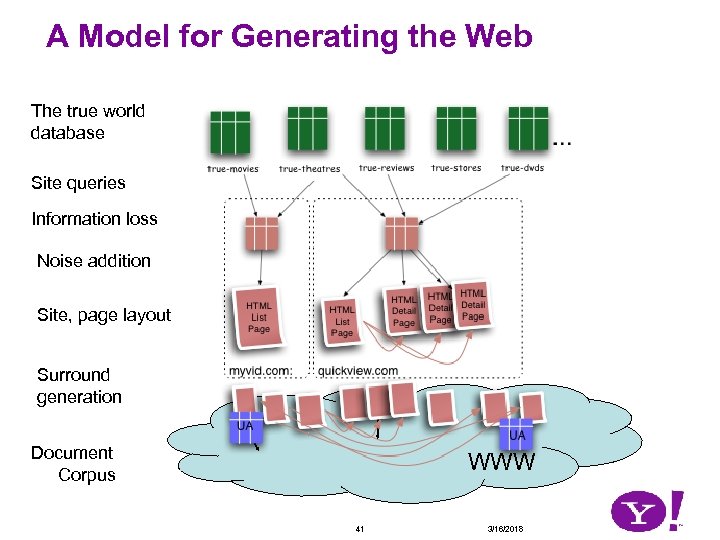

A Model for Generating the Web

[Diagram stages: the true world database, site queries, information loss, noise addition, site and page layout, surround generation, document corpus (WWW).]

Model and Problem Definitions

[Diagram labels: Traditional Integration, Traditional Extraction, Not-yet Traditional Surfacing, End-to-End Extraction, WWW.]


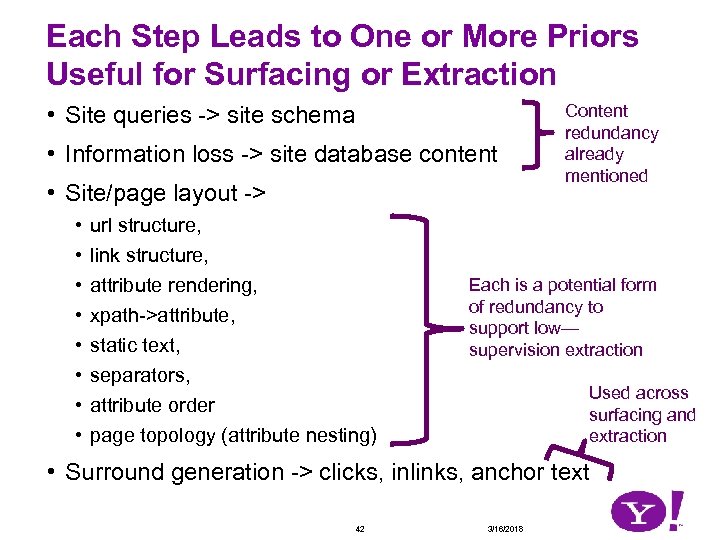

Each Step Leads to One or More Priors Useful for Surfacing or Extraction

- Site queries -> site schema
- Information loss -> site database content
- Site/page layout -> url structure, link structure, attribute rendering, xpath->attribute, static text, separators, attribute order, page topology (attribute nesting)
- Surround generation -> clicks, inlinks, anchor text
- Content redundancy already mentioned
- Each is a potential form of redundancy to support low-supervision extraction
- Used across surfacing and extraction

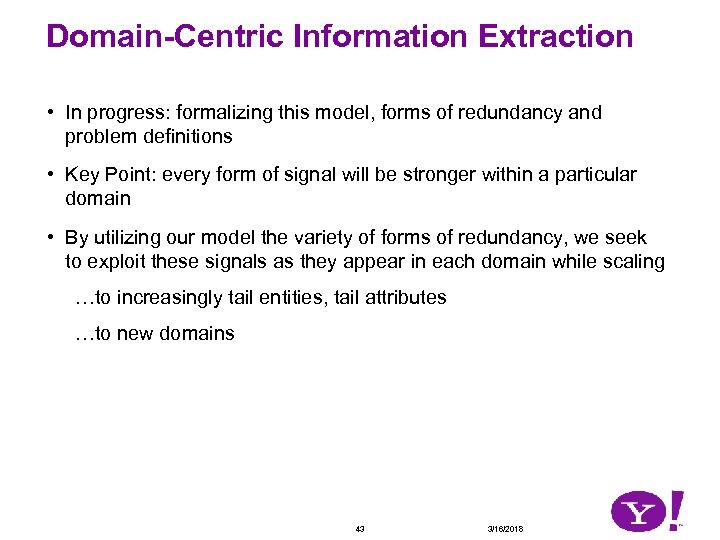

Domain-Centric Information Extraction

- In progress: formalizing this model, forms of redundancy and problem definitions
- Key point: every form of signal will be stronger within a particular domain
- By utilizing our model and the variety of forms of redundancy, we seek to exploit these signals as they appear in each domain while scaling
  - …to increasingly tail entities, tail attributes
  - …to new domains

Domain-Centric Extraction: some key points

- Know an estimate of the data (e.g. Name: Cupertino High School, Phone: (408) 366-7300, …)
- Partial data embedded in many web pages: noisy labeling on new pages. See [Gulhane, Rastogi, Sengamedu, Tengli, WWW 2010] (Yahoo! Labs Bangalore) for a start on using content redundancy, also WWT
- Refine the estimate of local site/page features based on the generative model
- Same attributes and similar data appear on a variety of new pages across sites: joint extraction-integration

Example Application in Production

In summary

- End-to-end extraction problem definition
- Importance of competitive extraction (pushes toward the tail)
- Challenge: surfacing
- Challenge: diversity
- Generative model of site creation leads to a catalog of forms of surfacing and extraction signal
- Domain-Centric Extraction: proceed domain-by-domain to maximize signal against diversity

The End
