cf036ecb319f057a8173ef4d6923f147.ppt

- Number of slides: 23

Rethinking Internet Bulk Data Transfers

Krishna P. Gummadi
Networked Systems Group
Max-Planck Institute for Software Systems
Saarbruecken, Germany

Why rethink Internet bulk data transfers?

- The demand for bulk content in the Internet is rapidly rising
  - e.g., movies, home videos, software, backups, scientific data
- Yet, bulk transfers are expensive and inefficient
  - postal mail is often preferred for delivering bulk content
    - e.g., Netflix mails DVDs, even Google uses sneakernets
- The problem is inherent to the current Internet design
  - it was designed for connecting end hosts, not content delivery

How to design a future network for bulk content delivery?

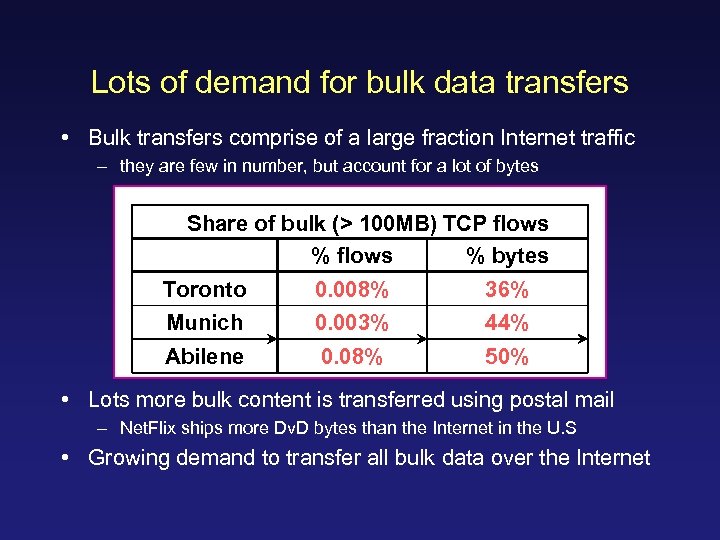

Lots of demand for bulk data transfers

- Bulk transfers make up a large fraction of Internet traffic
  - they are few in number, but account for a lot of bytes

Share of bulk (> 100 MB) TCP flows:

| Network | % of TCP flows | % of bytes |
|---|---|---|
| Toronto | 0.008% | 36% |
| Munich | 0.003% | 44% |
| Abilene | 0.08% | 50% |

- Lots more bulk content is transferred using postal mail
  - Netflix ships more DVD bytes than the Internet carries in the U.S.
- Growing demand to transfer all bulk data over the Internet

Internet bulk transfers are expensive and inefficient

- Attempted a 1.2 TB transfer between the U.S. and Germany
  - between Univ. of Washington and Max-Planck Institute
    - MPI’s happy hours were UW’s unhappy hours
    - network transfer would have lasted several weeks
- Ultimately, used physical disks and DHL to transfer the data
- Other examples:
  - Amazon’s on-demand Simple Storage Service (S3)
    - storage: 0.15 cents/GB, data transfer: 0.28 cents/GB
  - ISPs routinely rate-limit bulk transfers
    - even academic networks rate-limit YouTube and file-sharing
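A back-of-the-envelope calculation shows how a 1.2 TB transfer can stretch into weeks. The sketch below is only illustrative: the talk does not report the throughput actually achieved, so the sustained rates are hypothetical, and decimal units (1 TB = 10^12 bytes) are assumed.

```python
def transfer_days(size_bytes: float, rate_bits_per_s: float) -> float:
    """Days needed to move size_bytes at a constant sustained rate."""
    seconds = size_bytes * 8 / rate_bits_per_s
    return seconds / 86_400  # seconds per day


SIZE_BYTES = 1.2e12  # 1.2 TB in decimal units (assumption)

# Hypothetical sustained rates; the slides do not state the real one.
for mbps in (5, 10, 100):
    days = transfer_days(SIZE_BYTES, mbps * 1e6)
    print(f"{mbps:>4} Mbps -> {days:.1f} days")
```

Under these assumptions, only a sustained rate of a few Mbps would make the transfer last "several weeks", which is consistent with heavy rate-limiting or contention on the path.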

The Internet is not designed for bulk content delivery

- For many bulk transfers, users primarily care about completion time
  - i.e., when the last data packet was delivered
- But, Internet routing / transport focus on individual packets
  - such as their latency, jitter, loss, and ordered delivery
- What would an Internet for bulk transfers focus on?
  - optimizing routes & transfer schedules for efficiency & cost
    - finding routes with most bandwidth, not min. delay
    - delaying transfers to save cost, if deadlines can be met

Rest of the talk

- Identify opportunities for inexpensive and efficient data transfers
- Explore network designs that exploit the opportunity

Opportunity 1: Spare network capacity

- Average utilization of most Internet links is very low
  - backbone links typically run at 30% utilization
  - peering links are used more, but nowhere near capacity
  - residential access links are unused most of the time

Opportunity 1: Spare network capacity

- Why do ISPs over-provision?
  - we do not know how to manage congested networks
  - TCP reacts badly to congestion
- Over-provisioning avoids congestion & guarantees QoS!
  - backbone ISPs offer strict SLAs on reliability, jitter, and loss
  - customers buy bigger access pipes than they need
- Why not use spare link capacities for bulk transfers?

Opportunity 2: Large diurnal variation in network load

- Internet traffic shows large diurnal variation
  - much of it driven by human activity

Opportunity 2: Large diurnal variation in network load

- ISPs charge customers based on their peak load
  - 95th percentile of load averaged over 5 min intervals
- Because networks have to be built to withstand peak load
- Why not delay bulk transfers till periods of low load?
  - reduces peak load and evens out load variations
  - predictable load is easier for ISPs to handle
  - lower costs for customers
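To make the 95th-percentile billing rule concrete, here is a minimal sketch. It assumes the nearest-rank definition of a percentile (billing details differ between ISPs) and uses made-up traffic numbers; `percentile_95` is an illustrative helper, not any ISP's actual formula.

```python
import math


def percentile_95(samples):
    """Nearest-rank 95th percentile: sort, then ignore the top 5% of samples."""
    ordered = sorted(samples)
    rank = math.ceil(0.95 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


# One hypothetical day of 5-minute load averages in Mbps (288 samples):
# 8 busy hours at 900 Mbps and 16 quiet hours at 200 Mbps.
busy, quiet = 8 * 12, 16 * 12
day = [900] * busy + [200] * quiet

# Same total bytes, but 300 Mbps of bulk traffic is moved out of the busy
# hours and spread over the quiet hours (300 * 96 / 192 = 150 Mbps extra).
shifted = [600] * busy + [350] * quiet

print(percentile_95(day), percentile_95(shifted))
```

In this invented example the billed rate drops from 900 Mbps to 600 Mbps although the customer sends exactly the same number of bytes, which is the incentive the slide points at.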

Opportunity 2: Large diurnal variation in network load

- Can significantly reduce peak load by delaying bulk traffic
  - allowing a 2 hour delay can decrease peak load by 50%
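The effect of a bounded delay can be illustrated with a toy scheduler. This is a simple greedy heuristic under stated assumptions (hourly slots, integer load units, invented traffic), not the method used to obtain the 50% figure on the slide.

```python
def schedule_bulk(base, bulk, max_delay):
    """Greedy heuristic: place each unit of bulk load into the currently
    least-loaded slot between its arrival hour and arrival + max_delay."""
    n = len(base)
    load = list(base)  # start from the non-bulk load
    for t, units in enumerate(bulk):
        window = range(t, min(t + max_delay, n - 1) + 1)
        for _ in range(units):
            slot = min(window, key=lambda h: load[h])
            load[slot] += 1
    return load


base = [2, 6, 6, 2, 1, 1]  # hypothetical non-bulk load per hour
bulk = [0, 4, 4, 0, 0, 0]  # hypothetical bulk load arriving per hour

no_delay = schedule_bulk(base, bulk, max_delay=0)
delayed = schedule_bulk(base, bulk, max_delay=2)
print(max(no_delay), max(delayed))
```

With these invented numbers, allowing a two-hour delay lowers the peak from 10 to 6 units while still carrying all bulk traffic; real gains depend on the traffic mix.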

Opportunity 3: Non-shortest paths with most bandwidth

- The Internet picks a single short route between end hosts
- Why not use multiple, non-shortest paths with the most bandwidth?
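Picking a route by bandwidth rather than hop count is the classic widest-path (maximum-bottleneck) problem. The sketch below uses a Dijkstra-style search over a hypothetical topology; node names and capacities are invented for illustration, and it finds a single widest path rather than the multi-path routing the slide asks about.

```python
import heapq


def widest_path(graph, src, dst):
    """Return (bottleneck, path) for the path that maximizes the minimum
    link capacity between src and dst; (0, []) if dst is unreachable."""
    best = {src: float("inf")}  # best known bottleneck to each node
    prev = {}
    heap = [(-best[src], src)]  # max-heap via negated bandwidth
    while heap:
        neg_bw, u = heapq.heappop(heap)
        bw = -neg_bw
        if u == dst:
            break
        if bw < best.get(u, 0):
            continue  # stale heap entry
        for v, cap in graph.get(u, {}).items():
            cand = min(bw, cap)
            if cand > best.get(v, 0):
                best[v] = cand
                prev[v] = u
                heapq.heappush(heap, (-cand, v))
    if dst not in best:
        return 0, []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return best[dst], path[::-1]


# Hypothetical topology: link capacities in Mbps.
graph = {
    "UW": {"A": 10, "B": 100},
    "A": {"MPI": 10},
    "B": {"C": 80},
    "C": {"MPI": 90},
}
print(widest_path(graph, "UW", "MPI"))
```

Here the two-hop route through A is shorter, but the three-hop route through B and C has an eight times larger bottleneck, so it is the one returned.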

Rest of the talk

- Identify opportunities for inexpensive and efficient data transfers
- Explore network designs that exploit the opportunity

Many potential network designs

- How to exploit spare bandwidth for bulk transfers?
  - network-level differentiation using router queues?
  - transport-level separation using TCP-NICE?
- How and where to delay bulk data for scheduling?
  - at every router? at selected routers at peering points?
  - storage-enabled routers? data centers attached to routers?
- How to select non-shortest multiple paths?
  - separately within each ISP? or across different ISPs?
- But, all designs share a few key architectural features

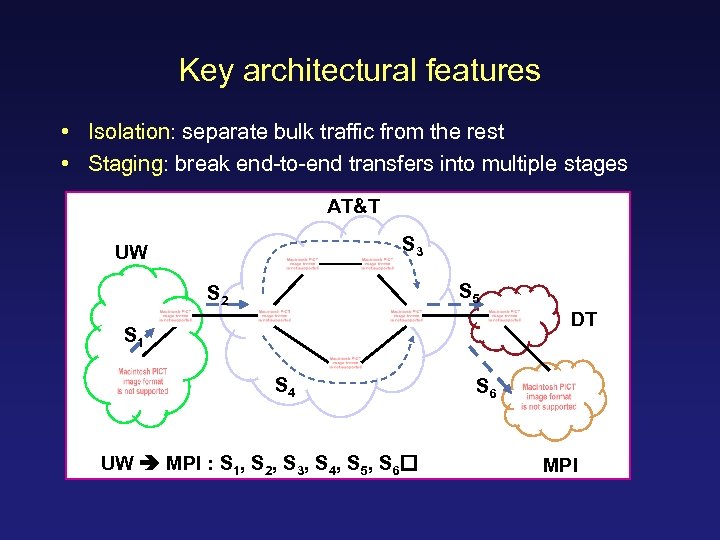

Key architectural features

- Isolation: separate bulk traffic from the rest
- Staging: break end-to-end transfers into multiple stages

[Figure: staged transfer from UW to MPI across AT&T and DT. UW to MPI: S1, S2, S3, S4, S5, S6]
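One way to picture isolation is a link with two queues served in strict priority, so bulk packets only use capacity the other traffic leaves idle. This is a minimal sketch of that one design option; the class `TwoClassLink` is hypothetical and the talk does not commit to this mechanism.

```python
from collections import deque


class TwoClassLink:
    """Strict-priority link: a bulk packet is sent only when no
    foreground (non-bulk) packet is waiting."""

    def __init__(self):
        self.foreground = deque()
        self.bulk = deque()

    def enqueue(self, packet, is_bulk):
        (self.bulk if is_bulk else self.foreground).append(packet)

    def dequeue(self):
        """Return the next packet to transmit, or None if both queues are empty."""
        if self.foreground:
            return self.foreground.popleft()
        if self.bulk:
            return self.bulk.popleft()
        return None


link = TwoClassLink()
for name, is_bulk in [("b1", True), ("f1", False), ("b2", True), ("f2", False)]:
    link.enqueue(name, is_bulk)
order = [link.dequeue() for _ in range(4)]
print(order)
```

Foreground packets leave first regardless of arrival order, so bulk traffic cannot add queueing delay to them beyond the packet already in transmission.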

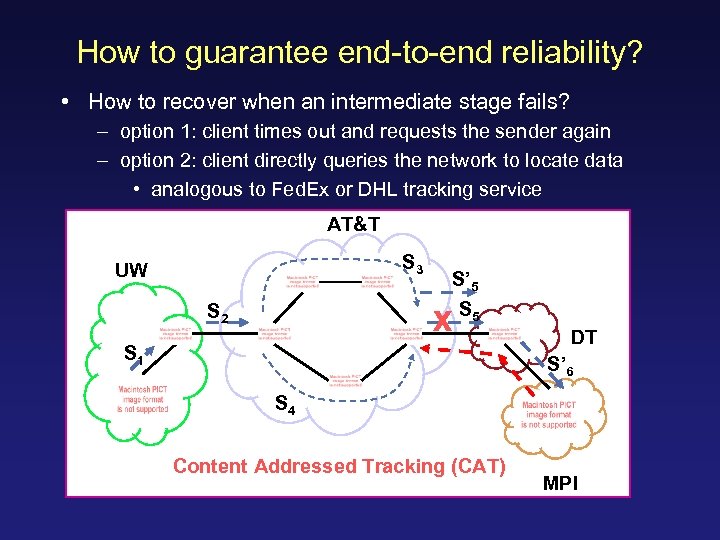

How to guarantee end-to-end reliability?

- How to recover when an intermediate stage fails?
  - option 1: client times out and requests the sender again
  - option 2: client directly queries the network to locate data
    - analogous to FedEx or DHL tracking service

[Figure: UW-to-MPI transfer through AT&T and DT with stages S1 to S5; S5 is marked failed (X), alternate stages S'5 and S'6 are shown, together with a Content Addressed Tracking (CAT) service]
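Option 2 can be sketched as a lookup service keyed by a hash of the content. Everything below is an assumption made for illustration: the `ContentTracker` class, its method names, and the use of SHA-256 as the content identifier are not taken from the talk.

```python
import hashlib


class ContentTracker:
    """Toy content-addressed tracker: maps a content id to the
    storage stages that currently hold that content."""

    def __init__(self):
        self._holders = {}  # content id -> set of stage names

    @staticmethod
    def content_id(data: bytes) -> str:
        # Assumption: content is named by its SHA-256 digest.
        return hashlib.sha256(data).hexdigest()

    def announce(self, stage: str, data: bytes) -> str:
        """Record that `stage` holds `data`; return the content id."""
        cid = self.content_id(data)
        self._holders.setdefault(cid, set()).add(stage)
        return cid

    def withdraw(self, stage: str) -> None:
        """Remove a failed stage from every entry."""
        for holders in self._holders.values():
            holders.discard(stage)

    def locate(self, cid: str):
        """Return the stages known to hold the content, sorted by name."""
        return sorted(self._holders.get(cid, ()))


tracker = ContentTracker()
cid = tracker.announce("S4", b"bulk data chunk")
tracker.announce("S5", b"bulk data chunk")
tracker.withdraw("S5")       # stage S5 fails
print(tracker.locate(cid))   # the client can resume from a surviving copy
```

Because the key depends only on the bytes, the receiver can find a surviving copy without knowing which path or sender originally carried it.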

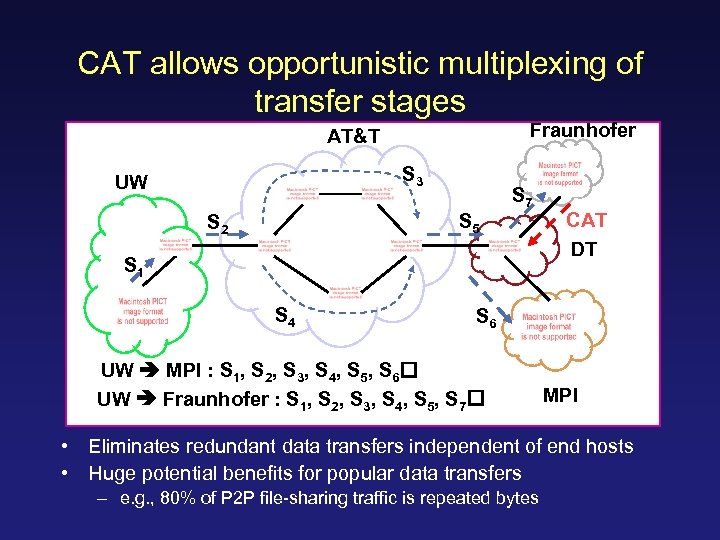

CAT allows opportunistic multiplexing of transfer stages

[Figure: transfers from UW to MPI and from UW to Fraunhofer across AT&T and DT, coordinated by CAT. UW to MPI: S1, S2, S3, S4, S5, S6. UW to Fraunhofer: S1, S2, S3, S4, S5, S7]

- Eliminates redundant data transfers independent of end hosts
- Huge potential benefits for popular data transfers
  - e.g., 80% of P2P file-sharing traffic is repeated bytes
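The two transfers in the figure share stages S1 to S5, so the second one only needs the final stage. A minimal sketch of that bookkeeping follows; the function `stages_to_run` and the idea of keying completed work by (content id, stage) are illustrative assumptions, not the talk's design.

```python
def stages_to_run(content_id, route, completed):
    """Return the stages on `route` that have not yet moved this content,
    and record them as done in `completed` (a set of (content_id, stage))."""
    pending = [s for s in route if (content_id, s) not in completed]
    completed.update((content_id, s) for s in pending)
    return pending


completed = set()
to_mpi = stages_to_run("cid-1", ["S1", "S2", "S3", "S4", "S5", "S6"], completed)
to_fraunhofer = stages_to_run("cid-1", ["S1", "S2", "S3", "S4", "S5", "S7"], completed)
print(to_mpi, to_fraunhofer)
```

The first transfer runs all six stages, while the second runs only S7 because the shared prefix already carried the same content.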

Service model of the new architecture

- Our architecture has 3 key features
  - isolation, staging and content addressed tracking
- Data transfers in our architecture are not end-to-end
  - packets are not individually ack’ed by end hosts
- Our architecture provides an offline data transfer service
  - the sender pushes the data into the network
  - the network delivers the data to the receiver

Evaluating the architecture

- CERN’s LHC workload
  - 15 petabytes of data per year, i.e., about 41 TB/day
  - transfer data from Tier 1 site at FNAL and 6 Tier 2 sites
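The workload figures can be checked with a short calculation. The sketch assumes decimal units (1 PB = 10^15 bytes, 1 TB = 10^12 bytes) and a 365-day year, and it derives the average rate needed if the daily volume were spread evenly over 24 hours.

```python
PB = 1e15  # bytes, decimal units (assumption)
TB = 1e12


def daily_volume_tb(pb_per_year: float) -> float:
    """Average daily volume in TB for a yearly volume given in PB."""
    return pb_per_year * PB / 365 / TB


def average_rate_gbps(tb_per_day: float) -> float:
    """Sustained rate in Gbps needed to move tb_per_day within one day."""
    return tb_per_day * TB * 8 / 86_400 / 1e9


per_day = daily_volume_tb(15)
print(f"{per_day:.1f} TB/day, {average_rate_gbps(per_day):.2f} Gbps on average")
```

So 15 PB per year is roughly 41 TB per day, or a little under 4 Gbps sustained around the clock.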

Benefits of our architecture

- Abilene (10 Gbps) backbone was deemed insufficient
  - plan to use NSF’s new TeraGrid (40 Gbps) network
- Could our new architecture help?

[Figure: max. time (days) required to transfer 41 TB over Abilene]

- With our architecture, the CERN data could be transferred using less than 20% of spare b.w. in Abilene!

Are bulk transfers worth a new network architecture?

- The economics of distributed computing
  - computation costs: falling at Moore’s law
  - storage costs: falling faster than Moore’s law
  - networking costs: falling slower than Moore’s law
- Wide-area bandwidth is the most expensive resource today
  - strong incentive to put computation near data
- Lowering bulk transfer costs enables new distributed systems
  - encourages data replication near computation
    - e.g., Web on my disk, Google might become PC software

Conclusion

- A large and growing demand for bulk data in the Internet
- Yet, bulk transfers are expensive and inefficient
- The Internet is not designed for bulk content transfers
- Proposed an offline data transfer architecture
  - to exploit spare resources, to schedule transfers, to route efficiently, and to eliminate redundant transfers
- It relies on isolation, staging, & content-addressed tracking
  - preliminary evaluation shows lots of promise

Acknowledgements

- Students at MPI-SWS
  - Massimiliano Marcon
  - Andreas Haeberlen
  - Marcel Dischinger
- Faculty
  - Stefan Savage, UCSD
  - Amin Vahdat, UCSD
  - Peter Druschel, MPI-SWS
