- Number of slides: 23

Resource Management for High-Throughput Computing at the ESRF
G. Förstner and R. Wilcke, ESRF, Grenoble
(parallel) Computing for Neutron and Photon Science, DESY, 04-05/03/2013

Why and how high-throughput computing?

Basic problem: process user data “fast”
- bigger and faster detectors
- data rate increases
- time available for analysis gets shorter

Brute-force solution: buy more, faster computers
- Moore’s law: transistor density doubles every 18 months
- limits: mono-atomic layers
- higher clock speed ⇒ exponential temperature rise
- may need more support staff, new software licenses, electrical power, cooling, rooms...
⇒ total cost of ownership can become unacceptable

Thus: brute force no longer sufficient ⇒ look at precise needs of “fast data processing”

Two scenarios are typically found:
- many (possibly small) sets of independent data
  ⇒ important is overall throughput, not execution time for each job
- (possibly few) sequence-dependent data sets with much data and/or complex processing
  ⇒ important is elapsed time per job, as job n must finish before job n + 1 can start

Improve overall throughput:
- split task into several (many) independent jobs
- distribute jobs to several (many) different processors
- select “most appropriate” processor for each job
- scales well with number of processors
- no change to program code needed
⇒ simple parallel processing

Reduce elapsed time for each job:
- distribute each job over several processors
- (re-)structure program into independent loops
- optimize data access for each processor
- needs change (possibly restructuring/rewrite) of program code
⇒ task for parallel programming
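
The first approach (split a task into independent jobs and hand them to several processors without touching the analysis code) can be sketched in a few lines. This is only an illustration: `split_into_jobs`, `analyse` and `run_all` are hypothetical names, and `analyse` is a stand-in for whatever unchanged program would process one job.

```python
from concurrent.futures import ProcessPoolExecutor


def split_into_jobs(items, n_jobs):
    """Split one task (a list of items) into at most n_jobs independent jobs."""
    n_jobs = max(1, min(n_jobs, len(items)))
    size, extra = divmod(len(items), n_jobs)
    jobs, start = [], 0
    for i in range(n_jobs):
        # The first `extra` jobs receive one additional item.
        end = start + size + (1 if i < extra else 0)
        jobs.append(items[start:end])
        start = end
    return jobs


def analyse(job):
    """Hypothetical stand-in for the unchanged analysis program."""
    return sum(x * x for x in job)


def run_all(items, n_workers):
    """Run every job in its own worker process and collect the results."""
    jobs = split_into_jobs(items, n_workers)
    with ProcessPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(analyse, jobs))
```

Because the jobs share no data, adding workers only changes how many jobs run at once, which is why this style scales with the number of processors. On platforms that spawn worker processes, `run_all` should be called from under an `if __name__ == "__main__":` guard.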

Even parallelization has its limits
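
The slide text does not say which limit is meant. One standard way to quantify such a limit is Amdahl's law, used here as an assumption: if a fraction p of a program can be parallelized, the speedup on n processors is 1 / ((1 - p) + p / n). The function name below is illustrative.

```python
def amdahl_speedup(parallel_fraction, n_processors):
    """Speedup predicted by Amdahl's law (an assumed model, not taken from the slide)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)
```

Under this model, a program that is 95% parallel can never run more than 20 times faster, however many processors are added, because the remaining 5% still runs serially.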

Very expensive to provide for “worst case”

Better: use existing resources more efficiently
- select “most appropriate” processor for each job
- balance processing load between processors
- fill under-used periods (nights, weekends, ...)
⇒ task for resource management system

Required features:
- resource distribution
- resource monitoring
- job queuing mechanism
- scheduling policy
- priority scheme

ESRF uses OAR (ENSIMAG Grenoble: oar.imag.fr)
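
To make "balance processing load between processors" concrete, here is a toy scheduling policy: take the queued jobs longest first and give each one to the processor with the smallest load so far. It is a minimal sketch for illustration and is not OAR's scheduling algorithm; the function name and the use of estimated durations are assumptions.

```python
import heapq


def balance(durations, n_processors):
    """Greedy longest-first assignment of jobs to the least-loaded processor.

    Returns (assignment, makespan): assignment maps processor index to the
    list of job durations placed on it, makespan is the largest total load.
    """
    heap = [(0.0, p) for p in range(n_processors)]  # (current load, processor)
    heapq.heapify(heap)
    assignment = {p: [] for p in range(n_processors)}
    for duration in sorted(durations, reverse=True):
        load, p = heapq.heappop(heap)  # least-loaded processor
        assignment[p].append(duration)
        heapq.heappush(heap, (load + duration, p))
    makespan = max(load for load, _ in heap)
    return assignment, makespan
```

For four jobs with estimated durations 5, 3, 2 and 4 on two processors, the sketch places 5 and 2 on one processor and 4 and 3 on the other, so both finish after 7 time units.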

Resource Manager OAR: Basic Use

OAR features:
- interactive or batch mode
- controls processor and memory placement of jobs (cpuset)
- request resources (cores, memory, time...)
- specify properties (manufacturer, speed, network access...)
- can define installation-specific properties and rules

Manage OAR with 3 basic commands:
- request resources (submit job) (oarsub)
- inquire status of requests (oarstat)
- if necessary, cancel requests (oardel)

After submitting jobs:
- user can log off and go home
- OAR starts jobs when convenient, delivers results
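
The three commands can be wrapped in small helpers that only build the command line, so the request can be inspected before it is run. This is a sketch under assumptions: the helper names are hypothetical, the resource string `core=N,walltime=H:MM:SS` follows the common `oarsub -l` form, and the exact options accepted should be checked against the local OAR installation.

```python
def oarsub_command(program=None, cores=1, walltime="2:00:00", interactive=False):
    """Build an oarsub command line (argument list) without running it."""
    # Assumed resource syntax for -l; verify against the local OAR documentation.
    cmd = ["oarsub", "-l", f"core={cores},walltime={walltime}"]
    if interactive:
        cmd.append("-I")  # interactive request, as on the slides
    elif program is None:
        raise ValueError("a batch request needs a program to run")
    else:
        cmd.append(program)
    return cmd


def oarstat_command(user):
    """Build an oarstat command line listing the requests of one user."""
    return ["oarstat", "-u", user]


def oardel_command(job_id):
    """Build an oardel command line cancelling one request."""
    return ["oardel", str(job_id)]
```

A list returned by these helpers can be passed to `subprocess.run` on a front-end where the OAR client is installed.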

OAR at ESRF: present status
- 1 public, 2 private clusters: total 109 nodes, 803 CPUs
- access to clusters only via front-end computers (30 nodes)
- no direct login to high-performance computers
- dedicated clusters are strong incentive for using OAR
- front-ends are deliberately low performance: discourage production jobs
- any computer can be front-end: need to install OAR client

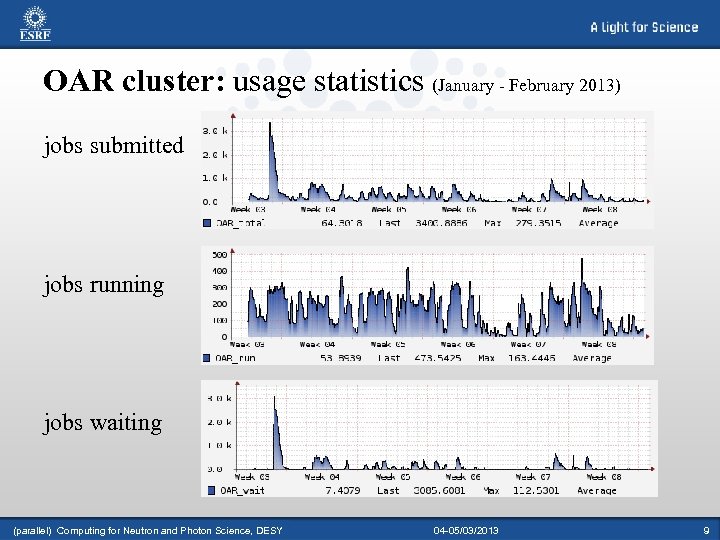

OAR cluster: usage statistics (January - February 2013)
Plot panels: jobs submitted, jobs running, jobs waiting

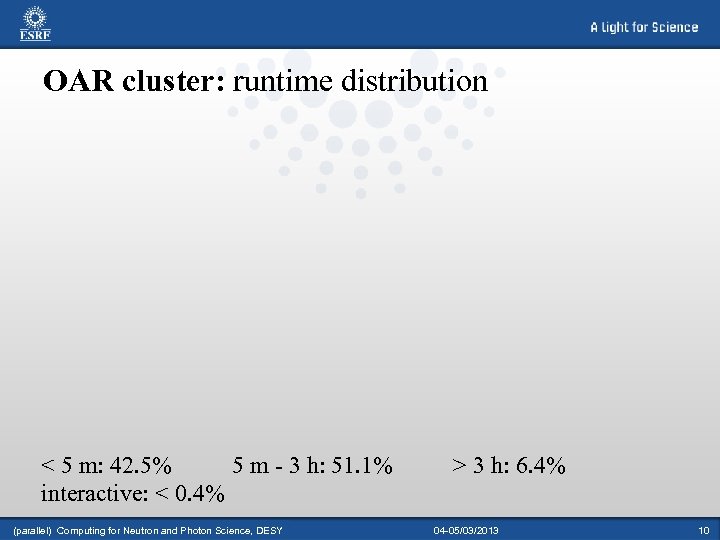

OAR cluster: runtime distribution
- < 5 min: 42.5%
- 5 min - 3 h: 51.1%
- > 3 h: 6.4%
- interactive: < 0.4%

OAR monitoring tools

For efficient resource management, need to know:
- load on computing system (active / waiting jobs, available / free / used resources, ...)
  important for users:
  - see available resources
  - estimate wait time for jobs
- hardware status of computing system (hosts down, memory use, network traffic, ...)
  important for administrators to spot problems

- DrawOarGantt (part of OAR): shows load on computing system
- Ganglia (ganglia.sourceforge.net): monitors hardware of system

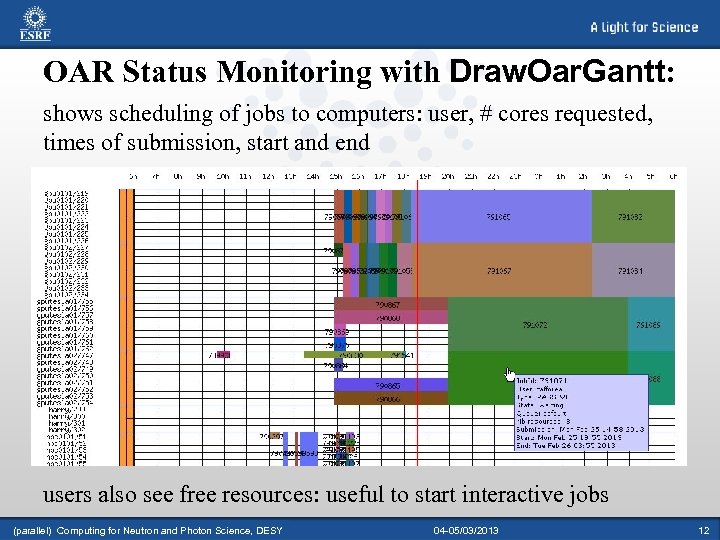

OAR Status Monitoring with DrawOarGantt
- shows scheduling of jobs to computers: user, # cores requested, times of submission, start and end
- users also see free resources: useful to start interactive jobs

Hardware Monitoring with Ganglia
- show performance (e.g. load, memory, network traffic...; can be configured)
- select time period (hour, day, week, month, year)

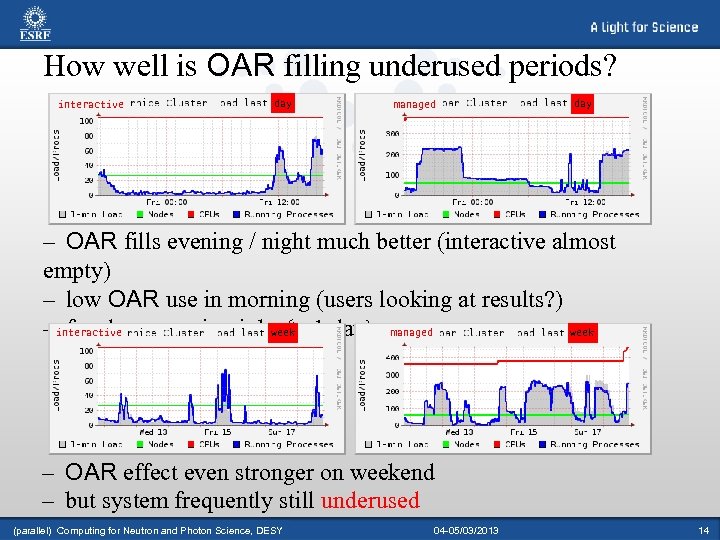

How well is OAR filling underused periods?

Interactive day / managed day:
- OAR fills evening / night much better (interactive almost empty)
- low OAR use in morning (users looking at results?)
- interactive: few long-running jobs (> 1 day)

Managed week:
- OAR effect even stronger on weekend
- but system frequently still underused

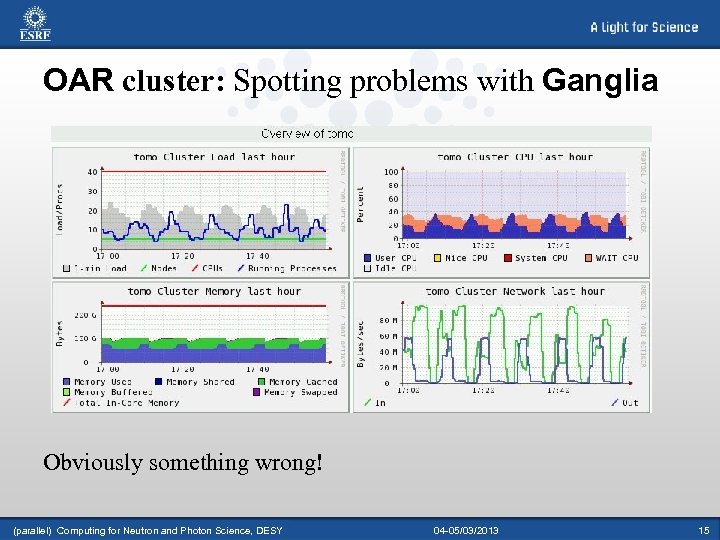

OAR cluster: Spotting problems with Ganglia
Obviously something wrong!

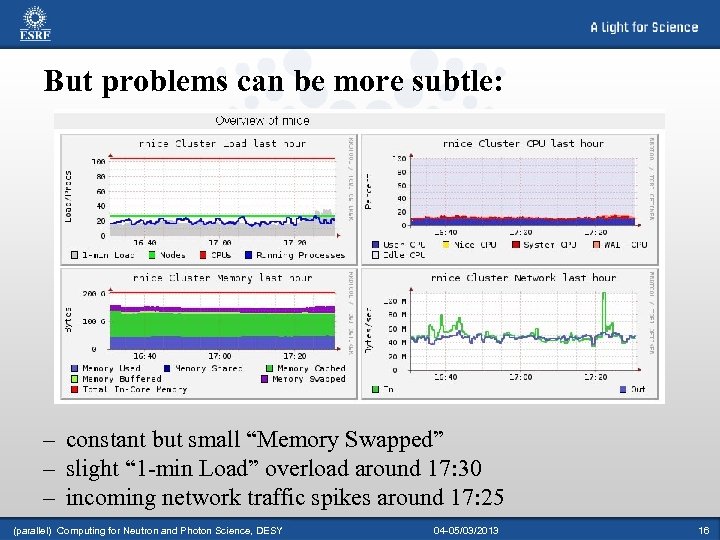

But problems can be more subtle:
- constant but small “Memory Swapped”
- slight “1-min Load” overload around 17:30
- incoming network traffic spikes around 17:25

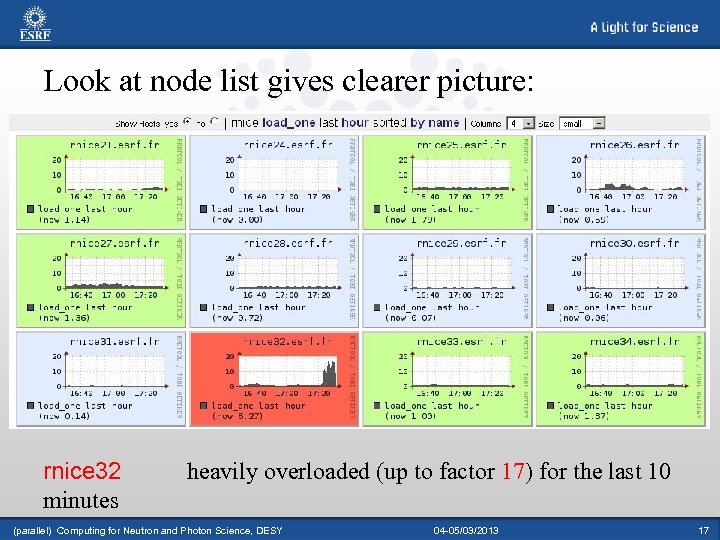

Look at node list gives clearer picture:
rnice32 heavily overloaded (up to factor 17) for the last 10 minutes

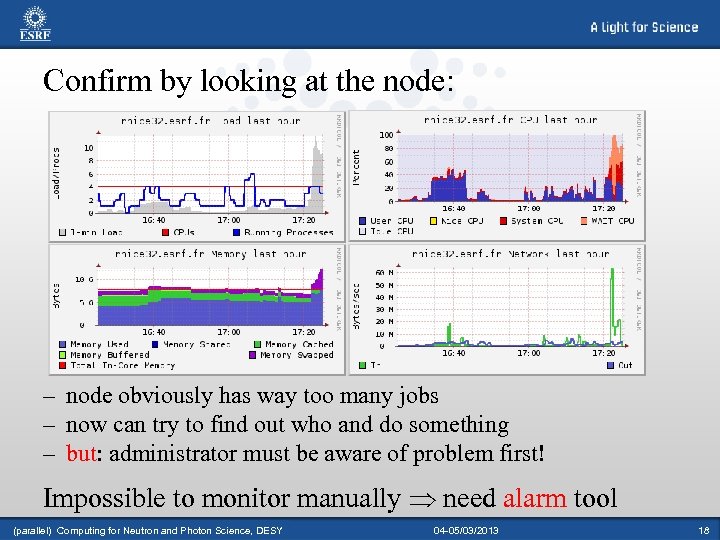

Confirm by looking at the node:
- node obviously has way too many jobs
- now can try to find out who and do something
- but: administrator must be aware of problem first!

Impossible to monitor manually ⇒ need alarm tool

Icinga alarm monitor (www.icinga.org, fork from Nagios)
- Zulu for “it examines” (with click sound for the “c”)
- monitors network services, host resources and software problems
- integrates installation-developed services and checks
- notifies of problems via email and monitor window (Nagstamon)
- more detailed status information from the web interface
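
An installation-developed check for the kind of overload seen on the earlier Ganglia slides could look roughly like the sketch below. It relies on the Nagios plugin convention that Icinga inherits: a check prints one status line and exits with 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN). The thresholds and function names are assumptions chosen for illustration, not the checks actually used at ESRF.

```python
import os

# Exit codes of the Nagios/Icinga plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def classify_load(load_1min, n_cores, warn=1.5, crit=4.0):
    """Map the 1-min load per core to a plugin state and a status line.

    warn and crit are illustrative thresholds, not values from the slides.
    """
    if n_cores < 1:
        return UNKNOWN, "LOAD UNKNOWN - invalid core count"
    per_core = load_1min / n_cores
    if per_core >= crit:
        state = CRITICAL
    elif per_core >= warn:
        state = WARNING
    else:
        state = OK
    return state, f"LOAD {LABELS[state]} - 1-min load per core {per_core:.2f}"


def main():
    """Check the local host; a plugin script would end with sys.exit(main())."""
    try:
        load_1min = os.getloadavg()[0]
    except (OSError, AttributeError):
        print("LOAD UNKNOWN - load average unavailable")
        return UNKNOWN
    state, message = classify_load(load_1min, os.cpu_count() or 1)
    print(message)
    return state
```

With these thresholds, a node overloaded by a factor of 17 is reported as CRITICAL, so the administrator is notified instead of having to notice the problem in a graph.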

OAR: Problems / FAQs (1)

Syntax of submit command is not user-friendly
- true (for non-SQL specialists)
- in sample of 618428 requests, ≈ 12% terminate with error
- simple request (gives 1 core and default time 2 h): interactive (oarsub -I) or not (oarsub prog_name)
- complicated request: write script file using documentation, modify script as needed
- most efficient way to accomplish a task often not obvious
⇒ users need help to set up and run OAR jobs

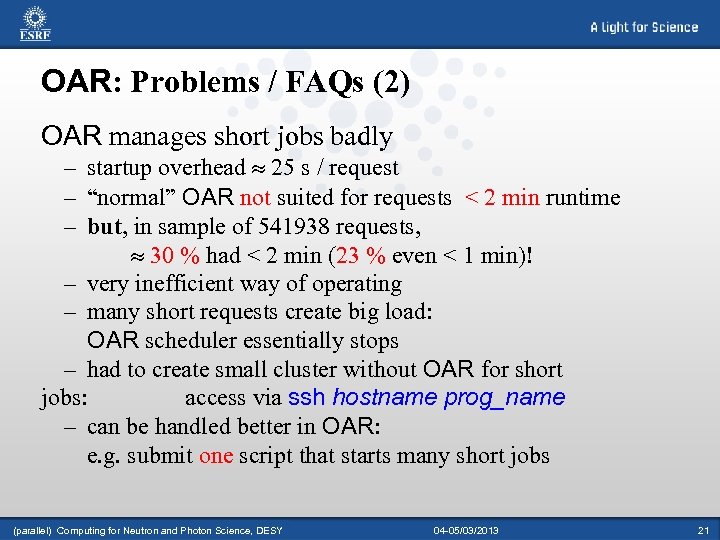

OAR: Problems / FAQs (2)

OAR manages short jobs badly
- startup overhead ≈ 25 s / request
- “normal” OAR not suited for requests < 2 min runtime
- but, in sample of 541938 requests, ≈ 30% had < 2 min (23% even < 1 min)!
- very inefficient way of operating
- many short requests create big load: OAR scheduler essentially stops
- had to create small cluster without OAR for short jobs: access via ssh hostname prog_name
- can be handled better in OAR: e.g. submit one script that starts many short jobs
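
The cost of the startup overhead can be estimated with simple arithmetic. The sketch below assumes that the overhead of about 25 s is paid once per request and that the short jobs bundled into one script run one after the other; the function name is illustrative.

```python
STARTUP_OVERHEAD_S = 25.0  # approximate startup overhead per request, from the slide


def useful_fraction(runtime_s, jobs_per_request=1, overhead_s=STARTUP_OVERHEAD_S):
    """Fraction of a request's elapsed time spent on actual work.

    Assumes the jobs in one request run sequentially and the startup
    overhead is paid once per request.
    """
    work = runtime_s * jobs_per_request
    return work / (work + overhead_s)
```

Under these assumptions a single 2 min job spends about 83% of its request on useful work and a 1 min job only about 71%, whereas one request that runs 100 one-minute jobs from a single script reaches more than 99%.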

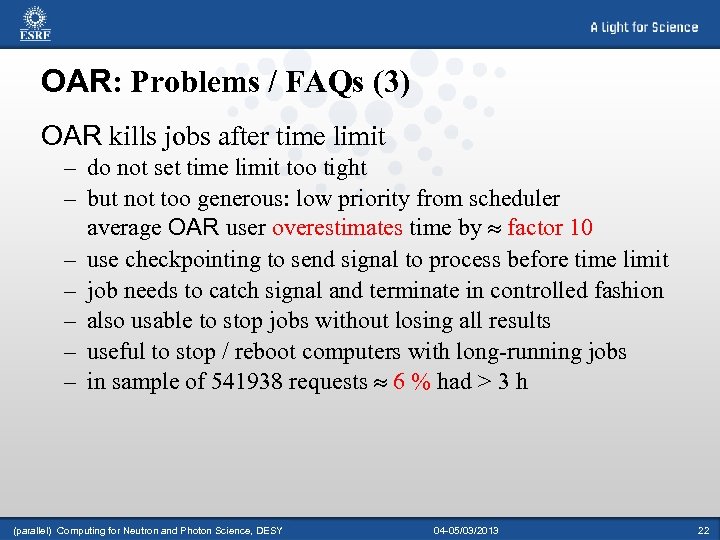

OAR: Problems / FAQs (3)

OAR kills jobs after time limit
- do not set time limit too tight
- but not too generous: low priority from scheduler
  average OAR user overestimates time by ≈ factor 10
- use checkpointing to send signal to process before time limit
  - job needs to catch signal and terminate in controlled fashion
  - also usable to stop jobs without losing all results
  - useful to stop / reboot computers with long-running jobs
- in sample of 541938 requests ≈ 6% had > 3 h
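
On the job side, "catch signal and terminate in controlled fashion" might be sketched as follows. Which signal arrives depends on how the request was submitted; SIGUSR2 is assumed here, and `GracefulStop`, `run_steps` and `save_state` are hypothetical names. The sketch is Unix-only and must run in the main thread.

```python
import signal


class GracefulStop:
    """Context manager that records the arrival of a checkpoint signal."""

    def __init__(self, sig=signal.SIGUSR2):  # assumed checkpoint signal
        self.requested = False
        self._sig = sig
        self._previous = None

    def _handler(self, signum, frame):
        # Only set a flag; the main loop decides when it is safe to stop.
        self.requested = True

    def __enter__(self):
        self._previous = signal.signal(self._sig, self._handler)
        return self

    def __exit__(self, *exc_info):
        signal.signal(self._sig, self._previous)  # restore the old handler
        return False


def run_steps(steps, save_state, sig=signal.SIGUSR2):
    """Run the steps in order; stop before the next step once the signal arrived."""
    done = 0
    with GracefulStop(sig) as stop:
        for step in steps:
            if stop.requested:
                break
            step()
            done += 1
        save_state(done)  # hypothetical callback: persist progress for a later restart
    return done
```

The handler only sets a flag, so the job always finishes the step it is working on and saves its progress before it exits, instead of being cut off in the middle of writing results.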

Conclusions:
- OAR helps to fill underused periods (nights / weekends)
- efficient and relatively easy for batch jobs
- administration of interactive jobs more complex
- need hardware and OAR queue monitoring for efficiency
- system at ESRF frequently underused
- problems in particular with very short and very long jobs
- users need help to set up OAR jobs
- still room for improvement ⇒ watch this space!

Acknowledgements:
- C. Ferrero, J. Kieffer, A. Mirone and B. Rousselle
- ESRF Systems & Communications - Unix Unit
- ESRF Data Analysis Unit
