02bc3655af28ee7ff06236c8ed7a1c34.ppt

- Number of slides: 32

Resource-bounded dimension and learning. Elvira Mayordomo, U. Zaragoza (joint work with Ricard Gavaldà, María López-Valdés, and Vinodchandran N. Variyam). CIRM, 2009

Contents 1. Resource-bounded dimension 2. Learning models 3. A few results on the size of learnable classes 4. Consequences Work in progress

Effective dimension • Effective dimension is based on a characterization of Hausdorff dimension on $\Sigma^\infty$ given by Lutz (2000) • The characterization is a very clever way to deal with a single covering using gambling

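Hausdorff dimension in $\Sigma^\infty$ (Lutz characterization). Let $s \in (0, 1)$. An $s$-gale is a function $d : \Sigma^* \to [0, \infty)$ such that $d(w) = |\Sigma|^{-s} \sum_{a \in \Sigma} d(wa)$ for every $w \in \Sigma^*$. It is the capital corresponding to a fixed betting strategy when the house takes a fraction of the payoffs: $d$ is an $s$-gale iff $|\Sigma|^{(1-s)|w|} d(w)$ is a martingale.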

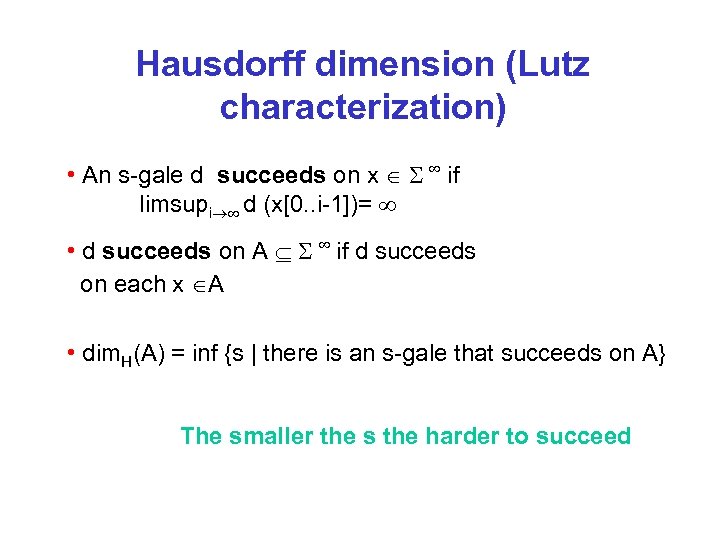
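As a small sanity check of the definition, the Python sketch below takes a martingale and rescales it by $|\Sigma|^{(s-1)|w|}$. By the characterization above, the result should be an $s$-gale, and the code verifies the gale condition on all strings up to a fixed length. The binary alphabet, the betting strategy (always put 60% of the capital on the next bit being 0) and the names `martingale`, `s_gale` and `is_s_gale` are hypothetical illustrations, not taken from the talk.

```python
from itertools import product

SIGMA = "01"  # assumption: binary alphabet, so |Sigma| = 2


def martingale(w: str) -> float:
    """Hypothetical strategy: always bet 60% of the capital on the next bit being 0.

    Fair payoffs give d(w0) = 1.2 * d(w) and d(w1) = 0.8 * d(w), with d(empty) = 1,
    so d(w) = (d(w0) + d(w1)) / |Sigma|, i.e. d is a martingale.
    """
    capital = 1.0
    for bit in w:
        capital *= 1.2 if bit == "0" else 0.8
    return capital


def s_gale(w: str, s: float) -> float:
    """Rescale the martingale: d_s(w) = |Sigma|^{(s-1)|w|} * martingale(w)."""
    return len(SIGMA) ** ((s - 1) * len(w)) * martingale(w)


def is_s_gale(d, s: float, max_len: int = 8, tol: float = 1e-12) -> bool:
    """Check d(w) = |Sigma|^{-s} * sum_{a in Sigma} d(wa) for all w with |w| < max_len."""
    for length in range(max_len):
        for letters in product(SIGMA, repeat=length):
            w = "".join(letters)
            rhs = len(SIGMA) ** (-s) * sum(d(w + a) for a in SIGMA)
            if abs(d(w) - rhs) > tol:
                return False
    return True
```

For example, `is_s_gale(lambda w: s_gale(w, 0.7), 0.7)` holds. The unscaled `martingale` fails the 0.7-gale condition because it ignores the house's cut, although it is a 1-gale.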

Hausdorff dimension (Lutz characterization) • An $s$-gale $d$ succeeds on $x$ if $\limsup_{i \to \infty} d(x[0..i-1]) = \infty$ • $d$ succeeds on $A$ if $d$ succeeds on each $x \in A$ • $\dim_H(A) = \inf\{s \mid \text{there is an } s\text{-gale that succeeds on } A\}$ • The smaller $s$ is, the harder it is to succeed
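A classic worked example shows why a smaller $s$ makes success harder. Take the hypothetical martingale that bets a fraction $\alpha$ of its capital on the next bit being 1, and rescale it into an $s$-gale by the factor $2^{(s-1)|w|}$. Each bit adds $\log_2 p + s$ to the base-2 logarithm of the capital, where $p$ is the probability the strategy gave to that bit. Along a prefix of length $n$ whose ones have frequency $\alpha$, the capital is therefore $2^{n(s - H(\alpha))}$, with $H(\alpha) = -\alpha\log\alpha - (1-\alpha)\log(1-\alpha)$. This gale wins when $s > H(\alpha)$ and loses when $s < H(\alpha)$. The sketch works on a finite prefix only, so it illustrates the growth rate rather than proving $\limsup = \infty$. The function names are our own.

```python
import math


def binary_entropy(a: float) -> float:
    """H(a) = -a log2 a - (1 - a) log2 (1 - a), with H(0) = H(1) = 0."""
    if a in (0.0, 1.0):
        return 0.0
    return -a * math.log2(a) - (1 - a) * math.log2(1 - a)


def log2_gale_capital(x: str, s: float, alpha: float) -> float:
    """log2 of the s-gale's capital after reading the binary prefix x.

    Hypothetical strategy: bet a fraction alpha on '1' and 1 - alpha on '0'
    (a martingale), then apply the s-gale factor 2^{(s-1)} per bit.
    Log space avoids overflow and underflow on long prefixes.
    """
    log_cap = 0.0
    for bit in x:
        p = alpha if bit == "1" else 1 - alpha
        log_cap += math.log2(2 * p) + (s - 1)
    return log_cap


x = "0001" * 2500                        # frequency of ones is exactly 1/4
print(binary_entropy(0.25))              # about 0.811
print(log2_gale_capital(x, 0.9, 0.25))   # positive: the 0.9-gale is winning
print(log2_gale_capital(x, 0.7, 0.25))   # negative: the 0.7-gale is losing
```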

Effectivizing Hausdorff dimension • We restrict to constructive or effectively computable gales and get the corresponding “dimensions”, which are meaningful in the subsets of $\Sigma^\infty$ we are interested in

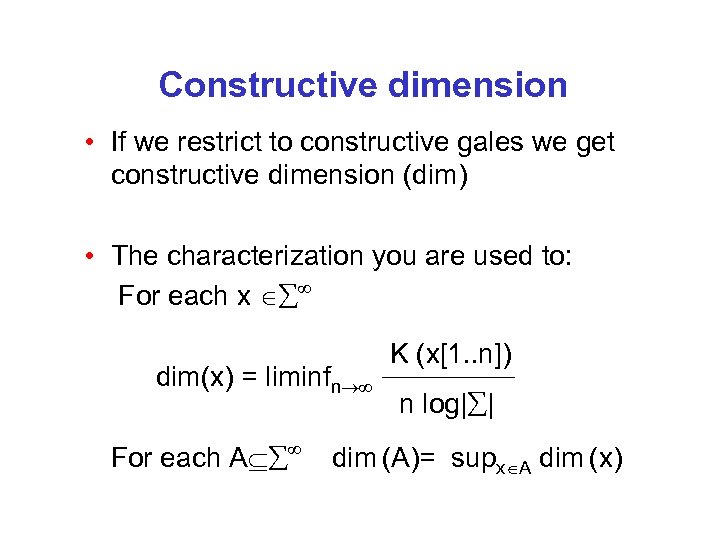

Constructive dimension • If we restrict to constructive gales we get constructive dimension (dim) • The characterization you are used to: for each $x \in \Sigma^\infty$, $\dim(x) = \liminf_{n \to \infty} \frac{K(x[1..n])}{n \log|\Sigma|}$, and for each $A \subseteq \Sigma^\infty$, $\dim(A) = \sup_{x \in A} \dim(x)$

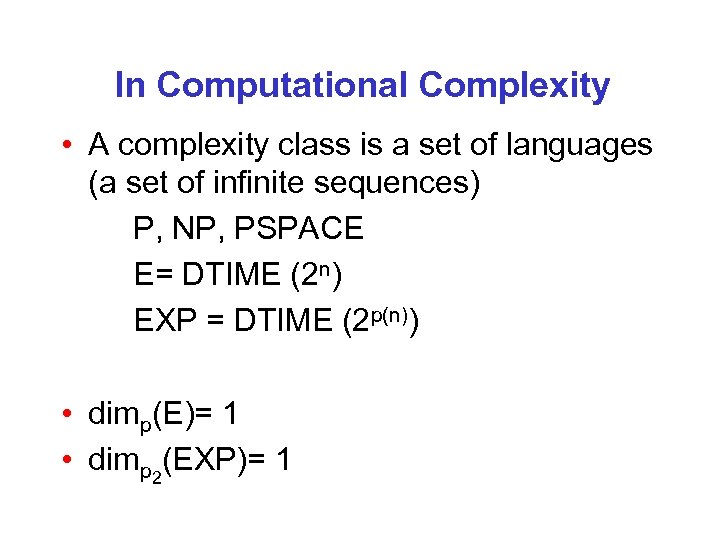
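Kolmogorov complexity $K$ is not computable, so $\dim(x)$ cannot be evaluated directly. A common heuristic is to replace $K(x[1..n])$ by the length of a compressed encoding of the prefix. For a fixed decompressor this gives an upper bound on $K$ up to lower-order terms, but it can be far from $K$ and says nothing about the $\liminf$. The Python sketch assumes a binary alphabet (so $\log|\Sigma| = 1$) and uses `zlib` as a stand-in compressor. The function name is hypothetical.

```python
import random
import zlib


def compression_rate(bits: str) -> float:
    """Compressed bits per input bit: a heuristic upper estimate of K(x[1..n]) / n.

    zlib is only a stand-in for an ideal description method; this does not
    compute constructive dimension.
    """
    if not bits:
        raise ValueError("need a non-empty prefix")
    padded = bits + "0" * (-len(bits) % 8)          # pad to whole bytes
    data = bytes(int(padded[i:i + 8], 2) for i in range(0, len(padded), 8))
    return 8 * len(zlib.compress(data, 9)) / len(bits)


rng = random.Random(0)
n = 80_000
random_prefix = "".join(rng.choice("01") for _ in range(n))
print(compression_rate("0" * n))        # close to 0: highly regular prefix
print(compression_rate(random_prefix))  # about 1: incompressible in practice
```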

Resource-bounded dimensions • Restricting to effectively computable gales we have: – gales computable in polynomial time: $\dim_{\mathrm{p}}$ – gales computable in quasi-polynomial time: $\dim_{\mathrm{p}_2}$ – gales computable in polynomial space: $\dim_{\mathrm{pspace}}$ • Each of these effective dimensions is “the right one” for a particular set of sequences (a complexity class)

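In computational complexity • A complexity class is a set of languages (a set of infinite sequences), for example P, NP, PSPACE, $\mathrm{E} = \mathrm{DTIME}(2^{O(n)})$ and $\mathrm{EXP} = \mathrm{DTIME}(2^{p(n)})$ with $p$ a polynomial • $\dim_{\mathrm{p}}(\mathrm{E}) = 1$ • $\dim_{\mathrm{p}_2}(\mathrm{EXP}) = 1$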

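What for? • We use $\dim_{\mathrm{p}}$ to estimate the size of subclasses of E (and call it dimension in E). Important: every set has a dimension. Notice that $\dim_{\mathrm{p}}(X) < 1$ implies $X \not\supseteq \mathrm{E}$ (since $\dim_{\mathrm{p}}(\mathrm{E}) = 1$ and $\dim_{\mathrm{p}}$ is monotone) • The same holds for $\dim_{\mathrm{p}_2}$ inside EXP (dimension in EXP), etc. • I will also mention a dimension to be used inside PSPACE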

My goal today • I will use resource-bounded dimension to estimate the size of interesting subclasses of E, EXP and PSPACE • If I show that a subclass X of E has dimension 0 (or dimension < 1) in E, this means: – X is much smaller than E (most elements of E are outside X) – it is easy to construct an element of E outside X (I can even combine this with other dimension-0 properties) • Today I will be looking at learnable subclasses

My goal today • We want to use dimension to compare the power of different learning models • We also want to estimate how many languages can be learned

Contents 1. Resource-bounded dimension 2. Learning models 3. A few results on the size of learnable classes 4. Consequences

Learning algorithms • The teacher has in mind a finite set $T \subseteq \{0,1\}^n$, the concept • The learner's goal is to identify $T$ exactly, by asking the teacher queries or making guesses about $T$ • The teacher is faithful but adversarial • The learner is an algorithm with limited resources

Learning … • Learning algorithms are extensively used in practical applications • Learning is also interesting as an alternative formalism for information content

Two learning models • Online mistake-bound model (Littlestone) • PAC-learning (Valiant)

Littlestone model (online mistake-bound model) • Let the concept be $T \subseteq \{0,1\}^n$ • The learner receives a series of cases $x_1, x_2, \ldots$ from $\{0,1\}^n$ • For each of them the learner guesses whether it belongs to $T$ • After guessing on case $x_i$ the learner receives the correct answer

Littlestone model • “Online mistake-bound model” • The following are restricted: – the maximum number of mistakes – the time to guess on case $x_i$, in terms of $n$ and $i$
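To make the protocol concrete, here is a minimal Python sketch of one online learner, the halving algorithm. It is a standard textbook learner, not something specific to this talk. It predicts by majority vote of the concepts still consistent with the answers received so far. Each mistake therefore at least halves that set, so for a finite class $C$ the number of mistakes is at most $\log_2 |C|$. The concept class (monotone conjunctions over $n = 4$ variables) and all names are illustrative assumptions. The sketch only controls the number of mistakes: its time per guess grows with the size of the class, which the Littlestone model also restricts.

```python
from itertools import product
from typing import FrozenSet, Iterable, List, Tuple

Point = Tuple[int, ...]


def halving_learner(concept_class: List[FrozenSet[Point]],
                    target: FrozenSet[Point],
                    cases: Iterable[Point]) -> int:
    """Run the online mistake-bound protocol and return the number of mistakes."""
    version_space = list(concept_class)  # concepts consistent with all answers so far
    mistakes = 0
    for x in cases:
        votes = sum(1 for c in version_space if x in c)
        guess = 2 * votes > len(version_space)  # majority vote; ties guess "not in T"
        answer = x in target                    # teacher reveals the correct answer
        if guess != answer:
            mistakes += 1                       # wrong majority: at least half is dropped
        version_space = [c for c in version_space if (x in c) == answer]
    return mistakes


# Illustrative class: monotone conjunctions over n = 4 variables (16 concepts).
n = 4
points = list(product((0, 1), repeat=n))
conjunctions = [
    frozenset(x for x in points if all(x[j] for j in range(n) if mask[j]))
    for mask in product((0, 1), repeat=n)
]
worst = max(halving_learner(conjunctions, t, points) for t in conjunctions)
print(worst)  # at most log2(16) = 4
```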

PAC-learning • A PAC-learner is a polynomial-time probabilistic algorithm $A$ that, given $n$, $\varepsilon$, and $\delta$, produces a list of random membership queries $q_1, \ldots, q_t$ to the concept $T \subseteq \{0,1\}^n$ and from the answers computes a hypothesis $A(n, \varepsilon, \delta)$ that is “$\varepsilon$-close to the concept with probability $1 - \delta$” • Membership query $q$: is $q$ in the concept?

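PAC-learning • An algorithm $A$ PAC-learns a class $C$ if – $A$ is a probabilistic algorithm running in polynomial time – for every $L$ in $C$ and for every $n$ (with $T = L^{=n}$) – for every $\varepsilon > 0$ and every $\delta > 0$ – $A$ outputs a concept $A^L(n, r, \varepsilon, \delta)$ with $\Pr\big(\|A^L(n, r, \varepsilon, \delta) \mathbin{\triangle} L^{=n}\| < \varepsilon 2^n\big) > 1 - \delta$ • Here $r$ is the size of the representation of $L^{=n}$, and $\|\cdot\|$ denotes cardinality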
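The Python sketch below illustrates the definition with an example that is not one of the classes studied in the talk. It PAC-learns monotone conjunctions over $n$ variables under the uniform distribution, which corresponds to random membership queries to $T = L^{=n}$. It uses the classic elimination algorithm: start with all variables and drop every variable that is 0 in some positive example. The output is always consistent with the answers, and there are $2^n$ monotone conjunctions. The standard bound for consistent learners then says that $m = \lceil (n\ln 2 + \ln(1/\delta))/\varepsilon \rceil$ random queries give error below $\varepsilon$ with probability at least $1 - \delta$. The helper `error` computes the normalized symmetric difference by brute force, only to check the result. All names are hypothetical.

```python
import math
import random
from itertools import product
from typing import Callable, FrozenSet, Tuple

Point = Tuple[int, ...]


def pac_learn_monotone_conjunction(n: int, eps: float, delta: float,
                                   member: Callable[[Point], bool],
                                   rng: random.Random) -> FrozenSet[int]:
    """Return the variables of a hypothesis conjunction learned from random queries."""
    m = math.ceil((n * math.log(2) + math.log(1 / delta)) / eps)  # sample size
    kept = set(range(n))                         # start with x_0 AND ... AND x_{n-1}
    for _ in range(m):
        q = tuple(rng.randint(0, 1) for _ in range(n))  # uniform random query
        if member(q):                            # positive example: drop its zero bits
            kept -= {j for j in range(n) if q[j] == 0}
    return frozenset(kept)


def error(n: int, hypothesis: FrozenSet[int], target: FrozenSet[int]) -> float:
    """Exact |hypothesis symmetric-difference target| / 2^n, by enumerating {0,1}^n."""
    def satisfies(x: Point, variables: FrozenSet[int]) -> bool:
        return all(x[j] for j in variables)
    diff = sum(1 for x in product((0, 1), repeat=n)
               if satisfies(x, hypothesis) != satisfies(x, target))
    return diff / 2 ** n


n, eps, delta = 10, 0.1, 1e-6
target = frozenset({1, 4, 7})                   # hidden concept: x_1 AND x_4 AND x_7


def member(x: Point) -> bool:
    """Membership oracle for the hidden concept."""
    return all(x[j] for j in target)


h = pac_learn_monotone_conjunction(n, eps, delta, member, random.Random(0))
print(sorted(h), error(n, h, target))
```

Since the hypothesis only keeps variables, it never accepts a string outside $T$. All of its errors are false negatives.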

What can be PAC-learned • $\mathrm{AC}^0$ • Everything can be $\mathrm{PAC}^{\mathrm{NP}}$-learned • Note: we are especially interested in learning parts of P/poly, the languages that have polynomial-size representations

Related work • Lindner, Schuler, and Watanabe (2000) study the size of PAC-learnable classes using resource-bounded measure • Hitchcock (2000) looked at the online mistake-bound model for a particular case (sublinear number of mistakes)

Contents 1. Resource-bounded dimension 2. Learning models 3. A few results on the size of learnable classes 4. Consequences

Our result • Theorem: If EXP ≠ MA, then every PAC-learnable subclass of P/poly has dimension 0 in EXP • In other words: if weak pseudorandom generators exist, then every PAC-learnable class (with polynomial-size representations) has dimension 0 in EXP


Immediate consequences • From [Regan et al]: if strong pseudorandom generators exist, then P/poly has dimension 1 in EXP • So under this hypothesis most of P/poly cannot be PAC-learned

Further results • Every class that can be PAC-learned with polylog space has dimension 0 in PSPACE

Littlestone • Theorem: For each $a \le 1/2$, every class that is Littlestone-learnable with at most $a 2^n$ mistakes has dimension at most $H(a) = -a \log a - (1-a) \log(1-a)$ in $\mathrm{E} = \mathrm{DTIME}(2^{O(n)})$
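One way to see where $H(a)$ comes from is a counting argument. This is our own gloss; the talk does not spell out the proof. Suppose the cases are presented in a fixed order and a deterministic learner makes at most $a2^n$ mistakes. Then the concept at length $n$ is determined by the learner together with the positions of its mistakes among the $N = 2^n$ cases. For $a \le 1/2$ there are at most $\sum_{k \le aN} \binom{N}{k} \le 2^{H(a)N}$ such position sets. The Python sketch below checks numerically that $\frac{1}{N}\log_2 \sum_{k \le aN}\binom{N}{k}$ stays below $H(a)$ and approaches it as $n$ grows, with logarithms in base 2. The function names are illustrative.

```python
import math


def binary_entropy(a: float) -> float:
    """H(a) = -a log2 a - (1 - a) log2 (1 - a), with H(0) = H(1) = 0."""
    if a in (0.0, 1.0):
        return 0.0
    return -a * math.log2(a) - (1 - a) * math.log2(1 - a)


def mistake_pattern_rate(n: int, a: float) -> float:
    """(1/N) log2 of the number of ways to place at most a*N mistakes among N = 2^n cases."""
    N = 2 ** n
    count = sum(math.comb(N, k) for k in range(math.floor(a * N) + 1))
    return math.log2(count) / N


a = 0.25
for n in (4, 8, 12):
    print(n, mistake_pattern_rate(n, a), binary_entropy(a))
```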


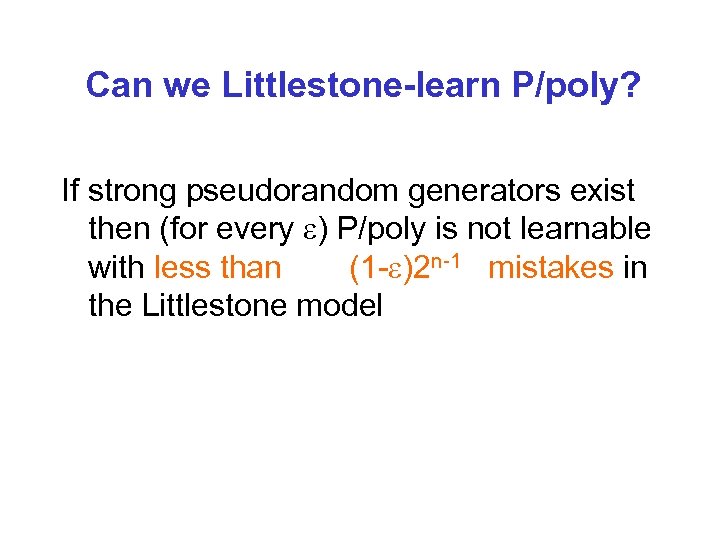

Can we Littlestone-learn P/poly? • We mentioned that, from [Regan et al], if strong pseudorandom generators exist then P/poly has dimension 1 in EXP

Can we Littlestone-learn P/poly? • If strong pseudorandom generators exist then (for every $\varepsilon > 0$) P/poly is not learnable with fewer than $(1 - \varepsilon) 2^{n-1}$ mistakes in the Littlestone model

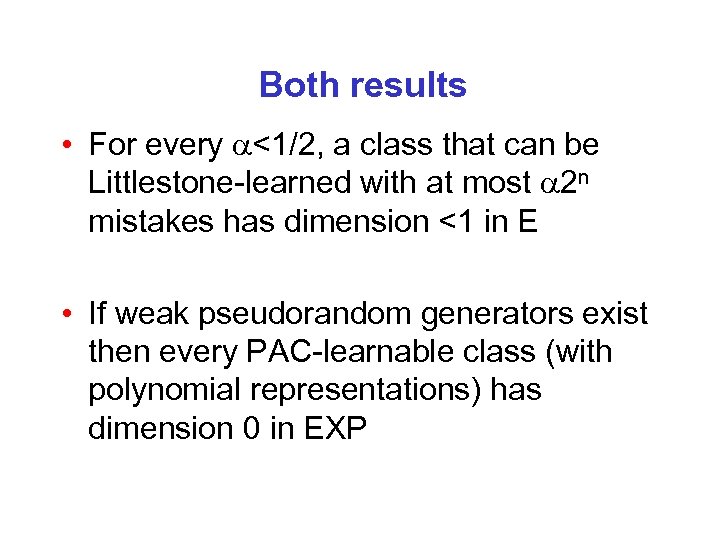
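The threshold $2^{n-1}$ is half of the $N = 2^n$ cases at length $n$. That is what any learner pays against a concept chosen uniformly at random: each answer is an independent fair coin, so whatever the learner guesses, it errs with probability $1/2$. The Python simulation below is an illustration only. Concepts in P/poly are not random, and the result above comes from dimension, not from this argument. It runs one hypothetical learner (majority vote over past answers) and counts its mistakes.

```python
import random


def mistakes_on_random_concept(N: int, rng: random.Random) -> int:
    """Mistakes of a majority-vote online learner on N cases with fair-coin labels."""
    ones = zeros = mistakes = 0
    for _ in range(N):
        guess = ones > zeros            # predict the majority answer seen so far
        answer = rng.random() < 0.5     # the random concept's label for this case
        if guess != answer:
            mistakes += 1
        if answer:
            ones += 1
        else:
            zeros += 1
    return mistakes


N = 2 ** 12
print(mistakes_on_random_concept(N, random.Random(1)), N // 2)
```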

Both results • For every $a < 1/2$, a class that can be Littlestone-learned with at most $a 2^n$ mistakes has dimension < 1 in E • If weak pseudorandom generators exist then every PAC-learnable class (with polynomial-size representations) has dimension 0 in EXP

Comparison • It is not clear how to go from PAC to Littlestone (or vice versa) • We can go – from equivalence queries to PAC – from equivalence queries to Littlestone

Directions • Look at other models for exact learning (membership, equivalence). • Find quantitative results that separate them.
