cf999634122b24f7d3d33668c3fc85ad.ppt

- Количество слайдов: 125

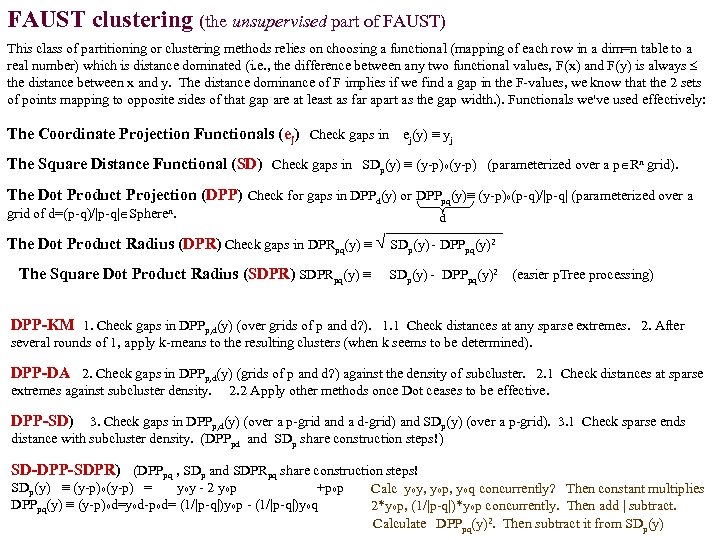

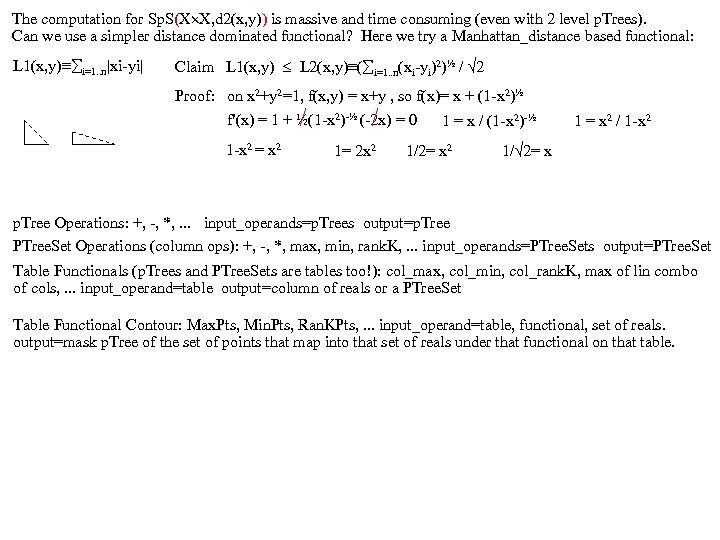

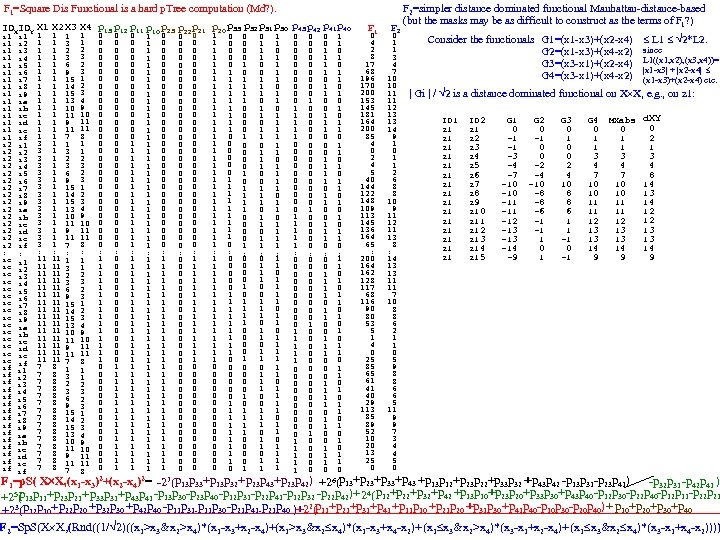

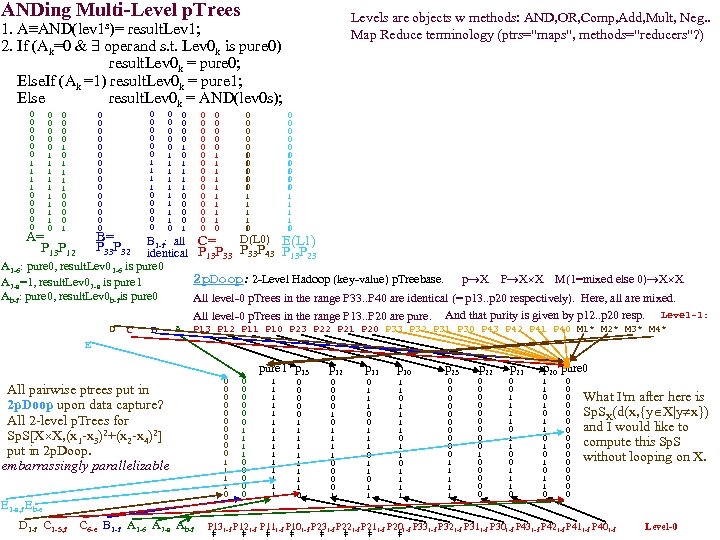

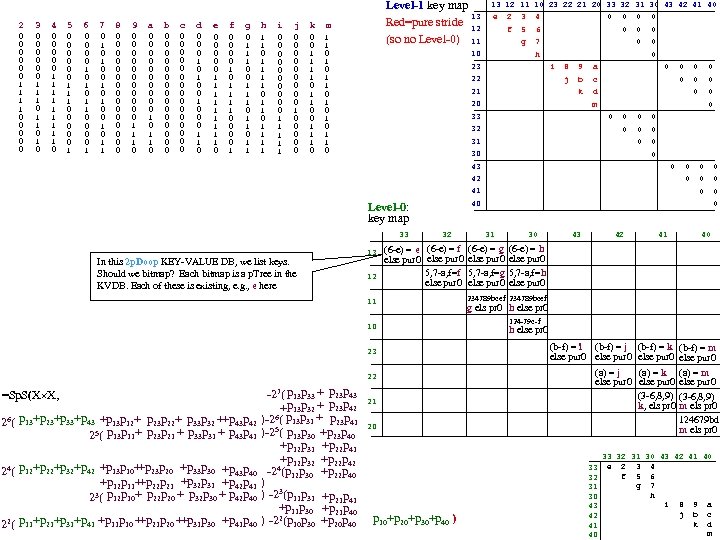

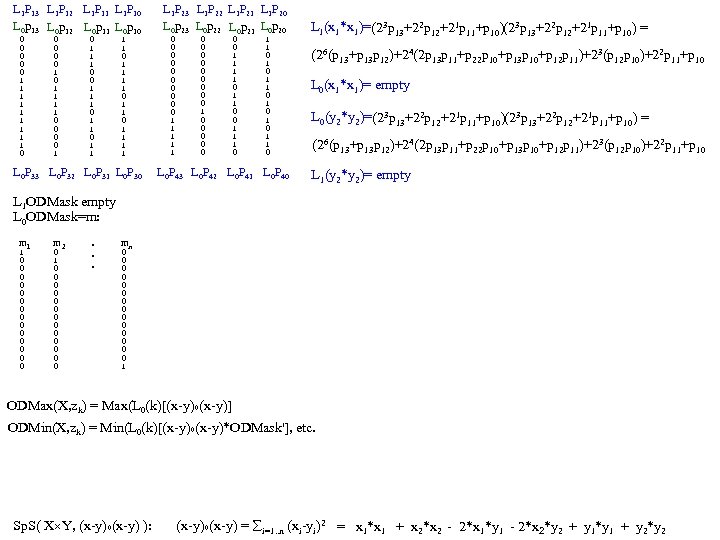

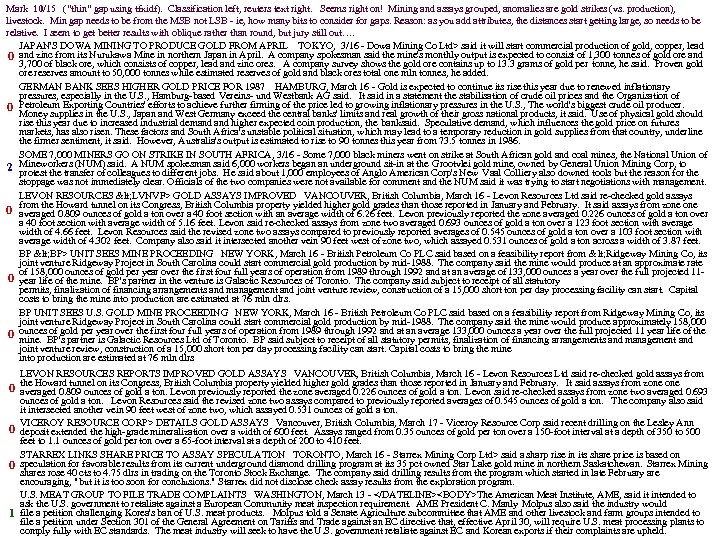

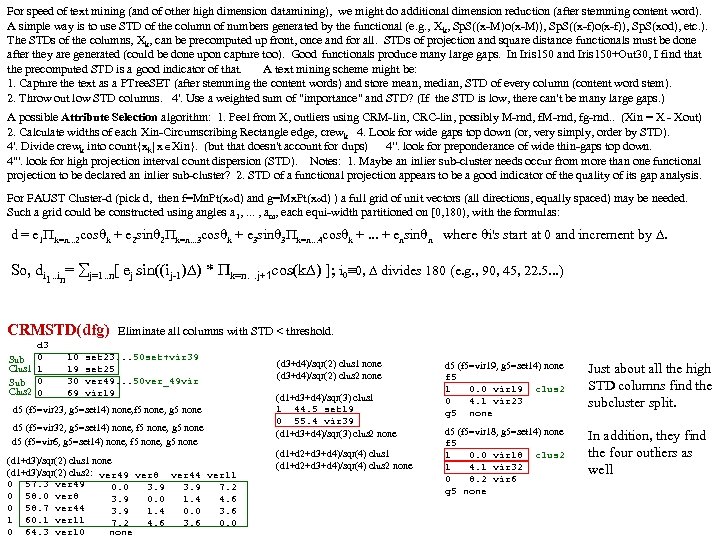

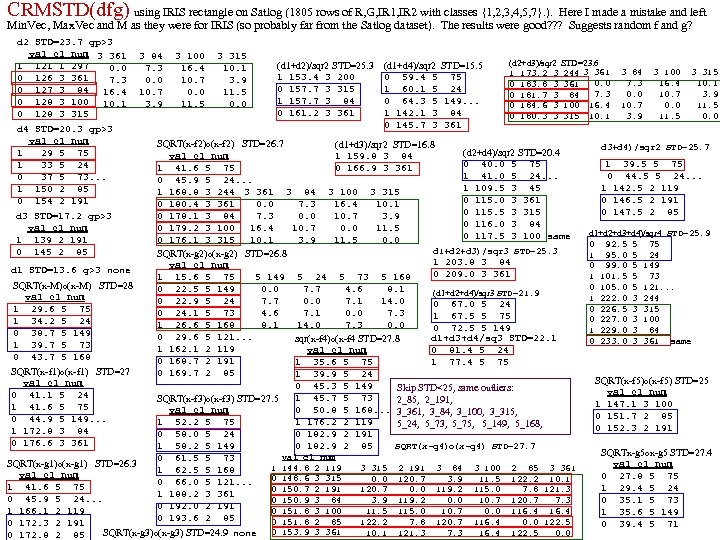

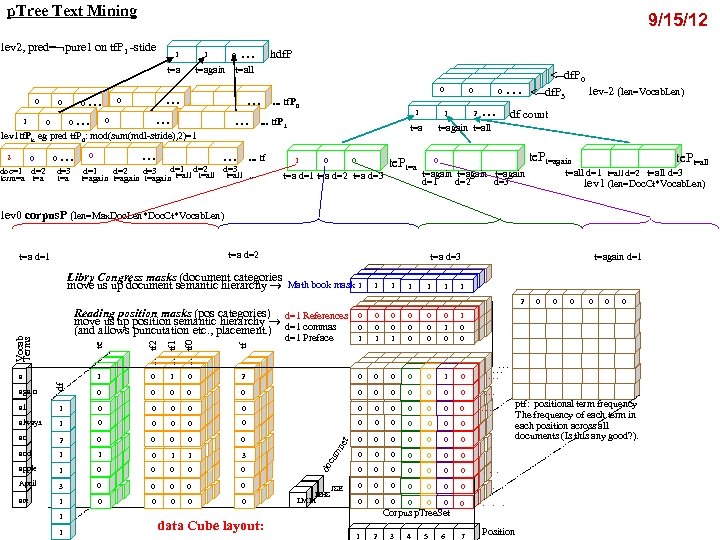

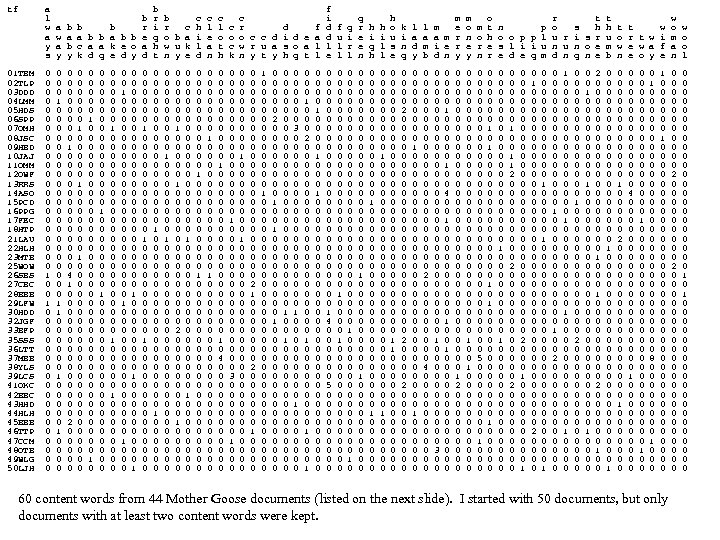

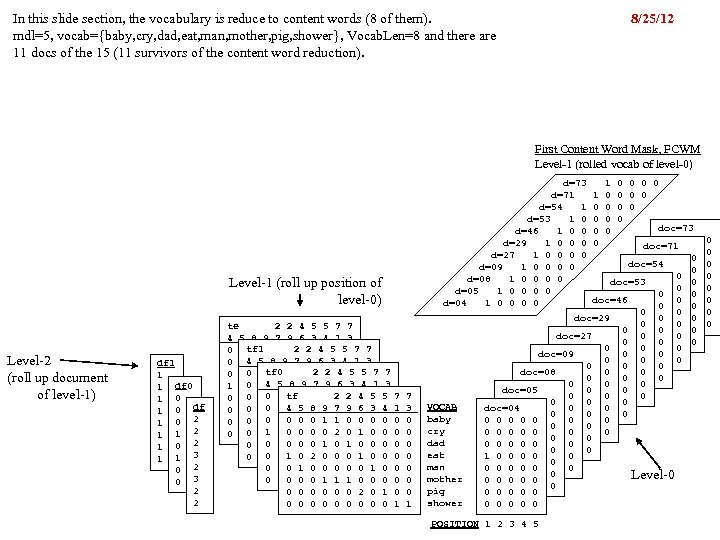

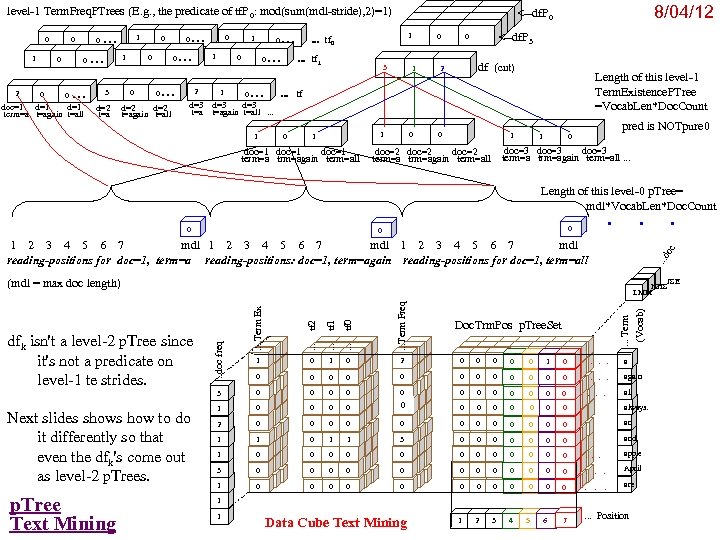

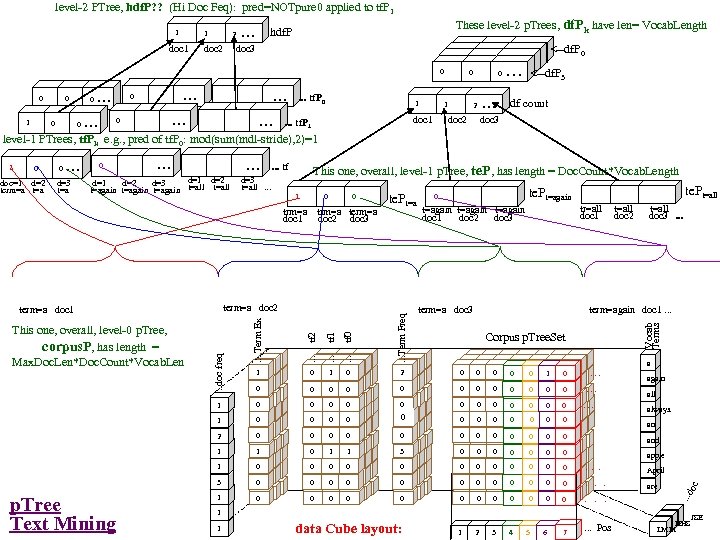

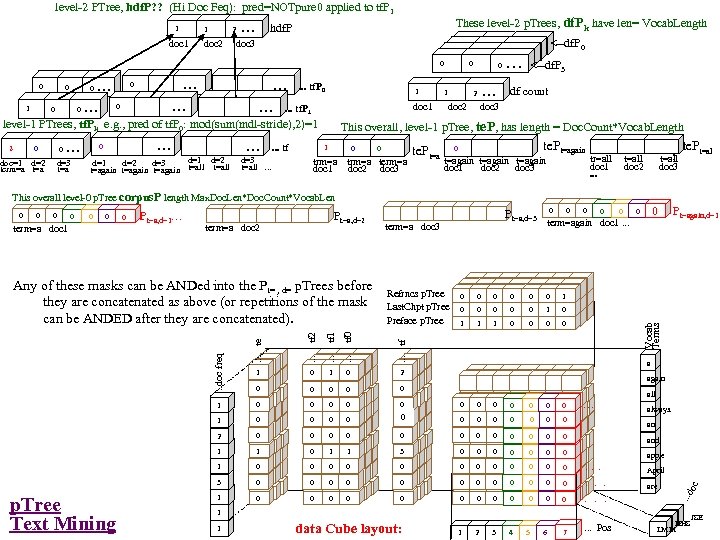

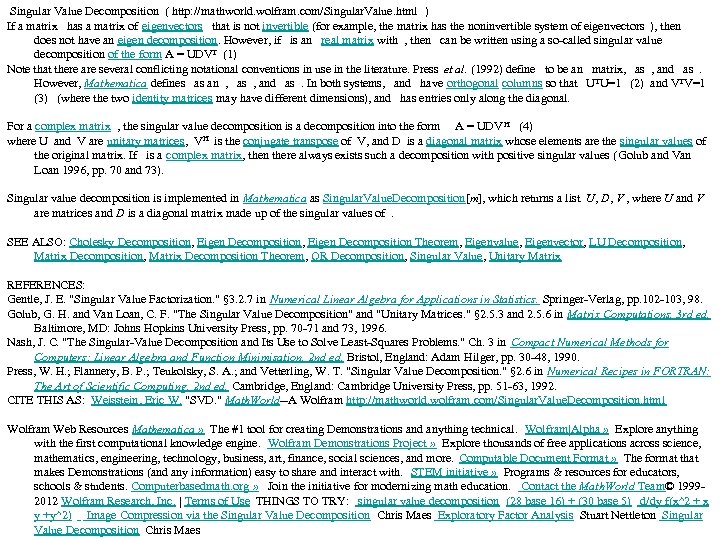

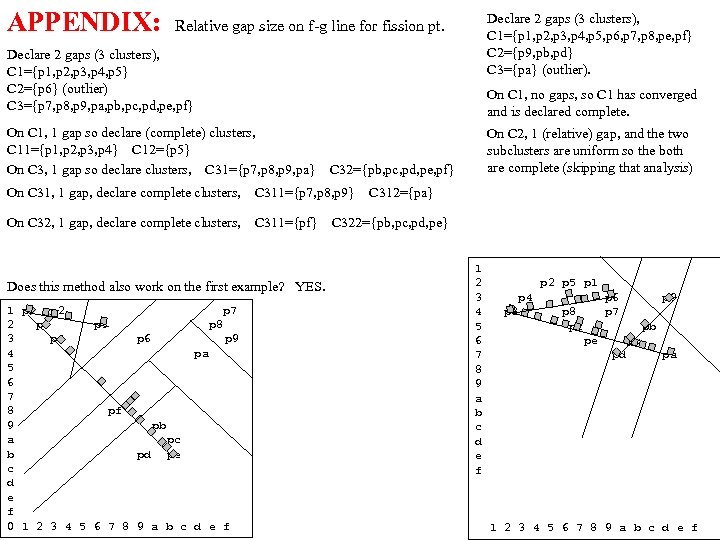

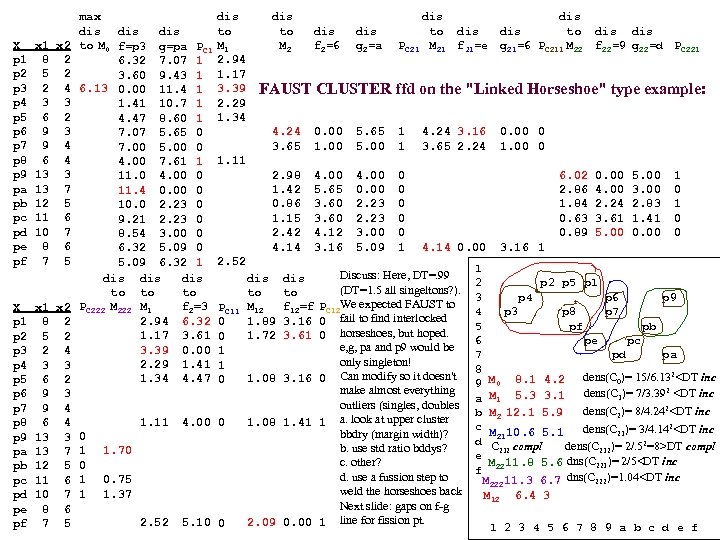

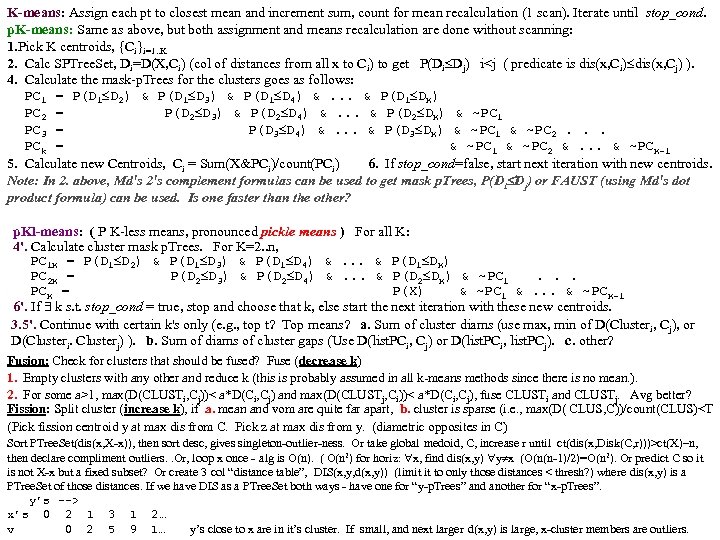

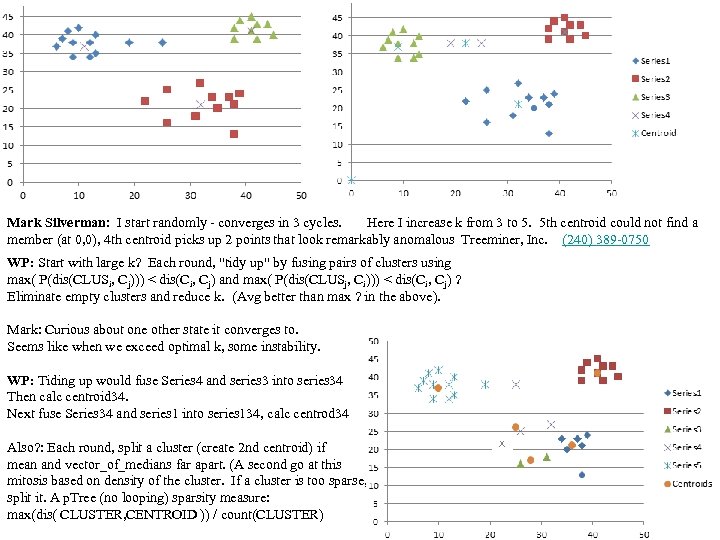

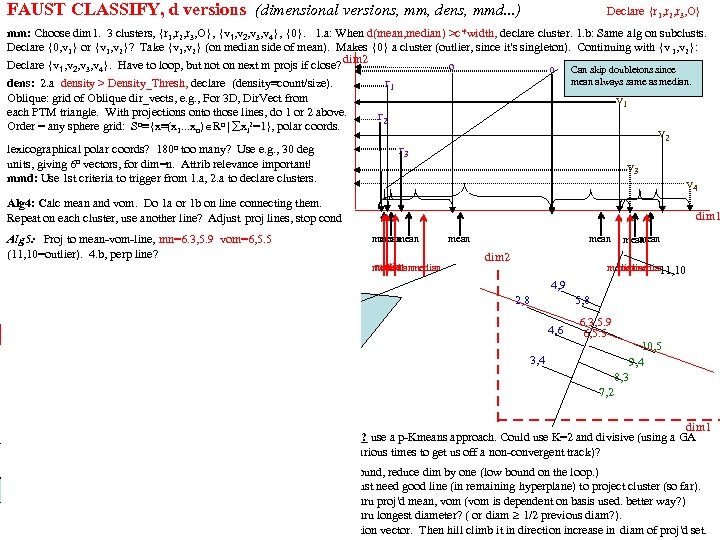

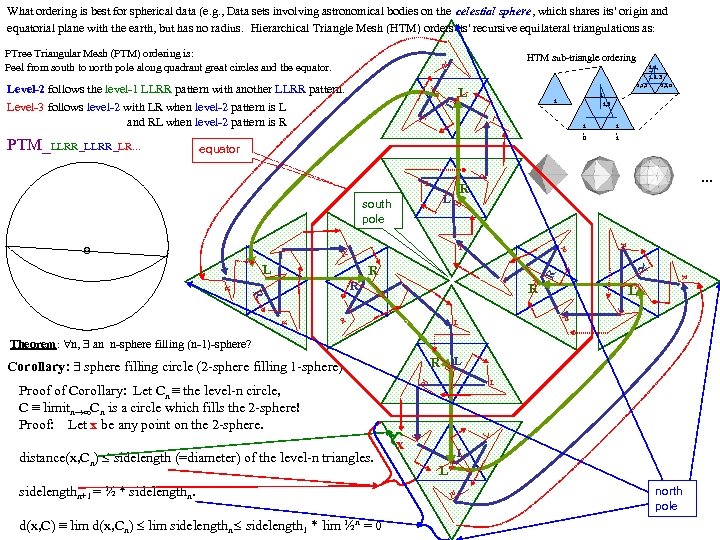

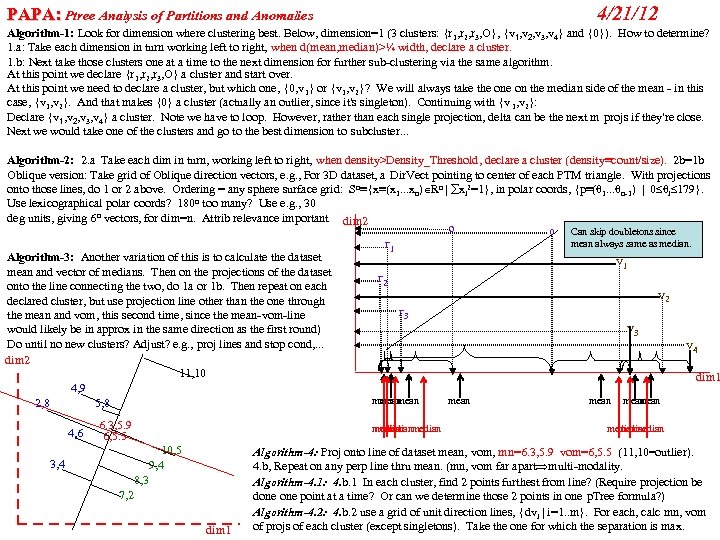

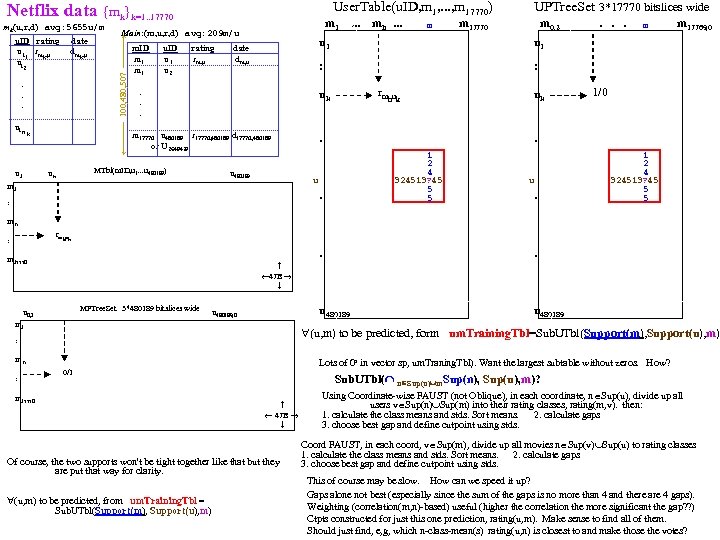

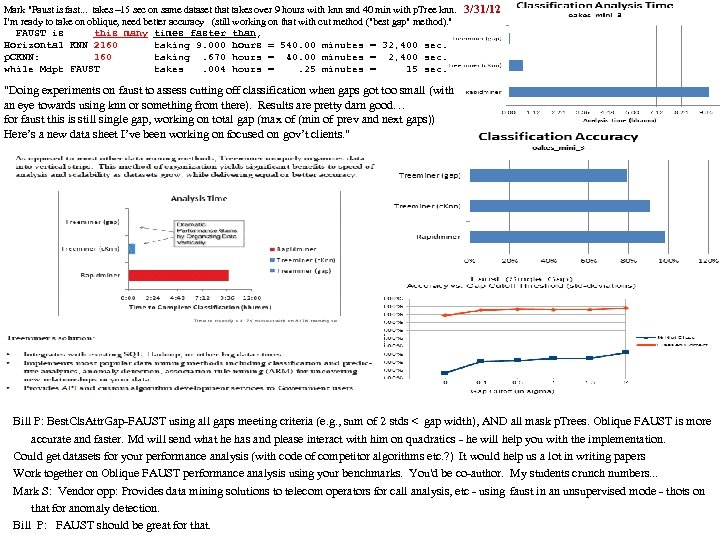

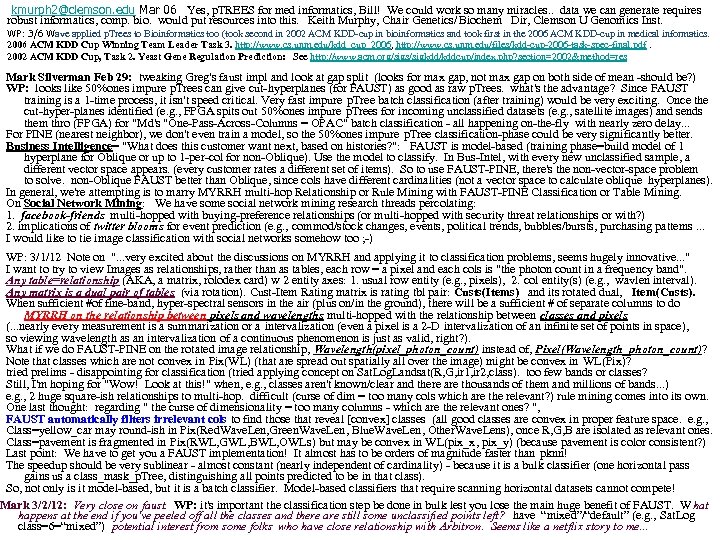

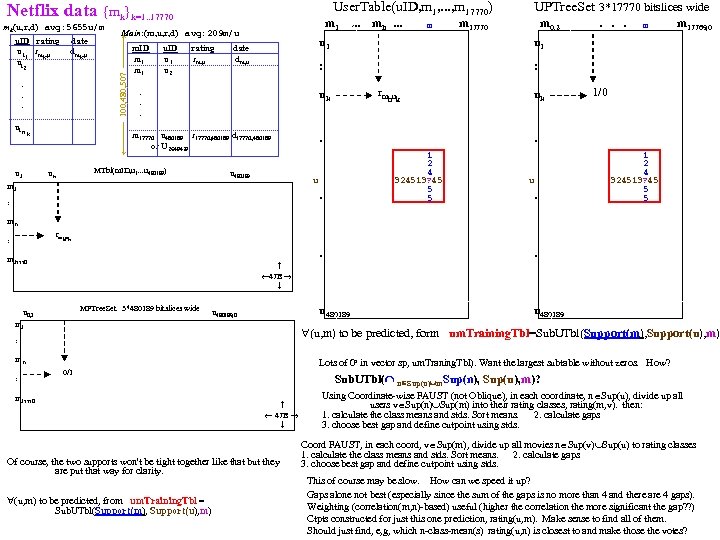

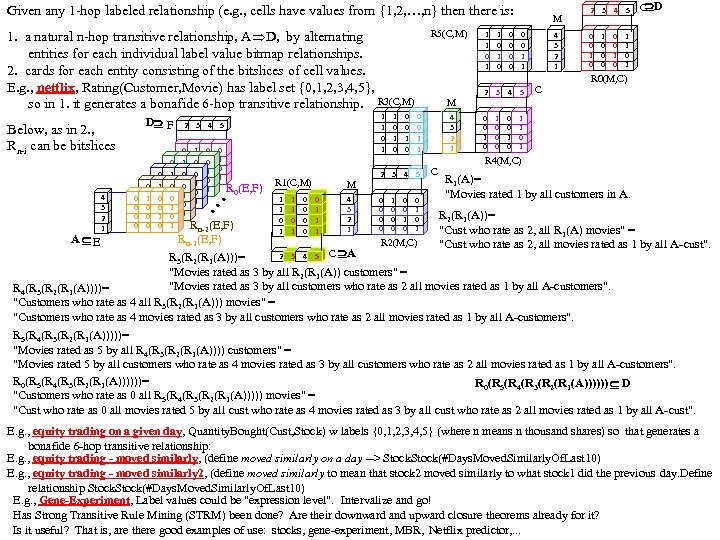

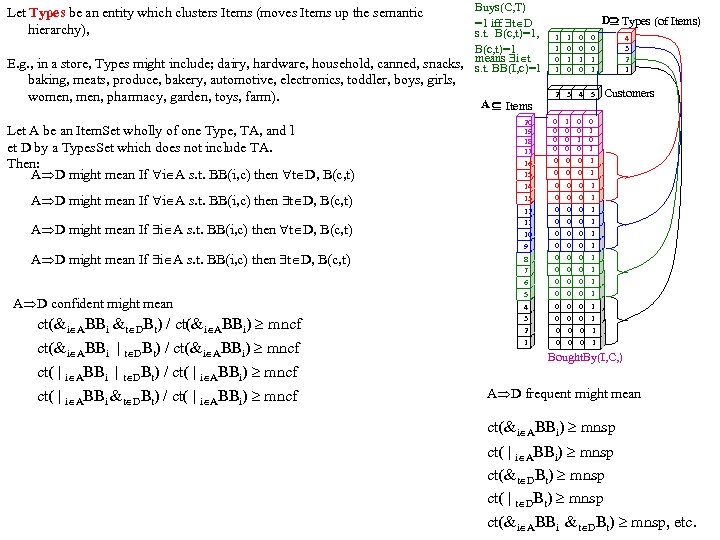

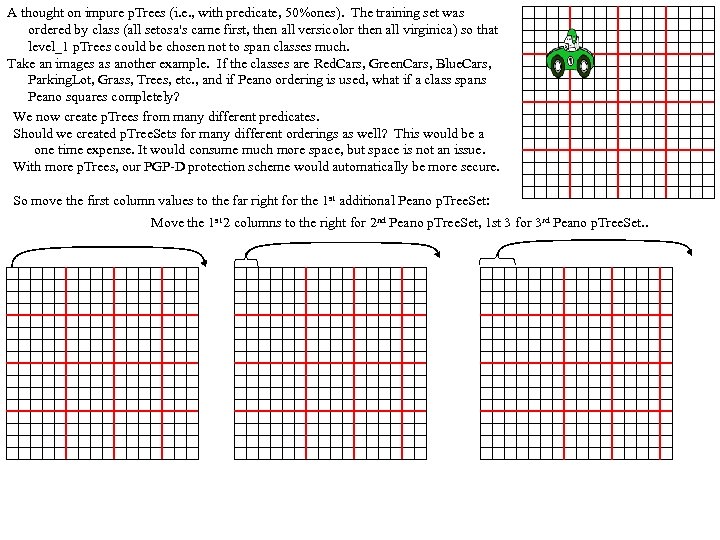

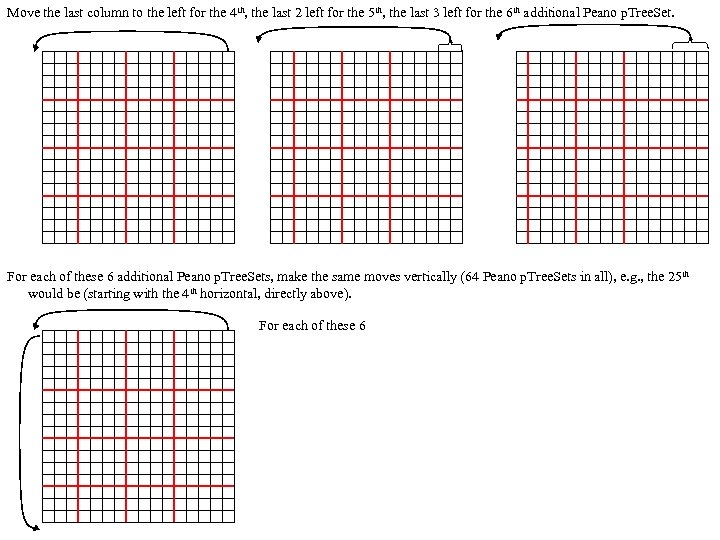

Research of William Perrizo, C. S. Department, NDSU I datamine big data (big data ≡ trillions of rows and, sometimes, thousands of columns (which can complicate data mining trillions of rows). How do I do it? I structure the data table as [compressed] vertical bit columns (called "predicate Trees" or " p. Trees"). I process those p. Trees horizontally (because processing across thousands of column structures is orders of magnitude faster than processing down trillions of row structures. As a result, some tasks that might have taken forever can be done in a humanly acceptable amount of time. What is data mining? Largely it is classification (assigning a class label to a row based on a training table of previously classified rows). Clustering and Association Rule Mining (ARM) are important areas of data mining also, and they are related to classification. The purpose of clustering is usually to create [or improve] a training table. It is also used for anomaly detection, a huge area in data mining. ARM is used to data mine more complex data (relationship matrixes between two entities, not just single entity training tables). Recommenders recommend products to customers based on their previous purchases or rents (or based on their ratings of items)". To make a decision, we typically search our memory for similar situations (near neighbor cases) and base our decision on the decisions we (or an expert) made in those similar cases. We do what worked before (for us or for others). I. e. , we let near neighbor cases vote. But which neighbor vote? "The Magical Number Seven, Plus or Minus Two. . . " Information"[2] is one of the most highly cited papers in psychology cognitive psychologist George A. Miller of Princeton University's Department of Psychology in Psychological Review. It argues that the number of objects an average human can hold in working memory is 7 ± 2 (called Miller's Law). Classification provides a better 7. Some current p. Tree Data Mining research projects 1. Map. Reduce FAUST (FAUST= Functional Analytic Unsupervised and Supervised machine Teaching): Map. Reduce and Hadoop are key-value approaches to organizing and managing Big. Data. In FAUST CLASSIFY we start with a Training TABLE and in FAUST CLUSTER we start with a vector space. 2. p. Tree Text Mining: : I am trying to capturiethe reading sequence, not just the term-frequency matrix (lossless capture) of a text corpus. Preliminary work on the term frequency matrix suggests that attribute selection via simple Standard Deviations really helps (select those columns with high St. D because of their separation potential. =). 3. FAUST CLUSTER/ANOMALASER: This is a method finding anomalies very quickly. 4. Secure p. Tree. Bases: This involves anonymizing the identities of the individual p. Trees and randomly padding them to mask their initial bit positions. 5. FAUST PREDICTOR/CLASSIFIER: This technology is described above. 6. p. Tree Algorithmic Tools: An expanded algorithmic tool set is being developed to include quadratic tools and even higher degree tools. 7. p. Tree Alternative Algorithm Implementation: Implementing p. Tree algorithms in hardware (e. g. , FPGAs) should result in orders of magnitude performance increases? 8. p. Tree O/S Infrastructure: Computers and Operating Systems are designed to do logical operations (AND, OR. . . ) rapidly. Exploit this for p. Tree processing speed. 9. p. Tree Recommenders: This includes, Singular Value Decomposition (SVD) recommenders, p. Tree Near Neighbor Recommenders and p. Tree ARM Recommenders.

Research of William Perrizo, C. S. Department, NDSU I datamine big data (big data ≡ trillions of rows and, sometimes, thousands of columns (which can complicate data mining trillions of rows). How do I do it? I structure the data table as [compressed] vertical bit columns (called "predicate Trees" or " p. Trees"). I process those p. Trees horizontally (because processing across thousands of column structures is orders of magnitude faster than processing down trillions of row structures. As a result, some tasks that might have taken forever can be done in a humanly acceptable amount of time. What is data mining? Largely it is classification (assigning a class label to a row based on a training table of previously classified rows). Clustering and Association Rule Mining (ARM) are important areas of data mining also, and they are related to classification. The purpose of clustering is usually to create [or improve] a training table. It is also used for anomaly detection, a huge area in data mining. ARM is used to data mine more complex data (relationship matrixes between two entities, not just single entity training tables). Recommenders recommend products to customers based on their previous purchases or rents (or based on their ratings of items)". To make a decision, we typically search our memory for similar situations (near neighbor cases) and base our decision on the decisions we (or an expert) made in those similar cases. We do what worked before (for us or for others). I. e. , we let near neighbor cases vote. But which neighbor vote? "The Magical Number Seven, Plus or Minus Two. . . " Information"[2] is one of the most highly cited papers in psychology cognitive psychologist George A. Miller of Princeton University's Department of Psychology in Psychological Review. It argues that the number of objects an average human can hold in working memory is 7 ± 2 (called Miller's Law). Classification provides a better 7. Some current p. Tree Data Mining research projects 1. Map. Reduce FAUST (FAUST= Functional Analytic Unsupervised and Supervised machine Teaching): Map. Reduce and Hadoop are key-value approaches to organizing and managing Big. Data. In FAUST CLASSIFY we start with a Training TABLE and in FAUST CLUSTER we start with a vector space. 2. p. Tree Text Mining: : I am trying to capturiethe reading sequence, not just the term-frequency matrix (lossless capture) of a text corpus. Preliminary work on the term frequency matrix suggests that attribute selection via simple Standard Deviations really helps (select those columns with high St. D because of their separation potential. =). 3. FAUST CLUSTER/ANOMALASER: This is a method finding anomalies very quickly. 4. Secure p. Tree. Bases: This involves anonymizing the identities of the individual p. Trees and randomly padding them to mask their initial bit positions. 5. FAUST PREDICTOR/CLASSIFIER: This technology is described above. 6. p. Tree Algorithmic Tools: An expanded algorithmic tool set is being developed to include quadratic tools and even higher degree tools. 7. p. Tree Alternative Algorithm Implementation: Implementing p. Tree algorithms in hardware (e. g. , FPGAs) should result in orders of magnitude performance increases? 8. p. Tree O/S Infrastructure: Computers and Operating Systems are designed to do logical operations (AND, OR. . . ) rapidly. Exploit this for p. Tree processing speed. 9. p. Tree Recommenders: This includes, Singular Value Decomposition (SVD) recommenders, p. Tree Near Neighbor Recommenders and p. Tree ARM Recommenders.

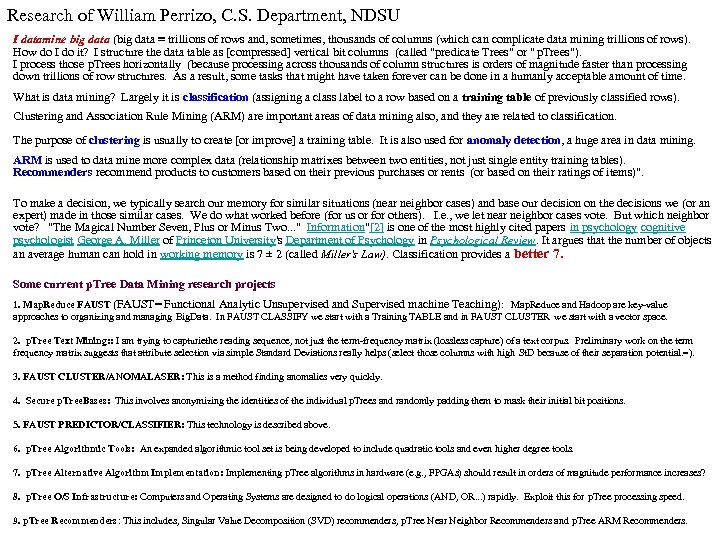

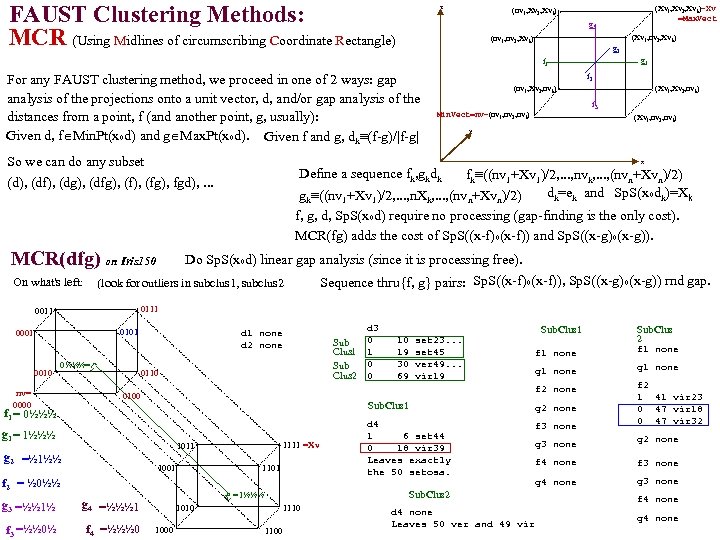

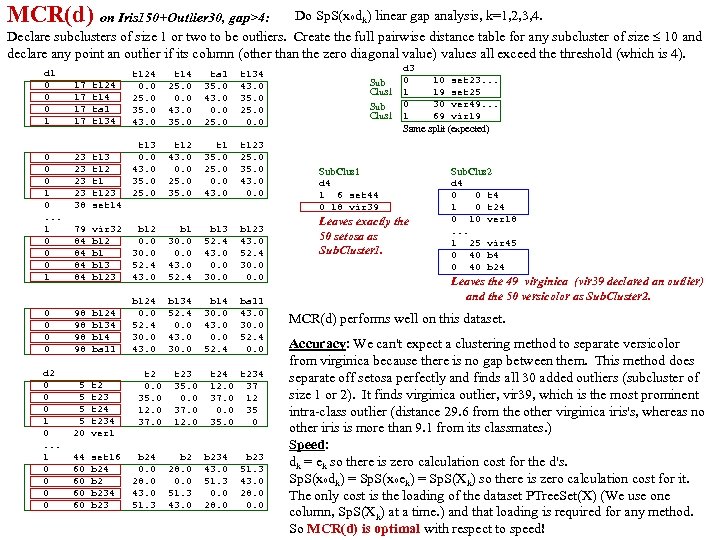

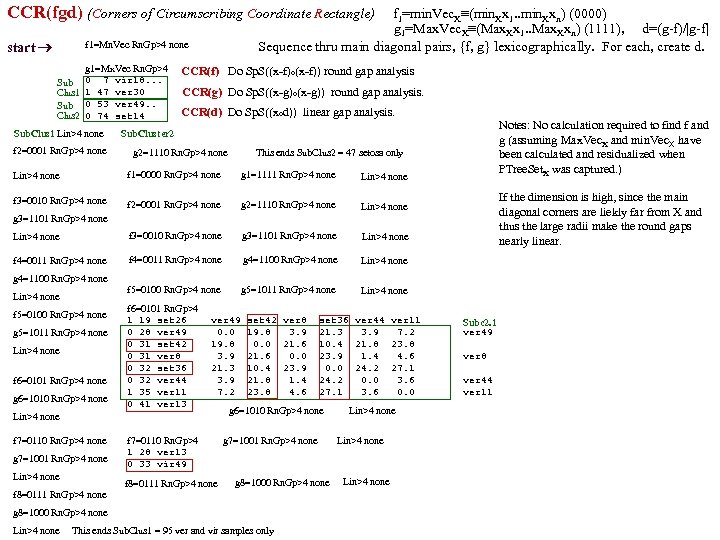

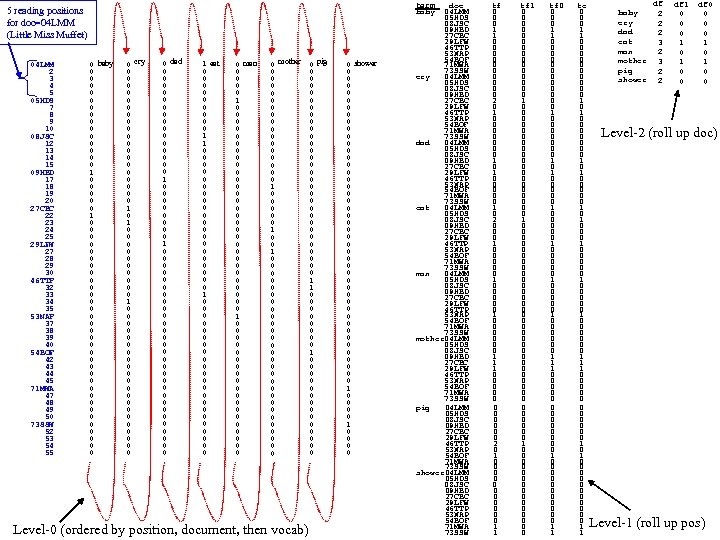

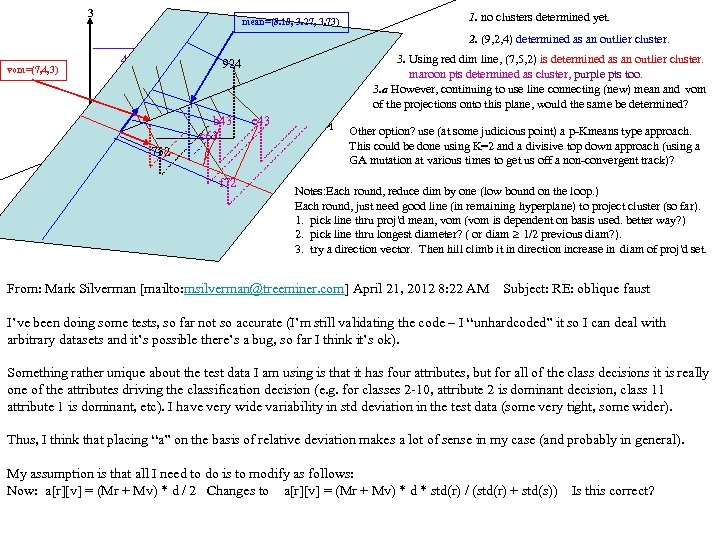

FAUST clustering (the unsupervised part of FAUST) This class of partitioning or clustering methods relies on choosing a functional (mapping of each row in a dim=n table to a real number) which is distance dominated (i. e. , the difference between any two functional values, F(x) and F(y) is always the distance between x and y. The distance dominance of F implies if we find a gap in the F-values, we know that the 2 sets of points mapping to opposite sides of that gap are at least as far apart as the gap width. ). Functionals we've used effectively: The Coordinate Projection Functionals (ej) Check gaps in ej(y) ≡ yj The Square Distance Functional (SD) Check gaps in SDp(y) ≡ (y-p)o(y-p) (parameterized over a p Rn grid). The Dot Product Projection (DPP) Check for gaps in DPPd(y) or DPPpq(y)≡ (y-p)o(p-q)/|p-q| (parameterized over a grid of d=(p-q)/|p-q| Spheren. d The Dot Product Radius (DPR) Check gaps in DPRpq(y) ≡ √ SDp(y) - DPPpq(y)2 The Square Dot Product Radius (SDPR) SDPRpq(y) ≡ SDp(y) - DPPpq(y)2 (easier p. Tree processing) DPP-KM 1. Check gaps in DPPp, d(y) (over grids of p and d? ). 1. 1 Check distances at any sparse extremes. 2. After several rounds of 1, apply k-means to the resulting clusters (when k seems to be determined). DPP-DA 2. Check gaps in DPPp, d(y) (grids of p and d? ) against the density of subcluster. 2. 1 Check distances at sparse extremes against subcluster density. 2. 2 Apply other methods once Dot ceases to be effective. DPP-SD) 3. Check gaps in DPPp, d(y) (over a p-grid and a d-grid) and SDp(y) (over a p-grid). 3. 1 Check sparse ends distance with subcluster density. (DPPpd and SDp share construction steps!) SD-DPP-SDPR) (DPPpq , SDp and SDPRpq share construction steps! SDp(y) ≡ (y-p)o(y-p) = yoy - 2 yop +pop Calc yoy, yop, yoq concurrently? Then constant multiplies DPPpq(y) ≡ (y-p)od=yod-pod= (1/|p-q|)yop - (1/|p-q|)yoq 2*yop, (1/|p-q|)*yop concurrently. Then add | subtract. Calculate DPPpq(y)2. Then subtract it from SDp(y)

FAUST clustering (the unsupervised part of FAUST) This class of partitioning or clustering methods relies on choosing a functional (mapping of each row in a dim=n table to a real number) which is distance dominated (i. e. , the difference between any two functional values, F(x) and F(y) is always the distance between x and y. The distance dominance of F implies if we find a gap in the F-values, we know that the 2 sets of points mapping to opposite sides of that gap are at least as far apart as the gap width. ). Functionals we've used effectively: The Coordinate Projection Functionals (ej) Check gaps in ej(y) ≡ yj The Square Distance Functional (SD) Check gaps in SDp(y) ≡ (y-p)o(y-p) (parameterized over a p Rn grid). The Dot Product Projection (DPP) Check for gaps in DPPd(y) or DPPpq(y)≡ (y-p)o(p-q)/|p-q| (parameterized over a grid of d=(p-q)/|p-q| Spheren. d The Dot Product Radius (DPR) Check gaps in DPRpq(y) ≡ √ SDp(y) - DPPpq(y)2 The Square Dot Product Radius (SDPR) SDPRpq(y) ≡ SDp(y) - DPPpq(y)2 (easier p. Tree processing) DPP-KM 1. Check gaps in DPPp, d(y) (over grids of p and d? ). 1. 1 Check distances at any sparse extremes. 2. After several rounds of 1, apply k-means to the resulting clusters (when k seems to be determined). DPP-DA 2. Check gaps in DPPp, d(y) (grids of p and d? ) against the density of subcluster. 2. 1 Check distances at sparse extremes against subcluster density. 2. 2 Apply other methods once Dot ceases to be effective. DPP-SD) 3. Check gaps in DPPp, d(y) (over a p-grid and a d-grid) and SDp(y) (over a p-grid). 3. 1 Check sparse ends distance with subcluster density. (DPPpd and SDp share construction steps!) SD-DPP-SDPR) (DPPpq , SDp and SDPRpq share construction steps! SDp(y) ≡ (y-p)o(y-p) = yoy - 2 yop +pop Calc yoy, yop, yoq concurrently? Then constant multiplies DPPpq(y) ≡ (y-p)od=yod-pod= (1/|p-q|)yop - (1/|p-q|)yoq 2*yop, (1/|p-q|)*yop concurrently. Then add | subtract. Calculate DPPpq(y)2. Then subtract it from SDp(y)

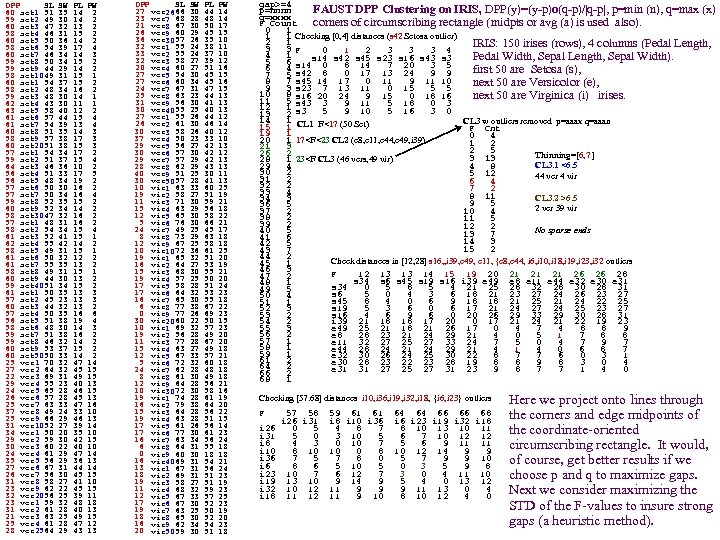

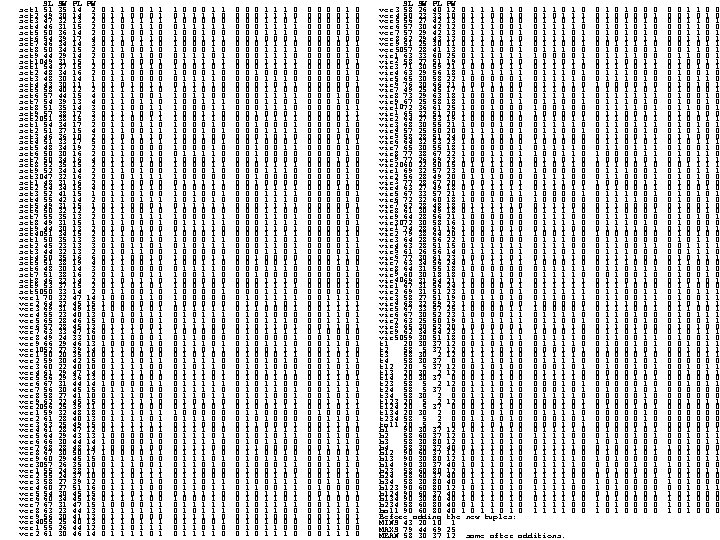

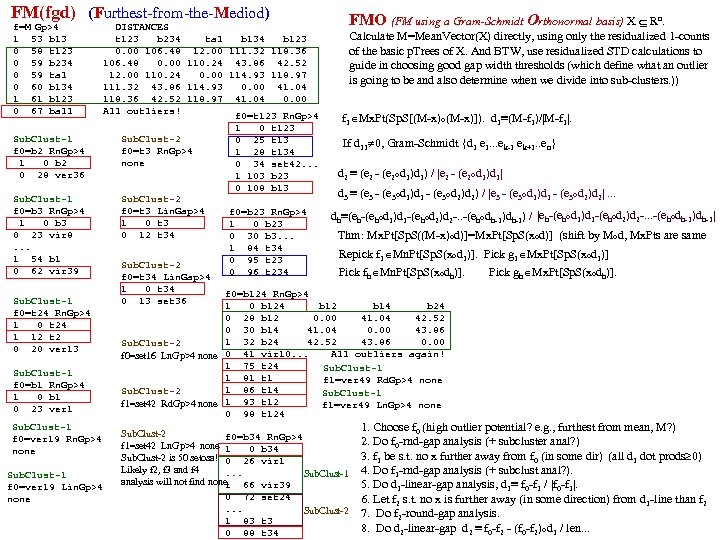

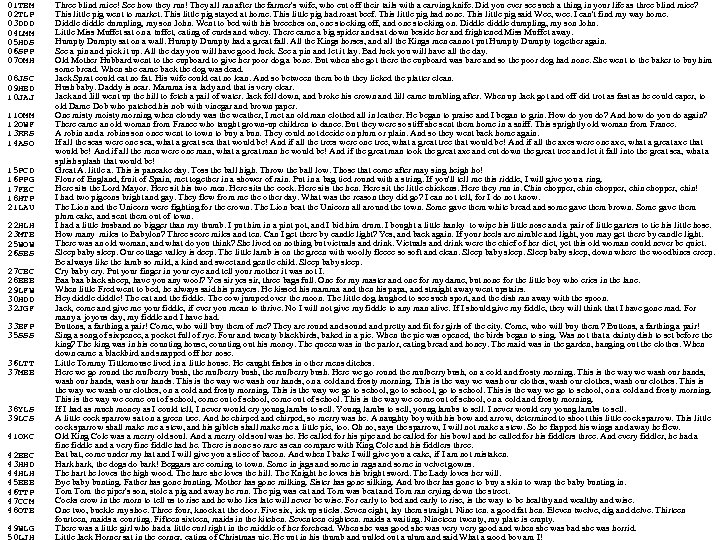

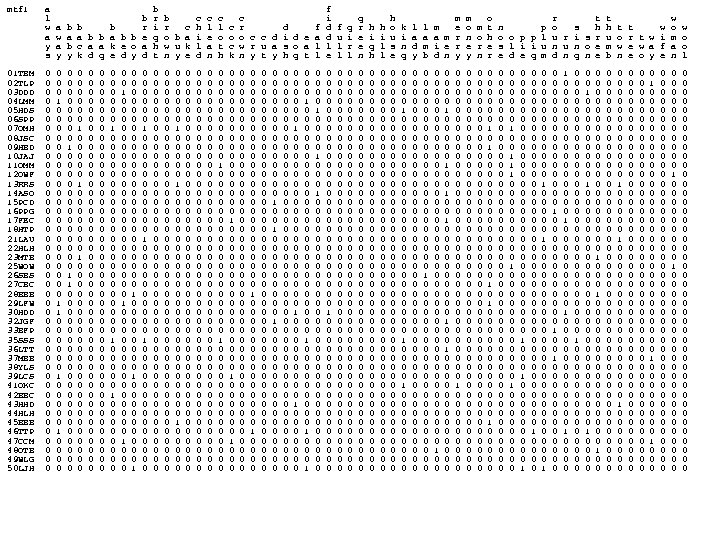

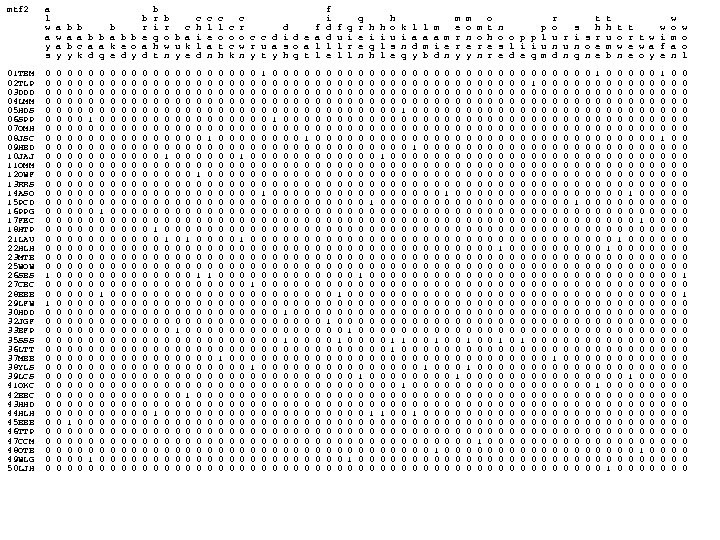

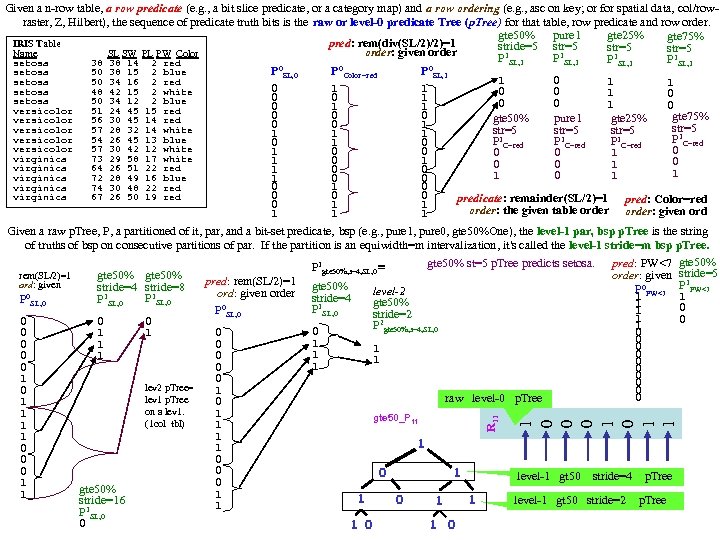

DPP SL SW PL PW 60 set 51 35 14 2 1 59 set 49 30 14 2 2 60 set 47 32 13 2 3 58 set 46 31 15 2 4 60 set 50 36 14 2 5 58 set 54 39 17 4 6 60 set 46 34 14 3 7 59 set 50 34 15 2 8 59 set 44 29 14 2 9 58 set 49 31 15 1 10 60 set 54 37 15 2 1 58 set 48 34 16 2 2 59 set 48 30 14 1 3 62 set 43 30 11 1 4 63 set 58 40 12 2 5 61 set 57 44 15 4 6 61 set 54 39 13 4 7 60 set 51 35 14 3 8 58 set 57 38 17 3 9 60 set 51 38 15 3 20 57 set 54 34 17 2 1 59 set 51 37 15 4 2 64 set 46 36 10 2 3 56 set 51 33 17 5 4 56 set 48 34 19 2 5 57 set 50 30 16 2 6 57 set 50 34 16 4 7 59 set 52 35 15 2 8 60 set 52 34 14 2 9 58 set 47 32 16 2 30 57 set 48 31 16 2 1 58 set 54 34 15 4 2 61 set 52 41 15 1 3 62 set 55 42 14 2 4 58 set 49 31 15 1 5 61 set 50 32 12 2 6 61 set 55 35 13 2 7 58 set 49 31 15 1 8 60 set 44 30 13 2 9 59 set 51 34 15 2 40 61 set 50 35 13 3 1 57 set 45 23 13 3 2 60 set 44 32 13 2 3 57 set 50 35 16 6 4 56 set 51 38 19 4 5 58 set 48 30 14 3 6 59 set 51 38 16 2 7 59 set 46 32 14 2 8 60 set 53 37 15 2 9 60 set 50 33 14 2 50 1 25 ver 70 32 47 14 2 27 ver 64 32 45 15 3 22 ver 69 31 49 15 4 29 ver 55 23 40 13 5 24 ver 65 28 46 15 6 26 ver 57 28 45 13 7 25 ver 63 33 47 16 8 37 ver 49 24 33 10 9 25 ver 66 29 46 13 10 31 ver 52 27 39 14 1 34 ver 50 20 35 10 2 29 ver 59 30 42 15 3 30 ver 60 22 40 10 4 24 ver 61 29 47 14 5 35 ver 56 29 36 13 6 27 ver 67 31 44 14 7 26 ver 56 30 45 15 8 31 ver 58 27 41 10 9 23 ver 62 22 45 15 20 32 ver 56 25 39 11 1 23 ver 59 32 48 18 2 31 ver 61 28 40 13 3 21 ver 63 25 49 15 4 25 ver 61 28 47 12 25 28 ver 64 29 43 13 DPP SL SW PL PW 27 ver 66 30 44 14 26 23 ver 68 28 48 14 7 21 ver 67 30 50 17 8 26 ver 60 29 45 15 9 36 ver 57 26 35 10 30 32 ver 55 24 38 11 1 33 ver 55 24 37 10 2 32 ver 58 27 39 12 3 20 ver 60 27 51 16 4 27 ver 54 30 45 15 5 27 ver 60 34 45 16 6 24 ver 67 31 47 15 7 25 ver 63 23 44 13 8 31 ver 56 30 41 13 9 30 ver 55 25 40 13 40 27 ver 55 26 44 12 1 26 ver 61 30 46 14 2 30 ver 58 26 40 12 3 37 ver 50 23 33 10 4 29 ver 56 27 42 13 5 30 ver 57 30 42 12 6 29 ver 57 29 42 13 7 28 ver 62 29 43 13 8 40 ver 51 25 30 11 9 30 ver 57 28 41 13 50 10 vir 63 33 60 25 1 19 vir 58 27 51 19 2 11 vir 71 30 59 21 3 15 vir 63 29 56 18 4 12 vir 65 30 58 22 5 5 vir 76 30 66 21 6 24 vir 49 25 45 17 7 8 vir 73 29 63 18 8 12 vir 67 25 58 18 9 10 vir 72 36 61 25 10 19 vir 65 32 51 20 1 16 vir 64 27 53 19 2 15 vir 68 30 55 21 3 19 vir 57 25 50 20 4 17 vir 58 28 51 24 5 17 vir 64 32 53 23 6 16 vir 65 30 55 18 7 6 vir 77 38 67 22 8 0 vir 77 26 69 23 9 30 vir 60 22 50 15 20 10 vir 69 32 57 23 1 19 vir 56 28 49 20 2 11 vir 77 28 67 20 3 15 vir 63 27 49 18 4 12 vir 67 33 57 21 5 5 vir 72 32 60 18 6 24 vir 62 28 48 18 7 8 vir 61 30 49 18 8 12 vir 64 28 56 21 9 10 vir 72 30 58 16 30 19 vir 74 28 61 19 1 16 vir 79 38 64 20 2 15 vir 64 28 56 22 3 19 vir 63 28 51 15 4 17 vir 61 26 56 14 5 17 vir 77 30 61 23 6 16 vir 63 34 56 24 7 6 vir 64 31 55 18 8 0 vir 60 30 18 18 9 16 vir 69 31 54 21 40 13 vir 67 31 56 24 1 18 vir 69 31 51 23 2 19 vir 58 27 51 19 3 11 vir 68 32 59 23 4 12 vir 67 33 57 25 5 17 vir 67 30 52 23 6 19 vir 63 25 50 19 7 18 vir 65 30 52 20 8 16 vir 62 34 54 23 9 20 vir 59 30 51 18 50 gap>=4 p=nnnn FAUST DPP Clustering on IRIS, DPP(y)=(y-p)o(q-p)/|q-p|, p=min (n), q=max (x) q=xxxx F Count corners of circumscribing rectangle (midpts or avg (a) is used also). 0 1 1 1 Checking [0, 4] distances (s 42 Setosa outlier) 2 1 IRIS: 150 irises (rows), 4 columns (Pedal Length, 3 3 F 0 1 2 3 3 3 4 4 1 s 14 s 42 s 45 s 23 s 16 s 43 s 3 Pedal Width, Sepal Length, Sepal Width). 5 6 s 14 0 8 14 7 20 3 5 6 4 first 50 are Setosa (s), s 42 8 0 17 13 24 9 9 7 5 8 7 s 45 14 17 0 11 9 11 10 next 50 are Versicolor (e), 9 3 s 23 7 13 11 0 15 5 5 10 8 s 16 20 24 9 15 0 18 16 next 50 are Virginica (i) irises. 11 5 s 43 3 9 11 5 18 0 3 12 1 s 3 5 9 10 5 16 3 0 13 2 14 1 CL 1 F<17 (50 Set) CL 3 w outliers removed p=aaax q=aaan 15 1 F Cnt 19 1 0 4 20 1 17

DPP SL SW PL PW 60 set 51 35 14 2 1 59 set 49 30 14 2 2 60 set 47 32 13 2 3 58 set 46 31 15 2 4 60 set 50 36 14 2 5 58 set 54 39 17 4 6 60 set 46 34 14 3 7 59 set 50 34 15 2 8 59 set 44 29 14 2 9 58 set 49 31 15 1 10 60 set 54 37 15 2 1 58 set 48 34 16 2 2 59 set 48 30 14 1 3 62 set 43 30 11 1 4 63 set 58 40 12 2 5 61 set 57 44 15 4 6 61 set 54 39 13 4 7 60 set 51 35 14 3 8 58 set 57 38 17 3 9 60 set 51 38 15 3 20 57 set 54 34 17 2 1 59 set 51 37 15 4 2 64 set 46 36 10 2 3 56 set 51 33 17 5 4 56 set 48 34 19 2 5 57 set 50 30 16 2 6 57 set 50 34 16 4 7 59 set 52 35 15 2 8 60 set 52 34 14 2 9 58 set 47 32 16 2 30 57 set 48 31 16 2 1 58 set 54 34 15 4 2 61 set 52 41 15 1 3 62 set 55 42 14 2 4 58 set 49 31 15 1 5 61 set 50 32 12 2 6 61 set 55 35 13 2 7 58 set 49 31 15 1 8 60 set 44 30 13 2 9 59 set 51 34 15 2 40 61 set 50 35 13 3 1 57 set 45 23 13 3 2 60 set 44 32 13 2 3 57 set 50 35 16 6 4 56 set 51 38 19 4 5 58 set 48 30 14 3 6 59 set 51 38 16 2 7 59 set 46 32 14 2 8 60 set 53 37 15 2 9 60 set 50 33 14 2 50 1 25 ver 70 32 47 14 2 27 ver 64 32 45 15 3 22 ver 69 31 49 15 4 29 ver 55 23 40 13 5 24 ver 65 28 46 15 6 26 ver 57 28 45 13 7 25 ver 63 33 47 16 8 37 ver 49 24 33 10 9 25 ver 66 29 46 13 10 31 ver 52 27 39 14 1 34 ver 50 20 35 10 2 29 ver 59 30 42 15 3 30 ver 60 22 40 10 4 24 ver 61 29 47 14 5 35 ver 56 29 36 13 6 27 ver 67 31 44 14 7 26 ver 56 30 45 15 8 31 ver 58 27 41 10 9 23 ver 62 22 45 15 20 32 ver 56 25 39 11 1 23 ver 59 32 48 18 2 31 ver 61 28 40 13 3 21 ver 63 25 49 15 4 25 ver 61 28 47 12 25 28 ver 64 29 43 13 DPP SL SW PL PW 27 ver 66 30 44 14 26 23 ver 68 28 48 14 7 21 ver 67 30 50 17 8 26 ver 60 29 45 15 9 36 ver 57 26 35 10 30 32 ver 55 24 38 11 1 33 ver 55 24 37 10 2 32 ver 58 27 39 12 3 20 ver 60 27 51 16 4 27 ver 54 30 45 15 5 27 ver 60 34 45 16 6 24 ver 67 31 47 15 7 25 ver 63 23 44 13 8 31 ver 56 30 41 13 9 30 ver 55 25 40 13 40 27 ver 55 26 44 12 1 26 ver 61 30 46 14 2 30 ver 58 26 40 12 3 37 ver 50 23 33 10 4 29 ver 56 27 42 13 5 30 ver 57 30 42 12 6 29 ver 57 29 42 13 7 28 ver 62 29 43 13 8 40 ver 51 25 30 11 9 30 ver 57 28 41 13 50 10 vir 63 33 60 25 1 19 vir 58 27 51 19 2 11 vir 71 30 59 21 3 15 vir 63 29 56 18 4 12 vir 65 30 58 22 5 5 vir 76 30 66 21 6 24 vir 49 25 45 17 7 8 vir 73 29 63 18 8 12 vir 67 25 58 18 9 10 vir 72 36 61 25 10 19 vir 65 32 51 20 1 16 vir 64 27 53 19 2 15 vir 68 30 55 21 3 19 vir 57 25 50 20 4 17 vir 58 28 51 24 5 17 vir 64 32 53 23 6 16 vir 65 30 55 18 7 6 vir 77 38 67 22 8 0 vir 77 26 69 23 9 30 vir 60 22 50 15 20 10 vir 69 32 57 23 1 19 vir 56 28 49 20 2 11 vir 77 28 67 20 3 15 vir 63 27 49 18 4 12 vir 67 33 57 21 5 5 vir 72 32 60 18 6 24 vir 62 28 48 18 7 8 vir 61 30 49 18 8 12 vir 64 28 56 21 9 10 vir 72 30 58 16 30 19 vir 74 28 61 19 1 16 vir 79 38 64 20 2 15 vir 64 28 56 22 3 19 vir 63 28 51 15 4 17 vir 61 26 56 14 5 17 vir 77 30 61 23 6 16 vir 63 34 56 24 7 6 vir 64 31 55 18 8 0 vir 60 30 18 18 9 16 vir 69 31 54 21 40 13 vir 67 31 56 24 1 18 vir 69 31 51 23 2 19 vir 58 27 51 19 3 11 vir 68 32 59 23 4 12 vir 67 33 57 25 5 17 vir 67 30 52 23 6 19 vir 63 25 50 19 7 18 vir 65 30 52 20 8 16 vir 62 34 54 23 9 20 vir 59 30 51 18 50 gap>=4 p=nnnn FAUST DPP Clustering on IRIS, DPP(y)=(y-p)o(q-p)/|q-p|, p=min (n), q=max (x) q=xxxx F Count corners of circumscribing rectangle (midpts or avg (a) is used also). 0 1 1 1 Checking [0, 4] distances (s 42 Setosa outlier) 2 1 IRIS: 150 irises (rows), 4 columns (Pedal Length, 3 3 F 0 1 2 3 3 3 4 4 1 s 14 s 42 s 45 s 23 s 16 s 43 s 3 Pedal Width, Sepal Length, Sepal Width). 5 6 s 14 0 8 14 7 20 3 5 6 4 first 50 are Setosa (s), s 42 8 0 17 13 24 9 9 7 5 8 7 s 45 14 17 0 11 9 11 10 next 50 are Versicolor (e), 9 3 s 23 7 13 11 0 15 5 5 10 8 s 16 20 24 9 15 0 18 16 next 50 are Virginica (i) irises. 11 5 s 43 3 9 11 5 18 0 3 12 1 s 3 5 9 10 5 16 3 0 13 2 14 1 CL 1 F<17 (50 Set) CL 3 w outliers removed p=aaax q=aaan 15 1 F Cnt 19 1 0 4 20 1 17

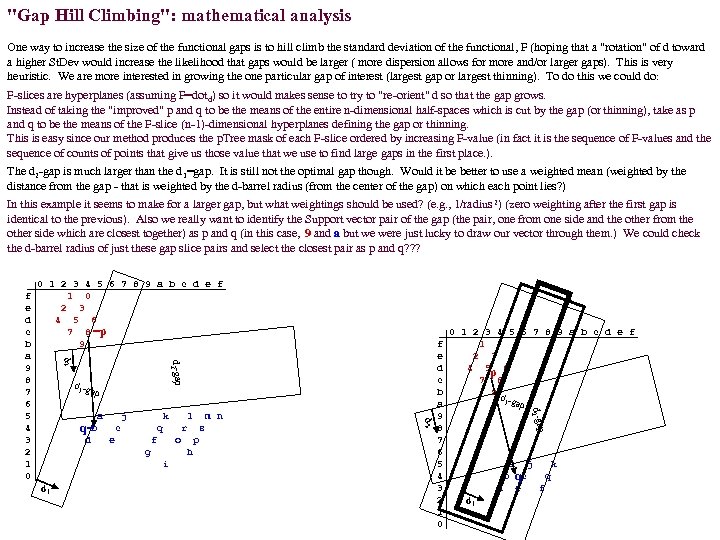

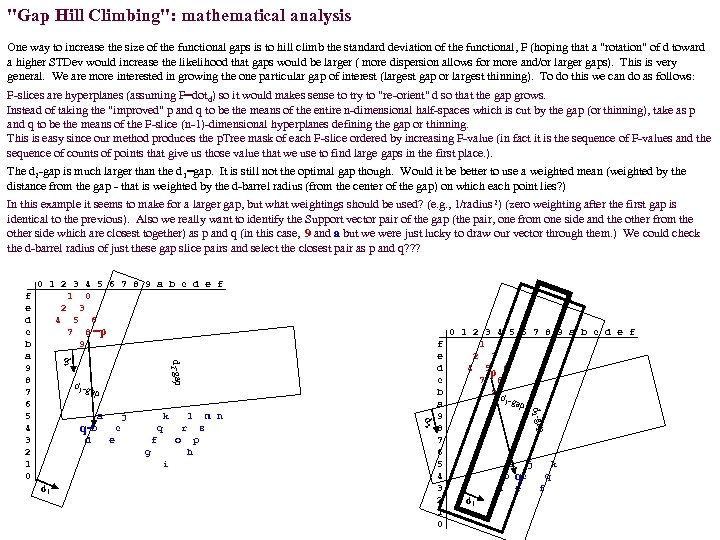

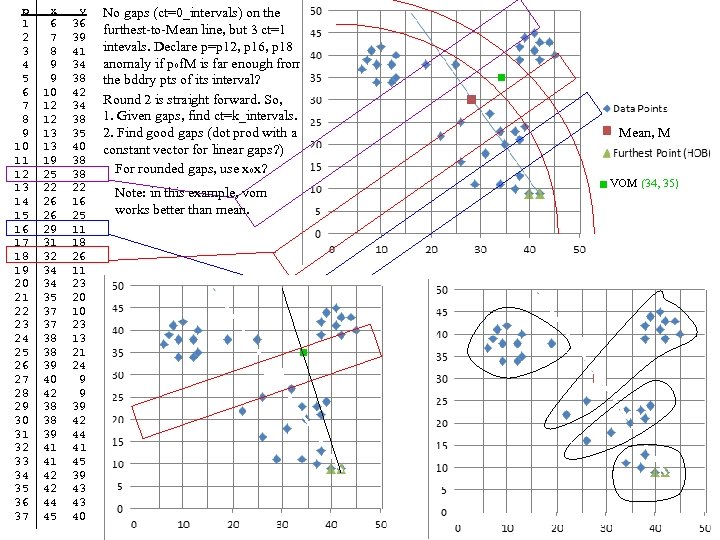

"Gap Hill Climbing": mathematical analysis One way to increase the size of the functional gaps is to hill climb the standard deviation of the functional, F (hoping that a "rotation" of d toward a higher St. Dev would increase the likelihood that gaps would be larger ( more dispersion allows for more and/or larger gaps). This is very heuristic. We are more interested in growing the one particular gap of interest (largest gap or largest thinning). To do this we could do: F-slices are hyperplanes (assuming F=dotd) so it would makes sense to try to "re-orient" d so that the gap grows. Instead of taking the "improved" p and q to be the means of the entire n-dimensional half-spaces which is cut by the gap (or thinning), take as p and q to be the means of the F-slice (n-1)-dimensional hyperplanes defining the gap or thinning. This is easy since our method produces the p. Tree mask of each F-slice ordered by increasing F-value (in fact it is the sequence of F-values and the sequence of counts of points that give us those value that we use to find large gaps in the first place. ). The d 2 -gap is much larger than the d 1=gap. It is still not the optimal gap though. Would it be better to use a weighted mean (weighted by the distance from the gap - that is weighted by the d-barrel radius (from the center of the gap) on which each point lies? ) In this example it seems to make for a larger gap, but what weightings should be used? (e. g. , 1/radius 2) (zero weighting after the first gap is identical to the previous). Also we really want to identify the Support vector pair of the gap (the pair, one from one side and the other from the other side which are closest together) as p and q (in this case, 9 and a but we were just lucky to draw our vector through them. ) We could check the d-barrel radius of just these gap slice pairs and select the closest pair as p and q? ? ? 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 0 2 3 4 5 6 7 8 =p 9 d 2 -gap d 2 d 1 -g ap j e m n r f s o g p h i d 1 l q 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 2 3 4 5 p 6 7 8 9 d 1 -gap p d k c f e d c b a 9 8 7 6 5 4 3 2 1 0 d 2 -ga a b q= d 2 f e d c b a 9 8 7 6 5 4 3 2 1 0 a b d d 1 j k qc e q f

"Gap Hill Climbing": mathematical analysis One way to increase the size of the functional gaps is to hill climb the standard deviation of the functional, F (hoping that a "rotation" of d toward a higher St. Dev would increase the likelihood that gaps would be larger ( more dispersion allows for more and/or larger gaps). This is very heuristic. We are more interested in growing the one particular gap of interest (largest gap or largest thinning). To do this we could do: F-slices are hyperplanes (assuming F=dotd) so it would makes sense to try to "re-orient" d so that the gap grows. Instead of taking the "improved" p and q to be the means of the entire n-dimensional half-spaces which is cut by the gap (or thinning), take as p and q to be the means of the F-slice (n-1)-dimensional hyperplanes defining the gap or thinning. This is easy since our method produces the p. Tree mask of each F-slice ordered by increasing F-value (in fact it is the sequence of F-values and the sequence of counts of points that give us those value that we use to find large gaps in the first place. ). The d 2 -gap is much larger than the d 1=gap. It is still not the optimal gap though. Would it be better to use a weighted mean (weighted by the distance from the gap - that is weighted by the d-barrel radius (from the center of the gap) on which each point lies? ) In this example it seems to make for a larger gap, but what weightings should be used? (e. g. , 1/radius 2) (zero weighting after the first gap is identical to the previous). Also we really want to identify the Support vector pair of the gap (the pair, one from one side and the other from the other side which are closest together) as p and q (in this case, 9 and a but we were just lucky to draw our vector through them. ) We could check the d-barrel radius of just these gap slice pairs and select the closest pair as p and q? ? ? 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 0 2 3 4 5 6 7 8 =p 9 d 2 -gap d 2 d 1 -g ap j e m n r f s o g p h i d 1 l q 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 2 3 4 5 p 6 7 8 9 d 1 -gap p d k c f e d c b a 9 8 7 6 5 4 3 2 1 0 d 2 -ga a b q= d 2 f e d c b a 9 8 7 6 5 4 3 2 1 0 a b d d 1 j k qc e q f

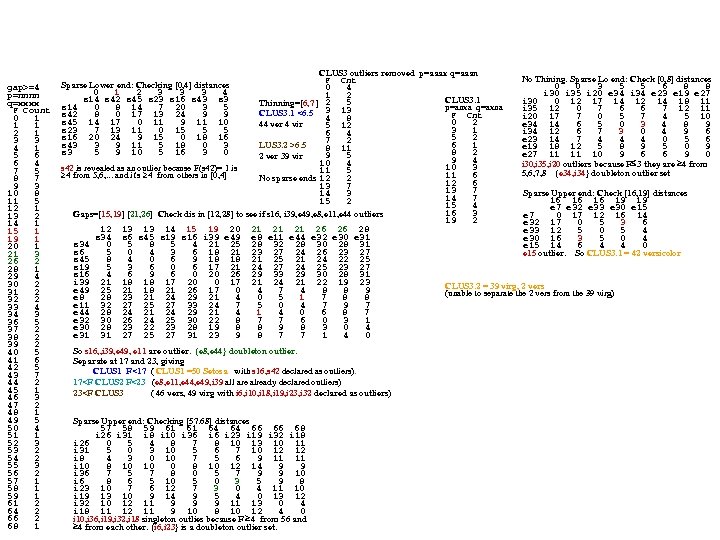

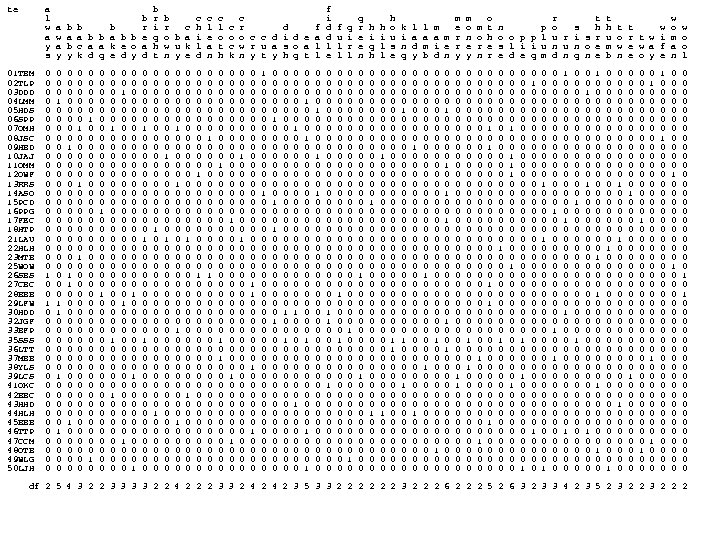

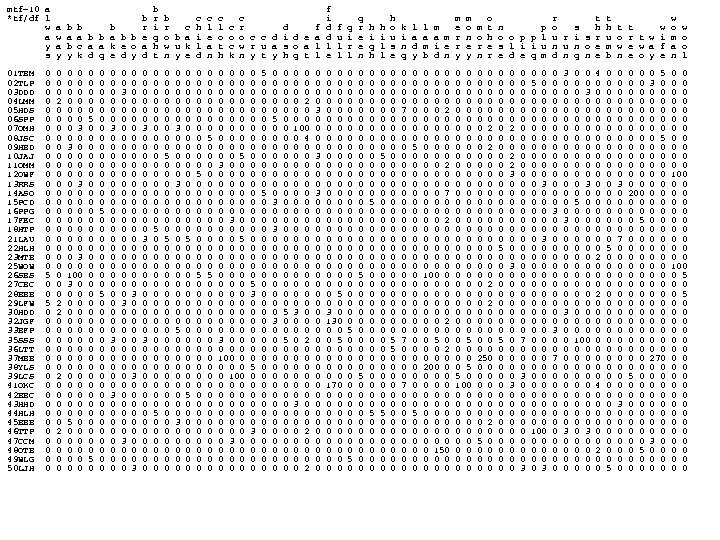

gap>=4 p=nnnn q=xxxx F Count 0 1 1 1 2 1 3 3 4 1 5 6 6 4 7 5 8 7 9 3 10 8 11 5 12 1 13 2 14 1 15 1 19 1 20 1 21 3 26 2 28 1 29 4 30 2 31 2 32 2 33 4 34 3 36 5 37 2 38 2 39 2 40 5 41 6 42 5 43 7 44 2 45 1 46 3 47 2 48 1 49 5 50 4 51 1 52 3 53 2 54 2 55 3 56 2 57 1 58 1 59 1 61 2 64 2 66 2 68 1 CLUS 3 outliers removed p=aaax q=aaan No Thining. Sparse Lo end: Check [0, 8] distances F Cnt 0 0 3 5 5 6 8 8 0 4 i 30 i 35 i 20 e 34 i 34 e 23 e 19 e 27 1 2 CLUS 3. 1 i 30 0 12 17 14 12 14 18 11 5 Thinning=[6, 7 ] 2 p=anxa q=axna i 35 12 0 7 6 6 7 12 11 CLUS 3. 1 <6. 5 3 13 F Cnt i 20 17 7 0 5 7 4 5 10 4 8 0 2 e 34 14 6 5 0 3 4 8 9 44 ver 4 vir 5 12 3 1 i 34 12 6 7 3 0 4 9 6 6 4 5 2 e 23 14 7 4 4 4 0 5 6 7 2 6 1 LUS 3. 2 >6. 5 e 19 18 12 5 8 9 5 0 9 8 11 8 2 e 27 11 11 10 9 6 6 9 0 9 5 2 ver 39 vir 9 4 10 4 i 30, i 35, i 20 outliers because F 3 they are 4 from 10 3 s 42 is revealed as an outlier because F(s 42)= 1 is 11 5 5, 6, 7, 8 {e 34, i 34} doubleton outlier set 11 6 4 from 5, 6, . . . and it's 4 from others in [0, 4] No sparse ends 12 2 12 6 13 7 Sparse Upper end: Check [16, 19] distances 14 3 14 7 16 16 16 19 19 15 2 15 4 e 7 e 32 e 33 e 30 e 15 16 3 Gaps=[15, 19] [21, 26] Check dis in [12, 28] to see if s 16, i 39, e 49, e 8, e 11, e 44 outliers e 7 0 17 12 16 14 19 2 e 32 17 0 5 3 6 12 13 13 14 15 19 20 21 21 21 26 26 28 e 33 12 5 0 5 4 s 34 s 6 s 45 s 19 s 16 i 39 e 49 e 8 e 11 e 44 e 32 e 30 e 31 e 30 16 3 5 0 4 s 34 0 5 8 5 4 21 25 28 32 28 30 28 31 e 15 14 6 4 4 0 s 6 5 0 4 3 6 18 21 23 27 24 26 23 27 e 15 outlier. So CLUS 3. 1 = 42 versicolor s 45 8 4 0 6 9 18 18 21 25 21 24 22 25 s 19 5 3 6 0 6 17 21 24 27 24 25 23 27 s 16 4 6 9 6 0 20 26 29 33 29 30 28 31 i 39 21 18 18 17 20 0 17 21 24 21 22 19 23 CLUS 3. 2 = 39 virg, 2 vers e 49 25 21 18 21 26 17 0 4 7 4 8 8 9 (unable to separate the 2 vers from the 39 virg) e 8 28 23 21 24 29 21 4 0 5 1 7 8 8 e 11 32 27 25 27 33 24 7 5 0 4 7 9 7 e 44 28 24 21 24 29 21 4 0 6 8 7 e 32 30 26 24 25 30 22 8 7 7 6 0 3 1 e 30 28 23 22 23 28 19 8 8 9 8 3 0 4 e 31 31 27 25 27 31 23 9 8 7 7 1 4 0 Sparse Lower end: Checking [0, 4] distances 0 1 2 3 3 3 4 s 14 s 42 s 45 s 23 s 16 s 43 s 14 0 8 14 7 20 3 5 s 42 8 0 17 13 24 9 9 s 45 14 17 0 11 9 11 10 s 23 7 13 11 0 15 5 5 s 16 20 24 9 15 0 18 16 s 43 3 9 11 5 18 0 3 s 3 5 9 10 5 16 3 0 So s 16, , i 39, e 49, e 11 are outlier. {e 8, e 44} doubleton outlier. Separate at 17 and 23, giving CLUS 1 F<17 ( CLUS 1 =50 Setosa with s 16, s 42 declared as outliers). 17

gap>=4 p=nnnn q=xxxx F Count 0 1 1 1 2 1 3 3 4 1 5 6 6 4 7 5 8 7 9 3 10 8 11 5 12 1 13 2 14 1 15 1 19 1 20 1 21 3 26 2 28 1 29 4 30 2 31 2 32 2 33 4 34 3 36 5 37 2 38 2 39 2 40 5 41 6 42 5 43 7 44 2 45 1 46 3 47 2 48 1 49 5 50 4 51 1 52 3 53 2 54 2 55 3 56 2 57 1 58 1 59 1 61 2 64 2 66 2 68 1 CLUS 3 outliers removed p=aaax q=aaan No Thining. Sparse Lo end: Check [0, 8] distances F Cnt 0 0 3 5 5 6 8 8 0 4 i 30 i 35 i 20 e 34 i 34 e 23 e 19 e 27 1 2 CLUS 3. 1 i 30 0 12 17 14 12 14 18 11 5 Thinning=[6, 7 ] 2 p=anxa q=axna i 35 12 0 7 6 6 7 12 11 CLUS 3. 1 <6. 5 3 13 F Cnt i 20 17 7 0 5 7 4 5 10 4 8 0 2 e 34 14 6 5 0 3 4 8 9 44 ver 4 vir 5 12 3 1 i 34 12 6 7 3 0 4 9 6 6 4 5 2 e 23 14 7 4 4 4 0 5 6 7 2 6 1 LUS 3. 2 >6. 5 e 19 18 12 5 8 9 5 0 9 8 11 8 2 e 27 11 11 10 9 6 6 9 0 9 5 2 ver 39 vir 9 4 10 4 i 30, i 35, i 20 outliers because F 3 they are 4 from 10 3 s 42 is revealed as an outlier because F(s 42)= 1 is 11 5 5, 6, 7, 8 {e 34, i 34} doubleton outlier set 11 6 4 from 5, 6, . . . and it's 4 from others in [0, 4] No sparse ends 12 2 12 6 13 7 Sparse Upper end: Check [16, 19] distances 14 3 14 7 16 16 16 19 19 15 2 15 4 e 7 e 32 e 33 e 30 e 15 16 3 Gaps=[15, 19] [21, 26] Check dis in [12, 28] to see if s 16, i 39, e 49, e 8, e 11, e 44 outliers e 7 0 17 12 16 14 19 2 e 32 17 0 5 3 6 12 13 13 14 15 19 20 21 21 21 26 26 28 e 33 12 5 0 5 4 s 34 s 6 s 45 s 19 s 16 i 39 e 49 e 8 e 11 e 44 e 32 e 30 e 31 e 30 16 3 5 0 4 s 34 0 5 8 5 4 21 25 28 32 28 30 28 31 e 15 14 6 4 4 0 s 6 5 0 4 3 6 18 21 23 27 24 26 23 27 e 15 outlier. So CLUS 3. 1 = 42 versicolor s 45 8 4 0 6 9 18 18 21 25 21 24 22 25 s 19 5 3 6 0 6 17 21 24 27 24 25 23 27 s 16 4 6 9 6 0 20 26 29 33 29 30 28 31 i 39 21 18 18 17 20 0 17 21 24 21 22 19 23 CLUS 3. 2 = 39 virg, 2 vers e 49 25 21 18 21 26 17 0 4 7 4 8 8 9 (unable to separate the 2 vers from the 39 virg) e 8 28 23 21 24 29 21 4 0 5 1 7 8 8 e 11 32 27 25 27 33 24 7 5 0 4 7 9 7 e 44 28 24 21 24 29 21 4 0 6 8 7 e 32 30 26 24 25 30 22 8 7 7 6 0 3 1 e 30 28 23 22 23 28 19 8 8 9 8 3 0 4 e 31 31 27 25 27 31 23 9 8 7 7 1 4 0 Sparse Lower end: Checking [0, 4] distances 0 1 2 3 3 3 4 s 14 s 42 s 45 s 23 s 16 s 43 s 14 0 8 14 7 20 3 5 s 42 8 0 17 13 24 9 9 s 45 14 17 0 11 9 11 10 s 23 7 13 11 0 15 5 5 s 16 20 24 9 15 0 18 16 s 43 3 9 11 5 18 0 3 s 3 5 9 10 5 16 3 0 So s 16, , i 39, e 49, e 11 are outlier. {e 8, e 44} doubleton outlier. Separate at 17 and 23, giving CLUS 1 F<17 ( CLUS 1 =50 Setosa with s 16, s 42 declared as outliers). 17

![CLUS 1 Sparse low end (check [0, 9] p=nxnn 0 2 4 6 6 CLUS 1 Sparse low end (check [0, 9] p=nxnn 0 2 4 6 6](https://present5.com/presentation/cf999634122b24f7d3d33668c3fc85ad/image-6.jpg) CLUS 1 Sparse low end (check [0, 9] p=nxnn 0 2 4 6 6 9 10 q=xnxx i 23 i 6 i 36 i 8 i 31 i 3 i 26 0 1 2 3 3 4 4 4 4 5 6 6 6 6 7 7 i 23 0 3 7 6 7 10 10 Dotgp>=4 2 1 i 18 i 19 i 10 i 37 i 5 i 6 i 23 i 32 i 44 i 45 i 49 i 25 i 8 i 15 i 41 i 21 i 33 i 29 i 4 i 3 i 16 i 6 3 0 5 5 6 9 8 p=xnnn 4 1 i 1 0 17 18 10 4 5 15 17 18 6 5 6 6 13 11 6 7 7 8 9 9 7 i 36 7 5 0 7 5 7 7 q=nxxx 6 2 i 18 17 0 12 9 18 17 8 10 4 13 15 20 15 11 27 17 14 20 20 20 13 20 i 8 6 5 7 0 3 5 4 0 1 9 1 i 19 18 12 0 14 21 17 5 4 13 15 17 23 17 9 26 17 16 19 19 20 12 21 i 31 7 6 5 3 0 5 5 1 1 10 1 i 10 10 9 14 0 11 10 10 12 9 6 7 13 8 10 19 9 7 13 13 14 8 12 i 3 10 9 7 5 5 0 4 2 1 11 2 i 37 4 18 21 11 0 5 17 19 19 6 4 2 5 14 9 5 6 6 7 8 10 4 i 26 10 8 7 4 5 4 0 3 2 12 2 i 5 5 17 17 10 5 0 14 15 17 4 5 6 4 10 10 4 5 3 3 5 6 6 i 3, i 26, i 36 >=4 singleton outliers 4 7 13 3 i 6 15 8 5 10 17 14 0 3 9 11 14 19 13 5 24 14 12 16 16 17 9 18 {i 23, i 6}, {i 8, i 31} doubleton ols 5 1 14 3 i 23 17 10 4 12 19 15 3 0 11 13 16 21 15 6 25 16 14 17 17 18 10 20 6 7 15 2 i 32 18 4 13 9 19 17 9 11 0 14 16 20 15 11 27 17 14 20 20 20 12 20 7 5 16 2 i 44 6 13 15 6 6 4 11 13 14 0 3 8 3 9 13 3 2 6 7 8 4 7 8 9 17 4 i 45 5 15 17 7 4 5 14 16 16 3 0 6 4 12 12 2 3 7 7 9 7 5 9 3 18 3 i 49 6 20 23 13 2 6 19 21 20 8 6 0 6 16 8 7 7 7 11 3 10 7 19 3 i 25 6 15 17 8 5 4 13 15 15 3 4 6 0 10 12 4 3 6 6 6 5 5 11 3 20 2 i 8 13 11 9 10 14 10 5 6 11 9 12 16 10 0 20 11 9 12 12 12 5 15 12 5 21 5 i 15 11 27 26 19 9 10 24 25 27 13 12 8 12 20 0 11 13 8 8 9 16 8 13 4 22 6 i 41 6 17 17 9 5 4 14 16 17 3 2 6 4 11 11 0 3 5 5 7 6 4 14 5 23 5 i 21 7 14 16 7 6 5 12 14 14 2 3 8 3 9 13 3 0 7 7 8 4 6 15 4 Sparse hi end (checking [34, 43] 24 2 i 33 7 20 19 13 6 3 16 17 20 6 7 7 6 12 8 5 7 0 1 4 8 5 16 8 34 35 36 36 37 37 39 41 42 43 25 7 i 29 8 20 19 13 7 3 16 17 20 7 7 7 6 12 8 5 7 1 0 3 8 5 17 4 e 20 e 31 e 10 e 32 e 15 e 30 e 11 e 44 e 8 e 49 26 3 i 4 9 20 20 14 8 5 17 18 20 8 9 7 6 12 9 7 8 4 3 0 9 7 18 7 e 20 0 2 5 3 5 4 9 9 9 10 27 2 i 3 9 13 12 8 10 6 9 10 12 4 7 11 5 5 16 6 4 8 8 9 0 10 19 3 e 31 2 0 5 1 6 4 7 7 8 9 28 2 i 16 7 20 21 12 4 6 18 20 20 7 5 3 5 15 8 4 6 5 5 7 10 0 20 5 e 10 5 5 0 6 5 8 9 8 8 10 29 1 i 26 11 11 13 8 12 9 8 10 10 6 9 13 7 4 18 9 7 11 10 10 4 12 21 1 e 32 3 1 6 0 6 3 7 6 7 8 30 3 i 36 14 10 9 8 15 12 5 7 9 9 11 17 11 7 22 11 9 14 14 16 7 15 22 4 e 15 5 6 0 4 11 9 10 9 31 3 i 38 9 19 20 13 7 5 17 18 19 8 8 6 5 12 10 7 7 5 4 2 9 5 23 1 e 30 4 4 8 3 4 0 9 8 8 8 32 7 i 1, i 18, i 19, i 10, i 37, i 32 >=4 outliers 24 1 e 11 9 7 11 9 0 4 5 7 33 4 gap: (24, 31) CLUS 1<27. 5 (50 versi, 49 virg) CLUS 2>27. 5 (50 set, 1 virg) 31 2 e 44 9 7 8 6 9 8 4 0 1 4 34 1 33 2 e 8 9 8 8 7 10 8 5 1 0 4 35 1 34 12 Sparse hi end (checking [38, 39] e 49 10 8 9 8 7 4 4 0 36 2 35 8 38 38 39 39 e 30, e 49, ei 15, e 11 >=4 singleton ols 37 2 36 17 s 42 s 36 s 37 s 1 {e 44, e 8} doubleton ols 39 1 Thinning (8, 13) 37 6 s 42 0 10 16 21 41 1 CLUS 1 Split in middle=10. 5 38 2 s 36 10 0 6 11 42 1 Dotgp>=4 CLUS_1. 1<10. 5 (21 virg, 2 ver) 39 2 s 37 16 6 0 6 43 1 p=nnnn CLUS_1. 2>10. 5 (12 virg, 42 ver) s 15 21 11 6 0 q=xxxx Clus 1 s 37, s 1 outliers 0 1 p=nnxn Sparse hi end (checking [10, 13] Thinning (7, 9) 1 2 q=xxnx 10 10 11 11 13 13 Split in middle=7. 5 2 2 0 2 CLUS 1 e 34 i 2 i 14 i 43 e 41 i 20 i 7 i 35 CLUS_1. 2. 1 < 7. 5 (10 virg, 4 ver) 3 1 1 1 Dotgp>=4 CLUS 1. 2 CLUS_1. 2. 2 > 7. 5 ( 1 virg, 38 ver) e 34 0 4 5 4 10 5 13 6 4 2 2 5 p=nnnx Dotgp>=4 i 15 gap>=4 outlier at F=0 i 2 4 0 3 0 10 7 11 8 5 1 3 8 q=xxxn p=aaan hi end gap outlier i 30 i 14 5 3 0 3 10 7 10 9 6 6 4 9 CLUS 1. 2. 1 0 1 q=aaax i 43 4 0 3 0 10 7 11 8 7 2 5 6 Dotgp>=4 4 1 CLUS 1. 2. 1 0 1 e 41 10 10 0 9 8 14 8 3 6 9 p=anaa 5 3 Dotgp>=4 4 4 i 20 5 7 7 7 9 0 13 7 9 1 7 14 q=axaa 6 5 p=aana 5 3 i 7 13 11 10 11 8 13 0 17 10 2 8 11 0 1 7 4 q=aaxa 6 3 i 35 6 8 9 8 14 7 17 0 11 2 9 7 1 1 8 3 0 5 7 4 i 7, i 35 >=4 singleton outliers 12 2 10 4 2 1 9 6 1 2 8 1 13 6 11 2 4 2 10 7 2 3 9 5 14 6 13 2 6 3 11 3 3 2 10 7 15 7 7 4 12 4 4 1 11 3 16 2 C. 2. 1 0 0 1 2 3 3 4 4 5 5 6 7 9 2 13 8 6 1 12 5 i 24 e 7 i 34 i 47 i 28 e 34 e 36 e 21 i 50 i 2 i 43 i 14 i 22 17 2 14 4 13 3 i 24 0 7 4 2 2 4 4 9 6 5 5 5 7 7 18 3 15 4 14 6 e 7 7 0 6 9 6 5 8 4 5 7 9 9 11 10 19 3 CLUS 1. 2. 1 16 3 i 34 4 6 0 5 4 5 3 9 7 5 6 6 8 9 15 1 20 2 p=naaa 17 8 i 47 2 9 5 0 4 6 5 11 8 7 5 5 6 8 16 4 21 2 q=xaaa i 27 2 6 4 4 0 2 4 7 5 5 6 6 18 5 17 1 22 3 0 4 i 28 4 5 5 6 2 0 4 6 3 3 5 5 7 6 19 3 18 1 e 34 4 8 3 5 4 4 0 9 6 4 4 4 5 6 23 4 1 1 20 1 19 2 e 36 9 4 9 11 7 6 9 0 4 8 10 10 11 9 24 2 2 1 21 1 e 21 6 5 7 8 5 3 6 4 0 4 6 6 8 5 25 1 3 2 22 3 i 50 5 7 5 3 4 8 4 0 3 3 6 5 26 2 4 2 23 1 i 2 5 9 6 5 5 5 4 10 6 3 0 0 3 3 27 3 5 2 i 43 5 9 6 5 5 5 4 10 6 3 0 0 3 3 28 1 6 1 i 14 7 11 8 6 6 7 5 11 8 6 3 3 0 3 i 22 7 10 9 8 6 6 6 9 5 5 3 3 3 0 29 1 7 1 DPP (other corners) Check Dotp, d(y) gaps>=4 Check sparse ends. Sparse low end (checking [0, 7]

CLUS 1 Sparse low end (check [0, 9] p=nxnn 0 2 4 6 6 9 10 q=xnxx i 23 i 6 i 36 i 8 i 31 i 3 i 26 0 1 2 3 3 4 4 4 4 5 6 6 6 6 7 7 i 23 0 3 7 6 7 10 10 Dotgp>=4 2 1 i 18 i 19 i 10 i 37 i 5 i 6 i 23 i 32 i 44 i 45 i 49 i 25 i 8 i 15 i 41 i 21 i 33 i 29 i 4 i 3 i 16 i 6 3 0 5 5 6 9 8 p=xnnn 4 1 i 1 0 17 18 10 4 5 15 17 18 6 5 6 6 13 11 6 7 7 8 9 9 7 i 36 7 5 0 7 5 7 7 q=nxxx 6 2 i 18 17 0 12 9 18 17 8 10 4 13 15 20 15 11 27 17 14 20 20 20 13 20 i 8 6 5 7 0 3 5 4 0 1 9 1 i 19 18 12 0 14 21 17 5 4 13 15 17 23 17 9 26 17 16 19 19 20 12 21 i 31 7 6 5 3 0 5 5 1 1 10 1 i 10 10 9 14 0 11 10 10 12 9 6 7 13 8 10 19 9 7 13 13 14 8 12 i 3 10 9 7 5 5 0 4 2 1 11 2 i 37 4 18 21 11 0 5 17 19 19 6 4 2 5 14 9 5 6 6 7 8 10 4 i 26 10 8 7 4 5 4 0 3 2 12 2 i 5 5 17 17 10 5 0 14 15 17 4 5 6 4 10 10 4 5 3 3 5 6 6 i 3, i 26, i 36 >=4 singleton outliers 4 7 13 3 i 6 15 8 5 10 17 14 0 3 9 11 14 19 13 5 24 14 12 16 16 17 9 18 {i 23, i 6}, {i 8, i 31} doubleton ols 5 1 14 3 i 23 17 10 4 12 19 15 3 0 11 13 16 21 15 6 25 16 14 17 17 18 10 20 6 7 15 2 i 32 18 4 13 9 19 17 9 11 0 14 16 20 15 11 27 17 14 20 20 20 12 20 7 5 16 2 i 44 6 13 15 6 6 4 11 13 14 0 3 8 3 9 13 3 2 6 7 8 4 7 8 9 17 4 i 45 5 15 17 7 4 5 14 16 16 3 0 6 4 12 12 2 3 7 7 9 7 5 9 3 18 3 i 49 6 20 23 13 2 6 19 21 20 8 6 0 6 16 8 7 7 7 11 3 10 7 19 3 i 25 6 15 17 8 5 4 13 15 15 3 4 6 0 10 12 4 3 6 6 6 5 5 11 3 20 2 i 8 13 11 9 10 14 10 5 6 11 9 12 16 10 0 20 11 9 12 12 12 5 15 12 5 21 5 i 15 11 27 26 19 9 10 24 25 27 13 12 8 12 20 0 11 13 8 8 9 16 8 13 4 22 6 i 41 6 17 17 9 5 4 14 16 17 3 2 6 4 11 11 0 3 5 5 7 6 4 14 5 23 5 i 21 7 14 16 7 6 5 12 14 14 2 3 8 3 9 13 3 0 7 7 8 4 6 15 4 Sparse hi end (checking [34, 43] 24 2 i 33 7 20 19 13 6 3 16 17 20 6 7 7 6 12 8 5 7 0 1 4 8 5 16 8 34 35 36 36 37 37 39 41 42 43 25 7 i 29 8 20 19 13 7 3 16 17 20 7 7 7 6 12 8 5 7 1 0 3 8 5 17 4 e 20 e 31 e 10 e 32 e 15 e 30 e 11 e 44 e 8 e 49 26 3 i 4 9 20 20 14 8 5 17 18 20 8 9 7 6 12 9 7 8 4 3 0 9 7 18 7 e 20 0 2 5 3 5 4 9 9 9 10 27 2 i 3 9 13 12 8 10 6 9 10 12 4 7 11 5 5 16 6 4 8 8 9 0 10 19 3 e 31 2 0 5 1 6 4 7 7 8 9 28 2 i 16 7 20 21 12 4 6 18 20 20 7 5 3 5 15 8 4 6 5 5 7 10 0 20 5 e 10 5 5 0 6 5 8 9 8 8 10 29 1 i 26 11 11 13 8 12 9 8 10 10 6 9 13 7 4 18 9 7 11 10 10 4 12 21 1 e 32 3 1 6 0 6 3 7 6 7 8 30 3 i 36 14 10 9 8 15 12 5 7 9 9 11 17 11 7 22 11 9 14 14 16 7 15 22 4 e 15 5 6 0 4 11 9 10 9 31 3 i 38 9 19 20 13 7 5 17 18 19 8 8 6 5 12 10 7 7 5 4 2 9 5 23 1 e 30 4 4 8 3 4 0 9 8 8 8 32 7 i 1, i 18, i 19, i 10, i 37, i 32 >=4 outliers 24 1 e 11 9 7 11 9 0 4 5 7 33 4 gap: (24, 31) CLUS 1<27. 5 (50 versi, 49 virg) CLUS 2>27. 5 (50 set, 1 virg) 31 2 e 44 9 7 8 6 9 8 4 0 1 4 34 1 33 2 e 8 9 8 8 7 10 8 5 1 0 4 35 1 34 12 Sparse hi end (checking [38, 39] e 49 10 8 9 8 7 4 4 0 36 2 35 8 38 38 39 39 e 30, e 49, ei 15, e 11 >=4 singleton ols 37 2 36 17 s 42 s 36 s 37 s 1 {e 44, e 8} doubleton ols 39 1 Thinning (8, 13) 37 6 s 42 0 10 16 21 41 1 CLUS 1 Split in middle=10. 5 38 2 s 36 10 0 6 11 42 1 Dotgp>=4 CLUS_1. 1<10. 5 (21 virg, 2 ver) 39 2 s 37 16 6 0 6 43 1 p=nnnn CLUS_1. 2>10. 5 (12 virg, 42 ver) s 15 21 11 6 0 q=xxxx Clus 1 s 37, s 1 outliers 0 1 p=nnxn Sparse hi end (checking [10, 13] Thinning (7, 9) 1 2 q=xxnx 10 10 11 11 13 13 Split in middle=7. 5 2 2 0 2 CLUS 1 e 34 i 2 i 14 i 43 e 41 i 20 i 7 i 35 CLUS_1. 2. 1 < 7. 5 (10 virg, 4 ver) 3 1 1 1 Dotgp>=4 CLUS 1. 2 CLUS_1. 2. 2 > 7. 5 ( 1 virg, 38 ver) e 34 0 4 5 4 10 5 13 6 4 2 2 5 p=nnnx Dotgp>=4 i 15 gap>=4 outlier at F=0 i 2 4 0 3 0 10 7 11 8 5 1 3 8 q=xxxn p=aaan hi end gap outlier i 30 i 14 5 3 0 3 10 7 10 9 6 6 4 9 CLUS 1. 2. 1 0 1 q=aaax i 43 4 0 3 0 10 7 11 8 7 2 5 6 Dotgp>=4 4 1 CLUS 1. 2. 1 0 1 e 41 10 10 0 9 8 14 8 3 6 9 p=anaa 5 3 Dotgp>=4 4 4 i 20 5 7 7 7 9 0 13 7 9 1 7 14 q=axaa 6 5 p=aana 5 3 i 7 13 11 10 11 8 13 0 17 10 2 8 11 0 1 7 4 q=aaxa 6 3 i 35 6 8 9 8 14 7 17 0 11 2 9 7 1 1 8 3 0 5 7 4 i 7, i 35 >=4 singleton outliers 12 2 10 4 2 1 9 6 1 2 8 1 13 6 11 2 4 2 10 7 2 3 9 5 14 6 13 2 6 3 11 3 3 2 10 7 15 7 7 4 12 4 4 1 11 3 16 2 C. 2. 1 0 0 1 2 3 3 4 4 5 5 6 7 9 2 13 8 6 1 12 5 i 24 e 7 i 34 i 47 i 28 e 34 e 36 e 21 i 50 i 2 i 43 i 14 i 22 17 2 14 4 13 3 i 24 0 7 4 2 2 4 4 9 6 5 5 5 7 7 18 3 15 4 14 6 e 7 7 0 6 9 6 5 8 4 5 7 9 9 11 10 19 3 CLUS 1. 2. 1 16 3 i 34 4 6 0 5 4 5 3 9 7 5 6 6 8 9 15 1 20 2 p=naaa 17 8 i 47 2 9 5 0 4 6 5 11 8 7 5 5 6 8 16 4 21 2 q=xaaa i 27 2 6 4 4 0 2 4 7 5 5 6 6 18 5 17 1 22 3 0 4 i 28 4 5 5 6 2 0 4 6 3 3 5 5 7 6 19 3 18 1 e 34 4 8 3 5 4 4 0 9 6 4 4 4 5 6 23 4 1 1 20 1 19 2 e 36 9 4 9 11 7 6 9 0 4 8 10 10 11 9 24 2 2 1 21 1 e 21 6 5 7 8 5 3 6 4 0 4 6 6 8 5 25 1 3 2 22 3 i 50 5 7 5 3 4 8 4 0 3 3 6 5 26 2 4 2 23 1 i 2 5 9 6 5 5 5 4 10 6 3 0 0 3 3 27 3 5 2 i 43 5 9 6 5 5 5 4 10 6 3 0 0 3 3 28 1 6 1 i 14 7 11 8 6 6 7 5 11 8 6 3 3 0 3 i 22 7 10 9 8 6 6 6 9 5 5 3 3 3 0 29 1 7 1 DPP (other corners) Check Dotp, d(y) gaps>=4 Check sparse ends. Sparse low end (checking [0, 7]

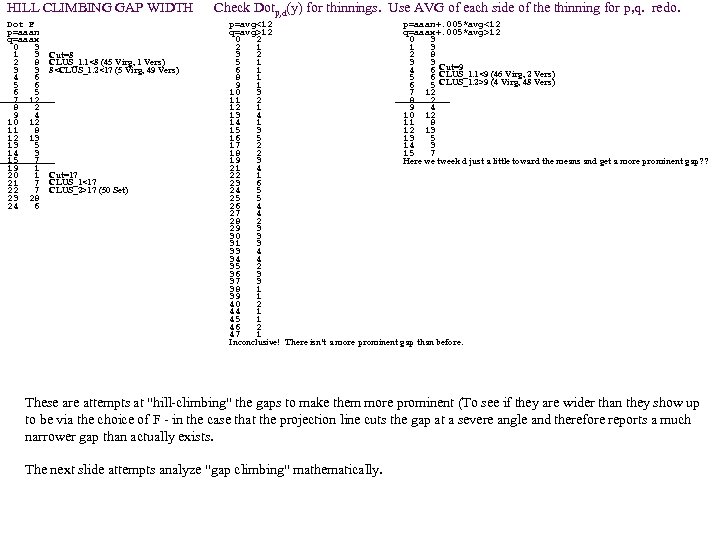

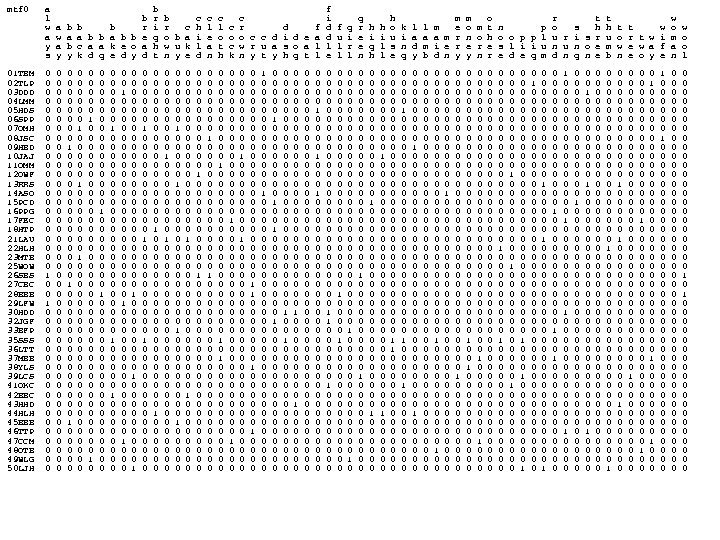

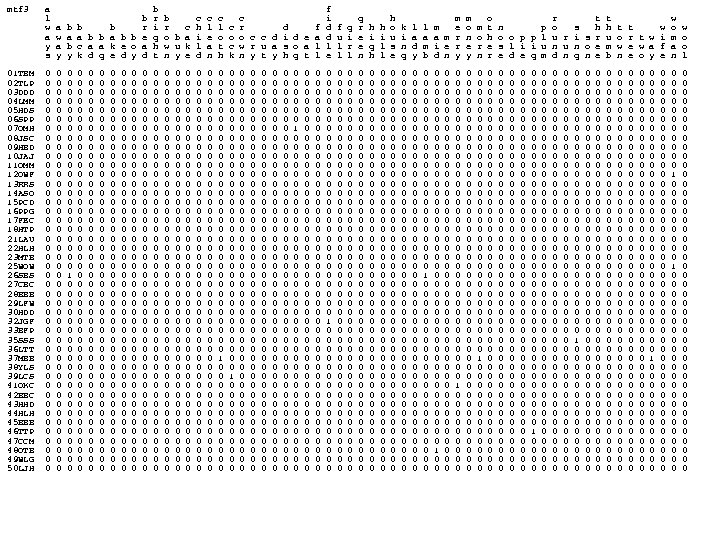

HILL CLIMBING GAP WIDTH Check Dotp, d(y) for thinnings. Use AVG of each side of the thinning for p, q. redo. Dot F p=aaan q=aaax 0 3 1 3 2 8 3 3 4 6 5 6 6 5 7 12 8 2 9 4 10 12 11 8 12 13 13 5 14 3 15 7 19 1 20 1 21 7 22 7 23 28 24 6 Cut=8 CLUS_1. 1<8 (45 Virg, 1 Vers) 8

HILL CLIMBING GAP WIDTH Check Dotp, d(y) for thinnings. Use AVG of each side of the thinning for p, q. redo. Dot F p=aaan q=aaax 0 3 1 3 2 8 3 3 4 6 5 6 6 5 7 12 8 2 9 4 10 12 11 8 12 13 13 5 14 3 15 7 19 1 20 1 21 7 22 7 23 28 24 6 Cut=8 CLUS_1. 1<8 (45 Virg, 1 Vers) 8

"Gap Hill Climbing": mathematical analysis One way to increase the size of the functional gaps is to hill climb the standard deviation of the functional, F (hoping that a "rotation" of d toward a higher STDev would increase the likelihood that gaps would be larger ( more dispersion allows for more and/or larger gaps). This is very general. We are more interested in growing the one particular gap of interest (largest gap or largest thinning). To do this we can do as follows: F-slices are hyperplanes (assuming F=dotd) so it would makes sense to try to "re-orient" d so that the gap grows. Instead of taking the "improved" p and q to be the means of the entire n-dimensional half-spaces which is cut by the gap (or thinning), take as p and q to be the means of the F-slice (n-1)-dimensional hyperplanes defining the gap or thinning. This is easy since our method produces the p. Tree mask of each F-slice ordered by increasing F-value (in fact it is the sequence of F-values and the sequence of counts of points that give us those value that we use to find large gaps in the first place. ). The d 2 -gap is much larger than the d 1=gap. It is still not the optimal gap though. Would it be better to use a weighted mean (weighted by the distance from the gap - that is weighted by the d-barrel radius (from the center of the gap) on which each point lies? ) In this example it seems to make for a larger gap, but what weightings should be used? (e. g. , 1/radius 2) (zero weighting after the first gap is identical to the previous). Also we really want to identify the Support vector pair of the gap (the pair, one from one side and the other from the other side which are closest together) as p and q (in this case, 9 and a but we were just lucky to draw our vector through them. ) We could check the d-barrel radius of just these gap slice pairs and select the closest pair as p and q? ? ? 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 0 2 3 4 5 6 7 8 =p 9 d 2 -gap d 2 d 1 -g ap j e m n r f s o g p h i d 1 l q 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 2 3 4 5 p 6 7 8 9 d 1 -gap p d k c f e d c b a 9 8 7 6 5 4 3 2 1 0 d 2 -ga a b q= d 2 f e d c b a 9 8 7 6 5 4 3 2 1 0 a b d d 1 j k qc e q f

"Gap Hill Climbing": mathematical analysis One way to increase the size of the functional gaps is to hill climb the standard deviation of the functional, F (hoping that a "rotation" of d toward a higher STDev would increase the likelihood that gaps would be larger ( more dispersion allows for more and/or larger gaps). This is very general. We are more interested in growing the one particular gap of interest (largest gap or largest thinning). To do this we can do as follows: F-slices are hyperplanes (assuming F=dotd) so it would makes sense to try to "re-orient" d so that the gap grows. Instead of taking the "improved" p and q to be the means of the entire n-dimensional half-spaces which is cut by the gap (or thinning), take as p and q to be the means of the F-slice (n-1)-dimensional hyperplanes defining the gap or thinning. This is easy since our method produces the p. Tree mask of each F-slice ordered by increasing F-value (in fact it is the sequence of F-values and the sequence of counts of points that give us those value that we use to find large gaps in the first place. ). The d 2 -gap is much larger than the d 1=gap. It is still not the optimal gap though. Would it be better to use a weighted mean (weighted by the distance from the gap - that is weighted by the d-barrel radius (from the center of the gap) on which each point lies? ) In this example it seems to make for a larger gap, but what weightings should be used? (e. g. , 1/radius 2) (zero weighting after the first gap is identical to the previous). Also we really want to identify the Support vector pair of the gap (the pair, one from one side and the other from the other side which are closest together) as p and q (in this case, 9 and a but we were just lucky to draw our vector through them. ) We could check the d-barrel radius of just these gap slice pairs and select the closest pair as p and q? ? ? 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 0 2 3 4 5 6 7 8 =p 9 d 2 -gap d 2 d 1 -g ap j e m n r f s o g p h i d 1 l q 0 1 2 3 4 5 6 7 8 9 a b c d e f 1 2 3 4 5 p 6 7 8 9 d 1 -gap p d k c f e d c b a 9 8 7 6 5 4 3 2 1 0 d 2 -ga a b q= d 2 f e d c b a 9 8 7 6 5 4 3 2 1 0 a b d d 1 j k qc e q f

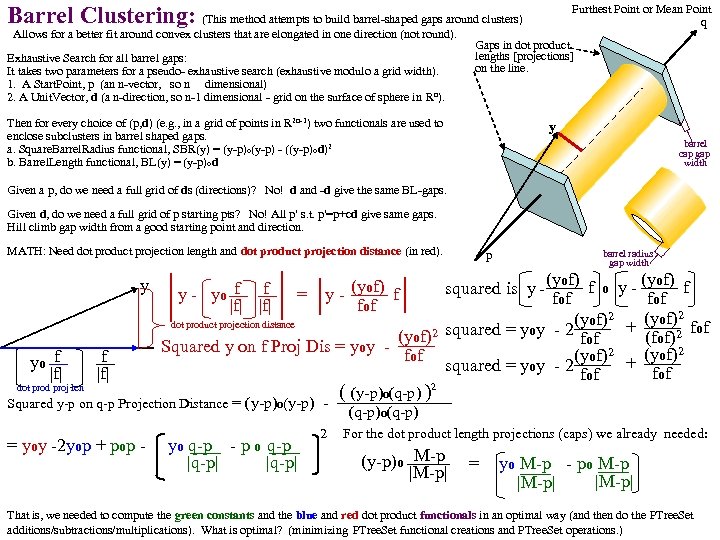

Barrel Clustering: (This method attempts to build barrel-shaped gaps around clusters) Allows for a better fit around convex clusters that are elongated in one direction (not round). Exhaustive Search for all barrel gaps: It takes two parameters for a pseudo- exhaustive search (exhaustive modulo a grid width). 1. A Start. Point, p (an n-vector, so n dimensional) 2. A Unit. Vector, d (a n-direction, so n-1 dimensional - grid on the surface of sphere in Rn). Furthest Point or Mean Point q Gaps in dot product lengths [projections] on the line. Then for every choice of (p, d) (e. g. , in a grid of points in R 2 n-1) two functionals are used to enclose subclusters in barrel shaped gaps. a. Square. Barrel. Radius functional, SBR(y) = (y-p)o(y-p) - ((y-p)od)2 b. Barrel. Length functional, BL(y) = (y-p)od y barrel cap gap width Given a p, do we need a full grid of ds (directions)? No! d and -d give the same BL-gaps. Given d, do we need a full grid of p starting pts? No! All p' s. t. p'=p+cd give same gaps. Hill climb gap width from a good starting point and direction. MATH: Need dot product projection length and dot product projection distance (in red). y yo f |f| p barrel radius gap width (yof) f o y - of) f (y squared is y - fof (yof)2 + fof dot product projection distance (yof)2 squared = yoy - 2 fof (fof)2 Squared y on f Proj Dis = yoy f (yof)2 + fof squared = yoy - 2 fof y - yo f f |f| (y = y - of) f fof ( (y-p)o(q-p) )2 Squared y-p on q-p Projection Distance = (y-p)o(y-p) (q-p)o(q-p) dot prod proj len 1 st = yoy -2 yop + pop - ( yo(q-p) - p o(q-p |q-p| 2 For the dot product length projections (caps) we already needed: (y-p)o M-p = ( yo(M-p) - po M-p ) |M-p| That is, we needed to compute the green constants and the blue and red dot product functionals in an optimal way (and then do the PTree. Set additions/subtractions/multiplications). What is optimal? (minimizing PTree. Set functional creations and PTree. Set operations. )

Barrel Clustering: (This method attempts to build barrel-shaped gaps around clusters) Allows for a better fit around convex clusters that are elongated in one direction (not round). Exhaustive Search for all barrel gaps: It takes two parameters for a pseudo- exhaustive search (exhaustive modulo a grid width). 1. A Start. Point, p (an n-vector, so n dimensional) 2. A Unit. Vector, d (a n-direction, so n-1 dimensional - grid on the surface of sphere in Rn). Furthest Point or Mean Point q Gaps in dot product lengths [projections] on the line. Then for every choice of (p, d) (e. g. , in a grid of points in R 2 n-1) two functionals are used to enclose subclusters in barrel shaped gaps. a. Square. Barrel. Radius functional, SBR(y) = (y-p)o(y-p) - ((y-p)od)2 b. Barrel. Length functional, BL(y) = (y-p)od y barrel cap gap width Given a p, do we need a full grid of ds (directions)? No! d and -d give the same BL-gaps. Given d, do we need a full grid of p starting pts? No! All p' s. t. p'=p+cd give same gaps. Hill climb gap width from a good starting point and direction. MATH: Need dot product projection length and dot product projection distance (in red). y yo f |f| p barrel radius gap width (yof) f o y - of) f (y squared is y - fof (yof)2 + fof dot product projection distance (yof)2 squared = yoy - 2 fof (fof)2 Squared y on f Proj Dis = yoy f (yof)2 + fof squared = yoy - 2 fof y - yo f f |f| (y = y - of) f fof ( (y-p)o(q-p) )2 Squared y-p on q-p Projection Distance = (y-p)o(y-p) (q-p)o(q-p) dot prod proj len 1 st = yoy -2 yop + pop - ( yo(q-p) - p o(q-p |q-p| 2 For the dot product length projections (caps) we already needed: (y-p)o M-p = ( yo(M-p) - po M-p ) |M-p| That is, we needed to compute the green constants and the blue and red dot product functionals in an optimal way (and then do the PTree. Set additions/subtractions/multiplications). What is optimal? (minimizing PTree. Set functional creations and PTree. Set operations. )

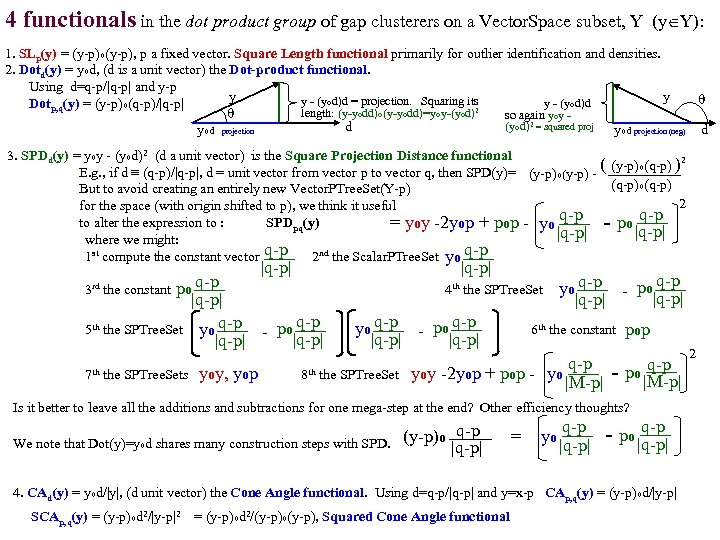

4 functionals in the dot product group of gap clusterers on a Vector. Space subset, Y (y Y): 1. SLp(y) = (y-p)o(y-p), p a fixed vector. Square Length functional primarily for outlier identification and densities. 2. Dotd(y) = yod, (d is a unit vector) the Dot-product functional. Using d=q-p/|q-p| and y-p y y y - (yod)d = projection. Squaring its y - (yod)d Dotp, q(y) = (y-p)o(q-p)/|q-p| 2 length: (y-yodd)o(y-yodd)=yoy-(yod) so again yoy - (yod)2 = squared proj d yod projection yod projection (neg) d 3. SPDd(y) = yoy - (yod)2 (d a unit vector) is the Square Projection Distance functional ( (y-p)o(q-p) )2 E. g. , if d ≡ (q-p)/|q-p|, d = unit vector from vector p to vector q, then SPD(y)= (y-p)o(y-p) (q-p)o(q-p) But to avoid creating an entirely new Vector. PTree. Set(Y-p) 2 for the space (with origin shifted to p), we think it useful q-p to alter the expression to : SPDpq(y) = yoy -2 yop + pop - yo - po |q-p| where we might: q-p 1 st compute the constant vector 2 nd the Scalar. PTree. Set yo q-p |q-p| q-p po 3 rd the constant |q-p| 5 th the SPTree. Set yo q-p - po q-p |q-p| 7 th the SPTree. Sets yoy, yop |q-p| 4 th the SPTree. Set yo q-p - po q-p |q-p| 6 th the constant pop q-p - po |M-p| 8 th the SPTree. Set= yoy -2 yop + pop - yo |M-p| Is it better to leave all the additions and subtractions for one mega-step at the end? Other efficiency thoughts? We note that Dot(y)=yod shares many construction steps with SPD. (y-p)o q-p |q-p| = yo q-p - po |q-p| 4. CAd(y) = yod/|y|, (d unit vector) the Cone Angle functional. Using d=q-p/|q-p| and y=x-p CAp, q(y) = (y-p)od/|y-p| SCAp, q(y) = (y-p)od 2/|y-p|2 = (y-p)od 2/(y-p)o(y-p), Squared Cone Angle functional 2

4 functionals in the dot product group of gap clusterers on a Vector. Space subset, Y (y Y): 1. SLp(y) = (y-p)o(y-p), p a fixed vector. Square Length functional primarily for outlier identification and densities. 2. Dotd(y) = yod, (d is a unit vector) the Dot-product functional. Using d=q-p/|q-p| and y-p y y y - (yod)d = projection. Squaring its y - (yod)d Dotp, q(y) = (y-p)o(q-p)/|q-p| 2 length: (y-yodd)o(y-yodd)=yoy-(yod) so again yoy - (yod)2 = squared proj d yod projection yod projection (neg) d 3. SPDd(y) = yoy - (yod)2 (d a unit vector) is the Square Projection Distance functional ( (y-p)o(q-p) )2 E. g. , if d ≡ (q-p)/|q-p|, d = unit vector from vector p to vector q, then SPD(y)= (y-p)o(y-p) (q-p)o(q-p) But to avoid creating an entirely new Vector. PTree. Set(Y-p) 2 for the space (with origin shifted to p), we think it useful q-p to alter the expression to : SPDpq(y) = yoy -2 yop + pop - yo - po |q-p| where we might: q-p 1 st compute the constant vector 2 nd the Scalar. PTree. Set yo q-p |q-p| q-p po 3 rd the constant |q-p| 5 th the SPTree. Set yo q-p - po q-p |q-p| 7 th the SPTree. Sets yoy, yop |q-p| 4 th the SPTree. Set yo q-p - po q-p |q-p| 6 th the constant pop q-p - po |M-p| 8 th the SPTree. Set= yoy -2 yop + pop - yo |M-p| Is it better to leave all the additions and subtractions for one mega-step at the end? Other efficiency thoughts? We note that Dot(y)=yod shares many construction steps with SPD. (y-p)o q-p |q-p| = yo q-p - po |q-p| 4. CAd(y) = yod/|y|, (d unit vector) the Cone Angle functional. Using d=q-p/|q-p| and y=x-p CAp, q(y) = (y-p)od/|y-p| SCAp, q(y) = (y-p)od 2/|y-p|2 = (y-p)od 2/(y-p)o(y-p), Squared Cone Angle functional 2

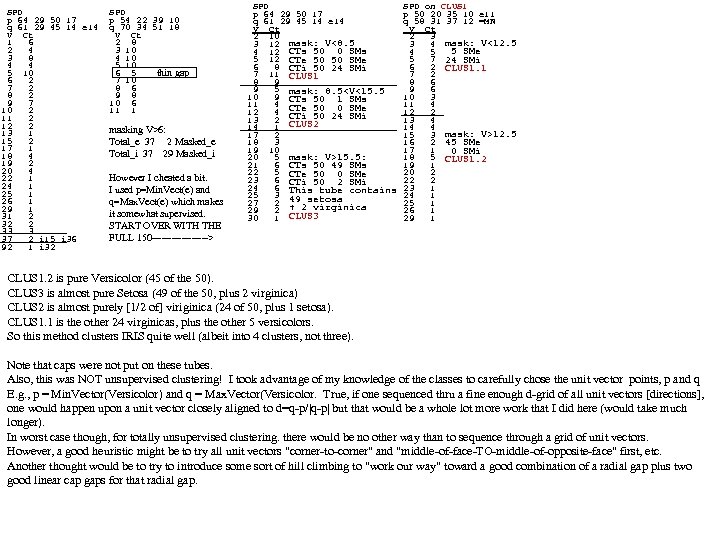

SPD p 64 29 50 17 q 61 29 45 14 e 14 V Ct 1 6 2 4 3 8 4 4 5 10 6 2 7 2 8 2 9 7 10 2 11 2 12 2 13 1 15 2 17 1 18 4 19 2 20 4 22 1 24 1 25 1 26 1 29 1 31 2 32 2 33 3 37 2 i 15 i 36 92 1 i 32 SPD p 54 22 39 10 q 70 34 51 18 V Ct 2 8 3 10 4 10 5 10 6 5 thin gap 7 10 8 6 9 8 10 6 11 1 masking V>6: Total_e 37 2 Masked_e Total_i 37 29 Masked_i However I cheated a bit. I used p=Min. Vect(e) and q=Max. Vect(e) which makes it somewhat supervised. START OVER WITH THE FULL 150 ---------> SPD on CLUS 1 p 64 29 50 17 p 50 20 35 10 e 11 q 61 29 45 14 e 14 q 58 31 37 12 =MN V Ct 2 10 2 3 3 12 mask: V<8. 5 3 4 mask: V<12. 5 4 12 CTs 50 0 SMs 4 5 5 SMe 5 12 CTe 50 50 SMe 5 7 24 SMi 6 8 CTi 50 24 SMi 6 2 CLUS 1. 1 7 11 CLUS 1 7 2 8 9 8 6 9 5 mask: 8. 5

SPD p 64 29 50 17 q 61 29 45 14 e 14 V Ct 1 6 2 4 3 8 4 4 5 10 6 2 7 2 8 2 9 7 10 2 11 2 12 2 13 1 15 2 17 1 18 4 19 2 20 4 22 1 24 1 25 1 26 1 29 1 31 2 32 2 33 3 37 2 i 15 i 36 92 1 i 32 SPD p 54 22 39 10 q 70 34 51 18 V Ct 2 8 3 10 4 10 5 10 6 5 thin gap 7 10 8 6 9 8 10 6 11 1 masking V>6: Total_e 37 2 Masked_e Total_i 37 29 Masked_i However I cheated a bit. I used p=Min. Vect(e) and q=Max. Vect(e) which makes it somewhat supervised. START OVER WITH THE FULL 150 ---------> SPD on CLUS 1 p 64 29 50 17 p 50 20 35 10 e 11 q 61 29 45 14 e 14 q 58 31 37 12 =MN V Ct 2 10 2 3 3 12 mask: V<8. 5 3 4 mask: V<12. 5 4 12 CTs 50 0 SMs 4 5 5 SMe 5 12 CTe 50 50 SMe 5 7 24 SMi 6 8 CTi 50 24 SMi 6 2 CLUS 1. 1 7 11 CLUS 1 7 2 8 9 8 6 9 5 mask: 8. 5

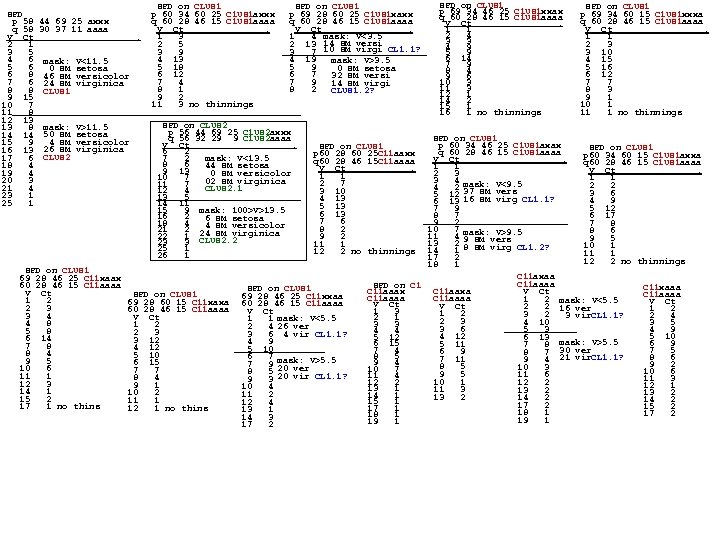

SPD p 58 44 69 25 axxx q 58 30 37 11 aaaa V Ct. 2 1 3 5 4 6 mask: V<11. 5 5 6 0 SM setosa 6 8 46 SM versicolor 7 6 24 SM virginica 8 8 CLUS 1 9 15 10 7 11 8 12 13 13 8 mask: V>11. 5 14 14 50 SM setosa 4 SM versicolor 15 9 16 13 26 SM virginica CLUS 2 17 6 18 4 19 4 20 3 21 4 23 1 25 1 SPD on CLUS 1 69 28 46 25 C 11 xaax 60 28 46 15 C 11 aaaa V Ct 1 2 2 3 3 4 4 8 5 8 6 14 7 8 8 4 9 5 10 6 11 1 12 3 14 1 15 2 17 1 no thins SPD on CLUS 1 p 60 34 60 25 C 1 US 1 axxx q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 3 2 5 3 9 4 13 5 18 6 12 7 4 8 1 9 2 11 3 no thinnings SPD on CLUS 1 p 69 28 60 25 C 1 US 1 xaxx q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 4 mask: V<3. 5 2 13 14 SM versi 3 7 10 SM virgi CL 1. 1? 4 19 mask: V>3. 5 5 9 0 SM setosa 6 7 32 SM versi 7 9 14 SM virgi 8 2 CLUS 1. 2? SPD on CLUS 2 p 56 44 69 25 C 1 US 2 axxx q 56 32 29 9 C 1 US 2 aaaa V Ct. 6 2 7 2 mask: V<13. 5 8 6 44 SM setosa 9 13 0 SM versicolor 10 7 02 SM virginica 11 7 CLUS 2. 1 12 4 13 5 14 11 15 9 mask: 100>V>13. 5 16 2 6 SM setosa 18 4 4 21 2 24 SM versicolor SM virginica 22 1 CLUS 2. 2 23 3 25 1 26 1 SPD on CLUS 1 69 28 60 15 C 11 xaxa 60 28 46 15 C 11 aaaa V Ct 1 2 2 3 3 12 4 12 5 10 6 15 7 7 8 4 9 1 10 2 11 1 12 1 no thins SPD on CLUS 1 p 69 34 60 15 C 1 US 1 xxxa q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 1 2 3 3 10 4 15 5 16 6 12 7 7 8 3 9 1 10 1 11 1 no thinnings SPD on CLUS 1 p 60 34 46 25 C 1 US 1 axax SPD on CLUS 1 q 60 28 46 15 C 1 US 1 aaaa p 60 34 60 15 C 1 US 1 axxa V Ct. q 60 28 46 15 C 1 US 1 aaaa 1 1 V Ct. 2 3 1 1 3 4 mask: V<9. 5 2 2 4 2 3 6 5 12 37 SM vers 4 9 6 13 16 SM virg CL 1. 1? 5 12 7 9 8 7 6 17 9 2 7 8 10 7 mask: V>9. 5 8 6 11 4 9 SM vers 9 5 13 2 8 SM virg CL 1. 2? 10 1 14 1 11 1 17 2 12 2 no thinnings 18 1 C 11 axaa C 11 aaaa SPD on C 11 xaaa C 11 aaxa C 11 aaax V Ct C 11 aaaa 1 2 mask: V<5. 5 V Ct 2 2 16 ver 1 2 1 3 1 2 3 2 2 4 3 vir. CL 1. 1? 2 1 2 3 4 10 3 5 3 3 3 6 4 9 5 3 4 4 5 10 4 12 5 12 6 13 6 9 6 15 5 11 7 8 mask: V>5. 5 7 4 7 5 6 9 8 7 30 ver 8 5 8 6 7 11 9 4 21 vir. CL 1. 1? 9 4 9 2 8 5 10 3 10 7 10 6 9 5 11 6 11 4 11 3 10 1 12 2 12 1 13 1 11 3 13 2 14 1 13 2 14 2 15 1 17 2 15 2 17 1 18 1 17 2 18 1 19 1 SPD on CLUS 1 p 60 28 60 25 C 11 aaxx q 60 28 46 15 C 11 aaaa V Ct. 1 1 2 7 3 10 4 13 5 13 6 13 7 6 8 2 9 2 11 1 12 2 no thinnings SPD on CLUS 1 69 28 46 25 C 11 xxaa 60 28 46 15 C 11 aaaa V Ct 1 1 mask: V<5. 5 2 4 26 ver 3 6 4 vir CL 1. 1? 4 9 5 10 6 7 mask: V>5. 5 7 9 20 ver 8 5 20 vir CL 1. 1? 9 3 10 4 11 2 12 4 13 1 14 3 17 2 SPD on CLUS 1 p 69 34 46 25 C 1 US 1 xxax q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 1 2 4 3 3 4 9 5 9 6 14 7 9 8 4 9 6 10 3 11 3 12 1 14 2 15 1 16 1 no thinnings

SPD p 58 44 69 25 axxx q 58 30 37 11 aaaa V Ct. 2 1 3 5 4 6 mask: V<11. 5 5 6 0 SM setosa 6 8 46 SM versicolor 7 6 24 SM virginica 8 8 CLUS 1 9 15 10 7 11 8 12 13 13 8 mask: V>11. 5 14 14 50 SM setosa 4 SM versicolor 15 9 16 13 26 SM virginica CLUS 2 17 6 18 4 19 4 20 3 21 4 23 1 25 1 SPD on CLUS 1 69 28 46 25 C 11 xaax 60 28 46 15 C 11 aaaa V Ct 1 2 2 3 3 4 4 8 5 8 6 14 7 8 8 4 9 5 10 6 11 1 12 3 14 1 15 2 17 1 no thins SPD on CLUS 1 p 60 34 60 25 C 1 US 1 axxx q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 3 2 5 3 9 4 13 5 18 6 12 7 4 8 1 9 2 11 3 no thinnings SPD on CLUS 1 p 69 28 60 25 C 1 US 1 xaxx q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 4 mask: V<3. 5 2 13 14 SM versi 3 7 10 SM virgi CL 1. 1? 4 19 mask: V>3. 5 5 9 0 SM setosa 6 7 32 SM versi 7 9 14 SM virgi 8 2 CLUS 1. 2? SPD on CLUS 2 p 56 44 69 25 C 1 US 2 axxx q 56 32 29 9 C 1 US 2 aaaa V Ct. 6 2 7 2 mask: V<13. 5 8 6 44 SM setosa 9 13 0 SM versicolor 10 7 02 SM virginica 11 7 CLUS 2. 1 12 4 13 5 14 11 15 9 mask: 100>V>13. 5 16 2 6 SM setosa 18 4 4 21 2 24 SM versicolor SM virginica 22 1 CLUS 2. 2 23 3 25 1 26 1 SPD on CLUS 1 69 28 60 15 C 11 xaxa 60 28 46 15 C 11 aaaa V Ct 1 2 2 3 3 12 4 12 5 10 6 15 7 7 8 4 9 1 10 2 11 1 12 1 no thins SPD on CLUS 1 p 69 34 60 15 C 1 US 1 xxxa q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 1 2 3 3 10 4 15 5 16 6 12 7 7 8 3 9 1 10 1 11 1 no thinnings SPD on CLUS 1 p 60 34 46 25 C 1 US 1 axax SPD on CLUS 1 q 60 28 46 15 C 1 US 1 aaaa p 60 34 60 15 C 1 US 1 axxa V Ct. q 60 28 46 15 C 1 US 1 aaaa 1 1 V Ct. 2 3 1 1 3 4 mask: V<9. 5 2 2 4 2 3 6 5 12 37 SM vers 4 9 6 13 16 SM virg CL 1. 1? 5 12 7 9 8 7 6 17 9 2 7 8 10 7 mask: V>9. 5 8 6 11 4 9 SM vers 9 5 13 2 8 SM virg CL 1. 2? 10 1 14 1 11 1 17 2 12 2 no thinnings 18 1 C 11 axaa C 11 aaaa SPD on C 11 xaaa C 11 aaxa C 11 aaax V Ct C 11 aaaa 1 2 mask: V<5. 5 V Ct 2 2 16 ver 1 2 1 3 1 2 3 2 2 4 3 vir. CL 1. 1? 2 1 2 3 4 10 3 5 3 3 3 6 4 9 5 3 4 4 5 10 4 12 5 12 6 13 6 9 6 15 5 11 7 8 mask: V>5. 5 7 4 7 5 6 9 8 7 30 ver 8 5 8 6 7 11 9 4 21 vir. CL 1. 1? 9 4 9 2 8 5 10 3 10 7 10 6 9 5 11 6 11 4 11 3 10 1 12 2 12 1 13 1 11 3 13 2 14 1 13 2 14 2 15 1 17 2 15 2 17 1 18 1 17 2 18 1 19 1 SPD on CLUS 1 p 60 28 60 25 C 11 aaxx q 60 28 46 15 C 11 aaaa V Ct. 1 1 2 7 3 10 4 13 5 13 6 13 7 6 8 2 9 2 11 1 12 2 no thinnings SPD on CLUS 1 69 28 46 25 C 11 xxaa 60 28 46 15 C 11 aaaa V Ct 1 1 mask: V<5. 5 2 4 26 ver 3 6 4 vir CL 1. 1? 4 9 5 10 6 7 mask: V>5. 5 7 9 20 ver 8 5 20 vir CL 1. 1? 9 3 10 4 11 2 12 4 13 1 14 3 17 2 SPD on CLUS 1 p 69 34 46 25 C 1 US 1 xxax q 60 28 46 15 C 1 US 1 aaaa V Ct. 1 1 2 4 3 3 4 9 5 9 6 14 7 9 8 4 9 6 10 3 11 3 12 1 14 2 15 1 16 1 no thinnings

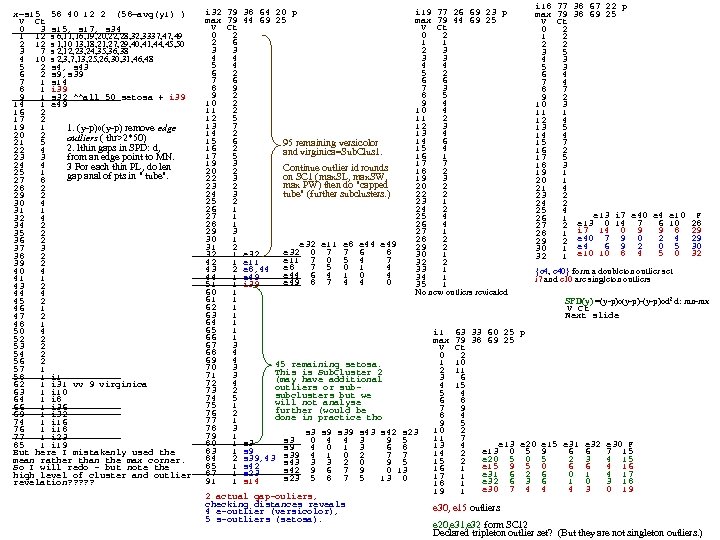

x=s 15 58 40 12 2 (58=avg(y 1) ) V Ct 0 3 s 15, s 17, s 34 1 12 s 6, 11, 16, 19, 20, 22, 28, 32, 3337, 49 2 12 s 1, 10 13, 18, 21, 27, 29, 40, 41, 44, 45, 50 3 7 s 2, 12, 23, 24, 35, 36, 38 4 10 s 2, 3, 7, 13, 25, 26, 30, 31, 46, 48 5 2 s 4, s 43 6 2 s 9, s 39 7 1 s 14 8 1 i 39 9 1 s 32 ^^all 50 setosa + i 39 14 1 e 49 16 2 17 2 19 1 1. (y-p)o(y-p) remove edge 20 2 outliers ( thr>2*50) 21 5 2. lthin gaps in SPD: d, 22 4 from an edge point to MN. 23 3 24 4 3 For each thin PL, do len 25 1 gap anal of pts in " tube". 27 8 28 2 29 2 30 4 31 1 32 4 34 2 35 2 36 2 37 3 38 2 39 2 40 4 41 1 43 2 44 4 45 2 46 1 47 2 48 1 50 4 52 2 53 2 54 2 56 2 57 1 58 1 i 1 62 1 i 31 vv 9 virginica 63 1 i 10 64 1 i 8 66 1 i 36 69 1 i 32 74 1 i 16 76 1 i 18 77 1 i 23 85 1 i 19 But here I mistakenly used the mean rather than the max corner. So I will redo - but note the high level of cluster and outlier revelation? ? ? i 18 77 38 67 22 p i 32 79 38 64 20 p i 19 77 26 69 23 p max 79 38 69 25 max 79 44 69 25 V Ct 0 2 1 2 2 6 1 1 2 2 3 3 2 3 3 5 4 4 3 3 4 3 5 4 4 4 5 3 6 2 5 2 6 4 7 6 6 6 7 4 8 9 7 3 8 7 9 2 8 5 9 2 10 2 9 4 10 3 11 2 10 4 11 1 12 5 11 2 12 4 13 7 12 3 13 5 14 2 13 4 14 4 15 6 14 6 15 7 95 remaining versicolor 16 2 15 4 16 2 and virginica=Sub. Clus 1. 17 5 16 1 17 5 19 3 17 7 18 3 Continue outlier id rounds 20 2 18 2 19 1 on SC 1 (max. SL, max. SW, 22 3 19 3 20 1 max PW) then do "capped 23 2 20 2 21 4 tube" (further subclusters. ) 24 3 22 2 23 2 25 2 23 1 24 2 26 1 24 2 25 4 27 1 25 4 26 1 e 13 i 7 e 40 e 4 e 10 F 28 1 26 4 27 2 e 13 0 14 7 6 10 28 29 3 27 1 28 1 i 7 14 0 9 9 8 29 30 1 28 2 29 2 e 40 7 9 0 2 4 29 e 32 e 11 e 8 e 44 e 49 31 2 29 2 30 1 e 4 6 9 2 0 5 30 e 32 0 7 7 6 8 32 1 e 32 30 1 32 1 e 10 10 8 4 5 0 32 e 11 7 0 5 4 7 42 1 e 11 32 2 e 8 7 5 0 1 4 43 2 e 8, 44 33 1 {e 4, e 40} form a doubleton outlier set e 44 6 4 1 0 4 44 1 e 49 34 1 i 7 and e 10 are singleton outliers e 49 8 7 4 4 0 51 1 i 39 35 1 60 1 No new outliers reviealed 61 1 SPD(y) =(y-p)o(y-p)-(y-p)od 2 d: mn-mx 62 1 V Ct 63 1 Next slide 64 1 65 1 i 1 63 33 60 25 p 66 1 max 79 38 69 25 67 3 V Ct 68 4 0 2 69 4 1 10 45 remaining setosa. 70 3 2 11 This is Sub. Cluster 2 71 3 3 6 (may have additional 72 4 4 15 outliers or sub 73 2 5 4 subclusters but we 74 5 6 8 will not analyse 75 1 7 9 further (would be 76 2 8 4 done in practice tho 77 1 9 5 78 3 10 2 s 3 s 9 s 39 s 43 s 42 s 23 79 1 11 7 s 3 0 4 4 3 9 5 80 1 s 3 e 13 e 20 e 15 e 31 e 32 e 30 F 13 4 s 9 4 0 1 3 6 8 83 1 s 9 e 13 0 5 9 6 6 7 15 14 2 s 39 4 1 0 2 7 7 84 2 s 39, 43 s 43 3 3 2 0 9 5 e 20 5 2 3 4 15 15 2 85 1 s 42 e 15 9 5 0 6 6 4 16 16 1 s 42 9 6 7 9 0 13 87 1 s 23 e 31 6 2 6 0 1 4 17 17 1 s 23 5 8 7 5 13 0 91 1 s 14 e 32 6 3 6 1 0 3 18 18 1 e 30 7 4 4 4 3 0 19 19 1 2 actual gap-ouliers, checking distances reveals e 30, e 15 outliers 4 e-outlier (versicolor), 5 s-outliers (setosa). e 20, e 31, e 32 form SC 12 Declared tripleton outlier set? (But they are not singleton outliers. )

x=s 15 58 40 12 2 (58=avg(y 1) ) V Ct 0 3 s 15, s 17, s 34 1 12 s 6, 11, 16, 19, 20, 22, 28, 32, 3337, 49 2 12 s 1, 10 13, 18, 21, 27, 29, 40, 41, 44, 45, 50 3 7 s 2, 12, 23, 24, 35, 36, 38 4 10 s 2, 3, 7, 13, 25, 26, 30, 31, 46, 48 5 2 s 4, s 43 6 2 s 9, s 39 7 1 s 14 8 1 i 39 9 1 s 32 ^^all 50 setosa + i 39 14 1 e 49 16 2 17 2 19 1 1. (y-p)o(y-p) remove edge 20 2 outliers ( thr>2*50) 21 5 2. lthin gaps in SPD: d, 22 4 from an edge point to MN. 23 3 24 4 3 For each thin PL, do len 25 1 gap anal of pts in " tube". 27 8 28 2 29 2 30 4 31 1 32 4 34 2 35 2 36 2 37 3 38 2 39 2 40 4 41 1 43 2 44 4 45 2 46 1 47 2 48 1 50 4 52 2 53 2 54 2 56 2 57 1 58 1 i 1 62 1 i 31 vv 9 virginica 63 1 i 10 64 1 i 8 66 1 i 36 69 1 i 32 74 1 i 16 76 1 i 18 77 1 i 23 85 1 i 19 But here I mistakenly used the mean rather than the max corner. So I will redo - but note the high level of cluster and outlier revelation? ? ? i 18 77 38 67 22 p i 32 79 38 64 20 p i 19 77 26 69 23 p max 79 38 69 25 max 79 44 69 25 V Ct 0 2 1 2 2 6 1 1 2 2 3 3 2 3 3 5 4 4 3 3 4 3 5 4 4 4 5 3 6 2 5 2 6 4 7 6 6 6 7 4 8 9 7 3 8 7 9 2 8 5 9 2 10 2 9 4 10 3 11 2 10 4 11 1 12 5 11 2 12 4 13 7 12 3 13 5 14 2 13 4 14 4 15 6 14 6 15 7 95 remaining versicolor 16 2 15 4 16 2 and virginica=Sub. Clus 1. 17 5 16 1 17 5 19 3 17 7 18 3 Continue outlier id rounds 20 2 18 2 19 1 on SC 1 (max. SL, max. SW, 22 3 19 3 20 1 max PW) then do "capped 23 2 20 2 21 4 tube" (further subclusters. ) 24 3 22 2 23 2 25 2 23 1 24 2 26 1 24 2 25 4 27 1 25 4 26 1 e 13 i 7 e 40 e 4 e 10 F 28 1 26 4 27 2 e 13 0 14 7 6 10 28 29 3 27 1 28 1 i 7 14 0 9 9 8 29 30 1 28 2 29 2 e 40 7 9 0 2 4 29 e 32 e 11 e 8 e 44 e 49 31 2 29 2 30 1 e 4 6 9 2 0 5 30 e 32 0 7 7 6 8 32 1 e 32 30 1 32 1 e 10 10 8 4 5 0 32 e 11 7 0 5 4 7 42 1 e 11 32 2 e 8 7 5 0 1 4 43 2 e 8, 44 33 1 {e 4, e 40} form a doubleton outlier set e 44 6 4 1 0 4 44 1 e 49 34 1 i 7 and e 10 are singleton outliers e 49 8 7 4 4 0 51 1 i 39 35 1 60 1 No new outliers reviealed 61 1 SPD(y) =(y-p)o(y-p)-(y-p)od 2 d: mn-mx 62 1 V Ct 63 1 Next slide 64 1 65 1 i 1 63 33 60 25 p 66 1 max 79 38 69 25 67 3 V Ct 68 4 0 2 69 4 1 10 45 remaining setosa. 70 3 2 11 This is Sub. Cluster 2 71 3 3 6 (may have additional 72 4 4 15 outliers or sub 73 2 5 4 subclusters but we 74 5 6 8 will not analyse 75 1 7 9 further (would be 76 2 8 4 done in practice tho 77 1 9 5 78 3 10 2 s 3 s 9 s 39 s 43 s 42 s 23 79 1 11 7 s 3 0 4 4 3 9 5 80 1 s 3 e 13 e 20 e 15 e 31 e 32 e 30 F 13 4 s 9 4 0 1 3 6 8 83 1 s 9 e 13 0 5 9 6 6 7 15 14 2 s 39 4 1 0 2 7 7 84 2 s 39, 43 s 43 3 3 2 0 9 5 e 20 5 2 3 4 15 15 2 85 1 s 42 e 15 9 5 0 6 6 4 16 16 1 s 42 9 6 7 9 0 13 87 1 s 23 e 31 6 2 6 0 1 4 17 17 1 s 23 5 8 7 5 13 0 91 1 s 14 e 32 6 3 6 1 0 3 18 18 1 e 30 7 4 4 4 3 0 19 19 1 2 actual gap-ouliers, checking distances reveals e 30, e 15 outliers 4 e-outlier (versicolor), 5 s-outliers (setosa). e 20, e 31, e 32 form SC 12 Declared tripleton outlier set? (But they are not singleton outliers. )

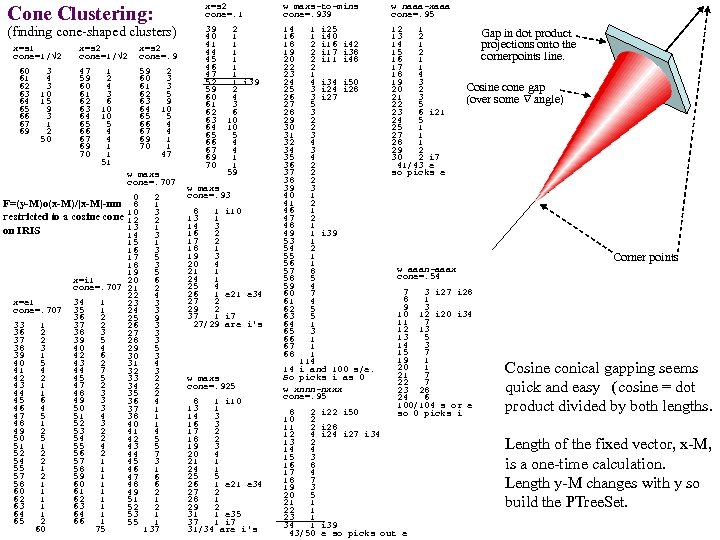

Cone Clustering: (finding cone-shaped clusters) x=s 1 cone=1/√ 2 x=s 2 cone=. 9 60 3 61 4 62 3 63 10 64 15 65 9 66 3 67 1 69 2 50 47 1 59 2 60 4 61 3 62 6 63 10 64 10 65 5 66 4 67 4 69 1 70 1 51 59 2 60 3 61 3 62 5 63 9 64 10 65 5 66 4 67 4 69 1 70 1 47 w maxs cone=. 707 0 2 F=(y-M)o(x-M)/|x-M|-mn 8 1 restricted to a cosine cone 10 3 12 2 13 1 on IRIS 14 3 15 1 16 3 17 5 18 3 19 5 x=i 1 20 6 cone=. 707 21 2 22 4 x=e 1 34 1 23 3 cone=. 707 35 1 24 3 36 2 25 9 33 1 37 2 26 3 36 2 38 3 27 3 37 2 39 5 28 3 38 3 40 4 29 5 39 1 42 6 30 3 40 5 43 2 31 4 44 7 32 3 42 2 45 5 33 2 43 1 47 2 34 2 44 1 48 3 35 2 45 6 49 3 36 4 46 4 50 3 37 1 47 5 51 4 38 1 48 1 52 3 40 1 49 2 53 2 41 4 50 5 54 2 42 5 51 1 55 4 43 5 52 2 56 2 44 7 54 2 57 1 45 3 55 1 58 1 46 1 57 2 59 1 47 6 58 1 60 1 48 6 60 1 61 1 49 2 62 1 51 1 63 1 52 2 64 1 53 1 65 2 66 1 55 1 60 75 137 x=s 2 cone=. 1 w maxs-to-mins cone=. 939 w naaa-xaaa cone=. 95 39 2 40 1 41 1 44 1 45 1 46 1 47 1 52 1 i 39 59 2 60 4 61 3 62 6 63 10 64 10 65 5 66 4 67 4 69 1 70 1 59 14 1 i 25 16 1 i 40 18 2 i 16 i 42 19 2 i 17 i 38 20 2 i 11 i 48 22 2 23 1 24 4 i 34 i 50 25 3 i 24 i 28 26 3 i 27 27 5 28 3 29 2 30 2 31 3 32 4 34 3 35 4 36 2 37 2 38 2 39 3 40 1 41 2 46 1 47 2 48 1 49 1 i 39 53 1 54 2 55 1 56 1 57 8 58 5 59 4 60 7 61 4 62 5 63 5 64 1 65 3 66 1 67 1 68 1 114 14 i and 100 s/e. So picks i as 0 w xnnn-nxxx cone=. 95 12 1 13 2 14 1 15 2 16 1 17 1 18 4 19 3 20 2 21 3 22 5 23 6 i 21 24 5 25 1 27 1 28 1 29 2 30 2 i 7 41/43 e so picks e w maxs cone=. 93 8 1 i 10 13 1 14 3 16 2 17 2 18 1 19 3 20 4 21 1 24 1 25 4 26 1 e 21 e 34 27 2 29 2 37 1 i 7 27/29 are i's w maxs cone=. 925 8 1 i 10 13 1 14 3 16 3 17 2 18 2 19 3 20 4 21 1 24 1 25 5 26 1 e 21 e 34 27 2 28 1 29 2 31 1 e 35 37 1 i 7 31/34 are i's Gap in dot product projections onto the cornerpoints line. Cosine cone gap (over some angle) Corner points w aaan-aaax cone=. 54 7 3 i 27 i 28 8 1 9 3 10 12 i 20 i 34 11 7 12 13 13 5 14 3 15 7 19 1 20 1 21 7 22 7 23 28 24 6 100/104 s or e so 0 picks i 8 2 i 22 i 50 10 2 11 2 i 28 12 4 i 27 i 34 13 2 14 4 15 3 16 8 17 4 18 7 19 3 20 5 21 1 22 1 23 1 34 1 i 39 43/50 e so picks out e Cosine conical gapping seems quick and easy (cosine = dot product divided by both lengths. Length of the fixed vector, x-M, is a one-time calculation. Length y-M changes with y so build the PTree. Set.

Cone Clustering: (finding cone-shaped clusters) x=s 1 cone=1/√ 2 x=s 2 cone=. 9 60 3 61 4 62 3 63 10 64 15 65 9 66 3 67 1 69 2 50 47 1 59 2 60 4 61 3 62 6 63 10 64 10 65 5 66 4 67 4 69 1 70 1 51 59 2 60 3 61 3 62 5 63 9 64 10 65 5 66 4 67 4 69 1 70 1 47 w maxs cone=. 707 0 2 F=(y-M)o(x-M)/|x-M|-mn 8 1 restricted to a cosine cone 10 3 12 2 13 1 on IRIS 14 3 15 1 16 3 17 5 18 3 19 5 x=i 1 20 6 cone=. 707 21 2 22 4 x=e 1 34 1 23 3 cone=. 707 35 1 24 3 36 2 25 9 33 1 37 2 26 3 36 2 38 3 27 3 37 2 39 5 28 3 38 3 40 4 29 5 39 1 42 6 30 3 40 5 43 2 31 4 44 7 32 3 42 2 45 5 33 2 43 1 47 2 34 2 44 1 48 3 35 2 45 6 49 3 36 4 46 4 50 3 37 1 47 5 51 4 38 1 48 1 52 3 40 1 49 2 53 2 41 4 50 5 54 2 42 5 51 1 55 4 43 5 52 2 56 2 44 7 54 2 57 1 45 3 55 1 58 1 46 1 57 2 59 1 47 6 58 1 60 1 48 6 60 1 61 1 49 2 62 1 51 1 63 1 52 2 64 1 53 1 65 2 66 1 55 1 60 75 137 x=s 2 cone=. 1 w maxs-to-mins cone=. 939 w naaa-xaaa cone=. 95 39 2 40 1 41 1 44 1 45 1 46 1 47 1 52 1 i 39 59 2 60 4 61 3 62 6 63 10 64 10 65 5 66 4 67 4 69 1 70 1 59 14 1 i 25 16 1 i 40 18 2 i 16 i 42 19 2 i 17 i 38 20 2 i 11 i 48 22 2 23 1 24 4 i 34 i 50 25 3 i 24 i 28 26 3 i 27 27 5 28 3 29 2 30 2 31 3 32 4 34 3 35 4 36 2 37 2 38 2 39 3 40 1 41 2 46 1 47 2 48 1 49 1 i 39 53 1 54 2 55 1 56 1 57 8 58 5 59 4 60 7 61 4 62 5 63 5 64 1 65 3 66 1 67 1 68 1 114 14 i and 100 s/e. So picks i as 0 w xnnn-nxxx cone=. 95 12 1 13 2 14 1 15 2 16 1 17 1 18 4 19 3 20 2 21 3 22 5 23 6 i 21 24 5 25 1 27 1 28 1 29 2 30 2 i 7 41/43 e so picks e w maxs cone=. 93 8 1 i 10 13 1 14 3 16 2 17 2 18 1 19 3 20 4 21 1 24 1 25 4 26 1 e 21 e 34 27 2 29 2 37 1 i 7 27/29 are i's w maxs cone=. 925 8 1 i 10 13 1 14 3 16 3 17 2 18 2 19 3 20 4 21 1 24 1 25 5 26 1 e 21 e 34 27 2 28 1 29 2 31 1 e 35 37 1 i 7 31/34 are i's Gap in dot product projections onto the cornerpoints line. Cosine cone gap (over some angle) Corner points w aaan-aaax cone=. 54 7 3 i 27 i 28 8 1 9 3 10 12 i 20 i 34 11 7 12 13 13 5 14 3 15 7 19 1 20 1 21 7 22 7 23 28 24 6 100/104 s or e so 0 picks i 8 2 i 22 i 50 10 2 11 2 i 28 12 4 i 27 i 34 13 2 14 4 15 3 16 8 17 4 18 7 19 3 20 5 21 1 22 1 23 1 34 1 i 39 43/50 e so picks out e Cosine conical gapping seems quick and easy (cosine = dot product divided by both lengths. Length of the fixed vector, x-M, is a one-time calculation. Length y-M changes with y so build the PTree. Set.

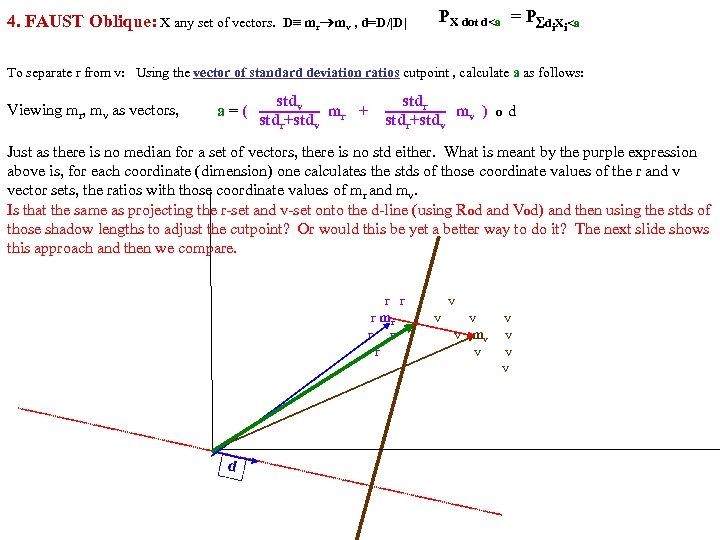

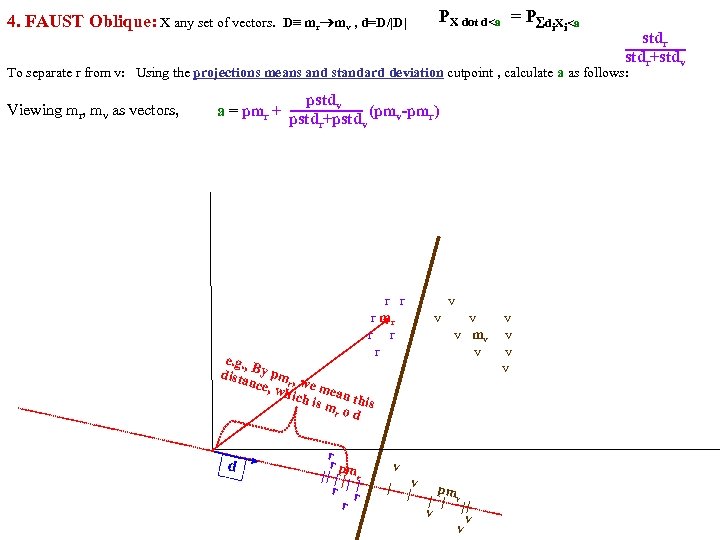

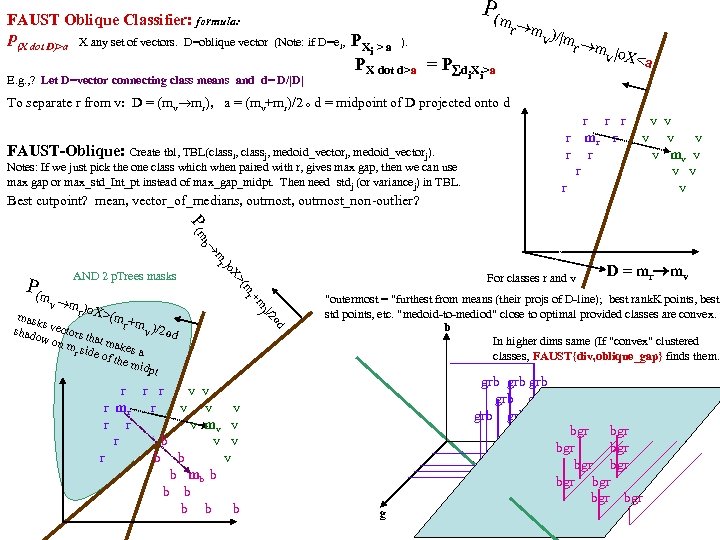

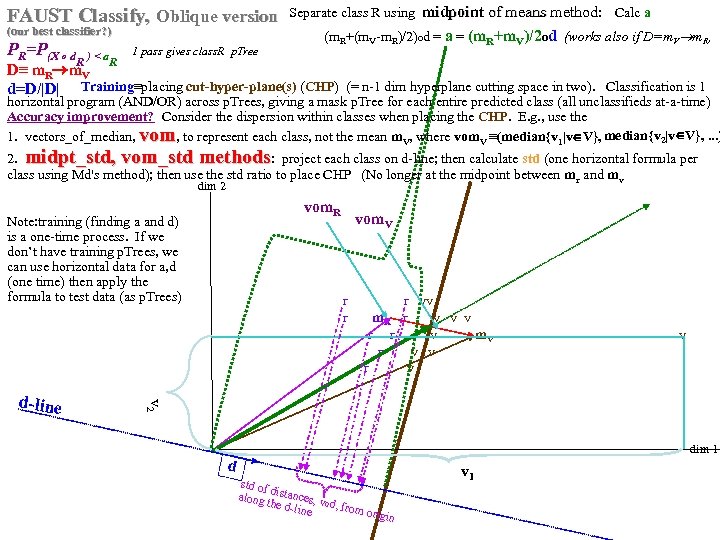

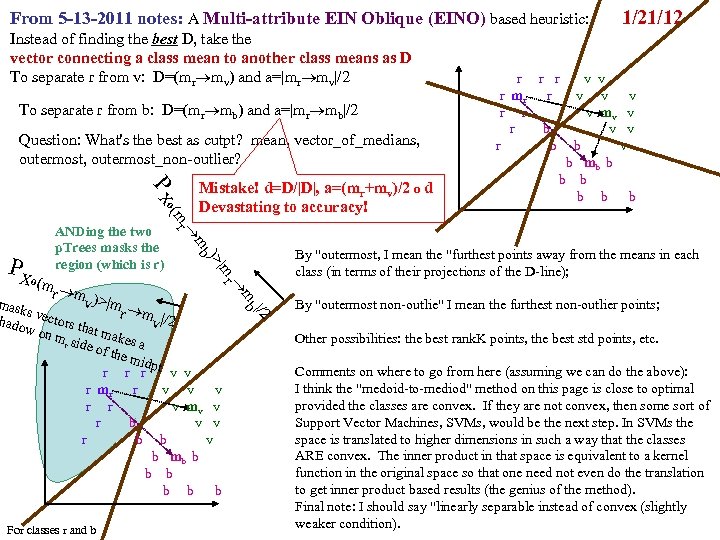

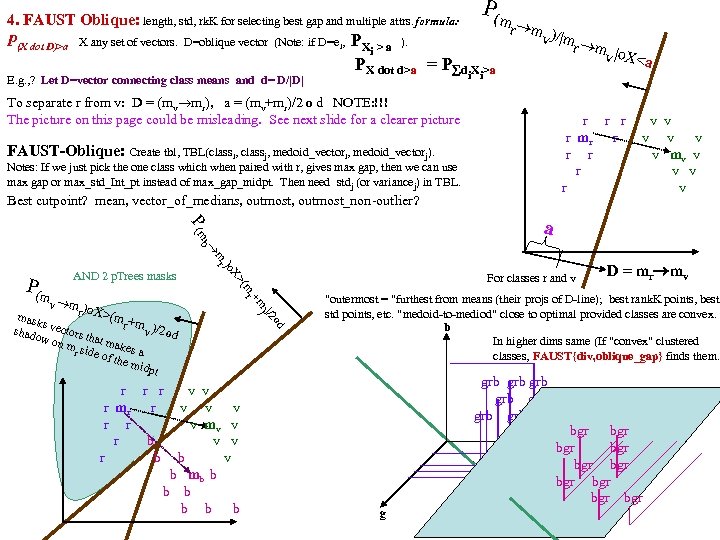

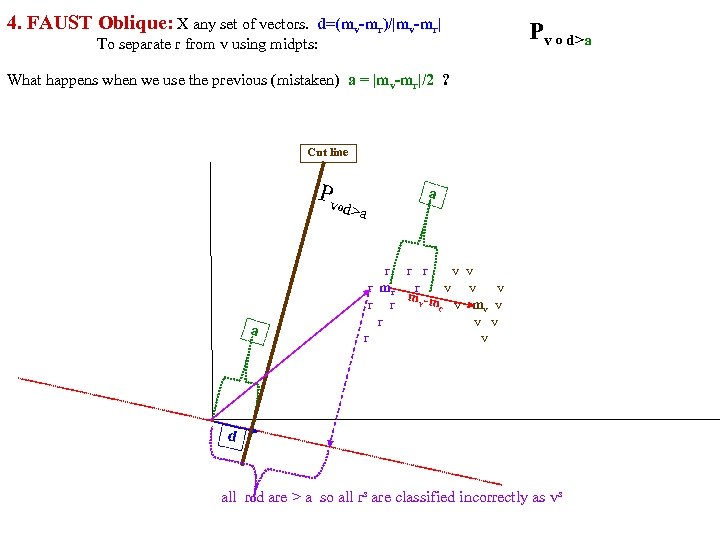

FAUST Oblique Classifier: formula: P(X dot D)>a X any set of vectors. D=oblique vector (Note: if D=ei, PX E. g. , ? Let D=vector connecting class means and d= D/|D| P(m i > a ). PX dot d>a = P d X >a i i r m )/ v |m r m |o v X< a To separate r from v: D = (mv mr), a = (mv+mr)/2 o d = midpoint of D projected onto d FAUST-Oblique: Create tbl, TBL(classi, classj, medoid_vectori, medoid_vectorj). Notes: If we just pick the one class which when paired with r, gives max gap, then we can use max gap or max_std_Int_pt instead of max_gap_midpt. Then need stdj (or variancej) in TBL. Best cutpoint? mean, vector_of_medians, outmost_non-outlier? r r r v v r mr r v v v r r v mv v r v v r v P (m b a For classes r and v +m (m r X> od w on rs that m akes m si r de of th a e mid pt r r r v v r mr r v v v r r v mv v r b v v r b b v b mb b b b b b b od hado |/2 ) v m )o r X>(m mask s vec r +mv )/2 to s )o mr P(m AND 2 p. Trees masks D = mr mv "outermost = "furthest from means (their projs of D-line); best rank. K points, best std points, etc. "medoid-to-mediod" close to optimal provided classes are convex. b In higher dims same (If "convex" clustered classes, FAUST{div, oblique_gap} finds them. grb grb bgr D bgr grb bgr g r

FAUST Oblique Classifier: formula: P(X dot D)>a X any set of vectors. D=oblique vector (Note: if D=ei, PX E. g. , ? Let D=vector connecting class means and d= D/|D| P(m i > a ). PX dot d>a = P d X >a i i r m )/ v |m r m |o v X< a To separate r from v: D = (mv mr), a = (mv+mr)/2 o d = midpoint of D projected onto d FAUST-Oblique: Create tbl, TBL(classi, classj, medoid_vectori, medoid_vectorj). Notes: If we just pick the one class which when paired with r, gives max gap, then we can use max gap or max_std_Int_pt instead of max_gap_midpt. Then need stdj (or variancej) in TBL. Best cutpoint? mean, vector_of_medians, outmost_non-outlier? r r r v v r mr r v v v r r v mv v r v v r v P (m b a For classes r and v +m (m r X> od w on rs that m akes m si r de of th a e mid pt r r r v v r mr r v v v r r v mv v r b v v r b b v b mb b b b b b b od hado |/2 ) v m )o r X>(m mask s vec r +mv )/2 to s )o mr P(m AND 2 p. Trees masks D = mr mv "outermost = "furthest from means (their projs of D-line); best rank. K points, best std points, etc. "medoid-to-mediod" close to optimal provided classes are convex. b In higher dims same (If "convex" clustered classes, FAUST{div, oblique_gap} finds them. grb grb bgr D bgr grb bgr g r

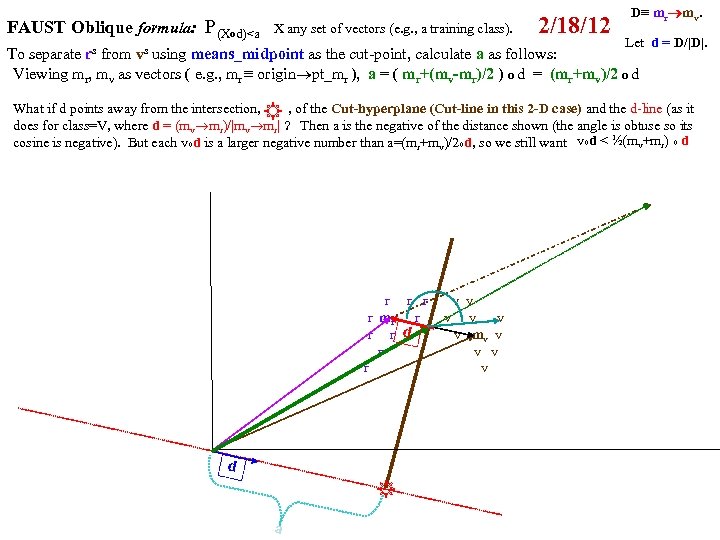

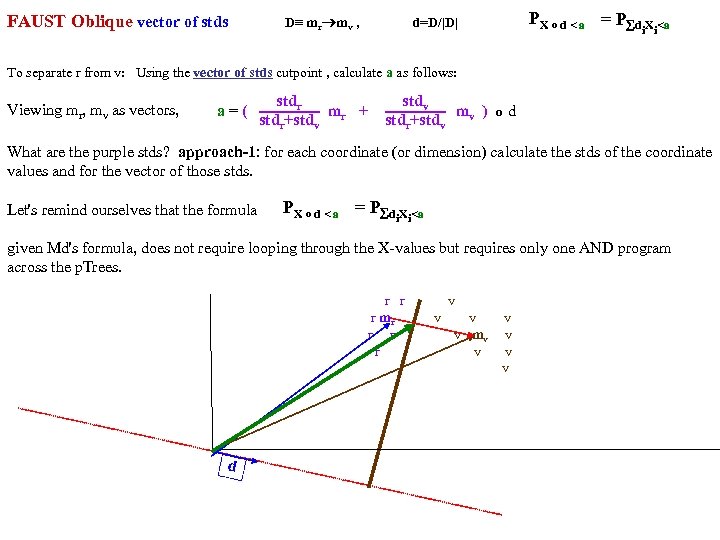

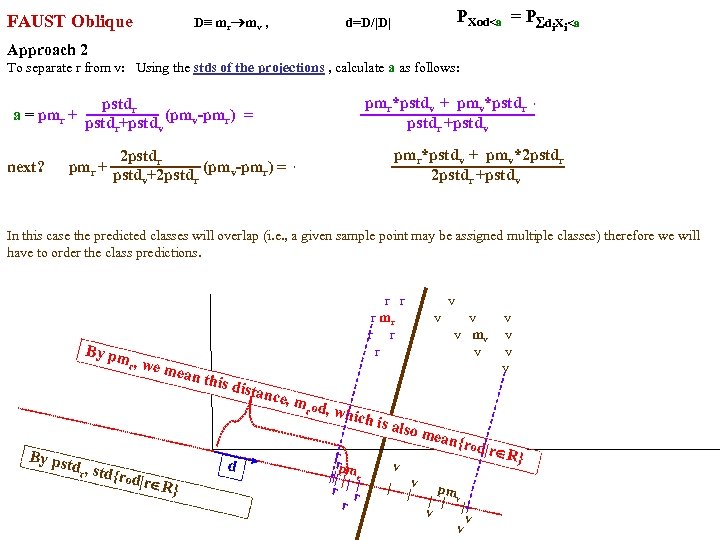

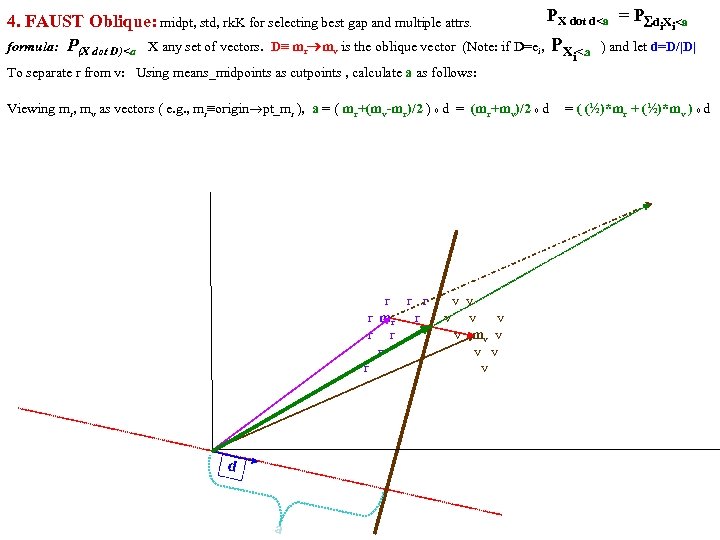

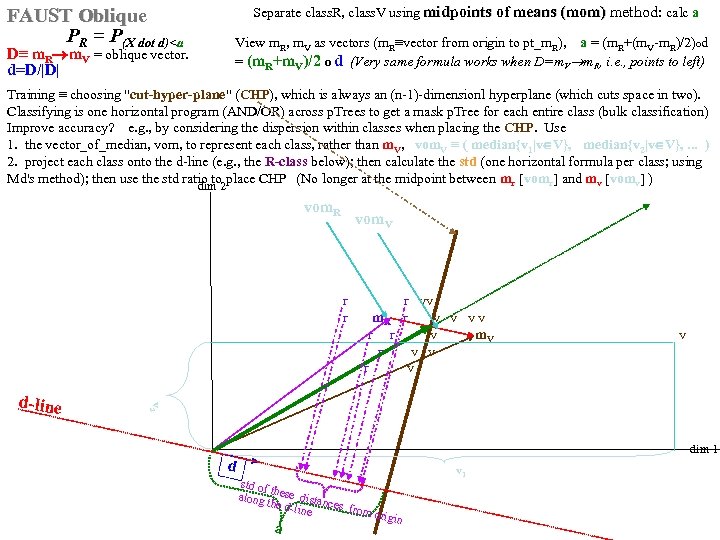

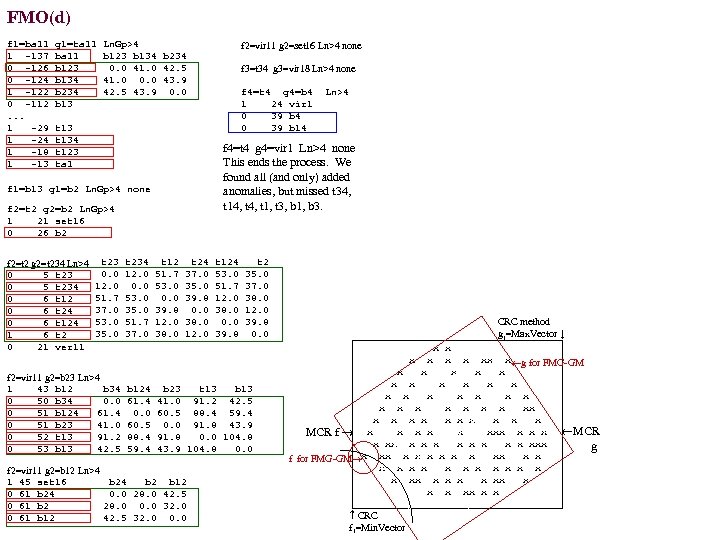

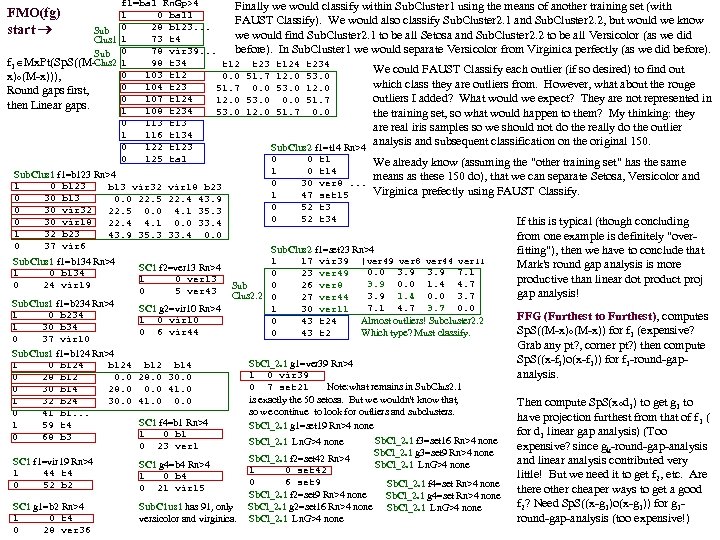

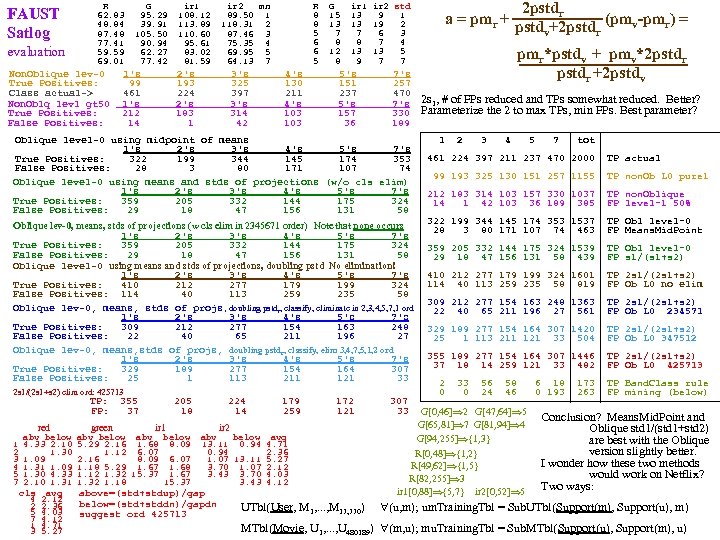

Separate class. R, class. V using midpoints of means (mom) method: calc a midpoints of means (mom) FAUST Oblique PR = P(X dot d)

Separate class. R, class. V using midpoints of means (mom) method: calc a midpoints of means (mom) FAUST Oblique PR = P(X dot d)

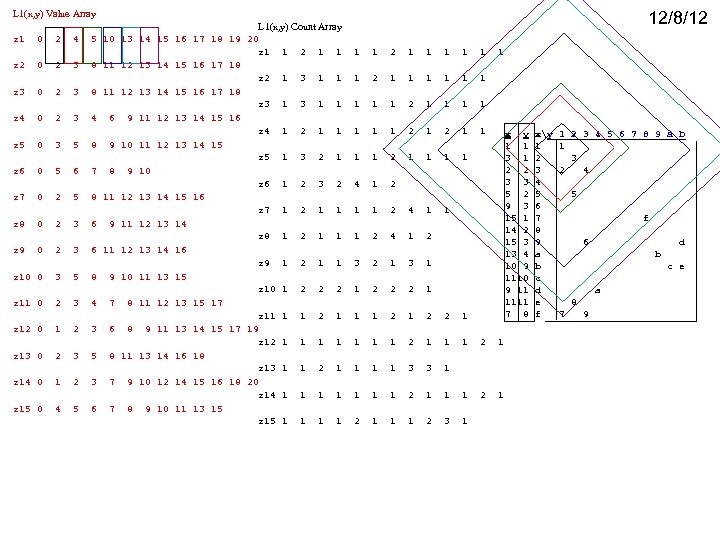

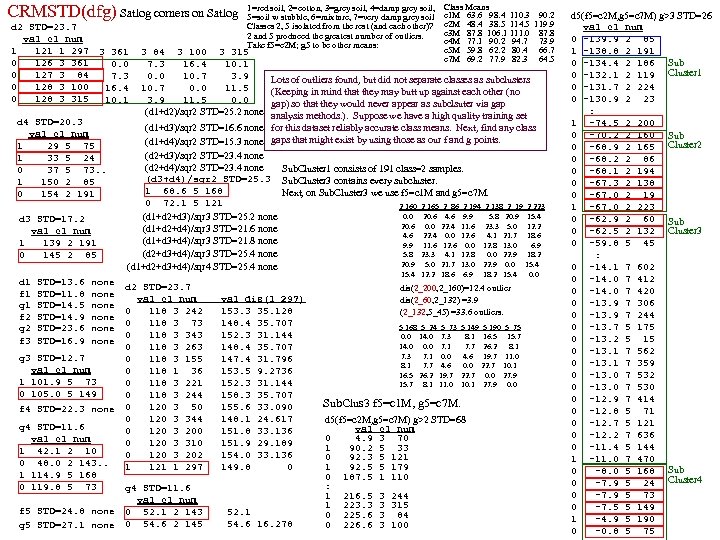

L 1(x, y) Value Array 12/8/12 L 1(x, y) Count Array z 1 0 2 4 5 10 13 14 15 16 17 18 19 20 z 1 1 2 1 1 1 1 z 2 0 2 3 8 11 12 13 14 15 16 17 18 z 2 1 3 1 1 1 2 1 1 1 z 3 0 2 3 8 11 12 13 14 15 16 17 18 z 3 1 1 1 2 1 1 z 4 0 2 3 4 6 9 11 12 13 14 15 16 z 4 1 2 1 1 x y xy 1 2 3 4 5 6 7 8 9 a b z 5 0 3 5 8 9 10 11 12 13 14 15 1 1 1 1 z 5 1 3 2 1 1 1 1 3 1 2 3 2 2 3 2 4 z 6 0 5 6 7 8 9 10 3 3 4 z 6 1 2 3 2 4 1 2 5 5 z 7 0 2 5 8 11 12 13 14 15 16 9 3 6 z 7 1 2 1 1 2 4 1 1 15 1 7 f z 8 0 2 3 6 9 11 12 13 14 14 2 8 z 8 1 2 1 1 1 2 4 1 2 15 3 9 6 d z 9 0 2 3 6 11 12 13 14 16 13 4 a b z 9 1 2 1 1 3 2 1 3 1 10 9 b c e z 10 0 3 5 8 9 10 11 13 15 1110 c z 10 1 2 2 2 1 9 11 d a 1111 e 8 z 11 0 2 3 4 7 8 11 12 13 15 17 7 8 f 7 9 z 11 1 1 2 1 2 2 1 z 12 0 1 2 3 6 8 9 11 13 14 15 17 19 z 12 1 1 1 1 2 1 z 13 0 2 3 5 8 11 13 14 16 18 z 13 1 1 2 1 1 3 3 1 z 14 0 1 2 3 7 9 10 12 14 15 16 18 20 z 14 1 1 1 1 2 1 z 15 0 4 5 6 7 8 9 10 11 13 15 z 15 1 1 2 1 1 1 2 3 1

L 1(x, y) Value Array 12/8/12 L 1(x, y) Count Array z 1 0 2 4 5 10 13 14 15 16 17 18 19 20 z 1 1 2 1 1 1 1 z 2 0 2 3 8 11 12 13 14 15 16 17 18 z 2 1 3 1 1 1 2 1 1 1 z 3 0 2 3 8 11 12 13 14 15 16 17 18 z 3 1 1 1 2 1 1 z 4 0 2 3 4 6 9 11 12 13 14 15 16 z 4 1 2 1 1 x y xy 1 2 3 4 5 6 7 8 9 a b z 5 0 3 5 8 9 10 11 12 13 14 15 1 1 1 1 z 5 1 3 2 1 1 1 1 3 1 2 3 2 2 3 2 4 z 6 0 5 6 7 8 9 10 3 3 4 z 6 1 2 3 2 4 1 2 5 5 z 7 0 2 5 8 11 12 13 14 15 16 9 3 6 z 7 1 2 1 1 2 4 1 1 15 1 7 f z 8 0 2 3 6 9 11 12 13 14 14 2 8 z 8 1 2 1 1 1 2 4 1 2 15 3 9 6 d z 9 0 2 3 6 11 12 13 14 16 13 4 a b z 9 1 2 1 1 3 2 1 3 1 10 9 b c e z 10 0 3 5 8 9 10 11 13 15 1110 c z 10 1 2 2 2 1 9 11 d a 1111 e 8 z 11 0 2 3 4 7 8 11 12 13 15 17 7 8 f 7 9 z 11 1 1 2 1 2 2 1 z 12 0 1 2 3 6 8 9 11 13 14 15 17 19 z 12 1 1 1 1 2 1 z 13 0 2 3 5 8 11 13 14 16 18 z 13 1 1 2 1 1 3 3 1 z 14 0 1 2 3 7 9 10 12 14 15 16 18 20 z 14 1 1 1 1 2 1 z 15 0 4 5 6 7 8 9 10 11 13 15 z 15 1 1 2 1 1 1 2 3 1

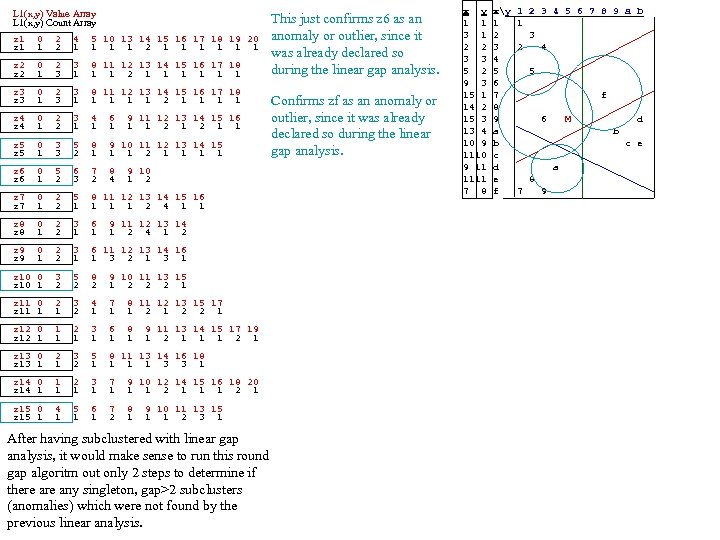

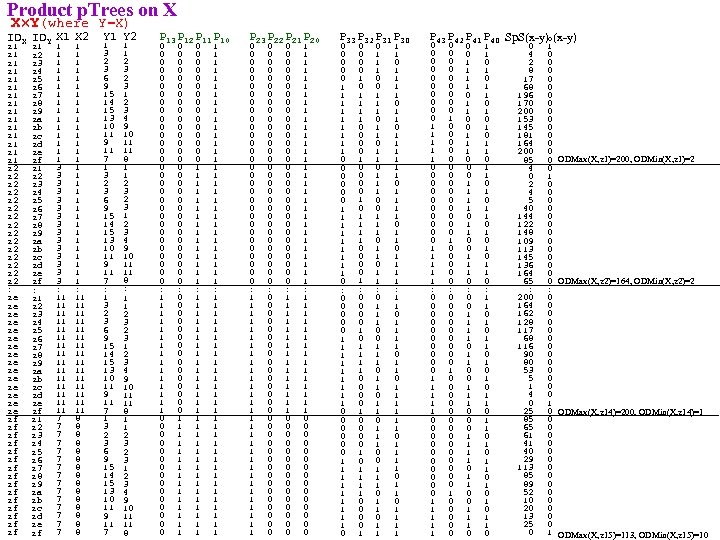

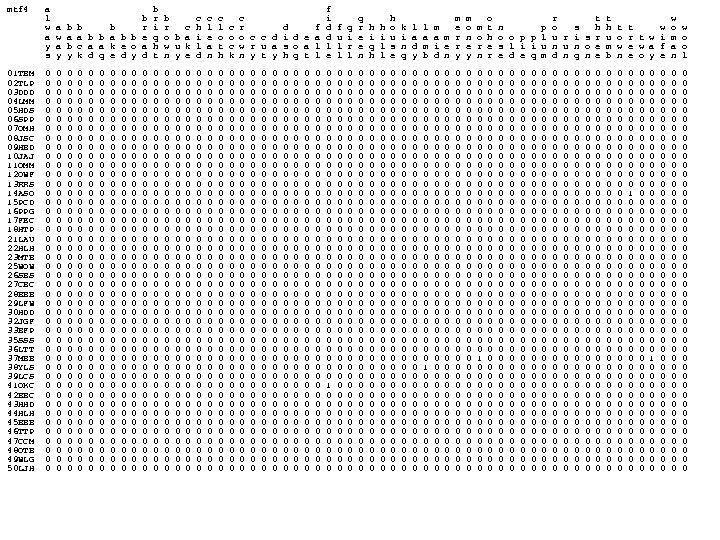

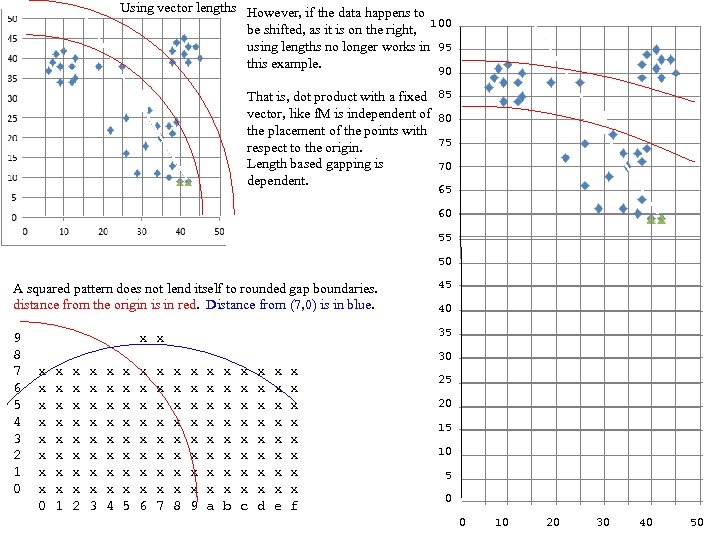

L 1(x, y) Value Array L 1(x, y) Count Array z 1 0 2 4 5 10 13 14 15 16 17 18 19 20 z 1 1 2 1 1 1 1 z 2 0 2 3 8 11 12 13 14 15 16 17 18 z 2 1 3 1 1 1 2 1 1 1 z 3 0 2 3 8 11 12 13 14 15 16 17 18 z 3 1 1 1 2 1 1 z 4 0 2 3 4 6 9 11 12 13 14 15 16 z 4 1 2 1 1 z 5 0 3 5 8 9 10 11 12 13 14 15 z 5 1 3 2 1 1 1 1 This just confirms z 6 as an anomaly or outlier, since it was already declared so during the linear gap analysis. Confirms zf as an anomaly or outlier, since it was already declared so during the linear gap analysis. z 6 0 5 6 7 8 9 10 z 6 1 2 3 2 4 1 2 z 7 0 2 5 8 11 12 13 14 15 16 z 7 1 2 1 1 2 4 1 1 z 8 0 2 3 6 9 11 12 13 14 z 8 1 2 1 1 1 2 4 1 2 z 9 0 2 3 6 11 12 13 14 16 z 9 1 2 1 1 3 2 1 3 1 z 10 0 3 5 8 9 10 11 13 15 z 10 1 2 2 2 1 z 11 0 2 3 4 7 8 11 12 13 15 17 z 11 1 1 2 1 2 2 1 z 12 0 1 2 3 6 8 9 11 13 14 15 17 19 z 12 1 1 1 1 2 1 z 13 0 2 3 5 8 11 13 14 16 18 z 13 1 1 2 1 1 3 3 1 z 14 0 1 2 3 7 9 10 12 14 15 16 18 20 z 14 1 1 1 1 2 1 z 15 0 4 5 6 7 8 9 10 11 13 15 z 15 1 1 2 1 1 1 2 3 1 After having subclustered with linear gap analysis, it would make sense to run this round gap algoritm out only 2 steps to determine if there any singleton, gap>2 subclusters (anomalies) which were not found by the previous linear analysis. x y xy 1 2 3 4 5 6 7 8 9 a b 1 1 1 1 3 1 2 3 2 2 3 2 4 3 3 4 5 2 5 5 9 3 6 15 1 7 f 14 2 8 15 3 9 6 M d 13 4 a b 10 9 b c e 1110 c 9 11 d a 1111 e 8 7 8 f 7 9

L 1(x, y) Value Array L 1(x, y) Count Array z 1 0 2 4 5 10 13 14 15 16 17 18 19 20 z 1 1 2 1 1 1 1 z 2 0 2 3 8 11 12 13 14 15 16 17 18 z 2 1 3 1 1 1 2 1 1 1 z 3 0 2 3 8 11 12 13 14 15 16 17 18 z 3 1 1 1 2 1 1 z 4 0 2 3 4 6 9 11 12 13 14 15 16 z 4 1 2 1 1 z 5 0 3 5 8 9 10 11 12 13 14 15 z 5 1 3 2 1 1 1 1 This just confirms z 6 as an anomaly or outlier, since it was already declared so during the linear gap analysis. Confirms zf as an anomaly or outlier, since it was already declared so during the linear gap analysis. z 6 0 5 6 7 8 9 10 z 6 1 2 3 2 4 1 2 z 7 0 2 5 8 11 12 13 14 15 16 z 7 1 2 1 1 2 4 1 1 z 8 0 2 3 6 9 11 12 13 14 z 8 1 2 1 1 1 2 4 1 2 z 9 0 2 3 6 11 12 13 14 16 z 9 1 2 1 1 3 2 1 3 1 z 10 0 3 5 8 9 10 11 13 15 z 10 1 2 2 2 1 z 11 0 2 3 4 7 8 11 12 13 15 17 z 11 1 1 2 1 2 2 1 z 12 0 1 2 3 6 8 9 11 13 14 15 17 19 z 12 1 1 1 1 2 1 z 13 0 2 3 5 8 11 13 14 16 18 z 13 1 1 2 1 1 3 3 1 z 14 0 1 2 3 7 9 10 12 14 15 16 18 20 z 14 1 1 1 1 2 1 z 15 0 4 5 6 7 8 9 10 11 13 15 z 15 1 1 2 1 1 1 2 3 1 After having subclustered with linear gap analysis, it would make sense to run this round gap algoritm out only 2 steps to determine if there any singleton, gap>2 subclusters (anomalies) which were not found by the previous linear analysis. x y xy 1 2 3 4 5 6 7 8 9 a b 1 1 1 1 3 1 2 3 2 2 3 2 4 3 3 4 5 2 5 5 9 3 6 15 1 7 f 14 2 8 15 3 9 6 M d 13 4 a b 10 9 b c e 1110 c 9 11 d a 1111 e 8 7 8 f 7 9

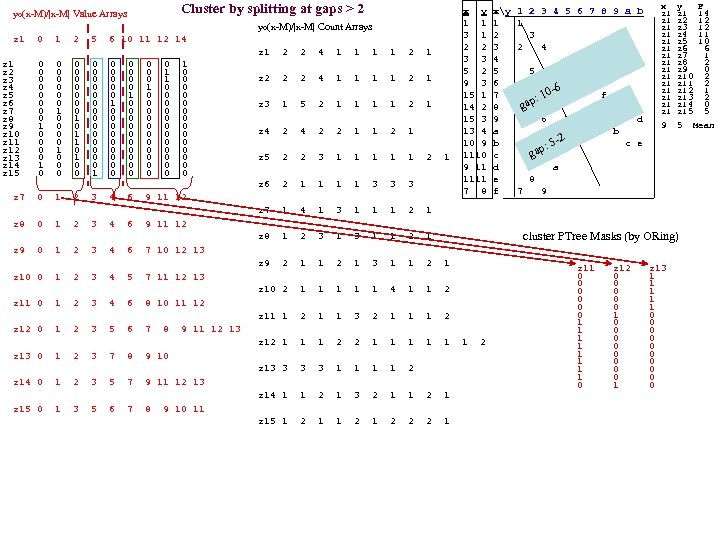

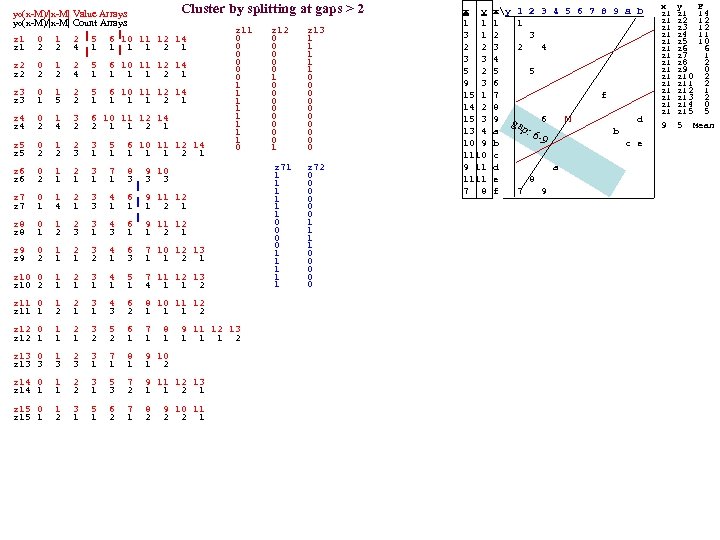

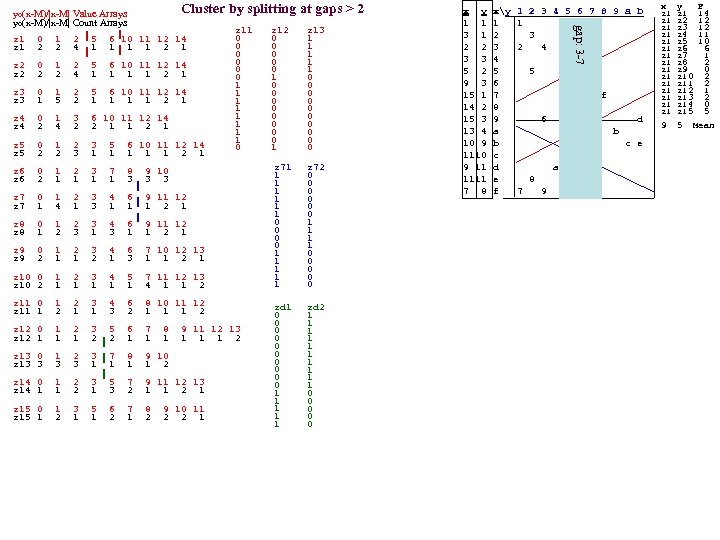

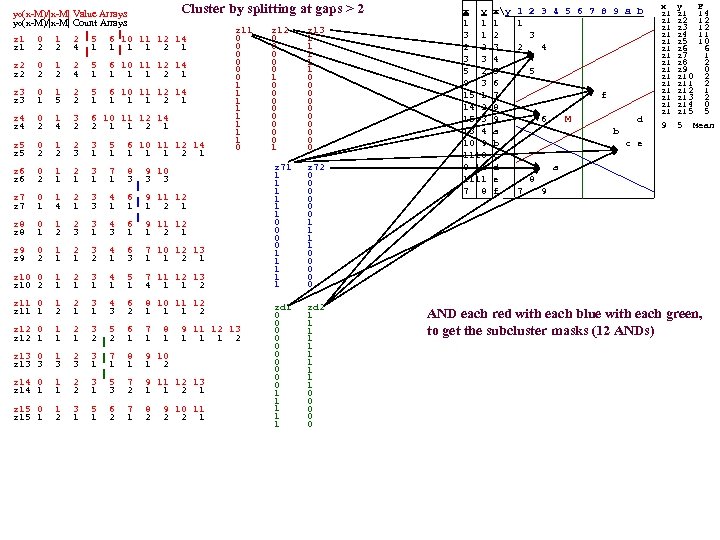

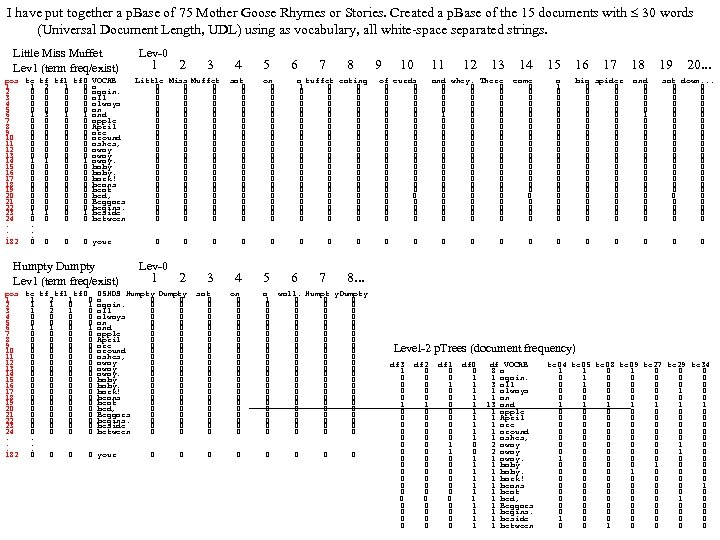

yo(x-M)/|x-M| Value Arrays Cluster by splitting at gaps > 2 yo(x-M)/|x-M| Count Arrays z 1 0 1 2 5 6 10 11 12 14 z 1 2 2 4 1 1 2 1 0 0 0 0 1 z 1 z 2 0 1 2 5 6 10 11 12 14 0 0 0 0 1 0 z 2 0 0 0 0 1 0 z 3 0 0 0 1 0 0 z 4 z 3 0 1 2 5 6 10 11 12 14 0 0 0 1 0 0 0 z 5 0 0 1 0 0 z 6 0 1 0 0 0 0 z 7 0 0 1 0 0 0 z 8 z 4 0 1 3 6 10 11 12 14 1 0 0 0 0 z 9 z 10 0 0 1 0 0 0 z 11 0 0 0 0 z 5 0 1 2 3 5 6 10 11 12 14 z 12 0 1 0 0 0 0 z 13 0 0 1 0 0 0 z 14 1 0 0 0 0 z 6 0 1 2 3 7 8 9 10 z 15 0 0 0 1 0 0 0 z 2 2 2 4 1 1 2 1 z 3 1 5 2 1 1 2 1 z 4 2 2 1 1 2 1 z 5 2 2 3 1 1 1 2 1 z 6 2 1 1 3 3 3 z 7 0 1 2 3 4 6 9 11 12 x y xy 1 2 3 4 5 6 7 8 9 a b 1 1 1 1 3 1 2 3 2 2 3 2 4 3 3 4 5 2 5 5 9 3 6 -6 15 1 7 f : 10 gap 14 2 8 15 3 9 6 M d 13 4 a b 10 9 b c e 5 -2 ap: g 1110 c 9 11 d a 1111 e 8 7 8 f 7 9 x y F z 1 14 z 1 z 2 12 z 1 z 3 12 z 1 z 4 11 z 5 10 z 1 z 6 6 z 1 z 7 1 z 8 2 z 1 z 9 0 z 10 2 z 11 2 z 12 1 z 13 2 z 14 0 z 15 5 9 5 Mean z 7 1 4 1 3 1 1 1 2 1 z 8 0 1 2 3 4 6 9 11 12 z 8 1 2 3 1 1 2 1 cluster PTree Masks (by ORing) z 9 0 1 2 3 4 6 7 10 12 13 z 9 2 1 1 2 1 3 1 1 2 1 z 10 0 1 2 3 4 5 7 11 12 13 z 10 2 1 1 1 4 1 1 2 z 11 0 1 2 3 4 6 8 10 11 12 z 11 1 2 1 1 3 2 1 1 1 2 z 12 0 1 2 3 5 6 7 8 9 11 12 13 z 12 1 1 1 2 z 13 0 1 2 3 7 8 9 10 z 13 3 1 1 2 z 14 0 1 2 3 5 7 9 11 12 13 z 14 1 1 2 1 3 2 1 1 2 1 z 15 0 1 3 5 6 7 8 9 10 11 z 15 1 2 1 2 2 2 1 z 11 0 0 0 1 1 1 1 0 z 12 0 0 0 1 0 0 0 0 1 z 13 1 1 1 0 0 0 0 0

yo(x-M)/|x-M| Value Arrays Cluster by splitting at gaps > 2 yo(x-M)/|x-M| Count Arrays z 1 0 1 2 5 6 10 11 12 14 z 1 2 2 4 1 1 2 1 0 0 0 0 1 z 1 z 2 0 1 2 5 6 10 11 12 14 0 0 0 0 1 0 z 2 0 0 0 0 1 0 z 3 0 0 0 1 0 0 z 4 z 3 0 1 2 5 6 10 11 12 14 0 0 0 1 0 0 0 z 5 0 0 1 0 0 z 6 0 1 0 0 0 0 z 7 0 0 1 0 0 0 z 8 z 4 0 1 3 6 10 11 12 14 1 0 0 0 0 z 9 z 10 0 0 1 0 0 0 z 11 0 0 0 0 z 5 0 1 2 3 5 6 10 11 12 14 z 12 0 1 0 0 0 0 z 13 0 0 1 0 0 0 z 14 1 0 0 0 0 z 6 0 1 2 3 7 8 9 10 z 15 0 0 0 1 0 0 0 z 2 2 2 4 1 1 2 1 z 3 1 5 2 1 1 2 1 z 4 2 2 1 1 2 1 z 5 2 2 3 1 1 1 2 1 z 6 2 1 1 3 3 3 z 7 0 1 2 3 4 6 9 11 12 x y xy 1 2 3 4 5 6 7 8 9 a b 1 1 1 1 3 1 2 3 2 2 3 2 4 3 3 4 5 2 5 5 9 3 6 -6 15 1 7 f : 10 gap 14 2 8 15 3 9 6 M d 13 4 a b 10 9 b c e 5 -2 ap: g 1110 c 9 11 d a 1111 e 8 7 8 f 7 9 x y F z 1 14 z 1 z 2 12 z 1 z 3 12 z 1 z 4 11 z 5 10 z 1 z 6 6 z 1 z 7 1 z 8 2 z 1 z 9 0 z 10 2 z 11 2 z 12 1 z 13 2 z 14 0 z 15 5 9 5 Mean z 7 1 4 1 3 1 1 1 2 1 z 8 0 1 2 3 4 6 9 11 12 z 8 1 2 3 1 1 2 1 cluster PTree Masks (by ORing) z 9 0 1 2 3 4 6 7 10 12 13 z 9 2 1 1 2 1 3 1 1 2 1 z 10 0 1 2 3 4 5 7 11 12 13 z 10 2 1 1 1 4 1 1 2 z 11 0 1 2 3 4 6 8 10 11 12 z 11 1 2 1 1 3 2 1 1 1 2 z 12 0 1 2 3 5 6 7 8 9 11 12 13 z 12 1 1 1 2 z 13 0 1 2 3 7 8 9 10 z 13 3 1 1 2 z 14 0 1 2 3 5 7 9 11 12 13 z 14 1 1 2 1 3 2 1 1 2 1 z 15 0 1 3 5 6 7 8 9 10 11 z 15 1 2 1 2 2 2 1 z 11 0 0 0 1 1 1 1 0 z 12 0 0 0 1 0 0 0 0 1 z 13 1 1 1 0 0 0 0 0

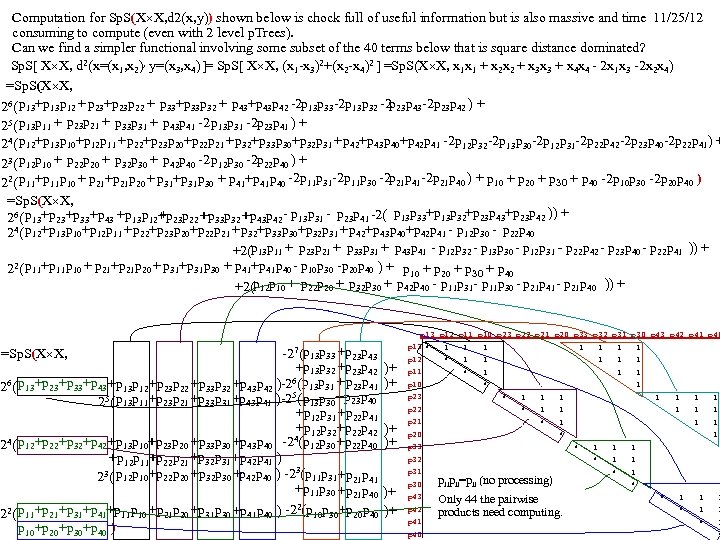

yo(x-M)/|x-M| Value Arrays yo(x-M)/|x-M| Count Arrays Cluster by splitting at gaps > 2 z 1 0 1 2 5 6 10 11 12 14 z 1 2 2 4 1 1 2 1 z 2 0 1 2 5 6 10 11 12 14 z 2 2 2 4 1 1 2 1 z 3 0 1 2 5 6 10 11 12 14 z 3 1 5 2 1 1 2 1 z 4 0 1 3 6 10 11 12 14 z 4 2 2 1 1 2 1 z 5 0 1 2 3 5 6 10 11 12 14 z 5 2 2 3 1 1 1 2 1 z 11 0 0 0 1 1 1 1 0 z 6 0 1 2 3 7 8 9 10 z 6 2 1 1 3 3 3 z 7 0 1 2 3 4 6 9 11 12 z 7 1 4 1 3 1 1 1 2 1 z 8 0 1 2 3 4 6 9 11 12 z 8 1 2 3 1 1 2 1 z 9 0 1 2 3 4 6 7 10 12 13 z 9 2 1 1 2 1 3 1 1 2 1 z 10 0 1 2 3 4 5 7 11 12 13 z 10 2 1 1 1 4 1 1 2 z 11 0 1 2 3 4 6 8 10 11 12 z 11 1 2 1 1 3 2 1 1 1 2 z 12 0 1 2 3 5 6 7 8 9 11 12 13 z 12 1 1 1 2 z 13 0 1 2 3 7 8 9 10 z 13 3 1 1 2 z 14 0 1 2 3 5 7 9 11 12 13 z 14 1 1 2 1 3 2 1 1 2 1 z 15 0 1 3 5 6 7 8 9 10 11 z 15 1 2 1 2 2 2 1 z 12 0 0 0 1 0 0 0 0 1 z 13 1 1 1 0 0 0 0 0 z 71 1 1 1 0 0 1 1 1 z 72 0 0 0 1 1 0 0 0 x y xy 1 2 3 4 5 6 7 8 9 a b 1 1 1 1 3 1 2 3 2 2 3 2 4 3 3 4 5 2 5 5 9 3 6 15 1 7 f 14 2 8 15 3 9 6 M d gap : 6 13 4 a b -9 10 9 b c e 1110 c 9 11 d a 1111 e 8 7 8 f 7 9 x y F z 1 14 z 1 z 2 12 z 1 z 3 12 z 1 z 4 11 z 5 10 z 1 z 6 6 z 1 z 7 1 z 8 2 z 1 z 9 0 z 10 2 z 11 2 z 12 1 z 13 2 z 14 0 z 15 5 9 5 Mean