96ad317bdc4ec52cc8247ddc53c0a41a.ppt

- Number of slides: 81

Regular Expressions and Automata
Lecture #2, August 30, 2012

Rule-based vs Statistical Approaches
- Rule-based = linguistic
- Statistical: “learn” from a large corpus that has been marked up with the phenomena you are studying
- For what problems is a rule-based approach better suited, and when is a statistical one better?
  - Identifying proper names
  - Distinguishing a biography from a dictionary entry
  - Answering questions
- The answer may depend on how much good training data is available

Rule-based vs Statistical Approaches
- Even when using statistical methods, many tasks are easily done with rules: tokenization, sentence breaking, morphology
- Some PARTS of a task may be done with rules: e.g., rules may be used to extract features, and then statistical learning methods might combine those features to perform a task.
- How much knowledge of language do our algorithms need to do useful NLP? The 80/20 rule:
  - Claim: 80% of NLP can be done with simple methods (low-hanging fruit)
  - The last 20% can be more difficult to attain. When should we worry about that?

Today
- Review some of the simple representations and ask ourselves how we might use them to do interesting and useful things
  - Regular Expressions
  - Finite State Automata
- How much can you get out of a simple tool?
- We should also think about the limits of these approaches:
  - How far can they take us?
  - When are they enough?
  - When do we need something more?

Regular Expressions
- Can be viewed as a way to specify:
  - Search patterns over text strings
  - The design of a particular kind of machine, called a Finite State Automaton (FSA)
- These two views are really equivalent

Uses of Regular Expressions in NLP
- Via grep and Perl: simple but powerful tools for large-corpus analysis and ‘shallow’ processing
  - What word is most likely to begin a sentence?
  - What word is most likely to begin a question?
  - In your own email, are you more or less polite than the people you correspond with?
- Combined with other Unix tools, they allow us to
  - Obtain word frequency and co-occurrence statistics
  - Build simple interactive applications (e.g., Eliza)
- Regular expressions define regular languages or sets
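The first question and the word-frequency item above can be approximated in a few lines. Below is a minimal Python sketch, not a tool from the lecture. It makes two simplifying assumptions. First, a sentence ends at ".", "!" or "?" followed by whitespace, so abbreviations such as "Mr." would break it. Second, a word is a run of letters or apostrophes. The function names are hypothetical.

```python
import re
from collections import Counter

# A "word" is a run of letters or apostrophes (a simplifying assumption).
WORD = re.compile(r"[A-Za-z']+")

def sentence_initial_words(text: str) -> Counter:
    """Count the first word of each sentence, case-folded."""
    # Crude rule: a sentence ends at ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    counts = Counter()
    for sentence in sentences:
        match = WORD.match(sentence)  # match() only looks at the start of the string
        if match:
            counts[match.group(0).lower()] += 1
    return counts

def word_frequencies(text: str) -> Counter:
    """Count every word in the text, case-folded."""
    return Counter(word.lower() for word in WORD.findall(text))

sample = "The cat sat. The dog ran! Why? the end."
print(sentence_initial_words(sample).most_common(1))  # [('the', 3)]
print(word_frequencies(sample)["the"])                # 3
```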

Regular Expressions
- Simple but powerful tools for “shallow” processing of a document or “corpus”
  - What word begins a sentence?
  - What words begin a question?
  - Identify all NPs
- These simple tools can enable us to
  - Build simple interactive applications (e.g., Eliza)
  - Recognize date, time, money… expressions
  - Recognize Named Entities (NE): people names, company names
  - Do morphological analysis
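For the "date, time, money" item, here is a sketch of two deliberately narrow recognizers in Python. The patterns are hypothetical illustrations. They cover only US-style dollar amounts and 24-hour HH:MM times; real expression recognizers need many more cases.

```python
import re

# Dollar amounts such as $35, $1,200 or $1,200.50 (hypothetical, US-style only).
MONEY = re.compile(r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?")
# 24-hour clock times such as 9:30 or 14:05; out-of-range values like 25:99 are rejected.
TIME = re.compile(r"\b(?:[01]?\d|2[0-3]):[0-5]\d\b")

text = "Meet at 9:30 or 14:05, not 25:99. It cost $1,200.50, not $35."
print(MONEY.findall(text))  # ['$1,200.50', '$35']
print(TIME.findall(text))   # ['9:30', '14:05']
```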

Regular Expressions
- Regular Expression: a formula in algebraic notation for specifying a set of strings
- String: any sequence of characters
  - Letters, numbers, spaces, tabs, punctuation marks
- Regular Expression Search
  - Pattern: specifies the set of strings we want to search for
  - Corpus: the texts we want to search through

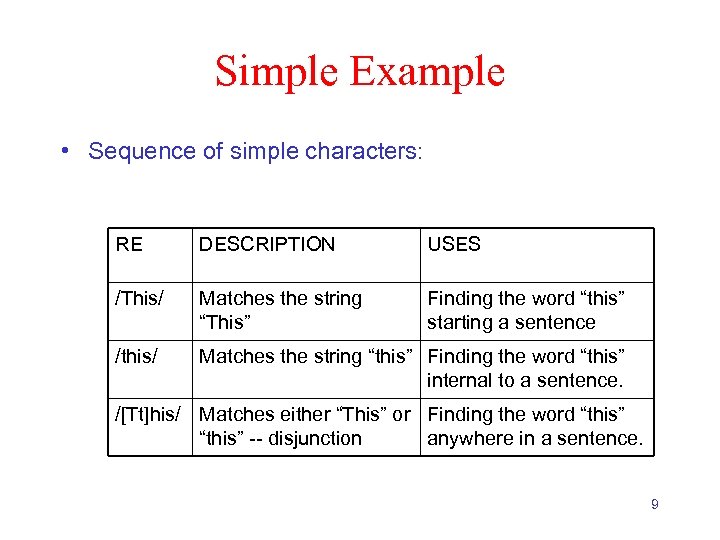

Simple Example
- Sequences of simple characters (RE: description; use):
  - /This/: matches the string “This”; finding the word “this” starting a sentence
  - /this/: matches the string “this”; finding the word “this” internal to a sentence
  - /[Tt]his/: matches either “This” or “this” (disjunction); finding the word “this” anywhere in a sentence
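The pattern/corpus split maps directly onto Python's `re` module. In this sketch the three sentences are made-up test data and `grep` is a hypothetical helper, not the Unix tool. It shows that /This/ and /this/ are case-sensitive, while the character class [Tt] accepts either case.

```python
import re

# A tiny made-up "corpus": one string per line.
corpus = [
    "This is the first sentence.",
    "I like this one.",
    "Nothing here.",
]

def grep(pattern: str, lines: list[str]) -> list[str]:
    """Return the lines in which the pattern occurs anywhere, as grep would."""
    return [line for line in lines if re.search(pattern, line)]

for pattern in [r"This", r"this", r"[Tt]his"]:
    print(f"/{pattern}/ ->", grep(pattern, corpus))
```

Because `re.search` looks for the pattern anywhere in a line, /[Tt]his/ also fires inside a word such as "thistle". A word-boundary anchor such as `\b` is one remedy.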

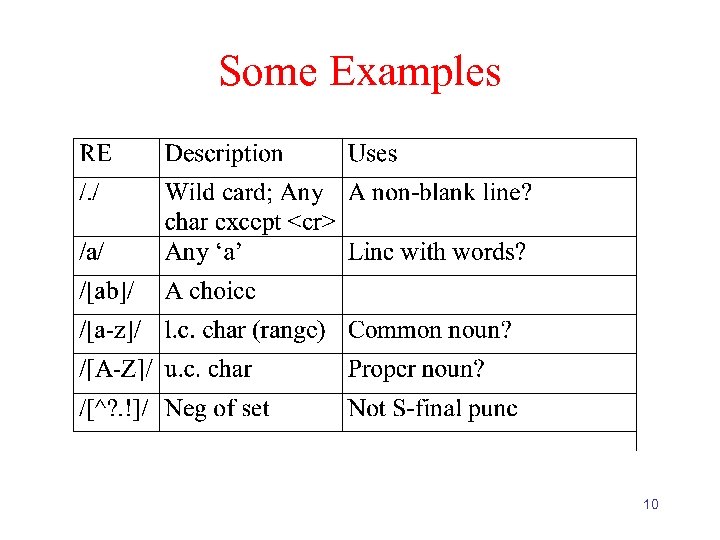

Some Examples 10

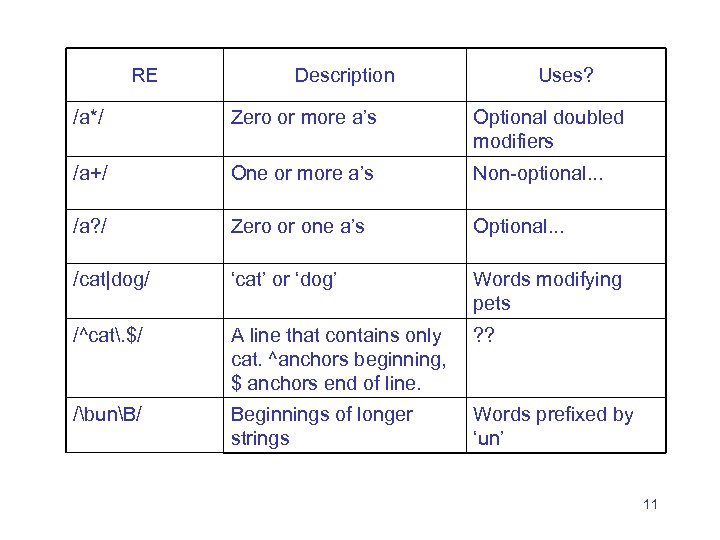

RE: description (uses?)
- /a*/: zero or more a’s (optional doubled modifiers)
- /a+/: one or more a’s (non-optional…)
- /a?/: zero or one a’s (optional…)
- /cat|dog/: ‘cat’ or ‘dog’ (words modifying pets)
- /^cat.$/: a line that contains only “cat” plus one more character, such as “cat.”; ^ anchors the beginning and $ the end of the line, and the unescaped . matches any character (??)
- /\bun\B/: beginnings of longer strings (words prefixed by ‘un’)
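Each row can be checked with Python's `re`, whose syntax agrees with Perl's for these operators. In this sketch, `whole` is a hypothetical helper, and the test strings are invented to show where each operator succeeds and fails.

```python
import re

def whole(pattern: str, s: str) -> bool:
    """True if the pattern matches the entire string s."""
    return re.fullmatch(pattern, s) is not None

# Counters *, + and ? applied to the single character 'a'.
print([whole(r"a*", s) for s in ["", "a", "aaa"]])  # [True, True, True]
print([whole(r"a+", s) for s in ["", "a", "aaa"]])  # [False, True, True]
print([whole(r"a?", s) for s in ["", "a", "aaa"]])  # [True, True, False]

# Disjunction.
print(whole(r"cat|dog", "dog"))  # True

# Anchors: with re.MULTILINE, ^ and $ match at every line boundary.
# The unescaped '.' matches any character, so "cats" matches as well as "cat.".
lines = "cat.\ncats\ncat\nthe cat."
print(re.findall(r"^cat.$", lines, re.MULTILINE))  # ['cat.', 'cats']

# \b is a word boundary and \B a non-boundary: "un" starting a longer word.
words = ["unhappy", "under", "fun", "un"]
print([bool(re.search(r"\bun\B", w)) for w in words])  # [True, True, False, False]
```

Note that "under" is accepted. The pattern finds "un" at the start of any longer word, not only at a true prefix, which is exactly the kind of false positive discussed later in the lecture.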

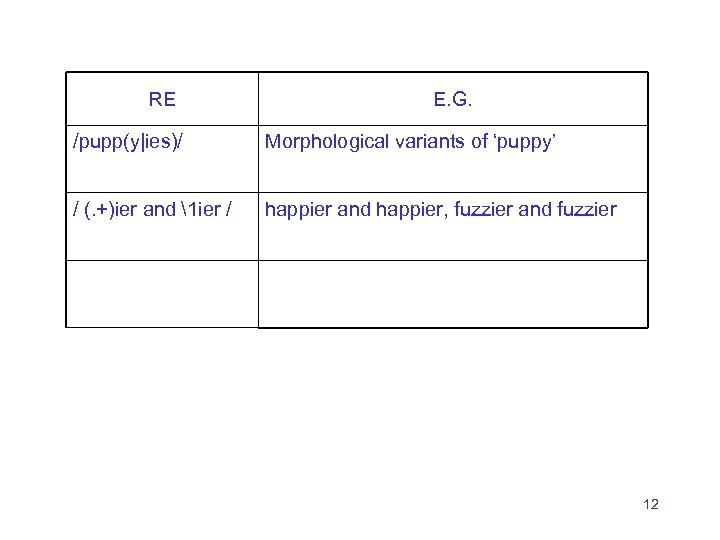

RE: examples
- /pupp(y|ies)/: morphological variants of ‘puppy’
- /(.+)ier and \1ier/: happier and happier, fuzzier and fuzzier
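A quick Python check of both rows. Parentheses limit the disjunction to the suffix, and `\1` must repeat exactly what the first group captured. The test phrases are invented for the illustration.

```python
import re

# The group (y|ies) makes the disjunction apply only to the suffix.
print([bool(re.fullmatch(r"pupp(y|ies)", w)) for w in ["puppy", "puppies", "puppys"]])
# [True, True, False]

# \1 refers back to whatever the first group captured, so both halves must agree.
repeat = re.compile(r"(.+)ier and \1ier")
print(bool(repeat.search("happier and happier")))            # True
print(bool(repeat.search("happier and fuzzier")))            # False
print(repeat.search("it got fuzzier and fuzzier").group(1))  # fuzz
```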

Optionality and Repetition
- /[Ww]oodchucks?/ matches woodchucks, Woodchucks, woodchuck, Woodchuck
- /colou?r/ matches color or colour
- /he{3}/ matches heee
- /(he){3}/ matches hehehe
- /(he){3,}/ matches a sequence of at least 3 he’s
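The slide's claims can be verified mechanically. This Python sketch transcribes each pattern with the strings the slide says it matches. It also shows that a counter without parentheses applies only to the single preceding character.

```python
import re

# (pattern, strings it should match in full), transcribed from the slide.
CASES = [
    (r"[Ww]oodchucks?", ["woodchucks", "Woodchucks", "woodchuck", "Woodchuck"]),
    (r"colou?r", ["color", "colour"]),
    (r"he{3}", ["heee"]),
    (r"(he){3}", ["hehehe"]),
    (r"(he){3,}", ["hehehe", "hehehehe"]),
]

for pattern, strings in CASES:
    ok = all(re.fullmatch(pattern, s) for s in strings)
    print(f"/{pattern}/ matches all of {strings}: {ok}")

# Without parentheses the counter applies only to the preceding character.
print(re.fullmatch(r"he{3}", "hehehe") is None)  # True
```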

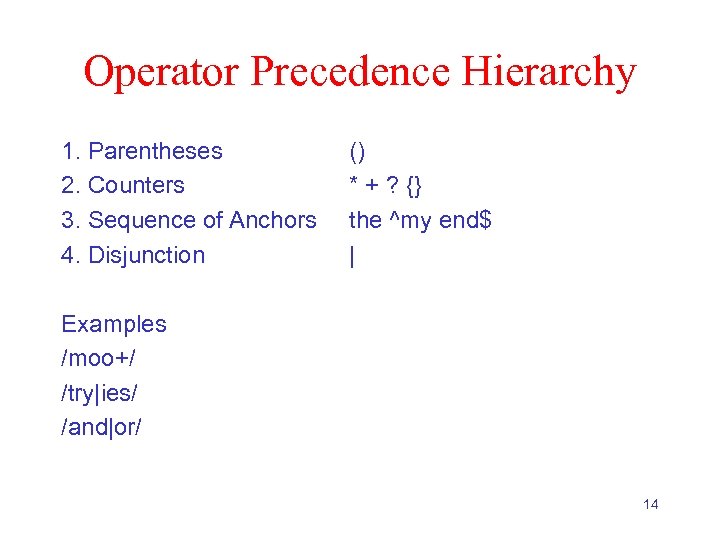

Operator Precedence Hierarchy
1. Parentheses: ()
2. Counters: * + ? {}
3. Sequences and anchors: the ^my end$
4. Disjunction: |

Examples: /moo+/, /try|ies/, /and|or/
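The two practical consequences of this ordering can be shown with Python's `re`, which uses the same precedence. Counters bind tighter than sequences, and sequences bind tighter than disjunction. The test strings are invented.

```python
import re

def whole(pattern: str, s: str) -> bool:
    """True if the pattern matches the entire string s."""
    return re.fullmatch(pattern, s) is not None

# Counters bind tighter than sequence: /moo+/ repeats only the last 'o'.
print(whole(r"moo+", "moooo"), whole(r"moo+", "moomoo"))     # True False
print(whole(r"(moo)+", "moomoo"))                             # True

# Sequence binds tighter than disjunction: /try|ies/ means "try" or "ies".
print(whole(r"try|ies", "tries"), whole(r"try|ies", "ies"))  # False True
print(whole(r"tr(y|ies)", "tries"))                           # True
```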

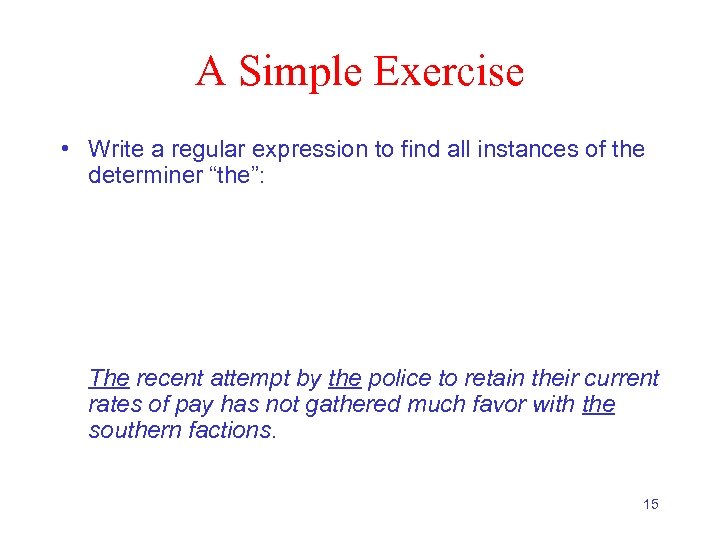

A Simple Exercise
- Write a regular expression to find all instances of the determiner “the”:
  - /the/
  - /[tT]he/
  - /\b[tT]he\b/
  - /(^|[^a-zA-Z])[tT]he[^a-zA-Z]/
- Test sentence: The recent attempt by the police to retain their current rates of pay has not gathered much favor with the southern factions.
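Running each attempt over the test sentence with Python's `re` makes both failure modes visible:

- /the/ (5 matches) misses "The" and fires inside "their", "gathered" and "southern".
- /[tT]he/ (6) adds "The" but keeps those false hits.
- The last two attempts find only the three determiners in this sentence.

The last two still differ on strings like "the_2". There `\b` sees no boundary, because digits and underscores count as word characters, while `[^a-zA-Z]` accepts them.

```python
import re

SENTENCE = ("The recent attempt by the police to retain their current rates "
            "of pay has not gathered much favor with the southern factions.")

ATTEMPTS = [r"the", r"[tT]he", r"\b[tT]he\b", r"(^|[^a-zA-Z])[tT]he[^a-zA-Z]"]

for pattern in ATTEMPTS:
    # group(0) is the whole matched text, including any surrounding characters.
    hits = [m.group(0) for m in re.finditer(pattern, SENTENCE)]
    print(f"{pattern!r}: {len(hits)} match(es): {hits}")
```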

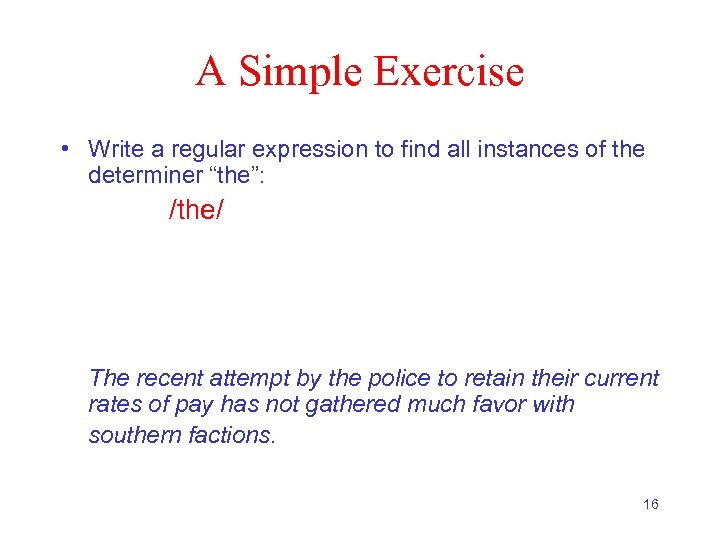

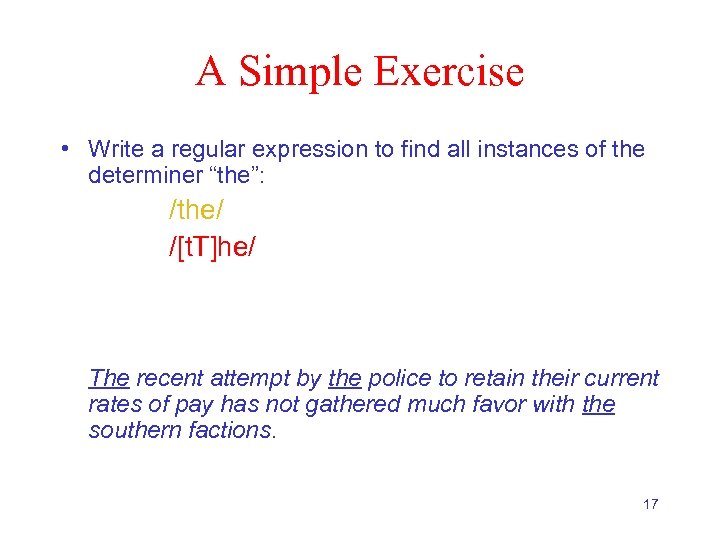

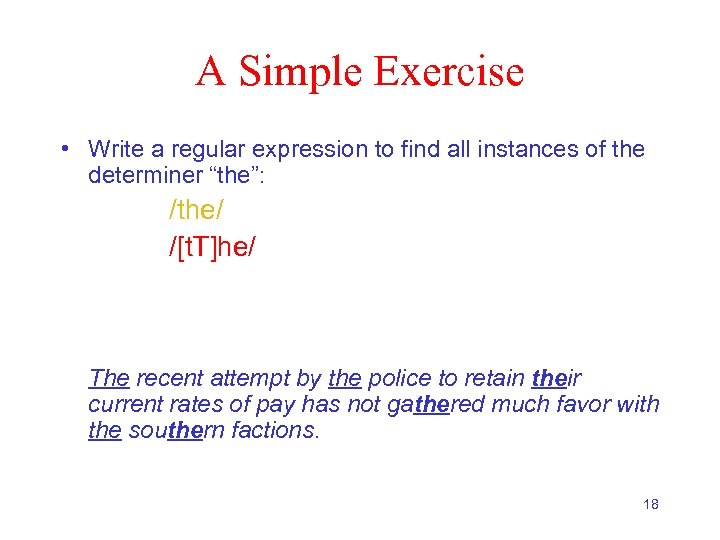

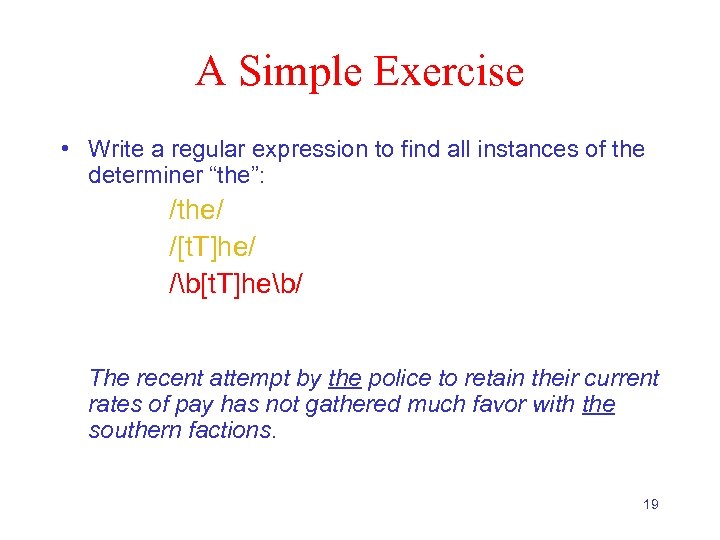

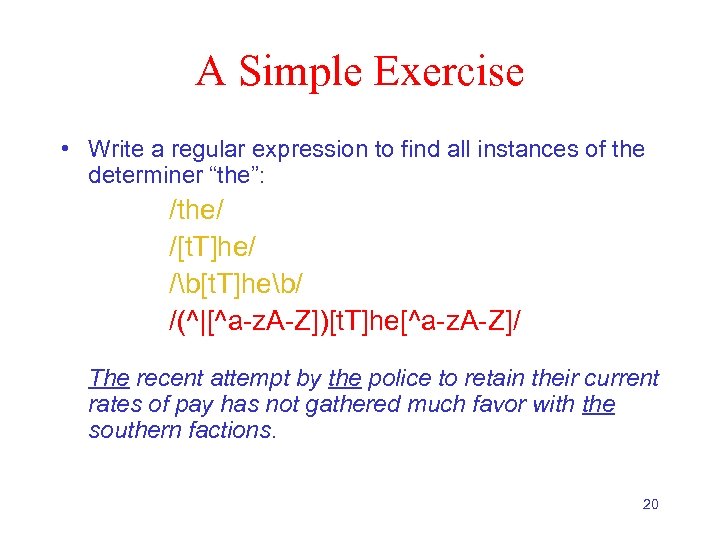

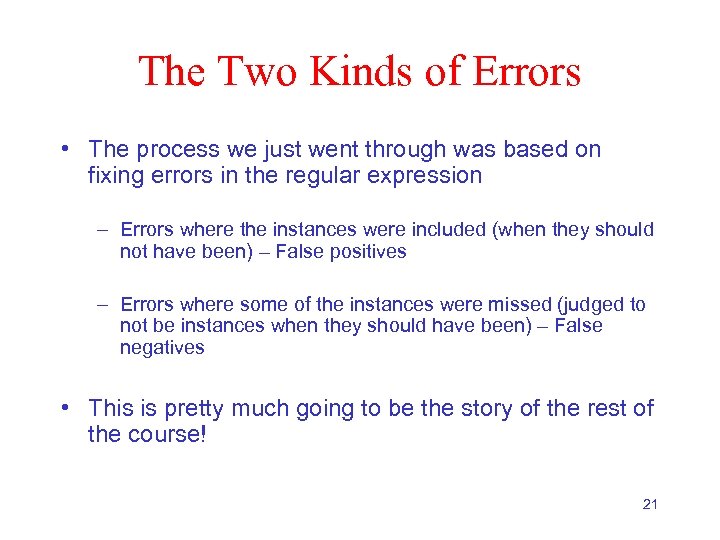

The Two Kinds of Errors
- The process we just went through was based on fixing errors in the regular expression:
  - Errors where instances were included when they should not have been: false positives
  - Errors where some instances were missed (judged not to be instances when they should have been): false negatives
- This is pretty much going to be the story of the rest of the course!

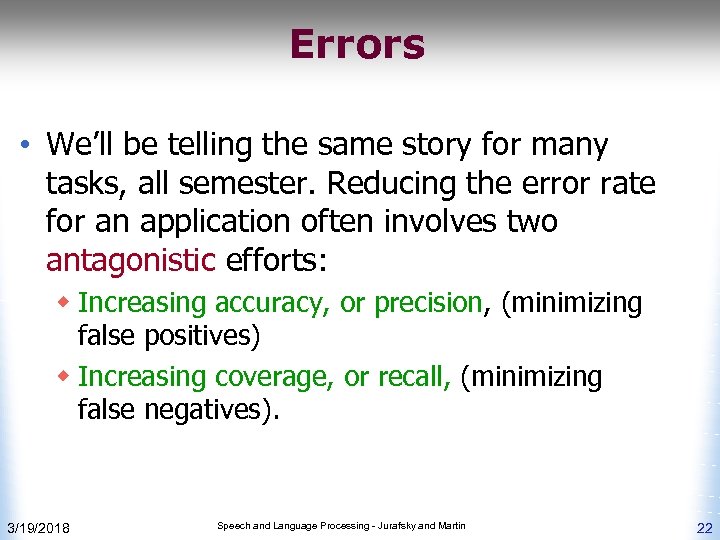

Errors
- We’ll be telling the same story for many tasks, all semester. Reducing the error rate for an application often involves two antagonistic efforts:
  - Increasing accuracy, or precision (minimizing false positives)
  - Increasing coverage, or recall (minimizing false negatives)

3/19/2018 Speech and Language Processing - Jurafsky and Martin
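Precision and recall can be computed for the "the" exercise by comparing the start offsets a pattern predicts with a gold standard. This Python sketch assumes the gold standard is every whitespace-separated token equal to "the" in any case, ignoring trailing punctuation. The helper names are hypothetical. The fourth attempt is left out because its match includes the preceding character, so its start offsets would not line up with the gold offsets without extra bookkeeping.

```python
import re

SENTENCE = ("The recent attempt by the police to retain their current rates "
            "of pay has not gathered much favor with the southern factions.")

def gold_starts(text: str) -> set[int]:
    """Start offsets of tokens that are the word "the" (assumed gold standard)."""
    starts, pos = set(), 0
    for token in text.split():
        start = text.index(token, pos)
        if token.strip(".,;:!?").lower() == "the":
            starts.add(start)
        pos = start + len(token)
    return starts

def precision_recall(pattern: str, text: str) -> tuple[float, float]:
    predicted = {m.start() for m in re.finditer(pattern, text)}
    gold = gold_starts(text)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0  # penalizes false positives
    recall = true_positives / len(gold) if gold else 0.0               # penalizes false negatives
    return precision, recall

for pattern in [r"the", r"[tT]he", r"\b[tT]he\b"]:
    p, r = precision_recall(pattern, SENTENCE)
    print(f"{pattern!r}: precision={p:.2f} recall={r:.2f}")
```

On this sentence, /the/ scores precision 0.40 and recall 0.67, /[tT]he/ scores 0.50 and 1.00, and /\b[tT]he\b/ scores 1.00 and 1.00. Widening the pattern raised recall at the cost of precision until the word boundaries removed the false positives.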

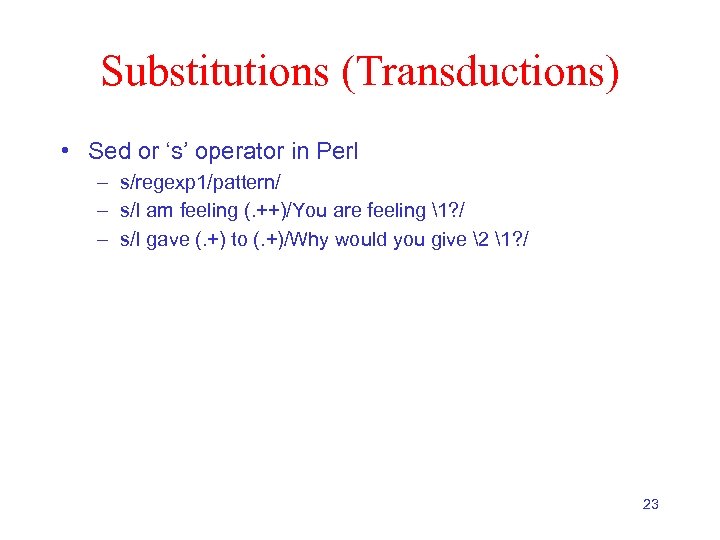

Substitutions (Transductions)
- sed, or the ‘s’ operator in Perl:
  - s/regexp1/pattern/
  - s/I am feeling (.+)/You are feeling \1?/
  - s/I gave (.+) to (.+)/Why would you give \2 \1?/
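Python's `re.sub` plays the role of Perl's `s///`. In the replacement string, `\1` and `\2` refer to the first and second parenthesized groups. This sketch replays the two substitutions on invented inputs.

```python
import re

print(re.sub(r"I am feeling (.+)", r"You are feeling \1?", "I am feeling sad"))
# You are feeling sad?
print(re.sub(r"I gave (.+) to (.+)", r"Why would you give \2 \1?",
             "I gave the book to Mary"))
# Why would you give Mary the book?
```

Because `.+` is greedy, in "I gave a gift to Sam to keep" the first group captures "a gift to Sam". The engine backtracks only as far as the last " to ".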

Next several slides from McKeown 2009

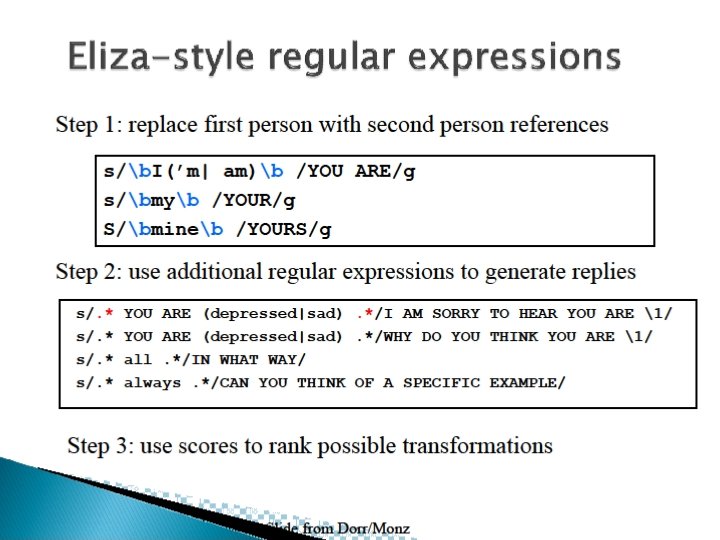

25

26

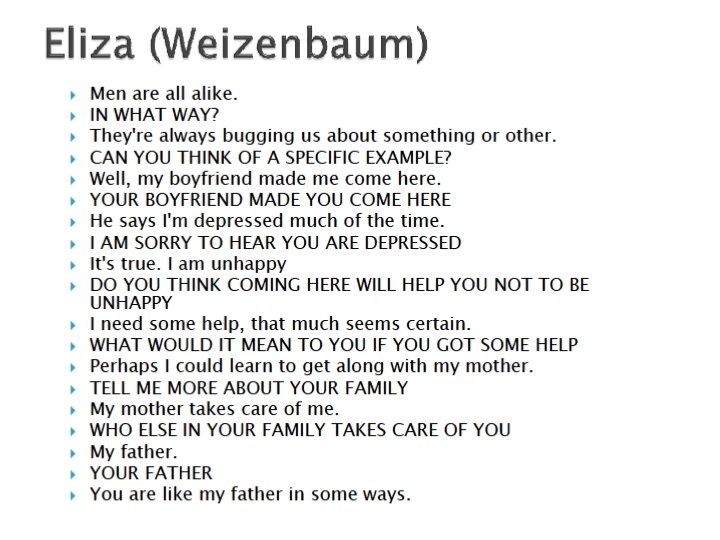

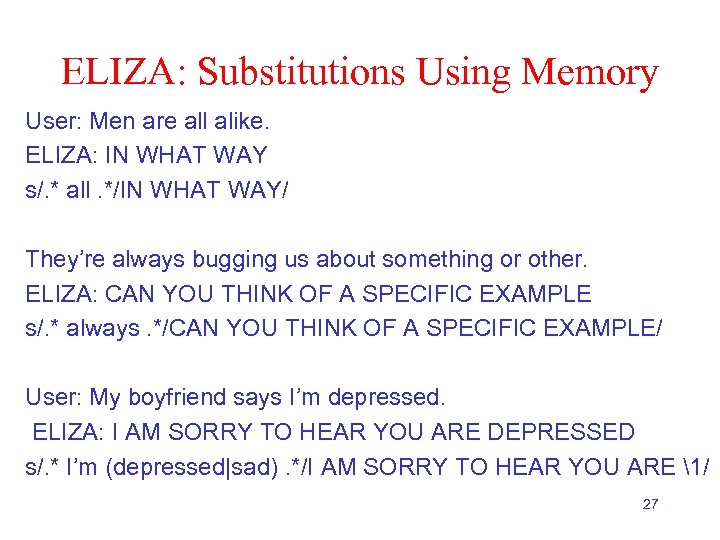

ELIZA: Substitutions Using Memory
- User: Men are all alike.
  - ELIZA: IN WHAT WAY
  - s/.* all.*/IN WHAT WAY/
- User: They’re always bugging us about something or other.
  - ELIZA: CAN YOU THINK OF A SPECIFIC EXAMPLE
  - s/.* always.*/CAN YOU THINK OF A SPECIFIC EXAMPLE/
- User: My boyfriend says I’m depressed.
  - ELIZA: I AM SORRY TO HEAR YOU ARE DEPRESSED
  - s/.* I’m (depressed|sad).*/I AM SORRY TO HEAR YOU ARE \1/
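The three rules above can drive a toy ELIZA. This Python sketch makes several assumptions that are not from the slide:

- The rules are tried in the order listed in `RULES`, and the first match wins.
- Apostrophes are straight ASCII.
- The fallback reply is a hypothetical addition.

`match.expand` fills in `\1` much as the `s///` replacement does.

```python
import re

# Rules transcribed from the slide; this ordering (first match wins) is an assumption.
RULES = [
    (re.compile(r".* I'm (depressed|sad).*"), r"I AM SORRY TO HEAR YOU ARE \1"),
    (re.compile(r".* all.*"), r"IN WHAT WAY"),
    (re.compile(r".* always.*"), r"CAN YOU THINK OF A SPECIFIC EXAMPLE"),
]
FALLBACK = "PLEASE GO ON"  # hypothetical default reply

def respond(utterance: str) -> str:
    for pattern, template in RULES:
        match = pattern.fullmatch(utterance)
        if match:
            # expand() substitutes the remembered group for \1, like s/.../\1/.
            return match.expand(template).upper()
    return FALLBACK

print(respond("My boyfriend says I'm depressed."))  # I AM SORRY TO HEAR YOU ARE DEPRESSED
```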

28

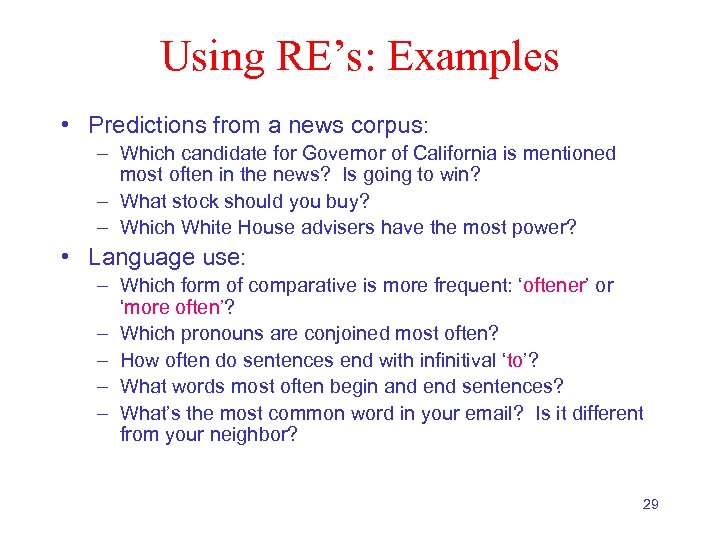

Using RE’s: Examples
- Predictions from a news corpus:
  - Which candidate for Governor of California is mentioned most often in the news? Is that candidate going to win?
  - What stock should you buy?
  - Which White House advisers have the most power?
- Language use:
  - Which form of comparative is more frequent: ‘oftener’ or ‘more often’?
  - Which pronouns are conjoined most often?
  - How often do sentences end with infinitival ‘to’?
  - What words most often begin and end sentences?
  - What’s the most common word in your email? Is it different from your neighbor’s?
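As one concrete case, "Which pronouns are conjoined most often?" reduces to counting matches of a single pattern. This Python sketch uses a small hand-picked pronoun list, which is an assumption; extend it as needed. The sample text is invented.

```python
import re
from collections import Counter

# A small, hand-picked pronoun list (an assumption of this sketch).
PRONOUN = r"(?:i|you|he|she|it|we|they|me|him|her|us|them)"
CONJOINED = re.compile(rf"\b({PRONOUN})\s+and\s+({PRONOUN})\b", re.IGNORECASE)

def conjoined_pronouns(text: str) -> Counter:
    """Count pronoun pairs of the form 'X and Y', case-folded."""
    return Counter((a.lower(), b.lower()) for a, b in CONJOINED.findall(text))

sample = "You and I went home. Then he and she stayed, but you and I left."
print(conjoined_pronouns(sample).most_common())
```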

- Personality profiling:
  - Are you more or less polite than the people you correspond with?
  - With labeled data, which words signal friendly messages vs. unfriendly ones?

Finite State Automata
- Regular expressions (REs) can be viewed as a way to describe machines called Finite State Automata (FSAs; also known as automata or finite automata).
- FSAs and their close variants are a theoretical foundation of much of the field of NLP.

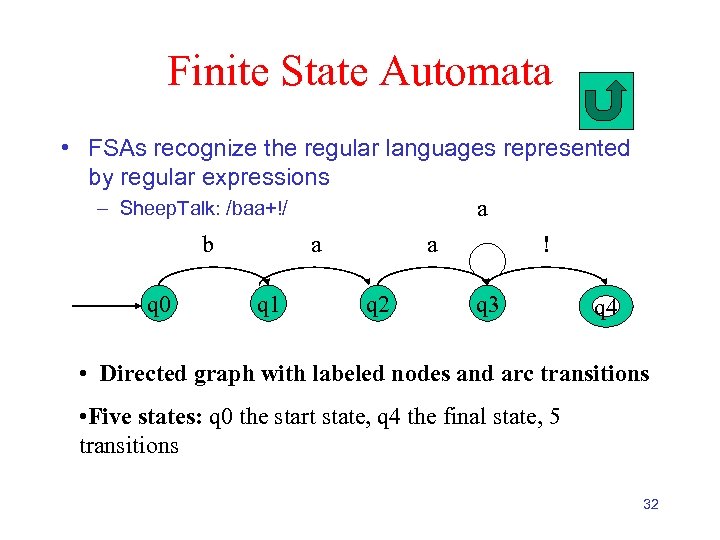

Finite State Automata
- FSAs recognize the regular languages represented by regular expressions
  - SheepTalk: /baa+!/
- Directed graph with labeled nodes and arc transitions
- Five states: q0 the start state, q4 the final state; 5 transitions

(Figure: q0 -b-> q1 -a-> q2 -a-> q3 -!-> q4, with an a-loop on q3)

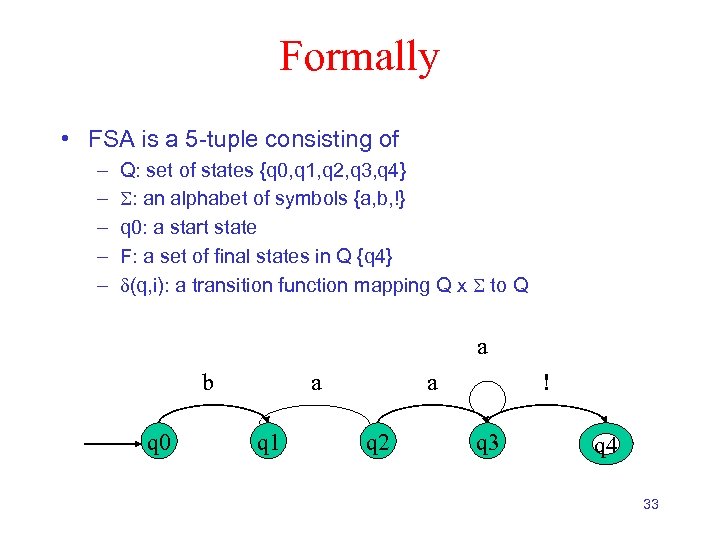

Formally
- An FSA is a 5-tuple consisting of
  - Q: a finite set of states, here {q0, q1, q2, q3, q4}
  - Σ: an alphabet of symbols, here {a, b, !}
  - q0: a start state
  - F: a set of final states in Q, here {q4}
  - δ(q, i): a transition function mapping Q × Σ to Q

(Figure: the SheepTalk FSA over states q0 to q4)
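The 5-tuple translates directly into a small Python data structure. This sketch stores δ as a partial dictionary, an assumption of the sketch. A missing entry plays the role of the empty cell Ø in the transition table, which is equivalent to an implicit dead state. The class and method names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class FSA:
    """A deterministic FSA as the 5-tuple (Q, Sigma, q0, F, delta)."""
    states: frozenset    # Q
    alphabet: frozenset  # Sigma
    start: int           # q0
    finals: frozenset    # F, a subset of Q
    delta: dict          # (state, symbol) -> state; a missing key plays the role of Ø

    def is_well_formed(self) -> bool:
        """q0 and F lie in Q, and every entry of delta maps Q x Sigma into Q."""
        return (
            self.start in self.states
            and self.finals <= self.states
            and all(
                q in self.states and a in self.alphabet and r in self.states
                for (q, a), r in self.delta.items()
            )
        )

SHEEPTALK = FSA(
    states=frozenset({0, 1, 2, 3, 4}),
    alphabet=frozenset({"a", "b", "!"}),
    start=0,
    finals=frozenset({4}),
    delta={(0, "b"): 1, (1, "a"): 2, (2, "a"): 3, (3, "a"): 3, (3, "!"): 4},
)
print(SHEEPTALK.is_well_formed())  # True
```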

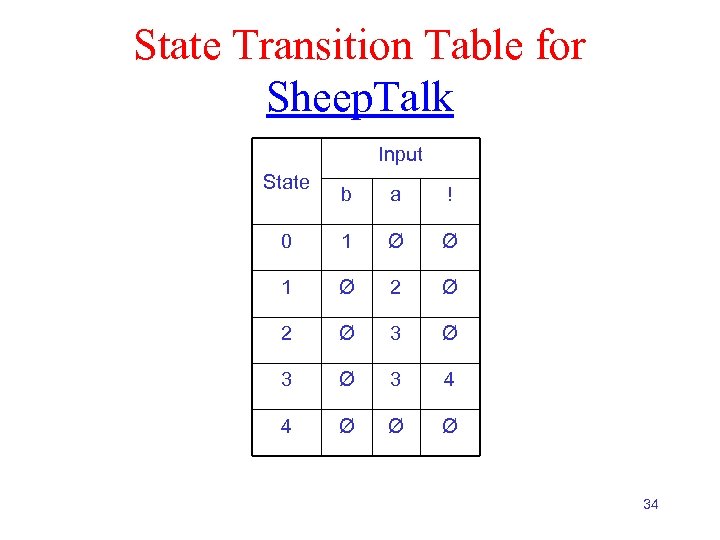

State Transition Table for SheepTalk

| State / Input | b | a | ! |
|---|---|---|---|
| 0 | 1 | Ø | Ø |
| 1 | Ø | 2 | Ø |
| 2 | Ø | 3 | Ø |
| 3 | Ø | 3 | 4 |
| 4 (final) | Ø | Ø | Ø |

Recognition
- Recognition (or acceptance) is the process of determining whether or not a given input should be accepted by a given machine.
  - Whether the string is in the language defined by the machine
- In terms of REs, it’s the process of determining whether or not a given input matches a particular regular expression.
  - Whether the string is in the language defined by the expression
- Traditionally, recognition is viewed as processing an input written on a tape consisting of cells containing elements from the alphabet.

Recognition
- Recognition (or acceptance) is the process of determining whether or not a given input should be accepted by a given machine.
- Or… it’s the process of determining whether a string is in the language we’re defining with the machine.
- In terms of REs, it’s the process of determining whether or not a given input matches a particular regular expression.
- Traditionally, recognition is viewed as processing an input written on a tape consisting of cells containing elements from the alphabet.

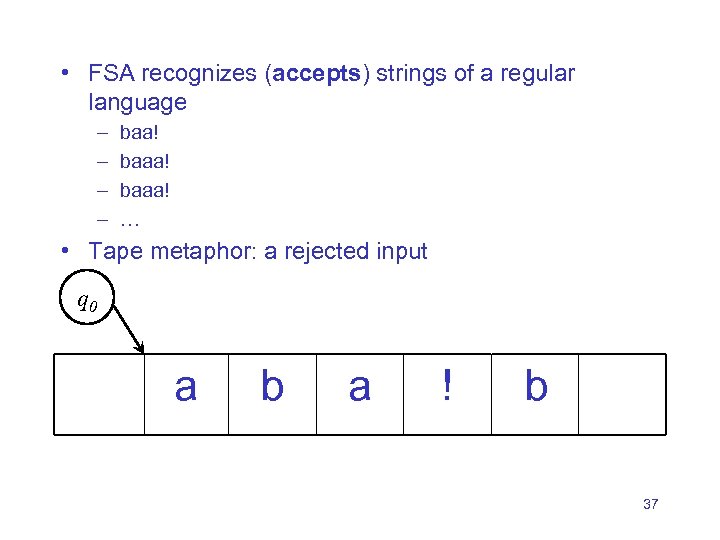

- An FSA recognizes (accepts) strings of a regular language:
  - baa!
  - baaa!
  - …
- Tape metaphor: a rejected input

(Figure: the machine in state q0 at the start of a tape reading a b a ! b)

Recognition
- Simply a process of
  - starting in the start state,
  - examining the current input,
  - consulting the table,
  - going to a new state and updating the tape pointer,
- until you run out of tape.

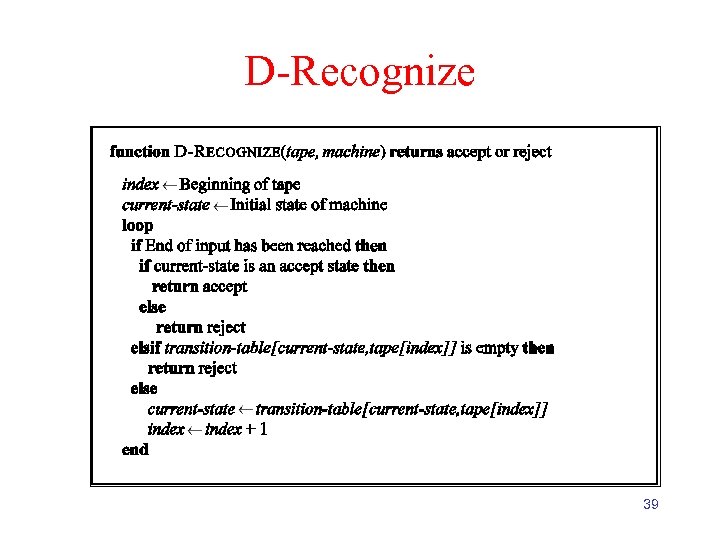

D-Recognize 39
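The D-Recognize pseudocode is only an image in this copy, so the following is a Python reconstruction of the loop the Recognition slide describes, not a transcription:

1. Start in the start state.
2. Examine the current input.
3. Consult the table.
4. Move to the new state and advance the tape pointer.
5. Repeat until the tape runs out.

The table is the SheepTalk state transition table. A missing entry stands for an empty cell (Ø).

```python
# Transition table for SheepTalk (/baa+!/); a missing entry is an empty cell (Ø).
TABLE = {
    0: {"b": 1},
    1: {"a": 2},
    2: {"a": 3},
    3: {"a": 3, "!": 4},
    4: {},
}
ACCEPT_STATES = {4}

def d_recognize(tape: str) -> bool:
    """Deterministic, table-driven recognition of the SheepTalk language."""
    state, index = 0, 0                       # start state, beginning of tape
    while True:
        if index == len(tape):                # out of tape: accept iff in a final state
            return state in ACCEPT_STATES
        next_state = TABLE[state].get(tape[index])
        if next_state is None:                # empty cell: no move possible, reject
            return False
        state, index = next_state, index + 1  # go to the new state, advance the tape pointer

for s in ["baa!", "baaaa!", "ba!", "abab!", "baa"]:
    print(s, d_recognize(s))
```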

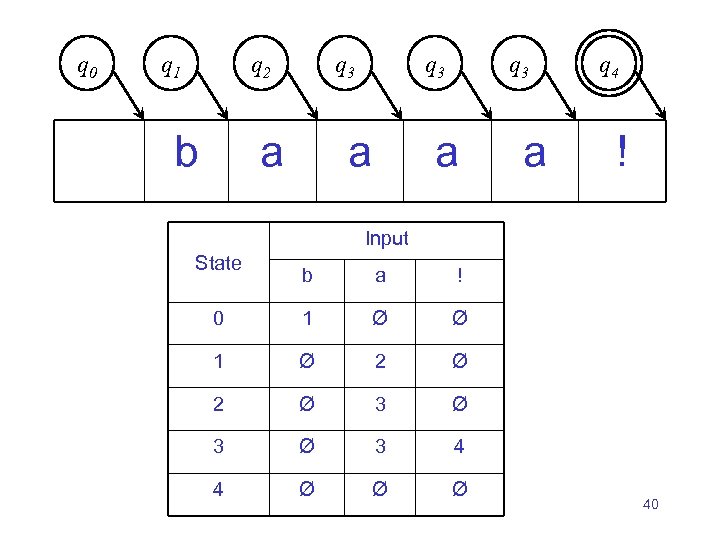

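(Figure: the SheepTalk FSA, q0 -b-> q1 -a-> q2 -a-> q3 -!-> q4, with an a-loop on q3)

| State / Input | b | a | ! |
|---|---|---|---|
| 0 | 1 | Ø | Ø |
| 1 | Ø | 2 | Ø |
| 2 | Ø | 3 | Ø |
| 3 | Ø | 3 | 4 |
| 4 (final) | Ø | Ø | Ø |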

Key Points
- Deterministic means that at each point in processing there is always one unique thing to do (no choices).
- D-Recognize is a simple table-driven interpreter.
- The algorithm is universal for all unambiguous regular languages.
  - To change the machine, you change the table.

Key Points
- Crudely, therefore, matching strings with regular expressions (à la Perl) is a matter of
  - translating the expression into a machine (table), and
  - passing the table to an interpreter.
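To illustrate "change the table, not the interpreter," this Python sketch runs one generic interpreter over two hand-built tables. The /colou?r/ table is a hypothetical hand-compiled version of that RE; no RE-to-table compiler is shown. Python's own `re` module is a backtracking engine rather than a table-driven DFA, so this illustrates the idea, not how `re` works internally.

```python
def run_table(table: dict, accept: set, tape: str, start: int = 0) -> bool:
    """Generic deterministic interpreter: the same code for every machine."""
    state = start
    for symbol in tape:
        state = table.get(state, {}).get(symbol)
        if state is None:  # empty cell: no transition, reject
            return False
    return state in accept

# Hand-built table for /colou?r/ (hypothetical "compiled" form of the RE).
COLOUR = {0: {"c": 1}, 1: {"o": 2}, 2: {"l": 3}, 3: {"o": 4},
          4: {"u": 5, "r": 6}, 5: {"r": 6}}
# Hand-built table for /baa+!/.
SHEEP = {0: {"b": 1}, 1: {"a": 2}, 2: {"a": 3}, 3: {"a": 3, "!": 4}}

print([run_table(COLOUR, {6}, w) for w in ["color", "colour", "colouur"]])  # [True, True, False]
print([run_table(SHEEP, {4}, w) for w in ["baa!", "ba!"]])                  # [True, False]
```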

Recognition as Search
- You can view this algorithm as a degenerate kind of state-space search.
- States are pairings of tape positions and state numbers.
- Operators are compiled into the table.
- The goal state is a pairing of the end-of-tape position with a final accept state.
- It’s degenerate because…?

Formal Languages
- Formal languages are sets of strings composed of symbols from a finite set of symbols.
- Finite-state automata define formal languages (without having to enumerate all the strings in the language).
- Given a machine m (such as a particular FSA), L(m) means the formal language characterized by m.
  - L(SheepTalk FSA) = {baa!, baaa!, baaaa!, …} (an infinite set)

Generative Formalisms
- The term “generative” is based on the view that you can run the machine as a generator to get strings from the language.
- FSAs can be viewed from two perspectives:
  - Acceptors that can tell you if a string is in the language
  - Generators to produce all and only the strings in the language
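Running the SheepTalk machine as a generator means walking its arcs and emitting the strings that end in a final state. The language is infinite, so this Python sketch stops at a length bound, `max_len`, which is a parameter of the sketch. Breadth-first order yields the shortest strings first.

```python
from collections import deque

# SheepTalk FSA: state -> list of (symbol, next_state); accept state 4.
ARCS = {0: [("b", 1)], 1: [("a", 2)], 2: [("a", 3)], 3: [("a", 3), ("!", 4)], 4: []}
ACCEPT = {4}

def generate(max_len: int):
    """Yield every accepted string of length <= max_len, shortest first."""
    queue = deque([(0, "")])  # (state, string produced so far)
    while queue:
        state, prefix = queue.popleft()
        if state in ACCEPT:
            yield prefix
        if len(prefix) < max_len:
            for symbol, nxt in ARCS[state]:
                queue.append((nxt, prefix + symbol))

print(list(generate(6)))  # ['baa!', 'baaa!', 'baaaa!']
```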

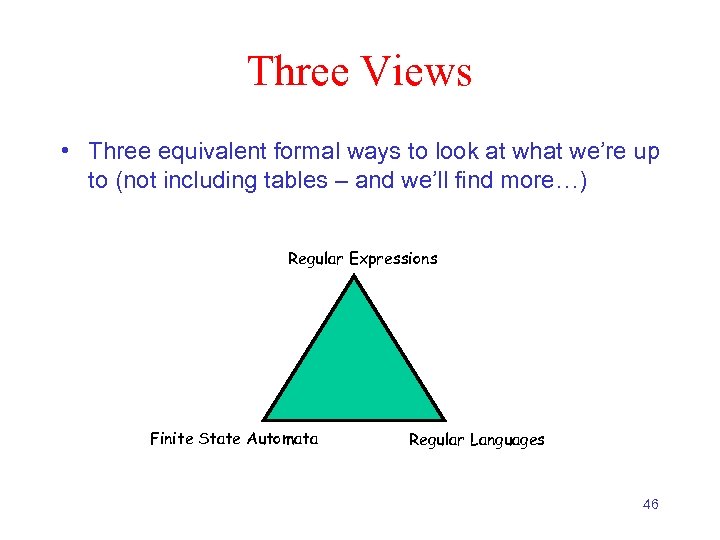

Three Views
- Three equivalent formal ways to look at what we’re up to (not including tables, and we’ll find more…):
  - Regular Expressions
  - Finite State Automata
  - Regular Languages

Determinism
- Let’s take another look at what is going on with D-Recognize.
- In particular, let’s look at what it means to be deterministic here and see if we can relax that notion.
  - How would our recognition algorithm change?
  - What would it mean for the accepted language?

Determinism and Non-Determinism
- Deterministic: there is at most one transition that can be taken given a current state and input symbol.
- Non-deterministic: there is a choice of several transitions that can be taken given a current state and input symbol. (The machine doesn’t specify how to make the choice.)
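The definition can be checked mechanically if transitions are stored as a map from (state, symbol) to a set of next states. In this Python sketch, using `None` for an ε (empty-input) arc is an assumption. `NFA_SHEEP` is our reading of a non-deterministic SheepTalk machine: on "a", state 2 may stay in 2 or move to 3.

```python
def is_deterministic(delta: dict) -> bool:
    """delta maps (state, symbol) -> set of next states; symbol None means epsilon.

    Deterministic: no epsilon arcs and at most one next state per (state, symbol).
    """
    return all(symbol is not None and len(targets) <= 1
               for (_state, symbol), targets in delta.items())

DFA_SHEEP = {(0, "b"): {1}, (1, "a"): {2}, (2, "a"): {3}, (3, "a"): {3}, (3, "!"): {4}}
NFA_SHEEP = {(0, "b"): {1}, (1, "a"): {2}, (2, "a"): {2, 3}, (3, "!"): {4}}

print(is_deterministic(DFA_SHEEP), is_deterministic(NFA_SHEEP))  # True False
```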

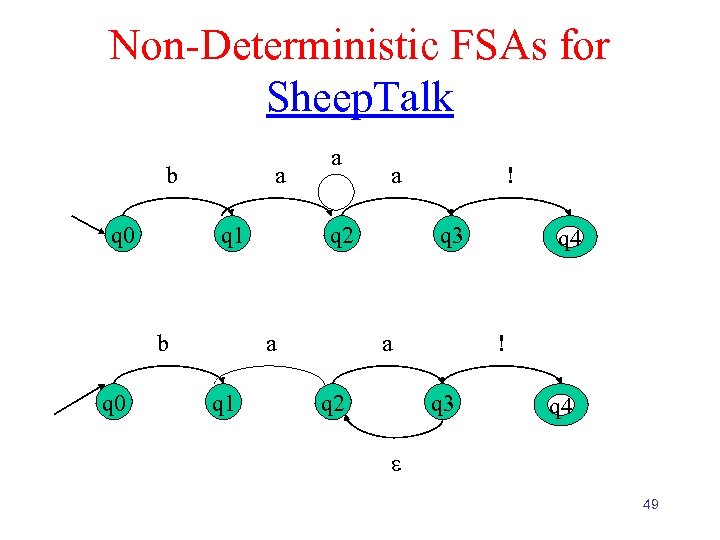

Non-Deterministic FSAs for SheepTalk

(Figure: two non-deterministic FSAs for SheepTalk, each over states q0 to q4)

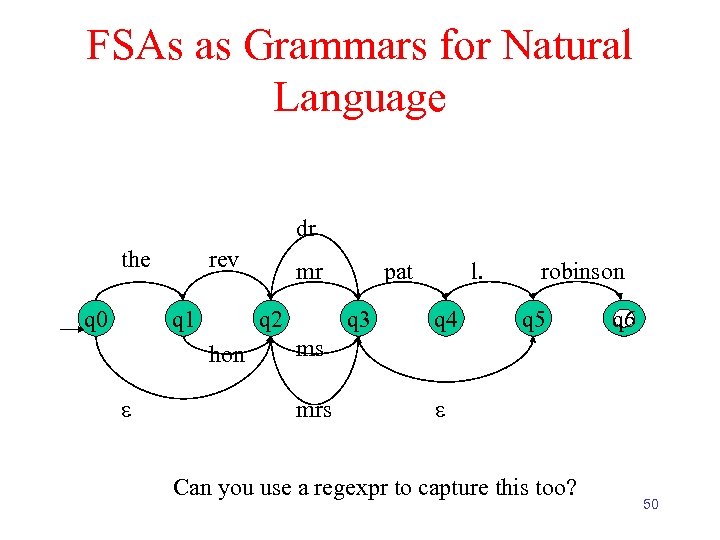

FSAs as Grammars for Natural Language

(Figure: an FSA over states q0 to q6 for name phrases; arc labels include dr, the, rev, hon, mr, ms, mrs, pat, l., robinson)

Can you use a regular expression to capture this too?

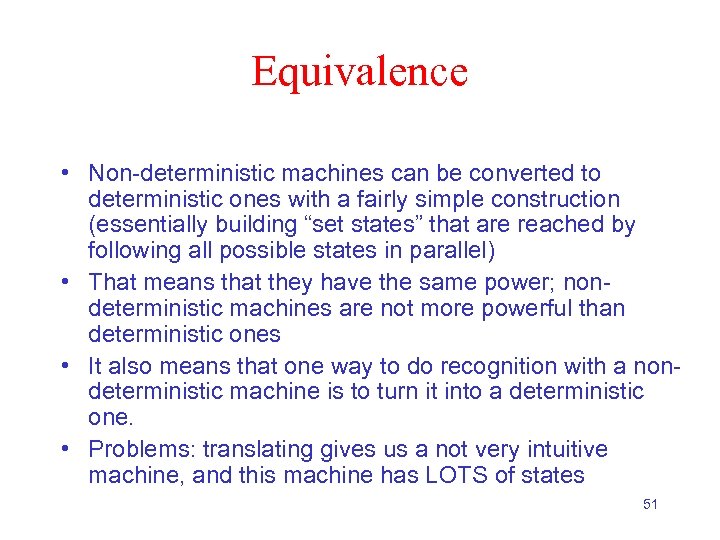

Equivalence
- Non-deterministic machines can be converted to deterministic ones with a fairly simple construction (essentially building “set states” that are reached by following all possible paths in parallel).
- That means that they have the same power; non-deterministic machines are not more powerful than deterministic ones.
- It also means that one way to do recognition with a non-deterministic machine is to turn it into a deterministic one.
- Problems: the translation gives us a not very intuitive machine, and this machine can have LOTS of states (up to 2^n for an n-state non-deterministic machine).
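The "set states" construction, usually called the subset construction, can be sketched in Python for machines without ε-arcs. Here it runs on our reading of a non-deterministic SheepTalk machine. Only 5 of the possible 2^5 set states turn out to be reachable. The empty set (the dead state) is left implicit, mirroring Ø in the tables above.

```python
from collections import deque

# Non-deterministic SheepTalk machine (our reading of the figure): on 'a',
# state 2 may either stay in 2 or move on to 3.
NFA = {(0, "b"): {1}, (1, "a"): {2}, (2, "a"): {2, 3}, (3, "!"): {4}}
ALPHABET = {"a", "b", "!"}

def subset_construction(nfa, start, accept, alphabet):
    """Return (start, transitions, accepting, states) of an equivalent DFA.

    Each DFA state is a frozenset of NFA states; no epsilon arcs are assumed.
    """
    start_set = frozenset({start})
    dfa, dfa_accept = {}, set()
    seen, queue = {start_set}, deque([start_set])
    while queue:
        current = queue.popleft()
        if current & accept:                  # contains an NFA accept state
            dfa_accept.add(current)
        for symbol in alphabet:
            target = frozenset(t for q in current for t in nfa.get((q, symbol), ()))
            if not target:
                continue                      # the empty set is a dead state; leave it implicit
            dfa[(current, symbol)] = target
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return start_set, dfa, dfa_accept, seen

def dfa_accepts(start, dfa, accept, tape):
    state = start
    for symbol in tape:
        state = dfa.get((state, symbol))
        if state is None:
            return False
    return state in accept

start, dfa, accept, states = subset_construction(NFA, 0, {4}, ALPHABET)
print(sorted(sorted(s) for s in states))  # [[0], [1], [2], [2, 3], [4]]
print([dfa_accepts(start, dfa, accept, w) for w in ["baa!", "baaaa!", "ba!"]])  # [True, True, False]
```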

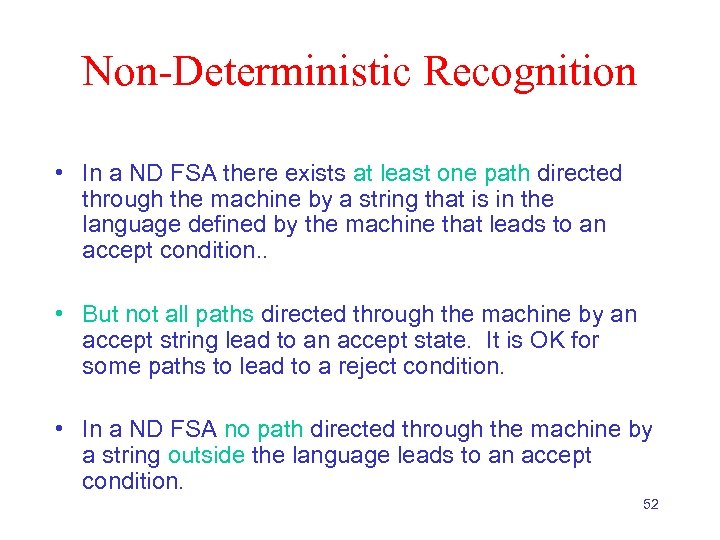

Non-Deterministic Recognition
- In an ND FSA, for a string that is in the language defined by the machine, there exists at least one path through the machine, directed by that string, that leads to an accept condition.
- But not all paths directed through the machine by an accepted string lead to an accept state. It is OK for some paths to lead to a reject condition.
- In an ND FSA, no path directed through the machine by a string outside the language leads to an accept condition.

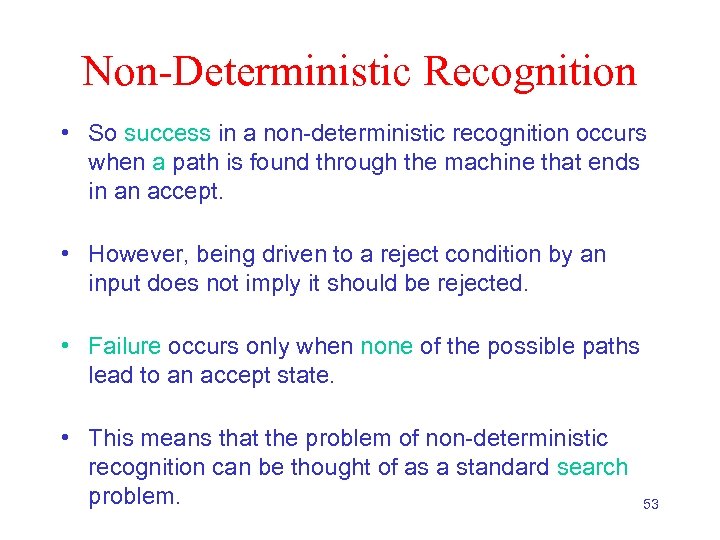

Non-Deterministic Recognition
- So success in non-deterministic recognition occurs when a path is found through the machine that ends in an accept state.
- However, being driven to a reject condition by an input does not imply that the input should be rejected.
- Failure occurs only when none of the possible paths leads to an accept state.
- This means that the problem of non-deterministic recognition can be thought of as a standard search problem.

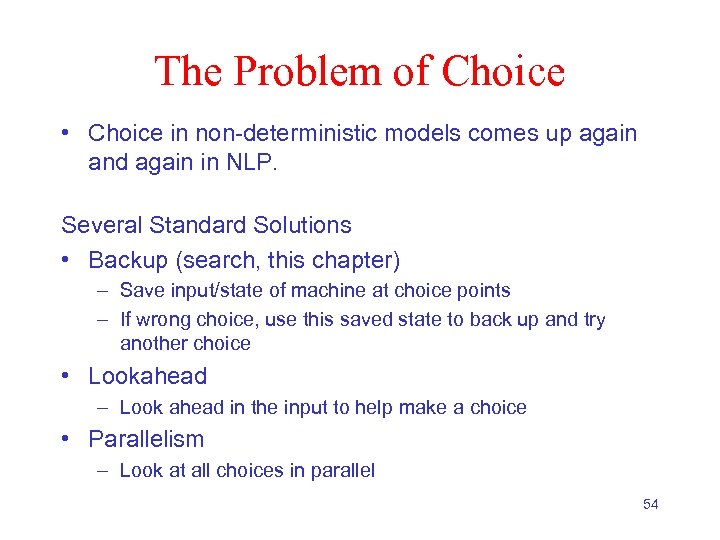

The Problem of Choice
- Choice in non-deterministic models comes up again and again in NLP.
- Several standard solutions:
  - Backup (search; this chapter)
    - Save the input/state of the machine at choice points
    - If the choice was wrong, use this saved state to back up and try another choice
  - Lookahead
    - Look ahead in the input to help make a choice
  - Parallelism
    - Look at all choices in parallel
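The "parallelism" option can be sketched by tracking the set of all states the machine could be in. That set is updated once per input symbol, so no choice point ever needs to be revisited. The machine is our reading of a non-deterministic SheepTalk FSA, and the function name is hypothetical.

```python
# Non-deterministic SheepTalk machine (our reading of the figure):
# state 2 may either stay in 2 or move to 3 when it reads 'a'.
NFA = {(0, "b"): {1}, (1, "a"): {2}, (2, "a"): {2, 3}, (3, "!"): {4}}
START, ACCEPT = 0, {4}

def parallel_recognize(tape: str) -> bool:
    """Follow every choice at once by tracking the set of states reachable so far."""
    current = {START}
    for symbol in tape:
        current = {t for q in current for t in NFA.get((q, symbol), ())}
        if not current:  # every path has died: reject early
            return False
    return bool(current & ACCEPT)

print([parallel_recognize(w) for w in ["baa!", "baaa!", "ba!", "baa"]])
# [True, True, False, False]
```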

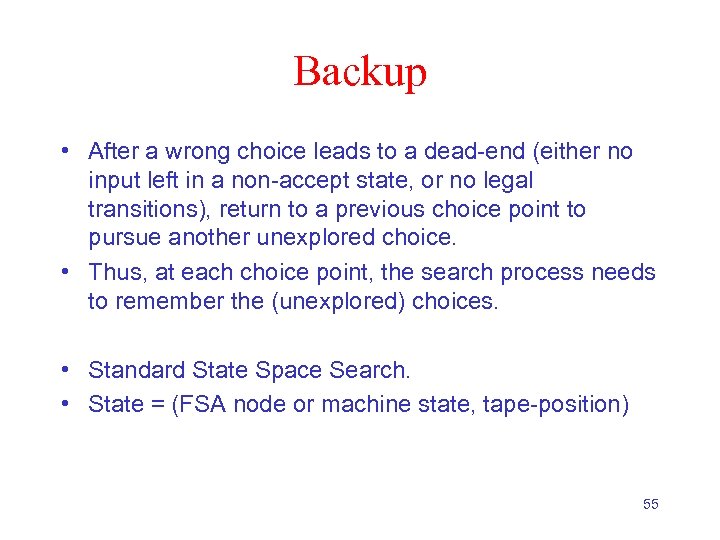

Backup
- After a wrong choice leads to a dead end (either no input left in a non-accept state, or no legal transitions), return to a previous choice point to pursue another unexplored choice.
- Thus, at each choice point, the search process needs to remember the (unexplored) choices.
- Standard state-space search.
- State = (FSA node or machine state, tape position)

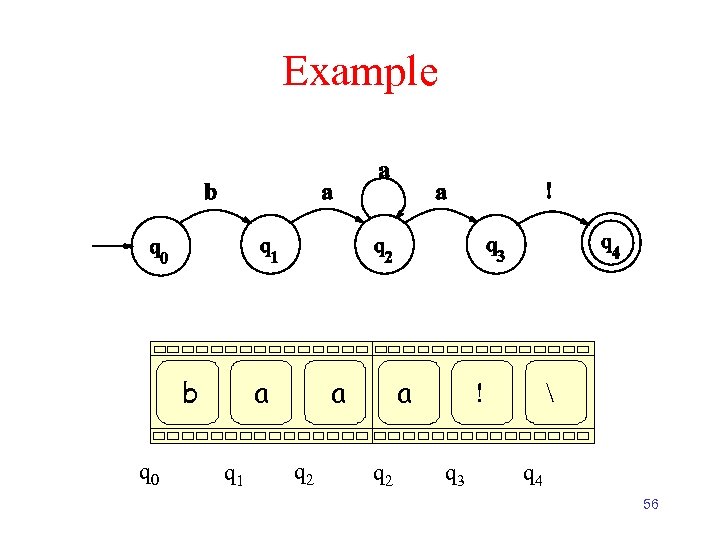

Example

(Figure: a non-deterministic SheepTalk FSA over states q0 to q4)

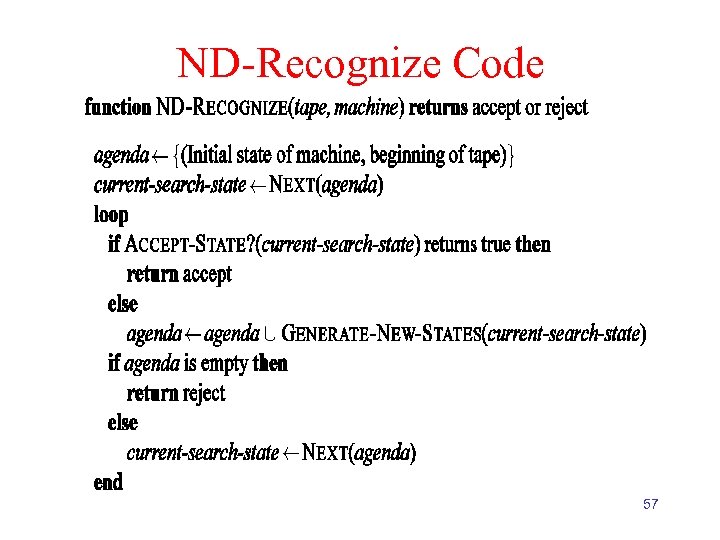

ND-Recognize Code 57
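The ND-Recognize code is only an image in this copy. Below is a Python reconstruction of the backup strategy described on the Backup slide, an assumption rather than a transcription:

- An agenda holds the unexplored (machine state, tape position) search states.
- A LIFO pop makes the search depth-first.
- A dead end is simply dropped, so the loop backs up to the next saved choice.

```python
# Non-deterministic SheepTalk machine (our reading of the figure).
NFA = {(0, "b"): {1}, (1, "a"): {2}, (2, "a"): {2, 3}, (3, "!"): {4}}
START, ACCEPT = 0, {4}

def nd_recognize(tape: str) -> bool:
    """Depth-first search over (machine state, tape position) pairs with an agenda."""
    agenda = [(START, 0)]               # saved, not-yet-explored choices
    while agenda:
        state, index = agenda.pop()     # LIFO pop gives depth-first backup
        if index == len(tape):
            if state in ACCEPT:
                return True
            continue                    # dead end: out of tape in a non-accept state
        for nxt in NFA.get((state, tape[index]), ()):
            agenda.append((nxt, index + 1))
        # If no transition applies, nothing is pushed and the loop backs up.
    return False                        # every choice explored, none accepted

print([nd_recognize(w) for w in ["baa!", "baaa!", "ba!", "baa"]])
# [True, True, False, False]
```

Replacing the list with a `collections.deque` and `popleft()` would turn this into breadth-first search. For a machine without ε-cycles the answer is the same; only the exploration order changes.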

Example Agenda: 58

Example 59

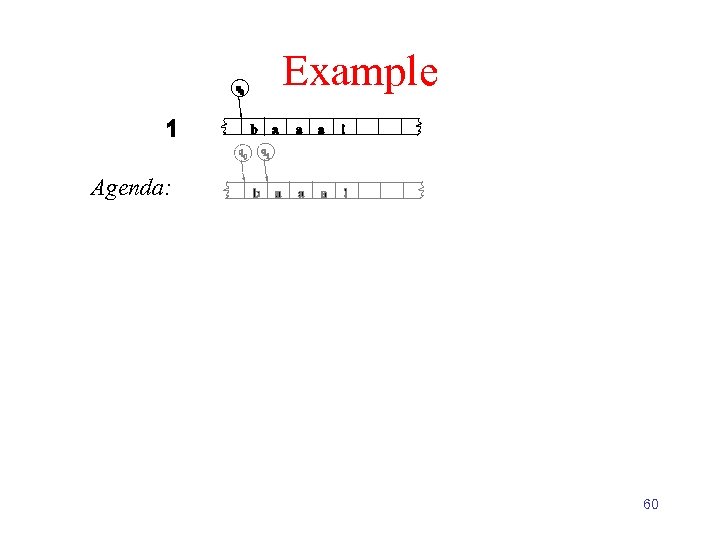

Example Agenda: 60

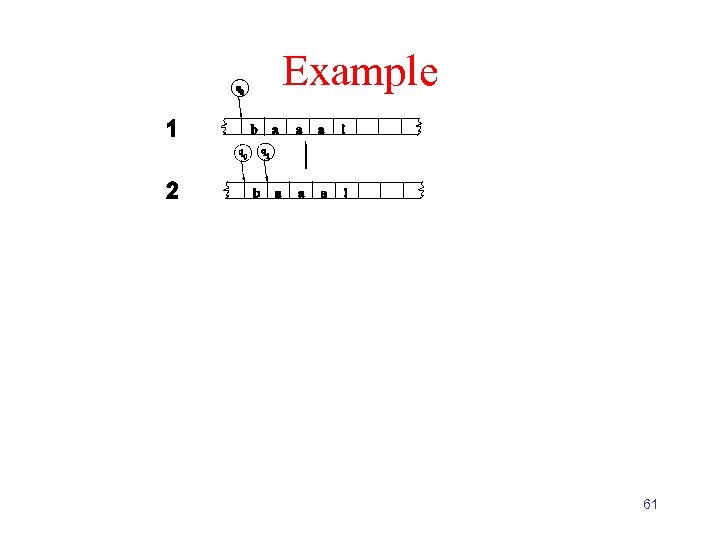

Example 61

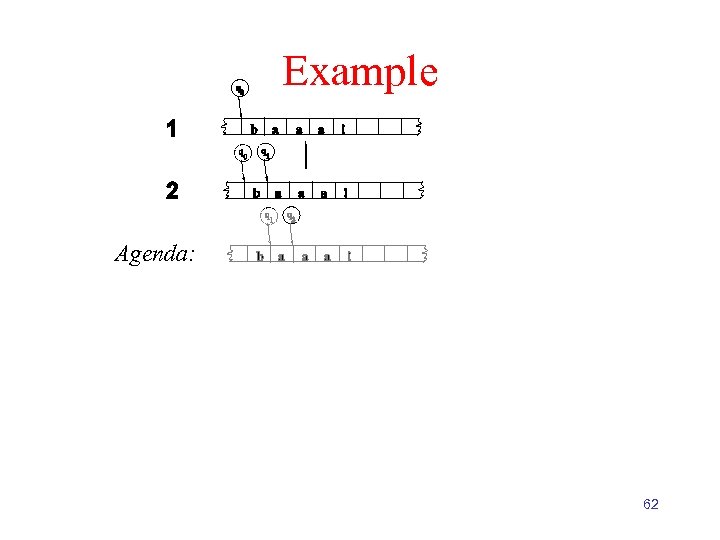

Example Agenda: 62

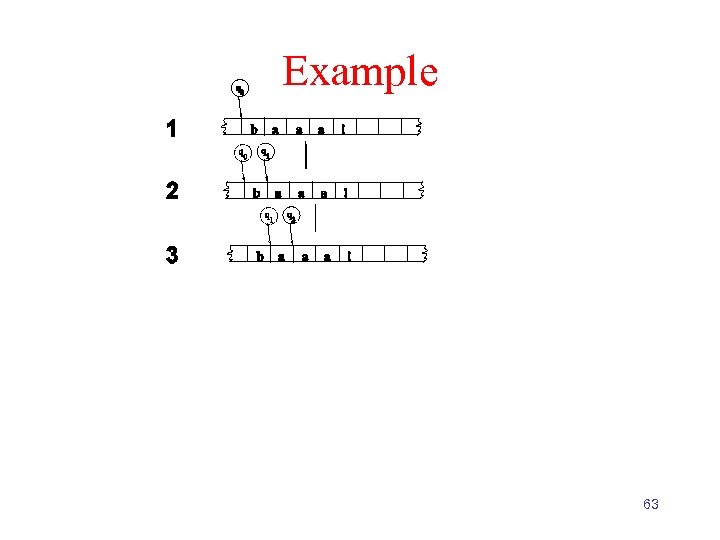

Example 63

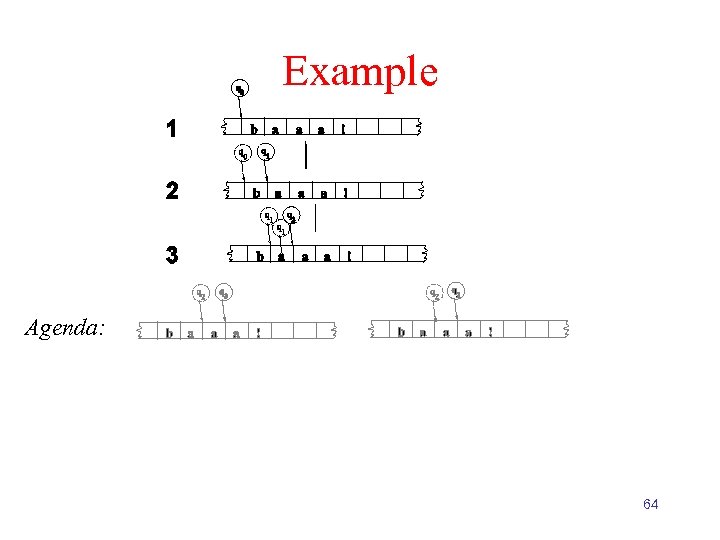

Example Agenda: 64

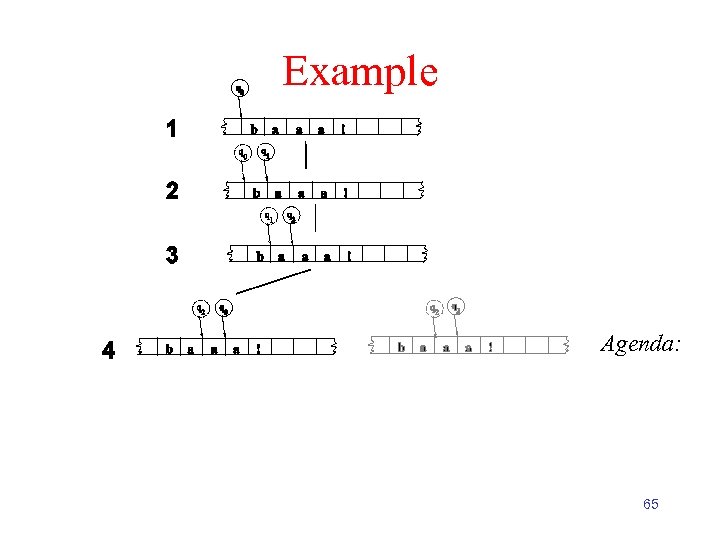

Example Agenda: 65

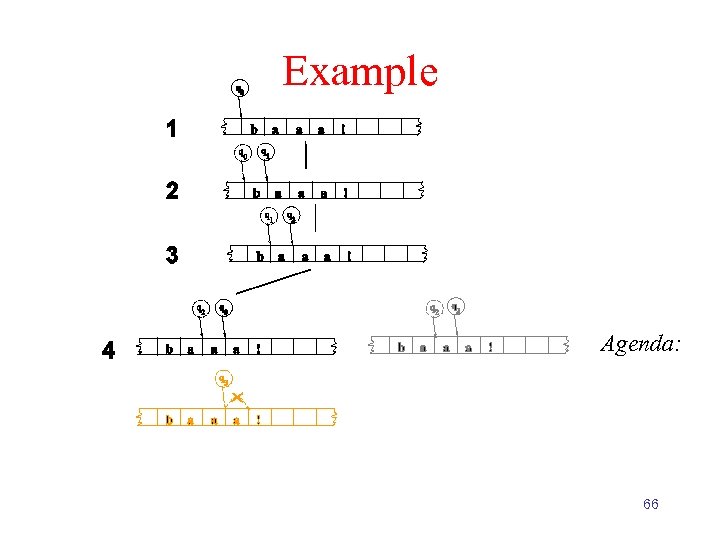

Example Agenda: 66

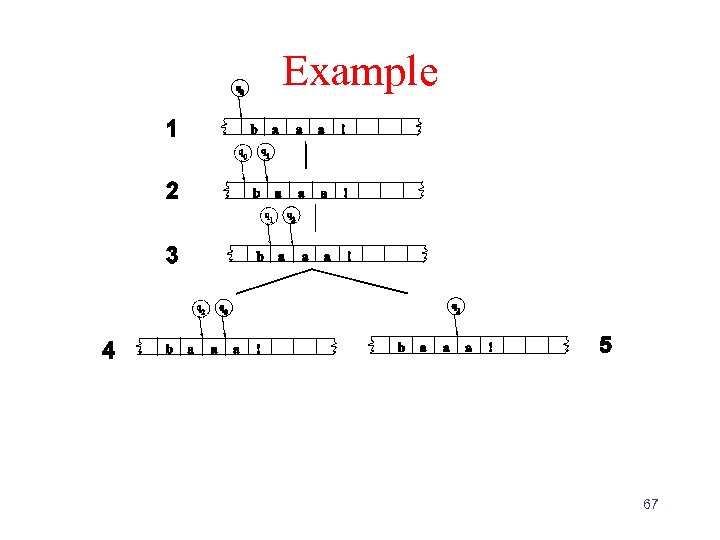

Example 67

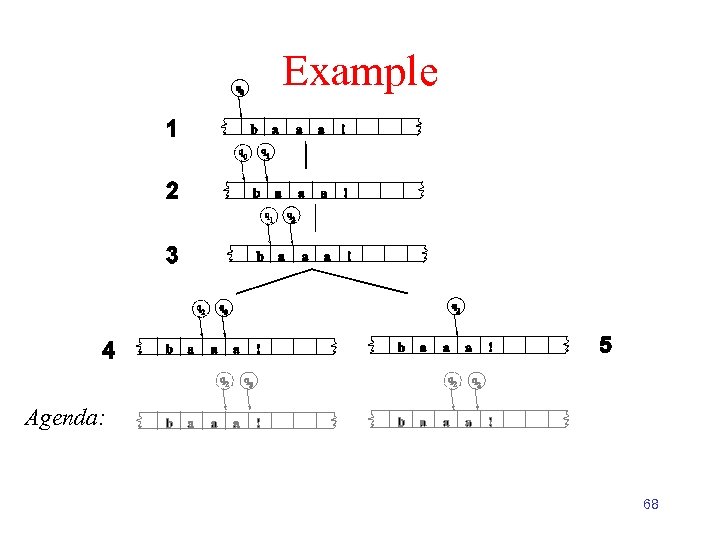

Example Agenda: 68

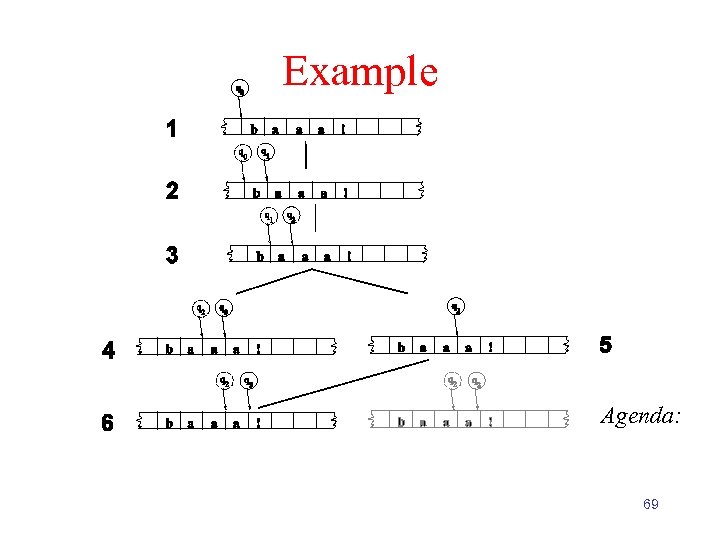

Example Agenda: 69

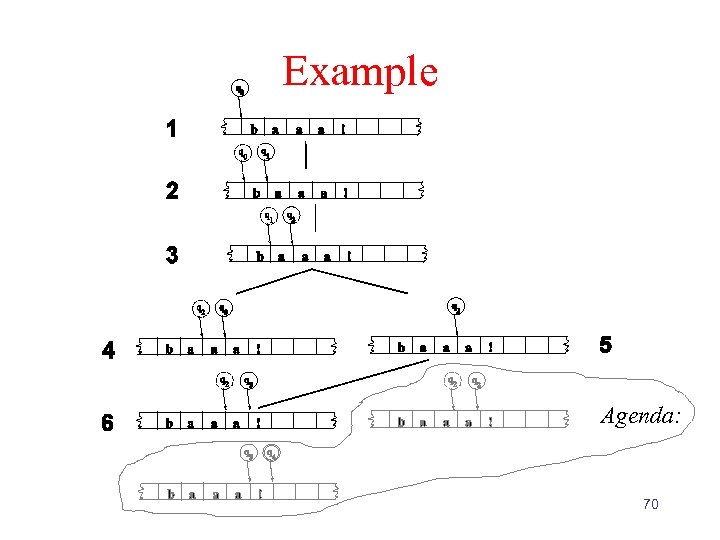

Example Agenda: 70

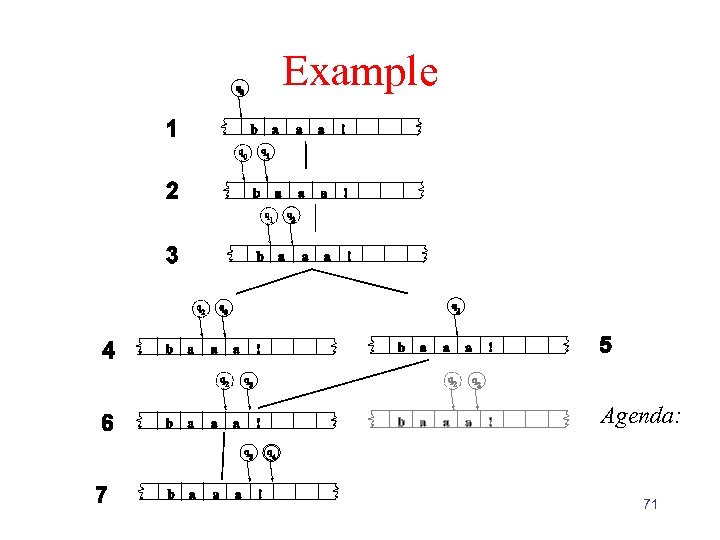

Example Agenda: 71

Key Points • States in the search space are pairings of tape positions and states in the machine. • By keeping track of as-yet-unexplored search states, a recognizer can systematically explore all the paths through the machine for a given input. 72
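To make the pairing concrete, this sketch records every search state that a depth-first (stack-based) agenda expands. It is an illustration, not a reproduction of the agenda figures, which are images. The transition table and the name `trace_search` are hypothetical, and the table assumes the example machine is the sheeptalk NFSA (b a a+ !).

```python
# Hypothetical encoding of the example NFSA (assumed sheeptalk; no epsilon arcs).
TRANSITIONS = {
    ("q0", "b"): ["q1"],
    ("q1", "a"): ["q2"],
    ("q2", "a"): ["q2", "q3"],
    ("q3", "!"): ["q4"],
}
ACCEPT = {"q4"}


def trace_search(tape, start="q0"):
    """Depth-first backup search that logs every (state, position) pair it expands."""
    agenda = [(start, 0)]  # used as a stack: last in, first out
    expanded = []
    while agenda:
        node, pos = agenda.pop()
        expanded.append((node, pos))
        if pos == len(tape) and node in ACCEPT:
            return True, expanded
        if pos < len(tape):
            # Push every successor; the last one pushed is explored first.
            for nxt in TRANSITIONS.get((node, tape[pos]), []):
                agenda.append((nxt, pos + 1))
    return False, expanded


accepted, path = trace_search("baaa!")
print(accepted)
for node, pos in path:
    print(f"({node}, {pos})")
```

On baaa! the stack discipline first tries (q3, 3), finds no arc from q3 on the third a, and backs up to the saved state (q2, 3), which eventually leads to (q4, 5) and acceptance.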

Infinite Search • If you’re not careful, such searches can go into an infinite loop. • How? 73
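One way this happens, assuming the machine may contain epsilon arcs: an epsilon transition does not advance the tape, so a cycle of epsilon arcs lets a depth-first search revisit the same (state, position) pair forever. The sketch uses a hypothetical three-state machine with an epsilon cycle between q0 and q1. `naive_search` has an artificial `max_steps` cap only so that the demo halts, while `safe_search` keeps a set of already-expanded search states. Since there are only finitely many (state, position) pairs, the second version always terminates.

```python
# Hypothetical machine with an epsilon cycle q0 -> q1 -> q0 ("" marks an epsilon arc).
TRANSITIONS = {
    ("q0", ""): ["q1"],
    ("q1", ""): ["q0"],
    ("q1", "a"): ["q2"],
}
ACCEPT = {"q2"}


def successors(node, pos, tape):
    # Epsilon arcs keep the tape position; ordinary arcs consume one symbol.
    out = [(nxt, pos) for nxt in TRANSITIONS.get((node, ""), [])]
    if pos < len(tape):
        out += [(nxt, pos + 1) for nxt in TRANSITIONS.get((node, tape[pos]), [])]
    return out


def naive_search(tape, max_steps=1000):
    """Backup search WITHOUT loop checking; max_steps exists only so the demo halts."""
    agenda, steps = [("q0", 0)], 0
    while agenda:
        steps += 1
        if steps > max_steps:
            return None  # gave up: the search was cycling
        node, pos = agenda.pop()
        if pos == len(tape) and node in ACCEPT:
            return True
        agenda.extend(successors(node, pos, tape))
    return False


def safe_search(tape):
    """Same search, but never re-expands a (state, position) pair it has already seen."""
    agenda, seen = [("q0", 0)], set()
    while agenda:
        state = agenda.pop()
        if state in seen:
            continue
        seen.add(state)
        node, pos = state
        if pos == len(tape) and node in ACCEPT:
            return True
        agenda.extend(successors(node, pos, tape))
    return False


print(naive_search("b"), safe_search("b"), safe_search("a"))
```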

Why Bother? • Non-determinism doesn’t give us more formal power, and it causes headaches, so why bother? – Non-deterministic solutions are often more natural – The deterministic machines produced by the subset construction can be too big 74
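The subset construction turns any NFSA into an equivalent DFSA whose states are sets of NFSA states. A minimal sketch, assuming the example machine is the sheeptalk NFSA (hypothetical encoding, with no epsilon arcs so no epsilon-closure step is needed); `subset_construction` and `dfa_recognize` are illustrative names.

```python
# Hypothetical sheeptalk NFSA (no epsilon arcs): (state, symbol) -> set of states.
NFA = {
    ("q0", "b"): {"q1"},
    ("q1", "a"): {"q2"},
    ("q2", "a"): {"q2", "q3"},
    ("q3", "!"): {"q4"},
}
ALPHABET = ["a", "b", "!"]
START, ACCEPT = "q0", {"q4"}


def subset_construction():
    """Build an equivalent DFSA whose states are frozensets of NFSA states."""
    start = frozenset({START})
    dfa, todo = {}, [start]
    while todo:
        current = todo.pop()
        if current in dfa:  # already expanded
            continue
        dfa[current] = {}
        for sym in ALPHABET:
            nxt = frozenset(t for s in current for t in NFA.get((s, sym), set()))
            if nxt:  # leave out the empty set; a missing arc means "reject"
                dfa[current][sym] = nxt
                todo.append(nxt)
    # A subset state accepts if it contains any accepting NFSA state.
    accepting = {d for d in dfa if d & ACCEPT}
    return start, dfa, accepting


def dfa_recognize(tape):
    start, dfa, accepting = subset_construction()
    state = start
    for sym in tape:
        if sym not in dfa[state]:
            return False
        state = dfa[state][sym]  # exactly one choice: no backup needed
    return state in accepting


print(dfa_recognize("baaa!"), dfa_recognize("ba!"))
```

For this machine only five subset states are reachable ({q0}, {q1}, {q2}, {q2, q3}, {q4}), but in the worst case an n-state NFSA needs up to 2^n DFSA states, which is the size problem the slide refers to.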

Compositional Machines • Formal languages are just sets of strings • Therefore, we can talk about various set operations (intersection, union, concatenation) • This turns out to be a useful exercise 3/19/2018 Speech and Language Processing - Jurafsky and Martin 75

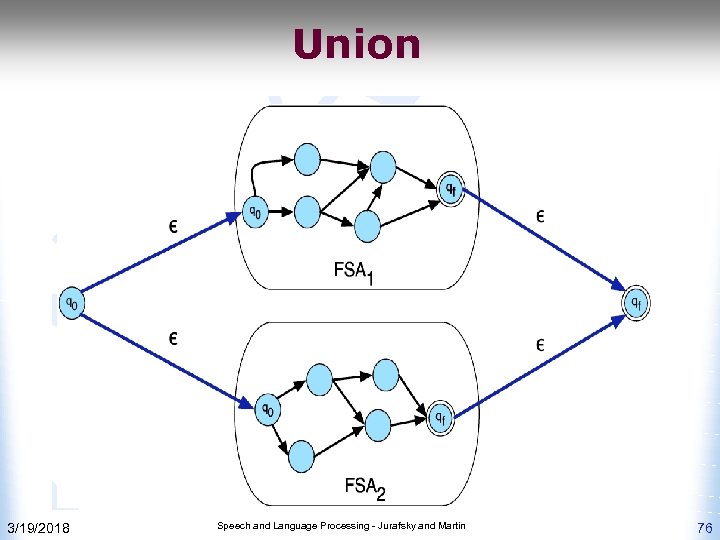

Union 76

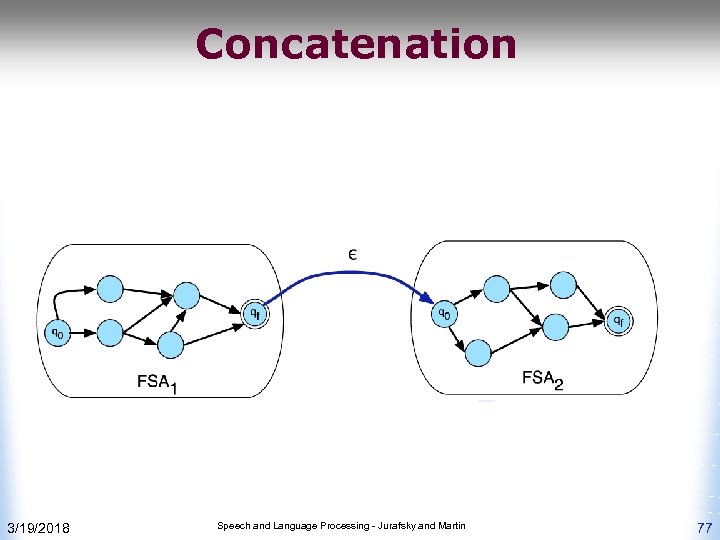

Concatenation 77
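The union and concatenation figures are images, so here is a sketch of the usual epsilon-arc constructions under our own encoding (an assumption, not the lecture's): an NFSA is a triple (start, accept states, arcs) with `""` as the epsilon label. Union adds a fresh start state with epsilon arcs into both machines; concatenation adds epsilon arcs from every accept state of the first machine to the start of the second. `tag`, `literal`, and the other helper names are hypothetical; `tag` renames states so the two machines never share a state name.

```python
# An NFSA is (start, accept_states, arcs) with arcs[(state, symbol)] = set of states.
# The empty string "" is used as the epsilon label (our own convention).


def tag(m, prefix):
    """Rename every state so two machines cannot share state names."""
    start, accepts, arcs = m
    new_arcs = {(prefix + s, sym): {prefix + t for t in ts} for (s, sym), ts in arcs.items()}
    return prefix + start, {prefix + a for a in accepts}, new_arcs


def union(m1, m2):
    """Fresh start state with epsilon arcs into both machines; keep both accept sets."""
    (s1, f1, a1), (s2, f2, a2) = tag(m1, "L."), tag(m2, "R.")
    arcs = {**a1, **a2, ("U.start", ""): {s1, s2}}
    return "U.start", f1 | f2, arcs


def concat(m1, m2):
    """Epsilon arcs from every accept state of m1 to the start of m2; only m2's accept."""
    (s1, f1, a1), (s2, f2, a2) = tag(m1, "L."), tag(m2, "R.")
    arcs = {**a1, **a2}
    for f in f1:
        arcs[(f, "")] = arcs.get((f, ""), set()) | {s2}
    return s1, f2, arcs


def closure(states, arcs):
    """All states reachable through epsilon arcs alone."""
    stack, seen = list(states), set(states)
    while stack:
        for t in arcs.get((stack.pop(), ""), set()):
            if t not in seen:
                seen.add(t)
                stack.append(t)
    return seen


def accepts(m, tape):
    """Parallel simulation with epsilon-closure after every step."""
    start, finals, arcs = m
    current = closure({start}, arcs)
    for sym in tape:
        step = {t for s in current for t in arcs.get((s, sym), set())}
        current = closure(step, arcs)
    return bool(current & finals)


def literal(word):
    """Hypothetical helper: a chain machine accepting exactly `word`."""
    arcs = {(str(i), ch): {str(i + 1)} for i, ch in enumerate(word)}
    return "0", {str(len(word))}, arcs


cat, dog = literal("cat"), literal("dog")
print(accepts(union(cat, dog), "dog"), accepts(concat(cat, dog), "catdog"))
```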

Negation • Construct a machine M2 to accept all strings not accepted by machine M1 and reject all the strings accepted by M1 – Invert all the accepting and non-accepting states in M1 • Does that work for non-deterministic machines? 78
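A sketch of the slide's construction, checked on two hypothetical machines over the alphabet {a} that both accept exactly the string a. Flipping accept states yields the complement only when the machine is deterministic and complete (every state has an arc on every symbol, here via a `dead` sink state). For a non-deterministic machine the flip can fail, because an NFSA accepts when any path accepts: after reading a, the NFSA below is in both q1 and q2, and q2 becomes accepting after the flip. Determinizing first with the subset construction avoids this. The dictionary encoding and the names `accepts` and `flip` are our own.

```python
# Hypothetical machines over the alphabet {"a"}; both accept exactly the string "a".
# arcs[(state, symbol)] = set of next states (a DFSA has exactly one per arc).
DFSA = {  # deterministic and complete, thanks to the "dead" sink state
    "states": {"q0", "q1", "dead"},
    "start": "q0",
    "accept": {"q1"},
    "arcs": {("q0", "a"): {"q1"}, ("q1", "a"): {"dead"}, ("dead", "a"): {"dead"}},
}
NFSA = {  # complete but non-deterministic: on "a", q0 may go to q1 or q2
    "states": {"q0", "q1", "q2", "dead"},
    "start": "q0",
    "accept": {"q1"},
    "arcs": {
        ("q0", "a"): {"q1", "q2"},
        ("q1", "a"): {"dead"},
        ("q2", "a"): {"dead"},
        ("dead", "a"): {"dead"},
    },
}


def accepts(m, tape):
    """Parallel simulation: accept if ANY path ends in an accepting state."""
    current = {m["start"]}
    for sym in tape:
        current = {t for s in current for t in m["arcs"].get((s, sym), set())}
    return bool(current & m["accept"])


def flip(m):
    """The slide's construction: swap accepting and non-accepting states."""
    return {**m, "accept": m["states"] - m["accept"]}


for name, m in [("DFSA", DFSA), ("NFSA", NFSA)]:
    for w in ["", "a", "aa"]:
        print(name, repr(w), accepts(m, w), accepts(flip(m), w))
```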

Intersection • Accept a string that is in both of two specified languages • An indirect construction (De Morgan's law): – $A \cap B = \overline{\overline{A} \cup \overline{B}}$ 79
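The indirect construction can be checked in code. This sketch represents complete DFSAs as (states, start, accept states, transition function) tuples, which is our own encoding. To build the union it swaps in the product construction (running both machines in lockstep, a standard technique not shown on the slide); with "and" instead of "or" the same construction gives the intersection directly. The two example languages, an even number of a's and strings ending in b, are hypothetical.

```python
from itertools import product as cartesian

# A complete DFSA: (states, start, accept, delta) with delta[(state, symbol)] = state.
ALPHABET = ["a", "b"]

EVEN_AS = ({"e", "o"}, "e", {"e"},  # even number of a's
           {("e", "a"): "o", ("o", "a"): "e", ("e", "b"): "e", ("o", "b"): "o"})
ENDS_B = ({"n", "y"}, "n", {"y"},  # last symbol is b
          {("n", "a"): "n", ("y", "a"): "n", ("n", "b"): "y", ("y", "b"): "y"})


def run(m, tape):
    states, state, accept, delta = m
    for sym in tape:
        state = delta[(state, sym)]
    return state in accept


def complement(m):
    """Safe here because every machine is deterministic and complete."""
    states, start, accept, delta = m
    return states, start, states - accept, delta


def product(m1, m2, combine):
    """Run both machines in lockstep; `combine` decides which state pairs accept."""
    s1, q1, f1, d1 = m1
    s2, q2, f2, d2 = m2
    states = set(cartesian(s1, s2))
    delta = {((p, q), x): (d1[(p, x)], d2[(q, x)]) for p, q in states for x in ALPHABET}
    accept = {(p, q) for p, q in states if combine(p in f1, q in f2)}
    return states, (q1, q2), accept, delta


# Direct: product with AND.  Indirect (slide): complement of the union of complements.
direct = product(EVEN_AS, ENDS_B, lambda x, y: x and y)
indirect = complement(product(complement(EVEN_AS), complement(ENDS_B), lambda x, y: x or y))
print(sorted(direct[2]), sorted(indirect[2]))
```

Both machines end up accepting exactly the state pair (e, y), so the direct and indirect constructions agree.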

Why Bother? • FSAs can be useful tools for recognizing (and generating) subsets of natural language – But they cannot represent all NL phenomena (e.g., center embedding: The mouse the cat ... chased died.) 80

Summing Up • Regular expressions and FSAs can represent subsets of natural language as well as regular languages – For any realistic subset of a language, both representations may become impossible for humans to understand – But they are very easy to use for smaller subsets – They are extremely useful for certain subproblems (e.g., morphological analysis). • Next time: read Ch. 3 • For fun: – Think of ways you might characterize features of your email using only regular expressions 81
