7791e5c7b60e763f3c072979fff97132.ppt

- Number of slides: 62

Recommender Systems: Session C

Robin Burke, DePaul University, Chicago, IL

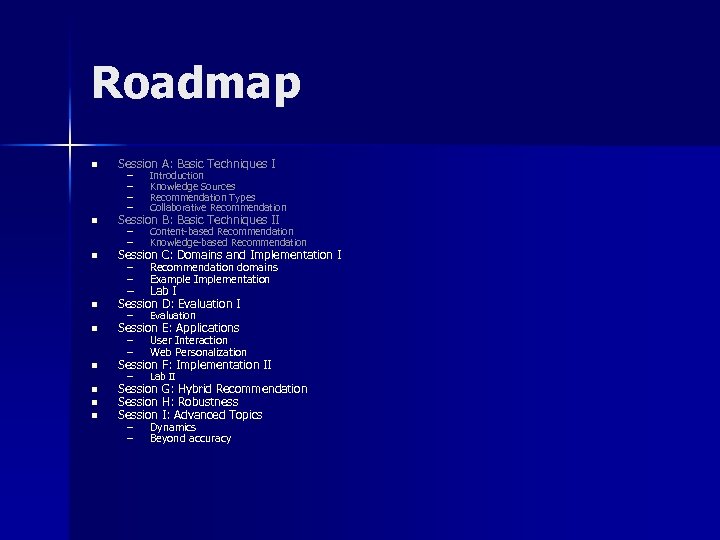

Roadmap
- Session A: Basic Techniques I
  - Introduction
  - Knowledge Sources
  - Recommendation Types
  - Collaborative Recommendation
- Session B: Basic Techniques II
  - Content-based Recommendation
  - Knowledge-based Recommendation
- Session C: Domains and Implementation I
  - Recommendation domains
  - Example Implementation
  - Lab I
- Session D: Evaluation I
  - Evaluation
  - Lab II
- Session E: Applications
  - User Interaction
  - Web Personalization
- Session F: Implementation II
- Session G: Hybrid Recommendation
- Session H: Robustness
- Session I: Advanced Topics
  - Dynamics
  - Beyond accuracy

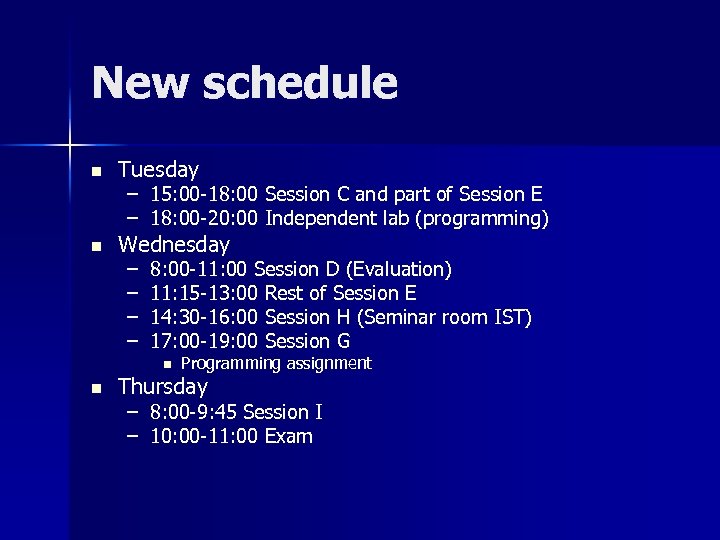

New schedule
- Tuesday
  - 15:00-18:00 Session C and part of Session E
  - 18:00-20:00 Independent lab (programming)
- Wednesday
  - 8:00-11:00 Session D (Evaluation)
  - 11:15-13:00 Rest of Session E
  - 14:30-16:00 Session H (Seminar room IST)
  - 17:00-19:00 Session G
- Programming assignment
- Thursday
  - 8:00-9:45 Session I
  - 10:00-11:00 Exam

Activity
- With your partner, come up with a domain for recommendation
  - It cannot be music, movies, books, or restaurants
  - It can't already be the topic of your research
- 10 minutes

Domains?

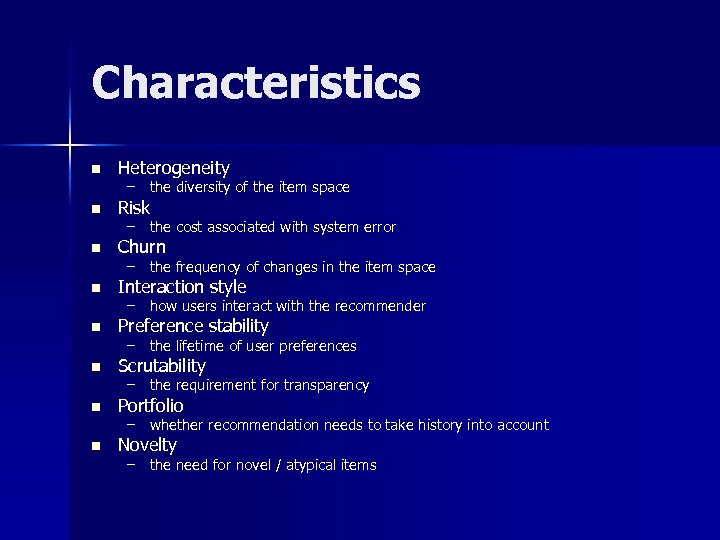

Characteristics
- Heterogeneity: the diversity of the item space
- Risk: the cost associated with system error
- Churn: the frequency of changes in the item space
- Interaction style: how users interact with the recommender
- Preference stability: the lifetime of user preferences
- Scrutability: the requirement for transparency
- Portfolio: whether recommendation needs to take history into account
- Novelty: the need for novel / atypical items

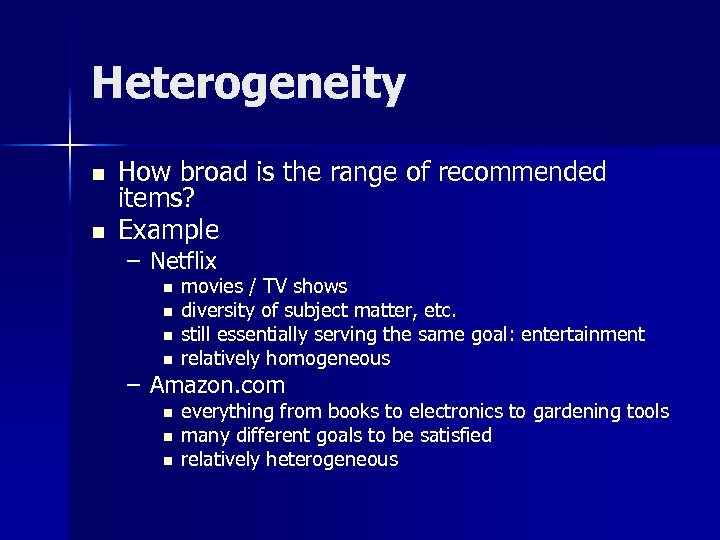

Heterogeneity
- How broad is the range of recommended items?
- Example
  - Netflix
    - movies / TV shows
    - diversity of subject matter, etc.
    - still essentially serving the same goal: entertainment
    - relatively homogeneous
  - Amazon.com
    - everything from books to electronics to gardening tools
    - many different goals to be satisfied
    - relatively heterogeneous

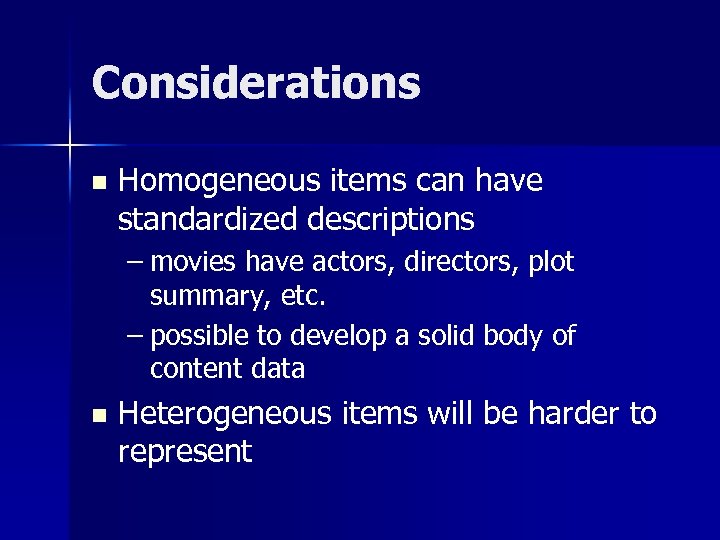

Considerations
- Homogeneous items can have standardized descriptions
  - movies have actors, directors, plot summary, etc.
  - it is possible to develop a solid body of content data
- Heterogeneous items will be harder to represent

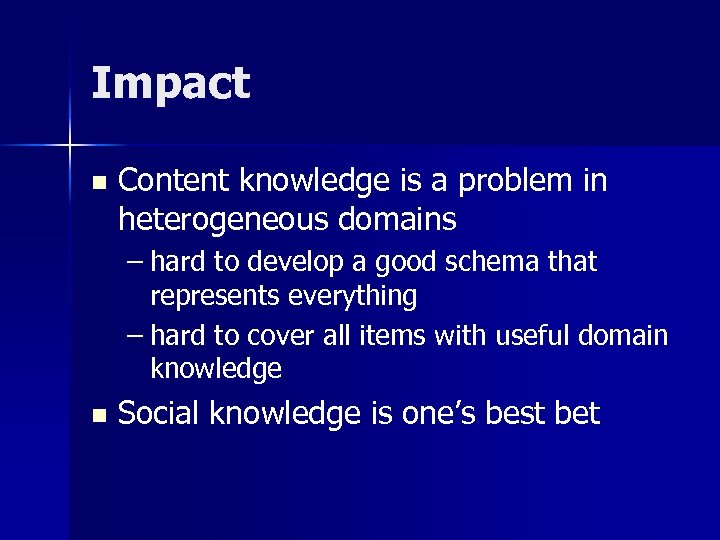

Impact
- Content knowledge is a problem in heterogeneous domains
  - hard to develop a good schema that represents everything
  - hard to cover all items with useful domain knowledge
- Social knowledge is one's best bet

Risk
- Some products are inherently low risk
  - a 99-cent music track
- Some are not low risk
  - a house
- By risk, we mean
  - the cost of a false positive accepted by the user
- Sometimes false negatives are also costly
  - scientific research
  - legal precedents

Considerations
- In a low-risk domain
  - it doesn't matter so much how we choose
  - the user will be less likely to have strong constraints
- In a high-risk domain
  - it is important to gather more information about exactly what the requirements are

Impact
- Pure social recommendation will not work so well for high-risk domains
  - inability to take constraints into account
  - possibility of bias
- Knowledge-based recommendation has great potential in high-risk domains
  - knowledge engineering costs are worthwhile
  - the user's constraints can be employed

Churn
- High churn means that items come and go quickly
  - news
- Low-churn items will be around for a while
  - books
- In the middle
  - restaurants
  - package vacations

Considerations
- New item problem
  - constant in high-churn domains
  - consequence: it is difficult to build up a history of opinions
- Freshness may matter
  - a good match from yesterday might be worse than a weaker match from today
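The freshness point can be made concrete by discounting a content-match score by the item's age, so that a strong but stale match can fall below a weaker fresh one. This is a minimal Java sketch under assumptions that are not in the slides: match scores lie in [0, 1], and the discount is exponential with a hypothetical half-life parameter. `NewsItem` and `FreshnessRanker` are illustrative names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical item type: a news story with a content-match score and an age in hours.
class NewsItem {
    final String id;
    final double matchScore; // assumed to lie in [0, 1]
    final double ageHours;

    NewsItem(String id, double matchScore, double ageHours) {
        this.id = id;
        this.matchScore = matchScore;
        this.ageHours = ageHours;
    }
}

class FreshnessRanker {
    // Hypothetical decay: the score halves every halfLifeHours.
    static double freshnessScore(NewsItem item, double halfLifeHours) {
        return item.matchScore * Math.pow(0.5, item.ageHours / halfLifeHours);
    }

    // Returns a copy of the items sorted by freshness-adjusted score, best first.
    static List<NewsItem> rank(List<NewsItem> items, double halfLifeHours) {
        List<NewsItem> ranked = new ArrayList<>(items);
        ranked.sort(Comparator.comparingDouble(
                (NewsItem it) -> freshnessScore(it, halfLifeHours)).reversed());
        return ranked;
    }
}
```

With a 12-hour half-life, yesterday's 0.9 match (24 hours old) scores 0.9 × 0.25 = 0.225, while today's 0.6 match (2 hours old) scores about 0.53, so the fresher story ranks first.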

Impact
- Difficult to employ social knowledge alone
  - items won't have big enough profiles
- Need flexible representation for content data
  - since catalog characteristics aren't known in advance

Interaction style
- Some recommendation scenarios are passive
  - the recommender produces content as part of a web site
- Others are active
  - the user makes a direct request
- Sometimes a quick hit is important
  - mobile application
- Sometimes more extensive exploration is called for
  - rental apartment

Considerations
- Passive style means user requirements are harder to tap into
  - not necessarily impossible
- Brief interaction
  - means that only small amounts of information can be communicated
- Long interaction
  - like web site browsing
  - may make up for deficiencies in passive data gathering

Impact
- Passive interactions
  - favor learning-based methods
  - don't need user requirements
- Active interactions
  - favor knowledge-based techniques
  - other techniques don't adapt quickly
- Extended, passive interactions
  - allow large amounts of opinion data to be gathered

Preference stability
- Are users' preferences stable over time?
- Some taste domains may be consistent
  - movies
  - music (purchasing)
- But others are not
  - restaurants
  - music (playlists)
- Not the same as churn
  - churn has to do with items coming and going

Considerations
- Preference instability makes opinion data less useful
- Approaches
  - temporal decay
  - contextual selection
- Preference stability
  - large profiles can be built
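Temporal decay can be sketched as a weighted average in which each opinion's weight shrinks with its age. The slides name the approach but not a formula; the Java below assumes an exponential weight exp(-lambda * age) with a hypothetical decay rate `lambda`, and `TimedRating` / `TemporalDecay` are illustrative names.

```java
import java.util.List;

// Hypothetical rating event: a rating value plus how many days ago it was given.
class TimedRating {
    final double value;
    final double ageDays;

    TimedRating(double value, double ageDays) {
        this.value = value;
        this.ageDays = ageDays;
    }
}

class TemporalDecay {
    // Weight of an opinion: exp(-lambda * age); lambda is a hypothetical tuning parameter.
    static double weight(double ageDays, double lambda) {
        return Math.exp(-lambda * ageDays);
    }

    // Decay-weighted average of a user's ratings: recent ratings count more.
    static double decayedAverage(List<TimedRating> ratings, double lambda) {
        double weightedSum = 0.0;
        double totalWeight = 0.0;
        for (TimedRating r : ratings) {
            double w = weight(r.ageDays, lambda);
            weightedSum += w * r.value;
            totalWeight += w;
        }
        if (totalWeight == 0.0) {
            throw new IllegalArgumentException("no ratings to average");
        }
        return weightedSum / totalWeight;
    }
}
```

Setting `lambda` to 0 recovers the ordinary mean; larger values let recent ratings dominate an unstable profile.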

Impact
- Preference instability
  - opinion data will be sparse
  - knowledge-based recommendation may be better
- Preference stability
  - best case for learning-based techniques

Scrutability
- “The property of being testable; open to inspection.” (Wiktionary)
- Usually refers to the explanatory capabilities of a recommender system
- Some domains need explanations of recommendations
  - usually high risk
  - also domains where users are non-experts
    - complex products like digital cameras

Considerations
- Learning-based recommendations are hard to explain
  - the underlying models are statistical
  - there is some research in this area, but no conclusive “best way” to explain

Impact
- Knowledge-based techniques are usually more scrutable

Portfolio
- The “portfolio effect” occurs when an item, once purchased or viewed, is no longer interesting
- Not always the case
  - I can recommend your favorite song again in a couple of days
- Sometimes recommendations have to take into account the entire history
  - investments, for example

Considerations
- A domain with the portfolio effect requires knowledge of the user's history
  - standard formulation of collaborative recommendation: only recommend items that are unrated
- A music recommender might need to know
  - when a track was played
  - what a reasonable time span between repeats is
  - how to avoid over-rotation
- News recommendation
  - tricky, because new stories on the same topic might be interesting
  - as long as there is new material
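Both history checks can be sketched as candidate filters: the standard collaborative rule (drop anything already rated) and a music-style rule that allows a repeat only after a minimum gap. The class name, the use of string item ids, the map of hours since last play, and the `minGapHours` setting are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

class PortfolioFilter {
    // Standard collaborative formulation: drop items the user has already rated.
    static List<String> unratedOnly(List<String> candidates, Set<String> rated) {
        List<String> kept = new ArrayList<>();
        for (String item : candidates) {
            if (!rated.contains(item)) {
                kept.add(item);
            }
        }
        return kept;
    }

    // Music variant: allow a repeat only if the track was last played at least minGapHours ago.
    // lastPlayedHoursAgo maps track id -> hours since last play (hypothetical input).
    static List<String> avoidOverRotation(List<String> candidates,
                                          Map<String, Double> lastPlayedHoursAgo,
                                          double minGapHours) {
        List<String> kept = new ArrayList<>();
        for (String track : candidates) {
            Double hoursAgo = lastPlayedHoursAgo.get(track);
            // Never-played tracks pass; played tracks pass once the gap has elapsed.
            if (hoursAgo == null || hoursAgo >= minGapHours) {
                kept.add(track);
            }
        }
        return kept;
    }
}
```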

Impact
- A problem for content-based recommendation
  - another copy of an item will match best
  - must have another way to identify overlap
  - or a “not too similar” threshold
- Domain-specific requirements for rotation and portfolio composition
  - domain knowledge requirement
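One way to implement a “not too similar” threshold is to compare each candidate's feature vector against items the user already has and drop near-copies. This sketch assumes items are numeric feature vectors and uses cosine similarity with a hypothetical cutoff `maxSim`; any other similarity measure could be swapped in the same way.

```java
import java.util.ArrayList;
import java.util.List;

class NearDuplicateFilter {
    // Cosine similarity between two feature vectors of equal length.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0.0;
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns the indices of candidates whose similarity to every already-seen item
    // stays below maxSim, i.e. candidates that are not near-copies.
    static List<Integer> notTooSimilar(List<double[]> candidates, List<double[]> seen, double maxSim) {
        List<Integer> kept = new ArrayList<>();
        for (int i = 0; i < candidates.size(); i++) {
            boolean duplicate = false;
            for (double[] s : seen) {
                if (cosine(candidates.get(i), s) >= maxSim) {
                    duplicate = true;
                    break;
                }
            }
            if (!duplicate) kept.add(i);
        }
        return kept;
    }
}
```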

Novelty
- “Milk and bananas”
  - the two most-purchased items in US grocery stores
- Could recommend to everybody
  - correct very frequently
- But...
  - not interesting
  - people know they want these things
  - low profit margin
  - recommender very predictable

Consideration
- Think about items
  - where the target user's predicted rating is significantly higher than the average
  - where there is high variance (difference of opinion)
- These recommendations might be more valuable
  - more “personal”
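The two criteria can be sketched as a check on an item's rating distribution. The Java below assumes the item's ratings from other users and a predicted rating for the target user are already available; requiring both a minimum lift over the item average and a minimum variance is a choice made in this sketch, and both thresholds are hypothetical.

```java
import java.util.List;

class NoveltyCheck {
    static double mean(List<Double> xs) {
        double sum = 0;
        for (double x : xs) sum += x;
        return sum / xs.size();
    }

    // Population variance of the item's ratings: a rough measure of disagreement.
    static double variance(List<Double> xs) {
        double m = mean(xs);
        double sq = 0;
        for (double x : xs) sq += (x - m) * (x - m);
        return sq / xs.size();
    }

    // Flags an item as a "personal" recommendation when the target user's predicted rating
    // beats the item average by at least minLift and opinions about the item are divided.
    // minLift and minVariance are hypothetical thresholds, not values from the slides.
    static boolean isPersonal(double predicted, List<Double> itemRatings,
                              double minLift, double minVariance) {
        return predicted - mean(itemRatings) >= minLift
                && variance(itemRatings) >= minVariance;
    }
}
```

With both thresholds at 1.0, an item everyone rates 4 (a “milk and bananas” item) fails even for a predicted 4.2, while a divisive item rated 1, 5, 1, 5 (mean 3, variance 4) passes for a user predicted to give it 4.8.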

Impact
- Collaborative methods are vulnerable to the “tyranny of the crowd”
  - the “Coldplay” effect
- May be necessary to
  - smooth popularity spikes
  - use thresholds

Categorize Domains
- 15 min
- 10 min discussion

Break
- 10 minutes

Interaction
- Input
  - implicit
  - explicit
- Duration
  - single response
  - multi-step
- Modeling
  - short-term
  - long-term

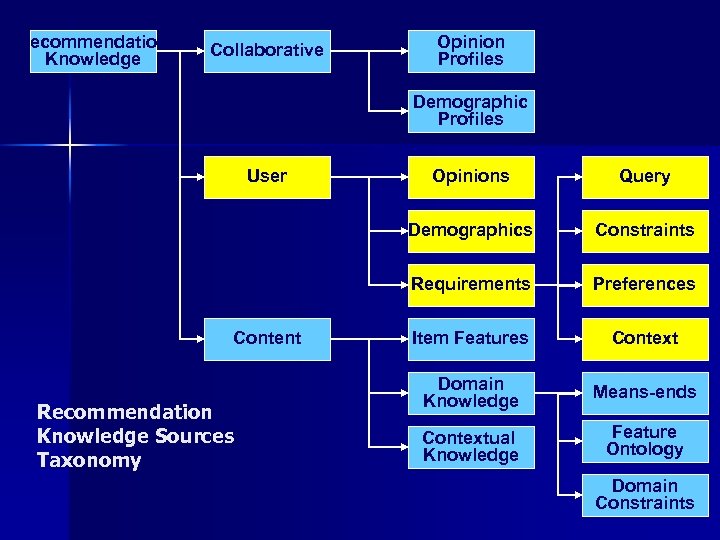

Recommendation Knowledge Sources Taxonomy
- Recommendation Knowledge
  - Collaborative
    - Opinion Profiles
    - Demographic Profiles
  - User
    - Opinions
    - Demographics
    - Requirements
      - Query
      - Constraints
      - Preferences
      - Context
  - Content
    - Item Features
    - Domain Knowledge
      - Means-ends
      - Contextual Knowledge
      - Feature Ontology
      - Domain Constraints

Also, Output
- How to present results to users?

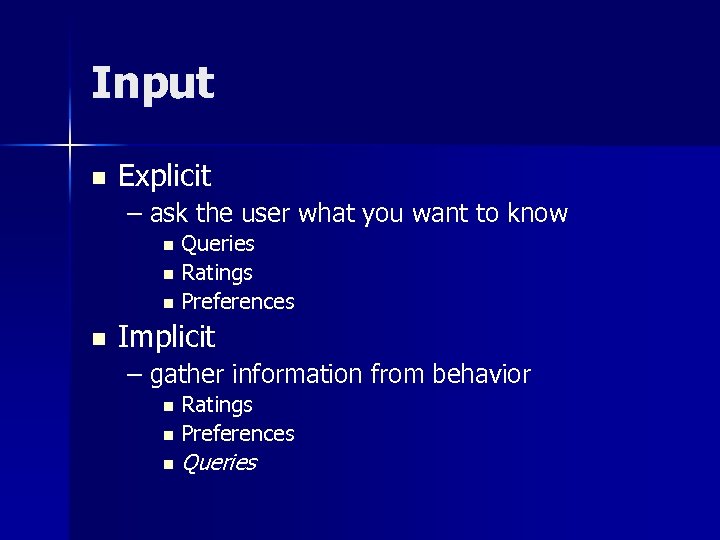

Input
- Explicit: ask the user what you want to know
  - queries
  - ratings
  - preferences
- Implicit: gather information from behavior
  - ratings
  - preferences
  - queries

Explicit Queries
- Problem: the query elicitation problem
  - how to get the user's preferred features / constraints?
- Issues
  - user expertise / terminology

Example
- Ambiguity: “madonna and child”
- Imprecision: “a fast processor”
- Terminological mismatch: “an iTunes player”
- Lack of awareness: (I hate lugging a heavy laptop)

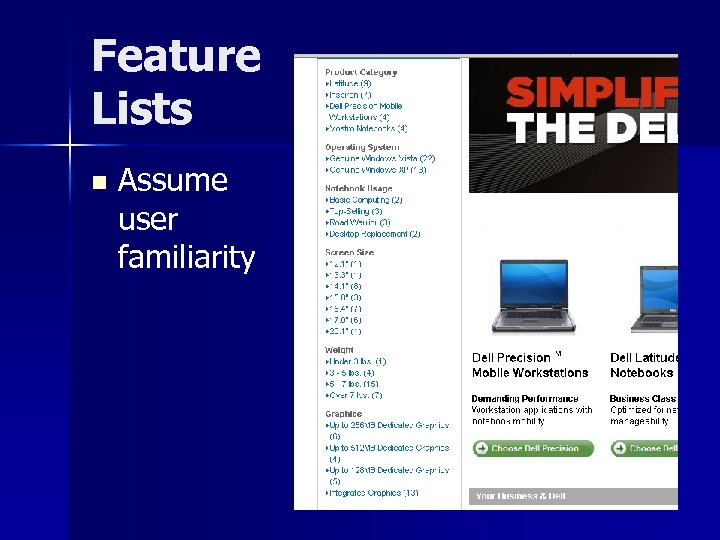

Feature Lists
- Assume user familiarity

Recommendation Dialog
- Fewer questions
  - future questions can depend on current answers
- Mixed-initiative
  - the recommender can propose solutions
- Critiquing
  - examining solutions can help users define requirements
  - (more about critiquing later)

Implicit Evidence
- Watch the user's behavior
  - infer preferences
- Benefit
  - no extra user effort
  - no terminological gap
- Typical sources
  - web server logs (more about this later)
  - purchase / shopping cart history
  - CRM interactions

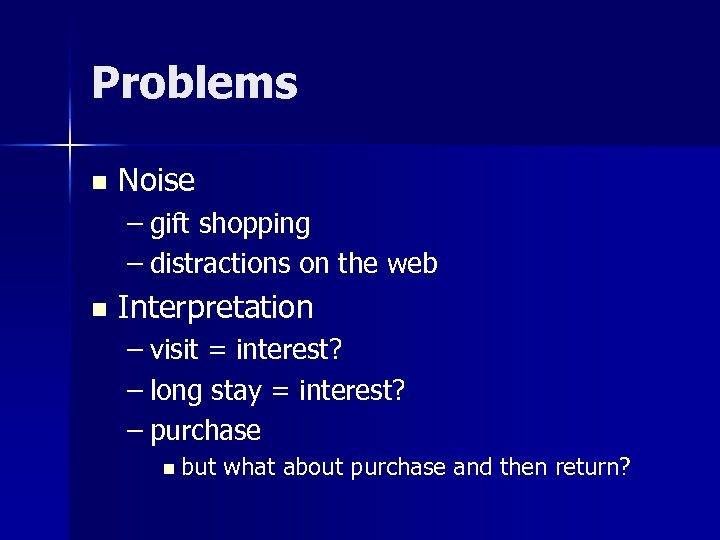

Problems
- Noise
  - gift shopping
  - distractions on the web
- Interpretation
  - visit = interest?
  - long stay = interest?
  - purchase
    - but what about purchase and then return?
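The interpretation problem can be illustrated by mapping logged events to an implicit interest score. The event types and weights below are hypothetical choices for illustration only; the point is that every kind of evidence needs an explicit interpretation, including a return that cancels a purchase.

```java
import java.util.List;

// Hypothetical behavior events gathered from logs for one user and one item.
enum EventType { VISIT, LONG_STAY, PURCHASE, RETURN }

class ImplicitScore {
    // Hypothetical weights: how strongly each event suggests interest.
    static double weight(EventType e) {
        switch (e) {
            case VISIT:     return 0.1;
            case LONG_STAY: return 0.3;
            case PURCHASE:  return 1.0;
            case RETURN:    return -1.0; // a return cancels the purchase signal
            default:        throw new IllegalArgumentException("unknown event " + e);
        }
    }

    // Sums event weights and clamps the result to [0, 1] as an implicit "rating".
    static double score(List<EventType> events) {
        double s = 0.0;
        for (EventType e : events) s += weight(e);
        return Math.max(0.0, Math.min(1.0, s));
    }
}
```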

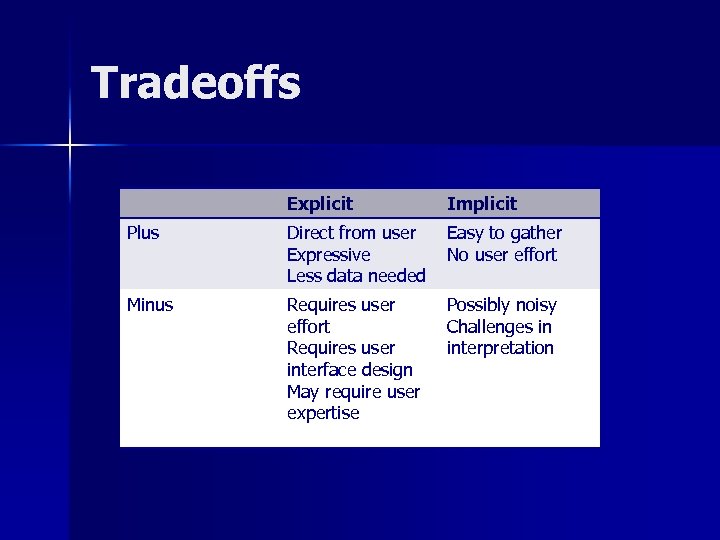

Tradeoffs

| | Explicit | Implicit |
|---|---|---|
| Plus | Direct from user; expressive; less data needed | Easy to gather; no user effort |
| Minus | Requires user effort; requires user interface design; may require user expertise | Possibly noisy; challenges in interpretation |

Modeling
- Short-term
  - usually we mean “single-session”
- Long-term
  - multi-session

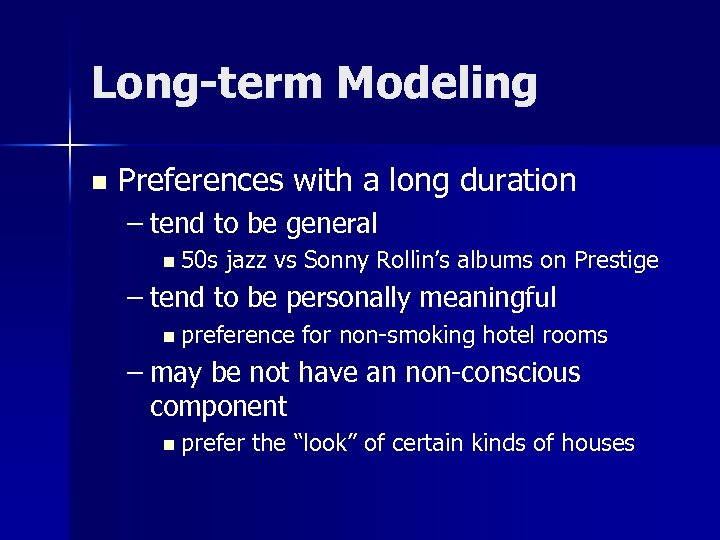

Long-term Modeling
- Preferences with a long duration
  - tend to be general
    - 50s jazz vs. Sonny Rollins's albums on Prestige
  - tend to be personally meaningful
    - preference for non-smoking hotel rooms
  - may have a non-conscious component
    - prefer the “look” of certain kinds of houses

Short-term Modeling
- What does the user want right now?
  - usually need some kind of query
- Preferences with short duration
  - may be very task-specific
    - preference for a train that connects with my arriving flight

Application design
- Have to consider the role of recommendation in the overall application
  - how would the user want to interact?
  - how can the recommendation be delivered?

Simple Coding Exercise
- Recommender systems evaluation framework
  - a bit different from what you would use for a production system
  - goal: to evaluate different alternatives

Three exercises
- Implement a simple baseline
  - average prediction
- Implement a new similarity metric
  - Jaccard coefficient
- Evaluate results on a data set
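For the second exercise, the Jaccard coefficient measures the overlap between two sets as |A ∩ B| / |A ∪ B|. This sketch assumes a user profile is reduced to the set of item ids the user has rated, ignoring rating values; how the workspace's `JaccardPredictor` represents profiles is not stated here, so the types are assumptions.

```java
import java.util.HashSet;
import java.util.Set;

class Jaccard {
    // Jaccard coefficient |A ∩ B| / |A ∪ B| over the sets of items two users have rated.
    // Treating a profile as a set of rated item ids (ignoring values) is an assumption.
    static double similarity(Set<Integer> itemsA, Set<Integer> itemsB) {
        if (itemsA.isEmpty() && itemsB.isEmpty()) return 0.0; // avoid 0/0
        Set<Integer> intersection = new HashSet<>(itemsA);
        intersection.retainAll(itemsB);
        Set<Integer> union = new HashSet<>(itemsA);
        union.addAll(itemsB);
        return (double) intersection.size() / union.size();
    }
}
```

Users who rated items {1, 2, 3} and {2, 3, 4} share 2 of 4 distinct items, giving a similarity of 0.5.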

Download
- http://www.ist.tugraz.at/rec09.html
- Eclipse workspace file
  - student-ws.zip

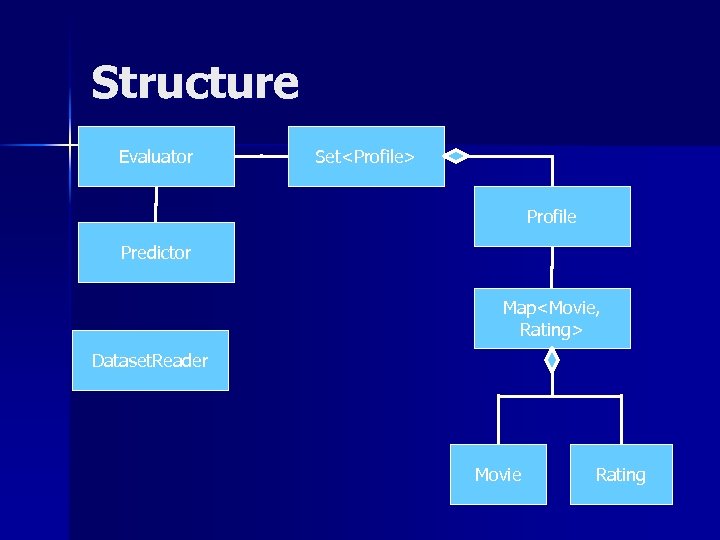

Structure (class diagram): Evaluator, Predictor, DatasetReader, Profile, Movie, and Rating, with collections Set<Profile> and Map<Movie, Rating>

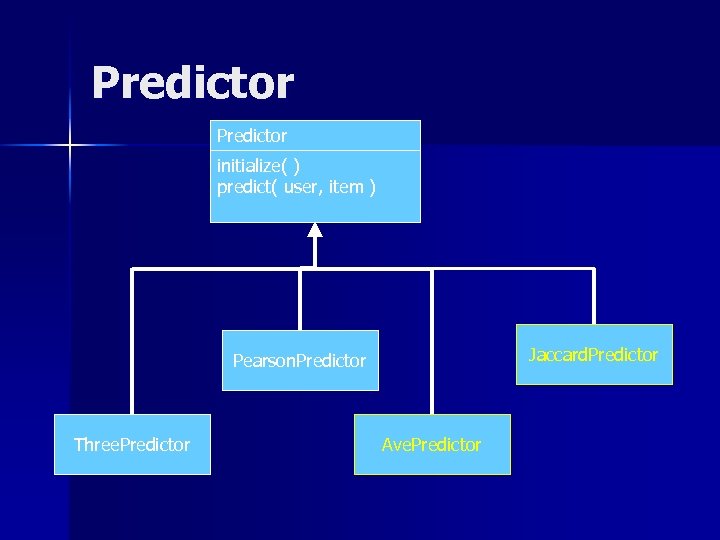

Predictor
- initialize()
- predict(user, item)
- Implementations: JaccardPredictor, PearsonPredictor, ThreePredictor, AvePredictor
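The predictor hierarchy can be sketched as an interface with one trivial implementation. The parameter and return types are assumptions rather than the workspace's actual signatures, and the constant-3 behavior of `ThreePredictor` is inferred from its name (3 is the midpoint of a 1-5 rating scale).

```java
// Sketch of the Predictor abstraction from the class diagram.
// Parameter and return types are assumptions, not the workspace's actual signatures.
interface Predictor {
    // Precompute whatever the method needs (averages, similarities, ...).
    void initialize();

    // Predicted rating of the item for the user.
    double predict(int user, int item);
}

// Assumed behavior: a constant guess of 3 regardless of user or item.
class ThreePredictor implements Predictor {
    @Override
    public void initialize() {
        // nothing to precompute
    }

    @Override
    public double predict(int user, int item) {
        return 3.0;
    }
}
```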

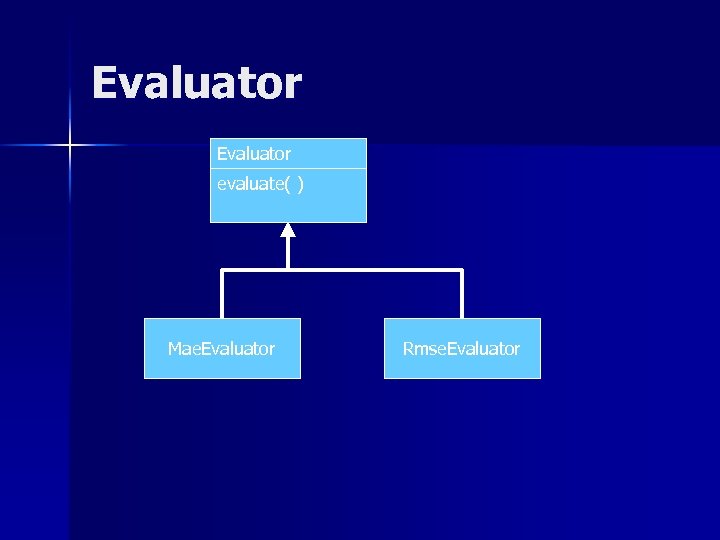

Evaluator
- evaluate()
- Implementations: MaeEvaluator, RmseEvaluator
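The two evaluators compute standard error statistics over pairs of predicted and actual ratings: mean absolute error (MAE) and root mean squared error (RMSE). This is a minimal sketch over plain arrays; `ErrorMetrics` is a hypothetical helper, not one of the framework's classes.

```java
class ErrorMetrics {
    // Mean absolute error: average of |predicted - actual|.
    static double mae(double[] predicted, double[] actual) {
        checkLengths(predicted, actual);
        double sum = 0.0;
        for (int i = 0; i < predicted.length; i++) {
            sum += Math.abs(predicted[i] - actual[i]);
        }
        return sum / predicted.length;
    }

    // Root mean squared error: square root of the average squared difference.
    // Squaring penalizes large errors more heavily than MAE does.
    static double rmse(double[] predicted, double[] actual) {
        checkLengths(predicted, actual);
        double sum = 0.0;
        for (int i = 0; i < predicted.length; i++) {
            double d = predicted[i] - actual[i];
            sum += d * d;
        }
        return Math.sqrt(sum / predicted.length);
    }

    private static void checkLengths(double[] p, double[] a) {
        if (p.length != a.length || p.length == 0) {
            throw new IllegalArgumentException("need equal-length, non-empty arrays");
        }
    }
}
```

For predictions 3, 3, 3 against actual ratings 4, 2, 5, the errors are 1, 1, 2, so MAE = 4/3 ≈ 1.33 and RMSE = √2 ≈ 1.41; RMSE is higher because it weights the larger error more.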

Basic flow
1. Create a dataset reader for the dataset
2. Read the profiles
3. Create a predictor using the profiles
4. Create an evaluator for the predictor
5. Call the evaluate method
6. Output the evaluation statistic

PearsonPredictor
- Similarity caching
  - we need to calculate each user's similarity to the others anyway, for each prediction
  - might as well do it only once
    - standard time vs. space tradeoff
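Similarity caching is memoization: compute each user pair's similarity the first time it is needed and reuse it afterwards, trading memory for time. This sketch assumes integer user ids and a symmetric similarity function supplied by the caller; `SimilarityCache` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiFunction;

// Memoizes a symmetric user-user similarity so each pair is computed at most once.
class SimilarityCache {
    private final Map<Long, Double> cache = new HashMap<>();
    private final BiFunction<Integer, Integer, Double> similarity;
    int computations = 0; // counts cache misses, for illustration

    SimilarityCache(BiFunction<Integer, Integer, Double> similarity) {
        this.similarity = similarity;
    }

    double get(int u, int v) {
        // Order the pair so that sim(u, v) and sim(v, u) share one cache entry.
        int lo = Math.min(u, v);
        int hi = Math.max(u, v);
        long key = ((long) lo << 32) | (hi & 0xffffffffL);
        Double cached = cache.get(key);
        if (cached != null) return cached;
        double s = similarity.apply(lo, hi);
        computations++;
        cache.put(key, s);
        return s;
    }
}
```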

Data sets
- Four data sets
  - Tiny (3 users)
    - synthetic
    - for unit testing
  - Test (5 users)
    - also synthetic
    - for quick tests
  - U-filtered
    - subset of the MovieLens dataset
  - Full MovieLens 100K dataset
    - standard for recommendation research
    - u

Demo

Task
- Implement a better baseline
- ThreePredictor is weak
  - better to use the item average

AvePredictor
- Non-personalized prediction
  - what Amazon.com shows
- Idea
  - cache the average score for an item
  - when predict(user, item) is called, ignore the target user
- Better idea
  - normalize by each user's average
  - for the item, calculate each rater's deviation above that rater's own average
  - average these deviations
  - add the result to the target user's average
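The “better idea” can be sketched as follows. This is one possible reading of the slide, not the expected assignment solution: it assumes ratings are held as a map from user id to (item id to rating), `NormalizedAveragePredictor` is a hypothetical name, and falling back to the user's own average for an item nobody has rated is a choice of this sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Item average computed on user-normalized ratings.
// ratings maps user id -> (item id -> rating); this data layout is an assumption.
class NormalizedAveragePredictor {
    private final Map<Integer, Map<Integer, Double>> ratings;
    private final Map<Integer, Double> userMean = new HashMap<>();
    private final Map<Integer, Double> itemDeviation = new HashMap<>();

    NormalizedAveragePredictor(Map<Integer, Map<Integer, Double>> ratings) {
        this.ratings = ratings;
    }

    void initialize() {
        // 1. Each user's average rating (users with empty profiles are skipped).
        for (Map.Entry<Integer, Map<Integer, Double>> u : ratings.entrySet()) {
            if (u.getValue().isEmpty()) continue;
            double sum = 0.0;
            for (double r : u.getValue().values()) sum += r;
            userMean.put(u.getKey(), sum / u.getValue().size());
        }
        // 2. For each item, the average deviation of its ratings above each rater's mean.
        Map<Integer, Double> devSum = new HashMap<>();
        Map<Integer, Integer> devCount = new HashMap<>();
        for (Map.Entry<Integer, Map<Integer, Double>> u : ratings.entrySet()) {
            if (u.getValue().isEmpty()) continue;
            double mean = userMean.get(u.getKey());
            for (Map.Entry<Integer, Double> ir : u.getValue().entrySet()) {
                devSum.merge(ir.getKey(), ir.getValue() - mean, Double::sum);
                devCount.merge(ir.getKey(), 1, Integer::sum);
            }
        }
        for (Map.Entry<Integer, Double> e : devSum.entrySet()) {
            itemDeviation.put(e.getKey(), e.getValue() / devCount.get(e.getKey()));
        }
    }

    // 3. Target user's average plus the item's average deviation.
    //    An unrated item contributes no deviation, so the user's average is returned.
    double predict(int user, int item) {
        Double mean = userMean.get(user);
        if (mean == null) throw new IllegalArgumentException("unknown user " + user);
        return mean + itemDeviation.getOrDefault(item, 0.0);
    }
}
```

For example, a user whose ratings average 1.0, asked about an item that its raters scored 1.0 above their own averages, gets a prediction of 2.0.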

Existing unit test

Compare
- With PearsonPredictor

Process
- Class time scheduled for 18:00-20:00
  - use this time to complete the assignment
- Due before class tomorrow
- Work in pairs if you prefer
- Submit by email
  - rburke@cs.depaul.edu
  - subject line: GRAZ H1
  - body: names of students
  - attach: AvePredictor.java
