e3389cc2ce9b4e0fe075bd95b6ec18db.ppt

- Number of slides: 29

RavenClaw

Yet another (please read “An improved”) dialog management architecture for task-oriented spoken dialog systems

Presented by: Dan Bohus (dbohus@cs.cmu.edu)

Work by: Dan Bohus, Alex Rudnicky

Carnegie Mellon University, 2002


New DM Architecture: Goals

- Able to handle complex, goal-directed dialogs
  - Go beyond (information access systems and) the slot-filling paradigm
- Easy to develop and maintain systems
  - Developer focuses only on dialog task
  - Automatically ensure a minimum set of task-independent, conversational skills
- Open to learning (hopefully both at task and discourse levels)
- Open to dynamic SDS generation
- More careful, more structured code, logs, etc.: provide a robust basis for future research.

11-04-01 Modeling the cost of misunderstanding … 2


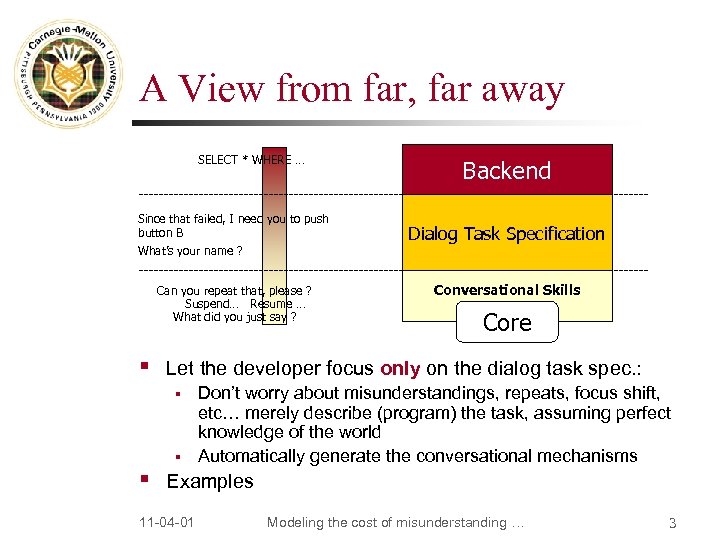

A View from far, far away

[Diagram: Backend, Dialog Task Specification, Core, Conversational Skills. Example utterances: “SELECT * WHERE …”, “Since that failed, I need you to push button B”, “What’s your name?”, “Can you repeat that, please?”, “Suspend… Resume…”, “What did you just say?”]

- Let the developer focus only on the dialog task spec.:
  - Don’t worry about misunderstandings, repeats, focus shift, etc.; merely describe (program) the task, assuming perfect knowledge of the world
  - Automatically generate the conversational mechanisms
  - Examples


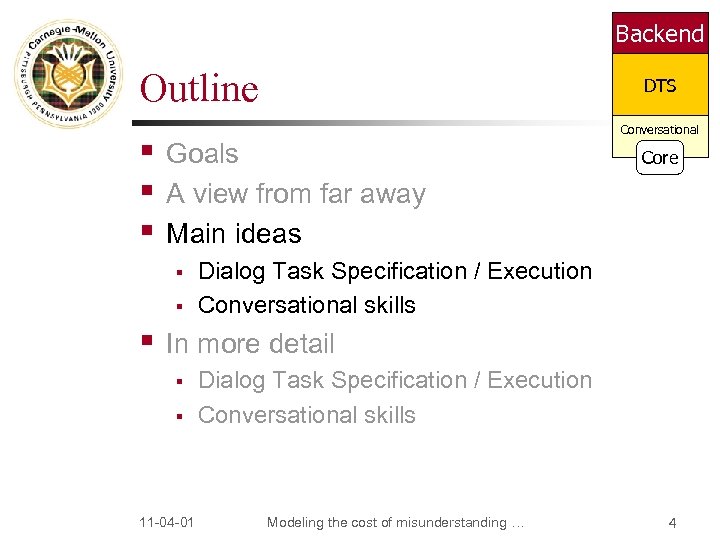

Outline

[Diagram labels: Backend, DTS, Core, Conversational]

- Goals
- A view from far away
- Main ideas
  - Dialog Task Specification / Execution
  - Conversational skills
- In more detail
  - Dialog Task Specification / Execution
  - Conversational skills


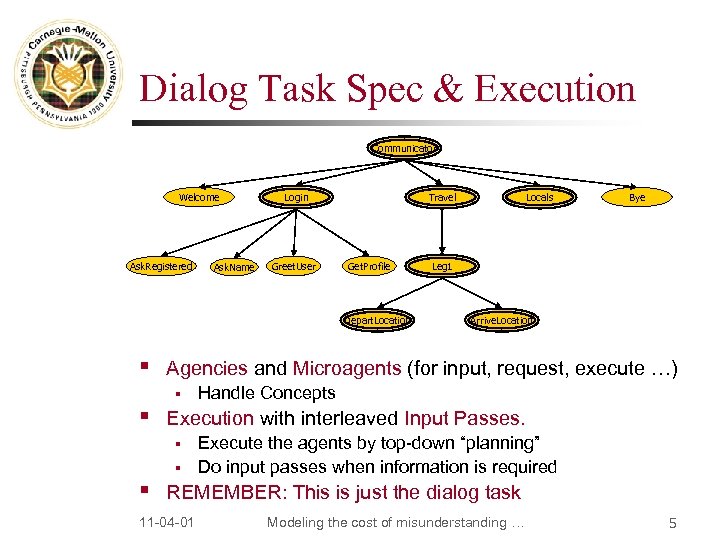

Dialog Task Spec & Execution

[Task tree diagram; node labels: Communicator, Welcome, Login, AskRegistered, AskName, GreetUser, GetProfile, Travel, Leg1, DepartLocation, ArriveLocation, Locals, Bye]

- Agencies and Microagents (for input, request, execute …)
- Handle Concepts
- Execution with interleaved Input Passes.
  - Execute the agents by top-down “planning”
  - Do input passes when information is required
- REMEMBER: This is just the dialog task


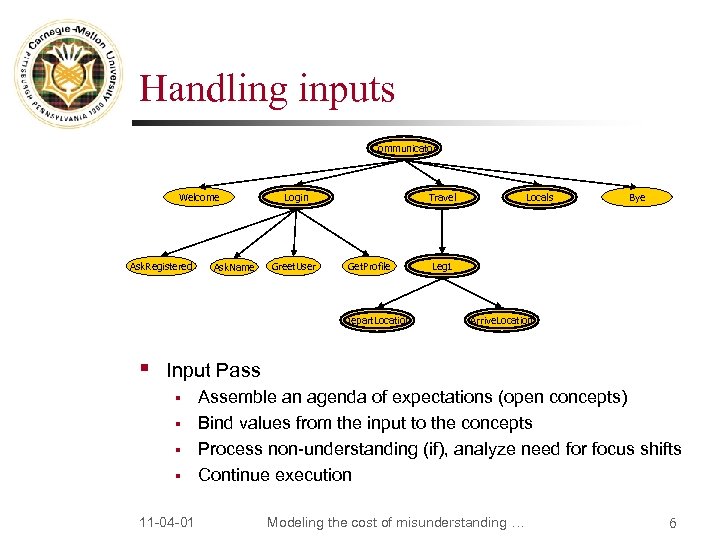

Handling inputs

[Task tree diagram; node labels: Communicator, Welcome, Login, AskRegistered, AskName, GreetUser, GetProfile, Travel, Leg1, DepartLocation, ArriveLocation, Locals, Bye]

- Input Pass
  - Assemble an agenda of expectations (open concepts)
  - Bind values from the input to the concepts
  - Process non-understanding (if any), analyze need for focus shifts
  - Continue execution
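The input pass steps above can be sketched roughly as follows. This is an illustrative Python sketch, not RavenClaw code (the slides state that the actual agents are C++ classes). `Agent`, `assemble_agenda`, and `input_pass` are hypothetical names, and treating an input that binds nothing as a non-understanding is an assumption made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Agent:
    """Hypothetical task-tree node; `expects` lists the concepts it waits for."""
    name: str
    expects: List[str] = field(default_factory=list)
    children: List["Agent"] = field(default_factory=list)


def assemble_agenda(agent: Agent) -> List[str]:
    """Collect open concepts top-down, parent before children."""
    agenda = list(agent.expects)
    for child in agent.children:
        agenda.extend(assemble_agenda(child))
    return agenda


def input_pass(root: Agent, parsed: Dict[str, str],
               concepts: Dict[str, str]) -> Tuple[Dict[str, str], bool]:
    """Bind expected slots; flag a non-understanding if nothing was bound."""
    agenda = assemble_agenda(root)
    bound = {slot: value for slot, value in parsed.items() if slot in agenda}
    concepts.update(bound)
    return bound, not bound  # assumption: empty binding == non-understanding


login = Agent("Login", children=[
    Agent("AskRegistered", expects=["registered"]),
    Agent("AskName", expects=["name"]),
])
concepts: Dict[str, str] = {}
bound, non_understanding = input_pass(
    login, {"name": "dan", "weather": "sunny"}, concepts)
print(bound, non_understanding)
```

Slots that no agent expects (here `weather`) are simply ignored by the binding step; a real system would also have to decide whether such input calls for a focus shift.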


Conversational Skills / Mechanisms

- A lot of problems in SDS are generated by a lack of conversational skills. “It’s all in the little details!”
  - Dealing with misunderstandings
  - Generic channel/dialog mechanisms: repeats, focus shift, context establishment, help, start over, etc.
  - Timing
- Even when these mechanisms are in, they lack uniformity & consistency.
- Development and maintenance are time consuming.


Conversational Skills / Mechanisms

- More or less task-independent mechanisms:
  - Implicit/Explicit Confirmations, Clarifications, Disambiguation = the whole Misunderstandings problem
  - Context reestablishment
  - Timeout and Barge-in control
  - Back-channel absorption
  - Generic dialog mechanisms:
    - Repeat, Suspend… Resume, Help, Start over, Summarize, Undo, Querying the system’s belief
- The core takes care of these by dynamically inserting into the task tree agencies which handle these mechanisms.


Outline

[Diagram labels: Backend, DTS, Core, Conversational]

- Goals
- A view from far away
- Main ideas
  - Dialog Task Specification / Execution
  - Conversational skills
- In more detail
  - Dialog Task Specification / Execution
  - Conversational skills


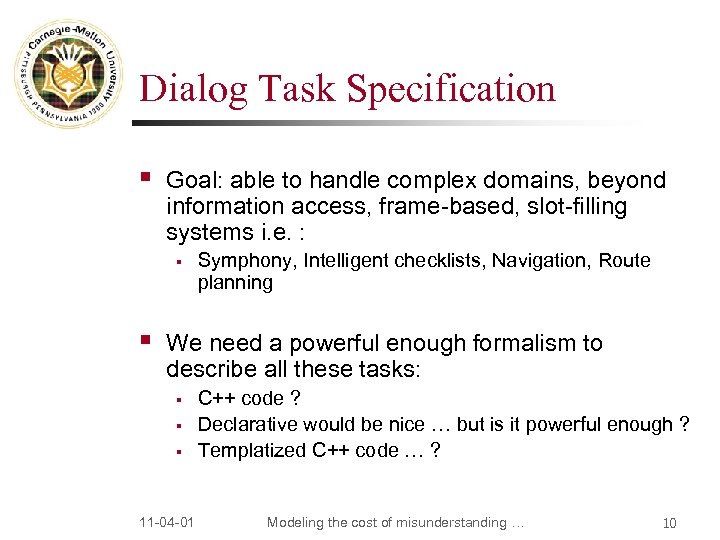

Dialog Task Specification

- Goal: able to handle complex domains, beyond information access, frame-based, slot-filling systems, e.g.:
  - Symphony, Intelligent checklists, Navigation, Route planning
- We need a powerful enough formalism to describe all these tasks:
  - C++ code?
  - Declarative would be nice … but is it powerful enough?
  - Templatized C++ code …?


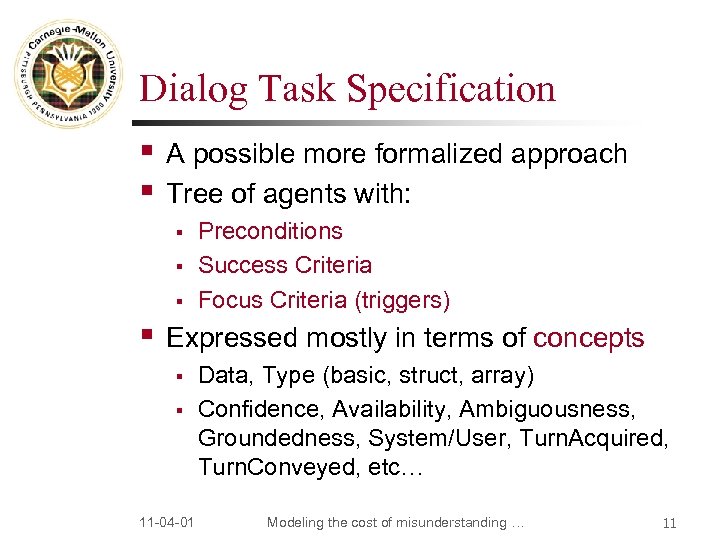

Dialog Task Specification

- A possible more formalized approach
- Tree of agents with:
  - Preconditions
  - Success Criteria
  - Focus Criteria (triggers)
- Expressed mostly in terms of concepts
  - Data, Type (basic, struct, array)
  - Confidence, Availability, Ambiguousness, Groundedness, System/User, TurnAcquired, TurnConveyed, etc.
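One way to picture agents whose criteria are expressed over concepts is the following Python sketch. It is only an illustration under assumptions: the slides describe C++ classes, and `Concept`, `Agent`, `is_executable`, and the choice of fields (a subset of the attributes listed above) are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


@dataclass
class Concept:
    """Hypothetical concept record carrying a few of the listed attributes."""
    name: str
    value: Any = None
    confidence: float = 0.0
    grounded: bool = False
    turn_acquired: Optional[int] = None

    @property
    def available(self) -> bool:
        return self.value is not None

    def update(self, value: Any, confidence: float, turn: int) -> None:
        self.value = value
        self.confidence = confidence
        self.turn_acquired = turn


Concepts = Dict[str, Concept]
Criterion = Callable[[Concepts], bool]


@dataclass
class Agent:
    """Agent with precondition, success, and focus (trigger) criteria."""
    name: str
    precondition: Criterion = lambda c: True
    success: Criterion = lambda c: False
    trigger: Criterion = lambda c: False

    def is_executable(self, concepts: Concepts) -> bool:
        # Runnable when the precondition holds and success is not yet reached.
        return self.precondition(concepts) and not self.success(concepts)


concepts = {n: Concept(n) for n in ("registered", "name")}
ask_name = Agent(
    "AskName",
    precondition=lambda c: c["registered"].value is False,
    success=lambda c: c["name"].available,
)
```

Because every criterion is a predicate over the shared concept table, the core can re-evaluate them after each input pass without knowing anything about the task itself.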


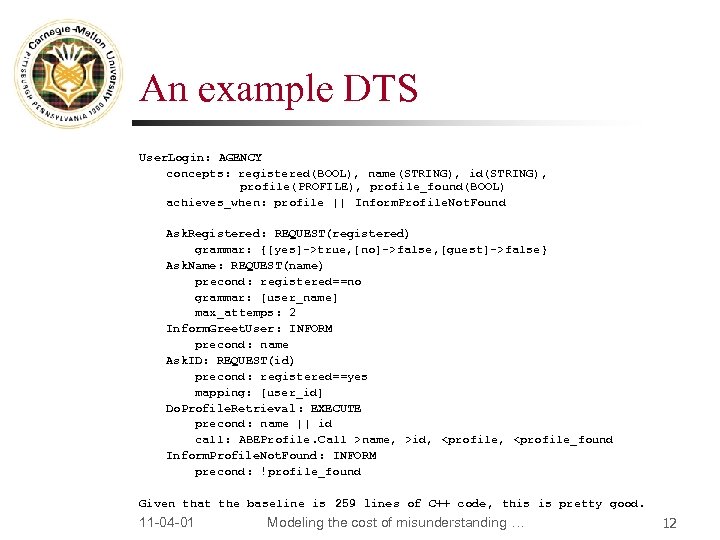

An example DTS

- UserLogin: AGENCY
  - concepts: registered(BOOL), name(STRING), id(STRING), profile(PROFILE), profile_found(BOOL)
  - achieves_when: profile || InformProfileNotFound
  - AskRegistered: REQUEST(registered)
    - grammar: {[yes]->true, [no]->false, [guest]->false}
  - AskName: REQUEST(name)
    - precond: registered==no
    - grammar: [user_name]
    - max_attempts: 2
  - InformGreetUser: INFORM
    - precond: name
  - AskID: REQUEST(id)
    - precond: registered==yes
    - mapping: [user_id]
  - DoProfileRetrieval: EXECUTE
    - precond: name || id
    - call: ABEProfile.Call >name, >id,
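To make the `REQUEST` entries more concrete, here is a small Python sketch of how a request microagent might apply its grammar mapping. The `Request` class and its methods are hypothetical, and reading the attempt limit as "give up after that many tries" is an assumption; the slides do not define its semantics.

```python
class Request:
    """Hypothetical REQUEST microagent: fills one concept via a grammar mapping."""

    def __init__(self, concept, grammar, max_attempts=None):
        self.concept = concept
        self.grammar = grammar            # grammar slot -> concept value
        self.max_attempts = max_attempts  # None means no limit (assumption)
        self.attempts = 0

    def handle(self, slot, concepts):
        """Bind the concept if the slot is in the grammar; True on success."""
        self.attempts += 1
        if slot in self.grammar:
            concepts[self.concept] = self.grammar[slot]
            return True
        return False

    def gave_up(self):
        """True once the attempt limit, if any, has been reached."""
        return self.max_attempts is not None and self.attempts >= self.max_attempts


# Mirrors AskRegistered: {[yes]->true, [no]->false, [guest]->false}
ask_registered = Request("registered", {"yes": True, "no": False, "guest": False})
```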


Can a formalism cut it?

- People have repeatedly tried formalizing dialog … and failed
  - We’re focusing only on the task (like in robotics/execution)
  - Actually, these agents are all C++ classes, so we can back off to code; the hope is that most of the behaviors can be easily expressed as above.


Other Ideas for DTS

- 4 Microagents: Inform, Request, Expect, Execute
- Provide a library of “common task” and “common discourse” agencies
  - Frame agency
  - List browse agency
  - Choose agency
  - Disambiguate agency, Ground agency, …
  - Etc.


DTS execution

- Agency.Execute() decides what is executed next
  - Various simple policies can be implemented
    - Left-to-right (open/closed), choice, etc.
  - But free to do more sophisticated things (MDPs, etc.) ~ learning at the task level
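A left-to-right policy of the kind mentioned above could look like the Python sketch below. All names (`Agent`, `Agency`, `left_to_right`, `execute`) are hypothetical stand-ins, the real agents are C++ classes according to the slides, and the rule "an agency is done when none of its children is runnable" is an assumption.

```python
class Agent:
    """Hypothetical leaf agent with a precondition and a completion test."""

    def __init__(self, name, precond=None, done=None):
        self.name = name
        self._precond = precond or (lambda c: True)
        self._done = done or (lambda c: False)

    def precond(self, concepts):
        return self._precond(concepts)

    def done(self, concepts):
        return self._done(concepts)


def left_to_right(children, concepts):
    """Pick the first child whose precondition holds and that is not done."""
    for child in children:
        if child.precond(concepts) and not child.done(concepts):
            return child
    return None


class Agency(Agent):
    """Inner node; the pluggable `policy` decides which child runs next."""

    def __init__(self, name, children, policy=left_to_right, precond=None):
        super().__init__(name, precond=precond)
        self.children = children
        self.policy = policy

    def execute(self, concepts):
        """Return the leaf agent to run next, or None if nothing is runnable."""
        child = self.policy(self.children, concepts)
        return child.execute(concepts) if isinstance(child, Agency) else child

    def done(self, concepts):
        # Assumption: an agency is done when no child is runnable.
        return self.execute(concepts) is None


login = Agency("Login", [
    Agent("AskRegistered", done=lambda c: "registered" in c),
    Agent("AskName", precond=lambda c: c.get("registered") is False,
          done=lambda c: "name" in c),
])
root = Agency("Communicator", [login, Agent("Bye")])
```

Swapping `left_to_right` for another callable with the same signature is where a learned policy could plug in without touching the tree.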


Input Pass

1. Construct an agenda of expectations
   - (Partially?) ordered list of concepts expected by the system
2. Bind values/confidences to concepts
   - The system-initiative (SI) <> mixed-initiative (MI) spectrum can be expressed in terms of the way the agenda is constructed and the binding policies, independent of task
3. Process non-understandings (if any): try to detect the source and inform the user:
   - Channel (SNR, clipping)
   - Decoding (confidence score, prosody)
   - Parsing ([garble])
   - Dialog level (POK, but no expectation)
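The claim that the SI <> MI spectrum falls out of agenda construction can be illustrated with a small Python sketch. It assumes, purely for illustration, that the agenda is built by walking from the focused agent up toward the root and that a `scope` parameter limits how far the walk goes; `build_agenda`, `bind`, and `scope` are hypothetical names.

```python
def build_agenda(focus_path, scope):
    """Ordered agenda from the first `scope` agents on the focus path.

    focus_path: list of (agent_name, expected_concepts), focused agent first,
    root last. scope=1 approximates system initiative; a larger scope lets
    the user address concepts expected higher up (more mixed initiative).
    """
    agenda = []
    for _agent, expected in focus_path[:scope]:
        for concept in expected:
            if concept not in agenda:
                agenda.append(concept)
    return agenda


def bind(agenda, parsed):
    """Keep only the parsed slots that appear on the agenda."""
    return {slot: value for slot, value in parsed.items() if slot in agenda}


path = [
    ("AskName", ["name"]),
    ("Login", ["registered", "id"]),
    ("Communicator", ["depart_location"]),
]
parsed = {"depart_location": "Pittsburgh"}
system_initiative = bind(build_agenda(path, scope=1), parsed)
mixed_initiative = bind(build_agenda(path, scope=len(path)), parsed)
```

With the narrow scope the off-topic answer is dropped; with the full path it is bound, and neither function refers to anything task-specific.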


Input Pass

4. Focus shifts
   - Focus shifts seem to be task dependent. The decision to shift focus is taken by the task (DTS)
   - But they also have a task-independent (TI) side (sub-dialog size, context reestablishment). Context reestablishment is handled automatically, in the Core (see later)


Outline

[Diagram labels: Backend, DTS, Core, Conversational]

- Goals
- A view from far away
- Main ideas
  - Dialog Task Specification / Execution
  - Conversational skills
- In more detail
  - Dialog Task Specification / Execution
  - Conversational skills


Task-Independent, Conversational Mechanisms

- Should be transparently handled by the core; little or no effort from the developer
  - However, the developer should be able to write his own customized mechanisms if needed
- Handled by inserting extra “discourse” agents on the fly in the dialog task specification
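A minimal sketch of this kind of insertion, for the start-up case, is shown below in Python. The agency names come from the mechanisms listed in this deck; `Node`, `adorn`, attaching the agencies directly under the root, and letting a developer-supplied agency of the same name take precedence are all assumptions made for the example.

```python
class Node:
    """Hypothetical task-tree node."""

    def __init__(self, name, children=None):
        self.name = name
        self.children = children if children is not None else []


DISCOURSE_AGENCIES = ("Repeat", "Help", "SuspendResume", "StartOver", "Summarize")


def adorn(root, names=DISCOURSE_AGENCIES):
    """Attach default discourse agencies unless a custom one already exists."""
    present = {child.name for child in root.children}
    for name in names:
        if name not in present:  # a developer-written agency wins
            root.children.append(Node(name))
    return root


custom_help = Node("Help")
tree = adorn(Node("Communicator", [Node("Login"), custom_help]))
```

The task specification itself never mentions the discourse agencies, which is what keeps the developer's effort close to zero.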


Conversational Skills

- Universal dialog mechanisms:
  - Repeat, Suspend… Resume, Help, Start over, Summarize, Undo, Querying the system’s belief
- The grounding / misunderstanding problems
- Timing and Barge-in control
- Focus Shifts, Context Establishment
- Back-channel absorption
- Q: To what extent can we abstract these away from the Dialog Task?


Repeat

- Repeat (simple)
  - The dialog task tree (DTT) is adorned with a “Repeat” Agency automatically at start-up
  - Which calls upon the OutputManager
  - Not all outputs are “repeatable” (e.g. implicit confirms, GUI, …): which ones exactly?
- Repeat (with referents)
  - Only 3%; they are mostly [summarize]
- User-defined custom repeat agency
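The simple repeat case can be sketched in Python as an output history with a per-output "repeatable" flag. `OutputManager` is named on the slide, but its interface is not shown; the `say` and `repeat` methods and the flag are hypothetical, and which outputs count as repeatable is left open in the slides.

```python
class OutputManager:
    """Hypothetical output manager that remembers what was said."""

    def __init__(self):
        self.history = []  # (text, repeatable) pairs, oldest first

    def say(self, text, repeatable=True):
        """Record an output; implicit confirms would pass repeatable=False."""
        self.history.append((text, repeatable))
        return text

    def repeat(self):
        """Return the most recent repeatable output, or None if there is none."""
        for text, repeatable in reversed(self.history):
            if repeatable:
                return text
        return None
```

A "Repeat" agency would then only need to call `repeat()` and re-issue the result, which keeps it independent of the task.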


Help

- DTT adorned at start-up with a help agency
- Can capture and issue:
  - Local help (obtained from focused agent)
  - ExplainMore help (obtained from focused agent)
    - What can I say?
  - Contextual help (obtained from main topic)
  - Generic help (give_me_tips)
- Obtains Help prompts from the focused agent and the main topic (defaults provided)
- Default help agency can be overridden by the user
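The lookup rule described above (prompts come from the focused agent or the main topic, with defaults as a fallback) might be sketched like this in Python. The prompt texts, the `help_prompt` function, and the representation of agents as plain dictionaries of prompts are all hypothetical.

```python
# Hypothetical default prompts, used when an agent supplies none.
DEFAULT_HELP = {
    "local": "Sorry, no specific help is available here.",
    "explain_more": "There is nothing more to explain at this point.",
    "contextual": "You are in the middle of a task.",
    "generic": "You can say 'repeat', 'help', or 'start over' at any time.",
}


def help_prompt(kind, focused_agent, main_topic):
    """Pick a help prompt by kind, falling back to the default.

    focused_agent / main_topic: dicts mapping help kind -> prompt text.
    """
    if kind not in DEFAULT_HELP:
        raise ValueError(f"unknown help kind: {kind}")
    if kind == "generic":
        return DEFAULT_HELP["generic"]
    # Contextual help comes from the main topic, the rest from the focus.
    source = main_topic if kind == "contextual" else focused_agent
    return source.get(kind, DEFAULT_HELP[kind])


ask_name = {"local": "Please say your first and last name."}
login = {"contextual": "I am trying to log you in."}
```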


Suspend … Resume

- DTT adorned with a SuspendResume agency.
  - Forces a context reestablishment on the current main topic upon resume.
- Context reestablishment also happens when focusing back after a sub-dialog
  - Can maybe construct a model for that (given size of sub-dialog, time issues, etc.)


Start over, Summarize, Querying

- Start over:
  - DTT adorned with a Start-Over agency
- Summarize:
  - DTT adorned with a Summarize agency; prompt generated automatically, problem shifted to NLG: can we do something corpus-based … work on automated summarization?
- Querying the system’s beliefs:
  - Still thinking… problem with the grammars… can meaningful Phoenix grammars for “what is [slot]” be automatically generated?


Timing & barge-in control

- Knowledge of barge-in location
- Information on what got conveyed is fed back to the DM, through the concepts to the task level
  - Special agencies can take special action based on that (e.g. List Browsing)
- Can we determine which utterances are non-barge-in-able in a TI manner?


Confirmation, Clarif., Disamb., Misunderstandings, Grounding…

- Largely unsolved in my head: this is next!
- 2 components:
  - Confidence scores on concepts
    - Obtaining them
    - Updating them
  - Taking the “right” decision based on those scores:
    - Insert appropriate agencies on the fly in the dialog task tree: opportunity for learning
    - What’s the set of decisions / agencies?
    - How does one decide?


Confidence scores

- Obtaining conf. scores: from annotator
- Updating them, from different sources:
  - (Un)Attacked implicit/explicit confirms
  - Correction detection
  - Elapsed time?
  - Domain knowledge
  - Priors?
- But how do you integrate all these in a principled way?


Mechanisms

- DepartureCity =


Software Engineering

- Provide a robust basis for future research.
- Modularity
  - Separability between task and discourse
  - Separability of concepts and confidence computations
- Portability
- Multiple servers
- Aggressive, structured, timed logging
