93e63c2e121d969b0b9808e40e1c14f6.ppt

- Number of slides: 42

Randomized Algorithms

Pasi Fränti, 31.10.2017

Treasure island (DAA expedition)

- Treasure worth 20,000 awaits at one of two possible locations (?)
- Travelling to a location costs 5000
- Map for sale: 3000

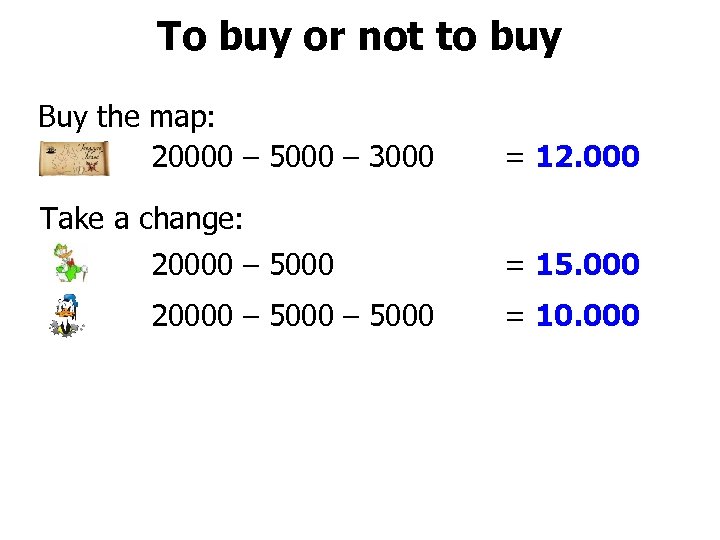

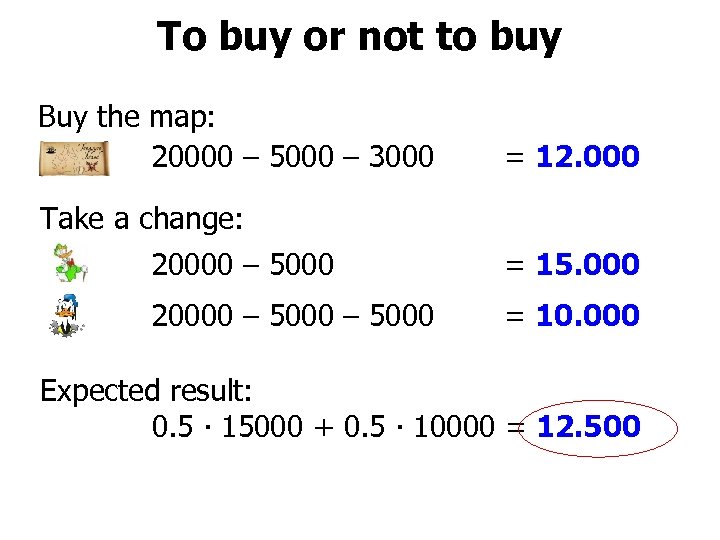

To buy or not to buy

- Buy the map: 20000 – 5000 – 3000 = 12,000
- Take a chance:
  - right guess: 20000 – 5000 = 15,000
  - wrong guess: 20000 – 5000 – 5000 = 10,000
- Expected result: 0.5 ∙ 15000 + 0.5 ∙ 10000 = 12,500
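The comparison on this slide is a plain expected-value calculation. A minimal sketch, assuming two equally likely treasure locations and a second 5000 trip after a wrong guess; the names `expected_payoff`, `TREASURE`, `TRIP` and `MAP` are illustrative and not from the slides:

```python
def expected_payoff(outcomes):
    """Expected value of a list of (probability, payoff) pairs."""
    return sum(p * v for p, v in outcomes)

TREASURE, TRIP, MAP = 20000, 5000, 3000

# With the map: one trip, straight to the right place.
buy_map = TREASURE - TRIP - MAP

# Without the map: assumed 50/50 between one trip and two trips.
gamble = expected_payoff([
    (0.5, TREASURE - TRIP),        # right place on the first trip
    (0.5, TREASURE - 2 * TRIP),    # wrong place first, second trip needed
])

print(buy_map, gamble)
```

Gambling has the higher expected payoff, but the map gives a guaranteed result; which one is preferable depends on how much risk is acceptable.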

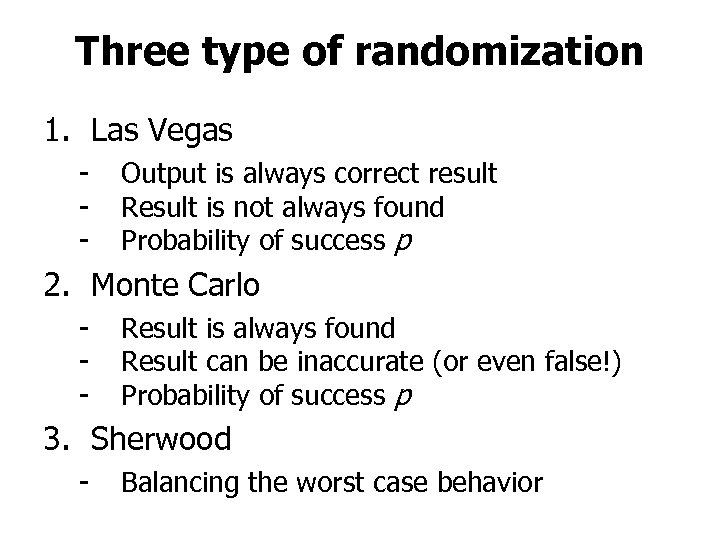

Three types of randomization

1. Las Vegas
   - Output is always a correct result
   - Result is not always found
   - Probability of success p
2. Monte Carlo
   - Result is always found
   - Result can be inaccurate (or even false!)
   - Probability of success p
3. Sherwood
   - Balancing the worst-case behavior

Las Vegas

Dining philosophers: Who eats?

![Las Vegas](https://present5.com/presentation/93e63c2e121d969b0b9808e40e1c14f6/image-8.jpg)

Las Vegas

Input: Bit-vector A[1, n] (example: 0 0 1 0 …)
Output: Index of any 1-bit from A

    LasVegas(A, n) → index
      REPEAT
        k ← Random(1, n);
      UNTIL A[k]=1;
      RETURN k
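The Las Vegas search can be sketched in Python as follows. This is an illustration under assumptions: indexing is 0-based instead of the 1-based pseudocode, and the up-front check for a missing 1-bit is an addition, because without it the loop would never terminate on an all-zero vector.

```python
import random

def las_vegas_find_one(bits, rng=None):
    """Return the index of some 1-bit. The answer is always correct;
    only the number of probes is random."""
    if 1 not in bits:
        # Added guard (not in the slide): the REPEAT loop would run forever.
        raise ValueError("no 1-bit present")
    rng = rng or random.Random()
    while True:
        k = rng.randrange(len(bits))   # 0-based random index
        if bits[k] == 1:
            return k

bits = [0, 0, 1, 0, 1, 0]
print(las_vegas_find_one(bits))
```

If a fraction p of the bits are 1, each probe succeeds with probability p, so the expected number of probes is 1/p.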

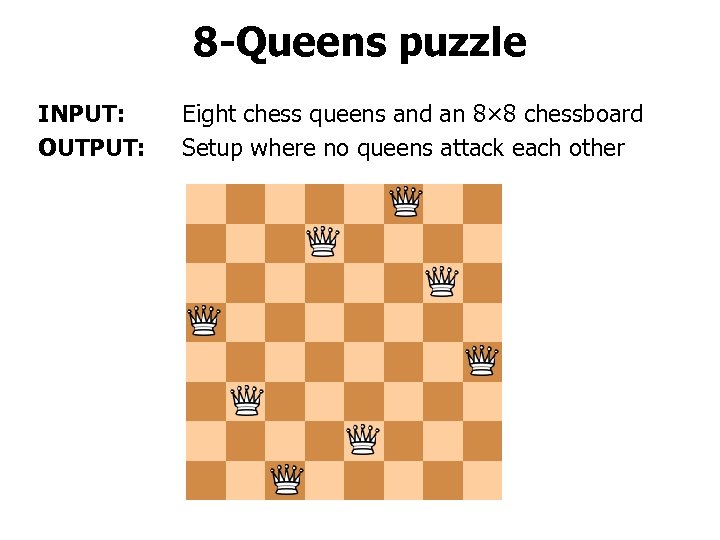

8-Queens puzzle

INPUT: Eight chess queens and an 8×8 chessboard
OUTPUT: Setup where no queens attack each other

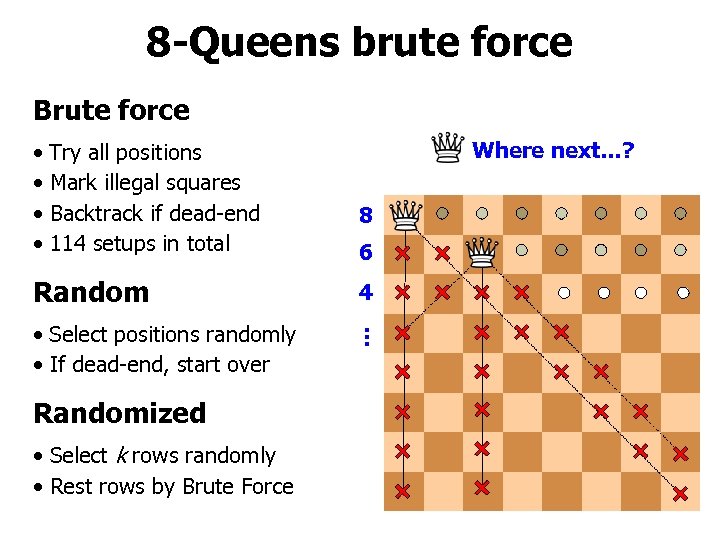

8-Queens brute force

Brute force
- Try all positions
- Mark illegal squares
- Backtrack if dead-end
- 114 setups in total

Random
- Select positions randomly
- If dead-end, start over

Randomized
- Select k rows randomly
- Rest rows by brute force

(Figure: partially filled board, "Where next…?")

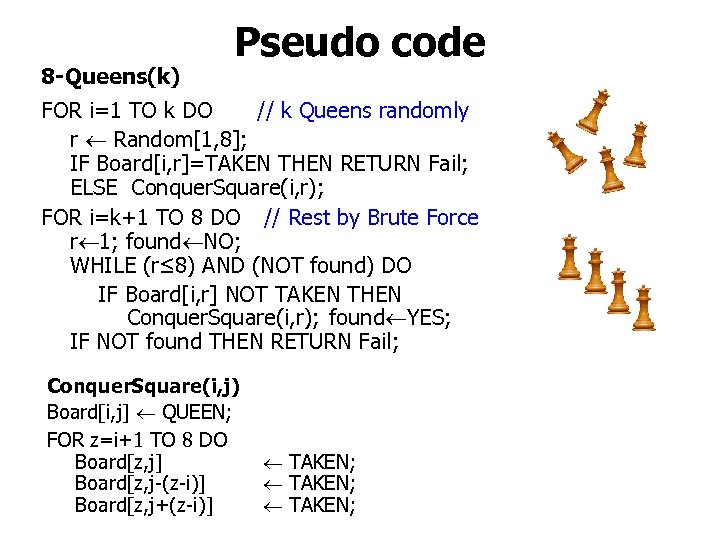

8-Queens(k): Pseudo code

    8-Queens(k)
      FOR i=1 TO k DO                          // k queens randomly
        r ← Random[1, 8];
        IF Board[i, r]=TAKEN THEN RETURN Fail;
        ELSE ConquerSquare(i, r);
      FOR i=k+1 TO 8 DO                        // Rest by brute force
        r ← 1; found ← NO;
        WHILE (r≤8) AND (NOT found) DO
          IF Board[i, r] NOT TAKEN THEN
            ConquerSquare(i, r); found ← YES;
          ELSE r ← r+1;
        IF NOT found THEN RETURN Fail;

    ConquerSquare(i, j)
      Board[i, j] ← QUEEN;
      FOR z=i+1 TO 8 DO                        // columns outside 1..8 are skipped
        Board[z, j] ← Board[z, j-(z-i)] ← Board[z, j+(z-i)] ← TAKEN;
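A runnable sketch of the randomized 8-queens idea follows. It is an interpretation, not the author's code: the first k rows get a uniformly random column and the attempt fails if that square is attacked (as in the pseudocode), while the remaining rows are completed by backtracking, following the "Backtrack if dead-end" bullet rather than the first-free-square loop. All function names are illustrative and rows and columns are 0-based.

```python
import random

def attacks(cols, row, col):
    """True if (row, col) is attacked by the queens in rows 0..len(cols)-1."""
    return any(c == col or abs(c - col) == row - r for r, c in enumerate(cols))

def backtrack(cols, n=8):
    """Complete a partial placement row by row; return the full list or None."""
    row = len(cols)
    if row == n:
        return cols
    for col in range(n):
        if not attacks(cols, row, col):
            result = backtrack(cols + [col], n)
            if result is not None:
                return result
    return None

def queens_attempt(k, rng, n=8):
    """One attempt: k random rows, the rest by backtracking. None on failure."""
    cols = []
    for row in range(k):
        col = rng.randrange(n)
        if attacks(cols, row, col):
            return None                 # dead end: give up this attempt
        cols.append(col)
    return backtrack(cols, n)

def queens_las_vegas(k, rng=None, n=8):
    """Repeat attempts until one succeeds; return (solution, attempts used)."""
    rng = rng or random.Random()
    attempts = 0
    while True:
        attempts += 1
        solution = queens_attempt(k, rng, n)
        if solution is not None:
            return solution, attempts

print(queens_las_vegas(3))
```

With k = 0 the procedure is deterministic backtracking and always succeeds on the first attempt; larger k makes each attempt cheaper but more likely to fail, which is the trade-off the slides go on to measure.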

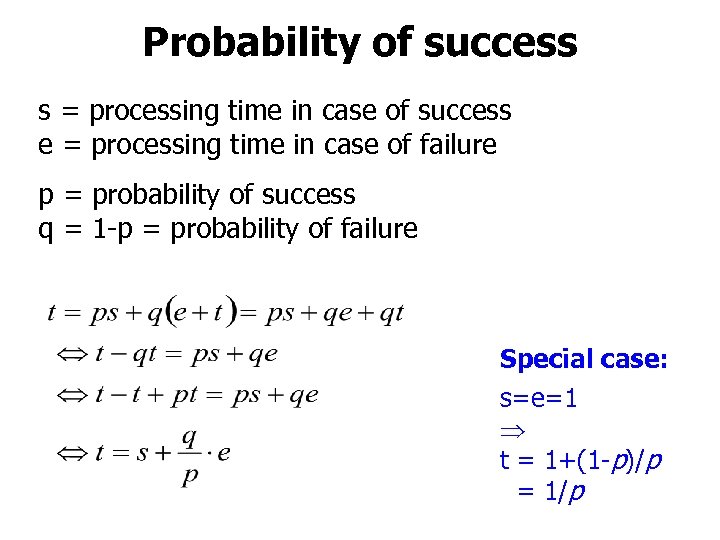

Probability of success

- s = processing time in case of success
- e = processing time in case of failure
- p = probability of success
- q = 1-p = probability of failure

Special case: s=e=1

t = 1+(1-p)/p = 1/p
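The special case is consistent with the standard expected-time recurrence for a Las Vegas algorithm that is restarted after every failure. The derivation below is a sketch under that assumption (the general formula itself is not in the extracted slide text), with t denoting the expected total time:

```latex
\begin{align*}
t &= p\,s + q\,(e + t)
  && \text{succeed at once, or fail and start over} \\
p\,t &= p\,s + q\,e
  && \text{using } 1 - q = p \\
t &= s + \frac{q}{p}\,e \;=\; s + \frac{1-p}{p}\,e \\
t &= 1 + \frac{1-p}{p} \;=\; \frac{1}{p}
  && \text{for } s = e = 1
\end{align*}
```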

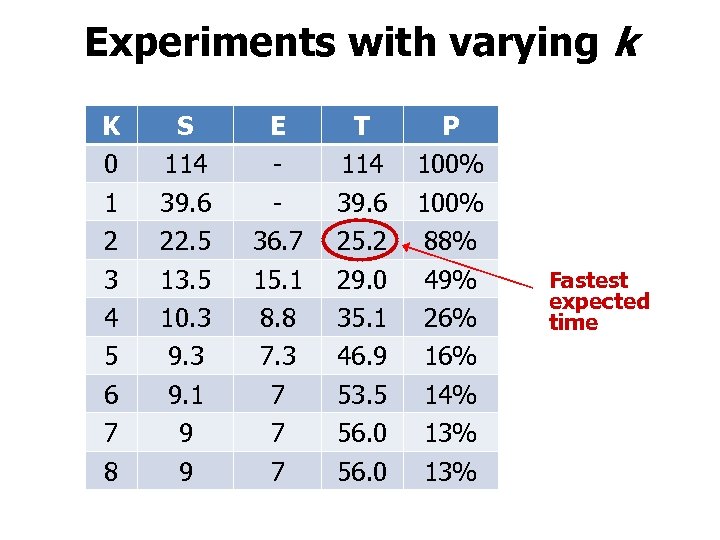

Experiments with varying k

- K: 0 1 2 3 4 5 6 7 8
- S: 114 39.6 22.5 13.5 10.3 9.1 9 9
- E: 36.7 15.1 8.8 7.3 7 7 7
- T: 114 39.6 25.2 29.0 35.1 46.9 53.5 56.0
- P: 100% 88% 49% 26% 14% 13%

Fastest expected time: T = 25.2 (the smallest value in the T row)

Random Swap Clustering

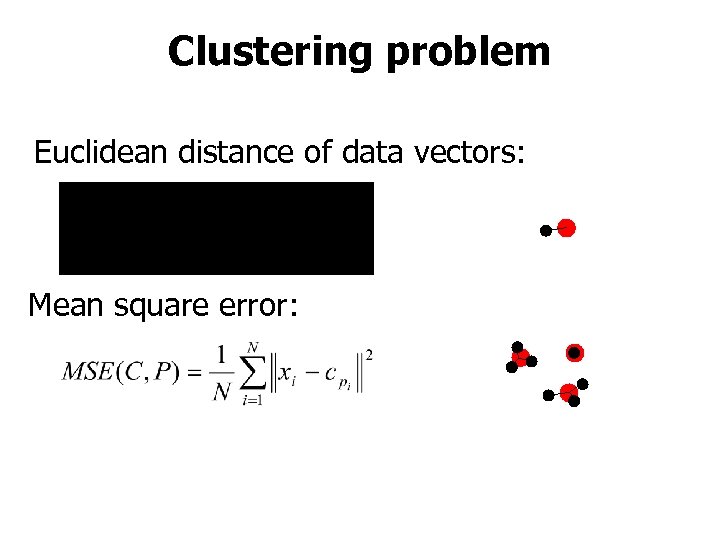

Clustering problem

- Euclidean distance of data vectors:
- Mean square error:
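The two formulas are not present in the extracted text. As a hedged illustration, the sketch below uses the common definitions: Euclidean distance between two vectors, and mean square error as the average squared distance from each vector to the centroid of its cluster. The normalization (per vector rather than per vector and dimension) and the names `euclidean` and `mse` are assumptions.

```python
import math

def euclidean(x, y):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def mse(data, centroids, partition):
    """Average squared distance from each vector to its cluster centroid.
    partition[i] is the index of the centroid assigned to data[i]."""
    total = sum(euclidean(x, centroids[p]) ** 2 for x, p in zip(data, partition))
    return total / len(data)

print(euclidean((0, 0), (3, 4)))
print(mse([(0, 0), (2, 0)], [(1, 0)], [0, 0]))
```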

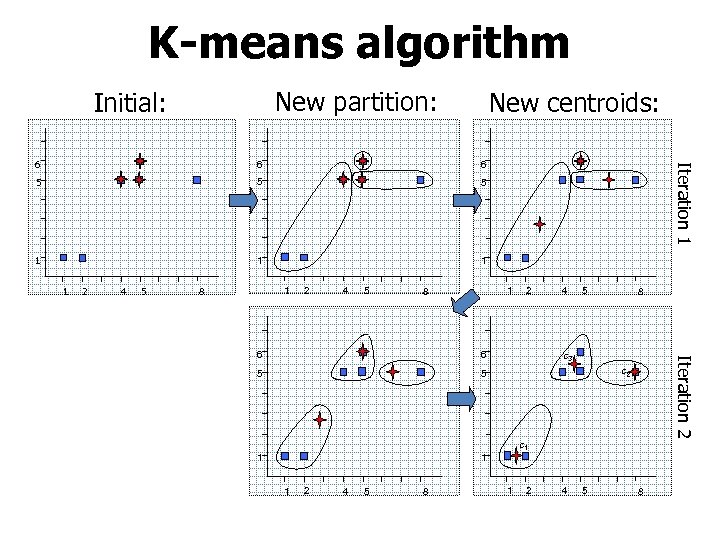

K-means algorithm

(Figure: initial centroids, followed by the new partition and the new centroids after iteration 1 and iteration 2.)
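The two alternating steps shown in the figure (new partition, new centroids) can be sketched as below. This is a minimal illustration, assuming data vectors are tuples and that an empty cluster simply keeps its old centroid; the function names are hypothetical.

```python
def nearest(x, centroids):
    """Index of the centroid closest to x (squared Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda j: sum((a - b) ** 2 for a, b in zip(x, centroids[j])))

def kmeans(data, centroids, iterations=10):
    """Alternate the two steps: new centroids, then new partition."""
    centroids = [tuple(c) for c in centroids]
    partition = [nearest(x, centroids) for x in data]
    for _ in range(iterations):
        for j in range(len(centroids)):
            members = [x for x, p in zip(data, partition) if p == j]
            if members:   # assumption: an empty cluster keeps its old centroid
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
        new_partition = [nearest(x, centroids) for x in data]
        if new_partition == partition:   # nothing changed: converged
            break
        partition = new_partition
    return centroids, partition

data = [(1, 1), (1, 2), (8, 8), (9, 8)]
print(kmeans(data, [(0, 0), (10, 10)]))
```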

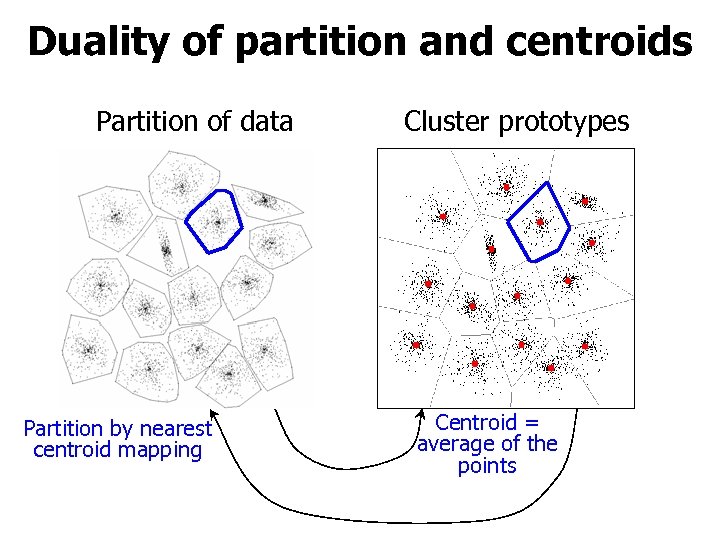

Duality of partition and centroids

- Partition of data: partition by nearest centroid mapping
- Cluster prototypes: centroid = average of the points

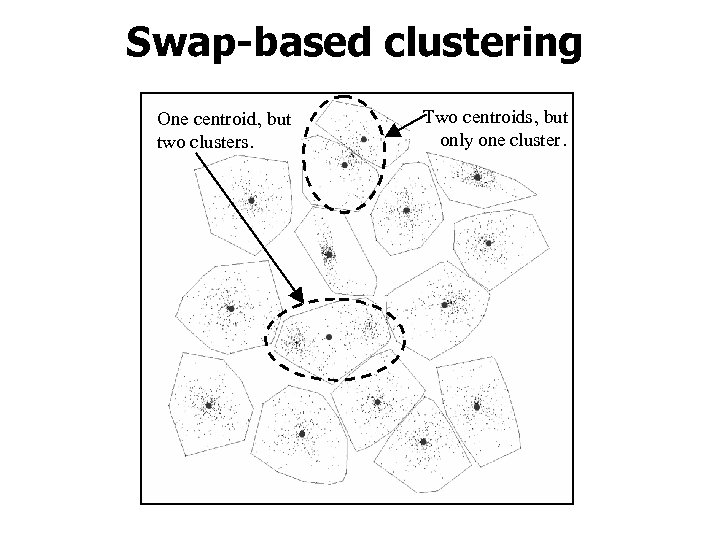

Swap-based clustering

- One centroid, but two clusters.
- Two centroids, but only one cluster.

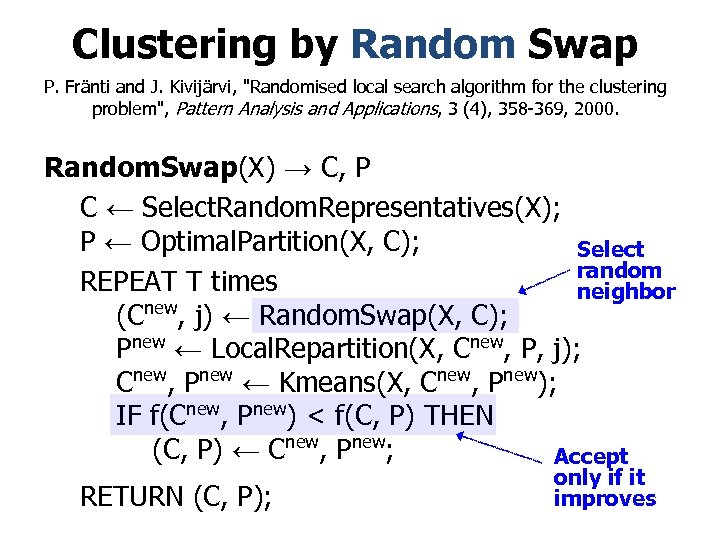

Clustering by Random Swap

P. Fränti and J. Kivijärvi, "Randomised local search algorithm for the clustering problem", Pattern Analysis and Applications, 3 (4), 358-369, 2000.

    RandomSwap(X) → C, P
      C ← SelectRandomRepresentatives(X);
      P ← OptimalPartition(X, C);
      REPEAT T times
        (Cnew, j) ← RandomSwap(X, C);               // select random neighbor
        Pnew ← LocalRepartition(X, Cnew, P, j);
        Cnew, Pnew ← Kmeans(X, Cnew, Pnew);
        IF f(Cnew, Pnew) < f(C, P) THEN
          (C, P) ← Cnew, Pnew;                       // accept only if it improves
      RETURN (C, P);
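The random swap loop can be sketched in Python as follows. This is a simplified illustration and not the published implementation: the local repartition step is replaced by a full repartition inside a short k-means run, the cost f is taken to be the mean squared error, and all function names are assumptions.

```python
import random

def sq_dist(x, y):
    return sum((a - b) ** 2 for a, b in zip(x, y))

def partition_of(data, centroids):
    """Assign every vector to its nearest centroid."""
    return [min(range(len(centroids)), key=lambda j: sq_dist(x, centroids[j]))
            for x in data]

def kmeans(data, centroids, iterations=2):
    """A few k-means iterations to fine-tune the centroids after a swap."""
    centroids = list(centroids)
    for _ in range(iterations):
        partition = partition_of(data, centroids)
        for j in range(len(centroids)):
            members = [x for x, p in zip(data, partition) if p == j]
            if members:
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, partition_of(data, centroids)

def cost(data, centroids, partition):
    return sum(sq_dist(x, centroids[p]) for x, p in zip(data, partition)) / len(data)

def random_swap(data, m, T, rng=None):
    rng = rng or random.Random()
    centroids = rng.sample(data, m)              # random representatives
    partition = partition_of(data, centroids)    # optimal partition for them
    best = cost(data, centroids, partition)
    for _ in range(T):
        candidate = list(centroids)
        # Swap: a random centroid is moved to a random data vector.
        candidate[rng.randrange(m)] = rng.choice(data)
        candidate, cand_partition = kmeans(data, candidate)
        cand_cost = cost(data, candidate, cand_partition)
        if cand_cost < best:                     # accept only if it improves
            centroids, partition, best = candidate, cand_partition, cand_cost
    return centroids, partition, best

data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(random_swap(data, 2, 50))
```

Because a swap is accepted only when it lowers the cost, the result can never be worse than the initial solution; more iterations only increase the chance of having tried a good swap.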

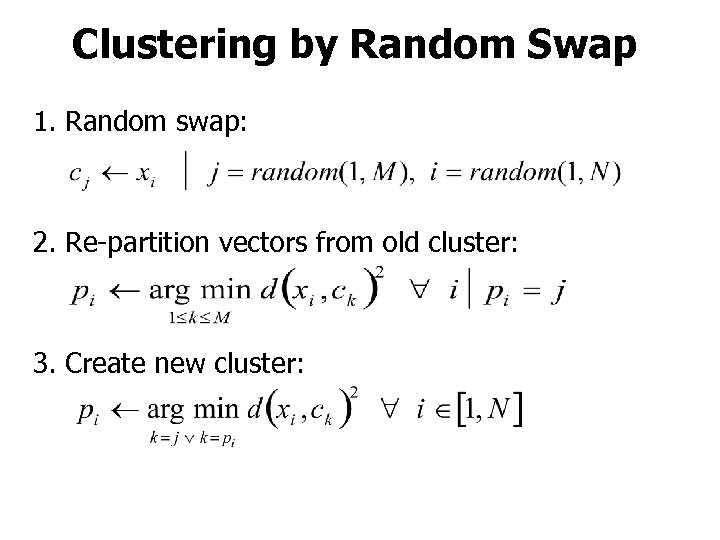

Clustering by Random Swap

1. Random swap:
2. Re-partition vectors from old cluster:
3. Create new cluster:

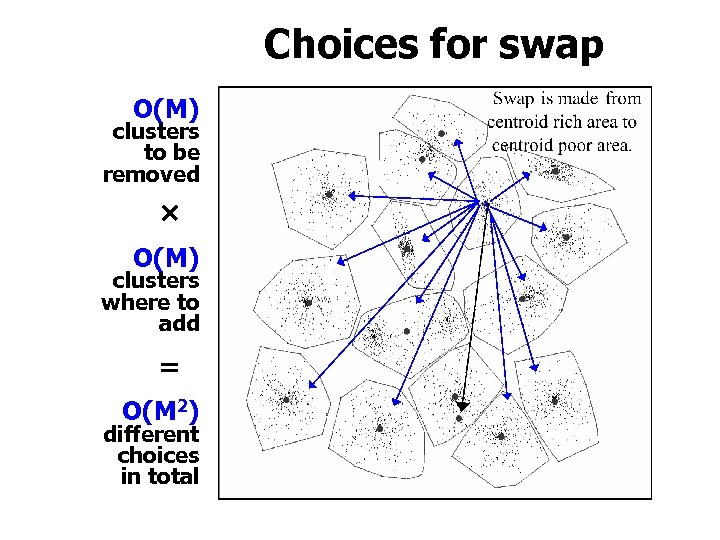

Choices for swap

- O(M) clusters to be removed
- O(M) clusters where to add
- = O(M²) different choices in total

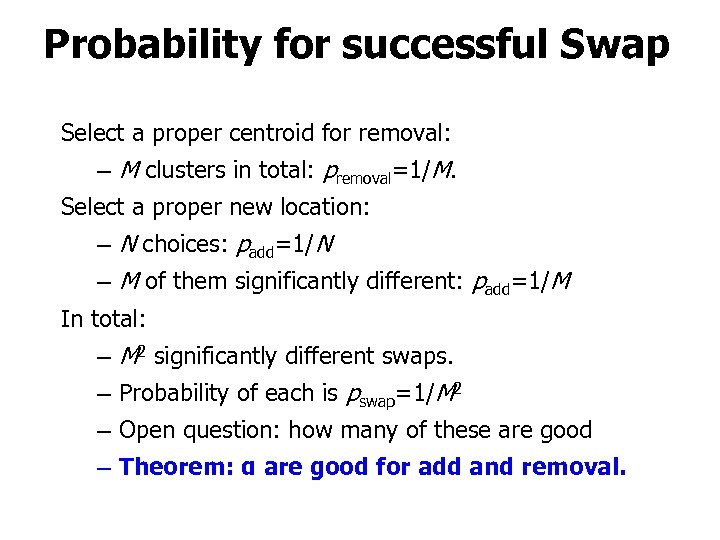

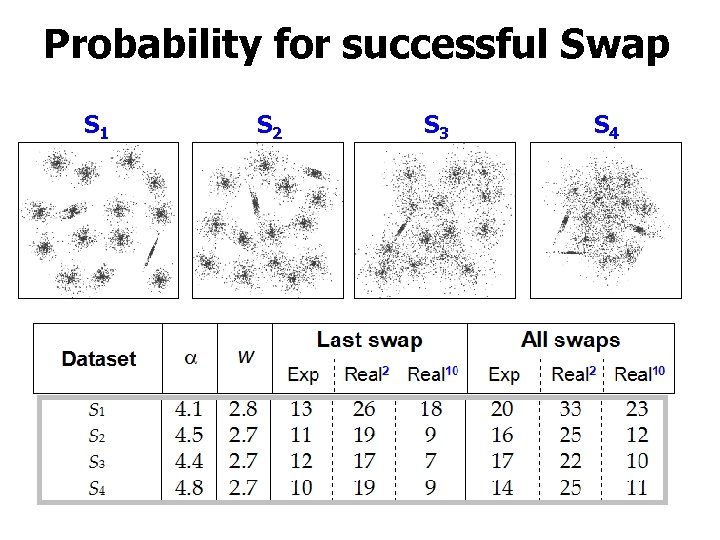

Probability for successful swap

Select a proper centroid for removal:
- M clusters in total: p_removal = 1/M

Select a proper new location:
- N choices: p_add = 1/N
- M of them significantly different: p_add = 1/M

In total:
- M² significantly different swaps
- Probability of each is p_swap = 1/M²
- Open question: how many of these are good?
- Theorem: α are good for add and removal.

Probability for successful swap

(Figure: clusters labelled S1, S2, S3, S4.)

Clustering by Random Swap

- Probability of not finding good swap:
- Iterated T times
- Estimated number of iterations:

Bounds for the iterations

- Upper limit:
- Lower limit similarly; resulting in:

Total time complexity

- Time complexity of single step (t): t = O(αN)
- Number of iterations needed (T):
- Total time:
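The formulas for these steps are missing from the extracted text. The derivation below is a reconstruction under a stated assumption, not a quotation from the slides: if α of the M choices are good for removal and α of the M choices are good for addition, a single random swap is good with probability (α/M)². Here q denotes the accepted probability of not finding any good swap in T iterations.

```latex
\begin{align*}
q &= \left(1 - \frac{\alpha^2}{M^2}\right)^{T}
  &&\Longrightarrow\quad
  T = \frac{\ln q}{\ln\!\left(1 - \alpha^2 / M^2\right)} \\
T &\le -\ln q \cdot \frac{M^2}{\alpha^2}
  && \text{since } \ln(1 - x) \le -x \text{ for } 0 < x < 1 \\
T &\ge -\ln q \cdot \frac{M^2}{2\alpha^2}
  && \text{since } -\ln(1 - x) \le 2x \text{ for } 0 < x \le \tfrac12 \\
T &= \Theta\!\left(-\ln q \cdot \frac{M^2}{\alpha^2}\right) \\
t \cdot T &= O(\alpha N) \cdot O\!\left(-\ln q \cdot \frac{M^2}{\alpha^2}\right)
  = O\!\left(-\ln q \cdot \frac{N M^2}{\alpha}\right)
\end{align*}
```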

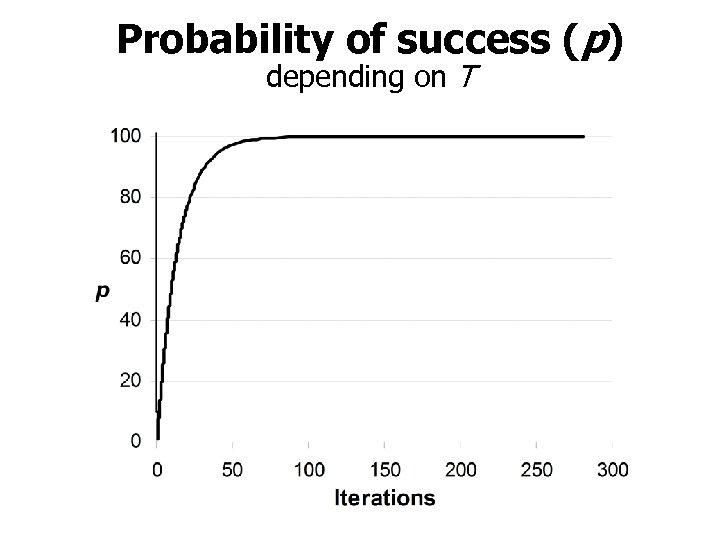

Probability of success (p) depending on T

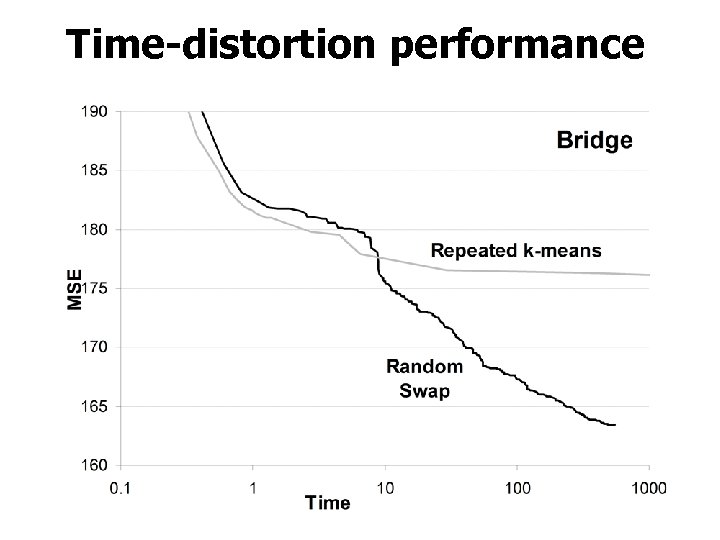

Time-distortion performance

Monte Carlo

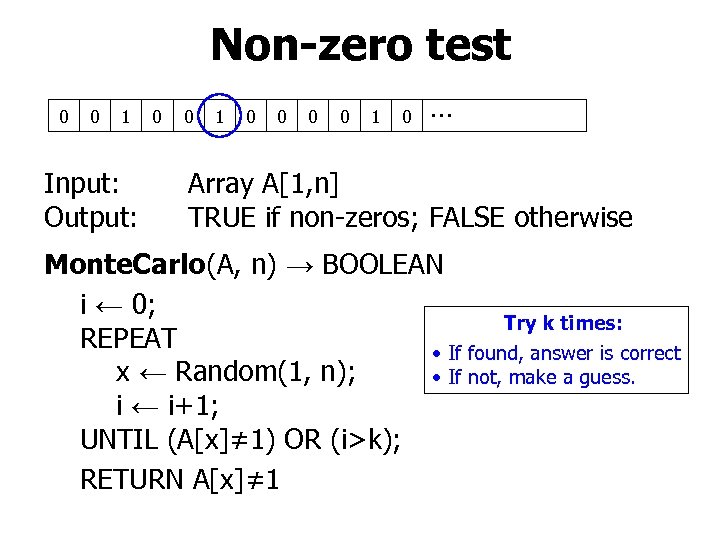

Non-zero test

Input: Array A[1, n] (example: 0 0 1 0 …)
Output: TRUE if A contains non-zeros; FALSE otherwise

    MonteCarlo(A, n) → BOOLEAN
      i ← 0;
      REPEAT                           // Try k times
        x ← Random(1, n);
        i ← i+1;
      UNTIL (A[x]≠0) OR (i≥k);
      RETURN A[x]≠0

- If a non-zero is found, the answer is correct.
- If not, make a guess.
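A Python sketch of the Monte Carlo non-zero test, assuming 0-based indexing and sampling with replacement; the function name is illustrative. A TRUE answer is always correct, while a FALSE answer is only a guess.

```python
import random

def monte_carlo_nonzero(a, k, rng=None):
    """Guess whether `a` contains a non-zero by probing at most k random positions."""
    rng = rng or random.Random()
    for _ in range(k):
        if a[rng.randrange(len(a))] != 0:
            return True    # a non-zero was seen: certainly correct
    return False           # nothing seen in k probes: a guess that may be wrong

print(monte_carlo_nonzero([0, 0, 1, 0, 0, 0, 0, 0], k=5))
```

If m of the n entries are non-zero, the FALSE answer is wrong with probability (1 - m/n)^k, so the error shrinks exponentially in k but never reaches zero.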

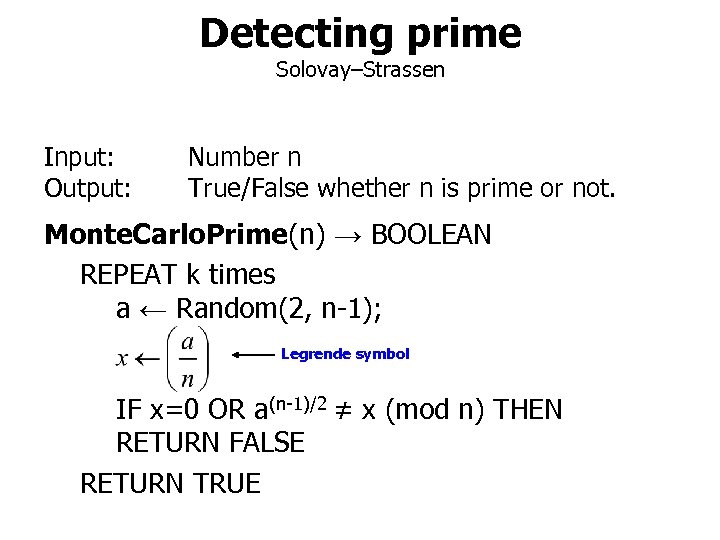

Detecting prime: Solovay–Strassen

Input: Number n
Output: True/False whether n is prime or not.

    MonteCarloPrime(n) → BOOLEAN
      REPEAT k times
        a ← Random(2, n-1);
        x ← (a/n);                     // Legendre (Jacobi) symbol
        IF x=0 OR a^((n-1)/2) ≢ x (mod n) THEN RETURN FALSE
      RETURN TRUE
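A runnable sketch of the Solovay–Strassen test follows. The symbol (a/n) is computed as the Jacobi symbol with the standard reciprocity algorithm; the function names, the default k, and the handling of small and even n are assumptions added for completeness.

```python
import random

def jacobi(a, n):
    """Jacobi symbol (a/n) for odd n > 0; equals the Legendre symbol when n is prime."""
    a %= n
    result = 1
    while a != 0:
        while a % 2 == 0:              # factor out twos: (2/n) = -1 iff n = 3, 5 (mod 8)
            a //= 2
            if n % 8 in (3, 5):
                result = -result
        a, n = n, a                    # quadratic reciprocity
        if a % 4 == 3 and n % 4 == 3:
            result = -result
        a %= n
    return result if n == 1 else 0

def is_probable_prime(n, k=20, rng=None):
    """FALSE is always correct; TRUE is wrong with probability at most 2**-k."""
    rng = rng or random.Random()
    if n < 2:
        return False
    if n in (2, 3):
        return True
    if n % 2 == 0:
        return False
    for _ in range(k):
        a = rng.randrange(2, n)        # 2 .. n-1
        x = jacobi(a, n)
        if x == 0 or pow(a, (n - 1) // 2, n) != x % n:
            return False
    return True

print(is_probable_prime(97), is_probable_prime(91))
```

For a composite n at least half of the candidate values a expose it, so k independent rounds leave an error probability of at most 2^-k.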

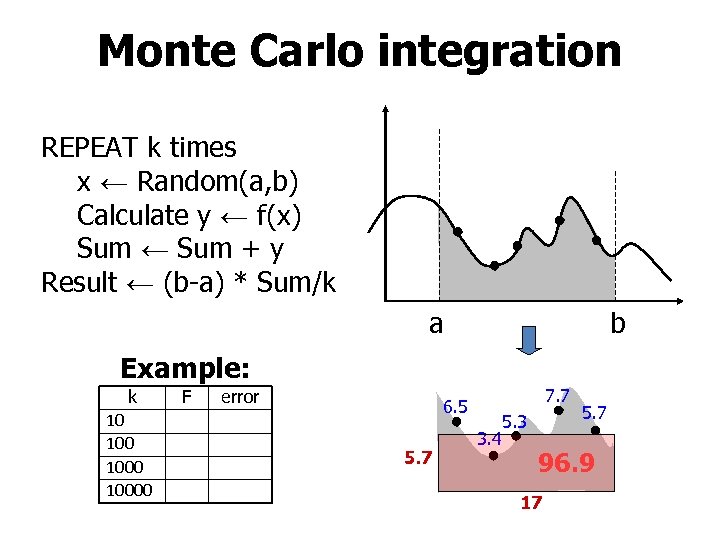

Monte Carlo integration

    Sum ← 0
    REPEAT k times
      x ← Random(a, b)
      Calculate y ← f(x)
      Sum ← Sum + y
    Result ← (b-a) * Sum/k

Example (columns: k, F, error): k 10 10000; values 7.7 6.5 5.7 3.4 5.3 5.7 96.9 17
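The integration pseudocode translates almost line by line into Python. This is a minimal sketch; the function name and the test integrand x² on [0, 1] are illustrative choices, not taken from the slide's example table.

```python
import random

def monte_carlo_integrate(f, a, b, k, rng=None):
    """Estimate the integral of f over [a, b] from k uniform random samples."""
    rng = rng or random.Random()
    total = 0.0
    for _ in range(k):
        total += f(rng.uniform(a, b))
    return (b - a) * total / k

# Integral of x^2 over [0, 1] is 1/3; the estimate improves as k grows.
for k in (10, 1000, 100000):
    print(k, monte_carlo_integrate(lambda x: x * x, 0.0, 1.0, k))
```

The error typically shrinks in proportion to 1/√k, so every extra decimal digit of accuracy costs roughly a hundred times more samples.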

Sherwood

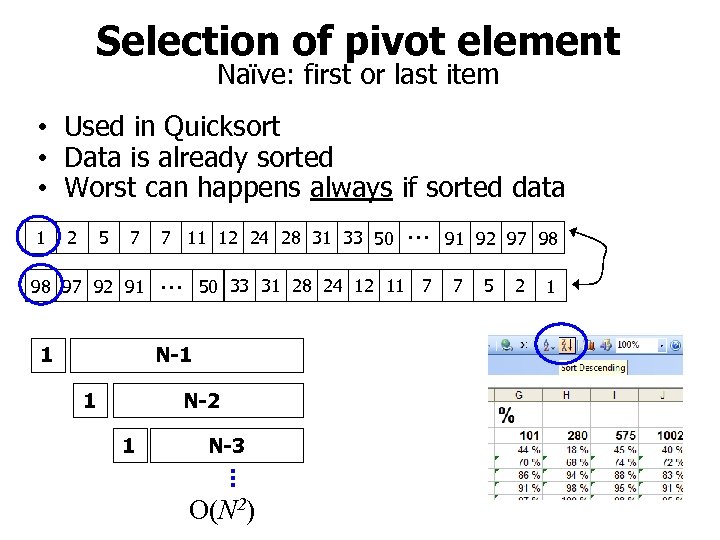

Selection of pivot element

Naïve: first or last item
- Used in Quicksort
- Problem: data that is already sorted
- The worst case always happens if the data is sorted

(Figure: on sorted input, ascending 1 2 5 7 7 11 12 24 28 31 33 50 … 91 92 97 98 or the same values in descending order, every partition splits into N-1 items and 1 item, then N-2 and 1, N-3, …, which gives O(N²).)

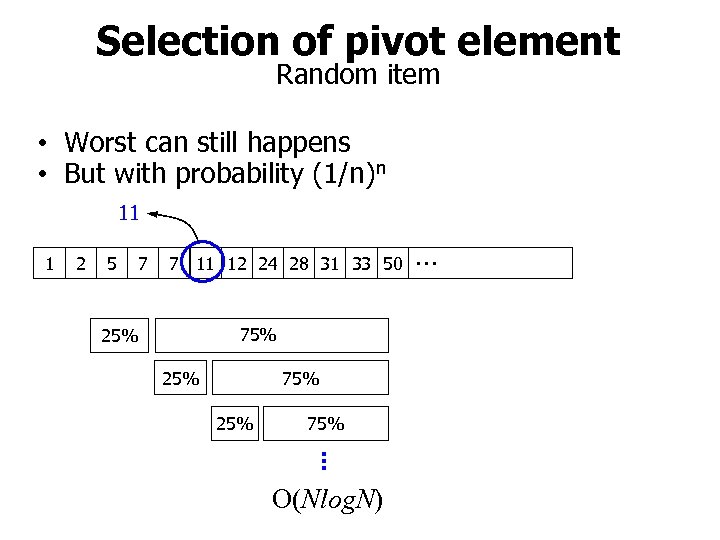

Selection of pivot element

Random item
- The worst case can still happen
- But with probability (1/n)^n

(Figure: a random pivot, 11 in the example 1 2 5 7 7 11 12 24 28 31 33 50, gives uneven splits such as 25% / 75%, and the recursion still takes O(N log N).)
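A short sketch of Quicksort with a random pivot, the Sherwood-style fix for sorted input. It is written for clarity, building new lists instead of partitioning in place, and the function name is illustrative.

```python
import random

def quicksort(items, rng=None):
    """Quicksort with a uniformly random pivot; returns a new sorted list."""
    rng = rng or random.Random()
    if len(items) <= 1:
        return list(items)
    pivot = items[rng.randrange(len(items))]
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return quicksort(smaller, rng) + equal + quicksort(larger, rng)

print(quicksort([12, 7, 1, 33, 5, 7, 2, 11]))
```

With a random pivot no particular input order is bad any more: already sorted data is handled as well as any other permutation, and the worst case depends only on unlucky random choices.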

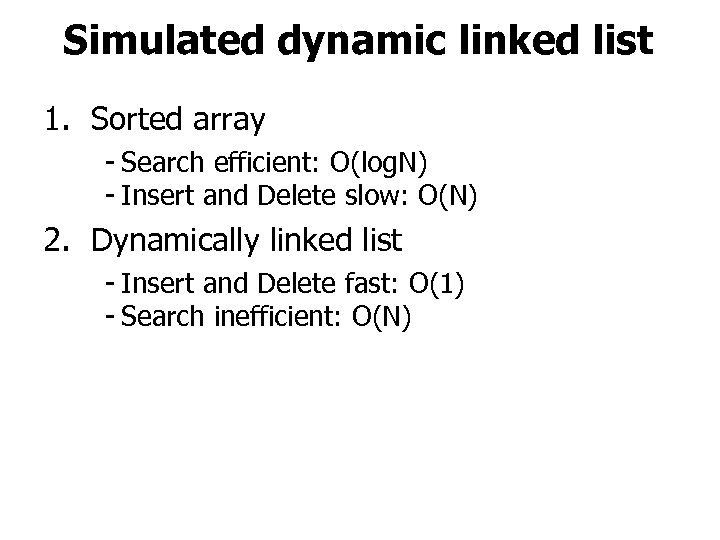

Simulated dynamic linked list

1. Sorted array
   - Search efficient: O(log N)
   - Insert and Delete slow: O(N)
2. Dynamically linked list
   - Insert and Delete fast: O(1)
   - Search inefficient: O(N)

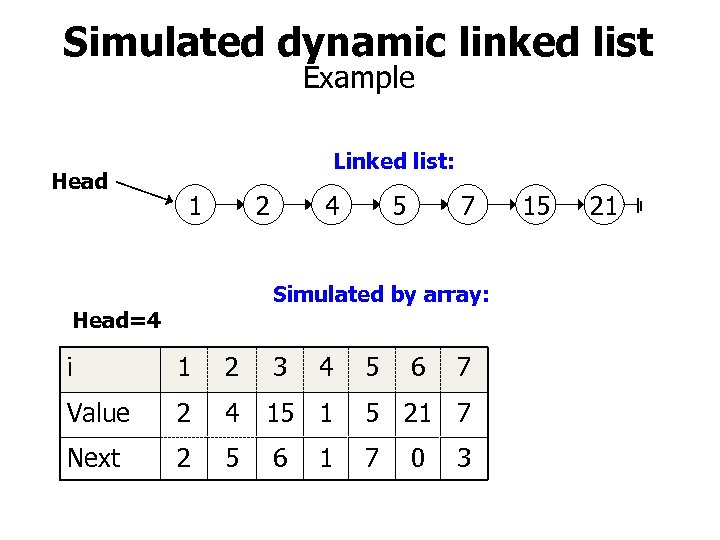

Simulated dynamic linked list: Example

Linked list: Head → 1 → 2 → 4 → 5 → 7 → 15 → 21

Simulated by array (Head=4):

| i     | 1 | 2 | 3  | 4 | 5 | 6  | 7 |
|-------|---|---|----|---|---|----|---|
| Value | 2 | 4 | 15 | 1 | 5 | 21 | 7 |
| Next  | 2 | 5 | 6  | 1 | 7 | 0  | 3 |
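The array simulation can be checked by following the Next links from the head. The sketch below reads the example as Value = 2 4 15 1 5 21 7 and Next = 2 5 6 1 7 0 3 for i = 1..7 with Head = 4, which is an interpretation of the flattened table; index 0 is left unused so that positions stay 1-based and 0 can mark the end of the list.

```python
HEAD = 4
VALUE = [None, 2, 4, 15, 1, 5, 21, 7]   # VALUE[i] for i = 1..7
NEXT = [None, 2, 5, 6, 1, 7, 0, 3]      # NEXT[i] = position of successor, 0 = end

def traverse(head, value, nxt):
    """Follow the links from the head and collect the values in list order."""
    out = []
    i = head
    while i != 0:
        out.append(value[i])
        i = nxt[i]
    return out

print(traverse(HEAD, VALUE, NEXT))
```

The traversal visits positions 4, 1, 2, 5, 7, 3, 6 and yields the values in sorted order, although they are stored in arbitrary positions of the array.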

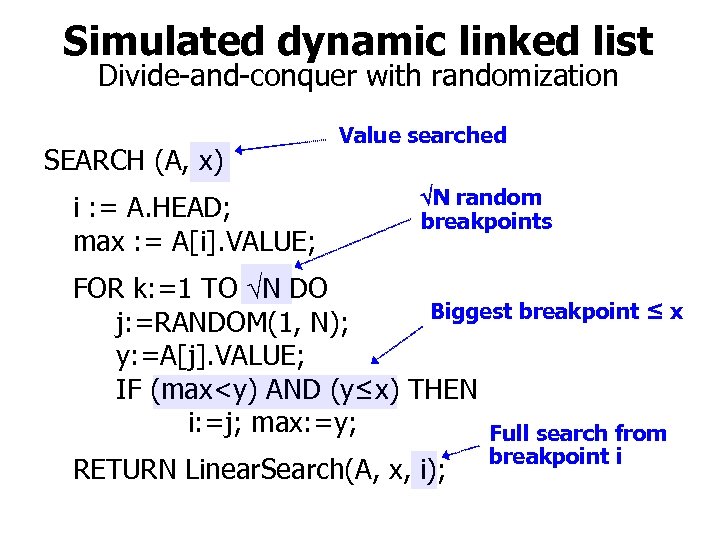

Simulated dynamic linked list: Divide-and-conquer with randomization

    SEARCH(A, x)                       // x = value searched
      i := A.HEAD;
      max := A[i].VALUE;               // biggest breakpoint ≤ x
      FOR k:=1 TO √N DO                // √N random breakpoints
        j := RANDOM(1, N);
        y := A[j].VALUE;
        IF (max …
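The pseudocode is cut off in the source, so the sketch below completes it along the lines of the well-known Sherwood search on a sorted linked list: sample about √N random positions, keep the largest value that does not exceed x as the starting point, then search linearly from there. The completion of the IF condition (`best < y <= x`), the final linear scan, and all names are assumptions. Arrays are 1-based with index 0 unused and 0 marking the end of the list.

```python
import math
import random

def sherwood_search(value, nxt, head, x, rng=None):
    """Return the position holding x, or 0 if x is not in the list."""
    rng = rng or random.Random()
    n = len(value) - 1
    i, best = head, value[head]
    for _ in range(math.isqrt(n)):      # about sqrt(N) random breakpoints
        j = rng.randint(1, n)
        y = value[j]
        if best < y <= x:               # better starting point, still not past x
            i, best = j, y
    while i != 0 and value[i] < x:      # linear search from the chosen breakpoint
        i = nxt[i]
    return i if i != 0 and value[i] == x else 0

VALUE = [None, 2, 4, 15, 1, 5, 21, 7]
NEXT = [None, 2, 5, 6, 1, 7, 0, 3]
print(sherwood_search(VALUE, NEXT, 4, 15))
```

The random breakpoints only affect how far the linear scan has to walk; the answer is the same for every random choice, which is what makes this a Sherwood algorithm rather than a Monte Carlo one.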

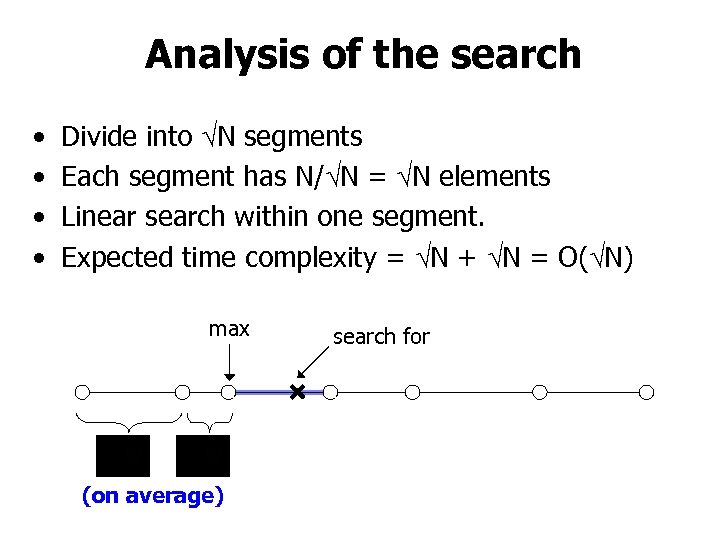

Analysis of the search

- Divide into √N segments
- Each segment has N/√N = √N elements (on average)
- Linear search within one segment
- Expected time complexity = √N + √N = O(√N)

(Figure: the segment between the breakpoint max and the value searched for.)

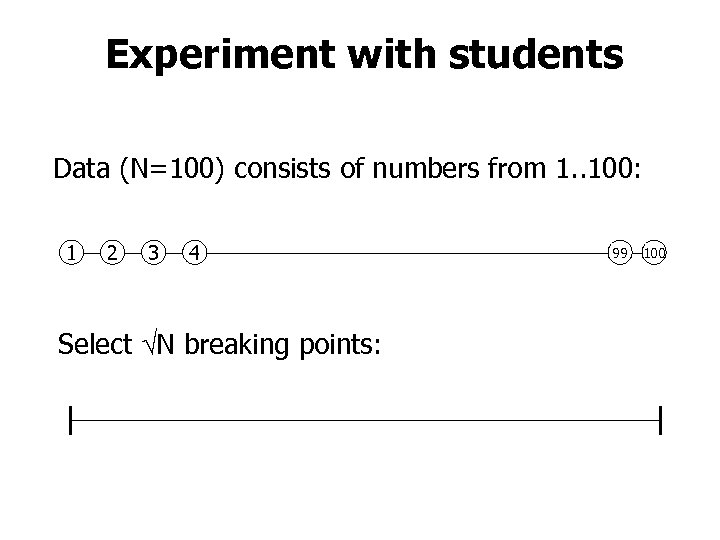

Experiment with students

- Data (N=100) consists of the numbers 1..100: 1 2 3 4 … 99 100
- Select √N breaking points:

Searching for… 33
