
- Number of slides: 65

Question Answering Techniques and Systems
Mihai Surdeanu (TALP), Marius Paşca (Google Research)*
TALP Research Center, Dep. Llenguatges i Sistemes Informàtics, Universitat Politècnica de Catalunya
surdeanu@lsi.upc.es
*The work by Marius Paşca (currently mars@google.com) was performed as part of his Ph.D. work at Southern Methodist University in Dallas, Texas.


Overview
- What is Question Answering?
- A “traditional” system
- Other relevant approaches
- Distributed Question Answering


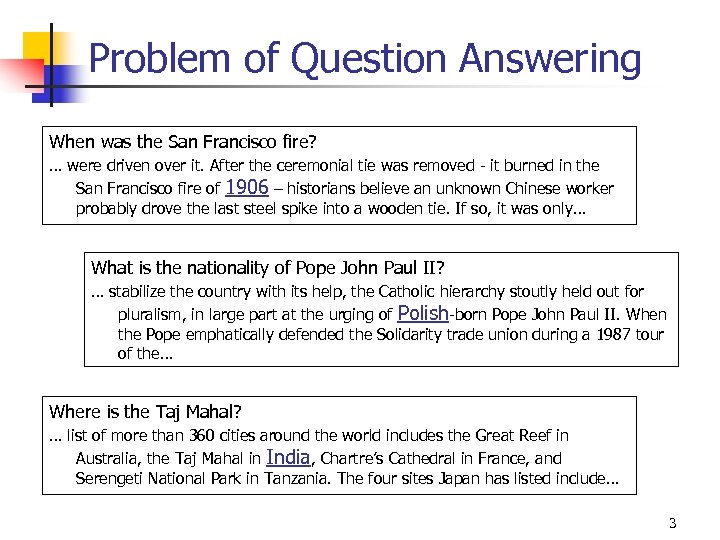

Problem of Question Answering
- When was the San Francisco fire?
  “… were driven over it. After the ceremonial tie was removed - it burned in the San Francisco fire of 1906 – historians believe an unknown Chinese worker probably drove the last steel spike into a wooden tie. If so, it was only…”
- What is the nationality of Pope John Paul II?
  “… stabilize the country with its help, the Catholic hierarchy stoutly held out for pluralism, in large part at the urging of Polish-born Pope John Paul II. When the Pope emphatically defended the Solidarity trade union during a 1987 tour of the…”
- Where is the Taj Mahal?
  “… list of more than 360 cities around the world includes the Great Reef in Australia, the Taj Mahal in India, Chartre’s Cathedral in France, and Serengeti National Park in Tanzania. The four sites Japan has listed include…”


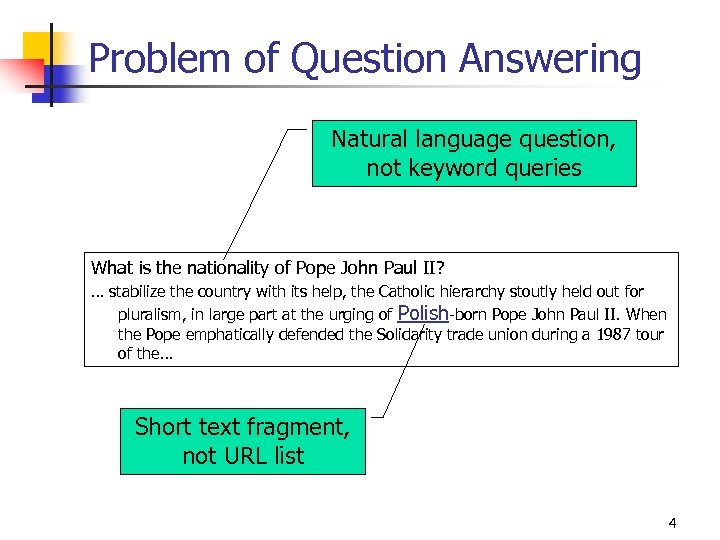

Problem of Question Answering
- Natural language question, not keyword queries: What is the nationality of Pope John Paul II?
- Short text fragment, not URL list: “… stabilize the country with its help, the Catholic hierarchy stoutly held out for pluralism, in large part at the urging of Polish-born Pope John Paul II. When the Pope emphatically defended the Solidarity trade union during a 1987 tour of the…”


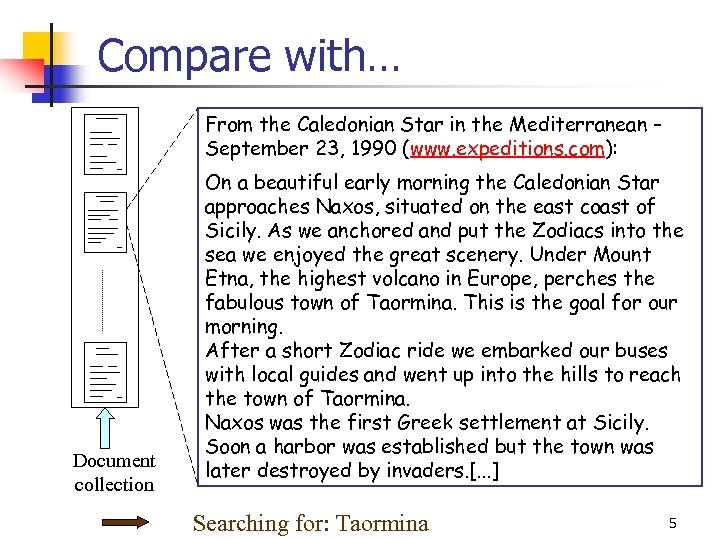

Compare with…
Document collection, from the Caledonian Star in the Mediterranean – September 23, 1990 (www.expeditions.com):
“On a beautiful early morning the Caledonian Star approaches Naxos, situated on the east coast of Sicily. As we anchored and put the Zodiacs into the sea we enjoyed the great scenery. Under Mount Etna, the highest volcano in Europe, perches the fabulous town of Taormina. This is the goal for our morning. After a short Zodiac ride we embarked our buses with local guides and went up into the hills to reach the town of Taormina. Naxos was the first Greek settlement at Sicily. Soon a harbor was established but the town was later destroyed by invaders. [...]”
Questions: Where is Naxos? What continent is Taormina in? What is the highest volcano in Europe?


Beyond Document Retrieval
- Document Retrieval
  - Users submit queries corresponding to their information needs.
  - The system returns a (voluminous) list of full-length documents.
  - It is the responsibility of the users to find information of interest within the returned documents.
- Open-Domain Question Answering (QA)
  - Users ask questions in natural language, e.g. “What is the highest volcano in Europe?”
  - The system returns a list of short answers, e.g. “… Under Mount Etna, the highest volcano in Europe, perches the fabulous town …”
  - Often more useful for specific information needs.


Evaluating QA Systems
- The National Institute of Standards and Technology (NIST) organizes the yearly Text Retrieval Conference (TREC), which has had a QA track for the past 5 years: from TREC-8 in 1999 to TREC-12 in 2003.
- The document set
  - Newswire textual documents from the LA Times, San Jose Mercury News, Wall Street Journal, NY Times, etc.: over 1M documents now.
  - Well-formed lexically, syntactically and semantically (reviewed by professional editors).
- The questions
  - Hundreds of new questions every year; the total is close to 2000 across all TRECs.
- Task
  - Initially: extract at most 5 answers, either long (250 bytes) or short (50 bytes).
  - Now: extract only one exact answer.
  - Several other sub-tasks were added later: definition, list, context.
- Metrics
  - Mean Reciprocal Rank (MRR): each question is assigned the reciprocal rank of the first correct answer. If the correct answer is at position k, the score is 1/k (0 if no correct answer is returned); MRR is the mean of these scores over all questions.
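The MRR definition can be sketched in a few lines of Python. This is a minimal illustration, assuming answers are judged by exact string match against a set of correct answers (TREC used human assessors) and at most 5 answers per question; the toy answers are made up.

```python
def mean_reciprocal_rank(runs, max_answers=5):
    """Mean Reciprocal Rank over a set of questions.

    runs: one (ranked_answers, correct_answers) pair per question, where
    ranked_answers is the system's ordered answer list and correct_answers
    is a set of strings judged correct (exact match is a simplification).
    """
    total = 0.0
    for ranked, gold in runs:
        for k, answer in enumerate(ranked[:max_answers], start=1):
            if answer in gold:
                total += 1.0 / k  # reciprocal rank of the first correct answer
                break
        # a question with no correct answer in the top max_answers contributes 0
    return total / len(runs) if runs else 0.0


runs = [
    (["1905", "1906"], {"1906"}),             # first correct answer at rank 2 -> 0.5
    (["Polish"], {"Polish"}),                 # rank 1 -> 1.0
    (["Egypt", "China", "Peru"], {"India"}),  # no correct answer -> 0.0
]
print(mean_reciprocal_rank(runs))  # 0.5
```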


Overview
- What is Question Answering?
- A “traditional” system
  - SMU ranked first at TREC-8 and TREC-9
  - The foundation of LCC’s PowerAnswer system (http://www.languagecomputer.com)
- Other relevant approaches
- Distributed Question Answering


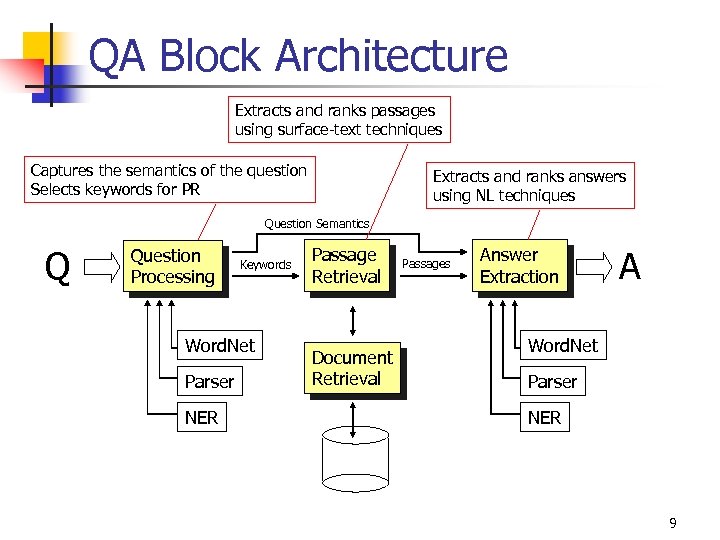

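QA Block Architecture
Q → Question Processing → Passage Retrieval → Answer Extraction → A
- Question Processing (uses WordNet, a parser and NER): captures the semantics of the question and selects keywords for PR (passage retrieval). Outputs the question semantics and the keywords.
- Passage Retrieval (built on Document Retrieval): extracts and ranks passages using surface-text techniques. Outputs passages.
- Answer Extraction (uses WordNet, a parser and NER): extracts and ranks answers from the passages using NL techniques and the question semantics.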


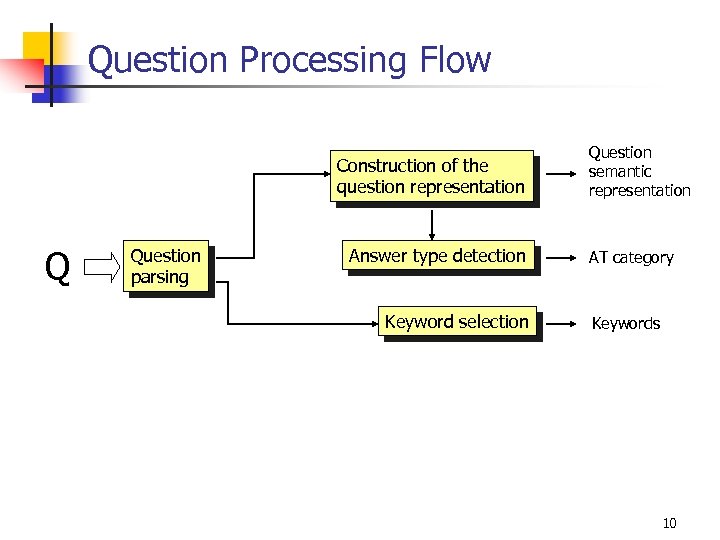

Question Processing Flow
Q → question parsing → construction of the question representation → answer type detection and keyword selection
Outputs: the question semantic representation, the AT (answer type) category, and the keywords.


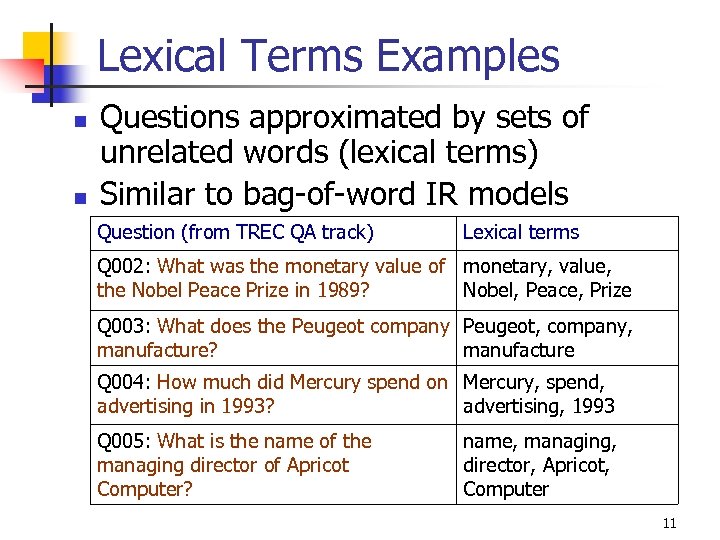

Lexical Terms Examples
- Questions approximated by sets of unrelated words (lexical terms)
- Similar to bag-of-words IR models

| Question (from TREC QA track) | Lexical terms |
|---|---|
| Q002: What was the monetary value of the Nobel Peace Prize in 1989? | monetary, value, Nobel, Peace, Prize |
| Q003: What does the Peugeot company manufacture? | Peugeot, company, manufacture |
| Q004: How much did Mercury spend on advertising in 1993? | Mercury, spend, advertising, 1993 |
| Q005: What is the name of the managing director of Apricot Computer? | name, managing, director, Apricot, Computer |
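A minimal sketch of the bag-of-words approximation, assuming a small hand-picked stop-word list (hypothetical; the list used by the actual system is not given here). On Q002 this sketch also keeps 1989, which the table omits.

```python
import re

# A tiny, illustrative stop-word list (an assumption; real systems use larger lists).
STOP_WORDS = {
    "what", "was", "is", "the", "of", "in", "does", "did",
    "how", "much", "on", "a", "an", "to", "who", "which",
}


def lexical_terms(question: str) -> list:
    """Approximate a question by its non-stop-word tokens, in order of appearance."""
    tokens = re.findall(r"[A-Za-z0-9][A-Za-z0-9\-]*", question)
    return [t for t in tokens if t.lower() not in STOP_WORDS]


print(lexical_terms("What does the Peugeot company manufacture?"))
# ['Peugeot', 'company', 'manufacture']
```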


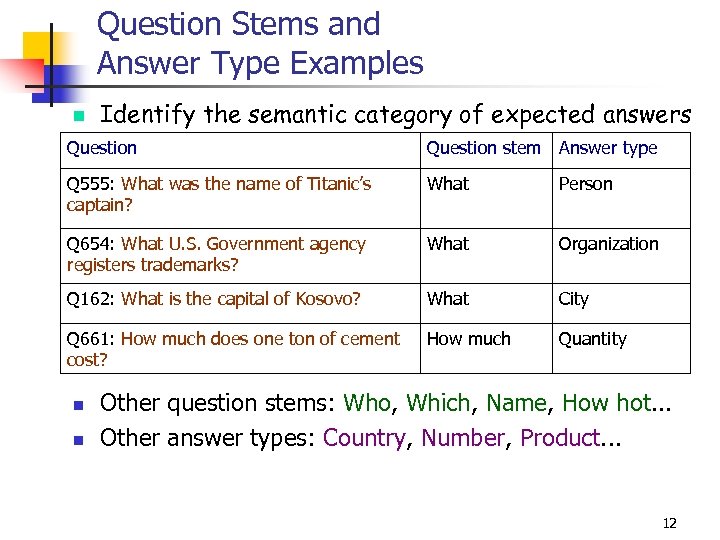

Question Stems and Answer Type Examples
- Identify the semantic category of expected answers

| Question | Question stem | Answer type |
|---|---|---|
| Q555: What was the name of Titanic’s captain? | What | Person |
| Q654: What U.S. Government agency registers trademarks? | What | Organization |
| Q162: What is the capital of Kosovo? | What | City |
| Q661: How much does one ton of cement cost? | How much | Quantity |

- Other question stems: Who, Which, Name, How hot...
- Other answer types: Country, Number, Product...


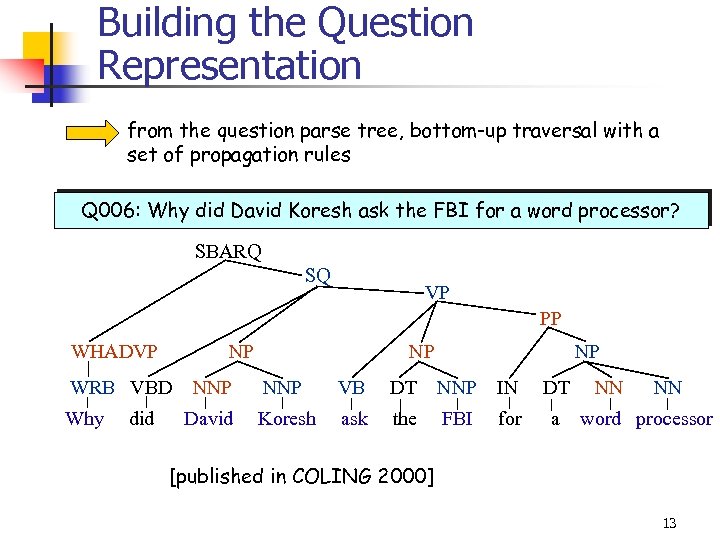

Building the Question Representation
- Built from the question parse tree by a bottom-up traversal with a set of propagation rules [published in COLING 2000]:
  - assign labels to non-skip leaf nodes
  - propagate the label of the head child node to the parent node
  - link the head child node to the other children nodes
- Q006: Why did David Koresh ask the FBI for a word processor?
  Parse tree: (SBARQ (WHADVP (WRB Why)) (SQ (VBD did) (NP (NNP David) (NNP Koresh)) (VP (VB ask) (NP (DT the) (NNP FBI)) (PP (IN for) (NP (DT a) (NN word) (NN processor))))))


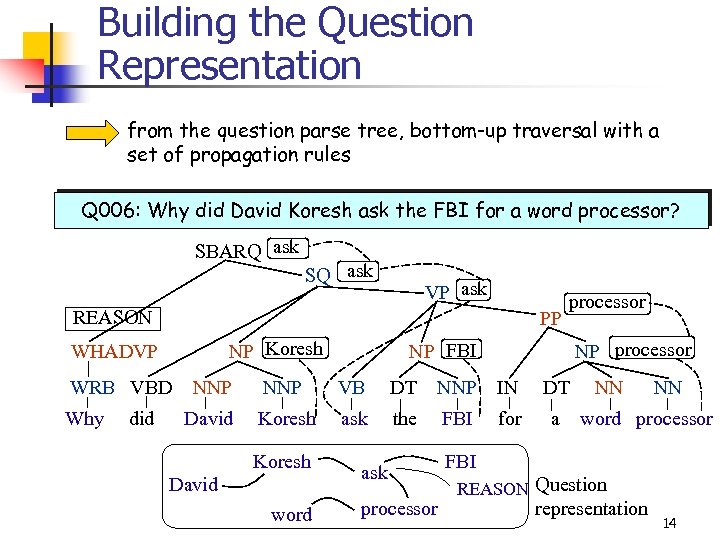

Building the Question Representation
- After propagation on Q006 (Why did David Koresh ask the FBI for a word processor?): SBARQ, SQ and VP are labeled ask; WHADVP is labeled REASON; the subject NP is labeled Koresh, the object NP is labeled FBI, and the PP and its NP are labeled processor.
- Question representation: ask is linked to REASON, Koresh, FBI and processor; Koresh is linked to David; processor is linked to word.
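The three propagation rules can be sketched as a recursive bottom-up pass over the Q006 tree. The head rules, the skip tags (DT, IN and the auxiliary "did") and the mapping of Why to REASON are hypothetical simplifications chosen so that the sketch reproduces the representation above; the original rule set is not listed on these slides.

```python
# Hypothetical head rules and skip tags, chosen to reproduce the Q006 example;
# the original system's propagation rules are not listed on the slides.
SKIP_TAGS = {"DT", "IN", "VBD"}  # determiners, prepositions, the auxiliary "did"
WH_LABELS = {"Why": "REASON"}    # wh-adverb leaves relabeled with an answer type
HEAD_RULES = {"SBARQ": ["SQ"], "SQ": ["VP"], "VP": ["VB"], "PP": ["NP"], "WHADVP": ["WRB"]}


def head_index(label, children):
    """Index of the head child: NPs take their rightmost noun, others use HEAD_RULES."""
    if label == "NP":
        for i in range(len(children) - 1, -1, -1):
            if children[i][0].startswith("NN"):
                return i
    for wanted in HEAD_RULES.get(label, []):
        for i, child in enumerate(children):
            if child[0] == wanted:
                return i
    return 0


def propagate(tree, edges):
    """Bottom-up pass: return the node's label, collecting (head, dependent) links in edges."""
    label, body = tree
    if isinstance(body, str):  # leaf: (tag, word); skip-tag leaves get no label
        return None if label in SKIP_TAGS else WH_LABELS.get(body, body)
    labels = [propagate(child, edges) for child in body]
    h = head_index(label, body)
    for i, dep in enumerate(labels):  # link the head child to the other children
        if i != h and dep is not None and labels[h] is not None:
            edges.add((labels[h], dep))
    return labels[h]  # the parent takes the head child's label


Q006 = ("SBARQ", [
    ("WHADVP", [("WRB", "Why")]),
    ("SQ", [
        ("VBD", "did"),
        ("NP", [("NNP", "David"), ("NNP", "Koresh")]),
        ("VP", [
            ("VB", "ask"),
            ("NP", [("DT", "the"), ("NNP", "FBI")]),
            ("PP", [("IN", "for"),
                    ("NP", [("DT", "a"), ("NN", "word"), ("NN", "processor")])]),
        ]),
    ]),
])

edges = set()
print(propagate(Q006, edges))  # ask
print(sorted(edges))
# [('Koresh', 'David'), ('ask', 'FBI'), ('ask', 'Koresh'), ('ask', 'REASON'), ('ask', 'processor'), ('processor', 'word')]
```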


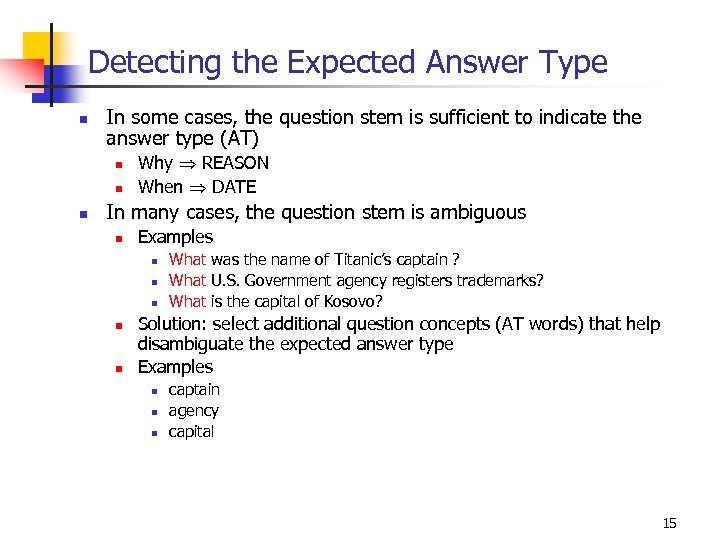

Detecting the Expected Answer Type
- In some cases, the question stem is sufficient to indicate the answer type (AT):
  - Why → REASON
  - When → DATE
- In many cases, the question stem is ambiguous. Examples:
  - What was the name of Titanic’s captain?
  - What U.S. Government agency registers trademarks?
  - What is the capital of Kosovo?
- Solution: select additional question concepts (AT words) that help disambiguate the expected answer type. Examples: captain, agency, capital.
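A minimal sketch of stem-based detection with an AT-word fallback. The lexicon AT_WORD_CATEGORY is a hypothetical stand-in built only from the example questions; the slides derive the category from a WordNet-based hierarchy instead. It needs Python 3.9+ for `str.removesuffix`.

```python
from typing import Optional

# Stems that determine the answer type on their own (from the slide).
UNAMBIGUOUS_STEMS = {"why": "REASON", "when": "DATE"}

# Hypothetical AT-word lexicon built from the example questions; a real system
# derives these categories from an answer type hierarchy instead.
AT_WORD_CATEGORY = {"captain": "PERSON", "agency": "ORGANIZATION", "capital": "CITY"}


def detect_answer_type(question: str) -> Optional[str]:
    words = question.rstrip("?").lower().split()
    if not words:
        return None
    if words[0] in UNAMBIGUOUS_STEMS:
        return UNAMBIGUOUS_STEMS[words[0]]
    # Ambiguous stem such as "What": look for an AT word elsewhere in the question.
    for word in words[1:]:
        word = word.removesuffix("’s").removesuffix("'s")
        if word in AT_WORD_CATEGORY:
            return AT_WORD_CATEGORY[word]
    return None  # no AT word found: the answer type stays undetermined


print(detect_answer_type("What is the capital of Kosovo?"))  # CITY
```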


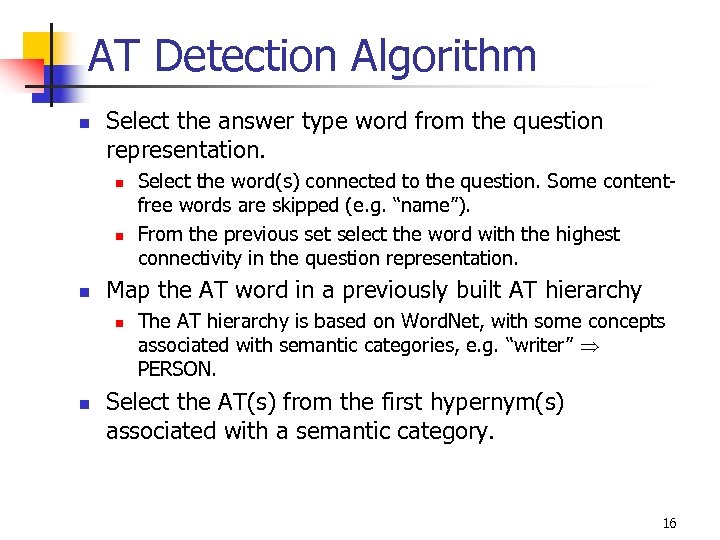

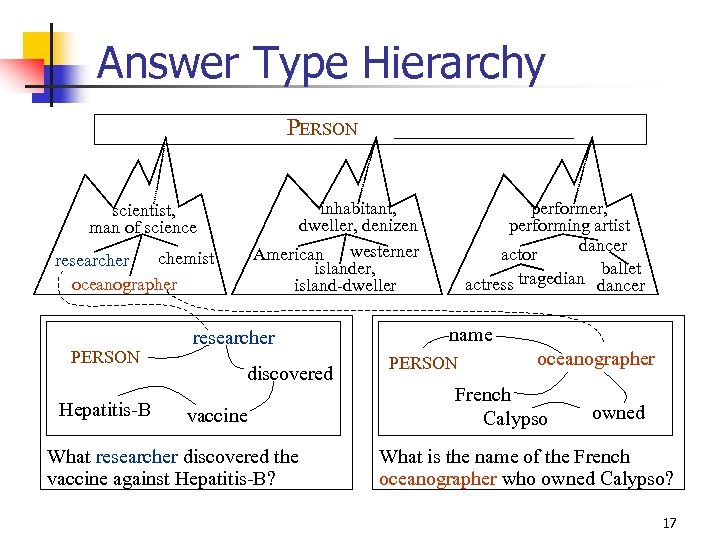

AT Detection Algorithm
- Select the answer type word from the question representation:
  - Select the word(s) connected to the question stem. Some content-free words are skipped (e.g. “name”).
  - From the previous set, select the word with the highest connectivity in the question representation.
- Map the AT word into a previously built AT hierarchy:
  - The AT hierarchy is based on WordNet, with some concepts associated with semantic categories, e.g. “writer” → PERSON.
  - Select the AT(s) from the first hypernym(s) associated with a semantic category.
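The two steps of the algorithm can be sketched over a question representation given as word-to-word links. The graph encoding, the toy HYPERNYM fragment (taken from the PERSON hierarchy on the next slide) and the alphabetical tie-breaking are assumptions made for illustration.

```python
from collections import defaultdict

CONTENT_FREE = {"name"}  # words looked through when searching for the AT word

# Toy fragment of a WordNet-style hierarchy (child -> hypernym), hypothetical encoding.
HYPERNYM = {
    "researcher": "scientist", "oceanographer": "scientist", "chemist": "scientist",
    "dancer": "performer", "actor": "performer",
    "scientist": "person", "performer": "person",
}
# Concepts associated with a semantic category.
CATEGORY = {"person": "PERSON", "writer": "PERSON"}


def select_at_word(edges, stem="What"):
    """Step 1: among words linked to the stem, pick the most connected one."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    candidates, frontier, seen = set(), [stem], {stem}
    while frontier:
        node = frontier.pop()
        for neighbor in graph[node]:
            if neighbor in seen:
                continue
            seen.add(neighbor)
            if neighbor in CONTENT_FREE:
                frontier.append(neighbor)  # skip "name" but follow its links
            else:
                candidates.add(neighbor)
    if not candidates:
        return None
    # Highest connectivity wins; sorting first makes ties deterministic.
    return max(sorted(candidates), key=lambda w: len(graph[w]))


def map_to_category(word):
    """Step 2: climb hypernyms until a concept tied to a semantic category is found."""
    while word is not None:
        if word in CATEGORY:
            return CATEGORY[word]
        word = HYPERNYM.get(word)
    return None


researcher_q = [("What", "researcher"), ("researcher", "discovered"),
                ("discovered", "vaccine"), ("vaccine", "Hepatitis-B")]
word = select_at_word(researcher_q)
print(word, map_to_category(word))  # researcher PERSON
```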


Answer Type Hierarchy
Fragment of the hierarchy under PERSON:
- PERSON
  - inhabitant, dweller, denizen
    - American
    - westerner
    - islander, island-dweller
  - scientist, man of science
    - chemist
    - researcher
    - oceanographer
  - performer, performing artist
    - dancer
      - ballet dancer
    - actor
      - tragedian
      - actress

Examples:
- What researcher discovered the vaccine against Hepatitis-B? The question representation links What to researcher (researcher, discovered, vaccine, Hepatitis-B); researcher maps to PERSON.
- What is the name of the French oceanographer who owned Calypso? “name” is skipped; the AT word oceanographer (linked to French and to owned, Calypso) maps to PERSON.


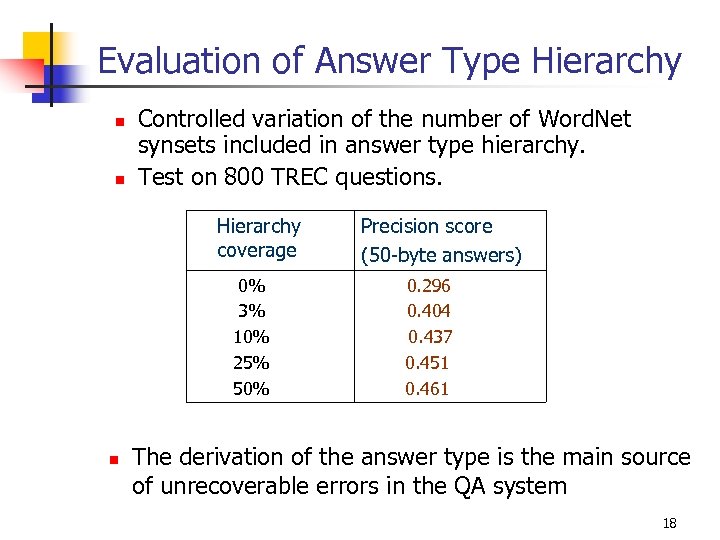

Evaluation of Answer Type Hierarchy
- Controlled variation of the number of WordNet synsets included in the answer type hierarchy.
- Test on 800 TREC questions.

| Hierarchy coverage | 0% | 3% | 10% | 25% | 50% |
|---|---|---|---|---|---|
| Precision score (50-byte answers) | 0.296 | 0.404 | 0.437 | 0.451 | 0.461 |

- The derivation of the answer type is the main source of unrecoverable errors in the QA system.


Keyword Selection
- The AT indicates what the question is looking for, but provides insufficient context to locate the answer in a very large document collection.
- Lexical terms (keywords) from the question, possibly expanded with lexical/semantic variations, provide the required context.


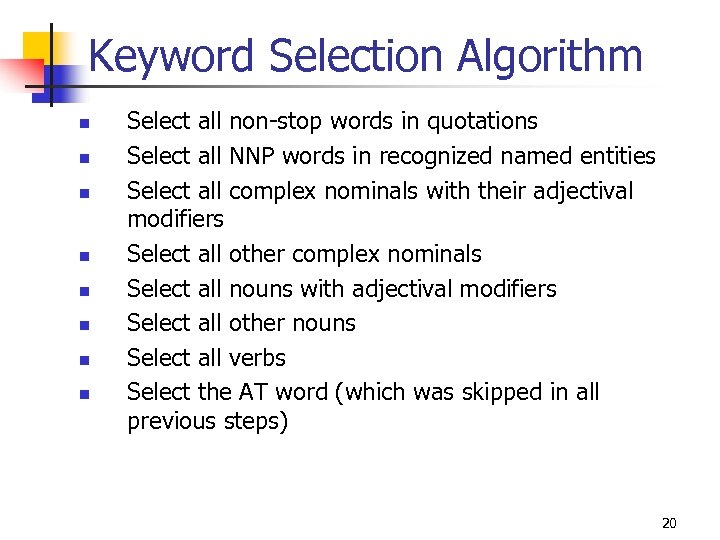

Keyword Selection Algorithm
1. Select all non-stop words in quotations
2. Select all NNP words in recognized named entities
3. Select all complex nominals with their adjectival modifiers
4. Select all other complex nominals
5. Select all nouns with adjectival modifiers
6. Select all other nouns
7. Select all verbs
8. Select the AT word (which was skipped in all previous steps)
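A sketch of the eight heuristics as a priority function over pre-annotated tokens. The Token fields (named-entity membership, complex-nominal membership, and so on) are hypothetical: in the real system they would come from the parser and the named entity recognizer. Keywords are returned in heuristic order, ties broken by position; with hand-made annotations it reproduces two of the examples on the next slide.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Token:
    lemma: str
    pos: str                         # Penn Treebank tag, e.g. NN, NNP, JJ, VBZ
    stop: bool = False               # stop word or content-free word such as "name"
    in_quotes: bool = False
    in_named_entity: bool = False
    in_complex_nominal: bool = False
    adj_modifier: bool = False       # an adjectival modifier, or a noun that has one
    is_at_word: bool = False


def heuristic(tok: Token) -> Optional[int]:
    """Number (1-8) of the first heuristic that selects tok, or None."""
    if tok.is_at_word:
        return 8                     # the AT word is skipped by steps 1-7
    if tok.stop:
        return None
    if tok.in_quotes:
        return 1
    if tok.in_named_entity and tok.pos.startswith("NNP"):
        return 2
    if tok.in_complex_nominal:
        return 3 if tok.adj_modifier else 4
    if tok.adj_modifier:
        return 5
    if tok.pos.startswith("NN"):
        return 6
    if tok.pos.startswith("VB"):
        return 7
    return None


def select_keywords(tokens):
    ranked = []
    for position, tok in enumerate(tokens):
        rank = heuristic(tok)
        if rank is not None:
            ranked.append((rank, position, tok.lemma))
    return [lemma for _, _, lemma in sorted(ranked)]


# "What is the name of the French oceanographer who owned Calypso?" (hand-annotated)
oceanographer_q = [
    Token("what", "WP", stop=True), Token("be", "VBZ", stop=True),
    Token("the", "DT", stop=True), Token("name", "NN", stop=True),
    Token("of", "IN", stop=True), Token("the", "DT", stop=True),
    Token("French", "JJ", adj_modifier=True),
    Token("oceanographer", "NN", adj_modifier=True, is_at_word=True),
    Token("who", "WP", stop=True), Token("own", "VBD"),
    Token("Calypso", "NNP", in_named_entity=True),
]
print(select_keywords(oceanographer_q))  # ['Calypso', 'French', 'own', 'oceanographer']
```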


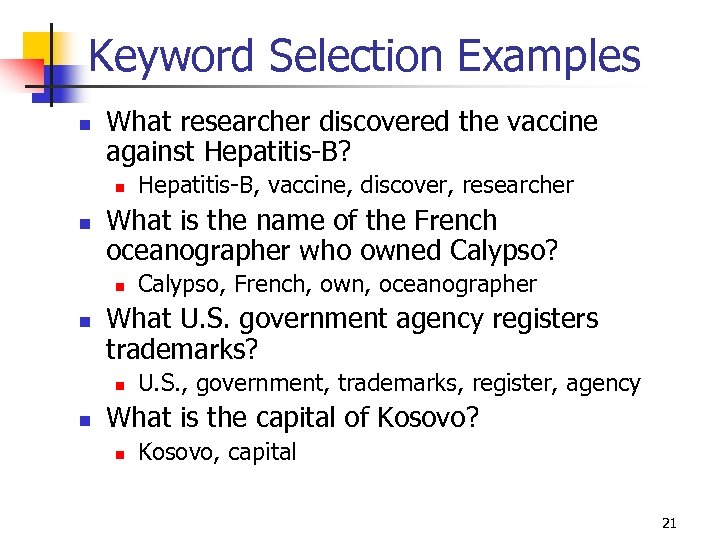

Keyword Selection Examples
- What researcher discovered the vaccine against Hepatitis-B?
  - Hepatitis-B, vaccine, discover, researcher
- What is the name of the French oceanographer who owned Calypso?
  - Calypso, French, own, oceanographer
- What U.S. government agency registers trademarks?
  - U.S., government, trademarks, register, agency
- What is the capital of Kosovo?
  - Kosovo, capital


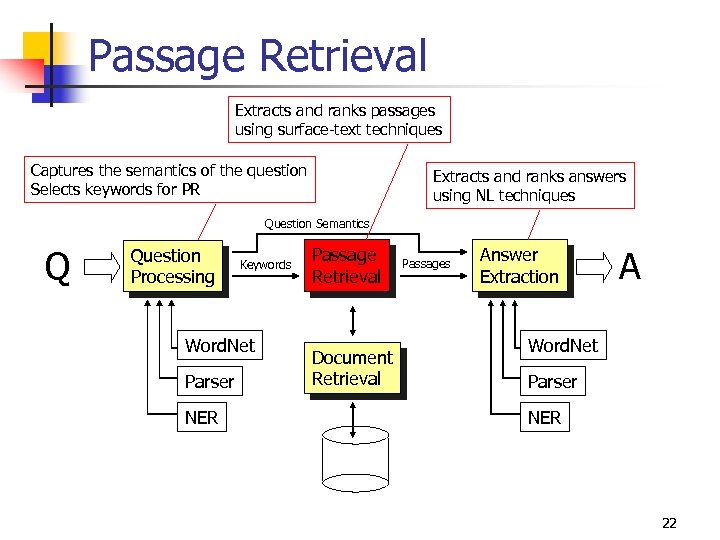

Passage Retrieval
Stage 2 of the QA block architecture (Q → Question Processing → Passage Retrieval → Answer Extraction → A): takes the keywords selected by Question Processing and, on top of Document Retrieval, extracts and ranks passages using surface-text techniques.


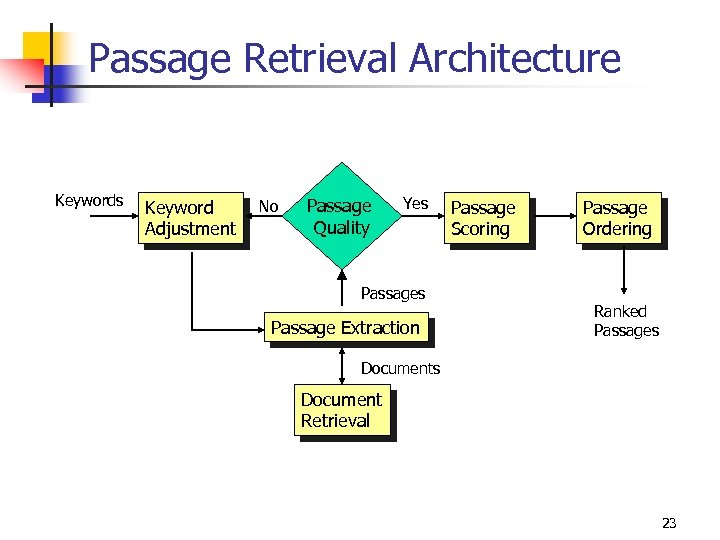

Passage Retrieval Architecture
Keywords → Keyword Adjustment → Document Retrieval → Passage Extraction → Passage Quality?
- No: return to Keyword Adjustment
- Yes: Passage Scoring → Passage Ordering → Ranked Passages


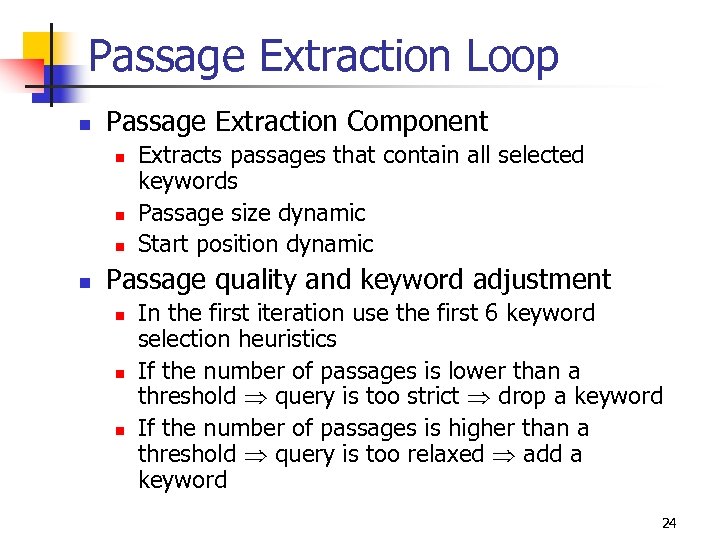

Passage Extraction Loop
- Passage Extraction Component
  - Extracts passages that contain all selected keywords
  - Passage size is dynamic
  - Start position is dynamic
- Passage quality and keyword adjustment
  - In the first iteration, use the first 6 keyword selection heuristics
  - If the number of passages is lower than a threshold, the query is too strict: drop a keyword
  - If the number of passages is higher than a threshold, the query is too relaxed: add a keyword
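A sketch of the keyword adjustment loop. The thresholds `low` and `high`, the iteration cap, and the use of case-insensitive substring matching as the passage extraction component are all assumptions; the slides do not give the threshold values. The toy passages are invented.

```python
def extract_passages(passages, keywords):
    """Passages containing every keyword (case-insensitive substring match,
    a crude stand-in for the real passage extraction component)."""
    return [p for p in passages if all(k.lower() in p.lower() for k in keywords)]


def passage_loop(passages, ranked_keywords, low=1, high=3, max_iter=10):
    """ranked_keywords: (keyword, heuristic_number) pairs sorted by heuristic number.
    low/high are hypothetical thresholds on the number of passages."""
    active = [k for k, h in ranked_keywords if h <= 6]   # first iteration: heuristics 1-6
    pending = [k for k, h in ranked_keywords if h > 6]
    hits = extract_passages(passages, active)
    for _ in range(max_iter):
        if len(hits) < low and len(active) > 1:
            pending.insert(0, active.pop())   # too strict: drop the lowest-priority keyword
        elif len(hits) > high and pending:
            active.append(pending.pop(0))     # too relaxed: add the next keyword
        else:
            break                             # quality acceptable, or no adjustment left
        hits = extract_passages(passages, active)
    return active, hits


keywords = [("Hepatitis-B", 2), ("vaccine", 6), ("discover", 7), ("researcher", 8)]
passages = [
    "a Hepatitis-B vaccine was discovered by a researcher",
    "Hepatitis-B vaccine coverage",
    "the Hepatitis-B vaccine schedule",
    "Hepatitis-B vaccine supply",
    "flu vaccine news",
]
print(passage_loop(passages, keywords))
# (['Hepatitis-B', 'vaccine', 'discover'], ['a Hepatitis-B vaccine was discovered by a researcher'])
```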


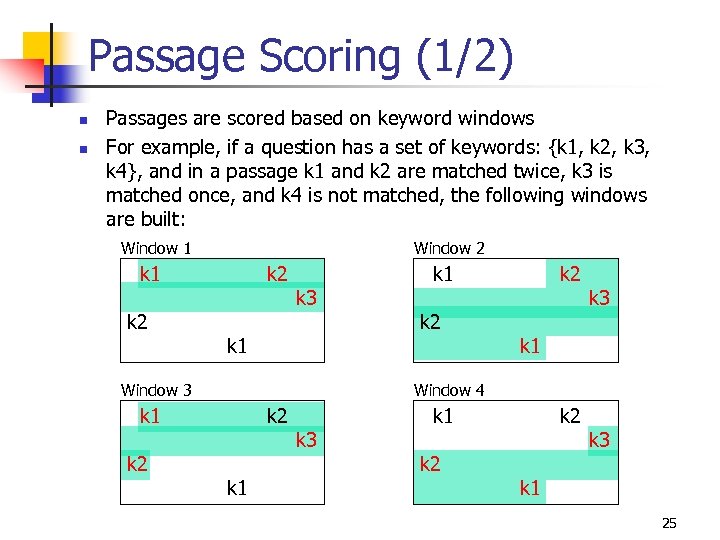

Passage Scoring (1/2)
- Passages are scored based on keyword windows.
- For example, if a question has the keyword set {k1, k2, k3, k4}, and in a passage k1 and k2 are each matched twice, k3 is matched once and k4 is not matched, the following four windows are built (2 × 2 × 1 combinations of one k1 match, one k2 match and the k3 match):
  [Figure: Windows 1 to 4 over the same passage]
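Window construction can be sketched with `itertools.product`: every combination of one occurrence per matched keyword yields one window spanning from its first to its last keyword. The toy token sequence is invented; only the match counts (k1 and k2 twice, k3 once, k4 never) follow the example.

```python
from itertools import product


def keyword_windows(tokens, keywords):
    """One window per combination of matches: each matched keyword contributes
    one occurrence, and the window spans from the first to the last of them."""
    positions = {k: [i for i, t in enumerate(tokens) if t == k] for k in keywords}
    matched = [k for k in keywords if positions[k]]
    missing = [k for k in keywords if not positions[k]]
    if not matched:
        return [], missing
    windows = [(min(combo), max(combo))
               for combo in product(*(positions[k] for k in matched))]
    return windows, missing


# k1 and k2 occur twice, k3 once, k4 never (toy token sequence).
passage = ["k1", "k2", "x", "k3", "k2", "k1"]
windows, missing = keyword_windows(passage, ["k1", "k2", "k3", "k4"])
print(len(windows), windows, missing)
# 4 [(0, 3), (0, 4), (1, 5), (3, 5)] ['k4']
```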


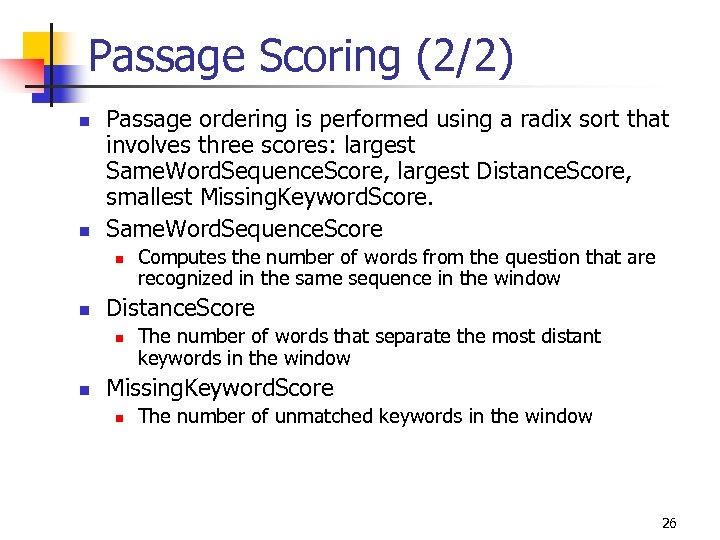

Passage Scoring (2/2)
- Passage ordering is performed using a radix sort that involves three scores: largest SameWordSequenceScore, largest DistanceScore, smallest MissingKeywordScore.
- SameWordSequenceScore: the number of words from the question that are recognized in the same sequence in the window
- DistanceScore: the number of words that separate the most distant keywords in the window
- MissingKeywordScore: the number of unmatched keywords in the window
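A sketch of the ordering as a least-significant-key-first radix sort built from stable sorts. The score values are invented, and the direction of each key (including largest DistanceScore) follows the slide as written. A single sort on the tuple (-SameWordSequence, -Distance, MissingKeywords) gives the same order.

```python
from typing import NamedTuple


class Window(NamedTuple):
    name: str
    same_word_sequence: int  # SameWordSequenceScore
    distance: int            # DistanceScore
    missing_keywords: int    # MissingKeywordScore


def radix_order(windows):
    """LSD radix-style ordering: stable sorts from the least to the most significant key."""
    ordered = sorted(windows, key=lambda w: w.missing_keywords)                  # smallest first
    ordered = sorted(ordered, key=lambda w: w.distance, reverse=True)            # largest first
    ordered = sorted(ordered, key=lambda w: w.same_word_sequence, reverse=True)  # largest first
    return ordered


windows = [Window("a", 2, 3, 1), Window("b", 2, 3, 0), Window("c", 3, 1, 2), Window("d", 2, 5, 1)]
print([w.name for w in radix_order(windows)])  # ['c', 'd', 'b', 'a']
```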


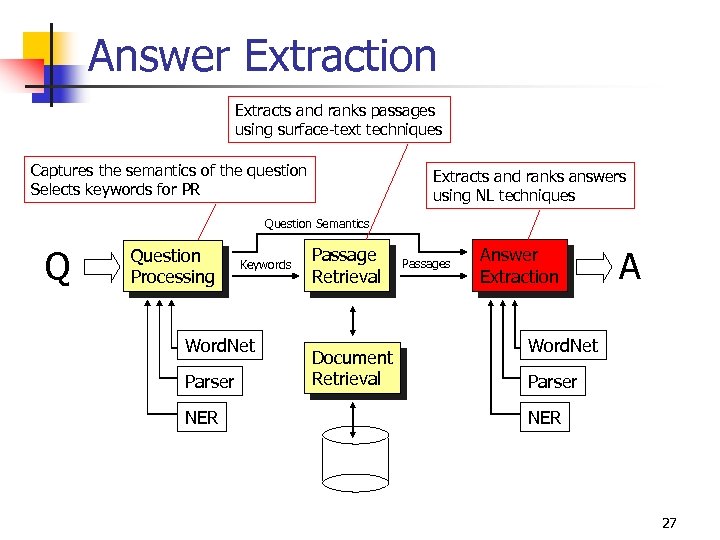

Answer Extraction
Stage 3 of the QA block architecture (Q → Question Processing → Passage Retrieval → Answer Extraction → A): takes the passages from Passage Retrieval and the question semantics from Question Processing, and extracts and ranks answers using NL techniques (WordNet, parser, NER).


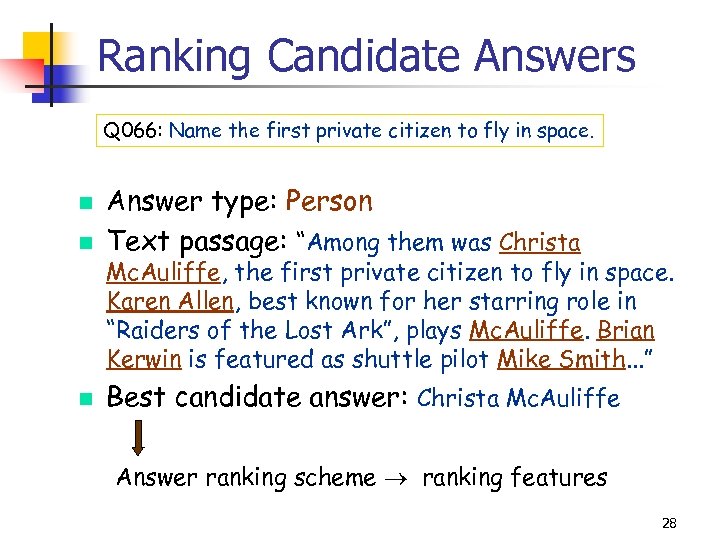

Ranking Candidate Answers
Q066: Name the first private citizen to fly in space.
- Answer type: Person
- Text passage: “Among them was Christa McAuliffe, the first private citizen to fly in space. Karen Allen, best known for her starring role in “Raiders of the Lost Ark”, plays McAuliffe. Brian Kerwin is featured as shuttle pilot Mike Smith...”
- Best candidate answer: Christa McAuliffe
- Candidate answers are ordered by an answer ranking scheme that combines several ranking features.


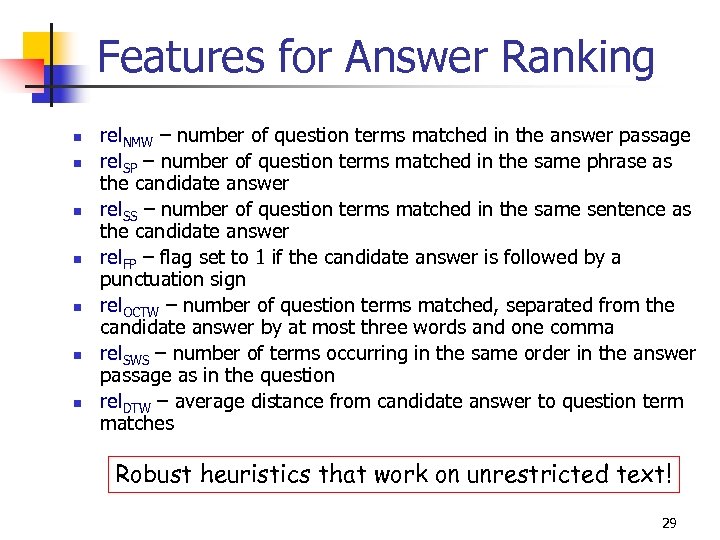

Features for Answer Ranking
- rel_NMW: number of question terms matched in the answer passage
- rel_SP: number of question terms matched in the same phrase as the candidate answer
- rel_SS: number of question terms matched in the same sentence as the candidate answer
- rel_FP: flag set to 1 if the candidate answer is followed by a punctuation sign
- rel_OCTW: number of question terms matched, separated from the candidate answer by at most three words and one comma
- rel_SWS: number of terms occurring in the same order in the answer passage as in the question
- rel_DTW: average distance from the candidate answer to question term matches

Robust heuristics that work on unrestricted text!


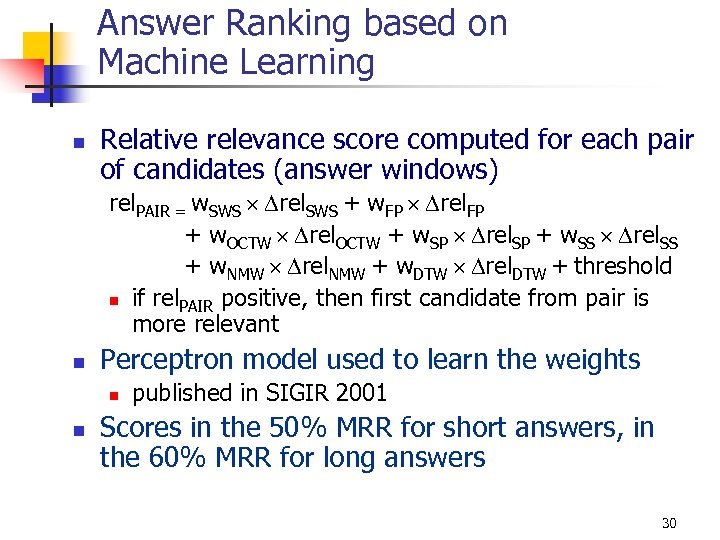

Answer Ranking based on Machine Learning
- A relative relevance score is computed for each pair of candidates (answer windows):

$$\mathit{rel}_{PAIR} = w_{SWS}\,\mathit{rel}_{SWS} + w_{FP}\,\mathit{rel}_{FP} + w_{OCTW}\,\mathit{rel}_{OCTW} + w_{SP}\,\mathit{rel}_{SP} + w_{SS}\,\mathit{rel}_{SS} + w_{NMW}\,\mathit{rel}_{NMW} + w_{DTW}\,\mathit{rel}_{DTW} + \mathit{threshold}$$

- If rel_PAIR is positive, the first candidate of the pair is more relevant.
- A perceptron model is used to learn the weights [published in SIGIR 2001].
- Scores in the 50% MRR range for short answers and in the 60% MRR range for long answers.
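A minimal pairwise perceptron sketch for learning the weights in rel_PAIR. It assumes each relative feature is the difference between the two candidates' individual feature values, uses invented feature values, and ranks candidates by counting pairwise wins; the actual feature normalization, training data and ranking procedure of the SIGIR 2001 work are not given here.

```python
FEATURES = ["SWS", "FP", "OCTW", "SP", "SS", "NMW", "DTW"]


def rel_pair(weights, threshold, a, b):
    """Relative relevance of candidate a over candidate b.
    Assumption: each relative feature is feature(a) - feature(b)."""
    return sum(weights[f] * (a[f] - b[f]) for f in FEATURES) + threshold


def train_perceptron(pairs, epochs=20, lr=1.0):
    """pairs: (a, b, label) with label +1 if a is more relevant than b, else -1."""
    weights = {f: 0.0 for f in FEATURES}
    threshold = 0.0
    for _ in range(epochs):
        errors = 0
        for a, b, label in pairs:
            if label * rel_pair(weights, threshold, a, b) <= 0:  # wrong side of (or on) the boundary
                for f in FEATURES:
                    weights[f] += lr * label * (a[f] - b[f])
                threshold += lr * label
                errors += 1
        if errors == 0:  # every training pair is ordered correctly
            break
    return weights, threshold


def rank(candidates, weights, threshold):
    """Order candidates by the number of pairwise comparisons each one wins."""
    wins = [sum(rel_pair(weights, threshold, a, b) > 0
                for j, b in enumerate(candidates) if j != i)
            for i, a in enumerate(candidates)]
    order = sorted(range(len(candidates)), key=lambda i: -wins[i])
    return [candidates[i] for i in order]


good = {"SWS": 2, "FP": 1, "OCTW": 2, "SP": 1, "SS": 3, "NMW": 4, "DTW": 2}
bad = {"SWS": 0, "FP": 0, "OCTW": 0, "SP": 0, "SS": 1, "NMW": 2, "DTW": 6}
weights, threshold = train_perceptron([(good, bad, +1), (bad, good, -1)])
print(rank([bad, good], weights, threshold)[0] is good)  # True
```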


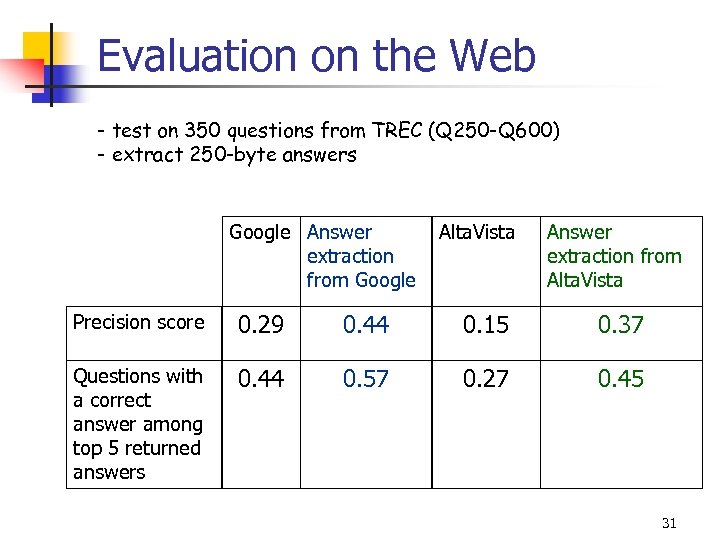

Evaluation on the Web
- Test on 350 questions from TREC (Q250–Q600)
- Extract 250-byte answers

| Measure | Google | Answer extraction from Google | AltaVista | Answer extraction from AltaVista |
|---|---|---|---|---|
| Precision score | 0.29 | 0.44 | 0.15 | 0.37 |
| Questions with a correct answer among top 5 returned answers | 0.44 | 0.57 | 0.27 | 0.45 |


System Extension: Answer Justification
- Experiments with Open-Domain Textual Question Answering. Sanda Harabagiu, Marius Paşca and Steve Maiorano.
- Answer justification using unnamed relations extracted from the question representation and the answer representation (constructed through a similar process).


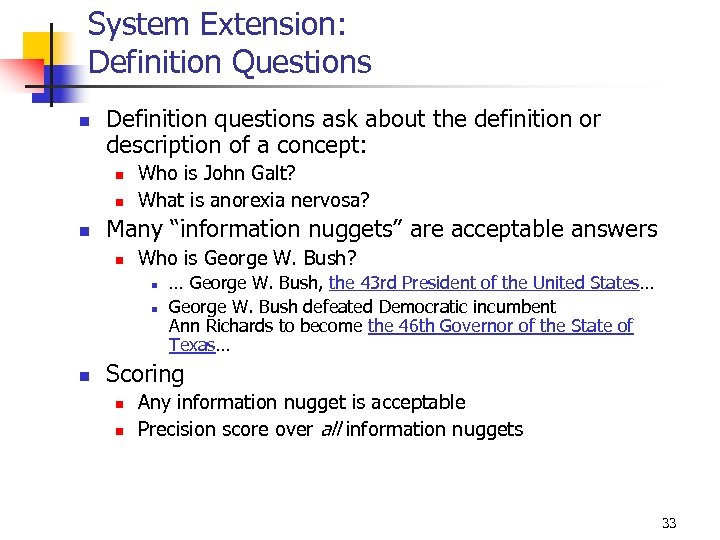

System Extension: Definition Questions
- Definition questions ask about the definition or description of a concept:
  - Who is John Galt?
  - What is anorexia nervosa?
- Many “information nuggets” are acceptable answers. Who is George W. Bush?
  - … George W. Bush, the 43rd President of the United States…
  - George W. Bush defeated Democratic incumbent Ann Richards to become the 46th Governor of the State of Texas…
- Scoring
  - Any information nugget is acceptable
  - Precision score over all information nuggets


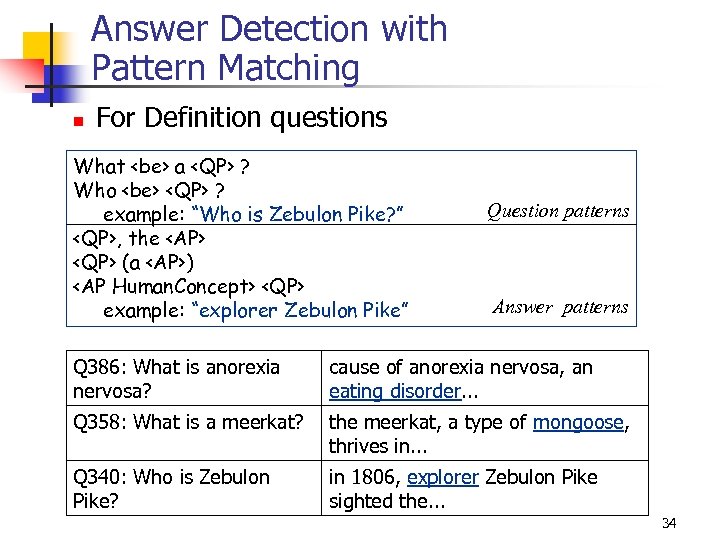

Answer Detection with Pattern Matching
- For Definition questions What


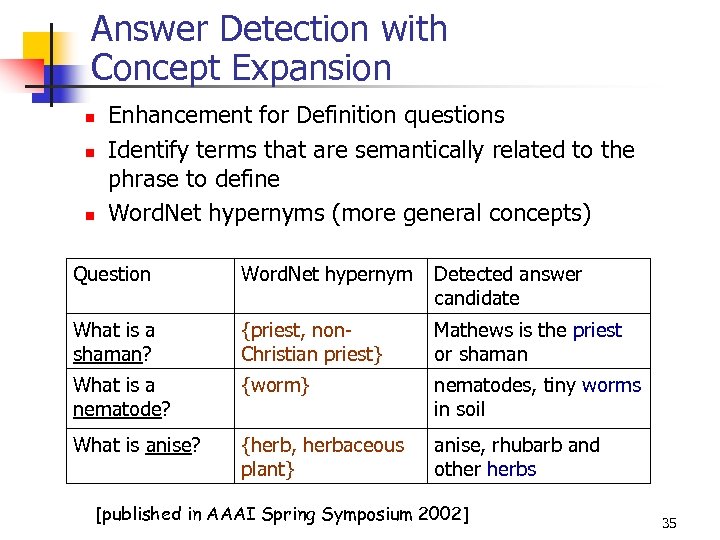

Answer Detection with Concept Expansion
- Enhancement for Definition questions
- Identify terms that are semantically related to the phrase to define
- Use WordNet hypernyms (more general concepts)

| Question | WordNet hypernym | Detected answer candidate |
|---|---|---|
| What is a shaman? | {priest, non-Christian priest} | Mathews is the priest or shaman |
| What is a nematode? | {worm} | nematodes, tiny worms in soil |
| What is anise? | {herb, herbaceous plant} | anise, rhubarb and other herbs |

[published in AAAI Spring Symposium 2002]
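A sketch of concept expansion using the hypernyms from the table. The hard-coded HYPERNYMS dictionary replaces a real WordNet lookup, and the plural stripping and five-token proximity window are hypothetical choices, not taken from the slides.

```python
import re

# Hypernym sets from the table, hard-coded in place of a WordNet lookup.
HYPERNYMS = {
    "shaman": ["priest", "non-Christian priest"],
    "nematode": ["worm"],
    "anise": ["herb", "herbaceous plant"],
}


def normalize(word):
    """Lowercase and strip a plural 's' (a crude, hypothetical stemmer)."""
    w = word.lower()
    return w[:-1] if w.endswith("s") and len(w) > 3 else w


def tokens(text):
    return [normalize(t) for t in re.findall(r"[A-Za-z][A-Za-z\-]*", text)]


def detect_candidate(term, passage, window=5):
    """True if a hypernym of term occurs within `window` tokens of term in passage."""
    toks = tokens(passage)
    term_positions = [i for i, t in enumerate(toks) if t == normalize(term)]
    for hypernym in HYPERNYMS.get(term, []):
        h = tokens(hypernym)
        for j in range(len(toks) - len(h) + 1):
            if toks[j:j + len(h)] == h and any(abs(j - i) <= window for i in term_positions):
                return True
    return False


print(detect_candidate("nematode", "nematodes, tiny worms in soil"))  # True
```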


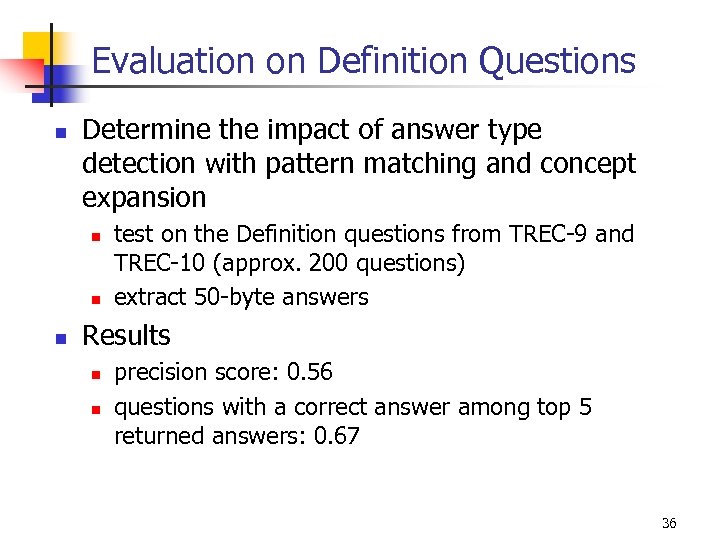

Evaluation on Definition Questions
- Determine the impact of answer type detection with pattern matching and concept expansion
- Test on the Definition questions from TREC-9 and TREC-10 (approx. 200 questions)
- Extract 50-byte answers
- Results
  - Precision score: 0.56
  - Questions with a correct answer among the top 5 returned answers: 0.67


References
- Marius Paşca. High-Performance, Open-Domain Question Answering from Large Text Collections. Ph.D. Thesis, Computer Science and Engineering Department, Southern Methodist University, Dallas, Texas. Defended September 2001.
- Marius Paşca. Open-Domain Question Answering from Large Text Collections. Center for the Study of Language and Information (CSLI Publications, series: Studies in Computational Linguistics), Stanford, California, 2003. Distributed by the University of Chicago Press. ISBN (paperback): 1575864282; ISBN (cloth): 1575864274.


Overview

- What is Question Answering?
- A “traditional” system
- Other relevant approaches
  - LCC’s PowerAnswer + COGEX
  - IBM’s PIQUANT
  - CMU’s Javelin
  - ISI’s TextMap
  - BBN’s AQUA
- Distributed Question Answering


PowerAnswer + COGEX (1/2)

- Automated reasoning for QA: A ⇒ Q, using a logic prover.
- Facilitates both answer validation and answer extraction.
- Both the question and the answer(s) are transformed into logic forms. Example:
  - Heavy selling of Standard & Poor’s 500-stock index futures in Chicago relentlessly beat stocks downwards.
  - `Heavy_JJ(x1) & selling_NN(x1) & of_IN(x1, x6) & Standard_NN(x2) & &_CC(x13, x2, x3) & Poor(x3) & 's_POS(x6, x13) & 500-stock_JJ(x6) & index_NN(x4) & futures(x5) & nn_NNC(x6, x4, x5) & in_IN(x1, x8) & Chicago_NNP(x8) & relentlessly_RB(e12) & beat_VB(e12, x1, x9) & stocks_NN(x9) & downward_RB(e12)`


PowerAnswer + COGEX (2/2)

- World knowledge from:
  - WordNet glosses converted to logic forms in the eXtended WordNet (XWN) project (http://www.utdallas.edu/~moldovan)
  - Lexical chains, e.g. `game:n#3 HYPERNYM recreation:n#1 HYPONYM sport:n#1` and `Argentine:a#1 GLOSS Argentina:n#1`
  - NLP axioms to handle complex NPs, coordinations, appositions, equivalence classes for prepositions, etc.
  - Named-entity recognizer, e.g. John Galt → HUMAN
- A relaxation mechanism iteratively uncouples predicates and removes terms from the logic forms (LFs). Proofs are penalized according to the amount of relaxation involved.
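The relaxation idea can be illustrated with a toy stand-in for the prover: a "proof" succeeds when every remaining question predicate name also appears in the answer logic form, and each predicate dropped to reach that point costs a fixed penalty. This is a hypothetical sketch only; it ignores arguments, unification, and world-knowledge axioms, which a real prover such as COGEX handles, and the penalty value is an assumption.

```python
def relaxed_proof_score(question_preds, answer_preds, penalty=0.1):
    """Toy relaxation: drop unmatched question predicates one at a time.

    Returns (score, remaining_predicates). Predicate arguments are ignored,
    so this is only a subset check on predicate names, not a real proof.
    """
    available = set(answer_preds)
    remaining = list(question_preds)
    dropped = 0
    while any(p not in available for p in remaining):
        # Relax: remove the first predicate that cannot be matched.
        first_unmatched = next(p for p in remaining if p not in available)
        remaining.remove(first_unmatched)
        dropped += 1
    return max(1.0 - penalty * dropped, 0.0), remaining


# Predicate names taken from the logic form of the example sentence.
answer_lf = ["Heavy_JJ", "selling_NN", "of_IN", "Standard_NN", "Poor", "index_NN",
             "futures", "in_IN", "Chicago_NNP", "relentlessly_RB", "beat_VB",
             "stocks_NN", "downward_RB"]
print(relaxed_proof_score(["selling_NN", "beat_VB", "stocks_NN"], answer_lf))
print(relaxed_proof_score(["selling_NN", "beat_VB", "bonds_NN"], answer_lf))
```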


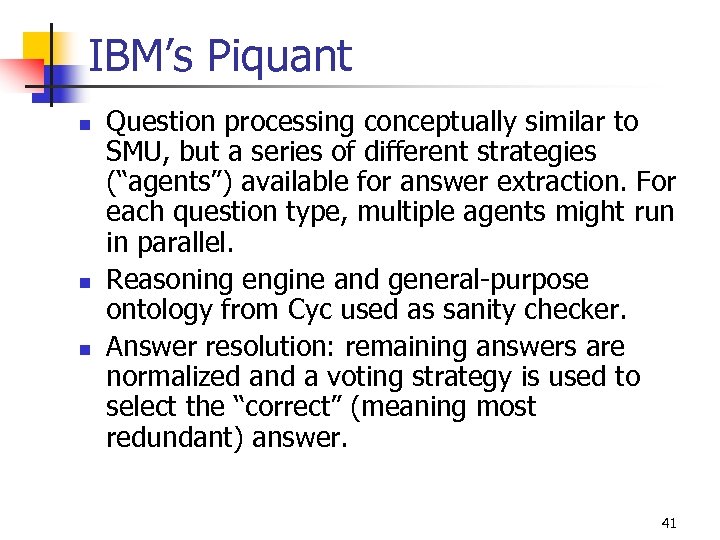

IBM’s Piquant

- Question processing is conceptually similar to SMU’s, but a series of different strategies (“agents”) is available for answer extraction. For each question type, multiple agents might run in parallel.
- A reasoning engine and a general-purpose ontology from Cyc are used as a sanity checker.
- Answer resolution: the remaining answers are normalized and a voting strategy selects the “correct” (i.e. most redundant) answer.
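A minimal sketch of normalize-then-vote answer resolution: the most redundant normalized answer wins. The `normalize` function is a hypothetical, deliberately crude normalizer (lower-casing, dropping punctuation and a leading "the"); PIQUANT's actual normalization is not described on the slide.

```python
import re
from collections import Counter


def normalize(answer):
    """Hypothetical normalizer: lower-case, drop punctuation and a leading 'the'."""
    text = re.sub(r"[^\w\s]", "", answer.lower()).strip()
    text = re.sub(r"^the\s+", "", text)
    return " ".join(text.split())


def vote(agent_answers):
    """Return the normalized answer proposed most often across agents."""
    counts = Counter(normalize(a) for a in agent_answers)
    answer, _ = counts.most_common(1)[0]
    return answer


print(vote(["The Vatican", "Vatican", "Rome", "vatican."]))  # vatican
```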


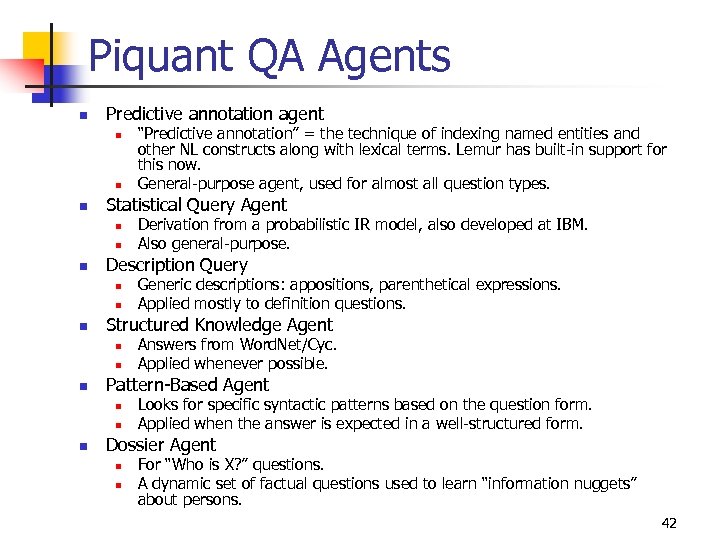

Piquant QA Agents

- Predictive annotation agent
  - “Predictive annotation” = the technique of indexing named entities and other NL constructs along with lexical terms. Lemur has built-in support for this now.
  - General-purpose agent, used for almost all question types.
- Statistical Query Agent
  - Derivation from a probabilistic IR model, also developed at IBM.
  - Also general-purpose.
- Description Query
  - Generic descriptions: appositions, parenthetical expressions.
  - Applied mostly to definition questions.
- Structured Knowledge Agent
  - Answers from WordNet/Cyc.
  - Applied whenever possible.
- Pattern-Based Agent
  - Looks for specific syntactic patterns based on the question form.
  - Applied when the answer is expected in a well-structured form.
- Dossier Agent
  - For “Who is X?” questions.
  - A dynamic set of factual questions is used to learn “information nuggets” about persons.


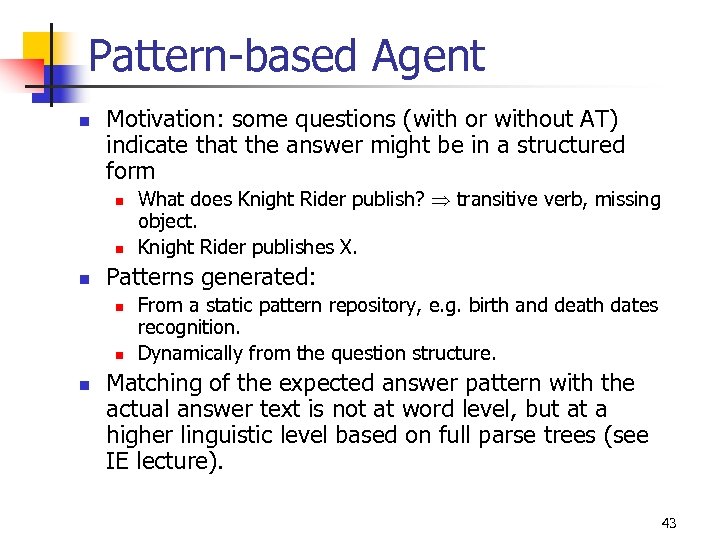

Pattern-based Agent

- Motivation: some questions (with or without an answer type, AT) indicate that the answer might be in a structured form, e.g.:
  - What does Knight Rider publish? → transitive verb, missing object → Knight Rider publishes X.
- Patterns are generated:
  - from a static pattern repository, e.g. for birth and death date recognition;
  - dynamically from the question structure.
- Matching of the expected answer pattern against the actual answer text is not done at the word level, but at a higher linguistic level based on full parse trees (see IE lecture).
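A word-level sketch of generating an answer pattern dynamically from the question structure, for the "What does S V?" (transitive verb, missing object) case. This is a hypothetical simplification: the agent described above matches at the level of full parse trees, and the `third_person` helper covers only common English inflection rules.

```python
import re


def third_person(verb):
    """Naive third-person-singular inflection (publish -> publishes)."""
    if verb.endswith(("s", "sh", "ch", "x", "z", "o")):
        return verb + "es"
    if verb.endswith("y") and verb[-2:-1] not in "aeiou":
        return verb[:-1] + "ies"
    return verb + "s"


def answer_pattern(question):
    """Turn 'What does <subject> <verb>?' into a regex for '<subject> <verb>s X'."""
    m = re.fullmatch(r"What does (?P<subj>.+?) (?P<verb>\w+)\?", question.strip())
    if m is None:
        return None  # question form not covered by this pattern
    subj = re.escape(m.group("subj"))
    verb = re.escape(third_person(m.group("verb")))
    # The missing object X is captured up to the next punctuation mark.
    return re.compile(rf"{subj} {verb} (?P<X>[^.,;]+)")


pattern = answer_pattern("What does Knight Rider publish?")
match = pattern.search("Knight Rider publishes the Miami Herald, among others.")
print(match.group("X"))  # the Miami Herald
```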


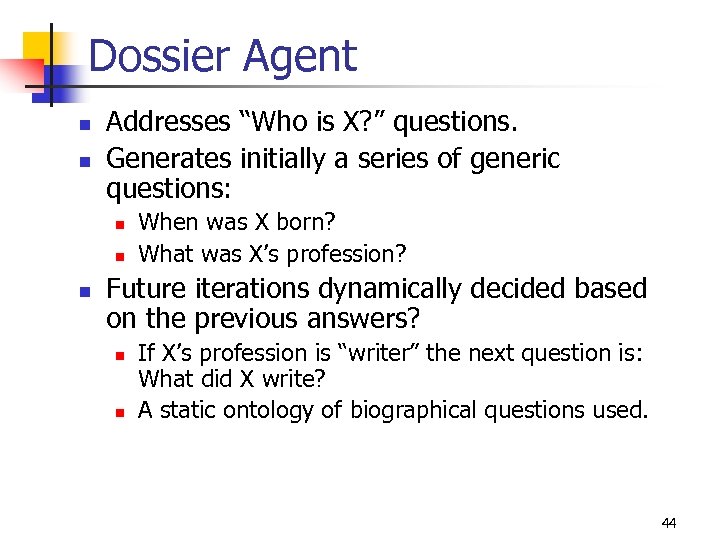

Dossier Agent

- Addresses “Who is X?” questions.
- Initially generates a series of generic questions:
  - When was X born?
  - What was X’s profession?
- Future iterations are decided dynamically based on the previous answers:
  - If X’s profession is “writer”, the next question is: What did X write?
- A static ontology of biographical questions is used.
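A minimal sketch of the dossier loop: ask the generic questions first, then enqueue follow-ups chosen from a static ontology keyed by earlier answers. `GENERIC_QUESTIONS`, `FOLLOW_UPS`, and the `ask` callable are hypothetical names; `ask` stands in for the factual QA system, and here it is just a dictionary lookup.

```python
# Hypothetical static ontology of biographical questions.
GENERIC_QUESTIONS = ["When was X born?", "What was X's profession?"]
FOLLOW_UPS = {
    ("What was X's profession?", "writer"): "What did X write?",
    ("What was X's profession?", "painter"): "What did X paint?",
}


def build_dossier(person, ask, max_questions=10):
    """Collect answers about `person`, choosing follow-ups from earlier answers.

    `ask` maps a concrete question string to an answer string (or None).
    """
    dossier = {}
    pending = list(GENERIC_QUESTIONS)
    while pending and len(dossier) < max_questions:
        template = pending.pop(0)
        answer = ask(template.replace("X", person))
        dossier[template] = answer
        follow_up = FOLLOW_UPS.get((template, answer))
        if follow_up and follow_up not in dossier:
            pending.append(follow_up)
    return dossier


facts = {
    "When was Mark Twain born?": "1835",
    "What was Mark Twain's profession?": "writer",
    "What did Mark Twain write?": "Tom Sawyer",
}
print(build_dossier("Mark Twain", facts.get))
```

Because the follow-up is only enqueued for the answer "writer", a different profession leads to a different (or no) next question, which is the dynamic behaviour the slide describes.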


Cyc Sanity Checker

- Post-processing component that:
  - Rejects insane answers, e.g. “How much does a grey wolf weigh?” → “300 tons”: a grey wolf IS-A wolf, and the weight of a wolf is known in Cyc.
  - Returns SANE, INSANE, or DON’T KNOW.
  - Boosts answer confidence when the answer is SANE.
- Typically called for numerical answer types:
  - What is the population of Maryland?
  - How much does a grey wolf weigh?
  - How high is Mt. Hood?
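A toy version of the sanity check: climb an IS-A hierarchy until a concept with a known weight range is found, then return SANE, INSANE, or DON'T KNOW. The `IS_A` and `WEIGHT_RANGE_KG` tables, the wolf range, and the boost factor are all illustrative assumptions; this is not Cyc's API or data.

```python
from enum import Enum


class Verdict(Enum):
    SANE = "SANE"
    INSANE = "INSANE"
    DONT_KNOW = "DON'T KNOW"


# Hypothetical miniature knowledge base (illustrative values only).
IS_A = {"grey wolf": "wolf", "wolf": "mammal"}
WEIGHT_RANGE_KG = {"wolf": (20.0, 90.0)}


def check_weight(entity, weight_kg):
    """Walk up the IS-A chain until a concept with a known weight range appears."""
    concept = entity
    while concept not in WEIGHT_RANGE_KG:
        if concept not in IS_A:
            return Verdict.DONT_KNOW
        concept = IS_A[concept]
    low, high = WEIGHT_RANGE_KG[concept]
    return Verdict.SANE if low <= weight_kg <= high else Verdict.INSANE


def adjust_confidence(confidence, verdict, boost=1.2):
    """Reject INSANE answers (None), boost SANE ones, leave DON'T KNOW unchanged."""
    if verdict is Verdict.INSANE:
        return None
    if verdict is Verdict.SANE:
        return min(confidence * boost, 1.0)
    return confidence


print(check_weight("grey wolf", 300_000))  # 300 tons -> Verdict.INSANE
print(check_weight("grey wolf", 40))       # Verdict.SANE
```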


Answer Resolution

- Called when multiple agents are applied to the same question.
- Distribution of agents: the predictive-annotation and statistical agents are by far the most common.
- Each agent provides a canonical answer (e.g. a normalized named entity) and a confidence score.
- The final confidence for each candidate answer is computed using an ML model (SVM).
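A sketch of how per-agent confidences might be combined: each canonical answer gets a feature vector of agent confidences, scored with a linear decision function w·x + b, the form a linear SVM produces. The `WEIGHTS` and `BIAS` values are hypothetical placeholders, not a trained IBM model.

```python
from collections import defaultdict

# Hypothetical weights and bias, as if learned by a linear SVM.
WEIGHTS = {
    "predictive_annotation": 1.0,
    "statistical": 0.8,
    "description": 0.7,
    "pattern": 0.6,
    "structured": 0.9,
    "dossier": 0.7,
}
BIAS = -0.5


def resolve(proposals):
    """proposals: (agent, canonical_answer, confidence) triples; best answer first."""
    features = defaultdict(dict)
    for agent, answer, confidence in proposals:
        previous = features[answer].get(agent, 0.0)
        features[answer][agent] = max(previous, confidence)  # one feature per agent
    scores = {
        answer: BIAS + sum(WEIGHTS[agent] * conf for agent, conf in feats.items())
        for answer, feats in features.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = resolve([
    ("predictive_annotation", "1906", 0.7),
    ("statistical", "1906", 0.6),
    ("pattern", "1989", 0.9),
])
print(ranking[0][0])  # 1906
```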


CMU’s Javelin

- The architecture combines SMU’s and IBM’s approaches.
- Question processing is close to SMU’s approach.
- The passage retrieval loop is conceptually similar to SMU’s, but with an elegant implementation.
- Multiple answer strategies, similar to IBM’s system. All of them are based on ML models (k-nearest neighbours, decision trees) that use shallow-text features (close to SMU’s).
- Answer voting, similar to IBM’s, is used to exploit answer redundancy.


Javelin’s Retrieval Strategist

- Implements passage retrieval, including the passage retrieval loop.
- Uses the Inquiry IR system (probably Lemur by now).
- The retrieval loop initially requires all keywords to occur in close proximity to each other (stricter than SMU).
- Subsequent iterations relax the following query terms:
  - proximity for all question keywords: 20, 100, 250, AND
  - phrase proximity for phrase operators: less than 3 words or PHRASE
  - phrase proximity for named entities: less than 3 words or PHRASE
  - inclusion/exclusion of the AT (answer type) word
- Accuracy for TREC-11 queries, i.e. how many questions had at least one correct document in the top N documents:
  - top 30 docs: 80%
  - top 60 docs: 85%
  - top 120 docs: 86%
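A sketch of the keyword-proximity part of the relaxation loop, using the window sizes listed above (20, 100, 250 tokens, then plain AND). The `min_docs` stopping criterion and the token-level matching are assumptions for illustration; the phrase and AT-word relaxations are omitted, and Inquiry/Lemur query operators are not used.

```python
# Proximity windows (in tokens) tried in order; "AND" means no proximity limit.
PROXIMITY_STEPS = [20, 100, 250, "AND"]


def matches(doc, keywords, window):
    """True if every keyword occurs in doc, within `window` tokens unless window is "AND"."""
    tokens = doc.lower().split()
    wanted = {k.lower() for k in keywords}
    if window == "AND":
        return wanted <= set(tokens)
    starts = max(1, len(tokens) - window + 1)
    return any(wanted <= set(tokens[i:i + window]) for i in range(starts))


def retrieval_loop(docs, keywords, min_docs=1):
    """Relax the proximity constraint until at least min_docs documents match."""
    hits = []
    for window in PROXIMITY_STEPS:
        hits = [d for d in docs if matches(d, keywords, window)]
        if len(hits) >= min_docs:
            return window, hits
    return None, hits  # even plain AND did not yield enough documents


docs = ["cotton gin invented by Whitney", "cotton " + "filler " * 30 + "gin"]
print(retrieval_loop(docs, ["cotton", "gin"], min_docs=2)[0])  # 100
```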


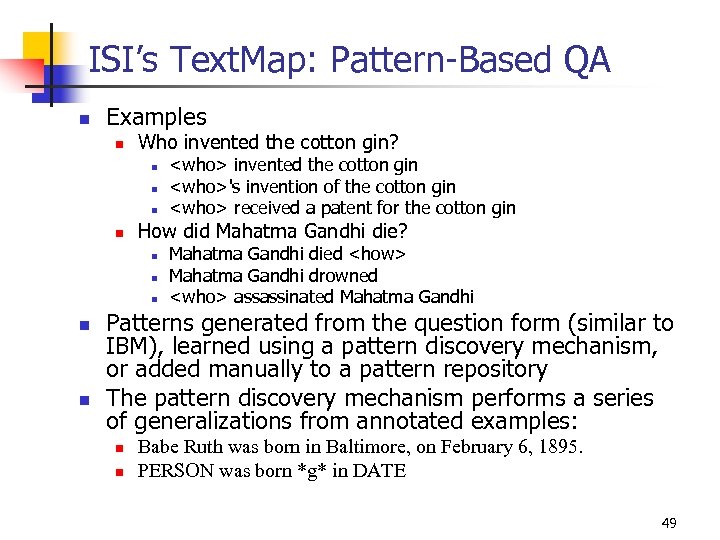

ISI’s TextMap: Pattern-Based QA

- Examples:
  - Who invented the cotton gin?
  - How did Mahatma Gandhi die?


TextMap: QA Machine Translation

- In machine translation, one collects translation pairs (s, d) and learns a model of how to transform the source s into the destination d.
- QA is redefined in a similar way: collect question-answer pairs (a, q) and learn a model that computes the probability that a question is generated from the given answer, p(q | parsetree(a)). The correct answer maximizes this probability.
- Only the subsets of the answer parse trees that contain the answer are used for training (not the whole sentence).
- An off-the-shelf machine translation package (Giza) is used to train the model.
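A sketch of the noisy-channel scoring with an IBM Model 1 style translation model: each question word is generated by some answer-side word (or NULL), and the candidate with the highest p(q | a) wins. Assumptions: flat token lists replace the parse-tree fragments TextMap uses, the answer phrase is replaced by its type (`PERSON`), and the translation table `T` is hypothetical rather than trained with Giza.

```python
import math

# Hypothetical translation table t(question word | answer-side word).
T = {
    ("who", "PERSON"): 0.5,
    ("invented", "invented"): 0.6,
    ("the", "the"): 0.7,
    ("cotton", "cotton"): 0.8,
    ("gin", "gin"): 0.8,
}
FLOOR = 1e-4  # smoothing value for unseen word pairs


def log_p_question(question, answer):
    """IBM Model 1 style log p(q | a), dropping the constant length term epsilon.

    log p = sum_j log( (1 / (l + 1)) * sum_i t(q_j | a_i) ), with a_0 = NULL.
    """
    source = ["NULL"] + answer
    total = 0.0
    for q in question:
        total += math.log(sum(T.get((q, a), FLOOR) for a in source) / len(source))
    return total


def best_answer(question, candidates):
    """The candidate that maximizes p(q | a) is taken as the answer."""
    return max(candidates, key=lambda c: log_p_question(question, c))


question = ["who", "invented", "the", "cotton", "gin"]
candidates = [
    ["PERSON", "invented", "the", "cotton", "gin"],
    ["PERSON", "visited", "the", "cotton", "field"],
]
print(best_answer(question, candidates))
```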


TextMap: Exploiting the Data Redundancy

- Additional knowledge resources are used whenever applicable:
  - WordNet glosses, e.g. What is a meerkat?
  - www.acronymfinder.com, e.g. What is ARDA?
  - etc.
- The “known” answers are then simply searched for in the document collection together with the question keywords.
- Google is used for answer redundancy:
  - TREC and the Web (through Google) are searched in parallel.
  - The final answer is selected using a maximum entropy ML model.
- IBM introduced redundancy for QA agents; ISI uses data redundancy.


BBN’s AQUA

- The factual system converts both the question and the answer to a semantic form (close to SMU’s).
  - Machine learning is used to measure the similarity of the two representations.
- AQUA was ranked best at the TREC definition pilot organized before TREC-12.
- The definition system is conceptually close to SMU’s:
  - it had pronominal and nominal coreference resolution;
  - it used a (probably) better parser (Charniak);
  - candidate answers are post-ranked using a tf * idf model.


Overview

- What is Question Answering?
- A “traditional” system
- Other relevant approaches
- Distributed Question Answering


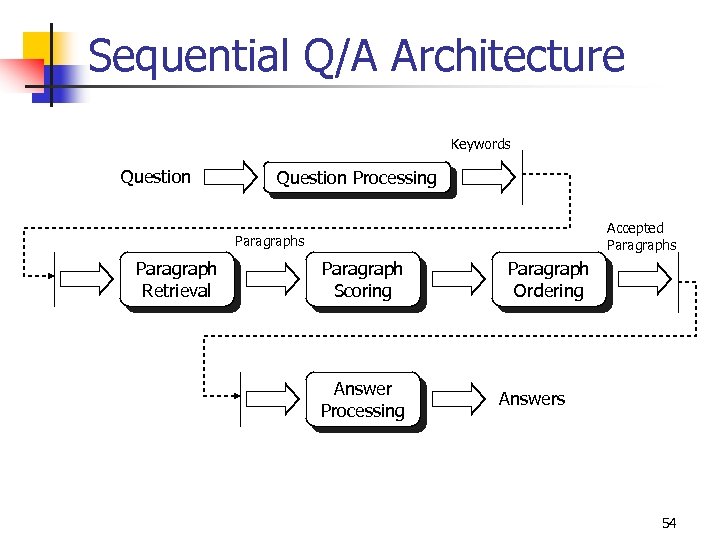

Sequential Q/A Architecture

Question Processing → (Keywords) → Paragraph Retrieval → Paragraph Scoring → Paragraph Ordering → (Accepted Paragraphs) → Answer Processing → Answers


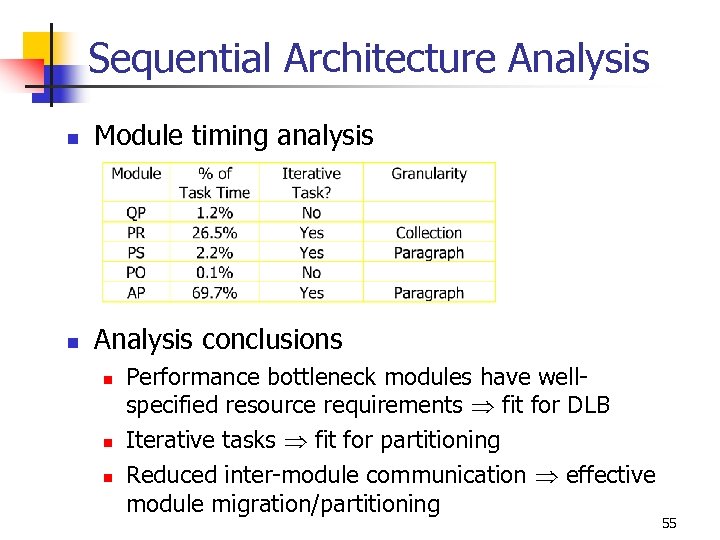

Sequential Architecture Analysis

- Module timing analysis
- Analysis conclusions:
  - Performance-bottleneck modules have well-specified resource requirements, so they fit dynamic load balancing (DLB).
  - Iterative tasks fit partitioning.
  - Reduced inter-module communication makes module migration/partitioning effective.


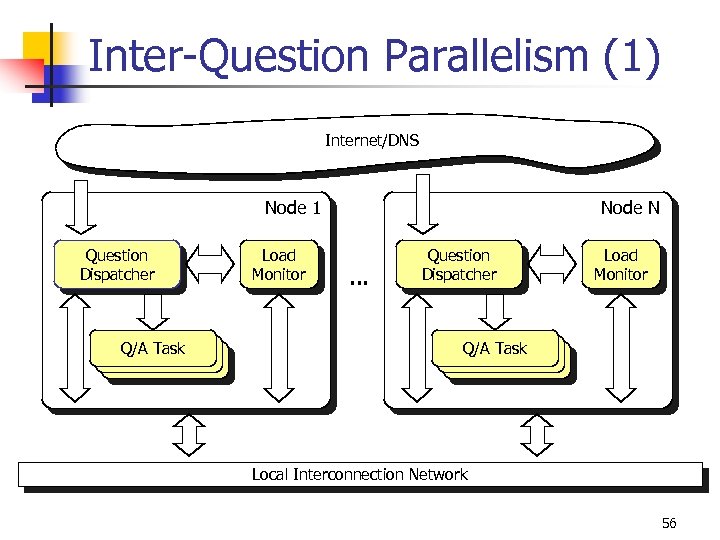

Inter-Question Parallelism (1)

Figure: questions arrive through the Internet/DNS at Nodes 1…N; each node runs a Question Dispatcher, a Load Monitor, and a Q/A Task, and the nodes are connected by a local interconnection network.


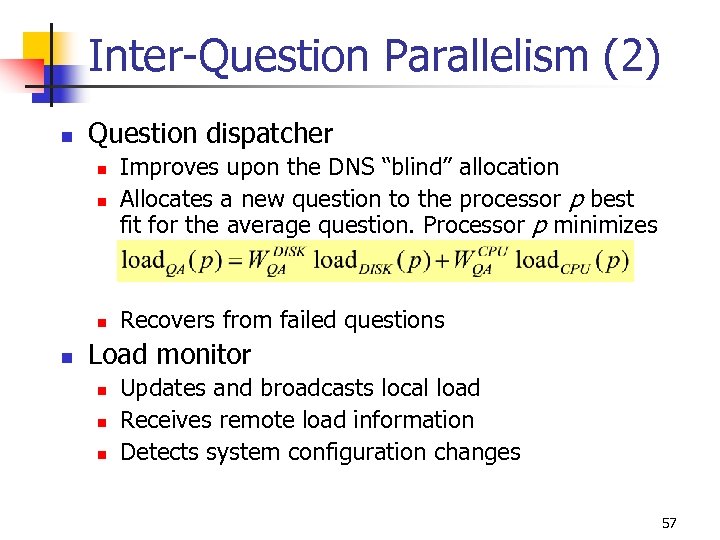

Inter-Question Parallelism (2)

- Question dispatcher
  - Improves upon the DNS “blind” allocation
  - Allocates a new question to the processor p that is the best fit for the average question, i.e. the processor p that minimizes the dispatcher’s cost function
  - Recovers from failed questions
- Load monitor
  - Updates and broadcasts the local load
  - Receives remote load information
  - Detects system configuration changes
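A minimal sketch of the dispatcher: rank processors by a cost computed from the load values the load monitors broadcast, send the question to the cheapest one, and on failure re-dispatch it to the next one. The cost defaults to the raw load value; the slide's actual cost function is not reproduced here, and `run_on` is a hypothetical callable that raises `RuntimeError` when a Q/A task fails.

```python
def dispatch(question, loads, run_on, cost=None):
    """Send `question` to the lowest-cost processor, falling back on failure.

    loads:  processor id -> load value reported by the load monitors.
    run_on: callable(processor, question) -> answer; raises RuntimeError on failure.
    cost:   hypothetical cost function over processor ids (default: raw load).
    """
    cost = cost or loads.get
    for processor in sorted(loads, key=cost):
        try:
            return processor, run_on(processor, question)
        except RuntimeError:
            continue  # recover: re-dispatch to the next-best processor
    raise RuntimeError("no processor could answer the question")


def fake_run(processor, question):
    if processor == "n2":
        raise RuntimeError("node n2 crashed")
    return f"answer from {processor}"


print(dispatch("When was the San Francisco fire?", {"n1": 0.9, "n2": 0.2, "n3": 0.5}, fake_run))
```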


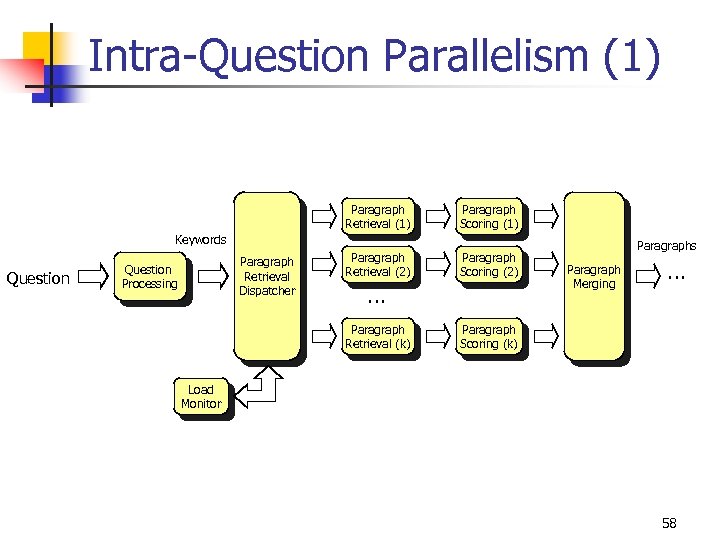

Intra-Question Parallelism (1)

Figure: Question Processing passes the Keywords to a Paragraph Retrieval Dispatcher (with a Load Monitor); the dispatcher starts Paragraph Retrieval (1…k) instances, each followed by Paragraph Scoring (1…k), and Paragraph Merging combines their output into the Paragraphs.
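The partition-and-merge flow above can be sketched with a thread pool: the collection is split into k parts, each worker retrieves and scores paragraphs in its part, and the partial results are merged and sorted. The worker `retrieve_and_score` (keyword-count scoring) is a hypothetical placeholder; in the distributed system each partition would run on a separate processor, and Python threads here only illustrate the data flow, not real CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, k):
    """Split items into k near-equal contiguous chunks (some may be empty)."""
    n = len(items)
    return [items[i * n // k:(i + 1) * n // k] for i in range(k)]


def retrieve_and_score(part, keywords):
    """Hypothetical worker: keep paragraphs containing a keyword, scored by keyword count."""
    results = []
    for paragraph in part:
        words = paragraph.lower().split()
        score = sum(words.count(k) for k in keywords)
        if score:
            results.append((score, paragraph))
    return results


def parallel_paragraphs(collection, keywords, k=4):
    parts = split(collection, k)
    with ThreadPoolExecutor(max_workers=k) as pool:
        partial = list(pool.map(retrieve_and_score, parts, [keywords] * k))
    merged = [hit for part in partial for hit in part]  # paragraph merging
    return sorted(merged, key=lambda hit: hit[0], reverse=True)


collection = ["san francisco fire of 1906", "the fire burned", "no match here", "fire fire"]
print(parallel_paragraphs(collection, ["fire"], k=2))
```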


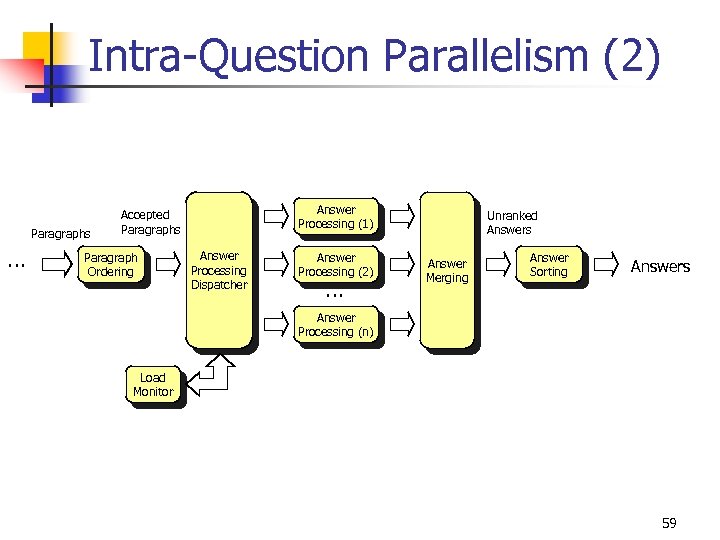

Intra-Question Parallelism (2)

Figure: Paragraph Ordering turns the Paragraphs into Accepted Paragraphs, which an Answer Processing Dispatcher (with a Load Monitor) distributes to Answer Processing (1…n) instances; their Unranked Answers go through Answer Merging and Answer Sorting to produce the Answers.


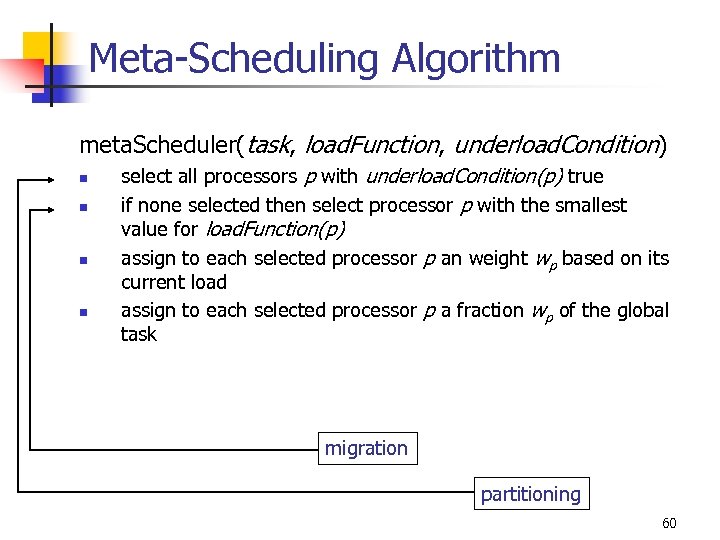

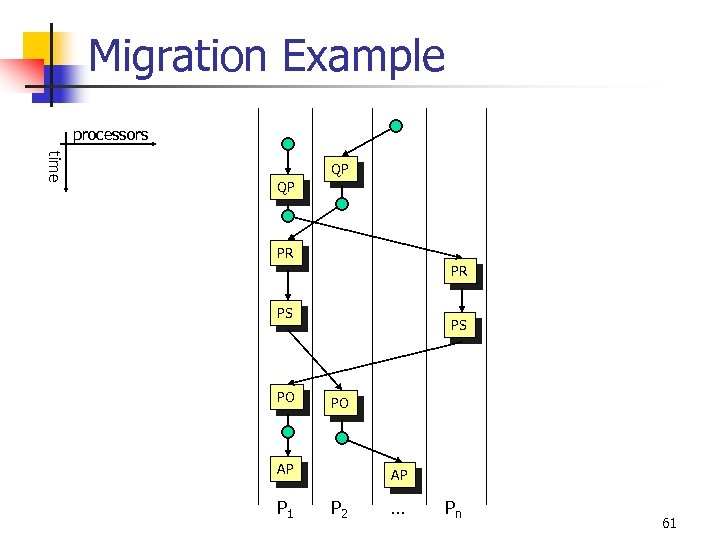

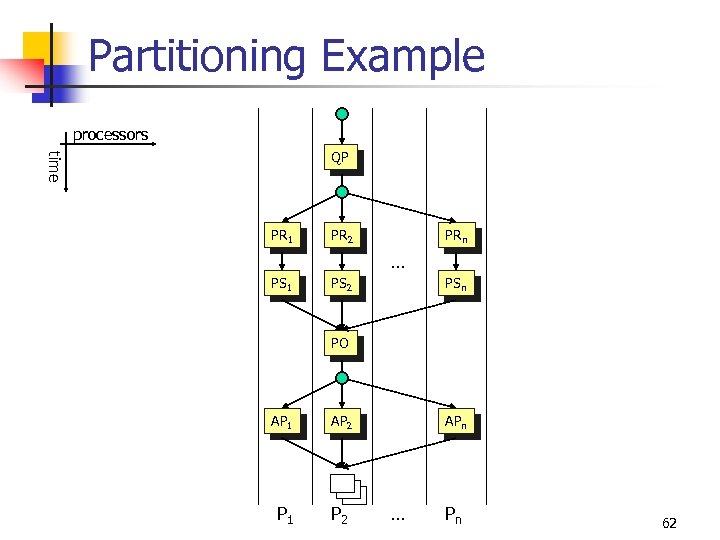

Meta-Scheduling Algorithm

metaScheduler(task, loadFunction, underloadCondition):

1. Select all processors p for which underloadCondition(p) is true.
2. If none is selected, select the processor p with the smallest value of loadFunction(p).
3. Assign each selected processor p a weight w_p based on its current load.
4. Assign each selected processor p a fraction w_p of the global task.

A single selected processor corresponds to migration; several selected processors correspond to partitioning.
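A runnable sketch of the meta-scheduling steps. Assumptions: loads are normalized to [0, 1], `load_function` and `underload_condition` take a processor id (as in the pseudocode), and the weight w_p is the spare capacity 1 - load, normalized so the fractions sum to the whole task. The slide only says the weight is based on the current load, so this weighting is hypothetical.

```python
def meta_scheduler(task_size, loads, load_function, underload_condition):
    """Return {processor: share of the task} following the meta-scheduling steps."""
    # Step 1: all underloaded processors.
    selected = [p for p in loads if underload_condition(p)]
    # Step 2: fall back to the least-loaded processor (migration).
    if not selected:
        selected = [min(loads, key=load_function)]
    # Step 3: hypothetical weight = spare capacity, floored to stay positive.
    weights = {p: max(1.0 - loads[p], 1e-6) for p in selected}
    total = sum(weights.values())
    # Step 4: each processor gets the fraction w_p / sum(w) of the task.
    return {p: task_size * w / total for p, w in weights.items()}


loads = {"P1": 0.9, "P2": 0.2, "P3": 0.6}
print(meta_scheduler(100, loads, loads.get, lambda p: loads[p] < 0.7))  # partitioning
print(meta_scheduler(100, loads, loads.get, lambda p: loads[p] < 0.1))  # migration to P2
```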


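Migration Example

Figure: processors P1…Pn vs. time, showing the modules QP (Question Processing), PR (Paragraph Retrieval), PS (Paragraph Scoring), PO (Paragraph Ordering), and AP (Answer Processing) being migrated between processors.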


Partitioning Example

Figure: processors P1…Pn vs. time; QP and PO each run on one processor, while PR, PS, and AP are partitioned into PR1…PRn, PS1…PSn, and AP1…APn, which run in parallel.


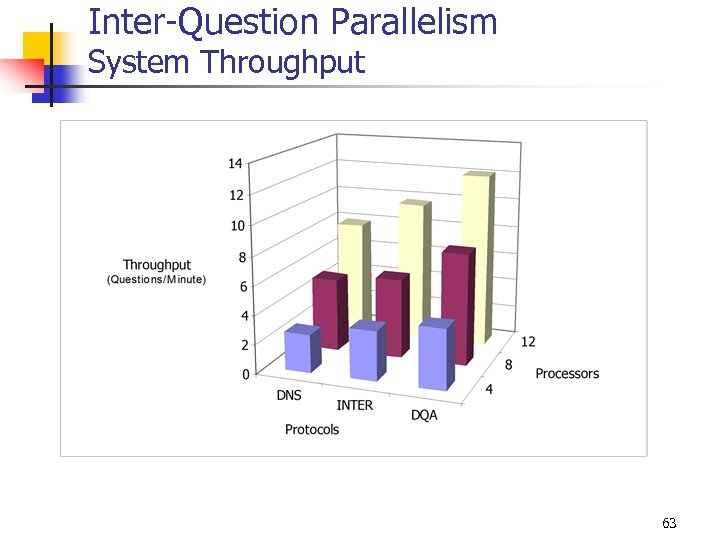

Inter-Question Parallelism: System Throughput


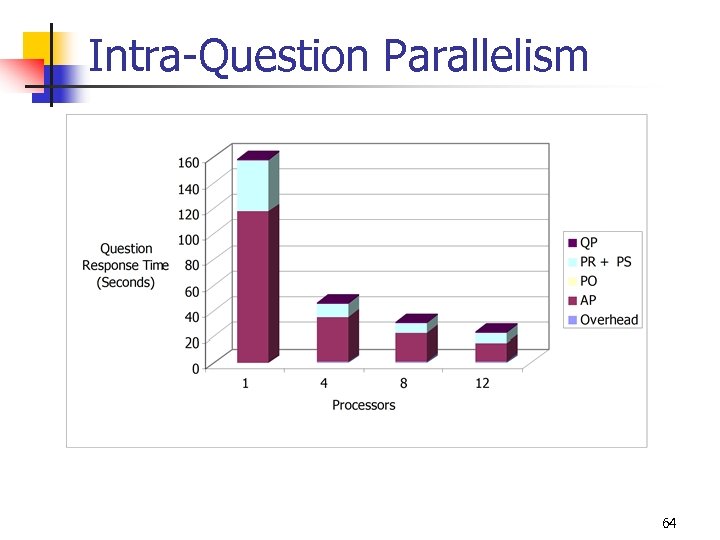

Intra-Question Parallelism


End. Thank you!
