d09972517f459ea09832cfd93447c07a.ppt

- Number of slides: 97

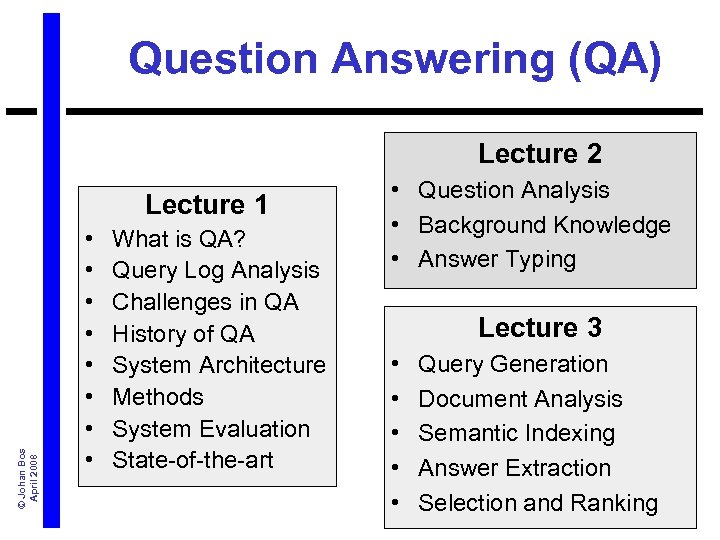

Question Answering (QA) Lecture 2
© Johan Bos April 2008
Lecture 1
• What is QA?
• Query Log Analysis
• Challenges in QA
• History of QA
• System Architecture
• Methods
• System Evaluation
• State-of-the-art
Lecture 2
• Question Analysis
• Background Knowledge
• Answer Typing
Lecture 3
• Query Generation
• Document Analysis
• Semantic Indexing
• Answer Extraction
• Selection and Ranking

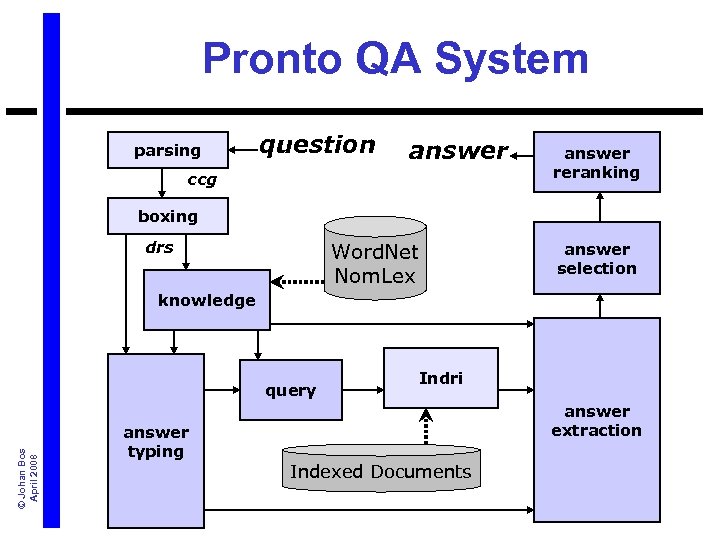

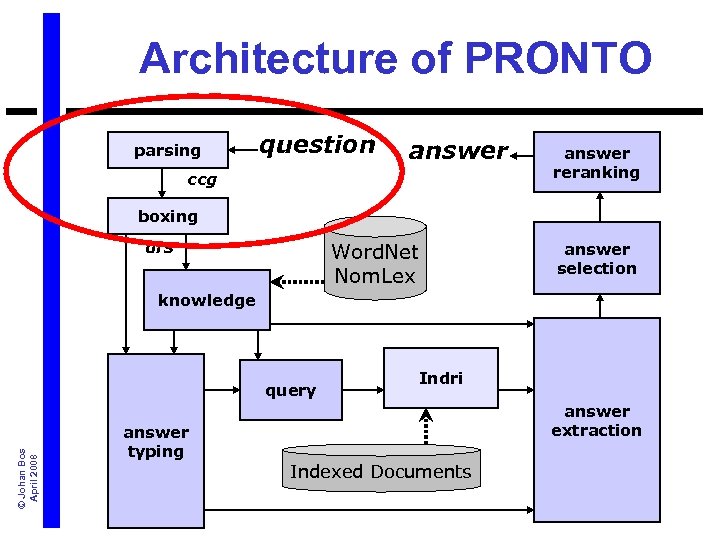

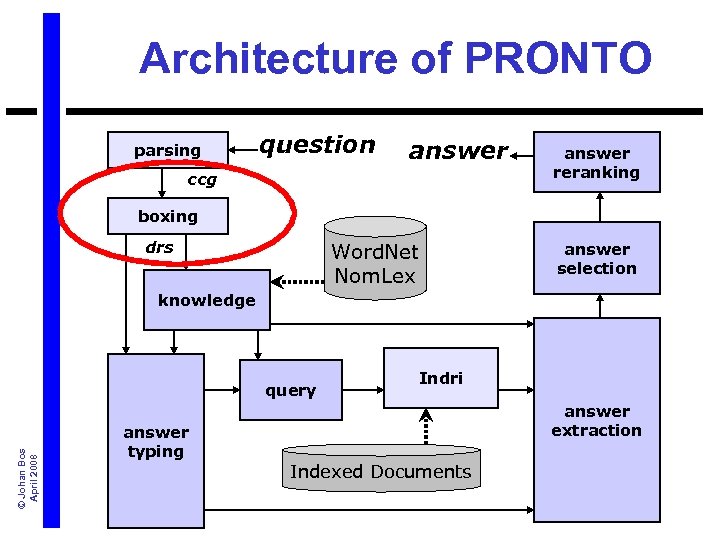

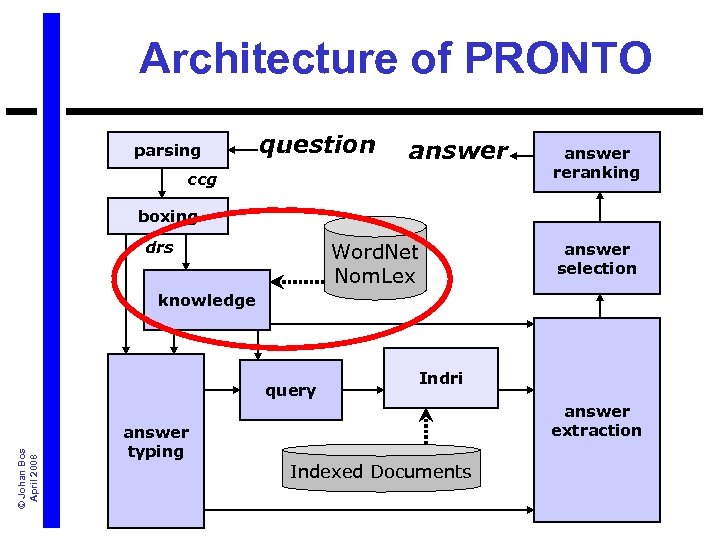

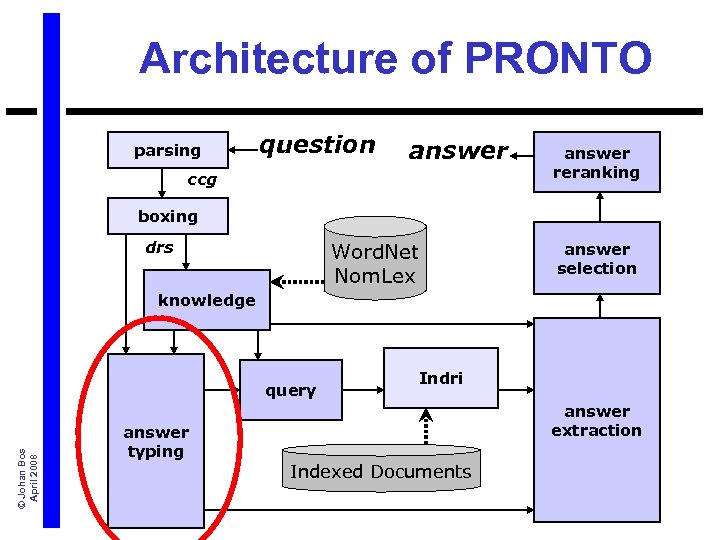

Pronto QA System
[Architecture diagram. Components: question, parsing (CCG), boxing (DRS), knowledge (WordNet, NomLex), answer typing, query, Indri, indexed documents, answer extraction, answer selection, answer reranking, answer]

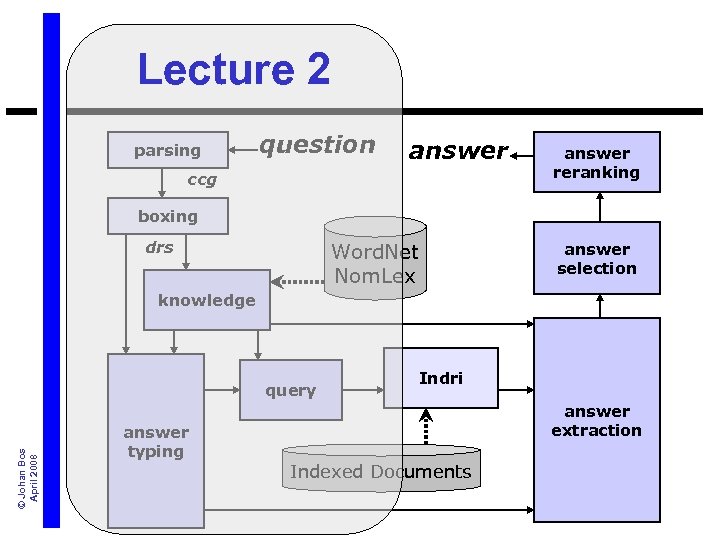

Lecture 2
[Pronto architecture diagram, as on the Pronto QA System slide]

Question Answering (QA) Lecture 2
→ Question Analysis
• Background Knowledge
• Answer Typing

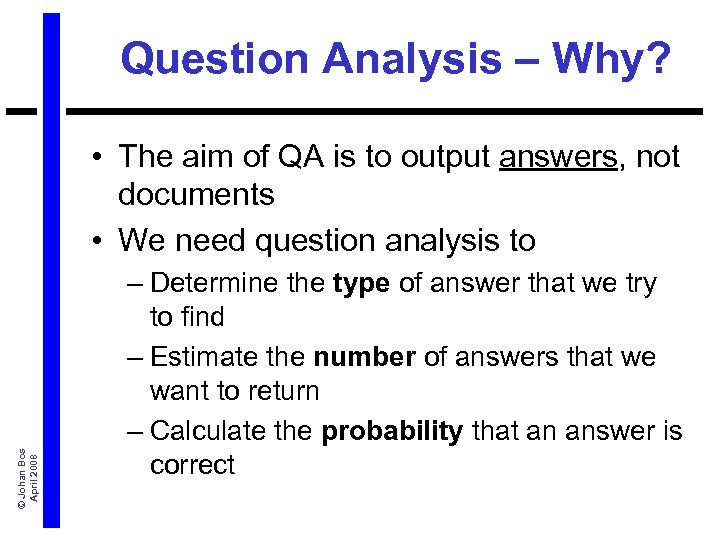

Question Analysis – Why?
• The aim of QA is to output answers, not documents
• We need question analysis to
  – determine the type of answer that we try to find
  – estimate the number of answers that we want to return
  – calculate the probability that an answer is correct

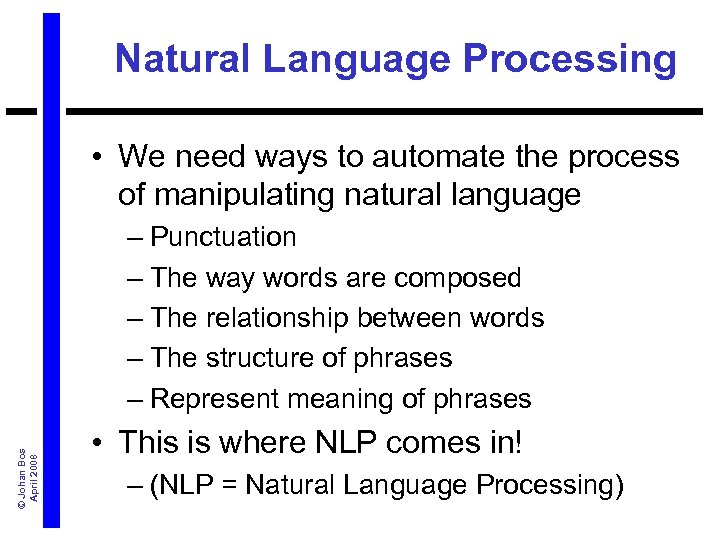

Natural Language Processing
• We need ways to automate the process of manipulating natural language:
  – Punctuation
  – The way words are composed
  – The relationship between words
  – The structure of phrases
  – Representing the meaning of phrases
• This is where NLP comes in!
  – (NLP = Natural Language Processing)

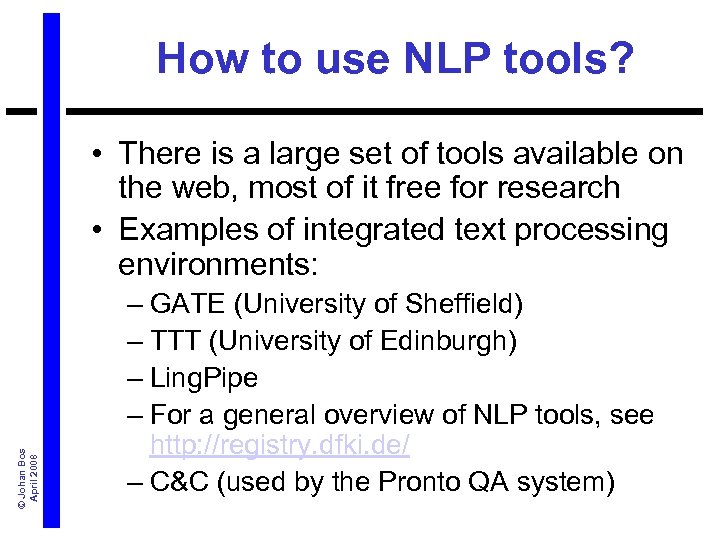

How to use NLP tools?
• There is a large set of tools available on the web, most of them free for research
• Examples of integrated text processing environments:
  – GATE (University of Sheffield)
  – TTT (University of Edinburgh)
  – LingPipe
  – C&C (used by the Pronto QA system)
• For a general overview of NLP tools, see http://registry.dfki.de/

Architecture of PRONTO
[Pronto architecture diagram, as on the Pronto QA System slide]

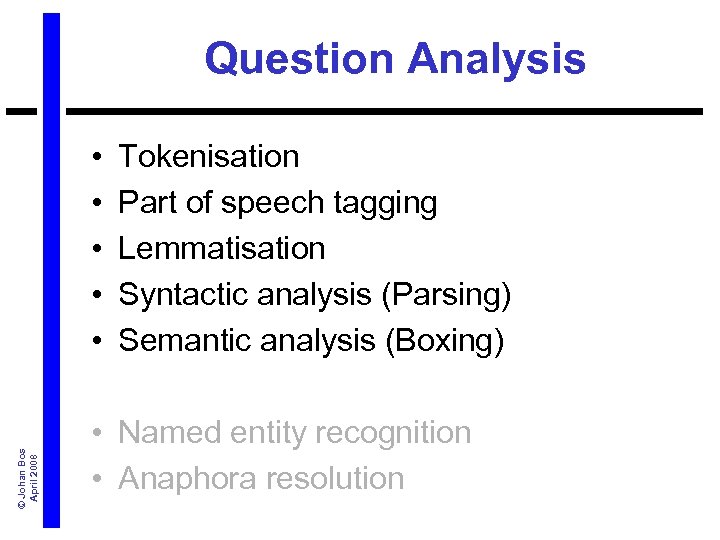

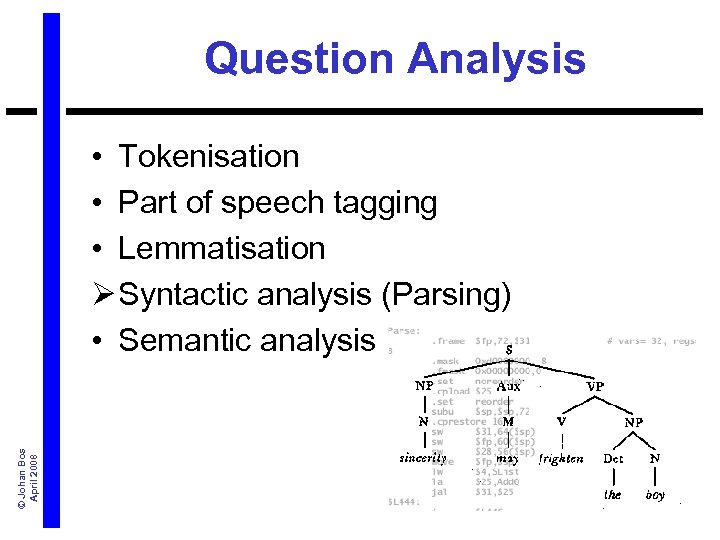

Question Analysis
• Tokenisation
• Part of speech tagging
• Lemmatisation
• Syntactic analysis (Parsing)
• Semantic analysis (Boxing)
• Named entity recognition
• Anaphora resolution

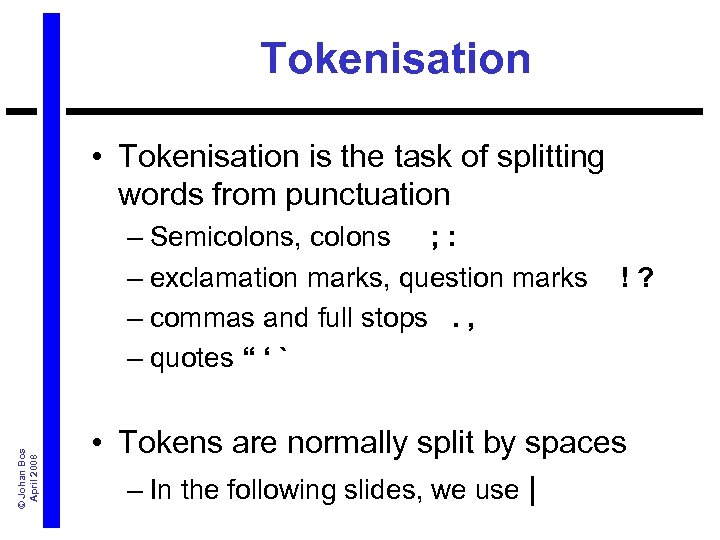

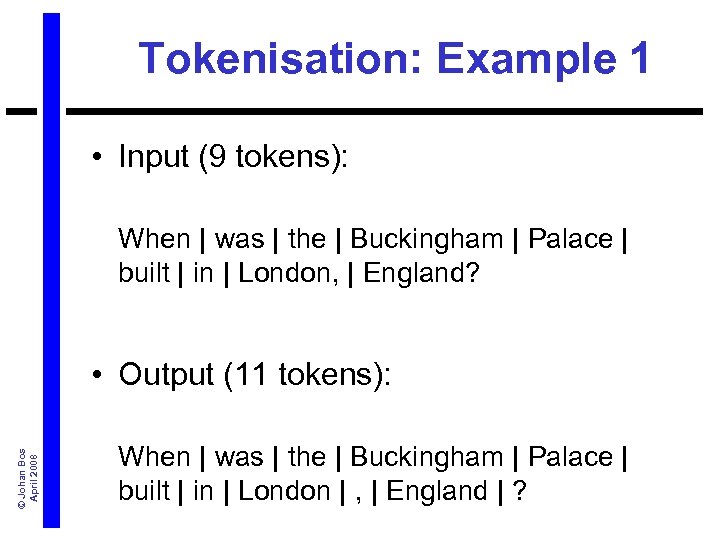

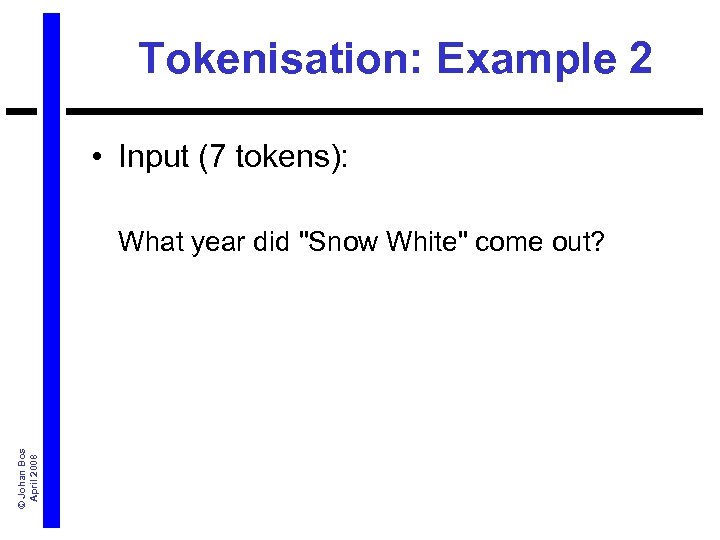

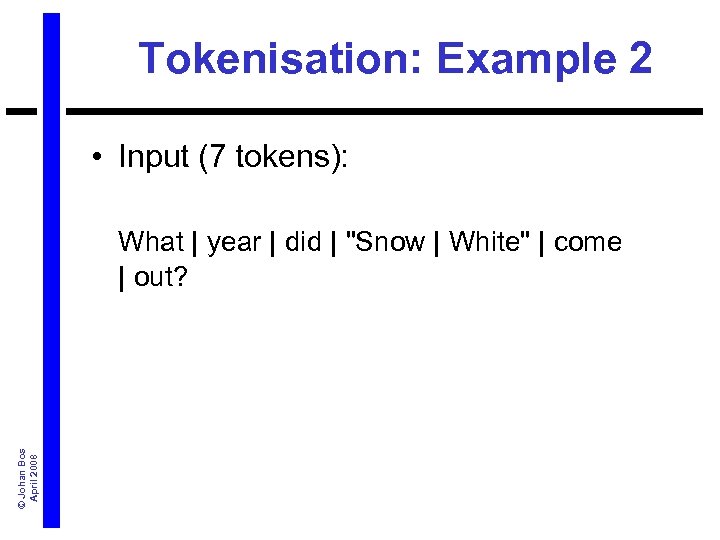

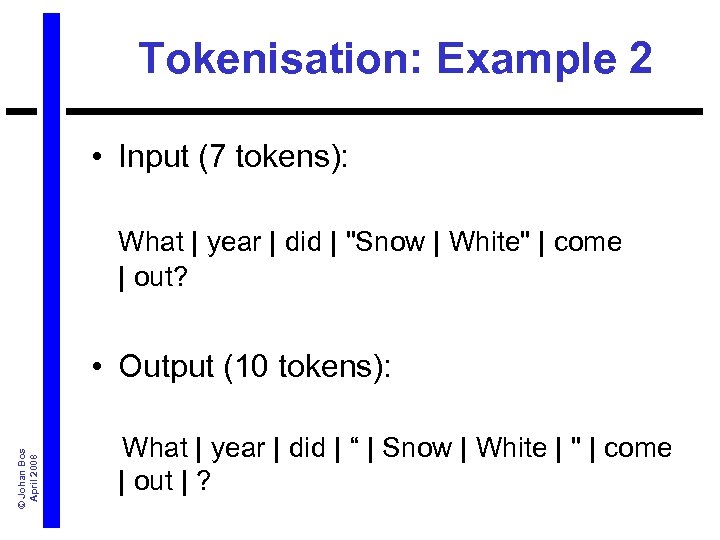

Tokenisation
• Tokenisation is the task of splitting words from punctuation:
  – semicolons and colons ; :
  – exclamation marks and question marks ! ?
  – commas and full stops , .
  – quotes “ ‘ `
• Tokens are normally separated by spaces
  – In the following slides, we use | to mark token boundaries


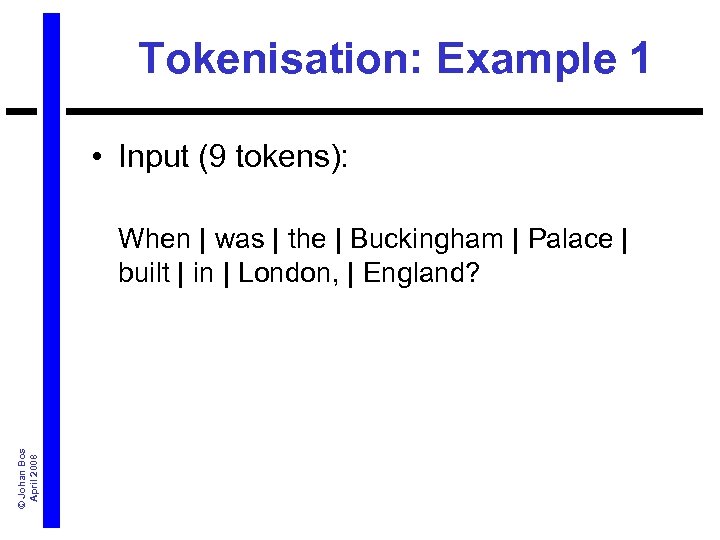


Tokenisation: Example 1
• Input (9 tokens): When | was | the | Buckingham | Palace | built | in | London, | England?
• Output (11 tokens): When | was | the | Buckingham | Palace | built | in | London | , | England | ?



Tokenisation: Example 2
• Input (7 tokens): What | year | did | "Snow | White" | come | out?
• Output (10 tokens): What | year | did | “ | Snow | White | " | come | out | ?
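The two-step procedure in the examples (split on spaces, then split punctuation off each chunk) can be sketched as a small Python function. This is a minimal sketch, assuming the punctuation set listed on the Tokenisation slide; it does not turn straight quotes into “ ” as the slide output does, and it is not the tokeniser used by C&C or Pronto.

```python
# Punctuation characters split off from words (assumed set, following the slide).
PUNCT = set(';:!?,."“”‘’`')

def tokenise(text: str) -> list[str]:
    """Split on whitespace, then peel punctuation off both ends of each chunk."""
    tokens = []
    for chunk in text.split():
        leading, trailing = [], []
        # Peel leading punctuation, e.g. the opening quote in '"Snow'.
        while chunk and chunk[0] in PUNCT:
            leading.append(chunk[0])
            chunk = chunk[1:]
        # Peel trailing punctuation, e.g. the comma in 'London,'.
        while chunk and chunk[-1] in PUNCT:
            trailing.insert(0, chunk[-1])
            chunk = chunk[:-1]
        tokens.extend(leading)
        if chunk:
            tokens.append(chunk)
        tokens.extend(trailing)
    return tokens

print(tokenise("When was the Buckingham Palace built in London, England?"))
# ['When', 'was', 'the', 'Buckingham', 'Palace', 'built', 'in', 'London', ',', 'England', '?']
```

The same rule splits the final full stop off "U.S." (giving "U.S" and "."), which is exactly the abbreviation problem discussed below.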

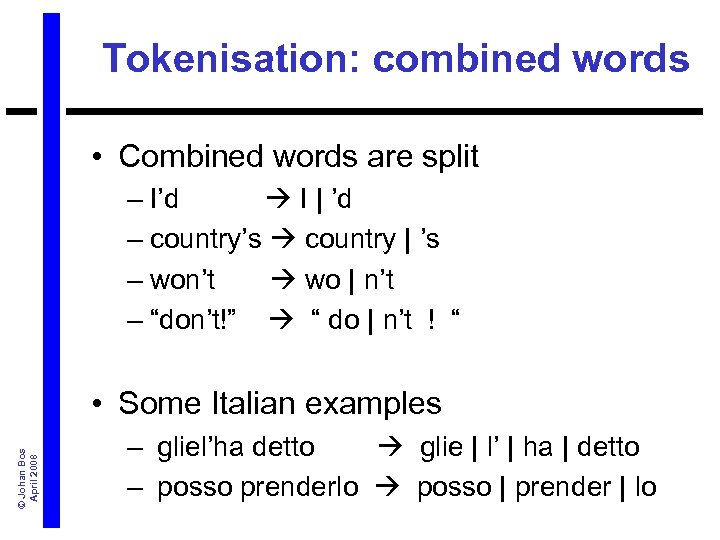

Tokenisation: combined words
• Combined words are split:
  – I’d → I | ’d
  – country’s → country | ’s
  – won’t → wo | n’t
  – “don’t!” → “ | do | n’t | ! | ”
• Some Italian examples:
  – gliel’ha detto → glie | l’ | ha | detto
  – posso prenderlo → posso | prender | lo
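Splitting English clitics as in the examples can be sketched with a table of suffixes. The clitic list is an assumption: it includes ’ll, ’re, ’ve and ’m, which the slide does not mention. The Italian cases would need a separate, language-specific table.

```python
# Clitic suffixes to split off (assumed list; the slide shows ’d, ’s and n’t).
CLITICS = ["n’t", "’ll", "’re", "’ve", "’m", "’d", "’s"]

def split_clitics(token: str) -> list[str]:
    """Split one English token into host word and clitic, if it ends in a clitic."""
    token = token.replace("'", "’")  # treat straight and curly apostrophes alike
    for clitic in CLITICS:
        if token.endswith(clitic) and len(token) > len(clitic):
            return [token[: -len(clitic)], clitic]
    return [token]

for word in ["I’d", "country’s", "won’t", "don't"]:
    print(word, "->", " | ".join(split_clitics(word)))
```

Note that "won’t" comes out as "wo | n’t", matching the slide: the split is purely orthographic and makes no attempt to restore "will".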

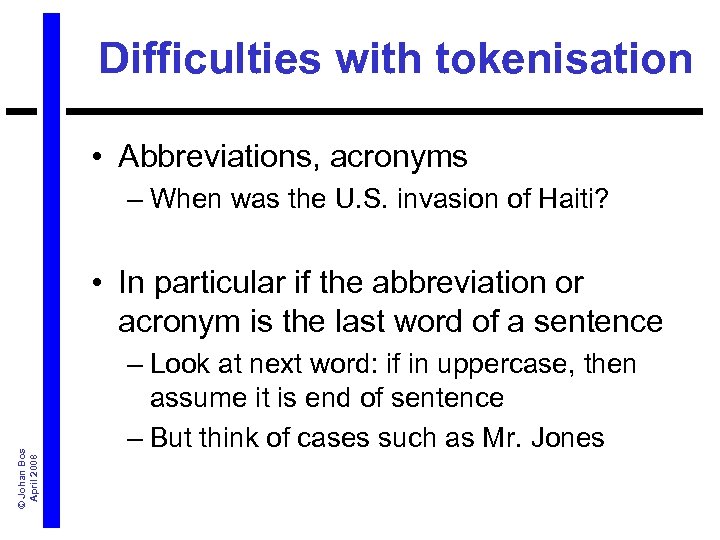

Difficulties with tokenisation
• Abbreviations and acronyms
  – When was the U.S. invasion of Haiti?
• In particular if the abbreviation or acronym is the last word of a sentence
  – Look at the next word: if it starts with an uppercase letter, assume the sentence has ended
  – But think of cases such as Mr. Jones
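The capital-letter heuristic for full stops could be sketched as follows. It adds a hypothetical list of titles to handle cases like Mr. Jones. The title list is an assumption and far from complete.

```python
from typing import Optional

# Hypothetical list of titles that are normally followed by a capitalised name.
TITLES = {"Mr.", "Mrs.", "Ms.", "Dr.", "Prof."}

def is_sentence_end(token: str, next_token: Optional[str]) -> bool:
    """Guess whether a token ending in '.' closes the sentence."""
    if not token.endswith("."):
        return False
    if token in TITLES:              # "Mr. Jones": treat titles as never sentence-final
        return False
    if next_token is None:           # nothing follows: end of the text
        return True
    return next_token[:1].isupper()  # capitalised next word: assume a new sentence

print(is_sentence_end("U.S.", "invasion"))  # False
print(is_sentence_end("Mr.", "Jones"))      # False
```

The heuristic still fails on cases such as `is_sentence_end("U.S.", "Army")`, which returns `True`. It cannot distinguish an acronym followed by a proper noun from a real sentence boundary.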

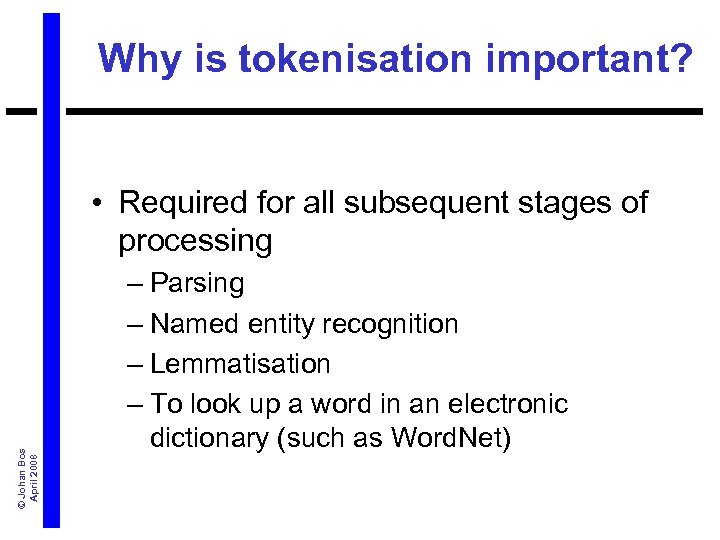

Why is tokenisation important?
• Required for all subsequent stages of processing:
  – Parsing
  – Named entity recognition
  – Lemmatisation
  – Looking up a word in an electronic dictionary (such as WordNet)

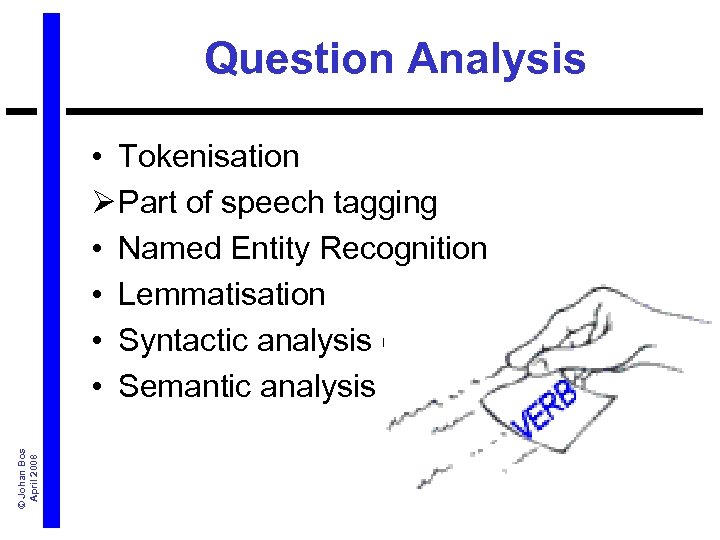

Question Analysis
• Tokenisation
→ Part of speech tagging
• Named Entity Recognition
• Lemmatisation
• Syntactic analysis (Parsing)
• Semantic analysis (Boxing)

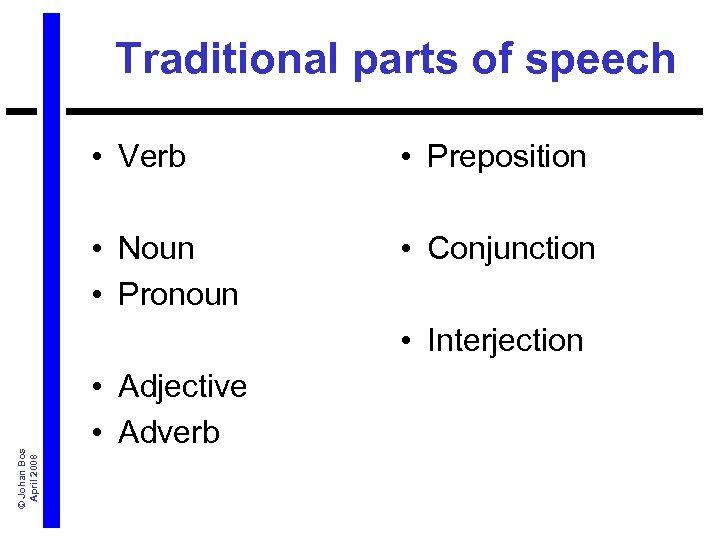

Traditional parts of speech
• Verb
• Preposition
• Noun
• Pronoun
• Conjunction
• Interjection
• Adjective
• Adverb

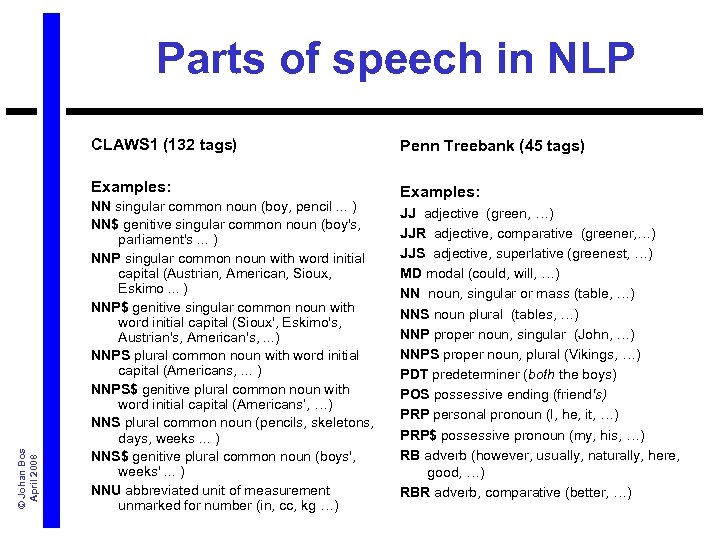

Parts of speech in NLP
Penn Treebank (45 tags), examples:
• JJ: adjective (green, …)
• JJR: adjective, comparative (greener, …)
• JJS: adjective, superlative (greenest, …)
• MD: modal (could, will, …)
• NN: noun, singular or mass (table, …)
• NNS: noun, plural (tables, …)
• NNP: proper noun, singular (John, …)
• NNPS: proper noun, plural (Vikings, …)
• PDT: predeterminer (both the boys)
• POS: possessive ending (friend's)
• PRP: personal pronoun (I, he, it, …)
• PRP$: possessive pronoun (my, his, …)
• RB: adverb (however, usually, naturally, here, good, …)
• RBR: adverb, comparative (better, …)
CLAWS1 (132 tags), examples:
• NN: singular common noun (boy, pencil, …)
• NN$: genitive singular common noun (boy's, parliament's, …)
• NNP: singular common noun with word-initial capital (Austrian, American, Sioux, Eskimo, …)
• NNP$: genitive singular common noun with word-initial capital (Sioux', Eskimo's, Austrian's, American's, …)
• NNPS: plural common noun with word-initial capital (Americans, …)
• NNPS$: genitive plural common noun with word-initial capital (Americans', …)
• NNS: plural common noun (pencils, skeletons, days, weeks, …)
• NNS$: genitive plural common noun (boys', weeks', …)
• NNU: abbreviated unit of measurement unmarked for number (in, cc, kg, …)

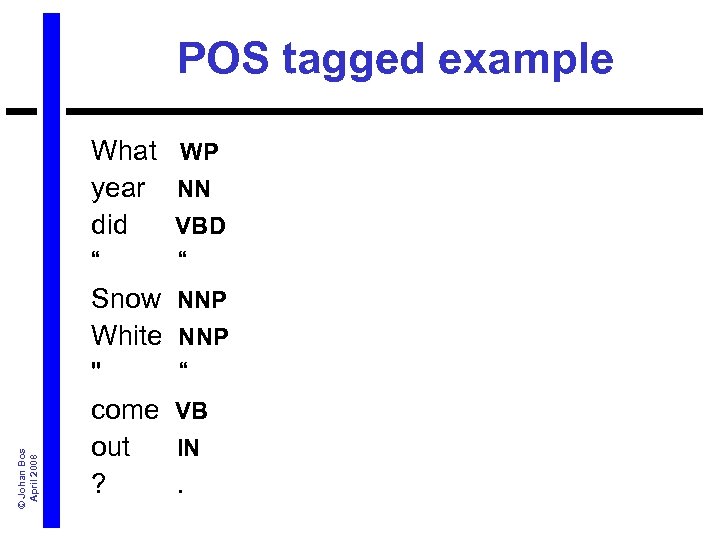


POS tagged example
What/WP year/NN did/VBD “/“ Snow/NNP White/NNP "/“ come/VB out/IN ?/.

Why is POS-tagging important?
• To disambiguate words
• For instance, to distinguish “book” used as a noun from “book” used as a verb:
  – Where can I find a book on cooking?
  – Where can I book a room?
• Prerequisite for further processing stages, such as parsing
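A hand-written contextual rule for the “book” example shows the kind of decision a tagger must make. This is a hypothetical sketch and not a real tagger. The word lists are assumptions, and statistical taggers learn such preferences from annotated corpora instead of hand-written lists.

```python
# Hypothetical word lists for the hand-written rule.
DETERMINERS = {"a", "an", "the", "this", "that"}
MODALS = {"can", "could", "will", "would", "shall", "should", "may", "might", "must"}
PRONOUNS = {"i", "you", "we", "they", "he", "she"}

def tag_book(tokens: list[str], i: int) -> str:
    """Return a Penn Treebank tag (NN or VB) for the word 'book' at position i."""
    prev = tokens[i - 1].lower() if i >= 1 else ""
    prev2 = tokens[i - 2].lower() if i >= 2 else ""
    if prev in DETERMINERS:
        return "NN"  # "a book": noun after a determiner
    if prev in MODALS or prev == "to" or (prev in PRONOUNS and prev2 in MODALS):
        return "VB"  # "can book", "to book", "can I book": base-form verb
    return "NN"      # default to the noun reading

q1 = "Where can I find a book on cooking ?".split()
q2 = "Where can I book a room ?".split()
print(tag_book(q1, q1.index("book")), tag_book(q2, q2.index("book")))  # NN VB
```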

Question Analysis
• Tokenisation
• Part of speech tagging
→ Lemmatisation
• Syntactic analysis (Parsing)
• Semantic analysis (Boxing)

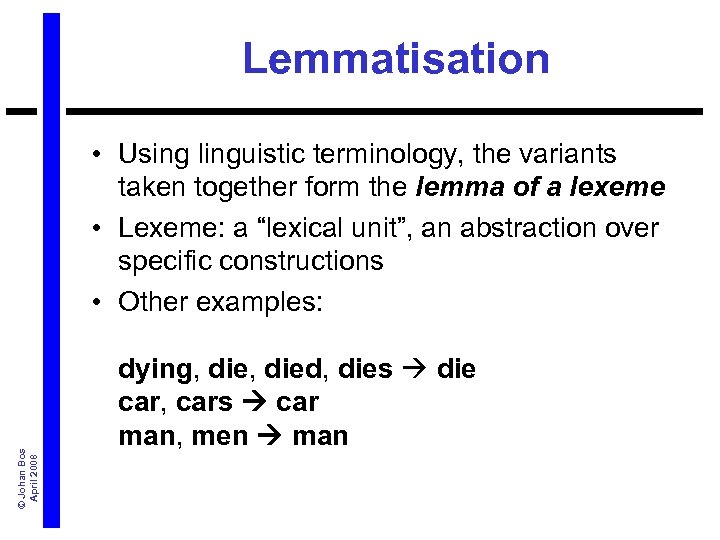

Lemmatisation
• Lemmatising means grouping morphological variants of words under a single headword
• For example, you could group the words am, was, are, is, were, and been together under the word be


Lemmatisation
• In linguistic terminology, the variants taken together form a lexeme, and the headword that stands for them is its lemma
• Lexeme: a “lexical unit”, an abstraction over specific constructions
• Other examples:
  – dying, died, dies → die
  – car, cars → car
  – man, men → man
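A dictionary-based lemmatiser for the slide's examples can be sketched with an exception table plus one suffix rule. Both the table and the rule are simplified assumptions made for illustration.

```python
# Irregular forms from the slides, looked up directly (toy exception table).
IRREGULAR = {
    "am": "be", "is": "be", "are": "be", "was": "be", "were": "be", "been": "be",
    "died": "die", "dying": "die", "men": "man",
}

def lemmatise(word: str) -> str:
    """Map a word form to its headword (lower-cased)."""
    w = word.lower()
    if w in IRREGULAR:
        return IRREGULAR[w]
    if w.endswith("s") and not w.endswith("ss"):
        return w[:-1]  # crude rule for regular -s forms: cars -> car, dies -> die
    return w

print([lemmatise(w) for w in ["were", "been", "dies", "cars", "men"]])
# ['be', 'be', 'die', 'car', 'man']
```

The single -s rule is too crude for real use. It maps "bus" to "bu", for example. Practical lemmatisers consult the POS tag and a full morphological lexicon.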

Question Analysis
• Tokenisation
• Part of speech tagging
• Lemmatisation
→ Syntactic analysis (Parsing)
• Semantic analysis (Boxing)

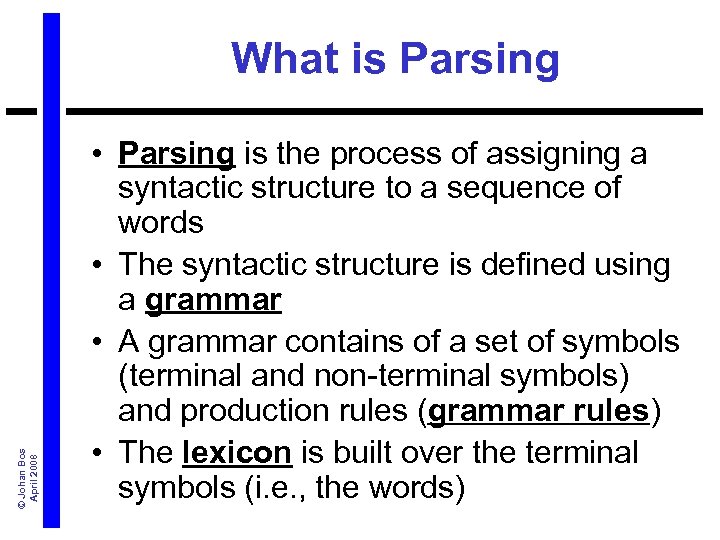

What is Parsing?
• Parsing is the process of assigning a syntactic structure to a sequence of words
• The syntactic structure is defined using a grammar
• A grammar consists of a set of symbols (terminal and non-terminal symbols) and production rules (grammar rules)
• The lexicon is built over the terminal symbols (i.e., the words)

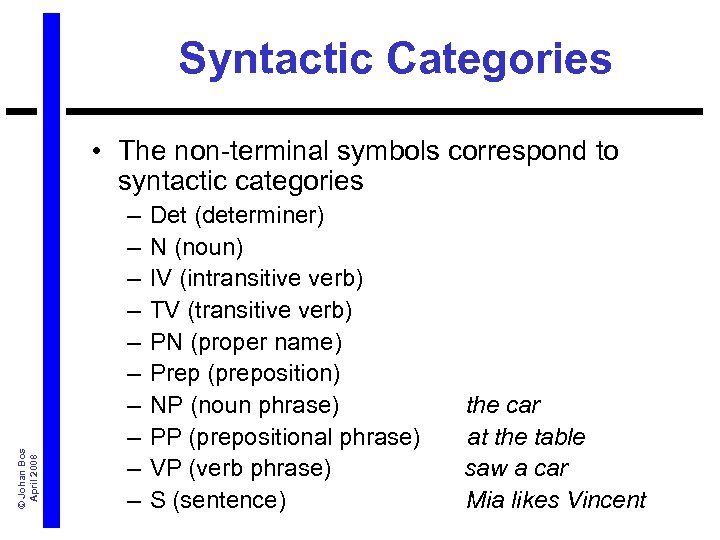

Syntactic Categories
• The non-terminal symbols correspond to syntactic categories:
  – Det (determiner)
  – N (noun)
  – IV (intransitive verb)
  – TV (transitive verb)
  – PN (proper name)
  – Prep (preposition)
  – NP (noun phrase): the car
  – PP (prepositional phrase): at the table
  – VP (verb phrase): saw a car
  – S (sentence): Mia likes Vincent

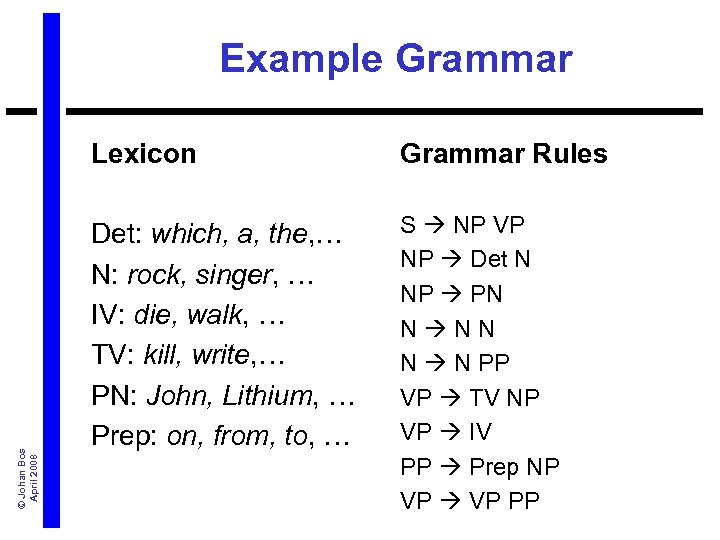

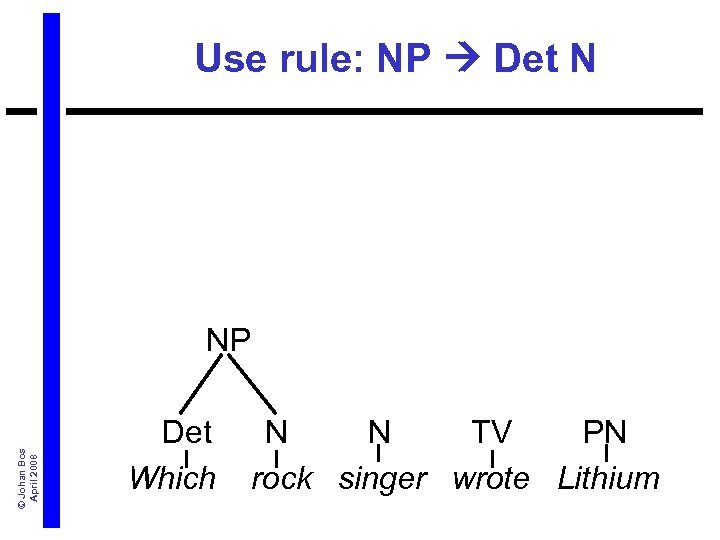

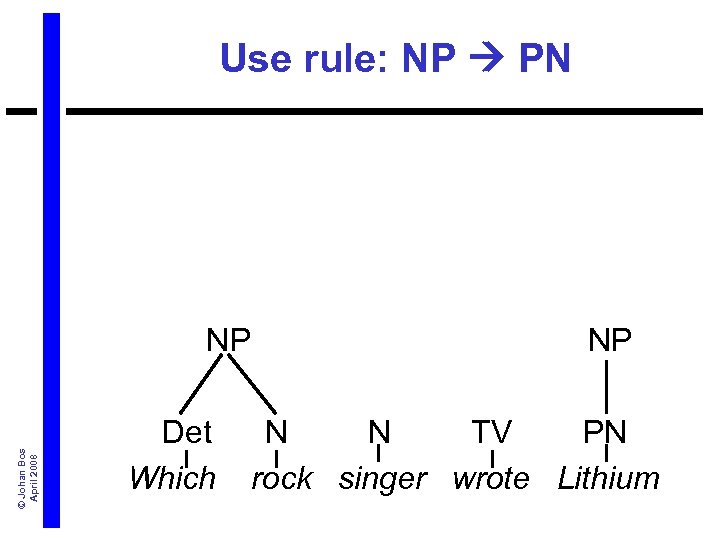

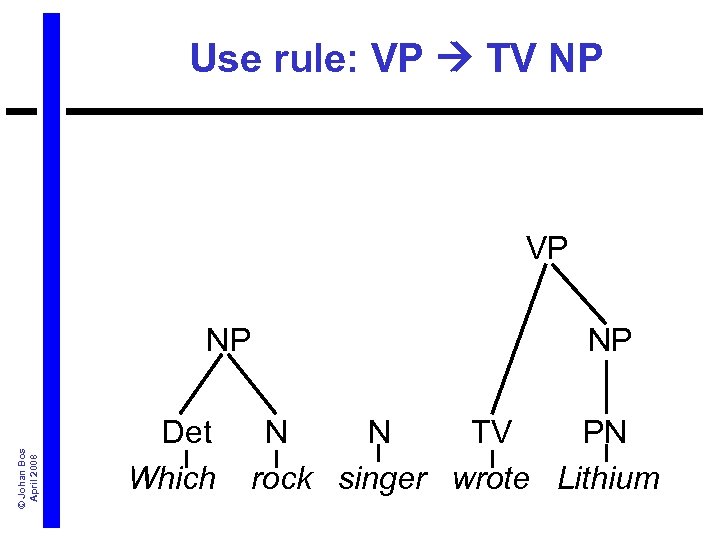

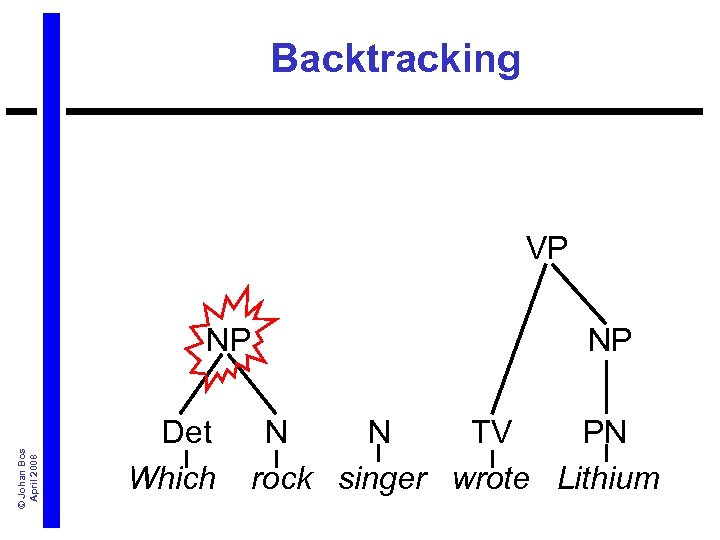

Example Grammar
Lexicon:
• Det: which, a, the, …
• N: rock, singer, …
• IV: die, walk, …
• TV: kill, write, …
• PN: John, Lithium, …
• Prep: on, from, to, …
Grammar rules:
• S → NP VP
• NP → Det N
• NP → PN
• N → N PP
• VP → TV NP
• VP → IV
• PP → Prep NP
• VP → VP PP

The Parser
• A parser automates the process of parsing
• The input of the parser is a string of words (annotated with POS tags)
• The output of a parser is a parse tree, connecting all the words
• The way a parse tree is constructed is also called a derivation

Derivation Example
Which rock singer wrote Lithium

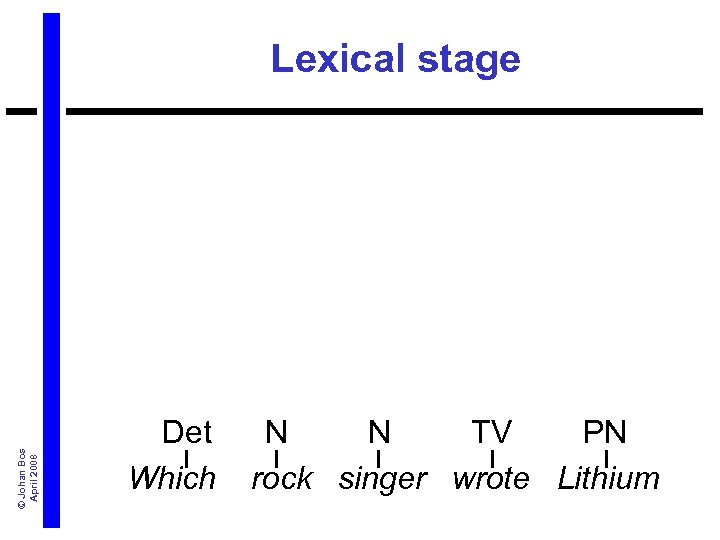

Lexical stage
[Tree: Which (Det), rock (N), singer (N), wrote (TV), Lithium (PN)]

Use rule: NP → Det N
[Tree over “Which rock singer wrote Lithium”; nodes shown: NP, Det, N, TV, PN]

Use rule: NP → PN
[Tree over “Which rock singer wrote Lithium”; nodes shown: NP, Det, N, TV, PN]

Use rule: VP → TV NP
[Tree over “Which rock singer wrote Lithium”; nodes shown: VP, NP, Det, N, TV, PN]

Backtracking
[Tree over “Which rock singer wrote Lithium”; nodes shown: VP, NP, Det, N, TV, PN. The parser undoes an earlier rule application and tries another one.]

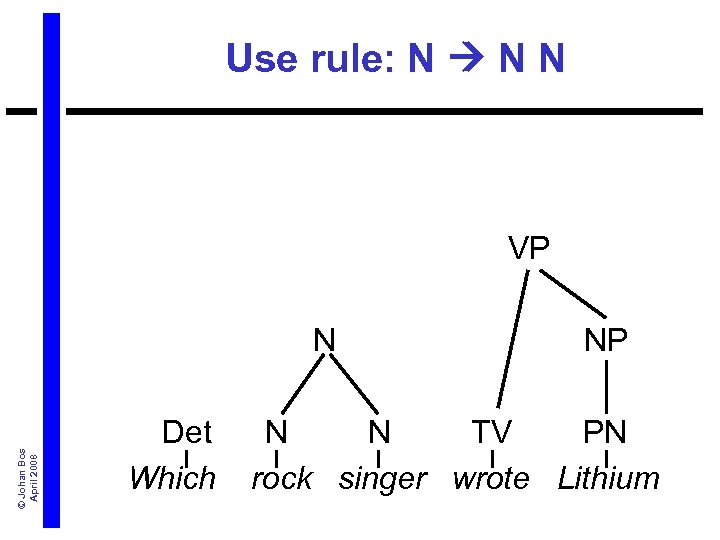

Use rule: N → N N
[Tree over “Which rock singer wrote Lithium”; nodes shown: VP, NP, Det, N, TV, PN]

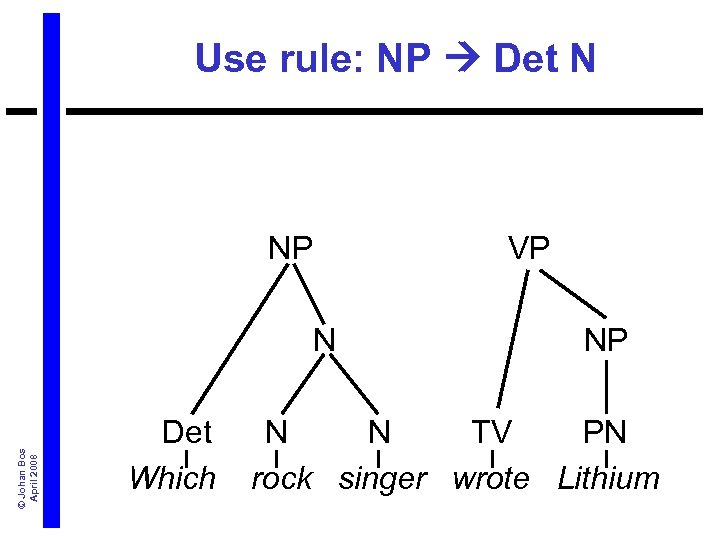

Use rule: NP → Det N
[Tree over “Which rock singer wrote Lithium”; nodes shown: NP, VP, NP, Det, N, TV, PN]

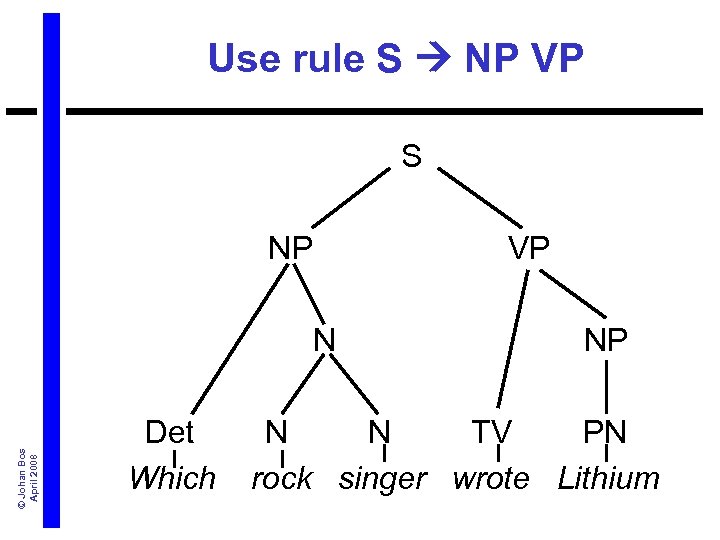

Use rule: S → NP VP
[Complete tree over “Which rock singer wrote Lithium”; nodes shown: S, NP, VP, NP, Det, N, TV, PN]
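The slides build the tree top-down and backtrack when a choice fails. A different technique, CKY-style chart parsing, recognises the same sentence bottom-up without any backtracking. It is swapped in here for illustration and is not the parser used in the lecture. The lexicon and rules follow the Example Grammar slide, plus N → N N as used in the derivation. The entry for "wrote" is an assumption, because the lexicon lists only "write".

```python
from itertools import product

# Lexicon from the Example Grammar slide; "wrote" is an assumed entry.
LEXICON = {
    "which": {"Det"}, "a": {"Det"}, "the": {"Det"},
    "rock": {"N"}, "singer": {"N"},
    "die": {"IV"}, "walk": {"IV"},
    "kill": {"TV"}, "write": {"TV"}, "wrote": {"TV"},
    "john": {"PN"}, "lithium": {"PN"},
    "on": {"Prep"}, "from": {"Prep"}, "to": {"Prep"},
}
# Binary rules A -> B C, indexed by the right-hand side (B, C).
BINARY = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("N", "N"): {"N"},  # N -> N N, as used in the derivation ("rock singer")
    ("N", "PP"): {"N"},
    ("TV", "NP"): {"VP"},
    ("Prep", "NP"): {"PP"},
    ("VP", "PP"): {"VP"},
}
# Unary rules A -> B, indexed by B.
UNARY = {"PN": {"NP"}, "IV": {"VP"}}

def unary_closure(cats: set[str]) -> set[str]:
    """Add every category reachable from cats through unary rules."""
    result, agenda = set(cats), list(cats)
    while agenda:
        for parent in UNARY.get(agenda.pop(), set()):
            if parent not in result:
                result.add(parent)
                agenda.append(parent)
    return result

def cky(words: list[str]) -> dict[tuple[int, int], set[str]]:
    """Fill a chart mapping each span (i, j) to the categories covering words[i:j]."""
    n = len(words)
    chart = {(i, i + 1): unary_closure(LEXICON.get(w.lower(), set()))
             for i, w in enumerate(words)}
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            cats = set()
            for k in range(i + 1, j):  # every split point between i and j
                for b, c in product(chart[(i, k)], chart[(k, j)]):
                    cats |= BINARY.get((b, c), set())
            chart[(i, j)] = unary_closure(cats)
    return chart

chart = cky("Which rock singer wrote Lithium".split())
print("S" in chart[(0, 5)])  # True: the whole sentence is an S
```

The chart also records NP for "Which rock" (span 0 to 2), the analysis that sent the derivation into a dead end. Instead of undoing it, the chart parser keeps that analysis alongside the successful one.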

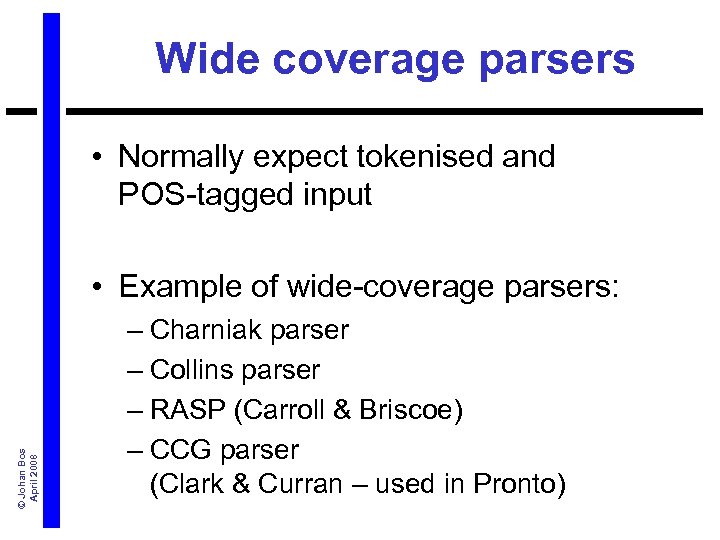

Wide-coverage parsers
• Normally expect tokenised and POS-tagged input
• Examples of wide-coverage parsers:
  – Charniak parser
  – Collins parser
  – RASP (Carroll & Briscoe)
  – CCG parser (Clark & Curran, used in Pronto)


Output of the C&C parser
ba('S[wq]',
  fa('S[wq]',
    fa('S[wq]/(S[q]/PP)',
      fc('(S[wq]/(S[q]/PP))/N',
        lf(1, '(S[wq]/(S[q]/PP))/(S[wq]/(S[q]/NP))'),
        lf(2, '(S[wq]/(S[q]/NP))/N')),
      lf(3, 'N')),
    fc('S[q]/PP',
      fa('S[q]/(S[b]\NP)',
        lf(4, '(S[q]/(S[b]\NP))/NP'),
        lex('N', 'NP', lf(5, 'N'))),
      lf(6, '(S[b]\NP)/PP'))),
  lf(7, 'S[wq]')).
w(1, 'For', for, 'IN', 'O', '(S[wq]/(S[q]/PP))/(S[wq]/(S[q]/NP))').
w(2, which, which, 'WDT', 'O', '(S[wq]/(S[q]/NP))/N').
w(3, newspaper, newspaper, 'NN', 'O', 'N').
w(4, does, do, 'VBZ', 'O', '(S[q]/(S[b]\NP))/NP').
w(5, 'Krugman', krugman, 'NNP', 'I-PER', 'N').
w(6, write, write, 'VB', 'O', '(S[b]\NP)/PP').
w(7, '?', '?', '.', 'O', 'S[wq]').

Question Analysis
• Tokenisation
• Part of speech tagging
• Lemmatisation
• Syntactic analysis (Parsing)
→ Semantic analysis (Boxing)

Architecture of PRONTO
[Pronto architecture diagram, as on the Pronto QA System slide]

Boxing (Semantic Analysis)
• Providing a semantic analysis on the basis of the syntactic analysis
• A semantic analysis of a question offers an abstract representation of the meaning of the question
• Boxer uses a particular semantic theory: Discourse Representation Theory

Discourse Representation Theory
• The meaning of natural language expressions is represented in first-order logic
• Not as formulas, but as a box representation (without explicit quantification and conjunction)
• DRT covers a wide range of linguistic phenomena (Kamp & Reyle)

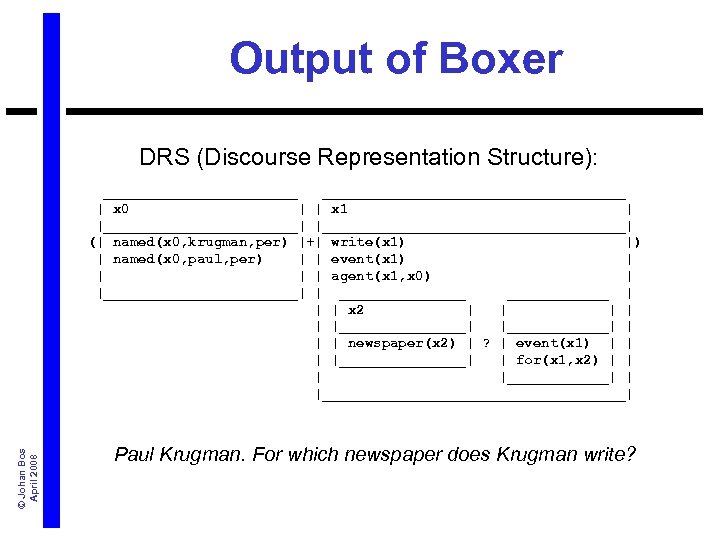

Output of Boxer
DRS (Discourse Representation Structure) for “Paul Krugman. For which newspaper does Krugman write?”
[Box diagram, flattened in extraction. It merges (+) a box with referent x0 and conditions named(x0, krugman, per) and named(x0, paul, per) with a box with referent x1 and conditions write(x1), event(x1) and agent(x1, x0). The second box also contains a question condition (?) linking a sub-box with referent x2 and condition newspaper(x2) to the conditions event(x1) and for(x1, x2).]
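The + between the two boxes is DRS merge, which pools the referents and the conditions of both boxes. The following is a minimal sketch that stores conditions as plain strings and leaves out the nested ? condition. It is not Boxer's internal data structure.

```python
from dataclasses import dataclass, field

@dataclass
class DRS:
    """A DRS as a list of discourse referents and a list of conditions (strings)."""
    referents: list[str] = field(default_factory=list)
    conditions: list[str] = field(default_factory=list)

    def merge(self, other: "DRS") -> "DRS":
        """DRS merge (the '+' on the slide): pool referents and conditions."""
        refs = self.referents + [r for r in other.referents if r not in self.referents]
        return DRS(refs, self.conditions + other.conditions)

    def __str__(self) -> str:
        # Linear notation: [referents | conditions]
        return f"[{' '.join(self.referents)} | {', '.join(self.conditions)}]"

context = DRS(["x0"], ["named(x0,krugman,per)", "named(x0,paul,per)"])
question = DRS(["x1"], ["write(x1)", "event(x1)", "agent(x1,x0)"])  # ? condition omitted
print(context.merge(question))
# [x0 x1 | named(x0,krugman,per), named(x0,paul,per), write(x1), event(x1), agent(x1,x0)]
```

The merge assumes the two boxes use distinct referent names, as they do here (x0 and x1). A full implementation would rename clashing referents first.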

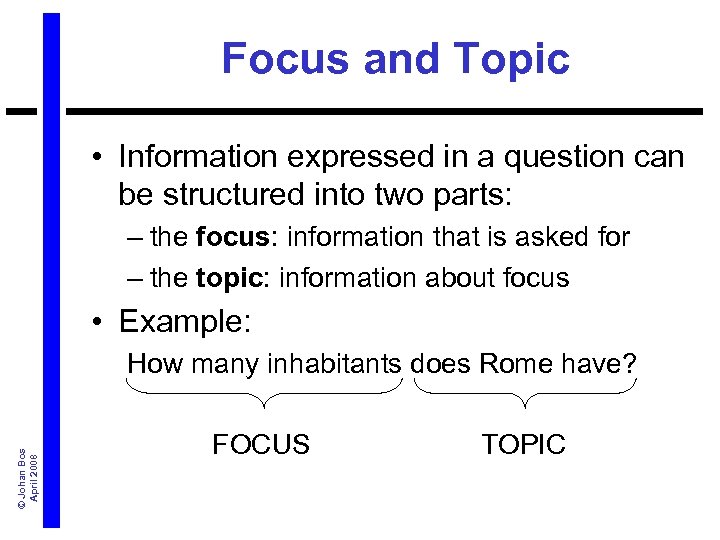

Focus and Topic
• Information expressed in a question can be structured into two parts:
  – the focus: the information that is asked for
  – the topic: the information about the focus
• Example: How many inhabitants does Rome have?
  – FOCUS: how many inhabitants
  – TOPIC: Rome

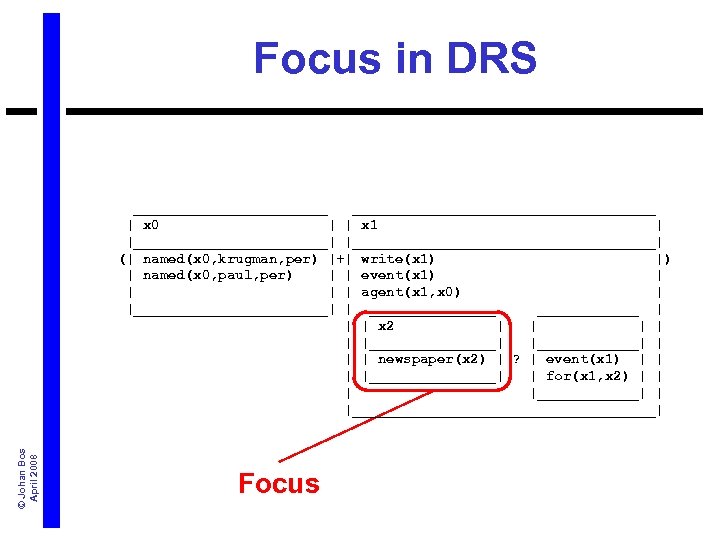

Focus in DRS
[The Boxer DRS for “For which newspaper does Krugman write?” shown again, with the focus of the question marked]

Question Answering (QA) Lecture 2
• Question Analysis
→ Background Knowledge
• Answer Typing

Architecture of PRONTO
[Pronto architecture diagram, as on the Pronto QA System slide]

Knowledge Construction
• The knowledge component in Pronto constructs a local knowledge base for the question under consideration
  – This knowledge is used in subsequent components
• The task of the knowledge component is to find all relevant knowledge that might be used
  – but as little as possible, to ensure efficiency

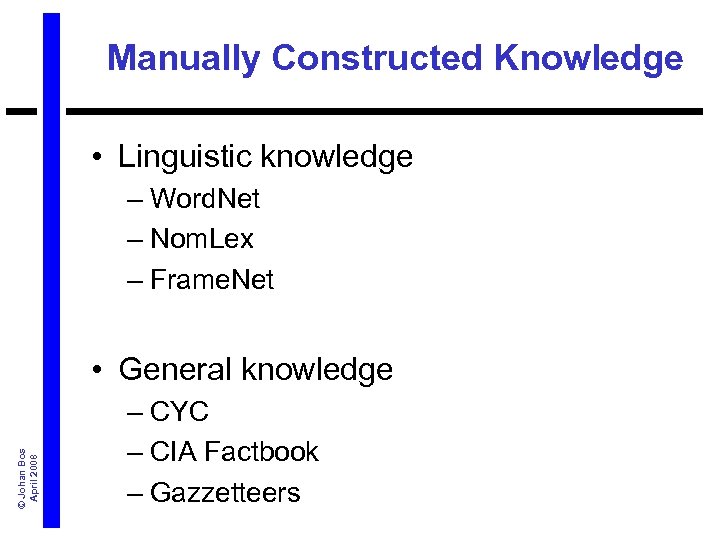

Manually Constructed Knowledge
• Linguistic knowledge
  – WordNet
  – NomLex
  – FrameNet
• General knowledge
  – CYC
  – CIA Factbook
  – Gazetteers

WordNet
• Electronic dictionary
• Not only words and definitions, but also relations between words
• Four parts of speech:
  – Nouns
  – Verbs
  – Adjectives
  – Adverbs

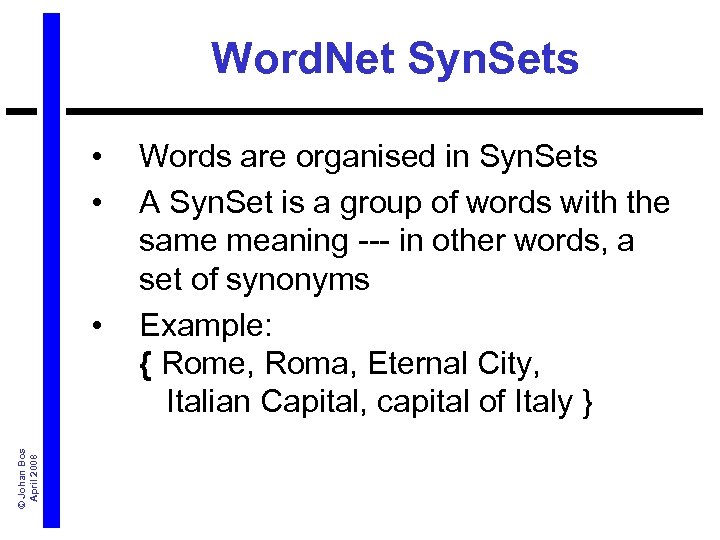

WordNet SynSets
• Words are organised in SynSets
• A SynSet is a group of words with the same meaning; in other words, a set of synonyms
• Example: { Rome, Roma, Eternal City, Italian Capital, capital of Italy }

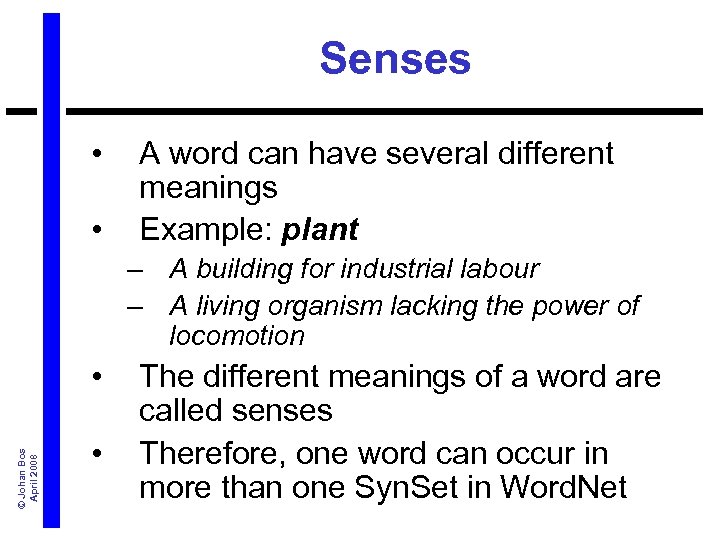

Senses
• A word can have several different meanings
• Example: plant
  – A building for industrial labour
  – A living organism lacking the power of locomotion
• The different meanings of a word are called senses
• Therefore, one word can occur in more than one SynSet in WordNet

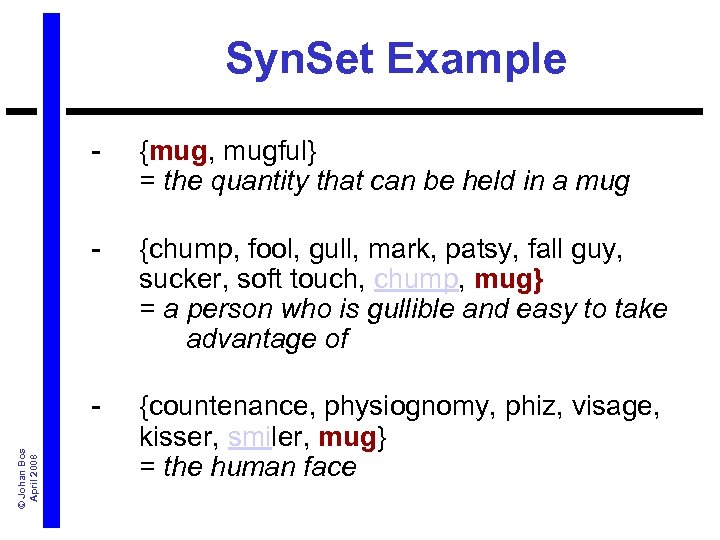

SynSet Example
• {mug, mugful} = the quantity that can be held in a mug
• {chump, fool, gull, mark, patsy, fall guy, sucker, soft touch, mug} = a person who is gullible and easy to take advantage of
• {countenance, physiognomy, phiz, visage, kisser, smiler, mug} = the human face

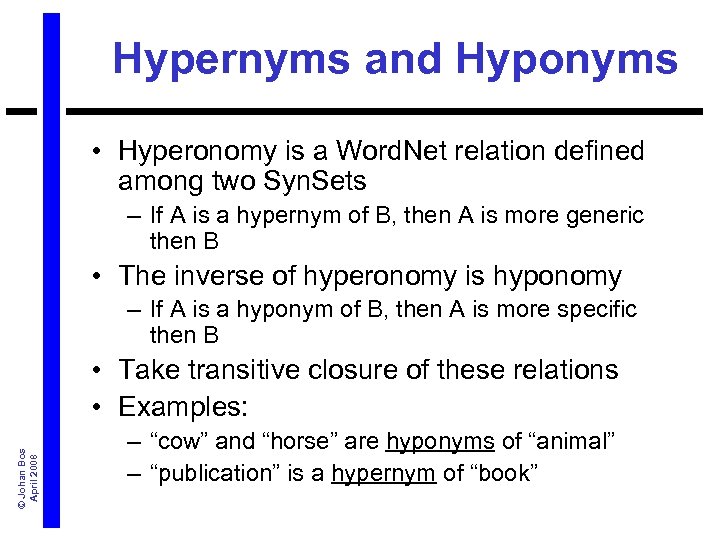

Hypernyms and Hyponyms
• Hypernymy is a WordNet relation defined between two SynSets
  – If A is a hypernym of B, then A is more generic than B
• The inverse of hypernymy is hyponymy
  – If A is a hyponym of B, then A is more specific than B
• Take the transitive closure of these relations
• Examples:
  – “cow” and “horse” are hyponyms of “animal”
  – “publication” is a hypernym of “book”

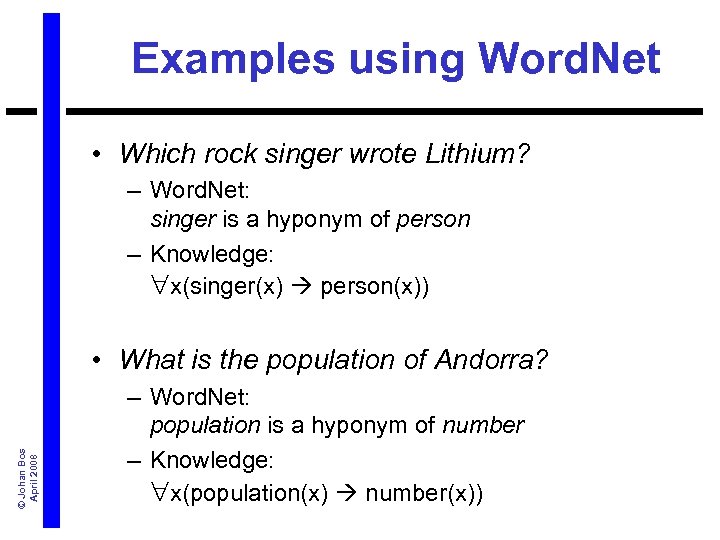

Examples using WordNet
• Which rock singer wrote Lithium?
  – WordNet: singer is a hyponym of person
  – Knowledge: $\forall x\,(singer(x) \rightarrow person(x))$
• What is the population of Andorra?
  – WordNet: population is a hyponym of number
  – Knowledge: $\forall x\,(population(x) \rightarrow number(x))$
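Deriving knowledge axioms from hyponym links can be sketched with a toy hypernym table and its transitive closure. The table is a simplified assumption, including the intermediate node "musician". It is not WordNet's actual hierarchy, which has more levels and can list several hypernyms for one SynSet.

```python
# Toy hypernym links (word -> one direct hypernym); simplified, not WordNet data.
HYPERNYM = {
    "singer": "musician",
    "musician": "person",
    "population": "number",
    "cow": "animal",
    "horse": "animal",
    "book": "publication",
}

def hypernyms(word: str) -> list[str]:
    """Transitive closure: follow hypernym links upwards until none is left."""
    chain = []
    while word in HYPERNYM:  # the toy table is acyclic, so this terminates
        word = HYPERNYM[word]
        chain.append(word)
    return chain

def axioms(word: str) -> list[str]:
    """One axiom per hypernym, written as ASCII first-order logic."""
    return [f"all x ({word}(x) -> {h}(x))" for h in hypernyms(word)]

print(axioms("singer"))
# ['all x (singer(x) -> musician(x))', 'all x (singer(x) -> person(x))']
```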

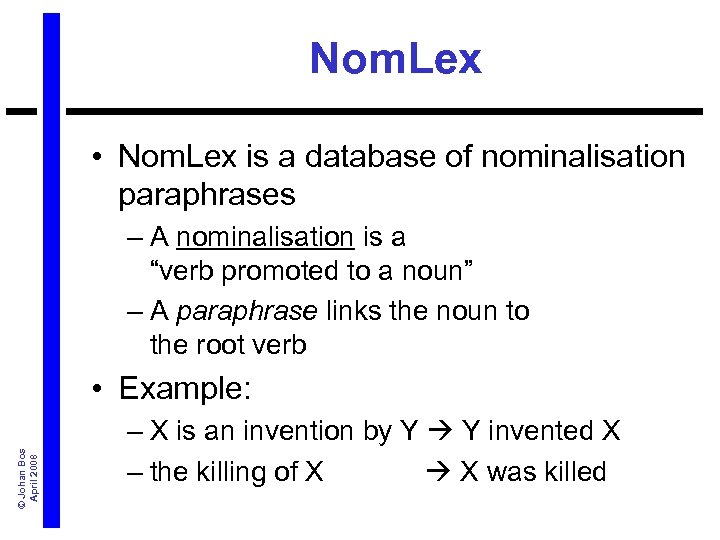

NomLex
• NomLex is a database of nominalisation paraphrases
  – A nominalisation is a “verb promoted to a noun”
  – A paraphrase links the noun to the root verb
• Examples:
  – X is an invention by Y → Y invented X
  – the killing of X → X was killed
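The two example paraphrases can be turned into toy rewrite rules. The nominalisation table and both regular-expression templates are hypothetical and do not follow NomLex's actual entry format.

```python
import re
from typing import Optional

# Hypothetical nominalisation table: noun -> past-tense form of its root verb.
NOMINALISATIONS = {"invention": "invented", "killing": "killed"}

def paraphrase(phrase: str) -> Optional[str]:
    """Rewrite a nominalised phrase into a verbal paraphrase, if a template matches."""
    m = re.fullmatch(r"(.+) is an? (\w+) by (.+)", phrase)   # X is an invention by Y
    if m and m.group(2) in NOMINALISATIONS:
        x, noun, y = m.groups()
        return f"{y} {NOMINALISATIONS[noun]} {x}"            # Y invented X
    m = re.fullmatch(r"the (\w+) of (.+)", phrase)           # the killing of X
    if m and m.group(1) in NOMINALISATIONS:
        noun, x = m.groups()
        return f"{x} was {NOMINALISATIONS[noun]}"            # X was killed
    return None

print(paraphrase("the phonograph is an invention by Edison"))  # Edison invented the phonograph
print(paraphrase("the killing of JFK"))                         # JFK was killed
```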

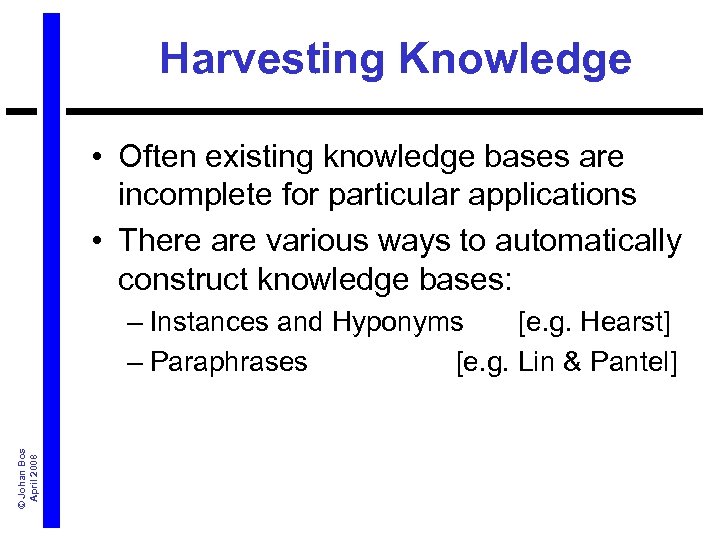

Harvesting Knowledge
• Existing knowledge bases are often incomplete for particular applications
• There are various ways to construct knowledge bases automatically:
  – Instances and hyponyms [e.g. Hearst]
  – Paraphrases [e.g. Lin & Pantel]

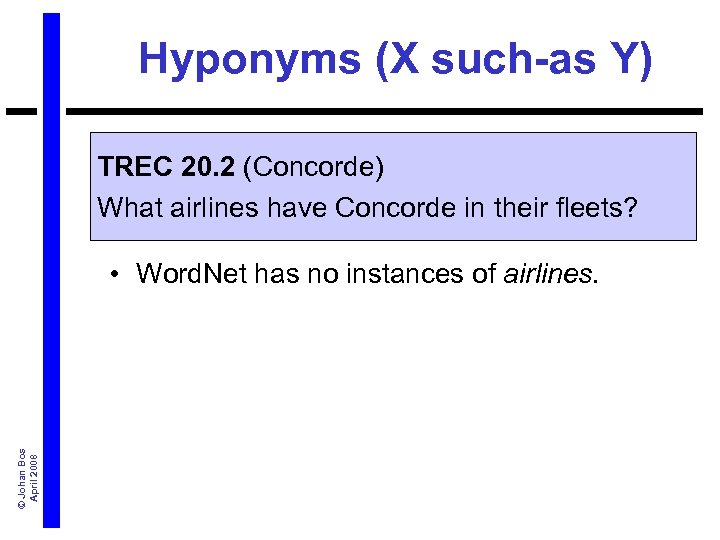

Hyponyms (X such as Y)
TREC 20.2 (Concorde): What airlines have Concorde in their fleets?
• WordNet has no instances of airlines.

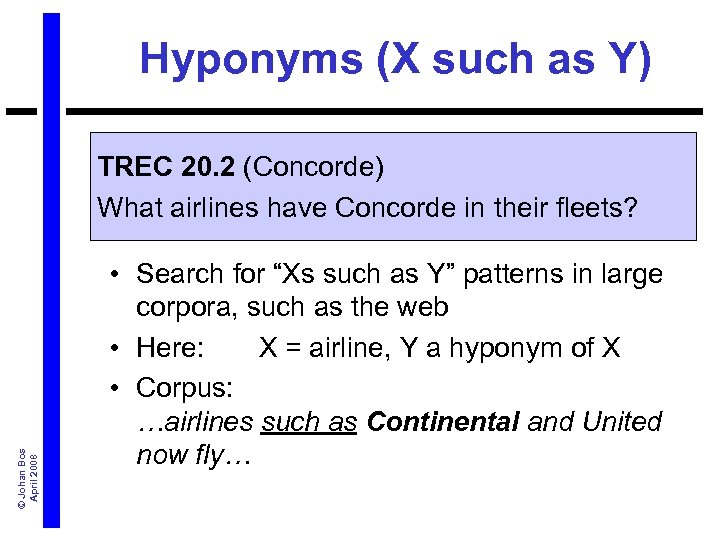

Hyponyms (X such as Y)
TREC 20.2 (Concorde): What airlines have Concorde in their fleets?
• Search for “Xs such as Y” patterns in large corpora, such as the web
• Here: X = airline, Y a hyponym of X
• Corpus: …airlines such as Continental and United now fly…
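A regular-expression version of the “Xs such as Y” pattern is sketched below. The assumption that every listed instance is a sequence of capitalised words is a simplification for illustration and is not the method behind the Pronto knowledge shown on the next slide.

```python
import re

# A capitalised name of one or more words, e.g. "Continental" or "Air Canada".
NAME = r"[A-Z][\w'&-]*(?: [A-Z][\w'&-]*)*"
# Separators inside the enumerated list: ", and", "and", ", or", "or", ",".
SEP = r"(?:,? and |,? or |, )"

def such_as_instances(text: str, plural: str) -> list[str]:
    """Collect Y's from '<plural> such as Y1, Y2 and Y3' (a Hearst-style pattern)."""
    pattern = re.compile(rf"\b{re.escape(plural)} such as ({NAME}(?:{SEP}{NAME})*)")
    found = []
    for m in pattern.finditer(text):
        found.extend(re.split(SEP, m.group(1)))  # break the list into single names
    return found

print(such_as_instances("...airlines such as Continental and United now fly...", "airlines"))
# ['Continental', 'United']
```

The list stops at the first lowercase word ("now"), so a capitalised word that follows the enumeration, for example at the start of a new sentence, would be wrongly picked up as an instance.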

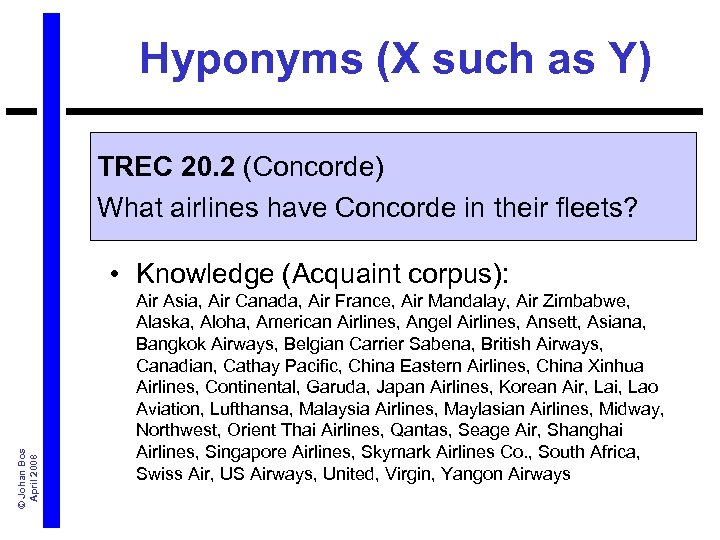

Hyponyms (X such as Y)
TREC 20.2 (Concorde): What airlines have Concorde in their fleets?
• Knowledge (Acquaint corpus): Air Asia, Air Canada, Air France, Air Mandalay, Air Zimbabwe, Alaska, Aloha, American Airlines, Angel Airlines, Ansett, Asiana, Bangkok Airways, Belgian Carrier Sabena, British Airways, Canadian, Cathay Pacific, China Eastern Airlines, China Xinhua Airlines, Continental, Garuda, Japan Airlines, Korean Air, Lai, Lao Aviation, Lufthansa, Malaysia Airlines, Maylasian Airlines, Midway, Northwest, Orient Thai Airlines, Qantas, Seage Air, Shanghai Airlines, Singapore Airlines, Skymark Airlines Co., South Africa, Swiss Air, US Airways, United, Virgin, Yangon Airways

Paraphrases
• Several methods have been developed for automatically finding paraphrases in large corpora
• This usually starts with seed patterns of known positive instances
• Through bootstrapping, new patterns are found, which in turn yield new seeds

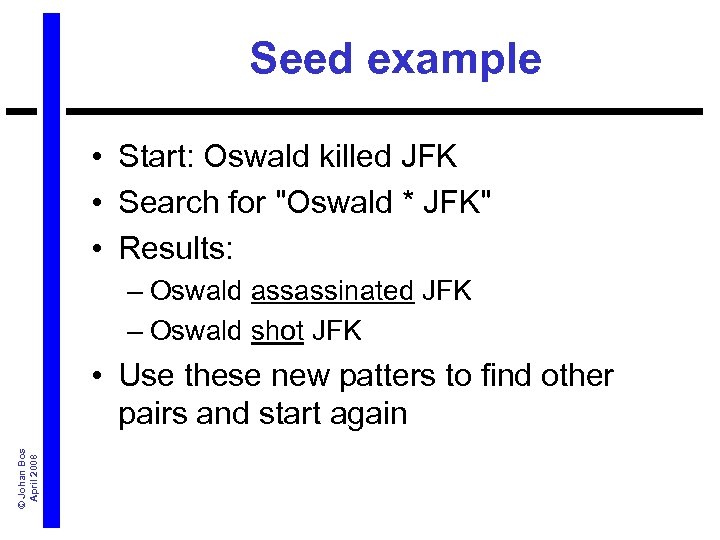

Seed example
• Start: Oswald killed JFK
• Search for "Oswald * JFK"
• Results:
  – Oswald assassinated JFK
  – Oswald shot JFK
• Use these new patterns to find other pairs and start again
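One bootstrapping round over a toy corpus is sketched below. The corpus sentences are hypothetical, and the assumption that the wildcard * matches exactly one word is a simplification.

```python
import re

# Toy corpus (hypothetical sentences); a real system would query a large corpus or the web.
CORPUS = [
    "Oswald killed JFK in Dallas.",
    "Oswald assassinated JFK in 1963.",
    "Oswald shot JFK.",
    "Booth assassinated Lincoln at Ford's Theatre.",
    "Booth shot Lincoln.",
]

def fillers(x: str, y: str) -> set[str]:
    """Search 'x * y' and collect the single word filling the wildcard."""
    pat = re.compile(rf"\b{re.escape(x)} (\w+) {re.escape(y)}\b")
    return {m.group(1) for s in CORPUS for m in pat.finditer(s)}

def pairs(verb: str) -> set[tuple[str, str]]:
    """Use a new pattern 'X verb Y' to find further (X, Y) pairs."""
    pat = re.compile(rf"\b([A-Z]\w*) {re.escape(verb)} ([A-Z]\w*)\b")
    return {m.groups() for s in CORPUS for m in pat.finditer(s)}

verbs = fillers("Oswald", "JFK")
print(sorted(verbs))      # ['assassinated', 'killed', 'shot']
new_pairs = set().union(*(pairs(v) for v in verbs))
print(sorted(new_pairs))  # [('Booth', 'Lincoln'), ('Oswald', 'JFK')]
```

The new pair (Booth, Lincoln) can then act as a seed for the next round, as the slide suggests.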

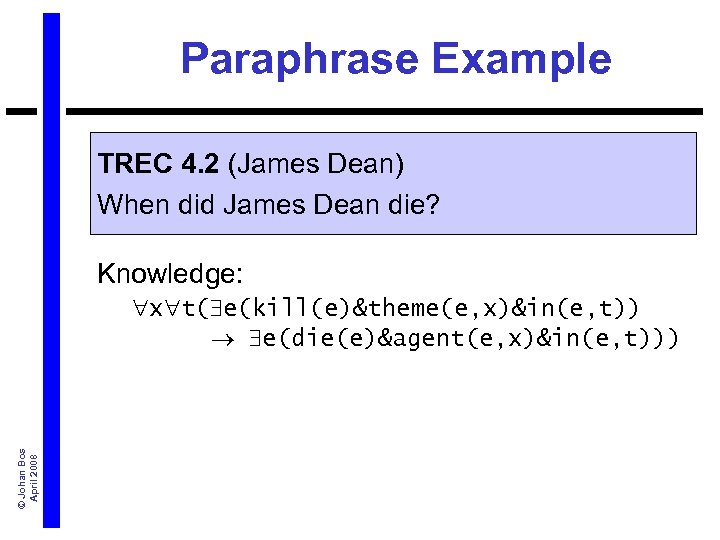

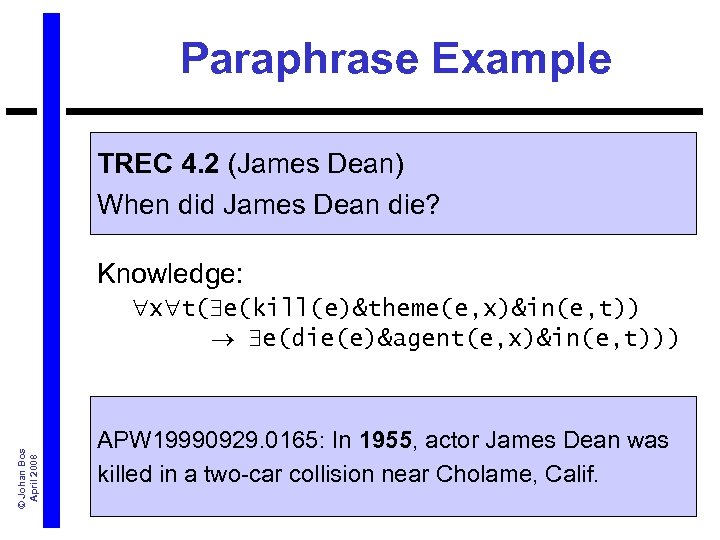


Paraphrase Example
TREC 4.2 (James Dean): When did James Dean die?
• Knowledge: $\forall x\,\forall t\,(\exists e\,(kill(e) \wedge theme(e,x) \wedge in(e,t)) \rightarrow \exists e\,(die(e) \wedge agent(e,x) \wedge in(e,t)))$
• APW19990929.0165: In 1955, actor James Dean was killed in a two-car collision near Cholame, Calif.

Question Answering (QA) Lecture 2
• Question Analysis
• Background Knowledge
→ Answer Typing

Architecture of PRONTO
[Pronto architecture diagram, as on the Pronto QA System slide]

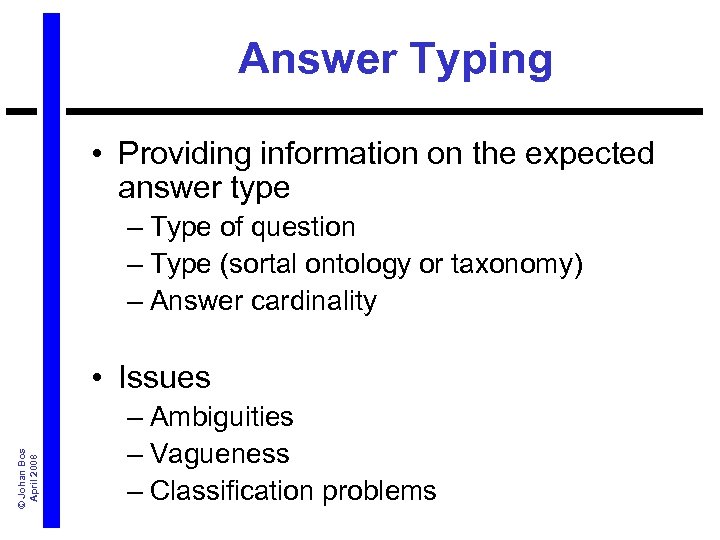

Answer Typing
• Providing information on the expected answer type:
  – Type of question
  – Type (sortal ontology or taxonomy)
  – Answer cardinality
• Issues:
  – Ambiguities
  – Vagueness
  – Classification problems

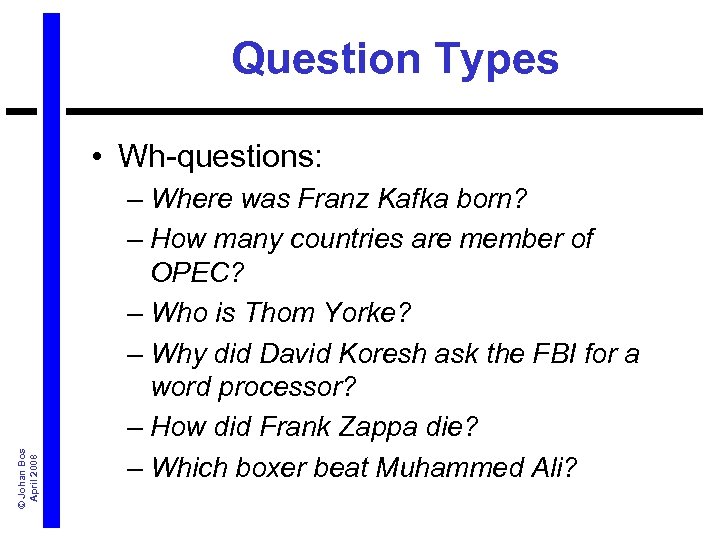

Question Types
• Wh-questions:
  – Where was Franz Kafka born?
  – How many countries are members of OPEC?
  – Who is Thom Yorke?
  – Why did David Koresh ask the FBI for a word processor?
  – How did Frank Zappa die?
  – Which boxer beat Muhammad Ali?

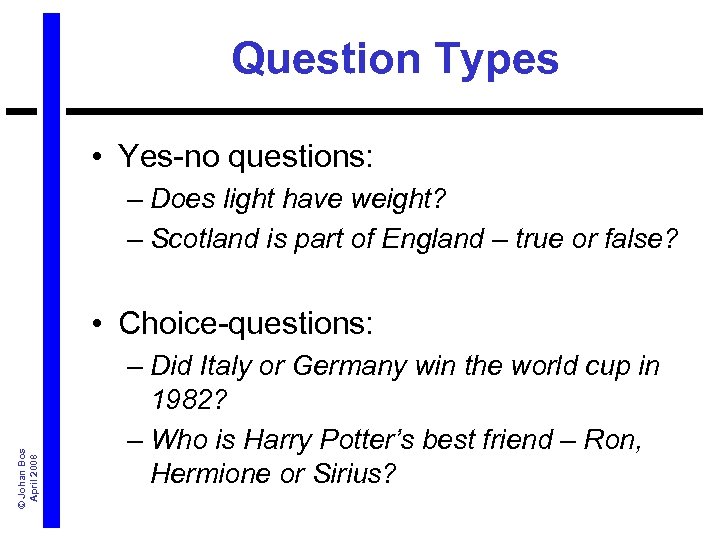

Question Types
• Yes-no questions:
  – Does light have weight?
  – Scotland is part of England – true or false?
• Choice questions:
  – Did Italy or Germany win the World Cup in 1982?
  – Who is Harry Potter’s best friend – Ron, Hermione or Sirius?

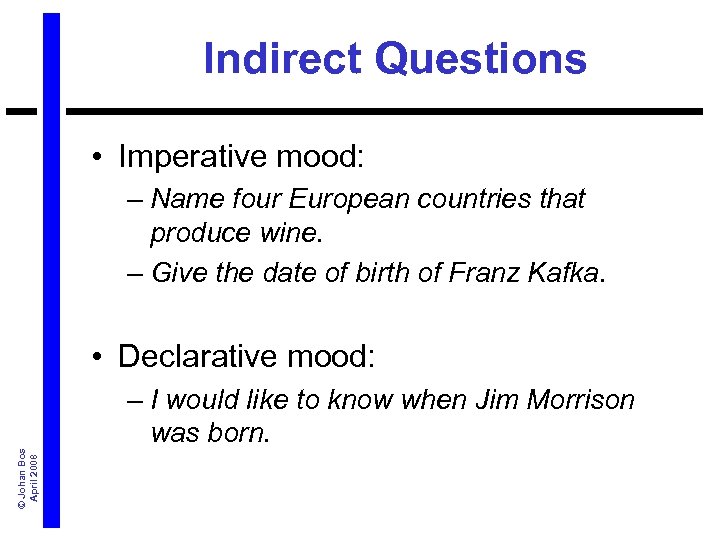

Indirect Questions
• Imperative mood:
  – Name four European countries that produce wine.
  – Give the date of birth of Franz Kafka.
• Declarative mood:
  – I would like to know when Jim Morrison was born.

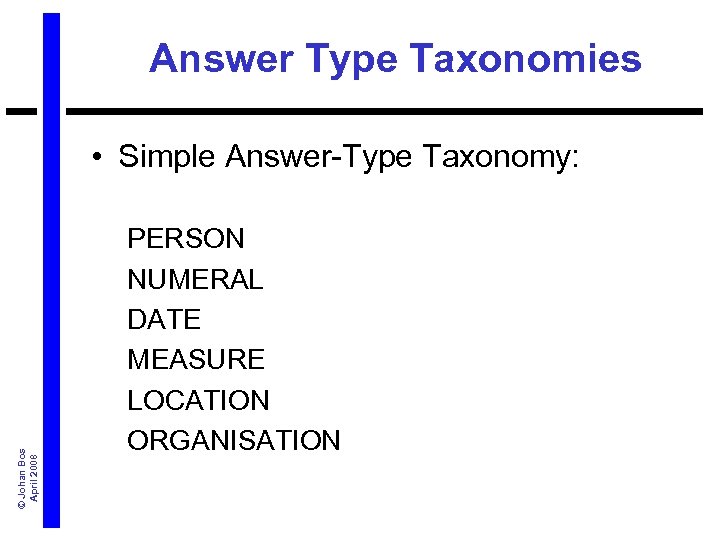

Answer Type Taxonomies
• Simple answer-type taxonomy:
  – PERSON
  – NUMERAL
  – DATE
  – MEASURE
  – LOCATION
  – ORGANISATION

Expected Answer Types
• PERSON:
  – Who won the Nobel prize for Peace?
  – Which rock singer wrote Lithium?

Expected Answer Types
• NUMERAL:
  – How many inhabitants does Rome have?
  – What’s the population of Scotland?

Expected Answer Types
• DATE:
  – When was JFK killed?
  – In what year did Rome become the capital of Italy?

Expected Answer Types
• MEASURE:
  – How much does a 125-gallon fish tank cost?
  – How tall is an African elephant?
  – How heavy is a Boeing 777?

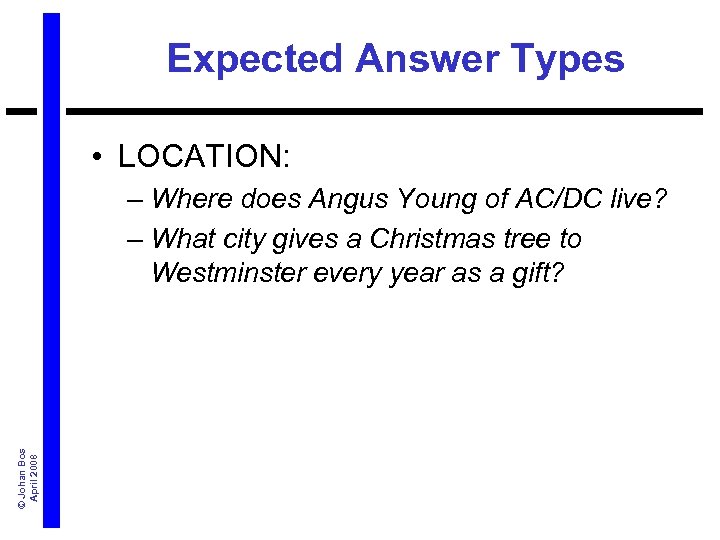

Expected Answer Types
• LOCATION:
  – Where does Angus Young of AC/DC live?
  – What city gives a Christmas tree to Westminster every year as a gift?

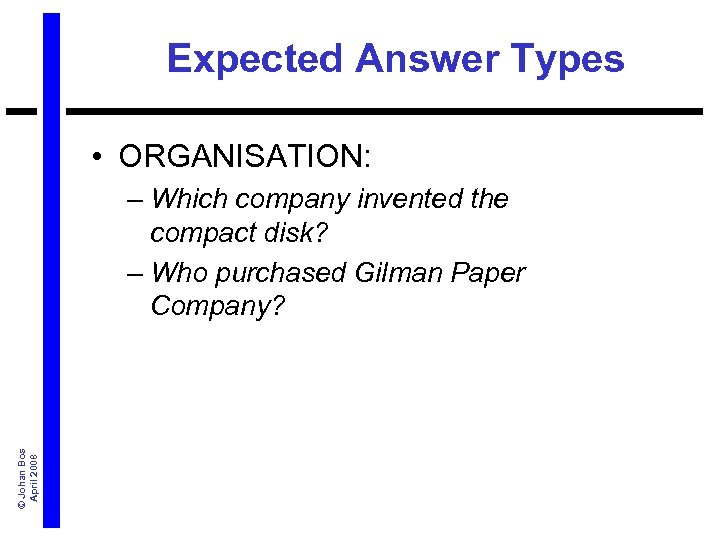

Expected Answer Types
• ORGANISATION:
  – Which company invented the compact disk?
  – Who purchased Gilman Paper Company?

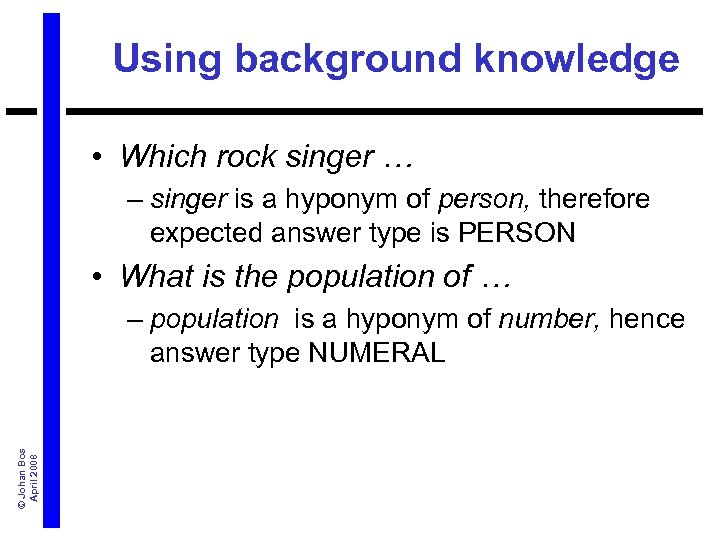

Using background knowledge
• Which rock singer …
  – singer is a hyponym of person, therefore the expected answer type is PERSON
• What is the population of …
  – population is a hyponym of number, hence the answer type is NUMERAL

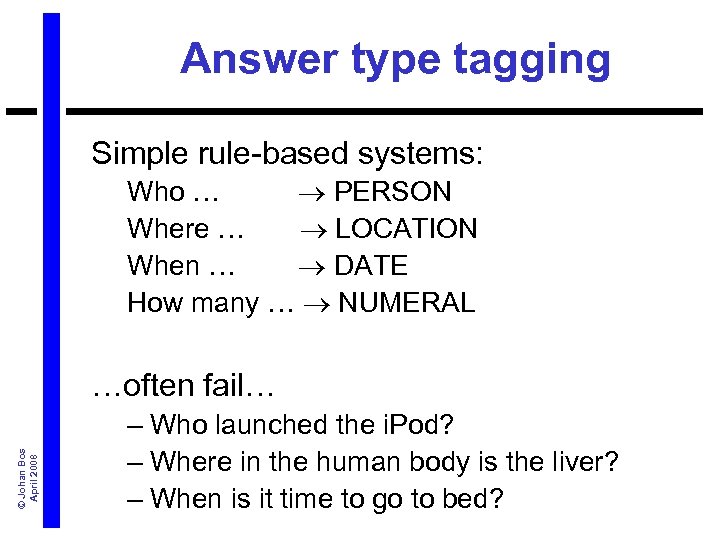

Answer type tagging
• Simple rule-based systems:
  – Who … → PERSON
  – Where … → LOCATION
  – When … → DATE
  – How many … → NUMERAL
• …often fail:
  – Who launched the iPod?
  – Where in the human body is the liver?
  – When is it time to go to bed?
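The four wh-word rules translate directly into a few lines of Python. The sketch below is a minimal version: matching on the question prefix and the UNKNOWN fallback are assumptions.

```python
# The simple wh-word rules from the slide, checked in order.
RULES = [("how many", "NUMERAL"), ("who", "PERSON"), ("where", "LOCATION"), ("when", "DATE")]

def answer_type(question: str) -> str:
    """Return the expected answer type predicted by the first matching rule."""
    q = question.lower()
    for prefix, atype in RULES:
        if q.startswith(prefix + " "):  # the wh-word must be followed by a space
            return atype
    return "UNKNOWN"

for q in ["Who launched the iPod?", "Where in the human body is the liver?",
          "When is it time to go to bed?"]:
    print(q, "->", answer_type(q))
```

The rules assign a type to all three example questions, but each type is wrong. The iPod was launched by a company (ORGANISATION). The liver question asks for a place in the body, not a geographic location. The bedtime question asks for a time of day or a condition, not a date.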

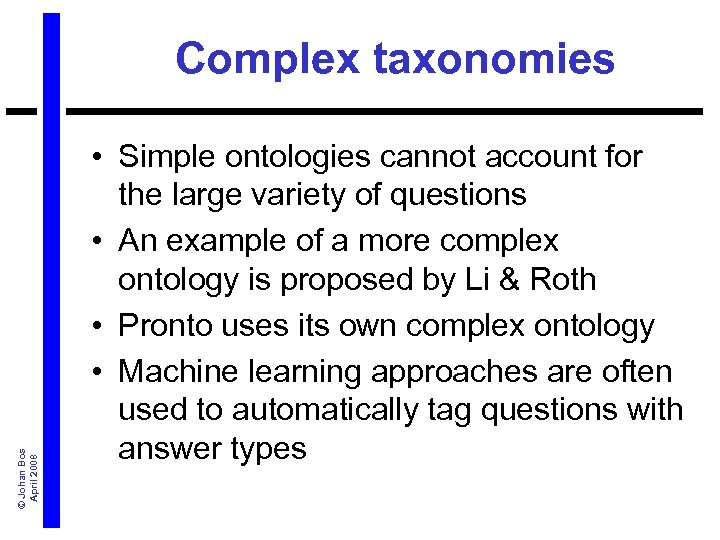

Complex taxonomies
• Simple ontologies cannot account for the large variety of questions
• An example of a more complex ontology is the one proposed by Li & Roth
• Pronto uses its own complex ontology
• Machine learning approaches are often used to tag questions with answer types automatically

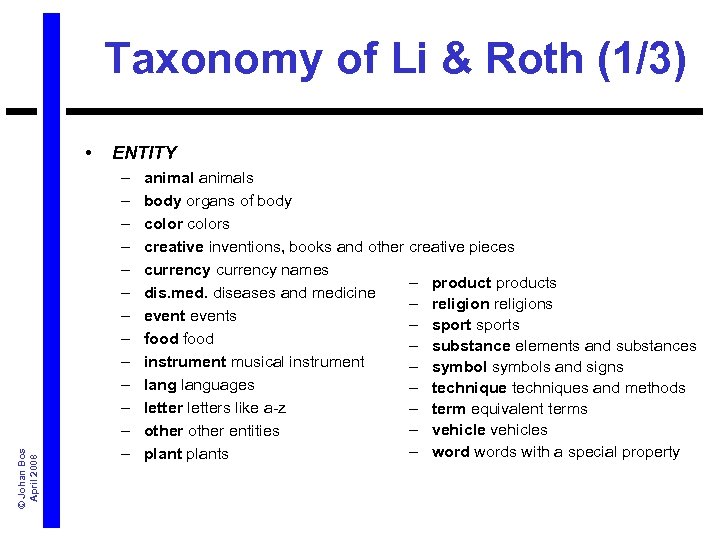

Taxonomy of Li & Roth (1/3)
• ENTITY
  – animal: animals
  – body: organs of body
  – color: colors
  – creative: inventions, books and other creative pieces
  – currency: currency names
  – dis.med.: diseases and medicine
  – event: events
  – food: food
  – instrument: musical instrument
  – lang: languages
  – letter: letters like a-z
  – other: other entities
  – plant: plants
  – product: products
  – religion: religions
  – sport: sports
  – substance: elements and substances
  – symbol: symbols and signs
  – technique: techniques and methods
  – term: equivalent terms
  – vehicle: vehicles
  – word: words with a special property

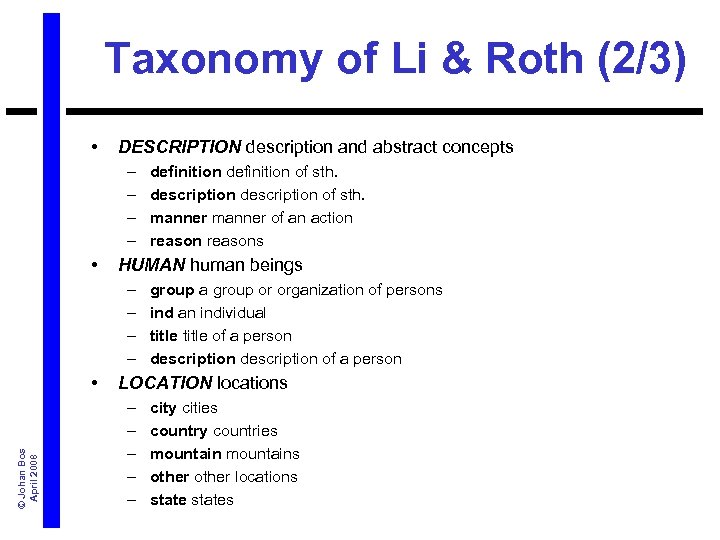

Taxonomy of Li & Roth (2/3)
• DESCRIPTION: description and abstract concepts
  – definition: definition of sth.
  – description: description of sth.
  – manner: manner of an action
  – reason: reasons
• HUMAN: human beings
  – group: a group or organization of persons
  – ind: an individual
  – title: title of a person
  – description: description of a person
• LOCATION: locations
  – city: cities
  – country: countries
  – mountain: mountains
  – other: other locations
  – state: states

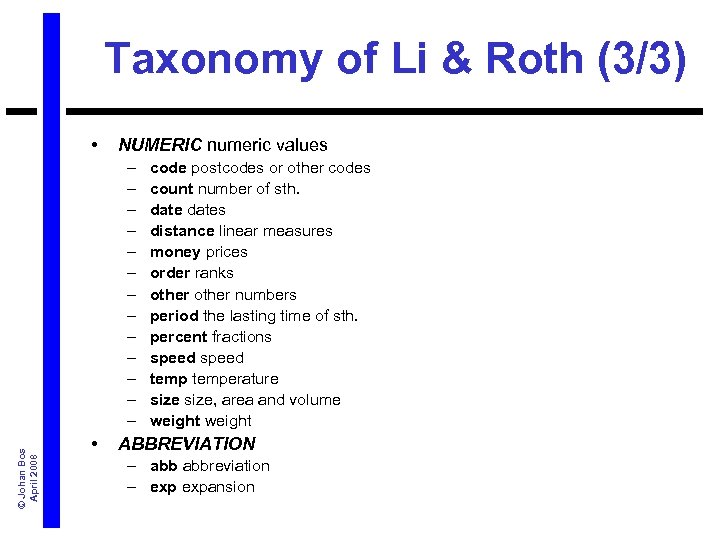

Taxonomy of Li & Roth (3/3)
• NUMERIC: numeric values
  – code: postcodes or other codes
  – count: number of sth.
  – date: dates
  – distance: linear measures
  – money: prices
  – order: ranks
  – other: other numbers
  – period: the lasting time of sth.
  – percent: fractions
  – speed: speed
  – temp: temperature
  – size: size, area and volume
  – weight: weight
• ABBREVIATION
  – abbreviation
  – expansion

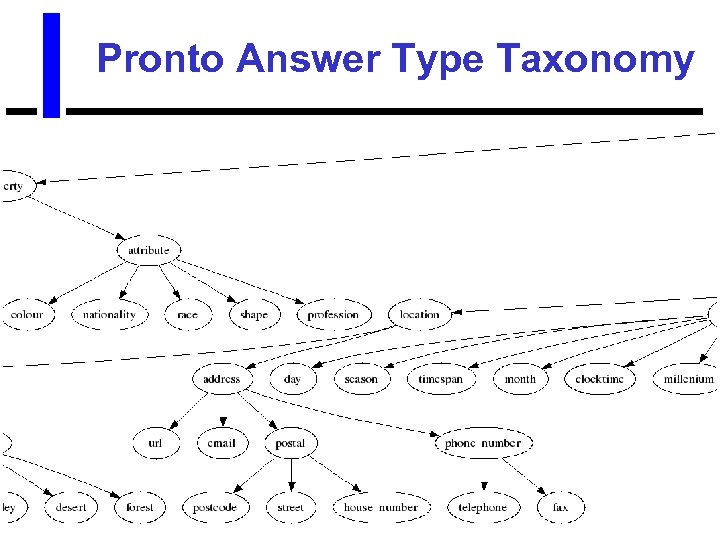

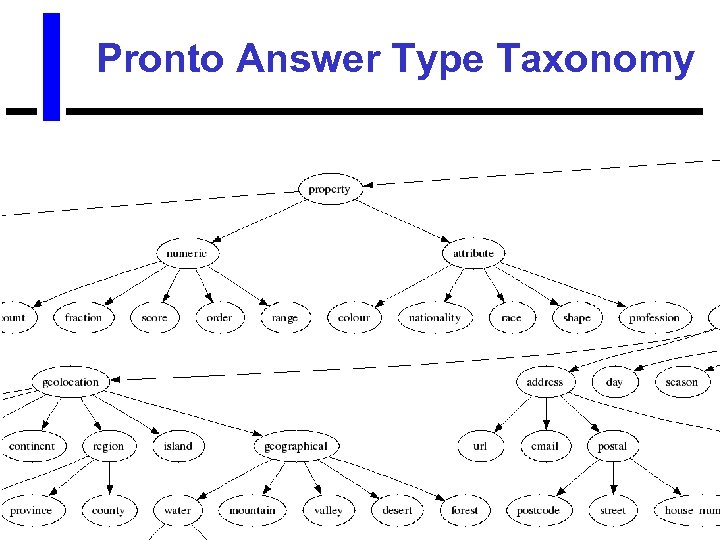

Pronto Answer Type Taxonomy


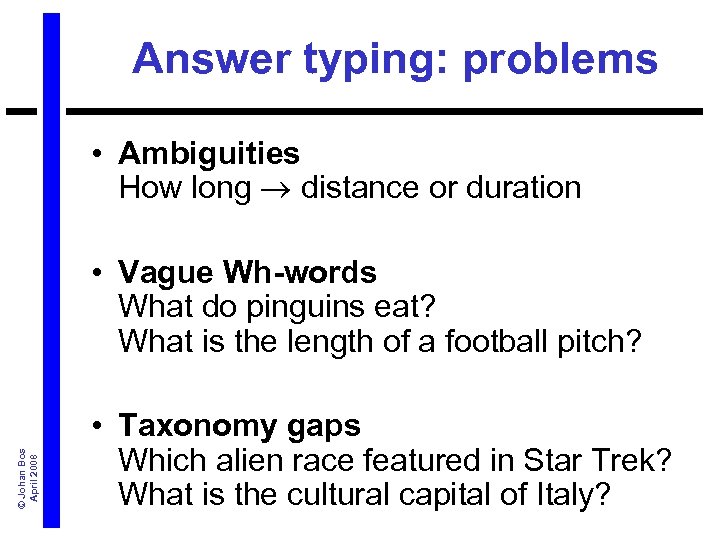

Answer typing: problems
• Ambiguities
  – How long: distance or duration?
• Vague wh-words
  – What do penguins eat?
  – What is the length of a football pitch?
• Taxonomy gaps
  – Which alien race featured in Star Trek?
  – What is the cultural capital of Italy?

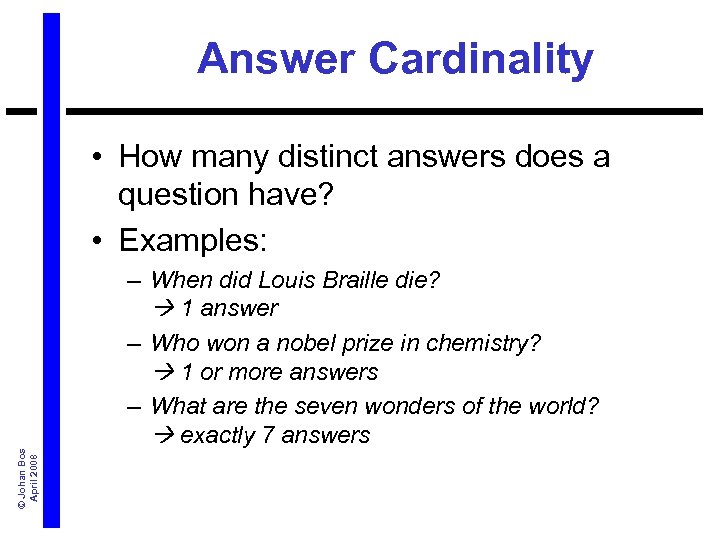

Answer Cardinality
• How many distinct answers does a question have?
• Examples:
  – When did Louis Braille die? → 1 answer
  – Who won a Nobel Prize in chemistry? → 1 or more answers
  – What are the seven wonders of the world? → exactly 7 answers

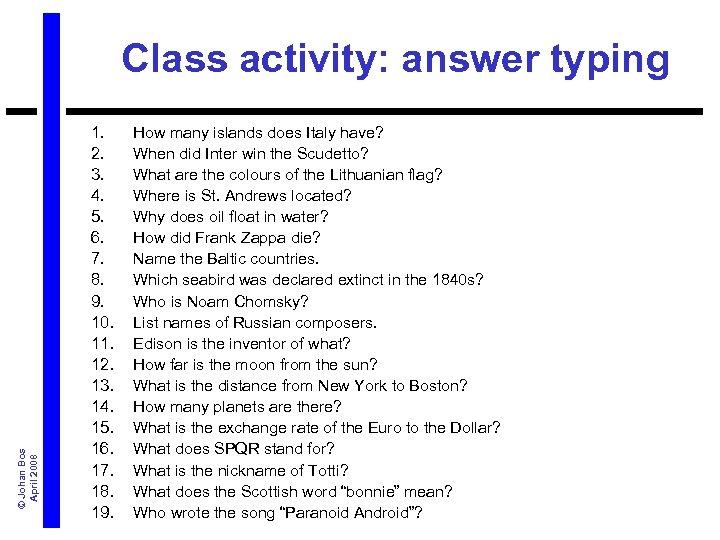

Class activity: answer typing
1. How many islands does Italy have?
2. When did Inter win the Scudetto?
3. What are the colours of the Lithuanian flag?
4. Where is St. Andrews located?
5. Why does oil float in water?
6. How did Frank Zappa die?
7. Name the Baltic countries.
8. Which seabird was declared extinct in the 1840s?
9. Who is Noam Chomsky?
10. List names of Russian composers.
11. Edison is the inventor of what?
12. How far is the moon from the sun?
13. What is the distance from New York to Boston?
14. How many planets are there?
15. What is the exchange rate of the Euro to the Dollar?
16. What does SPQR stand for?
17. What is the nickname of Totti?
18. What does the Scottish word “bonnie” mean?
19. Who wrote the song “Paranoid Android”?

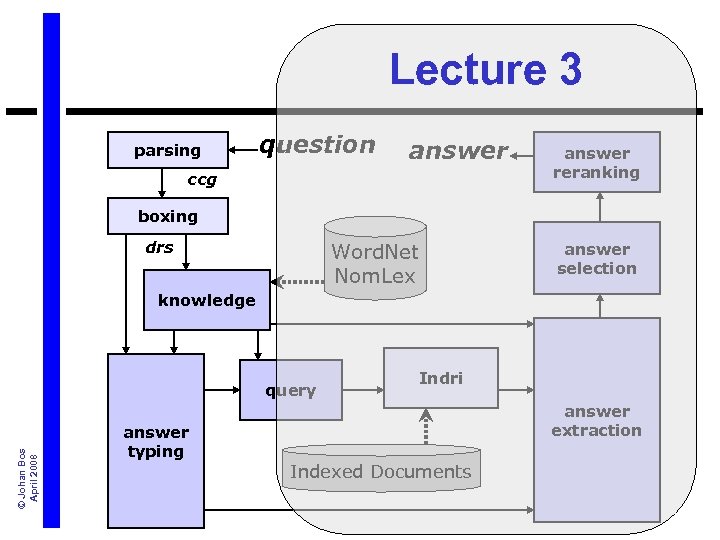

Lecture 3
[Pronto architecture diagram, as on the Pronto QA System slide]

