- Number of slides: 38

Question Answering at TREC
Mark A. Greenwood
Natural Language Processing Group
Department of Computer Science
University of Sheffield, UK

Outline of Talk
• History of QA at TREC
• TREC 2005
  § Task Overview
  § Evaluation Metrics
  § Official Evaluation Results
• Answering Factoid/List Questions
  § Question Processing
  § Document Retrieval
  § Answer Extraction
• Answering Definition Questions
  § Bare Target + Reduce + Filter Approach
  § Target Enrichment + Filter Approach
• Conclusions
• Future Work
11/01/2006 NLP Meeting

History of QA at TREC
• The QA track was first introduced at TREC 8 (Voorhees, 1999)
  § 200 fact-based, short-answer questions
  § Questions were mainly back-formulated from documents
  § Answers could be 50-byte or 250-byte snippets
  § 5 answers could be returned for each question
  § The best systems could answer over 2/3 of the questions (Moldovan et al., 1999; Srihari and Li, 1999)
• TREC 10 (Voorhees, 2001) introduced:
  § List questions such as “Name 20 countries that produce coffee”
  § Questions which don’t have an answer in the collection

History of QA at TREC
• In TREC 11 (Voorhees, 2002):
  § Answers had to be exact
  § Only one answer could be returned per question
• TREC 12 (Voorhees, 2003) introduced definition questions:
  § Define a target such as “aspirin” or “Aaron Copland”
  § A definition should contain a number of important facts (vital nuggets)
  § It can also include other associated information (non-vital nuggets)
  § Evaluated using a length-based precision metric which penalizes long answers containing few nuggets

History of QA at TREC
• TREC 13 (Voorhees, 2004) combined the three question types into scenarios built around targets. For instance:
  § Target: Hale Bopp Comet
  § Factoid: When was the comet discovered?
  § Factoid: How often does it approach the earth?
  § List: In what countries was the comet visible on its last return?
  § Other: Tell me anything else not covered by the above questions

TREC 2005
• Questions were based around 75 targets
  § 19 people
  § 19 organizations
  § 19 things
  § 18 events
• The series of targets contained a total of:
  § 362 factoid questions
  § 93 list questions
  § 75 (one per target) other questions
• All answers had to be supported by reference to a document in the AQUAINT collection of newswire texts.

Example Scenarios
• AMWAY
  § F: When was AMWAY founded?
  § F: Where is it headquartered?
  § F: Who is president of the company?
  § L: Name the officials of the company
  § F: What is the name “AMWAY” short for?
  § O:
• return of Hong Kong to Chinese sovereignty
  § F: What is Hong Kong’s population?
  § F: When was Hong Kong returned to Chinese sovereignty?
  § F: Who was the Chinese President at the time of the return?
  § F: Who was the British Foreign Secretary at the time?
  § L: What other countries formally congratulated China on the return?
  § O:

Example Scenarios
• Shiite
  § F: Who was the first Imam of the Shiite sect of Islam?
  § F: Where is his tomb?
  § F: What was this person’s relationship to the Prophet Mohammad?
  § F: Who was the third Imam of Shiite Muslims?
  § F: When did he die?
  § F: What portion of Muslims are Shiite?
  § L: What Shiite leaders were killed in Pakistan?
  § O:

Evaluation Metrics
• For factoid questions the metric is accuracy
  § Only exact, supported answers and correct NIL responses are counted
• For list questions the metric is F-measure (β = 1)
  § Only exact, supported answers are counted
  § The set of correct answers (for recall purposes) is the union of all correct answers across all submitted runs plus any instances found during question development
• For other questions the metric is F-measure (β = 3)
  § Recall is the proportion of vital nuggets returned
  § Precision is a length-based penalty, where each valid nugget allows 100 non-whitespace characters to be returned
• These are combined to give a weighted score per target
  § Weighted Score = 0.5 × Factoid + 0.25 × ListAvgF + 0.25 × OtherAvgF
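The metrics on this slide can be sketched in a few lines of Python. This is an illustrative sketch, not the official TREC scoring code: the function names are made up for this example, and the exact form of the length penalty in `nugget_precision` (full precision while the answer fits the allowance, otherwise one minus the fraction of excess characters) is an assumption based on the usual description of nugget-based scoring; the slide itself only states the 100-character allowance per valid nugget.

```python
def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (beta > 1 favours recall)."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def nugget_precision(valid_nuggets: int, answer_length: int,
                     allowance_per_nugget: int = 100) -> float:
    """Length-based precision; answer_length counts non-whitespace characters.

    Assumed form: no penalty while the answer fits within the allowance,
    otherwise 1 - (excess / length).
    """
    allowance = allowance_per_nugget * valid_nuggets
    if answer_length <= allowance:
        return 1.0
    return 1.0 - (answer_length - allowance) / answer_length


def weighted_score(factoid_accuracy: float, list_avg_f: float,
                   other_avg_f: float) -> float:
    """Per-target score: 0.5 x Factoid + 0.25 x ListAvgF + 0.25 x OtherAvgF."""
    return 0.5 * factoid_accuracy + 0.25 * list_avg_f + 0.25 * other_avg_f
```

With β = 3 an "other" answer is rewarded far more for covering vital nuggets than for being short, which is why long definitions can still score reasonably.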

Official Evaluation Results
• 30 groups participated in TREC 2005
• In all, 71 runs were submitted for evaluation
• We submitted three runs
  § shef05lmg
  § shef05mc
  § shef05lc
• The main evaluation is the per-series score (average of the weighted target score), but separate results are also given for the three different question types.

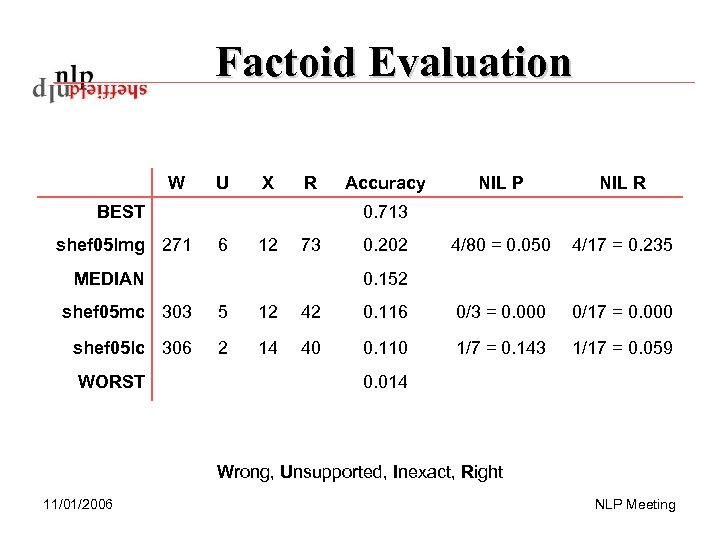

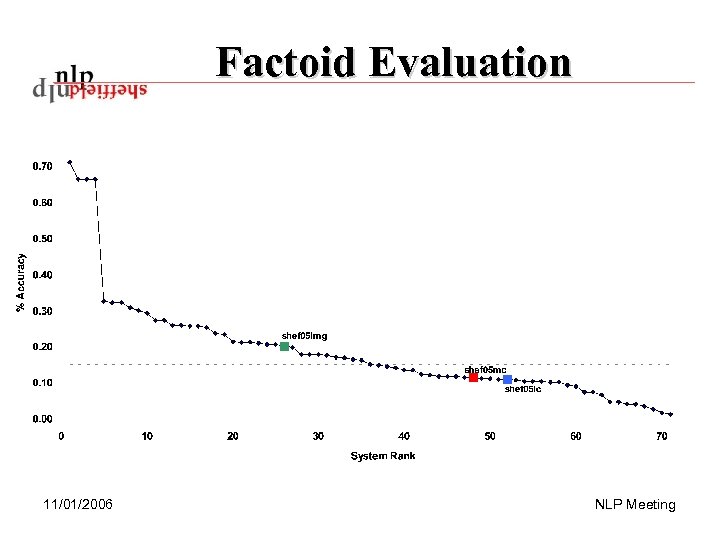

Factoid Evaluation

| Run | W | U | X | R | Accuracy | NIL P | NIL R |
|---|---|---|---|---|---|---|---|
| BEST | | | | | 0.713 | | |
| shef05lmg | 271 | 6 | 12 | 73 | 0.202 | 4/80 = 0.050 | 4/17 = 0.235 |
| MEDIAN | | | | | 0.152 | | |
| shef05mc | 303 | 5 | 12 | 42 | 0.116 | 0/3 = 0.000 | 0/17 = 0.000 |
| shef05lc | 306 | 2 | 14 | 40 | 0.110 | 1/7 = 0.143 | 1/17 = 0.059 |
| WORST | | | | | 0.014 | | |

W = Wrong, U = Unsupported, X = Inexact, R = Right
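As a quick check on how the accuracy figures relate to the judgement counts, the sketch below recomputes accuracy from the W/U/X/R counts reported on this slide. The function name is hypothetical; the only assumption is that accuracy is the number of right answers divided by the total number of judged factoid questions, which is consistent with the 362 factoid questions in the test set.

```python
def factoid_accuracy(wrong: int, unsupported: int, inexact: int, right: int) -> float:
    """Fraction of factoid questions judged Right out of all judged answers."""
    total = wrong + unsupported + inexact + right
    return right / total if total else 0.0


# Judgement counts (W, U, X, R) as reported on the slide.
runs = {
    "shef05lmg": (271, 6, 12, 73),
    "shef05mc": (303, 5, 12, 42),
    "shef05lc": (306, 2, 14, 40),
}

for name, counts in runs.items():
    print(f"{name}: {sum(counts)} questions, accuracy {factoid_accuracy(*counts):.3f}")
```

Each run's counts sum to 362, and the recomputed values agree with the accuracies shown for the three Sheffield runs.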

Factoid Evaluation

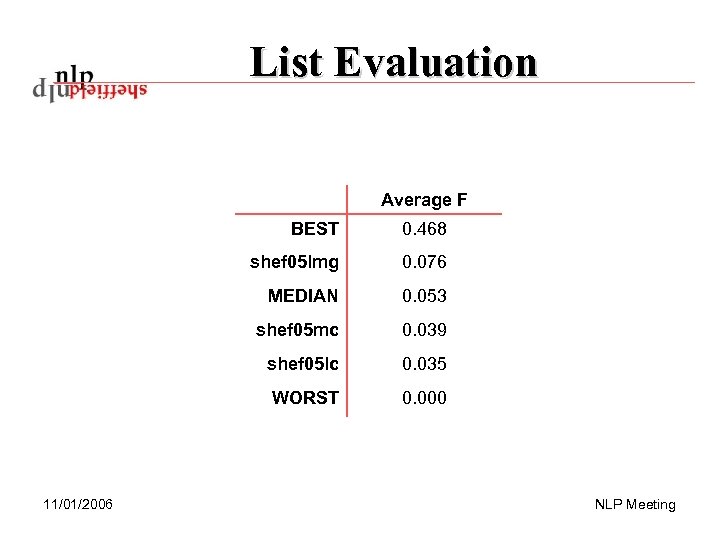

List Evaluation

| Run | Average F |
|---|---|
| BEST | 0.468 |
| shef05lmg | 0.076 |
| MEDIAN | 0.053 |
| shef05mc | 0.039 |
| shef05lc | 0.035 |
| WORST | 0.000 |

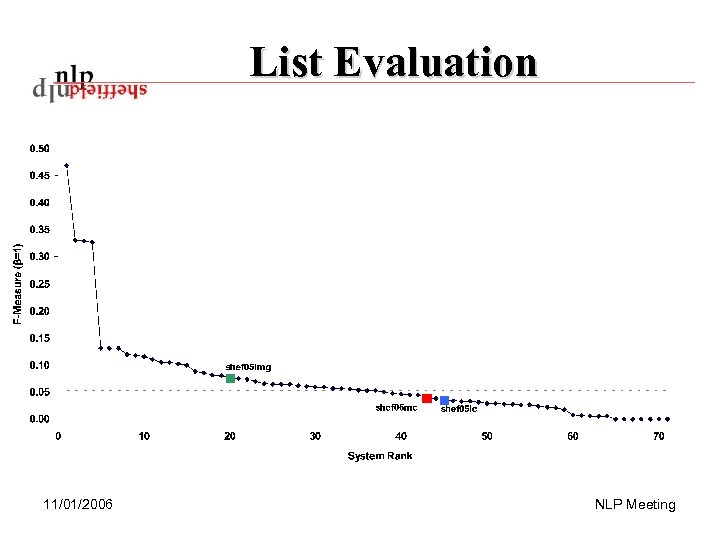

List Evaluation

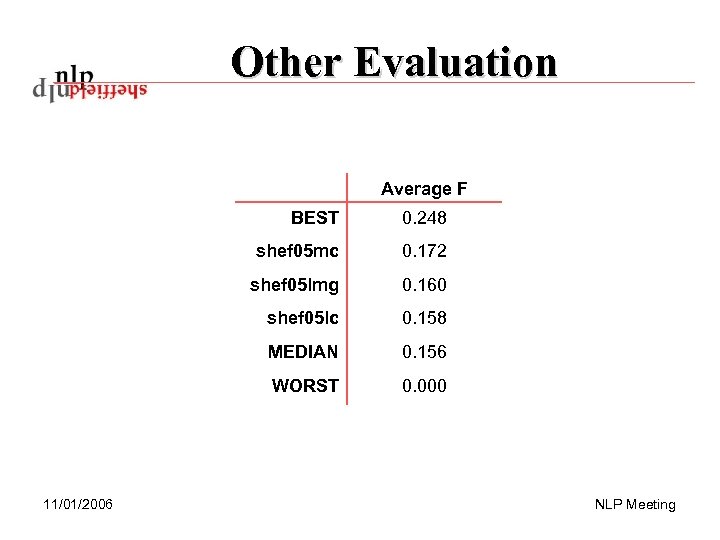

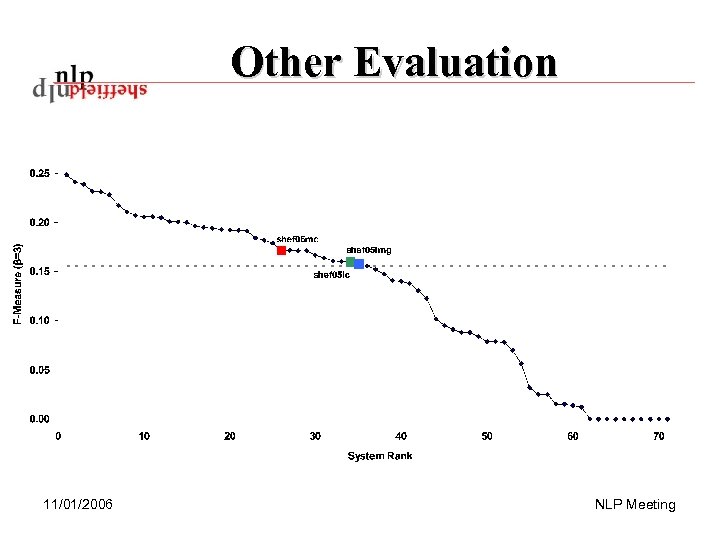

Other Evaluation

| Run | Average F |
|---|---|
| BEST | 0.248 |
| shef05mc | 0.172 |
| shef05lmg | 0.160 |
| shef05lc | 0.158 |
| MEDIAN | 0.156 |
| WORST | 0.000 |

Other Evaluation

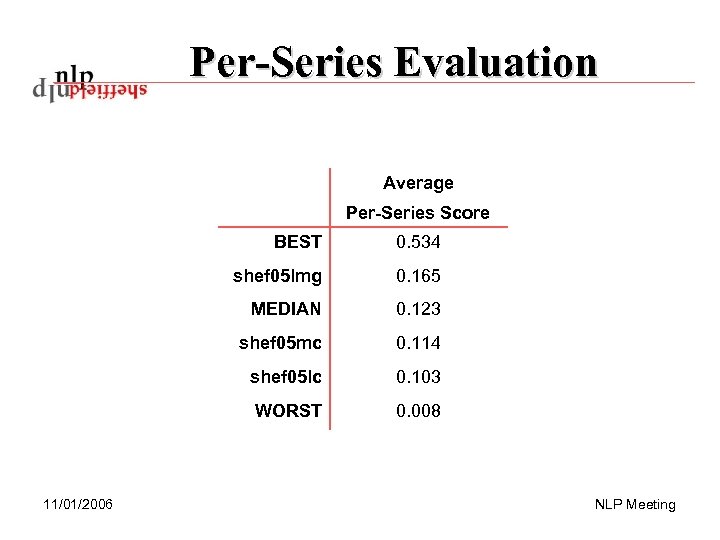

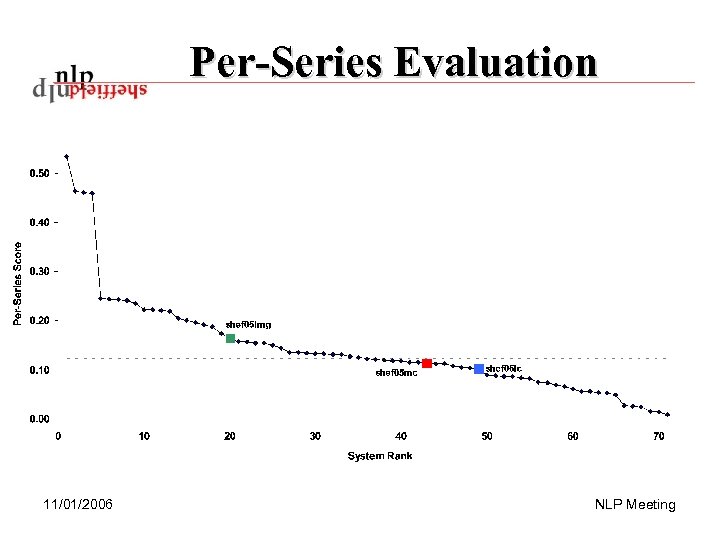

Per-Series Evaluation

| Run | Average Per-Series Score |
|---|---|
| BEST | 0.534 |
| shef05lmg | 0.165 |
| MEDIAN | 0.123 |
| shef05mc | 0.114 |
| shef05lc | 0.103 |
| WORST | 0.008 |

Per-Series Evaluation

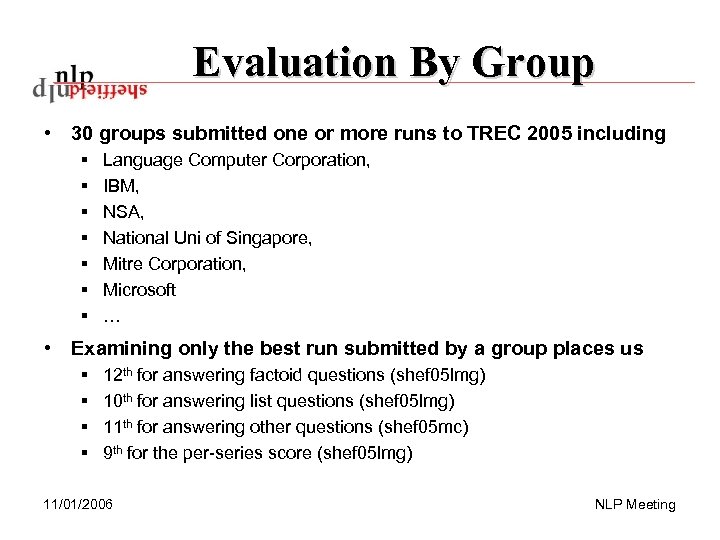

Evaluation By Group
• 30 groups submitted one or more runs to TREC 2005, including
  § Language Computer Corporation
  § IBM
  § NSA
  § National University of Singapore
  § MITRE Corporation
  § Microsoft
  § …
• Examining only the best run submitted by each group places us
  § 12th for answering factoid questions (shef05lmg)
  § 10th for answering list questions (shef05lmg)
  § 11th for answering other questions (shef05mc)
  § 9th for the per-series score (shef05lmg)

Answering Factoid Questions
• Most factoid QA systems use a three-component architecture
  § Question analysis
  § Document retrieval
  § Answer extraction
• We have developed two approaches to each component
• Question Analysis
  § Expected answer type analysis
  § Grammatical answer requirements
• Document Retrieval
  § Lucene
  § MadCow
• Answer Extraction
  § Matching on Logical Forms
  § Shallow Multi-Strategy Approach

Answering Factoid Questions
• shef05lmg
  § Expected answer type analysis
  § Lucene
  § Shallow Multi-Strategy Approach
• shef05mc
  § Grammatical answer requirements
  § MadCow
  § QA-LaSIE
• shef05lc
  § Grammatical answer requirements
  § Lucene
  § QA-LaSIE

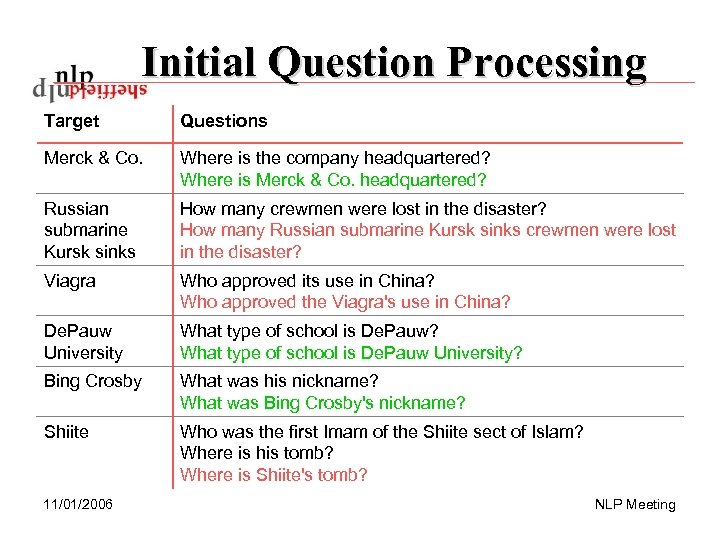

Initial Question Processing
• All our approaches to QA assume that each question can be both asked and answered in isolation.
• The introduction of target-based scenarios means that this is no longer true.
• We use a single approach, based on both pronominal and nominal coreference resolution, to merge the target into each question.

Initial Question Processing

| Target | Question | After merging |
|---|---|---|
| Merck & Co. | Where is the company headquartered? | Where is Merck & Co. headquartered? |
| Russian submarine Kursk sinks | How many crewmen were lost in the disaster? | How many Russian submarine Kursk sinks crewmen were lost in the disaster? |
| Viagra | Who approved its use in China? | Who approved the Viagra's use in China? |
| DePauw University | What type of school is DePauw? | What type of school is DePauw University? |
| Bing Crosby | What was his nickname? | What was Bing Crosby's nickname? |
| Shiite | Who was the first Imam of the Shiite sect of Islam? Where is his tomb? | Where is Shiite's tomb? |
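The pronominal half of this merging step can be illustrated with a deliberately naive sketch. It is not the coreference component used in the Sheffield systems: it simply substitutes the target for any pronoun it finds, does no nominal coreference ("the company", "the disaster"), and always treats "her" as possessive. The pronoun lists and the name `merge_target` are assumptions made for this example.

```python
import re

# Hypothetical, hand-picked pronoun lists; "her" is always treated as possessive.
POSSESSIVE_PRONOUNS = {"his", "her", "its", "their"}
PERSONAL_PRONOUNS = {"he", "she", "it", "they", "him", "them"}


def merge_target(target: str, question: str) -> str:
    """Replace pronouns in the question with the target (naive substitution)."""

    def substitute(match: re.Match) -> str:
        word = match.group(0)
        lowered = word.lower()
        if lowered in POSSESSIVE_PRONOUNS:
            return target + "'s"
        if lowered in PERSONAL_PRONOUNS:
            return target
        return word

    # Visit every alphabetic token; non-pronouns are returned unchanged.
    return re.sub(r"[A-Za-z]+", substitute, question)


print(merge_target("Bing Crosby", "What was his nickname?"))
print(merge_target("Shiite", "Where is his tomb?"))
```

The second call reproduces the failure case from the table: the pronoun refers to the answer of the previous question rather than to the target, so blind substitution produces "Where is Shiite's tomb?".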

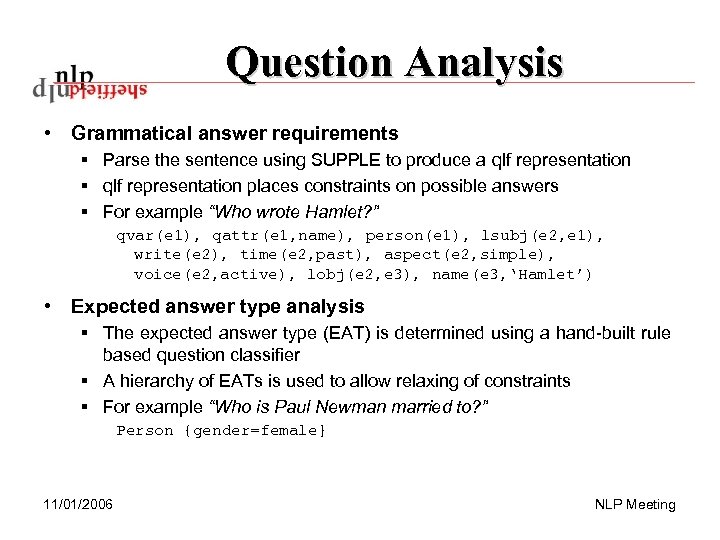

Question Analysis
• Grammatical answer requirements
  § Parse the sentence using SUPPLE to produce a QLF representation
  § The QLF representation places constraints on possible answers
  § For example, “Who wrote Hamlet?”:
    qvar(e1), qattr(e1, name), person(e1), lsubj(e2, e1), write(e2), time(e2, past), aspect(e2, simple), voice(e2, active), lobj(e2, e3), name(e3, ‘Hamlet’)
• Expected answer type analysis
  § The expected answer type (EAT) is determined using a hand-built, rule-based question classifier
  § A hierarchy of EATs is used to allow constraints to be relaxed
  § For example, “Who is Paul Newman married to?”:
    Person {gender=female}

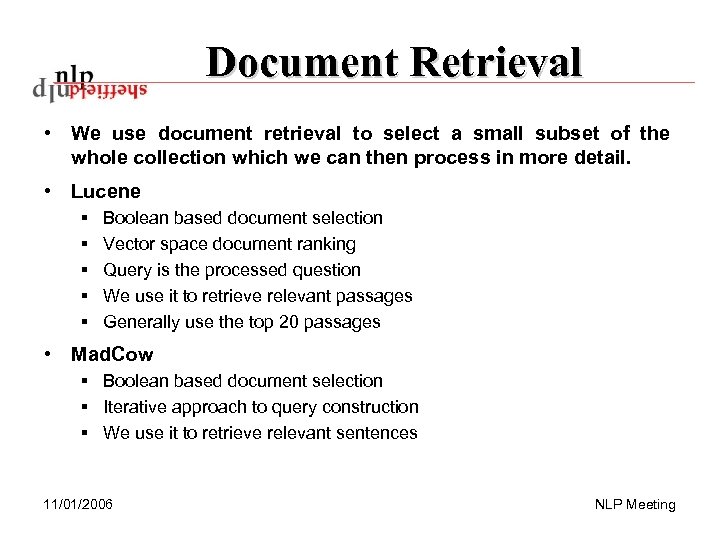

Document Retrieval
• We use document retrieval to select a small subset of the whole collection which we can then process in more detail.
• Lucene
  § Boolean-based document selection
  § Vector space document ranking
  § The query is the processed question
  § We use it to retrieve relevant passages
  § We generally use the top 20 passages
• MadCow
  § Boolean-based document selection
  § Iterative approach to query construction
  § We use it to retrieve relevant sentences

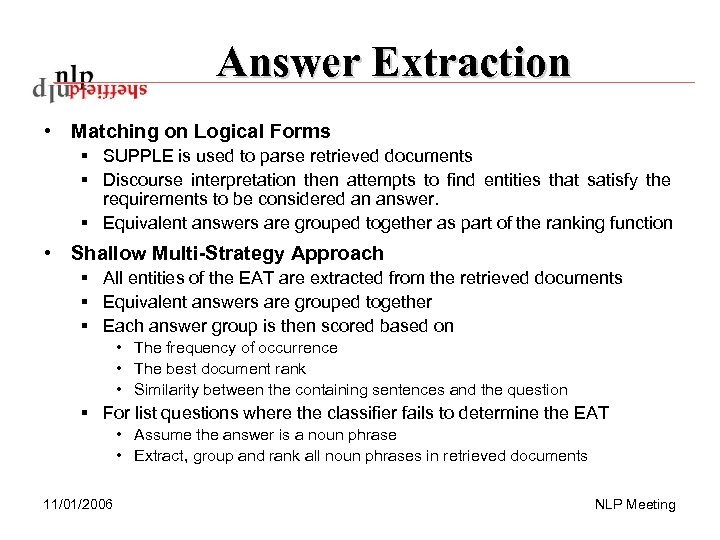

Answer Extraction
• Matching on Logical Forms
  § SUPPLE is used to parse retrieved documents
  § Discourse interpretation then attempts to find entities that satisfy the requirements to be considered an answer
  § Equivalent answers are grouped together as part of the ranking function
• Shallow Multi-Strategy Approach
  § All entities of the EAT are extracted from the retrieved documents
  § Equivalent answers are grouped together
  § Each answer group is then scored based on
    • The frequency of occurrence
    • The best document rank
    • Similarity between the containing sentences and the question
  § For list questions where the classifier fails to determine the EAT
    • Assume the answer is a noun phrase
    • Extract, group and rank all noun phrases in retrieved documents
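The grouping and scoring steps of the shallow approach can be sketched as follows. The slide names the three signals (frequency, best document rank, sentence/question similarity) but not how they are combined, so the additive score, the case-insensitive grouping, and the Jaccard word overlap used here are all assumptions for illustration, as are the names `Candidate`, `word_overlap` and `rank_answers`.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str       # extracted entity of the expected answer type
    doc_rank: int   # rank of the passage it came from (1 = best)
    sentence: str   # sentence containing the entity


def word_overlap(a: str, b: str) -> float:
    """Jaccard overlap between the lower-cased word sets of two strings."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def rank_answers(question: str, candidates: list[Candidate]) -> list[str]:
    """Group equivalent answers, score each group, return answers best first."""
    groups = defaultdict(list)
    for cand in candidates:
        # Assumed equivalence test: identical after trimming and lower-casing.
        groups[cand.text.strip().lower()].append(cand)

    scored = []
    for members in groups.values():
        frequency = len(members)
        best_rank = min(m.doc_rank for m in members)
        similarity = max(word_overlap(question, m.sentence) for m in members)
        # Assumed combination: a simple unweighted sum of the three signals.
        score = frequency + 1.0 / best_rank + similarity
        scored.append((score, members[0].text))

    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [text for _, text in scored]
```

For a factoid question only the first element would be returned; for a list question the top few groups could be returned instead.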

Answering Definition Questions
• Two different systems for answering definition questions
  § Bare Target + Reduce + Filter Approach
  § Target Enrichment + Filter Approach
• Both approaches can be used with either Lucene or MadCow
• shef05lmg
  § Bare Target + Reduce + Filter Approach
  § Lucene
• shef05mc
  § Target Enrichment + Filter Approach
  § MadCow
• shef05lc
  § Target Enrichment + Filter Approach
  § Lucene

Bare Target + Reduce + Filter
• The target is processed to determine the focus and an optional qualification. For example, “Abraham in the Old Testament”:
  § Focus: Abraham
  § Qualification: Old Testament
• Relevant sentences (those containing the focus) are retrieved
• Sentences are reduced by removing redundant phrases
• A two-stage filtering process removes duplicate information
  § Two sentences are treated as equivalent if they overlap by 70% at the word level
  § Or if the sum of their overlap over increasing n-gram lengths passes a threshold
• Keep finding relevant sentences until either
  § There are no more sentences
  § The definition length reaches 4000 non-whitespace characters
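The first filtering stage and the length cap can be sketched as below. The slide gives the 70% threshold and the 4000-character limit but not how overlap is normalised, so measuring it against the shorter sentence's distinct words is an assumption, as is stopping before the limit would be exceeded. The n-gram stage is omitted, and the function names are hypothetical.

```python
def word_overlap(a: str, b: str) -> float:
    """Share of the shorter sentence's distinct words also found in the other."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / min(len(words_a), len(words_b))


def non_whitespace_length(text: str) -> int:
    """Number of non-whitespace characters, the unit used for the length limit."""
    return sum(1 for ch in text if not ch.isspace())


def build_definition(sentences: list[str], threshold: float = 0.7,
                     max_chars: int = 4000) -> list[str]:
    """Keep sentences in order, skipping near-duplicates, until the cap is hit."""
    kept: list[str] = []
    total = 0
    for sentence in sentences:
        # Stage-one filter: drop a sentence equivalent to one already kept.
        if any(word_overlap(sentence, other) >= threshold for other in kept):
            continue
        length = non_whitespace_length(sentence)
        if total + length > max_chars:
            break  # assumed behaviour: stop rather than truncate a sentence
        kept.append(sentence)
        total += length
    return kept
```

Because sentences are visited in retrieval order, the first of any group of near-duplicates is the one that survives.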

Target Enrichment + Filter
• The focus of the target is determined and used to generate patterns
  § X is a
  § such as X
• Relevant texts are retrieved from “trusted sources”
  § WordNet, the online version of Britannica, the web in general
• Highly co-occurring terms are extracted from these texts using the generated patterns
• Boolean retrieval is then used to locate sentences containing the target
• Sentences are then grouped and ranked based on their similarity to each other and to the mined terms
• Maximum definition size is 14 nuggets or 4000 non-whitespace characters

Conclusions
• Our best-performing system performs above average when independently evaluated.
• TREC is becoming harder each year
  § We keep up (9th in both 2004 and 2005)
  § We don’t significantly improve
• We have developed multiple approaches to QA
  § At least two approaches to all three components of factoid QA
  § Two different approaches to definitional QA
• We assume each question can be asked in isolation
  § As of TREC 2004 this is not true
  § We need a better strategy for dealing with a question series

Future Work
• Participation in TREC 2006
  § We don’t yet know exactly what the format will be
  § We assume target-based questions like 2005
• There is currently no funded QA research taking place in Sheffield
  § We rely on those with an interest contributing whatever time they can
  § Extra people are always welcome
  § If we start early in the year, it will be less stressful in August!
• Is there enough interest to (re-)start a QA reading/work group?

Any Questions?

Bibliography
Dan Moldovan, Sanda Harabagiu, Marius Paşca, Rada Mihalcea, Richard Goodrum, Roxana Gîrju and Vasile Rus. LASSO: A Tool for Surfing the Answer Net. In Proceedings of the 8th Text Retrieval Conference, 1999.
Rohini Srihari and Wei Li. Information Extraction Supported Question Answering. In Proceedings of the 8th Text Retrieval Conference, 1999.
Ellen Voorhees. The TREC-8 Question Answering Track Report. In Proceedings of the 8th Text Retrieval Conference, 1999.
Ellen Voorhees. Overview of the TREC 2002 Question Answering Track. In Proceedings of the 11th Text Retrieval Conference, 2002.
Ellen Voorhees. Overview of the TREC 2003 Question Answering Track. In Proceedings of the 12th Text Retrieval Conference, 2003.
Ellen Voorhees. Overview of the TREC 2004 Question Answering Track. In Proceedings of the 13th Text Retrieval Conference, 2004.
