2232432f02751600aa73af165631bafb.ppt

- Number of slides: 40

QuakeSim/iSERVO

IRIS/UNAVCO Web Services Workshop

Andrea Donnellan, Jet Propulsion Laboratory

June 8, 2005

QuakeSim

- Under development in collaboration with researchers at JPL, UC Davis, USC, UC Irvine, and Brown University.
- Geoscientists develop simulation codes, analysis and visualization tools.
- Need a way to bind distributed codes, tools, and data sets.
- Need a way to deliver it to a larger audience
  - Instead of downloading and installing the code, use it as a remote service.

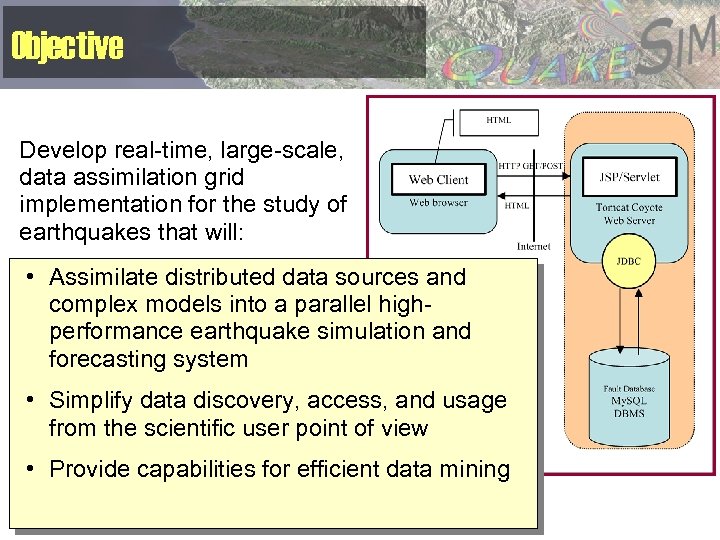

Objective

Develop a real-time, large-scale, data assimilation grid implementation for the study of earthquakes that will:

- Assimilate distributed data sources and complex models into a parallel high-performance earthquake simulation and forecasting system
- Simplify data discovery, access, and usage from the scientific user point of view
- Provide capabilities for efficient data mining

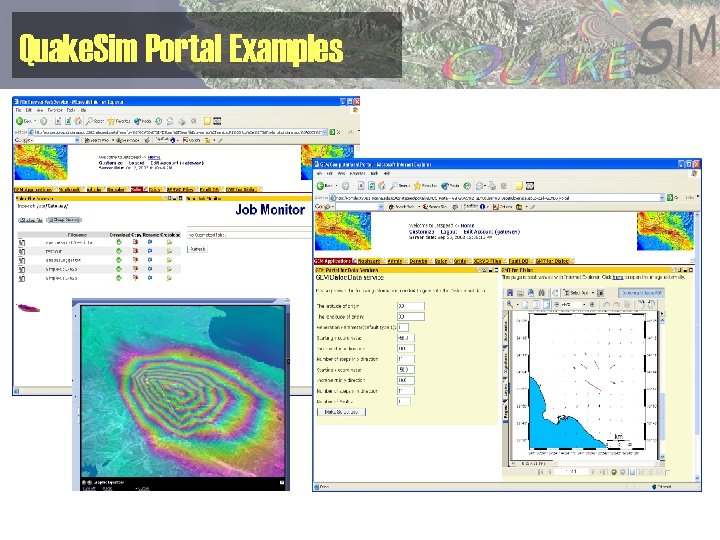

QuakeSim Portal Examples

Philosophy

- Store simulated and observed data.
- Archive simulation data with original simulation code and analysis tools.
- Access heterogeneous distributed data through cooperative federated databases.
- Couple distributed data sources, applications, and hardware resources through an XML-based Web Services framework.
- Users access the services (and thus distributed resources) through Web browser-based Problem Solving Environment clients.
- The Web services approach defines standard, programming language-independent application programming interfaces, so non-browser client applications may also be built.

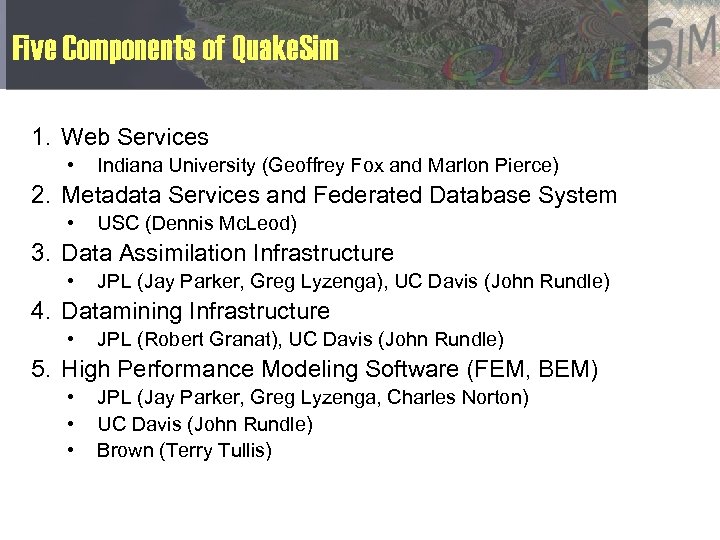

Five Components of QuakeSim

1. Web Services
   - Indiana University (Geoffrey Fox and Marlon Pierce)
2. Metadata Services and Federated Database System
   - USC (Dennis McLeod)
3. Data Assimilation Infrastructure
   - JPL (Jay Parker, Greg Lyzenga), UC Davis (John Rundle)
4. Datamining Infrastructure
   - JPL (Robert Granat), UC Davis (John Rundle)
5. High Performance Modeling Software (FEM, BEM)
   - JPL (Jay Parker, Greg Lyzenga, Charles Norton)
   - UC Davis (John Rundle)
   - Brown (Terry Tullis)

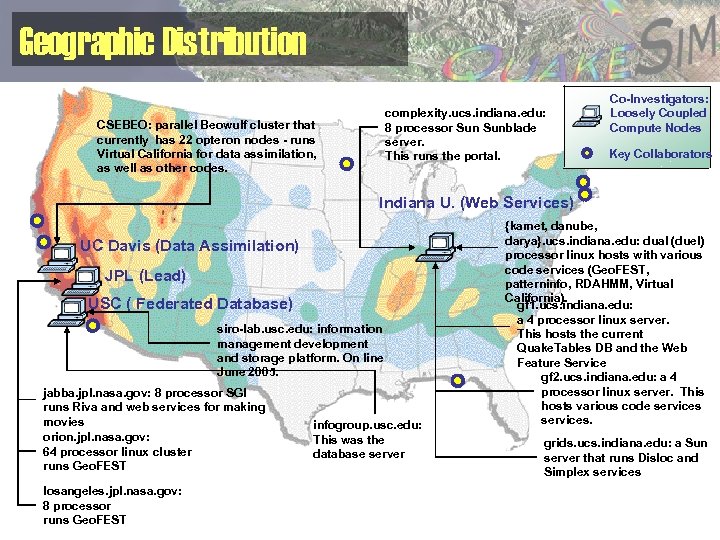

Geographic Distribution

Loosely Coupled Compute Nodes

Co-Investigators / Key Collaborators: Indiana U. (Web Services), UC Davis (Data Assimilation), JPL (Lead), USC (Federated Database)

- CSEBEO: parallel Beowulf cluster that currently has 22 Opteron nodes. Runs Virtual California for data assimilation, as well as other codes.
- complexity.ucs.indiana.edu: 8-processor Sun Blade server. This runs the portal.
- siro-lab.usc.edu: information management development and storage platform. Online June 2005.
- jabba.jpl.nasa.gov: 8-processor SGI. Runs RIVA and web services for making movies.
- orion.jpl.nasa.gov: 64-processor Linux cluster. Runs GeoFEST.
- losangeles.jpl.nasa.gov: 8-processor machine. Runs GeoFEST.
- infogroup.usc.edu: this was the database server.
- {kamet, danube, darya}.ucs.indiana.edu: dual-processor Linux hosts with various code services (GeoFEST, patterninfo, RDAHMM, Virtual California).
- gf1.ucs.indiana.edu: a 4-processor Linux server. This hosts the current QuakeTables DB and the Web Feature Service.
- gf2.ucs.indiana.edu: a 4-processor Linux server. This hosts various code services.
- grids.ucs.indiana.edu: a Sun server that runs the Disloc and Simplex services.

Web Services

- Build clients in the following styles:
  - Portal clients: ubiquitous, can combine
  - Fancier GUI client applications
  - Embed Web service client stubs (library routines) into application code
    - Code can make direct calls to remote data sources, etc.
- Regardless of the client one builds, the services are the same in all cases:
  - My portal and your application code may each use the same service to talk to the same database.
- So we need to concentrate on services and let clients bloom as they may:
  - Client applications (portals, GUIs, etc.) will have a much shorter lifecycle than service interface definitions, if we do our job correctly.
  - Client applications that are locked into particular services, use proprietary data formats and wire protocols, etc., are at risk.

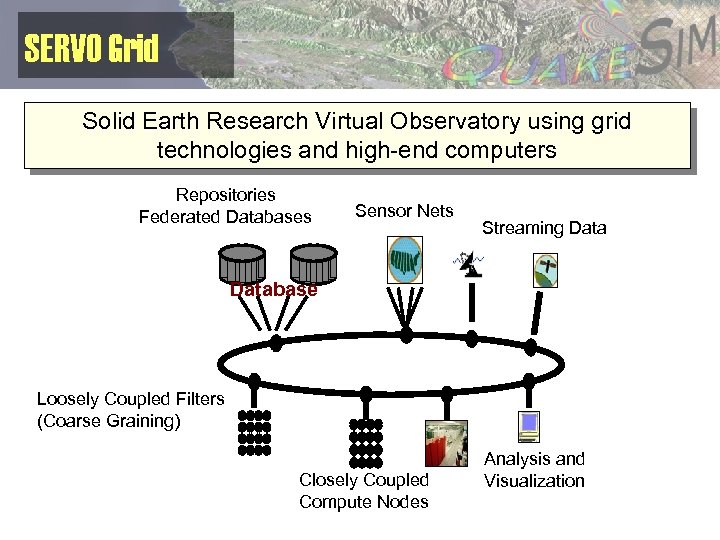

SERVO Grid

Solid Earth Research Virtual Observatory using grid technologies and high-end computers

Diagram labels: Repositories; Federated Databases; Sensor Nets; Streaming Database; Loosely Coupled Filters (Coarse Graining); Closely Coupled Compute Nodes; Analysis and Visualization

NASA’s Main Interest

Developing the necessary data assimilation and modeling infrastructure for future InSAR missions.

InSAR is the fourth component of EarthScope.

iSERVO Web Services

- Job submission: supports remote batch and shell invocations
  - Used to execute simulation codes (VC suite, GeoFEST, etc.), mesh generation (Akira/Apollo), and visualization packages (RIVA, GMT).
- File management:
  - Uploading, downloading, backend crossloading (i.e., moving files between remote servers)
  - Remote copies, renames, etc.
- Job monitoring
- Apache Ant-based remote service orchestration
  - For coupling related sequences of remote actions, such as RIVA movie generation.
- Database services: support SQL queries
- Data services: support interactions with XML-based fault and surface observation data.
  - For simulation-generated faults (i.e., from Simplex)
  - XML data model being adopted for common formats, with translation services to “legacy” formats.
  - Migrating to Geography Markup Language (GML) descriptions.
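The orchestration bullet above couples a sequence of remote actions in dependency order. The following is a minimal sketch of that idea only, not the iSERVO implementation: the target names and the `run_remote` stub are hypothetical, and Python's standard `graphlib` module stands in for Ant's `depends` resolution.

```python
from graphlib import TopologicalSorter

# Hypothetical targets for a movie-generation workflow. Each target maps to
# the targets it depends on, much as an Ant build file declares "depends".
TARGETS = {
    "stage_input": [],
    "run_simulation": ["stage_input"],
    "render_frames": ["run_simulation"],
    "encode_movie": ["render_frames"],
    "notify_user": ["encode_movie"],
}


def run_remote(target, log):
    """Stand-in for a remote service invocation; it only records the call."""
    log.append(target)


def orchestrate(targets, goal):
    """Run `goal` and everything it depends on, dependencies first."""
    # Collect only the targets reachable from the goal.
    needed, stack = set(), [goal]
    while stack:
        name = stack.pop()
        if name not in needed:
            needed.add(name)
            stack.extend(targets[name])
    graph = {name: targets[name] for name in needed}
    log = []
    for name in TopologicalSorter(graph).static_order():
        run_remote(name, log)
    return log


if __name__ == "__main__":
    print(orchestrate(TARGETS, "encode_movie"))
```

Requesting `encode_movie` runs the four targets it needs and skips `notify_user`, which nothing in that chain depends on.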

Our Approach to Building Grid Services

- There are several competing visions for Grid Web Services.
  - WSRF (US) and WS-I+ (UK) are most prominent
- We follow the WS-I+ approach
  - Build services on proven basic standards (WSDL, SOAP, UDDI)
  - Expand this core as necessary
    - GIS standards implemented as Web Services
    - Service orchestration, lightweight metadata management

Grid Services Approach

- We stress innovative implementations
  - Web Services are essentially message-based.
  - SERVO applications require non-trivial data management (both archives and real-time streams).
  - We can support both streams and events through NaradaBrokering messaging middleware.
  - HPSearch uses and manages NaradaBrokering events and data streams for service orchestration.
  - Upcoming improvements to the Web Feature Service will be based on streaming to improve performance.
  - Sensor Grid work is being based on NaradaBrokering.
- Core NaradaBrokering development stresses the support for Web Service standards
  - WS-Reliability, WS-Eventing, WS-Security

NaradaBrokering: Managing Streams

- NaradaBrokering
  - Messaging infrastructure for collaboration, peer-to-peer, and Grid applications
  - Implements high-performance protocols (message transit time of 1 to 2 ms per hop)
  - Order-preserving, optimized message transport with QoS and security profiles for sent and received messages
  - Support for different underlying protocols such as TCP, UDP, Multicast, RTP
  - Discovery Service to locate nearest brokers

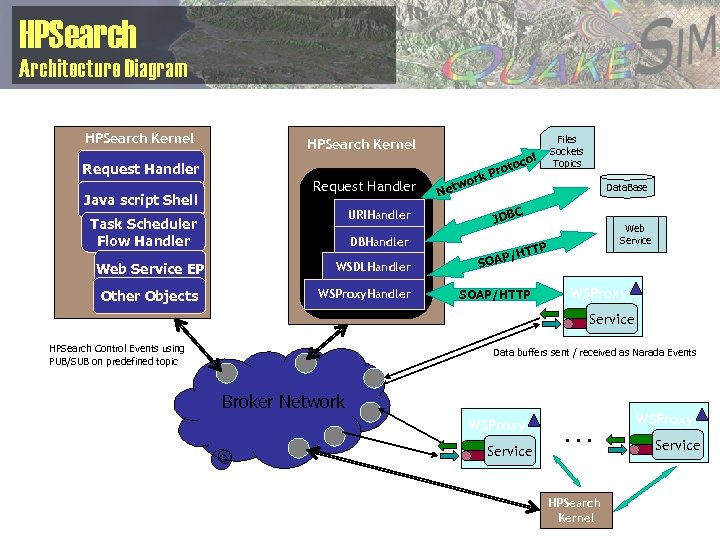

HPSearch Architecture Diagram

Diagram labels: HPSearch Kernel; Request Handler; JavaScript Shell; Task Scheduler; Flow Handler; URIHandler; DBHandler; WSDLHandler; WSProxyHandler; Web Service EP; Other Objects; Files; Sockets; Topics; Network Protocol; DataBase (JDBC); Web Service (SOAP/HTTP); WSProxy Service; Broker Network

- HPSearch control events use PUB/SUB on a predefined topic.
- Data buffers are sent and received as Narada events.
- The broker network connects multiple HPSearch Kernels and WSProxy Services.

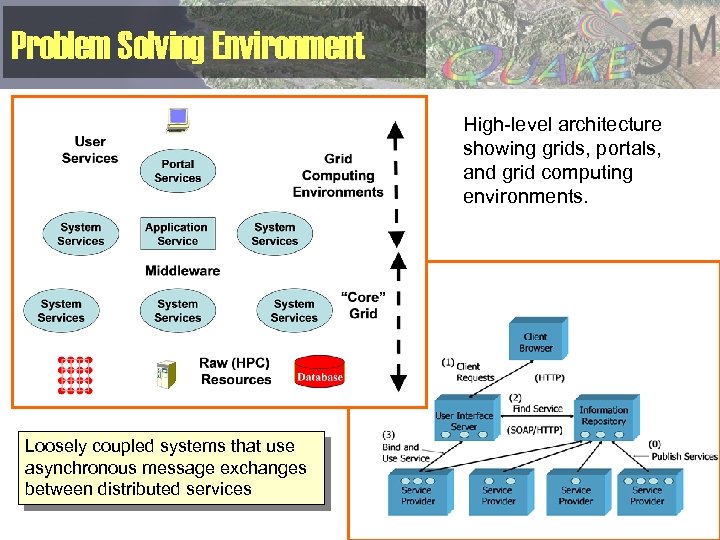

Problem Solving Environment

High-level architecture showing grids, portals, and grid computing environments.

Loosely coupled systems that use asynchronous message exchanges between distributed services.

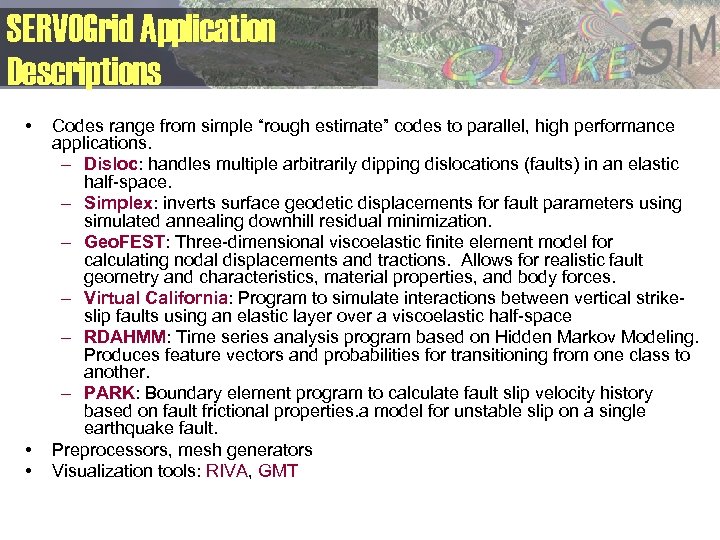

SERVOGrid Application Descriptions

- Codes range from simple “rough estimate” codes to parallel, high performance applications.
  - Disloc: handles multiple arbitrarily dipping dislocations (faults) in an elastic half-space.
  - Simplex: inverts surface geodetic displacements for fault parameters using simulated annealing downhill residual minimization.
  - GeoFEST: three-dimensional viscoelastic finite element model for calculating nodal displacements and tractions. Allows for realistic fault geometry and characteristics, material properties, and body forces.
  - Virtual California: program to simulate interactions between vertical strike-slip faults using an elastic layer over a viscoelastic half-space.
  - RDAHMM: time series analysis program based on Hidden Markov Modeling. Produces feature vectors and probabilities for transitioning from one class to another.
  - PARK: boundary element program to calculate fault slip velocity history based on fault frictional properties; a model for unstable slip on a single earthquake fault.
- Preprocessors, mesh generators
- Visualization tools: RIVA, GMT
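To make the Simplex description concrete, here is a toy illustration of inverting observations for model parameters by simulated annealing. It is a sketch under loud assumptions and is not Simplex's algorithm: the forward model is a straight line rather than an elastic dislocation model, and the cooling schedule, step size, and function names are all invented for the example.

```python
import math
import random


def forward(params, xs):
    """Toy forward model: displacement as a straight line in x (not Disloc)."""
    slope, offset = params
    return [slope * x + offset for x in xs]


def residual(params, xs, observed):
    """Sum of squared differences between modeled and observed displacements."""
    return sum((m - o) ** 2 for m, o in zip(forward(params, xs), observed))


def anneal(xs, observed, start, steps=5000, t0=1.0, seed=0):
    """Minimize the residual by simulated annealing.

    Returns (best_params, best_cost), the best state visited during the run.
    """
    rng = random.Random(seed)
    current, current_cost = list(start), residual(start, xs, observed)
    best, best_cost = list(current), current_cost
    for k in range(steps):
        temp = t0 * (1.0 - k / steps) + 1e-9  # linear cooling schedule
        trial = [p + rng.gauss(0.0, 0.1) for p in current]
        trial_cost = residual(trial, xs, observed)
        # Always accept downhill moves; accept uphill moves with a
        # Boltzmann probability that shrinks as the temperature drops.
        if trial_cost < current_cost or rng.random() < math.exp(
            (current_cost - trial_cost) / temp
        ):
            current, current_cost = trial, trial_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
    return best, best_cost


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0]
    observed = forward([2.0, -1.0], xs)  # synthetic "observations"
    print(anneal(xs, observed, start=[0.0, 0.0]))
```

Because the best visited state is tracked separately from the current state, the returned residual is never worse than the starting one, even when uphill moves are accepted along the way.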

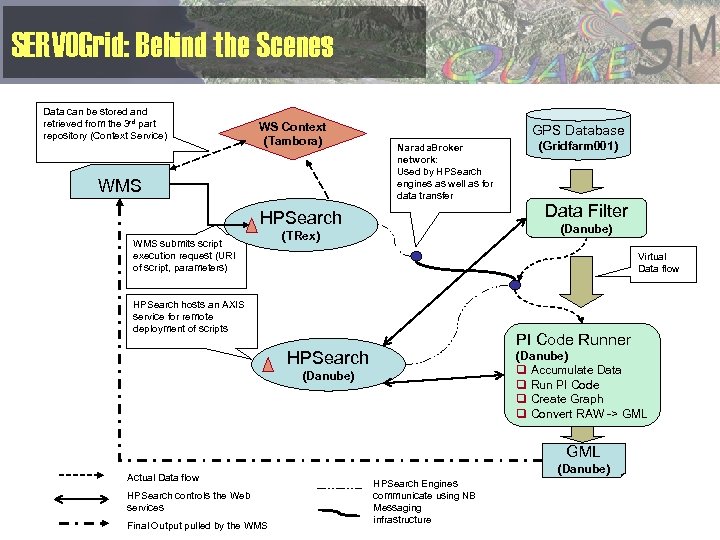

SERVOGrid: Behind the Scenes

- Data can be stored in and retrieved from the third-party repository (Context Service): WS-Context (Tambora).
- NaradaBroker network: used by HPSearch engines as well as for data transfer.
- WMS submits a script execution request (URI of script, parameters) to HPSearch.
- HPSearch hosts an AXIS service for remote deployment of scripts.
- HPSearch controls the Web services.
- PI Code Runner (Danube):
  - Accumulate data
  - Run PI code
  - Create graph
  - Convert RAW -> GML
- Final output is pulled by the WMS.
- HPSearch engines communicate using the NB messaging infrastructure.

Other diagram labels: WMS; GPS Database; Data Filter; GML; hosts Tambora, Gridfarm001, Danube, TRex. Legend: virtual data flow, actual data flow.

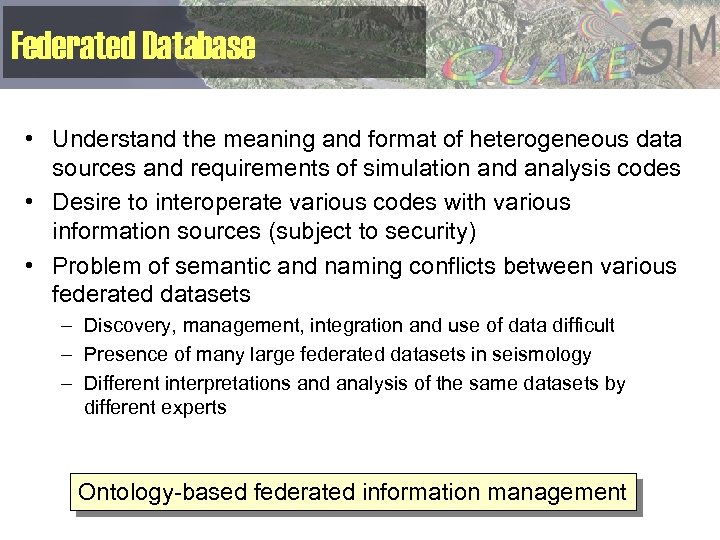

Federated Database

- Understand the meaning and format of heterogeneous data sources and requirements of simulation and analysis codes
- Desire to interoperate various codes with various information sources (subject to security)
- Problem of semantic and naming conflicts between various federated datasets
  - Discovery, management, integration, and use of data difficult
  - Presence of many large federated datasets in seismology
  - Different interpretations and analysis of the same datasets by different experts

Ontology-based federated information management

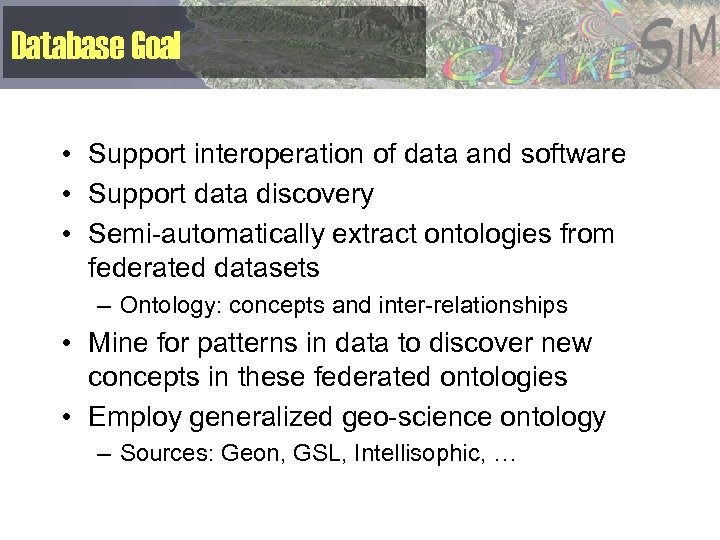

Database Goal

- Support interoperation of data and software
- Support data discovery
- Semi-automatically extract ontologies from federated datasets
  - Ontology: concepts and inter-relationships
- Mine for patterns in data to discover new concepts in these federated ontologies
- Employ generalized geo-science ontology
  - Sources: Geon, GSL, Intellisophic, …

Database Approach

- A semi-automatic ontology extraction methodology from the federated relational database schemas
- Devising a semi-automated lexical database system to obtain inter-relationships with users’ feedback
- Providing tools to mine for new concepts and inter-relationships
- Ontronic: a tool for federated ontology-based management, sharing, discovery
- Interface to Scientist Portal

Evaluation Plan

- Initially employ three datasets:
  - QuakeTables Fault Database (QuakeSim)
  - The Southern California Earthquake Data Center (SCEDC)
  - The Southern California Seismology Network (SCSN)
- From these large-scale, inherently heterogeneous, federated databases we are evaluating
  - Semi-automatic extraction
  - Checking correctness
  - Evaluating mapping algorithm

Where Is the Data?

- QuakeTables Fault Database
  - SERVO’s fault repository for California.
  - Compatible with GeoFEST, Disloc, and Virtual California
  - http://infogroup.usc.edu:8080/public.html
- GPS data sources and formats (RDAHMM and others)
  - JPL: ftp://sideshow.jpl.nasa.gov/pub/mbh
  - SOPAC: ftp://garner.ucsd.edu/pub/timeseries
  - USGS: http://pasadena.wr.usgs.gov/scign/Analysis/plotdata/
- Seismic event data (RDAHMM and others)
  - SCSN: http://www.scec.org/ftp/catalogs/SCSN
  - SCEDC: http://www.scecd.scec.org/ftp/catalogs/SCEC_DC
  - Dinger-Shearer: http://www.scecdc.org/ftp/catalogs/dingershearer/dinger-shearer.catalog
  - Hauksson: http://www.scecdc.scec.org/ftp/catalogs/hauksson/Socal

This is the raw material for our data services in SERVO.

Geographical Information System Services as a Data Grid

- Data Grid components of SERVO are implemented using standard GIS services.
  - Use Open Geospatial Consortium standards
  - Maximize reusability in future SERVO projects
  - Provide downloadable GIS software to the community as a side effect of SERVO research.
- Implemented two cornerstone standards
  - Web Feature Service (WFS): data service for storing abstract map features
    - Supports queries
    - Faults, GPS, seismic records
  - Web Map Service (WMS): generates interactive maps from WFSs and other WMSs.
    - Maps are overlays
    - Can also extract features (faults, seismic events, etc.) from user GUIs to drive problems such as the PI code and (in near future) GeoFEST, VC.
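As an illustration of what a WFS query looks like, the sketch below builds an OGC WFS 1.0.0 `GetFeature` request with a bounding-box filter. The slides do not state which WFS version or feature type names the SERVO service uses, so the version, the `fault` type name, and the `geometry` property name are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

# Namespace URIs defined by the OGC WFS 1.0.0, Filter 1.0, and GML 2 specifications.
WFS = "http://www.opengis.net/wfs"
OGC = "http://www.opengis.net/ogc"
GML = "http://www.opengis.net/gml"


def build_getfeature(type_name, geom_property, bbox, srs="EPSG:4326"):
    """Return a WFS 1.0.0 GetFeature request (as a string) with a BBOX filter.

    bbox is (min_lon, min_lat, max_lon, max_lat).
    """
    for prefix, uri in (("wfs", WFS), ("ogc", OGC), ("gml", GML)):
        ET.register_namespace(prefix, uri)
    root = ET.Element(f"{{{WFS}}}GetFeature", {"service": "WFS", "version": "1.0.0"})
    query = ET.SubElement(root, f"{{{WFS}}}Query", {"typeName": type_name})
    flt = ET.SubElement(query, f"{{{OGC}}}Filter")
    box_filter = ET.SubElement(flt, f"{{{OGC}}}BBOX")
    ET.SubElement(box_filter, f"{{{OGC}}}PropertyName").text = geom_property
    box = ET.SubElement(box_filter, f"{{{GML}}}Box", {"srsName": srs})
    min_lon, min_lat, max_lon, max_lat = bbox
    coords = f"{min_lon},{min_lat} {max_lon},{max_lat}"
    ET.SubElement(box, f"{{{GML}}}coordinates").text = coords
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical feature type and geometry property; bounding box roughly
    # covering Southern California.
    print(build_getfeature("fault", "geometry", (-120, 32, -114, 36)))
```

The resulting XML is the payload only; how it is wrapped (for example, inside a SOAP message) depends on the service's published WSDL.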

Geographical Information System Services as a Data Grid

- Built these as Web Services
  - WSDL and SOAP: programming interfaces and messaging formats
  - You can work with the data and map services through programming APIs as well as browser interfaces.
  - Running demos and downloadable code are available from www.crisisgrid.org.
- We are currently working on these steps
  - Improving WFS performance
  - Integrating WMS clients with more applications
  - Making WMS clients publicly available and downloadable (as portlets).
  - Implementing SensorML for streaming, real-time data.

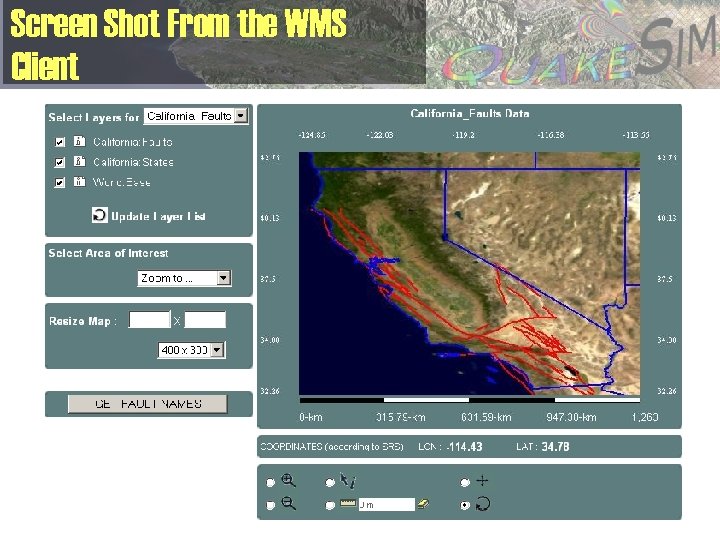

Screen Shot From the WMS Client

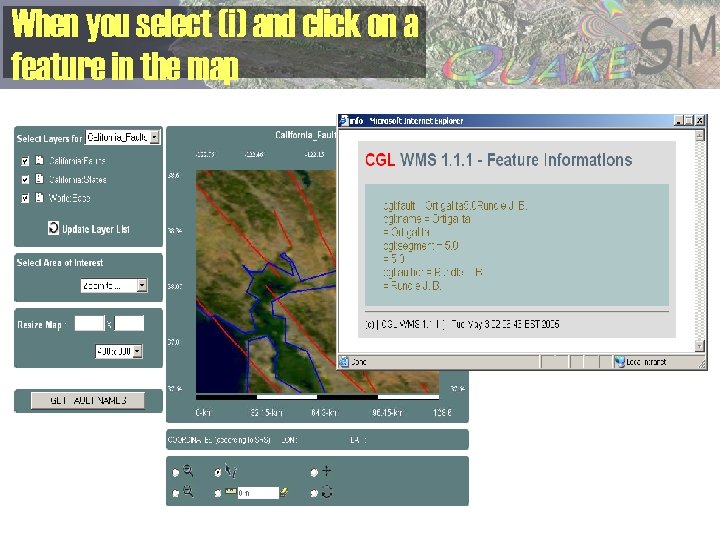

When you select (i) and click on a feature in the map

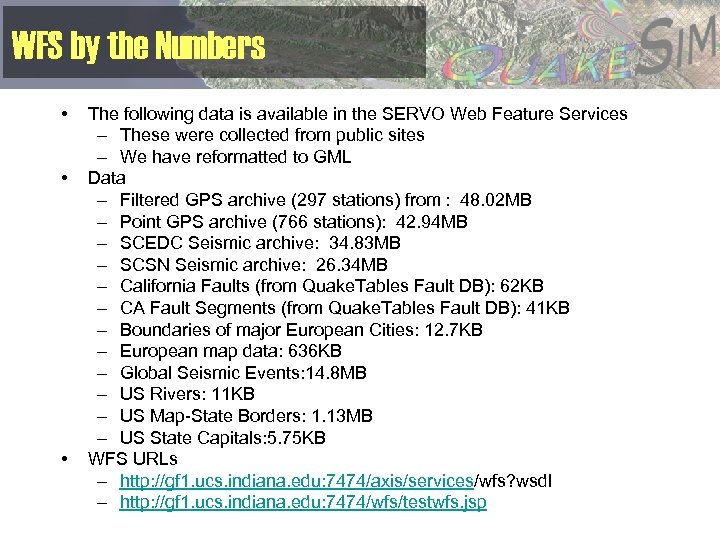

WFS by the Numbers

- The following data is available in the SERVO Web Feature Services
  - These were collected from public sites
  - We have reformatted to GML
- Data
  - Filtered GPS archive (297 stations): 48.02 MB
  - Point GPS archive (766 stations): 42.94 MB
  - SCEDC Seismic archive: 34.83 MB
  - SCSN Seismic archive: 26.34 MB
  - California Faults (from QuakeTables Fault DB): 62 KB
  - CA Fault Segments (from QuakeTables Fault DB): 41 KB
  - Boundaries of major European Cities: 12.7 KB
  - European map data: 636 KB
  - Global Seismic Events: 14.8 MB
  - US Rivers: 11 KB
  - US Map-State Borders: 1.13 MB
  - US State Capitals: 5.75 KB
- WFS URLs
  - http://gf1.ucs.indiana.edu:7474/axis/services/wfs?wsdl
  - http://gf1.ucs.indiana.edu:7474/wfs/testwfs.jsp

GeoFEST: Northridge Earthquake Example

- Select faults from database
- Generate and refine mesh
- Run finite element code
- Receive e-mail with URL of movie when run is complete

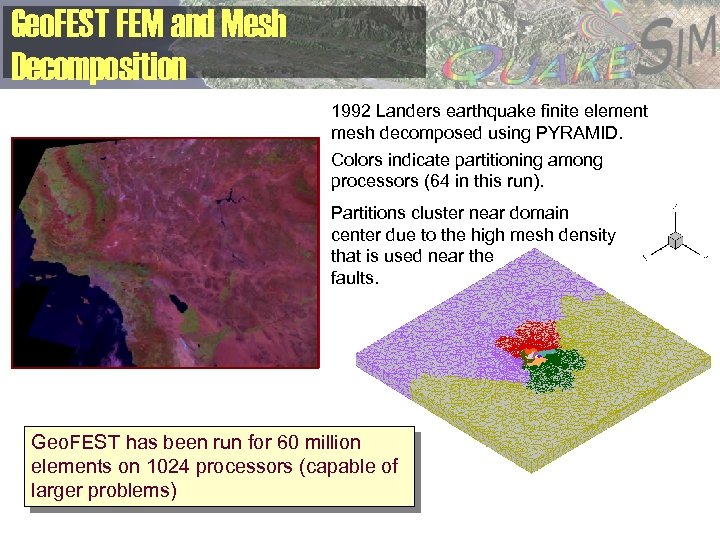

GeoFEST FEM and Mesh Decomposition

1992 Landers earthquake finite element mesh decomposed using PYRAMID. Colors indicate partitioning among processors (64 in this run). Partitions cluster near the domain center due to the high mesh density that is used near the faults.

GeoFEST has been run for 60 million elements on 1024 processors (capable of larger problems).

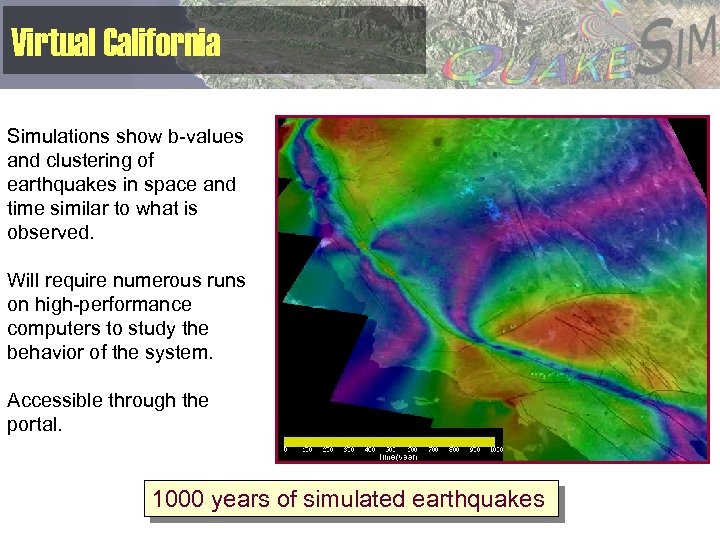

Virtual California

- Simulations show b-values and clustering of earthquakes in space and time similar to what is observed.
- Will require numerous runs on high-performance computers to study the behavior of the system.
- Accessible through the portal.

Figure caption: 1000 years of simulated earthquakes
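For readers unfamiliar with the b-value mentioned above, it is the slope of the Gutenberg-Richter frequency-magnitude relation, and it can be estimated from either a simulated or an observed catalog. The sketch below uses Aki's maximum-likelihood estimator with an optional bin-width correction; it is a generic illustration and makes no claim about how the QuakeSim team computed their values. The function and parameter names are invented for the example.

```python
import math


def b_value(magnitudes, completeness_mag, bin_width=0.0):
    """Aki maximum-likelihood b-value for events at or above completeness_mag.

    bin_width applies the usual half-bin correction for binned magnitudes
    (0.0 means magnitudes are treated as continuous).
    """
    used = [m for m in magnitudes if m >= completeness_mag]
    if not used:
        raise ValueError("no events at or above the completeness magnitude")
    mean_mag = sum(used) / len(used)
    denom = mean_mag - (completeness_mag - bin_width / 2.0)
    if denom <= 0:
        raise ValueError("mean magnitude must exceed the completeness magnitude")
    return math.log10(math.e) / denom


if __name__ == "__main__":
    # Tiny made-up catalog, for illustration only.
    print(b_value([3.0, 3.5, 4.0, 2.1], completeness_mag=3.0))
```

Running the same estimator over a simulated catalog and an observed one, with the same completeness magnitude, gives two numbers that can be compared directly.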

QuakeSim Users

- http://quakesim.jpl.nasa.gov
  - Click on the QuakeSim Portal tab
  - Create an account
  - Documentation can be found off the QuakeSim page
- We are looking for friendly users for beta testing (e-mail andrea.donnellan@jpl.nasa.gov if interested)
- Coming soon: tutorial classes
- quakesim@list.jpl.nasa.gov

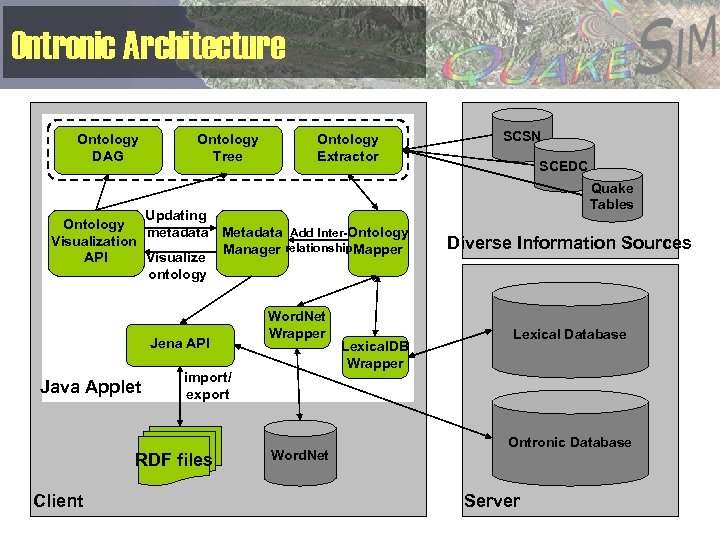

Ontronic Architecture

Diagram labels: Client (Java Applet); Visualization Manager (visualize ontology, add inter-ontology relationship, updating ontology metadata, import/export RDF files); Ontology DAG; Ontology Tree; Ontology Extractor; Mapper API; Jena API; Metadata; WordNet Wrapper; WordNet; LexicalDB Wrapper; Lexical Database; Diverse Information Sources (SCSN, SCEDC, QuakeTables); Ontronic Database Server

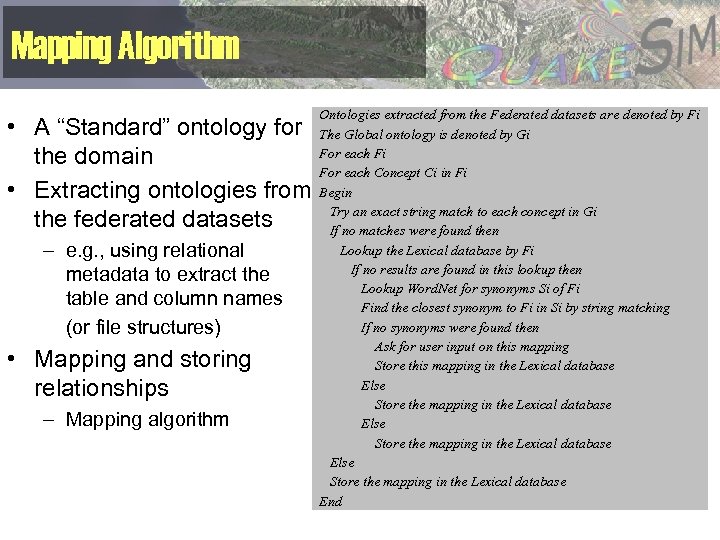

Mapping Algorithm

- A “standard” ontology for the domain
- Extracting ontologies from the federated datasets
  - e.g., using relational metadata to extract the table and column names (or file structures)
- Mapping and storing relationships
  - Mapping algorithm

Ontologies extracted from the federated datasets are denoted by Fi. The global ontology is denoted by Gi.

    For each Fi
      For each concept Ci in Fi
      Begin
        Try an exact string match to each concept in Gi
        If no matches were found then
          Look up the lexical database by Ci
          If no results are found in this lookup then
            Look up WordNet for synonyms Si of Ci
            Find the closest synonym to Ci in Si by string matching
            If no synonyms were found then
              Ask for user input on this mapping
              Store this mapping in the lexical database
            Else
              Store the mapping in the lexical database
      End
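The mapping steps above can be sketched in Python. This is one reading of the slide, not Ontronic's code: the lexical database is modeled as a plain dictionary, WordNet is replaced by a caller-supplied `synonyms_of` function, the user prompt by `ask_user`, and "closest synonym by string matching" is interpreted as picking, among synonyms that are also global concepts, the one most similar to the local name according to `difflib`.

```python
import difflib


def map_concepts(local_concepts, global_concepts, lexical_db, synonyms_of, ask_user):
    """Map each local concept name to a global concept name.

    lexical_db  : dict of previously stored mappings (updated in place)
    synonyms_of : callable returning candidate synonyms (stand-in for WordNet)
    ask_user    : callable returning the user's chosen global concept
    """
    mapping = {}
    for concept in local_concepts:
        if concept in global_concepts:  # 1. exact string match
            mapping[concept] = concept
            continue
        if concept in lexical_db:  # 2. mapping stored earlier
            mapping[concept] = lexical_db[concept]
            continue
        # 3. synonyms that are themselves global concepts
        candidates = [s for s in synonyms_of(concept) if s in global_concepts]
        if candidates:
            target = difflib.get_close_matches(concept, candidates, n=1, cutoff=0.0)[0]
        else:  # 4. no usable synonym: fall back to the user
            target = ask_user(concept)
        lexical_db[concept] = target  # remember the result for next time
        mapping[concept] = target
    return mapping


if __name__ == "__main__":
    # All names below are made up for the example.
    global_concepts = {"fault", "earthquake"}
    lexical_db = {"eq": "earthquake"}
    synonyms = {"rupture": ["fault", "break"]}
    result = map_concepts(
        ["fault", "eq", "rupture", "xyz"],
        global_concepts,
        lexical_db,
        lambda c: synonyms.get(c, []),
        lambda c: "fault",  # pretend the user always answers "fault"
    )
    print(result)
```

Storing every resolved mapping back into the lexical database means that a concept that required WordNet or user input once is answered by step 2 on later runs.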

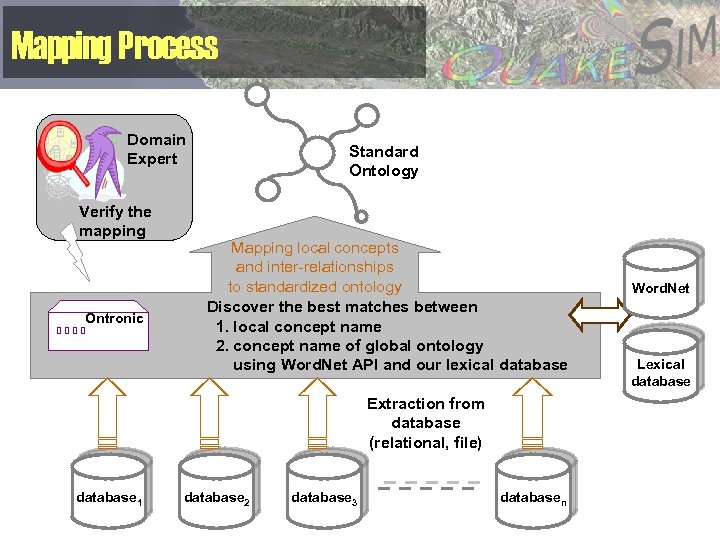

Mapping Process

- Extraction from databases (relational, file): database 1, database 2, database 3, ..., database n
- Ontronic: mapping local concepts and inter-relationships to the standardized ontology
- Discover the best matches between (1) the local concept name and (2) the concept name of the global ontology, using the WordNet API and our lexical database
- Domain expert: verify the mapping

Other diagram labels: Standard Ontology; WordNet; Lexical database

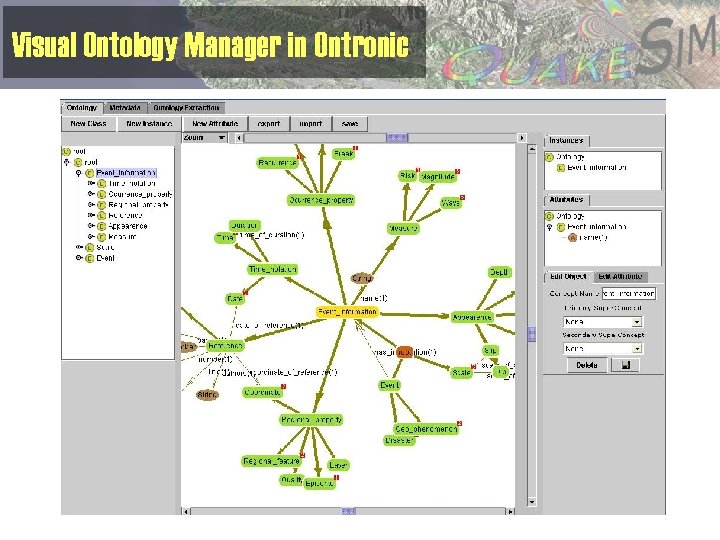

Visual Ontology Manager in Ontronic

Metadata and Information Services

- We like the OGC, but their metadata and information services are too specialized to GIS data.
  - Web Service standards should be used instead
- For basic information services, we developed an enhanced UDDI
  - UDDI provides a registry for service URLs and queryable metadata.
  - We extended its data model to include GIS capabilities.xml files.
    - You can query capabilities of services.
  - We added leasing to services
    - Clean up obsolete entries when the lease expires.
- We are also implementing WS-Context
  - Store and manage short-lived metadata and state information
  - Store “personalized” metadata for specific users and groups
  - Used to manage shared state information in distributed applications
- See http://grids.ucs.indiana.edu/~maktas/fthpis/
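The leasing idea above (entries disappear unless their lease is renewed) can be shown with a small in-memory registry. This is a sketch only: it does not use the UDDI API, and the class name, method names, and injectable clock are assumptions made so the behavior is easy to follow.

```python
import time


class LeasedRegistry:
    """Service registry whose entries expire unless their lease is renewed."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # callable returning the current time in seconds
        self._entries = {}  # service name -> (url, expiry time)

    def register(self, name, url, lease_seconds):
        """Add or replace an entry with a fresh lease."""
        self._entries[name] = (url, self._clock() + lease_seconds)

    def renew(self, name, lease_seconds):
        """Extend the lease of an existing entry (KeyError if it is gone)."""
        url, _ = self._entries[name]
        self._entries[name] = (url, self._clock() + lease_seconds)

    def purge_expired(self):
        """Remove entries whose lease has run out; return their names."""
        now = self._clock()
        expired = [n for n, (_, expiry) in self._entries.items() if expiry <= now]
        for name in expired:
            del self._entries[name]
        return expired

    def lookup(self, name):
        """Return the URL for a live entry, or None if absent or expired."""
        self.purge_expired()
        entry = self._entries.get(name)
        return entry[0] if entry else None


if __name__ == "__main__":
    registry = LeasedRegistry()
    registry.register("wfs", "http://example.org/wfs", lease_seconds=60)
    print(registry.lookup("wfs"))
```

A service that stops renewing its lease simply drops out of lookups once the lease expires, which is how obsolete entries get cleaned up without an explicit unregister call.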

Service Orchestration with HPSearch

- GIS data services, code execution services, and information services need to be connected into specific aggregate application services.
- HPSearch: CGL’s project to implement service management
  - Uses NaradaBrokering to manage events and stream-based data flow
- HPSearch and SERVO applications
  - We have integrated this with RDAHMM and Pattern Informatics
    - These are “classic” workflow chains
  - UC Davis has redesigned the Manna code to use HPSearch for distributed worker management as a prototype.
  - More interesting work will be to integrate HPSearch with VC.
- This is described in greater detail in the performance analysis presentation and related documents.
  - See also supplemental slides.

HPSearch and NaradaBrokering

- HPSearch uses NaradaBrokering to route data streams
  - Each stream is represented by a topic name
  - Components subscribe / publish to a specified topic
- The WSProxy component automatically maps topics to input / output streams
- Each write(byte[] buffer) and byte[] read() call is mapped to a NaradaBrokering event
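The topic-to-stream mapping described above can be illustrated with a small in-memory publish/subscribe sketch. It does not use the NaradaBrokering or HPSearch APIs; the `Broker`, `TopicOutputStream`, and `TopicInputStream` classes are hypothetical stand-ins that show how each write becomes one event on a topic and each read consumes one.

```python
from collections import defaultdict


class Broker:
    """In-memory topic-based publish/subscribe broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload):
        # Deliver the event to every subscriber of this topic.
        for callback in self._subscribers[topic]:
            callback(payload)


class TopicOutputStream:
    """Each write(buffer) becomes one event published on the stream's topic."""

    def __init__(self, broker, topic):
        self._broker, self._topic = broker, topic

    def write(self, buffer):
        self._broker.publish(self._topic, bytes(buffer))


class TopicInputStream:
    """Each read() returns the payload of the next event received on the topic."""

    def __init__(self, broker, topic):
        self._pending = []
        broker.subscribe(topic, self._pending.append)

    def read(self):
        # Return an empty buffer when no event is waiting.
        return self._pending.pop(0) if self._pending else b""


if __name__ == "__main__":
    broker = Broker()
    source = TopicOutputStream(broker, "gps/filtered")  # hypothetical topic name
    sink = TopicInputStream(broker, "gps/filtered")
    source.write(b"station-1 sample")
    print(sink.read())
```

Because producer and consumer share only a topic name, either side can be replaced or moved to another host without the other knowing, which is the property the slide relies on for loosely coupled services.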

In Progress

- Integrate HPSearch with Virtual California for loosely coupled grid application parameter space study.
  - HPSearch is designed to handle and manage multiple loosely coupled processes communicating with millisecond or longer latencies.
- Improve performance of data services
  - This is the current bottleneck
  - GIS data services have problems with non-trivial data transfers
  - But streaming approaches and data/control channel separation can dramatically improve this.
- Provide support for higher level data products and federated data storage
  - CGL does not try to resolve format issues in different data providers
    - See backup slides for a list for GPS and seismic events.
    - GML is not enough
  - USC’s Ontronic system researches these issues.
- Provide real-time data access to GPS and other sources
  - Implement SensorML over NaradaBrokering messaging
  - Do preliminary integration with RDAHMM
- Improve WMS clients to support sophisticated visualization
